Abstract

The neural basis of language processing, in the context of naturalistic reading of connected text, is a crucial but largely unexplored area. Here we combined functional MRI and eye tracking to examine the reading of text presented as whole paragraphs in two experiments with human subjects. We registered high-temporal resolution eye-tracking data to a low-temporal resolution BOLD signal to extract responses to single words during naturalistic reading where two to four words are typically processed per second. As a test case of a lexical variable, we examined the response to noun manipulability. In both experiments, signal in the left anterior inferior parietal lobule and posterior inferior temporal gyrus and sulcus was positively correlated with noun manipulability. These regions are associated with both action performance and action semantics, and their activation is consistent with a number of previous studies involving tool words and physical tool use. The results show that even during rapid reading of connected text, where semantics of words may be activated only partially, the meaning of manipulable nouns is grounded in action performance systems. This supports the grounded cognition view of semantics, which posits a close link between sensory–motor and conceptual systems of the brain. On the methodological front, these results demonstrate that BOLD responses to lexical variables during naturalistic reading can be extracted by simultaneous use of eye tracking. This opens up new avenues for the study of language and reading in the context of connected text.

SIGNIFICANCE STATEMENT The study of language and reading has traditionally relied on single-word or sentence stimuli. In fMRI, this is necessitated by the fact that the temporal resolution of the BOLD signal is much lower than that of the cognitive processes that take place during natural reading of connected text. Here, we propose a method that combines eye tracking and fMRI and extracts word-level information from the BOLD signal using high-temporal-resolution eye tracking. In two experiments, we demonstrate the method by analyzing activation related to noun manipulability as subjects naturally read paragraphs of text in the scanner, showing the involvement of action/motion perception areas. This opens up new avenues for studying neural correlates of language and reading in more ecologically realistic contexts.

Keywords: embodiment, eye tracking, fMRI, language, reading, semantics

Introduction

The study of language and reading has traditionally relied on single-word or sentence stimuli, for good reason. Linguistic stimuli contain a jumble of covarying variables that represent sublexical to narrative levels of representation. To disentangle the effects of particular variables, it is necessary to carefully control some properties of the stimuli while manipulating others. Single-word stimuli, and to some extent sentences, make this feasible. A treasure trove of insights into reading, and more generally into language processing, has accumulated using this approach. However, most daily reading consists of connected sentences and passages forming a narrative. Some theories of text processing suggest that syntactic and semantic analysis of text in such contexts can sometimes be shallow or incomplete (McKoon and Ratcliff, 1992; Ferreira et al., 2002), and thus potentially different from the analysis of text presented in smaller units such as words or phrases.

Investigating the reading of connected text using fMRI is especially difficult, because a BOLD response to a single word can be expected to last ∼16–20 s. The typical ∼2 s image acquisition time is also much slower than the speed of natural reading, where two to four words are typically processed per second. Rapid serial visual presentation (RSVP) has been used to mitigate these problems. In RSVP, each word is presented for a fixed duration, such as 500 ms (Wehbe et al., 2014), or for a duration that is varied within a window based on word characteristics, such as the number of letters (Buchweitz et al., 2009). During natural reading, however, words are fixated for varying amounts of time depending on multiple characteristics of the word itself as well as its context, and words are also processed parafoveally. Some words are skipped entirely, while others are processed again through regressions (backward eye movements). The neural basis of reading and language processing in such naturalistic conditions remains a crucial but largely unexplored area (Willems, 2015).

Here, we combine eye tracking with fMRI to study naturalistic reading of connected text. We examine whether high-temporal-resolution eye tracking can be used to extract a signal related to rapid events from the low-temporal-resolution BOLD signal. Entire paragraphs of text were presented on the screen, and subjects' eye movements were recorded while they read the paragraphs. We conducted two separate experiments with different texts and subjects, to examine the extent to which the results can be replicated with different sets of unselected materials.

As a test case, we examine BOLD responses to noun manipulability. This lexical variable was chosen for multiple reasons. Currently, there is a vigorous debate on the nature of semantic representations, and especially action semantics. The grounded cognition view suggests that sensory–motor systems play an important role in semantic representations (Barsalou, 2008; Kiefer and Pulvermüller, 2012). The symbolic view posits that semantic processing is performed through manipulation of amodal symbols (Fodor, 1983; Pylyshyn, 1984). As a test of the grounded cognition view, a number of imaging studies on noun manipulability, and action semantics in general, have been conducted using traditional designs, and these can serve as a baseline for comparison. The results can thus potentially contribute to this debate while validating the method. On the practical side, a sufficient number of items is necessary to isolate the response to a lexical variable, which is necessarily mixed with the response to the surrounding text. The text materials used in both experiments contained a relatively large number of nouns that varied in manipulability (see Materials and Methods), making this choice possible. In sum, we address two questions in this study: (1) whether the BOLD response to a lexical variable can be extracted in naturalistic reading of connected text; and (2) whether the semantics of manipulable nouns, when read in the context of a narrative, are represented in sensory–motor regions of the brain.

Materials and Methods

Subjects

Thirty-one subjects (12 male; age range, 18–35 years; mean age, 21.5 years) participated in Experiment 1. Two additional subjects who did not finish the experiment were excluded. Forty subjects (13 male; age range, 18–34 years; mean age, 21.9 years) participated in Experiment 2. All subjects were right-handed native speakers of English, gave informed consent, and were screened for MRI safety, according to a protocol approved by the Institutional Review Board of the University of South Carolina. All subjects reported normal or corrected-to-normal vision and were either paid or received course credit for participation in the study.

Materials

Experiment 1.

The text consisted of 22 paragraphs selected equally from two sources, The Emperor's New Clothes by Hans Christian Andersen, and a Nelson-Denny Practice Test (a standardized test of reading ability aimed at high school and college students). Paragraphs consisted of 49–66 words each. (The experiment also included nonword conditions that we do not discuss here.)

Experiment 2.

Forty short passages of text were adapted from the Gray Oral Reading Tests—Fifth Edition (Wiederholt and Bryant, 2012) and the Gray Silent Reading Tests (Wiederholt and Blalock, 2000). All texts were trimmed to be between 49 and 77 words long.

The text was processed through an automated part-of-speech parser (Toutanova et al., 2003) to identify word classes, and nouns were used for the current analysis. Characteristics of the text in each experiment are shown in Table 1.

Table 1.

Characteristics of the materials used in both experiments

| | Total words | Total nouns | Unique noun word forms | Unique lemmas | Freq (SD) | Conc (SD) | Manip (SD) | Freq-Manip r | Conc-Manip r |
|---|---|---|---|---|---|---|---|---|---|
| Experiment 1 | 1312 | 281 | 163 | 143 | 9.41 (1.93) | 4.17 (0.87) | 2.77 (1.24) | −0.025 | 0.66 |
| Experiment 2 | 2486 | 614 | 511 | 469 | 9.15 (2.11) | 3.72 (1.06) | 2.73 (1.19) | 0.04 | 0.69 |

Freq, Frequency; Conc, concreteness; Manip, manipulability; r, Pearson correlation.

To estimate manipulability, participants on Amazon Mechanical Turk rated whether each noun refers to an object that can be physically manipulated, on a scale from 0 (not manipulable at all) to 6 (very manipulable); each item received 20 ratings on average.

We also examined whether manipulability ratings differed between nouns appearing in subject and object positions. Text was processed with the Stanford parser, using universal dependencies (de Marneffe et al., 2006; Socher et al., 2013), to obtain subject/object assignments, which were manually spot-checked. In a two-sample t test, manipulability did not differ significantly between nouns used as subjects and nouns used as objects in either experiment (Experiment 1, p > 0.18; Experiment 2, p > 0.45).
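A minimal sketch of the subject/object comparison, assuming a Student (pooled-variance) two-sample t test; the text does not say whether a pooled or a Welch test was used. The function name and the toy ratings are hypothetical and are not data from the study. The sketch returns only t and its degrees of freedom, because the Python standard library has no t-distribution CDF; a library such as SciPy (`scipy.stats.ttest_ind`) would also supply the p value.

```python
import math
from statistics import mean, variance


def pooled_t_test(a, b):
    """Student's two-sample t statistic with pooled variance; returns (t, df).

    Converting t to a p value requires the t distribution's CDF, which is
    not in the standard library.
    """
    n1, n2 = len(a), len(b)
    # statistics.variance uses the sample (n - 1) denominator
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / se, n1 + n2 - 2


# Hypothetical mean manipulability ratings (0-6 scale), not data from the study
subject_nouns = [2.1, 3.4, 1.8, 4.0, 2.6]
object_nouns = [3.0, 2.2, 4.1, 2.9, 3.5, 1.9]
t, df = pooled_t_test(subject_nouns, object_nouns)
print(f"t({df}) = {t:.2f}")
```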

Apparatus

Stimuli were presented using an Avotec Silent Vision 6011 projector in its native resolution (1024 × 768) and a refresh rate of 60 Hz. Eye movements were monitored via an SR Research Eyelink 1000 long-range MRI eye tracker with a sampling rate of 1000 Hz. Viewing was binocular, and eye movements were recorded from the right eye.

Procedure

In the scanner, a 13-point calibration procedure was administered before each of the two functional runs to map eye position accurately to screen coordinates. Eye movements were recorded throughout the runs to verify that subjects made natural reading eye movements. Text was presented in Courier New font (monospaced) with 4.3 characters subtending 1° of visual angle. The target area for each word was defined automatically using the Experiment Builder software, which uses the centers of spaces between words and between lines to delineate boundaries. In both experiments, paragraphs were divided equally between two scanning runs. The order of the paragraphs was randomized for each participant. Participants were asked to read these paragraphs silently “as if they were reading a novel.”
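The word-boundary rule above can be sketched for a single line of monospaced text: each boundary between two words sits at the center of the space that separates them, and a fixation is assigned to the word whose interest area contains its horizontal position. This illustrates the rule only and is not Experiment Builder's implementation. Extending the first and last words half a character past their edges, omitting the vertical (between-line) boundaries, and the pixels-per-degree value are all assumptions, and `interest_areas` and `word_at` are hypothetical names.

```python
import re
from bisect import bisect_right


def interest_areas(line, char_width, x0=0.0):
    """(word, left, right) horizontal interest areas for one line of monospaced text.

    Boundaries between neighboring words fall at the midpoint of the separating
    space(s); the first and last words extend half a character beyond their
    edges (an assumption).
    """
    spans = [(m.group(), m.start(), m.end()) for m in re.finditer(r"\S+", line)]
    areas = []
    for k, (word, start, end) in enumerate(spans):
        if k == 0:
            left = x0 + (start - 0.5) * char_width
        else:
            left = x0 + (spans[k - 1][2] + start) / 2 * char_width
        if k == len(spans) - 1:
            right = x0 + (end + 0.5) * char_width
        else:
            right = x0 + (end + spans[k + 1][1]) / 2 * char_width
        areas.append((word, left, right))
    return areas


def word_at(x, areas):
    """Word whose interest area contains horizontal position x, else None."""
    k = bisect_right([left for _, left, _ in areas], x) - 1
    if k >= 0 and x < areas[k][2]:
        return areas[k][0]
    return None


# 4.3 characters per degree comes from the text; PX_PER_DEG is hypothetical
PX_PER_DEG = 40.0
char_width = PX_PER_DEG / 4.3
areas = interest_areas("The emperor wore no clothes", char_width, x0=100.0)
print(word_at(100.0 + 6.2 * char_width, areas))
```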

MRI data acquisition

Both experiments used an identical MR acquisition protocol on a Siemens 3 T Trio scanner. A 3D, T1-weighted, MPRAGE radio frequency-spoiled rapid flash scan in the sagittal plane and a T2/proton density-weighted multislice, axial, 2D, dual Fast Turbo spin-echo scan in the axial plane were acquired. The multiecho whole-brain T1 scans had 1 mm isotropic voxel size (TR = 2530 ms; flip angle = 7°). Functional runs were acquired using gradient-echo echoplanar imaging with TR = 1850 ms, TE = 30 ms, flip angle = 75°, FOV = 208 mm, and a matrix of 64 × 64. Each volume consisted of 34 axial slices of 3 mm thickness, resulting in a 3.3 × 3.3 × 3 mm voxel size.
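Two quantities follow directly from these parameters: the in-plane voxel size (FOV divided by matrix size), and roughly how many words a reader processes within a single TR, using the reading rate of two to four words per second given in the Introduction. The second figure is an approximation that ignores skips and regressions.

$$
\text{in-plane voxel size} = \frac{\text{FOV}}{\text{matrix}} = \frac{208\ \text{mm}}{64} = 3.25\ \text{mm} \approx 3.3\ \text{mm}
$$

$$
\text{words per volume} \approx (2 \text{ to } 4\ \text{words/s}) \times 1.85\ \text{s} = 3.7 \text{ to } 7.4\ \text{words}
$$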

MRI analysis

Within-subject analysis was performed using AFNI (Cox, 1996) and involved slice-timing correction, spatial coregistration, and registration of functional images to the anatomy. A binary condition regressor corresponding to nouns was created by coding the start of each fixation on each noun; we refer to this approach as FIRE (fixation-related) analysis. A regressor coding the manipulability rating of each fixated noun was included as the primary variable of interest. A regressor representing fixations on all other words was also included. Furthermore, reference functions coding the number of letters, word frequency [log HAL (Hyperspace Analogue to Language) from the English Lexicon Project], noun concreteness, and the duration of fixation were included as covariates of no interest. Because the words were not preselected, these regressors were included to account for potential activation differences due to these psycholinguistic variables. Additionally, reference functions representing the six motion parameters, and the average signal extracted from CSF and white matter (segmented using the program 3dSeg), were included as noise covariates of no interest.
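The FIRE regressors can be sketched as lists of (onset, amplitude) events derived from the fixation record: every fixation on a noun contributes an onset to the noun regressor, the same onsets carry the noun's manipulability rating as the amplitude of the regressor of interest, and fixations on all other words form a separate regressor. This is a hypothetical illustration, not the authors' AFNI setup. Mean-centering the modulators (so that they are not collinear with the unmodulated noun regressor) and modeling fixation duration only for noun fixations are our assumptions. The remaining covariates (letters, frequency, concreteness) would be added in the same way.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Fixation:
    onset: float      # fixation start, seconds from the start of the run
    duration: float   # fixation duration, seconds
    word: str         # word form of the fixated interest area
    is_noun: bool     # part-of-speech tag from the parser


def fire_events(fixations, manipulability):
    """Return {regressor name: [(onset, amplitude), ...]} for a FIRE-style model.

    `manipulability` maps noun word forms to mean ratings (0-6). Modulators are
    mean-centered over noun fixations (an assumption).
    """
    noun_fix = [f for f in fixations if f.is_noun]
    manip_mean = mean(manipulability[f.word] for f in noun_fix)
    dur_mean = mean(f.duration for f in noun_fix)
    return {
        # unmodulated regressor: one event at the start of every noun fixation
        "noun": [(f.onset, 1.0) for f in noun_fix],
        # variable of interest: same onsets, amplitude = centered rating
        "manipulability": [(f.onset, manipulability[f.word] - manip_mean) for f in noun_fix],
        # covariate of no interest: centered fixation duration
        "noun_duration": [(f.onset, f.duration - dur_mean) for f in noun_fix],
        # fixations on all other words
        "other_words": [(f.onset, 1.0) for f in fixations if not f.is_noun],
    }


# Hypothetical fixation record and ratings (not data from the study)
fixations = [
    Fixation(0.10, 0.20, "the", False),
    Fixation(0.35, 0.25, "hammer", True),
    Fixation(0.65, 0.20, "hit", False),
    Fixation(0.90, 0.30, "cloud", True),
]
events = fire_events(fixations, {"hammer": 5.5, "cloud": 0.5})
print(events["manipulability"])
```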

Voxelwise multiple linear regression was performed with the program 3dREMLfit, using these reference functions representing each condition convolved with a standard hemodynamic response function. General linear tests were conducted to obtain contrasts between conditions of interest.
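Convolution with a hemodynamic response function can be sketched by placing an amplitude-scaled HRF at each fixation onset and summing the HRFs at each volume's acquisition time (every TR = 1.85 s), which is exactly the convolution of a stick function with the HRF, sampled at the TR. The text specifies only a "standard" HRF; the SPM-style double-gamma shape used here (peak shape 6, undershoot shape 16, ratio 1/6) is an assumption and differs from AFNI's built-in response models, and slice-timing offsets are ignored. The fitting step itself (REML estimation in 3dREMLfit) is not reproduced.

```python
import math


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1 / 6):
    """SPM-style double-gamma HRF at time t (s); an assumed shape, not AFNI's default."""
    if t <= 0:
        return 0.0

    def gamma_pdf(x, shape):
        # gamma density with unit scale
        return x ** (shape - 1) * math.exp(-x) / math.gamma(shape)

    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)


def event_regressor(events, n_volumes, tr=1.85):
    """Convolve stick functions at (onset, amplitude) events with the HRF.

    Evaluating the summed HRFs at each acquisition time k * tr gives the
    convolution sampled at the TR; slice-timing offsets are ignored.
    """
    return [
        sum(amp * double_gamma_hrf(k * tr - onset) for onset, amp in events)
        for k in range(n_volumes)
    ]


# Hypothetical events: two noun fixations with centered manipulability amplitudes
regressor = event_regressor([(0.35, 2.5), (0.90, -2.5)], n_volumes=12)
print([round(v, 3) for v in regressor])
```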

The individual statistical maps and the anatomical scans were projected into standard stereotaxic space (Talairach and Tournoux, 1988) and smoothed with a Gaussian filter of 5 mm FWHM. In a random-effects analysis, group maps were created by comparing activations against a constant value of 0. The group maps were thresholded at voxelwise p < 0.01 and corrected for multiple comparisons by using Monte Carlo simulations to achieve a mapwise corrected p < 0.05. The analysis was restricted to a mask that excluded areas outside the brain, as well as deep white matter areas and the ventricles.
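For reference, the 5 mm FWHM of the smoothing kernel corresponds to a Gaussian standard deviation given by the standard FWHM relation:

$$
\sigma = \frac{\text{FWHM}}{2\sqrt{2\ln 2}} = \frac{5\ \text{mm}}{2.355} \approx 2.12\ \text{mm}
$$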

Additionally, we examined activation in two independently selected regions of interest (ROIs) that are consistently associated with action semantics. Using coordinates taken from Desai et al. (2010), who studied action versus abstract sentences, we placed spherical ROIs of 5 mm radius centered at [−57, −32, 32] (left anterior inferior parietal lobule) and [−49, −55, −1] [left posterior inferior temporal sulcus (pITS)]. The significance of the regression coefficients in these ROIs was examined with a one-sample t test.
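The ROI procedure can be sketched in two steps: select the voxels whose centers fall within 5 mm of an ROI center, then test the subjects' ROI-averaged coefficients against 0 with a one-sample t test. The grid geometry (voxel (0, 0, 0) at the origin, isotropic 3 mm voxels in standard space) and the subject values are hypothetical simplifications, and the function names are illustrative. Converting t to a p value would require a t-distribution CDF, which the standard library lacks.

```python
import math
from statistics import mean, stdev


def sphere_voxels(center, radius, voxel_size):
    """Indices (i, j, k) of voxels whose centers lie within `radius` mm of `center`.

    Assumes a simplified grid: isotropic voxels of `voxel_size` mm with voxel
    (0, 0, 0) centered at the coordinate origin.
    """
    reach = math.ceil(radius / voxel_size) + 1
    ci, cj, ck = (round(c / voxel_size) for c in center)
    hits = []
    for i in range(ci - reach, ci + reach + 1):
        for j in range(cj - reach, cj + reach + 1):
            for k in range(ck - reach, ck + reach + 1):
                voxel_center = (i * voxel_size, j * voxel_size, k * voxel_size)
                if math.dist(voxel_center, center) <= radius:
                    hits.append((i, j, k))
    return hits


def one_sample_t(values, mu0=0.0):
    """t statistic and degrees of freedom for H0: mean(values) == mu0."""
    n = len(values)
    return (mean(values) - mu0) / (stdev(values) / math.sqrt(n)), n - 1


# pITS center from the text; ROI-averaged coefficients per subject are hypothetical
roi = sphere_voxels((-49, -55, -1), radius=5, voxel_size=3)
subject_means = [0.12, 0.05, 0.20, -0.02, 0.09, 0.15]
t, df = one_sample_t(subject_means)
print(len(roi), f"t({df}) = {t:.2f}")
```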

Results

Eye movement results

Table 2 shows basic eye movement measures. The general pattern of eye movements was similar to that obtained outside the scanner (Henderson and Luke, 2014; Choi et al., 2015). As expected, word frequency was negatively correlated with fixation duration (of all fixations) across all words (Experiment 1: r = −0.060, p < 0.05; Experiment 2: r = −0.11, p < 0.0001). The correlation between noun manipulability and fixation duration was not significant in either experiment (Experiment 1: r = 0.015; Experiment 2: r = −0.064).
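Assuming these are Pearson correlations, as in Table 1, a minimal standard-library sketch of r and of the t statistic used to test it (with n − 2 degrees of freedom) is shown below. Whether fixations were pooled across subjects or correlations were computed within subjects is not stated, so the example uses hypothetical values only, and the function names are illustrative.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (n - 2 df) for testing H0: rho == 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


# Hypothetical log frequencies and fixation durations (ms), not study data
log_freq = [6.2, 8.9, 10.4, 7.5, 11.8, 9.1]
fix_dur = [262, 231, 205, 240, 198, 226]
r = pearson_r(log_freq, fix_dur)
print(f"r = {r:.3f}, t({len(log_freq) - 2}) = {r_to_t(r, len(log_freq)):.2f}")
```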

Table 2.

Eye movement measures (mean and SD across subjects) in both experiments

| | Number of total fixations | Number of fixations on nouns | Fixation duration (ms) | First fixation duration (ms) | Single fixation duration (ms) | Gaze duration (ms) |
|---|---|---|---|---|---|---|
| Experiment 1 | 1042 (113) | 285 (39) | 215 (89) | 220 (84) | 222 (85) | 257 (127) |
| Experiment 2 | 1814 (194) | 568 (74) | 233 (112) | 241 (111) | 245 (109) | 299 (178) |

The values are for nouns except for the first column.
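The first-pass measures in Table 2 are not defined in the text; the sketch below assumes one common set of eye-tracking definitions. First fixation duration is the first fixation on a word during first-pass reading (before any word to its right has been fixated), single fixation duration is that value when the word receives exactly one first-pass fixation, and gaze duration sums all consecutive first-pass fixations on the word. The function name and the toy fixation sequence are hypothetical.

```python
def first_pass_measures(fixations, target):
    """First fixation, single fixation, and gaze duration for word index `target`.

    `fixations` is a chronological list of (word_index, duration_ms). Returns None
    if the word was never fixated or was skipped during first pass (first reached
    only after a word to its right had been fixated). Single fixation duration is
    None when first pass contains more than one fixation.
    """
    rightmost = -1
    for pos, (word, _) in enumerate(fixations):
        if word == target:
            if rightmost > target:
                return None  # skipped on first pass, fixated later via a regression
            run = []
            for w, dur in fixations[pos:]:
                if w != target:
                    break  # first pass ends when the eyes leave the word
                run.append(dur)
            single = run[0] if len(run) == 1 else None
            return run[0], single, sum(run)
        rightmost = max(rightmost, word)
    return None


# Hypothetical fixation sequence over words 0-4 (word 2 is skipped, then regressed to)
seq = [(0, 200), (1, 180), (1, 150), (3, 220), (2, 240), (4, 210)]
for w in range(5):
    print(w, first_pass_measures(seq, w))
```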

fMRI results

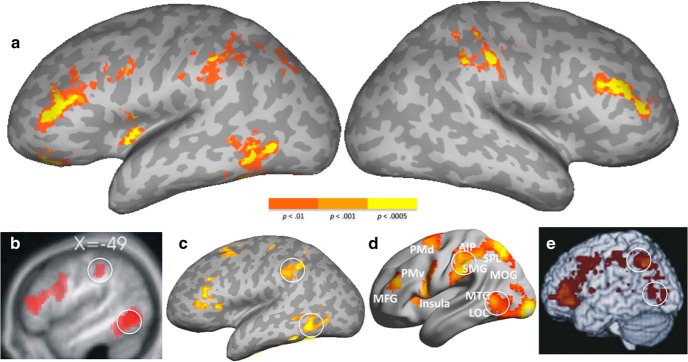

The imaging results of Experiment 1 are shown in Figure 1a and Table 3. Noun manipulability was positively correlated with signal in the left inferior parietal lobule, including the dorsal supramarginal gyrus (SMG) and postcentral sulcus, extending into the intraparietal sulcus (IPS). The SMG and anterior IPS activation was also seen in the right hemisphere (RH) to a lesser extent. The left posterior inferior temporal gyrus (pITG) and inferior temporal sulcus (ITS), extending into the posterior middle temporal gyrus (MTG), were also activated. The middle frontal gyrus (MFG) and inferior frontal gyrus (IFG) were activated bilaterally, with the left hemisphere activation spreading into the lateral orbitofrontal cortex. Medially, the cuneus and the mid-cingulate gyrus were also activated.

Figure 1.

a, Positive correlations with noun manipulability ratings in Experiment 1. b–e, Other studies that implicate similar regions (the left IPL and posterior ITS, highlighted with white circles) in action performance and action semantics. b, Activation for tools > animal words/pictures in a semantic decision task (adapted from Noppeney et al., 2006). c, Activation for literal action sentences relative to those with abstract verbs (adapted from Desai et al., 2013). d, Activation for physically using tools relative to moving a bar-like object (adapted from Brandi et al., 2014). e, Lesion map of areas associated with producing grasping errors in patients relative to patients who do not exhibit grasping errors (adapted from Randerath et al., 2010). The color scale applies only to a.

Table 3.

Activations positively correlated with noun manipulability ratings in Experiment 1

| Volume (mm³) | Max | x | y | z | Anatomical structures |
|---|---|---|---|---|---|
| 11,664 | 5.9 | −40 | 34 | 14 | LH middle frontal g, inferior frontal g |
| | 4.4 | −28 | 37 | −6 | LH orbital g, s |
| | 3.9 | −40 | 4 | 35 | LH precentral s |
| | 3.3 | −37 | 31 | 38 | LH middle frontal g |
| 9639 | 4.8 | −52 | −34 | 41 | LH supramarginal g, postcentral s |
| | 3.8 | −19 | −52 | 41 | LH intraparietal s |
| 7479 | 5.3 | −46 | −55 | −3 | LH inferior temporal g, s, middle temporal g |
| | 4.6 | −34 | −70 | −9 | LH middle occipital g |
| | 3.2 | −28 | −49 | −18 | LH cerebellum |
| 3591 | 4.7 | 43 | 37 | 14 | RH middle frontal g, inferior frontal g |
| 3240 | 4.6 | 52 | −37 | 47 | RH supramarginal g |
| | 4.4 | 55 | −22 | 32 | RH postcentral s |
| 1782 | 4.5 | −37 | −1 | 2 | LH insula |
| 1593 | 3.4 | −1 | −79 | 2 | LH/RH cuneus |
| 1485 | 3.8 | −1 | 1 | 35 | LH/RH mid-cingulate g |

Cluster volume, maximum z-score, Talairach coordinates of the peak voxel, and approximate brain structures are shown for each cluster. Volume, Cluster volume; Max, maximum z-score; g, gyrus; s, sulcus.

For comparison with the current results, examples from previous studies are shown in Figure 1b–e. Figure 1, b and c, shows activation for tools (Noppeney et al., 2006) and action sentence semantics (Desai et al., 2013), respectively. Figure 1d shows activation for the physical use of tools inside the scanner, relative to moving bar-like objects (Brandi et al., 2014). Figure 1e shows a map of lesioned areas in patients with deficits in grasping movements, relative to those who performed functional grasping flawlessly (Randerath et al., 2010). In all cases, activations or lesions can be seen in the network of areas found here: the left inferior parietal lobule (IPL), MFG/IFG, and MTG/ITG.

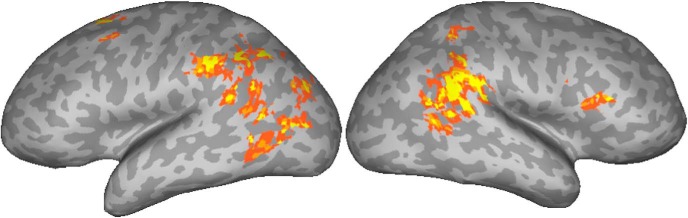

In Experiment 2 (Table 4, Fig. 2), signal in the left anterior IPL (aIPL) and pITS/pITG was positively correlated with noun manipulability. The RH activation in the SMG was stronger than in Experiment 1, extending into the superior temporal gyrus (STG), angular gyrus (AG), and MTG. The left STG, AG, and MTG were also activated. In the frontal lobe, a posterior region of the superior frontal gyrus (SFG) was activated. The precuneus was activated bilaterally.

Table 4.

Activations positively correlated with noun manipulability ratings in Experiment 2

| Volume (mm³) | Max | x | y | z | Anatomical structures |
|---|---|---|---|---|---|
| 10,341 | 5.0 | 55 | −46 | 20 | RH supramarginal g, angular g, middle temporal g |
| | 4.1 | 52 | −40 | 41 | RH supramarginal g |
| 5508 | 4.2 | −40 | −70 | 11 | LH middle occipital g |
| | 3.9 | −49 | −49 | 17 | LH superior temporal g, s, angular g |
| | 3.2 | −43 | −52 | −3 | LH inferior temporal g, s, middle temporal g |
| 3564 | 4.0 | −7 | −61 | 47 | LH precuneus |
| 2808 | 4.1 | −31 | −55 | 35 | LH anterior intraparietal s |
| 2349 | 4.2 | −55 | −34 | 41 | LH supramarginal g |
| 1620 | 3.5 | 16 | −73 | 32 | RH precuneus |
| 1431 | 4.4 | −13 | 10 | 56 | LH superior frontal g, s |
| 1134 | 3.7 | 52 | 25 | 17 | RH inferior frontal g (pars triangularis) |

Cluster volume, maximum z-score, Talairach coordinates of the peak voxel, and approximate brain structures are shown for each cluster. Volume, Cluster volume; Max, maximum z-score; g, gyrus; s, sulcus.

Figure 2.

Activations positively correlated with noun manipulability ratings in Experiment 2. The color scale is the same as in Figure 1.

In the ROI analysis, Experiment 1 showed significant activation in both the aIPL (t = 3.8, p < 0.005) and the pITS (t = 6.28, p < 0.0001). Experiment 2 results were also significant in both ROIs (aIPL: t = 2.97, p < 0.006; pITS: t = 2.83, p < 0.008). Thus, activation in these regions did not depend on the specific thresholding method used for the whole-brain maps.

Discussion

Natural reading involves continuous and rapid variation in orthographic, phonological, semantic, and syntactic processes, in addition to modulation of more general executive functions, such as attention and working memory. These processes operate at sublexical, lexical, phrasal, sentential, and narrative levels. While it is desirable to study language in this more ecologically realistic way, it is not clear whether the rapid modulation of numerous complex processes can be studied with techniques such as fMRI, which typically provide a measurement every 2 s or so. We examined whether one lexical semantic variable, manipulability of nouns, can be examined in naturalistic reading using fMRI, with the aid of high-temporal-resolution eye tracking. In two separate experiments, using unselected nouns, we found that noun manipulability was correlated with signal in a well-known network involving anterior inferior parietal and posterior inferior temporal areas.

The aIPL and the anterior intraparietal area are strongly associated with the planning and performance of skilled actions, including reaching and grasping. A number of imaging studies show aIPL involvement in planning and executing actions and tool use (Frey et al., 2005; Johnson-Frey et al., 2005; Hermsdorfer et al., 2007; Brandi et al., 2014). Corroborating evidence is provided by lesion studies that relate aIPL damage with impairments in tool use or grasping actions (Haaland et al., 2000; Buxbaum et al., 2005; Jax et al., 2006; Goldenberg and Spatt, 2009; Randerath et al., 2010). Through comparisons of humans with monkeys trained in tool use, Peeters et al. (2009) proposed that aIPL has evolved only in humans to subserve tool use.

The occipitotemporal cortex (posterior ITG/ITS) is typically coactivated with the IPL in studies of tool and action semantics (Kable et al., 2005; Tettamanti et al., 2005; Noppeney et al., 2006; Desai et al., 2010, 2011, 2013; Rueschemeyer et al., 2010). These studies of tool and action semantics, as well as the studies of tool use mentioned above, found activation in or close to the motion perception area MT+. Vitali et al. (2005) found that the functional connectivity among frontal, inferior parietal, and occipitotemporal areas was enhanced selectively when generating tool names. Similarly, Ramayya et al. (2010) found a structural tool use network formed by aIPL structural connections to posterior middle temporal and inferior frontal regions.

In sum, the modulation of these two regions by noun manipulability is consistent with a large body of studies of tool use and action semantics. This serves to demonstrate that BOLD responses to lexical semantic variables can be extracted in naturalistic reading tasks using FIRE fMRI. This opens up new avenues for studying language in the context of naturalistic reading of connected text.

Several differences were also observed between the two experiments. The frontal activation in MFG and parts of IFG was found in Experiment 1, but not in Experiment 2. Frontal activation is often, but not always, found in studies of action semantics. It can be interpreted as reflecting executive processing related to effortful retrieval of semantics. Activation patterns in three previous studies from our laboratory (Desai et al., 2010, 2011, 2013), each comparing sentences with action verbs to sentences with abstract verbs (among other things), provide a useful comparison. All three studies showed activations in the left aIPL and pITG/ITS for actions. In the first two studies, the action and abstract sentences were exactly matched in their syntactic structure, and no MFG/IFG activation was found in the action > abstract comparison. In the study by Desai et al. (2013), sentence structures were similar but not identical. The action sentences also had lower accuracy and numerically higher reaction times (RTs; in a sentence sensibility judgment task), suggesting higher processing difficulty. In that study, MFG/IFG activation was indeed found. Experiment 2 had a much larger stimulus set, with more than three times as many unique nouns and lemmas as Experiment 1 (Table 1), and hence executive-processing demands may be better decoupled from manipulability.

Bilateral AG activation was found in Experiment 2, unlike Experiment 1. AG is a general semantic region, associated especially with semantic integration (Binder et al., 2009; Price et al., 2015). Greater AG and less frontal activation in Experiment 2 may reflect more automatic and less effortful integration of manipulable noun meaning.

The left posterior SFG and precuneus were also activated in Experiment 2. Activation in the dorsomedial prefrontal cortex is frequently found for action semantics as well as for tool use, and is thought to play a role in self-directed retrieval of semantics (Binder et al., 2009; Randerath et al., 2010; Desai et al., 2013). The precuneus activation lies just dorsal to the parieto-occipital sulcus, near the posterior edge of the hemisphere, in a region termed the “parietal reach region,” which is found in both monkeys and humans and plays a role in planning visually guided reaching movements (Connolly et al., 2003; Filimon et al., 2009).

Thus, the two areas most commonly associated with action semantics, aIPL and pITG/ITS, were activated in both experiments, although several differences were also found. These results have theoretical implications regarding the nature of semantic representations. As mentioned in the Introduction, some theories of text processing suggest that comprehension sometimes involves only “good enough” processing (Ferreira et al., 2002). Comprehension does not necessarily entail complete syntactic or semantic analysis of the text, but can be rather shallow and incomplete. It is conceivable that even at the lexical level only a shallow semantic access occurs in natural reading. Combining this view with the hypothesis that words have a “core” meaning that is automatically and rapidly activated (Whitney et al., 1985; Mahon and Caramazza, 2008; Dove, 2009), perhaps only this core meaning is activated in natural reading. On this view, common single-word experimental settings result in an unusually deep and/or wider processing of the concept, resulting in activation of “peripheral” semantic features, which may be grounded in sensory–motor cortices but do not represent the core aspects of the meaning, whatever they are. Whether cores exist and whether they are abstract are open questions (Lebois et al., 2015), and claims regarding conceptual cores are remarkably underspecified. The current results show that, even in naturalistic reading, sensory–motor areas similar to those seen in previous imaging as well as lesion studies are activated. We conclude that even if semantic processing is shallow and incomplete in naturalistic reading, and only a putative core or central lexical meaning is activated, that meaning is grounded in sensory–motor systems.

An obvious limitation of using prewritten passages is that one does not exercise fine-grained control over stimulus materials. This control is important to match or equate the materials on confounding variables, which allows selective investigation of the effects of a variable of interest. However, FIRE fMRI analysis permits inclusion of variables of no interest as covariates, allowing us to statistically reduce or eliminate the effects of these variables. We used word frequency, concreteness, number of letters, and fixation durations in this way. Fixation durations can be especially useful in accounting for visual attention and time-on-task effects, and are arguably superior to proxy behavioral measures such as RTs. With a large enough set, a subset of words or sentences can also be selected to dissociate particular variables. Thus, FIRE fMRI allows the investigation of reading and language in naturalistic, connected text settings, while also allowing us to reduce confounding factors that are naturally present in connected text.
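The covariate logic can be illustrated with the Frisch-Waugh-Lovell result for ordinary least squares: the coefficient for manipulability in a regression that also contains concreteness equals the slope obtained after first regressing concreteness out of manipulability. This matters here because the two variables correlate at r = 0.66 to 0.69 (Table 1). The sketch below is a toy, single-voxel illustration of that principle with hypothetical names and data, not the authors' pipeline, which fits all regressors jointly in 3dREMLfit.

```python
def ols_line(x, y):
    """Intercept and slope of the least-squares line y ~ a + b * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = sum((u - mx) * (v - my) for u, v in zip(x, y)) / sum((u - mx) ** 2 for u in x)
    return my - b * mx, b


def residualize(x, z):
    """Part of x not linearly predictable from covariate z (with intercept)."""
    a, b = ols_line(z, x)
    return [u - (a + b * w) for u, w in zip(x, z)]


def partial_slope(y, x, z):
    """Coefficient of x in y ~ 1 + x + z, via Frisch-Waugh-Lovell."""
    return ols_line(residualize(x, z), y)[1]


# Hypothetical per-noun values: manipulability, a correlated concreteness score,
# and a "signal" built from both; the partial slope recovers the x weight of 2
manip = [1.0, 2.0, 3.0, 4.0, 5.0]
conc = [2.0, 1.0, 4.0, 3.0, 6.0]
signal = [2 * m + 3 * c for m, c in zip(manip, conc)]
print(round(ols_line(manip, signal)[1], 3), round(partial_slope(signal, manip, conc), 3))
# prints: 5.0 2.0
```

Without the covariate, the simple slope (5.0) absorbs the concreteness contribution; with it, the unique manipulability effect (2.0) is recovered.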

Notes

Supplemental material for this article is available at http://www.mccauslandcenter.sc.edu/delab/webdocs/SupplementaryMaterial.pdf. A list of nouns used in Experiment 1 and in Experiment 2 is provided. This material has not been peer reviewed.

Footnotes

This research was supported by the National Science Foundation (Grant BCS-1151358) and the National Institutes of Health/National Institute on Deafness and Other Communication Disorders (Grant R01-DC-010783).

References

- Barsalou LW. Grounded cognition. Annu Rev Psychol. 2008;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639. [DOI] [PubMed] [Google Scholar]

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brandi ML, Wohlschläger A, Sorg C, Hermsdörfer J. The neural correlates of planning and executing actual tool use. J Neurosci. 2014;34:13183–13194. doi: 10.1523/JNEUROSCI.0597-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buchweitz A, Mason RA, Tomitch LM, Just MA. Brain activation for reading and listening comprehension: an fMRI study of modality effects and individual differences in language comprehension. Psychol Neurosci. 2009;2:111–123. doi: 10.3922/j.psns.2009.2.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buxbaum LJ, Johnson-Frey SH, Bartlett-Williams M. Deficient internal models for planning hand–object interactions in apraxia. Neuropsychologia. 2005;43:917–929. doi: 10.1016/j.neuropsychologia.2004.09.006. [DOI] [PubMed] [Google Scholar]

- Choi W, Lowder MW, Ferreira F, Henderson JM. Individual differences in the perceptual span during reading: evidence from the moving window technique. Atten Percept Psychophys. 2015;77:2463–2475. doi: 10.3758/s13414-015-0942-1. [DOI] [PubMed] [Google Scholar]

- Connolly JD, Andersen RA, Goodale MA. FMRI evidence for a “parietal reach region” in the human brain. Exp Brain Res. 2003;153:140–145. doi: 10.1007/s00221-003-1587-1. [DOI] [PubMed] [Google Scholar]

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014. [DOI] [PubMed] [Google Scholar]

- de Marneffe MC, MacCartney B, Manning C. Generating typed dependency parses from phrase structure parses. Paper presented at LREC 2006; May; Genoa, Italy. 2006. [Google Scholar]

- Desai RH, Binder JR, Conant LL, Seidenberg MS. Activation of sensory-motor areas in sentence comprehension. Cereb Cortex. 2010;20:468–478. doi: 10.1093/cercor/bhp115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Desai RH, Binder JR, Conant LL, Mano QR, Seidenberg MS. The neural career of sensory-motor metaphors. J Cogn Neurosci. 2011;23:2376–2386. doi: 10.1162/jocn.2010.21596. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Desai RH, Conant LL, Binder JR, Park H, Seidenberg MS. A piece of the action: modulation of sensory-motor regions by action idioms and metaphors. Neuroimage. 2013;83:862–869. doi: 10.1016/j.neuroimage.2013.07.044. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dove G. Beyond perceptual symbols: a call for representational pluralism. Cognition. 2009;110:412–431. doi: 10.1016/j.cognition.2008.11.016. [DOI] [PubMed] [Google Scholar]

- Ferreira F, Bailey K, Ferraro V. Good-enough representations in language comprehension. Curr Dir Psychol Sci. 2002;11:11–15. doi: 10.1111/1467-8721.00158. [DOI] [Google Scholar]

- Filimon F, Nelson JD, Huang RS, Sereno MI. Multiple parietal reach regions in humans: cortical representations for visual and proprioceptive feedback during on-line reaching. J Neurosci. 2009;29:2961–2971. doi: 10.1523/JNEUROSCI.3211-08.2009.

- Fodor JA. The modularity of mind: an essay on faculty psychology. Cambridge, MA: MIT; 1983.

- Frey SH, Vinton D, Norlund R, Grafton ST. Cortical topography of human anterior intraparietal cortex active during visually guided grasping. Brain Res Cogn Brain Res. 2005;23:397–405. doi: 10.1016/j.cogbrainres.2004.11.010.

- Goldenberg G, Spatt J. The neural basis of tool use. Brain. 2009;132:1645–1655. doi: 10.1093/brain/awp080.

- Haaland KY, Harrington DL, Knight RT. Neural representations of skilled movement. Brain. 2000;123:2306–2313. doi: 10.1093/brain/123.11.2306.

- Henderson JM, Luke SG. Stable individual differences in saccadic eye movements during reading, pseudoreading, scene viewing, and scene search. J Exp Psychol Hum Percept Perform. 2014;40:1390–1400. doi: 10.1037/a0036330.

- Hermsdörfer J, Terlinden G, Mühlau M, Goldenberg G, Wohlschläger AM. Neural representations of pantomimed and actual tool use: evidence from an event-related fMRI study. Neuroimage. 2007;36(Suppl 2):T109–T118. doi: 10.1016/j.neuroimage.2007.03.037.

- Jax SA, Buxbaum LJ, Moll AD. Deficits in movement planning and intrinsic coordinate control in ideomotor apraxia. J Cogn Neurosci. 2006;18:2063–2076. doi: 10.1162/jocn.2006.18.12.2063.

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. A distributed left hemisphere network active during planning of everyday tool use skills. Cereb Cortex. 2005;15:681–695. doi: 10.1093/cercor/bhh169.

- Kable JW, Kan IP, Wilson A, Thompson-Schill SL, Chatterjee A. Conceptual representations of action in the lateral temporal cortex. J Cogn Neurosci. 2005;17:1855–1870. doi: 10.1162/089892905775008625.

- Kiefer M, Pulvermüller F. Conceptual representations in mind and brain: theoretical developments, current evidence and future directions. Cortex. 2012;48:805–825. doi: 10.1016/j.cortex.2011.04.006.

- Lebois LA, Wilson-Mendenhall CD, Barsalou LW. Are automatic conceptual cores the gold standard of semantic processing? The context-dependence of spatial meaning in grounded congruency effects. Cogn Sci. 2015;39:1764–1801. doi: 10.1111/cogs.12174.

- Mahon BZ, Caramazza A. A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. J Physiol Paris. 2008;102:59–70. doi: 10.1016/j.jphysparis.2008.03.004.

- McKoon G, Ratcliff R. Inference during reading. Psychol Rev. 1992;99:440–466. doi: 10.1037/0033-295X.99.3.440.

- Noppeney U, Price CJ, Penny WD, Friston KJ. Two distinct neural mechanisms for category-selective responses. Cereb Cortex. 2006;16:437–445. doi: 10.1093/cercor/bhi123.

- Peeters R, Simone L, Nelissen K, Fabbri-Destro M, Vanduffel W, Rizzolatti G, Orban GA. The representation of tool use in humans and monkeys: common and uniquely human features. J Neurosci. 2009;29:11523–11539. doi: 10.1523/JNEUROSCI.2040-09.2009.

- Price AR, Bonner MF, Peelle JE, Grossman M. Converging evidence for the neuroanatomic basis of combinatorial semantics in the angular gyrus. J Neurosci. 2015;35:3276–3284. doi: 10.1523/JNEUROSCI.3446-14.2015.

- Pylyshyn ZW. Computation and cognition. Cambridge, MA: MIT; 1984.

- Ramayya AG, Glasser MF, Rilling JK. A DTI investigation of neural substrates supporting tool use. Cereb Cortex. 2010;20:507–516. doi: 10.1093/cercor/bhp141.

- Randerath J, Goldenberg G, Spijkers W, Li Y, Hermsdörfer J. Different left brain regions are essential for grasping a tool compared with its subsequent use. Neuroimage. 2010;53:171–180. doi: 10.1016/j.neuroimage.2010.06.038.

- Rueschemeyer SA, van Rooij D, Lindemann O, Willems RM, Bekkering H. The function of words: distinct neural correlates for words denoting differently manipulable objects. J Cogn Neurosci. 2010;22:1844–1851. doi: 10.1162/jocn.2009.21310.

- Socher R, Bauer J, Manning CD, Ng AY. Parsing with compositional vector grammars. Paper presented at ACL 2013; August 2013; Sofia, Bulgaria.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme Medical; 1988.

- Tettamanti M, Buccino G, Saccuman MC, Gallese V, Danna M, Scifo P, Fazio F, Rizzolatti G, Cappa SF, Perani D. Listening to action-related sentences activates fronto-parietal motor circuits. J Cogn Neurosci. 2005;17:273–281. doi: 10.1162/0898929053124965.

- Toutanova K, Klein D, Manning C, Singer Y. Feature-rich part-of-speech tagging with a cyclic dependency network. Paper presented at HLT-NAACL 2003: Human Language Technology Conference; May 2003; Edmonton, AB, Canada.

- Vitali P, Abutalebi J, Tettamanti M, Rowe J, Scifo P, Fazio F, Cappa SF, Perani D. Generating animal and tool names: an fMRI study of effective connectivity. Brain Lang. 2005;93:32–45. doi: 10.1016/j.bandl.2004.08.005.

- Wehbe L, Murphy B, Talukdar P, Fyshe A, Ramdas A, Mitchell T. Simultaneously uncovering the patterns of brain regions involved in different story reading subprocesses. PLoS One. 2014;9:e112575. doi: 10.1371/journal.pone.0112575.

- Whitney P, McKay T, Kellas G, Emerson WA Jr. Semantic activation of noun concepts in context. J Exp Psychol Learn Mem Cogn. 1985;11:126–135. doi: 10.1037/0278-7393.11.1.126.

- Wiederholt J, Bryant B. Gray oral reading test, fifth edition (GORT-5). Austin, TX: Pro-Ed; 2012.

- Wiederholt J, Blalock G. Gray silent reading test. Austin, TX: Pro-Ed; 2000.

- Willems RM, editor. Cognitive neuroscience of naturalistic language use. New York: Cambridge University Press; 2015.