Abstract

There have been long-standing differences of opinion regarding the influence of the face relative to that of contextual information on how individuals process and judge facial expressions of emotion. However, developmental changes in how individuals use such information have remained largely unexplored and could be informative in attempting to reconcile these opposing views. The current study tested for age-related differences in how individuals prioritize viewing emotional faces versus contexts when making emotion judgments. To do so, we asked 4-, 8-, and 12-year-old children as well as college students to categorize facial expressions of emotion that were presented with scenes that were either congruent or incongruent with the facial displays. During this time, we recorded participants’ gaze patterns via eye tracking. College students directed their visual attention primarily to the face, regardless of contextual information. Children, however, divided their attention between the face and the context as sources of emotional information depending on the valence of the context. These findings reveal a developmental shift in how individuals process and integrate emotional cues.

Keywords: emotion perception, development, facial expressions, context, gaze patterns

There have been long-standing differences of opinion regarding the influence of the face relative to that of contextual information on the perception of emotion. One view holds that features of facial musculature alone are both necessary and sufficient cues for an observer to recognize another’s emotion (e.g., Buck, 1994; Ekman, 1992). The alternative view is that contextual information beyond the face—body postures, gestures, or emotionally charged objects and scenes, for example—is necessary for emotion recognition (e.g., Aviezer et al., 2008; Carroll & Russell, 1996; Noh & Isaacowitz, 2013). There is some empirical support for both of these theories; however, relatively little data exist on possible developmental changes that might integrate these contrasting perspectives. It is possible that both views are correct depending upon the developmental stage of the individual being tested. In the current experiment, we examine potential changes in the visual processing of facial and contextual information about emotion from childhood to adulthood. Our goal was to determine if the information individuals rely upon in making judgments about emotion changes with age and experience.

Contrasting Views on Facial Emotion Perception

Historically, most research on emotion perception has used methods in which participants view pictures of faces in isolation. Such procedures were quite reasonable given that the formative theories of Tomkins (1962, 1963) and Ekman (1992) posited that there were a limited number of basic emotions, that these emotions were expressed by distinct configurations of the face, and that these expressions are neurally determined and universal. Building upon these theories and data, Izard (1992) proposed that in early infancy, displays of emotion in the face were a direct readout of distinct emotion modules in the brain. On this view, the configuration of facial musculature should, itself, provide sufficient information about an individual’s underlying emotional state.

However, facial expressions of emotion are rarely seen in isolation. Rather, facial cues co-occur with many other sources of information that include hand gestures, body language, objects, scenes, and general information about events that precede the emotional reaction (Russell, 1980; Trope, 1986). For this reason, one could make a judgment about a facial expression in isolation that is different from the conclusion one might make if that same facial expression were viewed within a social context. One classic illustration of this point was described by Camras (1992). She reported that when adult expert emotion coders viewed film clips of a child displaying facial expressions without any situational context, they made inferences about the child’s emotional state that were inconsistent with the actual situation in which the child’s emotions were filmed. Such examples suggest that some context is needed to resolve the ambiguity of facial cues.

Similarly, there is evidence that contextual information may change the visual scanning patterns that people use when viewing facial expressions. For example, Aviezer et al. (2008) had adult participants view images of people displaying facial expressions of anger or disgust while simultaneously expressing either the same or a different emotion with their bodies (e.g., a raised, clenched fist signifying anger; holding soiled underpants with only fingertips suggesting disgust). They found that the amount of time participants spent looking at certain facial features was modulated by the emotion displayed by the context. Specifically, participants looked more to the eye region when the context conveyed anger and to the nose and mouth when the context displayed disgust, regardless of the facial emotion. These data provide support for the view that contextual information has the capacity to influence how an individual views a given facial expression.

This has been a difficult issue to resolve in the field of emotion: there are data consistent with the view that facial musculature is the primary source of information that we use to read other people’s emotional states, but also evidence that contextual information is critical in the perception and judgment of emotion expressions. Here, we explore whether developmental data may help resolve this inconsistency. One possibility is that both views are correct, but the relative primacy of facial data may change over development. Rather than being a stable process, it is possible that there are age-related changes in the ways individuals use facial and contextual information.

Changes in Processes Underlying the Perception of Emotion

There is evidence for developmental changes in the processing of faces. Both behavioral (Malatesta & Izard, 1984) and neural (Aylward et al., 2005) aspects of visual processing of faces and contextual information change over time. For example, the strategies people use for face processing shift across development—from an analytical strategy utilized by young children to a more configural or holistic strategy utilized by adults (Schwarzer, 2000). Similarly, early event-related potential components that show sensitivity to emotions in adults do not appear until late adolescence (Batty & Taylor, 2006).

In addition to these maturational changes in face processing, cross-cultural differences also suggest a role for experience and learning in the shaping of emotion perception. People are able to discriminate Japanese individuals from Japanese Americans and Caucasian Americans from Caucasian Australians simply by viewing others’ facial expressions of emotion (Marsh, Elfenbein, & Ambady, 2003). In addition, individuals from a preliterate culture categorized facial expressions differently than individuals from western cultures. This study found that people from western cultures tended to use anger, disgust, fear, happiness, sadness, and surprise as guides for categorizing facial emotions. Yet when asked to sort images of emotional expressions, individuals from the preliterate Himba ethnic group from Namibia used different categories. Although they sorted happy faces (with smiles) and fearful faces (with wide eyes) into categories consistent with happiness and fear, they sorted all other emotions into categories inconsistent with the basic emotions used in the west (Gendron, Roberson, van der Vyver, & Feldman Barrett, 2014). Other types of data also reveal cultural variation in how people attend to faces, and these types of variation suggest that perception of emotion involves processes that change based upon people’s social experience. As an example, within western cultures people attend more to the mouth as a primary source of emotion information than do people from eastern cultures (Eisenbarth & Alpers, 2011; Yuki, Maddux, & Masuda, 2007). Taken together, the cross-cultural data suggest that emotion recognition is not a fixed process, but one that develops based upon relevant social input.

As mentioned earlier, most of the methods used to examine how emotion perception occurs have relied upon participants’ responses to facial stimuli in the absence of contextual information. For this reason, little is known about children’s processing of emotional scenes or contexts. Yet, some research is beginning to address these gaps, raising questions about potential developmental changes in how individuals integrate facial and contextual information. For example, similar to adults, young children show difficulties in recognizing familiar emotional faces when paired with incongruent contextual information early in development (Mondloch, Horner, & Mian, 2013). However, they rely heavily on bodily cues when identifying emotions they are uncertain about (Mondloch, 2012). Moreover, compared with younger adults, older adults allocate greater attention toward contextual information relative to facial cues (Noh & Isaacowitz, 2013). This research provides evidence for age-related changes in how individuals combine facial and contextual information in emotion processing.

The Present Study

These studies thus lay the groundwork for the current experiment. We sought to examine potential developmental changes in how individuals integrate contextual and facial information when making emotion judgments. To do so, we presented participants from four age groups (spanning preschoolers to college students) with stimuli displaying faces paired with either congruent or incongruent contexts. On congruent trials, the facial expression and surrounding context (body language and scene) conveyed consistent emotional information. For example, in a congruent trial, participants might see an anger face on a body posture signifying anger. Incongruent trials had one emotion conveyed in the face but a different emotion suggested by the surrounding context. For example, an incongruent trial might involve an anger face imposed upon a scene signifying disgust. We measured participants’ responses to these stimuli using eye-tracking technology, which has the capability to provide a fine-grained analysis of visual attention including the amount of time individuals spent looking at the face versus the contextual information, and their latency to fixate on the face versus the context. Because few data address this issue in children, we had no a priori basis for selecting specific ages at which emotion processing differences might emerge. Therefore, we compared college students to youth drawn from three different developmental epochs: preschool aged, school aged, and early adolescence.

We reasoned that adults have likely learned through experience that facial expressions are usually consistent with social contexts; therefore, although not a pure signal or a perfect predictor, basic facial configurations provide a quick and reliable cue as to how another person feels. This learning leads to increased efficiency (i.e., directing attention to more reliable cues) in social processing that can be elaborated in ambiguous or unusual situations. For this reason, we predicted that young adults would show greater allocation of visual attention to faces relative to surrounding context. We also theorized that young children may still be learning which facial expressions reliably co-occur with various social contexts. Therefore, they may still feel the need to attend to both facial and contextual information when making emotion judgments. We tested this hypothesis: that children would shift attention between the face and the surrounding context. Such a finding would be consistent with the view that children are refining and learning how to use facial expressions to make emotion judgments, rather than relying on a fixed readout directly from the face.

Additionally, we examined a related issue regarding developmental changes in eye gaze. As reviewed above, contextual information appears to modulate the aspects of the face to which adults allocate attention. We therefore planned to explore whether eye-gaze patterns to different regions of the face varied across age groups to further elucidate developmental changes in emotion processing. The purpose of this analysis was to explore whether contextual effects do not just reflect late, high-level, interpretive processes, but also change basic levels of visual processing of emotional expressions.

Method

Participants

One hundred participants in four age groups were recruited and completed the current study: children ages 4, 8, and 12 and college students. Twenty-five 4-year-old children (Mage = 4 years, 3 months; SD = 6 months; 48% girls) were recruited through a local preschool. Twenty-five 8-year-old children (Mage = 8 years, 6 months; SD = 4 months; 52% girls) and 25 12-year-old children (Mage = 12 years, 6 months; SD = 4 months; 48% girls) were recruited from community elementary and middle schools. For our adult sample, we recruited 25 students (Mage = 19 years, 11 months; SD = 1 year, 6 months; 52% women) from a university introductory psychology course. Child participants of all ages were awarded a prize for their participation; university students were compensated with course credit. All participants had normal or corrected visual acuity. The university’s institutional review board approved the study procedures.

Stimuli

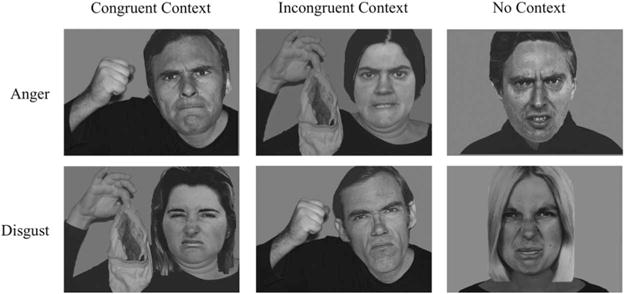

Stimuli were taken from Ekman and Friesen’s (1976) facial set and adapted by Aviezer et al. (2008, Experiment 3). Images of 10 individuals (5 females, 5 males) posing the basic facial expressions of anger and disgust (20 total faces) were combined with an image of a body posing anger, disgust, or no emotion. Each facial expression was presented with an anger context, with a disgust context, and in isolation (with no context). This resulted in a total of 60 images (see Figure 1). Five portraits of individuals expressing each of four other emotions (i.e., sad, happy, fear, surprise), for a total of 20 images, were presented as filler stimuli. Ten anger and disgust contexts consisting of bodies with blank ellipses covering faces were used to measure participants’ accuracy in identifying contexts of emotion in isolation. Participants in all age groups accurately identified all filler stimuli (ps < .001; see the online supplemental Table S1) and isolated contexts of anger and disgust (ps < .001; Table 1) at levels better than chance.
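The factorial structure of the target stimuli can be sketched in a few lines of Python. This is only an illustration of the counts reported above, not the authors' stimulus-generation code; the identity labels and the `congruence` helper are hypothetical names introduced here.

```python
from itertools import product

# Hypothetical labels for the 10 posers; the source reports only the counts.
identities = [f"id{i:02d}" for i in range(1, 11)]
expressions = ["anger", "disgust"]          # facial expressions
contexts = ["anger", "disgust", "none"]     # body context, or face in isolation


def congruence(expression, context):
    """Label a face-context pairing as congruent, incongruent, or none."""
    if context == "none":
        return "none"
    return "congruent" if expression == context else "incongruent"


# Fully crossed design: 10 identities x 2 expressions x 3 contexts.
target_stimuli = [
    {"identity": i, "face": e, "context": c, "condition": congruence(e, c)}
    for i, e, c in product(identities, expressions, contexts)
]

# Fillers: five portraits for each of four other emotions.
filler_emotions = ["sad", "happy", "fear", "surprise"]
n_fillers = 5 * len(filler_emotions)

print(len(target_stimuli), n_fillers)
```

Crossing the factors this way yields 20 images in each of the congruent, incongruent, and no-context conditions.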

Figure 1.

Examples of anger and disgust faces with a congruent, incongruent, or no context. From Aviezer et al. (2008). Facial expressions from Ekman and Friesen (1976). Reproduced with permission from the Paul Ekman Group.

Table 1.

Corrected Mean Proportion Accuracy (Standard Error) for Contexts in the Absence of Facial Expressions

| Age group | Anger context | Disgust context |
|---|---|---|
| 4-year-olds | .60 (.10) | .48 (.12) |
| 8-year-olds | .96 (.02) | .91 (.03) |
| 12-year-olds | .98 (.01) | .91 (.03) |
| College students | .94 (.04) | .88 (.04) |

Note. Values displayed represent corrected mean proportions to account for different probabilities of guessing correct due to the different number of response options across age groups. Corrected means calculated via formula scoring (i.e., the proportion of incorrect responses divided by one less than the number of response options, subtracted from the proportion of correct responses). Identifying all stimuli correctly would result in a score of 1, and chance performance would result in a score of 0. Negative values indicate below chance performance.

Procedure

Stimuli were presented on a 24-in. monitor at a resolution of 1920 × 1200 pixels, preceded by a fixation cross to reposition participants’ gaze to the center of the screen. The fixation cross was replaced by stimuli only after 1,000 ms of continuous gaze was directed toward the cross. Stimuli were presented for 5,000 ms to provide for adequate eye tracking collection. All stimuli remained present on screen while participants categorized portraits. Stimuli were presented randomly in a within-participants design with the exception of the control stimuli, which were always presented at the end of the experiment.

Participants were prompted with the question “What is this person feeling?” and, with the exception of 4-year-olds, instructed to choose from a list of seven emotions: anger, disgust, fear, happiness, sadness, surprise, and pride. Pride was included because of its perceptual similarity to disgust despite its opposing valence (see Aviezer et al., 2008, Experiment 2). Pilot data indicated that the majority of the 4-year-old participants had difficulty remembering seven choices. Therefore, we presented 4-year-olds with two options (the emotions expressed by the face and the body). Participants were asked to look freely at the image and respond at their own pace.

The computer task was administered using E-Prime Psychology Software 2.0 (Psychology Software Tools; Pittsburgh, PA) on a wide-screen Tobii T60XL Eye Tracker using binocular pupil tracking and sampling at a rate of 60 Hz. Participants were seated at a viewing distance of approximately 70 cm and eye movements were calibrated using a 5-point calibration-accuracy test. We removed data from any face-context condition where a participant had missing eye-tracking data for three consecutive trials within that condition. Less than 1% of data was removed from analyses.
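The exclusion rule described above (dropping a face-context condition when a participant has three consecutive trials with missing eye-tracking data) could be implemented along the following lines. This is a minimal sketch under stated assumptions: the function names, the boolean per-trial "missing" flags, and the example data are hypothetical, and the source does not specify how a trial was classified as missing.

```python
def has_missing_run(missing_flags, run_length=3):
    """Return True if the flags contain `run_length` consecutive missing trials."""
    streak = 0
    for missing in missing_flags:
        streak = streak + 1 if missing else 0
        if streak >= run_length:
            return True
    return False


def conditions_to_drop(trials_by_condition, run_length=3):
    """List the conditions whose trial sequence contains a long enough missing run."""
    return [
        condition
        for condition, flags in trials_by_condition.items()
        if has_missing_run(flags, run_length)
    ]


# Hypothetical data for one participant: True marks a trial with missing gaze data.
example = {
    "anger_congruent": [False, True, True, False, True],    # no run of three
    "anger_incongruent": [False, True, True, True, False],  # run of three
}
print(conditions_to_drop(example))
```

Scattered missing trials do not trigger exclusion under this reading of the rule; only an unbroken run within a single condition does.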

All data were collected in a suite designed specifically for eye-tracking studies that provided minimal distractions. With the exception of 4-year-old participants, all trials were completed in one 50-min session. Four-year-old participants completed the same number of trials as all other age groups; however, the task was completed over three equal-length sessions to reduce fatigue. All 4-year-old participants completed the three sessions every other day for 5 days. All participants completed the task without difficulty and none were excluded from data analyses.

Results

Data Analytic Plan

We first examined age-related differences in recognition of, and attention to, facial expressions of emotion, with and without accompanying contextual information. Next, we tested our a priori hypothesis that young adults would allocate greater attention to the face relative to context, whereas children would divide their attention between the face and context. To do so, we analyzed age-related differences in the percentage of time participants looked at the face relative to the surrounding context. When these age-related differences in visual attention emerged, we conducted planned analyses to explore the potential influence of context on attention to different regions of the face (i.e., eyes vs. mouth). We also examined age-related differences for latency to first fixation to the face relative to the context; the pattern of results was similar to that for gaze duration, so the means are presented only in online supplemental Table S2.

Because 4-year-olds selected from two response options, whereas other participants had seven choices, we used a formula to adjust for the probability of correct guesses across age groups. Accuracy data are presented as the proportion of incorrect responses divided by one less than the number of response options, subtracted from the proportion of correct responses (see Frary, 1988). These standardized scores reflect chance performance as 0, perfect performance as 1, and below chance performance as below zero. All p values for analyses of variance (ANOVAs) were adjusted using the Greenhouse–Geisser correction for violations of sphericity; post hoc comparisons were Bonferroni corrected. Sex of participant was considered, but did not emerge as a significant covariate in any analysis.
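The formula-scoring correction described above can be written compactly. The sketch below follows the verbal definition in the text; the function name is hypothetical, and it assumes that every trial received a response, so that the proportion of incorrect responses equals one minus the proportion of correct responses.

```python
def corrected_score(p_correct, n_options):
    """Formula score: p_correct - p_incorrect / (n_options - 1).

    Assumes every trial was answered, so p_incorrect = 1 - p_correct.
    """
    if n_options < 2:
        raise ValueError("formula scoring needs at least two response options")
    p_incorrect = 1.0 - p_correct
    return p_correct - p_incorrect / (n_options - 1)


# Chance-level responding maps to about 0 in both response formats.
print(corrected_score(0.5, 2))      # two options (4-year-olds)
print(corrected_score(1 / 7, 7))    # seven options (older groups)

# An option that is never chosen among seven scores -1/6.
print(corrected_score(0.0, 7))
```

The same transformation appears consistent with the reported response tables: a label that is never endorsed among seven options scores −1/6, or approximately −.17, and with two options the scores for the two labels are mirror images of one another.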

Accuracy in Identifying Emotion With and Without Context

We began by assessing whether pairing facial expressions with congruent or incongruent contexts affected accuracy. We then tested for differences in participants’ identification of the facial stimuli in isolation.

We examined participants’ performance using one-sample t tests comparing corrected performance to chance in identifying angry and disgust faces paired with congruent and incongruent contexts. There were no differences between age groups in participants’ identification of anger and disgust faces when viewed within congruent contexts (at levels better than chance, ps < .001). However, differences emerged when the facial expressions and surrounding contexts were incongruent. When angry faces were presented with an incongruent context, 4-year-olds, 8-year-olds, and 12-year-olds were more likely to identify the person in the image as feeling the emotion conveyed by the context (ps < .001) whereas young adult participants selected the emotion expressed in the face (see Table 2). When a disgust face was paired with an incongruent context, participants in all of the age groups chose the emotion conveyed in the context rather than the emotion in the face (ps < .01; Table 3). When angry faces were presented in isolation (without context), all participants identified them as angry at levels better than chance (ps < .001; Table 2). However, 4-year-olds, 8-year-olds, and 12-year-olds were more likely to identify isolated disgust faces as angry (ps < .01), whereas college students mostly chose disgust (p < .001; Table 3).
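The comparisons to chance reported above rest on a one-sample t statistic computed on the corrected scores, with chance fixed at 0. A minimal standard-library sketch follows; the function name and the example scores are hypothetical and are not data from the study. Converting t to a p value requires the t distribution, which is omitted here.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(scores, mu=0.0):
    """Return (t, df) for a one-sample t test of `scores` against `mu`.

    Raises ZeroDivisionError if all scores are identical (zero variance).
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    standard_error = stdev(scores) / sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(scores) - mu) / standard_error, n - 1


# Hypothetical corrected accuracy scores for four participants.
t_value, df = one_sample_t([0.5, 0.7, 0.6, 0.8], mu=0.0)
print(round(t_value, 2), df)
```

Because the corrected scores already place chance at 0 for every age group, the same test value can be used regardless of how many response options a group received.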

Table 2.

Corrected Mean Proportion of All Responses (Standard Error) for Facial Expressions of Anger

| Age group and context (rows) by labeled emotion (columns) | Angry | Disgusted | Scared | Happy | Proud | Sad | Surprised |
|---|---|---|---|---|---|---|---|
| 4-year-olds | | | | | | | |
| Congruent | **.66 (.11)** | −.66 (.08) | | | | | |
| Incongruent | **−.65 (.12)** | .65 (.13) | | | | | |
| None | **.51 (.12)** | −.51 (.09) | | | | | |
| 8-year-olds | | | | | | | |
| Congruent | **.92 (.02)** | −.15 (.01) | −.17 (.00) | −.16 (.00) | −.17 (.00) | −.13 (.02) | −.14 (.01) |
| Incongruent | **.13 (.06)** | .67 (.07) | −.15 (.01) | −.17 (.00) | −.17 (.00) | −.16 (.00) | −.16 (.01) |
| None | **.68 (.05)** | −.12 (.01) | −.13 (.01) | −.15 (.01) | −.16 (.00) | .00 (.04) | −.11 (.01) |
| 12-year-olds | | | | | | | |
| Congruent | **.91 (.03)** | −.15 (.01) | −.14 (.02) | −.17 (.00) | −.17 (.00) | −.12 (.02) | −.17 (.00) |
| Incongruent | **.21 (.05)** | .54 (.06) | −.16 (.01) | −.17 (.00) | −.17 (.00) | −.12 (.02) | −.14 (.01) |
| None | **.62 (.04)** | −.12 (.02) | −.10 (.02) | −.17 (.00) | −.17 (.00) | .02 (.03) | −.08 (.02) |
| College students | | | | | | | |
| Congruent | **.91 (.02)** | −.16 (.00) | −.15 (.01) | −.17 (.00) | −.17 (.00) | −.12 (.02) | −.13 (.01) |
| Incongruent | **.42 (.04)** | .26 (.04) | −.14 (.01) | −.16 (.00) | −.16 (.00) | −.13 (.01) | −.09 (.02) |
| None | **.72 (.03)** | −.12 (.02) | −.15 (.01) | −.15 (.01) | −.16 (.01) | .07 (.02) | −.07 (.02) |

Note. Values displayed represent corrected mean proportions to account for different probabilities of guessing correct due to the different number of response options across age groups. Corrected means calculated via formula scoring (i.e., the proportion of incorrect responses divided by one less than the number of response options, subtracted from the proportion of correct responses). Identifying all stimuli correctly would result in a score of 1, and chance performance would result in a score of 0. Negative values indicate below chance performance. Bolded column represents corrected proportion of correct responses; nonbolded columns represent corrected proportion of endorsements of incorrect responses.

Table 3.

Corrected Mean Proportion of All Responses (Standard Error) for Facial Expressions of Disgust

| Age group and context (rows) by labeled emotion (columns) | Angry | Disgusted | Scared | Happy | Proud | Sad | Surprised |
|---|---|---|---|---|---|---|---|
| 4-year-olds | | | | | | | |
| Congruent | −.61 (.09) | **.61 (.12)** | | | | | |
| Incongruent | .68 (.12) | **−.68 (.09)** | | | | | |
| None | .53 (.12) | **−.53 (.11)** | | | | | |
| 8-year-olds | | | | | | | |
| Congruent | −.06 (.03) | **.85 (.04)** | −.17 (.00) | −.15 (.01) | −.16 (.01) | −.15 (.01) | −.16 (.00) |
| Incongruent | .94 (.02) | **−.13 (.02)** | −.17 (.00) | −.16 (.00) | −.17 (.00) | −.16 (.01) | −.16 (.00) |
| None | .47 (.05) | **.17 (.05)** | −.15 (.01) | −.13 (.01) | −.16 (.00) | −.07 (.02) | −.13 (.01) |
| 12-year-olds | | | | | | | |
| Congruent | −.05 (.03) | **.81 (.04)** | −.16 (.00) | −.16 (.01) | −.16 (.00) | −.12 (.02) | −.16 (.01) |
| Incongruent | .88 (.03) | **−.12 (.02)** | −.16 (.00) | −.16 (.00) | −.15 (.02) | −.14 (.01) | −.14 (.02) |
| None | .41 (.07) | **.24 (.06)** | −.15 (.01) | −.15 (.01) | −.15 (.01) | −.06 (.02) | −.15 (.01) |
| College students | | | | | | | |
| Congruent | −.08 (.02) | **.88 (.02)** | −.16 (.00) | −.16 (.01) | −.16 (.00) | −.16 (.01) | −.16 (.01) |
| Incongruent | .83 (.05) | **−.08 (.03)** | −.16 (.00) | −.16 (.00) | −.14 (.02) | −.13 (.02) | −.15 (.01) |
| None | .27 (.05) | **.44 (.05)** | −.16 (.01) | −.16 (.01) | −.15 (.01) | −.10 (.02) | −.14 (.01) |

Note. Values displayed represent corrected mean proportions to account for different probabilities of guessing correct due to the different number of response options across age groups. Corrected means calculated via formula scoring (i.e., the proportion of incorrect responses divided by one less than the number of response options, subtracted from the proportion of correct responses). Identifying all stimuli correctly would result in a score of 1, and chance performance would result in a score of 0. Negative values indicate below chance performance. Bolded column represents corrected proportion of correct responses; nonbolded columns represent corrected proportion of endorsements of incorrect responses.

Attention Allocation to Faces in Congruent and Incongruent Contexts

Next, we assessed visual allocation of attention to the various stimuli. To do so we conducted a 4 (age: 4-year-olds, 8-year-olds, 12-year-olds, and college students) × 2 (facial expression: anger, disgust) × 2 (context: congruent, incongruent) mixed-model ANOVA for the percent gaze duration toward the face. Age was a between-subjects factor and facial expression and context were within-subject factors. An ellipse that spanned from the hairline down to the chin and from temple to temple defined the face. The context included stimuli outside of this defined face area.
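The outcome measure described above can be illustrated with a short sketch: gaze samples are classified as falling inside or outside an elliptical face region, and the percentage inside is taken as percent gaze duration toward the face. The class and function names, the pixel coordinates, and the use of raw sample counts are assumptions made for illustration; the source does not report the region coordinates or the software's exact computation. The sketch also assumes equally spaced samples (as with a fixed 60 Hz sampling rate), so that sample counts are proportional to time, and that every valid sample falls on either the face or the context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ellipse:
    """Axis-aligned elliptical region of interest, in screen pixels."""
    cx: float  # center x
    cy: float  # center y
    rx: float  # horizontal radius (temple to temple / 2)
    ry: float  # vertical radius (hairline to chin / 2)

    def contains(self, x, y):
        return ((x - self.cx) / self.rx) ** 2 + ((y - self.cy) / self.ry) ** 2 <= 1.0


def percent_gaze_to_face(samples, face):
    """Percent of valid gaze samples inside the face ellipse (0 to 100).

    Samples with a missing coordinate (None) are ignored; returns None
    if no valid samples remain.
    """
    valid = [(x, y) for x, y in samples if x is not None and y is not None]
    if not valid:
        return None
    on_face = sum(face.contains(x, y) for x, y in valid)
    return 100.0 * on_face / len(valid)


# Hypothetical face region and gaze samples on a 1920 x 1200 display.
face = Ellipse(cx=960, cy=400, rx=150, ry=200)
samples = [(960, 400), (960, 590), (960, 900), (None, None)]
print(percent_gaze_to_face(samples, face))
```

Under this definition, exclusive focus on the face yields 100 and looking entirely at the context yields 0, matching the scale on which the gaze results are reported.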

Main effects of age, F(1, 96) = 40.40, p < .001, and of facial expression, F(1, 96) = 3.53, p = .063, emerged, and were qualified by a Facial Expression × Context interaction, F(1, 96) = 354.20, p < .001. Yet, this interaction was further qualified by a three-way interaction, F(3, 96) = 9.83, p < .001. Taken together, these analyses indicate that participants of different ages attended differently to each facial expression of emotion depending upon the context in which the facial expression was presented. These means are reported in Table 4.

Table 4.

Mean Percent Fixation Duration (Standard Error) Toward the Face Relative to the Context for Anger and Disgust Faces in Congruent, Incongruent, and No Contexts

| Age group | Anger face, congruent | Anger face, incongruent | Anger face, no context | Disgust face, congruent | Disgust face, incongruent | Disgust face, no context |
|---|---|---|---|---|---|---|
| 4-year-olds | 70.08 (2.08) | 49.55 (2.71) | 83.55 (1.97) | 46.64 (1.93) | 67.91 (3.06) | 80.81 (2.34) |
| 8-year-olds | 74.77 (1.85) | 49.41 (2.40) | 82.10 (2.29) | 47.75 (2.84) | 71.68 (2.40) | 80.36 (3.54) |
| 12-year-olds | 79.45 (1.88) | 60.33 (2.53) | 87.84 (1.74) | 60.48 (3.16) | 80.35 (1.76) | 85.65 (2.01) |
| College students | 88.10 (1.11) | 79.37 (1.64) | 94.01 (1.37) | 76.75 (2.44) | 87.95 (1.80) | 95.31 (0.89) |

Note. Percent gaze duration reflects the percent of time participants looked toward the face. Exclusive focus on the face would result in a score of 100, and looking entirely at the context would result in a score of 0.

We first examined the facial expression by context interaction to explore general differences in attention irrespective of age. We found that participants allocated less attention to the face and more attention to the context when viewing anger, F(1, 96) = 241.58, p < .001, and disgust, F(1, 96) = 210.89, p < .001, faces paired with a disgust context than they did when viewing those same faces in an anger context (ps < .001).

To understand the three-way interaction, we conducted separate analyses of gaze duration toward each facial expression. The primary test of our hypothesis concerned how participants processed stimuli when the face and the context conveyed different emotions. Indeed, there were differences across the age groups with regard to both angry, F(3, 96) = 36.03, p < .001, and disgust, F(3, 96) = 15.14, p < .001, faces. When presented with incongruent emotion information, college students focused more on the face than 4-year-olds and 8-year-olds for both emotions (ps < .001). Compared with 12-year-olds, college students looked more to the face when viewing anger (p < .001) but were no different when viewing disgust (p = .13). Within the child sample, 12-year-olds allocated more attention to the faces than did 4-year-olds and 8-year-olds for both emotions (ps < .05). Four-year-olds and 8-year-olds did not differ from one another in how much time they spent viewing the faces (ps > 1).

Further, parameter estimates revealed that regardless of context, 12-year-olds and college students focused significantly more on the face relative to the context (ps < .001). Four-year-olds and 8-year-olds also looked more to the face compared with contextual information when viewing emotional faces with an anger context (ps < .001), yet looked to the face and context equally when the faces appeared with a disgust context (ps > .20). In sum, increasing age of participants was associated with increased attention to faces and decreased attention to contexts.

Do Age Differences in Attention to Faces Explain Results?

We next examined whether visual attention to faces without context accounted for the differences that we observed between age groups when viewing angry and disgust faces in congruent and incongruent contexts. To do so, we analyzed visual attention to facial expressions of anger and disgust in the absence of emotional contextual information using a 4 (age: 4-year-olds, 8-year-olds, 12-year-olds, and college students) × 2 (facial expression: anger, disgust) mixed-model ANOVA. Gaze duration toward the face was the outcome variable, age was a between-subjects factor, and facial expression was a within-subjects factor. Face and context areas were defined in the same manner as stated above.

As shown in Table 4, there was a significant main effect of age, F(3, 96) = 12.23, p < .001. Further examination of this finding revealed that college students allocated more visual attention to both anger and disgust faces when presented without context than did all other age groups (ps < .01). The three groups of children, however, did not differ from one another (ps > .18). We entered the percent of time each participant spent viewing faces in isolation as a covariate into our analyses examining visual attention toward angry and disgust faces in congruent and incongruent contexts. These covariates did not emerge as significant, nor did they change the pattern of results.

Does Context Influence Attention to the Face?

Given the differences between younger and older participants in their allocation of visual attention to faces versus contexts, we conducted an additional exploratory analysis. Here we examined whether we could detect visual processing differences in how participants attended to faces. Such data would suggest that contexts were influencing visual processing of emotion expressions despite the absence of an effect on later, higher-order interpretations. We defined the eye region by an ellipse from the upper nose to the brow and from temple to temple; the mouth region was the area immediately surrounding the mouth and lips extending up to the middle of the nose. We conducted a 4 (age: 4-year-olds, 8-year-olds, 12-year-olds, and college students) × 2 (facial expression: anger, disgust) × 2 (context: congruent, incongruent) mixed-model ANOVA for difference in percent gaze duration toward the eyes and mouth with age as a between-subjects factor. We also conducted an analysis for latency to first fixation and found similar results.

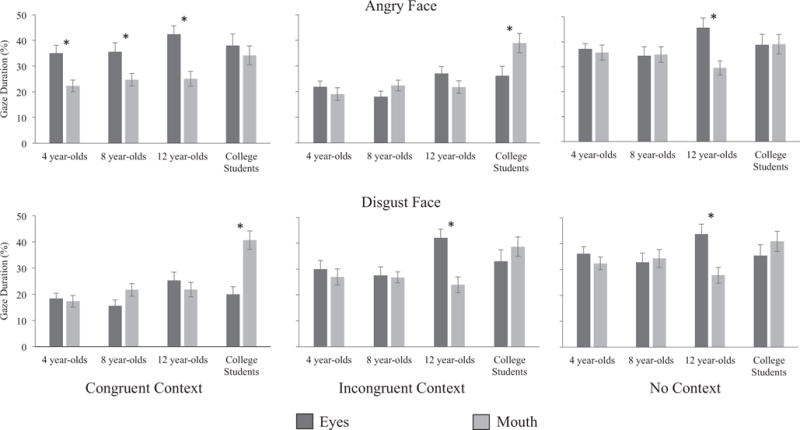

Main effects emerged for facial expression, F(1, 96) = 20.87, p < .001; context, F(1, 96) = 3.05, p = .06; and age, F(3, 96) = 2.21, p = .09. These main effects were accompanied by a Facial Expression × Age interaction, F(3, 96) = 3.11, p = .03; a Facial Expression × Context interaction, F(3, 96) = 57.70, p < .001; and a Context × Age interaction, F(3, 96) = 2.88, p = .01. However, all of these effects were further qualified by a three-way Facial Expression × Context × Age interaction, F(2, 96) = 2.22, p = .05. As explained below, and shown in Figure 2, context differentially influenced visual scanning of facial features across the age groups.

Figure 2.

Percent gaze duration toward the eyes versus the mouth, by age group, when viewing anger and disgust faces in congruent, incongruent, and no-context conditions. Error bars represent standard errors of the means. *p < .05.

When viewing angry faces in a congruent context, college students allocated equal attention to the eyes and mouth (p = .36); yet when the context was incongruent with the angry face, they looked more toward the mouth (p = .01), F(2, 96) = 15.47, p < .001. In contrast, all three groups of children showed the opposite pattern: they relied on the eyes when viewing anger in a congruent context (ps < .08), but attended equally to the eyes and the mouth when the face and context were incongruent (ps > .21); 4-year-olds: F(2, 96) = 8.68, p < .001; 8-year-olds: F(2, 96) = 14.59, p < .001; 12-year-olds: F(2, 96) = 8.80, p < .001.

College students, F(2, 95) = 13.17, p < .001, allocated their attention equally to the eyes and mouth when viewing a disgust face in an incongruent context (p = .36), yet looked more toward the mouth when viewing disgust in a congruent context (p < .001). Twelve-year-olds, F(2, 95) = 10.90, p < .001, on the other hand, looked equally to the eyes and mouth when viewing a disgust face in a congruent context (p = .29) and looked more to the eyes when viewing a disgust face in an incongruent context (p = .005). However, 4-year-olds, F(2, 95) = .356, p = .702, and 8-year-olds, F(2, 95) = 2.21, p = .115, allocated their attention to the eye and mouth regions equally regardless of context congruence (ps > .21).

Discussion

In the present study, we examined how people of different ages processed stimuli where different emotions were conveyed in the face and the surrounding context. Consistent with our hypotheses, we found that older children and young adults allocated more visual attention to facial expressions relative to accompanying contextual information when making emotion judgments. Younger children, on the other hand, allocated their attention toward facial and contextual information differently depending on the contextual content. Further, age-related differences were evident in participants’ visual attention toward particular features of the face. In sum, the manner in which younger participants devoted processing to different sources of potential emotional information was dependent on the emotional signal of the context. Older participants, however, consistently allocated more attention to emotional information gleaned from the face over that provided by the context.

Findings from the current study suggest that an important change in emotional development concerns the manner in which individuals attend to contextual information in perceiving and making judgments about facial expressions. Though all participants looked more toward contexts that depicted disgust than anger, how the context influenced individuals’ allocation of attention toward the face relative to the context differed by age. Older children and college students allocated most of their visual attention to the face regardless of the context emotion. Younger participants actively attended to contextual information when making emotion judgments, particularly on incongruent trials. It may be that younger children look more to contextual information when they have difficulty identifying the face. Indeed, 4-year-old children accurately identified isolated contexts of disgust but were unable to accurately identify isolated disgust faces. However, participants of all ages were able to identify isolated angry faces, though only 4- and 8-year-olds split their attention equally between facial and contextual information. Thus, how individuals allocate their attention to facial and contextual cues may have less to do with their ability to identify isolated facial expressions and more to do with how they learn to attend to competing emotion cues.

Although more mature participants focused more exclusively on the face, it was not the case that context had no influence on their processing of emotion cues. College students and older children did view contextual information, particularly when the context conveyed disgust, but only for a short period of time. Additionally, unlike 4-year-olds and 8-year-olds, older children’s and college students’ gaze patterns toward specific features of the face were dependent on the emotion in the context. Past research corroborates the influence of context on viewing emotional faces. Aviezer and colleagues (2008) found that among adults, context influenced visual attention toward specific facial features. The current study found similar results for 12-year-olds and college students but not for younger children. And although 12-year-old participants more closely resembled college students than the two groups of younger children on many measures, adult-like emotion perception was not yet fully achieved by the 12-year-olds. These findings provide further evidence for developmental changes in emotion perception and suggest the possibility of the emergence of adult-like patterns of emotion perception beginning sometime after 8 years of age and developing into early adolescence.

Development of Contextual Integration

The current data are consistent with the view that emotion perception involves learning processes. Although more experienced emotion perceivers—who have presumably honed their skills—can make efficient judgments from facial cues, younger children are not making such judgments based solely on facial displays. Both faces and the contexts in which the facial expressions are produced carry information necessary for determining another’s emotional state. One possibility is that young emotion learners are tracking the co-occurrence of facial expressions with situational events. Such a process, however, may be resource intensive early in the course of learning (e.g., Couperus, 2011). In particular, young emotion learners may have more experience with the emotions of anger and happiness than they do an emotion such as disgust and can therefore more easily and efficiently predict the environmental antecedents of those emotions. Lack of experience with an emotion such as disgust may result in greater ambiguity and, consistent with the current results, possibly make it more difficult for younger children to interpret. More experienced individuals may be able to make efficient—and though not definitive, at least probabilistically sound—assessments based upon rapid decoding of facial cues.

In much the same manner as children—who are presumably learning what situational contexts are associated with changes in facial musculature—adults, too, may need to refer back to contextual cues. Adults may do so when facial signals alone are too indeterminate because of perceptual similarity (e.g., anger and disgust, sadness and shame; see Aviezer et al., 2008). In most real life situations, facial and contextual emotions are likely to be closely aligned. However, ambiguous and unusual situations also naturally occur, and in these cases, perceivers seem to adopt a strategy of combining or integrating information available to them from both the context and the face (Noh & Isaacowitz, 2013). Indeed, the current findings provide evidence that all participants attended to both the face and the context and that emotion judgments were not solely based on one cue over the other. This suggests that individuals of all ages were integrating facial and contextual information when making emotion decisions but to different extents. This strategy has also been found to emerge early and vary across development (Mondloch, 2012; Mondloch et al., 2013).

In this manner, reliably judging facial expressions appears to be a skill that develops with experience (Batty & Taylor, 2006; Gao & Maurer, 2009; Herba, Landau, Russell, Ecker, & Phillips, 2006). Part of this learning process also likely involves determining what contextual information is relevant to making an emotion judgment versus what can be ignored without further processing. A more experienced emotion learner is likely to attend to a raised fist, hunched shoulders, or interpersonal context (a job interview, a birthday party) when trying to disambiguate an unclear facial expression. Such a skilled learner is less likely to attend to features in the environment such as the hairstyle or the shirt color of another individual in this type of situation. This is because such sources of information would not have yielded aid in emotion perception over the perceiver’s learning history. Thus, part of emotional development is learning which cues in the environment are helpful and which are not.

Cross-cultural research also suggests that judgments of facial expressions of emotion reflect learning. Research analyzing cultural differences in the influence of context on facial judgments has found that Eastern cultures place greater emphasis on contextual information than do Western cultures (see Ko, Lee, Yoon, Kwon, & Mather, 2011; Nisbett, Peng, Choi, & Norenzayan, 2001). As mentioned earlier, individuals from Eastern and Western cultures have also been found to show differences in how they prioritize specific features of the face (Eisenbarth & Alpers, 2011; Yuki et al., 2007). These studies provide further support for the role of experience and change in how individuals perceive and integrate facial and contextual emotion and aid in the interpretation of the current findings.

Future Directions

The present study provided a fine-grained analysis of children’s and college students’ visual processing of emotional stimuli. However, it is unclear why the specific disgust stimulus used in the current study exerted a differential influence on children’s visual attention. It may be that this stimulus is more complex, interesting, unusual, or difficult to decode. Future research will need to confirm that these results do not reflect stimulus-specific effects and that they generalize beyond the depictions we used to represent disgust and anger, which consisted of only one exemplar of each emotion to reduce variability. Future research may also want to investigate visual attention to neutral contextual information, and not only to the absence of contextual information examined in the present study. Pilot data indicated that our 4-year-old sample had difficulty with the seven emotion choices presented during the task. Therefore, we had them choose from only two emotion options. This change in procedure is not likely to impact the main dependent variables here. Nonetheless, future research in this area could work to design tasks and instructions that do not change across age groups. Further, we presented participants with stimuli for 5,000 ms. This duration is appropriate for the analysis of gaze patterns and general attention allocation, but also gave participants a relatively long period of time to label facial expressions of emotion. This time window may have also allowed for elaborated processing of stimuli before participants provided a response. Future work could determine if age-related changes continue to emerge in the case of fast or subliminal judgments of facial expressions.

Conclusion

The current findings provide support for the role of experience and learning in the development of emotion perception. Further, these results contribute to the debate over whether the face or context is most influential in the perception of emotion. A considerable amount of past research has focused on the relative importance of facial versus contextual information when making emotion judgments. It may be that inconsistent findings about the primacy of the face in the emotion literature reflect an assumption that emotion processing is a relatively stable process, rather than one with elements of learning and change over development. The results of this experiment are consistent with the view that the relative predominance of facial versus contextual cues in emotion perception may vary depending upon developmental stage. Adults, who are more experienced emotion viewers, appear to allocate more of their attention toward faces and use contextual information when they need further information to aid their judgments of emotional expressions. Younger children, however, devote greater attention toward contextual information and actively cross-reference facial and contextual cues, presumably to better understand those signals. Thus, the efficiency with which individuals integrate sources of emotional information may be subject to both learning and experience. The issue, then, may not be whether the face or the context is more important in emotion recognition, but rather when in development the face or the context is more important.

Acknowledgments

This research was supported by the U.S. National Institute of Mental Health through Grant MH61285 to Seth D. Pollak and support for Brian T. Leitzke through T32-MH018931-23, as well as the National Institute of Child Health and Human Development through the Waisman Center Intellectual and Developmental Disabilities Research Center Grant P30HD03352.

We thank Kristin Shutts and Heather Kirkorian for their advice throughout this project; Rista Plate and Lori Hilt for comments on drafts of this article; Robert Olson for help processing the gaze data; and Jordin Barber, Austin Kayser, Abby Studinger, Mercedes Voet, and Barb Roeber for their help with data collection.

References

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, Bentin S. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science. 2008;19:724–732. http://dx.doi.org/10.1111/j.1467-9280.2008.02148.x

- Aylward EH, Park JE, Field KM, Parsons AC, Richards TL, Cramer SC, Meltzoff AN. Brain activation during face perception: Evidence of a developmental change. Journal of Cognitive Neuroscience. 2005;17:308–319. http://dx.doi.org/10.1162/0898929053124884

- Batty M, Taylor MJ. The development of emotional face processing during childhood. Developmental Science. 2006;9:207–220. http://dx.doi.org/10.1111/j.1467-7687.2006.00480.x

- Buck R. Social and emotional functions in facial expression and communication: The readout hypothesis. Biological Psychology. 1994;38:95–115. http://dx.doi.org/10.1016/0301-0511(94)90032-9

- Camras LA. Expressive development and basic emotions. Cognition and Emotion. 1992;6:269–283. http://dx.doi.org/10.1080/02699939208411072

- Carroll JM, Russell JA. Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology. 1996;70:205–218. http://dx.doi.org/10.1037/0022-3514.70.2.205

- Couperus JW. Perceptual load influences selective attention across development. Developmental Psychology. 2011;47:1431–1439. http://dx.doi.org/10.1037/a0024027

- Eisenbarth H, Alpers GW. Happy mouth and sad eyes: Scanning emotional facial expressions. Emotion. 2011;11:860–865. http://dx.doi.org/10.1037/a0022758

- Ekman P. An argument for basic emotions. Cognition and Emotion. 1992;6:169–200. http://dx.doi.org/10.1080/02699939208411068

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Frary RB. Formula scoring of multiple-choice tests (correction for guessing). Educational Measurement: Issues and Practice. 1988;7:33–38. http://dx.doi.org/10.1111/j.1745-3992.1988.tb00434.x

- Gao X, Maurer D. Influence of intensity on children’s sensitivity to happy, sad, and fearful facial expressions. Journal of Experimental Child Psychology. 2009;102:503–521. http://dx.doi.org/10.1016/j.jecp.2008.11.002

- Gendron M, Roberson D, van der Vyver JM, Barrett LF. Perceptions of emotion from facial expressions are not culturally universal: Evidence from a remote culture. Emotion. 2014;14:251–262. http://dx.doi.org/10.1037/a0036052

- Herba CM, Landau S, Russell T, Ecker C, Phillips ML. The development of emotion-processing in children: Effects of age, emotion, and intensity. Journal of Child Psychology and Psychiatry. 2006;47:1098–1106. http://dx.doi.org/10.1111/j.1469-7610.2006.01652.x

- Izard CE. Basic emotions, relations among emotions, and emotion-cognition relations. Psychological Review. 1992;99:561–565. http://dx.doi.org/10.1037/0033-295X.99.3.561

- Ko SG, Lee TH, Yoon HY, Kwon JH, Mather M. How does context affect assessments of facial emotion? The role of culture and age. Psychology and Aging. 2011;26:48–59. http://dx.doi.org/10.1037/a0020222

- Malatesta C, Izard CE. The ontogenesis of human social signals: From biological imperative to symbol utilization. In: Fox N, Davidson R, editors. The psychobiology of affective development. Hillsdale, NJ: Erlbaum; 1984. pp. 161–206.

- Marsh AA, Elfenbein HA, Ambady N. Nonverbal “accents”: Cultural differences in facial expressions of emotion. Psychological Science. 2003;14:373–376. http://dx.doi.org/10.1111/1467-9280.24461

- Mondloch CJ. Sad or fearful? The influence of body posture on adults’ and children’s perception of facial displays of emotion. Journal of Experimental Child Psychology. 2012;111:180–196. http://dx.doi.org/10.1016/j.jecp.2011.08.003

- Mondloch CJ, Horner M, Mian J. Wide eyes and drooping arms: Adult-like congruency effects emerge early in the development of sensitivity to emotional faces and body postures. Journal of Experimental Child Psychology. 2013;114:203–216. http://dx.doi.org/10.1016/j.jecp.2012.06.003

- Nisbett RE, Peng K, Choi I, Norenzayan A. Culture and systems of thought: Holistic versus analytic cognition. Psychological Review. 2001;108:291–310. http://dx.doi.org/10.1037/0033-295X.108.2.291

- Noh SR, Isaacowitz DM. Emotional faces in context: Age differences in recognition accuracy and scanning patterns. Emotion. 2013;13:238–249. http://dx.doi.org/10.1037/a0030234

- Russell JA. A circumplex model of affect. Journal of Personality and Social Psychology. 1980;39:1161–1178. http://dx.doi.org/10.1037/h0077714

- Schwarzer G. Development of face processing: The effect of face inversion. Child Development. 2000;71:391–401. http://dx.doi.org/10.1111/1467-8624.00152

- Tomkins SS. Affect, imagery, consciousness: Vol. I. The positive affects. New York, NY: Springer; 1962.

- Tomkins SS. Affect, imagery, consciousness: Vol. II. The negative affects. New York, NY: Springer; 1963.

- Trope Y. Identification and inferential processes in dispositional attribution. Psychological Review. 1986;93:239–257. http://dx.doi.org/10.1037/0033-295X.93.3.239

- Yuki M, Maddux WW, Masuda T. Are the windows to the soul the same in the East and West? Cultural differences in using the eyes and mouth as cues to recognize emotions in Japan and the United States. Journal of Experimental Social Psychology. 2007;43:303–311. http://dx.doi.org/10.1016/j.jesp.2006.02.004
