Abstract

Delay discounting refers to the decrease in subjective value of an outcome as the time to its receipt increases. Across species and situations, animals discount delayed rewards, and their discounting is well described by a hyperboloid function. The current review begins with a comparison of discounting models and the procedures used to assess delay discounting in nonhuman animals. We next discuss the generality of discounting, reviewing the effects of different variables on the degree to which nonhuman animals discount delayed reinforcers. Despite the many similarities in discounting observed between human and nonhuman animals, several differences have been proposed (e.g., the magnitude effect; nonhuman animals discount over a matter of seconds, whereas humans report being willing to wait months, if not years, before receiving a reward), raising the possibility of fundamental species differences in intertemporal choice. After evaluating these differences, we discuss delay discounting from an adaptationist perspective. The pervasiveness of discounting across species and situations suggests it is a fundamental process underlying decision making.

Keywords: Delay, Discounting, Magnitude Effect, Human, Animal, Hyperbolic

Pigeon, rat, monkey, which is which? It doesn’t matter…once you have allowed for differences in the ways in which they make contact with the environment, and in the ways in which they act upon the environment, what remains of their behavior shows astonishingly similar properties (Skinner, 1956, pp. 230–231).

Organisms continually are confronted with options that differ in amount, delay, quality, likelihood, effort, and valence, and must decide which among the available options to choose. For example, organisms make decisions concerning which food items to consume and when to do so. These decisions involve several factors like the price of the foods, their taste, the effort involved in obtaining them, how recently they were last consumed, their caloric value, and when they might next be available for consumption. Nonhuman animals make decisions regarding which prey to pursue and which plants or fruits to consume. Their decisions involve multiple tradeoffs in which many factors are considered, such as the amount of energy required to obtain a food source, the likelihood of success, or how depleted a patch is before leaving it to spend time and energy searching for and foraging in a more bountiful patch.

Choices are relatively simple when the available options differ on only one dimension: Individuals tend to prefer larger to smaller rewards, to receive them sooner rather than later, to expend less rather than more effort, and to receive them with a greater degree of certainty. Choice becomes substantially more difficult, however, when the options vary on more than one dimension. Consider, for example, the choice between an immediate $50 and $100 available in 1 year. Whereas $100 is preferred to $50, a sooner reward typically is preferred to a later one. Preference between a smaller but immediate reward and a larger but delayed reward changes within the same individual depending on the context, the commodities involved, their amounts, and the delay.

In the example above, some individuals may choose the immediate $50, despite $100 being an objectively larger amount. In such a case, the delayed $100 is said to have less present or subjective value than the immediate $50. The process by which outcomes lose subjective value as the delay to their receipt increases is termed delay discounting. The discounting framework (Green & Myerson, 2004) allows for the study and interpretation of a wide range of choice behaviors across species. A person’s desire for an immediately available sweet might lead that person to steeply discount the otherwise more highly valued physical fitness and health later in life. A monkey’s desire for a plant within reach might lead it to discount an otherwise more preferred fruit that requires greater effort and more time to obtain. The pervasiveness of discounting and its relation to behavioral traits such as impulsivity, self-control, and risk-taking have made discounting a topic of much interest across many fields, including psychology (for a review, Green & Myerson, 2004), economics (for a review, Frederick, Loewenstein, & O’Donoghue, 2002), marketing (Hershfield et al., 2011; Zauberman, Kim, Malkoc, & Bettman, 2009), and behavioral ecology (Kacelnik, 2003; Kacelnik & Bateson, 1996).

A related area of research, probability discounting, examines risky choices within the discounting framework. With probability discounting, the subjective value of an outcome decreases as the likelihood of its receipt decreases (i.e., as the odds against receiving it increase). There are many interesting similarities and differences between delay and probability discounting, and both are well described by a similar mathematical function (the hyperboloid discounting function; see Green, Myerson, & Vanderveldt, 2014, for a review). Because far less work has examined probability discounting in nonhuman animals, we restrict our review of the animal discounting literature to that of delay discounting.

Delay Discounting Models

Over the last several decades, psychologists and economists have been developing and empirically testing different mathematical models to describe discounting. The standard model in economics is the discounted utility model (Samuelson, 1937), an exponential discounting model of the form:

$$V = A e^{-kD} \qquad \text{(Equation 1)}$$

where V is the present subjective value of a larger delayed reward of amount A, D is the delay to its receipt, and k is a free parameter that represents the rate of discounting. Larger values of k are associated with steeper discounting (i.e., the delayed reward loses subjective value more quickly), which may indicate a higher degree of impulsivity (Madden & Bickel, 2010).¹ One characteristic of an exponential discounting model is the assumption that the risk associated with waiting for the reward is constant. This assumption, called the stationary axiom, holds that the rate of discounting remains constant across any given time period. If this axiom is true, then an organism’s preference between two alternatives that differ in amount and delay should not change when a common delay is added to both outcomes. In other words, only the difference between the delays to the smaller and larger rewards, and not their absolute delays, should play a role in the organism’s choices (Koopmans, 1960). For example, if an individual prefers to receive $50 immediately rather than wait 1 year for $100, then this person should still prefer $50 in 5 years over $100 in 6 years (Green & Myerson, 1996; Loewenstein & Prelec, 1991).
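To make the stationarity prediction concrete, the following is a minimal Python sketch of Equation 1. The discount rate K = 1.0 per year is an arbitrary illustrative value, and the helper names are hypothetical. Because adding a common delay multiplies both exponential values by the same factor, the ratio of the two subjective values, and therefore the preference, stays the same.

```python
import math

def exponential_value(amount, delay, k):
    """Equation 1: present subjective value V = A * exp(-k * D)."""
    return amount * math.exp(-k * delay)

def prefers_larger(ss, ll, k):
    """ss and ll are (amount, delay) pairs; True if the larger, later reward is worth more."""
    return exponential_value(*ll, k) > exponential_value(*ss, k)

K = 1.0  # illustrative discount rate per year (hypothetical)
now = prefers_larger((50, 0), (100, 1), K)    # $50 now vs. $100 in 1 year
later = prefers_larger((50, 5), (100, 6), K)  # same pair with 5 years added to both
print(now, later)  # → False False: the preference does not reverse

# The LL/SS value ratio equals (100 / 50) * exp(-K * 1) whatever the common delay.
print(exponential_value(100, 1, K) / exponential_value(50, 0, K),
      exponential_value(100, 6, K) / exponential_value(50, 5, K))
```

Any other positive K gives the same result: whichever option is preferred with no added delay remains preferred after the common delay is added.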

Despite its strong standing in economic theory, the stationary assumption contradicts many everyday experiences. People often plan to eat a healthy meal but find at dinner that they prefer unhealthy but tastier foods. Similarly, a person might say she will save her tax refund, but when she later receives it, she spends it. A preference reversal refers to the change from preferring a larger, more delayed reward when both outcomes are delayed in time to preferring the smaller, more immediate reward as the time to the receipt of those rewards approaches. The occurrence of preference reversals is not predicted by the exponential model (Frederick et al., 2002) unless the added assumption is made that the k parameters differ between the reward amounts in a particular way (Green & Myerson, 1993).

In psychology, the most widely studied model of discounting is the hyperbolic function proposed by Mazur (1987):

$$V = \frac{A}{1 + kD} \qquad \text{(Equation 2)}$$

where the variables have the same meaning as in Equation 1. Unlike an exponential model, the hyperbolic model does not assume a constant rate of discounting. Rather, it predicts that the value of a delayed reward decreases proportionally faster at shorter delays and more slowly at longer delays, and as a consequence, it predicts preference reversals.

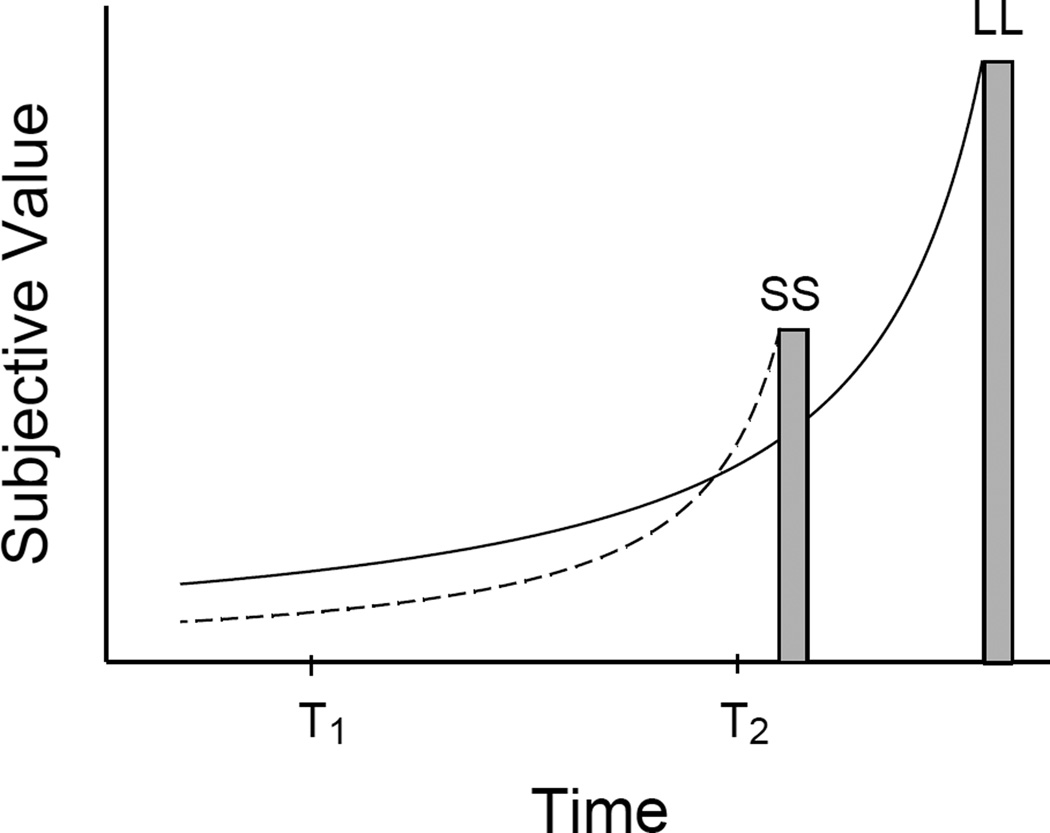

Figure 1 illustrates a preference reversal using the predictions of a hyperbolic model (Eq. 2). The heights of the bars represent the nominal (i.e., undiscounted) reward amounts, and the curves represent hyperbolic discounting of those rewards. The organism’s preference depends on the relative subjective values of the two rewards at each point in time. When both rewards are substantially delayed (at T1), the organism is more likely to choose the larger, later reward, whose subjective value is greater. As the time to receipt of the smaller reward approaches, its subjective value increases proportionally more than that of the larger reward, and the organism becomes more likely to choose the smaller reward (at T2). In the laboratory, several studies with humans using a variety of procedures have demonstrated preference reversals (Green, Fristoe, & Myerson, 1994; Holt, Green, Myerson, & Estle, 2008; Kirby & Herrnstein, 1995; Madden, Bickel, & Jacobs, 1999; Rodriguez & Logue, 1988). These studies provide strong support for a hyperbolic discounting model and suggest that the stationary axiom is an inadequate assumption for models of intertemporal choice.

Figure 1.

Changes in subjective value of a smaller-sooner (SS) reward and a larger-later (LL) reward. The heights of the bars represent the nominal (i.e., undiscounted) amount of reward. The curves show how subjective values change as a function of delay to the rewards according to the hyperbolic model of discounting (Equation 2). The point at which the two curves intersect indicates the point of preference reversal from LL at T1 to SS at T2.
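The reversal in Figure 1 can be reproduced with a minimal sketch of Equation 2. The amounts (2 and 6 units), the constant 4-s gap between the two delays, and k = 1.0 per second are hypothetical values chosen for illustration, not parameters fitted to any data set. Solving 2/(1 + kt) = 6/(1 + k(t + 4)) for t gives the crossing point of the two curves.

```python
def hyperbolic_value(amount, delay, k):
    """Equation 2: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)

def preferred(t, ss_amount=2.0, ll_amount=6.0, gap=4.0, k=1.0):
    """Choice when the smaller reward is t s away and the larger is t + gap s away."""
    v_ss = hyperbolic_value(ss_amount, t, k)
    v_ll = hyperbolic_value(ll_amount, t + gap, k)
    return "SS" if v_ss > v_ll else "LL"

def reversal_time(ss_amount=2.0, ll_amount=6.0, gap=4.0, k=1.0):
    """Delay to the smaller reward at which the two discounting curves intersect."""
    # From ss * (1 + k * (t + gap)) = ll * (1 + k * t), solved for t.
    return (ss_amount * k * gap - (ll_amount - ss_amount)) / ((ll_amount - ss_amount) * k)

for t in (28.0, 10.0, 2.0, 0.0):  # the choice point moves closer to the rewards
    print(t, preferred(t))
print("curves cross at t =", reversal_time())
```

With these values the larger, later reward is preferred at t = 28, 10, and 2 s and the smaller, sooner reward at t = 0 s, with the crossing at t = 1 s. Under Equation 1, by contrast, the ratio of the two values would not depend on t, so no such crossing would occur.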

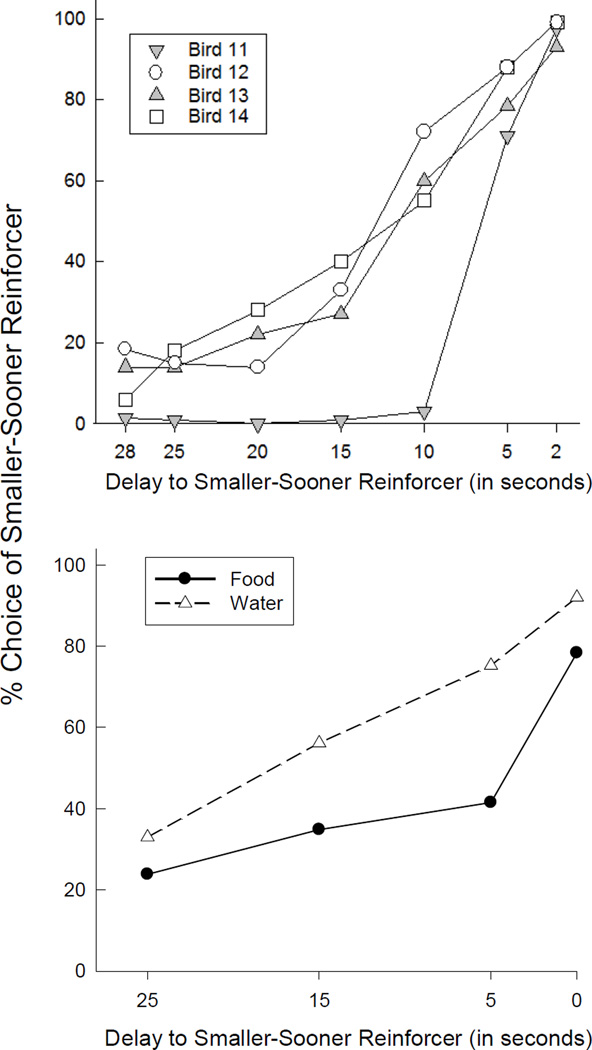

Preference reversals also have been obtained in studies with pigeons (Ainslie & Herrnstein, 1981; Calvert, Green, & Myerson, 2010; Green, Fisher, Perlow, & Sherman, 1981; Rachlin & Green, 1972; Rodriguez & Logue, 1988) and rats (Green & Estle, 2003). Green et al. (1981) presented pigeons with choices between a smaller, sooner reinforcer and a larger, later reinforcer. In conditions in which the smaller (2-s access to grain) and larger (6-s access to grain) reinforcers were very delayed (28 s and 32 s, respectively), every pigeon overwhelmingly preferred the larger amount. However, when the same reinforcers were available after much shorter delays (2 s and 6 s, respectively), each pigeon now overwhelmingly preferred the smaller, sooner reinforcer, demonstrating a preference reversal (see Fig. 2, top panel). Note that these two conditions are equivalent to points T1 and T2 in Figure 1, and that the delay difference between the two amounts remained constant at 4 s. The bottom panel of Figure 2 shows preference reversals with food and water reinforcers in rats (Green & Estle, 2003). For both reinforcer types, when the choice was offered well in advance of the outcomes, the rats preferred the larger, more delayed reinforcer. As the choice was offered closer in time to the outcome, the rats increased their preference for the smaller, sooner reinforcer. The presence of preference reversals in these studies suggests that, as with humans, an exponential function is an inadequate description of choice by nonhuman animals.

Figure 2.

Percent choice of a smaller, sooner reinforcer as a function of the delay until its receipt. Preference for the smaller, sooner reinforcer increased as time to its receipt approached. The top panel shows percent choice of a smaller, sooner food reinforcer by individual pigeons; the bottom panel shows the mean percent choice by rats of a smaller, sooner food (filled circles) and water (open triangles) reinforcer. Data are from “Preference reversal and self control: Choice as a function of reward amount and delay” by L. Green, E. B. Fisher, S. Perlow, and L. Sherman, 1981, Behaviour Analysis Letters, 1, pp. 43–51, and from “Preference reversals with food and water reinforcers in rats” by L. Green and S. J. Estle, 2003, Journal of the Experimental Analysis of Behavior, 79, pp. 233–242.

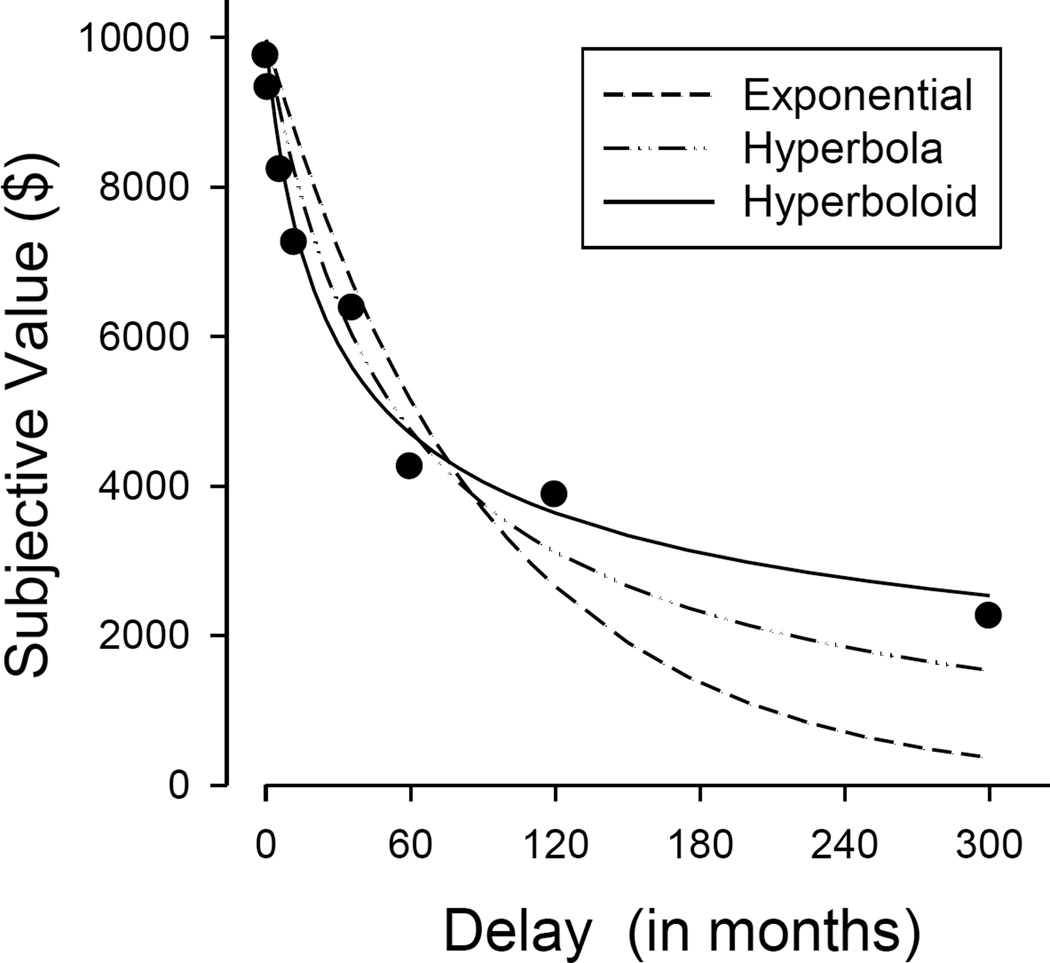

Empirical tests between Equations 1 and 2 have overwhelmingly reported that the hyperbolic model accounts for a greater proportion of the variance in delay discounting data than does the exponential function (e.g., Myerson & Green, 1995). As may be seen in Figure 3, an exponential function tends to greatly overestimate subjective value at briefer delays and to greatly underestimate subjective value at longer delays. The finding that a hyperbolic function provides a better fit to discounting data than does an exponential function has been replicated numerous times in humans across a wide range of reward domains and populations (e.g., Bickel, Odum, & Madden, 1999; Johnson, Herrmann, & Johnson, 2015; Kirby & Santiesteban, 2003; Madden, Begotka, Raiff, & Kastern, 2003; Myerson & Green, 1995; Raineri & Rachlin, 1993; Simpson & Vuchinich, 2000).

Figure 3.

Fits of the exponential (Eq. 1), simple hyperbola (Eq. 2), and hyperboloid (Eq. 3) functions to the discounting of a delayed hypothetical $10,000 reward. Data are from “Discounting of delayed rewards: A life-span comparison” by L. Green, A. Fry, and J. Myerson, 1994, Psychological Science, 5, p. 35.
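The model comparison in Figure 3 amounts to fitting each equation to the same set of indifference points and comparing the residual error. The sketch below uses a plain grid search over k and hypothetical, noise-free indifference points generated from a hyperbola with k = 0.05; it is not the fitting procedure or the data of Green, Fry, and Myerson (1994), and the delays in months are illustrative.

```python
import math

def exponential(amount, delay, k):
    """Equation 1."""
    return amount * math.exp(-k * delay)

def hyperbolic(amount, delay, k):
    """Equation 2."""
    return amount / (1.0 + k * delay)

def fit_k(model, amount, delays, values, k_grid):
    """Grid-search least squares: return (best_k, sse) for a one-parameter model."""
    best = None
    for k in k_grid:
        sse = sum((v - model(amount, d, k)) ** 2 for d, v in zip(delays, values))
        if best is None or sse < best[1]:
            best = (k, sse)
    return best

# Hypothetical indifference points (NOT the published data), generated from Eq. 2.
AMOUNT = 10_000.0
DELAYS = [1, 6, 12, 36, 60, 120, 300]              # months (illustrative)
VALUES = [hyperbolic(AMOUNT, d, 0.05) for d in DELAYS]
K_GRID = [i / 10_000 for i in range(1, 5_001)]      # k from 0.0001 to 0.5

k_exp, sse_exp = fit_k(exponential, AMOUNT, DELAYS, VALUES, K_GRID)
k_hyp, sse_hyp = fit_k(hyperbolic, AMOUNT, DELAYS, VALUES, K_GRID)
print(f"exponential: k={k_exp:.4f}, SSE={sse_exp:.1f}")
print(f"hyperbola:   k={k_hyp:.4f}, SSE={sse_hyp:.1f}")
```

A grid search keeps the example dependency-free; with real, noisy data a nonlinear least-squares routine would normally be used instead, and the comparison would rest on proportion of variance accounted for rather than on an exact recovery of k.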

In nonhuman animals as well, the hyperbolic function has been shown to provide a better description of choice than the exponential (Aparicio, 2015; Farrar, Kieres, Hausknecht, de Wit, & Richards, 2003; Huskinson & Anderson, 2013; Mazur, 1987; Mazur & Biondi, 2009; Rodriguez & Logue, 1988). Most studies have assessed discounting by rats and pigeons, but the hyperbolic model has been found to provide a good description of data from other animals as well, including rhesus monkeys (Hwang, Kim, & Lee, 2009; Woolverton, Myerson, & Green, 2007), chimpanzees and bonobos (Rosati, Stevens, Hare, & Hauser, 2007), and mice (Mitchell, Reeves, Li, & Phillips, 2006; Oberlin & Grahame, 2009). Furthermore, it provides a good fit across a range of reinforcer types in animals, including plain water, sweetened liquids, alcohol, drugs, and food of varying preferences (Calvert et al., 2010; Farrar et al., 2003; Freeman, Green, Myerson, & Woolverton, 2009; Freeman, Nonnemacher, Green, Myerson, & Woolverton, 2012; Mitchell et al., 2006).

The hyperbolic function (Eq. 2) actually is a special case of a more general hyperboloid function in which the entire denominator is raised to a power (Green, Fry, & Myerson, 1994):

$$V = \frac{A}{(1 + kD)^{s}} \qquad \text{(Equation 3)}$$

where s is a parameter that has been argued to reflect the nonlinear scaling of amount and of delay (McKerchar, Green, & Myerson, 2010; Myerson & Green, 1995). If delay and amount are scaled linearly, then s = 1.0, and Equation 3 reduces to Equation 2. In many cases, however, amount and delay appear to be scaled nonlinearly. As evident from the Weber-Fechner law and Stevens' (1957, 1960) power law, much of perception involves the nonlinear scaling of a physical stimulus. The difference between lifting 1 pound and 2 pounds, for example, is more easily perceived than is the difference between lifting 51 and 52 pounds. So, too, time and amount are perceived nonlinearly (Green, Myerson, Oliveira, & Chang, 2013; Zauberman et al., 2009). For example, most people would consider the difference between $5 and $20 to be substantial compared to the difference between $1,005 and $1,020. In both cases the objective difference in amount is $15, but the two differences are not perceived as subjectively equal. The value of the s exponent has implications for the shape of the discounting curve. When s is less than 1.0, the hyperboloid discounting function declines more slowly at longer delays than does the simple hyperbola (Eq. 2), implying that discounting may level off when the reward is far in the future (see Fig. 3).
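A short sketch of Equation 3 shows both properties. The helper name is hypothetical, and k = 0.05 and s = 0.5 are illustrative values rather than estimates from any study. Setting s = 1.0 recovers Equation 2, and with s < 1.0 a larger fraction of value is retained between two long delays.

```python
def hyperboloid_value(amount, delay, k, s):
    """Equation 3: V = A / (1 + k * D) ** s; s = 1.0 gives the simple hyperbola (Eq. 2)."""
    return amount / (1.0 + k * delay) ** s

K = 0.05  # illustrative discount rate
for s in (1.0, 0.5):
    values = [round(hyperboloid_value(100.0, d, K, s), 1) for d in (0, 12, 60, 300)]
    # Fraction of the value at a delay of 60 that remains at a delay of 300.
    retained = hyperboloid_value(100.0, 300, K, s) / hyperboloid_value(100.0, 60, K, s)
    print(s, values, round(retained, 2))
```

Here 25% of the value at a delay of 60 survives to a delay of 300 when s = 1.0, but 50% survives when s = 0.5, which is the flattening at long delays described above.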

When the hyperboloid model (Eq. 3) is fit to human discounting data, it typically provides a better fit than both the simple hyperbolic and exponential functions (see Fig. 3). Moreover, the improvement in fit over the simple hyperbola is greater than would be expected merely from the additional free parameter in the hyperboloid model (Myerson & Green, 1995). Across situations, populations, and reward domains, the hyperboloid model has been shown to provide a better fit to human discounting data than a simple hyperbola (e.g., Friedel, DeHart, Madden, & Odum, 2014; Green, Myerson, & Ostaszewski, 1999b; Lawyer, Williams, Prihodova, Rollins, & Lester, 2010).

Furthermore, when a hyperboloid function is fit to human discounting data, s is found to be significantly less than 1.0 (Myerson & Green, 1995). In contrast, in nonhuman animals, s typically does not differ from 1.0 (Freeman et al., 2012; Green, Myerson, & Calvert, 2010; Green, Myerson, Holt, Slevin, & Estle, 2004; Mazur, 2007; Woolverton et al., 2007). Recently, Aparicio (2015) compared fits of five different discounting models, including the exponential (Eq. 1), the hyperbola (Eq. 2), and the hyperboloid (Eq. 3), to data from two strains of rats (Lewis and Fischer 344). Using the Akaike information criterion (AIC; Akaike, 1974), which takes into account both the goodness of fit and the number of free parameters of each model, Aparicio concluded that the simple hyperbola (Eq. 2) was the most parsimonious and best model to describe the rat data. Thus, a simple hyperbola (Eq. 2) is sufficient to describe choice by nonhuman animals, whereas a hyperboloid (Eq. 3), in which s is less than 1.0, provides a better description of choice by humans.
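The trade-off that AIC formalizes can be sketched for least-squares fits using the common form AIC = n ln(SSE/n) + 2p, where n is the number of indifference points and p is the number of free parameters; lower AIC is preferred. The SSE values below are hypothetical, chosen only to show that a second parameter must reduce the error enough to offset its penalty. This is not a reanalysis of Aparicio's (2015) data.

```python
import math

def aic_least_squares(sse, n, n_params):
    """AIC for a least-squares fit: n * ln(SSE / n) + 2 * (number of free parameters)."""
    return n * math.log(sse / n) + 2 * n_params

# Hypothetical fits to n = 6 indifference points from one subject.
n = 6
aic_hyperbola = aic_least_squares(sse=0.052, n=n, n_params=1)    # Eq. 2: k
aic_hyperboloid = aic_least_squares(sse=0.050, n=n, n_params=2)  # Eq. 3: k and s
print(round(aic_hyperbola, 2), round(aic_hyperboloid, 2))
```

In this illustration the hyperboloid lowers the SSE slightly, but not by enough to offset the 2-point penalty for s, so the simple hyperbola has the lower AIC.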

It is important to note that the vast majority of work investigating the hyperboloid function in nonhuman animals has examined pigeons and rats, with very little data obtained from nonhuman primates. There is limited evidence that s does not differ from 1.0 in rhesus monkeys (Woolverton et al., 2007), but this has not been investigated in other primates. More data across a wider range of species might reveal a transition from hyperbolic to hyperboloid discounting across species.

Delay Discounting Assessment Procedures

The increasing interest in discounting processes, in the variables that affect discounting, and in testing the adequacy and generality of different discounting models has led to a surge in the number of both human and nonhuman animal studies (Madden & Bickel, 2010). In order to assess how organisms make decisions between outcomes of varying amounts and delays, several procedures have been developed. Although there is no standard delay discounting task consistently used across all laboratories and experiments, most studies involve a series of choices between a smaller reward available after a very brief delay and a larger reward available after a longer delay. Across trials, one aspect of the outcomes is varied (e.g., the amount of the smaller, sooner reward) until a point is reached at which the two outcomes are judged to be approximately equal in subjective value (i.e., the indifference point). By then estimating indifference points across different delays to the larger outcome, a discounting curve can be determined in which the subjective value of the larger, but delayed reward is plotted as a function of the delay to its receipt.

One discounting procedure, called the adjusting-delay procedure, was introduced in the seminal work by Mazur (1987). Pigeons were presented with choices between 2 s of access to grain after a short delay and 6 s of access to grain after a longer delay. Across trials, the amounts of the two reinforcers were held constant while the delay to the larger reinforcer was adjusted between blocks of trials. Mazur originally divided each experimental session into blocks of four trials each. Importantly, the first two trials of each block were forced-choice trials, in which only one of the alternatives was presented on a trial. The purpose of the forced-choice trials was to ensure that the pigeon was exposed to the contingencies associated with each of the two outcomes. Following the forced-choice trials were two free-choice trials, during which the pigeons chose, via a single response, between the smaller, sooner reinforcer and the larger, more delayed reinforcer. Depending on the pigeon’s responses, the delay to the larger reinforcer was adjusted before the next trial block. Specifically, if the pigeon chose the smaller amount on both free-choice trials, then the delay to the larger reinforcer was decreased by 1 s for the next block; if the pigeon chose the larger amount on both free-choice trials, then the delay to that amount was increased by 1 s for the next block; finally, if the pigeon chose each outcome once, then the delay to the larger amount remained the same in the next block. This procedure converges on a point of subjective equality at which the pigeon is indifferent between the smaller and the larger reinforcers.
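The block-by-block adjustment rule can be simulated to show how it converges on an indifference point. The simulated subject below is hypothetical: it discounts hyperbolically with k = 0.3 per second, chooses with logistic noise (beta = 10.0) on the difference in subjective value, and faces 2 s of food after an assumed 2-s delay versus 6 s of food after the adjusting delay. Forced-choice trials only expose the contingencies, so they are not simulated.

```python
import math
import random

def hyperbolic_value(amount, delay, k):
    """Equation 2: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)

def choose_larger(rng, large_delay, k, beta):
    """Hypothetical subject: 2 units after 2 s vs. 6 units after large_delay s,
    chosen with logistic noise on the difference in subjective value."""
    v_small = hyperbolic_value(2.0, 2.0, k)
    v_large = hyperbolic_value(6.0, large_delay, k)
    p_large = 1.0 / (1.0 + math.exp(-beta * (v_large - v_small)))
    return rng.random() < p_large

def adjusting_delay(n_blocks=1000, start_delay=1.0, k=0.3, beta=10.0, seed=1):
    """Blocks of 2 forced + 2 free trials; the delay changes by 1 s between blocks."""
    rng = random.Random(seed)
    large_delay = start_delay
    history = []
    for _ in range(n_blocks):
        n_large = sum(choose_larger(rng, large_delay, k, beta) for _ in range(2))
        if n_large == 2:        # larger chosen on both free trials: lengthen its delay
            large_delay += 1.0
        elif n_large == 0:      # smaller chosen on both: shorten its delay (not below 0)
            large_delay = max(0.0, large_delay - 1.0)
        # one choice of each: the delay is unchanged for the next block
        history.append(large_delay)
    return history

history = adjusting_delay()
estimate = sum(history[-200:]) / 200
print(f"estimated indifference delay: {estimate:.2f} s")
# Analytic value for this subject: 2 / (1 + 2k) = 6 / (1 + kD) gives D = 2/k + 6.
print(f"analytic indifference delay:  {2 / 0.3 + 6:.2f} s")
```

Averaging the adjusting delay over the final blocks, rather than taking its last value, smooths the block-to-block oscillation around the indifference point; the stability criteria used in actual experiments may differ.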

The obtained indifference point also can be conceptualized as the maximum delay the pigeon will endure before switching to the smaller, sooner reinforcer. Thus, smaller indifference points are associated with a higher degree of impulsivity (i.e., greater degree of discounting of the larger, delayed reinforcer), and larger indifference points are associated with a higher degree of self-control (i.e., lower degree of discounting of the larger, delayed reinforcer). In order to obtain a more complete picture of the organism’s discounting behavior, the procedure is repeated with different amounts or reinforcement schedules of the smaller, sooner reinforcer (Mazur & Biondi, 2009). Different discounting models then can be fitted to the resulting indifference points, like those shown in Figure 3.
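If Equation 2 is assumed to hold, a single indifference delay can be converted into a discounting rate. Setting the two values equal, A_s/(1 + k d_s) = A_l/(1 + k D*), and solving for k gives k = (A_l − A_s)/(A_s D* − A_l d_s). The sketch below uses hypothetical indifference delays and an assumed 2-s delay to the smaller reinforcer; the function name is illustrative.

```python
def k_from_indifference_delay(small_amount, small_delay, large_amount, indiff_delay):
    """Solve small/(1 + k*d_small) = large/(1 + k*D_indiff) for k, assuming Eq. 2 holds."""
    denom = small_amount * indiff_delay - large_amount * small_delay
    if denom <= 0:
        raise ValueError("no positive k is consistent with this indifference delay")
    return (large_amount - small_amount) / denom

# Hypothetical indifference delays for 2 s vs. 6 s of food (smaller reward delayed 2 s).
for d_star in (8.0, 12.0, 20.0):
    print(d_star, round(k_from_indifference_delay(2.0, 2.0, 6.0, d_star), 3))
```

Shorter indifference delays yield larger values of k (1.0, 0.333, and 0.143 here), matching the interpretation of smaller indifference points as steeper discounting.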

The adjusting-delay procedure originally was developed for pigeons (Mazur, 1987, 2000; Mazur & Biondi, 2009), but has been used with other species, including humans (Rodriguez & Logue, 1988), rats (Logue et al., 1992; Mazur, 2007; Mazur & Biondi, 2009; Mazur, Stellar, & Waraczynski, 1987; Perry, Larson, German, Madden, & Carroll, 2005, but see Cardinal, Daw, Robbins, & Everitt, 2002, who could not obtain stable performance with rats using an adjusting-delay procedure), tamarins and marmosets (Stevens, Hallinan, & Hauser, 2005), capuchins (Addessi, Paglieri, & Focaroli, 2011), and rhesus monkeys (Hwang et al., 2009).

A similar titration procedure, first developed for nonhuman animals by Richards, Mitchell, de Wit, and Seiden (1997), is the adjusting-amount procedure. Richards et al. adapted the procedure used by Mazur (1987) in a series of experiments in which rats chose between a smaller amount of water delivered immediately and a larger amount of water delivered after a delay. The amount of the smaller, immediate water reinforcer was adjusted based on the rats’ choices until an amount was assumed to be approximately equal in subjective value to the larger, delayed amount. This procedure was repeated at different delays to the larger reinforcer, and a discounting function then was fitted to the indifference points, showing how the present subjective value of a delayed reinforcer changes as the delay to its receipt increases. The adjusting-amount procedure has been successfully implemented in studies using pigeons (Green, Myerson, Shah, Estle, & Holt, 2007), rats (Green et al., 2004; Reynolds, de Wit, & Richards, 2002), rhesus monkeys (Freeman et al., 2009), and humans (Rachlin, Raineri, & Cross, 1991).
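A minimal sketch of an adjusting-amount titration follows. The halving-step (bisection) rule and the noise-free hyperbolic subject are simplifying assumptions for illustration, not the adjustment rule used by Richards et al. (1997); k = 0.25 and the 10-unit larger amount are likewise hypothetical. Each run yields one indifference point, and repeating it across delays traces out the discounting curve.

```python
def hyperbolic_value(amount, delay, k):
    """Equation 2: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)

def adjusting_amount(large_amount, delay, k, n_trials=20):
    """Titrate the immediate amount toward the present value of the delayed amount.

    Hypothetical rule: start at half the larger amount and halve the step after each
    choice. The noise-free subject takes the immediate amount whenever it exceeds the
    subjective value of the delayed amount.
    """
    immediate = large_amount / 2.0
    step = large_amount / 4.0
    target = hyperbolic_value(large_amount, delay, k)
    for _ in range(n_trials):
        if immediate > target:   # chose the immediate amount: make it smaller
            immediate -= step
        else:                    # chose the delayed amount: make the immediate larger
            immediate += step
        step /= 2.0
    return immediate

for d in (0, 2, 4, 8, 16):       # one indifference point per delay
    print(d, round(adjusting_amount(10.0, d, k=0.25), 3))
```

The resulting points (about 10.0, 6.667, 5.0, 3.333, and 2.0) fall on the hyperbola used to generate them, which is the sense in which a discounting function is then fitted to indifference points.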

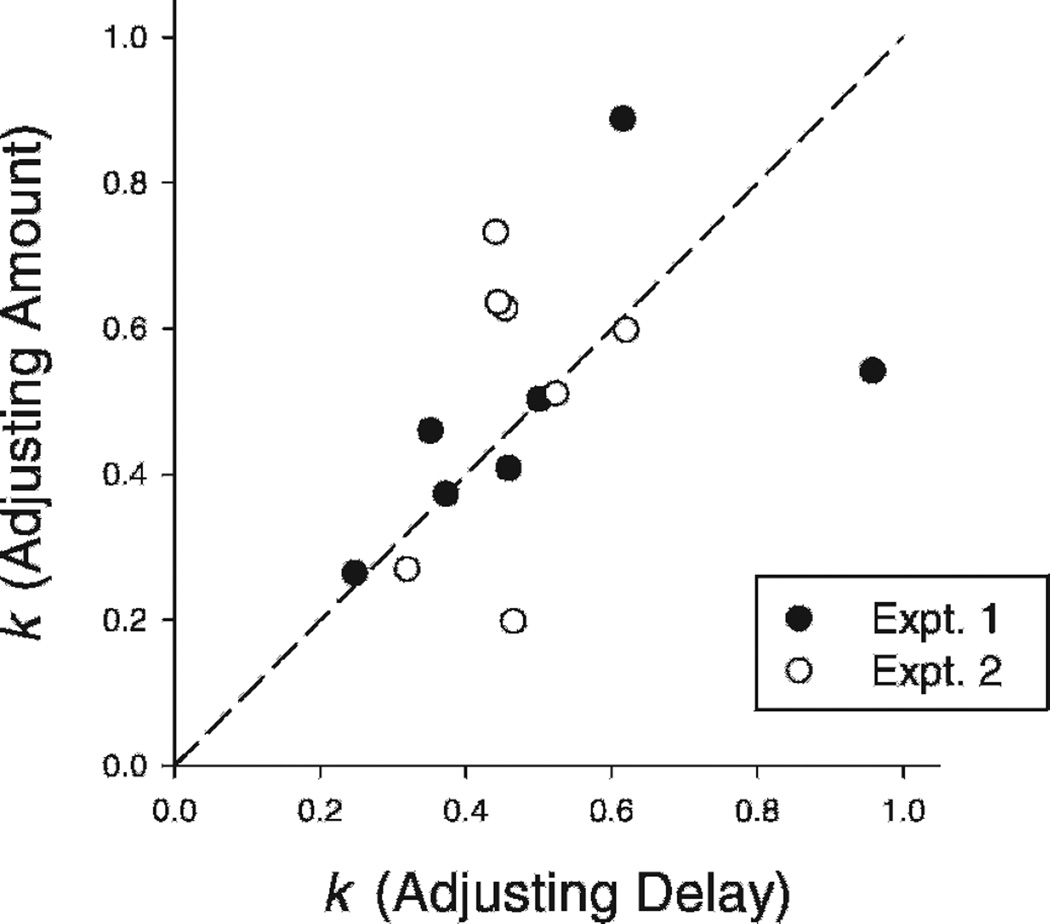

Green et al. (2007) compared the indifference point estimates obtained with both the adjusting-amount and adjusting-delay procedures using pigeons as subjects. In this study, a within-subject yoking technique was used in which indifference points obtained with each procedure were used as the starting points for the other procedure. For example, some pigeons were exposed first to an adjusting-amount procedure in which they chose between a smaller, immediate amount and a larger, delayed amount. The smaller amount was adjusted until an indifference point was reached. This indifference point, in turn, was used as the value of the smaller, immediate amount in an adjusting-delay procedure using the same pigeons. If both procedures yield equivalent estimates, then the delay at which the pigeons were indifferent in the adjusting-delay procedure should be similar to the fixed delay used in the adjusting-amount procedure. In a like manner, some pigeons were exposed to the adjusting-delay procedure first, and the delay to the larger reinforcer was adjusted until the pigeon was indifferent between the two reinforcer amounts. This obtained delay then was used as the delay to the larger reinforcer in the adjusting-amount procedure. If both procedures yield equivalent estimates, then the amount of the smaller reinforcer in the adjusting-amount procedure should be similar to the fixed, smaller amount used in the adjusting-delay procedure.

In two experiments, Green et al. (2007) found that the adjusting-amount and the adjusting-delay procedures produced reasonably similar estimates of discounting, as indicated by the discounting rate parameter k (see Fig. 4). Furthermore, the hyperbolic function (Eq. 2) provided generally good fits to the indifference points, showing that the discounting functions obtained with both procedures had similar shapes. With humans, Holt, Green, and Myerson (2012) compared the discounting estimates obtained with the adjusting-amount and adjusting-delay procedures. Similar to Green et al., Holt et al. found no significant differences between the two procedures. These findings provide strong support for the assumption that both the adjusting-delay and the adjusting-amount procedures are tapping into the same underlying decision-making processes in the discounting of delayed rewards by human and nonhuman animals alike.

Figure 4.

Individual estimates of k for the adjusting-amount procedure plotted against the individual estimates for the corresponding adjusting-delay procedure from both Experiment 1 (filled circles) and Experiment 2 (open circles) of Green et al. (2007). The dashed line represents equivalent rates of discounting. Figure is from “Do adjusting-amount and adjusting-delay procedures produce equivalent estimates of subjective value in pigeons?” by L. Green, J. Myerson, A. K. Shah, S. J. Estle, and D. D. Holt, 2007, Journal of the Experimental Analysis of Behavior, 87, p. 345. Copyright 2007 by John Wiley & Sons, Inc.

The two procedures just described were designed to obtain several indifference points between smaller and larger amounts, thereby allowing different mathematical models of discounting to be fitted and evaluated. Whereas the adjusting-amount and the adjusting-delay procedures assess the present value of delayed reinforcers over a range of delays and/or amounts, other procedures have been designed to obtain a quicker, albeit less comprehensive, measure of discounting that is well suited for other purposes. One such procedure was developed by Evenden and Ryan (1996). They presented rats with a choice between a smaller amount of food (1 food pellet) to be delivered immediately and a larger amount (3 or 5 food pellets) to be delivered after a delay, and the delay was increased systematically during a session. At the beginning of the session, when shorter delays were in effect, the rats overwhelmingly preferred the larger reinforcer, but preference for the larger reinforcer decreased as the delay to its receipt increased.

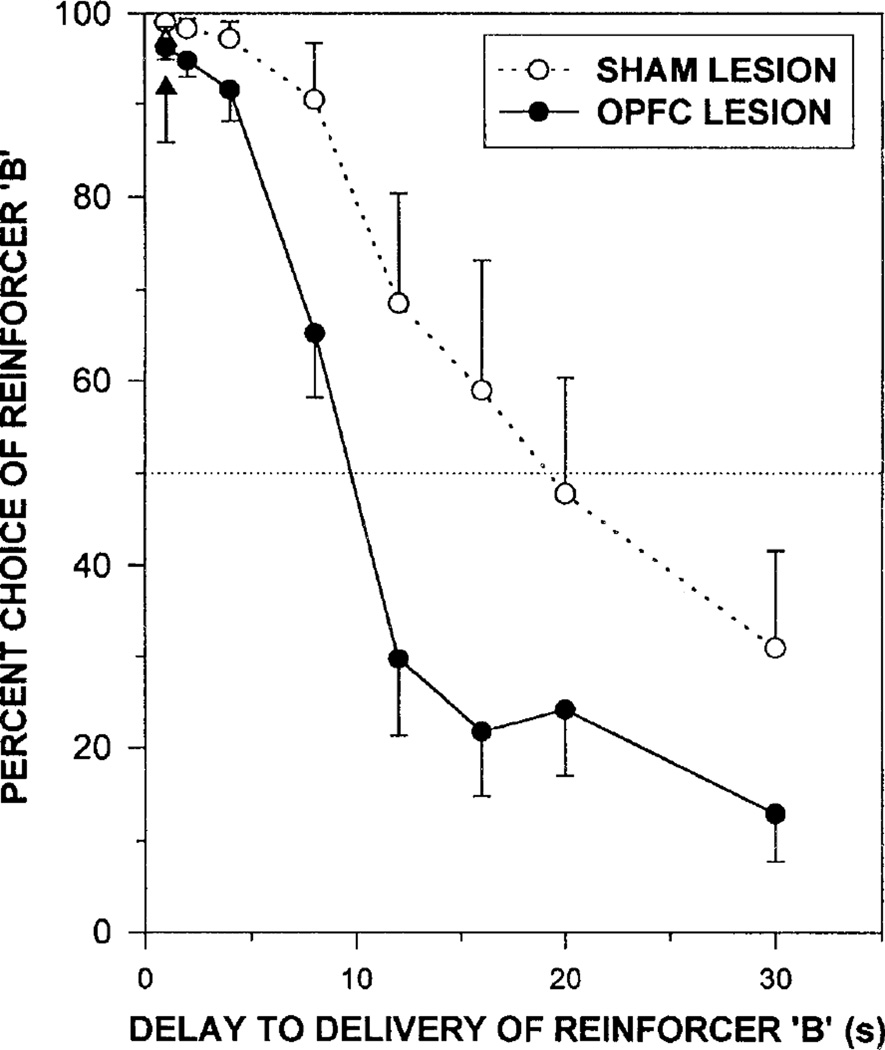

Although the Evenden and Ryan (1996) procedure provides only a single indifference point (the point at which the preference function crosses the 50% level; see Fig. 5), it can be used to assess how different variables affect the level of self-controlled or impulsive choice. Specifically, if an organism is more likely to wait for the larger reinforcer (i.e., greater self-control), this produces a function shifted to the right of the dotted function shown in Figure 5. Likewise, if an organism is less likely to wait for a larger reinforcer (i.e., greater impulsivity), this produces a function shifted to the left. Using their procedure, Evenden and Ryan compared how different drugs affected the level of impulsivity in rats. They found that functions produced under the influence of diazepam (an anxiolytic often used to reduce anxiety) and metergoline (a serotonin antagonist) were shifted to the right (i.e., increased self-control), whereas the function produced under the influence of d-amphetamine (a stimulant) was shifted to the left (i.e., increased impulsivity). As shown in Figure 5, Mobini et al. (2002) found that rats with lesions in the orbital prefrontal cortex exhibited increased impulsivity (i.e., a function shifted to the left), compared to a control group that received a sham lesion. The Evenden and Ryan procedure is widely used for investigating discounting in animals. It provides a relatively quick measure of choice, which makes it particularly advantageous in studies investigating the effects of drugs (e.g., Cardinal, Robbins, & Everitt, 2000; Evenden & Ryan, 1999) and brain lesions (e.g., Cardinal et al., 2001; Mobini et al., 2002; Mobini, Chiang, Ho, Bradshaw, & Szabadi, 2000). However, some caution must be noted because this procedure appears to be more susceptible to carry-over effects (for details, see Madden & Johnson, 2010).

Figure 5.

Mean percentage choice of the larger reinforcer as a function of the delay to the reinforcer. Filled circles represent choice by rats after orbital prefrontal cortex (OPFC) lesion, and open circles represent choice by the sham lesion control rats. (Error bars show one standard error of the mean.) The dotted horizontal line represents the indifference point, at which the smaller, sooner and the larger, later reinforcers each are chosen 50% of the time. Figure is adapted from “Effects of lesions of the orbitofrontal cortex on sensitivity to delayed and probabilistic reinforcement” by S. Mobini, S. Body, M.-Y. Ho, C. Bradshaw, E. Szabadi, J. Deakin, and I. Anderson, 2002, Psychopharmacology, 160, p. 292. Copyright 2002 by Springer.
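The single indifference point from an Evenden and Ryan (1996) style session can be read off the preference function as the delay at which choice of the larger reinforcer falls to 50%. A minimal sketch, assuming linear interpolation between adjacent tested delays and using hypothetical group means (not the Mobini et al., 2002, data); the function name is illustrative.

```python
def crossing_delay(delays, pct_larger, criterion=50.0):
    """Linearly interpolate the delay at which % choice of the larger reinforcer
    first falls to the criterion (the 50% indifference point)."""
    points = list(zip(delays, pct_larger))
    for (d0, p0), (d1, p1) in zip(points, points[1:]):
        if p0 >= criterion >= p1 and p0 != p1:
            return d0 + (p0 - criterion) / (p0 - p1) * (d1 - d0)
    return None  # preference never fell to the criterion within the tested delays

# Hypothetical session means, % choice of the larger reinforcer at each delay (s).
delays = [0, 10, 20, 40, 60]
control = [95, 80, 60, 35, 20]
lesion = [90, 55, 30, 15, 10]
print(crossing_delay(delays, control), crossing_delay(delays, lesion))
```

Here the hypothetical lesion group crosses 50% at 12 s and the control group at 28 s, the kind of leftward shift that Figure 5 interprets as increased impulsivity.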

A final set of procedures to assess the subjective value of delayed reinforcers uses concurrent-chains. The procedures discussed until now typically require a single response on each trial to determine the animal’s preference. In contrast, a concurrent-chains procedure assesses the proportion of responses allocated to a choice alternative. Furthermore, a concurrent-chains procedure allows for the assessment of additional information, such as rate of response, because subjects can respond throughout the entire initial-link period.

Grace (1999) used a two-component concurrent-chains procedure to study the effect of amount of reinforcement on pigeons’ sensitivity to delay. According to Grace (1996, 1999), sensitivity to delay can be interpreted as the rate of discounting, with greater sensitivity to delay being associated with a greater degree of discounting. In both components of his procedure (Grace, 1999), the initial links of the chain were associated with independent, concurrent variable-interval schedules (specifically, concurrent VI-30 s VI-30 s schedules). The two components differed in key color and duration of reinforcement (the reinforcement magnitudes were in a 2.5:1 ratio between components). More specifically, in one component, the initial-link response keys were illuminated with red light and both terminal links were associated with a smaller amount of food (e.g., 1.7 s access to food). In the other component, the initial-link keys were illuminated with green light and both terminal links were associated with a larger amount of food (e.g., 4.25 s access to food). In the terminal links, the left and right keys were associated with different pairs of VI schedules (e.g., VI-10 s and VI-20 s) across different experimental conditions.

By examining response allocation during the initial link, Grace determined the pigeons’ sensitivity to the delays experienced in the terminal links, and therefore their approximate degree of discounting. The more sensitive the pigeon is to delay, the more extreme the initial-link preference will be for the terminal link with the briefer VI delay. Other studies have employed this concurrent-chains procedure both with pigeons and with rats (Grace, Sargisson, & White, 2012; Ong & White, 2004; Orduña, Valencia-Torres, Cruz, & Bouzas, 2013), but none has directly estimated the indifference points between larger, later and smaller, sooner reinforcers. Grace et al. (2012) did present their data in the form of a discounting function, but rather than plotting the indifference point as a function of the delay to the larger, later reinforcer, they plotted a transform of the logarithm of the response ratio in the initial links as a function of the delay in the terminal links.

Recently, Oliveira, Green, and Myerson (2014) combined a concurrent-chains procedure with an adjusting-amount procedure so that discounting functions could be obtained directly. During the initial link of each trial, pigeons were presented with two white keys on a non-independent VI-30 s schedule. The schedule was non-independent to ensure that each session included an equal number of smaller, immediate-reinforcer and larger, delayed-reinforcer terminal-link trials. On smaller, immediate-reinforcer trials, a peck on the left key after the initial-link VI schedule had timed out turned that key red.² After three pecks on the red terminal-link key, the pigeon was presented with a small number of food pellets, the amount of which varied across sessions. On larger, delayed-reinforcer trials, a peck on the right key after the initial-link VI schedule had timed out turned that key green. After three pecks on the green terminal-link key, a green cue light flashed for the duration of the delay, which varied across conditions. After the delay, the pigeon was presented with the larger, fixed amount of food (e.g., 32 food pellets).

Oliveira et al. (2014) assessed preference for each reinforcer by measuring the relative number of responses throughout a session to the right and left keys during the initial link. As with other adjusting-amount procedures, the amount of the smaller, immediate reinforcer in the terminal link was adjusted until the two reinforcers were approximately equally preferred (i.e., the pigeon was responding approximately equally on the left and right keys during the initial link). With this combined concurrent-chains/adjusting-amount procedure, as with the adjusting-delay and the adjusting-amount procedures, the subjective value of a reinforcer decreased as it was delayed in time, and subjective value was well-described by the hyperbolic function (Eq. 2).
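The titration logic shared by adjusting-amount procedures can be sketched as follows. This is a minimal illustration, not the adjustment rule used by Oliveira et al. (2014): it assumes a hypothetical simulated subject that deterministically chooses whichever option has the higher value under Eq. 2, V = A/(1 + kD), and a hypothetical fixed step applied after every choice rather than after each session. The function names (`hyperbolic_value`, `adjusting_amount`) and all parameter values are illustrative.

```python
def hyperbolic_value(amount, delay, k):
    """Eq. 2: subjective value V = A / (1 + kD)."""
    return amount / (1.0 + k * delay)


def adjusting_amount(large_amount, delay, k, start=None, step=1.0,
                     n_trials=200, n_last=50):
    """Estimate an indifference point by titrating the immediate amount.

    Hypothetical simulated chooser: it picks the larger, later option when
    that option's discounted value is at least the immediate amount (an
    immediate amount is undiscounted, because D = 0); otherwise it picks
    the smaller, sooner option.
    """
    amount = large_amount / 2.0 if start is None else start
    history = []
    for _ in range(n_trials):
        chose_larger = hyperbolic_value(large_amount, delay, k) >= amount
        # Choosing the larger, later option raises the immediate amount;
        # choosing the smaller, sooner option lowers it (never below zero).
        amount = amount + step if chose_larger else max(0.0, amount - step)
        history.append(amount)
    # Average the final trials, over which the amount oscillates around
    # the point of subjective equality.
    tail = history[-n_last:]
    return sum(tail) / len(tail)


if __name__ == "__main__":
    # Larger, later reinforcer: 32 pellets after a 10-s delay, with k = 0.2.
    estimate = adjusting_amount(32, 10, 0.2)
    print(round(estimate, 2), round(hyperbolic_value(32, 10, 0.2), 2))
```

With a fixed step, the estimate can only land within one step of the true indifference point; real procedures often shrink the step or average over many sessions to reduce this error.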

Delay Discounting Models and Procedures: Summary

We have reviewed some of the important mathematical models of discounting and shown that discounting is better described by a hyperboloid model than by an exponential model. Furthermore, the s parameter in the hyperboloid function often is necessary to account for discounting in humans, but typically is not needed to account for discounting in other animals. Several types of procedures have been used to study the discounting of delayed rewards and, more generally, to examine the degree of impulsivity exhibited in different situations, and studies using these procedures have demonstrated that both humans and animals show preference reversals. In what follows, we review the effects of different variables on the degree of discounting delayed reinforcers by nonhuman animals and compare and contrast these effects with those obtained with humans.
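The mechanics of comparing discounting models can be illustrated with a brute-force fit. The sketch below is a hypothetical example, not the fitting method of any study reviewed here: it generates synthetic relative subjective values from a hyperbola (Eq. 2) with k = 0.1, then fits both Eq. 2 and an exponential function, V = A·exp(-kD), by grid search over k and reports each model’s sum of squared errors. Because the data are simulated from a hyperbola, the hyperbolic fit is perfect by construction; the point is only how such a comparison is carried out. The grid range and function names are assumptions.

```python
import math


def exponential(amount, delay, k):
    """Exponential discounting: V = A * exp(-kD)."""
    return amount * math.exp(-k * delay)


def hyperbolic(amount, delay, k):
    """Hyperbolic discounting (Eq. 2): V = A / (1 + kD)."""
    return amount / (1.0 + k * delay)


def fit_k(model, delays, values, amount=1.0, grid=None):
    """Return (best_k, sse) for `model` by exhaustive search over `grid`."""
    if grid is None:
        grid = [i / 1000 for i in range(1, 2001)]  # k from 0.001 to 2.0
    best_k, best_sse = None, float("inf")
    for k in grid:
        sse = sum((v - model(amount, d, k)) ** 2 for d, v in zip(delays, values))
        if sse < best_sse:
            best_k, best_sse = k, sse
    return best_k, best_sse


if __name__ == "__main__":
    delays = [1, 2, 5, 10, 20, 40, 80]
    # Synthetic relative subjective values (A = 1) from a hyperbola with k = 0.1.
    values = [hyperbolic(1.0, d, 0.1) for d in delays]
    for name, model in [("hyperbolic", hyperbolic), ("exponential", exponential)]:
        k, sse = fit_k(model, delays, values)
        print(f"{name}: k = {k:.3f}, SSE = {sse:.5f}")
```

With real indifference points, a nonlinear least-squares routine would normally replace the grid search, and the hyperboloid (Eq. 3) would need a second parameter, s, fitted jointly with k.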

Generality of Discounting

Discounting of Different Types of Reinforcers

The majority of discounting studies with animals have examined choices between either different amounts of food (Green et al., 2004; Mazur et al., 1987; Rosati et al., 2007) or different amounts of plain water (Green & Estle, 2003; Reynolds et al., 2002; Richards et al., 1997). However, discounting of other reinforcers also has been studied, including choices involving sucrose (Calvert et al., 2010; Farrar et al., 2003; Freeman et al., 2012), saccharin (Freeman et al., 2009), alcohol (Mitchell et al., 2006; Oberlin & Grahame, 2009), and cocaine (Woolverton, Freeman, Myerson, & Green, 2012; Woolverton et al., 2007). Across reinforcer type and species, animals show discounting of delayed reinforcers, and their behavior typically is reasonably well described by a hyperbolic function (Eq. 2).

With humans, hypothetical monetary rewards are the most commonly studied outcomes, although, as with other animals, humans discount other commodities and do so hyperbolically. The rewards studied have included hypothetical food (Estle, Green, Myerson, & Holt, 2007; Kirby & Guastello, 2001; Odum & Rainaud, 2003; Tsukayama & Duckworth, 2010), real juice rewards (Jimura et al., 2011; Jimura, Myerson, Hilgard, Braver, & Green, 2009; McClure, Ericson, Laibson, Loewenstein, & Cohen, 2007), real monetary outcomes (Johnson & Bickel, 2002; Lagorio & Madden, 2005; Madden et al., 2003), various drugs (MacKillop et al., 2011), health outcomes (Chapman, 1996), as well as activities like car and vacation use (Raineri & Rachlin, 1993), entertainment (Charlton & Fantino, 2008), massage time (Manwaring, Green, Myerson, Strube, & Wilfley, 2011), and sexual and companionate relationships (Lawyer, 2008; Lawyer et al., 2010; Tayler, Arantes, & Grace, 2009). As with animals’ choices, discounting of each reward type was well described by a hyperboloid function (Eq. 3).

Despite the finding that the same mathematical model describes choice of these different commodities, humans discount different commodity domains to different degrees. Humans discount food, for example, more steeply than money, but different types of food are discounted at relatively similar rates (Estle et al., 2007; Kirby & Guastello, 2001; Odum & Rainaud, 2003; Tsukayama & Duckworth, 2010). Charlton and Fantino (2008) found that non-food consumable commodities (books, CDs, DVDs) were discounted more steeply than money but less steeply than food. People also tend to discount their health less steeply than money (Chapman, 1996; Petry, 2003), and substance abusers discount their drug of choice more steeply than money (e.g., cigarettes: Bickel et al., 1999; cocaine: Johnson, Bruner, & Johnson, 2015; heroin: Madden, Petry, Badger, & Bickel, 1997).

Across reward domains, then, people tend to discount different commodities at different rates. In contrast, rate of discounting has not been shown to differ systematically and reliably across reinforcer types in animals. It should be noted, however, that studies with animals have used primary, consumable reinforcers and, unlike studies with humans, have not investigated other reinforcer domains. Both rats and rhesus monkeys discounted different concentrations of sucrose at similar rates (Farrar et al., 2003; Freeman et al., 2012), and Calvert et al. (2010) found that rats discounted qualitatively different food reinforcers at similar rates and qualitatively different liquid reinforcers at similar rates.

Freeman et al. (2012) presented limited evidence that rhesus monkeys discount qualitatively different consumable reinforcers at different rates. Comparing discounting rates from studies that used many of the same subjects (Freeman et al., 2009, 2012; Woolverton et al., 2007), they reported that rhesus monkeys discounted saccharin more steeply than sucrose, which in turn was discounted more steeply than cocaine. Although these results suggest that primates may discount the value of commodities at different rates, caution is warranted since the comparisons were made across experiments.

Recently, Huskinson, Woolverton, Green, Myerson, and Freeman (2015) compared choice by rhesus monkeys when the alternatives were immediate and delayed food reinforcers (isomorphic choice) and when the alternatives were immediate cocaine and delayed food reinforcers (allomorphic choice). In both types of choice situations, the rhesus monkeys discounted the larger food reward as the delay to its receipt increased. Interestingly, delayed food was discounted more steeply when cocaine was the immediate reinforcer than when food was the immediate reinforcer. These results provide limited evidence that reinforcer type affects choice in nonhuman animals, at least when the choice alternatives involve different reinforcers.

Although the vast majority of research on discounting has examined choice involving reinforcers, there is evidence that discounting also occurs with aversive outcomes. Just as preference reversals are found with delayed gains, preference reversals are observed when a common delay is added to a smaller, sooner and a larger, later monetary loss (Holt, Green, Myerson, & Estle, 2008) and to a smaller, sooner and a larger, later electric shock punisher (Deluty, 1978). When both outcomes were far in the future, individuals generally preferred the smaller, sooner negative outcome, but when the two outcomes became closer in time, preference often switched to the larger, later negative outcome. As with reinforcers, individuals frequently prefer a delayed negative outcome to an immediate negative outcome, even if the immediate one is smaller, suggesting that the subjective value (i.e., aversiveness) of a negative outcome decreases as it is delayed in time (Deluty, 1978; Murphy, Vuchinich, & Simpson, 2001; Woolverton et al., 2012).
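The preference reversal with negative outcomes follows if aversiveness is discounted hyperbolically. The sketch below is a hypothetical illustration that assumes Eq. 2 applies to the magnitude of a loss, with arbitrary values (a 50-unit loss versus a 100-unit loss arriving 20 time units later, k = 0.1); the function names are illustrative. Setting the two discounted aversiveness values equal gives the common delay at which preference switches, c* = u/(r - 1) - 1/k, where u is the additional delay to the larger loss and r is the ratio of the larger to the smaller loss.

```python
def discounted_aversiveness(loss, delay, k):
    """Eq. 2 applied to the magnitude of a negative outcome (an assumption)."""
    return loss / (1.0 + k * delay)


def preferred_loss(common_delay, small=50.0, large=100.0, extra_delay=20.0, k=0.1):
    """Return the less aversive option when both losses are delayed by common_delay."""
    sooner = discounted_aversiveness(small, common_delay, k)
    later = discounted_aversiveness(large, common_delay + extra_delay, k)
    return "smaller, sooner" if sooner < later else "larger, later"


def reversal_point(small=50.0, large=100.0, extra_delay=20.0, k=0.1):
    """Common delay at which the two losses are equally aversive."""
    ratio = large / small
    return extra_delay / (ratio - 1.0) - 1.0 / k


if __name__ == "__main__":
    for c in [0, 5, 15, 50, 100]:
        print(c, preferred_loss(c))
    print("switch at common delay", reversal_point())
```

With these illustrative values, the larger, later loss is preferred at short common delays and the smaller, sooner loss at long ones, matching the direction of the reversals described above.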

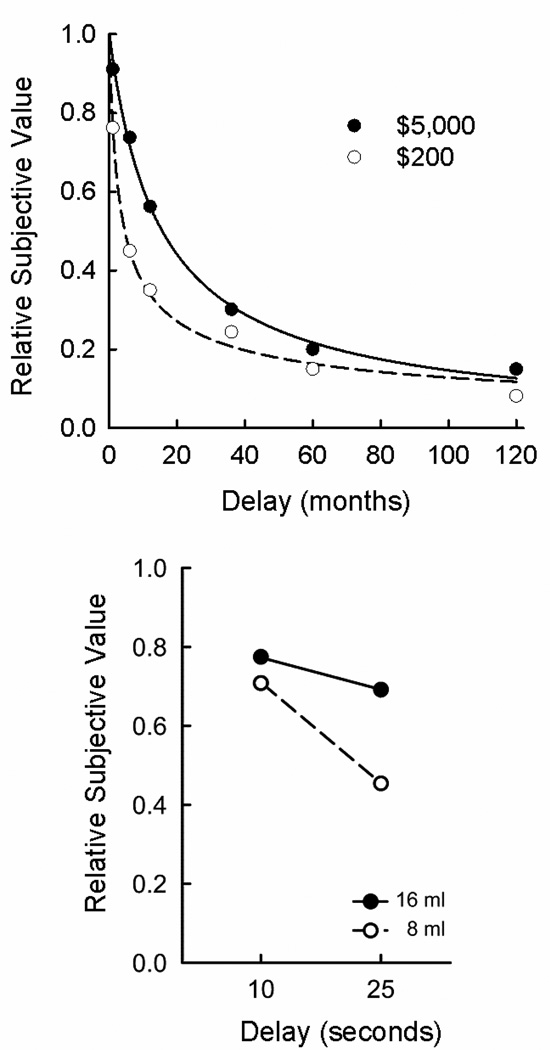

The Magnitude Effect

One of the most robust findings in delay discounting is that humans discount different amounts of the same commodity at different rates. More specifically, the magnitude effect is the finding that humans discount small, delayed rewards more steeply than larger, delayed rewards (Benzion, Rapoport, & Yagil, 1989; Christensen, Parker, Silberberg, & Hursh, 1998; Grace & McLean, 2005; Green, Myerson, & McFadden, 1997; Kirby, 1997). For example, Figure 6 shows how the relative subjective values of two amounts decrease as the delays to each increase. Relative subjective value is subjective value expressed as a proportion of the actual amount of the delayed reward, and it is plotted in Figure 6 to facilitate comparisons of rate of discounting across different reward amounts. As may be seen in the top panel of Figure 6, $200 was subjectively worth only about 45% of its actual value when the delay was 6 months, whereas at this same delay, $5,000 was subjectively worth about 75% of its actual amount. The magnitude effect has been observed not only with monetary amounts (Green et al., 2013) but also with non-monetary outcomes (cigarettes: Baker, Johnson, & Bickel, 2003; health: Chapman, 1996; vacations and rental car use: Raineri & Rachlin, 1993). The bottom panel of Figure 6 shows the discounting of two liquid rewards that actually were consumed on each trial. As with monetary rewards, the smaller amount of liquid was discounted more steeply than the larger amount.
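Because Eq. 2 has a single free parameter, one relative subjective value at a known delay implies a value of k: rearranging V/A = 1/(1 + kD) gives k = (A/V - 1)/D. The sketch below applies this to the approximate values quoted above for the top panel of Figure 6 (about 45% for $200 and about 75% for $5,000 at 6 months). It is only a back-of-the-envelope illustration; the function name is hypothetical, and a k computed from one point is not equivalent to fitting Eq. 2 to a full set of indifference points.

```python
def k_from_relative_value(relative_value, delay):
    """Invert Eq. 2 (V/A = 1 / (1 + kD)) for k at a single delay."""
    if not 0.0 < relative_value <= 1.0 or delay <= 0:
        raise ValueError("need 0 < V/A <= 1 and a positive delay")
    return (1.0 / relative_value - 1.0) / delay


if __name__ == "__main__":
    delay_months = 6
    k_200 = k_from_relative_value(0.45, delay_months)    # $200 worth about 45%
    k_5000 = k_from_relative_value(0.75, delay_months)   # $5,000 worth about 75%
    print(f"k($200)  ~ {k_200:.3f} per month")
    print(f"k($5000) ~ {k_5000:.3f} per month")
    print("smaller amount discounted more steeply:", k_200 > k_5000)
```

The implied k for $200 (roughly 0.20 per month) is several times that for $5,000 (roughly 0.06 per month), which is what a magnitude effect means in terms of the rate parameter.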

Figure 6.

Relative subjective value as a function of delay to receipt of a reward. The top panel shows the discounting of two monetary rewards and the bottom panel shows the discounting of two real liquid rewards of different amounts. In each panel, the larger delayed amount is discounted statistically significantly less steeply as a function of time to its receipt than the smaller, delayed amount (a magnitude effect). Data are from Experiment 2 of “Amount of reward has opposite effects on the discounting of delayed and probabilistic outcomes,” by L. Green, J. Myerson, and P. Ostaszewski, 1999, Journal of Experimental Psychology: Learning, Memory, and Cognition, 25, pp. 418–427, and Experiment 3 of “Are people really more patient than other animals? Evidence from human discounting of real liquid rewards,” by K. Jimura, J. Myerson, J. Hilgard, T. S. Braver, and L. Green, 2009, Psychonomic Bulletin & Review, 16, pp. 1071–1075.

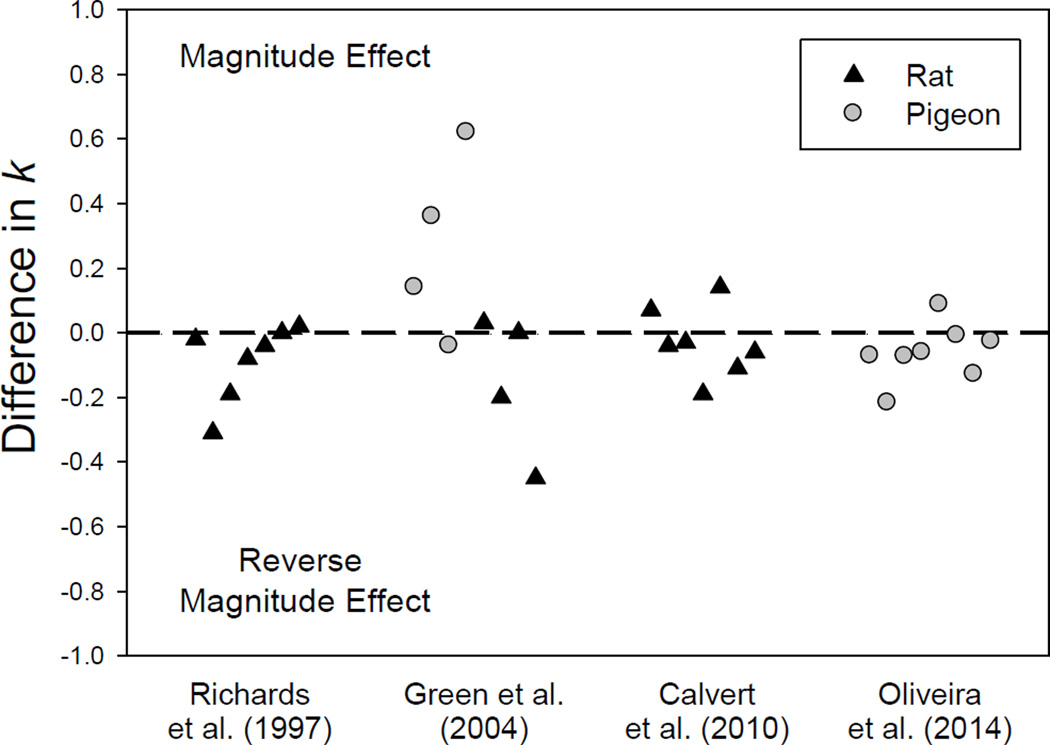

Despite its overwhelming presence in human choice, a magnitude effect has not been observed consistently with nonhuman animals (e.g., Grace, 1999; Oliveira et al., 2014; Ong & White, 2004; Orduña et al., 2013). Using an adjusting-amount procedure, Richards et al. (1997) reported no effect of amount on rats’ discounting of delayed water reinforcers, and Green et al. (2004) found no effect of amount on the discounting of delayed food reinforcers in either pigeons or rats. Even among nonhuman primates, rhesus monkeys discounted 2.0 and 4.0 ml of saccharin at similar rates (Freeman et al., 2009). Figure 7 plots the difference in the k values (derived from Eq. 2) for two reinforcer amounts (i.e., the discounting rate parameter for the smaller delayed amount minus that for the larger delayed amount) in individual rats and pigeons under the adjusting-amount procedure.³ A positive difference represents a magnitude effect, a negative difference represents a reverse magnitude effect, and values close to the dashed line at 0.0 indicate no difference in degree of discounting. Across the individual subjects in four studies, there appears to be no systematic effect of amount on rate of discounting (as measured by the parameter k) by either rats or pigeons with the adjusting-amount procedure.
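The quantity plotted in Figure 7 can be sketched for a single subject as follows. This is a hypothetical illustration, not the fitting method used in the studies cited: it estimates k for each amount with a simple linearization of Eq. 2 (A/V - 1 = kD, fitted as a least-squares line through the origin) and then subtracts k for the larger amount from k for the smaller amount. Linearization weights the data differently from the nonlinear fits usually reported, so its estimates can differ. The function names and example values are invented for illustration.

```python
def k_linearized(delays, relative_values):
    """Estimate k in Eq. 2 from relative subjective values (V/A).

    Eq. 2 rearranges to A/V - 1 = k * D, so k is the slope of a
    least-squares line through the origin of (A/V - 1) on D.
    """
    pairs = [(d, 1.0 / rv - 1.0) for d, rv in zip(delays, relative_values) if d > 0]
    return sum(d * y for d, y in pairs) / sum(d * d for d, _ in pairs)


def magnitude_difference(delays, rv_smaller, rv_larger):
    """k(smaller amount) - k(larger amount): > 0 suggests a magnitude effect,
    < 0 a reverse magnitude effect, and values near 0 no difference."""
    return k_linearized(delays, rv_smaller) - k_linearized(delays, rv_larger)


if __name__ == "__main__":
    delays = [1, 2, 4, 8, 16]
    # Invented data: the smaller amount is discounted with k = 0.2,
    # the larger amount with k = 0.05.
    rv_small = [1 / (1 + 0.2 * d) for d in delays]
    rv_large = [1 / (1 + 0.05 * d) for d in delays]
    print(round(magnitude_difference(delays, rv_small, rv_large), 3))
```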

Figure 7.

Difference in the discounting rate parameter (k) between smaller and larger delayed amounts in individual rats (dark triangles) and pigeons (grey circles) across four studies using an adjusting-amount procedure. Data are from “Determination of discount functions in rats with an adjusting-amount procedure” by J. B. Richards, S. H. Mitchell, H. de Wit, and L. S. Seiden, 1997, Journal of the Experimental Analysis of Behavior, 67, pp. 353–366, “Discounting of delayed food rewards in pigeons and rats: Is there a magnitude effect?” by L. Green, J. Myerson, D. D. Holt, J. R. Slevin, and S. J. Estle, 2004, Journal of the Experimental Analysis of Behavior, 81, pp. 39–50, “Delay discounting of qualitatively different reinforcers in rats” by A. L. Calvert, L. Green, and J. Myerson, 2010, Journal of the Experimental Analysis of Behavior, 93, pp. 171–184, and “Pigeons’ delay discounting functions established using a concurrent-chains procedure” by L. Oliveira, L. Green, and J. Myerson, 2014, Journal of the Experimental Analysis of Behavior, 102, pp. 151–161.

An alternative set of procedures for measuring discounting uses concurrent-chains (see Delay Discounting Assessment Procedures, above). Rather than estimating a k value, as in an adjusting-amount procedure, these procedures assess sensitivity to delay. Sensitivity to delay is the slope of the regression line relating the log of the ratio of initial-link responses made to the two alternatives to the log of the ratio of their terminal-link immediacies (immediacy being the reciprocal of delay). A larger slope represents greater sensitivity to delay, and therefore steeper discounting (e.g., Grace, 1999; Ong & White, 2004). A magnitude effect would be evident if the slope for the smaller amount were greater than that for the larger amount.
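The sensitivity-to-delay estimate described above is the slope of an ordinary least-squares regression, which can be sketched as follows. This is a simplified, hypothetical illustration: the function name and the invented example data are not from the cited studies, and the analyses in those studies may include additional terms (for example, for bias or for the temporal context of the initial links) that are omitted here. Under this approach, a magnitude effect would appear as a larger slope for the smaller-amount component than for the larger-amount component.

```python
import math


def delay_sensitivity(delay_pairs, response_pairs):
    """Slope of log10(B_left / B_right) regressed on log10(I_left / I_right).

    delay_pairs: (left, right) terminal-link delays in seconds, one per condition.
    response_pairs: (left, right) initial-link response totals, one per condition.
    Immediacy I is the reciprocal of delay, so I_left / I_right = D_right / D_left.
    """
    xs = [math.log10((1.0 / dl) / (1.0 / dr)) for dl, dr in delay_pairs]
    ys = [math.log10(bl / br) for bl, br in response_pairs]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


if __name__ == "__main__":
    delays = [(10, 20), (20, 10), (10, 30), (30, 10)]
    # Invented response totals generated with a sensitivity of 0.8.
    responses = [(100 * (dr / dl) ** 0.8, 100) for dl, dr in delays]
    print(round(delay_sensitivity(delays, responses), 3))
```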

Grace (1999) reported no significant difference in slopes between the larger and smaller reinforcer amounts, at both the mean and individual levels, indicating no effect of amount. Both Ong and White (2004) and Orduña et al. (2013) reported no magnitude effect but, interestingly, most individual subjects in their experiments (pigeons and rats, respectively) showed a reverse magnitude effect. To date, only one study has provided evidence that nonhuman animals show a magnitude effect, such that a smaller delayed reinforcer is discounted more steeply than a larger delayed reinforcer. Using a concurrent-chains procedure, Grace et al. (2012) reported that the relative preference for a smaller reinforcer decreased more rapidly as it was delayed in time than did the relative preference for a larger reinforcer, consistent with the view that the smaller reinforcer amount was discounted more steeply. Grace et al., however, did not report the slopes for each of the five pigeons in their study, and so this effect could not be assessed at the individual level. More recently, using a combined concurrent-chains/adjusting-amount procedure, Oliveira et al. (2014) found no evidence of a magnitude effect in pigeons (see Fig. 7 for individual subject results). Taken together, results at both the mean and individual levels, regardless of procedure, suggest that nonhuman animals do not show a reliable magnitude effect. That is to say, animals do not consistently discount small amounts of a reinforcer more steeply than larger amounts of the same reinforcer.

Although no consistent difference has been observed in the degree to which different amounts of the same delayed reinforcer are discounted, varying reinforcer value in other ways might yet reveal a magnitude effect in nonhuman animals. A more preferred reinforcer can differ on many dimensions; it may be greater in quantity or in quality. A 30% sucrose solution, for example, usually is preferred to a 10% or 3% solution, and thus is assumed to have greater value. Farrar et al. (2003) compared delay discounting of these different sucrose concentrations in rats and found that rats discounted the 30% solution more steeply than the lower concentrations. This result appeared to be the opposite of their hypothesis (that the presumably higher-valued reinforcer would be discounted less steeply). Farrar et al. then conducted a follow-up test using the different sucrose concentrations, the results of which were interpreted as indicating that the 30% solution was the least preferred. This limited post-hoc test, however, did not use the same rats in which discounting had been evaluated.

Calvert et al. (2010) replicated Farrar et al.’s (2003) procedure but included several direct preference assessments throughout their experiment. Importantly, Calvert et al. compared sucrose, cellulose, and precision pellets, which are qualitatively different reinforcers. Although the rats showed a very strong preference for the sucrose and the precision pellets over the cellulose pellets, they showed similar discounting rates across all three reinforcers. In a second experiment, Calvert et al. replicated these findings with qualitatively different liquid reinforcers (saccharin and quinine solutions): Despite the overwhelming preference for the saccharin solution, there were no systematic differences in the degree of discounting of the quinine and saccharin solutions.

Both Farrar et al. (2003) and Calvert et al. (2010) used an adjusting-amount procedure in which the amount of the sooner reinforcer was varied in order to determine a point of subjective equivalence between the smaller, sooner and the larger, later reinforcers. If animals are more sensitive to the quality of a reinforcer than to its absolute amount, then adjusting the quality of the smaller reinforcer, rather than its amount, might provide a more sensitive test for a magnitude effect. Freeman et al. (2012) evaluated discounting of delayed 10% and 20% sucrose solutions in rhesus monkeys. Unlike in other experiments, the sucrose concentration, rather than the amount, of an immediate, lower-concentration reinforcer was adjusted in order to find the subjective equivalent of the delayed, higher-concentration (10% or 20%) reinforcer. Consistent with the findings from previous studies, they did not observe any difference in the degree of discounting between the delayed 10% and 20% sucrose solutions, despite the fact that the monkeys strongly preferred the 20% concentration. As noted above, Freeman et al. (2012) did report that rhesus monkeys discounted their most preferred reinforcer (cocaine) less steeply than other reinforcers (sucrose and saccharin) when comparisons were made across studies. Although this finding suggests that at least some animals might show a magnitude effect when reinforcers differ in quality rather than in absolute amount, the overall evidence is weak and inconsistent.

In addition to amount and quality, greater deprivation of a reinforcer typically increases its subjective value (Michael, 1993). In experiments using food as the outcome, animals typically are kept below 100% of their free-feeding body weight to ensure that food will function as a reinforcer. Manipulating level of deprivation therefore might affect the subjective value of a delayed food reinforcer. In humans, income level has been found to be associated with discounting rate, such that those with lower incomes tend to discount money more steeply than those with higher incomes (Green, Myerson, Lichtman, Rosen, & Fry, 1996). It could be argued that those with lower incomes are more deprived than those with higher incomes, and that deprivation of a reward is associated with greater discounting of that reward. Similar findings have been observed with substance-dependent individuals temporarily deprived of their drug (cigarettes: Field, Santarcangelo, Sumnall, Goudie, & Cole, 2006; heroin: Giordano et al., 2002). Specifically, smokers showed steeper discounting of delayed cigarettes when deprived of nicotine, and opioid-dependent individuals discounted delayed heroin more steeply when deprived of the drug than when satiated with it.

Contrary to the findings in the human literature, however, deprivation had relatively little effect on animals’ discounting rates. Richards et al. (1997) found no effect of water deprivation on rats’ discounting rates, and Ostaszewski and colleagues did not observe a consistent effect of food deprivation in rats (Ostaszewski, Karzel, & Bialaszek, 2004; Ostaszewski, Karzel, & Szafranska, 2003). Oliveira, Calvert, Green, and Myerson (2013) manipulated food deprivation in pigeons in two ways. In their first experiment, food deprivation was manipulated by varying body weight (i.e., discounting was studied at both 75% and 95% of free-feeding body weight), and in their second experiment, by varying time since the last feeding (i.e., discounting was studied after both 1 and 23 hours of food deprivation). In both experiments, Oliveira et al. observed no systematic effect of deprivation on the rate of discounting.

Putative Differences in Delay Discounting Between Humans and Nonhumans

It would be rash to assert at this point that there is no essential difference between human behavior and the behavior of lower species; but until an attempt has been made to deal with both in the same terms, it would be equally rash to assert that there is (Skinner, 1953, p. 38).

Both human and nonhuman animals discount future outcomes, and their discounting can be well-described by a hyperboloid model. Despite such significant similarities observed across species, several fundamental differences between human and nonhuman animal discounting have been proposed. Humans, but not other animals, reliably show a magnitude effect; humans, but not other animals, typically require that the s parameter of the hyperboloid (Eq. 3) be less than 1.0; humans, but not other animals, consistently discount rewards of different quality and from different domains at different rates. These purported differences raise questions as to why differences in amount, quality, and deprivation, each of which influences an animal’s preference for and the value of a reinforcer, have relatively little differential effect on the animal’s discounting rate. The purported differences are surprising in light of the fact that humans and nonhuman animals all show hyperbolic discounting.

In addition to these purported differences, arguably the most fundamental difference that has been proposed between human and nonhuman animal decision making concerns the relative degree of self-control. Nonhuman animals discount over a matter of seconds, whereas humans report being willing to wait months, if not years, before receiving a reward. It has been argued that humans have the capacity to mentally time travel and consider future outcomes, thereby accounting for the orders-of-magnitude difference in discounting (Roberts, 2002; Suddendorf & Corballis, 1997). Before a species difference can be assumed, however, it is essential that procedural differences between human and animal discounting experiments be ruled out as likely explanations (Moore, 2010).

Consider, for example, the findings of Calvert, Green, and Myerson (2011), who found in their first experiment that pigeons showed an increase in delay discounting (i.e., the larger, later reinforcer was chosen less often) when a common delay was added before both the smaller, sooner and the larger, later reinforcers. This finding stands in sharp contrast to the results obtained with humans, who, consistent with preference reversals, show a decrease in delay discounting (i.e., the larger, later reward is chosen more often) when a common delay is added before both rewards (e.g., Green, Myerson, & Macaux, 2005). Calvert et al. noted that the salience of the common delay appeared to differ between the human and animal procedures. When choosing between two rewards with delays of two and seven years, respectively, it is relatively easy for humans to separate the delay common to both rewards (two years) from the delay unique to the larger reward (the additional five years). By contrast, in Calvert et al.’s Experiment 1, the common delay to the smaller and to the larger reinforcers was signaled differently for each reinforcer, by two differently colored keys. In their Experiment 2, Calvert et al. used the same stimulus to signal the delay common to each reinforcer (i.e., a flashing light), and a different stimulus to signal the remainder of the delay unique to the larger reinforcer. Under this procedure, pigeon and human data were remarkably consistent.

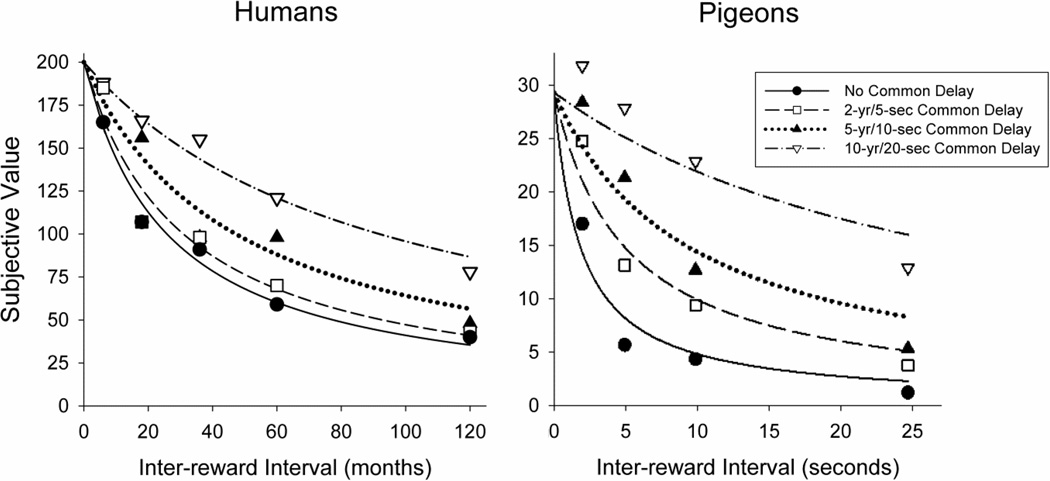

Figure 8 plots the subjective value of a larger, more delayed reward as a function of the time between the smaller, sooner and the larger, more delayed rewards (i.e., the inter-reward interval) for humans (left panel) and pigeons (right panel). Each curve represents the best-fitting hyperbolic discounting function at a different common delay. Like humans, the pigeons now also showed a decrease in their rate of discounting when both reinforcers were delayed, and the degree of discounting systematically decreased as the common delay was increased. Of course, the time scales differ by orders of magnitude, but the same pattern is evident. Thus, a procedural difference, rather than a fundamental species difference, appeared to be driving the original results (see also Mazur & Biondi, 2011, for an example in which differences in rats’ and pigeons’ choices between certain and probabilistic delayed reinforcers turned out to result from procedural details rather than from a fundamental difference in how the two species make such choices).
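A simple hyperbolic model predicts the direction of this common-delay effect. The following derivation is an illustrative sketch, not an analysis reported by Green et al. (2005) or Calvert et al. (2011). It assumes that each reward is discounted by Eq. 2 from the moment of choice, with a smaller amount S available after the common delay c and a larger amount L available after c + u, where u is the delay unique to the larger reward (the symbols S, L, c, and u are introduced here for illustration). At indifference:

```latex
\frac{S}{1 + kc} = \frac{L}{1 + k(c + u)}
\quad\Longrightarrow\quad
S = L\,\frac{1 + kc}{1 + kc + ku}
  = \frac{L}{1 + \left(\dfrac{k}{1 + kc}\right) u}
```

As a function of the unique delay u, the indifference amount S again has the form of Eq. 2, but with an effective rate k/(1 + kc) that decreases as the common delay c increases. The flatter curves at longer common delays in Figure 8 are qualitatively consistent with this prediction; whether the simple model also captures the size of the decrease is a separate, empirical question.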

Figure 8.

Obtained subjective values and the best-fitting hyperbolic curves (Eq. 2) as a function of the delay unique to the delivery of the larger, delayed reward. Each curve represents a different common delay condition (i.e., the delay common to the smaller, sooner and the larger, later rewards). The left panel shows the mean subjective value of a $200 reward discounted by humans, and the right panel shows the mean subjective value of a 30-pellet reinforcer discounted by pigeons. Data are from Experiment 1 of “Temporal discounting when the choice is between two delayed rewards,” by L. Green, J. Myerson, and E. W. Macaux, 2005, Journal of Experimental Psychology: Learning, Memory, and Cognition, 31, pp. 1121–1133, and Experiment 2 of “Discounting in pigeons when the choice is between two delayed rewards: Implications for species comparisons,” by A. L. Calvert, L. Green, and J. Myerson, 2011, Frontiers in Neuroscience, 5, pp. 1–10.

Even when efforts are made to create reasonably similar procedures, differences between species may arise because behavior is not under the control of the same stimulus (Sidman et al., 1982; Urcuioli, 2008). That is, the functional stimulus may be misspecified: different species might attend to a different aspect of a discriminative stimulus from the one the experimenter intended. (For an excellent example of how misspecification of the functional stimulus might have been the reason for a proposed difference between humans and animals, see Swisher & Urcuioli, 2013.)

One of the most obvious procedural differences between human and animal discounting studies is the type of outcome usually presented. Whereas animals typically choose between real primary reinforcers that are consumed on each trial, most discounting procedures with humans involve hypothetical monetary rewards and delays. Any differences observed might then be due to the use of real versus hypothetical outcomes or to the use of consumable versus monetary rewards. This latter possibility is particularly plausible given that humans do show differences in their discounting of hypothetical monetary and consumable rewards (e.g., Estle et al., 2007; Odum & Rainaud, 2003). Contrary to the hypothesis that the difference between animals and humans relates to the use of real versus hypothetical outcomes, however, humans have been shown to discount hypothetical and real or potentially real monetary rewards at approximately the same rate (Johnson & Bickel, 2002; Madden et al., 2003). Moreover, a magnitude effect has been observed with real or potentially real monetary rewards (Johnson & Bickel, 2002; Kirby & Maraković, 1995; Madden et al., 2003), hypothetical food rewards (Odum, Baumann, & Rimington, 2006), and real liquid rewards (Jimura et al., 2009; see bottom panel of Fig. 6). It should be noted, however, that when hypothetical consumable rewards are used with humans, the magnitude effect is smaller than that observed with hypothetical monetary rewards (Estle et al., 2007; Odum et al., 2006). It thus remains possible that procedural differences might play a critical role in the apparent species difference.

An alternative to examining the discounting of consumable rewards in humans is to examine the discounting of conditioned reinforcers (i.e., tokens) in nonhuman animals. In one experiment, pigeons chose between immediate and delayed tokens (light-emitting diodes; LEDs) that later could be exchanged for food during exchange periods (Jackson & Hackenberg, 1996). Pigeons displayed greater self-control (i.e., chose the larger, delayed reinforcer more often) when the reinforcers were tokens than typically is observed when primary reinforcers are used (but see Evans, Beran, Paglieri, & Addessi, 2012, who obtained different patterns of results with capuchins and chimpanzees when using what they termed an accumulation task, in which tokens or food items were individually added to a pile until the animal took from the pile). Jackson and Hackenberg’s finding parallels the finding that humans discount monetary rewards (i.e., conditioned, generalized token reinforcers) less steeply than consumable rewards, suggesting that at least some of the difference between humans and nonhumans might be accounted for by this procedural aspect. No study, as yet, has investigated the magnitude effect with different amounts of token reinforcers in animals.

The oft-cited, if not essential, difference between the decision making of humans and that of other animals is that humans can wait for rewards over delays that are orders of magnitude longer than those for which other animals wait. Whereas animals make choices concerning reinforcers delayed by a matter of seconds, humans report a willingness to wait months if not years for a delayed reward. Recently, however, humans have been shown to discount real, consumable rewards over a matter of minutes (McClure et al., 2007; Rosati et al., 2007), and Jimura et al. (2009, 2011) showed that humans discount real liquid rewards by 40–50% when the delay to the reward was 30 to 60 seconds. Rosati et al. (2007) reported that when humans and chimpanzees were required to wait two minutes for juice rewards, the chimpanzees actually displayed greater self-control, choosing the larger, delayed option more often than the human participants did. However, because of concerns raised about the procedure used in that study, an alternative explanation has been offered (see below).

A procedural difference between human and animal discounting studies that has received little attention is that animals learn about the delays and outcomes by experiencing them, whereas humans are told the delays and reward amounts explicitly. Furthermore, animals make repeated choices, often over weeks or months, until stability is achieved, whereas a human discounting task can be completed in a single session lasting a few minutes. To address some of these differences, Lagorio and Madden (2005) had participants make repeated choices across multiple sessions spanning a few months. As in animal experiments, participants were given forced-choice trials in order to experience each alternative and had to meet a stability criterion before moving on to the next condition. Using this procedure, Lagorio and Madden found no systematic difference between the rates at which real and hypothetical rewards were discounted. Furthermore, participants discounted rewards hyperbolically, as in typical human discounting tasks (i.e., those in which preference is assessed in a single session).

Of note, the delays in the Lagorio and Madden (2005) study were not experienced in the same way that animals typically experience delays in discounting experiments. Lagorio and Madden used delays of up to one month. Participants who chose the larger, later reward actually experienced the delay, but they were, of course, free to do other things during this time. Paglieri (2013) has argued that delays carry different costs: not all delays are experienced in the same way. The biggest difference is between two kinds of delay. In one, the individual must endure the delay without the opportunity to engage in an alternative, and potentially distracting, activity (what Paglieri refers to as “waiting”). In the other, the individual can do whatever it wants during the delay (what Paglieri refers to as “postponing”).

In a nonhuman animal experiment, an animal that chooses the delayed option must wait until the reinforcer is delivered and has few alternative activities available in the interim. In studies with humans using hypothetical monetary rewards, it is implicitly assumed that participants are free to do whatever they want during the delay, and in Lagorio and Madden's study they actually were. Waiting is more costly than postponing. As a consequence, discounting might be expected to be steeper in tasks in which the outcomes are actually experienced and alternative, ‘postponing’ activities are minimal (i.e., the typical nonhuman animal procedure). Indeed, Mischel, Ebbesen, and Zeiss (1972) showed that children’s self-control (i.e., waiting to receive a more-preferred reward) increased when they could play with a toy during the delay. In an animal analog, Grosch and Neuringer (1981) examined pigeons' self-control choices (waiting for the more-preferred food reinforcer). When pigeons had the opportunity to engage in an alternative response during the delay, they made far more self-control choices than when no alternative response was available.

The lengths of the delays and the amounts of the outcomes used in human and nonhuman animal experiments also may be crucial to understanding a species difference in the s parameter. In nonhuman animals, s rarely is less than 1.0, whereas values less than 1.0 are the norm in humans. Recall that s is assumed to reflect the nonlinear scaling of time and amount. One cause of the difference in s between humans and nonhumans might be the range of delays and amounts presented in the experiments. The longest delays in a human experiment might not seem very different subjectively (e.g., 10 versus 20 years). In contrast, the longest delays in a nonhuman animal experiment still might seem quite different (e.g., 10 versus 20 seconds). The large range of delays and amounts used in human experiments might make the nonlinear scaling of time and amount more apparent than it is in animal studies. In animal studies, the smaller range of values might capture only a portion of the utility function, so that time and amount appear to be scaled more linearly. Of note, however, Jimura and colleagues (2009, 2011) tested human participants in an environment similar to that used with animals. When they fit the hyperboloid model to the data, s still was reliably less than 1.0.
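The range argument can be illustrated with a small simulation. The sketch below is illustrative only and rests on several assumptions that this section does not state:

- The hyperboloid (Eq. 3) is assumed to have the form V = A/(1 + kD)^s.
- The sketch generates noise-free values from a hypothetical hyperboloid with s = 0.5.
- It then asks how well a simple hyperbola (s fixed at 1.0) fits those values over a short, animal-style range of delays and over a long, human-style range.

The parameter values, the delay sets (in arbitrary units), and the helper names `hyperboloid` and `best_hyperbola_rmse` are all hypothetical. The crude grid-search fit stands in for the nonlinear regression normally used.

```python
import math


def hyperboloid(amount: float, k: float, s: float, delay: float) -> float:
    """Subjective value under the assumed form V = A / (1 + kD)^s."""
    return amount / (1.0 + k * delay) ** s


def best_hyperbola_rmse(delays: list[float], values: list[float], amount: float) -> float:
    """Fit V = A / (1 + kD), i.e., s fixed at 1.0, by a log-spaced grid
    search over k and return the smallest root-mean-square error found."""
    best = math.inf
    for i in range(2001):
        k = 10 ** (-6 + 6 * i / 2000)  # k spans 1e-6 to 1.0
        sse = sum((v - amount / (1.0 + k * d)) ** 2 for d, v in zip(delays, values))
        best = min(best, math.sqrt(sse / len(delays)))
    return best


# Hypothetical "true" hyperboloid with s < 1 (values chosen for illustration).
AMOUNT, TRUE_K, TRUE_S = 1.0, 0.01, 0.5

delay_sets = {
    "narrow": [0, 2, 5, 10, 20],            # short range, animal-style
    "wide": [0, 30, 90, 365, 1825, 3650],   # long range, human-style
}

rmse = {}
for label, delays in delay_sets.items():
    values = [hyperboloid(AMOUNT, TRUE_K, TRUE_S, d) for d in delays]
    rmse[label] = best_hyperbola_rmse(delays, values, AMOUNT)
    print(f"{label}: best simple-hyperbola RMSE = {rmse[label]:.4f}")
```

With these settings, the simple hyperbola fits the narrow range almost perfectly, because for small kD the two functions are nearly indistinguishable. Its best error over the wide range is many times larger. With real, noisy choice data, the small narrow-range discrepancy would likely be undetectable, so s would appear not to differ from 1.0.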

In both Lagorio and Madden (2005) and Jimura et al. (2009, 2011), participants actually experienced the delays and reward amounts. Even so, they were told the amounts and delays explicitly before experiencing them. Therefore, participants did not have to experience the delays and rewards in order to learn their values. This contrasts with animal procedures, in which delays and amounts must be experienced. To date, no published study with humans has examined discounting under a condition in which information about the delays and amounts is presented only symbolically and is never explicitly stated. One study, however, has examined the effect of symbolic information on discounting in animals. Hwang et al. (2009) trained rhesus monkeys on a task in which visual cues (i.e., colored shapes) on a monitor symbolically signaled the amounts of juice reinforcers and the delays to them. They found that the monkeys’ choices were well described by a hyperbolic discounting function. However, their experiments neither assessed the s parameter of the hyperboloid (Eq. 3) nor varied reinforcer amount to determine whether there was a magnitude effect. Thus, it remains unclear whether these procedural variants might account for differences between human and nonhuman animal discounting.

A final consideration in evaluating results from humans and nonhuman animals concerns the ecological validity of the one-shot, two-alternative paradigm typically used in discounting experiments. Humans and nonhuman animals undoubtedly make intertemporal choices. Arguably, however, many everyday choices do not involve a single encounter with two concurrent response options, each yielding a certain reward at a different time in the future. Animals might behave differently in the two-alternative delay discounting task than when making intertemporal choices in their natural environments (Beran et al., 2014; Kacelnik, 2003; Paglieri et al., 2013; Stephens, McLinn, & Stevens, 2002; for a recent discussion of the ecological validity of discounting procedures, see Hayden, 2015). Stephens and Anderson (2001) compared blue jays’ choices in two tasks. One was a standard discounting task (i.e., a choice between a small, immediate reinforcer and a larger, delayed reinforcer). The other was a patch task modeled after the foraging behavior of blue jays in their natural environment. In the patch task, blue jays could leave the current patch and receive a small amount of food after a short time. Alternatively, they could stay in the current patch and receive more food after a longer time. Stephens and Anderson found that blue jays behaved more impulsively in the standard discounting task than in the patch task, choosing the smaller, sooner reward more often. Additionally, Fawcett, McNamara, and Houston (2011) have argued that opportunity costs and repeated choice opportunities can alter both whether preference reversals appear and their direction. Clearly, when comparing across species, the ecological relevance of the paradigm to each species must be considered.

In summary, human and nonhuman animal discounting have been claimed to diverge on at least three major findings:

(1) Humans discount rewards from different domains, and of different quality and magnitude, at different rates, whereas other animals do not.
(2) The s parameter of the hyperboloid (Eq. 3) is necessary to describe discounting in humans but does not usually differ from 1.0 in other animals.
(3) Humans appear to display much greater self-control (i.e., they discount far less steeply) than other animals.

There typically are significant procedural differences between human and animal discounting tasks, however, and only some of these differences have been evaluated experimentally. For example, the greater self-control displayed by humans appears to depend on the types of rewards and delays used and on the ecological validity of the task for the animal. Both humans and animals discount real consumable outcomes over delays of seconds to minutes (e.g., Jimura et al., 2009; McClure et al., 2007; Rosati et al., 2007). When conditioned reinforcers are used in animal studies, the smaller, sooner reinforcer is chosen less often than typically is observed with real, primary reinforcers, a finding similar to that obtained with humans. Nevertheless, several important differences between human and animal discounting are still not well understood. To date, procedural differences have not been shown to account for either of two findings in nonhuman animals: the lack of a magnitude effect, and an s parameter in the hyperboloid function that typically does not differ from 1.0. Future work should help resolve these discrepancies. Such work could examine the role of symbolic information, the use of token reinforcers with animals, and other procedural variations.

Comment on the magnitude effect: relative versus absolute rate of reinforcement

A magnitude effect is considered an anomaly according to standard normative economic theory (e.g., Loewenstein & Prelec, 1991, 1992; Loewenstein & Thaler, 1989). The question to ask, then, might not be “Why do animals not show the magnitude effect?” but rather “Why do humans show it?” Several theories have been proposed to account for the magnitude effect in humans (e.g., Loewenstein & Prelec, 1991; Myerson & Green, 1995; Raineri & Rachlin, 1993). One of the most influential in economics is Thaler’s theory of mental accounting (Thaler, 1985). Thaler suggested that humans keep different “mental accounts” for different amounts of reward. He argued, for example, that people conceptualize smaller amounts of money as “spending money” but larger amounts as “savings.” Waiting for a small amount of “spending money” requires postponing consumption. Waiting for a large amount of “savings,” in contrast, requires only postponing the interest those savings would earn. The magnitude effect occurs because people are more willing to postpone earning interest than to postpone consumption. It might reasonably be argued that other animals (e.g., pigeons and rats) do not have various mental accounts as humans do, skirting the issue of whether they have even one. Accordingly, Thaler’s theory would explain why humans, but not other animals, show the magnitude effect.

Oliveira et al. (2013) proposed that choice in nonhuman animals may well be influenced more by relative than by absolute rates of reinforcement, consistent with the matching law (Herrnstein, 1970). Specifically, in the context of the effect of deprivation on discounting rate, they suggested that deprivation in animals might produce proportionally equivalent changes in the subjective values of both reinforcer alternatives. In humans, deprivation appears to affect the immediate reward more, thereby increasing its value relative to that of the larger, delayed reward. In nonhuman animals, by contrast, deprivation might affect the smaller and larger reinforcers in proportionally similar ways. If deprivation, quality, and amount affect the value of each outcome in proportionally equivalent ways, then choice would be unaffected by these manipulations.
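The proportionality argument can be made concrete with a toy calculation. The sketch makes three assumptions, for illustration only:

- Choice follows strict matching on subjective values, P(sooner) = V_ss / (V_ss + V_ll).
- Each value follows a simple hyperbola, V = A/(1 + kD).
- The amounts, the delay, k, and the 1.5 "deprivation multiplier" are hypothetical.

The calculation compares a manipulation that scales both values equally with one that boosts only the immediate option.

```python
def hyperbolic_value(amount: float, k: float, delay: float) -> float:
    """Subjective value of a reinforcer under the assumed form V = A / (1 + kD)."""
    return amount / (1.0 + k * delay)


def p_sooner(v_sooner: float, v_later: float) -> float:
    """Proportion of choices for the smaller, sooner option under strict matching."""
    return v_sooner / (v_sooner + v_later)


K = 0.2                                   # hypothetical discounting rate (per second)
v_ss = hyperbolic_value(1.0, K, 0.0)      # smaller, sooner: 1 unit, no delay
v_ll = hyperbolic_value(3.0, K, 10.0)     # larger, later: 3 units after 10 s

baseline = p_sooner(v_ss, v_ll)
# Hypothetical deprivation effect that scales BOTH values by the same factor:
# the factor cancels in the ratio, so predicted choice is unchanged.
proportional = p_sooner(1.5 * v_ss, 1.5 * v_ll)
# Hypothetical deprivation effect on the immediate option only:
# preference shifts toward the smaller, sooner reinforcer.
immediate_only = p_sooner(1.5 * v_ss, v_ll)

print(baseline, proportional, immediate_only)
```

Under these assumptions the script prints `0.5 0.5 0.6`. A common multiplicative change leaves the predicted choice proportion untouched. A change confined to the immediate option increases its share, which is the pattern described above for humans.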

Although this proposal has not been tested directly, several findings are consistent with it. Logue and Peña-Correal (1985) evaluated pigeons’ choices between larger, delayed reinforcers and smaller, less-delayed reinforcers as body weight and post-session feeding were manipulated. They found that at a lower body weight, pigeons responded more during reinforcer delays, when responses were not reinforced. Additionally, pigeons not given post-session feeding inserted their heads into the food hopper with shorter latencies than pigeons fed up to 80% of their free-feeding weight immediately after each session. Interestingly, however, Logue and Peña-Correal found no systematic effect of deprivation on choice between the larger, later and smaller, sooner reinforcers. Thus, deprivation affected some feeding behaviors but did not affect choice. The hypothesis that nonhuman animals’ choices are based more on relative than on absolute reinforcer values might account for the putative species difference. The reason for such a difference, should it be sustained, remains unclear.

An Adaptationist Perspective of Self-control and Impulsivity

Regardless of any differences between human and nonhuman decision making, there are fundamental similarities. All animals discount future outcomes, and they do so in a similar manner: Outcomes lose subjective value in a decreasing, decelerating fashion as the delay until receipt increases. In addition, the same hyperboloid model describes the choice of many different types of outcomes across all species studied. These similarities suggest that delay discounting is a fundamental aspect of decision making across situations and species.
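To make “decreasing, decelerating” precise, the following derivation assumes that the hyperboloid (Eq. 3) has the form V = A/(1 + kD)^s. Here amount A > 0, discounting parameter k > 0, and s > 0; setting s = 1 gives the simple hyperbola. Differentiating with respect to delay D gives:

$$
V = \frac{A}{(1 + kD)^{s}}
$$

$$
\frac{dV}{dD} = -\,s\,k\,A\,(1 + kD)^{-(s+1)} < 0,
\qquad
\frac{d^{2}V}{dD^{2}} = s\,(s+1)\,k^{2}A\,(1 + kD)^{-(s+2)} > 0
$$

The negative first derivative means that value falls as delay increases. The positive second derivative means that each additional unit of delay removes less value than the one before, which is the deceleration described above.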

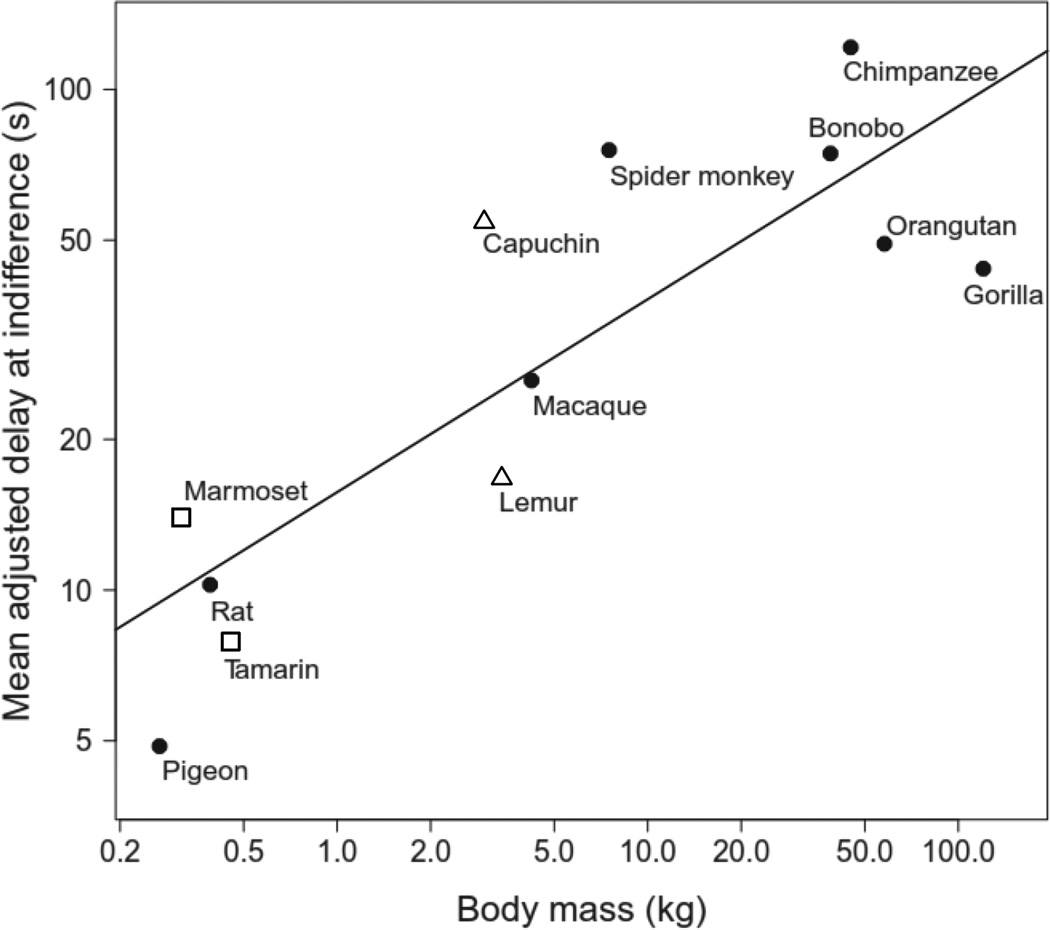

Where differences do exist, some are quantitative rather than qualitative and, moreover, demonstrate the adaptive significance of discounting future rewards. Environmental constraints and evolved adaptations to different environmental demands play a significant role in how steeply organisms discount. They also shape choices between smaller, sooner and larger, later outcomes, and they help explain some species differences. Monkeys, for example, are reported to discount delayed reinforcers less steeply than rodents but more steeply than great apes (Addessi et al., 2011; Mazur, 2000). It has been suggested that life expectancy accounts for this pattern of findings (e.g., Stevens & Mühlhoff, 2012). For organisms with shorter lifespans (e.g., rodents), it often would not be adaptive to forgo a smaller reinforcer for the chance of a larger reinforcer far in the future. For these species, it may well be more advantageous to take what they can obtain in the present. In contrast, for organisms with far longer lifespans (e.g., great apes), it often would be more beneficial in the long run to wait for a larger reinforcer in the future.