Abstract

Choice behavior requires weighing multiple decision variables, such as utility, uncertainty, delay, or effort, that combine to define a subjective value for each considered option or course of action. This capacity is based on prior learning about potential rewards (and punishments) that result from prior actions. When made in a social context, decisions can involve strategic thinking about the intentions of others and about the impact of others' behavior on one's own outcome. Valuation is also influenced by different emotions that serve to adaptively regulate our choices in order to, for example, stay away from excessively risky gambles, prevent future regrets, or avoid personal rejection or conflicts. Drawing on economic theory and on advances in the study of neuronal mechanisms, we review relevant recent experiments in nonhuman primates and clinical observations made in neurologically impaired patients suffering from impaired decision-making capacities.

Keywords: decision making, emotion, neuroeconomics, social cognition

Introduction

Neuroeconomics is a general framework for studying the neural basis of economic decision making. At first glance, one may wonder how this discipline can be relevant to the sort of issues that neurologists and psychiatrists are concerned with, beyond identifying the causes of pathological gambling or compulsive spending disorders. However, neuroeconomics is neither based on nor primarily concerned with monetary exchanges. Although money is often used as a convenient proxy of value in behavioral and neuroimaging studies, economic analysis of choice behavior can be applied in various situations where individuals need to estimate the desirability of goods and services in the broadest possible sense, for example, when making choices about food items on a restaurant menu, physical exercise, career prospects, commitment in a relationship, a child's education. The goal of economic theory is to account for choice behavior, using a restricted set of assumptions, the most fundamental one being that agents are essentially self-interested, rational maximizers, computing utility functions, weighing the expected value of an outcome by its probability or the delay in obtaining it. Behavioral economics further attempts to account for observations that seem to deviate from the predictions of standard economic theory, like the fact that individuals sometimes systematically over- or underestimate probabilities or act altruistically, by taking into account how cognitive, emotional, or social factors interact to bias our choices. Bringing concepts from economic theory into the realm of everyday behavior thus provides a formalization of what makes a prospect attractive or repulsive in terms of specific, tractable computations. These computations can then be traced to the activity of single neurons or populations of neurons in different brain regions, or revealed through specific selective deficits in pathological conditions.

Our objectives in this brief review are to introduce some of the core concepts used in behavioral economics, to make arguments based on data from animal research for the evolutionary origin of the basic neuronal mechanisms for value-based decision making, and to illustrate with clinical studies the relevance of a neuroeconomic approach in appraising different types of behavioral and cognitive disturbances.

Behavioral economics

In most natural situations, our choices are rarely totally black or white, and predicting how people will choose requires an accurate model of how value is assigned to outcomes. The simplest possible computation of subjective value is a product of “utility,” u, and its probability of occurrence, P (let us assume that the cost associated with 1-P is null). This seems like a reasonable starting point since most decisions are probabilistic: for example, expectations of a successful day at the beach hinge on rainfall statistics, and deciding to wait for a hotline operator is influenced by the probability of simultaneous calls from other customers at that particular time of the day. However, options with seemingly identical expected values may lead to different choice preferences. The prospects of winning 1€ with a fixed probability of P=1 or winning 10€ with a fixed probability of P=1/10 lead, in the long run, to similar average gains, but can generate different preferences depending on an individual's attitude toward risk. Furthermore, in real-world settings, probabilities are generally not fixed and fluctuate over time. Experimental studies have shown that expected values are computed by taking into account the mean and variance of outcome probability distributions.1 Another key issue is time. Decisions generally produce outcomes in the future, and outcomes that occur later are devalued relative to identical, but immediate, ones. Again, experimental data show that optimal decision making in humans is based on time discounted expected values of outcomes.
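As a minimal sketch of the computations described above, the following Python snippet compares the two example gambles (1€ with P=1 versus 10€ with P=1/10) on their mean and variance and applies a delay discount. The function names are illustrative, and the hyperbolic discounting form with parameter `k` is an assumption chosen for the example; the text above does not specify a particular discounting function.

```python
def expected_value(outcomes):
    """Mean payoff of a gamble given as (probability, payoff) pairs."""
    return sum(p * x for p, x in outcomes)


def variance(outcomes):
    """Variance of the payoff, used here as a simple index of risk."""
    ev = expected_value(outcomes)
    return sum(p * (x - ev) ** 2 for p, x in outcomes)


def discounted(value, delay, k=0.1):
    """Delay-discounted value.

    Assumption: hyperbolic form value / (1 + k * delay); k is a
    hypothetical, subject-specific impatience parameter.
    """
    return value / (1 + k * delay)


# The two prospects from the text: same expected value, different risk.
sure_thing = [(1.0, 1.0)]               # 1 euro with P = 1
long_shot = [(0.1, 10.0), (0.9, 0.0)]   # 10 euros with P = 1/10

for name, gamble in [("sure thing", sure_thing), ("long shot", long_shot)]:
    print(name, expected_value(gamble), variance(gamble))
```

Both gambles have the same mean, so any difference in preference between them has to come from the variance term, that is, from the individual's attitude toward risk.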

More complex situations arise when multiple individuals interact. In behavioral economics, game-theory approaches are used to analyze scenarios in which an outcome is contingent upon mutual decisions made by two or more individuals. A typical example is the “prisoner's dilemma.” Two players must simultaneously choose one of two possible options. Payoffs are organized such that it is most advantageous for both players to choose option A. However, if only the first player picks option A and the second player opts for B, the first player will end up with a far worse outcome than had he/she chosen the less attractive option B. This type of game is a model of actual social interactions where individuals need to anticipate each other's intentions. If the prisoner's dilemma is played only once, game theory predicts that rational self-interested players will choose option B. If repeated multiple times, players can respond to each other's actions and have opportunities to “retaliate” for or “forgive” noncooperative behavior, and this may engender different dominant strategies (eg, tit for tat, win-stay/lose-shift).2
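The logic of the one-shot and repeated game can be illustrated with a short Python sketch. The payoff values (3, 0, 5, 1) are conventional illustrative numbers and are an assumption, as are the function names; only the ordering of the payoffs matters for the argument.

```python
# Payoffs (player 1, player 2) for each pair of moves.
# Assumption: illustrative values; A = cooperate, B = defect.
PAYOFF = {
    ("A", "A"): (3, 3),
    ("A", "B"): (0, 5),
    ("B", "A"): (5, 0),
    ("B", "B"): (1, 1),
}


def best_reply(other_move):
    """Move that maximizes one's own payoff against a fixed opponent move."""
    return max("AB", key=lambda move: PAYOFF[(move, other_move)][0])


def tit_for_tat(own_history, other_history):
    """Cooperate first, then copy the opponent's previous move."""
    return other_history[-1] if other_history else "A"


def always_defect(own_history, other_history):
    """Choose option B on every round."""
    return "B"


def play(strategy1, strategy2, rounds=10):
    """Play the repeated game and return the two cumulative scores."""
    h1, h2, score1, score2 = [], [], 0, 0
    for _ in range(rounds):
        m1 = strategy1(h1, h2)
        m2 = strategy2(h2, h1)
        p1, p2 = PAYOFF[(m1, m2)]
        h1.append(m1)
        h2.append(m2)
        score1 += p1
        score2 += p2
    return score1, score2


print(best_reply("A"), best_reply("B"))   # B is the best reply either way
print(play(tit_for_tat, tit_for_tat))     # sustained mutual cooperation
print(play(tit_for_tat, always_defect))   # retaliation after the first round
```

With these payoffs, option B is the best reply whatever the other player does, which is why it is predicted in a single round, whereas two tit-for-tat players earn more over repeated rounds than two players who always defect.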

Rationality assumptions posited by economic theory generate predictions that are often verified, but the literature is replete with paradoxes and violations of what is sometimes referred to as the “standard model.” In fact, much current work in behavioral economics is devoted to refining or proposing alternative theories in order to accommodate experimental observations. Calculating subjective values is not always a straightforward process. Probability weighting and delay discounting functions are notably nonlinear. For instance, biases, such as the tendency to overestimate small probabilities, can lead to an exaggerated aversion to certain types of risky choices. Utilities also show nonlinearities, with losses looming larger than same-sized gains, a phenomenon referred to as loss aversion.3 Unexpected outcomes in social games occur because of individual differences in the “theory of mind” abilities required for strategic reasoning. However, some of the most important challenges to the standard model are those that question the rationality assumption itself and highlight the impact of emotions, such as regret, envy, altruism, and inequity aversion. For example, in another widely used test, the “ultimatum game,” a player is given a sum of money, part of which has to be transferred to a second player; that player can then accept the offer, and both players keep their respective share, or reject the offer, and both players leave empty-handed. Strict utilitarianism predicts that the first player should transfer the smallest nonzero amount possible and the second player should accept it, because something is always better than nothing. But the common finding is that the first player transfers a sizeable share of the money, possibly because of an inherent prosocial motivation for cooperation and reciprocity or perhaps more pragmatically because, anticipating the other's inequity aversion, he/she wishes to preempt rejection of an offer that would be considered unfair.4
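To make the ultimatum game argument concrete, here is a hedged Python sketch of a responder whose utility is reduced by disadvantageous inequity. The linear penalty and the `envy` parameter are assumptions made for illustration and are not a model endorsed by the text; setting `envy` to zero recovers the strictly self-interested responder.

```python
def responder_utility(offer, pot, envy=0.0):
    """Utility of accepting an offer.

    Assumption: own payoff minus a linear penalty on how much more the
    proposer keeps; envy = 0 gives the purely self-interested case.
    """
    proposer_share = pot - offer
    return offer - envy * max(proposer_share - offer, 0)


def accepts(offer, pot, envy=0.0):
    """Accept when accepting beats rejecting (rejection pays 0 to both)."""
    return responder_utility(offer, pot, envy) > 0


def smallest_accepted_offer(pot, envy=0.0):
    """Lowest whole-unit offer the responder would accept, if any."""
    for offer in range(1, pot + 1):
        if accepts(offer, pot, envy):
            return offer
    return None


print(smallest_accepted_offer(10, envy=0.0))  # self-interested responder
print(smallest_accepted_offer(10, envy=1.0))  # inequity-averse responder
```

A proposer who anticipates an inequity-averse responder therefore has a purely pragmatic reason to offer more than the minimum, which is one of the two explanations mentioned above.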

Neuronal mechanisms

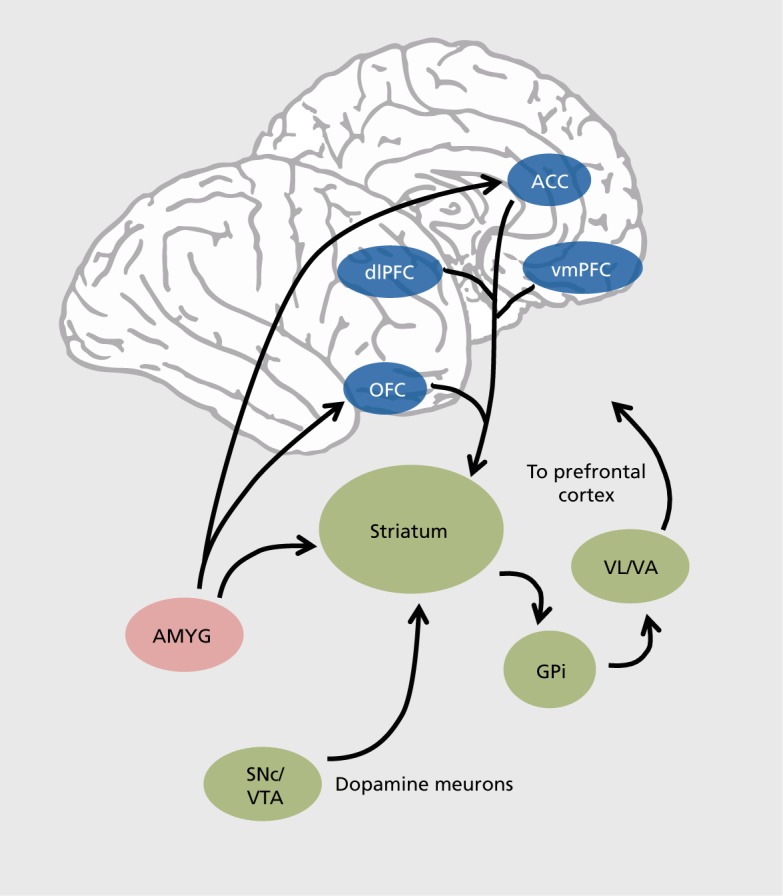

It can thus be seen that empirical descriptions provided by models of real-world decisions can be considered as normative behavior when evaluating pathological deviations in patient populations. These models are also useful in the quest to elucidate the underlying neurobiological mechanisms. Neuroimaging studies have contributed to the identification of the cerebral networks activated by value estimations and choice behavior in humans. Many of the behavioral observations made in humans have been verified in animals, including probability distortions, long-term reward rate maximization,5 and inequity aversion, thus underlining the relevance of animal research.6 In fact, much of the human neuroimaging work has been directly inspired by findings from neuronal recording experiments conducted in rodents and nonhuman primates (Figure 1). We briefly summarize some of the key results regarding the neuronal basis of reward processing and choice behavior, drawing on work conducted in nonhuman primates, the species whose cerebral organization is closest to ours. An extensive presentation of the flourishing literature based on human functional magnetic resonance imaging (fMRI) is beyond the scope of the present paper and interested readers can find several detailed reviews in refs 7-9.

Figure 1. Simplified schematic representation of the primate reward and decision-making network. AMYG, amygdala; GPi, internal globus pallidus; VL/VA, ventral lateral and ventral anterior thalamic nuclei. Midbrain nuclei containing dopaminergic neurons: SNc/VTA, substantia nigra pars compacta/ventral tegmental area. Subdivisions of the prefrontal cortex: ACC, anterior cingulate cortex; dlPFC, dorsolateral prefrontal cortex; OFC, orbitofrontal cortex; vmPFC, ventromedial prefrontal cortex.

The biological cornerstone of valuation processes is the brain's reward system and one of its major components, the dopamine neuronal pathway. Midbrain dopamine neurons have been implicated in different functions, but first and foremost in signaling the hedonic value of stimuli in the environment, and are thus often considered as forming the brain's “reward retina.” Macaque dopamine neurons have been found to encode variables essential for reinforcement learning, such as the difference between expected and actual reward outcomes. In simple classical conditioning experiments, dopamine neurons respond to unexpected rewards with a sharp increase in discharge rate, and to expected but absent rewards with a sharp decrease in discharge rate.10 These signals are believed to contribute to learning by signaling both positive and negative changes in the environment, corresponding to the reward or utility prediction error postulated by models of economic decision making. Dopamine neurons also encode reward expectation through tonic discharges that, interestingly, scale with reward probability and thus convey information about risk and uncertainty.11
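The prediction error described above can be sketched in a few lines of Python. The update rule used here (value moved toward the outcome by a fraction `alpha` of the error) is a standard textbook simplification adopted as an assumption; it is not claimed to be the computation performed by dopamine neurons, and the parameter values are arbitrary.

```python
def prediction_error(expected, received):
    """Positive when the outcome is better than expected, negative when worse."""
    return received - expected


def learn(rewards, alpha=0.2, value=0.0):
    """Update a reward expectation trial by trial.

    Assumption: simple delta rule, value += alpha * error, with a
    hypothetical learning rate alpha.
    Returns the final expectation and the error on each trial.
    """
    errors = []
    for reward in rewards:
        delta = prediction_error(value, reward)
        value += alpha * delta
        errors.append(delta)
    return value, errors


# A cue is followed by a reward of 1.0 on fifty consecutive trials.
value, errors = learn([1.0] * 50)
print(errors[0])                     # large positive error: unexpected reward
print(errors[-1])                    # near zero: the reward is now expected
print(prediction_error(value, 0.0))  # negative error: expected reward omitted
```

The three printed values correspond to the three situations in the conditioning experiments: an unexpected reward, a fully predicted reward, and an expected but absent reward.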

Explicit reward signals are found in most midbrain dopamine neurons, as well as in subsets of neurons receiving dopaminergic projections in the orbitofrontal cortex (OFC), in the ventral striatum, and in the amygdala. These neurons further respond to conditioned stimuli that predict future reward. Another class of cells links information about reward with sensory or action information. The OFC contains a representation of primary reinforcers, such as food or sexual stimuli. These are neurons that discriminate sensory quality within and across categories, but do not merely encode the physical characteristics of stimuli. Activity is elicited by a specific food item when the animal is motivated to acquire it, but stops if the animal is sated.12 In other words, reward-coding neurons respond if the “marginal utility” of that particular food is sufficiently high, ie, if it satisfies a current need or desire. Rewards and reward expectation also affect the activity of more dorsal and medial regions of the prefrontal cortex involved in action selection and planning. In fact, many cortical (prefrontal, cingulate, parietal, inferotemporal cortex) and subcortical (striatum, amygdala, superior colliculus) structures involved in high-level sensory and/or motor integration receive reward information.13 Unrewarding and aversive events are also represented, most commonly in distinct neuronal subpopulations in the midbrain, cortex, and amygdala. However, cells in the posterior parietal cortex have been shown to encode both rewarding and aversive stimuli, in which case activity is interpreted as reflecting motivational salience rather than value.14 Together, these results suggest that reward information serving to select relevant stimuli and to guide goal-directed approach and avoidance behavior is broadcast widely throughout the primate brain.

If reward neurons are to encode decision variables, their activity should reflect subjective value in a generic way; that is, preferences for different goods should be encoded in a common “currency.” Monkeys do not use money to purchase goods. However, their preferences can be precisely estimated by offering fruits or juices in different amounts and measuring subjective equivalences; for example, how much a grape is worth in bits of cucumber. When recorded during the performance of such a task, dopamine neurons and reward-sensitive neurons in the OFC have been shown to discharge equal numbers of action potentials for subjectively equivalent food rewards, irrespective of nature or amount, and to scale their firing rate as a direct function of behavioral preference, thus effectively representing value in a common neural currency.15,16 Further studies have focused on how the brain translates subjective value into actual choices and actions. Real-world economic decisions can sometimes be straightforward but mostly involve multiple, ambiguous outcomes and require the integration of several factors, for example, delay, uncertainty, and action cost. Formal models of decision generally postulate a competition between signals that accumulate in favor of each option, racing toward a threshold and culminating in a sort of winner-take-all decision. Evidence for such a process has been observed in several regions, including the dorsal and ventral striatum, and the dorsolateral prefrontal, posterior parietal, and anterior cingulate cortex (ACC), although the models best fitting the data remain a subject of debate.17,18
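The race-to-threshold idea can be illustrated with a deliberately simple Python simulation. This is one hypothetical variant (independent noisy accumulators with a drift proportional to subjective value); the `gain`, `noise`, and `threshold` parameters are arbitrary assumptions, and the sketch takes no position on which model best fits the neuronal data.

```python
import random


def race(values, threshold=1.0, gain=0.1, noise=0.1, rng=None, max_steps=10_000):
    """Simulate one decision as a race between noisy accumulators.

    Assumption: each option accumulates gain * value plus Gaussian noise
    per time step; the first accumulator to reach the threshold wins.
    Returns (index of the chosen option, number of steps taken).
    """
    if rng is None:
        rng = random.Random()
    totals = [0.0] * len(values)
    for step in range(1, max_steps + 1):
        for i, value in enumerate(values):
            totals[i] += gain * value + rng.gauss(0.0, noise)
        leader = max(range(len(values)), key=lambda i: totals[i])
        if totals[leader] >= threshold:
            return leader, step  # winner-take-all outcome
    return None, max_steps  # no accumulator reached the threshold


# Option 0 has twice the subjective value of option 1.
rng = random.Random(0)
choices = [race([1.0, 0.5], rng=rng)[0] for _ in range(500)]
print(choices.count(0) / len(choices))  # proportion of higher-value choices
```

Because of the noise term, the higher-valued option is chosen on most but not all trials, and decisions between options of similar value take longer, two qualitative features that such models are designed to capture.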

Patient studies

Reward and dopamine

Identifying the elementary neural mechanisms used to compute expected values and guide rational decision making is a central objective of research in neuroeconomics, but also in the domains of reinforcement learning (concerned with animals and humans) and machine learning (concerned with computer models and robots). All three disciplines are interested in how rewards and punishments shape responses to the environment by means of signals arising in midbrain dopamine neurons. Studies conducted in patients with conditions in which dopamine function is altered, such as Parkinson disease, Tourette syndrome, or attention-deficit hyperactivity disorder, are thus directly relevant to this issue. For example, patients with Tourette syndrome show early-onset physical and vocal tics, believed to result from dopamine-induced basal ganglia dysfunction and often treated with neuroleptics and dopamine antagonists. In a series of elegant studies, Worbe et al19 and Palminteri et al20,21 studied reinforcement learning in Tourette patients, both on and off medication. They used a simple cued go/no-go task to expose participants to cue-reward associations (monetary gain) as well as cue-punishment associations (monetary loss). Patients showed learning profiles according to their treatment status that varied in a manner consistent with the hypothesis of dopamine hyperfunctioning in Tourette syndrome. When off medication, patients had impaired punishment learning, whereas when on medication they had impaired reward learning. The authors interpret this pattern of findings according to the theory of dopamine's dual action in reward and punishment learning through, respectively, positive and negative prediction error signals.10 In untreated patients, because baseline levels of dopamine are too high, punishments cannot pull the dopamine response low enough to generate a negative prediction error signal. By contrast, in patients treated with dopamine antagonists, baseline levels of dopamine are kept too low, and rewards cannot push the dopamine response high enough to generate a positive prediction error. In support of this hypothesis, a similar, but mirror-reversed dissociation in reward and punishment learning pattern has been found in patients with Parkinson disease on (high baseline dopamine) and off (low baseline dopamine) L-Dopa treatment.22,23
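The baseline account given above can be restated as a toy Python model. Everything in it is a hypothetical illustration: the thresholds, the unit-sized responses, and the function name are assumptions chosen only to show the logic of the argument, not parameters estimated from patient data.

```python
def registered_signals(baseline, low=-0.5, high=0.5, magnitude=1.0):
    """Which prediction error signals get through at a given dopamine baseline.

    Assumptions: a reward raises and a punishment lowers the dopamine
    response by `magnitude` around `baseline`; a positive error registers
    only above `high`, a negative error only below `low`.
    """
    reward_response = baseline + magnitude
    punishment_response = baseline - magnitude
    return {
        "positive_error": reward_response > high,
        "negative_error": punishment_response < low,
    }


print(registered_signals(0.0))   # balanced baseline: both signals available
print(registered_signals(1.0))   # high baseline: punishment signal lost
print(registered_signals(-1.0))  # low baseline: reward signal lost
```

In this toy model, a high baseline spares reward learning and abolishes the punishment signal, and a low baseline does the reverse, which is the double dissociation reported across medication states.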

Although a faulty reinforcement learning mechanism cannot account for the entire spectrum of deficits observed in basal ganglia disorders, it could partly explain a number of motor and cognitive symptoms. Reduced negative reinforcement due to excessive dopamine activity could be involved in the inability to suppress tics in Tourette syndrome, but also in impulse control disorders, such as pathological gambling and hypersexuality, all of which have also been observed as side effects of L-Dopa treatment in Parkinson disease. The major drugs of addiction have a variety of pharmacological actions resulting in overstimulation of the dopaminergic reward system.24 Reduced reward sensitivity due to low dopamine levels might be involved in early nonmotor symptoms of Parkinson disease and in psychiatric conditions associated with depression and apathy. Considerations about the role of dopamine imbalance must also take into account the heterogeneity of lesions and of functional anomalies in the midbrain, striatum, and associated cortical and limbic networks. Understanding the specificity of behavioral disturbances in light of the known anatomy and pharmacology of the basal ganglia is a key objective for much human and nonhuman primate research in this field.25

Nonsocial decision making

The ventromedial and orbitofrontal regions are important cortical targets of dopaminergic projections, but also receive inputs from limbic structures, hippocampus, and other cortical areas. Probably the first and best-known study of economic decision making in brain-lesioned patients is that of Bechara and collaborators,26 who introduced the Iowa gambling task, in which participants are presented with four different decks from which they pick cards in order to earn game money. Two decks contain some cards with small gains and fewer cards with small losses, yielding, on average, positive payoffs. Two other decks contain some cards with high gains and a larger number of cards with high losses, yielding, on average, negative payoffs. Normal subjects, after sampling cards from each deck, develop a preference for the good decks and avoidance of the bad ones. Also, as learning takes place, subjects show an increase in skin conductance before picking cards from the bad decks, even before they become aware of any difference in expected value among the four decks. This implicit stress response is considered evidence for the activation of somatic and emotional signals in guiding decisions, the so-called somatic marker hypothesis. When tested with the Iowa gambling task, patients with ventromedial prefrontal cortex (vmPFC) lesions and patients with bilateral amygdala lesions failed to develop a preference for the safer, advantageous decks and either chose randomly or had a preference for the risky decks, leading to overall monetary losses. Interestingly, while control subjects and prefrontal cortex-lesioned patients showed skin conductance responses to winning and losing, amygdala-lesioned patients failed to show such responses. Furthermore, contrary to controls, neither patient group showed anticipatory skin conductance responses to the risky decks, suggesting that defective emotional signaling in the amygdala and defective routing of this information through prefrontal cortex resulted in a similar negative impact on loss (or risk) aversion learning. Bechara et al27 point out that some of the vmPFC patients were aware that the decks were bad yet persevered in their risky choices; the authors draw a parallel with other pathological conditions, such as drug addiction or psychopathy, where maladaptive or pathological behavior persists despite intact awareness of the consequences of one's actions.

Our group used a different type of gambling game in order to study the interactions between emotions and value-based decisions in patients with OFC lesions.28 Subjects chose between two gambles of known probabilities and rated subjectively felt emotions after the outcome was revealed. Different types of emotions could be measured depending on how much information was supplied. In the presence of feedback on the chosen gamble only, subjects experienced either satisfaction or disappointment, depending on the outcome value. When feedback was given on both gambles, they could compare “what is” with “what could have been” and hence could experience more complex forms of emotions, such as rejoicing or regret. This is an important issue, because in behavioral economics, decisions made under uncertainty generate regret aversion, leading subjects to estimate (and sometimes overestimate) future regret when making choices in order to minimize the risk of experiencing it later.29 Patients with OFC lesions reported feeling elated and saddened when their chosen gamble produced wins and losses, respectively. They also showed commensurate emotional arousal, as measured by their skin conductance response, in agreement with previous findings.26 However, contrary to control subjects, neither their emotional responses nor their decision strategies were affected by information on the foregone option. For instance, when the chosen gamble yielded a small positive earning, their satisfaction level was not reduced by discovering that the outcome of the other gamble was even more advantageous, nor was it enhanced when discovering it was much worse.
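The difference between partial and complete feedback can be summarized in a short Python sketch. The additive form of the rating and the `regret_weight` parameter are assumptions made for illustration; they are not the model fitted in the study, and the payoff numbers are arbitrary.

```python
def rated_emotion(obtained, foregone=None, regret_weight=1.0):
    """Hypothetical emotional rating after a gamble outcome.

    Assumption: the rating is the obtained outcome plus a weighted
    comparison with the foregone outcome, when that outcome is shown.
    regret_weight = 0 models insensitivity to the foregone option.
    """
    rating = obtained
    if foregone is not None:
        # Counterfactual comparison: "what is" versus "what could have been".
        rating += regret_weight * (obtained - foregone)
    return rating


small_win, better_alternative = 50, 200

print(rated_emotion(small_win))                                       # partial feedback
print(rated_emotion(small_win, better_alternative))                   # regret lowers the rating
print(rated_emotion(small_win, better_alternative, regret_weight=0))  # no effect of foregone outcome
```

With a weight of zero, the rating depends only on the obtained outcome, which mirrors the pattern reported in patients with OFC lesions, whose satisfaction was unchanged by information about the other gamble.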

Although lack of regret could also reflect blunted somatic markers, it should be emphasized that this is a cognitive-based emotion requiring a capacity to perform counterfactual comparison, a process that might be impaired in these patients. Work on monkeys shows that the ACC, an area closely connected to limbic and prefrontal regions, contains neurons that encode the size of rewards, obtained as well as unobtained, in a simple decision task.30 The ACC is thus suited to provide “fictive” outcome value signals needed to compare factual and counterfactual information. Neuroimaging studies in humans have shown a cerebral regret network that includes the amygdala, OFC, ACC, and hippocampus. Whereas simple monetary wins and losses in the two-choice gambling task modulate reward-related areas, such as the ventral striatum, experienced and anticipated regret are correlated with enhanced activity in the OFC, amygdala, and ACC.31 Other imaging studies have confirmed the key role of the OFC in the regret mechanism, with a possible functional distinction between lateral and medial OFC (see ref 32 for a review).

Social decision making

Interest in the mechanisms of social decision making is more recent. Access to vital resources requires the establishment of trust and cooperation with social partners. One function of social information is its intrinsic rewarding quality. The tremendous success of smartphone photo exchange apps and online social networks is a patent testimony to the high value we assign to observing and learning about our conspecifics. Another function is how “others” are used as comparison points in own-reward evaluation. Social comparison is a basic human activity. As Karl Marx noted in Wage Labor and Capital,33 it is a major driving force in the burning pursuit of wealth, and it is a source of no less burning frustration:

A house may be large or small; as long as the neighboring houses are likewise small, it satisfies all social requirement for a residence. But let there arise next to the little house a palace, and the little house shrinks to a hut. The little house now makes it clear that its inmate has no social position at all to maintain, or but a very insignificant one; and however high it may shoot up in the course of civilization, if the neighboring palace rises in equal or even in greater measure, the occupant of the relatively little house will always find himself more uncomfortable, more dissatisfied, more cramped within his four walls.

The role of inequity aversion in decision making is well documented in humans and in several nonhuman primate species.34 In contrast, if the other's reward serves as reference point, ie, when an individual has to make decisions about somebody else's money rather than about their own money, emotional engagement is reduced and risk evaluation less constrained by loss aversion.35 Impairments in socially based cognition and communication have been reported in brain-lesioned patients. Patients with vmPFC lesions engage in socially inappropriate behavior, lacking in awareness of their social norm violations,36 and fail to adjust their communicative behavior according to the social partner,37 thus raising the question of the role of prefrontal areas in social decision making.

Experiments in nonhuman primates have explored how neural activity is affected when decisions are made in a social context. When given the opportunity to provide food or to prevent infliction of unpleasant stimuli to conspecifics, monkeys express variable, partner-dependent social tendencies but mainly make prosocial decisions.38 In the OFC, we have shown that own-reward sensitive neurons respond to a fixed reward to self with different discharge rates, depending on whether monkeys are working to obtain a reward for self only or for both self and a partner monkey. Activity modulations are consistent with simultaneously recorded behavior, as equity-averse animals show reduced neuronal activity to “shared” reward (same amount granted to both) and equity-seeking animals show enhanced activity.39 OFC thus appears suited to perform the neural computations involved in social comparisons. Whereas neurons in the OFC and in the striatum only encode own rewards in a social context,39,40 ACC neurons distinguish between rewards to self and to others,41 and medial prefrontal neurons track rewards and action of partner monkeys.42 Finally, in a recent experiment using as experimental paradigm an iterated prisoner's dilemma game, neuronal activity in the dorsal ACC was shown to predict another player's intention to cooperate or to “defect,” and disruption of its activity by electrical stimulation reduced the tendency to produce mutually beneficial choices.43 Although food trading may seem quite rudimentary in comparison with complex human social exchanges, these results highlight the key role played by prefrontal cortical areas in regulating the basic mechanisms of cooperation and competition.

Few studies have adopted a neuroeconomic perspective on social decisions made after brain damage. Patients with vmPFC lesions were found to reject unfair offers more often than control subjects in a single-round ultimatum game, but only when outcomes were obtained after a delay and not when outcomes were displayed and gains immediately available.44 Thus, rather than reflecting anomalous inequity aversion, the explanation advanced by the authors is that after damage to the vmPFC, future gains are more rapidly discounted (a so-called myopia for the future), thereby making the subjective value of an unfair offer appear even lower, and more unacceptable, than it would to a normal individual. Interestingly, patients also exhibited a more global social impairment. Whereas control subjects display more inequity aversion when facing a human opponent than a computer opponent, choices made by patients with lesions in the vmPFC did not distinguish between the two situations and only depended on the distribution of monetary outcomes. Differentiation of human versus computer partners is believed to reflect the role of social norms in economic decision making and, in the present case, the need felt to administer costly altruistic punishment to human partners who behave unfairly.45 By contrast, since inanimate objects cannot be held responsible for their choices, the dominant strategy when playing against a computer is rational maximization (ie, accepting a very small offer is better than nothing). In a later study, decisions made by vmPFC-lesioned patients were analyzed in the context of the “trust game,” a social exchange test evaluating trust and reciprocity.46 In this game, participants play the role of investor and trustee engaged in a financial exchange. The investor has to transfer all or part of an initial endowment, knowing that the amount invested is tripled as it is transferred to the trustee, and the trustee must then decide what fraction of that amount to return to the investor. As in the previous study, in contrast to control subjects who were more risk averse (less trusting) with a human than a computer partner, vmPFC-lesioned patients did not act differently in the two settings and they displayed less generosity as trustee. The human vs computer dissociation is taken as evidence of fear of betrayal, another social emotion influencing risky decisions. The complexities of social-economic games call for caution in data interpretation. Nevertheless, observations made in patients with OFC and vmPFC lesions suggest that this region plays a key role in regulating decision making through nonsocial emotions, such as anticipated regret, and social/moral emotions, such as envy, fairness, betrayal, and guilt.
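The payoff structure of the trust game described above can be written out as a small Python function. The tripling of the invested amount comes from the description of the game; the function name, the endowment of 10 units, and the assumption that the trustee starts with no endowment of his/her own are illustrative choices.

```python
def trust_game(endowment, invested, returned_fraction, multiplier=3):
    """Final payoffs (investor, trustee) for one round of the trust game.

    Assumptions: the trustee has no endowment of their own, and
    returned_fraction is the share of the multiplied amount sent back.
    """
    if not 0 <= invested <= endowment:
        raise ValueError("invested must lie between 0 and the endowment")
    if not 0 <= returned_fraction <= 1:
        raise ValueError("returned_fraction must lie between 0 and 1")
    received = multiplier * invested          # amount reaching the trustee
    returned = returned_fraction * received   # amount sent back
    investor_payoff = endowment - invested + returned
    trustee_payoff = received - returned
    return investor_payoff, trustee_payoff


print(trust_game(10, 10, 0.5))  # full trust, generous trustee
print(trust_game(10, 10, 0.0))  # full trust, betrayal
print(trust_game(10, 0, 0.5))   # no trust: investor keeps the endowment
```

The comparison of the three cases shows why investing is a risky decision: full trust pays more than no trust only if the trustee reciprocates, and it pays nothing if the trustee keeps everything.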

Conclusions

The use of experimental approaches and concepts from behavioral economics is a relatively new direction in neuroscience and neuropsychology. In interaction with other domains such as reinforcement learning, computational neuroscience, and social psychology, economic analysis provides a mechanistic framework and explicit hypotheses to investigate covert reward evaluation processes and emotional regulation of individual and social decision making. Further work drawing on comparative electrophysiological and neuroimaging approaches in animals and humans is needed to develop an integrated view of motivated behavior and pave the way toward novel approaches to the treatment of neuropsychiatric disorders.

Acknowledgments

Dr Sirigu's work was supported by an ANR-11-LABEX-0042 grant from the University of Lyon I within the program “Investissement d'Avenir.” Dr Duhamel's work was supported by BLAN-SVSE4-023-01 and BS4-0010-01 grants from Agence Nationale de la Recherche.

Contributor Information

Angela Sirigu, Institut des Sciences Cognitives Marc Jeannerod, Centre National de la Recherche Scientifique, 69675 Bron, France; Département de Biologie Humaine, Université Lyon 1, 69622 Villeurbanne, France.

Jean-René Duhamel, Institut des Sciences Cognitives Marc Jeannerod, Centre National de la Recherche Scientifique, 69675 Bron, France; Département de Biologie Humaine, Université Lyon 1, 69622 Villeurbanne, France.

REFERENCES

1. Camerer CF. Behavioral economics. Curr Biol. 2014;24(18):R867–R871. doi: 10.1016/j.cub.2014.07.040.

2. Axelrod R, Hamilton WD. The evolution of cooperation. Science. 1981;211(4489):1390–1396. doi: 10.1126/science.7466396.

3. McGraw AP, Larsen JT, Kahneman D, Schkade D. Comparing gains and losses. Psychol Sci. 2010;21(10):1438–1445. doi: 10.1177/0956797610381504.

4. McCabe KA, Rassenti SJ, Smith VL. Game theory and reciprocity in some extensive form experimental games. Proc Natl Acad Sci U S A. 1996;93(23):13421–13428. doi: 10.1073/pnas.93.23.13421.

5. Kalenscher T, van Wingerden M. Why we should use animals to study economic decision making - a perspective. Front Neurosci. 2011;5:82. doi: 10.3389/fnins.2011.00082.

6. Brosnan SF, De Waal FB. Monkeys reject unequal pay. Nature. 2003;425(6955):297–299. doi: 10.1038/nature01963.

7. Phelps EA, Lempert KM, Sokol-Hessner P. Emotion and decision making: multiple modulatory neural circuits. Annu Rev Neurosci. 2014;37:263–287. doi: 10.1146/annurev-neuro-071013-014119.

8. O'Doherty JP. The problem with value. Neurosci Biobehav Rev. 2014;43:259–268. doi: 10.1016/j.neubiorev.2014.03.027.

9. Sharp C, Monterosso J, Montague PR. Neuroeconomics: a bridge for translational research. Biol Psychiatry. 2012;72(2):87–92. doi: 10.1016/j.biopsych.2012.02.029.

10. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275(5306):1593–1599. doi: 10.1126/science.275.5306.1593.

11. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299(5614):1898–1902. doi: 10.1126/science.1077349.

12. Critchley HD, Rolls ET. Hunger and satiety modify the responses of olfactory and visual neurons in the primate orbitofrontal cortex. J Neurophysiol. 1996;75(4):1673–1686. doi: 10.1152/jn.1996.75.4.1673.

13. Schultz W. Neuronal reward and decision signals: from theories to data. Physiol Rev. 2015;95(3):853–951. doi: 10.1152/physrev.00023.2014.

14. Leathers ML, Olson CR. In monkeys making value-based decisions, LIP neurons encode cue salience and not action value. Science. 2012;338(6103):132–135. doi: 10.1126/science.1226405.

15. Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441(7090):223–226. doi: 10.1038/nature04676.

16. Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398(6729):704–708. doi: 10.1038/19525.

17. Shadlen MN, Kiani R. Decision making as a window on cognition. Neuron. 2013;80(3):791–806. doi: 10.1016/j.neuron.2013.10.047.

18. Dayan P, Daw ND. Decision theory, reinforcement learning, and the brain. Cogn Affect Behav Neurosci. 2008;8(4):429–453. doi: 10.3758/CABN.8.4.429.

19. Worbe Y, Palminteri S, Hartmann A, Vidailhet M, Lehericy S, Pessiglione M. Reinforcement learning and Gilles de la Tourette syndrome: dissociation of clinical phenotypes and pharmacological treatments. Arch Gen Psychiatry. 2011;68(12):1257–1266. doi: 10.1001/archgenpsychiatry.2011.137.

20. Palminteri S, Lebreton M, Worbe Y, et al. Dopamine-dependent reinforcement of motor skill learning: evidence from Gilles de la Tourette syndrome. Brain. 2011;134(pt 8):2287–2301. doi: 10.1093/brain/awr147.

21. Palminteri S, Pessiglione M. Reinforcement learning and Tourette syndrome. Int Rev Neurobiol. 2013;112:131–153. doi: 10.1016/B978-0-12-411546-0.00005-6.

22. Frank MJ, Seeberger LC, O'Reilly RC. By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science. 2004;306(5703):1940–1943. doi: 10.1126/science.1102941.

23. Palminteri S, Lebreton M, Worbe Y, Grabli D, Hartmann A, Pessiglione M. Pharmacological modulation of subliminal learning in Parkinson's and Tourette's syndromes. Proc Natl Acad Sci U S A. 2009;106(45):19179–19184. doi: 10.1073/pnas.0904035106.

24. Wise RA. Brain reward circuitry: insights from unsensed incentives. Neuron. 2002;36(2):229–240. doi: 10.1016/s0896-6273(02)00965-0.

25. Tremblay L, Worbe Y, Thobois S, Sgambato-Faure V, Feger J. Selective dysfunction of basal ganglia subterritories: from movement to behavioral disorders. Mov Disord. 2015;30(9):1155–1170. doi: 10.1002/mds.26199.

26. Bechara A, Damasio AR, Damasio H, Anderson SW. Insensitivity to future consequences following damage to human prefrontal cortex. Cognition. 1994;50(1-3):7–15. doi: 10.1016/0010-0277(94)90018-3.

27. Bechara A. The role of emotion in decision-making: evidence from neurological patients with orbitofrontal damage. Brain Cogn. 2004;55(1):30–40. doi: 10.1016/j.bandc.2003.04.001.

28. Camille N, Coricelli G, Sallet J, Pradat-Diehl P, Duhamel JR, Sirigu A. The involvement of the orbitofrontal cortex in the experience of regret. Science. 2004;304(5674):1167–1170. doi: 10.1126/science.1094550.

29. Mellers BA, Ritov A, Schwartz A. Emotion-based choice. J Exp Psychol Gen. 1999;128(3):332–345.

30. Hayden BY, Pearson JM, Platt ML. Fictive reward signals in the anterior cingulate cortex. Science. 2009;324(5929):948–950. doi: 10.1126/science.1168488.

31. Coricelli G, Critchley HD, Joffily M, O'Doherty JP, Sirigu A, Dolan RJ. Regret and its avoidance: a neuroimaging study of choice behavior. Nat Neurosci. 2005;8(9):1255–1262. doi: 10.1038/nn1514.

32. Sommer T, Peters J, Glascher J, Buchel C. Structure-function relationships in the processing of regret in the orbitofrontal cortex. Brain Struct Funct. 2009;213(6):535–551. doi: 10.1007/s00429-009-0222-8.

33. Marx K. Wage labour and capital. Neue Rheinische Zeitung; 1849. Online version: Marx K. Wage labour and capital. Engels F, trans-ed. Marx/Engels Internet Archive. Available at: www.marxists.org/archive/marx/works/download/Marx_Wage_Labour_and_Capital.pdf.

34. Brosnan SF, de Waal FBM. Evolution of responses to (un)fairness. Science. 2014;346(6207):1251776. doi: 10.1126/science.1251776.

35. Mengarelli F, Moretti L, Faralla V, Vindras P, Sirigu A. Economic decisions for others: an exception to loss aversion law. PLoS One. 2014;9(1):e85042. doi: 10.1371/journal.pone.0085042.

36. Beer JS, John OP, Scabini D, Knight RT. Orbitofrontal cortex and social behavior: integrating self-monitoring and emotion-cognition interactions. J Cogn Neurosci. 2006;18(6):871–879. doi: 10.1162/jocn.2006.18.6.871.

37. Stolk A, D'Imperio D, di Pellegrino G, Toni I. Altered communicative decisions following ventromedial prefrontal lesions. Curr Biol. 2015;25(11):1469–1474. doi: 10.1016/j.cub.2015.03.057.

38. Ballesta S, Duhamel JR. Rudimentary empathy in macaques' social decision-making. Proc Natl Acad Sci U S A. 2015;112(50):15516–15521. doi: 10.1073/pnas.1504454112.

39. Azzi JCB, Sirigu A, Duhamel JR. Modulation of value representation by social context in the primate orbitofrontal cortex. Proc Natl Acad Sci U S A. 2012;109(6):2126–2131. doi: 10.1073/pnas.1111715109.

40. Baez-Mendoza R, Schultz W. The role of the striatum in social behavior. Front Neurosci. 2013;7:233. doi: 10.3389/fnins.2013.00233.

41. Chang SW, Gariepy JF, Platt ML. Neuronal reference frames for social decisions in primate frontal cortex. Nat Neurosci. 2013;16(2):243–250. doi: 10.1038/nn.3287.

42. Yoshida K, Saito N, Iriki A, Isoda M. Representation of others' action by neurons in monkey medial frontal cortex. Curr Biol. 2011;21(3):249–253. doi: 10.1016/j.cub.2011.01.004.

43. Haroush K, Williams ZM. Neuronal prediction of opponent's behavior during cooperative social interchange in primates. Cell. 2015;160(6):1233–1245. doi: 10.1016/j.cell.2015.01.045.

44. Moretti L, Dragone D, di Pellegrino G. Reward and social valuation deficits following ventromedial prefrontal damage. J Cogn Neurosci. 2009;21(1):128–140. doi: 10.1162/jocn.2009.21011.

45. Fehr E, Gachter S. Altruistic punishment in humans. Nature. 2002;415(6868):137–140. doi: 10.1038/415137a.

46. Moretto G, Sellitto M, di Pellegrino G. Investment and repayment in a trust game after ventromedial prefrontal damage. Front Hum Neurosci. 2013;7:593. doi: 10.3389/fnhum.2013.00593.