Abstract

Generalized Estimating Equations (GEE) is a general statistical method for fitting marginal models to longitudinal data in biomedical studies. The variance-covariance matrix of the regression coefficient estimates is usually estimated by a robust “sandwich” variance estimator, which does not perform satisfactorily when the sample size is small. To reduce the downward bias and improve efficiency, several modified variance estimators have been proposed. In this paper, we provide a comprehensive review of recent developments in modified variance estimators, and compare their small-sample performance theoretically and numerically through simulation and real data examples. In particular, Wald tests and t–tests based on different variance estimators are used for hypothesis testing, and guidelines on the appropriate sample size for each estimator are provided for preserving Type I error in general cases based on numerical results. We also develop a user-friendly R package, “geesmv”, that incorporates all of these variance estimators for public use in practice.

Keywords: Generalized Estimating Equations, longitudinal data, variance estimator, small sample size, Type I error, hypothesis testing

1. Introduction

Longitudinal data are commonly encountered in biomedical studies [1, 2, 3, 4]. For example, in a diabetes study, repeated primary efficacy measures of HbA1c were taken over time (baseline and follow-up visits after treatment) for each patient, and the question of interest was the trend of HbA1c over time or the effect of insulin treatment on HbA1c [5]. In such a situation, the responses from the same individual tend to be “more alike”; thus, incorporating within-subject correlation and between-subject variation into model fitting is necessary to improve the efficiency of estimation and enhance power.

To analyze repeated measures, several simple traditional approaches exist (e.g., MANOVA) [6]. However, mixed-effect models (MEM) [2] and Generalized Estimating Equations (GEE) [7] are more widely applied. Of note, MEM is an individual-level approach that adopts random effects to capture the correlation among observations from the same subject [8]; GEE is a population-level approach based on the quasi-likelihood function [9, 10]. In this paper, we focus on GEE, which has several defining features: 1) Under mild regularity conditions, the parameter estimates are consistent and asymptotically normal even under a mis-specified “working” correlation structure of the responses; 2) When the inference is intended to be population-based, for instance, the overall treatment effect, GEE treats the variance-covariance matrix of the responses as nuisance parameters [9]; 3) GEE relaxes the distributional assumption, and only requires correct specification of the marginal mean and variance as well as the link function between the mean and the covariates of interest. GEE has been implemented in statistical software (e.g., SAS, R), and can be directly adopted for analysis.

The variance estimators of the parameters of interest are used in hypothesis testing, so their accuracy is important for valid inference. Under some specific conditions such as small sample size, the traditional GEE with the classic “sandwich” variance estimator does not perform satisfactorily and exhibits considerable downward bias [11, 12, 13, 14], in turn leading to inflated Type I errors and lower coverage rates of the resulting confidence intervals [15, 16]. Several modifications of the variance estimator have been proposed to improve small-sample performance [13, 17]. To our knowledge, few studies cover the various variance estimators for GEE with small samples, including the most recently developed ones, in a comprehensive comparison, and there is no guideline on the sample size adequate for preserving Type I error. A recent related paper by Li and Redden [18] emphasized the small-sample performance of bias-corrected sandwich estimators, particularly for cluster-randomized trials with binary outcomes. However, that work has several limitations: 1) only scenarios with binary outcomes were considered; 2) the influence of a misspecified correlation structure was not explored; 3) the degrees of freedom of the approximate t–distribution did not take the variability of the variance estimator into account [16, 19, 20], but depended only on the number of clusters; 4) only the limited set of variance estimators implemented in SAS was considered, excluding the most recent ones. In this paper, we attempt to address these issues and provide a more comprehensive and accurate comparison of different modified variance estimators. Furthermore, we develop a user-friendly R package including functions for calculating the modified variance estimators as well as the degrees of freedom, defined as a function of the variance of the estimator, for t–tests [16, 20].

The remainder of the article is organized as follows. In Section 2, we introduce the notations and provide nine variance estimators of GEE as well as their theoretical comparisons. In addition, two types of hypothesis testing, Wald tests and t–tests, are emphasized. Later, in Section 3, we provide extensive simulation to compare the performance of different variance estimators, and identify the suitable sample size for each to ensure their satisfactory performance in controlling Type I error. Importantly, we develop an R package “geesmv” for public use with small samples. Also, we illustrate the application of our R package via a real data example in Section 4. The conclusion with a brief discussion and future work is shown in Section 5.

2. Method

2.1. Notation and GEE

Given longitudinal data consisting of K subjects, denote Yij as the jth response for the ith subject with ni observations, i = 1, 2, …, K, j = 1, …, ni, and Xij is a p × 1 vector of covariates. Yi = (Yi1, Yi2, …, Yini)′ denotes the response vector with the mean vector noted by μi = (μi1, μi2, …, μini)′ where μij is the corresponding jth mean for subject i. There exists within-subject correlation, but the observations across subjects are assumed to be independent. In addition, the marginal model specifying an association between μij and the covariates of interest Xij is given by

$$g(\mu_{ij}) = X_{ij}'\beta \qquad (1)$$

with g as a known link function and β an unknown p × 1 vector of regression coefficients. The conditional variance of Yij given Xij is Var(Yij|Xij) = ν(μij)ϕ, with ν a known variance function of μij and ϕ a scale parameter which may need to be estimated. Of note, ν and ϕ depend on the distribution of the outcomes. For example, if Yij is a continuous variable, ν(μij) is 1, and ϕ represents the error variance; if Yij is a count variable, ν(μij) = μij, and ϕ is equal to 1. Also, the variance-covariance matrix for Yi is denoted by $V_i = \phi A_i^{1/2} R_i(\alpha) A_i^{1/2}$, where Ai = Diag{ν(μi1), ⋯, ν(μini)}, and the “working” correlation structure Ri(α) describes the correlation pattern of observations within a subject, with α a vector of association parameters depending on the correlation structure. Several types of “working” correlation structures are commonly used, including independent ($R_i(\alpha) = I_{n_i}$), exchangeable (α = ρ, Corr(Yij, Yij′) = ρ, j ≠ j′), autoregressive (α = ρ, Corr(Yij, Yi,j+m) = $\rho^m$, j + m ≤ ni), Toeplitz (Corr(Yij, Yi,j+m) = $\alpha_m$, j + m ≤ ni), and unstructured. The estimation of α is based on an iterative fitting process using the Pearson residuals $\hat e_{ij} = (Y_{ij} - \hat\mu_{ij})/\sqrt{\nu(\hat\mu_{ij})}$ given the current value of β; also, the scale parameter ϕ is estimated by $\hat\phi = \frac{1}{N-p}\sum_{i=1}^{K}\sum_{j=1}^{n_i}\hat e_{ij}^2$, with the total number of observations $N = \sum_{i=1}^{K} n_i$.
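As a concrete illustration of the exchangeable and autoregressive “working” correlation structures, the following minimal Python sketch builds the corresponding n × n matrices for a subject with n observations. The function names are illustrative only and are not part of any package.

```python
def exchangeable_corr(n, rho):
    """n x n matrix with 1 on the diagonal and rho in every off-diagonal cell."""
    return [[1.0 if j == k else rho for k in range(n)] for j in range(n)]


def ar1_corr(n, rho):
    """n x n matrix whose (j, k) entry is rho ** |j - k| (first-order autoregressive)."""
    return [[rho ** abs(j - k) for k in range(n)] for j in range(n)]


# Print a small AR-1 example: correlation decays with the lag |j - k|.
for row in ar1_corr(3, 0.5):
    print(row)
```

Under the exchangeable structure every pair of observations within a subject shares the same correlation, whereas under AR-1 the correlation decays geometrically with the lag between observations.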

The GEE method yields an asymptotically consistent β̂, even when the “working” correlation structure (Ri(α)) is misspecified [7], and β̂ is obtained by solving the following estimating equation

$$\sum_{i=1}^{K} D_i' V_i^{-1} (Y_i - \mu_i) = 0 \qquad (2)$$

where $D_i = \partial\mu_i/\partial\beta'$. Given the true value of β as βt and mild regularity conditions, β̂ is asymptotically normally distributed with mean βt and a covariance matrix estimated by the “sandwich” estimator

$$V_{LZ} = \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} M_{LZ} \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} \qquad (3)$$

with

$$M_{LZ} = \sum_{i=1}^{K} D_i' V_i^{-1}\, \widehat{\mathrm{Cov}}(Y_i)\, V_i^{-1} D_i \qquad (4)$$

by replacing α, β and ϕ with their consistent estimates, where $\widehat{\mathrm{Cov}}(Y_i) = \hat r_i \hat r_i'$ with r̂i = Yi − μ̂i is an estimator of the variance-covariance matrix of Yi [7, 21]. This “sandwich” estimator is robust in that it is consistent even if the correlation structure is misspecified. Note that if Vi is correctly specified, a consistent estimator for the covariance matrix of β̂ is given by $\left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1}$, which is often referred to as the model-based variance estimator [7]. Next, we discuss the small-sample properties of GEE with several modifications of the variance estimator and hypothesis testing.
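To make the “sandwich” form concrete, the following minimal Python sketch computes it for a deliberately simple special case. It assumes an identity link, two regression coefficients (intercept and slope), and an independence “working” correlation, so that the derivative matrix of the mean reduces to the design matrix Xi and the scale parameter cancels from the sandwich. The function names and the toy data are illustrative assumptions, not taken from the paper or from the geesmv package.

```python
def inv2(m):
    """Inverse of a 2 x 2 matrix given as nested lists."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det, m[0][0] / det]]


def matmul2(a, b):
    """Product of two 2 x 2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def sandwich_independence(clusters):
    """clusters: list of (X_i, y_i); X_i is a list of [1, x] rows, y_i a list of responses.

    Returns (beta_hat, V) where V = B^{-1} M B^{-1}, B = sum_i X_i' X_i and
    M = sum_i X_i' r_i r_i' X_i with r_i the residual vector of subject i.
    """
    bread = [[0.0, 0.0], [0.0, 0.0]]   # B = sum_i X_i' X_i
    xty = [0.0, 0.0]                   # sum_i X_i' y_i
    for X, y in clusters:
        for row, yj in zip(X, y):
            for a in range(2):
                xty[a] += row[a] * yj
                for c in range(2):
                    bread[a][c] += row[a] * row[c]
    bread_inv = inv2(bread)
    # With identity link and independence, the estimating equation is least squares.
    beta = [sum(bread_inv[a][c] * xty[c] for c in range(2)) for a in range(2)]

    meat = [[0.0, 0.0], [0.0, 0.0]]    # M = sum_i (X_i' r_i)(X_i' r_i)'
    for X, y in clusters:
        u = [0.0, 0.0]                 # subject-level score X_i' r_i
        for row, yj in zip(X, y):
            resid = yj - (row[0] * beta[0] + row[1] * beta[1])
            for a in range(2):
                u[a] += row[a] * resid
        for a in range(2):
            for c in range(2):
                meat[a][c] += u[a] * u[c]
    return beta, matmul2(matmul2(bread_inv, meat), bread_inv)


# Toy data: four subjects, each observed at x = 0, 1, 2 (values are made up).
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
clusters = [(X, [1.0, 2.1, 2.9]), (X, [0.5, 1.4, 2.6]),
            (X, [1.5, 2.4, 3.8]), (X, [0.9, 2.0, 3.0])]
beta, v_lz = sandwich_independence(clusters)
print(beta, v_lz)
```

Because the middle matrix sums only K subject-level cross-products, it is estimated from few terms when K is small, which is where the small-sample problems discussed in this paper originate.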

2.2. Modified variance estimators of GEE with small samples

Because the fitted value μ̂i tends to be closer to Yi than the true value μi, particularly when the sample size is small, r̂i r̂i′ in VLZ is biased downward for estimating Cov(Yi), and the bias becomes larger as the sample gets smaller; meanwhile, greater variability may arise [16, 22]. Therefore, hypothesis testing using VLZ tends to be liberal, and the resulting confidence interval is too narrow. Table 1 provides a comprehensive summary of the recent modified variance estimators, and the details of the estimators are provided next.

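- VMK is the degrees-of-freedom corrected “sandwich” variance estimator proposed by MacKinnon [23]. This estimator incorporates the simplest adjustment by adopting an adjustment factor of K/(K − p), which is given by

  $$V_{MK} = \frac{K}{K-p}\, V_{LZ} \qquad (5)$$

  When K → ∞, VMK →p VLZ. VMK corrects the bias, but meanwhile increases the variability.

- VKC is a bias-corrected “sandwich” variance estimator under the assumption of a correctly specified correlation structure, proposed by Kauermann and Carroll [24], which is

  $$V_{KC} = \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} M_{KC} \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} \qquad (6)$$

  with

  $$M_{KC} = \sum_{i=1}^{K} D_i' V_i^{-1} (I_i - H_{ii})^{-1/2}\, \hat r_i \hat r_i'\, (I_i - H_{ii}')^{-1/2} V_i^{-1} D_i \qquad (7)$$

  where Ii is an ni × ni identity matrix, and the subject leverage Hii is the ni × ni leverage matrix of the ith subject, which can be calculated by

  $$H_{ii} = D_i \left(\sum_{j=1}^{K} D_j' V_j^{-1} D_j\right)^{-1} D_i' V_i^{-1}$$

- VPAN is proposed by Pan [20] given that two additional assumptions are satisfied: (A1) The conditional variance of Yij given Xij is correctly specified; (A2) A common correlation structure, Rc, exists across all subjects. The modified variance estimator is

  $$V_{PAN} = \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} M_{PAN} \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} \qquad (8)$$

  with

  $$M_{PAN} = \sum_{i=1}^{K} D_i' V_i^{-1} A_i^{1/2} \left(\frac{1}{K}\sum_{j=1}^{K} A_j^{-1/2}\, \hat r_j \hat r_j'\, A_j^{-1/2}\right) A_i^{1/2} V_i^{-1} D_i \qquad (9)$$

  VPAN pools data across all subjects in estimating Cov(Yi), which makes it more efficient.

- VGST, proposed by Gosho et al. [25], makes an additional modification to Pan’s estimator by correcting the bias of the pooled estimate for small K. The modified variance estimator is written as:

  $$V_{GST} = \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} M_{GST} \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} \qquad (10)$$

  with

  $$M_{GST} = \sum_{i=1}^{K} D_i' V_i^{-1} A_i^{1/2} \left(\frac{1}{K-p}\sum_{j=1}^{K} A_j^{-1/2}\, \hat r_j \hat r_j'\, A_j^{-1/2}\right) A_i^{1/2} V_i^{-1} D_i \qquad (11)$$

  VGST also pools data across all subjects in estimating Cov(Yi). In particular, when K ≫ p and K is large enough, VGST is approximately equal to VPAN.

- VMD is another bias-corrected “sandwich” variance estimator, proposed by Mancl and DeRouen [22]. Unlike VKC, this estimator does not assume a correctly specified correlation structure, and it is written as

  $$V_{MD} = \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} M_{MD} \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} \qquad (12)$$

  with

  $$M_{MD} = \sum_{i=1}^{K} D_i' V_i^{-1} (I_i - H_{ii})^{-1}\, \hat r_i \hat r_i'\, (I_i - H_{ii}')^{-1} V_i^{-1} D_i \qquad (13)$$

  where Ii and Hii are defined in the same way as for VKC. Note that to correct this bias in finite samples, Mancl and DeRouen [22] relied on the following approximate identity

  $$E(\hat r_i \hat r_i') \approx (I_i - H_{ii})\, \mathrm{Cov}(Y_i)\, (I_i - H_{ii})'$$

  but they ignore one term from its first-order Taylor expansion, leading to overcorrection.

- VFG, proposed by Fay and Graubard [26], makes a further adjustment to VMD by multiplying by a scale factor, and is given by

  $$V_{FG} = \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} M_{FG} \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} \qquad (14)$$

  with

  | (15) |

  where ηi = Ip − Ni. Note that the jjth diagonal value of the scale factor equals (1 − min(b, {Ni}jj))−1, where b is prespecified subjectively to avoid extreme adjustments when {Ni}jj is quite close to 1.

- VMBN is a bias-corrected estimator recommended by Morel et al. [27] that incorporates corrections based on the residual cross-products and the sample size, given by

  $$V_{MBN} = \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} M_{MBN} \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} \qquad (16)$$

  with

  | (17) |

  where 0 ≤ r ≤ 1. Note that k is a factor to adjust the bias of the empirical variance estimator of Cov(Yi), and δm given by Morel et al. can be bounded by 1/d [27]. The default values for d and r are 2 and 1, respectively, according to Morel et al. [27].

- VWL is a combined variance estimator suggested by Wang and Long [16], which combines the strengths of VPAN and VMD by pooling information from all subjects and reducing the bias of the estimate for Cov(Yi). The estimator is

  $$V_{WL} = \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} M_{WL} \left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1} \qquad (18)$$

  where

  $$M_{WL} = \sum_{i=1}^{K} D_i' V_i^{-1} A_i^{1/2} \left(\frac{1}{K}\sum_{j=1}^{K} A_j^{-1/2} (I_j - H_{jj})^{-1}\, \hat r_j \hat r_j'\, (I_j - H_{jj}')^{-1} A_j^{-1/2}\right) A_i^{1/2} V_i^{-1} D_i \qquad (19)$$

  This estimator was confirmed to perform as well as or better than VPAN and VMD, but the two additional assumptions specified above also need to be satisfied.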
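The two simplest ideas in this list, rescaling by a degrees-of-freedom factor and pooling residual cross-products across subjects, can be sketched in a few lines of Python. The sketch assumes equal cluster sizes and an identity variance function (so no standardization of the residuals is needed), and it assumes that the small-K correction of the pooled estimate amounts to replacing the divisor K by K − p. The function names are hypothetical and are not the geesmv API.

```python
def mk_factor(k, p):
    """Degrees-of-freedom adjustment factor K / (K - p)."""
    if k <= p:
        raise ValueError("need more subjects than regression parameters")
    return k / (k - p)


def pooled_cross_products(residuals, p=0):
    """Pool r_i r_i' over subjects with divisor K - p.

    residuals: list of K residual vectors, all of the same length n.
    p = 0 gives a plain average over subjects (Pan-type pooling);
    p > 0 uses the divisor K - p (assumed form of the small-K correction).
    """
    k = len(residuals)
    n = len(residuals[0])
    if k - p <= 0:
        raise ValueError("divisor K - p must be positive")
    pooled = [[0.0] * n for _ in range(n)]
    for r in residuals:
        for a in range(n):
            for b in range(n):
                pooled[a][b] += r[a] * r[b] / (k - p)
    return pooled


# Made-up residuals for K = 4 subjects with n = 2 observations each.
resid = [[1.0, -1.0], [0.0, 2.0], [-1.0, -1.0], [2.0, 0.0]]
print(mk_factor(10, 2))
print(pooled_cross_products(resid))
print(pooled_cross_products(resid, p=2))
```

Pooling replaces K separate rank-one estimates of Cov(Yi) by a single averaged matrix, which is the source of the efficiency gain, at the price of assuming a common correlation structure across subjects.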

Table 1.

Summary of eight modified variance estimators for GEE with small sample

| Variance Estimator | Modification | Reference |
|---|---|---|
| VMK | Degrees-of-Freedom adjustment | MacKinnon (1985) |
| VKC | Bias correction | Kauermann and Carroll (2001) |
| VPAN | Efficiency improvement | Pan (2001) |
| VGST | Efficiency improvement | Gosho et al. (2014) |
| VMD | Bias correction | Mancl and DeRouen (2001) |
| VFG | Bias correction | Fay and Graubard (2001) |
| VMBN | Bias correction | Morel et al. (2003) |
| VWL | Bias correction & Efficiency improvement | Wang and Long (2011) |

We now present theoretical comparisons among these variance estimators. As shown above, all variance estimators share the same two outside terms, i.e., $\left(\sum_{i=1}^{K} D_i' V_i^{-1} D_i\right)^{-1}$. Thus, we focus on assessing and comparing the middle matrix, M, of the different variance estimators. The derived covariance matrices of vec(M) are given in Table 2. It has been shown by Wang and Long [16] that Cov(vec(MLZ)) − Cov(vec(MWL)) and Cov(vec(MMD)) − Cov(vec(MWL)) are non-negative definite with probability 1, while Cov(vec(MPAN)) − Cov(vec(MWL)) converges to 0 with probability 1 as K → ∞. Comparisons among the other alternatives are based on the covariance matrices in Table 2.

Table 2.

Covariance matrix of the middle part from nine variance estimators for GEE. ; Gi = (Ii − Hii)−1 ⊗ (Ii − Hii)−1; .

| Matrix M | Covariance Matrix of vec(M) |
|---|---|
| MLZ | |
| MMK | |
| MKC | |
| MPAN | |
| MGST | |
| MMD | |
| MFG | |
| MMBN | |
| MWL | |

Based on the above derivations, under mild conditions, Cov(vec(MLZ)) − Cov(vec(MMK)), Cov(vec(MLZ)) − Cov(vec(MKC)), Cov(vec(MLZ)) − Cov(vec(MFG)), and Cov(vec(MLZ)) − Cov(vec(MMBN)) will converge to 0 with probability 1, while Cov(vec(MLZ)) − Cov(vec(MGST)) is non-negative definite with probability 1 as K → ∞. Hence, these variance estimators are asymptotically equivalent. But when the sample size is small, VLZ tends to underestimate the variance. Therefore, the modifications through the bias-correction or degrees-of-freedom adjustment are mostly applied (Table 1). On the other hand, the efficiency gain by pooling data across all subjects to improve the estimator of Cov(Yi) instead of only using data from the ith subject, is incorporated in VPAN, VGST and VWL. Thus, VWL is the only estimator that takes into consideration both bias correction and efficiency improvement. Therefore, it is expected intuitively to outperform the other alternatives if the assumptions (A1) and (A2) are satisfied. In Section 3, extensive numerical comparisons via simulations will be conducted for further investigation.

2.3. Hypotheses Testing

For hypothesis testing in GEE, the Wald test and the score test have been widely applied [16, 20, 28]. However, when the sample size is small, the Wald test is too liberal, with inflated Type I error [20, 28], whereas the score test has a test size smaller than the pre-specified nominal level [28]. Therefore, several modifications have been proposed to improve finite-sample performance for GEE, e.g., the t–test and the modified score test. Guo et al. [28] modified the score test for the small-sample context, and the modified test was shown via simulation to be less conservative than the t–test. Here, we consider t–tests when the sample size is small, and a brief derivation is given next.

Without loss of generality, a simple univariate hypothesis test is taken as an example. Suppose that in a clinical trial, the mean model is specified as μij = α + β × treatment. The hypothesis of interest for the treatment parameter β is H0 : β = 0 vs Ha : β ≠ 0. The test statistic for the Wald test is $\hat\beta/\sqrt{\hat V(\hat\beta)}$, where V̂(β̂) can be replaced by any estimator mentioned above. For small samples, the t–test was proposed by Pan [20], and was also extensively studied by Wang and Long [16]. Denote κ and ν as the estimated mean and variance of V̂(β̂). It follows from a derivation based on the vec operator that the distribution of V̂(β̂) is approximated by a scaled Chi-square distribution $c\chi^2_d$, where the scale parameter is c = ν/(2κ) and the degrees of freedom are d = 2κ²/ν. The test statistic for the t–test is $\hat\beta/\sqrt{\hat V(\hat\beta)}$, which is the same as the Wald test statistic but is referred to a t–distribution with d degrees of freedom [16, 20]. This Satterthwaite-type degrees-of-freedom approximation incorporates the variability of the variance estimator, and thus performs better than an approximation depending only on the number of clusters, as in Li and Redden [18]. The superiority of the t–test over the Wald test has been identified in settings with small samples [16, 29].

3. Simulation Studies

In this section, we conduct simulation studies to numerically compare the finite small-sample performance of nine types of variance estimators including the original “sandwich” variance estimator under different settings. Also, we focus on the Wald test and t–test for hypothesis testing to calculate the Type I error rate for each estimator, and further provide the recommendation on suitable sample size for each one to ensure test sizes at the nominal levels. In particular, three scenarios with continuous, count and binary repeated outcomes are considered. The models for data generation are below

| (20) |

where β0 = 0 and β1 = 0, i = 1, …, K with sample size K = 10, 20, 30, 40, 50 and j = 1, …, n with an equal number of observations per subject (i.e., cluster size) n = 5, 10, 20. The covariates xij are independent and identically distributed (i.i.d.) from a standard normal distribution N(0, 1). The subject-level random effects bi’s are i.i.d. from with , and the random errors εij’s are i.i.d. from with . The details for each scenario are as follows: 1) For the case with continuous outcomes, bi and εij are independent of each other, leading to a true exchangeable correlation structure with the correlation parameter as ; 2) For the case with count outcomes, based on the derivation by Guo et al. [28], the correlation parameter ; 3) For the case with binary outcomes, the correlation parameter according to Guo et al. [28].
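A data-generating step of this kind can be sketched as follows for the continuous-outcome scenario. The sketch assumes a random-intercept model with normally distributed random effects and errors, and the standard deviations are free arguments because the values used in the study are not reproduced here. Under this assumed model the implied exchangeable correlation is the random-effect variance divided by the total variance. All names are illustrative.

```python
import random


def simulate_continuous(K, n, sigma_b, sigma_e, beta0=0.0, beta1=0.0, seed=None):
    """Generate (subject, visit, x, y) rows from y = beta0 + beta1*x + b_i + e_ij.

    Assumes b_i ~ N(0, sigma_b^2) and e_ij ~ N(0, sigma_e^2), mutually independent.
    """
    rng = random.Random(seed)
    rows = []
    for i in range(K):
        b_i = rng.gauss(0.0, sigma_b)          # shared within subject i
        for j in range(n):
            x = rng.gauss(0.0, 1.0)            # covariate from N(0, 1)
            y = beta0 + beta1 * x + b_i + rng.gauss(0.0, sigma_e)
            rows.append((i, j, x, y))
    return rows


def implied_exchangeable_rho(sigma_b, sigma_e):
    """Within-subject correlation implied by the random-intercept model."""
    return sigma_b ** 2 / (sigma_b ** 2 + sigma_e ** 2)


data = simulate_continuous(K=10, n=5, sigma_b=1.0, sigma_e=1.0, seed=1)
print(len(data), implied_exchangeable_rho(1.0, 1.0))
```

With β0 = β1 = 0 as the defaults, every generated data set satisfies the null hypothesis, so the rejection rate of a test of β1 = 0 estimates its Type I error.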

In particular, 1,000 Monte Carlo data sets are generated for each scenario, and the parameter estimate β̂1 along with the nine variance estimates is calculated for each. For each set-up, three types of “working” correlation structures are used: independence, exchangeable and AR-1. Both Wald and t–tests are applied for hypothesis testing, and the Type I error is calculated at the significance level of 0.05. Note that the degrees of freedom of the t–distribution vary across variance estimators. For example, the average degrees of freedom for the first scenario with continuous outcomes, K = 10 and n = 5 are, after rounding, 13, 13, 69, 69, 11, 14, 14, 22, 54, respectively, indicating the influence of the variability of the variance estimators on statistical inference.
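The empirical Type I error in such a study is simply the proportion of simulated data sets in which the null hypothesis is rejected, and its Monte Carlo precision follows from the binomial variance. The Python sketch below shows both calculations; the function names are illustrative. With 1,000 replications and a true rejection rate of 0.05, the Monte Carlo standard error is about 0.007, so estimated rates between roughly 0.036 and 0.064 are compatible with the nominal level.

```python
import math


def empirical_type1_error(p_values, alpha=0.05):
    """Proportion of replications whose p-value falls below alpha."""
    rejections = sum(1 for p in p_values if p < alpha)
    return rejections / len(p_values)


def monte_carlo_se(rate, n_rep):
    """Binomial standard error of an estimated rejection rate."""
    return math.sqrt(rate * (1.0 - rate) / n_rep)


print(empirical_type1_error([0.01, 0.20, 0.04, 0.50]))
print(monte_carlo_se(0.05, 1000))
```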

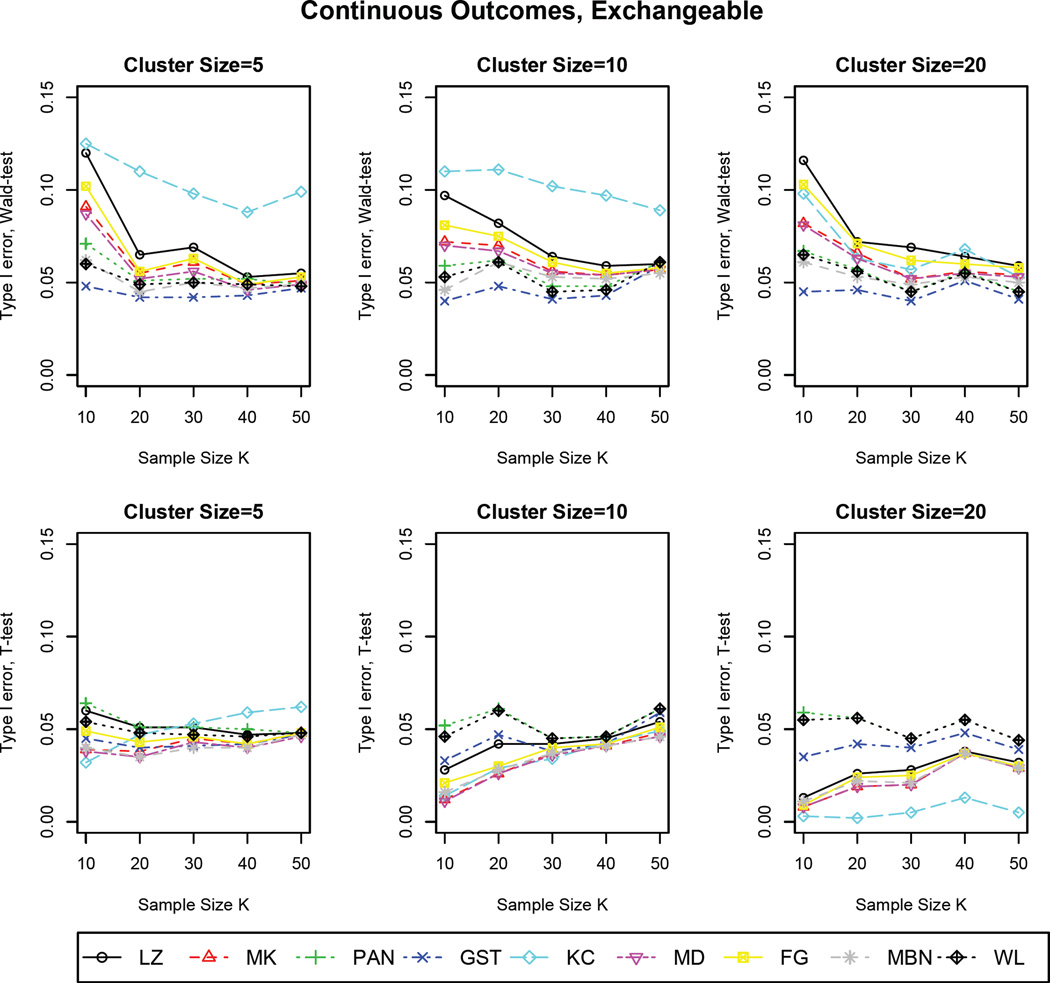

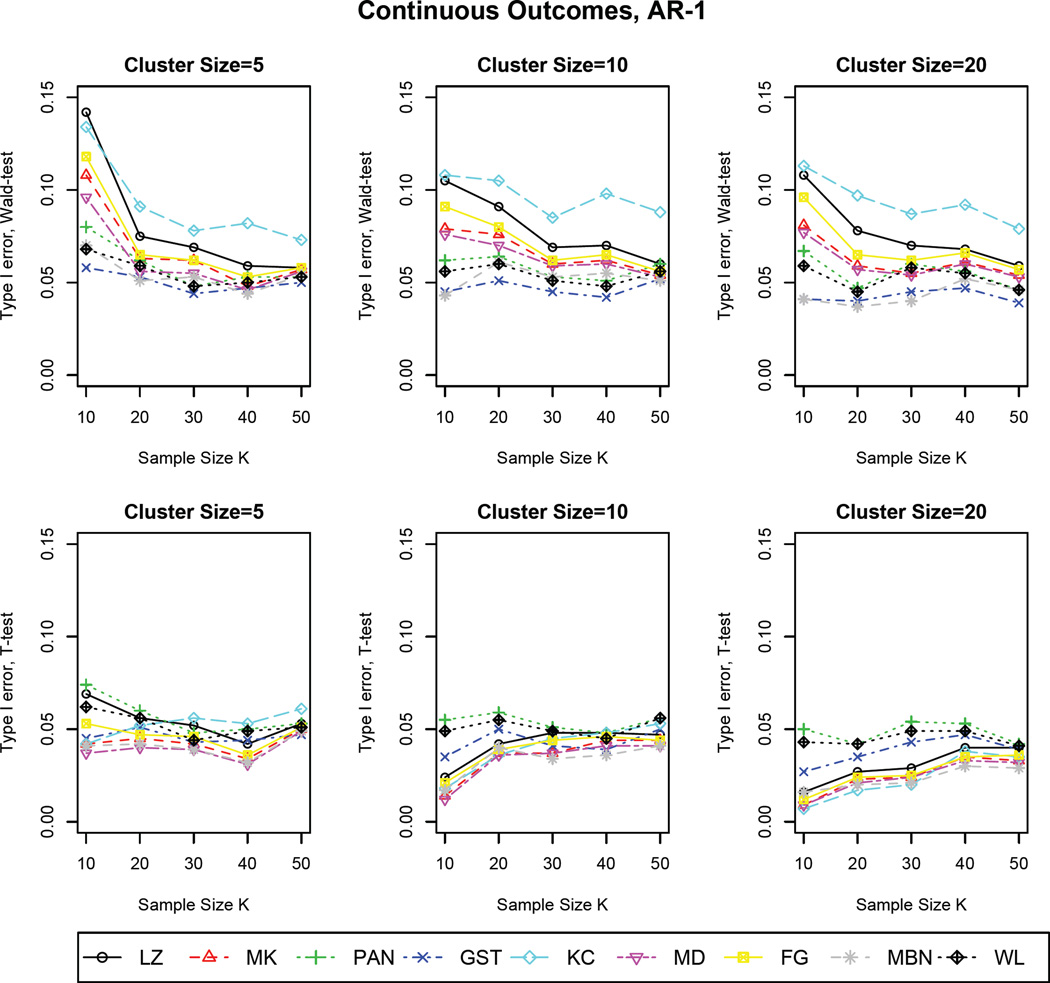

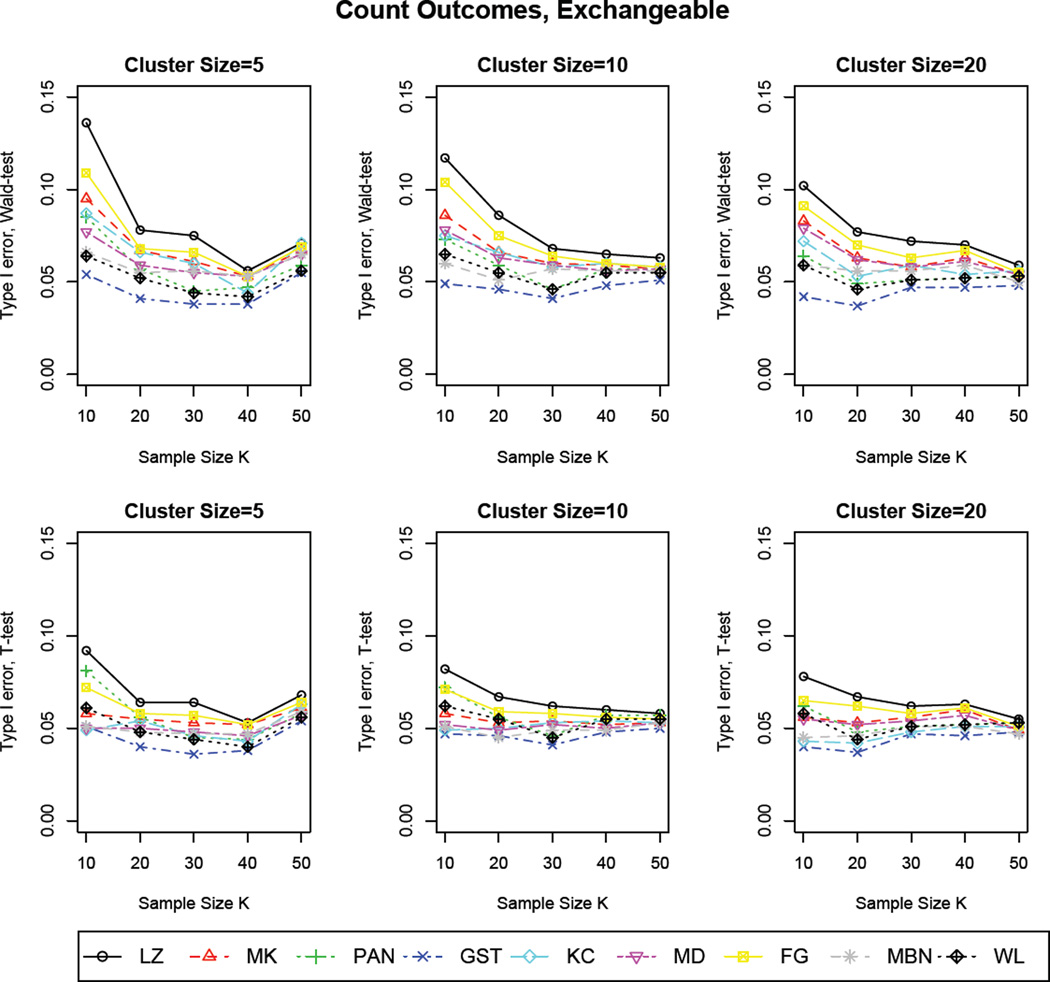

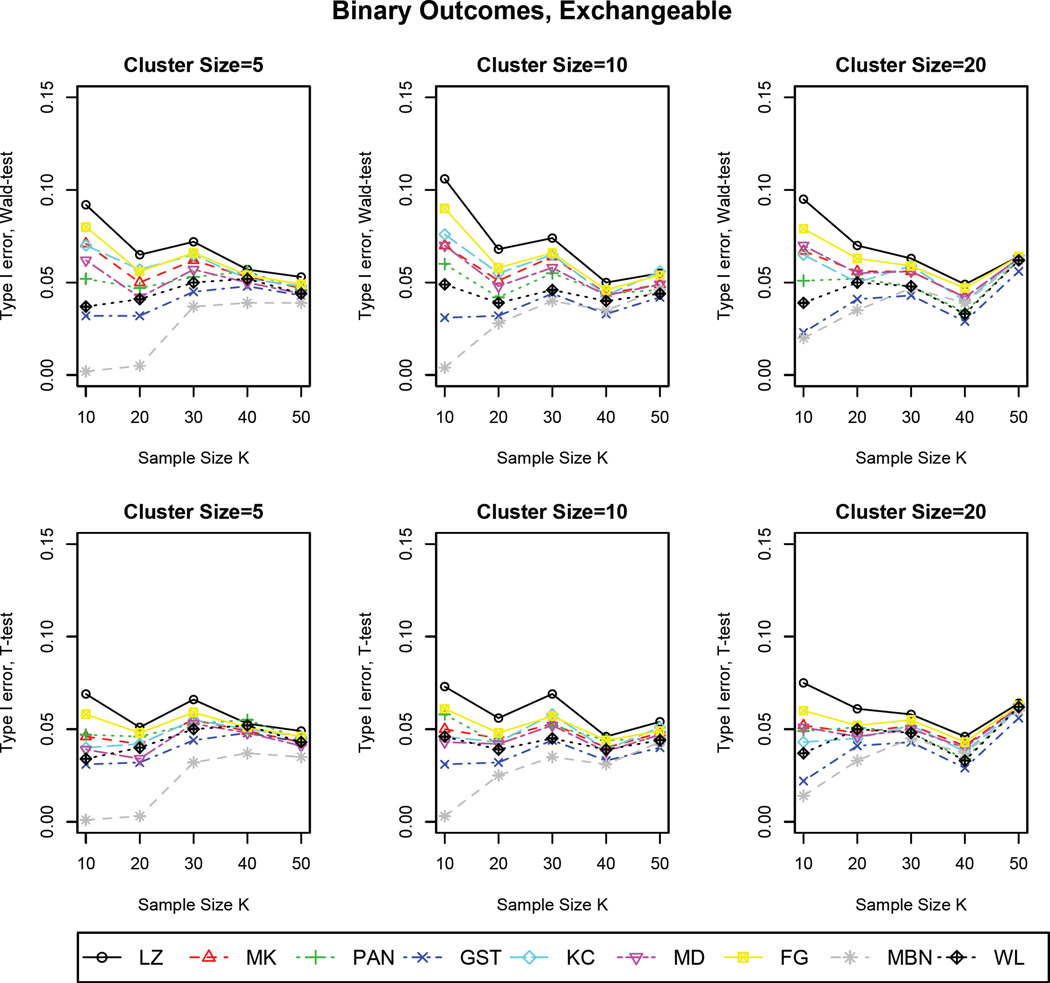

The partial results shown in Figures 1–6 are summarized as follows: 1) The results based on Wald tests show that the use of the robust variance estimator VLZ always leads to inflated Type I error when the sample size is small (i.e., ≤ 50), which is consistent with our expectation; the tests using the other estimators are also inflated to some extent, but to a lesser degree, with VWL performing the best; 2) t–tests attain better performance than Wald tests in terms of the control of Type I error across all estimators. The estimator VLZ still leads to some degree of inflation. Interestingly, when the “working” correlation structure is specified correctly, VLZ achieves satisfactory performance even when the sample size is as small as 10; 3) For t–tests, the performance of the variance estimators is substantially influenced by the sample size K, while a larger cluster size n leads to more conservative results; 4) Note that VKC performs worse than VLZ based on Wald tests, as indicated by larger inflation of the Type I error, but improves with increasing cluster size. In addition, some estimators, such as VGST and VMBN, perform conservatively for small samples; 5) Among all nine variance estimators, VWL has consistently superior performance across a variety of set-ups. Thus, it is a preferable estimator for GEE even when the sample size is as small as 10. Note that the results for the independent “working” correlation structure are not provided because they show a trend similar to that of AR-1. In the end, according to our current numerical studies as well as the literature [16, 28, 29], we recommend the following sample size requirements to preserve Type I error for the variance estimators: VLZ(≥ 50), VMK(≥ 40), VKC(≥ 50), VPAN(≥ 30), VGST(≥ 20), VMD(≥ 30), VFG(≥ 40), VMBN(≥ 50), and VWL(≥ 10).
Note that we also investigated the effect of cluster size via additional simulations (results available upon request), and found that a larger cluster size n can somewhat improve the performance in preserving Type I error, but the effect is not as substantial as that of the sample size K. Because in most practical longitudinal designs the cluster size (i.e., the number of observations within a subject) is usually less than 30 [40, 41], our recommendation can be applied in general cases (i.e., n ≥ 5) based on the current extensive simulations.
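The sample size recommendations above can be encoded as a simple lookup. The Python sketch below stores the recommended minimum number of subjects for each estimator, exactly as listed in the text, and returns the estimators whose requirement is met for a given K. The dictionary keys and the function name are illustrative shorthand, not identifiers from the geesmv package.

```python
# Recommended minimum number of subjects K for each variance estimator,
# as listed in the text (keys are shorthand for V_LZ, V_MK, ...).
MIN_SAMPLE_SIZE = {
    "LZ": 50, "MK": 40, "KC": 50, "PAN": 30, "GST": 20,
    "MD": 30, "FG": 40, "MBN": 50, "WL": 10,
}


def estimators_for(k):
    """Estimators whose recommended minimum sample size is at most k."""
    return sorted(name for name, k_min in MIN_SAMPLE_SIZE.items() if k >= k_min)


print(estimators_for(25))
print(estimators_for(30))
```

These thresholds come from simulations with equal cluster sizes, so they are a starting point for choosing an estimator rather than a guarantee.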

Figure 1.

Type I errors based on Wald and t–tests for continuous outcomes with the true correlation structure as exchangeable

Figure 6.

Type I errors based on Wald and t–tests for binary outcomes with the true correlation structure as AR-1

4. Data examples with small samples

We present results from two real data applications, one with continuous outcomes and the other with count outcomes, to test our R program and compare the performance of the different variance estimators under finite sample sizes. The first data set is from a study of orthodontic measurements on children, which includes 11 girls and 16 boys measured at ages 8, 10, 12, and 14 [30]. The response is the distance (in millimeters) from the center of the pituitary to the pterygomaxillary fissure, and the primary covariates of interest are age (in years) and gender (Male; Female). The objective is to investigate whether there are statistically significant gender differences in dental growth measurements and in their temporal trends as age increases. This example has been analyzed by Wang and Long [16] for the small-sample properties of several estimators. Here, we conduct comparisons by considering the eight modified variance estimators in addition to the original robust “sandwich” estimator. The mean model of GEE is given by

| (21) |

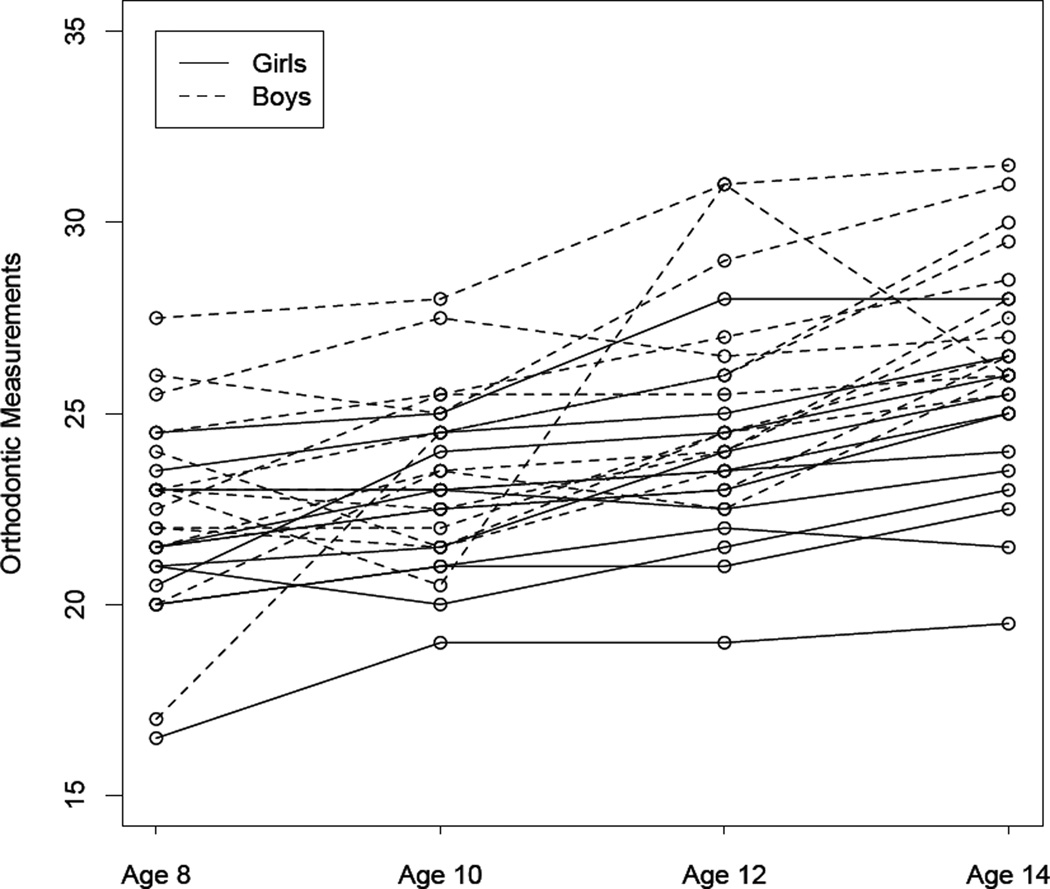

The orthodontic measurements are plotted in Figure 7, where the black lines are for girls and the red lines are for boys. The boys have higher measurements than the girls on average, and the measurements tend to increase with age. GEE analysis results, including parameter estimates and the various variance estimates, are shown in Table 3. Both Wald and t–tests at the significance levels of 0.01 and 0.05 are applied for hypothesis testing. All variance estimators provide comparable results using Wald tests, but when using t–tests at the significance level of 0.01, different testing conclusions for gender are obtained, indicating that the choice of small-sample adjustment in the variance estimator may affect the testing results.

Figure 7.

Orthodontic measurements by subject over time

Table 3.

Estimation results for the case study of orthodontic measurements

| | β̂ | VLZ | VMK | VPAN | VGST | VKC | VMD | VFG | VMBN | VWL |
|---|---|---|---|---|---|---|---|---|---|---|
| Independence | | | | | | | | | | |
| | 4.32 | 0.461 | 0.489 | 0.461 | 0.489 | 0.470 | 0.479 | 0.486 | 0.574 | 0.479 |
| Gender(=M) | 2.32 | 0.750 | 0.795 | 0.733 | 0.777 | 0.782 | 0.816 | 0.836† | 0.803 | 0.793 |
| Exchangeable | | | | | | | | | | |
| | 4.32 | 0.461 | 0.489 | 0.461 | 0.489 | 0.470 | 0.479 | 0.469 | 0.498 | 0.479 |
| Gender(=M) | 2.32 | 0.750 | 0.795 | 0.733 | 0.777 | 0.782 | 0.816 | 0.836‡ | 0.818 | 0.793 |
| AR-1 | | | | | | | | | | |
| | 4.25 | 0.480 | 0.509 | 0.480 | 0.509 | 0.472 | 0.499 | 0.525 | 0.538 | 0.499 |
| Gender(=M) | 2.41 | 0.754 | 0.800 | 0.734 | 0.778 | 0.783 | 0.821 | 0.841† | 0.813 | 0.795 |
| Unstructured | | | | | | | | | | |
| | 4.27 | 0.466 | 0.495 | 0.466 | 0.495 | 0.516 | 0.484 | 0.473 | 0.507 | 0.484 |
| Gender(=M) | 2.22 | 0.730 | 0.774‡ | 0.713 | 0.756 | 0.783‡ | 0.795‡ | 0.814† | 0.794‡ | 0.772 |

All are significant at both significance levels of 0.01 and 0.05 using Wald or t–tests except the ones with superscripts.

†: not significant based on either Wald or t–tests at the significance level of 0.01;

‡: significant based on Wald tests but not on t–tests at the significance level of 0.01.

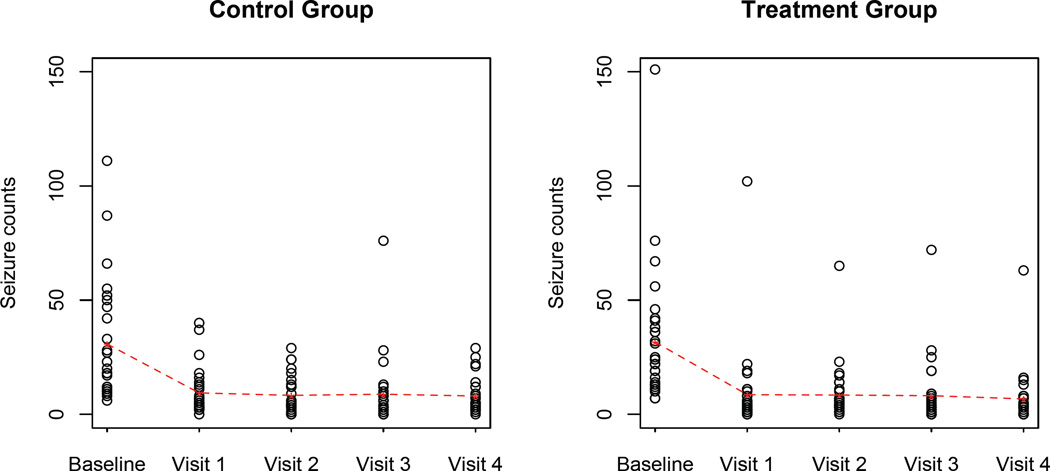

The second example is from the randomized trial of progabide, consisting of 59 individuals [31]. The subjects were randomly assigned to receive the anti-epileptic treatment (progabide) or placebo (control). The outcome is the number of epileptic seizures in each of four consecutive two-week intervals, and the variables recorded include age, the baseline epileptic seizure count (in an eight-week interval) prior to treatment assignment, and the treatment indicator (Trt, 1=progabide; 0=control). In particular, for model fitting, the variable Baseline is the baseline epileptic seizure count rate per week; Time is the number of weeks, taking the values 2, 4, 6, and 8; Interval_duration is the duration of each interval (i.e., 2 weeks), and log(Interval_duration) is treated as an offset variable in the model. The goal of this trial is to evaluate whether the anti-epileptic treatment is effective. We use the complete data set of all 59 subjects and a subset of 30 subjects, randomly drawn from the complete data without replacement, to perform hypothesis testing and evaluate the small-sample properties of the different estimators. Note that the interaction term of Trt and Time was also investigated but was not significant; thus the final log-linear model for this study is given by

| (22) |

The scatter plots of seizure counts by time interval for the progabide and control groups are shown in Figure 8, and indicate that the counts decrease dramatically in the first two weeks after treatment and remain stable afterwards for both groups. The GEE-based parameter estimates as well as the square roots of the various variance estimates are shown in Table 4. No significant (progabide) treatment effect is detected using either the complete or the subset data based on any variance estimator, and no significant temporal trend is detected in the complete data, whereas Baseline has a significant effect on the seizure counts throughout. For the subset data analysis, however, slightly different conclusions on the significance of the temporal trend are obtained depending on the type of test and the significance level. For instance, the tests of the temporal effect using VGST, VMD and VWL are significant at the significance level of 0.05 but not at the significance level of 0.01 based on Wald or t–tests, whereas those using VKC and VFG are significant only based on Wald tests at the significance level of 0.05. This data example shows that when the sample size is smaller (i.e., ≤ 30), the validity of hypothesis testing can be influenced by the bias of the variance estimators.

Figure 8.

Seizure counts over time for treatment and control groups. The dotted red line is the average number of seizure counts over time

Table 4.

Estimation results for the case study of epileptic seizures

| | β̂ | VLZ | VMK | VPAN | VGST | VKC | VMD | VFG | VMBN | VWL |
|---|---|---|---|---|---|---|---|---|---|---|
| Complete data (K=59) | | | | | | | | | | |
| Independence | | | | | | | | | | |
| Baseline | 0.17 | 0.008 | 0.009 | 0.013 | 0.014 | 0.009 | 0.010 | 0.009 | 0.009 | 0.014 |
| Trt(= 1)† | −0.23 | 0.176 | 0.182 | 0.159 | 0.165 | 0.181 | 0.191 | 0.180 | 0.182 | 0.167 |
| Time† | −0.03 | 0.017 | 0.018 | 0.016 | 0.016 | 0.018 | 0.018 | 0.018 | 0.019 | 0.016 |
| Exchangeable | | | | | | | | | | |
| Baseline | 0.17 | 0.008 | 0.009 | 0.013 | 0.014 | 0.009 | 0.010 | 0.009 | 0.009 | 0.014 |
| Trt(= 1)† | −0.22 | 0.174 | 0.180 | 0.158 | 0.164 | 0.182 | 0.189 | 0.179 | 0.181 | 0.166 |
| Time† | −0.03 | 0.017 | 0.018 | 0.016 | 0.016 | 0.019 | 0.018 | 0.018 | 0.018 | 0.016 |
| AR-1 | | | | | | | | | | |
| Baseline | 0.17 | 0.008 | 0.009 | 0.012 | 0.013 | 0.010 | 0.010 | 0.009 | 0.009 | 0.013 |
| Trt(= 1)† | −0.25 | 0.166 | 0.172 | 0.149 | 0.155 | 0.185 | 0.182 | 0.171 | 0.173 | 0.157 |
| Time† | −0.03 | 0.017 | 0.018 | 0.015 | 0.016 | 0.018 | 0.018 | 0.017 | 0.018 | 0.015 |
| Subset data (K=30) | | | | | | | | | | |
| Independence | | | | | | | | | | |
| Baseline | 0.18 | 0.014 | 0.015 | 0.017 | 0.018 | 0.014 | 0.026 | 0.015 | 0.015 | 0.019 |
| Trt(= 1)† | −0.29 | 0.270 | 0.290 | 0.257 | 0.276 | 0.293 | 0.302 | 0.286 | 0.289 | 0.290 |
| Time | −0.05 | 0.020† | 0.021# | 0.019# | 0.021# | 0.023\* | 0.022# | 0.023\* | 0.025† | 0.020# |
| Exchangeable | | | | | | | | | | |
| Baseline | 0.18 | 0.013 | 0.014 | 0.017 | 0.019 | 0.015 | 0.025 | 0.015 | 0.015 | 0.020 |
| Trt(= 1)† | −0.27 | 0.288 | 0.310 | 0.261 | 0.281 | 0.296 | 0.325 | 0.305 | 0.311 | 0.295 |
| Time | −0.05 | 0.020# | 0.021# | 0.019‡ | 0.021# | 0.021\* | 0.022# | 0.021\* | 0.022† | 0.020# |
| AR-1 | | | | | | | | | | |
| Baseline | 0.18 | 0.013 | 0.014 | 0.017 | 0.018 | 0.020 | 0.025 | 0.015 | 0.015 | 0.019 |
| Trt(= 1)† | −0.34 | 0.279 | 0.299 | 0.255 | 0.274 | 0.296 | 0.314 | 0.295 | 0.299 | 0.289 |
| Time | −0.05 | 0.023# | 0.025‡ | 0.021# | 0.023# | 0.022\* | 0.026# | 0.026\* | 0.026† | 0.022# |

Complete data: All are significant at both significance levels of 0.05 and 0.01 using Wald or t–tests except the ones with superscripts. Subset data: All are significant at both significance levels of 0.05 and 0.01 using Wald or t–tests except the ones with superscripts. Note that if the superscript is attached to the variable name, the significance result is the same for the whole row.

†: not significant on either Wald or t–tests at the significance level of either 0.05 or 0.01;

‡: significant on Wald test, but not significant on t–test at the significance level of 0.01;

#: significant at the significance level of 0.05 but not at the significance level of 0.01 based on Wald or t–tests;

\*: significant only based on Wald tests at the significance level of 0.05.
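As a worked reading of one row of Table 4, the following Python sketch takes the treatment estimate under the independence structure with the complete data (β̂ = −0.23) together with the value in the first standard-error column (0.176), and computes the implied rate ratio, the Wald statistic, and a two-sided normal p-value. Only the standard library is used, and the function names are illustrative. Because the model is log-linear, exp(β̂) is interpreted as a multiplicative effect on the seizure rate.

```python
import math


def rate_ratio(beta_hat):
    """Multiplicative effect on the mean count in a log-linear model."""
    return math.exp(beta_hat)


def wald_z(beta_hat, se):
    """Wald statistic: estimate divided by its standard error."""
    return beta_hat / se


def two_sided_normal_p(z):
    """Two-sided p-value under a standard normal reference distribution."""
    return math.erfc(abs(z) / math.sqrt(2.0))


z = wald_z(-0.23, 0.176)
print(rate_ratio(-0.23), z, two_sided_normal_p(z))
```

The statistic is about −1.31 in absolute value below 1.96, which agrees with the table note that the treatment effect is not significant at the 0.05 level.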

5. Conclusions and Discussions

In this paper, we provide a systematic review of recent developments in modified variance estimators for GEE that improve small-sample properties, including the formulation of these modifications and their theoretical and numerical comparisons. To make these modifications convenient to implement, we developed the R package “geesmv”, which is freely available at http://cran.r-project.org/web/packages/geesmv/. We also discuss the two main types of hypothesis tests for GEE, Wald tests and t–tests, and evaluate their Type I error when the sample size is small. Through extensive simulation studies and two real data examples, we compare the performance of the variance estimators under different scenarios and provide guidance on the sample size needed to control Type I error. Our simulation study indicates that, in general, t–tests based on the variance estimator VML perform robustly well across different set-ups. In particular, the degrees of freedom for the t–statistic are approximated more accurately than in Li and Redden [18]. However, this work has several limitations. First, the modifications discussed here target the “sandwich” variance estimator directly; other methods that have been proposed (e.g., improving the efficiency and robustness of the parameter estimates) are not covered [28, 29, 32, 33, 34]. Second, our recommendations on the appropriate sample size for each estimator to preserve Type I error come from a limited set of simulation studies under general set-ups (e.g., equal cluster sizes) and may not always apply, for instance, with unequal cluster sizes. Third, we evaluate only the Type I error; the Type II error, or power, warrants further investigation.
It is also worth pointing out that the choice of an appropriate modification depends on various aspects of the real application (e.g., study design or intra-subject correlation) [2, 4, 35].
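
The gap between Wald and t–tests in small samples can be illustrated with a short numerical sketch. The Python code below is not part of geesmv. It assumes, purely for illustration, that the test statistic follows a t–distribution with a given number of degrees of freedom (the hypothetical argument `df`), and computes the actual two-sided rejection rate when that statistic is compared with the standard normal cutoff of about 1.96, as a Wald test would do. The t CDF is computed with Simpson's rule so that only the standard library is needed.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_const = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                 - 0.5 * math.log(df * math.pi))
    return math.exp(log_const - (df + 1) / 2 * math.log1p(x * x / df))


def t_cdf(x, df, n=2000):
    """CDF of Student's t via composite Simpson's rule on [0, |x|].

    n must be even; symmetry around zero handles negative x.
    """
    a = abs(x)
    if a == 0:
        return 0.5
    h = a / n
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return 0.5 + area if x > 0 else 0.5 - area


def size_with_normal_cutoff(df, z_crit=1.959964):
    """Two-sided rejection rate when a t(df) statistic is referred to the
    normal cutoff z_crit (assumption: the statistic is exactly t(df))."""
    return 2 * (1 - t_cdf(z_crit, df))


if __name__ == "__main__":
    # Hypothetical degrees of freedom, chosen only for illustration.
    for df in (5, 10, 20, 50):
        print(df, round(size_with_normal_cutoff(df), 4))
```

Under this assumption the rejection rate exceeds the nominal 0.05 for small `df` and approaches 0.05 as `df` grows, which mirrors the inflated Type I error of Wald tests in small samples.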

In addition to modifications of variance estimators and test statistics, another important issue, power analysis, tends to be challenging, for example, in a cluster randomized trial (CRT) with a small number of clusters [5, 36, 37]. Previous work on sample size and power calculation includes Liu and Liang [38], who used the generalized score test for inference and derived the resulting non-central chi-square distribution of the test statistic under the alternative hypothesis. Subsequently, Shih [39] provided an alternative formula for sample size and power calculation that relies on Wald tests using the estimated regression parameters and robust variance estimators. This power analysis is valid only when V(β̂) is unbiased and asymptotic normality holds. When K is small, however, the power tends to be overestimated. Hence, a modification of the power estimate is necessary to reduce this bias, for which an approximate t–distribution could be considered. Adjusting the power estimate by incorporating the variance estimators in Section 2 for efficiency improvement is also expected to be advantageous. In applications of GEE, other issues such as model selection or missing data with small samples, or even with informative cluster sizes, are also of interest. Thus, novel methodology is still needed for GEE to accommodate various data features for valid inference in real applications.
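
To make the sensitivity of Wald-based power to the variance estimate concrete, the following Python sketch uses the generic two-sided power of a normal-theory Wald test, Φ(|β|/se − z) + Φ(−|β|/se − z), where z is the 1 − α/2 standard normal quantile. This is a textbook approximation and not necessarily the exact formula of Shih [39]. The effect size, the standard error and the 20% downward bias in the variance are hypothetical values chosen only for illustration.

```python
from math import sqrt
from statistics import NormalDist


def wald_power(beta, se, alpha=0.05):
    """Two-sided power of a Wald test, assuming beta_hat ~ Normal(beta, se^2)."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = abs(beta) / se
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Hypothetical inputs (not taken from the paper).
beta = 0.5          # assumed true regression coefficient
true_se = 0.2       # assumed true standard error of beta_hat
bias_factor = 0.8   # assumed ratio E[V_hat] / V for a downward-biased estimator

# Power computed with the true variance versus the understated variance.
power_true = wald_power(beta, true_se)
power_nominal = wald_power(beta, true_se * sqrt(bias_factor))

if __name__ == "__main__":
    print(f"power with the true variance:        {power_true:.3f}")
    print(f"power with the understated variance: {power_nominal:.3f}")
```

Plugging an understated variance into the formula yields a larger nominal power than the true variance would, which is one way to see why the power is overestimated when the variance estimator is biased downward.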

Supplementary Material

Figure 2.

Type I errors based on Wald and t–tests for continuous outcomes with the true correlation structure as AR-1

Figure 3.

Type I errors based on Wald and t–tests for count outcomes with the true correlation structure as exchangeable

Figure 4.

Type I errors based on Wald and t–tests for count outcomes with the true correlation structure as AR-1

Figure 5.

Type I errors based on Wald and t–tests for binary outcomes with the true correlation structure as exchangeable

Acknowledgement

The author was supported by a pilot grant and KL2 career grant from Penn State Clinical and Translational Science Institute (CTSI). The project was supported by the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health (NIH), through Grant 5 UL1 RR0330184-04 and Grant 5 KL2 TR 126-4. The content is solely the responsibility of the author and does not represent the views of the NIH. The authors thank Nicholas Sterling for correcting grammar errors during the revision of the manuscript.

References

- 1. Feng ZD, Diehr P, Peterson A, McLerran D. Selected statistical issues in group randomized trials. Annual Review of Public Health. 2001;22:167–187. doi: 10.1146/annurev.publhealth.22.1.167.

- 2. Diggle P, Heagerty P, Liang KY, Zeger SL. Analysis of longitudinal data. Oxford, UK: Oxford University Press; 2002.

- 3. Fitzmaurice G, Davidian M, Verbeke G, Molenberghs G. Longitudinal data analysis. Chapman and Hall/CRC; 2008.

- 4. Hedeker D, Gibbons RD. Analysis of longitudinal data. John Wiley & Sons; 2006.

- 5. Friedman LM, Furberg CD, DeMets DL. Fundamentals of Clinical Trials. 3rd edition. New York: Springer; 1989.

- 6. McCullagh P, Nelder JA. Generalized linear models. London: Chapman and Hall; 1989.

- 7. Liang KY, Zeger SL. Longitudinal data analysis using generalized linear models. Biometrika. 1986;73:13–22.

- 8. Crowder M. On the use of a working correlation matrix in using generalized linear model for repeated measures. Biometrika. 1995;82:407–410.

- 9. Wedderburn RWM. Quasi-likelihood functions, generalized linear models, and the Gauss-Newton method. Biometrika. 1974;61:439–447.

- 10. Hardin JW, Hilbe JM. Generalized estimating equations. Boca Raton, FL: Chapman and Hall/CRC Press; 2003.

- 11. Paik MC. Repeated measurement analysis for nonnormal data in small samples. Communications in Statistics: Simulations. 1988;17(4):1155–1171.

- 12. Feng Z, McLerran D, Grizzle J. A comparison of statistical methods for clustered data analysis with Gaussian error. Statistics in Medicine. 1996;15:1793–1806. doi: 10.1002/(SICI)1097-0258(19960830)15:16<1793::AID-SIM332>3.0.CO;2-2.

- 13. Cox DR, Hinkley DV. Theoretical Statistics. London: Chapman and Hall; 1974.

- 14. Gunsolley JC, Getchell C, Chinchilli VM. Small sample characteristics of generalized estimating equations. Communications in Statistics: Simulations. 1995;24:869–878.

- 15. Qu Y, Piedmonte MR, Williams GW. Small sample validity of latent variable models for correlated binary data. Communications in Statistics: Simulations. 1994;23:243–269.

- 16. Wang M, Long Q. Modified robust variance estimator for generalized estimating equations with improved small-sample performance. Statistics in Medicine. 2011;30(11):1278–1291. doi: 10.1002/sim.4150.

- 17. Sharples K, Breslow N. Regression analysis of correlated binary data: some small sample results for the estimating equation approach. Journal of Statistical Computation and Simulation. 1992;42:1–20.

- 18. Li P, Redden DT. Small sample performance of bias-corrected sandwich estimators for cluster-randomized trials with binary outcomes. Statistics in Medicine. 2015. doi: 10.1002/sim.6344.

- 19. Fay MP, Graubard BI, Freedman LS, Midthune DN. Conditional logistic regression with sandwich estimators: application to a meta-analysis. Biometrics. 1998;54:195–208.

- 20. Pan W. On the Robust Variance Estimator in Generalized Estimating Equations. Biometrika. 2001;88:901–906.

- 21. Qu A, Lindsay B, Li B. Improving generalised estimating equations using quadratic inference functions. Biometrika. 2000;87:823–836.

- 22. Mancl LA, DeRouen TA. A Covariance Estimator for GEE with Improved Small-Sample Properties. Biometrics. 2001;57:126–134. doi: 10.1111/j.0006-341x.2001.00126.x.

- 23. MacKinnon JG. Some heteroskedasticity-consistent covariance matrix estimators with improved finite sample properties. Journal of Econometrics. 1985;29:305–325.

- 24. Kauermann G, Carroll RJ. A note on the efficiency of sandwich covariance matrix estimation. Journal of the American Statistical Association. 2001;96:1387–1398.

- 25. Gosho M, Sato Y, Takeuchi H. Robust covariance estimator for small-sample adjustment in the generalized estimating equations: A simulation study. Science Journal of Applied Mathematics and Statistics. 2014;2(1):20–25.

- 26. Fay MP, Graubard BI. Small-sample adjustments for Wald-type tests using sandwich estimators. Biometrics. 2001;57:1198–1206. doi: 10.1111/j.0006-341x.2001.01198.x.

- 27. Morel JG, Bokossa MC, Neerchal NK. Small sample correction for the variance of GEE estimators. Biometrical Journal. 2003;45(4):395–409.

- 28. Guo X, Pan W, Connett JE, Hannan PJ, French SA. Small-sample performance of the robust score test and its modifications in generalized estimating equations. Statistics in Medicine. 2005;24:3479–3495. doi: 10.1002/sim.2161.

- 29. Pan W, Wall MM. Small-sample adjustments in using the sandwich variance estimator in generalized estimating equations. Statistics in Medicine. 2002;21:1429–1441. doi: 10.1002/sim.1142.

- 30. Potthoff RF, Roy SW. A generalized multivariate analysis of variance model useful especially for growth curve problems. Biometrika. 1964;51:313–326.

- 31. Thall PF, Vail SC. Some covariance models for longitudinal count data with overdispersion. Biometrics. 1990;46:657–671.

- 32. Lipsitz ST, Laird NM, Harrington DP. Using the jackknife to estimate the variance of regression estimators from repeated measures studies. Communications in Statistics: Theory and Methods. 1990;19:821–845.

- 33. Sherman M, Le Cessie S. A comparison between bootstrap methods and generalized linear model. Communications in Statistics: Simulations. 1997;26:901–925.

- 34. Wang L, Zhou J, Qu A. Penalized generalized estimating equations for high-dimensional longitudinal data analysis. Biometrics. 2011;68(2):353–360. doi: 10.1111/j.1541-0420.2011.01678.x.

- 35. Fitzmaurice G, Laird NM, Ware JH. Applied longitudinal data. John Wiley & Sons; 2004.

- 36. Shuster JJ. Practical Handbook of Sample Size Guidelines for Clinical Trials. Boca Raton, FL: CRC Press; 1993.

- 37. Teerenstra S, Lu B, Preisser JS, van Achterberg T, Borm GF. Sample size considerations of GEE analyses of three-level cluster randomized trials. Biometrics. 2010;66:1230–1237. doi: 10.1111/j.1541-0420.2009.01374.x.

- 38. Liu G, Liang KY. Sample size calculations for studies with correlated observations. Biometrics. 1997;53:937–947.

- 39. Shih WJ. Sample size and power calculations for periodontal and other studies with clustered samples using the method of generalized estimating equations. Biometrical Journal. 1997;39:899–908.

- 40. Ma Y, Mazumdar M, Memtsoudis SG. Beyond repeated measures ANOVA: advanced statistical methods for the analysis of longitudinal data in anesthesia research. Reg Anesth Pain Med. 2012;37(1):99–105. doi: 10.1097/AAP.0b013e31823ebc74.

- 41. Locascio JJ, Atri A. An overview of longitudinal data analysis methods for neurological research. Dement Geriatr Cogn Disord Extra. 2011;1:330–357. doi: 10.1159/000330228.
