Abstract

The current focus on standardization of techniques and transparency of reporting in the biomedical, peer-reviewed literature is welcome. However, that focus has been intermittent as well as lacklustre, and so has failed to tackle the alarming lack of reliability and reproducibility of biomedical research. Authors have access to numerous recommendations, ranging from simple standards dealing with technical issues to those regulating clinical trials, suggesting that improved reporting guidelines are not the solution. The elemental solution is for editors to require meticulous implementation of their journals’ instructions for authors and reviewers and stipulate that no paper is published without a transparent, complete and accurate materials and methods section.

Keywords: Research, Reproducibility, Biomedicine, qPCR, Microarrays, Next generation sequencing

The treatment of some scientific topics, particularly in biomedical research, is very much like that afforded to the catwalk fashion industry: something becomes hyped, everyone talks about it and eventually the popular press picks up the topic and generally distorts its conclusions, only for the bandwagon to move on to the next hot topic. Tellingly, this excitement is usually misplaced and serves more to publicize the particular authors, institutions and journals than it does to contribute to any advancement in scientific knowledge or translational benefit. In contrast, vast amounts of scientific data are published without eliciting any interest whatsoever, leaving the authors to cite their own papers in the hope that their work will, one day, become the hyped fashion. Regardless, the results and conclusions from much, if not most, of the publications of biomedical research are questionable: the majority are not reproducible [1], [2], [3] and so do not satisfy one of the fundamental requirements of scientific research. There are a number of reasons why published results cannot be reproduced:

1. The original research was carried out incorrectly, for example without sufficient regard for sample selection or template quality, or with inappropriate data analysis.
2. The attempts to replicate results are flawed because the information provided in the publication is not sufficiently detailed and explicit.
3. The replicating laboratories do not have sufficient understanding of the uncertainty associated with their experiments. For example, the high precision of methods like digital PCR can generate different results, but a more focused look at reproducibility may show they are all describing different parts of a data distribution, which, once understood, would allow a definition of what can actually be measured.

Any of these explanations is objectionable and results in billions of dollars being wasted every year [4]. This message is, of course, not new [5] and over the last twenty years or so there have been numerous, often high profile, publications lamenting this state of affairs and proposing solutions, most recently summarized in a review article published in this journal [6].

Why is there this apparent indifference to publication quality? Is it because detailed scrutiny of the reliability, standardization, reproducibility and transparency of methods is perceived as comparatively mundane and unexciting? Is the current peer review process inadequate to provide a reliable analysis of all techniques? In theory, there is no disagreement about the importance of the methods section of a scientific manuscript [7] or that it requires a clear, accurate [8] and, crucially, adequate description of how an experiment was carried out. In theory, it is also accepted that the aim of a methods section is to provide the information required to assess the validity of a study and hence be sufficiently detailed so that competent readers with access to the necessary experiment components and data can reproduce the results.

Certainly, despite the wealth of evidence that published methods are wholly deficient, there has never been any determined, consistent and coherent effort to address these issues and deal with their consequences. A welcome, recent effort is therefore the publication of a report based on the proceedings of a symposium held earlier this year, aimed at exploring the challenges and opportunities for improving the reliability and reproducibility of biomedical research in the UK (http://www.acmedsci.ac.uk/policy/policy-projects/reproducibility-and-reliability-of-biomedical-research/). However, a close reading of the report suggests that it simply summarizes the findings and opinions that are already published and proposes the same solutions that have been ignored until now. These include “top-down measures from journals, funders and research organisations” that aim to improve the quality of training and institute a research culture and career structure that reduces the emphasis on novelty and publication, as well as “bottom-up ones from individual researchers and laboratories” that address issues of poor study design and statistical practices, inadequate reporting of methods, and problems with quality control. What is lacking is a decisive, headline-grabbing call to action.

Some of the suggestions also imply that the authors of this report are not overly familiar with existing, long-standing efforts to standardize protocols and improve transparency. For example, a section headed “strategies to improve research practice and the reproducibility of biomedical research” suggests that establishing standards could address some of the issues associated with reproducibility and points to the Minimum Information About a Microarray Experiment (MIAME) guidelines [9] as the exemplary standard. In fact, there are numerous “Minimum Information” standards projects following on from that paper, most of which have been registered with the Minimum Information for Biological and Biomedical Investigations initiative (http://www.dcc.ac.uk/resources/metadata-standards/mibbi-minimum-information-biological-and-biomedical-investigations), where they are collected and curated and can be accessed through a searchable portal of inter-related data standards and databases (https://biosharing.org/standards). Complementary information is also available from the US National Library of Medicine website, which lists the organizations that provide advice and guidelines for reporting research methods and findings (https://www.nlm.nih.gov/services/research_report_guide.html). Medical research studies, in particular, are well served with reporting guidelines, for example by the EQUATOR Network, which aims to improve the reliability and value of the medical research literature by promoting transparent and accurate reporting (http://www.equator-network.org). There are reporting guidelines for many different study designs, such as CONSORT (www.consort-statement.org) for randomized trials, STARD for studies of diagnostic accuracy (www.stard-statement.org/) and SPIRIT for study protocols (http://www.spirit-statement.org).

If it were simply a matter of developing standards, then the state of the peer-reviewed literature would not be as scandalous as it is. The real problem stems from the lack of application of those standards. This is most easily demonstrated by looking at, arguably, the most widely used molecular techniques, real-time PCR (qPCR) and reverse transcription (RT)-qPCR. These methods have found supporting roles as part of a huge number of publications in every area of the life sciences, clinical diagnostics, biotechnology, forensics and agriculture. qPCR-based assays are usually described as simple, accurate and reliable. This is true, but only if certain technical and analytical criteria are met. It is especially important to emphasize that the accuracy of results is critically dependent on the choice of calibration, whether this be a control sample or a calibration curve. This method is easily abused and one particularly egregious example is provided by its use to detect measles virus in the intestine of autistic children. Numerous, independent replication attempts, including those carried out by the original authors, failed to reproduce the original data and an analysis of the raw data, carried out as part of the US autism omnibus trial in Washington DC, revealed that the conclusions were based on fallacious results obtained by a combination of sample contamination with DNA, incorrect analysis procedures and poor experimental methods [10], [11]. A paper publishing these data remains to be retracted 13 years after publication. While this delay is typical, it is totally unacceptable and results in an underestimation of the role of fraud in the ongoing retraction epidemic [12], [13].

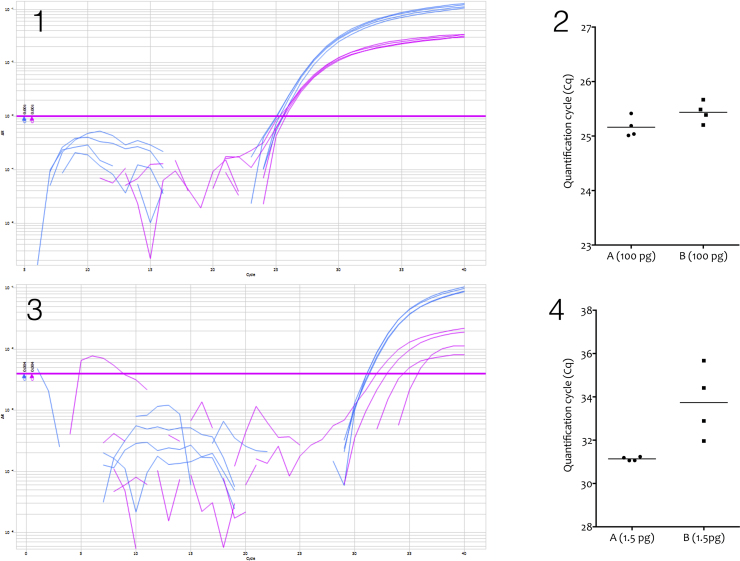

A typical problem associated with qPCR assay variability is illustrated in Fig. 1, which demonstrates that qPCR assays can behave significantly differently under different experimental conditions. As the data demonstrate, at the higher target DNA concentration both assays generate reliable data. However, at the lower concentration, results are reliable from only one of the assays (A), with the ΔCq of 5.98 ± 0.21 between the two target DNA concentrations being in line with the expected value for the dilution factor. In contrast, the results of the other assay (B) are much more variable (ΔCq of 8.29 ± 1.65) and also do not accurately reflect the dilution factor. The report by Dr. Andreas Nitsche in this issue shows that some assays are particularly sensitive to variability in different buffers and even different batches of the same buffer. If assay behavior is not thoroughly assessed under simulated experimental conditions prior to carrying out real-life tests, this can lead to false results and confound any potential conclusions.

Fig. 1.

Comparison of a dualplex qPCR assay targeting Candida dubliniensis or Candida glabrata. (1) A PCRmax Eco (http://www.pcrmax.com) was used to amplify 100 pg of C. glabrata (A) and C. dubliniensis (B) DNA. Four replicate reactions of 5 μl each were run using Agilent’s Brilliant III qPCR mastermix, with PCR amplicons detected using FAM-(C. glabrata, A, blue)- or HEX-(C. dubliniensis, B, pink) labelled hydrolysis probes. The assays are 98% and 97% efficient, respectively. (2) Plots of the two assays recording average Cqs of 25.16 ± 0.19 and 25.44 ± 0.19 for C. glabrata and C. dubliniensis, respectively (Mean ± SD). (3) Conditions as described for 1, except that the replicates contained 1.5 pg of each of the fungal DNAs. (4) Plot of the two assays recording average Cqs of 31.14 ± 0.09 and 33.73 ± 1.64 for C. glabrata and C. dubliniensis, respectively (Mean ± SD).
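The comparison between the observed ΔCq values and "the expected value for the dilution factor" can be checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' analysis: it assumes that each cycle multiplies the product by (1 + efficiency) and that the two mean Cq values are independent, so their SDs combine in quadrature. The function names are illustrative; the input values are those given in the Fig. 1 legend.

```python
import math


def expected_delta_cq(dilution_factor, efficiency=1.0):
    """Cycles needed to make up a dilution, assuming each cycle
    multiplies the amount of product by (1 + efficiency)."""
    return math.log(dilution_factor) / math.log(1.0 + efficiency)


def delta_cq(mean_low, sd_low, mean_high, sd_high):
    """Difference between two mean Cq values, with the SDs combined
    in quadrature (assumes the two measurements are independent)."""
    return mean_low - mean_high, math.hypot(sd_low, sd_high)


# 100 pg versus 1.5 pg of template, as stated in the Fig. 1 legend
dilution = 100 / 1.5

ideal = expected_delta_cq(dilution)                    # 100% efficiency
assay_a_expected = expected_delta_cq(dilution, 0.98)   # C. glabrata assay
assay_b_expected = expected_delta_cq(dilution, 0.97)   # C. dubliniensis assay

# Mean Cq ± SD at 1.5 pg and at 100 pg, from the Fig. 1 legend
a_diff, a_sd = delta_cq(31.14, 0.09, 25.16, 0.19)
b_diff, b_sd = delta_cq(33.73, 1.64, 25.44, 0.19)

print(f"expected: {ideal:.2f} (ideal), "
      f"{assay_a_expected:.2f} (A), {assay_b_expected:.2f} (B)")
print(f"observed: A {a_diff:.2f} ± {a_sd:.2f}, B {b_diff:.2f} ± {b_sd:.2f}")
```

Under these assumptions the expected difference is a little over six cycles for both assays, which assay A matches within its spread while assay B overshoots by about two cycles.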

The MIQE guidelines, published in 2009 [14], are among the most cited molecular recommendations (nearly 4000 citations vs around 3500 for the MIAME guidelines published in 2001). They describe the minimum information necessary for evaluating qPCR experiments and include a checklist comprising nine sections to help guide the author to the full disclosure of all reagents, assay sequences and analysis methods and so help to minimise this kind of variability or potential inaccuracy. The guidelines suggest appropriate parameters for qPCR assay design and reporting, and have become widely accepted by both the research community and, especially, the companies producing and selling qPCR reagents and instrumentation. Implementation of these guidelines has been demonstrated to result in the publication of more complete and transparent papers, although the majority of qPCR-based papers continue to provide inadequate information on experimental detail [15].

There can be no doubt that there are a vast number of unreliable and incorrect results published that have been generated by qPCR, a relatively simple technique. This raises the obvious question of how reliable the results are that have been obtained using significantly more demanding methods. An example is digital PCR (dPCR), which involves the dilution and partitioning of target molecules into large numbers of separate reaction chambers so that each contains either one or no copies of the sequence of interest [16]. A comparison of the number of partitions in which the target is detected with the number in which it is not allows quantitative analysis without the need for a calibration curve. Hence data analysis can be not just more precise, but also more straightforward than with qPCR. However, there are additional parameters that any reader of a publication using this technology needs to be aware of, most obviously the mean number of target copies per partition, the number of partitions, individual partition volumes and the total volume of the partitions measured. Hence the need for the digital PCR MIQE guidelines, which address known requirements for dPCR that were identified during the early stage of its development and commercial implementation [17]. Expression microarrays and next generation sequencing incorporate an additional layer of complexity. Whilst the parameters required to ensure reliable qPCR and dPCR results are reasonably few, those required to assess the validity of expression microarrays or RNA sequencing are significantly more complex. There have been several papers investigating the effects of technical and bioinformatics variability on RNA-seq results [18], [19], [20], [21], and standards for RNA sequencing [22], [23] (http://www.modencode.org/publications/docs/index.shtml) as well as for chromatin immunoprecipitation and high-throughput DNA sequencing (ChIP-seq) [24] are being developed, but again there is no decisive push for their universal acceptance.
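To illustrate why the dPCR parameters listed above (mean copies per partition, number of partitions, partition volume) need to be reported, here is a minimal sketch of the counting calculation. It rests on an assumption not spelled out in the text: that target molecules distribute randomly across partitions, so a Poisson correction is applied to allow for partitions that received more than one copy. The function name and the example numbers are hypothetical.

```python
import math


def dpcr_estimate(positive, total, partition_volume_nl):
    """Estimate target concentration from dPCR partition counts.

    Assumes random (Poisson) distribution of targets, so that the
    fraction of negative partitions equals exp(-lambda).
    """
    if not 0 <= positive < total:
        raise ValueError("need 0 <= positive < total partitions")
    fraction_positive = positive / total
    # Mean number of target copies per partition
    copies_per_partition = -math.log(1.0 - fraction_positive)
    # Total copies across all partitions that were read
    total_copies = copies_per_partition * total
    # Concentration in copies per microlitre (1 nl = 1e-3 µl)
    copies_per_ul = copies_per_partition / (partition_volume_nl * 1e-3)
    return copies_per_partition, total_copies, copies_per_ul


# Hypothetical run: 20,000 partitions of 0.85 nl, 5,000 of them positive
lam, copies, conc = dpcr_estimate(5000, 20000, 0.85)
print(f"{lam:.3f} copies/partition, {copies:.0f} copies, {conc:.1f} copies/µl")
```

The estimate depends directly on the partition count and the partition volume, so a reader cannot check a reported concentration if either is omitted from the methods.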

There is a correlation between the number of retractions and the impact factor of a journal [12]. While this could be due to the greater subsequent scrutiny afforded to results published in a high impact factor journal, it surely is worth noting that, despite the exclusivity of such journals and the pre-publication scrutiny their papers endure, such retractions occur at all. It is also striking that there is an inverse correlation between MIQE compliance and impact factor [25], demonstrating the lack of adequate reporting of methods for, at least, this single technique. The link between inadequate reporting of methods and lack of reproducibility has become more widely accepted, and the publishers of Nature have released a coordinated set of editorial statements that admitted that the Nature publishing group had failed to “exert sufficient scrutiny over the results that they publish” and introduced a checklist to encourage the publication of more detailed experimental methods [26], [27], [28], [29], [30], [31], [32], [33], [34], [35]. However, these laudable aims have not been followed up by meaningful action and a recent analysis of Nature-published articles suggests that none of the parameters deemed to be essential for reliable RT-qPCR reporting are actually being reported [6]. We have repeated the analysis with six papers [36], [37], [38], [39], [40], [41] published in 2015 by the Nature group and found that authors and reviewers are still not heeding their own journals’ publication guidelines. The following description of the RNA extraction, reverse transcription and RT-qPCR steps published in Nature Genetics is typical: “RNA was extracted with the Nucleospin II kit (Macherey-Nagel) and reverse-transcribed using the High-Capacity cDNA Reverse Transcription Kit (Applied Biosystems). PCRs were performed either using TaqMan assays with qRT-PCR Mastermix Plus without UNG (Eurogentec) or using SYBR green (Applied Biosystems). Oligonucleotides were purchased from MWG Eurofins Genomics (supplementary data). Reactions were run on an ABI/PRISM 7500 instrument and analyzed using the 7500 system SDS software (Applied Biosystems)” [39]. At least the authors published the primer sequences, although access to this information reveals that, once again, they used a single unvalidated reference gene.

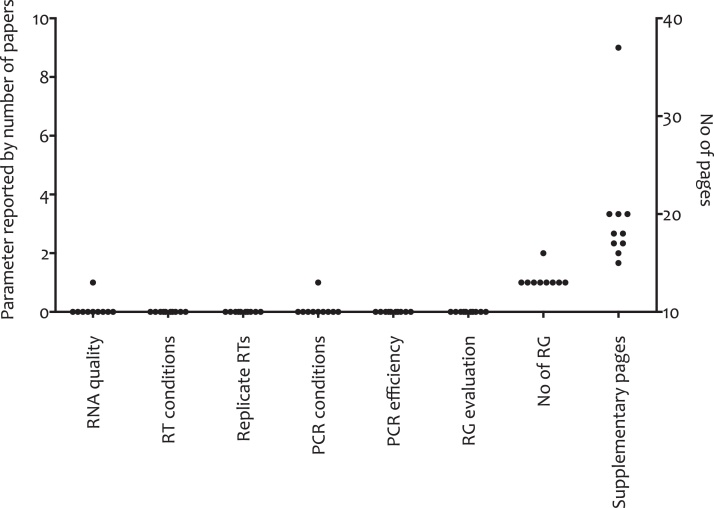

What about other high impact factor journals? We have analyzed ten papers that include RT-qPCR-based results published in 2015 in Cell and its associated journals, to see whether the editors of these high impact factor journals pay any more attention to transparency of reporting (Fig. 2). Not one paper reports any experimental detail and all use inappropriate normalization procedures, yet all of them include extensive supplementary information. Since there is no limit on the pages published with these online supplements, there is no excuse for omitting these basic criteria. Furthermore, all but one use single, unvalidated reference genes for data normalization, which is similar to what was found in a previous large scale study confined to colorectal cancer biomarker publications [42]. This practice was demonstrated to be unreliable a long time ago for the kind of small fold-changes these papers are reporting and should have been abandoned by now [43], [44]. Hence the validity of the reported data is, at the very least, open to doubt. The results of this analysis reinforce the shocking conclusion that the editors of these high impact factor journals have still not received and acted upon the requirement for reporting of qPCR and RT-qPCR experimental procedures.

Fig. 2.

Analysis of ten papers published in Cell [45], [46], Cell Stem Cell [47], [48], Cancer Cell [49], [50], [51] and Molecular Cell [52], [53], [54] between June and November 2015. Materials and methods and supplementary sections were screened for information on seven key parameters required to assess the technical validity of RT-qPCR assays, detailed on the x-axis. The number of papers reporting any one of these parameters is shown on the left-hand y-axis. The right-hand y-axis shows the number of supplemental pages published with each of the ten papers. The one paper using two reference genes (RG) uses both one and two RG in different experiments without explicit validation of the stability of these targets, either alone or in combination.
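Why a single, unvalidated reference gene is risky for small fold-changes can be shown with a short calculation. This is a hedged sketch that assumes relative quantification by the widely used 2^-ΔΔCq approach with 100% amplification efficiency; the analysed papers may have used other calculations, and all Cq values below are hypothetical.

```python
def fold_change(target_ctrl, target_treated, ref_ctrl, ref_treated):
    """Relative expression (treated vs control) by the 2^-ddCq method,
    assuming 100% amplification efficiency for both assays."""
    ddcq = (target_treated - ref_treated) - (target_ctrl - ref_ctrl)
    return 2.0 ** (-ddcq)


# Hypothetical case 1: target and reference gene are both unchanged
stable = fold_change(25.0, 25.0, 20.0, 20.0)

# Hypothetical case 2: target is unchanged, but the reference gene Cq
# shifts by half a cycle in the treated sample
drift = fold_change(25.0, 25.0, 20.0, 20.5)

print(f"stable reference: {stable:.2f}-fold")
print(f"drifting reference: {drift:.2f}-fold")
```

In this sketch a half-cycle shift in the reference gene alone produces an apparent change of about 1.4-fold in a target that has not changed, which is of the same order as the small fold-changes discussed above. Without evidence that the reference gene is stable under the experimental conditions, the two cases cannot be told apart.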

So what is the solution? Obviously, since the problem is multifactorial, involving researchers, grant awarding bodies and journal publishers, no single party is solely responsible, and therefore no single solution will suffice [55]. However, an essential step is that journal editors enforce their own published standards and insist that reviewers carefully scrutinize the materials and methods sections of submitted manuscripts. Whilst no single reviewer has the expertise to assess the nuances of every single technique used, adherence to guidelines helps with that evaluation and every journal should retain a pool of technical reviewers who would evaluate not just the technical acceptability but, equally important, the transparency of reporting. As the data in Fig. 2 show, most papers provide no information at all about experimental procedures relating to RT-qPCR. Of course, there is also an onus on the readers of a paper to be more critical of published research findings and use their common sense to evaluate the likelihood of a result being real or not. In this age of blogs and public comments, it would be simple to let readers email the editor handling a particular paper and to open every publication to reader comments on the journal's website. This would allow the peer-reviewed literature to be updated, and papers that are insufficiently transparent, or whose results do not support their conclusions, could be called out as questionable, gradually reducing their impact and removing them from ongoing citation. The good intentions have been published; now it is time for editors to act.

We would offer some practical solutions for discussion:

1. Journals
   a. Maintain a checklist of reporting requirements for each technique (such as provided by MIQE guidelines) and reject at submission any manuscript without associated standards checklists completed.
   b. Manuscripts should be submitted with data from replicate experiments, even if these are not to be published in the body of the paper, so that the reviewer sees a demonstration that the experiment is reproducible in the authors’ hands.
   c. Insist that papers are reviewed by technical experts, such that all techniques reported in the paper are reviewed, using more than the traditional two reviewers if necessary.
   d. Publish reader comments, queries and challenges to manuscripts in an open forum.
   e. Publishers could pay for a group of independent monitors that carry out an annual survey of peer reviewed publications, grouped into various specialties, and publish annual league tables of compliance with sector-specific guidelines.
2. Reviewers
   a. Call to account papers without sufficient detail for an accurate review of the methods and data.
   b. Declare lack of expertise to the editor for any included techniques so that these can receive additional review.
3. Authors
   a. Prior to experimentation, review relevant standards databases and use the recommendations to guide the study design.
   b. Consult experts in each of the required techniques.
   c. Include as much experimental detail as possible in manuscripts, making use of supplementary information.

Meanwhile, we will just have to accept that many results published over the last 20 years or so will not stand up to detailed scrutiny and that it will take a while to improve the consistency and reliability of the scientific literature archive. At the same time, we offer a plea that these suggestions are accepted by the scientific community and that the concerns expressed in this editorial become redundant in the very near future. To assist this progression, we recommend that authors, reviewers and editors read the published proceedings of the symposium that analyses the challenges associated with tackling the reliability and reproducibility of biomedical research in the UK (http://www.acmedsci.ac.uk/policy/policy-projects/reproducibility-and-reliability-of-biomedical-research/).

References

1. Mobley A., Linder S.K., Braeuer R., Ellis L.M., Zwelling L. A survey on data reproducibility in cancer research provides insights into our limited ability to translate findings from the laboratory to the clinic. PLoS One. 2013;8:e63221. doi: 10.1371/journal.pone.0063221.
2. Begley C.G., Ellis L.M. Drug development: raise standards for preclinical cancer research. Nature. 2012;483:531–533. doi: 10.1038/483531a.
3. Prinz F., Schlange T., Asadullah K. Believe it or not: how much can we rely on published data on potential drug targets? Nat. Rev. Drug Discov. 2011;10:712. doi: 10.1038/nrd3439-c1.
4. Chalmers I., Bracken M.B., Djulbegovic B. How to increase value and reduce waste when research priorities are set. Lancet. 2014;383:156–165. doi: 10.1016/S0140-6736(13)62229-1.
5. Gunn I.P. Evidence-based practice, research, peer review, and publication. CRNA. 1998;9:177–182.
6. Bustin S.A. The reproducibility of biomedical research: sleepers awake! Biomol. Detect. Quantif. 2014;2:35–42. doi: 10.1016/j.bdq.2015.01.002.
7. Liumbruno G.M., Velati C., Pasqualetti P., Franchini M. How to write a scientific manuscript for publication. Blood Transfus. 2013;11:217–226. doi: 10.2450/2012.0247-12.
8. Kallet R.H. How to write the methods section of a research paper. Respir. Care. 2004;49:1229–1232.
9. Brazma A., Hingamp P., Quackenbush J. Minimum information about a microarray experiment (MIAME)-toward standards for microarray data. Nat. Genet. 2001;29:365–371. doi: 10.1038/ng1201-365.
10. Bustin S.A. Why there is no link between measles virus and autism. In: Fitzgerald M., editor. vol. I. Intech-Open Access Company; 2013. (Recent Advances in Autism Spectrum Disorders). pp. 81–98.
11. Bustin S.A. RT-qPCR and molecular diagnostics: no evidence for measles virus in the GI tract of autistic children. Eur. Pharm. Rev. Dig. 2008;1:11–16.
12. Fang F.C., Casadevall A. Retracted science and the retraction index. Infect. Immun. 2011;79:3855–3859. doi: 10.1128/IAI.05661-11.
13. Fang F.C., Steen R.G., Casadevall A. Misconduct accounts for the majority of retracted scientific publications. Proc. Natl. Acad. Sci. U. S. A. 2012;109:17028–17033. doi: 10.1073/pnas.1212247109.
14. Bustin S.A., Benes V., Garson J.A. The MIQE guidelines: minimum information for publication of quantitative real-time PCR experiments. Clin. Chem. 2009;55:611–622. doi: 10.1373/clinchem.2008.112797.
15. Bustin S.A., Benes V., Garson J. The need for transparency and good practices in the qPCR literature. Nat. Methods. 2013;10:1063–1067. doi: 10.1038/nmeth.2697.
16. Sykes P.J., Neoh S.H., Brisco M.J., Hughes E., Condon J., Morley A.A. Quantitation of targets for PCR by use of limiting dilution. Biotechniques. 1992;13:444–449.
17. Huggett J.F., Foy C.A., Benes V. The digital MIQE guidelines: minimum information for publication of quantitative digital PCR experiments. Clin. Chem. 2013;59:892–902. doi: 10.1373/clinchem.2013.206375.
18. Sultan M., Amstislavskiy V., Risch T. Influence of RNA extraction methods and library selection schemes on RNA-seq data. BMC Genom. 2014;15:675. doi: 10.1186/1471-2164-15-675.
19. Wu P.Y., Phan J.H., Wang M.D. Assessing the impact of human genome annotation choice on RNA-seq expression estimates. BMC Bioinform. 2013;14(Suppl. 11):S8. doi: 10.1186/1471-2105-14-S11-S8.
20. Seyednasrollah F., Laiho A., Elo L.L. Comparison of software packages for detecting differential expression in RNA-seq studies. Brief Bioinform. 2015;16:59–70. doi: 10.1093/bib/bbt086.
21. Li P., Piao Y., Shon H.S., Ryu K.H. Comparing the normalization methods for the differential analysis of illumina high-throughput RNA-Seq data. BMC Bioinform. 2015;16:347. doi: 10.1186/s12859-015-0778-7.
22. 't Hoen P.A., Friedländer M.R., Almlöf J. Reproducibility of high-throughput mRNA and small RNA sequencing across laboratories. Nat. Biotechnol. 2013;31:1015–1022. doi: 10.1038/nbt.2702.
23. Castel S.E., Levy-Moonshine A., Mohammadi P., Banks E., Lappalainen T. Tools and best practices for data processing in allelic expression analysis. Genome Biol. 2015;16:195. doi: 10.1186/s13059-015-0762-6.
24. Landt S.G., Marinov G.K., Kundaje A. ChIP-seq guidelines and practices of the ENCODE and modENCODE consortia. Genome Res. 2012;22:1813–1831. doi: 10.1101/gr.136184.111.
25. Bustin S. Transparency of reporting in molecular diagnostics. Int. J. Mol. Sci. 2013;14:15878–15884. doi: 10.3390/ijms140815878.
26. Anon. Announcement: reducing our irreproducibility. Nature. 2013;496:398.
27. Anon. Enhancing reproducibility. Nat. Methods. 2013;10:367. doi: 10.1038/nmeth.2471.
28. Anon. Raising reporting standards. Nat. Cell Biol. 2013;15:443.
29. Anon. Raising standards. Nat. Biotechnol. 2013;31:366.
30. Anon. Raising standards. Nat. Med. 2013;19:508.
31. Anon. Raising standards. Nat. Struct. Mol. Biol. 2013;20:533. doi: 10.1038/nsmb.2590.
32. Anon. Raising standards. Nat. Genet. 2013;45:467. doi: 10.1038/ng.2621.
33. Anon. Raising standards. Nat. Neurosci. 2013;16:517. doi: 10.1038/nn.3391.
34. Anon. Raising standards. Nat. Immunol. 2013;14:415. doi: 10.1038/ni.2603.
35. Anon. Journals unite for reproducibility. Nature. 2014;515:7. doi: 10.1038/515007a.
36. Silginer M., Burghardt I., Gramatzki D. The aryl hydrocarbon receptor links integrin signaling to the TGF-β pathway. Oncogene. 2015. doi: 10.1038/onc.2015.387. (in press)
37. Jing H., Liao L., An Y. Suppression of EZH2 prevents the shift of osteoporotic MSC fate to adipocyte and enhances bone formation during osteoporosis. Mol. Ther. 2015. doi: 10.1038/mt.2015.152.
38. Kim S.K., Joe Y., Chen Y. Carbon monoxide decreases interleukin-1β levels in the lung through the induction of pyrin. Cell. Mol. Immunol. 2015. doi: 10.1038/cmi.2015.79.
39. Grünewald T.G., Bernard V., Gilardi-Hebenstreit P. Chimeric EWSR1-FLI1 regulates the ewing sarcoma susceptibility gene EGR2 via a GGAA microsatellite. Nat. Genet. 2015;47:1073–1078. doi: 10.1038/ng.3363.
40. Xu C., Liberatore K.L., MacAlister C.A. A cascade of arabinosyltransferases controls shoot meristem size in tomato. Nat. Genet. 2015;47:784–792. doi: 10.1038/ng.3309.
41. DeNicola G.M., Chen P.H., Mullarky E. NRF2 regulates serine biosynthesis in non-small cell lung cancer. Nat. Genet. 2015. doi: 10.1038/ng.3421.
42. Dijkstra J.R., van Kempen L.C., Nagtegaal I.D., Bustin S.A. Critical appraisal of quantitative PCR results in colorectal cancer research: can we rely on published qPCR results? Mol. Oncol. 2014;8:813–818. doi: 10.1016/j.molonc.2013.12.016.
43. Tricarico C., Pinzani P., Bianchi S. Quantitative real-time reverse transcription polymerase chain reaction: normalization to rRNA or single housekeeping genes is inappropriate for human tissue biopsies. Anal. Biochem. 2002;309:293–300. doi: 10.1016/s0003-2697(02)00311-1.
44. Vandesompele J., De Paepe A., Speleman F. Elimination of primer-dimer artifacts and genomic coamplification using a two-step SYBR green I real-time RT-PCR. Anal. Biochem. 2002;303:95–98. doi: 10.1006/abio.2001.5564.
45. Whittle M.C., Izeradjene K., Rani P.G. RUNX3 controls a metastatic switch in pancreatic ductal adenocarcinoma. Cell. 2015;161:1345–1360. doi: 10.1016/j.cell.2015.04.048.
46. Park H.W., Kim Y.C., Yu B. Alternative wnt signaling activates YAP/TAZ. Cell. 2015;162:780–794. doi: 10.1016/j.cell.2015.07.013.
47. Saito Y., Chapple R.H., Lin A., Kitano A., Nakada D. AMPK protects leukemia-initiating cells in myeloid leukemias from metabolic stress in the bone marrow. Cell Stem Cell. 2015. doi: 10.1016/j.stem.2015.08.019. (in press)
48. Kubaczka C., Senner C.E., Cierlitza M. Direct induction of trophoblast stem cells from murine fibroblasts. Cell Stem Cell. 2015. doi: 10.1016/j.stem.2015.08.005. (in press)
49. Matkar S., Sharma P., Gao S. An epigenetic pathway regulates sensitivity of breast cancer cells to HER2 inhibition via FOXO/c-myc axis. Cancer Cell. 2015;28:472–485. doi: 10.1016/j.ccell.2015.09.005.
50. Stromnes I.M., Schmitt T.M., Hulbert A. T cells engineered against a native antigen can surmount immunologic and physical barriers to treat pancreatic ductal adenocarcinoma. Cancer Cell. 2015. doi: 10.1016/j.ccell.2015.09.022. (in press)
51. Pfister S.X., Markkanen E., Jiang Y., Sarkar S. Inhibiting WEE1 selectively kills histone H3K36me3-deficient cancers by dNTP starvation. Cancer Cell. 2015. doi: 10.1016/j.ccell.2015.09.015. (in press)
52. Vincent E.E., Sergushichev A., Griss T. Mitochondrial phosphoenolpyruvate carboxykinase regulates metabolic adaptation and enables glucose-independent tumor growth. Mol. Cell. 2015;60:195–207. doi: 10.1016/j.molcel.2015.08.013.
53. Elia A.E., Wang D.C., Willis N.A. RFWD3-dependent ubiquitination of RPA regulates repair at stalled replication forks. Mol. Cell. 2015;60:280–293. doi: 10.1016/j.molcel.2015.09.011.
54. de Wit E., Vos E.S., Holwerda S.J. CTCF binding polarity determines chromatin looping. Mol. Cell. 2015. doi: 10.1016/j.molcel.2015.09.023.
55. Begley C.G., Ioannidis J.P. Reproducibility in science: improving the standard for basic and preclinical research. Circ. Res. 2015;116:116–126. doi: 10.1161/CIRCRESAHA.114.303819.