Abstract

Recent research indicates that sensory and motor cortical areas play a significant role in the neural representation of concepts. However, little is known about the overall architecture of this representational system, including the role played by higher level areas that integrate different types of sensory and motor information. The present study addressed this issue by investigating the simultaneous contributions of multiple sensory-motor modalities to semantic word processing. With a multivariate fMRI design, we examined activation associated with 5 sensory-motor attributes—color, shape, visual motion, sound, and manipulation—for 900 words. Regions responsive to each attribute were identified using independent ratings of the attributes' relevance to the meaning of each word. The results indicate that these aspects of conceptual knowledge are encoded in multimodal and higher level unimodal areas involved in processing the corresponding types of information during perception and action, in agreement with embodied theories of semantics. They also reveal a hierarchical system of abstracted sensory-motor representations incorporating a major division between object interaction and object perception processes.

Keywords: concepts, embodiment, fMRI, lexical semantics, multimodal processing, semantic memory

Introduction

Recent research on the neural representation of concepts has largely focused on the roles of sensory and motor information. Since conceptual representations for objects (such as “chair”) and actions (such as “throw”) develop in large part through experiences involving perception and action, some current theories propose that comprehending concrete words involves reactivating the sensory-motor representations that provided the basis for the acquisition of the corresponding concepts (e.g., Barsalou 2008; Glenberg and Gallese 2012). The same process may apply to abstract words whose meanings are analyzed as metaphoric extensions from sensory-motor experience (Lakoff and Johnson 1980; Gibbs 2006).

Several lines of evidence support this account. Behavioral studies, for example, suggest that language comprehension involves mental simulations (reenactments) of perceptual and motor experiences (for reviews, see Fischer and Zwaan 2008; Meteyard and Vigliocco 2008). Neuroimaging studies also find that modality-specific cortical areas related to sensory and motor processing are involved in semantic processing (for reviews, see Binder and Desai 2011; Kiefer and Pulvermüller 2012; Meteyard et al. 2012). Furthermore, disorders affecting the motor system are associated with selectively impaired processing of action-related words and sentences (e.g., Buxbaum and Saffran 2002; Grossman et al. 2008; Fernandino et al. 2013a, 2013b).

These important findings nevertheless leave open questions about the involvement of sensory-motor association and multimodal integration areas in concept representation. During perceptual experiences, primary sensory cortices mainly receive input from sensory organs of a single modality (e.g., visual, auditory, somatosensory) and direct their output toward higher level areas that extract more specific kinds of information, such as color, shape, motion, and spatial location, among others. These areas, in turn, are connected to cortical regions that integrate information across different sensory modalities, resulting in more complex, multimodal representations. If word comprehension requires the reactivation of relevant sensory-motor representations, these integration areas should be differentially recruited depending not only on the salience of individual modalities, but also on the salience of different combinations of modalities.

In this article, we use the terms unimodal, bimodal, and multimodal to designate cortical areas that have been independently shown to play a role in integrating information originating primarily from 1, 2, or more than 2 modalities, respectively, during sensory-motor tasks. The existence of bimodal and multimodal integration areas is well established. Studies of monkeys and humans have demonstrated, for instance, the convergence of visual motion and sound information in the posterior dorsolateral temporal region (e.g., Bruce et al. 1981; Beauchamp et al. 2004; Werner and Noppeney 2010). The behavioral relevance of this convergence comes from the fact that many sounds, particularly those arising from biological sources, are produced by movement, and the integration of this correlated information into bimodal representations presumably provides an advantage in detecting, identifying, and/or behaving in response to a stimulus (Grant and Seitz 2000; Beauchamp et al. 2010). Similarly, the integration of correlated visual and haptic inputs in the lateral occipital cortex underlies the representation of object shape (e.g., Hadjikhani and Roland 1998; Amedi et al. 2001; Peltier et al. 2007). Another example is the distributed network underlying the representation of object-directed actions—involving the anterior portion of the supramarginal gyrus (SMG), the lateral temporo-occipital (LTO) cortex and the premotor cortex—which merges information from motor, proprioceptive, visual biological motion, and object shape representations (Johnson-Frey 2004; Caspers et al. 2010; Goodale 2011).

These integrative neural structures reflect the fact that experience typically involves multiple sensory-motor dimensions that are correlated in complex ways. In contrast, neuroimaging studies of concept embodiment typically focus on a single sensory-motor dimension (e.g., Hauk et al. 2004; Aziz-Zadeh and Wilson et al. 2006; Kiefer et al. 2008; Hsu et al. 2012) or on the contrast between 2 modalities (e.g., Simmons et al. 2007; Kiefer et al. 2012), using stimuli that load especially heavily on a single dimension (e.g., “thunder” is a concept learned almost entirely from auditory experience). These methods are well suited to assessing the involvement of unimodal cortical areas in language comprehension, but are less able to evaluate the contribution of multimodal integration areas. A fuller, more general characterization of the neural substrates of conceptual knowledge requires examining how sensory-motor information is activated at various levels of integration during concept retrieval. This, in turn, requires examining multiple dimensions simultaneously.

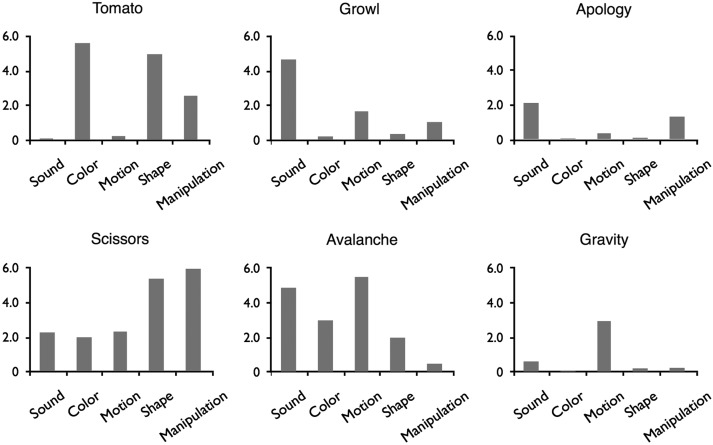

We addressed these issues using a novel approach that exploits the natural variation in salience of 5 attributes closely tied to sensory-motor experience—color, shape, manipulation (i.e., the extent to which an object affords physical manipulation), sound, and visual motion—in a sample of 900 common words (Fig. 1). The salience of these attributes to each word was assessed using ratings obtained from a large independent sample of individuals. These words were then used as stimuli in an fMRI experiment using an event-related design. The ratings were used in a multivariate regression model to identify brain regions responsive to each attribute or combination of attributes.

Figure 1.

Examples of words used in the study. Every word had a rating for each of the five semantic features.

It is important to stress that the 5 sensory-motor attributes selected for study do not correspond to 5 different modalities. While sound, color, and visual motion are modality-specific, shape knowledge involves at least 2 modalities (i.e., visual and somatosensory), and manipulation involves at least 3 (motor, visual, and somatosensory). However, each of these attributes is associated with relatively well-studied cortical networks, allowing clear hypotheses about brain areas that might be involved in their representation (for areas involved in color perception, see, e.g., Corbetta et al. 1991; Clark et al. 1997; McKeefry and Zeki 1997; Bartels and Zeki 2000; Wade et al. 2008; for visual shape perception networks, see, e.g., Bartels and Zeki 2000; Grill-Spector and Malach 2004; for areas involved in somatosensory shape perception, see Reed et al. 2004; Bodegård et al. 2001; Bohlhalter et al. 2002; Peltier et al. 2007; Miquée et al. 2008; Hömke et al. 2009; for visual motion areas, see, e.g., Grill-Spector and Malach 2004; Seiffert et al. 2003; for low- and high-level auditory perceptual systems, see Lewis et al. 2004; Rauschecker and Scott 2009; Leaver and Rauschecker 2010; for action execution, action observation, and object manipulation networks, see Lewis 2006; Caspers et al. 2010; Grosbras et al. 2012). Previous research also led to the expectation that some regions, such as the angular gyrus (Bonner et al. 2013), would respond to multiple attributes. Our main goal was to determine the extent to which concept retrieval involves brain areas previously implicated in integration of sensory-motor information.

As noted by several authors (e.g., Wittgenstein 1953/2010; Barsalou 1982; Kiefer and Pulvermüller 2012), the conceptual features activated by a word are not invariant, but are partially determined by contextual cues and current task goals. Thus, we should expect the patterns of cortical activation elicited by a word to be similarly context-dependent. In the present study, we explore the cortical activation patterns underlying the retrieval of concept-related sensory-motor features under a particular set of circumstances: the retrieval of a lexical concept, cued by a single word, with the goal of deciding “yes” or “no” to a question about its concreteness. Since these circumstances are the same for all concepts in the study, we can assume that differential activation to the words, averaged across participants, reflects semantic information that is consistently activated by those words in this particular context, and thus can be said to be part of their corresponding conceptual representations.

Materials and Methods

Participants

Participants were 44 healthy, native speakers of English (16 women; mean age 28.2 years, range 19–49 years) with no history of neurological or psychiatric disorders. All were right-handed (Edinburgh Handedness Inventory; Oldfield 1971). Participants were compensated for their participation and gave informed consent in conformity with the protocol approved by the Medical College of Wisconsin Institutional Review Board.

Stimuli

The stimuli were 900 words and 300 pseudowords (word-like nonwords). Pseudowords were included for purposes not relevant to the present study and will not be discussed in detail. The words were all familiar (mean CELEX frequency = 37.4 per million, SD = 118.5), primarily used as nouns, and 3–9 letters in length. Six hundred of the words were relatively concrete, and 300 were relatively abstract (see Supplementary Methods for details). The stimulus set was chosen to include a wide range of imageability/concreteness ratings and various semantic categories, such as manipulable objects, nonmanipulable objects, animals, emotions, professions, time spans, and events, although we did not attempt to distribute words equally across sensory-motor domains.

In a separate norming study, ratings of the relevance of the 5 attributes (sound, color, manipulation, visual motion, and shape) to the meaning of each word were obtained from 342 participants. Relevance was rated on a 7-point Likert scale ranging from “not at all relevant” to “very relevant” (see Supplemental Materials for complete instructions). Mean ratings for 6 representative words are shown in Figure 1. Means and standard deviations for each attribute are presented in Table 1, and histograms are presented in Supplementary Figure 1.

Table 1.

Means and standard deviations for the 5 conceptual attribute ratings

| Rating | Mean | SD |
|---|---|---|
| Sound | 2.00 | 1.66 |
| Color | 1.91 | 1.65 |
| Manipulation | 1.75 | 1.29 |
| Visual motion | 1.74 | 1.43 |
| Shape | 2.47 | 1.98 |

Procedure

In addition to the 1200 task trials (900 words, 300 pseudowords), 760 passive fixation events (+) were included to act as a baseline and provide jittering for the deconvolution analysis. The 1960 stimulus events were distributed across 10 imaging runs, with order pseudorandomized by participant.

On task trials, participants were required to decide whether the stimulus (word or pseudoword) referred to something that can be experienced through the senses, responding quickly by pressing one of 2 response keys. They were instructed to respond “no” for pseudowords. All participants responded with their right hand using the index and middle fingers.

Gradient-echo EPI images were collected in 10 runs of 196 volumes each. Twenty-three participants were scanned on a GE 1.5-T Signa MRI scanner (TR = 2000 ms, TE = 40 ms, 21 axial slices, 3.75 × 3.75 × 6.5 mm voxels), and the other 21 were scanned on a GE 3-T Excite MRI scanner (TR = 2000 ms, TE = 25 ms, 40 axial slices, 3 × 3 × 3 mm voxels). T1-weighted anatomical images were obtained using a 3D spoiled gradient-echo sequence with voxel dimensions of 1 × 1 × 1 mm.

Data Analysis

Preprocessing and statistical analyses of fMRI data were done with the AFNI software package (Cox 1996). EPI volumes were corrected for slice acquisition time and head motion. Functional volumes were aligned to the T1-weighted anatomical volume and transformed into standard space (Talairach and Tournoux 1988), resampled at 3-mm isotropic voxels, and smoothed with a 6-mm FWHM Gaussian kernel. Each voxel time series was then scaled to percent of mean signal level.
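The final scaling step can be illustrated with a minimal sketch that rescales one voxel's time series to percent of its own mean. The function name and the sample intensities are hypothetical, and this is not the AFNI command sequence used in the study.

```python
from statistics import fmean

def scale_to_percent_of_mean(time_series):
    """Rescale one voxel's time series so that its mean equals 100."""
    baseline = fmean(time_series)
    if baseline == 0:
        raise ValueError("mean signal is zero; voxel cannot be scaled")
    return [100.0 * value / baseline for value in time_series]

# Hypothetical raw EPI intensities for a single voxel (arbitrary scanner units).
raw = [980.0, 1000.0, 1020.0, 1000.0]
scaled = scale_to_percent_of_mean(raw)
```

After this scaling, a regression coefficient can be read as a signal change in percent of the voxel's mean, which makes values comparable across voxels and scanners.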

Individual time courses were analyzed with a generalized least squares (GLS) regression model. The goal was to identify brain regions associated with each attribute on the basis of correlations between activity levels and attribute relevance ratings across all 900 words (for a similar approach, see Hauk, Davis and Pulvermüller et al. 2008; Kiefer et al. 2008). The ratings were converted into z-scores and included as simultaneous predictor variables in the regression model (see Supplementary Methods). We performed a random-effects analysis at the group level to test the significance of the regression parameters at each voxel. Activation maps were thresholded at a combined P < 0.005 at the voxel level and minimum cluster size of 864 mm³, resulting in an alpha threshold of P < 0.05 corrected for multiple comparisons.
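The idea of entering z-scored ratings as simultaneous predictors can be sketched as follows. This is a simplified illustration, not the model used in the study: ordinary least squares stands in for GLS (which additionally allows correlated errors), hemodynamic modeling is omitted, only 2 attributes are shown, and the per-word response amplitudes are made up. All function names are hypothetical, and the use of the population standard deviation for z-scoring is an assumption.

```python
from statistics import fmean, pstdev

def zscore(values):
    """Center to mean 0 and scale to unit (population) standard deviation."""
    mu, sigma = fmean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x

def fit_ols(predictors, response):
    """Fit response on an intercept plus all predictors at once (normal equations)."""
    columns = [[1.0] * len(response)] + predictors
    xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in columns] for ci in columns]
    xty = [sum(p * y for p, y in zip(ci, response)) for ci in columns]
    return solve(xtx, xty)

# Hypothetical ratings for six words on two attributes.
z_shape = zscore([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
z_sound = zscore([2.0, 1.0, 4.0, 3.0, 6.0, 5.0])

# Made-up per-word response amplitudes built from known weights, so the
# fit should recover an intercept of 100 and slopes of 0.5 and -0.2.
response = [100.0 + 0.5 * a - 0.2 * b for a, b in zip(z_shape, z_sound)]
beta = fit_ols([z_shape, z_sound], response)
```

Because both predictors are in the model together, each slope reflects only the variance that its attribute does not share with the other, which is the sense in which the predictors are "simultaneous".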

We also conducted 2 additional analyses. The first was identical to the original analysis except that abstract words were coded separately, so that only the concrete words contributed to the attribute maps. The second differed from the original analysis in that the attribute ratings were converted to ranks prior to entering the regression model. This procedure eliminated any differences in the distribution of the ratings by imposing a flat distribution on all of them, although at the cost of distorting their relative values.
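The rank conversion used in the second analysis can be sketched as below. The handling of ties (tied ratings share the mean of their rank positions) is an assumption, since the text does not specify it, and the function name and ratings are hypothetical.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of positions start..end, 1-based
        for position in range(start, end + 1):
            ranks[order[position]] = shared
        start = end + 1
    return ranks

# Hypothetical mean relevance ratings for five words on one attribute.
ratings = [0.2, 5.8, 0.2, 3.1, 6.0]
ranks = average_ranks(ratings)
```

In this toy case the ratings 5.8 and 6.0 end up exactly one rank apart, the same spacing as 3.1 and 5.8, which illustrates both the flattening of the distribution and the distortion of relative values mentioned above.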

In the full-model analysis, all 5 semantic attributes were included in a single regression model. This analysis identified voxels where activity was correlated with a given attribute rating after accounting for the influence of all other attributes (i.e., activations driven by the unique variance associated with each attribute). Since collinearity can mask the contribution of highly correlated variables (Supplementary Table 1), we also performed 5 leave-one-out analyses (i.e., analyses where one of the attribute ratings was left out of the regression model), to identify activations driven by variance that is shared between 2 correlated attributes. High correlations were observed between the ratings for shape and color (r = 0.76), shape and manipulation (r = 0.58), shape and visual motion (r = 0.53), and sound and visual motion (r = 0.59). The results of interest from the leave-one-out analyses were the attribute maps for one member of these highly correlated pairs (e.g., shape) when the other member (e.g., color) was left out of the analysis.
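The logic of checking for collinearity and then defining the reduced models can be sketched as follows. The ratings are made up for illustration, the 0.5 cutoff is a hypothetical choice rather than a criterion stated in the text, and all function names are assumptions.

```python
from itertools import combinations
from math import sqrt
from statistics import fmean

ATTRIBUTES = ["color", "shape", "manipulation", "sound", "visual motion"]

def pearson_r(x, y):
    """Pearson correlation between two equally long rating vectors."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def correlated_pairs(ratings, threshold):
    """List attribute pairs whose ratings correlate at or above the threshold."""
    return [(a, b) for a, b in combinations(ratings, 2)
            if abs(pearson_r(ratings[a], ratings[b])) >= threshold]

def leave_one_out_models(attributes):
    """Map each left-out attribute to the predictors kept in that reduced model."""
    return {left_out: [a for a in attributes if a != left_out]
            for left_out in attributes}

# Hypothetical ratings for six words on three of the attributes.
toy_ratings = {
    "shape": [5.5, 4.8, 0.3, 0.5, 3.9, 0.2],
    "color": [4.9, 4.1, 0.2, 0.9, 3.0, 0.4],
    "sound": [0.4, 3.0, 0.1, 5.2, 0.3, 2.5],
}
pairs = correlated_pairs(toy_ratings, threshold=0.5)
models = leave_one_out_models(ATTRIBUTES)
```

For a flagged pair such as shape and color, the map of interest would be the shape map from the model in `models["color"]`, where shape can absorb the variance it shares with the omitted color rating.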

To further characterize activations driven by shared variance between attributes, we conducted another set of analyses where only one of the 5 attributes was included in the regression model at a time, resulting in 5 activation maps. Each map was thresholded at a combined P < 0.005 at the voxel level and a minimum cluster size of 864 mm³ (corrected P < 0.05). The 5 maps were binarized and multiplied together to compute a conjunction map, which identified regions where activation was modulated by all 5 semantic attributes. We also generated a map showing regions activated exclusively by each attribute as well as regions activated by 2, 3, or 4 attributes.
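The conjunction and overlap computations can be sketched on toy data as below. The sketch assumes each map has already been thresholded and cluster-corrected, so that surviving voxels hold a nonzero statistic and all others hold 0; maps are flattened to short lists, and the values and function names are hypothetical.

```python
from math import prod

def binarize(stat_map):
    """Mark voxels that survived thresholding (nonzero) with 1, others with 0."""
    return [1 if value != 0 else 0 for value in stat_map]

def conjunction(binary_maps):
    """Voxelwise product: 1 only where every binary map is 1."""
    return [prod(voxel) for voxel in zip(*binary_maps)]

def overlap_count(binary_maps):
    """Voxelwise sum: the number of attributes that modulate each voxel."""
    return [sum(voxel) for voxel in zip(*binary_maps)]

# Hypothetical thresholded t-maps over four voxels, one map per attribute.
thresholded = {
    "color":         [4.1, 0.0, 3.2, 0.0],
    "shape":         [5.0, 3.9, 0.0, 0.0],
    "manipulation":  [3.5, 0.0, 0.0, 0.0],
    "sound":         [4.4, 0.0, 0.0, 0.0],
    "visual motion": [3.8, 3.3, 0.0, 0.0],
}
binary_maps = [binarize(m) for m in thresholded.values()]
conj = conjunction(binary_maps)
counts = overlap_count(binary_maps)
```

In this toy example only the first voxel survives the conjunction, while the overlap counts separate voxels modulated by 5, 2, 1, and 0 attributes; a count of 1 corresponds to a region activated exclusively by one attribute.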

Finally, we conducted a principal components analysis on the attribute ratings and used the 5 resulting components as simultaneous predictors in a GLS regression model of the BOLD signal.

Results and Discussion

Participants performed a semantic decision task (Does the item refer to something that can be experienced with the senses?) during fMRI. Mean accuracy was 0.84 for words and 0.96 for pseudowords, showing that participants attended closely to the task.

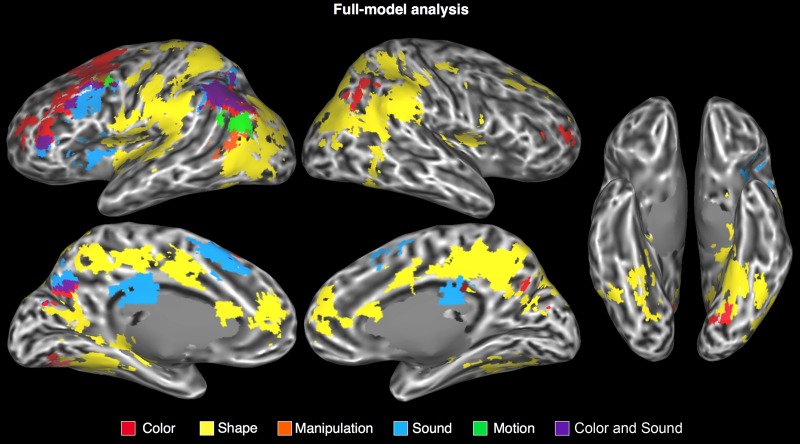

As discussed in detail below, the cortical areas where activity was modulated by a particular semantic attribute included unimodal, bimodal, and multimodal areas involved in the processing of that attribute during sensory-motor experience (Table 2, Figs 2 and 3, Supplementary Table 2). For all attributes, activations were strongly left-lateralized.

Table 2.

Results of the full-model analysis

| Region | Cluster size (mm³) | x | y | z | Max. t |
|---|---|---|---|---|---|
| Shape | | | | | |
| Bilateral parietal lobe/posterior medial area | 90 045 | | | | |
| L anterior SMG | | −53 | −29 | 28 | 4.89 |
| L posterior cingulate/precuneus | | −16 | −34 | 38 | 5.74 |
| L dorsal postcentral sulcus | | −22 | −49 | 56 | 6.12 |
| L ventral postcentral sulcus | | −40 | −34 | 29 | 5.71 |
| L ventral precentral sulcus | | −49 | −1 | 14 | 5.07 |
| L postcentral gyrus | | −49 | −13 | 47 | 5.02 |
| R angular gyrus/SMG | | 56 | −52 | 26 | 8.29 |
| R anterior SMG | | 50 | −34 | 35 | 6.03 |
| R caudal IPS | | 35 | −76 | 23 | 6.39 |
| R posterior cingulate/precuneus | | 11 | −46 | 38 | 7.00 |
| R dorsal postcentral sulcus | | 22 | −50 | 46 | 5.51 |
| Bilateral anterior cingulate gyrus | 7236 | | | | |
| L anterior cingulate gyrus | | −4 | 48 | 11 | 5.43 |
| R anterior cingulate gyrus | | 8 | 47 | 11 | 5.63 |
| L ventral temporal/lateral occipital | 25 164 | | | | |
| L fusiform gyrus | | −34 | −52 | −7 | 7.84 |
| L parahippocampal gyrus | | −28 | −40 | −9 | 7.23 |
| L hippocampus | | −29 | −25 | −9 | 5.00 |
| L lateral occipital cortex | | −43 | −61 | 2 | 5.40 |
| L caudal IPS | | −28 | −79 | 17 | 6.75 |
| L parieto-occipital sulcus | 2106 | −13 | −55 | 11 | 4.32 |
| R temporal lobe | 5589 | | | | |
| R fusiform gyrus | | 41 | −49 | −10 | 4.50 |
| R hippocampus | | 26 | −34 | −1 | 4.42 |
| R superior frontal sulcus | 1971 | 19 | 37 | 35 | 4.51 |
| R parietal operculum | 1863 | 37 | −4 | 17 | 4.50 |
| R putamen | 1539 | 29 | −19 | 2 | 5.00 |
| Color | | | | | |
| L superior frontal sulcus | 16 605 | −22 | 11 | 47 | 6.55 |
| L angular gyrus | 8775 | −37 | −64 | 38 | 7.64 |
| L parieto-occipital sulcus | 1485 | −10 | −67 | 23 | 4.43 |
| L posterior collateral sulcus | 999 | −16 | −70 | −7 | 4.52 |
| R angular gyrus | 1701 | 41 | −61 | 35 | 4.64 |
| R middle frontal gyrus | 945 | 32 | 38 | 11 | 4.22 |
| Manipulation | | | | | |
| L lateral temporal-occipital junction | 1215 | −52 | −58 | 5 | 3.79 |
| Sound | | | | | |
| L angular gyrus/IPS | 7209 | −34 | −61 | 41 | 6.11 |
| L precentral sulcus | 4320 | −37 | 7 | 35 | 5.67 |
| L medial superior frontal gyrus | 4266 | −4 | 20 | 44 | 5.30 |
| L posterior callosal sulcus | 2808 | −7 | −19 | 29 | 5.64 |
| L parieto-occipital sulcus | 1728 | −7 | −70 | 35 | 5.64 |
| L anterior insula | 1512 | −31 | 17 | 2 | 4.75 |
| L inferior frontal gyrus | 1161 | −40 | 38 | 8 | 5.52 |
| Visual motion | | | | | |
| L angular gyrus/STS | 1809 | −52 | −64 | 17 | 4.43 |

Note: Cluster sizes are reported in mm³. Activation maps were thresholded at P < 0.005 at the voxel level and minimum cluster size of 864 mm³ (corrected P < 0.05). For large clusters spanning multiple regions, local maxima are listed separately. Coordinates are given according to the Talairach and Tournoux (1988) atlas.

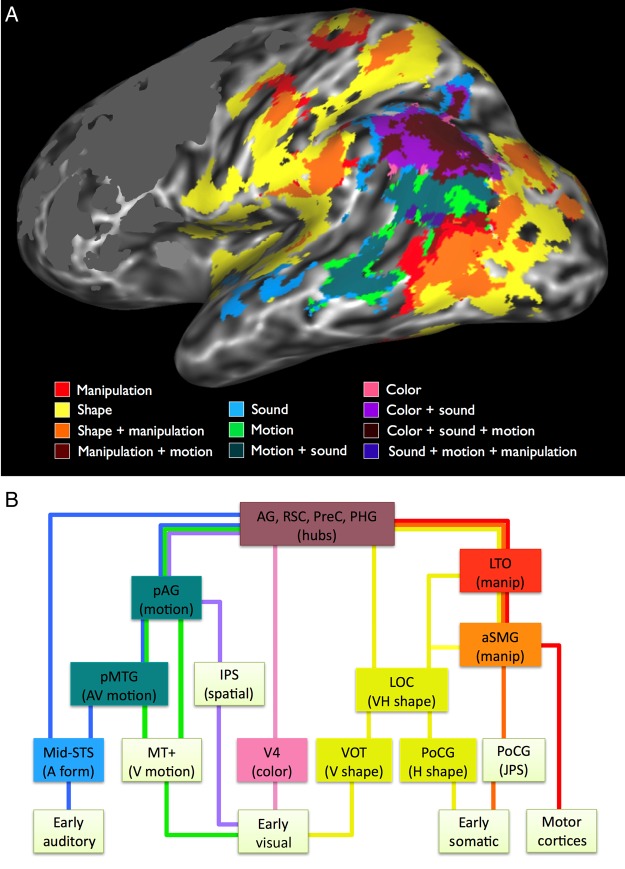

Figure 2.

Areas in which the BOLD signal was modulated by the attribute ratings in the full-model analysis, displayed on an inflated cortical surface. Intermediary colors designate overlapping activations.

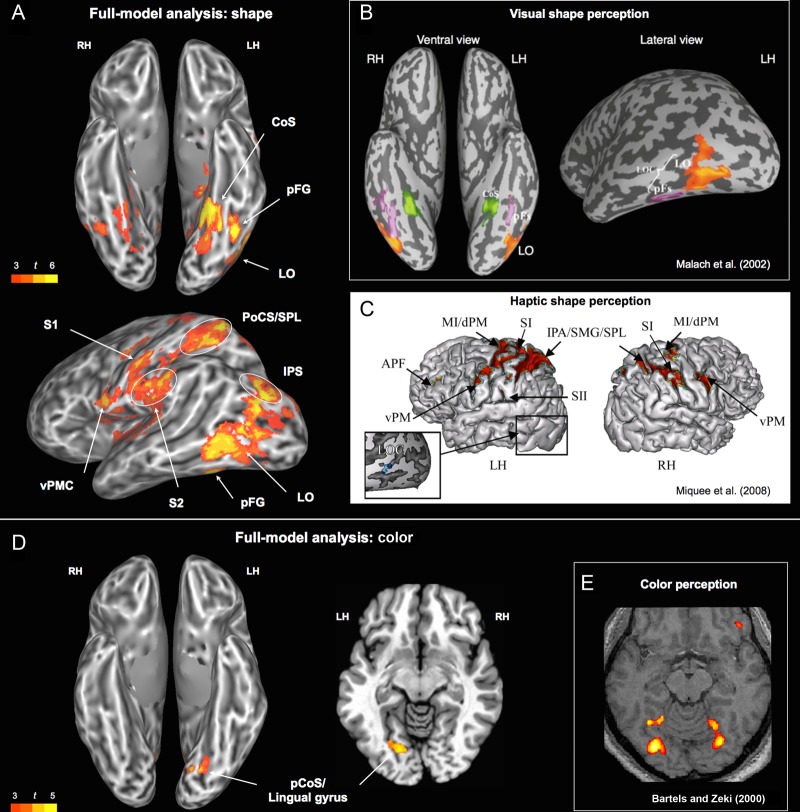

Figure 3.

(A) Areas associated with the shape rating. (B) Areas involved in visual analysis of objects, buildings, and scenes. Adapted and reprinted from Malach et al. (2002). (C) Activation patterns related to haptic shape encoding. Adapted and reprinted from Miquée et al. (2008). (D) Areas associated with the color rating (left: ventral view; right: horizontal slice at z = −7). (E) Areas activated in a color perception task (colored Mondrians > grayscale Mondrians). Adapted and reprinted from Bartels and Zeki (2000). CoS, collateral sulcus; pCoS, posterior collateral sulcus; pFG, posterior fusiform gyrus; IPS, intraparietal sulcus; LO, lateral occipital; LOC, lateral occipital complex; vPMC, ventral premotor cortex; PoCS, postcentral sulcus; S1, primary somatosensory; S2, secondary somatosensory; SMG, supramarginal gyrus; SPL, superior parietal lobule.

Shape

In the full-model analysis (Fig. 2), the shape attribute robustly modulated activity in ventral stream areas involved in the analysis of visual shape (Fig. 3A,B), including the ventral occipitotemporal (VOT) cortex and the lateral occipital complex (LOC). There was also strong shape-related activation in the superior occipital gyrus and adjacent posterior intraparietal sulcus (IPS), a region previously found to respond more strongly to pictures of objects than to pictures of faces or scenes (Grill-Spector and Malach 2004).

Shape also modulated activity in a set of regions involved in processing haptic and visuo-tactile information from the hand (Stoeckel et al. 2004; Gentile et al. 2013), including the postcentral gyrus, the junction of the postcentral sulcus with the superior parietal lobule (PoCS/SPL), anterior SMG, parietal operculum, insula, and ventral premotor cortex (Fig. 3A,C). All of these areas have been implicated in the haptic perception of object shape in neuroimaging (e.g., Bodegård et al. 2001; Reed et al. 2004; Peltier et al. 2007; Miquée et al. 2008) and focal lesion studies (Bohlhalter et al. 2002; Hömke et al. 2009). The association of these regions with the shape rating suggests that the conceptual representation of object shape is inherently bimodal, grounded in both visual and haptic representations. In fact, several studies show that a ventral subregion of the LOC (LOtv) responds to shape information from both modalities, and thus can be characterized as a bimodal shape area (e.g., Amedi et al. 2002; Reed et al. 2004; Miquée et al. 2008).

A set of higher level, paralimbic areas on the medial wall also showed modulation by the shape attribute, namely the precuneus/posterior cingulate gyrus, the anterior cingulate, and the rostral cingulate/medial prefrontal cortex (Supplementary Fig. 5). These areas have been associated with a variety of cognitive processes, including general semantic processing (Binder et al. 2009), mental scene construction and visuo-spatial imagery (Cavanna and Trimble 2006; Hassabis et al. 2007; Schacter and Addis 2009; Spreng et al. 2009), self-referential processing (Gusnard et al. 2001), and autobiographical memory (Svoboda et al. 2006; Spreng et al. 2009), and may play a role in multimodal integration of sensory-motor features, contextual binding, and/or control processes involved in concept retrieval (see Conjunction Analysis and Semantic “Hubs” section).

Due to the high correlation between shape and color ratings (r = 0.76), an analysis was conducted to identify any additional regions that show activity changes correlated with the shape attribute when color is removed from the model. The results were very similar to the full model, but with additional involvement of bilateral primary and secondary visual cortices in the calcarine sulcus, lingual gyrus, cuneus, posterior fusiform gyrus, and posterior parahippocampus (Supplementary Table 2 and Fig. 5).

Although the shape rating was also highly correlated with manipulation (r = 0.58) and with visual motion (r = 0.53), excluding each of these predictors in turn from the model did not significantly change the shape map. Having shape as the only predictor in the model (i.e., all other attributes excluded) revealed an additional small activation cluster in the left angular gyrus (Supplementary Fig. 6).

Color

In the full-model analysis, the color attribute modulated activity in the left ventromedial occipital cortex at the junction of lingual and fusiform gyri (Fig. 3D). This region is part of the ventral visual pathway, and has been strongly implicated in color perception (e.g., Beauchamp et al. 1999; Bartels and Zeki 2000). This finding suggests that retrieval of concepts associated with salient color attributes involves the activation of perceptually encoded color information. This conclusion is supported by 2 previous studies that also found that the ventral occipital cortex was activated by words associated with color information (Simmons et al. 2007; Hsu et al. 2012). Other regions modulated by color in the full-model analysis included the angular gyrus, parieto-occipital sulcus, and dorsal prefrontal cortex.

Due to the high correlation between color and shape ratings, an additional analysis was conducted to identify regions that show activity changes correlated with the color attribute when shape ratings are removed from the model. Most of the areas activated by shape in the full-model analysis showed modulation by color in the shape-excluded analysis (Supplementary Table 2 and Fig. 5). Additionally, the same primary and secondary visual areas (calcarine sulcus, lingual gyrus, cuneus) that had shown modulation by shape in the color-excluded analysis showed modulation by color in the shape-excluded analysis (Supplementary Fig. 5). Thus, both the color and the shape ratings explained a significant amount of the variance in activity in early visual areas, independent of the other attribute ratings. These results did not change when color was the only predictor in the model.

Manipulation

A single cluster located at the junction of the left posterior middle temporal gyrus (pMTG) and anterior occipital cortex was modulated by the manipulation rating in the full-model analysis (Fig. 2). This activation, which we label LTO cortex (Talairach coordinates x = −52, y = −58, z = 5), is anterior and lateral to the typical location of visual motion area MT+ (x = −47, y = −76, z = 2; Dumoulin et al. 2000). LTO is part of the action representation network, showing particularly strong responses to the observation and imitation of object-directed hand actions (Caspers et al. 2010; Grosbras et al. 2012), as well as to motor imagery and pantomime of tool use (Lewis 2006). Although the left LTO has sometimes been regarded as an area specialized for the visual processing of object motion (e.g., Martin and Chao 2001; Beauchamp and Martin 2007), the evidence better supports a characterization based on multimodal representations of hand actions. First, this area is equally activated by pictures of hands and pictures of tools (x = −49, y = −65, z = −2; Bracci et al. 2012). Second, at least 3 separate studies have shown motor-related activation in this region when subjects performed unseen hand actions (Peelen et al. 2005; Aziz-Zadeh and Koski et al. 2006; Molenberghs et al. 2010). In one such study (Aziz-Zadeh and Koski et al. 2006), this region (x = −58, y = −58, z = 6) was more strongly activated during execution of an unseen hand action than during passive observation of the same action. Third, activity in the LTO reliably discriminates between different types of hand actions, regardless of whether participants watch videos of the actions or perform the actions themselves without visual feedback (x = −53, y = −56, z = 3; Oosterhof et al. 2010). Finally, functional connectivity analyses have shown that the hand/tool-selective area in the LTO is more strongly connected to fronto-parietal areas involved in action processing, including anterior SMG (aSMG), IPS, and premotor cortex, than are neighboring areas LO and the extra-striate body area (Bracci et al. 2012; Peelen et al. 2013).

The left LTO has also been linked with processing of action concepts in a variety of language studies. Kable et al. (2002) identified this region as the only site responding more strongly to action-related than to nonaction-related words, and Simmons et al. (2007) identified the LTO (x = −57, y = −53, z = 7) as the only region activated by verification of motor properties of named objects relative to verification of color properties. The LTO region identified in our study partially overlaps with the area modulated by “action-relatedness” ratings in the study by Hauk, Davis and Kherif et al. (2008).

Our results are also in agreement with 2 activation likelihood estimation meta-analyses of action-related language processing. Binder et al. (2009) examined studies that contrasted words for manipulable artifacts with words for living things (total of 29 foci), or words representing action knowledge relative to other types of knowledge (40 foci), and found significant activation overlap for action-related semantics only in the left LTO and the left SMG. Coordinates for the LTO in that analysis (x = −54, y = −62, z = 4) are almost identical to those in the current study. Likewise, Watson et al. (2013) found the largest overlap of activation peaks for action-related concepts in the LTO.

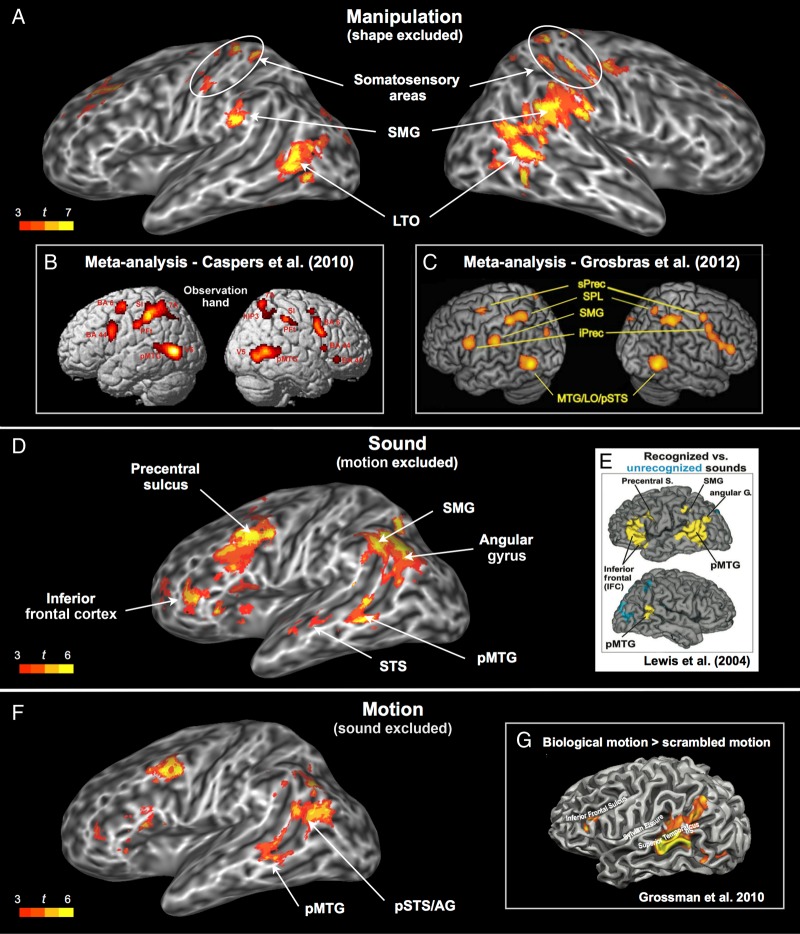

Ratings for manipulation and shape were highly correlated, reflecting the fact that manipulable objects also have characteristic shapes. When the shape attribute was left out of the regression model, other putative nodes of the action representation network also showed an effect of manipulation, namely the right LTO, bilateral anterior SMG, bilateral somatosensory cortex, and the right precentral gyrus (Fig. 4A). The anterior SMG is a multimodal sensory-motor area involved in the planning and control of object-directed hand actions, as evidenced by lesion and neuroimaging studies (see Desai et al. 2013 for a review). Damage to this region can lead to performance impairments during skilled motor actions, gesture imitation, tool use, and execution of an appropriate action in response to a visual object (Buxbaum et al. 2005; Goldenberg and Spatt 2009). It is activated by planning tool-directed actions relative to planning random movements (Johnson-Frey et al. 2005) as well as by object grasping relative to pointing (Frey et al. 2005). Additional areas modulated by manipulation when shape was removed from the analysis included several of the same medial wall regions (medial prefrontal cortex, precuneus) modulated by shape and color, as well as parahippocampus and VOT areas modulated by both shape and color. The analysis in which manipulation was the only predictor in the model revealed additional small activations in the angular gyrus, opercular cortex, retrosplenial cortex, and lingual gyrus (Supplementary Fig. 6).

Figure 4.

(A) Areas associated with the manipulation rating when shape was left out of the regression model. (B) ALE meta-analysis for observation of hand actions, adapted and reprinted from Caspers et al. (2010). (C) ALE meta-analysis for observation of object-directed hand movements, adapted and reprinted from Grosbras et al. (2012). (D) Areas associated with the sound rating when visual motion was left out of the regression model. (E) Areas activated during perception of environmental sounds relative to temporally reversed versions of the same sounds, adapted and reprinted from Lewis et al. (2004). (F) Areas associated with the visual motion rating when sound was left out of the regression model. (G) Areas involved in perception of biological motion, adapted and reprinted from Grossman et al. (2010). AG, angular gyrus; LO, lateral occipital; LTO, lateral temporal-occipital junction; MTG, middle temporal gyrus; pMTG, posterior MTG; iPrec, inferior precentral gyrus; sPrec, superior precentral sulcus; SMG, supramarginal gyrus; SPL, superior parietal lobule; STS, superior temporal sulcus; pSTS, posterior STS.

Overall, the activations associated with the manipulation rating show that word-cued retrieval of concepts associated with hand actions recruits a distributed action representation network also involved in processing shape information, as well as cortex in the left LTO that is more specifically involved in object manipulation.

Sound

The sound attribute was associated with robust activation of the left ventrolateral prefrontal cortex (VLPFC), which has been repeatedly implicated in nonspatial auditory processing during perception, imagery, and recall tasks (e.g., Wheeler et al. 2000; Arnott et al. 2004; Lewis et al. 2004). In monkeys, the VLPFC contains auditory neurons tuned to complex sound features, including those that distinguish between categories of conspecific vocalizations (see Romanski and Averbeck 2009, for a review).

The full-model analysis found no effects of sound in primary or association auditory cortices after activations were corrected for multiple comparisons. The absence of an effect in early auditory cortex (e.g., Heschl's gyrus) is in agreement with previous studies focused on higher level aspects of acoustic representation (Wheeler et al. 2000; Lewis et al. 2004). We did observe subthreshold activation for sound in the left lateral temporal cortex, extending from the middle portion of the STS to the pMTG, and this cluster reached significance when the rating for motion (which correlated with sound at r = 0.59) was left out of the model (Fig. 4D; Supplementary Table 2). The middle and anterior portions of the STG and STS are considered part of the auditory ventral stream, dedicated to nonspatial auditory object perception (Arnott et al. 2004; Zatorre et al. 2004). The pMTG site has also been implicated in high-level auditory processing in several studies. Lewis et al. (2004), for example, found that this region responded more strongly to recognizable environmental sounds than to temporally reversed (and therefore unrecognizable) versions of the same sounds. Kiefer et al. (2008) obtained a similar result by comparing sounds produced by animals and artifacts against amplitude-modulated colored noise. Thus, these activations provide evidence that high-level auditory representations are activated when concepts containing auditory-related features are retrieved.
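The effect of leaving a correlated rating out of the model can be illustrated with a toy simulation. The sketch below is not the analysis pipeline used in this study: it assumes synthetic data, a single hypothetical voxel, and plain ordinary least squares, with two predictors correlated at about r = 0.59 (the value reported above for sound and motion). The function name `ols_t_values` and the effect size of 0.15 are assumptions made for illustration only.

```python
import numpy as np

def ols_t_values(X, y):
    """Return OLS t statistics for each column of X (an intercept is added internally)."""
    n = X.shape[0]
    design = np.column_stack([np.ones(n), X])
    beta, _, _, _ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    dof = n - design.shape[1]
    sigma2 = resid @ resid / dof                      # residual variance estimate
    cov = sigma2 * np.linalg.inv(design.T @ design)   # covariance of the estimates
    return (beta / np.sqrt(np.diag(cov)))[1:]         # drop the intercept's t value

rng = np.random.default_rng(0)
n_words = 900

# Two synthetic ratings with a population correlation of 0.59.
motion = rng.standard_normal(n_words)
sound = 0.59 * motion + np.sqrt(1 - 0.59**2) * rng.standard_normal(n_words)

# Hypothetical voxel that responds equally to both ratings (assumed effect size).
signal = 0.15 * (sound + motion) + rng.standard_normal(n_words)

# Full model: both ratings compete for the variance they share.
t_full = ols_t_values(np.column_stack([sound, motion]), signal)

# Reduced model: motion is left out, so sound absorbs the shared variance.
t_sound_only = ols_t_values(sound[:, None], signal)

print("t(sound), full model:   ", t_full[0])
print("t(sound), motion removed:", t_sound_only[0])
```

In this simulation the voxel responds to variance shared by both ratings, so the t value for sound tends to be larger in the reduced model than in the full model. This mirrors how a subthreshold cluster can reach significance once a correlated rating is removed.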

The role of the pMTG in processing sound-related concepts is also supported by studies that used sound-related words as stimuli. In the study by Kiefer et al. (2008), this was the only region where activation was specific to auditory concepts during a lexical decision task. Also using lexical decision, Kiefer et al. (2012) found the pMTG to be significantly activated by auditory conceptual features, but not by action-related features. Interestingly, this pattern was reversed in a more posterior region of the left pMTG, which corresponds to the area that was modulated by manipulation in the present study and that we have named LTO. Finally, Trumpp, Kliese et al. (2013) described a patient with a circumscribed lesion in the left pSTG/pMTG who displayed a specific deficit (slower response times and higher error rate) in visual recognition of sound-related words.

A novel finding of the current study, enabled by the simultaneous assessment of multiple attributes, is that the same pMTG area modulated by sound-related meaning is also modulated by meaning related to visual motion. As discussed in the next section, this overlap, coupled with the known role of this region in audiovisual integration during perception, suggests the activation of bimodal audiovisual representations during concept retrieval (see Beauchamp 2005 for a review of audiovisual integration in the lateral temporal lobe).

The other areas associated with the sound attribute were the precuneus and the angular gyrus, both of which have been proposed to function as high-level cortical hubs (Buckner et al. 2009; Sepulcre et al. 2012). The analysis in which sound was the only predictor in the model revealed additional activations in the retrosplenial cortex, parahippocampal gyrus, and postcentral gyrus (Supplementary Fig. 6).

Visual Motion

Contrary to our expectations, the typical location of area MT+ (i.e., the junction of the inferior temporal sulcus with the middle occipital gyrus) was not modulated by the visual motion ratings, even at more lenient thresholds. Instead, the full-model analysis identified motion-associated activity in a more dorsal region located in the ventrolateral portion of the left angular gyrus (Fig. 2). Studies by Chatterjee and colleagues indicate that the left angular gyrus is involved in representing spatial aspects of event- and motion-related concepts (Chatterjee 2008; Chen et al. 2008; Kranjec et al. 2012), including information regarding path of motion through space (Wu et al. 2008). This region is also adjacent to cortex in the IPS that is known to encode spatial information (Gottlieb 2007). Together, these data suggest that the visual motion rating may load primarily on information associated with characteristic motion patterns in space.

There was also subthreshold activity in the left pMTG, which became significant when the sound rating was left out of the regression model (Fig. 4F). This area overlapped almost exactly with the one modulated by sound when visual motion was left out of the model (Fig. 4D). In principle, this overlap could reflect the indirect activation of modality-specific information (e.g., visual motion) triggered by processing in a different but highly associated modality (e.g., auditory motion). In this scenario, the pMTG region activated by both sound and visual motion would encode information in only one of these two modalities (i.e., its representations of motion would have emerged, in the course of human development, from inputs from a single sensory modality). Alternatively, this finding may reflect the activation of truly bimodal representations, formed by the integration of visual and auditory inputs during perception of moving stimuli. The extensive literature on multisensory integration in both humans and animals lends support to the latter interpretation, as several studies have found that activations elicited in the posterior lateral temporal cortex during bimodal stimulation were stronger than those elicited by either modality alone, even at the level of single neurons (for reviews, see Amedi et al. 2005; Beauchamp 2005; Ghazanfar and Schroeder 2006; Doehrmann and Naumer 2008). The pMTG site identified in the present study is adjacent to and overlaps with the posterior STS/pMTG area that has been associated with perception of biological motion (Grossman and Blake 2002; Kontaris et al. 2009), with audiovisual integration during identification of animals and manipulable objects (Beauchamp et al. 2004) and with audiovisual integration in speech perception studies, particularly in relation to the McGurk effect (Miller and D'Esposito 2005; Beauchamp et al. 2010). 
Thus, the pMTG appears to be a sensory integration area where auditory and visual information are combined into bimodal representations of object motion. By combining visual and auditory signals into a common code, this pMTG site may play a role in capturing correlated changes in visual space and auditory spectral domains, as is the case in speech and many other dynamic environmental (particularly biological) phenomena.

The absence of a visual motion effect in the typical location of area MT+ suggests, at least in the context of our task, that the processing of motion-related aspects of word meaning does not rely on cortical areas specialized for processing low-level visual motion information. Our negative finding regarding MT+ is consistent with prior studies that contrasted motion-related words or sentences with matched stimuli unrelated to motion (Kable et al. 2002, 2005; Bedny et al. 2008; Humphreys et al. 2013) and also found activations in the pMTG/pSTS but not in MT+ itself. Kable et al. (2005) argued for a continuum of abstraction for motion information going from MT+ toward the MTG and perisylvian areas. Our data support this proposal insofar as the temporal lobe processing of visual motion is concerned, and they also identify a previously unreported posterior angular gyrus region involved in representing motion-related conceptual information.

Leaving shape (r = 0.53) out of the model did not significantly change the results for visual motion. When visual motion was the only predictor in the model, additional activations were found in angular gyrus, STS, postcentral gyrus, medial prefrontal cortex, posterior cingulate/precuneus, retrosplenial cortex, parahippocampal gyrus, and posterior fusiform gyrus (Supplementary Fig. 6).

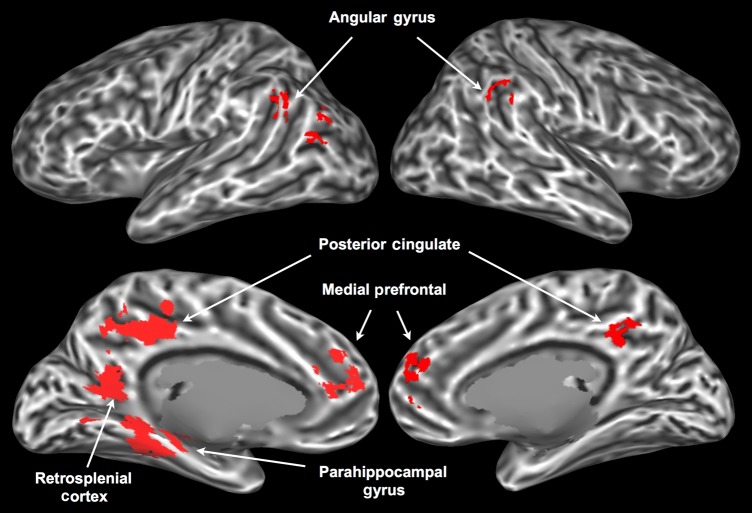

Conjunction Analysis and Semantic “Hubs”

The conjunction of voxels activated by all 5 attributes (Fig. 5) identified known multimodal areas in the left parahippocampal gyrus, left retrosplenial cortex, bilateral posterior cingulate/precuneus, bilateral medial prefrontal cortex, and bilateral angular gyrus. Each of these areas has been proposed to play a role in high-level multimodal integration. The parahippocampal gyrus and retrosplenial cortex are strongly interconnected (Kahn et al. 2008) and have been implicated in cognitive tasks requiring the structural binding and coordination of multiple representations, such as recognition of scenes, as opposed to single objects (Epstein and Kanwisher 1998; Henderson et al. 2011), spatial navigation (Ekstrom et al. 2003), source recollection vis-à-vis item recognition (Davachi et al. 2003; Ranganath et al. 2004), episodic retrieval of emotional contexts (Smith et al. 2004), and construction of future or imaginary scenarios based on past memories (Vann et al. 2009).

Figure 5.

Areas associated with all 5 semantic attributes in the conjunction analysis.
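One common way to compute a conjunction of this kind is to keep only the voxels whose statistic exceeds the threshold in every individual map, which is equivalent to thresholding the voxelwise minimum. The sketch below illustrates that idea on toy numbers. It is an assumption about the general technique, not a description of the exact procedure used here; the function name `conjunction_mask`, the six-voxel maps, and the threshold of 2.5 are all hypothetical.

```python
import numpy as np

def conjunction_mask(stat_maps, threshold):
    """Return a boolean mask of voxels that exceed `threshold` in every map."""
    stacked = np.stack(stat_maps)            # shape: (n_maps, n_voxels)
    return stacked.min(axis=0) > threshold   # minimum-statistic conjunction

# Toy statistic maps for 5 attributes over 6 voxels (made-up values).
maps = [
    np.array([3.2, 0.5, 4.1, 2.9, 1.0, 3.5]),  # color
    np.array([2.8, 3.9, 3.3, 0.2, 1.1, 4.0]),  # shape
    np.array([3.0, 3.1, 2.7, 3.6, 0.9, 2.6]),  # visual motion
    np.array([4.4, 2.9, 3.8, 3.0, 1.2, 3.1]),  # sound
    np.array([2.6, 3.3, 2.9, 3.4, 0.8, 2.7]),  # manipulation
]

hub_voxels = conjunction_mask(maps, threshold=2.5)
print(hub_voxels)  # voxels 0, 2, and 5 survive in all five maps
```

A voxel that is strongly modulated by four attributes but not by the fifth (such as voxel 1 or voxel 3 above) is excluded, which is why a conjunction of this type isolates only regions responsive to every attribute.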

The medial prefrontal cortex, posterior cingulate/precuneus, and angular gyrus receive inputs from widespread cortical areas, and have been identified in functional connectivity studies as top-level convergence zones (CZs) for sensory-motor processing streams (Buckner et al. 2009; Sepulcre et al. 2012; Bonner et al. 2013). They also constitute the core nodes of the so-called “default-mode” network, a set of functionally interconnected regions that are consistently deactivated during demanding cognitive tasks (Raichle et al. 2001), and that have been strongly linked to conceptual information processing (Binder et al. 1999, 2009). The results of our conjunction analysis provide further evidence that this network of cortical hubs plays a role in the semantic processing of single words, possibly by encoding information about the co-activation patterns of lower level CZs and unimodal areas, thus allowing simultaneous reactivation of the appropriate semantic features associated with a word (Damasio 1989).

Although the anterior temporal lobes have been proposed to be a major hub for integrating conceptual information, based on studies of semantic dementia patients as well as TMS and fMRI studies (Patterson et al. 2007; Pobric et al. 2010a; 2010b; Visser et al. 2010), they were not activated by any of the 5 attribute ratings. It is possible that the reduced signal-to-noise ratio of the BOLD signal in these areas prevented us from detecting these activations (see Supplementary Fig. 3). Alternatively, the representations encoded in these regions may be truly supramodal, such that their activity is not correlated with the salience of sensory-motor attributes.

Negative Correlations

While it is expected that a brain region involved in processing a certain type of information (e.g., color) would become more active as the demand for that kind of processing increases, we have no a priori expectation that any brain region should become “less” active as the processing demands for that particular attribute increase. Thus, interpretation of negative correlations in the present study is considerably more speculative than that of positive ones. Three regions (inferior frontal gyrus, medial superior frontal gyrus, and pSTS) showed negative correlations with shape, color, and manipulation; for sound and motion, these effects were much weaker or nonexistent (Supplementary Fig. 6). These “deactivations” may be associated with domain-general processes that are more strongly engaged when processing words with low sensory-motor ratings, as such words are less likely to automatically evoke context and other associated information and therefore may require more retrieval effort (Schwanenflugel et al. 1988; Hoffman et al. 2010). The IFG, in particular, has been implicated in the cognitive control of memory (Badre and Wagner 2007; Kouneiher et al. 2009), and it is activated by abstract words relative to concrete words (Binder et al. 2005). Portions of the medial SFG are involved in attentional control and response monitoring (Rushworth et al. 2004, 2007). The pSTS deactivation partially overlaps with the pMTG site that was activated by sound and motion in the leave-one-out analyses, suggesting that this area distinguishes between representations involving shape, color, and manipulation, on the one hand, and sound and motion, on the other (see next section for discussion of a possible reason for this dissociation).

Principal Components Analysis

We performed principal components analysis on the attribute ratings to explore how their co-occurrence patterns map onto the properties of natural ontological categories. The first component accounted for 57% of the explained variance, and distinguished between concrete concepts (i.e., those that involve direct, highly multimodal sensory-motor experience, such as “ambulance,” “train,” “fireworks,” “blender,” “drum”) and abstract ones (e.g., “rarity,” “lenience,” “proxy,” “gist,” “clemency”). The second component accounted for 23% of the explained variance, and distinguished between objects (particularly those with prominent, characteristic color and shape, but not typically associated with sound or motion; e.g., “tomato,” “banana,” “rose,” “sun,” “dandelion”) and events (no characteristic shape or color, but associated with typical sounds and/or motion; e.g., “applause,” “bang,” “scream,” “riot,” “stampede”). The third component (11% of the explained variance) distinguished between manipulable objects or materials (e.g., “hairbrush,” “cigarette,” “harmonica,” “scissors,” “dough,” “clay”) and nonmanipulable entities (e.g., “ocean,” “tornado,” “jaguar,” “explosion,” “navy,” “carnival”). The fourth (6%) distinguished between entities associated with motion but not with sound (e.g., “muscle,” “acrobat,” “escalator,” “velocity,” “boomerang”) and entities associated with sound but not with motion (e.g., “choir,” “horn,” “siren,” “alarm”). The fifth component (3%) appeared to distinguish between concrete entities with no characteristic shape (e.g., “water,” “ink,” “sunlight,” “clay,” “beer,” “fireworks”) and all the others, which could be interpreted as the classic count-mass distinction in psycholinguistics.

As might be expected, the structure captured by these components thus reflects correlation patterns between the original attributes as they occur in the world, producing mainly general ontological category distinctions (concrete vs. abstract, object vs. event) that reflect prevalent combinations of attributes. On the other hand, such combinatorial components in themselves offer little insight into the biological mechanisms underlying these general categories, which we have assumed involve known neurobiological perception and action systems. The goal of the current study was to examine the representation of these more basic sensory-motor attributes.

The 5 PCA components performed very similarly to the 5 original attributes as predictors of the BOLD signal. The mean F score for the full model (averaged across participants and across voxels) was 4.101 using the original attributes and 4.092 using the PCA components.
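As a rough illustration of how principal components and their share of variance can be obtained from a words-by-attributes ratings matrix, the following sketch runs PCA through a singular value decomposition. It rests on several assumptions that are not taken from the study: the ratings are synthetic (one shared latent factor plus noise), the columns are standardized before decomposition, and the function name `pca_explained_variance` is hypothetical.

```python
import numpy as np

def pca_explained_variance(ratings):
    """PCA via SVD of the column-standardized ratings (rows: words, columns: attributes)."""
    z = (ratings - ratings.mean(axis=0)) / ratings.std(axis=0, ddof=1)
    u, s, vt = np.linalg.svd(z, full_matrices=False)
    explained = s**2 / np.sum(s**2)   # proportion of variance per component
    scores = u * s                    # component scores for each word
    return explained, vt, scores

rng = np.random.default_rng(0)
attributes = ["color", "shape", "visual motion", "sound", "manipulation"]

# Synthetic ratings: a shared latent factor plus independent noise (assumed structure).
latent = rng.standard_normal((900, 1))
ratings = latent @ np.ones((1, len(attributes))) + rng.standard_normal((900, len(attributes)))

explained, components, scores = pca_explained_variance(ratings)
for i, share in enumerate(explained, start=1):
    print(f"component {i}: {share:.1%} of variance")
```

Each row of `components` gives the loadings of one component on the five attributes, and the columns of `scores` could serve as alternative predictors in place of the original ratings.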

Additional Analyses

When the attribute regressors included only the 600 concrete words (i.e., abstract words were coded separately), the resulting maps were very similar to the original analysis, although with overall lower t values, presumably due to the smaller number of trials and the reduced variance of the ratings. The analysis using the ranks of the attribute ratings as predictors of the BOLD signal also produced similar results, again with somewhat lower t values.

General Discussion

Three novel findings emerge from the present study. First, the cortical regions where activity was modulated by sensory-motor attributes of word meaning consisted mostly of secondary sensory areas and multimodal integration areas. The only primary sensory area revealed in the full-model analysis was somatosensory area S1, which was modulated by shape information. This suggests that the primary auditory and visual cortices (areas A1 and V1, respectively) do not contribute as much as higher level areas to the aspects of word meaning investigated in this study. However, as noted above (Shape and Color section; Supplementary Fig. 4), activity in V1 was significantly correlated with the shape and the color ratings when either one was included in the regression model without the other (while showing no correlation with any of the other attributes, in any of the analyses). This finding suggests that V1 does contribute to general visual aspects of word meaning, although higher level areas such as V4, VOT, and LOC are relatively more important for the representation of semantic features relating to specific types of visual information (i.e., color and shape). A1, on the other hand, showed no association with sound in any of the analyses, even when the activation threshold was lowered to P = 0.05 (uncorrected). The regions most strongly modulated by the sound rating were the angular gyrus, the VLPFC, and the posterior cingulate gyrus, which are highly multimodal areas. This pattern of results suggests that early auditory areas may play a smaller role than the corresponding visual and somatosensory areas in the representation of word meaning.

Second, our results indicate that the LTO and the aSMG, which function as multimodal nodes in a network dedicated to the representation of object-directed actions (see Manipulation section), are more strongly implicated in the comprehension of action-related words than the motor and premotor cortices. We propose that the LTO and aSMG contribute to action execution by integrating information originating in modality-specific motor, somatosensory, and visual cortices, generating multimodal (and more “abstract”) action representations. When an action-related concept is retrieved in response to a word (e.g., “hammer”), the corresponding action representation is activated in the LTO and aSMG, leading, in turn, to the activation of more detailed aspects of the action in lower level visual and motor areas. The extent to which these lower level areas are recruited, however, likely depends on the semantic context and the nature of the task. Further studies would be required to properly investigate this hypothesis.

Finally, the data reveal a hierarchical system of converging sensory and motor pathways underlying the representation of word meaning, according to which many conceptual attributes are represented in specific multimodal subnetworks. As illustrated in Figure 6A, these include several regions that have been independently implicated in multisensory integration, including pMTG and angular gyrus, where auditory and visual motion information are jointly represented (Beauchamp et al. 2004, 2010; Seghier 2013), as well as a network involved in the integration of visual and haptic sensory inputs, consisting of LOC, aSMG, postcentral sulcus, and posterior IPS (Amedi et al. 2001; Grefkes et al. 2002; Saito et al. 2003; Gentile et al. 2011). As discussed in the Introduction and in the Manipulation section, the LTO may be a multimodal association area encoding representations of hand actions. These midlevel convergences illustrate the fundamentally cross-modal nature of sensory-motor experience, perhaps best exemplified by the inherently inseparable processing of motor, proprioceptive, and haptic shape information during object manipulation. Neurobiologically realistic theories of concept representation, therefore, cannot be limited to unimodal “spokes” and amodal “hubs,” but must incorporate cross-modal representations at varying (i.e., bimodal and trimodal) levels of convergence.

Figure 6.

(A) Activation overlap in the posterior lateral surface of the left hemisphere. The color and shape maps are from the full-model analysis, the others are from the leave-one-out analyses: Manipulation with shape excluded, sound with motion excluded, and motion with sound excluded. Individual maps were thresholded at P < 0.015 (uncorrected) for illustration only. Activations in the frontal lobe were masked. (B) Proposed model of some sensory-motor systems underlying concept representation. The model is hierarchical, with bidirectional information flow. During perception and action, information flows mainly from low-level modal cortical areas toward multimodal areas and cortical hubs. During concept retrieval, information flows mainly in the opposite direction, resulting in the top-down activation of sensory-motor features. Areas at the top of the figure are most reliably activated, and areas at the bottom are activated more variably and depending on task demands. Colors of the connecting lines indicate modal information types, including sound (blue), visual motion (green), color (pink), shape (yellow), somatic joint position (orange), and motor (red). A hypothesized pathway for visual spatial information, not assessed in the current study, is shown in violet. The model suggests a larger division between information concerning motion and sound (left half of figure), and information concerning shape and object manipulation (right half of figure). Colored boxes indicate regions activated in the present study.

A tentative model of such a hierarchical system is illustrated in Figure 6B. At the lowest level of the system are unimodal cortical areas strongly activated during sensory-motor experience, encoding low-level perceptual features and motor commands. They are connected to areas that specialize in different types of information, encoding higher level modal representations (color, visual motion, visual and haptic shape, motor schema, auditory form), followed by bimodal and trimodal convergences across modalities, and finally convergence across all modalities at the level of heteromodal hubs. Concept retrieval follows the same hierarchical relationships, but with information flowing mainly in the opposite (top-down) direction, such that activation of modality-specific areas is driven by multimodal CZs.

The data shown in Figure 6A also suggest an important division of the posterior conceptual system into 2 larger subnetworks, which we tentatively characterize as 1) object interaction and 2) temporal-spatial perception systems. The object interaction system combines visual shape, haptic shape, proprioceptive, and motor representations to enable object-directed body actions. Object interactions have been a central focus of the larger situated cognition program (Gibson 1979), which emphasizes the role of sensory-motor integration during object-directed actions as a basis for much of behavior. Our proposal for a multimodal object interaction system resonates with this general view.

The temporal-spatial perception network is complementary to the object interaction network. Many entities about which we have knowledge are not things we typically manipulate, for example, animals, people, and events. Sounds, movements, and motion through space are salient perceptual and conceptual attributes of such phenomena. The activation patterns shown in Figure 6A suggest a striking segregation of this type of conceptual information from the object interaction system. In a broader sense, this segregation may reflect a basic ontological distinction between static objects and dynamic events.

This division is interesting to consider in relation to Goodale and Milner's (1992) functional model of the dorsal and ventral visual streams. In that model, the ventral stream supports processes involved in visual object recognition, while the dorsal stream performs visual computations supporting the planning and monitoring of actions directed at the object. Object shape (ventral stream) and manipulation (dorsal stream) knowledge should be segregated according to the Goodale and Milner model, whereas the data presented here suggest partial co-representation of object shape and manipulation knowledge, as well as additional representation of shape knowledge in haptic regions outside modal visual cortex. Furthermore, whereas visual motion processing is associated mainly with action in the two-stream model, the present results link visual motion knowledge more closely with temporal-spatial perception. Thus, despite some superficial similarity, the 2 conceptual subnetworks described here do not correspond to the 2 visual streams. This is not surprising, given that the present study included other sensory modalities in addition to vision, and concerned concept representations rather than perceptual processes.

Our findings are consistent with several aspects of previous theoretical proposals about the neural organization of conceptual knowledge (see Kiefer and Pulvermüller 2012, for a review). Damasio (1989) put forward the idea that a hierarchy of CZs integrates elementary sensory-motor features encoded in modality-specific cortex during perception and action, and that these same CZs control the time-locked reactivation of those features during concept retrieval, leading to a reenactment of sensory-motor experience. Simmons and Barsalou (2003) elaborated on the idea of CZs by incorporating the principle of “similarity-in-topography,” according to which neurons that integrate similar sets of features in CZs are located closer to one another than neurons that integrate different sets of features. The resulting conceptual topography theory posits 4 different types of CZs, which are also hierarchically organized. Analytic CZs bind together elementary sensory-motor features to give rise to representations of object parts and properties. Holistic CZs extract global, gestalt-like information from the elementary features in early cortical areas, in parallel with analytic CZs. Modality CZs encode information about regularities in the activation patterns of neurons in analytic and holistic CZs, capturing modality-specific similarities between exemplars of a taxonomic category. Finally, cross-modal CZs conjoin properties between different modalities, giving rise to multimodal representations. Unlike Damasio's model, conceptual topography theory posits that, under appropriate circumstances, neurons in CZs can function as stand-alone representations, enabling conceptual processing without activation of elementary sensory-motor features. A related account, known as the Hub and Spokes model (Patterson et al. 2007; Lambon Ralph et al. 2010), maintains that all modality-specific representations are integrated by a single amodal hub located in the temporal pole.

Consistent with conceptual topography theory, we found that 1) activation patterns across a wide expanse of cortex reflected the natural correlations of modalities and attributes in the world (e.g., shape associated with both visual and somatosensory modalities, manipulation associated with shape, visual motion associated with sound), 2) areas previously implicated in multisensory integration were co-activated by the corresponding attributes (e.g., pSTS/pMTG activated by both sound and visual motion), and 3) the only areas activated by all attributes were high-level cortical hubs (angular gyrus, precuneus/posterior cingulate/retrosplenial cortex, parahippocampal gyrus, and medial prefrontal cortex).

In conjunction with findings from the multisensory integration literature reviewed above, our results suggest the existence of multiple bimodal, trimodal, and polymodal CZs (Fig. 6). These results stand in contrast to the predictions of the Hub and Spokes model, at least as presented in Patterson et al. (2007). Although our results are neutral about the role of the temporal pole as an amodal hub essential to conceptual processing, they strongly suggest that other cortical regions play a role in the multimodal integration of concept features.

As mentioned in the Introduction, these results should be considered in the context of the task used to elicit concept retrieval. “Conceptual processing” is a vaguely defined phenomenon that can be triggered by a wide variety of stimulus categories, in the service of a multiplicity of behavioral goals. As such, the particular neural structures involved are likely to depend on many factors, including the depth of processing required by the task and the level of contextualization of the stimuli. In the present study, we were interested in characterizing the cortical regions involved in the explicit retrieval of sensory-motor features associated with isolated nouns, in a task that requires relatively deep, deliberate semantic processing. Thus, our results likely reflect a combination of automatic and deliberate processes involved in concept retrieval, including conscious imagery.

It should be noted that some previous studies have used more implicit tasks (e.g., lexical decision, masked priming) to minimize the influence of conceptual elaboration and conscious imagery, allowing them to isolate the neural substrates of unconscious, automatic conceptual retrieval processes (e.g., Kiefer et al. 2008; Trumpp, Traub et al. 2013). Conversely, other studies have employed tasks requiring deeper, more elaborate semantic processing in order to study conceptual combination (Graves et al. 2010) and sentence-level meaning (e.g., Glenberg et al. 2008; Rueschemeyer et al. 2010). Importantly, both types of studies found evidence of sensory-motor involvement in semantic processing. A recent study by Lebois et al. (2014) provides direct evidence that task-related factors can influence which sensory-motor features are activated during concept retrieval. Thus, the activation patterns reported here would probably change to some extent if a different task were used (e.g., lexical decision, sentence comprehension), although we believe they capture some important aspects of the organization of conceptual knowledge that would hold for a wide variety of tasks. Future studies are required to explore this issue.

Conclusions

We investigated the cortical representation of word meaning by focusing on 5 semantic attributes that are associated with distinct types of sensory-motor information. Four of the 5 attributes (all except visual motion) were associated with activation in corresponding sensory-motor regions, consistent with embodied theories of concept representation. However, the results also provide new evidence that these patterns vary by attribute, with some showing stronger involvement of early unimodal areas and others recruiting only higher level modal or multimodal areas. The attribute ratings also modulated activity in regions previously identified as structural and functional “hubs” at the highest level of the sensory-motor integration hierarchy.

Our results provide the most detailed picture yet of the neural substrates of conceptual knowledge. Whereas the vast majority of studies on this topic have focused on the role of a single sensory-motor modality, our results show that even seemingly elementary conceptual attributes such as “shape” and “motion” are not straightforwardly mapped onto unimodal cortical areas, highlighting the importance of bimodal and multimodal CZs, and supporting the notion of hierarchical sensory-motor integration as an essential architectural feature of semantic memory. Further research is required to determine the details of these representations, particularly the extent to which they abstract away from unimodal sensory and motor information.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

This project was supported by National Institute of Neurological Disorders and Stroke grant R01 NS33576, by National Institutes of Health General Clinical Research Center grant M01 RR00058, and by National Institute of General Medical Sciences grant T32 GM89586.

Notes

Conflict of Interest: None declared.

References

- Amedi A, Jacobson G, Hendler T, Malach R, Zohary E. 2002. Convergence of visual and tactile shape processing in the human lateral occipital complex. Cereb Cortex. 12:1202–1212.

- Amedi A, Kriegstein K, van Atteveldt NM, Beauchamp MS, Naumer MJ. 2005. Functional imaging of human crossmodal identification and object recognition. Exp Brain Res. 166:559–571.

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. 2001. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci. 4:324–330.

- Arnott SR, Binns MA, Grady CL, Alain C. 2004. Assessing the auditory dual-pathway model in humans. Neuroimage. 22:401–408.

- Aziz-Zadeh L, Koski L, Zaidel E, Mazziotta JC, Iacoboni M. 2006. Lateralization of the human mirror neuron system. J Neurosci. 26:2964–2970.

- Aziz-Zadeh L, Wilson SM, Rizzolatti G, Iacoboni M. 2006. Congruent embodied representations for visually presented actions and linguistic phrases describing actions. Curr Biol. 16:1818–1823.

- Badre D, Wagner AD. 2007. Left ventrolateral prefrontal cortex and the cognitive control of memory. Neuropsychologia. 45:2883–2901.

- Barsalou LW. 1982. Context-independent and context-dependent information in concepts. Mem Cognit. 10:82–93.

- Barsalou LW. 2008. Grounded cognition. Annu Rev Psychol. 59:617–645.

- Bartels A, Zeki S. 2000. The architecture of the colour centre in the human visual brain: new results and a review. Eur J Neurosci. 12:172–193.

- Beauchamp MS. 2005. See me, hear me, touch me: multisensory integration in lateral occipital-temporal cortex. Curr Opin Neurobiol. 15:145–153.

- Beauchamp MS, Haxby JV, Jennings JE, DeYoe EA. 1999. An fMRI version of the Farnsworth-Munsell 100-Hue test reveals multiple color-selective areas in human ventral occipitotemporal cortex. Cereb Cortex. 9:257–263.

- Beauchamp MS, Lee KE, Argall BD, Martin A. 2004. Integration of auditory and visual information about objects in superior temporal sulcus. Neuron. 41:809–823.

- Beauchamp MS, Martin A. 2007. Grounding object concepts in perception and action: evidence from fMRI studies of tools. Cortex. 43:461–468.

- Beauchamp MS, Nath AR, Pasalar S. 2010. fMRI-Guided transcranial magnetic stimulation reveals that the superior temporal sulcus is a cortical locus of the McGurk effect. J Neurosci. 30:2414–2417.

- Bedny M, Caramazza A, Grossman E, Pascual-Leone A, Saxe R. 2008. Concepts are more than percepts: the case of action verbs. J Neurosci. 28:11347–11353.

- Binder JR, Desai RH. 2011. The neurobiology of semantic memory. Trends Cogn Sci (Regul Ed). 15:527–536.

- Binder JR, Desai RH, Graves WW, Conant LL. 2009. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 19:2767–2796.

- Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Rao SM, Cox RW. 1999. Conceptual processing during the conscious resting state: a functional MRI study. J Cogn Neurosci. 11:80–95.

- Binder JR, Westbury CF, McKiernan KA, Possing ET, Medler DA. 2005. Distinct brain systems for processing concrete and abstract concepts. J Cogn Neurosci. 17:905–917.

- Bodegård A, Geyer S, Grefkes C, Zilles K, Roland PE. 2001. Hierarchical processing of tactile shape in the human brain. Neuron. 31:317–328.

- Bohlhalter S, Fretz C, Weder B. 2002. Hierarchical versus parallel processing in tactile object recognition: a behavioural-neuroanatomical study of aperceptive tactile agnosia. Brain. 125:2537–2548.

- Bonner MF, Peelle JE, Cook PA, Grossman M. 2013. Heteromodal conceptual processing in the angular gyrus. Neuroimage. 71:175–186.

- Bracci S, Cavina-Pratesi C, Ietswaart M, Caramazza A, Peelen MV. 2012. Closely overlapping responses to tools and hands in left lateral occipitotemporal cortex. J Neurophysiol. 107:1443–1456.

- Bruce C, Desimone R, Gross CG. 1981. Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. J Neurophysiol. 46:369–384.

- Buckner RL, Sepulcre J, Talukdar T, Krienen FM, Liu H, Hedden T, Andrews-Hanna JR, Sperling RA, Johnson KA. 2009. Cortical hubs revealed by intrinsic functional connectivity: mapping, assessment of stability, and relation to Alzheimer's disease. J Neurosci. 29:1860–1873.

- Buxbaum LJ, Johnson-Frey SH, Bartlett-Williams M. 2005. Deficient internal models for planning hand-object interactions in apraxia. Neuropsychologia. 43:917–929.

- Buxbaum LJ, Saffran EM. 2002. Knowledge of object manipulation and object function: dissociations in apraxic and nonapraxic subjects. Brain Lang. 82:179–199.

- Caspers S, Zilles K, Laird AR, Eickhoff SB. 2010. ALE meta-analysis of action observation and imitation in the human brain. Neuroimage. 50:1148–1167.

- Cavanna AE, Trimble MR. 2006. The precuneus: a review of its functional anatomy and behavioural correlates. Brain. 129:564–583.

- Chatterjee A. 2008. The neural organization of spatial thought and language. Semin Speech Lang. 29:226–238; quiz C6.

- Chen E, Widick P, Chatterjee A. 2008. Functional-anatomical organization of predicate metaphor processing. Brain Lang. 107:194–202.

- Clark VP, Parasuraman R, Keil K, Kulansky R, Fannon S, Maisog JM, Ungerleider LG, Haxby JV. 1997. Selective attention to face identity and color studied with fMRI. Hum Brain Mapp. 5:293–297.

- Corbetta M, Miezin FM, Dobmeyer S, Shulman GL, Petersen SE. 1991. Selective and divided attention during visual discriminations of shape, color, and speed: functional anatomy by positron emission tomography. J Neurosci. 11:2383–2402.

- Cox RW. 1996. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 29:162–173.

- Damasio AR. 1989. Time-locked multiregional retroactivation: a systems-level proposal for the neural substrates of recall and recognition. Cognition. 33:25–62.

- Davachi L, Mitchell JP, Wagner AD. 2003. Multiple routes to memory: distinct medial temporal lobe processes build item and source memories. Proc Natl Acad Sci USA. 100:2157–2162.

- Desai RH, Conant LL, Binder JR, Park H, Seidenberg MS. 2013. A piece of the action: modulation of sensory-motor regions by action idioms and metaphors. Neuroimage. 83:862–869.

- Doehrmann O, Naumer MJ. 2008. Semantics and the multisensory brain: how meaning modulates processes of audio-visual integration. Brain Res. 1242:136–150.

- Dumoulin SO, Bittar RG, Kabani NJ, Baker CL, Le Goualher G, Bruce Pike G, Evans AC. 2000. A new anatomical landmark for reliable identification of human area V5/MT: a quantitative analysis of sulcal patterning. Cereb Cortex. 10:454–463.

- Ekstrom AD, Kahana MJ, Caplan JB, Fields TA, Isham EA, Newman EL, Fried I. 2003. Cellular networks underlying human spatial navigation. Nature. 425:184–188.

- Epstein R, Kanwisher N. 1998. A cortical representation of the local visual environment. Nature. 392:598–601.

- Fernandino L, Conant LL, Binder JR, Blindauer K, Hiner B, Spangler K, Desai RH. 2013a. Parkinson's disease disrupts both automatic and controlled processing of action verbs. Brain Lang. 127:65–74.

- Fernandino L, Conant LL, Binder JR, Blindauer K, Hiner B, Spangler K, Desai RH. 2013b. Where is the action? Action sentence processing in Parkinson's disease. Neuropsychologia. 51:1510–1517.

- Fischer MH, Zwaan RA. 2008. Embodied language: a review of the role of the motor system in language comprehension. Q J Exp Psychol. 61:825–850.

- Frey SH, Vinton D, Norlund R, Grafton ST. 2005. Cortical topography of human anterior intraparietal cortex active during visually guided grasping. Cogn Brain Res. 23:397–405.

- Gentile G, Guterstam A, Brozzoli C, Ehrsson HH. 2013. Disintegration of multisensory signals from the real hand reduces default limb self-attribution: an fMRI study. J Neurosci. 33:13350–13366.

- Gentile G, Petkova VI, Ehrsson HH. 2011. Integration of visual and tactile signals from the hand in the human brain: an fMRI study. J Neurophysiol. 105:910–922.

- Ghazanfar AA, Schroeder CE. 2006. Is neocortex essentially multisensory? Trends Cogn Sci (Regul Ed). 10:278–285.

- Gibbs R. 2006. Metaphor interpretation as embodied simulation. Mind Lang. 21:434–458.

- Gibson JJ. 1979. The ecological approach to visual perception. Boston: Houghton Mifflin.

- Glenberg AM, Gallese V. 2012. Action-based language: a theory of language acquisition, comprehension, and production. Cortex. 48:905–922.

- Glenberg AM, Sato M, Cattaneo L, Riggio L, Palumbo D, Buccino G. 2008. Processing abstract language modulates motor system activity. Q J Exp Psychol. 61:905–919.

- Goldenberg G, Spatt J. 2009. The neural basis of tool use. Brain. 132:1645–1655.

- Goodale MA. 2011. Transforming vision into action. Vision Res. 51:1567–1587.

- Goodale MA, Milner AD. 1992. Separate visual pathways for perception and action. Trends Neurosci. 15:20–25.

- Gottlieb J. 2007. From thought to action: the parietal cortex as a bridge between perception, action, and cognition. Neuron. 53:9–16.

- Grant KW, Seitz P-F. 2000. The use of visible speech cues for improving auditory detection of spoken sentences. J Acoust Soc Am. 108:1197–1208.

- Graves WW, Binder JR, Desai RH, Conant LL, Seidenberg MS. 2010. Neural correlates of implicit and explicit combinatorial semantic processing. Neuroimage. 53:638–646.

- Grefkes C, Weiss PH, Zilles K, Fink GR. 2002. Crossmodal processing of object features in human anterior intraparietal cortex: an fMRI study implies equivalencies between humans and monkeys. Neuron. 35:173–184.

- Grill-Spector K, Malach R. 2004. The human visual cortex. Annu Rev Neurosci. 27:649–677.

- Grosbras M-H, Beaton S, Eickhoff SB. 2012. Brain regions involved in human movement perception: a quantitative voxel-based meta-analysis. Hum Brain Mapp. 33:431–454.

- Grossman ED, Blake R. 2002. Brain areas active during visual perception of biological motion. Neuron. 35:1167–1175.

- Grossman ED, Jardine NL, Pyles JA. 2010. fMR-adaptation reveals invariant coding of biological motion on the human STS. Front Hum Neurosci. 4: doi:10.3389/neuro.09.015.2010.

- Grossman M, Anderson C, Khan A, Avants B, Elman L, McCluskey L. 2008. Impaired action knowledge in amyotrophic lateral sclerosis. Neurology. 71:1396–1401.

- Gusnard DA, Akbudak E, Shulman GL, Raichle ME. 2001. Medial prefrontal cortex and self-referential mental activity: relation to a default mode of brain function. Proc Natl Acad Sci USA. 98:4259–4264.

- Hadjikhani N, Roland PE. 1998. Cross-modal transfer of information between the tactile and the visual representations in the human brain: a positron emission tomographic study. J Neurosci. 18:1072–1084.

- Hassabis D, Kumaran D, Maguire EA. 2007. Using imagination to understand the neural basis of episodic memory. J Neurosci. 27:14365–14374.

- Hauk O, Davis MH, Kherif F, Pulvermüller F. 2008. Imagery or meaning? Evidence for a semantic origin of category-specific brain activity in metabolic imaging. Eur J Neurosci. 27:1856–1866.