Abstract

Patient reported outcome measures (PROMs) are prevalent in child mental health services. In this point of view, we discuss our experience of training clinicians to use PROMs and to interpret and discuss feedback from measures. Findings from pre–post observational data from clinicians who attended either a 1- or 3-day training course showed that clinicians in both courses had more positive attitudes and higher levels of self-efficacy regarding administering measures and using feedback after training. We hope that this special issue will lead the way for future research on training clinicians to use outcome measures so that PROMs may be a source of clinically useful practice based evidence.

Keywords: Patient reported outcome measures, Child, Adolescent, Mental health, CAMHS

Introduction

Patient reported outcome measures (PROMs) are recommended by healthcare systems internationally (Department of Health 2011, 2012; National Quality Forum 2013). PROMs dovetail with policy on increasing service user involvement in care (Department of Health 2010; Institute of Medicine 2001) as they facilitate patient-clinician communication, enabling patients to collaborate in treatment decisions (Carlier et al. 2012; Chen et al. 2013). PROMs are believed to provide clinicians with evidence as to what treatments are working, or not working, for their patients (Lambert et al. 2006; Whipple and Lambert 2011). Evidence suggests that the use of PROMs has a positive impact on treatment outcome. In child mental health research in particular, patients improve faster when clinicians use PROMs and receive feedback on patient scores than when clinicians use PROMs alone (Bickman et al. 2011; Carlier et al. 2012; Kelley and Bickman 2009; Knaup et al. 2009; Lambert and Shimokawa 2011).

However, there are a number of challenges to implementing and using PROMs (Black 2013; Boswell et al. 2013; de Jong 2014; Douglas et al. 2014; Fleming et al. 2014; Hall et al. 2014; Hoenders et al. 2013; Lohr and Zebrack 2009; Meehan et al. 2006; Mellor-Clark et al. 2014; Smith and Street 2013; Wolpert 2013). Barriers discussed elsewhere in this special issue include organisational, technical, and administrative support; psychometric properties of measures; attributing change in outcomes to care received; outcome data potentially being used—or misused—for decisions about service funding; and a lack of feedback on PROM data. In this point of view, we focus on the potential barrier of attitudes to using PROMs. We discuss our experience of training clinicians to use PROMs and to interpret and discuss feedback from measures, presenting findings from pre–post observational data on changes in attitudes and self-efficacy regarding the use of PROMs and feedback.

The majority of clinicians believe that providing patients with feedback based on assessment measures benefits patient insight, experience, and involvement (Smith et al. 2007). Clinicians report that PROMs could be used to help target treatment to the needs of the family (Wolpert et al. 2014). Nevertheless, a large percentage of clinicians would be unwilling to administer outcome measures even if doing so improved patient care (Walter et al. 1998). One reason clinicians may not use measures is uncertainty over what the measures assess, together with low levels of self-efficacy about how they should be used (Norman et al. 2013). Clinicians in adult mental health services report being initially anxious about and resistant to using PROMs, but also that PROMs facilitate the patient-clinician relationship by promoting communication, suggesting that experience of using measures may help ameliorate negative attitudes (Unsworth et al. 2012).

Survey and case note audit studies have found the use of measures at one time point to range from 65 to 87 % but at more than one time point from only 16 to 40 % (Batty et al. 2013; Johnston and Gowers 2005; Mellor-Clark et al. 1999). Clinicians are more likely to use outcome measures when they believe that measures are practically useful (Jensen-Doss and Hawley 2010). Similarly, clinicians are more likely to use feedback from outcome measures when they hold a positive attitude to feedback (de Jong et al. 2012).

Authors recommend training for clinicians to use outcome measures in child mental health to overcome these potential barriers (Hall et al. 2014), and clinicians are more likely to use outcome measures if they have received training (Hatfield and Ogles 2004). Studies of Australian mental health workers have shown that clinicians find measures more practically useful with on-going guidance on using PROMs (Trauer et al. 2009) and that one session of PROM training improved attitudes to using outcome measures and feeding back data from measures to patients (Willis et al. 2009).

Aims and Objectives

The above evidence suggests that training clinicians may support the use of PROMs. Still, evidence is needed that explores whether training clinicians to use outcome measures in child mental health is associated with more positive attitudes and higher levels of self-efficacy regarding administering PROMs and using feedback from measures. Over the past 5 years, we have developed training for clinicians about when to use—and when not to use—outcome measures in child mental health, how to administer measures, and how to safely interpret and feed data back in a way that complements clinical work (Wolpert 2013; Wolpert et al. 2014). In this point of view, we present pre–post observational data from this training, regarding changes in attitudes and self-efficacy related to administering PROMs and using feedback from measures. In particular, we report on samples of clinicians who attended 1- and 3-day versions of the training.

Method

Overview of UPROMISE Training

Using PROMs to Improve Service Effectiveness (UPROMISE) has been developed by the Child Outcomes Research Consortium (CORC) (Fleming et al. 2014) and the Evidence Based Practice Unit (Wolpert et al. 2012). The curriculum, structure, and learning activities of the training were based on previous projects in child mental health services across England: a 3-year Masterclass series for promoting evidence based, outcomes informed practice and user participation (Childs 2013) and a project to develop and promote shared decision making (Abrines-Jaume et al. 2014). In addition to expert input from child mental health professionals and service users, literature on training development and evaluation for adult learners and professional audiences was used in the development, design, delivery, and evaluation of UPROMISE (Booth et al. 2003; Law 2012; Michelson et al. 2011; The Health Foundation 2012).

UPROMISE has four overarching learning objectives and modes of training:

Understand and challenge personal barriers to using outcome measures. Clinicians reflect on their experience of using PROMs and their stage of behavior change (Prochaska and DiClemente 1983; Prochaska et al. 1992). Interactive group discussions are used to explore current challenges to PROM implementation and to identify possible actions for change.

Understand how measures can be useful and meaningful in clinical practice. Didactic teaching is used to address the strengths and limitations of a range of outcome measures, drawing on reviews of measures for children (Deighton et al. 2014), and how to involve young service users in completion, discussion, and analysis of results.

Learn how to collaboratively use measures. This involves communication skills training based on videos and role play on using PROMs in collaboration with young people, drawing on the above work on shared decision making. In the 3-day course, this also involves reflection on practice with real clients between sessions.

Strategies for embedding the use of measures in practice and supervision. This involves the use of Plan Do Study Act (Deming 1986) log books to help clinicians capture and reflect on their experiences of using PROMs and experiment with new ways of using PROMs. Drawing on Goal Theory (Locke and Latham 1990, 2002), at the end of the 3-day training course, clinicians set and record goals to implement changes to practice regarding PROM use, which they can then use to monitor progress after training (see “Measures” section).

The training prioritises sustainability to ensure new methods of using PROMs are embedded within particular service contexts; for instance, consideration of how outcome data can become a regular part of on-going supervision and meetings. The key difference between the 1-day (7 h) and 3-day (21 h) training courses is that the latter enables more active learning and practice and encourages embedding in the individual’s service context (Abrines-Jaume et al. 2014; The Health Foundation 2012). There are between 1 and 3 weeks between the individual training sessions in the longer training, thus affording clinicians more time to try out techniques and approaches between sessions, to reflect on learning, and also to share experiences and techniques in group discussions.

A pre–post observational design was employed to evaluate the UPROMISE training, and clinicians completed measures up to 4 weeks before training (Time 1, T1) and at the very end of training (Time 2, T2). Clinicians were non-randomly assigned to attend either a 1-day version of UPROMISE or a 3-day version.

Participants

Sample 1: One-day Training

Out of 48 attendees of the 1-day UPROMISE training, 58 % completed T1 and T2 questionnaires, resulting in a pre–post sample of N = 28 clinicians (25 females, 3 males). Most attendees worked in government funded mental health services (25), with the remainder working in a voluntary service (1), a private practice (1), and a school (1). Attendees were psychotherapists (15), consultant psychotherapists (5), clinical leads (3), trainee psychotherapists (3), and mental health workers (2). All attendees had direct patient contact, and half of attendees used PROMs with a few patients (14), with 8 using PROMs with most or all patients, and 6 not using PROMs with any patients.

Sample 2: Three-day Training

Out of 17 attendees of the 3-day UPROMISE training, 71 % completed the T1 and T2 questionnaires, resulting in a pre–post sample of N = 12 clinicians (10 females, 2 males). Attendees worked in government funded mental health services (5), voluntary services (5), and charities or other services (2). Attendees were psychotherapists (3), clinical leads (2), mental health workers (4), researchers (2), and managers (1). Most attendees had direct patient contact (10); of these, 3 used PROMs with a few patients, 5 used PROMs with most or all patients, and 2 did not use PROMs with any patients.
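The completion rates quoted for the two samples follow directly from the attendee and completer counts. A minimal Python sketch of that arithmetic is given here; the function name `completion_rate` is illustrative and not part of the study.

```python
def completion_rate(completers: int, attendees: int) -> float:
    """Percentage of attendees who completed both the T1 and T2 questionnaires."""
    return 100 * completers / attendees


# Counts as reported in the Participants section: (completers, attendees).
samples = {
    "1-day training": (28, 48),
    "3-day training": (12, 17),
}

for label, (completers, attendees) in samples.items():
    rate = completion_rate(completers, attendees)
    print(f"{label}: {completers}/{attendees} = {rate:.0f} %")
```

Rounded to whole percentages, these reproduce the 58 % and 71 % reported above.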

Measures

PROM Attitudes and Feedback Attitudes (Samples 1 and 2)

To measure PROM attitudes and feedback attitudes, the 23-item attitudes to routine outcome assessment (ROA) questionnaire (Willis et al. 2009) was used. The ROA captures PROM attitudes, which are general attitudes to administering and using PROMs (15 items; e.g., “Outcome measures do not capture what is happening for my patients”, reverse scored) and feedback attitudes, which are attitudes to using and providing feedback based on outcome measures (8 items; e.g., “Providing feedback from outcome measures will help the clinician and service user work more collaboratively in treatment”; see footnote 1). Attendees responded on a six-point scale from strongly disagree (1) to strongly agree (6). The ROA has been used in a previous study and demonstrated acceptable reliability (Willis et al. 2009). Table 1 shows the Cronbach’s alphas for the T1 and T2 scores, which were acceptable.
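To make the scoring concrete, the sketch below shows one way a six-point subscale with reverse-scored items could be scored and how Cronbach's alpha is computed from item responses. It rests on assumptions not stated in the text: that subscale scores are item means (which is consistent with the 1 to 6 range of the means in Table 1), that reverse scoring maps a response r to 7 − r, and that the responses shown are hypothetical. Which ROA items are reverse scored, beyond the example quoted above, is not given here.

```python
from statistics import variance


def reverse_score(response: int, scale_min: int = 1, scale_max: int = 6) -> int:
    """Flip a response on a bounded scale (1 <-> 6, 2 <-> 5, 3 <-> 4)."""
    return scale_min + scale_max - response


def subscale_mean(responses, reverse_items=()):
    """Mean item score for one respondent; `reverse_items` holds item indices to flip."""
    scored = [
        reverse_score(r) if i in reverse_items else r
        for i, r in enumerate(responses)
    ]
    return sum(scored) / len(scored)


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents x items matrix of already-scored items."""
    k = len(rows[0])
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses from five clinicians to three items (not study data).
hypothetical = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 6],
    [3, 3, 3],
    [6, 5, 6],
]

print(f"alpha = {cronbach_alpha(hypothetical):.2f}")
# Item 0 treated as reverse scored for illustration only.
print(f"subscale mean = {subscale_mean([2, 5, 4], reverse_items={0}):.2f}")
```

Sample variances (n − 1 denominator) are used for both the item and total-score variances, which is the usual convention for alpha.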

Table 1.

Descriptive statistics for PROM and feedback attitudes and self-efficacy

| Measure | Overall M | Overall SD | Overall α | Sample 1 (1-day training) M | Sample 1 (1-day training) SD | Sample 2 (3-day training) M | Sample 2 (3-day training) SD |
|---|---|---|---|---|---|---|---|
| ROA | | | | | | | |
| T1 PROM attitudes | 4.01 | 0.56 | .79 | 3.84 | 0.54 | 4.40 | 0.41 |
| T2 PROM attitudes | 4.37 | 0.57 | .85 | 4.18 | 0.55 | 4.82 | 0.36 |
| T1 feedback attitudes | 4.30 | 0.68 | .81 | 4.14 | 0.70 | 4.68 | 0.46 |
| T2 feedback attitudes | 4.70 | 0.57 | .88 | 4.54 | 0.55 | 5.11 | 0.35 |
| ROSE | | | | | | | |
| T1 PROM self-efficacy | 2.60 | 0.94 | .79 | 2.54 | 0.99 | 2.73 | 0.84 |
| T2 PROM self-efficacy | 3.44 | 1.01 | .88 | 3.18 | 0.99 | 4.07 | 0.77 |
| T1 feedback self-efficacy | 1.97 | 1.04 | .80 | 1.80 | 0.92 | 2.36 | 1.23 |
| T2 feedback self-efficacy | 2.92 | 1.08 | .83 | 2.62 | 1.04 | 3.64 | 0.82 |

ROA routine outcome assessment questionnaire (Willis et al. 2009), ROSE routine outcome self-efficacy questionnaire, PROM patient reported outcome measure

n sample 1 = 28. n sample 2 = 12
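As a consistency check on Table 1, each overall mean should equal the sample-size-weighted average of the two sample means, within rounding. The sketch below illustrates that check using only the means and sample sizes reported in the table; the function name `pooled_mean` and the 0.02 tolerance are choices made for this example.

```python
N_1DAY, N_3DAY = 28, 12


def pooled_mean(m1: float, n1: int, m2: float, n2: int) -> float:
    """Sample-size-weighted average of two group means."""
    return (m1 * n1 + m2 * n2) / (n1 + n2)


# (overall M, 1-day M, 3-day M) as reported in Table 1.
table_means = {
    "T1 PROM attitudes": (4.01, 3.84, 4.40),
    "T2 PROM attitudes": (4.37, 4.18, 4.82),
    "T1 feedback attitudes": (4.30, 4.14, 4.68),
    "T2 feedback attitudes": (4.70, 4.54, 5.11),
    "T1 PROM self-efficacy": (2.60, 2.54, 2.73),
    "T2 PROM self-efficacy": (3.44, 3.18, 4.07),
    "T1 feedback self-efficacy": (1.97, 1.80, 2.36),
    "T2 feedback self-efficacy": (2.92, 2.62, 3.64),
}

for label, (overall, m1, m2) in table_means.items():
    pooled = pooled_mean(m1, N_1DAY, m2, N_3DAY)
    # The reported means are themselves rounded, so allow a small tolerance.
    status = "ok" if abs(pooled - overall) <= 0.02 else "check"
    print(f"{label}: reported {overall:.2f}, pooled {pooled:.3f} ({status})")
```

Small discrepancies in the second decimal place are expected because the inputs are rounded to two decimals.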

PROM Self-Efficacy and Feedback Self-Efficacy (Samples 1 and 2)

To measure PROM self-efficacy and feedback self-efficacy, a bespoke eight-item routine outcome self-efficacy (ROSE) questionnaire was used as we were unable to find an existing measure. The structure of ROSE was based on an existing measure of self-efficacy regarding mental health diagnosis (Michelson et al. 2011). Attendees were asked the initial question stem: “How well do you feel able to perform the following activities?” Next, a list of activities was presented related to PROM self-efficacy, which regards how outcome measures are used and administered (5 items; e.g., “Introduce the ideas around service user feedback and outcomes to children, young people and carers”) and feedback self-efficacy, which regards how feedback is used and provided (3 items; e.g., “Use the results from questionnaires to help decide when a different approach in therapy, or a different therapist, is needed”). These activities were taken from a national curriculum for best practice for child mental health service staff about competencies for administering PROMs and using and providing feedback (Children and Young People’s Improving Access to Psychological Therapies Programme 2013). Attendees responded to the activities on a six-point scale from not at all well (1) to extremely well (6). Table 1 shows the Cronbach’s alphas for the T1 and T2 scores, which were acceptable.

Goals for Implementing Changes to Practice (Sample 2 Only)

To record clinicians’ goals for implementing changes to practice regarding PROM use, we used a bespoke measure based on an existing measure (Michelson et al. 2011). At the end of training (T2 only), clinicians were asked to record three goals related to changes in PROM use in direct patient work that they would implement after training.

Results

Change Associated With Training

To explore changes in attitudes and self-efficacy related to PROMs and feedback associated with training, 2 × 2 repeated measures analyses of covariance (ANCOVAs) were conducted with time (T1 vs. T2) as the repeated measures factor and training duration (1- vs. 3-day) as the between-participants factor, adjusting for amount of patient contact and use of PROMs. Descriptive statistics for all variables are shown in Table 1.

When adjusting for amount of patient contact and use of PROMs (see footnote 2), there were significant main effects of time [F (1, 36) = 6.94, p < .05] and training duration [F (1, 36) = 13.71, p < .001] on PROM attitudes; however, the interaction between time and training duration was not significant [F (1, 36) = 0.38, p = .541]. When adjusting for amount of patient contact and use of PROMs, there were significant main effects of time [F (1, 36) = 6.39, p < .05] and training duration [F (1, 36) = 8.68, p < .01] on feedback attitudes; however, the interaction between time and training duration was not significant [F (1, 36) = 0.10, p = .758]. Clinicians had more positive attitudes to administering PROMs and using feedback from PROMs after training, and clinicians who attended the 3-day training had more positive attitudes to administering PROMs and using feedback from PROMs than clinicians who attended the 1-day training.
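Effect sizes are not reported alongside these tests, but partial eta squared can be recovered from any reported F statistic and its degrees of freedom using the standard identity η²p = (F × df_effect) / (F × df_effect + df_error). The sketch below applies that identity to the attitude results in this paragraph. It is a supplementary illustration rather than part of the original analysis, and the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F statistics reported in the text, all with df = (1, 36).
reported_f = {
    "PROM attitudes, time": 6.94,
    "PROM attitudes, training duration": 13.71,
    "PROM attitudes, time x duration": 0.38,
    "Feedback attitudes, time": 6.39,
    "Feedback attitudes, training duration": 8.68,
    "Feedback attitudes, time x duration": 0.10,
}

for effect, f_value in reported_f.items():
    eta = partial_eta_squared(f_value, df_effect=1, df_error=36)
    print(f"{effect}: F = {f_value:.2f}, partial eta squared = {eta:.3f}")
```

Because the values are derived from F statistics rounded to two decimals, they should be read as approximate.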

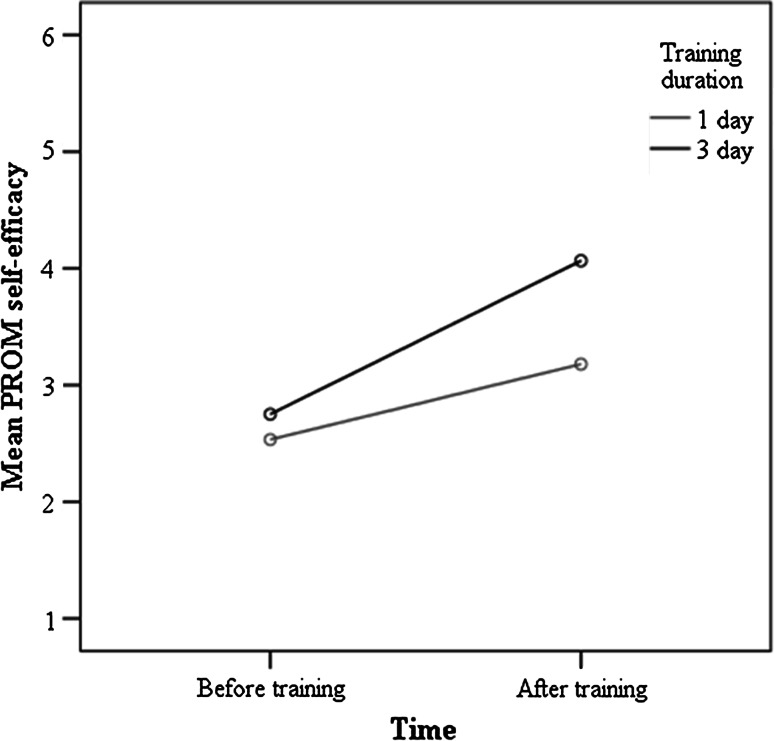

When adjusting for amount of patient contact and use of PROMs, there was a significant main effect of time [F (1, 36) = 19.80, p < .001] but not of training duration [F (1, 36) = 3.83, p = .058] on PROM self-efficacy, and the interaction between time and training duration was significant [F (1, 36) = 4.98, p < .05]. Figure 1 shows the interaction between time and training duration: clinicians who attended the 3-day training had higher levels of PROM self-efficacy after training than clinicians who attended the 1-day training. When adjusting for amount of patient contact and use of PROMs, there were significant main effects of time [F (1, 36) = 13.80, p < .001] and training duration [F (1, 36) = 7.48, p < .01] on feedback self-efficacy; however, the interaction between time and training duration was not significant [F (1, 36) = 1.58, p = .218]. Clinicians had higher levels of feedback self-efficacy after training, and clinicians who attended the 3-day training had higher levels of feedback self-efficacy than clinicians who attended the 1-day training.

Fig. 1.

Time × training duration interaction effect for PROM self-efficacy. PROM patient reported outcome measure
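In a 2 × 2 design, a time × group interaction corresponds to a difference between the groups in their T1 to T2 change. The sketch below computes those change scores from the unadjusted means in Table 1. These are descriptive values only: they are not the covariate-adjusted estimates from the ANCOVAs, and they say nothing on their own about statistical significance.

```python
# (T1 mean, T2 mean) per training duration, taken from Table 1 (unadjusted).
means = {
    "PROM attitudes": {"1-day": (3.84, 4.18), "3-day": (4.40, 4.82)},
    "Feedback attitudes": {"1-day": (4.14, 4.54), "3-day": (4.68, 5.11)},
    "PROM self-efficacy": {"1-day": (2.54, 3.18), "3-day": (2.73, 4.07)},
    "Feedback self-efficacy": {"1-day": (1.80, 2.62), "3-day": (2.36, 3.64)},
}


def change(t1: float, t2: float) -> float:
    """Pre-post change in a group mean."""
    return t2 - t1


contrasts = {}
for outcome, groups in means.items():
    change_1day = change(*groups["1-day"])
    change_3day = change(*groups["3-day"])
    # Difference in change between groups: the descriptive analogue of the interaction.
    contrasts[outcome] = change_3day - change_1day
    print(
        f"{outcome}: 1-day change {change_1day:.2f}, "
        f"3-day change {change_3day:.2f}, difference {contrasts[outcome]:.2f}"
    )

largest = max(contrasts, key=contrasts.get)
print(f"Largest difference in change: {largest}")
```

On these descriptive means, the difference in change between the two courses is largest for PROM self-efficacy, the one outcome for which the interaction was significant.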

Goals for Implementing Changes to Practice

Clinicians’ goals for implementing changes to practice regarding PROM use were thematically analysed. Clinicians produced 27 goals, and the most frequent theme that emerged referred to plans to use PROMs more frequently (7 out of 27 goals), followed by plans to promote the use of PROMs with colleagues (6), use PROMs for treatment or quality improvement (5; e.g., “Use measures collaboratively with patients to inform treatment”), and improve how PROMs are organised (5; e.g., “Set up central access system online”). Less frequent themes were to more carefully select outcome measures (2), to use a specific, named outcome measure (1), and to use PROMs to monitor treatment progress (1). These goals suggest that clinicians intended to administer PROMs and use feedback from measures more regularly after training, in line with the learning objectives of the training (see “Method” section).
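The theme frequencies above can be tabulated to confirm that they account for all 27 goals and to express each theme as a share of the total. A minimal sketch, with theme labels paraphrased from the text:

```python
# Number of goals per theme, as reported in the text.
theme_counts = {
    "Use PROMs more frequently": 7,
    "Promote the use of PROMs with colleagues": 6,
    "Use PROMs for treatment or quality improvement": 5,
    "Improve how PROMs are organised": 5,
    "Select outcome measures more carefully": 2,
    "Use a specific, named outcome measure": 1,
    "Use PROMs to monitor treatment progress": 1,
}

total_goals = sum(theme_counts.values())

for theme, count in theme_counts.items():
    share = 100 * count / total_goals
    print(f"{theme}: {count} of {total_goals} goals ({share:.0f} %)")
```

The counts sum to the 27 goals reported, so every goal is assigned to exactly one theme.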

Discussion

The aim of this point of view was to reflect on our experience of developing and evaluating training for clinicians to use PROMs and to interpret and discuss feedback from measures. We presented pre–post observational data from this training on samples of clinicians who attended 1- and 3-day versions.

Clinicians in both versions had more positive attitudes and higher levels of self-efficacy regarding administering PROMs and using feedback from PROMs after training. There was one significant interaction effect between time and training duration, and clinicians who attended the 3-day version had greater increases in PROM self-efficacy than clinicians who attended the 1-day version. However, inferences about causation should not be made with a non-randomised design, as pre-existing differences between the two samples may have contributed to the effects observed. Still, it is not surprising that the longer training was associated with greater improvements in PROM self-efficacy as it may have afforded clinicians more time to practice and embed strategies for using PROMs in daily work (see Overview of UPROMISE training).

Findings of the present point of view should be considered in the context of a number of limitations. Self-selection bias may mean that our samples were not representative of clinicians in child mental health services in general. As an observational, non-randomised design was employed, pre-existing differences between the two samples may have contributed to the effects found. Finally, without a longer follow-up, we cannot conclude that changes in PROM and feedback attitudes and self-efficacy were sustained or that these changes resulted in actual changes to practice.

Authors recommend training clinicians to use outcome measures in child mental health (Hall et al. 2014). Over the past 5 years, we have developed and evaluated training for clinicians about when to use—and when not to use—outcome measures in child mental health, how to administer measures, and how to safely interpret and feed data back in a way that complements clinical work (Wolpert 2013). Findings from pre–post observational data from clinicians who attended either a 1- or 3-day training course showed that clinicians in both courses had more positive attitudes and higher levels of self-efficacy regarding administering measures and using feedback after training. Our experience supports recommendations that clinicians should be trained to use outcome measures. We hope that this special issue will lead the way for future research on training clinicians to use outcome measures so that PROMs may be a source of clinically useful practice based evidence.

Footnotes

1. As the measure was developed in Australia, the word “client” was changed to “patient” and “consumer” to “service user” to make the items more applicable to clinicians in mental health services in England, without changing the meaning of the items.

2. The effects of the covariates were not significant in any of the ANCOVAs.

References

- Abrines-Jaume N, Midgley N, Hopkins K, Hoffman J, Martin K, Law D, et al. A qualitative analysis of implementing shared decision making in child and adolescent mental health services in the United Kingdom: Stages and facilitators. Clinical Child Psychology and Psychiatry. 2014. doi: 10.1177/1359104514547596.

- Batty MJ, Moldavsky M, Foroushani PS, Pass S, Marriott M, Sayal K, Hollis C. Implementing routine outcome measures in child and adolescent mental health services: From present to future practice. Child and Adolescent Mental Health. 2013;18(2):82–87. doi: 10.1111/j.1475-3588.2012.00658.x.

- Bickman L, Kelley SD, Breda C, de Andrade AR, Reimer M. Effects of routine feedback to clinicians on mental health outcomes of youths: Results of a randomized trial. Psychiatric Services. 2011;62(12):1423–1429. doi: 10.1176/appi.ps.002052011.

- Black N. Patient reported outcome measures could help transform healthcare. BMJ. 2013;346(1):f167–f167. doi: 10.1136/bmj.f167.

- Booth A, Sutton A, Falzon L. Working together: Supporting projects through action learning. Health Information and Libraries Journal. 2003;20:225–231. doi: 10.1111/j.1471-1842.2003.00461.x.

- Boswell JF, Kraus DR, Miller SD, Lambert MJ. Implementing routine outcome monitoring in clinical practice: Benefits, challenges, and solutions. Psychotherapy Research. 2013. doi: 10.1080/10503307.2013.817696.

- Carlier IVE, Meuldijk D, Van Vliet IM, Van Fenema E, Van der Wee NJA, Zitman FG. Routine outcome monitoring and feedback on physical or mental health status: Evidence and theory. Journal of Evaluation in Clinical Practice. 2012;18(1):104–110. doi: 10.1111/j.1365-2753.2010.01543.x.

- Chen J, Ou L, Hollis S. A systematic review of the impact of routine collection of patient reported outcome measures on patients, providers and health organisations in an oncologic setting. BMC Health Services Research. 2013;13(1):211. doi: 10.1186/1472-6963-13-211.

- Children and Young People’s Improving Access to Psychological Therapies Programme. National curriculum for core, cognitive behavioural therapy, parenting training (3–10 year olds), systemic family practice, interpersonal psychotherapy for adolescents, supervision, and transformational service leadership. London: IAPT; 2013.

- Childs J. Masterclasses: Promoting excellence in evidence-based, outcomes informed practice and user participation for child mental health professionals. London: CAMHS Press; 2013.

- de Jong K. Deriving implementation strategies for outcome monitoring from theory, research and practice. Administration and Policy in Mental Health and Mental Health Services Research. 2014. doi: 10.1007/s10488-014-0589-6.

- de Jong K, van Sluis P, Nugter MA, Heiser WJ, Spinhoven P. Understanding the differential impact of outcome monitoring: Therapist variables that moderate feedback effects in a randomized clinical trial. Psychotherapy Research. 2012;22(4):464–474. doi: 10.1080/10503307.2012.673023.

- Deighton J, Croudace T, Fonagy P, Brown J, Patalay P, Wolpert M. Measuring mental health and wellbeing outcomes for children and adolescents to inform practice and policy: A review of child self-report measures. Child and Adolescent Psychiatry and Mental Health. 2014;8(1):14. doi: 10.1186/1753-2000-8-14.

- Deming W. Out of the crisis. Cambridge, MA: Massachusetts Institute of Technology; 1986.

- Department of Health. Equity and excellence: Liberating the NHS. Norwich: The Stationery Office; 2010.

- Department of Health. Talking therapies: A four-year plan of action. London: Author; 2011.

- Department of Health. IAPT three-year report: The first million patients. London: Author; 2012.

- Douglas S, Button S, Casey SE. Implementing for sustainability: Promoting use of a measurement feedback system for innovation and quality improvement. Administration and Policy in Mental Health and Mental Health Services Research. 2014.

- Fleming I, Jones M, Bradley J, Wolpert M. Learning from a learning collaboration: The CORC approach to combining research, evaluation and practice in child mental health. Administration and Policy in Mental Health and Mental Health Services Research. 2014. doi: 10.1007/s10488-014-0592-y.

- Hall CL, Taylor J, Moldavsky M, Marriott M, Pass S, Newell K. A qualitative process evaluation of electronic session-by-session outcome measurement in child and adolescent mental health services. BMC Psychiatry. 2014;14:113.

- Hatfield DR, Ogles BM. The use of outcome measures by psychologists in clinical practice. Professional Psychology: Research and Practice. 2004;35(5):485–491. doi: 10.1037/0735-7028.35.5.485.

- Hoenders RHJ, Bos EH, Bartels-Velthuis AA, Vollbehr NK, Ploeg K, Jonge P, Jong JTVM. Pitfalls in the assessment, analysis, and interpretation of routine outcome monitoring (ROM) data: Results from an outpatient clinic for integrative mental health. Administration and Policy in Mental Health and Mental Health Services Research. 2013. doi: 10.1007/s10488-013-0511-7.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st Century. Washington, DC: National Academy Press; 2001.

- Jensen-Doss A, Hawley KM. Understanding barriers to evidence-based assessment: Clinician attitudes toward standardized assessment tools. Journal of Clinical Child & Adolescent Psychology. 2010;39(6):885–896. doi: 10.1080/15374416.2010.517169.

- Johnston C, Gowers S. Routine outcome measurement: A survey of UK child and adolescent mental health services. Child and Adolescent Mental Health. 2005;10(3):133–139. doi: 10.1111/j.1475-3588.2005.00357.x.

- Kelley SD, Bickman L. Beyond outcomes monitoring: measurement feedback systems in child and adolescent clinical practice. Current Opinion in Psychiatry. 2009;22(4):363–368. doi: 10.1097/YCO.0b013e32832c9162.

- Knaup C, Koesters M, Schoefer D, Becker T, Puschner B. Effect of feedback of treatment outcome in specialist mental healthcare: Meta-analysis. The British Journal of Psychiatry. 2009;195(1):15–22. doi: 10.1192/bjp.bp.108.053967.

- Lambert MJ, Shimokawa K. Collecting client feedback. Psychotherapy. 2011;48(1):72–79. doi: 10.1037/a0022238.

- Lambert MJ, Whipple JL, Hawkins EJ, Vermeersch DA, Nielsen SL, Smart DW. Is it time for clinicians to routinely track patient outcome? A meta-analysis. Clinical Psychology: Science and Practice. 2006;10(3):288–301.

- Law D. A practical guide to using service user feedback and outcome tools to inform clinical practice in child and adolescent mental health. London: IAPT National Team; 2012.

- Locke EA, Latham GP. A theory of goal setting and task performance. Englewood Cliffs, NJ: Prentice-Hall; 1990.

- Locke EA, Latham GP. Building a practically useful theory of goal setting and task motivation: A 35 year odyssey. American Psychologist. 2002;57:705–717. doi: 10.1037/0003-066X.57.9.705.

- Lohr KN, Zebrack BJ. Using patient-reported outcomes in clinical practice: Challenges and opportunities. Quality of Life Research. 2009;18(1):99–107. doi: 10.1007/s11136-008-9413-7.

- Meehan T, McCombes S, Hatzipetrou L, Catchpoole R. Introduction of routine outcome measures: Staff reactions and issues for consideration. Journal of Psychiatric and Mental Health Nursing. 2006;13(5):581–587. doi: 10.1111/j.1365-2850.2006.00985.x.

- Mellor-Clark J, Barkham M, Connell J, Evans C. Practice-based evidence and standardised evaluation: informing the design of the CORE system. European Journal of Psychotherapy Counselling and Health. 1999;2:357–374. doi: 10.1080/13642539908400818.

- Mellor-Clark J, Cross S, Macdonald J, Skjulsvik T. Leading horses to water: Lessons from a decade of helping psychological therapy services use routine outcome measurement to improve practice. Administration and Policy in Mental Health and Mental Health Services Research. 2014. doi: 10.1007/s10488-014-0587-8.

- Michelson D, Rock S, Holliday S, Murphy E, Myers G, Tilki S, Day C. Improving psychiatric diagnosis in multidisciplinary child and adolescent mental health services. The Psychiatrist. 2011;35(12):454–459. doi: 10.1192/pb.bp.111.034066.

- National Quality Forum. Patient Reported Outcomes (PROs) in performance measurement. Washington, DC: National Quality Forum; 2013.

- Norman S, Dean S, Hansford L, Ford T. Clinical practitioner’s attitudes towards the use of Routine Outcome Monitoring within Child and Adolescent Mental Health Services: A qualitative study of two Child and Adolescent Mental Health Services. Clinical Child Psychology and Psychiatry. 2013. doi: 10.1177/1359104513492348.

- Prochaska J, DiClemente C. Stages and processes of self-change of smoking: Toward an integrative model of change. Journal of Consulting and Clinical Psychology. 1983;51:390–395. doi: 10.1037/0022-006X.51.3.390.

- Prochaska J, DiClemente C, Norcross J. In search of how people change: Applications to addictive behaviors. American Psychologist. 1992;47:1102–1114. doi: 10.1037/0003-066X.47.9.1102.

- Smith PC, Street AD. On the uses of routine patient-reported health outcome data. Health Economics. 2013;22(2):119–131. doi: 10.1002/hec.2793.

- Smith SR, Wiggins CM, Gorske TT. A survey of psychological assessment feedback practices. Assessment. 2007;14(3):310–319. doi: 10.1177/1073191107302842.

- The Health Foundation. Quality improvement training for healthcare professionals. London: The Health Foundation; 2012.

- Trauer T, Pedwell G, Lisa G. The effect of guidance in the use of routine outcome measures in clinical meetings. Australian Health Review. 2009;33:144–151. doi: 10.1071/AH090144.

- Unsworth G, Cowie H, Green A. Therapists’ and clients’ perceptions of routine outcome measurement in the NHS: A qualitative study. Counselling and Psychotherapy Research. 2012;12(1):71–80. doi: 10.1080/14733145.2011.565125.

- Walter G, Cleary M, Rey JM. Attitudes of mental health personnel towards rating outcome. Journal of Quality in Clinical Practice. 1998;18(2):109–115.

- Whipple JL, Lambert MJ. Outcome Measures for Practice. Annual Review of Clinical Psychology. 2011;7(1):87–111. doi: 10.1146/annurev-clinpsy-040510-143938.

- Willis A, Deane FP, Coombs T. Improving clinicians’ attitudes toward providing feedback on routine outcome assessments. International Journal of Mental Health Nursing. 2009;18:211–215. doi: 10.1111/j.1447-0349.2009.00596.x.

- Wolpert M. Do patient reported outcome measures do more harm than good? BMJ. 2013;346(1):f2669–f2669. doi: 10.1136/bmj.f2669.

- Wolpert M, Curtis-Tyler K, Edbrooke-Childs J. A qualitative exploration of clinician and service user views on Patient Reported Outcome Measures in child mental health and diabetes services. Administration and Policy in Mental Health and Mental Health Services Research. 2014. doi: 10.1007/s10488-014-0586-9.

- Wolpert M, Ford T, Trustam E, Law D, Deighton J, Flannery H, Fugard RJB. Patient-reported outcomes in child and adolescent mental health services (CAMHS): Use of idiographic and standardized measures. Journal of Mental Health. 2012;21(2):165–173. doi: 10.3109/09638237.2012.664304.