Abstract

Facial expressions of emotion are thought to have evolved from the development of facial muscles used in sensory regulation and later adapted to express moral judgment. Negative moral judgment is conveyed by the expressions of anger, disgust and contempt. Here, we study the hypothesis that these facial expressions of negative moral judgment have further evolved into a facial expression of negation regularly used as a grammatical marker in human language. Specifically, we show that people from different cultures expressing negation use the same facial muscles as those employed to express negative moral judgment. We then show that this nonverbal signal is used as a co-articulator in speech and that, in American Sign Language, it has been grammaticalized as a non-manual marker. Furthermore, this facial expression of negation exhibits the theta oscillation (3–8 Hz) universally seen in syllable and mouthing production in speech and signing. These results provide evidence for the hypothesis that some components of human language have evolved from facial expressions of emotion, and suggest an evolutionary route for the emergence of grammatical markers.

Introduction

Humans communicate with peers using nonverbal facial expressions and language. Crucially, some of these facial expressions have grammatical function and, thus, are part of the grammar of the language (Baker & Padden, 1978; Liddell, 1978; Klima & Bellugi, 1979; Pfau & Quer, 2010). These facial expressions are thus grammatical markers and are sometimes called grammaticalized facial expressions (Reilly, McIntire, & Bellugi, 1990). A longstanding question in science is: where do these grammatical markers come from? The recent evolution of human language suggests that several, or even most, components of language evolved for reasons other than language and were only later adapted for this purpose (Hauser, Chomsky, & Fitch, 2002). Since scientific evidence strongly supports the view that facial expressions of emotion evolved much earlier than language and are used to communicate with others (Darwin, 1872), it is reasonable to hypothesize that some grammaticalized facial expressions evolved through the expression of emotion.

The present paper presents the first evidence in favor of this hypothesis. Specifically, we study the hypothesis that the facial expressions of emotion used by humans to communicate negative moral judgment have been compounded into a unique, universal grammatical marker of negation.

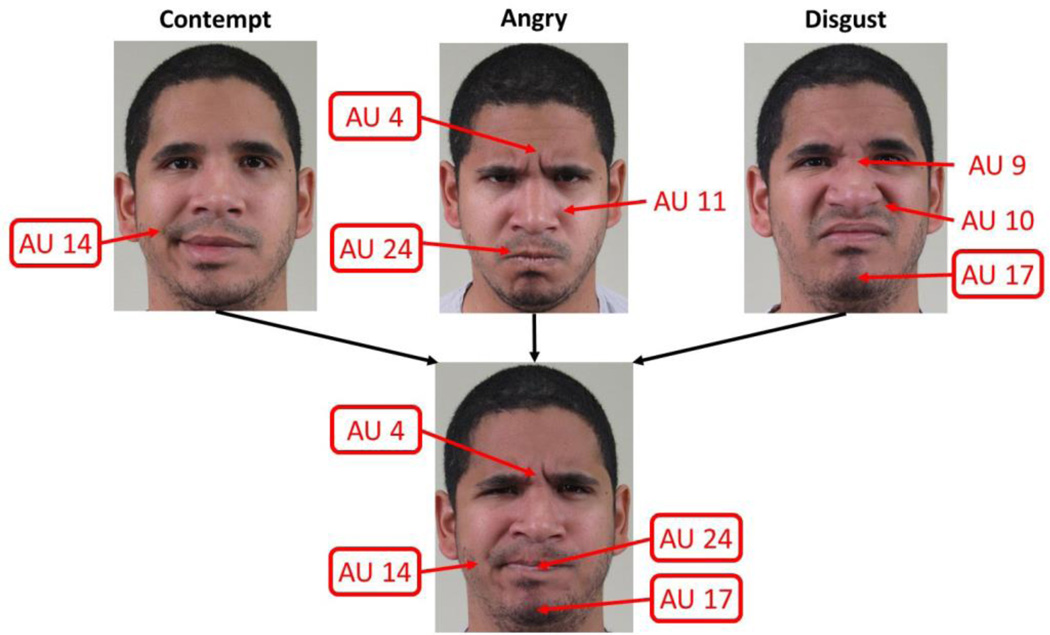

Humans express negative moral judgment using one of three facial expressions of emotion: anger, disgust or contempt. These facial expressions communicate violations of one’s rights, societal norms or beliefs (Oatley, Keltner, & Jenkins, 2006; Greene & Haidt, 2002; Rozin, Lowery, Imada, & Haidt, 1999; Shweder, Much, Mahapatra, & Park, 1997; Ekman & Friesen, 1969), and are thus used to communicate negation and disagreement to observers, Fig. 1A–C. The production of each of these expressions is unique (Ekman, Sorenson, & Friesen, 1969), meaning that the facial articulations (known as Action Units, AUs) used to produce these expressions are distinct from one another. Different facial articulations are identified with a distinct AU number. For example, anger is produced with AUs 4, 7 and 24, disgust with AUs 9, 10, 17, and contempt with AU 14 (Ekman & Friesen, 1978), Fig. 1. As seen in the figure, each AU involves a unique activation of facial muscles, e.g., AU 4 uses a set of facial muscles that result in the lowering of the inner corners of the brows, while AU 9 wrinkles the nose.

Figure 1.

Example images of facial expressions used to convey negative moral judgment: anger (A), disgust (B) and contempt (C). Compound facial expressions of emotion include a subset of AUs from each of their subordinate categories, e.g., angrily disgusted includes AUs typically seen in the expression of anger and disgust (D).

We hypothesize that these facial expressions of negative moral judgment have been compounded to create a unique facial expression of negation. A compound facial expression is one that evolved from the expression of two or more emotions. As shown by Du et al. (2014), when facial expressions of emotion are compounded to create new categories, the resulting expression is defined using a subset of the AUs employed by the original (subordinate) categories. For example, the facial expression of angrily disgusted is produced with AUs 4, 7, 10 and 17 (Fig. 1D), a subset of those used to express its subordinate categories, i.e., anger and disgust, which together use AUs 4, 7, 9, 10, 17 and 24. This new combination of AUs must be distinct from the ones employed to express any other emotion category; otherwise, the resulting category could not be visually differentiated from the rest. The combination of AUs used to express angrily disgusted, for instance, is distinct from those seen when expressing other emotions (Du et al., 2014).

Thus, since the AUs used to express negative moral judgment are 4, 7, 9, 10, 14, 17 and 24, a facial expression of negation that has evolved through the expression of negative moral judgment should be defined by a unique subset of these AUs. The most accepted way to test this is to determine whether the AUs used by subjects of different cultures and languages are the same (Ekman, 1986; Bartlett, Littlewort, Frank, & Lee, 2014; Du et al., 2014). An identical use of AUs across cultures and languages is considered strong evidence of a biological origin of an expression. We analyze a large number of facial expressions of negation produced in isolation (i.e., in nonverbal communication), as well as facial expressions used in speech and signing of negative sentences. Our studies include native speakers of English, Spanish and Mandarin Chinese and native signers of American Sign Language (ASL). The same facial expression of negation was identified in participants of all languages and cultural backgrounds. Crucially, this expression was identified as a compound of the expressions of negative moral judgment, as hypothesized, Fig. 2.
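The subset logic behind this prediction can be made explicit with a small Python sketch. It encodes the AU sets listed above for anger, disgust and contempt and checks whether a candidate AU combination qualifies as a compound: it must use only AUs of its subordinate categories, take at least one AU from each, and differ from every one of them. The function name `is_compound_of` is hypothetical, and the check is a simplification: Du et al. (2014) also require a compound to be distinct from all other emotion categories, which is not modeled here.

```python
# AU sets as listed in the text (Ekman & Friesen, 1978; Du et al., 2014)
ANGER = frozenset({4, 7, 24})
DISGUST = frozenset({9, 10, 17})
CONTEMPT = frozenset({14})

# Union of the AUs used to express negative moral judgment
NEGATIVE_MORAL_JUDGMENT = ANGER | DISGUST | CONTEMPT


def is_compound_of(candidate, *parents):
    """Hypothetical, simplified check: a compound uses only AUs from its
    subordinate categories, takes at least one AU from each of them, and is
    not identical to any single subordinate category."""
    candidate = frozenset(candidate)
    pool = frozenset().union(*parents)
    uses_only_parent_aus = candidate <= pool
    draws_from_each = all(candidate & p for p in parents)
    distinct_from_parents = all(candidate != p for p in parents)
    return uses_only_parent_aus and draws_from_each and distinct_from_parents


# Angrily disgusted (AUs 4, 7, 10, 17) as a compound of anger and disgust
print(is_compound_of({4, 7, 10, 17}, ANGER, DISGUST))            # True
# AUs 4, 14 and 17 (one combination reported for negation in Experiment 1)
print(is_compound_of({4, 14, 17}, ANGER, DISGUST, CONTEMPT))     # True
print(sorted(NEGATIVE_MORAL_JUDGMENT))  # [4, 7, 9, 10, 14, 17, 24]
```

Requiring at least one AU from each parent mirrors the description of compounds in Fig. 1D, where a compound includes a subset of AUs from each subordinate category.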

Figure 2.

We test the hypothesis that compounding the expressions of negative moral judgment (anger, disgust, contempt) yields a grammaticalized universal facial expression of negation. Shown on top are the three facial expressions of anger, disgust and contempt and their corresponding AUs. The AUs with an outer box are the ones that are combined to express negation (bottom image).

Our results demonstrate that the identified facial expression of negation is used as a nonverbal signal by people of distinct cultural upbringings, as a co-articulator in negative sentences in spoken languages and as a grammatical marker in signing. That is, in ASL, this facial expression of negation is used as a grammatical marker of negation alongside or in lieu of the manual sign for “no” and the headshake, which, until now, were the only other two substantiated ways to mark negation in signing (Pfau & Quer, 2010).1 This means that, in some cases, the only way to know whether a signed sentence has positive or negative polarity is to visually identify this facial expression of negation, because it is the only grammatical marker of negation. We call this facial expression the “not face.” To verify that the “not face” can be readily identified by observers, we show that it is distinct from all known facial expressions of emotion.

Additionally, syllable production in speech and signing falls within the theta band, between 3 and 8 Hz (theta oscillation) (Chandrasekaran et al., 2009; MacNeilage, 1998), i.e., the function describing the speech or sign movement of a syllable has a frequency of 3–8 Hz. This is true even of facial expressions used by primates, e.g., lip-smacking (Ghazanfar, Morrill, & Kayser, 2013). Increasing or decreasing these frequencies beyond these margins reduces the intelligibility of speech and of facial articulations in primates. This is thought to be due to oscillations in auditory cortex, which allow us to segment theta-band signals from non-coding distractors (Gross et al., 2013). Thus, the grammatical marker of negation identified herein should also be produced within this intelligible theta rhythm. We test this prediction and find that the production of the identified facial expression of negation exhibits this rhythm in all languages (spoken and signed). We also measure the production of the “not face” in spontaneous expressions of negation without speech or signing and find it to be within the same theta range. The fact that this facial expression of negation is produced within the theta band not only in signing and speech, but also in isolation as a nonverbal signal, demonstrates that this rhythm of production is not an adaptation to language but an intrinsic property of this compound facial expression.

In sum, to the authors’ knowledge, these results provide the first evidence of the evolution of grammatical markers through the expression of emotion. Our results suggest a possible route from expression of emotion to the recent evolution of human language.

Materials and Methods

Participants

The experiment design and protocol were approved by the Office of Responsible Research Practices at The Ohio State University (OSU). A total of 184 human subjects (94 females; mean age 23; SD 5.8) were recruited from the local community and received a small compensation in exchange for participation. This sample size is consistent with recent studies of emotion (Du et al., 2014). In addition, we analyzed the ASL dataset of Benitez-Quiroz et al. (2014), which includes 15 native or near-native signers of ASL. This is the largest annotated set available for the study of non-manual markers in ASL.

Data acquisition

Subjects participating in Experiments 1–2 were seated 1.2 meters from a Canon IXUS 110 camera, facing it frontally. Two 500-W photography hot lights were placed to the left and right of the midline (the line passing through the center of the subject and the camera). The light was diffused with two inverted umbrellas, i.e., the lights pointed away from the subject toward the center of the photography umbrellas, resulting in a diffuse lighting environment.

In Experiment 1, participants were asked to produce a facial expression of negation. Additionally, the experimenter suggested possible situations that may cause a negative answer and expression. Then, participants were photographed making their own facial expression of negation. Crucially, subjects were not asked to move specific muscles or facial components. Instead, they were encouraged to express negation as clearly as possible while minimizing head movement (i.e., expressing negation without a headshake). Photographs were taken at the apex of the expression and were full-color images of 4000 × 3000 pixels. In addition, a neutral facial expression of each subject was collected. A neutral expression is one where all the muscles of the face are relaxed (not active) and thus no AU is present. These images are available to researchers.

In Experiment 2, a computer screen was placed to the right of the camera to allow subjects to read and memorize a set of sentences in their native language. Specifically, a sentence was shown on the screen and participants were asked to memorize it. The experimenter instructed participants to indicate when they had memorized this sentence, at which point the screen went blank. Then, participants were asked to reproduce the memorized sentence as if speaking (frontally) to the experimenter, who remained behind the camera for the entirety of the experiment. After successful completion of this task, a new sentence was displayed on the computer monitor and the same procedure was repeated. The sentences used in this experiment are listed in the Supplemental Material file. These videos are available to researchers from the authors’ website or by request.

The data used in Experiment 3 are those collected by the authors in a previous study (Benitez-Quiroz et al., 2014). Importantly, the sentences used in Experiments 2 and 3 were the same. A list of these sentences can be found in the Supplemental Information file. The videos are available to researchers at http://cbcsl.ece.ohio-state.edu/downloads.html.

Data acquisition was always completed using the participant’s native language (English, Spanish, Mandarin Chinese or ASL).

Manual analysis of the facial expressions in Experiment 1

An AU is the fundamental action of an individual muscle or group of muscles that produces a visible change in the image of a face. For example, AU 4 is defined as the contraction of two muscles that, in isolation, lowers the eyebrows. To detect this movement in each of the images of Experiment 1, a FACS expert (coder) compared each image of the collected “not face” with an image of a neutral expression of the same subject. This comparison allowed the FACS coder to identify the AUs present in the image.

We manually FACS coded the data collected in Experiment 1. We identified AUs that were present in more than 70% of the subjects, since this level of consistency is known to indicate universality across subjects of distinct cultural upbringings (Du et al., 2014). This identified the AUs in Table 1. We then identified secondary AUs, i.e., AUs present in at least 30% of the subjects but used by a smaller number of participants only. Only one such secondary AU (AU 7) was found, used by 37.88% of the participants.

Table 1.

Consistently used Action Units (AUs) by subjects of different cultures when producing a facial expression of negation.

| AU # | Subjects using this AU (%) |
|---|---|
| 4 | 96.21 |
| 17 | 71.21 |
| 14 or 24 | 71.97 |
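The consistency thresholds described above (more than 70% for primary AUs, at least 30% for secondary AUs) can be sketched in Python as follows, assuming each subject's FACS coding is available as a set of AU numbers. The helper names and the ten-subject data set are hypothetical toy values, not the study's data. Because Table 1 reports AUs 14 and 24 jointly, the sketch also computes the share of subjects using at least one AU from a group.

```python
from collections import Counter


def au_percentages(coded_subjects):
    """Percentage of subjects whose coding includes each AU."""
    n = len(coded_subjects)
    counts = Counter(au for aus in coded_subjects for au in set(aus))
    return {au: 100.0 * c / n for au, c in counts.items()}


def any_of_percentage(coded_subjects, group):
    """Percentage of subjects using at least one AU in `group` (e.g., 14 or 24)."""
    hits = sum(1 for aus in coded_subjects if set(aus) & set(group))
    return 100.0 * hits / len(coded_subjects)


def split_by_consistency(percentages, primary=70.0, secondary=30.0):
    """Primary AUs: used by more than 70% of subjects.
    Secondary AUs: used by at least 30% (and at most 70%) of subjects."""
    primary_aus = sorted(au for au, p in percentages.items() if p > primary)
    secondary_aus = sorted(
        au for au, p in percentages.items() if secondary <= p <= primary
    )
    return primary_aus, secondary_aus


# Hypothetical toy coding for ten subjects (not the study's data)
TOY_SUBJECTS = [
    {4, 14, 17}, {4, 17, 24}, {4, 7, 17, 24}, {4, 14, 17}, {4, 7},
    {4, 17, 24}, {4, 14}, {4, 7, 17, 24}, {4, 17}, {17, 24},
]

pct = au_percentages(TOY_SUBJECTS)
print(split_by_consistency(pct))                  # ([4, 17], [7, 14, 24])
print(any_of_percentage(TOY_SUBJECTS, {14, 24}))  # 80.0
```

In this toy example neither AU 14 nor AU 24 passes the 70% threshold on its own, while the pair does, which illustrates why a grouped entry such as “14 or 24” can matter.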

Computer vision approach for AU detection

In Experiments 1 and 2, we FACS coded images and videos downloaded from the internet. We used Google Images with different combinations of search keywords: disagreement, negation, soccer, referee, missed penalty. We also used videos downloaded from YouTube using the same combinations of keywords. The number of images to be FACS coded was too large to be completed manually. Thus, we used two computer vision algorithms, one developed by our group (Benitez-Quiroz et al., 2016) and a commercial software (Emotient 5.2.1, http://emotient.com), to identify possible candidate frames with the AUs that define the “not face.” Computer vision algorithms are now accurate and reliable and approach or surpass human performance (Girard et al., 2014; O’Toole et al., 2012). To further improve the accuracy of the software, we manually assigned a set of frames with neutral expression as baseline for the detector. Furthermore, the images and frames identified by this software as including our target AUs were manually FACS coded to confirm the results. Identified images were disregarded if: i) the sequence did not contain the target AUs, ii) the sequence contained AUs with intensities lower than the target AUs, and iii) the sequence was visually difficult to discriminate because of its short duration (<100 ms).
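A minimal Python sketch of this post-detection filtering is given below, assuming each candidate segment has already been reduced to the set of manually confirmed AUs and the number of consecutive frames in which they appear. The `Segment` type and the function names are hypothetical. The sketch implements criterion i (target AUs present, using the “not face” definition of AUs 4 and 17 plus 14 or 24) and criterion iii (duration below 100 ms at the 29.97 frames/second rate given in the Methods); criterion ii is omitted because the text does not specify how AU intensities were compared.

```python
from dataclasses import dataclass

FRAME_RATE = 29.97       # frames per second (camera sample rate in the Methods)
MIN_DURATION_MS = 100.0  # segments shorter than this are discarded


@dataclass(frozen=True)
class Segment:
    aus: frozenset   # AUs confirmed by manual FACS coding
    n_frames: int    # consecutive frames showing the expression


def has_not_face_aus(aus):
    """Criterion i: AUs 4 and 17, plus AU 14 or AU 24 (or both)."""
    return {4, 17} <= aus and bool(aus & {14, 24})


def duration_ms(n_frames, fr=FRAME_RATE):
    return 1000.0 * n_frames / fr


def keep_segment(seg, fr=FRAME_RATE):
    if not has_not_face_aus(seg.aus):
        return False  # criterion i: target AUs missing
    if duration_ms(seg.n_frames, fr) < MIN_DURATION_MS:
        return False  # criterion iii: too short to discriminate visually
    return True


candidates = [
    Segment(frozenset({4, 17, 24}), 3),  # about 100.1 ms: kept
    Segment(frozenset({4, 17, 24}), 2),  # about 66.7 ms: too short
    Segment(frozenset({4, 24}), 10),     # AU 17 missing
]
print([keep_segment(s) for s in candidates])  # [True, False, False]
```

At this frame rate the 100 ms criterion amounts to discarding segments of one or two frames.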

Frequency of production

We computed the frequency of the target facial expression in the identified segments described above. We defined the frequency of the $i$th video fragment as $f_i = f_r / d_i$, where $d_i$ is the number of consecutive frames with the target facial expression and $f_r = 29.97$ frames/second is the camera’s sample rate.
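The frequency definition translates directly into code. The Python sketch below computes the frequency f_i of a segment from its length in frames and checks it against the 3–8 Hz theta band used in the Results; the function names are hypothetical.

```python
FRAME_RATE = 29.97       # camera sample rate, frames per second
THETA_BAND = (3.0, 8.0)  # theta band limits, Hz


def production_frequency(n_frames, fr=FRAME_RATE):
    """Frequency (Hz) of a segment: frame rate over the number of
    consecutive frames showing the target expression."""
    if n_frames <= 0:
        raise ValueError("a segment must contain at least one frame")
    return fr / n_frames


def in_theta_band(freq_hz, band=THETA_BAND):
    low, high = band
    return low <= freq_hz <= high


print(round(production_frequency(6), 3))  # 4.995
theta_lengths = [d for d in range(1, 31) if in_theta_band(production_frequency(d))]
print(theta_lengths)                      # [4, 5, 6, 7, 8, 9]
```

At 29.97 frames/second, only segments lasting 4 to 9 consecutive frames yield a frequency inside the theta band.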

Results

We study the hypothesis that a linguistic marker of negation has evolved through the expression of negative moral judgment. We provide evidence favoring this hypothesis by showing that this facial expression of negation is universally produced by people of different cultural backgrounds and, hence, defined by a unique subset of AUs originally employed to express negative moral judgment (Experiment 1), used as a co-articulator in speech (Experiment 2), employed as a linguistic non-manual marker in signing (Experiment 3), and produced at an intelligible theta rhythm (Experiment 4).

Experiment 1. Nonverbal expression of negation

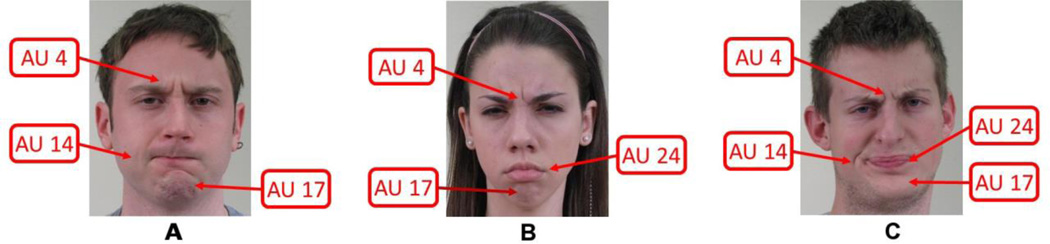

Participants were asked to produce a facial expression that clearly indicated negation, e.g., negating a statement. No instructions on which muscles to move were given. Participants had the opportunity to practice the expression in front of a mirror before picture acquisition. Pictures of the facial expression were taken at the apex (i.e., maximum deformation of the face). A total of 158 subjects of different cultural and ethnic backgrounds participated in this experiment. The mother tongue of the participants thus varied. All pictures were frontal view. Images were manually coded using FACS (Facial Action Coding System) (Ekman & Friesen, 1978) to identify the AUs used by each participant. Table 1 shows the percentage of AUs in these images. Participants consistently used AUs 4, 17 and either 14 or 24 or both, Fig. 3. AU 7 was sometimes added but by a significantly smaller percentage of subjects (37.88%). The consistency of use of AUs 4, 17 and either 14 or 24 is comparable to the consistency observed in AU usage in universal facial expressions of emotion (>70%) (Du et al., 2014).

Figure 3.

A few examples of people conveying negation with a facial expression. A–C. People from different cultures use AUs 4, 17 and either 14 or 24 or both.

As can be seen in Table 1 and Fig. 3, the AUs used to communicate negation are a compound of those employed to express negative moral judgment: AUs 4 and 24 come from the facial expression of anger, AU 17 from disgust, and AU 14 from contempt, Fig. 2. AU 4 lowers the inner corners of the eyebrows, AU 17 raises the chin, and AUs 14 and 24 (which are rarely co-articulated) press the upper and lower lips against one another. Since AUs 14 and 24 both result in pressing the lower and upper lips against one another, only one of them is necessary. AU 7, which tightens the eyelids and is commonly used to express anger, is used only by a small number of people.

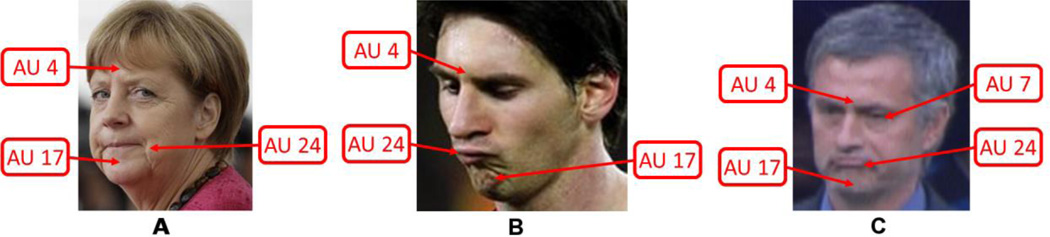

The consistency of AU production of the “not face” across participants of different cultural backgrounds suggests that this facial expression of negation is universally used across populations. To see if this finding generalizes beyond images collected in the lab, we used a computer vision algorithm (Methods) to find spontaneous samples of the “not face” on the web. Specifically, we automatically analyzed images and videos of sports. We selected sports because players usually negate the action of the referee (e.g., after a foul) or themselves (e.g., after missing an open shot) using nonverbal gestures. Thus, it is expected that some players will use the above-identified facial expression of negation. Additionally, these expressions are spontaneous, which allows us to determine whether the AU pattern seen in lab conditions is consistent with that used by people in naturalistic environments. Our search identified several instances of the “not face,” Fig. 4, demonstrating that the “not face” defined above is also observed in spontaneous expressions of negation.

Figure 4.

Samples of spontaneous expressions of negation. A. Chancellor Angela Merkel of Germany. B. A professional soccer player after missing an open shot. C. A coach disagreeing with the referee.

In summary, the results of this first experiment demonstrate the universality of this nonverbal gesture. Next, we study whether this expression is used as a co-articulator in speech across languages. The concept of negation changes somewhat as it is brought from nonverbal gesture into language, as its meanings expand to include those possible in language but not outside of language. The relevance here is that nonverbal negation such as that elicited in Experiment 1, when subjects are asked to produce a face equivalent to negation in speech, is limited to meanings like denying permission (You can’t do that), responding to requests or offers (No, I don’t want that), disagreements (No, I don’t want to), or information states (No, I don’t know the answer). Brought into language, negation also allows us to deny the truth of a statement (No, that’s not right; he didn’t do that) and to provide the speaker’s evaluation of another’s actions (Unfortunately, he failed the test), among other more complex meanings.

Experiment 2. Co-articulation of the not face in speech

We filmed the speech production of twenty-six native speakers of English, Spanish and Mandarin Chinese. Speech production of a total of 78 sentences, corresponding to four clause types and two polarities, was recorded. Specifically, nineteen of these sentences had negative polarity (see Supplemental Materials for a list of the sentences). The same sentences were collected in each language. Native speakers read one sentence at a time, memorized it, and then reproduced it from memory while looking frontally at the camera. Subjects were instructed to imagine they were having a conversation with the experimenter, who remained behind the camera for the entirety of the experiment. This allowed us to film more naturalistic speech. Filming was uninterrupted, meaning that subjects were filmed while reading, memorizing and uttering these sentences. Additionally, we collected subject responses to four questions that can yield a negative response (e.g., a study shows that tuition should increase 30% for in-state students, what do you think?; a list of these questions is in the Supplemental Materials). Subjects were filmed while responding to these questions.

All frames of these video sequences were FACS coded using a computer vision algorithm (Methods). Frames with a facial expression produced with only AUs 4, 17 and either 14 or 24 or both were identified. This approach found 41 instances of the “not face.” Twelve of these instances were produced during a negative sentence, seventeen were used by the subjects to indicate to the experimenter that they had forgotten the sentence and wanted to read it again, ten followed the incorrect reproduction of a sentence, and two occurred during the production of positive sentences by two English speakers, Table 2 (the Supplemental Video shows a few examples). The twelve examples of co-articulation of the “not face” and speech in negative sentences are observed in all clause types and were found in English, Spanish and Mandarin Chinese. In contrast, there were only two instances of the “not face” in positive sentences (a hypothetical and a yes/no question), both produced by English speakers. This corresponds to 0.13% of the positive sentences in our dataset, compared to the production of the “not face” in 2.83% of the negative sentences. We note that the “not face” was more commonly produced as a co-articulator by English speakers than by Spanish or Mandarin Chinese speakers. It is well known that Americans emphasize expressions more than people from other cultural backgrounds (Ekman, 1972; Matsumoto & Kupperbusch, 2001). A recent study (Rychlowska et al., 2015) shows that expressivity is correlated with the cultural diversity of the source migrants, suggesting a mechanism for exaggerated expressions in countries like the US. This seems to be the case for this expression of negation.

Table 2.

Usage of the “not face” in different languages. The second and third columns indicate the number of times the expression was produced during a negative or a positive sentence (co-articulator), respectively. The fourth column shows the number of times that participants used the “not face” to indicate an incorrect reproduction of the intended sentence (nonverbal signal). The fifth column indicates the number of times that participants forgot the memorized sentence; these cases were immediately followed by a request to re-read the sentence. No other instances of the “not face” were identified. Thus, the “not face” was used in 2.83% of the negative sentences, but in only 0.13% of the positive sentences.

| Language | Negative Sentence | Positive Sentence | Incorrect Performance | Forgotten Sentence |
|---|---|---|---|---|
| English | 8 | 2 | 4 | 5 |
| Spanish | 2 | 0 | 5 | 12 |
| Mandarin | 2 | 0 | 1 | 0 |
| Total | 12 | 2 | 10 | 17 |
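As a quick arithmetic check on Table 2, the short Python snippet below (a sketch; the variable names are ours) sums its rows and columns. The column totals reproduce the Total row and the 41 instances reported in the text, and the per-language sums show that co-articulated uses (negative plus positive sentences) are concentrated among English speakers.

```python
# Counts transcribed from Table 2
TABLE_2 = {
    "English":  {"negative": 8, "positive": 2, "incorrect": 4, "forgotten": 5},
    "Spanish":  {"negative": 2, "positive": 0, "incorrect": 5, "forgotten": 12},
    "Mandarin": {"negative": 2, "positive": 0, "incorrect": 1, "forgotten": 0},
}
COLUMNS = ("negative", "positive", "incorrect", "forgotten")

column_totals = {c: sum(row[c] for row in TABLE_2.values()) for c in COLUMNS}
language_totals = {lang: sum(row.values()) for lang, row in TABLE_2.items()}
coarticulated = {
    lang: row["negative"] + row["positive"] for lang, row in TABLE_2.items()
}

print(column_totals)  # {'negative': 12, 'positive': 2, 'incorrect': 10, 'forgotten': 17}
print(sum(column_totals.values()))  # 41
print(language_totals)  # {'English': 19, 'Spanish': 19, 'Mandarin': 3}
print(coarticulated)    # {'English': 10, 'Spanish': 2, 'Mandarin': 2}
```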

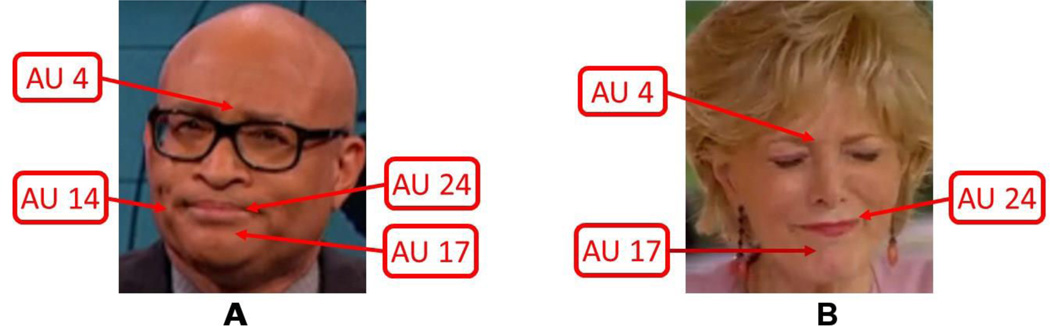

These results demonstrate the co-articulation of the “not face” in speech in negative sentences. Also, we did not find any difference in the production of the “not face” as a function of the sentence, clause or response. As before though, one may ask if these expressions are also found outside the lab. To study this, we used the computer vision algorithm (Methods) to identify spontaneous samples of the “not face” in 52 minutes of videos of American television. We identified two instances where people used the “not face” in negative sentences, Fig. 5. No instance of the “not face” was found in positive sentences.

Figure 5.

Sample frames of the “not face” in videos of American television.

Experiment 3. Grammatical (non-manual) marker in signing

In sign languages, some facial expressions serve as non-manual markers and thus have grammatical function (Baker & Padden, 1978). A linguistic non-manual marker can co-occur with a manual sign of the same grammatical function or can be the sole marker (without the manual component). Zeshan (2006) separates sign languages that can negate a sentence using only a non-manual marker (“non-manual dominant”) from those that require a manual sign (“manual dominant”). A typical non-manual marker of negation in ASL is a headshake, which changes the polarity of a sentence even in the absence of the manual sign for “not” (Veinberg & Wilbur, 1990; Zeshan, 2006; Pfau, 2015).

Our hypothesis is that the “not face” is used as a non-manual marker of negation in ASL. We used the computer vision algorithm (Methods) to identify occurrences of the “not face” in videos of fifteen ASL signers signing the same 78 sentences of Experiment 2 (see Supplemental Materials for a list of these sentences). We identified 114 instances of the “not face” in the signing of negative sentences (the Supplemental Video shows an example). This corresponds to 15.4% of the negative sentences we analyzed, comparable to the proportion of negative sentences that include the non-manual marker headshake (14.75% of the sentences in our database). Further, our analysis revealed that the “not face” was used to indicate negation in conjunction with the manual sign “not” (62.7%), in combination with the non-manual marker headshake (94.1%), and as the sole marker of negation (i.e., without a headshake or a manual marker of negation, 3.4%); because these categories overlap, the percentages do not sum to 100%. This result thus demonstrates that the “not face” performs a grammatical function in ASL.

In summary, the results described in Experiments 1–3 support the existence of a facial expression of negation that has been grammaticalized through the modification of facial expressions of emotion. However, a grammaticalized “not face” should also have a frequency of production similar to that seen in speech and signing, i.e., a theta oscillation (3–8 Hz). We test this prediction next.

Experiment 4. Frequency of production

Syllable production and mouthing in speech universally exhibit a theta oscillation (Chandrasekaran et al., 2009). Similarly, ASL syllables are signed in the theta range (Wilbur & Nolen, 1986). Cells in auditory cortex also oscillate at this frequency, which is thought to facilitate the segmentation of speech (theta-oscillation) signals from non-coding distractors oscillating at other frequencies (Gross et al., 2013). If the facial expression of negation identified above has indeed been grammaticalized and is thus interpreted by the brain as a grammatical marker, its rhythmicity should parallel that seen in speech and signing.

First, we measured the frequency of production of the “not face” in the videos of English, Spanish and Mandarin Chinese collected in our lab. The frequency is defined as the number of frames per second over the number of consecutive frames with the target expression and is thus given in Hz (Methods). The frequency of production of the “not face” was found to fall within the theta band, with the mean over all spoken languages at 5.68 Hz. This was also true for each of the languages independently. In English, the “not face” was produced at 4.33 Hz. Spanish speakers had a similar production frequency of 5.23 Hz, while Mandarin Chinese speakers had a slightly faster tempo of 7.49 Hz.
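The reported per-language values can be checked against the theta band with a few lines of Python. The text does not state whether the overall 5.68 Hz is averaged over instances or over languages; the sketch below only shows that every reported value lies within 3–8 Hz and that the unweighted mean of the three per-language values is also 5.68 Hz.

```python
# Per-language production frequencies reported above (Hz)
REPORTED_HZ = {"English": 4.33, "Spanish": 5.23, "Mandarin Chinese": 7.49}
THETA_LOW, THETA_HIGH = 3.0, 8.0

all_in_theta = all(THETA_LOW <= f <= THETA_HIGH for f in REPORTED_HZ.values())
unweighted_mean = sum(REPORTED_HZ.values()) / len(REPORTED_HZ)

print(all_in_theta)               # True
print(round(unweighted_mean, 2))  # 5.68
```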

We repeated the above procedure to compute the frequency of production of the “not face” in ASL. That is, we analyzed the video sequences of ASL described in Experiment 3. As in speech, the mean frequency of production was identified at 5.48 Hz and, hence, falls within the theta band as well.

Discussion

Face perception is an essential component of human cognition. People can recognize identity, emotion, intent and other non-verbal signals from faces. But facial expressions can also have grammatical function, which might be a precursor of the development of spoken languages. Surprisingly, little is known about this latter use of the face.

Herein, we identified a facial expression of negation that is consistently used across cultures as a nonverbal gesture and in languages as a co-articulator and a grammatical marker. Crucially, our results support a common origin of the “not face” in facial expressions of emotion, suggesting a possible evolutionary path for the development of nonverbal communication and the emergence of grammatical markers in human language. The results reported herein hence provide evidence favoring the hypothesis of an evolution of (at least some) grammatical markers through the expression of emotion.

Universality of the “not face.”

The present paper identified a facial expression of negation that is produced using the same AUs by people of different cultures, suggesting this expression is universal. We then studied the origins of this expression. Since the facial expressions used to indicate negative moral judgment are used to communicate negation and disagreement, we hypothesized that a facial expression of (grammatical) negation evolved through the expression of moral judgment. We found this to be the case, supporting the hypothesis that the “not face” evolved through the expression of negative moral judgment. Crucially, we found the exact same production in nonverbal gesturing (without speech) in people of distinct cultures, as a co-articulator in speech in several spoken languages, and as a grammatical marker in ASL. The extensive use of this expression further supports the universality hypothesis and, importantly, illustrates a possible evolutionary path from emotional expression to the development of a grammatical marker of polarity.

The rhythms of facial action production in the “not face” and syllable production in language are consistent

Communicative signals can be very difficult to analyze. To resolve this problem, it is believed that the brain takes advantage of statistical regularities in the signal. For example, speech production universally follows a theta-based rhythm (Chandrasekaran et al., 2009); this also holds true in signing (Wilbur & Nolen, 1986). Thus, to discriminate coding (i.e., important communicative) signals from non-coding distractors, the brain only needs to process signals that fall within the theta band (3 to 8 Hz). Supporting this theory, cells in auditory cortex are known to oscillate within this range, which may facilitate the segmentation of coding versus non-coding signals (Gross et al., 2013). Furthermore, lipreading in hearing adults (Calvert et al., 1997) and signing in congenitally deaf adults (Nishimura et al., 1999; Neville et al., 1998) activate auditory cortex; non-linguistic lip movement and other AUs are thought to be coded in the posterior Superior Temporal Sulcus (pSTS) instead (Srinivasan et al., 2016). Finally, nonverbal facial expressions in non-human primates are also produced within this theta range (Ghazanfar et al., 2013), suggesting that verbal and nonverbal communication signals are identified by detecting signals in this frequency band.

We found that the “not face” is also universally produced with this rhythm: this was true in English, Spanish, Mandarin Chinese and ASL. Thus, this facial expression would be identified as a coding segment of a communicative signal, whether it is used as a nonverbal signal or as a grammatical marker in human language. The same rhythm is observed consistently in the subordinate categories of the “not face,” suggesting that it is an intrinsic property of this facial expression.
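
Whether a given facial action follows this rhythm can be checked, in principle, by estimating the dominant frequency of its intensity time course and testing whether that frequency lies in the theta band. The sketch below does this with a naive DFT over a hypothetical AU-intensity trace; the 30 frames-per-second rate, the synthetic trace, and the function names are assumptions, and the study's own measurement procedure may differ.

```python
import cmath
import math


def dominant_frequency(series, fs):
    """Return the frequency (Hz) of the strongest non-zero DFT bin up to Nyquist."""
    n = len(series)
    mean = sum(series) / n
    centered = [v - mean for v in series]  # drop the 0 Hz (DC) offset before searching
    best_freq, best_power = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power = abs(coeff) ** 2
        if power > best_power:
            best_freq, best_power = k * fs / n, power
    return best_freq


def in_theta_band(freq_hz, low_hz=3.0, high_hz=8.0):
    """True if freq_hz lies in the 3-8 Hz theta band described in the text."""
    return low_hz <= freq_hz <= high_hz


# Hypothetical 3-second AU-intensity trace at 30 frames per second:
# a 4 Hz activation cycle plus a weaker 1 Hz drift.
FPS = 30
trace = [0.5 + 0.4 * math.sin(2 * math.pi * 4 * i / FPS) + 0.1 * math.sin(2 * math.pi * 1 * i / FPS)
         for i in range(3 * FPS)]
peak_hz = dominant_frequency(trace, FPS)
print(peak_hz, in_theta_band(peak_hz))  # 4.0 True
```

The search is restricted to bins 1 through n/2 because, for a real signal, the mirrored negative-frequency bins carry the same power; for short or noisy recordings, a windowed periodogram (e.g., Welch's method) would be a more robust choice.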

A linguistic non-manual marker of negation

Previous studies have primarily focused on the grammaticalization of the negative headshake (Pfau, 2015). However, the role and origins of this linguistic marker are still debated. Kendon (2002), for instance, reports that headshakes are widely, but not universally, used across cultures, with a backward head tilt being one frequent alternative. Importantly, there are no convincing data on the origins of the headshake: the proposal that it derives from food refusal in infants (Spitz, 1957) remains an unsubstantiated assumption (Pfau, 2015).

The results reported in the present study not only identify a non-manual marker of negation but also provide evidence of its possible evolution through the expression of emotion. This lays the foundation for a research program focusing more specifically on facial expressions, both with and without associated head movements, and on their origin in the expression of emotion and in sensory regulation.

These results are also important for the design of human-computer interfaces, where multimodal signals are essential for efficient algorithms (Turk, 2014); even for humans, for example, a conversation is easier to understand over video chat than over the phone. Recent neuroimaging results also suggest that redundant multimodal communication yields more efficient neural processing of language in humans (Weisberg et al., 2015).

From expression of emotion to grammatical function

The fact that some facial expressions of emotion have acquired grammatical function has implications for our understanding of how human language emerged (Berwick, Pietroski, Yankama, & Chomsky, 2011; Fitch, 2010), and it offers a unique opportunity to study the minimally required abstract properties of human linguistic knowledge (Chomsky, 1995; Pastra & Aloimonos, 2012), face perception (Martinez & Du, 2012), and emotion (Darwin, 1872). The present study, for example, suggests that the linguistic marker identified here evolved recently, as part of the evolution of human language. Expressions of emotion, however, evolved much earlier; the grammaticalization of facial expressions of emotion thus offers a plausible explanation for the apparently sudden development of grammatical markers (Bolhuis, Tattersall, Chomsky, & Berwick, 2014; Hauser et al., 2002).


Acknowledgments

This research was supported by the National Institutes of Health, grants R01 EY 020834 and R01 DC 014498.

Footnotes

Other authors have studied additional non-manual markers, in particular the eyebrows (Veinberg & Wilbur, 1990; Weast, 2008; Gökgöz, 2011), which could be a component of the “not face.”

Author Contributions

AMM conceived the study. AMM, CFBQ and RBW designed the experiment. CFBQ collected the data. CFBQ, AMM and RBW analyzed the data and wrote the paper.

References

- Baker C, Padden C. Focusing on the nonmanual components of American Sign Language. In: Siple P, editor. Understanding language through sign language research. New York: Academic Press; 1978.

- Bartlett M, Littlewort G, Frank M, Lee K. Automatic decoding of facial movements reveals deceptive pain expressions. Current Biology. 2014;24(7):738–743. doi: 10.1016/j.cub.2014.02.009.

- Benitez-Quiroz CF, Gokgoz K, Wilbur RB, Martinez AM. Discriminant features and temporal structure of nonmanuals in American Sign Language. PLoS ONE. 2014;9(2):e86268. doi: 10.1371/journal.pone.0086268.

- Benitez-Quiroz CF, Srinivasan R, Martinez AM. EmotioNet: An accurate, real-time algorithm for the automatic annotation of a million facial expressions in the wild. 2016.

- Berwick RC, Pietroski P, Yankama B, Chomsky N. Poverty of the stimulus revisited. Cognitive Science. 2011;35(7):1207–1242. doi: 10.1111/j.1551-6709.2011.01189.x.

- Bolhuis JJ, Tattersall I, Chomsky N, Berwick RC. How could language have evolved? PLoS Biology. 2014;12(8):e1001934. doi: 10.1371/journal.pbio.1001934.

- Calvert GA, Bullmore ET, Brammer M, Campbell R, Williams SC, McGuire P, Woodruff PWR, Iversen SD, David A. Activation of auditory cortex during silent lipreading. Science. 1997;276(5312):593–596. doi: 10.1126/science.276.5312.593.

- Chandrasekaran C, Trubanova A, Stillittano S, Caplier A, Ghazanfar AA. The natural statistics of audiovisual speech. PLoS Computational Biology. 2009;5(7):e1000436. doi: 10.1371/journal.pcbi.1000436.

- Chomsky N. The minimalist program. MIT Press; 1995.

- Darwin C. The expression of the emotions in man and animals. London: John Murray; 1872.

- Du S, Tao Y, Martinez AM. Compound facial expressions of emotion. Proceedings of the National Academy of Sciences. 2014;111(15):E1454–E1462. doi: 10.1073/pnas.1322355111.

- Ekman P. Universals and cultural differences in facial expressions of emotion. In: Cole J, editor. Nebraska Symposium on Motivation. Lincoln: University of Nebraska Press; 1972. pp. 207–283.

- Ekman P, Friesen WV. Facial action coding system: A technique for the measurement of facial movement. Palo Alto, California: Consulting Psychologists Press; 1978.

- Ekman P, Sorenson ER, Friesen WV. Pan-cultural elements in facial display of emotions. Science. 1969;164:86–88. doi: 10.1126/science.164.3875.86.

- Fitch WT. The evolution of language. Cambridge University Press; 2010.

- Ghazanfar AA, Morrill RJ, Kayser C. Monkeys are perceptually tuned to facial expressions that exhibit a theta-like speech rhythm. Proceedings of the National Academy of Sciences. 2013;110(5):1959–1963. doi: 10.1073/pnas.1214956110.

- Girard JM, Cohn JF, Jeni LA, Sayette MA, De la Torre F. Spontaneous facial expression in unscripted social interactions can be measured automatically. Behavior Research Methods. 2014:1–12. doi: 10.3758/s13428-014-0536-1.

- Gökgöz K. Negation in Turkish Sign Language: The syntax of nonmanual markers. Sign Language & Linguistics. 2011;14(1):49–75.

- Greene J, Haidt J. How (and where) does moral judgment work? Trends in Cognitive Sciences. 2002;6(12):517–523. doi: 10.1016/s1364-6613(02)02011-9.

- Gross J, Hoogenboom N, Thut G, Schyns P, Panzeri S, Belin P, Garrod S. Speech rhythms and multiplexed oscillatory sensory coding in the human brain. PLoS Biology. 2013;11(12):e1001752. doi: 10.1371/journal.pbio.1001752.

- Hauser MD, Chomsky N, Fitch WT. The faculty of language: what is it, who has it, how did it evolve? Science. 2002;298(5598):1569–1579. doi: 10.1126/science.298.5598.1569.

- Kendon A. Some uses of the headshake. Gesture. 2002;2(2):147–182.

- Klima E, Bellugi U. The signs of language. Cambridge, MA: Harvard University Press; 1979.

- Lee DH, Susskind JM, Anderson AK. Social transmission of the sensory benefits of eye widening in fear expressions. Psychological Science. 2013;24(6):957–965. doi: 10.1177/0956797612464500.

- Liddell S. An introduction to relative clauses in ASL. In: Siple P, editor. Understanding language through sign language research. New York: Academic Press; 1978.

- MacNeilage PF. The frame/content theory of evolution of speech production. Behavioral and Brain Sciences. 1998;21(4):499–511. doi: 10.1017/s0140525x98001265.

- Martinez AM, Du S. A model of the perception of facial expressions of emotion by humans: Research overview and perspectives. The Journal of Machine Learning Research. 2012;13(1):1589–1608.

- Matsumoto D, Kupperbusch C. Idiocentric and allocentric differences in emotional expression, experience, and the coherence between expression and experience. Asian Journal of Social Psychology. 2001;4(2):113–131.

- Neville HJ, Bavelier D, Corina D, Rauschecker J, Karni A, Lalwani A, Braun A, Clark V, Jezzard P, Turner R. Cerebral organization for language in deaf and hearing subjects: biological constraints and effects of experience. Proceedings of the National Academy of Sciences. 1998;95(3):922–929. doi: 10.1073/pnas.95.3.922.

- Nishimura H, Hashikawa K, Doi K, Iwaki T, Watanabe Y, Kusuoka H, Nishimura T, Kubo T. Sign language ‘heard’ in the auditory cortex. Nature. 1999;397(6715):116. doi: 10.1038/16376.

- Oatley K, Keltner D, Jenkins JM. Understanding emotions. Blackwell Publishing; 2006.

- O'Toole AJ, An X, Dunlop J, Natu V, Phillips PJ. Comparing face recognition algorithms to humans on challenging tasks. ACM Transactions on Applied Perception (TAP). 2012;9(4):16.

- Pastra K, Aloimonos Y. The minimalist grammar of action. Philosophical Transactions of the Royal Society B: Biological Sciences. 2012;367(1585):103–117. doi: 10.1098/rstb.2011.0123.

- Pfau R. The grammaticalization of headshakes: From head movement to negative head. In: Smith ADM, Trousdale G, Waltereit R, editors. New directions in grammaticalization research. Amsterdam: Benjamins; 2015. pp. 9–50.

- Pfau R, Quer J. Nonmanuals: Their prosodic and grammatical roles. In: Brentari D, editor. Sign languages. A Cambridge language survey. Cambridge University Press; 2010. pp. 381–402.

- Reilly JS, McIntire M, Bellugi U. The acquisition of conditionals in American Sign Language: Grammaticized facial expressions. Applied Psycholinguistics. 1990;11(4):369–392.

- Rozin P, Lowery L, Imada S, Haidt J. The CAD triad hypothesis: a mapping between three moral emotions (contempt, anger, disgust) and three moral codes (community, autonomy, divinity). Journal of Personality and Social Psychology. 1999;76(4):574. doi: 10.1037//0022-3514.76.4.574.

- Rychlowska M, Miyamoto Y, Matsumoto D, Hess U, Gilboa-Schechtman E, Kamble S, Muluk H, Masuda T, Niedenthal PM. Heterogeneity of long-history migration explains cultural differences in reports of emotional expressivity and the functions of smiles. Proceedings of the National Academy of Sciences. 2015;112(19):E2429–E2436. doi: 10.1073/pnas.1413661112.

- Shweder R, Much N, Mahapatra M, Park L. Divinity and the “big three” explanations of suffering. In: Brandt A, Rozin P, editors. Morality and health. Vol. 119. New York: Routledge; 1997. pp. 119–169.

- Spitz RA. No and yes: On the genesis of human communication. 1957.

- Srinivasan R, Golomb JD, Martinez AM. A neural basis of facial action recognition in humans. Journal of Neuroscience. 2016. doi: 10.1523/JNEUROSCI.1704-15.2016.

- Turk M. Multimodal interaction: A review. Pattern Recognition Letters. 2014;36:189–195.

- Veinberg SC, Wilbur RB. A linguistic analysis of the negative headshake in American Sign Language. Sign Language Studies. 1990;68:217–244.

- Weisberg J, McCullough S, Emmorey K. Simultaneous perception of a spoken and a signed language: The brain basis of ASL-English code-blends. Brain and Language. 2015;147:96–106. doi: 10.1016/j.bandl.2015.05.006.

- Weast T. Questions in American Sign Language: A quantitative analysis of raised and lowered eyebrows. Ph.D. dissertation. Arlington, TX: University of Texas at Arlington; 2008.

- Zeshan U. Interrogative and negative constructions in sign languages. Nijmegen: Ishara Press; 2006.
