Abstract

Computational modeling and associated methods have greatly advanced our understanding of cognition and neurobiology underlying complex behaviors and psychiatric conditions. Yet, no computational methods have been successfully translated into clinical settings. This review discusses three major methodological and practical challenges (A. precise characterization of latent neurocognitive processes, B. developing optimal assays, C. developing large-scale longitudinal studies and generating predictions from multi-modal data) and potential promises and tools that have been developed in various fields including mathematical psychology, computational neuroscience, computer science, and statistics. We conclude by highlighting a strong need to communicate and collaborate across multiple disciplines.

Introduction

Computational modeling has greatly contributed to understanding the cognitive processes underlying our decision-making. By providing a mechanistic account of the processes, computational modeling allows us to generate quantitative predictions and test them in a precise manner. Computational modeling also provides a framework for studying the neural mechanisms of complex behaviors. Ever since reinforcement learning models were shown to describe phasic activity changes in midbrain dopamine neurons well [1], computational modeling has been widely combined with electrophysiological data and human functional magnetic resonance imaging (fMRI) signals to identify brain regions implementing specific cognitive processes [2,3]. A systematic line of research based on the computational framework suggests that the brain has multiple systems for decision-making [4,5]: the Pavlovian system, which sets a strong prior on our actions when we are faced with rewards or punishments, and the instrumental system, which is further divided into habitual (i.e., model-free; efficient but inflexible) and goal-directed (i.e., model-based; effortful but flexible) systems. While the Pavlovian system has traditionally been regarded as purely model-free, ample new evidence suggests that Pavlovian learning might also involve model-based evaluation [6].

There is a growing consensus that computational modeling can also be helpful to understand psychiatric disorders. Computational models can break maladaptive behaviors into distinct cognitive components, and the model parameters associated with the components can be used to understand the latent cognitive sources of their deficits. Therefore, computational modeling can provide a useful framework in understanding comorbidity among psychiatric disorders in a systematic way. Such a framework can specify psychiatric conditions with basic dimensions of neurocognitive functioning and offer a novel approach to assess and diagnose psychiatric patients [7–10].

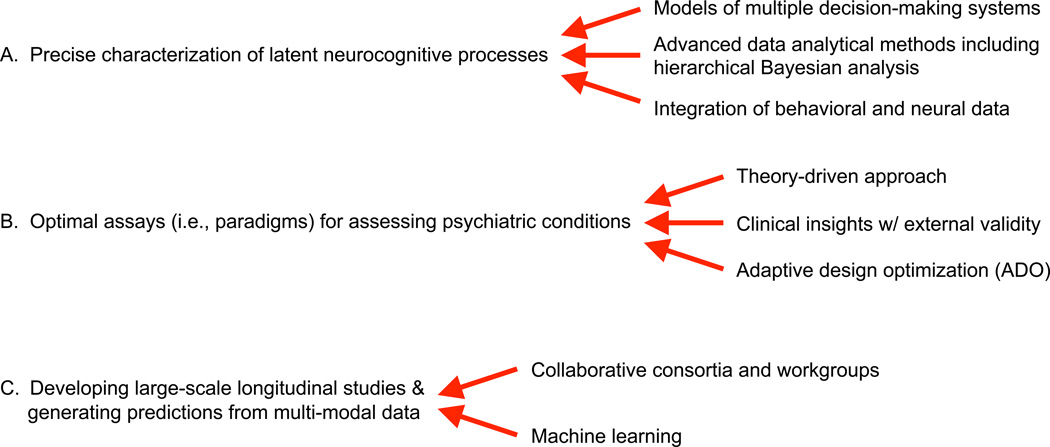

Despite the growing enthusiasm, no computational assays or methods have influenced clinical practice yet. Several major methodological and practical challenges must be solved before computational modeling tools can be translated into clinical practice. Among many others, this article focuses on the following challenges, as summarized in Figure 1: (A) precise characterization of latent neurocognitive processes, (B) development of optimal assays for assessing psychiatric conditions, and (C) development of large-scale longitudinal studies and generation of predictions using multi-modal and multi-dimensional data. In the following sections, we provide a general overview of each challenge and discuss how we can potentially address them. Our review focuses on computational modeling of human decision-making and fMRI studies, which are most relevant to the challenges we consider. We also briefly review how mathematical psychologists and computational neuroscientists have independently attempted to understand psychiatric disorders using computational methods. We hope this article will help researchers in each field identify strengths of the other field and stimulate further communication and interaction between the fields. Some important topics, including biophysically-based models, are not addressed in this article, and readers can refer to existing review papers on these topics [11–13]. Because of space limits, our review also excludes a survey of model comparison methods and mathematical details of Bayesian data analysis, which are covered in other reviews [14–16].

Figure 1.

Promising approaches to address three major challenges for translating computational tools into clinical practice

A. Precise characterization of latent neurocognitive processes

Early applications of computational modeling to psychiatric populations were initiated by mathematical psychologists. Traditionally they focused on identifying cognitive processes embedded in a cognitive or decision-making task. Mathematical psychologists including Batchelder, Townsend, Ratcliff, Neufeld, and Treat advocated, and empirically demonstrated, that computational modeling can be used to assess clinical populations [17]. The computational approach began to receive additional attention as Busemeyer and Stout [18] developed the Expectancy-Valence Learning (EVL) model for the Iowa Gambling Task (IGT) and applied the EVL to several clinical populations [19]. The model has subsequently been revised to improve its performance, which led to a newer version called the Prospect Valence Learning (PVL) model [20,21]. Despite criticism of the IGT for its complicated design and performance heterogeneity [22], the PVL model showed good model fits and simulation performance [e.g., 23], and it has been applied to several populations with substance dependence [for a review and detailed findings see, 24]. For example, modeling approaches on the IGT revealed reduced loss aversion among heroin users compared to healthy individuals, which was robust across all models we tested [23]. Computational models have also been used to decompose performance of clinical populations on the Balloon Analogue Risk Task (BART) [25], the Go/Nogo task [26], and speeded choice-response time tasks [27].
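To make the idea of decomposing task performance into model parameters concrete, the following is a minimal sketch of a PVL-style model with a delta learning rule, as this family of models is commonly described in the literature. The function and parameter names (`A` for learning rate, `alpha` for outcome sensitivity, `lam` for loss aversion, `c` for choice consistency) are our own labels, and the assumption that payoffs have been rescaled beforehand is ours; it is an illustration, not the implementation used in the cited studies.

```python
import math

def pvl_utility(outcome, alpha, lam):
    """Prospect-style utility: power function for gains, loss-averse for losses."""
    if outcome >= 0:
        return outcome ** alpha
    return -lam * (abs(outcome) ** alpha)

def softmax(values, theta):
    """Choice probabilities with sensitivity theta (shifted for numerical stability)."""
    scaled = [theta * v for v in values]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def pvl_delta_loglik(choices, outcomes, A, alpha, lam, c, n_decks=4):
    """Log-likelihood of a choice sequence under a PVL-delta-style model.

    choices:  deck index (0 .. n_decks-1) chosen on each trial
    outcomes: net payoff on each trial (assumed to be rescaled, e.g. divided by 100)
    """
    theta = 3.0 ** c - 1.0          # choice sensitivity derived from consistency c
    ev = [0.0] * n_decks            # expectancies start at zero
    loglik = 0.0
    for deck, x in zip(choices, outcomes):
        p = softmax(ev, theta)      # probabilities before seeing this trial's outcome
        loglik += math.log(p[deck])
        # Delta rule: move the chosen deck's expectancy toward the experienced utility.
        ev[deck] += A * (pvl_utility(x, alpha, lam) - ev[deck])
    return loglik
```

In this sketch, a loss-aversion parameter `lam` below 1 means losses are weighted less than equal-sized gains, which is the kind of parameter-level difference the group comparisons above refer to.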

Independently, computational neuroscientists including Montague, Dayan, Dolan, Friston, and colleagues have worked to build computational accounts of (ab)normal cognition and its biological underpinnings (a.k.a. Computational Psychiatry) [8,28,29]. They built computational frameworks and used methods such as model-based fMRI [3] or model-based electroencephalography [30], in which internal states predicted by computational models are used to identify brain regions that presumably implement a particular cognitive/computational process. Many applications to psychiatric disorders [31–34] have been built around the Bayesian decision framework, which offers a Bayesian account of decision-making [35]. In addition, recent studies using model-based fMRI have significantly enhanced our understanding of the neurobiological mechanisms underlying reinforcement learning and decision-making in the brain [for recent reviews see, 12,36].
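As an illustration of what "internal states predicted by computational models" can look like in practice, the sketch below derives trial-by-trial reward prediction errors from a simple delta-rule (Rescorla-Wagner-style) learner and mean-centers them so that they could serve as a parametric regressor. The function names and the two-option task are hypothetical, and the subsequent steps of an actual model-based fMRI analysis (e.g., convolution with a hemodynamic response function and regression against the imaging signal) are deliberately left out.

```python
def rw_prediction_errors(choices, rewards, learning_rate, n_options=2):
    """Trial-by-trial reward prediction errors from a delta-rule learner."""
    values = [0.0] * n_options      # expected value of each option
    errors = []
    for choice, reward in zip(choices, rewards):
        delta = reward - values[choice]         # prediction error on this trial
        errors.append(delta)
        values[choice] += learning_rate * delta # update only the chosen option
    return errors

def mean_center(xs):
    """Center a regressor so that it is orthogonal to a constant term."""
    m = sum(xs) / len(xs)
    return [x - m for x in xs]
```

Because the prediction errors depend on the learning rate, the quality of the resulting regressor depends on how well that parameter is estimated for each individual, which motivates the estimation methods discussed next.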

Once we build a computational model, the next important step is parameter estimation. Getting accurate estimates of the key model parameters is critical for phenotyping computational processes precisely. Currently the state of the art for parameter estimation is hierarchical Bayesian analysis (HBA), which pools information across individuals and captures similarities and differences among individuals in a hierarchical way [15,37]. Hierarchical methods are particularly useful when the amount of information is small or insufficient for precise parameter estimation at the individual level. Hierarchical methods, whether Bayesian or non-Bayesian [e.g., 38], often lead to very similar parameter estimates (personal communication). Because assumptions and priors for HBA and non-Bayesian hierarchical methods are different, the choice of a hierarchical method may depend on the computational model concerned. However, we believe HBA has several advantages over non-Bayesian methods, such as its more flexible assumptions and its estimation of full posterior distributions instead of point estimates (thus providing maximum information about parameter estimates). HBA also allows us to compare clinical and non-clinical groups by comparing their posterior distributions [15,23]. Recent development of tools [39] and tutorials [15,16] has also facilitated its usage.
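The pooling described above can be written down as a generic two-level model. The notation below is ours and is only a sketch: $\theta_i$ stands for a (suitably transformed) model parameter of individual $i$, $y_{it}$ for that individual's response on trial $t$, and the normal group-level distribution is an illustrative assumption rather than a requirement of HBA.

```latex
\begin{align}
\mu_\theta &\sim p(\mu_\theta), \qquad \sigma_\theta \sim p(\sigma_\theta)
  && \text{(group-level hyperpriors)} \\
\theta_i \mid \mu_\theta, \sigma_\theta &\sim \mathcal{N}(\mu_\theta, \sigma_\theta^2),
  && i = 1, \dots, N \\
y_{it} \mid \theta_i, y_{i,1:t-1} &\sim p(y_{it} \mid \theta_i, y_{i,1:t-1}),
  && t = 1, \dots, T_i \\
p(\mu_\theta, \sigma_\theta, \theta_{1:N} \mid y) &\propto
  p(\mu_\theta)\, p(\sigma_\theta) \prod_{i=1}^{N}
  \Big[ \mathcal{N}(\theta_i \mid \mu_\theta, \sigma_\theta^2)
  \prod_{t=1}^{T_i} p(y_{it} \mid \theta_i, y_{i,1:t-1}) \Big]
\end{align}
```

Under this formulation, each individual's estimate is informed both by that individual's own data and by the group-level distribution, so individuals with little data are pulled toward the group mean. Fitting separate hyperparameters to a clinical and a non-clinical group then allows the posterior distributions of the two group means to be compared directly.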

While HBA is already widely accepted and used in cognitive science and mathematical psychology, hierarchical methods including HBA have only recently been adopted in the computational/cognitive neuroscience field, and non-hierarchical maximum likelihood estimation (MLE) is still often used. Using simulated behavioral data and actual behavioral/fMRI data, Ahn et al. [40] empirically showed how HBA is superior to non-hierarchical MLE methods. While individual MLE estimates, which are based only on each individual’s data, were often driven toward parameter bounds (e.g., learning rate = 0), HBA reliably recovered the true parameters of simulated data. Using HBA also improved the fMRI signal compared to using individual MLE (i.e., higher peak activation and larger regions of activation), presumably because HBA improved the characterization of individual differences.

There still remain major challenges for HBA and associated hierarchical methods. Assuming a single hyper-group across a modest number of individuals (e.g., ~50) might be valid [cf. 41], but the assumption might be invalid when fitting large samples (e.g., ~1000) with a single hyper-group [42]. Non-parametric Bayesian methods [43], which let the data determine the number of hyper-groups as well as group assignment and estimate parameters simultaneously, could be a great solution, but they are computationally very challenging. Alternatively, Bayesian hierarchical mixture approaches [e.g., 44], or HBA of each subgroup that is clustered by behavioral indices, might provide practical compromises. Another important direction is to integrate neural and behavioral measures into a single hierarchical Bayesian framework so that neural and behavioral data can mutually constrain each other and simultaneously inform the parameter estimates of a computational model [45].

B. Developing assays for improving the assessment of psychiatric conditions

Behavioral performance on a decision-making task entails multiple cognitive processes (e.g., valuation of reward/risk, outcome evaluation, action selection) and interaction of multiple decision-making systems including Pavlovian and instrumental (habitual and goal-directed) systems [46]. Thus, it is important to develop and employ assays (i.e., tasks) that will allow us to maximally decompose the underlying processes and reveal the interactions of the decision-making systems.

Historically, there have been two main approaches. Clinicians developed or adopted emotionally engaging tasks based on their clinical experience and intuitions to mimic naturalistic risk-taking behaviors. The tasks include the delay discounting task (DDT) [47], the IGT [48], the BART [49], and the Cambridge Gambling Task [50], which had widespread success in classifying clinical populations from healthy individuals (i.e., external validity). However, the complexity of the tasks makes it challenging to decompose their cognitive processes at the behavioral level. Behavioral economists and computational neuroscientists, on the other hand, typically start with different goals: Understanding specific constructs (e.g., valuation of reward) or the interactions among the decision-making systems [6] is a primary goal, and tasks are designed accordingly. Examples include decision problems between two monetary gambles [for a review see, 51], decision-making tasks [52–54] teasing apart the contribution of goal-directed and habitual systems, and the orthogonalized Go/Nogo task [55] examining the interactions between Pavlovian and instrumental systems. While early monetary decision-making tasks probing specific reward/risk constructs had limited success in distinguishing clinical populations from healthy controls [51], recent studies using theory-guided decision-making tasks have revealed specific cognitive processes and neural correlates associated with psychiatric conditions [54,56,57].

Although past successes in applying decision-making probes to psychiatric patients are encouraging, the assays still need improvement. Because clinical patients may have reduced cognitive capacity and attention span, some of the decision-making tasks are too long or too cognitively challenging to be used in clinical settings. Thus, it is possible that some of the decision-making deficits could be attributed to patients' general intelligence or working memory capacity, rather than to the targeted neurocognitive processes. We believe both clinical experience and up-to-date knowledge of the neuro-circuitry of specific psychiatric conditions can guide us to design tasks that are not only emotionally engaging [51] but also decomposable.

A promising computational approach to shorten the length of a task or improve the efficiency of information gain is the use of adaptive design optimization (ADO) [58]. In a typical experiment, when designing a task for probing specific processes, researchers make a priori decisions on experimental design (e.g., number of trials, amount of reward/punishment in gambling tasks, delays between stimuli). Then, they identify the best-fitting model and estimate its model parameters (see section A for more details on these issues). In contrast, ADO finds the optimal design “on the fly” that maximizes information gain based on data collected from preceding trials, presents the optimized stimuli on the current trial, observes outcomes, and updates the priors for model parameters into posteriors using a Bayesian framework. Recent advances in computer hardware and algorithms make it tractable to do Bayesian updating within a reasonable time scale (e.g., within 1 s). ADO has already been successfully applied to identifying best-fitting models in gambling paradigms [59] and DDT [60], as well as to optimally assess visual acuity using the contrast sensitivity function [61]: The studies demonstrated that ADO substantially reduced the number of trials required to do model comparisons or parameter estimation, or dramatically increased test-retest reliability compared to a non-ADO procedure [61]. These results suggest that implementation of ADO-based experiments might benefit psychiatric research, especially studies utilizing neuroimaging methods, by saving time and scanning cost.
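The trial-by-trial loop described above (choose the most informative design, observe a response, update beliefs) can be sketched with a grid approximation. The example below is a simplified illustration and not the procedure of the cited studies: it assumes a hyperbolic delay discounting model with a single unknown discount rate `k`, a fixed choice sensitivity `beta`, a hypothetical set of candidate designs, and a simulated participant.

```python
import math
import random

def p_choose_later(k, design, beta=0.2):
    """Probability of choosing the larger-later reward under hyperbolic discounting."""
    ss_amount, ll_amount, delay = design
    v_later = ll_amount / (1.0 + k * delay)   # discounted value of the delayed reward
    return 1.0 / (1.0 + math.exp(-beta * (v_later - ss_amount)))

def entropy(p):
    """Binary entropy in nats; defined as 0 at p = 0 or p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log(p) - (1.0 - p) * math.log(1.0 - p)

def expected_information_gain(prior, k_grid, design):
    """Mutual information between the next choice and k for one candidate design."""
    probs = [p_choose_later(k, design) for k in k_grid]
    marginal = sum(w * p for w, p in zip(prior, probs))
    return entropy(marginal) - sum(w * entropy(p) for w, p in zip(prior, probs))

def update(prior, k_grid, design, chose_later):
    """Bayes rule on the grid: posterior is proportional to prior times likelihood."""
    post = []
    for w, k in zip(prior, k_grid):
        p = p_choose_later(k, design)
        post.append(w * (p if chose_later else 1.0 - p))
    total = sum(post)
    return [w / total for w in post]

def run_ado(true_k, n_trials=30, seed=0):
    """Simulate an adaptive session for a participant with discount rate true_k."""
    rng = random.Random(seed)
    k_grid = [10 ** (-4 + 4 * i / 49) for i in range(50)]   # 1e-4 .. 1, log-spaced
    # Hypothetical design space: (immediate amount, delayed amount, delay in days).
    designs = [(ss, 100.0, d) for ss in range(5, 100, 5)
               for d in (1, 7, 30, 90, 180, 365)]
    posterior = [1.0 / len(k_grid)] * len(k_grid)            # flat prior on the grid
    for _ in range(n_trials):
        best = max(designs,
                   key=lambda d: expected_information_gain(posterior, k_grid, d))
        chose_later = rng.random() < p_choose_later(true_k, best)  # simulated response
        posterior = update(posterior, k_grid, best, chose_later)
    return k_grid, posterior
```

In this sketch the design that maximizes expected information gain tends to be one for which the current posterior is most divided about the upcoming choice, which is why adaptive procedures can make trials feel increasingly difficult for participants.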

ADO methods are new, and there remain several empirical issues to be addressed. ADO procedures naturally tend to make decisions maximally difficult over trials and change the statistics of the choice set (e.g., the range of gains and losses). Therefore, during ADO-based experiments, participants might be more likely to make random choices with difficult options, behave very differently [62], or experience more negative feelings than during non-ADO experiments. To address these concerns, several adjustments have been used: inserting easy trials between tough trials [61], stopping data collection when the same small subset of trials is presented repeatedly, and reducing the number of required trials for convergence by running simulations and identifying an optimal set of choices prior to experimentation [60]. However, additional studies are needed to determine whether ADO produces systematic differences in choice patterns by examining the inter- and intra-personal differences across ADO and non-ADO sessions.

C. Developing large-scale longitudinal studies and generating predictions using multi-modal and multi-dimensional data

From a clinical perspective, the efforts and approaches listed above eventually boil down to a question: Can we predict clinical outcomes using these neuro-computational markers? To make predictions that generalize to new samples and identify predictors of a psychiatric condition, large sample sizes using longitudinal studies are needed. There already exist several consortia and working groups that provide imaging and genetic data from a large number of individuals, including the Enhancing Neuroimaging Genetics through Meta-Analysis (ENIGMA; http://enigma.ini.usc.edu), the IMAGEN (http://www.imagen-europe.com), the Pediatric Imaging, Neurocognition, and Genetics (PING; http://pingstudy.ucsd.edu) Study, and Brain Genomics Superstruct (https://dataverse.harvard.edu/dataverse/GSP) projects. However, sample sizes or clinical phenotypes publicly available through the consortia are still limited, and there remains a critical need to develop large-scale longitudinal studies in various psychiatric conditions. In drug addiction, the National Institute on Drug Abuse (NIDA) is launching the Adolescent Brain Cognitive Development (ABCD) Study (http://addictionresearch.nih.gov/adolescent-brain-cognitive-development-study), which will longitudinally track 10,000 adolescents over 10 years, and the study will include substance use, neuroimaging, and genomic measures. The NIDA will share the database through their data repository and we anticipate it will allow researchers interested in drug addiction to evaluate the utility of their tools.

Such a large longitudinal dataset contains many millions of variables; thus consideration of multiple comparisons would be essential to minimize Type I and II errors in associated classical statistics. Recent reports suggest that cross-validated machine learning may well serve as a promising approach for effective analysis of such big datasets [63,64]. However, how to integrate neural (e.g., genomic variations and brain-based measures) and behavioral (e.g., model parameters and survey scores) measures in a principled way for generating predictions is still a topic of active research. For example, Whelan et al. [65] conducted a longitudinal study aiming to predict future alcohol misuse by using regression-based machine learning. The study incorporated a wide spectrum of measures including genetic variations, functional/structural MRI, gray matter volume, cognitive tasks, personality surveys, and family history. Although their machine learning method (penalized logistic regression) produced generalizable and relatively accurate predictions, the approach essentially treated all modalities and measures within each modality equally, whereas in reality there is a complex interplay among genetic, environmental, and other unidentified factors [66]. There is a strong need to identify multivariate methods that are suited for this type of multi-modal dataset.
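To illustrate what cross-validated, penalized prediction involves, the following is a minimal self-contained sketch. It is not the pipeline of the cited study: it swaps in a plain ridge (L2) penalty fitted by gradient descent, uses synthetic data in which only two of ten features carry signal, and treats all features as a single block, which is exactly the limitation noted above. All function names, the penalty value, and the simulation settings are assumptions made for illustration.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_ridge_logistic(X, y, penalty, n_iter=200, lr=0.5):
    """Gradient descent on mean log-loss + (penalty / 2) * ||w||^2.

    The intercept b is not penalized. Features are assumed to be standardized.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(n_iter):
        grad_w, grad_b = [penalty * wj for wj in w], 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            grad_b += err / n
            for j in range(d):
                grad_w[j] += err * xi[j] / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(model, xi):
    """Predicted class label (0 or 1) for one feature vector."""
    w, b = model
    return 1 if sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) >= 0.5 else 0

def cross_validated_accuracy(X, y, penalty, k=5, seed=0):
    """k-fold cross-validation: every case is predicted by a model that never saw it."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    correct = 0
    for test_idx in folds:
        held_out = set(test_idx)
        train = [i for i in idx if i not in held_out]
        model = fit_ridge_logistic([X[i] for i in train], [y[i] for i in train], penalty)
        correct += sum(predict(model, X[i]) == y[i] for i in test_idx)
    return correct / len(X)

def simulate(n=200, d=10, seed=1):
    """Synthetic data: only the first two of d standardized features carry signal."""
    rng = random.Random(seed)
    X = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(n)]
    y = [1 if rng.random() < sigmoid(2.0 * x[0] - 2.0 * x[1]) else 0 for x in X]
    return X, y
```

In practice the penalty strength would itself be chosen by cross-validation nested inside the training folds, so that the reported accuracy remains an honest estimate of out-of-sample performance.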

Discussions/Conclusions

Although we have discussed three key aspects of future challenges, we acknowledge that the list is not exhaustive. Another important practical challenge is a search for cost-effective markers. While neuroimaging and genomic measures are promising biomarkers for psychiatric disorders, many practitioners might not be able to afford the cost. Thus, finding markers that are affordable yet predictive of clinical outcomes would have important practical implications [63]. Preliminary literature suggests that eye movements including spontaneous eye blinks [e.g., 67] and pupil diameter [68] could potentially serve as surrogate measures (also see [69]) but the field is still in its infancy.

A potential way to reduce the cost of imaging markers is the application of machine learning approaches to fMRI time-series data. A dominant way of analyzing fMRI data is the voxel-wise general linear model (GLM) approach, which can lead to substantial loss of power in case of model mis-specification [e.g., 70]. Two recent studies demonstrated that the use of unmodeled fMRI time-series data combined with machine learning can produce rapid fMRI classifiers for autism spectrum disorder [71] or political attitudes [72]. They used single-stimulus measurements (approximately 20 seconds of time-series data) as regressors, which contained enough information to make reliable classifications.

Computationally inspired measures have yet to undergo large-scale validation, and a lot of serious work remains to be done (e.g., test-retest reliability, validation of computational methods and findings using simulated and real data across diverse populations, understanding the regulatory environment critical for translating scientific tools into clinical practice). We strongly believe that solving these challenges requires close communication and collaboration between people in multiple areas. Clinicians, mathematical psychologists, computational neuroscientists, computer scientists, statisticians, and researchers in related fields have their own expertise and can provide their unique (or overlapping) contributions to tackle these technical and practical challenges. For example, clinical insights and experience can greatly inform the design of computational assays for clinical populations. Knowledge of the neurobiological underpinnings of psychiatric conditions and of decision neuroscience can be essential in every step of computational psychiatric research. Collaboration with people in computer science and statistics will also be critical to identify cutting-edge computational and statistical algorithms that are suited for multi-modal datasets. Finally, workshops and meetings that facilitate communication between multiple areas would mutually benefit each area.

Highlights.

Computational approaches provide novel insights into psychiatric conditions

There exist several challenges for translating the approaches into clinical tools

This review discusses three major methodological and practical challenges

Highlighting a need to communicate and collaborate across multiple disciplines

Acknowledgments

This work was supported by the National Institute on Drug Abuse under award number R01DA021421. The authors thank Roger Ratcliff, Richard Neufeld, Daeyeol Lee, Mark Pitt, Jay Myung, Brandon Turner, and Zhong-Lin Lu for their comments on the manuscript.

Footnotes

Conflict of Interest: The authors declare no competing financial interests.

References

- 1.Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. Journal of Neuroscience. 1996;16:1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Forstmann BU, Wagenmakers E-J. An Introduction to Model-Based Cognitive Neuroscience. Springer; 2015. [Google Scholar]

- 3.O'Doherty JP, Hampton A, Kim H. Model-Based fMRI and Its Application to Reward Learning and Decision Making. Annals of the New York Academy of Sciences. 2007;1104:35–53. doi: 10.1196/annals.1390.022. [DOI] [PubMed] [Google Scholar]

- 4.Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36:285–298. doi: 10.1016/s0896-6273(02)00963-7. [DOI] [PubMed] [Google Scholar]

- 5.Guitart-Masip M, Duzel E, Dolan R, Dayan P. Action versus valence in decision making. Trends Cogn. Sci. 2014;18:194–202. doi: 10.1016/j.tics.2014.01.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Dayan P, Berridge KC. Model-based and model-free Pavlovian reward learning: revaluation, revision, and revelation. Cognitive, Affective, and Behavioral Neuroscience. 2014;14:473–492. doi: 10.3758/s13415-014-0277-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Insel TR. The NIMH Research Domain Criteria (RDoC) Project: Precision Medicine for Psychiatry. The American journal of psychiatry. 2014;171:395–397. doi: 10.1176/appi.ajp.2014.14020138. [DOI] [PubMed] [Google Scholar]

- 8.Montague PR, Dolan RJ, Friston KJ, Dayan P. Computational psychiatry. Trends Cogn. Sci. 2012;16:72–80. doi: 10.1016/j.tics.2011.11.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Maia TV, Frank MJ. From reinforcement learning models to psychiatric and neurological disorders. Nat. Neurosci. 2011;14:154–162. doi: 10.1038/nn.2723. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Lee D. Decision Making: From Neuroscience to Psychiatry. Neuron. 2013;78:233–248. doi: 10.1016/j.neuron.2013.04.008. • Describes theoretical frameworks in economics and machine learning and provide comprehensive reviews of their applications in various clinical domains.

- 11. Wang X-J, Krystal JH. Computational Psychiatry. Neuron. 2014;84:638–654. doi: 10.1016/j.neuron.2014.10.018. • Highlights the need for transdiagnostic and computational strategies in clinical research, reviews recent work in Computational Psychiatry, and outlines a model-aided framework for diagnosis and treatment.

- 12. Stephan KE, Iglesias S, Heinzle J, Diaconescu AO. Translational Perspectives for Computational Neuroimaging. Neuron. 2015;87:716–732. doi: 10.1016/j.neuron.2015.07.008. • Provides comprehensive reviews of recent computational neuroimaging approaches and their applications to schizophrenia.

- 13.Anticevic A, Murray JD, Barch DM. Bridging Levels of Understanding in Schizophrenia Through Computational Modeling. Clin. Psychol. Sci. 2015 doi: 10.1177/2167702614562041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Busemeyer JR, Diederich A. Neuroeconomics Decision Making and the Brain. Vol. 49 Academic Press; 2013. Estimation and Testing of Computational Psychological Models. [Google Scholar]

- 15. Kruschke J. Doing Bayesian data analysis: A tutorial with R, JAGS, and Stan. 2014 • Explains basic foundations of Bayesian methods for people with limited statistics or programming knowledge.

- 16. Lee MD, Wagenmakers E-J. Bayesian Cognitive Modeling. Cambridge University Press; 2014. • Illustrates how to do Bayesian modeling using various case examples.

- 17. Neufeld RWJ. Mathematical and Computational Modeling in Clinical Psychology. In: Busemeyer JR, Wang Z, Eidels A, Townsend JT, editors. The Oxford Handbook of Computational and Mathematical Psychology. 2015. • Provides a thorough review of mathematical psychologists' work in clinical applications.

- 18.Busemeyer JR, Stout JC. A contribution of cognitive decision models to clinical assessment: Decomposing performance on the Bechara gambling task. Psychol. Assessment. 2002;14:253–262. doi: 10.1037//1040-3590.14.3.253. [DOI] [PubMed] [Google Scholar]

- 19.Yechiam E, Busemeyer JR, Stout JC, Bechara A. Using cognitive models to map relations between neuropsychological disorders and human decision-making deficits. Psychol Sci. 2005;16:973–978. doi: 10.1111/j.1467-9280.2005.01646.x. [DOI] [PubMed] [Google Scholar]

- 20.Ahn W-Y, Busemeyer JR, Wagenmakers EJ, Stout JC. Comparison of decision learning models using the generalization criterion method. Cognitive Science. 2008;32:1376–1402. doi: 10.1080/03640210802352992. [DOI] [PubMed] [Google Scholar]

- 21.Dai J, Kerestes R, Upton DJ, Busemeyer JR, Stout JC. An improved cognitive model of the Iowa and Soochow Gambling Tasks with regard to model fitting performance and tests of parameter consistency. Front. Psychol. 2015;6:229. doi: 10.3389/fpsyg.2015.00229. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Steingroever H, Wetzels R, Horstmann A, Neumann J, Wagenmakers E-J. Performance of healthy participants on the Iowa Gambling Task. Psychol. Assessment. 2013;25:180–193. doi: 10.1037/a0029929. [DOI] [PubMed] [Google Scholar]

- 23. Ahn W-Y, Vasilev G, Lee S-H, Busemeyer JR, Kruschke JK, Bechara A, Vassileva J. Decision-making in stimulant and opiate addicts in protracted abstinence: evidence from computational modeling with pure users. Front Decision Neurosci. 2014;5 doi: 10.3389/fpsyg.2014.00849. • Demonstrates how to apply hierarchical Bayesian analysis to clinical populations and conducts all analyses using Bayesian data analysis.

- 24.Ahn W-Y, Dai J, Vassileva J, Busemeyer JR. Computational modeling for addiction medicine: From cognitive models to clinical applications. In: Ekhtiari H, Paulus MP, editors. Progress in Brain Research. Elsevier; 2015. [DOI] [PubMed] [Google Scholar]

- 25.Wallsten TS, Pleskac TJ, Lejuez CW. Modeling Behavior in a Clinically Diagnostic Sequential Risk-Taking Task. Psychological Review. 2005;112:862–880. doi: 10.1037/0033-295X.112.4.862. [DOI] [PubMed] [Google Scholar]

- 26.Yechiam E, Goodnight J, Bates JE, Dodge KA, Pettit GS, Newman JP. A formal cognitive model of the go/no-go discrimination task: Evaluation and implications. Psychol. Assessment. 2006;18:239–249. doi: 10.1037/1040-3590.18.3.239. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.White CN, Ratcliff R, Vasey MW, McKoon G. Using diffusion models to understand clinical disorders. J Math Psychol. 2010;54:39–52. doi: 10.1016/j.jmp.2010.01.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Dayan P, Dolan RJ, Friston KJ, Montague PR. Taming the shrewdness of neural function: methodological challenges in computational psychiatry. Current Opinion in Behavioral Sciences. 2015 [Google Scholar]

- 29.Friston KJ, Stephan KE, Montague PR, Dolan RJ. Computational psychiatry: the brain as a phantastic organ. Lancet Psychiatry. 2014;1:148–158. doi: 10.1016/S2215-0366(14)70275-5. [DOI] [PubMed] [Google Scholar]

- 30.Ratcliff R, Philiastides MG, Sajda P. Quality of evidence for perceptual decision making is indexed by trial-to-trial variability of the EEG. Proc. Natl. Acad. Sci. 2009;106:6539–6544. doi: 10.1073/pnas.0812589106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Yoshida W, Dziobek I, Kliemann D, Heekeren HR, Friston KJ, Dolan RJ. Cooperation and Heterogeneity of the Autistic Mind. J. Neurosci. 2010;30:8815–8818. doi: 10.1523/JNEUROSCI.0400-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Huys QJM, Dayan P. A Bayesian formulation of behavioral control. Cognition. 2009 doi: 10.1016/j.cognition.2009.01.008. [DOI] [PubMed] [Google Scholar]

- 33.Hula A, Montague PR, Dayan P. Monte Carlo Planning Method Estimates Planning Horizons during Interactive Social Exchange. PLoS computational biology. 2015;11:e1004254. doi: 10.1371/journal.pcbi.1004254. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Moutoussis M, Bentall RP, El-Deredy W, Dayan P. Bayesian modelling of Jumping-to- Conclusions bias in delusional patients. Cognitive Neuropsychiatry. 2011;16:422–447. doi: 10.1080/13546805.2010.548678. [DOI] [PubMed] [Google Scholar]

- 35.Huys Q, Guitart-Masip M, Dolan RJ, Dayan P. Decision-theoretic psychiatry. Clinical Psychol Sci. 2015;3:400–421. [Google Scholar]

- 36.O'Doherty JP, Lee SW, McNamee D. The structure of reinforcement-learning mechanisms in the human brain. Current Opinion in Behavioral Sciences. 2014 [Google Scholar]

- 37.Lee MD. How cognitive modeling can benefit from hierarchical Bayesian models. J Math Psychol. 2011;55:1–7. [Google Scholar]

- 38.Huys QJM, Cools R, Gölzer M, Friedel E, Heinz A, Dolan RJ, Dayan P. Disentangling the Roles of Approach, Activation and Valence in Instrumental and Pavlovian Responding. PLoS computational biology. 2011;7:e1002028. doi: 10.1371/journal.pcbi.1002028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Stan Development Team. Stan: A C++ Library for Probability and Sampling. 2015 [Google Scholar]

- 40.Ahn W-Y, Krawitz A, Kim W, Busemeyer JR, Brown JW. A model-based fMRI analysis with hierarchical Bayesian parameter estimation. Journal of Neuroscience, Psychology, and Economics. 2011;4:95–110. doi: 10.1037/a0020684. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Scheibehenne B, Pachur T. Using Bayesian hierarchical parameter estimation to assess the generalizability of cognitive models of choice. Psychonomic Bulletin & Review. 2014;22:391–407. doi: 10.3758/s13423-014-0684-4.

- 42. Ratcliff R, Childers R. Individual differences and fitting methods for the two-choice diffusion model of decision making. Decision. 2015;2:237–279. doi: 10.1037/dec0000030.

- 43. Gershman SJ, Blei DM. A tutorial on Bayesian nonparametric models. J Math Psychol. 2012.

- 44. Bartlema A, Lee M, Wetzels R, Vanpaemel W. A Bayesian hierarchical mixture approach to individual differences: case studies in selective attention and representation in category learning. Journal of Mathematical Psychology. 2014;59:132–150.

- 45. Turner BM, van Maanen L, Forstmann BU. Informing cognitive abstractions through neuroimaging: the neural drift diffusion model. Psychological Review. 2015;122:312–336. doi: 10.1037/a0038894.

- 46. Rangel A, Camerer CF, Montague PR. A framework for studying the neurobiology of value-based decision making. Nature Reviews Neuroscience. 2008;9:545–556. doi: 10.1038/nrn2357.

- 47. Rachlin H, Raineri A, Cross D. Subjective probability and delay. Journal of the Experimental Analysis of Behavior. 1991;55:233–244. doi: 10.1901/jeab.1991.55-233.

- 48. Bechara A, Damasio AR, Damasio H, Anderson SW. Insensitivity to future consequences following damage to human prefrontal cortex. Cognition. 1994;50:7–15. doi: 10.1016/0010-0277(94)90018-3.

- 49. Lejuez CW, Read JP, Kahler CW, Richards JB, Ramsey SE, Stuart GL, Strong DR, Brown RA. Evaluation of a behavioral measure of risk taking: the Balloon Analogue Risk Task (BART). Journal of Experimental Psychology: Applied. 2002;8:75–84. doi: 10.1037//1076-898x.8.2.75.

- 50. Rogers RD, Everitt BJ, Baldacchino A, Blackshaw AJ, Swainson R, Wynne K, Baker NB, Hunter J, Carthy T, Booker E. Dissociable deficits in the decision-making cognition of chronic amphetamine abusers, opiate abusers, patients with focal damage to prefrontal cortex, and tryptophan-depleted normal volunteers: evidence for monoaminergic mechanisms. Neuropsychopharmacology. 1999;20:322–339. doi: 10.1016/S0893-133X(98)00091-8.

- 51. Schonberg T, Fox CR, Poldrack RA. Mind the gap: bridging economic and naturalistic risk-taking with cognitive neuroscience. Trends Cogn. Sci. 2011;15:11–19. doi: 10.1016/j.tics.2010.10.002.

- 52. Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-Based Influences on Humans' Choices and Striatal Prediction Errors. Neuron. 2011;69:1204–1215. doi: 10.1016/j.neuron.2011.02.027.

- 53. Lee SW, Shimojo S, O'Doherty JP. Neural Computations Underlying Arbitration between Model-Based and Model-free Learning. Neuron. 2014;81:687–699. doi: 10.1016/j.neuron.2013.11.028.

- 54. Gillan CM, Papmeyer M, Morein-Zamir S, Sahakian BJ, Fineberg NA, Robbins TW, de Wit S. Disruption in the balance between goal-directed behavior and habit learning in obsessive-compulsive disorder. The American Journal of Psychiatry. 2011;168:718–726. doi: 10.1176/appi.ajp.2011.10071062.

- 55. Guitart-Masip M, Huys QJM, Fuentemilla L, Dayan P, Duzel E, Dolan RJ. Go and no-go learning in reward and punishment: interactions between affect and effect. NeuroImage. 2012;62:154–166. doi: 10.1016/j.neuroimage.2012.04.024.

- 56. De Martino B, Camerer CF, Adolphs R. Amygdala damage eliminates monetary loss aversion. Proc. Natl. Acad. Sci. 2010;107:3788–3792. doi: 10.1073/pnas.0910230107.

- 57. Voon V, Derbyshire K, Rück C, Irvine MA, Worbe Y, Enander J, Schreiber LRN, Gillan C, Fineberg NA, Sahakian BJ, et al. Disorders of compulsivity: a common bias towards learning habits. Molecular Psychiatry. 2014. doi: 10.1038/mp.2014.44.

- 58. Myung JI, Cavagnaro DR, Pitt MA. A tutorial on adaptive design optimization. J Math Psychol. 2013;57:53–67. doi: 10.1016/j.jmp.2013.05.005. •• Highlights the need for optimal experimental design and describes the theoretical and technical aspects of adaptive design optimization.

- 59. Cavagnaro DR, Gonzalez R, Myung JI, Pitt MA. Optimal Decision Stimuli for Risky Choice Experiments: An Adaptive Approach. Management Science. 2013;59:358–375. doi: 10.1287/mnsc.1120.1558.

- 60. Cavagnaro DR, Aranovich GJ, McClure SM, Pitt MA. On the Functional Form of Temporal Discounting: An Optimized Adaptive Test. doi: 10.1007/s11166-016-9242-y. (under review)

- 61. Hou F, Lesmes L, Kim W, Gu H, Pitt M, Myung J, Lu Z-L. Evaluating the Performance of the Quick CSF Method in Detecting CSF Changes: An Assay Calibration Study. doi: 10.1167/16.6.18. (under review)

- 62. Walasek L, Stewart N. How to make loss aversion disappear and reverse: tests of the decision by sampling origin of loss aversion. Journal of Experimental Psychology: General. 2015;144:7–11. doi: 10.1037/xge0000039.

- 63. Volkow ND, Koob G, Baler R. Biomarkers in substance use disorders. ACS Chem. Neurosci. 2015;6:522–525. doi: 10.1021/acschemneuro.5b00067. •• Reviews recent progress in the development of biomarkers for drug addiction and discusses future directions.

- 64. Whelan R, Garavan H. When optimism hurts: inflated predictions in psychiatric neuroimaging. Biol. Psychiatry. 2014;75:746–748. doi: 10.1016/j.biopsych.2013.05.014. • Illustrates why we should use cross-validated machine learning methods in psychiatric neuroimaging.

- 65. Whelan R, Watts R, Orr CA, Althoff RR, Artiges E, Banaschewski T, Barker GJ, Bokde ALW, Büchel C, Carvalho FM, et al. Neuropsychosocial profiles of current and future adolescent alcohol misusers. Nature. 2014;512:185–189. doi: 10.1038/nature13402. •• Applies machine learning to large longitudinal data from several domains (brain, genetics, personality, cognition, family history, and demographics).

- 66. Gottesman II, Gould TD. The endophenotype concept in psychiatry: etymology and strategic intentions. The American Journal of Psychiatry. 2003;160:636–645. doi: 10.1176/appi.ajp.160.4.636.

- 67. Groman SM, James AS, Seu E, Tran S, Clark TA, Harpster SN, Crawford M, Burtner JL, Feiler K, Roth RH, et al. In the blink of an eye: relating positive-feedback sensitivity to striatal dopamine D2-like receptors through blink rate. J. Neurosci. 2014;34:14443–14454. doi: 10.1523/JNEUROSCI.3037-14.2014.

- 68. Nassar MR, Rumsey KM, Wilson RC, Parikh K, Heasly B, Gold JI. Rational regulation of learning dynamics by pupil-linked arousal systems. Nat. Neurosci. 2012;15:1040–1046. doi: 10.1038/nn.3130.

- 69. Cavanagh JF, Wiecki TV, Kochar A, Frank MJ. Eye tracking and pupillometry are indicators of dissociable latent decision processes. Journal of Experimental Psychology: General. 2014;143:1476–1488. doi: 10.1037/a0035813.

- 70. Lindquist MA, Meng Loh J, Atlas LY, Wager TD. Modeling the hemodynamic response function in fMRI: efficiency, bias and mis-modeling. NeuroImage. 2009;45:S187–S198. doi: 10.1016/j.neuroimage.2008.10.065.

- 71. Lu JT, Kishida KT, De Asis-Cruz J, Lohrenz T, Treadwell-Deering D, Beauchamp M, Montague PR. Single-Stimulus Functional MRI Produces a Neural Individual Difference Measure for Autism Spectrum Disorder. Clin. Psychol. Sci. 2015;3:422–432. doi: 10.1177/2167702614562042.

- 72. Ahn W-Y, Kishida KT, Gu X, Lohrenz T, Harvey A, Alford JR, Smith KB, Yaffe G, Hibbing JR, Dayan P, et al. Nonpolitical Images Evoke Neural Predictors of Political Ideology. Curr. Biol. 2014;24:2693–2699. doi: 10.1016/j.cub.2014.09.050.