Abstract

Background

Cancer quality indicators have previously been described for a single tumour site or a single treatment modality, or according to distinct data sources. Our objective was to identify cancer quality indicators across all treatment modalities specific to breast, prostate, colorectal, and lung cancer.

Methods

Candidate indicators for each tumour site were extracted from the relevant literature and rated using a modified Delphi approach by multidisciplinary groups of expert clinicians from 3 clinical cancer programs. All rating rounds were conducted by e-mail except one, which was held as a face-to-face expert panel meeting; that meeting is the modification to the original Delphi technique. Four high-level indicators were chosen for immediate data collection. A list of confounding variables was also constructed in a separate literature review.

Results

A total of 156 candidate indicators were identified for breast cancer, 68 for colorectal cancer, 40 for lung cancer, and 43 for prostate cancer. Iterative rounds of ratings led to a final list of 20 evidence- and consensus-based indicators each for colorectal and lung cancer, and 19 each for breast and prostate cancer. Approximately 30 clinicians participated in the selection of the breast, lung, and prostate indicators; approximately 50 clinicians participated in the selection of the colorectal indicators.

Conclusions

The modified Delphi approach that incorporates an in-person meeting of expert clinicians is an effective and efficient method for performance indicator selection and offers the added benefit of optimal clinician engagement. The finalized indicator lists for each tumour site, together with salient confounding variables, can be directly adopted (or adapted) for deployment within a performance improvement program.

Keywords: Performance measures, quality indicators, health care quality assessment, quality improvement, quality of cancer care

INTRODUCTION

Instituting quality indicators for the purpose of continuous performance monitoring and subsequent improvement has become a requisite component of health care delivery in most institutions around the world. Quality of care can be defined as “the degree to which health services for individuals and populations increase the likelihood of desired health outcomes and are consistent with current professional knowledge”1. Given the emphasis on quality, systematic measurement and reporting processes have been implemented in many health care systems. After significant investment in the supporting infrastructure necessary for reliable data collection, several national initiatives are now focused on close monitoring of quality performance. Examples include the Dutch Health Care Performance Reports, Danish hospital sector reports, the English National Health Service Quality Accounts, U.S. national reports on quality and disparities, national health care quality reports by Belgium and Sweden, and provincial performance reports in Canada2.

National initiatives in the area of oncology are well described, usually in the form of national cancer plans. England, for example, has instituted a cancer National Service Framework with national directorship, a collaborative for hospital-level service organization, and 34 cancer networks for service coordination between hospitals3. Efforts in the United States date back to 1922, with the formation of the Commission on Cancer, a division of the American College of Surgeons, tasked with standardizing and improving the quality of cancer care4.

In Canada, the Canadian Partnership Against Cancer, in collaboration with national and provincial partners, issues annual performance reports with specific metrics that cover the continuum of cancer care and control5. Yet despite the national collaboration, functions related to cancer care delivery are independently planned and funded by each province. The BC Cancer Agency, for example, operates 6 regional cancer centres and 4 specialized networks to ensure collaboration and consistency of care across British Columbia6. In Ontario, a Cancer Quality Council was established in 2002 in response to the lack of central oversight for system-level cancer care performance. Together with Cancer Care Ontario, the Council devised and implemented a performance monitoring scorecard balanced against a strategy-based framework7. Similar cancer-specific programs and networks have been established in the provinces of Alberta, Saskatchewan, and Manitoba8–10.

The province of Quebec is making progress toward a province-wide cancer control strategy and improved capture of cancer-related data in a central registry. Advancements come mainly through the efforts of the Direction québécoise de cancérologie and of community-based organizations such as the Coalition Priorité Cancer au Québec. Until recently, no available system had the capacity for adequate performance monitoring of the wide breadth of cancer services—a situation that is perhaps most evident in the annual Canadian Partnership Against Cancer reports, in which Quebec data were often excluded from the analyses because of variations in definitions, unreliable vital status of cancer cases, or simply unavailable data5.

To address the need for improvement in the quality of cancer care, the Rossy Cancer Network (RCN) was formed in Montreal. The RCN is a network of 3 McGill University–affiliated hospital cancer programs that aims to harmonize cancer care activities and to provide a superior quality of care. In 2012, the RCN collectively served approximately 11,500 new cancer patients11, representing roughly 24% of all incident cases in Quebec12.

One of the missions of the RCN is to create a network-wide standardized performance improvement framework11. Collaboration between hospital leaders, who are integral to the RCN’s governance, is facilitating a move to common measurement and reporting activities that are expected to set the stage for more uniform care delivery and synergistic quality improvement initiatives. At the core of all RCN activities is the objective to improve survival times, reduce mortality, and increase patient satisfaction. To accomplish those goals, relevant quality metrics have to be identified and tracked over time.

The objective of the present study was to systematically identify common quality indicators for continuous performance improvement across the network, with a focus on the 4 major cancers: breast, prostate, colorectal, and lung.

METHODS

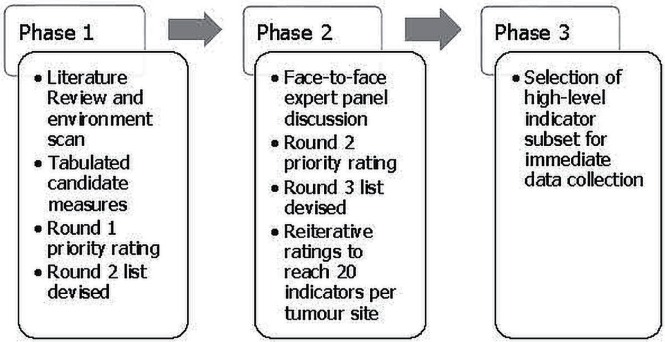

Using a 3-phase modified Delphi approach, quality metrics were developed for treatment, access and patient flow, and outcomes. Each phase was separately deployed for each of the tumour sites. Figure 1 presents a simplified view of the process.

FIGURE 1.

Modified Delphi approach for indicator selection.

The Delphi technique involves iterative rounds with controlled feedback to reach consensus by a group of experts in a systematic manner13; it is widely used in the selection of quality metrics across various clinical domains14–17. The modified Delphi approach includes the addition of a face-to-face meeting of expert participants18.

Phase I: Evidence and First Rating

Candidate performance measures in each tumour category were identified through reviews of published indicators used by other cancer agencies, networks, or national organizations. Examples include the BC Cancer Agency, Cancer Care Ontario, the American Society of Clinical Oncology, and the National Surgical Adjuvant Breast and Bowel Project. Those reviews were supplemented by a comprehensive review of the scholarly literature specific to each tumour site. The name of the tumour site together with the term “quality indicators” was used to identify major papers, after which a snowball strategy was used to identify secondary references.

All candidate indicators, organized by tumour site, were tabulated under one of two network strategic categories: treatment (including outcomes of treatment) and access to care (including patient flow). For indicators that could have been appropriately placed in either category, the “best fit” category was chosen. For example, indicators related to referrals, treatment on a clinical trial, presentation at a tumour board, and clinical documentation were all included under the “access” category even though they were also associated with treatment. Each tumour-specific list was then sent in an e-mail message to all clinicians across the network who had relevant involvement in care delivery for the respective tumour site (30 breast, 29 prostate, 37 lung, and 49 colorectal clinicians). The clinicians were asked to use a 5-point Likert scale (0–4: strongly oppose indicator inclusion to strongly support indicator inclusion) to rate each indicator according to these guiding criteria:

■ Relevance to cancer care quality (a measure of indicator validity)

■ Importance to cancer care quality (a measure of indicator value)

■ Ability to benchmark results (a measure of the ability to set targets)

■ Representative of an emerging practice

■ Applicable across network cancer programs (a measure of appropriateness for the RCN)

Raters were also encouraged to add free-text modifications or comments.

No time limit was set for responses; weekly e-mail reminders were sent over approximately 1 month to maximize participation. A member of the research team (SRK) manually collated the completed ratings. Indicators with an average rating of 2 or less on the 0–4 scale were eliminated, and a second version of the list containing the remaining indicators was compiled for phase II of the process.
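A minimal sketch of the phase I elimination rule follows. It assumes that an indicator's average is taken over every rater and every rated criterion, because the text does not say how scores on the five guiding criteria were pooled; the indicator labels, criterion keys, and ratings are hypothetical.

```python
from statistics import mean

# Ratings use the 0-4 Likert scale: 0 = strongly oppose inclusion,
# 4 = strongly support inclusion.
THRESHOLD = 2  # an average rating of 2 or less eliminates the indicator


def average_rating(ratings_by_rater):
    """Mean over every rater and every criterion (assumed pooling rule)."""
    scores = [score for rater in ratings_by_rater for score in rater.values()]
    return mean(scores)


def phase_one_filter(candidates):
    """Split candidate indicators into (kept, eliminated) name lists."""
    kept, eliminated = [], []
    for name, ratings in candidates.items():
        if average_rating(ratings) <= THRESHOLD:
            eliminated.append(name)
        else:
            kept.append(name)
    return kept, eliminated


# Hypothetical ratings: two raters, two of the five criteria shown.
candidates = {
    "sentinel node biopsy rate": [
        {"relevance": 4, "importance": 4},
        {"relevance": 3, "importance": 4},
    ],
    "hypothetical indicator X": [
        {"relevance": 2, "importance": 1},
        {"relevance": 3, "importance": 2},
    ],
}

kept, eliminated = phase_one_filter(candidates)
print(kept)        # ['sentinel node biopsy rate']
print(eliminated)  # ['hypothetical indicator X']
```

Note that an average of exactly 2 is eliminated, matching the "2 or less" rule in the text.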

Phase II: Face-to-Face Discussion and Iterative Ratings

Clinicians on the e-mail list for the first rating round were invited to a face-to-face meeting to discuss the remaining indicators. Four meetings were held, one for each tumour site; panellists whose expertise or care delivery spanned more than one tumour type were invited to more than one meeting.

The remaining indicators for each tumour site were discussed individually, with verbal rating and consensus resolution to include or delete each one. Panellists also identified redundant indicators and reached consensus on which one to keep and how to word it. The guiding criteria from phase I were also used in phase II. Indicators that passed the expert panel discussion, together with any newly added indicators, were collated into a version 3 list.

The version 3 list was sent by e-mail to members who participated in either the first rating round or the face-to-face discussion for their respective tumour site. Panellists, still representing a multidisciplinary, multi-institutional panel, were asked to use the same 5-point Likert scale to rate the indicators on the same criteria used in phase I.

The results were collated, and indicators were again eliminated if their average rating was 2 or less. This rating process continued iteratively until the final lists of indicators were achieved. The number of iterations varied by tumour site. Nonresponders to preceding rounds were not excluded from subsequent rounds.
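The iterative e-mail rounds can be sketched in the same way. This is an illustration under two assumptions not stated in the text: each round's average uses only the ratings actually returned (so a nonresponder in one round adds nothing to that round but may rate again later), and iteration stops once a round eliminates no indicator. The function name `run_rounds`, the indicator labels, and the ratings are hypothetical, and each indicator's ratings are pooled into a single list per round for brevity.

```python
from statistics import mean

THRESHOLD = 2  # eliminate when the average rating is 2 or less


def run_rounds(indicators, rounds):
    """Apply successive e-mail rating rounds to a list of indicator names.

    `rounds` is a list of dicts mapping indicator name -> list of ratings,
    where None marks a panellist who did not respond in that round.
    Returns (remaining indicators, number of rounds actually used).
    """
    remaining = list(indicators)
    for round_number, ratings in enumerate(rounds, start=1):
        survivors = []
        for name in remaining:
            # Average only the ratings returned this round.
            returned = [r for r in ratings.get(name, []) if r is not None]
            # Assumption: an indicator with no returned ratings is carried forward.
            if not returned or mean(returned) > THRESHOLD:
                survivors.append(name)
        eliminated = len(remaining) - len(survivors)
        remaining = survivors
        if eliminated == 0:  # assumed stopping rule: the list has stabilized
            return remaining, round_number
    return remaining, len(rounds)


# Hypothetical ratings; None marks a missing response in round 1.
rounds = [
    {"A": [4, 3, None], "B": [2, 1, 3], "C": [3, None, 3]},
    {"A": [4, 4, 3], "C": [3, 2, 2]},
]
final, rounds_used = run_rounds(["A", "B", "C"], rounds)
print(final, rounds_used)  # ['A', 'C'] 2
```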

Phase III: High-Level Indicator Selection

To select high-level indicators for immediate data collection, the final indicator lists from each tumour site were merged into one amalgamated list and sent to two members of the research team (GBatist, SRK). Each member independently applied predefined criteria (Is the indicator applicable to all four tumour sites? Is it reflective of the academic nature of the RCN? Is it representative of best practices associated with clinical care?) to select 4 high-level indicators from the amalgamated list. Criteria related to the validity and appropriateness of each indicator were not included at this stage because those factors had already been confirmed during the earlier consensus process. After the independent selections had been made, the choices were compared. Once the two team members had reached consensus, the high-level indicator list, together with the amalgamated list of all indicators, was sent by e-mail to all panellists who had attended the face-to-face meetings. Panellists were asked to indicate whether they agreed or disagreed with the selections and to provide alternative choices if they disagreed. Weekly e-mail reminders were sent over approximately 1 month to maximize feedback. At the end of that period, panellist feedback was reviewed, and the high-level indicators were finalized.
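The phase III steps, comparing two independent selections and then tallying panellist replies, reduce to simple set and counting operations. The sketch below is illustrative only: the helper names and indicator labels are hypothetical, the two selections are shown as identical because the Results report identical choices, and the panellist replies are made up to show the data shape.

```python
def compare_selections(reviewer_a, reviewer_b):
    """Return (agreed, disputed) sets for two independent selections."""
    a, b = set(reviewer_a), set(reviewer_b)
    return a & b, a ^ b  # intersection: agreed; symmetric difference: to discuss


def tally_feedback(responses):
    """Count agree/disagree replies and collect suggested alternatives."""
    agree = sum(1 for r in responses if r["agrees"])
    alternatives = [
        alt for r in responses if not r["agrees"] for alt in r.get("alternatives", [])
    ]
    return agree, len(responses) - agree, alternatives


# Labels paraphrase the four high-level indicators; order differs on purpose.
reviewer_a = ["time to initial treatment", "grade 3-4 chemotherapy toxicity",
              "tumour board presentation", "clinical trial participation"]
reviewer_b = list(reversed(reviewer_a))

agreed, disputed = compare_selections(reviewer_a, reviewer_b)
print(len(agreed), disputed)  # 4 set()

# Hypothetical panellist replies.
responses = [{"agrees": True}, {"agrees": True}, {"agrees": True}]
print(tally_feedback(responses))  # (3, 0, [])
```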

Identification of Confounding Variables

During the indicator selection process, concurrent work was completed to identify the confounding factors needed for stratified reporting and risk-adjusted analysis. A comprehensive literature review used the key phrases “cancer and comorbidity interaction,” “cancer confounders,” “comorbidity indices and cancer,” “cancer outcomes and prognostic factors,” “prognostic indices and cancer,” “cancer survival prediction,” “predicting cancer progression and mortality,” and “comorbidity considerations in oncology” to search electronic databases accessible through McGill University, including MEDLINE, PubMed, and the Cochrane Library; additional articles were identified through Google Scholar. Patient and tumour characteristics generally included in clinical research were also reviewed through the Web sites of various organizations and through published studies.

RESULTS

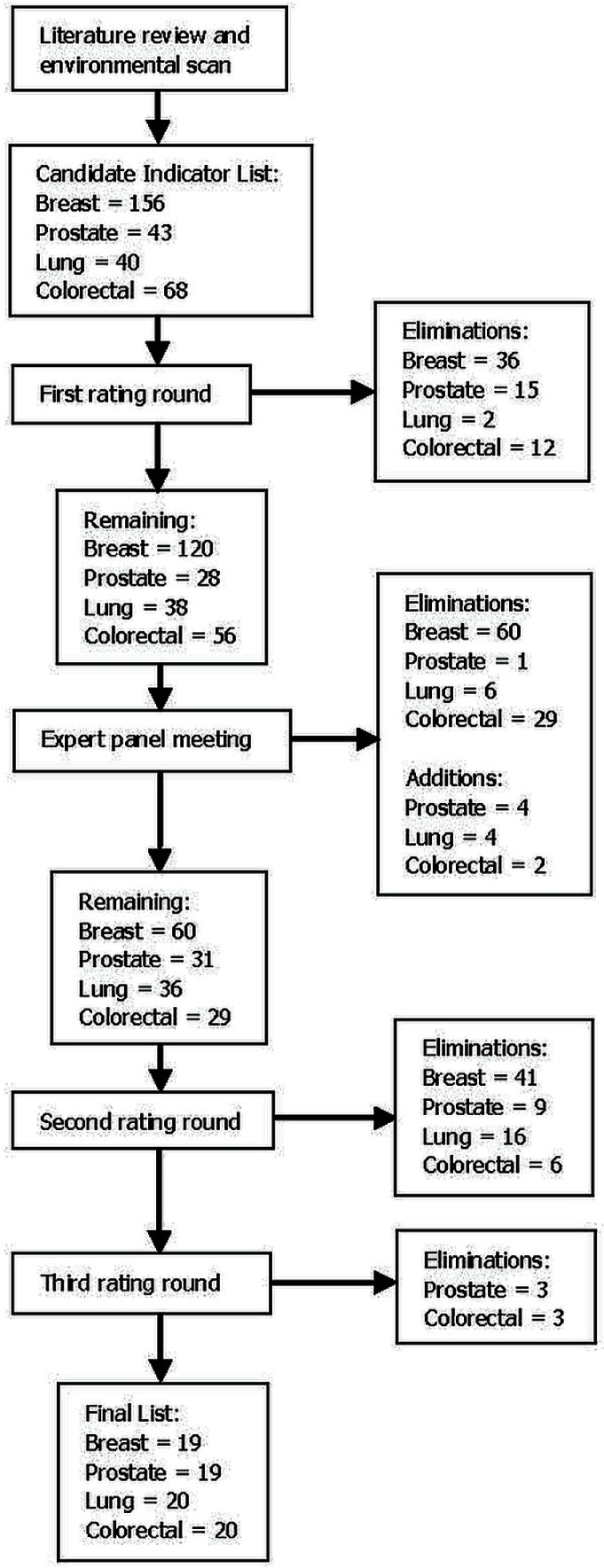

The literature review and environmental scan identified 156 potential indicators for the breast group, 68 for the colorectal group, 40 for the lung group, and 43 for the prostate group.

The first e-mail rating round eliminated 36 breast indicators, 12 colorectal indicators, 2 lung indicators, and 15 prostate indicators, leaving 120 breast, 56 colorectal, 38 lung, and 28 prostate indicators for discussion at the expert panel meeting.

On average, each face-to-face discussion lasted 3 hours, and the research team moderated the discussions. The breast and prostate meetings were each attended by 10 clinicians; the lung meeting, by 13; and the colorectal meeting, by 9. All groups included at least one surgical oncologist, medical oncologist, radiation oncologist, and pathologist. The directors of oncology at each of the 3 network hospitals attended the meeting that most closely matched their area of expertise. Nurses and allied health professionals were invited to all sessions but attended only the breast and lung meetings.

Most indicators reflected evidence-based guidelines for clinical care (grade 1 or 2 measures). During the discussions, panellists also added empirically supported indicators that had not otherwise been included, as well as indicators based on a high degree of consensus in the expert provider group (grade 3 measures). After the verbal ratings and consensus concerning the inclusion or exclusion of the remaining indicators, the candidate lists were further reduced to 60 breast, 29 colorectal, 36 lung, and 31 prostate indicators. Those totals include 4 newly added lung, 4 prostate, and 2 colorectal indicators (1 of which had previously been eliminated). Indicators were also reworded for clarity and accuracy.

After the face-to-face discussion, the breast group went through 1 e-mail rating cycle to eliminate 41 indicators, the colorectal group went through 2 e-mail cycles to eliminate 9 indicators, the lung group went through 1 e-mail cycle to eliminate 16 indicators, and the prostate group went through 2 e-mail cycles to eliminate 12 indicators. The final list of evidence- and consensus-based indicators numbered 20 for the lung and colorectal sites and 19 for the breast and prostate sites. Considering all the e-mail rating opportunities combined, approximately 30 clinicians participated in the selection of the breast, prostate, and lung indicators, and approximately 50 clinicians, in the selection of the colorectal indicators. Figure 2 summarizes the progressive reduction of indicators.
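The indicator counts reported above can be reconciled with a few lines of arithmetic. The number of indicators removed at each face-to-face meeting is not stated directly; the sketch derives it from the reported totals and assumes that no breast indicators were newly added at the meeting, since none are reported.

```python
# Counts reported in the Results, per tumour site:
# (initial, cut in round 1, after panel, added at panel, later e-mail cuts, final)
counts = {
    "breast": (156, 36, 60, 0, 41, 19),  # 0 added: none reported (assumption)
    "colorectal": (68, 12, 29, 2, 9, 20),
    "lung": (40, 2, 36, 4, 16, 20),
    "prostate": (43, 15, 31, 4, 12, 19),
}


def reconcile(initial, round1_cut, after_panel, added, later_cuts, final):
    """Derive the panel-meeting removals and check the reported final count."""
    after_round1 = initial - round1_cut
    removed_at_panel = after_round1 + added - after_panel  # derived, not reported
    consistent = after_panel - later_cuts == final
    return after_round1, removed_at_panel, consistent


for site, row in counts.items():
    print(site, reconcile(*row))
# breast (120, 60, True)
# colorectal (56, 29, True)
# lung (38, 6, True)
# prostate (28, 1, True)
```

All four sites are internally consistent, and the derived removals show that most of the breast and colorectal reduction happened at the panel meetings.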

FIGURE 2.

Phased reduction of indicators.

The final indicator lists were a mix of process and outcome measures, all of which are shown in Tables I–IV. Measures related to structure were reflected in other categories not discussed here. Process measures accord with best practice and cut across disciplines (surgery, radiation therapy, chemotherapy, psychosocial oncology, palliative care, pathology, and genetics). Measures related to access addressed wait times for diagnostics and treatment, clinical trial enrolment, presentation to a tumour board, and referral to genetic counselling. Outcome measures were focused on treatment toxicities, surgical complications, relapse or recurrence, survival, and mortality.

TABLE I.

Treatment and access and patient flow performance indicators for breast cancer

| Indicator | Description |
|---|---|
| Treatment | |
| 1 | Percentage of patients with early-stage breast cancer (stage I or II) and clinically negative axillary nodes who receive sentinel node biopsy |
| 2 | Complete synoptic pathology report according to the Canadian Association of Pathologists or Rossy Cancer Network guidelines |
| 3 | Percentage of patients with involvement of axillary lymph nodes (1–3 nodes or more) who received adjuvant radiation |
| 4 | Percentage of patients with estrogen receptor–negative invasive carcinoma (tumour > 1 cm or node-positive) who received adjuvant chemotherapy within 8 weeks of surgical resection |
| 5 | Percentage of patients with inflammatory breast cancer or locally advanced nonresectable estrogen receptor–negative carcinoma who received neoadjuvant chemotherapy |
| 6 | Percentage of patients with stage III breast cancer who underwent baseline staging imaging, including bone scan, liver ultrasonography, and chest radiography |
| 7 | Percentage of patients who experienced systemic relapse after adjuvant therapy within 5 years of diagnosis |
| 8 | Percentage of patients with primary operable breast cancer who developed a first recurrence in the ipsilateral breast, skin, or chest wall within 5 years after mastectomy or breast-conserving surgery |
| 9 | Percentage of biopsies performed at first site of metastasis (stage IV patients) |
| 10 | Percentage of patients receiving chemotherapy with grade 4 toxicity |
| Access and patient flow | |
| 11 | Time from abnormal mammogram to diagnostic biopsy |
| 12 | Time from diagnostic biopsy to initial breast cancer surgery |
| 13 | Percentage of breast cancer patients treated on a clinical trial |
| 14 | Percentage of breast cancer patients offered referral to genetics for evaluation and counselling |
| 15 | Percentage of breast cancer patients presented to the multidisciplinary tumour conference (tumour board) at any time after diagnosis |
| 16 | Wait time for adjuvant radiation therapy from the final pathology report |
| 17 | Wait time for systemic adjuvant therapy from the final pathology report |
| 18 | Wait time for first-line chemotherapy for metastatic disease, from the medical oncology visit that decides on chemotherapy |
| 19 | Wait time for computed tomography or magnetic resonance imaging from doctor’s requisition |

TABLE II.

Treatment and access and patient flow performance indicators for prostate cancer

| Indicator | Description |
|---|---|
| Treatment | |
| 1 | Number of needle cores per biopsy |
| 2 | Complete synoptic pathology report according to the Canadian Association of Pathologists or Rossy Cancer Network guidelines |
| 3 | Percentage of patients with metastatic disease treated with first-line systemic therapy |
| 4 | Percentage of patients with bone metastases receiving bone-targeted therapy (for example, bisphosphonates or RANK ligand inhibitor) |
| 5 | Percentage of patients with positive surgical margins, by stage |
| 6 | Percentage of patients with positive margins and prostate-specific antigen (PSA) between 0.2 ng/mL and 0.5 ng/mL who receive radiation therapy |
| 7 | Percentage of patients receiving radiotherapy who have Radiation Therapy Oncology Group grade 3 or higher rectal or bladder toxicity during the treatment period |
| 8 | Percentage of patients with acute surgical complication within 30 days (blood loss of 2.0 L or more; rectal injury; cardiovascular complications such as arrhythmias, myocardial infarction, heart failure, or pulmonary edema; proximal deep-vein thrombosis or pulmonary embolism; infection; or placed on long-term anticoagulant therapy) |
| 9 | Blood transfusion rate from the surgical start time, to and including 72 hours postoperatively |
| 10 | Hospitalization rate within 30 days of treatment, and diagnosis code at time of admission |
| 11 | Percentage of patients with significant urinary incontinence (>2–3 pads daily) at 1 year after surgery |
| 12 | Biochemical disease-free and overall survival at 5, 10, and 15 years after primary treatment by radical prostatectomy or radiation therapy, by stage of disease |
| Access and patient flow | |
| 13 | Time between positive biopsy showing high-risk disease (clinical stage T3–4, or Gleason score 8–10, or PSA > 20 ng/mL at diagnosis) and initiation of one or more of these treatments: radiation therapy, systemic therapy, surgery |
| 14 | Percentage of low-risk patients (clinical stage T1–2a, and Gleason score ≤ 6, and PSA < 10 ng/mL at diagnosis) with documentation of discussion about treatment options and adverse effects |
| 15 | Percentage of patients having undergone definitive therapy for prostate cancer who are followed at least twice in the first year and at least annually thereafter |
| 16 | Percentage of patients with high-risk disease (clinical stage T3–4, or Gleason score 8–10, or PSA > 20 ng/mL at diagnosis) who undergo general staging tests (pelvic computed tomography, magnetic resonance imaging, and bone scan) |
| 17 | Percentage of castration-resistant metastatic patients referred to a medical oncologist or multidisciplinary tumour board |
| 18 | Median length of stay after radical prostatectomy |
| 19 | Percentage of prostate cancer patients treated on a clinical trial |
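The prostate risk definitions used in indicators 13, 14, and 16 of Table II combine stage, Gleason score, and PSA with different logic: high risk needs any one criterion (OR), whereas low risk needs all three (AND). A sketch of that classification follows; the function names and the T-stage string format (for example, "T2a") are assumptions, and a bare "T2" without a substage is conservatively not treated as T1–2a. Patients who meet neither definition fall outside both groups as the table defines them.

```python
import re


def parse_t_stage(stage):
    """Split a clinical T stage such as 'T2a' into (number, substage letter)."""
    match = re.fullmatch(r"T([1-4])([a-c]?)", stage)
    if match is None:
        raise ValueError(f"unrecognized clinical T stage: {stage!r}")
    return int(match.group(1)), match.group(2)


def is_high_risk(stage, gleason, psa):
    """Table II high risk: T3-4 OR Gleason 8-10 OR PSA > 20 ng/mL."""
    number, _ = parse_t_stage(stage)
    return number >= 3 or gleason >= 8 or psa > 20


def is_low_risk(stage, gleason, psa):
    """Table II low risk: T1-2a AND Gleason <= 6 AND PSA < 10 ng/mL."""
    number, substage = parse_t_stage(stage)
    # A bare 'T2' is not counted as T1-2a (conservative assumption).
    t1_to_t2a = number == 1 or (number == 2 and substage == "a")
    return t1_to_t2a and gleason <= 6 and psa < 10


print(is_low_risk("T1c", 6, 5.4))    # True
print(is_high_risk("T2b", 8, 7.0))   # True (Gleason criterion alone suffices)
print(is_low_risk("T2b", 7, 12.0), is_high_risk("T2b", 7, 12.0))  # False False
```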

TABLE III.

Treatment and access and patient flow performance indicators for lung cancer

| Indicator | Description |
|---|---|
| Treatment | |
| 1 | Complete synoptic pathology report according to the Canadian Association of Pathologists or Rossy Cancer Network guidelines |
| 2 | Percentage of patients undergoing curative localized therapy (either surgery or chemoradiation) who receive positron-emission tomography before treatment |
| 3 | Percentage of patients diagnosed with nonsquamous non-small-cell lung cancer whose EGFR and ALK status has been assigned, by stage |
| 4 | Percentage of patients with metastatic lung cancer treated with cytotoxic chemotherapy during the last 2 weeks of life |
| 5 | Overall survival by stage at initial therapy |
| 6 | Percentage of patients with validated biomarker who receive appropriate targeted therapy |
| 7 | Percentage of lobectomies performed by video-assisted thoracoscopic surgery |
| 8 | Number of lymph nodes retrieved during lobectomy |
| 9 | Percentage of patients who die within 30 days of surgery |
| 10 | Percentage of patients receiving systemic therapy experiencing grade 3 or 4 toxicity |
| Access and patient flow | |
| 11 | Clinical stage at diagnosis in any of the network hospitals |
| 12 | Time from first abnormal chest radiograph to pathology diagnosis |
| 13 | Wait time for final pathology (histologic assignment and genotyping) |
| 14 | Wait time for diagnostic imaging |
| 15 | Percentage of lung cancer patients presented at a multidisciplinary tumour conference (tumour board) |
| 16 | Wait time from booking curative thoracic surgery to procedure |
| 17 | Wait time from referral for curative radiation therapy to treatment |
| 18 | Wait time to systemic therapy for metastatic disease |
| 19 | Percentage of patients with metastatic lung cancer referred to outpatient palliative care services |
| 20 | Percentage of lung cancer patients treated on a clinical trial |

TABLE IV.

Treatment and access and patient flow performance indicators for colorectal cancer

| Indicator | Description |
|---|---|
| Treatment | |
| 1 | Complete synoptic pathology report according to the Canadian Association of Pathologists or Rossy Cancer Network guidelines |
| 2 | Percentage of patients with rectal cancer undergoing surgery with a positive distal or radial margin |
| 3 | Percentage of patients undergoing surgery or radiation therapy for rectal cancer who receive pre-treatment imaging of the pelvis with magnetic resonance imaging (MRI) within the preceding 1 month |
| 4 | Percentage of patients undergoing surgery for colon or rectal cancer who receive preoperative computed tomography of the chest, abdomen, or pelvis (and MRI, for rectal cancer only) |
| 5 | Percentage of patients with rectal cancer undergoing sphincter-saving resection |
| 6 | Percentage of patients with stage III colon cancer who commence adjuvant chemotherapy within 8 weeks of surgery |
| 7 | Percentage of patients receiving chemotherapy who experience grade 3 or 4 toxicity |
| 8 | Percentage of patients undergoing surgery for rectal cancer in whom continuity is re-established and who experience an anastomotic leak |
| 9 | Percentage of patients having undergone colon or rectal cancer surgery who experience an unplanned return to the operating room within 28 days |
| 10 | Percentage of patients who die within 28 days of non-emergent colon or rectal cancer surgery, excluding multivisceral surgery |
| 11 | Rate of local recurrence within 5 years for patients who have had rectal cancer surgery, by stage |
| 12 | Percentage of colon or rectal cancer patients with systemic relapse within 5 years after adjuvant therapy, by initial stage |
| 13 | 5-Year and adjusted 5-year overall survival rates for colon or rectal cancer patients, by stage |
| Access and patient flow | |
| 14 | Percentage of patients with a family history of colorectal cancer offered referral to genetics |
| 15 | Time between diagnostic biopsy and initial colorectal local therapy |
| 16 | Wait time for computed tomography or MRI for staging |
| 17 | Percentage of colorectal cancer patients treated on a clinical trial |
| 18 | Percentage of patients with colon or rectal cancer, not treated with preoperative chemotherapy or radiotherapy, admitted for surgery within 8 weeks from the time of first surgical consultation |
| 19 | Percentage of patients with known or suspected stage II or III rectal cancer who see a radiation oncologist or are presented to a multidisciplinary tumour board preoperatively or within 4 weeks postoperatively |
| 20 | Percentage of patients with stage II colon cancer whose case is reviewed by the tumour board or medical oncologist within 4 weeks |

Table V lists the 4 high-level indicators slated for immediate data collection. They are relevant to all 4 tumour sites of interest, reflective of the academic nature of the RCN, and representative of best practices associated with clinical care. The two members of the research team who had independently selected the indicators from the amalgamated list made identical choices, and the expert panellists reached consensus in 1 e-mail round, with no disagreement about the selections. The high-level indicators address time to initial treatment, treatment toxicity, use of multidisciplinary tumour boards, and participation in clinical trials.

TABLE V.

High-level performance indicators for all cancers

| Indicator | Description |
|---|---|
| 1 | Time between diagnosis and initial treatment, with specification of treatment modality |
| 2 | Percentage of patients with high-grade (3 or 4) acute toxicity with cytotoxic chemotherapy |
| 3 | Percentage of patients presented to a multidisciplinary tumour board at any time after diagnosis |
| 4 | Percentage of patients treated on a clinical trial at any time after diagnosis |
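As an illustration of how the four high-level indicators in Table V might be computed from patient-level records, the sketch below uses a hypothetical record layout (the `Patient` fields are not a network data standard). It assumes that indicator 1 is summarized as a median number of days per treatment modality and that the denominator for indicator 2 is patients who received cytotoxic chemotherapy; neither choice is specified in the text, and the records are made up.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class Patient:
    # Hypothetical record layout; field names are illustrative only.
    diagnosis: date
    first_treatment: Optional[date]
    modality: Optional[str]               # e.g. "surgery", "chemotherapy"
    chemo_toxicity_grade: Optional[int]   # worst acute grade; None = no chemotherapy
    tumour_board: bool
    clinical_trial: bool


def pct(numerator, denominator):
    """Percentage rounded to 1 decimal place, or None for an empty denominator."""
    return round(100 * numerator / denominator, 1) if denominator else None


def high_level_indicators(patients):
    # 1. Median days from diagnosis to initial treatment, by modality.
    waits = {}
    for p in patients:
        if p.first_treatment is not None:
            days = (p.first_treatment - p.diagnosis).days
            waits.setdefault(p.modality, []).append(days)
    median_wait = {modality: median(d) for modality, d in waits.items()}
    # 2. Grade 3 or 4 toxicity among patients who received chemotherapy.
    chemo = [p for p in patients if p.chemo_toxicity_grade is not None]
    toxicity = pct(sum(p.chemo_toxicity_grade >= 3 for p in chemo), len(chemo))
    # 3 and 4. Tumour board presentation and trial enrolment, all patients.
    board = pct(sum(p.tumour_board for p in patients), len(patients))
    trial = pct(sum(p.clinical_trial for p in patients), len(patients))
    return median_wait, toxicity, board, trial


patients = [
    Patient(date(2013, 1, 7), date(2013, 2, 4), "surgery", None, True, False),
    Patient(date(2013, 1, 14), date(2013, 3, 4), "chemotherapy", 3, True, True),
    Patient(date(2013, 2, 1), date(2013, 3, 15), "surgery", None, False, False),
    Patient(date(2013, 2, 11), date(2013, 3, 25), "chemotherapy", 1, True, False),
]
print(high_level_indicators(patients))
# ({'surgery': 35.0, 'chemotherapy': 45.5}, 50.0, 75.0, 25.0)
```

Reporting wait times separately by modality reflects the "with specification of treatment modality" wording of indicator 1.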

Patient and tumour characteristics that could act as confounding factors in the analysis phase were narrowed from a wide selection of prognostic factors, including comorbidities, to a base model chosen for feasibility of data collection. Table VI lists those characteristics.

TABLE VI.

Base model of patient- and tumour-related characteristics

| Demographic confounders | Tumour-related confounders |
|---|---|
| Age at diagnosis | Primary cancer site |
| Sex | Date of diagnosis |
| Postal code | Stage of disease |
| | Grade of disease |

DISCUSSION

Quality measures for breast, prostate, colorectal, and lung cancer were extracted from the national and international literature, with final lists compiled through expert consensus achieved using a modified Delphi approach. Each set of measures is rooted in evidence-based clinical practice and includes both process and outcome metrics relevant to quality measurement. The lists, and the process used to create them, could easily be transferred to similar performance improvement initiatives in other jurisdictions. Furthermore, confounding factors that could be useful in the analysis phase are provided. Our work expands on previous studies that have focused on a single tumour site or a single treatment modality, or on measures that correspond with local existing datasets3,16,19.

High-level indicators were chosen so that data collection efforts would prioritize indicators pertinent to all tumour sites; incremental incorporation of all other indicators is planned. The chosen indicators address access to treatment and the incidence of serious toxicities. They also address frequency of patient presentation at a multi-disciplinary tumour board, which has been associated with better outcomes20, and participation in clinical trials. Both of the foregoing indicators also reflect the academic nature of the university-affiliated cancer programs.

As a starting point, the chosen indicators constitute the first RCN Quality Report; they also serve to reveal data quality issues that must be corrected as work proceeds. The end goal of the process was to report on standardized quality metrics for comparison and improvement of care delivery, including efficient use of services as reflected in selected measures that address both the underuse of effective testing in patients who could benefit and the overuse of unnecessary testing in patients for whom the potential benefit is limited4.

The final indicator lists represent a wide range of measurement points that should promote a balanced perspective on improving health care quality. Improvement in one area at the expense of another would contradict the concept of high-quality care, a concept that was an important consideration in the U.K. Quality and Outcomes Framework, which provided financial incentives for achieving predetermined quality thresholds2,21.

The selected confounding factors should help to determine whether observed differences in outcomes across the network are indeed attributable to differences in the quality of care rather than to differences in case mix or hospital mix. Without data stratification and risk-adjusted analysis, outcome measures are as ineffectual as process measures that have no scientifically sound link to improved outcomes22,23. Process measures remain meaningful only as long as compliance with evidence-based processes is statistically associated with improved patient outcomes. Similarly, to safeguard the validity of results, factors that can influence the interpretation of outcome measures must be assessed and statistically accounted for15,22–25.
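Direct standardization is one common way to account for case-mix differences such as stage: each hospital's stage-specific rates are weighted by a shared reference stage distribution before hospitals are compared. The sketch below illustrates that technique only; the text does not specify which risk-adjustment method will be used, and the hospitals, counts, and reference population are hypothetical.

```python
def crude_rate(strata):
    """strata maps stage -> (events, patients); returns events / patients overall."""
    events = sum(e for e, _ in strata.values())
    patients = sum(n for _, n in strata.values())
    return events / patients


def standardized_rate(strata, reference):
    """Directly standardized rate: each stage-specific rate is weighted by the
    share of that stage in the reference population (stage -> patient count)."""
    total = sum(reference.values())
    return sum(
        (strata[stage][0] / strata[stage][1]) * (count / total)
        for stage, count in reference.items()
    )


# Hypothetical (deaths, patients) by stage at two network hospitals.
hospital_a = {"I-II": (10, 200), "III-IV": (40, 100)}
hospital_b = {"I-II": (5, 100), "III-IV": (80, 200)}
reference = {"I-II": 300, "III-IV": 300}  # pooled stage mix of both hospitals

for name, hospital in [("A", hospital_a), ("B", hospital_b)]:
    print(name, round(crude_rate(hospital), 3), round(standardized_rate(hospital, reference), 3))
# A 0.167 0.225
# B 0.283 0.225
```

In this made-up example, hospital B's higher crude rate disappears after standardization, because the two hospitals have identical stage-specific rates and differ only in stage mix.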

Most of the selected measures have been implemented in health care systems outside Quebec, which raises questions about their validity in the local context. True validity is expected to become apparent only through the indicators' responsiveness to subsequent improvement initiatives; nevertheless, review and selection by a multidisciplinary panel of expert clinicians with extensive experience of, and familiarity with, local care delivery processes strengthened the validity of the indicators in the local context.

A further advantage of including local clinicians is that it creates a bottom-up approach, which maximizes buy-in because front-line clinicians decide what defines quality in cancer care26. The final indicator lists were produced through a systematic elimination process that used iterative e-mail correspondence and a face-to-face meeting at which a subset of the original expert panel engaged in in-depth discussion and debate about further eliminations. The opportunity for face-to-face discussion was a modification of the Delphi technique that proved valuable not only for achieving consensus, but also for building a sense of ownership of the process and its results. Consultation with those who will be responsible for acting on the results through care improvements has been shown to be effective in other quality improvement programs. For example, an important component of the success of the U.S. National Surgical Quality Improvement Program is surgeon ownership of the selection and definition of the common quality measures used for continuous tracking24.

By contrast, a top-down approach was used to select a strategy-based quality framework. Several frameworks have been proposed to guide quality monitoring and improvement, including the well-known U.S. Institute of Medicine paradigm that emphasizes effective, safe, timely, efficient, patient-centred, and equitable care1. Other common frameworks are organized around the cancer care continuum (from prevention to end of life), around institutional goals and objectives, or sometimes around both5,7. Choosing an appropriate framework depends largely on the internal environment and the stage of organizational development. In the early stages, a strategy-based framework that aligns with overarching goals and objectives offers two distinct advantages: communication of goals to all key stakeholders, and effective measurement of progress toward goal attainment (Brown A, Institute of Health Policy, Management and Evaluation. Personal communication, 2012). At later stages of development, it might be reasonable to integrate a framework that reflects the patient journey or the quality dimensions mentioned earlier.

Selection of performance indicators, followed by measurement and eventual improvement, succeeds when all relevant stakeholders are involved and engaged. An integral component of our work was the up-front engagement of clinicians and hospital administrators across the 3 clinical cancer programs. Soliciting input from all key stakeholders early in the process is expected to help establish a sustainable performance improvement program, coupled with a complementary culture of patient-focused practice.

Limitations

Several process limitations deserve consideration. Some of the selected measures require clinical information not routinely collected by local registries, which threatens the feasibility of data collection. Feasibility was not used as a selection criterion, although it was taken into account after the lists had been created. Given the absence of a properly coded electronic medical record, addressing anticipated data collection challenges a priori might have changed the final lists. However, the goal of the process was to identify metrics truly reflective of quality of care, as agreed by those who deliver the care and who would ultimately be accountable for the performance results. Unlike work published by others15,17,25, the focus of the network was not on what our systems could provide, but on what we needed our systems to provide. A second-tier review of the selected measures by a methods panel, prioritizing them according to feasibility and validity27, could be a valuable addition to the modified Delphi technique.

On a related note, some of the clinical information required to abstract a measure might not be consistently documented, which would reduce data reliability. However, feedback about underperformance on measures known to be poorly documented could encourage better documentation, as was shown in a study that reported results for adjuvant chemotherapy administration in stage III colon cancer28.

Another process-related limitation is one for which no solution is readily apparent. To elicit multiple viewpoints, invitations to the multidisciplinary rating panels were extended to all relevant disciplines, including nurses, physiotherapists, dietitians, psychologists, and social workers. Despite that effort, panel participation was, for the most part, limited to physicians and (for some tumour sites) nurses. Given the nature of the candidate indicators presented for consensus elimination, the strength of the final indicator lists was most likely not affected; however, the opportunity for wider buy-in might have been reduced.

The adoption (or adaptation) of the performance indicator lists described here might be limited by a few factors. One, already mentioned, is the feasibility of data collection, which can vary between institutions. Another is that the indicators were selected within a strategic framework and thus reflect the goals of the network in which they were developed. Nevertheless, the indicators could still be operationalized in any evidence-based oncology practice, although they might not cover the full spectrum of care services within a given local framework. For example, literature related to prevention and screening was not applicable in the RCN context and was therefore not reflected in the indicator lists. Additionally, other settings might differ widely from the RCN in care delivery practices and patterns, and would therefore require that the indicators be validated within their own context.

Lastly, as with all performance indicators in any area of care, ongoing re-evaluation and modification will be required as new research is published and synthesized and as best practice changes accordingly.

CONCLUSIONS

Performance improvement programs aimed at ensuring high quality-of-care standards are a fundamental component of health care delivery. Health care systems around the world have instituted national systems of quality monitoring, including systems focused on cancer. Although Canada, and Quebec specifically, treats efficient and effective cancer care as a priority, central oversight of the broad range of cancer services remains elusive. In response, small-scale interinstitutional networks can build common platforms for quality monitoring to ensure high-performance cancer care and to measure its effect on patient outcomes.

The RCN initiative, through a common performance improvement methodology, aims to ensure care of equal standard regardless of where within the network the care is delivered. To that end, standard performance indicators for use across the network were developed for the 4 most common tumour sites: breast, prostate, colorectal, and lung. The next phase of work will focus on the data abstraction process, including the identification of data sources, the reliability of the information, and the feasibility of data collection. That information will be used to plan a data warehouse and to transform the supporting technological infrastructure.

In cancer care, where patients increasingly must seek a full range of services from multiple providers who might practise across several institutions, integrated performance monitoring and improvement are necessary. When efforts are fragmented between institutions, the chances of inconsistent results increase, potentially translating into variable quality of care: poor patient experiences, ineffective and inefficient care, and, worst of all, unsafe care.

ACKNOWLEDGMENTS

This work was conducted under the RCN mandate, an initiative made possible by a generous financial contribution from the Larry and Cookie Rossy Family Foundation. We thank the RCN leadership, the directors of Oncology at the 3 cancer programs, and the health care providers who dedicated significant time and expertise during the completion of this work.

CONFLICT OF INTEREST DISCLOSURES

We have read and understood Current Oncology’s policy on disclosing conflicts of interest, and we declare that we have none.

REFERENCES

1. United States, The National Academies, Institute of Medicine, Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

2. Organisation for Economic Co-operation and Development. Improving Value in Health Care: Measuring Quality. Paris, France: Forum on Quality of Care; 2010.

3. McCarthy M, Gonzalez-Izquierdo A, Sherlaw-Johnson C, Khachatryan A, Coleman MP, Rachet B. Comparative indicators for cancer network management in England: availability, characteristics and presentation. BMC Health Serv Res. 2008;8:45. doi: 10.1186/1472-6963-8-45.

4. Schneider EC, Malin JL, Kahn KL, Emanuel EJ, Epstein AM. Developing a system to assess the quality of cancer care: ASCO’s national initiative on cancer care quality. J Clin Oncol. 2004;22:2985–91. doi: 10.1200/JCO.2004.09.087.

5. Canadian Partnership Against Cancer (CPAC). The 2012 Cancer System Performance Report. Toronto, ON: CPAC; 2012.

6. BC Cancer Agency (BCCA). About > Who We Are [Web page]. Vancouver, BC: BCCA; n.d. [Available at: http://www.bccancer.bc.ca/about/who-we-are; cited 10 July 2014]

7. Greenberg A, Angus H, Sullivan T, Brown AD. Development of a set of strategy-based system-level cancer care performance indicators in Ontario, Canada. Int J Qual Health Care. 2005;17:107–14. doi: 10.1093/intqhc/mzi007.

8. Alberta Health Services (AHS). Provincial Advisory Council: Cancer. Edmonton, AB: AHS; 2014. [Available online at: http://www.albertahealthservices.ca/assets/about/ac/ahs-pac-cancer-brochure.pdf; cited 10 July 2014]

9. Saskatchewan Cancer Agency (SCA). Community Oncology Program of Saskatchewan (COPS) [Web page]. Regina, SK: SCA; 2014. [Available at: http://www.saskcancer.ca/Default.aspx?DN=20625c14-d634-4748-9509-83d11437af7f; cited 10 July 2014]

10. Cancer Care Manitoba. About Us > Programs [Web page]. Winnipeg, MB: Action Cancer Manitoba; 2016. [Available at: http://www.cancercare.mb.ca/home/about_us/programs/; cited 10 July 2014]

11. Sullivan T, Huynh T, Aprikian A, et al. The Rossy Cancer Network: a philanthropy-driven quality program. Oncology Exchange. 2013;12:25–8.

12. Canadian Cancer Society’s Steering Committee on Cancer Statistics. Canadian Cancer Statistics 2012. Toronto, ON: Canadian Cancer Society; 2012.

13. Fink A, Kosecoff J, Chassin M, Brook RH. Consensus methods: characteristics and guidelines for use. Am J Public Health. 1984;74:979–83. doi: 10.2105/AJPH.74.9.979.

14. Gear C, Koziol-McLain J, Wilson D, et al. Primary healthcare response to family violence: a Delphi evaluation tool. Qual Prim Care. 2012;20:15–30.

15. Wilson S, Hauck Y, Bremner A, Finn J. Quality nursing care in Australian paediatric hospitals: a Delphi approach to identifying indicators. J Clin Nurs. 2011;21:1594–605. doi: 10.1111/j.1365-2702.2011.04004.x.

16. Gagliardi AR, Simunovic M, Langer B, Stern H, Brown AD. Development of quality indicators for colorectal cancer surgery, using a 3-step modified Delphi approach. Can J Surg. 2005;48:441–52.

17. Watkins K, Horvitz-Lennon M, Caldarone LB, et al. Developing medical record-based performance indicators to measure the quality of mental healthcare. J Healthc Qual. 2011;33:49–66. doi: 10.1111/j.1945-1474.2010.00128.x.

18. Wilson D, Koziol-McLain J, Garrett N, Sharma P. A hospital-based child protection programme evaluation instrument: a modified Delphi study. Int J Qual Health Care. 2010;22:283–93. doi: 10.1093/intqhc/mzq025.

19. Del Turco MR, Ponti A, Bick U, et al. Quality indicators in breast cancer care. Eur J Cancer. 2010;46:2344–56. doi: 10.1016/j.ejca.2010.06.119.

20. El Saghir NS, Keating NL, Carlson RW, Khoury KE, Fallowfield L. Tumor boards: optimizing the structure and improving efficiency of multidisciplinary management of patients with cancer worldwide. Am Soc Clin Oncol Educ Book. 2014:e461–6. doi: 10.14694/EdBook_AM.2014.34.e461. [Available online at: http://meetinglibrary.asco.org/content/11400461-144; cited 16 July 2015]

21. Prescribing and Primary Care Team. Quality and Outcomes Framework Achievement, Prevalence and Exceptions Data, 2012/13. Leeds, UK: Health and Social Care Information Centre; 2013.

22. Mant J. Process versus outcome indicators in the assessment of quality of health care. Int J Qual Health Care. 2001;13:475–80. doi: 10.1093/intqhc/13.6.475.

23. Malin JL, Asch SM, Kerr EA, McGlynn EA. Evaluating the quality of cancer care. Cancer. 2000;88:701–7. doi: 10.1002/(SICI)1097-0142(20000201)88:3<701::AID-CNCR29>3.0.CO;2-V.

24. Piccirillo JF, Tierney RM, Costas I, Grove L, Spitznagel EL Jr. Prognostic importance of comorbidity in a hospital-based cancer registry. JAMA. 2004;291:2441–7. doi: 10.1001/jama.291.20.2441.

25. Maggard-Gibbons M. The use of report cards and outcome measurements to improve the safety of surgical care: the American College of Surgeons National Surgical Quality Improvement Program. BMJ Qual Saf. 2014;23:589–99. doi: 10.1136/bmjqs-2013-002223.

26. Mountford J, Shojania KG. Refocusing quality measurement to best support quality improvement: local ownership of quality measurement by clinicians. BMJ Qual Saf. 2012;21:519–23. doi: 10.1136/bmjqs-2012-000859.

27. Desch CE, McNiff KK, Schneider EC, et al. American Society of Clinical Oncology/National Comprehensive Cancer Network quality measures. J Clin Oncol. 2008;26:3631–7. doi: 10.1200/JCO.2008.16.5068.

28. Stewart AK, Gay EG, Patel-Parekh L, Winchester DP, Edge SB, Ko CY. Provider feedback improves reporting on quality measures: national profile reports for adjuvant chemotherapy for stage III colon cancer [abstract 6572]. J Clin Oncol. 2007;25(18 suppl):6572. [Available online at: http://meeting.ascopubs.org/cgi/content/short/25/18_suppl/6572; cited 5 February 2016]