Abstract

The ability to process and respond to emotional facial expressions is a critical skill for healthy social and emotional development. There has been growing interest in understanding the neural circuitry underlying development of emotional processing, with previous research implicating functional connectivity between amygdala and frontal regions. However, existing work has focused on threatening emotional faces, raising questions regarding the extent to which these developmental patterns are specific to threat or to emotional face processing more broadly. In the current study, we examined age‐related changes in brain activity and amygdala functional connectivity during an fMRI emotional face matching task (including angry, fearful, and happy faces) in 61 healthy subjects aged 7–25 years. We found age‐related decreases in ventral medial prefrontal cortex activity in response to happy faces but not to angry or fearful faces, and an age‐related change (shifting from positive to negative correlation) in amygdala–anterior cingulate cortex/medial prefrontal cortex (ACC/mPFC) functional connectivity to all emotional faces. Specifically, positive correlations between amygdala and ACC/mPFC in children changed to negative correlations in adults, which may suggest early emergence of bottom‐up amygdala excitatory signaling to ACC/mPFC in children and later development of top‐down inhibitory control of ACC/mPFC over amygdala in adults. Age‐related changes in amygdala–ACC/mPFC connectivity did not vary for processing of different facial emotions, suggesting changes in amygdala–ACC/mPFC connectivity may underlie development of broad emotional processing, rather than threat‐specific processing. Hum Brain Mapp 37:1684–1695, 2016. © 2016 Wiley Periodicals, Inc.

Keywords: development, emotional faces, amygdala, anterior cingulate cortex, functional connectivity, fMRI

INTRODUCTION

The ability to process and respond to affective facial expressions is essential for navigating the social and emotional world. At birth, neural systems for processing emotional information are already established, with the ability to discriminate emotional expressions beginning in infancy and continuing to develop throughout childhood and adolescence [Herba and Phillips, 2004; Herba et al., 2006; Leppänen and Nelson, 2009]. Deficits in the ability to process and respond to emotional cues have been associated with poor social functioning, and both internalizing and externalizing symptoms in children and adolescents [Denham et al., 2003; Ensor et al., 2011; Fine et al., 2003]. Understanding developmental changes in emotional processing from childhood to adulthood is particularly relevant, given that emotional disorders often develop between childhood and adolescence [Beesdo et al., 2009; Giedd et al., 2008]. As such, characterizing normal development of emotion processing and its underlying neural basis can inform developmental deviations and facilitate early detection and intervention.

Previous literature has highlighted the central role of the amygdala in detecting the affective significance of stimuli, along with an interconnected circuitry including anterior cingulate cortex (ACC) and medial prefrontal cortex (mPFC) [Breiter et al., 1996; Fitzgerald et al., 2006; Hariri et al., 2000; Phan et al., 2002; Phillips et al., 2003]. To understand maturation of neural circuits involved in facial expression processing, prior developmental studies have focused on age‐related structural and functional changes of these brain regions. Structural magnetic resonance imaging (MRI) studies have demonstrated early maturation of amygdala and protracted development of prefrontal regions [Goddings et al., 2014; Gogtay et al., 2004; Mills et al., 2014; Østby et al., 2009; Sowell et al., 1999; Wierenga et al., 2014a]. For example, using a longitudinal design with multiple time points (≥3) over a 20‐year period (age range 10–30 years), Mills and colleagues (2014) showed that amygdala grew during adolescence with its volume increasing 7% between late childhood and late adolescence, whereas maturation of ACC/mPFC continued into early adulthood with a 17% decrease in volume from late childhood to early twenties [Mills et al., 2014]. Functional MRI (fMRI) studies indicate that children and adolescents, like adults, can reliably recruit amygdala during explicit recognition, passive viewing, and implicit processing of emotional faces [Baird et al., 1999; Guyer et al., 2008; Hung et al., 2012; Thomas et al., 2001], and there is evidence that amygdala activation during processing of fearful faces decreases from childhood into adulthood [Gee et al., 2013; Guyer et al., 2008; Killgore et al., 2001; Monk et al., 2003; Swartz et al., 2014]. 
Adult‐like functional activity in ACC/mPFC during emotional face processing appears to emerge in late adolescence and gradually develop into adulthood [Batty and Taylor, 2006; Hung et al., 2012; Monk et al., 2003; Passarotti et al., 2009], consistent with theories of a mismatch in developmental timing for maturation of limbic regions compared to frontal regions involved in regulating emotional responses [Blackford and Pine, 2012; Casey et al., 2008].

Importantly, there is growing evidence that rather than activating independently, brain regions coordinate and interact with each other as part of interconnected brain circuitry [Yurgelun‐Todd, 2007]. Given that amygdala and prefrontal regions mature at different times, it may be particularly important to examine connectivity between these regions in the context of processing emotional information across development. Consistent with this, there is evidence that age‐related changes in both structural [Swartz et al., 2014] and functional [Decety et al., 2012; Gee et al., 2013; Perlman and Pelphrey, 2011] connectivity between amygdala and frontal regions may underlie emotional development. For example, age‐related increases in amygdala connectivity with ventromedial prefrontal cortex (vmPFC) have been observed when processing intentional harm to others [Decety et al., 2012], and effective connectivity between the anterior cingulate cortex (ACC)/inferior frontal gyrus and the amygdala was found to increase with age in children (5–11 years) during a task demanding emotion regulation [Perlman and Pelphrey, 2011]. More recently, Gee and colleagues examined the development of connectivity during processing of fearful faces, finding that amygdala–mPFC connectivity changed from positive to negative correlation from early childhood into young adulthood (4–22 years old) [Gee et al., 2013]. However, it remains unclear whether these developmental patterns of connectivity are specific to processing fearful faces or to emotional faces in general.

The current study sought to characterize age‐related changes in overall activation and in functional coupling between amygdala and frontal regions (e.g., ACC/mPFC) during processing of both positive (i.e., happy) and negative (i.e., fearful, angry) facial expressions in a large sample spanning childhood into young adulthood (7–25 years). Consistent with previous findings, we hypothesized that there would be age‐related changes in amygdala–frontal functional connectivity. As previous work has focused on reactivity to fearful faces [Gee et al., 2013], we evaluated whether age‐related changes in functional connectivity were consistent across emotional faces or varied as a function of face valence.

METHODS

Participants

The sample consisted of 61 healthy participants, between the ages of 7 and 25 years (mean ± standard deviation: 16.69 ± 5.05), 35 (57%) of whom were females. The group was divided into three age groups: children (7–12 years, n = 15, 7 females [47%]), adolescents (13–18 years, n = 22, 15 females [68%]), and adults (19–25 years, n = 24, 13 females [54%]) (Table 1).

Table 1.

Demographic information of the sample

| | Overall | Age 7–12 years | Age 13–18 years | Age 19–25 years |
|---|---|---|---|---|
| N | 61 | 15 | 22 | 24 |
| Age [mean (SD)] | 16.69 (5.05) | 9.80 (1.66) | 16.00 (1.85) | 21.63 (1.26) |
| Female N (%) | 35 (57%) | 7 (47%) | 15 (68%) | 13 (54%) |

All participants were right‐handed, and free of current and past major medical or neurologic illness, as confirmed by a board certified physician. None of the participants tested positive for alcohol or illegal substances. Informed consent was obtained for participants 18 years and older; assent was obtained for minor participants and informed consent from their parents. Participants were recruited through community advertisements at the University of Illinois at Chicago (UIC) in Chicago, IL and the University of Michigan (UM) in Ann Arbor, MI. Procedures were approved by the Institutional Review Boards (IRB) at both UIC and UM.

Emotional Face Processing Task

All participants underwent structural T1‐weighted (T1w) and fMRI scanning: imaging data on 26 participants were acquired at the UIC site and imaging data on 36 participants were acquired at the UM site. During fMRI, all participants performed an emotional face assessment task (EFAT) adapted from the work of Hariri [Hariri et al., 2002]. This task is a well‐validated and effective paradigm to probe facial affect processing and reliably engages the amygdala and frontal regions, as previously demonstrated [Bangen et al., 2014; Phan et al., 2008; Prater et al., 2013]. The EFAT fMRI paradigm consisted of 18 experimental blocks: 9 blocks of matching facial affect, interspersed with 9 control blocks of matching shapes. Each block lasted 20 s, containing 4 sequential matching trials, 5 s each and the total scan time was 6 min. During the face matching block, the participants viewed a trio of faces and were instructed to match the emotion of the target face on the top with one of two faces on the bottom. The target (top) and matching probe (bottom) displayed angry, fearful or happy expressions; the foil face (bottom) displayed a neutral expression on every trial. Three blocks of each affective expression (i.e., angry, fearful, and happy) were included. During the control shape matching block, the participants were instructed to match a trio of simple shapes (i.e., circles, rectangles, and triangles). Behavioral data including accuracy and response time for EFAT were collected simultaneously with fMRI.
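The block timing described above can be checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' presentation script; the strict alternation of shape and face blocks (shapes first) is an assumption made for illustration, since the text only states that the blocks were interspersed.

```python
# Timing taken from the task description: 18 blocks, 4 trials per block, 5 s per trial.
N_BLOCKS = 18
TRIALS_PER_BLOCK = 4
TRIAL_S = 5

block_s = TRIALS_PER_BLOCK * TRIAL_S   # duration of one block in seconds
total_s = N_BLOCKS * block_s           # duration of the whole run in seconds

# ASSUMPTION (hypothetical ordering): shape and face blocks strictly alternate,
# starting with shapes. The source only says the blocks are interspersed.
schedule = [
    (i * block_s, "shapes" if i % 2 == 0 else "faces")
    for i in range(N_BLOCKS)
]

print(f"block = {block_s} s, run = {total_s} s ({total_s / 60:.0f} min)")
```

Under these numbers each block lasts 20 s and the run lasts 360 s, which matches the stated 6 min scan time.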

MRI Acquisition

Functional MRI studies were performed on 3 Tesla GE scanners with 8‐channel head coils at two sites (i.e., UIC and UM). For the UIC site, functional data were acquired using a gradient‐echo echo‐planar imaging (EPI) sequence with the following parameters: repetition time (TR) = 2 s, echo time (TE) = minFull [∼25 ms], flip angle = 90°, field of view (FOV) = 22 × 22 cm², acquisition matrix 64 × 64, 3‐mm slice thickness with no gap, 44 axial slices. For the UM site, functional data were collected with a gradient‐echo reverse spiral acquisition with two sets of imaging parameters: TR = 2 s, TE = 30 ms, flip angle = 90°, FOV = 22 × 22 cm², acquisition matrix 64 × 64, 3‐mm slice thickness with no gap, 43 slices; or TR = 2 s, TE = 30 ms, flip angle = 77°, FOV = 24 × 24 cm², acquisition matrix 64 × 64, 5‐mm slice thickness with no gap, 30 axial slices.

Data Analysis

Preprocessing

Functional images were preprocessed in SPM8 (Wellcome Trust Centre for Neuroimaging, http://www.fil.ion.ucl.ac.uk/spm/) with slice timing correction, motion correction (realignment), image normalization, resampling at a 2 × 2 × 2 mm³ voxel size, and 8‐mm Gaussian smoothing. The Artifact Detection Tools (ART, http://www.nitrc.org/projects/artifact_detect/) software package was used for automatic detection of spikes and motion in the functional data (z threshold 6, movement threshold of 3 mm). Participants with more than 10 outlier volumes (>5% of total volumes) were excluded from this study (n = 7). Further, framewise displacement (FD) was calculated from the rigid body image realignment parameters to reflect spontaneous head motion [Power et al., 2012, 2014]. Specifically, time points with FD > 0.5 mm were identified and one participant with substantial micromovement was excluded (>20% of time points with FD > 0.5 mm). Combining ART and FD‐based methods, eight participants with significant motion were excluded from the analyses, yielding a final sample of 61 participants. The eight excluded participants were aged 7, 10, 11, 12, 13, and 18 (n = 3) years. For the remaining 61 participants, volume censoring was used in the first‐level within‐subject analyses of brain activity and connectivity to remove outlier volumes identified by each of the two methods (censoring volumes with signal spikes and large motion identified with ART vs. censoring volumes with micromovement identified with FD). Second‐level outcomes were evaluated separately using each censoring method in order to provide a thorough examination of the potential influence of movement and motion correction on the results. Further, mean FD was added as a covariate in the second‐level analyses to test whether the effect of age on functional connectivity held.
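The FD‐based criterion can be illustrated with a short sketch. It follows the general definition of framewise displacement in Power et al. [2012] (sum of absolute frame‐to‐frame changes in the six realignment parameters, with rotations converted to millimeters on a sphere), but it is not the authors' code. The 50‐mm sphere radius, the parameter order (three translations in mm, then three rotations in radians), and the function names are assumptions made for illustration.

```python
def framewise_displacement(params, radius_mm=50.0):
    """Framewise displacement (mm) for a list of 6-parameter rows.

    ASSUMPTIONS: each row is [tx, ty, tz, rx, ry, rz], translations in mm and
    rotations in radians; rotations are converted to arc length on a sphere
    of radius `radius_mm`. The first volume has FD = 0 by convention.
    """
    fd = [0.0]
    for prev, cur in zip(params, params[1:]):
        translation = sum(abs(c - p) for c, p in zip(cur[:3], prev[:3]))
        rotation = sum(abs(c - p) * radius_mm for c, p in zip(cur[3:6], prev[3:6]))
        fd.append(translation + rotation)
    return fd


def exceeds_motion_limit(fd, fd_thresh=0.5, max_fraction=0.20):
    """True if more than `max_fraction` of time points have FD > `fd_thresh` mm."""
    flagged = sum(1 for value in fd if value > fd_thresh)
    return flagged / len(fd) > max_fraction


# Hypothetical realignment parameters for three volumes.
example = [
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.1, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.1, 0.0, 0.0, 0.01, 0.0, 0.0],
]
print(framewise_displacement(example))
```

The same per‐volume FD values can serve both purposes described in the text: flagging volumes for censoring (FD > 0.5 mm) and deciding whether a participant is excluded (>20% of time points flagged).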

Brain activity

First‐level within‐subject analysis was performed with a general linear model (GLM) with six regressors of interest: face matching (angry, fearful, and happy) and shape matching (circle, rectangle, and triangle). Additional nuisance regressors including six motion parameters and outlier volumes were also included to correct for motion and spiking artifacts. For each participant, contrast images of brain activity—angry face vs. shape matching, fearful face vs. shape matching, and happy face vs. shape matching—were generated for further second‐level between‐subject analysis.
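One way to express the "emotion vs. shape matching" contrasts over the six regressors of interest is shown below. This is a hedged sketch: the regressor order and the choice to weight the three shape regressors equally (−1/3 each) are assumptions, as the text does not state the contrast weights that were used.

```python
# ASSUMPTION: regressor order in the design matrix (hypothetical).
REGRESSORS = ["angry", "fearful", "happy", "circle", "rectangle", "triangle"]
SHAPES = {"circle", "rectangle", "triangle"}


def face_vs_shape_contrast(emotion):
    """Contrast weights for one emotion versus the average of the shape regressors.

    ASSUMPTION: shapes are weighted equally so that the weights sum to zero.
    """
    weights = []
    for name in REGRESSORS:
        if name == emotion:
            weights.append(1.0)
        elif name in SHAPES:
            weights.append(-1.0 / len(SHAPES))
        else:
            weights.append(0.0)
    return weights


for emotion in ("angry", "fearful", "happy"):
    print(emotion, face_vs_shape_contrast(emotion))
```

Weights that sum to zero make each contrast a difference between conditions rather than a test against the implicit baseline.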

Functional connectivity

Generalized psychophysiological interaction (gPPI) analysis [Cisler et al., 2014; Friston et al., 1997; McLaren et al., 2012] was performed to examine functional coupling between amygdala and prefrontal regions. Two seed regions, left and right amygdala, were created based on the anatomically defined automated anatomical labeling (AAL) atlas [Tzourio‐Mazoyer et al., 2002]. Mean time series of the seed region (left or right amygdala), task conditions (face matching: angry, fearful, and happy; shape matching: circle, rectangle, and triangle), interaction variables (seed time series × task condition), as well as motion parameters and outlier volumes were included in the design matrix. PPI connectivity maps (i.e., angry face vs. shape matching, fearful face vs. shape matching, and happy face vs. shape matching) were computed for each individual.
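The structure of the interaction variables (seed time series × task condition) can be sketched as follows. This is a deliberately simplified illustration, not the gPPI toolbox procedure: gPPI as described by McLaren et al. [2012] forms the interaction on a deconvolved estimate of the neural signal and then re‐convolves it with the hemodynamic response, whereas this sketch multiplies a mean‐centered seed signal directly with boxcar condition vectors. The function names and the mean‐centering step are assumptions.

```python
def boxcar(n_vols, onsets_vols, dur_vols):
    """0/1 condition vector with blocks starting at `onsets_vols` (in volumes)."""
    reg = [0.0] * n_vols
    for onset in onsets_vols:
        for t in range(onset, min(onset + dur_vols, n_vols)):
            reg[t] = 1.0
    return reg


def ppi_terms(seed, conditions):
    """One interaction regressor per condition: mean-centered seed x condition.

    SIMPLIFICATION: no deconvolution or HRF convolution is applied here.
    """
    seed_mean = sum(seed) / len(seed)
    centered = [s - seed_mean for s in seed]
    return {
        name: [c * b for c, b in zip(centered, box)]
        for name, box in conditions.items()
    }


# Hypothetical 4-volume example with a single 2-volume "angry" block.
seed = [1.0, 2.0, 3.0, 4.0]
terms = ppi_terms(seed, {"angry": boxcar(4, [0], 2)})
print(terms["angry"])
```

In the generalized form, one such interaction regressor is built for every task condition (here, the three emotions and three shapes), alongside the seed time series and the condition regressors themselves.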

Second‐level analyses

Analyses of covariance (ANCOVAs) were performed in SPM8 for brain activity and functional connectivity to investigate the main effect of emotion (angry, fearful, and happy) and the emotion × age interaction, while controlling for sex and study site/scanner. To evaluate age‐related changes common to all emotions, we examined the age effect on emotional faces versus shapes. To correct for multiple comparisons, joint height and extent thresholds were determined via Monte Carlo simulations (10,000 iterations) with an a priori frontolimbic mask (AlphaSim, AFNI) [Cox, 1996] and applied to second‐level statistical results for a corrected P < 0.05. The frontolimbic mask was generated by combining masks of the frontal lobe and limbic lobe in the Talairach Daemon database [Lancaster et al., 1997] using the WFU PickAtlas toolbox, SPM8 [Maldjian et al., 2003]. The frontolimbic mask has a total volume of 426,800 mm³, encompassing bilateral medial prefrontal cortex (mPFC), inferior frontal gyrus (IFG), middle frontal gyrus (MFG), superior frontal gyrus (SFG), amygdala, cingulate, insula, hippocampus, parahippocampus, and other regions (Supporting Information Fig. 1). ANCOVAs were also performed in SPSS (SPSS 22.0 version, Chicago IL USA) to examine age‐related changes in behavioral performance (i.e., accuracy and response time).
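Because cluster extents and the mask volume are reported in cubic millimeters, it can help to convert them to voxel counts. The sketch below assumes that the mask and statistical maps are on the same 2 × 2 × 2 mm grid used for resampling during preprocessing; that assumption is ours and is not stated explicitly for the mask.

```python
# ASSUMPTION: volumes are expressed on the 2 x 2 x 2 mm resampled grid.
VOXEL_DIMS_MM = (2, 2, 2)
VOXEL_VOLUME_MM3 = VOXEL_DIMS_MM[0] * VOXEL_DIMS_MM[1] * VOXEL_DIMS_MM[2]


def mm3_to_voxels(volume_mm3):
    """Convert a volume in cubic millimeters to a voxel count on the assumed grid."""
    return volume_mm3 / VOXEL_VOLUME_MM3


mask_voxels = mm3_to_voxels(426_800)   # frontolimbic mask volume from the text
print(f"mask: {mask_voxels:.0f} voxels")
```

On this grid the 426,800 mm³ mask corresponds to 53,350 voxels, and a reported cluster of 768 mm³ corresponds to 96 voxels.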

Post hoc analyses

To clarify the effect of age on amygdala functional connectivity, parameter estimates (i.e., beta weights) were extracted from individual PPI connectivity maps with 5‐mm spherical ROIs centered at the voxels showing the peak age effect (MarsBaR, SPM8) [Matthew et al., 2002]. Partial Pearson's product–moment correlations were computed in SPSS to examine relationships between functional connectivity and age, and between functional connectivity and behavioral performance (i.e., accuracy and response time), controlling for sex and study site.
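A partial correlation that controls for covariates such as sex and study site can be computed with the standard recursive formula, r_xy·z = (r_xy − r_xz r_yz) / sqrt((1 − r_xz²)(1 − r_yz²)), applied once per covariate. The following is a minimal sketch of that formula, not the SPSS procedure used in the study; the function names are hypothetical and the covariates are assumed to be numerically coded (e.g., 0/1 for sex and site).

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_corr(x, y, covariates):
    """Partial correlation of x and y given a list of covariate sequences.

    Uses the recursive first-order formula, removing one covariate at a time.
    """
    if not covariates:
        return pearson(x, y)
    *rest, z = covariates
    r_xy = partial_corr(x, y, rest)
    r_xz = partial_corr(x, z, rest)
    r_yz = partial_corr(y, z, rest)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
```

A call such as `partial_corr(connectivity, age, [sex, site])` would then give the age association with sex and site partialled out, where `connectivity`, `age`, `sex`, and `site` are per‐participant lists (hypothetical variable names).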

RESULTS

Behavioral Data

Valid behavioral data were available for 53 participants (data from five participants were unavailable due to mechanical failure of the response box, and an additional three participants were excluded from behavioral analyses for low accuracy [<60%] on the task). The eight participants with missing or poor behavioral data were aged 7, 10, 11, 12, 14, 17, 18, and 23 years. Participants performed the EFAT well, with overall accuracy [mean (SD)] of 92.6% (9.9%) and response time for accurate trials of 1535.9 (447.2) ms (descriptive statistics by condition are presented in Table 2). Results of ANCOVAs are presented in Table 3, revealing main effects of age for both response time, F(1,151) = 95.8, P < 0.001, and accuracy, F(1,151) = 5.51, P = 0.02. Specifically, response time significantly decreased with age, r = −0.62, P < 0.001, and accuracy increased with age, r = 0.18, P = 0.03, controlling for emotion, sex, and study site.

Table 2.

Average response time (for correct trials) and accuracy during the Emotional Face Assessment Task

| Mean ± SD | Angry faces | Fearful faces | Happy faces | Shapes |
|---|---|---|---|---|
| Response time (ms) | 1,813.1 ± 472.5 | 1,629.1 ± 460.7 | 1,483.5 ± 379.6 | 1,218.1 ± 197.8 |
| Accuracy (%) | 83.8 ± 12.7 | 97.0 ± 4.9 | 97.5 ± 6.0 | 91.9 ± 7.3 |

Table 3.

Emotion‐by‐age analyses of covariance (ANCOVAs) on response time and accuracy during the Emotional Face Assessment Task

| Emotion × age ANCOVA | F | df | P |
|---|---|---|---|
| Response time | | | |
| Emotion | 3.40 | 2, 151 | 0.04 |
| Age | 95.8 | 1, 151 | <0.001 |
| Emotion × age | 1.09 | 2, 151 | 0.34 |
| Accuracy | | | |
| Emotion | 8.26 | 2, 151 | <0.001 |
| Age | 5.51 | 1, 151 | 0.02 |
| Emotion × age | 1.16 | 2, 151 | 0.32 |

ANCOVAs also demonstrated main effects of emotion for response time, F(2,151) = 3.40, P = 0.04, and accuracy, F(2,151) = 8.26, P < 0.001. Participants were slower in matching angry than fearful (P = 0.007) or happy (P < 0.001) faces and were slower in matching fearful versus happy faces (P = 0.03) (response time: angry > fearful > happy). Participants were less accurate in matching angry than fearful (P < 0.001) or happy faces (P < 0.001), and there was no significant difference between fearful and happy faces (P = 0.78) (accuracy: angry < fearful = happy). Age did not significantly interact with emotion type for either response time or accuracy.

Participants showed slight age‐related improvement in response time, r = −0.39, P = 0.005, but not in accuracy, r = −0.08, P = 0.58, when matching shapes.

Brain Activity

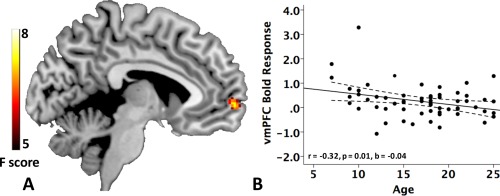

Table 4 presents significant effects of age and emotion from ANCOVAs of brain activation in processing facial expressions versus geometric shapes, demonstrating consistent results between ART and FD‐based methods for motion correction. For both ART and FD‐based censoring methods, significant amygdala activation was observed for emotion vs. shapes processing (P < 0.005), but we did not find evidence that amygdala activation significantly differed depending on the type of emotion (i.e., angry, fearful, or happy faces). A main effect of emotion was found in ventral medial prefrontal cortex (vmPFC) and in right dorsolateral prefrontal cortex (DLPFC). Participants showed decreased vmPFC activation (peak t values [ART/FD] = −4.79/−4.79) and increased DLPFC activation (peak t values [ART/FD] = 3.94/3.29) when viewing negative expressions (angry or fearful) relative to happy faces. A significant linear effect of age was observed in right superior frontal gyrus (SFG), reflecting decreasing brain activation with age (peak t values [ART/FD] = −4.45/−3.92). In addition, there was a significant emotion × age interaction for brain activation in vmPFC, reflecting decreasing activation in vmPFC with age when viewing happy faces (peak t values [ART/FD] = −3.46/−3.62) but not angry or fearful faces. Beta weight values were exported from a 5‐mm spherical vmPFC ROI centered at MNI coordinates (as listed in Table 4), and the effect of age was examined for each emotion condition (one participant had beta weights that were significant outliers according to Grubbs' test [Grubbs, 1974], P < 0.05, and was excluded from post hoc analyses). Partial correlations controlling for scanner and sex indicated that the effect of age on vmPFC activation was significant for happy faces (r = −0.32, P = 0.01), but not for angry (r = −0.20, P = 0.14) or fearful faces (r = 0.14, P = 0.29) (Fig. 1; Supporting Information Fig. 2).

Table 4.

Emotion‐by‐age effects of brain activities during the Emotional Face Assessment Task (corrected P < 0.05)

| Brain region | Brodmann area (BA) | Peak MNI coordinates (x, y, z) | Z score | Size (mm³) |
|---|---|---|---|---|
| ART | | | | |
| Main effect of emotion | | | | |
| vmPFC | BA 10 | −2, 62, 0 | 4.26 | 2,064 |
| L DLPFC | BA 9 | −46, 32, 38 | 4.09 | 1,080 |
| R DLPFC | BA 9, 10, 46 | 44, 44, 32 | 3.42 | 928 |
| Main effect of age | | | | |
| R SFG | BA 6 | 28, 12, 68 | 3.94 | 1,096 |
| R IFG | BA 11, 47 | 22, 24, −22 | 3.52 | 720 |
| R vmPFC | BA 11, 25 | 10, 12, −18 | 3.29 | 656 |
| L PH/HP | | −30, −14, −22 | 3.18 | 584 |
| Emotion by age interaction | | | | |
| vmPFC | BA 10 | 0, 62, 0 | 3.20 | 768 |
| FD | | | | |
| Main effect of emotion | | | | |
| vmPFC | BA 10 | 0, 62, 0 | 4.13 | 1,592 |
| L vmPFC | BA 10 | −10, 46, −10 | 3.33 | 432 |
| R DLPFC | BA 9 | 22, 30, 34 | 3.47 | 592 |
| Main effect of age | | | | |
| R SFG | BA 6 | 28, 14, 66 | 3.49 | 520 |
| Emotion by age interaction | | | | |
| vmPFC | BA 10 | 4, 64, 0 | 3.37 | 744 |

ART: using Artifact Detection Tools to identify outlier volumes; FD: using framewise displacement based method to identify outlier volumes; L: left; R: Right; MNI: Montreal Neurologic Institute; vmPFC: ventral medial prefrontal cortex; DLPFC: dorsolateral prefrontal cortex; SFG: superior frontal gyrus; IFG: inferior frontal gyrus; PH/HP: parahippocampus/hippocampus.

Figure 1.

A: Emotion × age interaction on brain activity during emotional face processing (the Emotional Face Assessment Task, EFAT, corrected P < 0.05). B: Scatter plot of vmPFC ROI BOLD response (i.e., beta weight, arbitrary units [a.u.]) with age in processing happy faces (vmPFC ROI: a 5‐mm sphere centered at MNI coordinate [4, 64, 0] with peak interaction effect). Dashed lines represent the 95% confidence intervals. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Functional Connectivity

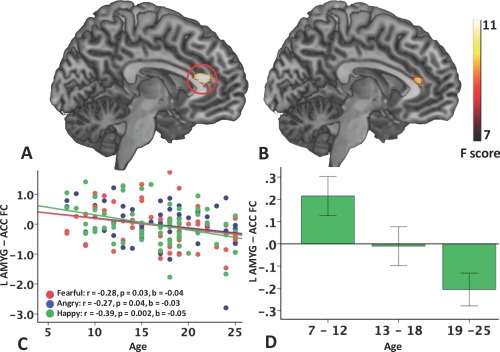

For both ART and FD‐based outlier detection methods, second‐level analyses consistently revealed a linear effect of age on both left amygdala–ACC/mPFC and right amygdala–ACC/mPFC functional connectivity (ART: Table 5, Fig. 2A,B; FD: Table 5, Supporting Information Fig. 3A,B). The effect of age on bilateral amygdala–ACC/mPFC functional connectivity remained significant when mean framewise displacement was included as an additional covariate in the second‐level analyses. Further, the significant effects of age on bilateral amygdala–ACC/mPFC functional connectivity persisted when controlling for variations in the imaging protocols (i.e., number of slices was included as an additional covariate) in the second‐level analyses.

Table 5.

Linear effect of age on amygdala functional connectivity during the Emotional Face Assessment Task (corrected P < 0.05)

| Effect | Brain region | Brodmann area (BA) | Peak MNI coordinates (x, y, z) | Z score | Size (mm³) |
|---|---|---|---|---|---|
| ART | | | | | |
| Left amygdala functional connectivity | | | | | |
| Main effect of emotion | None | | | | |
| Main effect of age | ACC/mPFC | BA 24, 32 | −6, 34, 16 | 3.18 | 1,552 |
| Emotion by age interaction | None | | | | |
| Right amygdala functional connectivity | | | | | |
| Main effect of emotion | None | | | | |
| Main effect of age | ACC/mPFC | BA 24, 32 | −4, 36, 14 | 2.88 | 472 |
| Emotion by age interaction | None | | | | |
| FD | | | | | |
| Left amygdala functional connectivity | | | | | |
| Main effect of emotion | None | | | | |
| Main effect of age | ACC | BA 24, 32 | −8, 28, 18 | 2.83 | 688 |
| Emotion by age interaction | None | | | | |
| Right amygdala functional connectivity | | | | | |
| Main effect of emotion | None | | | | |
| Main effect of age | ACC | BA 24, 32 | 6, 36, 12 | 2.63 | 640 |
| Emotion by age interaction | None | | | | |

ART: results using Artifact Detection Tools to identify outliers; FD: results using framewise displacement based method to identify outliers; MNI: Montreal Neurologic Institute; ACC: anterior cingulate cortex; mPFC: medial prefrontal cortex.

Figure 2.

Linear relationship between age and amygdala functional connectivity during the perception of emotional faces (the Emotional Face Assessment Task, EFAT). A Main effect of age on left amygdala–frontal functional connectivity (corrected P < 0.05); B Main effect of age on right amygdala–frontal functional connectivity (corrected P < 0.05); C Scatterplot of left amygdala–ACC functional connectivity and chronological age for processing of angry, fearful, and happy expressions, respectively (ACC ROI: a 5 mm sphere centered at the peak F value with MNI coordinate [−6 34 16], as circled in A), showing no emotion × age interaction; D averaged amygdala–ACC functional connectivity across three emotions at three categorical age ranges (childhood 7–12, adolescence 13–18, and young adulthood 19–25 years of age) with ±1 standard error (SE). [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Using the ART‐based outlier detection method, mean connectivity between left amygdala and ACC/mPFC was extracted from first‐level PPI connectivity maps with a 5‐mm spherical ACC ROI centered at MNI coordinate [−6 34 16] (as circled in Fig. 2A). The scatter plot in Figure 2C shows that left amygdala–ACC/mPFC connectivity decreased as a linear function of age. Specifically, left amygdala–ACC/mPFC functional connectivity negatively correlated with age when matching angry (r = −0.27, P = 0.04), fearful (r = −0.28, P = 0.03), and happy (r = −0.39, P = 0.002) faces (vs. shapes). When censoring volumes with FD > 0.50 mm, the significant age effect on functional connectivity between amygdala and ACC/mPFC persisted (corrected P < 0.05) but was weaker (lower F value and smaller ACC region; Table 5, Supporting Information Fig. 3A,B). Similarly, the correlation between age and mean functional connectivity extracted (5‐mm sphere, MNI [−8, 28, 18]) remained significant in the processing of fearful (r = −0.30, P = 0.02) and happy (r = −0.32, P = 0.01) faces but did not reach significance for angry faces (r = −0.15, P = 0.26) (Supporting Information Fig. 3, Supporting Information Fig. 4B).

To further evaluate age‐related changes in amygdala–ACC/mPFC connectivity, we also examined effects of age categorically with three developmental stages: childhood (7–12 years), adolescence (13–18 years), and adulthood (19–25 years). As there was no significant effect of emotion or emotion × age interaction on amygdala–ACC/mPFC functional connectivity, we collapsed across emotional faces (i.e., angry, fearful, and happy) and examined emotional faces versus shapes to simplify interpretation and analyses. In ROI‐based one‐sample t tests, children exhibited a positive correlation between left amygdala and ACC/mPFC (t = 2.46, P = 0.02), which became significantly negative in young adults (t = −2.78, P = 0.007). There was no significant connectivity between left amygdala and ACC/mPFC in adolescents (t = −0.12, P = 0.90). The bar graph in Figure 2D represents average left amygdala–ACC/mPFC functional connectivity for the three age groups, which further visualizes the developmental shift from positive correlation in childhood to negative correlation in adulthood. We also evaluated how functional connectivity related to behavioral performance. There was no significant correlation between behavioral performance and left amygdala–ACC/mPFC functional connectivity (response time: r = −0.05, P = 0.54; accuracy: r = −0.002, P = 0.98), controlling for age, sex, scanner site, and emotion.
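The ROI‐based one‐sample t tests ask whether the mean connectivity estimate within an age group differs from zero. A minimal sketch of the test statistic is given below; the connectivity values are made up for illustration and are not data from the study, and the P value (which requires the t distribution with n − 1 degrees of freedom) is left to a statistics package.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values, popmean=0.0):
    """One-sample t statistic: (mean - popmean) / (sample SD / sqrt(n))."""
    n = len(values)
    return (mean(values) - popmean) / (stdev(values) / sqrt(n))


# HYPOTHETICAL per-participant PPI beta weights for two age groups.
hypothetical_betas = {
    "children": [0.30, 0.10, 0.25, -0.05, 0.20],
    "adults": [-0.20, -0.35, 0.05, -0.15, -0.25],
}
for group, betas in hypothetical_betas.items():
    print(group, round(one_sample_t(betas), 2), "df =", len(betas) - 1)
```

A positive t indicates mean positive coupling in the group and a negative t indicates mean negative coupling, which is the pattern the text describes for children and adults, respectively.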

Amygdala connectivity and effects of age on connectivity appeared consistent across all emotional expressions (angry, fearful, or happy), as there were no significant main effects of emotion condition or emotion × age interactions within the a priori frontolimbic regions (Table 5). As observed in Figure 2C, regression lines for the relationship between amygdala–ACC/mPFC functional connectivity and age had similar estimated intercepts and slopes for all three emotion conditions. Scatterplots depicting the association between age and left amygdala–ACC/mPFC connectivity for each emotional face type, with confidence intervals, are presented in Supporting Information Figure 4A. Therefore, developmental trajectories of amygdala connectivity with frontal regions did not appear to differ as a function of emotional face type.

DISCUSSION

Using PPI analyses and task‐based fMRI, this study investigated typical development of brain activation and amygdala functional connectivity during explicit processing of happy, fearful, and angry facial expressions (versus geometric shapes) from childhood into young adulthood (age range: 7–25 years). There were three main findings. First, we found an interaction between age and emotion condition on vmPFC activation such that decreased activation was observed from childhood to adulthood in response to happy faces but not to fearful or angry faces. Second, there was a negative association between age and amygdala–ACC/mPFC functional connectivity, revealing a positive‐to‐negative shift in connectivity between childhood and adulthood. Specifically, children (7–12 years) exhibited significant positive correlation between the amygdala and ACC/mPFC, suggesting synchronized activation between these structures. In contrast, adults showed negative correlation, suggesting an inverse pattern of activity in these two regions. Lastly, age‐related changes in amygdala–ACC/mPFC functional connectivity did not vary for processing of different facial emotions. Instead, similar patterns of age‐related change in amygdala connectivity with ACC/mPFC were observed for processing angry, fearful, and happy faces.

With regard to age‐related changes in brain activation during face processing, we found that the effect of age on vmPFC activation was moderated by emotion, such that decreased activation with age was observed only for happy faces but not angry or fearful faces. Previous literature suggests that processing of happy faces and positive emotions activates vmPFC, and greater vmPFC activation predicts greater subjective ratings of positive valence while decreased activation in vmPFC is associated with regulation of positive stimuli [Ebner et al., 2012; Winecoff et al., 2013]. Thus, the current findings of age‐related changes in vmPFC for happy faces could be indicative of attenuated engagement of this area to positive emotional stimuli from childhood to adulthood. Future work is needed to link this developmental change with change in subjective responses to positive social cues.

Our finding of a negative association between age and amygdala–ACC/mPFC functional connectivity is consistent with Gee et al. [2013] in that children demonstrated positive correlation, which changed to negative correlation by young adulthood. Reciprocal connections between amygdala and ACC/mPFC have been theorized to reflect a bottom‐up amygdala to ACC/mPFC signaling and a top‐down ACC/mPFC to amygdala regulatory control [Gee et al., 2013; Hariri et al., 2003; Ochsner and Gross, 2005]. Positive correlation in children may represent excitatory signaling from early maturing amygdala to ACC/mPFC (bottom‐up pathway), whereas negative correlation in adults may reflect inhibitory regulatory control from late‐developing ACC/mPFC over amygdala (top‐down pathway); that is, the positive‐to‐negative developmental shift in activation coupling may be conceptualized as the early emergence of amygdala to ACC/mPFC excitatory signaling and relatively late development of ACC/mPFC to amygdala inhibitory regulation. Such an explanation aligns with developmental mismatch of amygdala and ACC/mPFC (i.e., early maturation of the amygdala compared to lagged development of ACC/mPFC) [Casey et al., 2008; Goddings et al., 2014; Hung et al., 2012; Mills et al., 2014; Østby et al., 2009; Wierenga et al., 2014a, 2014b].

Importantly, Gee et al. [2013] focused on connectivity during viewing of fearful faces with no evidence of developmental changes in connectivity for happy faces. The current study focused on three facial affects, with results indicating that comparable developmental patterns are observed for happy, angry, and fearful faces. That is, developmental changes in amygdala–ACC/mPFC connectivity appear to underlie development of emotional processing more broadly, rather than threat reactivity specifically. Developmental changes in amygdala connectivity with frontal regions may underlie the development of emotional processing and regulation from childhood to adulthood [Herba et al., 2006; Thomas et al., 2007; Thompson and Goodman, 2009], and abnormalities in these systems may contribute to the development of psychopathology [Easter et al., 2005; Kim et al., 2011].

Consistent with the behavioral literature [De Sonneville et al., 2002; Durand et al., 2007], in the current study there were significant age effects on task behavioral performance (i.e., response time and accuracy). Specifically, we found a substantially stronger association between response time and age than between accuracy and age, consistent with response time being a more sensitive measure across the studied age range [De Sonneville et al., 2002]. However, we did not find a significant emotion × age interaction for either response time or accuracy, which differs from previous evidence of asynchronous development across different facial expressions. For example, there is evidence that the ability to recognize more basic expressions (e.g., happy, angry) develops earlier than the ability to recognize more complex emotions (e.g., surprise, shame) [Ale et al., 2010; Broeren et al., 2011; De Sonneville et al., 2002; Durand et al., 2007]. However, there is also evidence that children have learned to accurately label happy and sad faces by the age of 5 or 6 and to discriminate fearful, angry, and neutral expressions by age 10 [Durand et al., 2007]. Therefore, with the age range (7–25 years) and basic facial expressions (i.e., angry, fearful, and happy) in this study, we may not be able to capture developmental differences in accuracy or response time across emotions.

It is important to note that despite the relatively large sample size spanning a wide range of ages from early childhood to young adulthood, we did not find effects of age on overall amygdala activation for any of the emotion conditions. Previous work has found age‐related decreases in amygdala activation during the processing of fearful faces [Gee et al., 2013; Guyer et al., 2008; Killgore et al., 2001; Monk et al., 2003]. Age‐related decreases in amygdala activation have been observed for passive viewing or implicit processing of emotional expressions [Guyer et al., 2008; Gee et al., 2013; Monk et al., 2003; Passarotti et al., 2009]. In contrast, no effects of age were observed on amygdala activity during explicit processing or labeling of facial expressions [Passarotti et al., 2009; Monk et al., 2003]. In addition, Todd et al. [2011] reported an age‐related increase in amygdala response to angry faces and found that children and adults show opposite patterns of bias for facial expressions. There is also evidence of greater amygdala activation during explicit compared to implicit processing of facial emotion [Habel et al., 2007]. The current study is among the first to evaluate age‐related changes in amygdala activation during an explicit emotional face matching task. It is possible that differences between explicit and implicit emotional processing, and differences in task type, contribute to the mixed findings across the current study and the literature.

Several limitations of this study should be considered. First, in‐scanner head motion can lead to substantial signal changes in fMRI images and can influence accurate estimation of brain activity and functional connectivity [Friston et al., 1996; Power et al., 2012, 2014; Pujol et al., 2014; Satterthwaite et al., 2012, 2013; Van Dijk et al., 2012]. It is particularly important to correct motion‐related artifacts in neurodevelopmental studies, as head motion has been found to inversely relate to age in healthy children and adolescents [Yuan et al., 2009], and children exhibit greater head motion relative to young adults [Pujol et al., 2014]. Recognizing this issue, we took several steps to reduce and control for motion in this study. Firstly, during data acquisition, custom‐made foam padding was placed in the head coil to minimize head motion, and a custom‐made infrared eye‐tracking camera was used to monitor head motion and subject alertness in real time. Secondly, participants with significant motion (n = 8; e.g., >5% of volumes with 3‐mm motion or substantial micromotion [FD >0.5 mm]) were excluded from this study. Thirdly, during data processing (within‐subject first‐level analyses), motion parameters were included as nuisance regressors, and outlier volumes detected by ART or FD were censored and removed from the fMRI time series. Consistent outcomes were observed in the second‐level analyses for both outlier detection methods (ART or FD), each demonstrating a significant age effect on amygdala–ACC functional connectivity. Lastly, we also included individual mean framewise displacement in the second‐level analyses as a covariate, and the significant effect of age on amygdala–ACC functional connectivity persisted.
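As an illustration of the FD‐based screening described above, the following minimal sketch computes framewise displacement from six realignment parameters using the formulation of Power et al. [2012] (sum of absolute volume‐to‐volume changes, with rotations converted to millimeters on a 50‐mm sphere), flags volumes with FD >0.5 mm, and marks a run for exclusion when more than 5% of volumes are flagged. Several points are assumptions rather than details reported here: the column order and units of the motion array, the reading of the exclusion rule as "more than 5% of volumes above the FD threshold," and the function names, which are hypothetical. The 3‐mm gross‐motion criterion and the ART‐based detection are not modeled.

```python
import numpy as np


def framewise_displacement(motion, radius_mm=50.0):
    """Framewise displacement per volume, following Power et al. (2012).

    motion: array of shape (n_volumes, 6). ASSUMED layout: columns 0-2 are
    translations in mm, columns 3-5 are rotations in radians.
    """
    motion = np.asarray(motion, dtype=float)
    # Absolute change in each parameter between consecutive volumes.
    deltas = np.abs(np.diff(motion, axis=0))
    # Convert rotational changes to arc length on a sphere of radius_mm.
    deltas[:, 3:] *= radius_mm
    # The first volume has no predecessor, so its FD is set to 0.
    return np.concatenate([[0.0], deltas.sum(axis=1)])


def screen_run(motion, fd_threshold_mm=0.5, max_flagged_fraction=0.05):
    """Flag high-motion volumes and decide whether a run exceeds the limit.

    Hypothetical helper: thresholds mirror the values quoted in the text,
    but the exact exclusion rule is an assumption.
    """
    fd = framewise_displacement(motion)
    flagged = fd > fd_threshold_mm
    return {
        "fd": fd,
        "mean_fd": float(fd.mean()),
        "flagged_volumes": flagged,
        "exclude": bool(flagged.mean() > max_flagged_fraction),
    }


# Toy motion trace (NOT study data): four volumes, with a large x-translation
# jump at the last volume and no rotation.
toy_motion = np.zeros((4, 6))
toy_motion[:, 0] = [0.0, 0.1, 0.1, 0.9]

toy_screen = screen_run(toy_motion)
print(toy_screen["mean_fd"], toy_screen["exclude"])
```

The `mean_fd` value returned per participant is the kind of summary that could be entered as a second‐level covariate, and `flagged_volumes` is the kind of mask that could be used to censor volumes in a first‐level model.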
However, even using these stringent motion correction methods, it is impossible to completely remove or “undo” motion‐related artifacts in fMRI time series, and future research is needed to optimize approaches for movement correction in task‐based functional connectivity studies across development. In addition, though the current study extends previous work by examining processing of three emotion types (angry, fearful, and happy), we did not include neutral, sad, surprised, disgusted, or other emotional faces. Therefore, this study can only inform developmental changes in processing these three facial emotions, and it is possible that amygdala functional connectivity may develop distinctly for more complex facial expressions. Further, without a neutral face matching condition, this study used shape matching as the control condition, which prevents us from separating the effect of emotion from that of face processing. However, neutral faces are affectively ambiguous and often perceived as sad by children, raising questions about their validity as a control condition [Tottenham et al., 2013]. We examined development of functional connectivity using a cross‐sectional design, which estimates brain development through age‐related correlations or differences between age groups. This design is inherently more vulnerable to inter‐subject variance and cohort effects; however, the present study reported and replicated findings with a large sample size and a well‐validated task, although future longitudinal work is needed to further clarify developmental changes in neural circuitry underlying emotional face processing. Lastly, the clinical relevance of this positive‐to‐negative shift in correlation should be examined in the context of psychopathology, particularly in relation to the development of emotion‐related disorders across childhood, adolescence, and young adulthood.

Supporting information

Supporting Information Figure 1.

Supporting Information Figure 2.

Supporting Information Figure 3.

Supporting Information Figure 4.

ACKNOWLEDGMENT

The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

REFERENCES

- Ale CM, Chorney DB, Brice CS, Morris TL (2010): Facial affect recognition and social anxiety in preschool children. Early Child Dev Care 180:1349–1359.

- Baird AA, Gruber SA, Fein DA, Mass LC, Steingard RJ, Renshaw PF, Cohen BM, Yurgelun‐Todd DA (1999): Functional magnetic resonance imaging of facial affect recognition in children and adolescents. J Am Acad Child Adolesc Psychiatry 38:195–199.

- Bangen KJ, Bergheim M, Kaup AR, Mirzakhanian H, Wierenga CE, Jeste DV, Eyler LT (2014): Brains of optimistic older adults respond less to fearful faces. J Neuropsychiatry Clin Neurosci 26:155–163.

- Batty M, Taylor MJ (2006): The development of emotional face processing during childhood. Dev Sci 9:207–220.

- Beesdo K, Knappe S, Pine DS (2009): Anxiety and anxiety disorders in children and adolescents: Developmental issues and implications for DSM‐V. Psychiatr Clin North Am 32:483–524.

- Blackford JU, Pine DS (2012): Neural substrates of childhood anxiety disorders: A review of neuroimaging findings. Child Adolesc Psychiatr Clin N Am 21:501–525.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR (1996): Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17:875–887.

- Broeren S, Muris P, Bouwmeester S, Field A, Voerman J (2011): Processing biases for emotional faces in 4‐ to 12‐year‐old non‐clinical children: An exploratory study of developmental patterns and relationships with social anxiety and behavioral inhibition. J Exp Psychopathol 2:454–474.

- Casey BJ, Getz S, Galvan A (2008): The adolescent brain. Dev Rev 28:62–77.

- Cisler JM, Bush K, Steele JS (2014): A comparison of statistical methods for detecting context‐modulated functional connectivity in fMRI. NeuroImage 84:1042–1052.

- Cox RW (1996): AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29:162–173.

- Decety J, Michalska KJ, Kinzler KD (2012): The contribution of emotion and cognition to moral sensitivity: A neurodevelopmental study. Cereb Cortex 22:209–220.

- Denham SA, Blair KA, DeMulder E, Levitas J, Sawyer K, Auerbach‐Major S, Queenan P (2003): Preschool emotional competence: Pathway to social competence? Child Dev 74:238–256.

- Durand K, Gallay M, Seigneuric A, Robichon F, Baudouin J‐Y (2007): The development of facial emotion recognition: The role of configural information. J Exp Child Psychol 97:14–27.

- Easter J, McClure EB, Monk CS, Dhanani M, Hodgdon H, Leibenluft E, Charney DS, Pine DS, Ernst M (2005): Emotion recognition deficits in pediatric anxiety disorders: Implications for amygdala research. J Child Adolesc Psychopharmacol 15:563–570.

- Ebner NC, Johnson MK, Fischer H (2012): Neural mechanisms of reading facial emotions in young and older adults. Front Psychol 3:223.

- Ensor R, Spencer D, Hughes C (2011): “You feel sad?” Emotion understanding mediates effects of verbal ability and mother–child mutuality on prosocial behaviors: Findings from 2 years to 4 years. Soc Dev 20:93–110.

- Fine SE, Izard CE, Mostow AJ, Trentacosta CJ, Ackerman BP (2003): First grade emotion knowledge as a predictor of fifth grade self‐reported internalizing behaviors in children from economically disadvantaged families. Dev Psychopathol 15:331–342.

- Fitzgerald DA, Angstadt M, Jelsone LM, Nathan PJ, Phan KL (2006): Beyond threat: Amygdala reactivity across multiple expressions of facial affect. NeuroImage 30:1441–1448.

- Friston KJ, Williams S, Howard R, Frackowiak RS, Turner R (1996): Movement‐related effects in fMRI time‐series. Magn Reson Med 35:346–355.

- Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ (1997): Psychophysiological and modulatory interactions in neuroimaging. NeuroImage 6:218–229.

- Gee DG, Humphreys KL, Flannery J, Goff B, Telzer EH, Shapiro M, Hare TA, Bookheimer SY, Tottenham N (2013): A developmental shift from positive to negative connectivity in human amygdala–prefrontal circuitry. J Neurosci 33:4584–4593.

- Giedd JN, Keshavan M, Paus T (2008): Why do many psychiatric disorders emerge during adolescence? Nat Rev Neurosci 9:947–957.

- Goddings A‐L, Mills KL, Clasen LS, Giedd JN, Viner RM, Blakemore S‐J (2014): The influence of puberty on subcortical brain development. NeuroImage 88:242–251.

- Gogtay N, Giedd JN, Lusk L, Hayashi KM, Greenstein D, Vaituzis AC, Nugent TF, Herman DH, Clasen LS, Toga AW, Rapoport JL, Thompson PM (2004): Dynamic mapping of human cortical development during childhood through early adulthood. Proc Natl Acad Sci USA 101:8174–8179.

- Grubbs FE (1974): Procedures for detecting outlying observations in samples. Technometrics 11:53.

- Guyer AE, Monk CS, McClure‐Tone EB, Nelson EE, Roberson‐Nay R, Adler AD, Fromm SJ, Leibenluft E, Pine DS, Ernst M (2008): A developmental examination of amygdala response to facial expressions. J Cogn Neurosci 20:1565–1582.

- Habel U, Windischberger C, Derntl B, Robinson S, Kryspin‐Exner I, Gur RC, Moser E (2007): Amygdala activation and facial expressions: Explicit emotion discrimination versus implicit emotion processing. Neuropsychologia 45:2369–2377.

- Hariri AR, Bookheimer SY, Mazziotta JC (2000): Modulating emotional responses: Effects of a neocortical network on the limbic system. Neuroreport 11:43–48.

- Hariri AR, Mattay VS, Tessitore A, Fera F, Weinberger DR (2003): Neocortical modulation of the amygdala response to fearful stimuli. Biol Psychiatry 53:494–501.

- Hariri AR, Tessitore A, Mattay VS, Fera F, Weinberger DR (2002): The amygdala response to emotional stimuli: A comparison of faces and scenes. NeuroImage 17:317–323.

- Herba CM, Landau S, Russell T, Ecker C, Phillips ML (2006): The development of emotion‐processing in children: Effects of age, emotion, and intensity. J Child Psychol Psychiatry 47:1098–1106.

- Herba C, Phillips M (2004): Annotation: Development of facial expression recognition from childhood to adolescence: Behavioural and neurological perspectives. J Child Psychol Psychiatry 45:1185–1198.

- Hung Y, Smith ML, Taylor MJ (2012): Development of ACC–amygdala activations in processing unattended fear. NeuroImage 60:545–552.

- Killgore WD, Oki M, Yurgelun‐Todd DA (2001): Sex‐specific developmental changes in amygdala responses to affective faces. Neuroreport 12:427–433.

- Kim MJ, Loucks RA, Palmer AL, Brown AC, Solomon KM, Marchante AN, Whalen PJ (2011): The structural and functional connectivity of the amygdala: From normal emotion to pathological anxiety. Behav Brain Res 223:403–410.

- Lancaster JL, Rainey LH, Summerlin JL, Freitas CS, Fox PT, Evans AC, Toga AW, Mazziotta JC (1997): Automated labeling of the human brain: A preliminary report on the development and evaluation of a forward‐transform method. Hum Brain Mapp 5:238–242.

- Leppänen JM, Nelson CA (2009): Tuning the developing brain to social signals of emotions. Nat Rev Neurosci 10:37–47.

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH (2003): An automated method for neuroanatomic and cytoarchitectonic atlas‐based interrogation of fMRI data sets. NeuroImage 19:1233–1239.

- Matthew B, Jean‐Luc A, Romain V, Jean‐Baptiste P. Region of interest analysis using an SPM toolbox [abstract] Presented at the 8th International Conference on Functional Mapping of the Human Brain, June 2–6, 2002, Sendai, Japan.

- McLaren DG, Ries ML, Xu G, Johnson SC (2012): A generalized form of context‐dependent psychophysiological interactions (gPPI): A comparison to standard approaches. NeuroImage 61:1277–1286.

- Mills KL, Goddings A‐L, Clasen LS, Giedd JN, Blakemore S‐J (2014): The developmental mismatch in structural brain maturation during adolescence. Dev Neurosci 36:147–160.

- Monk CS, McClure EB, Nelson EE, Zarahn E, Bilder RM, Leibenluft E, Charney DS, Ernst M, Pine DS (2003): Adolescent immaturity in attention‐related brain engagement to emotional facial expressions. NeuroImage 20:420–428.

- Ochsner KN, Gross JJ (2005): The cognitive control of emotion. Trends Cogn Sci 9:242–249.

- Østby Y, Tamnes CK, Fjell AM, Westlye LT, Due‐Tønnessen P, Walhovd KB (2009): Heterogeneity in subcortical brain development: A structural magnetic resonance imaging study of brain maturation from 8 to 30 years. J Neurosci 29:11772–11782.

- Passarotti AM, Sweeney JA, Pavuluri MN (2009): Neural correlates of incidental and directed facial emotion processing in adolescents and adults. Soc Cogn Affect Neurosci 4:387–398.

- Perlman SB, Pelphrey KA (2011): Developing connections for affective regulation: Age‐related changes in emotional brain connectivity. J Exp Child Psychol 108:607–620.

- Phan KL, Angstadt M, Golden J, Onyewuenyi I, Popovska A, de Wit H (2008): Cannabinoid modulation of amygdala reactivity to social signals of threat in humans. J Neurosci 28:2313–2319.

- Phan KL, Wager T, Taylor SF, Liberzon I (2002): Functional neuroanatomy of emotion: A meta‐analysis of emotion activation studies in PET and fMRI. NeuroImage 16:331–348.

- Phillips ML, Drevets WC, Rauch SL, Lane R (2003): Neurobiology of emotion perception I: The neural basis of normal emotion perception. Biol Psychiatry 54:504–514.

- Power JD, Barnes KA, Snyder AZ, Schlaggar BL, Petersen SE (2012): Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. NeuroImage 59:2142–2154.

- Power JD, Mitra A, Laumann TO, Snyder AZ, Schlaggar BL, Petersen SE (2014): Methods to detect, characterize, and remove motion artifact in resting state fMRI. NeuroImage 84:320–341.

- Prater KE, Hosanagar A, Klumpp H, Angstadt M, Phan KL (2013): Aberrant amygdala–frontal cortex connectivity during perception of fearful faces and at rest in generalized social anxiety disorder. Depress Anxiety 30:234–241.

- Pujol J, Macià D, Blanco‐Hinojo L, Martínez‐Vilavella G, Sunyer J, de la Torre R, Caixàs A, Martín‐Santos R, Deus J, Harrison BJ (2014): Does motion‐related brain functional connectivity reflect both artifacts and genuine neural activity? NeuroImage 101:87–95.

- Satterthwaite TD, Wolf DH, Loughead J, Ruparel K, Elliott MA, Hakonarson H, Gur RC, Gur RE (2012): Impact of in‐scanner head motion on multiple measures of functional connectivity: Relevance for studies of neurodevelopment in youth. NeuroImage 60:623–632.

- Satterthwaite TD, Elliott MA, Gerraty RT, Ruparel K, Loughead J, Calkins ME, Eickhoff SB, Hakonarson H, Gur RC, Gur RE, Wolf DH (2013): An improved framework for confound regression and filtering for control of motion artifact in the preprocessing of resting‐state functional connectivity data. NeuroImage 64:240–256.

- De Sonneville LMJ, Verschoor CA, Njiokiktjien C, Op het Veld V, Toorenaar N, Vranken M (2002): Facial identity and facial emotions: Speed, accuracy, and processing strategies in children and adults. J Clin Exp Neuropsychol 24:200–213.

- Sowell ER, Thompson PM, Holmes CJ, Jernigan TL, Toga AW (1999): In vivo evidence for post‐adolescent brain maturation in frontal and striatal regions. Nat Neurosci 2:859–861.

- Swartz JR, Carrasco M, Wiggins JL, Thomason ME, Monk CS (2014): Age‐related changes in the structure and function of prefrontal cortex–amygdala circuitry in children and adolescents: A multi‐modal imaging approach. NeuroImage 86:212–220.

- Thomas KM, Drevets WC, Whalen PJ, Eccard CH, Dahl RE, Ryan ND, Casey BJ (2001): Amygdala response to facial expressions in children and adults. Biol Psychiatry 49:309–316.

- Thomas LA, De Bellis MD, Graham R, LaBar KS (2007): Development of emotional facial recognition in late childhood and adolescence. Dev Sci 10:547–558.

- Thompson RA, Goodman M (2009): Development of emotion regulation: More than meets the eye. In: Kring AM, Sloan DM, editors. Emotion Regulation and Psychopathology: A Transdiagnostic Approach to Etiology and Treatment. Guilford Press, New York. pp 38–58.

- Todd RM, Evans JW, Morris D, Lewis MD, Taylor MJ (2011): The changing face of emotion: Age‐related patterns of amygdala activation to salient faces. Soc Cogn Affect Neurosci 6:12–23.

- Tottenham N, Phuong J, Flannery J, Gabard‐Durnam L, Goff B (2013): A negativity bias for ambiguous facial expression valence during childhood: Converging evidence from behavior and facial corrugator muscle responses. Emotion 13:92–103.

- Tzourio‐Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M (2002): Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single‐subject brain. NeuroImage 15:273–289.

- Van Dijk KRA, Sabuncu MR, Buckner RL (2012): The influence of head motion on intrinsic functional connectivity MRI. NeuroImage 59:431–438.

- Wierenga L, Langen M, Ambrosino S, van Dijk S, Oranje B, Durston S (2014a): Typical development of basal ganglia, hippocampus, amygdala and cerebellum from age 7 to 24. NeuroImage 96:67–72.

- Wierenga LM, Langen M, Oranje B, Durston S (2014b): Unique developmental trajectories of cortical thickness and surface area. NeuroImage 87:120–126.

- Winecoff A, Clithero JA, Carter RM, Bergman SR, Wang L, Huettel SA (2013): Ventromedial prefrontal cortex encodes emotional value. J Neurosci 33:11032–11039.

- Yuan W, Altaye M, Ret J, Schmithorst V, Byars AW, Plante E, Holland SK (2009): Quantification of head motion in children during various fMRI language tasks. Hum Brain Mapp 30:1481–1489.

- Yurgelun‐Todd D (2007): Emotional and cognitive changes during adolescence. Curr Opin Neurobiol 17:251–257.
