Abstract

Although Schizophrenia (SCZ) and Autism Spectrum Disorder (ASD) share impairments in emotion recognition, the mechanisms underlying these impairments may differ. The current study used the novel “Emotions in Context” task to examine how the interpretation and visual inspection of facial affect is modulated by congruent and incongruent emotional contexts in SCZ and ASD. Both adults with SCZ (n = 44) and those with ASD (n = 21) exhibited reduced affect recognition relative to typically-developing (TD) controls (n = 39) when faces were integrated within broader emotional scenes but not when they were presented in isolation, underscoring the importance of using stimuli that better approximate real-world contexts. Additionally, viewing faces within congruent emotional scenes improved accuracy and visual attention to the face for controls more so than the clinical groups, suggesting that individuals with SCZ and ASD may not benefit from the presence of complementary emotional information as readily as controls. Despite these similarities, important distinctions between SCZ and ASD were found. In every condition, IQ was related to emotion-recognition accuracy for the SCZ group but not for the ASD or TD groups. Further, only the ASD group failed to increase their visual attention to faces in incongruent emotional scenes, suggesting a lower reliance on facial information within ambiguous emotional contexts relative to congruent ones. Collectively, these findings highlight both shared and distinct social cognitive processes in SCZ and ASD that may contribute to their characteristic social disabilities.

Key words: emotion, emotion recognition, face processing, social cognition, social attention, social motivation

Introduction

During the early 20th century, Soviet filmmaker Lev Kuleshov underscored the importance of film editing by alternating video of an actor’s neutral facial expression with emotional scenes.1 He noted that contextual framing led viewers to attribute distinct emotional states to the same expression. Subsequent empirical research has validated the Kuleshov effect, demonstrating that facial affect perception is highly influenced by contextual cues (for a review, see Barrett et al2). Indeed, the perception of context-free faces, as typically examined in psychological and psychiatric research, is the exception in real-world environments rather than the norm.3

For individuals with Schizophrenia (SCZ) and Autism Spectrum Disorder (ASD), impairments in the recognition of facial emotion are common4–6 and may contribute to the social disability that characterizes both conditions.7,8 Overwhelmingly, these impairments are demonstrated using emotional faces presented in isolation,9–12 which maximizes experimental control at the expense of some ecological validity.13 Although such studies suggest reduced ability in SCZ and ASD to decode prototypical facial affect, the use of context-free faces may fail to capture how each group naturally interprets facial emotion within more complex social situations.3,14 Context is often necessary for accurately assessing facial affect in everyday environments—inferring a person’s emotional state changes if, eg, she/he is crying at a wedding compared to a funeral—and thus examining emotion perception strategies within more naturalistic social contexts may better capture the real-world processes underlying impaired affect recognition in SCZ and ASD.13,15–17 Further, given that prominent cognitive theories have suggested that both SCZ and ASD are characterized by a reduced tendency to integrate contextual information,18,19 impairments in affect recognition may be underestimated for both groups when faces are presented in isolation rather than within broader social contexts.

Identifying mechanisms underlying these impairments can also be aided by directly comparing the emotion-recognition strategies of adults with SCZ to those with ASD. Because ASD and SCZ are uniformly found to be impaired in many aspects of social cognition relative to nonclinical controls,20–22 understanding the nature of these deficits may be limited from studies in which their performance is only compared in reference to a typically-developing (TD) comparison group. Systematically-matched direct comparisons between SCZ and ASD on a common task may help identify disorder-specific mechanisms underlying general similarities in social cognitive impairment. Indeed, comparison of social cognitive profiles between SCZ and ASD suggests that comparable overall task performance can often occur for different reasons (for a review, see Sasson et al23). In this sense, direct comparisons can illuminate “how” each group performs on social cognitive tasks, not just “how well.” Ultimately, uncovering these processes is important for informing and refining treatment approaches targeting the distinctive needs of each group.24

The current study combines eye-tracking with the Emotions in Context task (ECT), a novel paradigm that compares affect recognition of faces in isolation to the same faces naturalistically integrated into congruent and incongruent emotional contexts, to examine whether the interpretation and visual inspection of facial affect is modulated by contextual cues differently in SCZ relative to ASD and TD controls. Unlike some prior research that has examined context effects on emotion recognition in SCZ or ASD by superimposing faces on top of a scenic background,25 or displaying them immediately after context is presented,26,27 here they are realistically integrated into the emotional scene as the face of the primary character. In this way, facial affect is not presented separately from, or sequentially after, emotional context, which may discourage the use of context to inform affect recognition, but rather occurs directly within an emotional context. Although several prior studies have leveraged this approach to show that congruent emotional contexts do not augment recognition of, or visual attention to, facial affect in SCZ and ASD to the same degree as controls,14,16,17,28 each of these studies only included a single clinical group and did not directly compare performance between SCZ and ASD. Further, the addition of an “incongruent” context enables the examination of how emotional sources are prioritized in SCZ and ASD when the 2 are in conflict, and the degree to which it modulates recognition judgements.

Consistent with prior research, both clinical groups were predicted to exhibit reduced affect recognition across all conditions, less of a benefit to recognition accuracy when faces are presented within congruent contextual scenes, and lower visual attention to faces within scenes. Extending upon prior work, we predicted that accuracy of emotional judgements for clinical participants would be less affected by incongruent information than controls, which would suggest a reduced tendency to integrate facial and contextual cues and a greater reliance on a single source of emotional information. Further, we hypothesized several mechanistic differences between the SCZ and ASD groups. First, given evidence that visual attention to surrounding context during facial affect recognition is reduced in SCZ16 but increased in ASD,29 we predicted that the SCZ group would devote a greater proportion of their fixation time to faces within scenes than the ASD group, particularly in the presence of conflicting emotional cues (ie, the incongruent condition). Second, a greater tendency to “jump to conclusions” in SCZ30 would manifest in faster response times (RT) than in ASD when both face and scene sources of emotion are available for consideration. Finally, because general cognition correlates with behavioral performance on social cognitive tasks in SCZ31 and can account for up to 60% of the variance in functional outcome,32 while in ASD social cognitive deficits33–35 and poor functional outcomes persist even in those with high IQs,36 we predicted that IQ would correlate with emotion-recognition accuracy in SCZ to a greater degree than in ASD.

Methods and Materials

Participants

One hundred four individuals (44 with SCZ, 21 with ASD, and 39 TD controls) participated (see table 1 for sample characteristics). Outpatients with SCZ were recruited from the Schizophrenia Research Center at the University of Pennsylvania and Metrocare Services in Dallas, Texas. Diagnoses were confirmed using the Structured Clinical Interview for DSM-IV and chart review. Individuals with ASD were recruited from the UT-Dallas Autism Research Collaborative, a confidential registry of local adults with a confirmed ASD diagnosis via the Autism Diagnostic Observation Schedule37 who have agreed to be contacted about research studies. Symptom severity was assessed in both clinical groups with the Positive and Negative Syndrome Scale (PANSS38). TD individuals with no history of mental illness or neurological impairment, comparable on age, gender composition, ethnicity, and estimated IQ with the SCZ sample, were recruited from the local community.

Table 1.

Sample Characteristics

| Characteristic | SCZ (n = 44) | ASD (n = 21) | Control (n = 39) | P-value |
|---|---|---|---|---|
| | N | N | N | |
| Gender | 27 M, 17 F | 18 M, 3 F | 23 M, 16 F | .09 |
| Ethnicity | 21 Ca, 19 AA, 4 Other | 20 Ca, 1 Other | 17 Ca, 16 AA, 7 Other | <.001 |
| Medication | | | | |
| Atypical only | 36 | 4 | — | <.001 |
| Typical only | 2 | — | — | — |
| Both | 1 | — | — | — |
| | Mean (SD) | Mean (SD) | Mean (SD) | |
| Age | 35.34 (10.56) | 23.43 (4.36) | 35.87 (9.33) | <.001 |
| Years of education | 13.09 (2.51) | 13.81 (1.99) | 14.28 (2.05) | .057 |
| WRAT IQ | 94.11 (20.28) | 101.48 (16.97) | 100.56 (14.87) | .161 |
| PANSS | | | | |
| Positive total | 15.84 (5.76) | 9.33 (2.65) | — | <.001 |
| Negative total | 11.11 (4.99) | 11.43 (3.68) | — | .798 |
| General total | 28.64 (6.82) | 23.77 (5.18) | — | .005 |

Note: SCZ, Schizophrenia; ASD, Autism Spectrum Disorder; M, male; F, female; AA, African American; Ca, Caucasian; WRAT, Wide Range Achievement Test, PANSS, Positive and Negative Syndrome Scale.

The 3 groups did not differ statistically in gender, education, or intellectual functioning as estimated by the Wide Range Achievement Test, third edition (WRAT-3),39 though the ASD group was younger and less ethnically diverse than the SCZ and TD groups. On the PANSS, the SCZ group had significantly higher Positive and General symptoms, but not Negative symptoms, than the ASD group. The Institutional Review Boards at UT-Dallas and the University of Pennsylvania approved this study, and all participants provided informed consent.

Stimuli

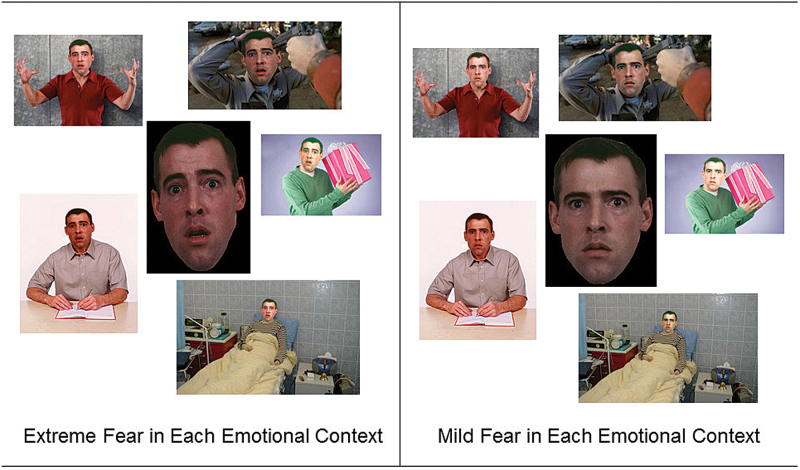

Phase 1 of the ECT consists of 9 colored photographs of an individual’s face (from Kohler et al11) presented in isolation expressing intense and mild versions of happiness, sadness, anger, and fear, plus a neutral expression. Phase 2 consists of 25 images (5 per emotion) of emotional scenes featuring a forward-facing individual whose face had been digitally masked. Phase 3 consists of 45 images: each of the 9 faces from phase 1 is naturalistically integrated using photo-editing software into 5 emotional scenes (1 congruent, 4 incongruent; see figure 1). For example, the same happy face was integrated into happy, angry, fearful, sad, and neutral scenes. See supplementary materials for details regarding the development and validation of the ECT.
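The phase 3 design described above can be enumerated as a simple cross of faces and scene emotions. The following is a minimal sketch for illustration only: the label strings and the `build_phase3_trials` helper are hypothetical names, and the sketch assumes that the neutral face counts as congruent only when paired with the neutral scene.

```python
from itertools import product

EMOTIONS = ["happy", "sad", "anger", "fear", "neutral"]

# 9 faces: intense and mild versions of the four emotions, plus one neutral face.
FACES = [(emotion, level) for emotion in EMOTIONS[:4] for level in ("intense", "mild")]
FACES.append(("neutral", None))


def build_phase3_trials():
    """Cross every face with every scene emotion (hypothetical helper)."""
    trials = []
    for (face_emotion, intensity), scene_emotion in product(FACES, EMOTIONS):
        trials.append({
            "face": face_emotion,
            "intensity": intensity,
            "scene": scene_emotion,
            # A trial is congruent when face and scene show the same emotion.
            "congruent": face_emotion == scene_emotion,
        })
    return trials


trials = build_phase3_trials()
congruent = [t for t in trials if t["congruent"]]
print(len(trials), len(congruent), len(trials) - len(congruent))  # 45 9 36
```

Under these assumptions the 45 images split into 9 congruent and 36 incongruent pairings, which matches the stated 1 congruent and 4 incongruent scenes per face.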

Fig. 1.

An intense (left) and mild (right) fearful face, surrounded by congruent and incongruent emotional scenes in which the face has been integrated. The congruent scene (top right) is followed clockwise by incongruent happy, sad, neutral and angry emotional contexts.

Procedure

Testing occurred in 1 of 3 similar research laboratories using equivalent eye-tracking equipment. A Tobii T60-XL eye-tracker (Tobii Technology) displayed the task and collected all behavioral and eye-tracking data. Participants sat approximately 60cm from a 24″ computer monitor integrated with the eye-tracking system. The system tracks both eyes at 60 Hz. Raw data were aggregated into fixations using a criterion of gaze remaining within a radius of 30 pixels for a minimum of 100ms. Prior to the task, a standard 5-point calibration procedure was used and repeated until quality was high. Calibration occurred again before phase 3. Fourteen participants (8 TD, 4 SCZ and 2 ASD) included in behavioral analyses were excluded from the fixation analyses because eye-tracking data were either missing or poor quality (less than a third of gaze recorded).
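The fixation criterion above (gaze remaining within a 30-pixel radius for at least 100 ms at 60 Hz) can be illustrated with a generic dispersion-style grouping. This is a sketch under stated assumptions, not the eye-tracker's own filter, which the text does not describe: it assumes duration is counted as samples divided by sampling rate (so 100 ms corresponds to 6 samples), it measures the radius from the running centroid, and the rectangular face region and all function names are hypothetical.

```python
import math


def detect_fixations(samples, radius_px=30.0, min_duration_ms=100.0, rate_hz=60.0):
    """Group consecutive gaze samples into fixations.

    samples: list of (x, y) gaze positions in pixels, one per tracker sample.
    Returns tuples of (first_index, last_index, center_x, center_y, duration_ms).
    """
    # Assumption: duration = sample count / sampling rate (100 ms at 60 Hz = 6 samples).
    min_samples = math.ceil(min_duration_ms * rate_hz / 1000.0)
    fixations = []
    start, n = 0, len(samples)
    while start < n:
        cx, cy = samples[start]
        end = start + 1
        while end < n:
            count = end - start + 1
            x, y = samples[end]
            # Centroid of the window if the next sample were included.
            new_cx = cx + (x - cx) / count
            new_cy = cy + (y - cy) / count
            window = samples[start:end + 1]
            if any(math.hypot(px - new_cx, py - new_cy) > radius_px for px, py in window):
                break
            cx, cy = new_cx, new_cy
            end += 1
        length = end - start
        if length >= min_samples:
            fixations.append((start, end - 1, cx, cy, length * 1000.0 / rate_hz))
            start = end
        else:
            start += 1  # too short to be a fixation; slide the window forward
    return fixations


def face_fixation_share(fixations, face_box):
    """Proportion of total fixation time whose center lies inside a rectangle."""
    left, top, right, bottom = face_box
    total = sum(f[4] for f in fixations)
    if total == 0:
        return 0.0
    on_face = sum(f[4] for f in fixations
                  if left <= f[2] <= right and top <= f[3] <= bottom)
    return on_face / total


# Made-up gaze trace: 8 stable samples, 3 scattered samples, 6 stable samples.
gaze = [(100, 100)] * 8 + [(300, 100), (400, 300), (200, 400)] + [(500, 500)] * 6
fixations = detect_fixations(gaze)
share = face_fixation_share(fixations, (50, 50, 150, 150))
```

With this made-up trace, the scattered samples are discarded and two fixations remain, one of which falls inside the hypothetical face rectangle.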

Prior to each phase, participants followed along as the experimenter read the on-screen instructions informing them that they were going to view a series of pictures depicting a man, and their job was to answer the question, “How does this man feel?” as accurately and quickly as possible using the computer mouse to select one of the following choices appearing as text on the right side of the screen: Happy, Sad, Anger, Fear, No Emotion. Approximately 70% of the viewing area consisted of the image, with the remaining 30% devoted to the emotion choices. Participants were given the opportunity to ask questions, and before each phase began, they completed a practice trial using images not included in the actual task. The task did not start until this practice item was answered correctly. Within each phase, images appeared one at a time in a randomized order and remained on screen until the participant responded. To reorient attention and ensure that all gaze began in the same location for each participant, a cross-hair appeared at the center of the screen for 2 seconds before each trial. To reduce memory effects of prior exposure to the faces and scenes, the final phase was completed approximately 30 minutes after the first 2 phases, with other tasks from a larger battery occurring during the interim.

Data Analysis

Although all significant main effects are reported, we only report significant interactions with group given the large number of possible interactions and our focus on group differences. Main effects for variables with more than 2 levels were followed with post hoc comparisons, and significant interactions with group were probed with pairwise t tests. Further, because of group differences in age and ethnicity, we covaried these in all analyses.

Emotional judgments and RT were first compared between the groups using two 3 × 4 × 5 mixed-model ANOVAs in which clinical status (SCZ vs ASD vs TD) was the between-group variable, and condition (isolation vs scenes vs congruent vs incongruent) and emotion (anger, fear, happy, sad, neutral) were the within-group variables. In phase 2, where no facial information was available, emotion-recognition accuracy was defined by the emotion expressed in the scene. In all other conditions, emotion-recognition accuracy was defined by the emotion expressed by the face. Expression intensity (mild vs intense) was not included in the ANOVAs because preliminary analyses indicated that despite exerting a main effect in each condition (intense > mild; all Ps < .001), intensity did not interact with group in any condition (all Ps > .13). Moreover, collapsing across intensity levels enabled us to include “neutral” expressions, as well as the entirety of phase 2, in our mixed-model analyses. A similar mixed-model ANOVA, differing from the previous ones only in omitting the face in isolation condition, was used to examine the percentage of onscreen fixation time devoted to the face region within scenes. Finally, correlations between IQ and emotion-recognition accuracy, as well as between the percentage of fixation time on the face and accuracy, were performed for each group.
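The scoring rule described above (scene emotion as the target in phase 2, face emotion everywhere else) can be sketched as follows. The condition labels, dictionary keys, and function names are hypothetical, and the example trials are made up for illustration rather than taken from the study.

```python
from collections import defaultdict


def target_emotion(trial):
    """Return the emotion that counts as correct for a trial.

    In the scenes-only condition no face is visible, so the scene defines
    the correct answer; in every other condition the face does.
    """
    if trial["condition"] == "scenes":
        return trial["scene"]
    return trial["face"]


def accuracy_by_condition(trials):
    """Percentage of correct responses per condition."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for trial in trials:
        totals[trial["condition"]] += 1
        if trial["response"] == target_emotion(trial):
            hits[trial["condition"]] += 1
    return {cond: 100.0 * hits[cond] / totals[cond] for cond in totals}


# Made-up responses from one hypothetical participant.
example_trials = [
    {"condition": "isolation", "face": "fear", "scene": None, "response": "fear"},
    {"condition": "scenes", "face": None, "scene": "sad", "response": "sad"},
    {"condition": "congruent", "face": "happy", "scene": "happy", "response": "happy"},
    {"condition": "incongruent", "face": "fear", "scene": "happy", "response": "happy"},
    {"condition": "incongruent", "face": "anger", "scene": "sad", "response": "anger"},
]
scores = accuracy_by_condition(example_trials)
```

Per-participant percentages of this kind, computed for each condition and emotion, would then form the cells entered into the mixed-model ANOVA.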

Results

Descriptive statistics for all analyses can be viewed in table 2.

Table 2.

Descriptive Statistics

| Condition | SCZ | ASD | Controls | P-value |
|---|---|---|---|---|
| Face in isolation | | | | |
| % Correct | 82.8 (14.7) | 82.5 (10.9) | 85.7 (13.1) | .176 |
| Response time (in s) | 4.5 (1.9) | 4.0 (2.0) | 3.3 (1.2) | .007 |
| Scenes in isolation | | | | |
| % Correct | 84.3 (13.4) | 86.3 (10.1) | 90.6 (7.6) | .016 |
| Response time (in s) | 4.6 (2.1) | 5.0 (2.0) | 3.8 (1.5) | .014 |
| % Fixation time on face (masked) | 13.7 (6.6) | 16.2 (7.9) | 16.6 (5.6) | .179 |
| Congruent context | | | | |
| % Correct | 84.3 (18.4) | 82.9 (11.0) | 91.8 (8.7) | .009 |
| Response time (in s) | 3.5 (1.7) | 3.3 (1.4) | 2.9 (0.7) | .038 |
| % Fixation time on face | 39.2 (14.4) | 46.3 (15.2) | 48.2 (12.2) | .015 |
| Incongruent context | | | | |
| Accuracy | 66.2 (17.7) | 75.8 (11.8) | 80.7 (9.3) | <.001 |
| Response time (in s) | 4.2 (1.9) | 3.6 (1.6) | 3.5 (1.1) | .143 |
| % Fixation time on face | 43.4 (14.9) | 47.2 (14.3) | 50.8 (12.4) | .045 |

Note: Accuracy in the incongruent context is defined by the emotion shown on the face.

Accuracy

The prediction that both clinical groups would exhibit reduced emotion-recognition accuracy across all conditions was supported. A mixed-model ANOVA found a main effect of group (F(2,98) = 9.19, P < .001, ηp² = .16), with post hoc comparisons showing that TD controls demonstrated higher overall accuracy (88.2%) than both the SCZ group (80.2%; P < .001) and the ASD group (77.7%; P = .001), with the 2 clinical groups not differing from each other (P = .40). A main effect was also found for emotion (F(4,392) = 3.63, P < .006, ηp² = .036). Post hoc comparisons of emotion revealed happy (91.7%) > anger (87.6%; P = .005) > fear (80.7%; P < .001) = sadness (77.6%) = neutral (72.4%).
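The effect-size values reported with the F tests above are consistent with partial eta squared, which can be recovered from an F statistic and its degrees of freedom as F × df1 / (F × df1 + df2). The sketch below applies that standard identity to the two accuracy effects as a check; reading the values as partial eta squared is an inference from the numbers, and the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Main effect of group on accuracy: F(2,98) = 9.19
group_effect = partial_eta_squared(9.19, 2, 98)
# Main effect of emotion on accuracy: F(4,392) = 3.63
emotion_effect = partial_eta_squared(3.63, 4, 392)

print(round(group_effect, 2), round(emotion_effect, 3))  # 0.16 0.036
```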

The prediction that the congruent condition would not improve accuracy for the clinical groups to the same degree as controls was supported by a significant group × condition interaction (F(6,194) = 2.81, P = .011, ηp² = .054). The TD group exhibited significantly higher accuracy than the clinical groups on all conditions except the face in isolation condition (table 2). Further, accuracy was higher for the TD group in both the congruent condition (P = .007) and the scene condition (P = .034) relative to the face in isolation condition, but this was not the case for the SCZ and ASD groups. However, the prediction that accuracy for clinical participants would not be affected by incongruent information to the same degree as controls was not supported. For the incongruent context, accuracy declined from the face in isolation condition for all 3 groups (all Ps ≤ .02).

We examined whether group differences for accuracy on the incongruent condition (P < .001) were driven by the clinical groups being more likely to select the emotion depicted in the scene rather than the face. This did not occur. On trials in which the emotion on the face was not selected, the 3 groups did not differ in how often the emotion of the scene was selected (TD: 46.5%; SCZ: 47.0%; ASD: 42.1%; P = .640).
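The error analysis above asks, among incongruent trials on which the face emotion was not chosen, how often the scene emotion was chosen instead. A minimal sketch of that proportion follows; the dictionary keys and function name are hypothetical, and the trials are made up for illustration.

```python
def scene_selection_rate(incongruent_trials):
    """Share of face-incorrect responses that match the scene emotion.

    Returns None when the participant made no errors, because the
    proportion is undefined in that case.
    """
    errors = [t for t in incongruent_trials if t["response"] != t["face"]]
    if not errors:
        return None
    scene_choices = sum(1 for t in errors if t["response"] == t["scene"])
    return scene_choices / len(errors)


# Made-up incongruent trials: one correct response and three errors,
# only one of which matches the scene emotion.
example = [
    {"face": "fear", "scene": "happy", "response": "fear"},
    {"face": "fear", "scene": "happy", "response": "happy"},
    {"face": "sad", "scene": "anger", "response": "neutral"},
    {"face": "anger", "scene": "sad", "response": "fear"},
]
rate = scene_selection_rate(example)
```

A rate well below 1 indicates that many errors went to emotions shown by neither the face nor the scene.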

RT analyses can be found in supplementary materials.

Eye-tracking

The mixed-model ANOVA indicated main effects of group (F(2,84) = 5.36, P = .016, ηp² = .093), condition (F(3,168) = 69.81, P < .001, ηp² = .454), and emotion (F(4,336) = 2.97, P = .020, ηp² = .034). Supporting the prediction that the clinical groups would demonstrate lower visual attention to faces, the TD group devoted a significantly higher proportion of their fixation time to the face region (40.0%) relative to the SCZ group (33.0%; P = .005), with a trend relative to the ASD group (34.2%; P = .083). The prediction that the SCZ group would fixate the face more than the ASD group was not supported: the 2 clinical groups did not differ from each other (P = .633). For condition, time on the face was greater in the incongruent (46.9%) relative to the congruent condition (45.0%; P = .015), which, as expected, was greater than in the scenes condition with occluded face regions (15.3%; P < .001). For emotion, fixation time on face regions was significantly higher for fear (38.6%) than all other emotions (sad: 36.5%; angry: 33.5%; happy: 32.9%; Ps ≤ .009) except for neutral (37.2%).

The group × condition interaction was significant (F(4,84) = 2.66, P = .034, ηp² = .06). Whereas fixation time devoted to the face region did not differ between groups in the scene condition, it was higher in the TD group compared to the SCZ group (P = .003) and as a trend compared to the ASD group (P = .083) in the congruent condition, and higher relative to both the SCZ (P = .020) and the ASD groups (P = .045) in the incongruent condition. Further, whereas all groups increased their fixation time to the face region from the scene condition to both the congruent and incongruent conditions (all Ps < .001), both the TD and SCZ groups demonstrated an increase in fixation time to the face from the congruent to incongruent conditions (Ps ≤ .003) but the ASD group did not (P = .799). The difference in percentage of fixation time devoted to the face between these 2 conditions was significantly greater in the SCZ group relative to the ASD group (t(57) = 2.35, P = .022).

Correlations

The prediction that IQ would correlate with emotion-recognition accuracy in the SCZ group but not the ASD group was supported. IQ estimated by the WRAT-3 positively correlated with accuracy on each of the 4 conditions for the SCZ group (face in isolation: r = .484, P = .001; scenes: r = .387, P = .009; congruent: r = .410, P = .006; incongruent: r = .516; P < .001), whereas no significant correlations between IQ and accuracy emerged for the ASD or TD groups for any condition (ASD: face in isolation: r = .115, P = .592; scenes: r = .216, P = .346; congruent: r = .169, P = .430; incongruent: r = .260; P = .220; TD: face in isolation: r = .167, P = .310; scenes: r = .230, P = .159; congruent: r = .155, P = .347; incongruent: r = .308; P = .056). 95% confidence intervals of the difference in the r-values between the SCZ and ASD groups for the 4 conditions are as follows: face in isolation [−.095, .831]; scenes [−.301, .678]; congruent [−.221, .716]; incongruent [−.171, .717].
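A confidence interval for the difference between two independent correlations can be built from Fisher-transformed intervals for each correlation. The text does not state which method produced the intervals above, so the sketch below uses one common approach (often attributed to Zou) purely as an illustration. It also assumes the full group sizes of 44 and 21, so its output need not reproduce the reported intervals.

```python
import math
from statistics import NormalDist


def fisher_ci(r, n, level=0.95):
    """Confidence interval for a single correlation via Fisher's z transform."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2.0)
    z = math.atanh(r)
    half_width = z_crit / math.sqrt(n - 3)
    return math.tanh(z - half_width), math.tanh(z + half_width)


def diff_ci_independent(r1, n1, r2, n2, level=0.95):
    """Interval for r1 - r2 from two independent samples.

    Combines the distance from each estimate to its own interval limits.
    """
    l1, u1 = fisher_ci(r1, n1, level)
    l2, u2 = fisher_ci(r2, n2, level)
    diff = r1 - r2
    lower = diff - math.sqrt((r1 - l1) ** 2 + (u2 - r2) ** 2)
    upper = diff + math.sqrt((u1 - r1) ** 2 + (r2 - l2) ** 2)
    return lower, upper


# Face in isolation: SCZ r = .484 (assumed n = 44) vs ASD r = .115 (assumed n = 21)
lower, upper = diff_ci_independent(0.484, 44, 0.115, 21)
```

An interval that spans zero, as this one does under the stated assumptions, means the two correlations cannot be distinguished at the chosen confidence level.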

Percentage of fixation devoted to the face was significantly correlated with emotion-recognition accuracy in the incongruent condition for the SCZ group (r = .519, P = .001), with a trend occurring for the ASD (r = .408, P = .083) and TD groups (r = .325, P = .075). In the congruent condition, where scene information was just as informative as the face for emotion-recognition accuracy, these correlations did not reach significance for any of the 3 groups (SCZ: r = .207, P = .200; ASD: r = .150, P = .539; TD: r = .027, P = .884), nor did they in the scene condition, where no facial information was available (SCZ: r = .053, P =.744; ASD: r = .329, P = .169; TD: r = −.156, P = .401).

Discussion

This study utilized the novel ECT to compare how emotional content within scenes influences facial affect recognition for adults with SCZ and those with ASD. Contrary to recent meta-analyses,5,6 the SCZ and ASD groups did not differ from controls in emotion-recognition accuracy when faces were presented in isolation. In contrast, the TD group outperformed the SCZ and ASD groups when faces were integrated into congruent and incongruent emotional scenes. Thus, the group differences in accuracy in the context conditions were not driven by poorer facial affect recognition by the clinical participants generally, as the 3 groups did not differ when viewing the same faces in isolation. Rather, atypical performance in SCZ and ASD was only apparent in contextual conditions, suggesting that affect recognition differences in these clinical groups may manifest to a greater degree when multiple sources of emotional information are simultaneously available.

This interpretation is reinforced by specific findings from each context condition. Whereas emotion-recognition accuracy for the TD group increased from the face in isolation condition to the congruent context condition, such improvement did not occur for the SCZ and ASD groups. This is consistent with prior studies demonstrating that facial affect recognition in SCZ and ASD may not benefit from supportive contextual cues to the same degree as nonclinical controls.14,16,28 In contrast, all 3 groups demonstrated a decline in accuracy when faces were presented within incongruent emotional contexts. Although this shift in performance between conditions by the SCZ and ASD groups indicates that both did notice, and were affected by, the misaligned emotional context, their lower accuracy relative to controls on the incongruent condition suggests that emotion-recognition difficulties may be amplified when the amount and complexity of emotional information increase. Taken together, finding group differences in the context conditions but not the face in isolation condition underscores the importance of using stimuli that more closely approximate ecological situations, as they may be more sensitive in capturing social perception differences in these groups.13,40,41

The behavioral context effects were largely mirrored by eye-tracking findings. Replicating Sasson et al,17 the 3 groups did not differ in the proportion of onscreen fixation time devoted to the face region in the scene condition when the face was digitally erased, but controls increased their gaze to faces in the congruent context to a significantly greater degree than both the SCZ and ASD groups, who did not differ from each other. This similarity between the clinical groups in reduced facial attention supports prior research indicating that individuals with SCZ and ASD may not utilize faces to the same extent as controls when evaluating the emotional content of social scenes.16,17,42 The clinical groups also fixated the face less than controls in the incongruent condition. Given that fixation time to the face in this condition was significantly correlated with emotion-recognition accuracy for the SCZ group, and trended towards significance for the ASD group, this finding suggests that reduced facial attention within ambiguous emotional contexts may relate to poorer emotion recognition for both clinical groups. Despite these similarities, a distinction between the SCZ and ASD groups also emerged within the incongruent context. Whereas the SCZ group paralleled the control group by increasing their fixation time to the face from the congruent to the incongruent condition, the ASD group did not. This suggests that unlike the other 2 groups, individuals with ASD may fail to increase their prioritization of facial information when surrounding emotional cues are ambiguous or conflicting.

As expected, nonclinical controls spent longer responding to faces in the incongruent scenes condition compared to when they were in isolation, reflecting the extra time needed to weigh or reconcile the conflicting emotional cues. In contrast, both clinical groups were actually faster responding in the incongruent relative to the face in isolation condition. This unexpected finding may indicate that the conflicting emotional information was too complex or ambiguous and resulted in them “giving up.” Consistent with this interpretation, accuracy was disproportionately low for the clinical groups in this condition, and was not offset by increased selection of the emotion depicted in the scene. Thus, lower accuracy by the SCZ and ASD groups in this condition did not occur because of a greater reliance on emotional cues in the scene but because they selected nondepicted emotional choices. Further, post hoc examination revealed that the unexpected decrease in RT between conditions for the SCZ and ASD groups was only significantly different from the TD group for the more subtle and challenging mild expressions. This pattern was particularly stark for the SCZ group, who were nearly a full second faster in the incongruent condition for mild expressions but only 0.1 second faster for intense expressions. This result aligns with prior findings suggesting that individuals with SCZ are likely to misinterpret social information by hastily “jumping to conclusions” during difficult cognitive appraisals.30

We also found that IQ was positively correlated with accuracy in each condition for the SCZ group, but not for the ASD or TD groups. This discrepancy is particularly notable given that the 3 groups did not differ on intellectual ability. Thus, emotion recognition may utilize general cognitive resources in SCZ, with more intellectually capable SCZ individuals leveraging cognitive ability as a compensatory mechanism relative to those with more cognitive dysfunction. Alternatively, it may be that intellectual functioning for patients manifests in a superior ability to stay on task and persist when unmotivated or disinterested. In either case, the failure to find an association between intellectual ability and emotion-recognition performance in ASD suggests that their poor affect recognition may be relatively independent of their general neurocognitive abilities, at least for individuals with IQs in the normal range. Indeed, high-functioning individuals with ASD often exhibit marked deficits in social cognition and social functioning despite average to above-average general intelligence,43,44 supporting theories arguing that social cognition and neurocognition may be dissociable constructs.45 Further, this discrepancy between clinical groups suggests that social cognitive treatments for SCZ may benefit from including a larger focus on general cognitive remediation than those designed for ASD, though future work examining whether general cognition is associated with social cognitive impairment in SCZ to a greater degree than in ASD is encouraged.

A number of limitations should be considered when interpreting these results. Although the ECT task may better approximate real-world emotion recognition than traditional tasks of faces in isolation, it is still somewhat limited in its ecological validity. As a computer-based task displaying only 5 categorical emotions, it cannot capture the complexity of how emotion is expressed and processed in everyday situations. Further, although including facial expressions of just a single Caucasian male maximized experimental control by avoiding having scenes confounded by differing identities, research has found that context processing of faces can be moderated by the sex of the face,46 and face recognition often differs cross-racially,47 even in SCZ and ASD.48,49 The inclusion of a single identity may have precluded variability in how each emotional category was expressed, and also resulted in only 9 trials in the “face in isolation” condition. This may help explain why it proved less sensitive than many prior studies5,9 for detecting impaired affect recognition in the SCZ and ASD groups. Third, eye-tracking analysis was limited to fixation time on faces. Prior studies have found that SCZ and ASD differ in the latency to orient to the face,17 but this metric was inaccessible in the current study because many face regions were located on or near the center of the image, overlapping with the pre-trial fixation cross. Fourth, IQ was estimated in the current study with the WRAT-3, which is not as comprehensive a measure of intellectual functioning as a full IQ assessment. Fifth, alexithymia, or difficulties identifying and describing emotional states in the self that has been linked to the recognition of emotion in others,50 co-occurs with ASD51 and may be present in SCZ,52 but was not assessed in the current study. 
Some evidence exists that facial emotion recognition in ASD is predicted by alexithymia, rather than by ASD per se,51 a pattern that may also apply to SCZ.53 Future work is needed to determine whether this feature specifically, rather than categorical diagnosis, predicts emotion-recognition accuracy similarly for the 2 clinical groups. Finally, almost all of the participants in the SCZ group, as well as 4 in the ASD group, were taking antipsychotic medication at the time of testing, and it is currently unclear how such medication may affect emotion processing.54 Future work that matches SCZ and ASD groups on medication use could help eliminate this group difference as a potential contributor to the effects reported here.

These limitations notwithstanding, the current study demonstrates that the interpretation and visual inspection of facial emotion are modulated by surrounding context differently in SCZ and ASD relative to TD controls and to each other. First, both clinical groups exhibited reduced affect recognition only when faces were integrated within broader social contexts, suggesting that increasing the ecological validity of emotional stimuli may more sensitively capture affect recognition differences in these populations. Second, viewing faces within congruent contexts neither improved accuracy nor increased visual attention to the face region for the SCZ and ASD groups to the same degree as for controls, suggesting that the clinical groups extract reduced benefit from the presence of complementary emotional information. Despite these similarities, direct comparisons of the SCZ and ASD groups on the ECT also revealed important distinctions. Only in the SCZ group was IQ related to performance, indicating a role of general neurocognition in emotion recognition in SCZ that was not evident in the ASD group. Further, only the ASD group failed to increase their visual attention to faces in the incongruent condition, suggesting a reduced reliance on facial information within ambiguous emotional contexts relative to congruent ones. Collectively, these findings highlight both shared and distinct social cognitive processes in SCZ and ASD that warrant further investigation.

Supplementary Material

Supplementary material is available at http://schizophreniabulletin.oxfordjournals.org.

Acknowledgment

The authors have declared that there are no conflicts of interest in relation to the subject of this study.

References

- 1. Mobbs D, Weiskopf N, Lau HC, Featherstone E, Dolan RJ, Frith CD. The Kuleshov Effect: the influence of contextual framing on emotional attributions. Soc Cogn Affect Neurosci. 2006;1:95–106.

- 2. Barrett LF, Mesquita B, Gendron M. Context in emotion perception. Curr Dir Psychol Sci. 2011;20:286–290.

- 3. Kring AM, Campellone TR. Emotion perception in schizophrenia: context matters. Emotion Review. 2012;4:182–186.

- 4. Harms MB, Martin A, Wallace GL. Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol Rev. 2010;20:290–322.

- 5. Kohler CG, Walker JB, Martin EA, Healey KM, Moberg PJ. Facial emotion perception in schizophrenia: a meta-analytic review. Schizophr Bull. 2010;36:1009–1019.

- 6. Uljarevic M, Hamilton A. Recognition of emotions in autism: a formal meta-analysis. J Autism Dev Disord. 2013;43:1517–1526.

- 7. Couture SM, Penn DL, Roberts DL. The functional significance of social cognition in schizophrenia: a review. Schizophr Bull. 2006;32:S44–63.

- 8. Pelphrey K, Adolphs R, Morris JP. Neuroanatomical substrates of social cognition dysfunction in autism. Ment Retard Dev Disabil Res Rev. 2004;10:259–271.

- 9. Eack SM, Mazefsky CA, Minshew NJ. Misinterpretation of facial expressions of emotion in verbal adults with autism spectrum disorder. Autism. 2014.

- 10. Edwards J, Jackson HJ, Pattison PE. Emotion recognition via facial expression and affective prosody in schizophrenia: a methodological review. Clin Psychol Rev. 2002;22:789–832.

- 11. Kohler CG, Turner TH, Bilker WB, et al. Facial emotion recognition in schizophrenia: intensity effects and error pattern. Am J Psychiatry. 2003;160:1768–1774.

- 12. Loveland KA, Tunali-Kotoski B, Chen YR, et al. Emotion recognition in autism: verbal and nonverbal information. Dev Psychopathol. 1997;9:579–593.

- 13. Hanley M, McPhillips M, Mulhern G, Riby DM. Spontaneous attention to faces in Asperger syndrome using ecologically valid static stimuli. Autism. 2013;17:754–761.

- 14. Wright B, Clarke N, Jordan JO, et al. Emotion recognition in faces and the use of visual context in young people with high-functioning autism spectrum disorders. Autism. 2008;12:607–626.

- 15. Chawarska K, Macari S, Shic F. Context modulates attention to social scenes in toddlers with autism. J Child Psychol Psychiatry. 2012;53:903–913.

- 16. Green MJ, Waldron JH, Simpson I, Coltheart M. Visual processing of social context during mental state perception in schizophrenia. J Psychiatry Neurosci. 2008;33:34–42.

- 17. Sasson N, Tsuchiya N, Hurley R, et al. Orienting to social stimuli differentiates social cognitive impairment in autism and schizophrenia. Neuropsychologia. 2007;45:2580–2588.

- 18. Happe F, Frith U. The weak coherence account: detail-focused cognitive style in autism spectrum disorders. J Autism Dev Disord. 2006;36:5–25.

- 19. Penn DL, Ritchie M, Francis J, Combs D, Martin J. Social perception in schizophrenia: the role of context. Psychiatry Res. 2002;109:149–159.

- 20. Couture SM, Penn DL, Losh M, Adolphs R, Hurley R, Piven J. Comparison of social cognitive functioning in schizophrenia and high functioning autism: more convergence than divergence. Psychol Med. 2010;40:569–579.

- 21. Eack SM, Bahorik AL, McKnight SA, et al. Commonalities in social and non-social cognitive impairments in adults with autism spectrum disorder and schizophrenia. Schizophr Res. 2013;148:24–28.

- 22. Lugnegård T, Hallerbäck MU, Hjärthag F, Gillberg C. Social cognition impairments in Asperger syndrome and schizophrenia. Schizophr Res. 2013;143:277–284.

- 23. Sasson NJ, Pinkham AE, Carpenter KL, Belger A. The benefit of directly comparing autism and schizophrenia for revealing mechanisms of social cognitive impairment. J Neurodev Dis. 2011;3:87–100.

- 24. Pinkham AE, Harvey PD. Future directions for social cognitive interventions in schizophrenia. Schizophr Bull. 2013;39:499–500.

- 25. Campellone TR, Kring AM. Context and the perception of emotion in schizophrenia: sex differences and relationships with functioning. Schizophr Res. 2013;149:192.

- 26. Da Fonseca D, Santos A, Bastard-Rosset D, Rondan C, Poinso F, Deruelle C. Can children with autistic spectrum disorders extract emotions out of contextual cues? Res Autism Spectr Dis. 2009;3:50–56.

- 27. Lee J, Kern RS, Harvey PO, et al. An intact social cognitive process in schizophrenia: situational context effects on perception of facial affect. Schizophr Bull. 2013;39:640–647.

- 28. Bigelow NO, Paradiso S, Adolphs R, et al. Perception of socially relevant stimuli in schizophrenia. Schizophr Res. 2006;83:257–267.

- 29. Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry. 2002;59:809–816.

- 30. Freeman D. Suspicious minds: the psychology of persecutory delusions. Clin Psychol Rev. 2007;27:425–457.

- 31. Ventura J, Wood RC, Hellemann GS. Symptom domains and neurocognitive functioning can help differentiate social cognitive processes in schizophrenia: a meta-analysis. Schizophr Bull. 2013;39:102–111.

- 32. Green MF, Kern RS, Braff DL, Mintz J. Neurocognitive deficits and functional outcome in schizophrenia: are we measuring the “right stuff”? Schizophr Bull. 2000;26:119–136.

- 33. Kasari C, Chamberlain B, Bauminger N. Social emotions and social relationships in autism: can children with autism compensate? In: Burack J, Charman T, Yirmiya N, Zelazo P, eds. Development and Autism: Perspectives From Theory and Research. Hillsdale, NJ: Erlbaum Press; 2001.

- 34. Baron-Cohen S, Jolliffe T, Mortimore C, Robertson M. Another advanced test of theory of mind: evidence from very high functioning adults with autism or asperger syndrome. J Child Psychol Psychiatry. 1997;38:813–822.

- 35. Klin A. Attributing social meaning to ambiguous visual stimuli in higher-functioning autism and Asperger syndrome: the Social Attribution Task. J Child Psychol Psychiatry. 2000;41:831–846.

- 36. Howlin P, Goode S, Hutton J, Rutter M. Adult outcome for children with autism. J Child Psychol Psychiatry. 2004;45:212–229.

- 37. Lord C, Risi S, Lambrecht L, et al. The Autism Diagnostic Observation Schedule—Generic: a standard measure of social and communication deficits associated with the spectrum of autism. J Autism Dev Disord. 2000;30:205–223.

- 38. Kay SR, Fiszbein A, Opler LA. The positive and negative syndrome scale (PANSS) for schizophrenia. Schizophr Bull. 1987;13:261.

- 39. Wilkinson GS. WRAT-3: Wide Range Achievement Test Administration Manual. Wilmington, DE: Wide Range, Incorporated; 1993.

- 40. Chevallier C, Parish-Morris J, McVey A, et al. Measuring social attention and motivation in autism spectrum disorder using eye-tracking: stimulus type matters. Autism Res. 2015;8:620–628.

- 41. Saitovitch A, Bargiacchi A, Chabane N, Philippe A, Samson Y, Zilbovicius M. Studying gaze abnormalities in autism; which type of stimulus to use. Open J Psychiat. 2013;3:32–38.

- 42. Riby DM, Hancock PJ. Viewing it differently: social scene perception in Williams syndrome and autism. Neuropsychologia. 2008;46:2855–2860.

- 43. Baron-Cohen S, Jolliffe T, Mortimore C, Robertson M. Another advanced test of theory of mind: evidence from very high functioning adults with autism or asperger syndrome. J Child Psychol Psychiatry. 1997;38:813–822.

- 44. Klin A. Attributing social meaning to ambiguous visual stimuli in higher-functioning autism and Asperger syndrome: the Social Attribution Task. J Child Psychol Psychiatry. 2000;41:831–846.

- 45. Allen DN, Strauss GP, Donohue B, van Kammen DP. Factor analytic support for social cognition as a separable cognitive domain in schizophrenia. Schizophr Res. 2007;93:325–333.

- 46. Brody LR, Hall JA. Gender and emotion in context. Handbook Emot. 2008;3:395–408.

- 47. Meissner CA, Brigham JC. Thirty years of investigating the own-race bias in memory for faces: a meta-analytic review. Psychology, Public Policy, and Law. 2001;7:3.

- 48. Pinkham AE, Sasson NJ, Calkins ME, et al. The other-race effect in face processing among African-American and Caucasian individuals with schizophrenia. Am J Psychiatry. 2008;165:639–645.

- 49. Wilson CE, Palermo R, Burton AM, Brock J. Recognition of own-and other-race faces in autism spectrum disorders. Q J Exp Psychol. 2011;64:1939–1954.

- 50. Swart M, Kortekaas R, Aleman A. Dealing with feelings: characterization of trait alexithymia on emotion regulation strategies and cognitive-emotional processing. PLoS One. 2009;4:e5751.

- 51. Cook R, Brewer R, Shah P, Bird G. Alexithymia, not autism, predicts poor recognition of emotional facial expressions. Psychol Sci. 2013;24:723–732.

- 52. Henry JD, Bailey PE, von Hippel C, Rendell PG, Lane A. Alexithymia in schizophrenia. J Clin Exp Neuropsychol. 2010;32:890–897.

- 53. Bird G, Cook R. Mixed emotions: the contribution of alexithymia to the emotional symptoms of autism. Transl Psychiatry. 2013;3:e285.

- 54. Horan WP, Wynn JK, Kring AM, Simons RF, Green MF. Electrophysiological correlates of emotional responding in schizophrenia. J Abnorm Psychol. 2010;119:18–30.
