Abstract

Fully automated decoding of human activities and intentions from direct neural recordings is a tantalizing challenge in brain-computer interfacing. Implementing Brain Computer Interfaces (BCIs) outside carefully controlled experiments in laboratory settings requires adaptive and scalable strategies with minimal supervision. Here we describe an unsupervised approach to decoding neural states from naturalistic human brain recordings. We analyzed continuous, long-term electrocorticography (ECoG) data recorded over many days from the brain of subjects in a hospital room, with simultaneous audio and video recordings. We discovered coherent clusters in high-dimensional ECoG recordings using hierarchical clustering and automatically annotated them using speech and movement labels extracted from audio and video. To our knowledge, this represents the first time techniques from computer vision and speech processing have been used for natural ECoG decoding. Interpretable behaviors were decoded from ECoG data, including moving, speaking and resting; the results were assessed by comparison with manual annotation. Discovered clusters were projected back onto the brain revealing features consistent with known functional areas, opening the door to automated functional brain mapping in natural settings.

Keywords: unsupervised machine learning, neural decoding, long-term recording, electrocorticography (ECoG), computer vision, speech processing, functional brain mapping, automation

1. Introduction

Much of our knowledge about neural computation in humans has been informed by data collected through carefully controlled experiments in laboratory conditions. Likewise, the success of Brain-Computer Interfaces (BCIs; Wolpaw and Wolpaw, 2012; Rao, 2013)—controlling robotic prostheses and computer software via brain signals—has hinged on the availability of labeled data collected in controlled conditions. Sources of behavioral and recording variations are actively avoided or minimized. However, it remains unclear to what extent these results generalize to naturalistic behavior. It is known that neuronal responses may differ between experimental and freely behaving natural conditions (Vinje and Gallant, 2000; Felsen and Dan, 2005; Jackson et al., 2007). Therefore, developing robust decoding algorithms that can cope with the challenges of naturalistic behavior is critical to deploying BCIs in real-life applications.

One strategy for decoding naturalistic brain data is to leverage external monitoring of behavior and the environment for interpreting neural activity. Previous research on naturalistic human brain recordings, including brain surface electrocorticography (ECoG), has required ground truth labels (Derix et al., 2012; Pistohl et al., 2012; Ruescher et al., 2013). These labels were acquired by tedious and time-consuming manual labeling of video and audio. In addition to being laborious, manual labeling is prone to human errors from factors such as loss of attention and fatigue (Hill et al., 2012). This problem is exacerbated by very long recordings, when patients are monitored continuously for several days or longer. Obtaining extensive labeled data and training complex algorithms are difficult or even intractable in rapidly changing, naturalistic environments.

In this article, we describe our use of video and audio recordings in conjunction with ECoG data to decode human behavior in a completely unsupervised manner. Figure 1 illustrates components of the data used in our approach. The data set comprises recordings from six subjects, each monitored continuously for at least 1 week after electrode array implantation surgery; each subject had approximately 100 intracranial ECoG electrodes with wide coverage of cortical areas. Importantly, subjects being monitored had no instructions to perform specific tasks; they were undergoing presurgical epilepsy monitoring and behaved as they wished inside their hospital room. Instead of relying on manual labels, we used computer vision, speech processing, and machine learning techniques to automatically determine the ground truth labels for the subjects' activities. These labels were used to annotate patterns of neural activity discovered using unsupervised clustering on power spectral features of the ECoG data. We demonstrate that this approach can identify salient behavioral categories in the ECoG data, such as movement, speech and rest. Decoding accuracy was verified by comparing the automatically discovered labels against manual labels of behavior in a small subset of the data. We also demonstrate the effectiveness of ECoG clusters to decode behavior for many days after the initial cluster annotation enabled by audio and video. Further, projecting the annotated ECoG clusters to electrodes on the brain revealed spatial and power spectral patterns of cortical activation consistent with those characterized during controlled experiments. These results suggest that our unsupervised approach may offer a reliable and scalable way to map functional brain areas in natural settings and enable the deployment of ECoG BCI in real-life applications.

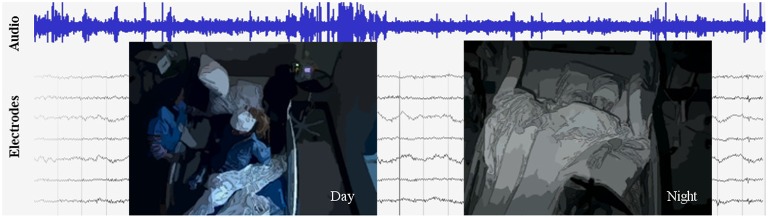

Figure 1.

An excerpt from the data set, which includes video, audio, and intracranial brain activity (ECoG) continuously recorded for at least 1 week for six subjects. ECoG recordings from a small subset of the electrodes are shown, along with the simultaneously recorded audio signals in blue. A typical patient has around 100 electrodes. Overlaid are illustrations of the video, which is centered on the patient; on the left is a daytime anonymized image frame of the patient eating, and on the right is a nighttime anonymized frame of the patient sleeping.

2. Background and related work

Intracranial electrocorticography (ECoG) as a technique for observing human neural activity is particularly attractive. Its spatial and temporal resolution offers measurements of temporal dynamics inaccessible by functional magnetic resonance imaging (fMRI) and spatial resolution unavailable to extracranial electroencephalography (EEG). Cortical surface ECoG is accomplished less invasively than with penetrating electrodes (Williams et al., 2007; Moran, 2010) and has much greater signal-to-noise ratio than entirely non-invasive techniques such as EEG (Lal et al., 2004; Ball et al., 2009).

Efforts to decode neural activity are typically accomplished by training algorithms on tightly controlled experimental data with repeated trials. Much progress has been made to decode arm trajectories (Wang et al., 2012; Nakanishi et al., 2013; Wang et al., 2013a) and finger movements (Miller et al., 2009; Wang et al., 2010), to control robotic arms (Yanagisawa et al., 2011; Fifer et al., 2014; McMullen et al., 2014), and to construct ECoG BCIs (Leuthardt et al., 2006; Schalk et al., 2008; Miller et al., 2010; Vansteensel et al., 2010; Leuthardt et al., 2011; Wang et al., 2013b). Speech detection and decoding from ECoG has been studied at the level of voice activity (Kanas et al., 2014b), phoneme (Blakely et al., 2008; Leuthardt et al., 2011; Kanas et al., 2014a; Mugler et al., 2014), vowels and consonants (Pei et al., 2011), whole words (Towle et al., 2008; Kellis et al., 2010), and sentences (Zhang et al., 2012). Accurate speech reconstruction has also been shown to be possible (Herff et al., 2015).

The concept of decoding naturalistic brain recordings is related to passive BCIs, a term used to describe BCI systems that decode arbitrary brain activity that is not necessarily under volitional control (Zander and Kothe, 2011). Our system, which falls within the class of passive BCIs, may also be considered a type of hybrid BCI combining electrophysiological recordings with other signals (Muller-Putz et al., 2015). However, past approaches in this domain have not focused on combining alternative monitoring modalities such as video and audio in order to decode natural ECoG signals.

The lack of ground-truth data makes decoding naturalistic neural recordings difficult. Supplementing neural recordings with additional modes of observation, such as video and audio, can make the decoding more feasible. Previous studies exploring this idea have decoded natural speech (Derix et al., 2012; Bauer et al., 2013; Dastjerdi et al., 2013; Derix et al., 2014; Arya et al., 2015) and natural motions of grasping (Pistohl et al., 2012; Ruescher et al., 2013); however, these studies relied on laborious manual annotations. Entirely unsupervised approaches to decoding have previously targeted sleep stages (Längkvist et al., 2012) and seizures (Pluta et al., 2014) rather than long-term natural ECoG recordings.

Our approach to circumvent the need for manually annotated behavioral labels exploits automated techniques developed in computer vision and speech processing. Both of these fields have seen tremendous growth in recent years with increasing processor power and advances in methodology (Huang et al., 2014; Jordan and Mitchell, 2015). Computer vision techniques have been developed for a variety of tasks including automated movement estimation (Poppe, 2007; Wang et al., 2015), pose recognition (Toshev and Szegedy, 2014), object recognition (Erhan et al., 2014; Girshick et al., 2014), and activity classification (Ryoo and Matthies, 2013; Karpathy et al., 2014). In some cases, computer vision techniques have matched or surpassed single-human performance in recognizing arbitrary objects (He et al., 2015). Voice activity detection has been well studied in speech processing (Ramirez et al., 2004). In this work, we leverage and combine techniques from these rapidly advancing fields to automate and enhance the decoding of naturalistic human neural recordings.

3. Results

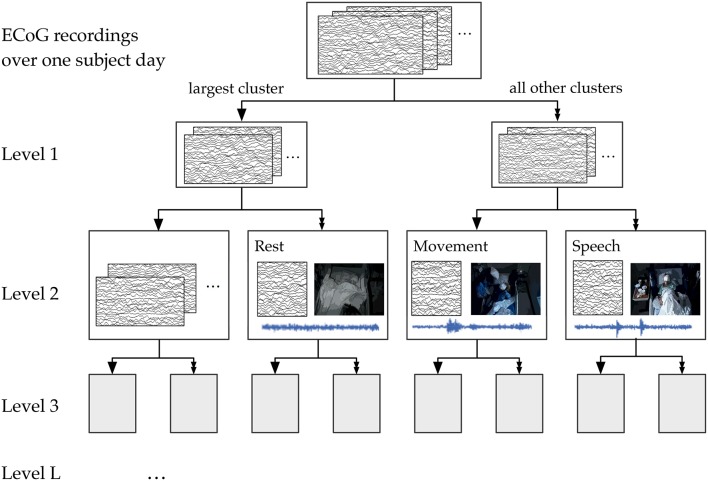

Our general approach to unsupervised decoding of large, long-term human neural recordings is to combine hierarchical clustering of high-dimensional ECoG data with annotations informed by automated video and audio analysis, as illustrated in Figure 2 (further details in the Methods section). Briefly, hierarchical k-means clustering was performed on power spectral features of the ECoG recordings. These clusters are coherent patterns discovered in the neural recordings; video and audio monitoring data was used to interpret these patterns and match them to behaviorally salient categories such as movement, speech and rest. Here we describe results of our analysis on six subjects where we used automated audio and video analysis to annotate clusters of neural activity. The accuracy of the unsupervised decoding method was quantified by comparison to manual labels, and the annotated clusters were mapped back to the brain to enable neurologically relevant interpretations.

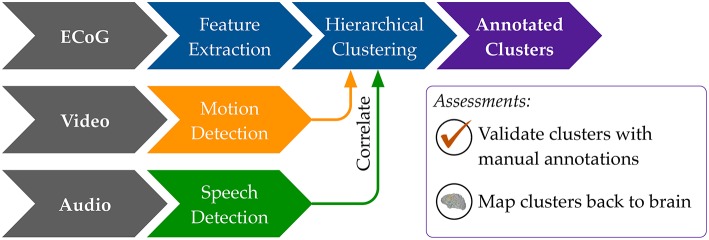

Figure 2.

An overview of our methods to discover neural decoders by automated clustering and cluster annotations. Briefly, the ECoG recordings were broken into short, non-overlapping 2-s windows. Power spectral features were extracted for each electrode, all electrodes' features were stacked, and the feature space was reduced to the first 50 principal component dimensions. Hierarchical k-means clustering was performed on these 50-dimensional data, and annotation was done by correlations in timing with automated detection of motion and speech levels (see Figure 8). The resultant annotated clusters were validated against manual annotations; cluster centroids mapped to the brain visualize the automatically detected neural patterns.
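The feature-extraction steps in this caption can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function names `spectral_features` and `pca_reduce`, the use of raw (untransformed) FFT power, and the electrode-major stacking order are all assumptions. The 2-s window and the 50 components come from the caption, and the 1–53 Hz range from Section 3.2.

```python
import numpy as np

def spectral_features(ecog, fs, win_s=2.0, f_lo=1.0, f_hi=53.0):
    """ecog: array of shape (n_samples, n_electrodes), sampled at fs Hz.

    Returns an array of shape (n_windows, n_electrodes * n_bins) holding
    the FFT power of each non-overlapping window in [f_lo, f_hi] Hz.
    """
    n_win = int(round(win_s * fs))            # samples per window
    n_windows = ecog.shape[0] // n_win        # incomplete tail is dropped
    x = ecog[: n_windows * n_win].reshape(n_windows, n_win, -1)
    freqs = np.fft.rfftfreq(n_win, d=1.0 / fs)
    keep = (freqs >= f_lo) & (freqs <= f_hi)
    power = np.abs(np.fft.rfft(x, axis=1)) ** 2   # (windows, freqs, electrodes)
    # Stack all electrodes' spectra into one feature vector per window
    # (electrode-major order is an assumption of this sketch).
    return power[:, keep, :].transpose(0, 2, 1).reshape(n_windows, -1)

def pca_reduce(features, n_components=50):
    """Project features onto their first principal components via SVD."""
    mean = features.mean(axis=0)
    centered = features - mean
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]            # (n_components, n_features)
    return centered @ components.T, components, mean
```

Keeping `components` and `mean` alongside the scores makes it possible to project new data into the same space and to map cluster centroids back to electrode and frequency coordinates later.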

3.1. Actograms: automated motion and speech detection

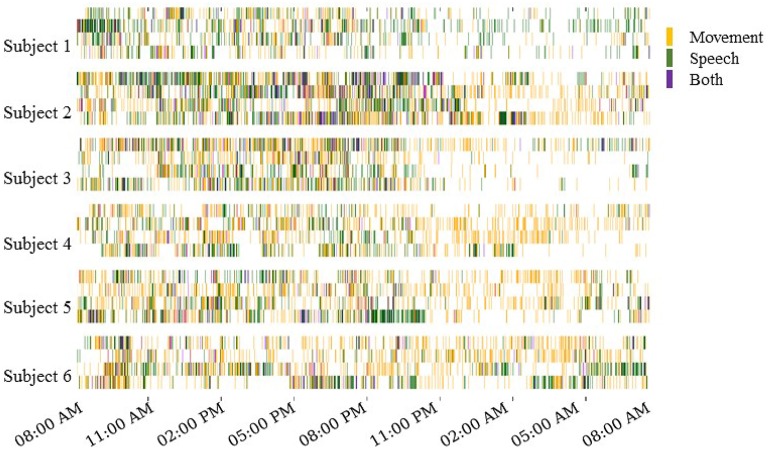

The automated motion and speech detection methods quantified movement and speech levels from the video and audio recordings, respectively. Figure 3 shows daily “actograms” for all six subjects. Movement levels were quantified by analyzing the magnitude of changes at feature points in successive frames of the video. Speech levels were quantified by computing the power in the audio signal in the human speech range.

Figure 3.

Daily actograms for all subjects. Each row shows one day of activity profiles summarized by automated speech and motion recognition algorithms. Days 3–6 post surgical implantation were analyzed. For purposes of this visualization, the activity levels were binned to 1-min resolution. Movement and speech levels were highly correlated on most days, and these were concentrated to the active hours between 8:00 a.m. and 11:00 p.m.

As expected, Figure 3 shows that subjects were most active during waking hours, generally between 8:00 a.m. and 11:00 p.m. Also, movement and speech levels are often highly correlated, as the subjects were often moving and speaking at the same time during waking hours. During night time hours, although subjects were generally less active, many instances of movement and speech can still be seen in Figure 3 as the subjects either shifted in their sleep or were visited by hospital staff during the night.

Our automated motion and speech detection algorithms were able to perform with reasonable accuracy when compared to manual annotation of movement and speech. Over all subject days, movement detection was 74% accurate (range of 68–90%), while speech detection was 75% accurate (range of 67–83%).

3.2. Unsupervised decoding of ECoG activity

Unsupervised decoding of neural recordings was performed by hierarchical clustering of power spectral features of the multi-electrode ECoG recordings. Because the subjects' behavior on each day varied widely, both across days for the same subject and across subjects (see actograms in Figure 3), we were agnostic to which specific frequency ranges contained meaningful information and considered all power in frequency bins between 1 and 53 Hz (see Supplemental Information for results considering higher frequency bands). Further, data from each subject day was analyzed separately. Clusters identified by hierarchical k-means clustering were annotated using information from the external monitoring by video and audio. The hierarchical k-means clustering implementation is detailed in Section 5. Following a tree structure, successive levels of clustering contained larger numbers of clusters (Figure 8).
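To make the tree structure concrete, the following sketch shows one simple way a hierarchical k-means could be organized: every cluster at one level is split again by k-means to form the next level. This is an illustration under stated assumptions, not the implementation described in Section 5. The branching factor `k=2`, the random initialization, the fixed iteration count, and the function names `assign`, `kmeans`, and `hierarchical_kmeans` are all hypothetical.

```python
import numpy as np

def assign(x, centroids):
    """Index of the nearest centroid (squared Euclidean distance) per row."""
    d = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def kmeans(x, k, n_iter=50, seed=0):
    """Plain Lloyd's algorithm; returns one label per row of x."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        labels = assign(x, centroids)
        for j in range(k):
            members = x[labels == j]
            if len(members):                  # leave empty clusters in place
                centroids[j] = members.mean(axis=0)
    return assign(x, centroids)

def hierarchical_kmeans(x, n_levels=4, k=2):
    """Return one label array per level; each level refines the previous."""
    levels = []
    labels = np.zeros(len(x), dtype=int)      # level 0: a single cluster
    for _ in range(n_levels):
        new_labels = np.zeros(len(x), dtype=int)
        next_id = 0
        for c in np.unique(labels):
            idx = np.flatnonzero(labels == c)
            # Split this cluster only if it has enough points (assumption).
            sub = kmeans(x[idx], k) if len(idx) >= k else np.zeros(len(idx), dtype=int)
            new_labels[idx] = next_id + sub
            next_id += int(sub.max()) + 1
        labels = new_labels
        levels.append(labels)
    return levels
```

With this layout, the number of clusters can at most double from one level to the next, and every cluster at a deeper level has exactly one parent, which is what allows labels such as rest and non-rest at level 1 to be refined into finer categories at later levels.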

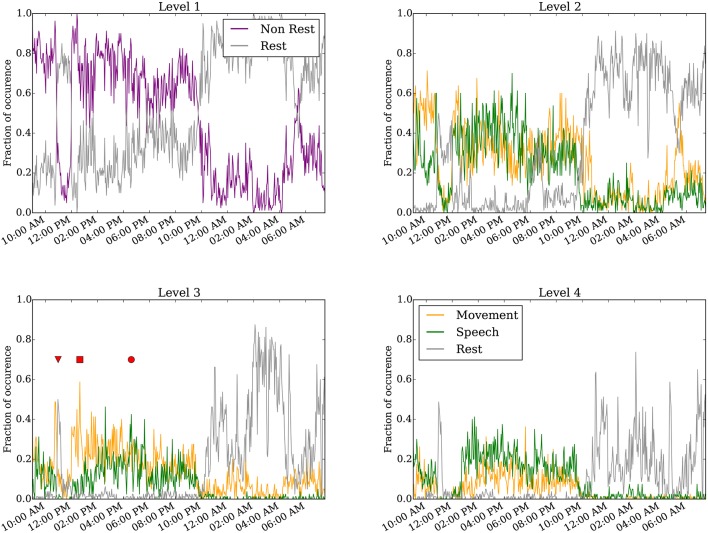

Figure 4 shows results of the annotated clusters for one subject day (Subject 6 on day 6 post implantation) at clustering levels 1–4 as a function of time of day. At level 1, it is clear that rest is separable from non-rest, and the switch in the dominant cluster occurred around 10:00 p.m. We presume the timing of the switch to correspond to when the subject falls asleep, as is corroborated by the video monitoring. Video S1 shows an example of the infrared video acquired during night time. The subject is in a consolidated period of rest between 10:00 p.m. and 9:00 a.m. the following day. Interestingly, for a duration of approximately 1 h starting at around 11:00 a.m., the rest cluster dominated the labels (see also red triangle at level 3). This period corresponds to the subject taking a nap (Video S2).

Figure 4.

Annotated clustering results of one subject day (Subject 6 on day 6 post implant) from hierarchical level 1 to level 4. The vertical axis represents the fraction of time the neural recording is categorized to each annotated cluster. The triangle marks when the subject takes a nap (Video S2), the square marks when the subject is seen to move without speech (Video S3), and the circle marks when the subject spoke more than moved (Video S4). For visualization, the 24-h day was binned to every 160 s.

Starting at level 2, the non-rest behavior separates into movement and speech clusters. These two clusters are generally highly correlated, as moving and talking often co-occur, especially as the movement quantification can detect mouth or face movement. We point out several interesting instances labeled at level 3. First, the inverted triangle points to a period around 11:00 a.m. annotated as rest, when the subject rested during a nap (Video S2). Second, the rectangle marks a period around 1:00 p.m., annotated as predominantly movement but not speech, when the subject shifted around in their bed but did not engage in conversation (Video S3). Third, the circle marks a period around 5:00 p.m. when the subject engaged in conversation (Video S4); this period was labeled as both movement and speech. As described in the validation analysis in the following section, the accuracy of the automated annotations does not change substantially between levels 3–4 across all subject days (Figure 5 and Figure S3).

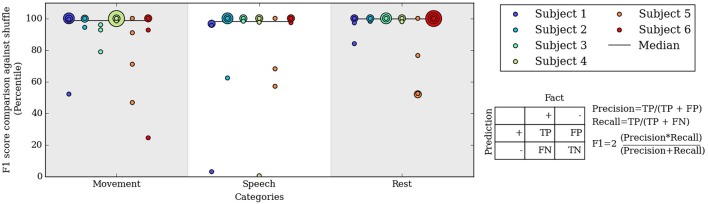

Figure 5.

Percentile of the F1 score of our algorithm at level 3 compared to F1 scores from randomly shuffled manual labels. Each colored dot corresponds to one day for a subject in each behavioral category.

3.3. Validation of automated neural decoding by comparison with manual annotations

The automated neural decoding was assessed by comparison with behaviors labeled manually. Manual labels of the video and audio were supplied by two human annotators, who labeled a variety of salient behaviors for at least 40 total minutes (or approximately 3%) of video and audio recordings for each subject day. The labels were acquired for 2-min segments of data distributed randomly throughout the 24-h day.

The entirely automated neural decoding performed very well in the validation for all subjects on the categories of movement, speech and rest. Table 1 summarizes the accuracy of the annotated clusters averaged over the 4 days analyzed for each subject, comparing the automated labels to manual labels during the labeled portions of each day. In addition to computing the accuracy, we also computed the F1 scores of the automated decoding using manual labels as ground truth for each day; the F1 score is the harmonic mean of precision and recall (Table S1).

Table 1.

Performance metrics as assessed by comparison of level 3 automated cluster annotation to manual annotations averaged over all 4 days for each subject.

| | Acc | F1 | Spc | Sen/Rec | Prc |
|---|---|---|---|---|---|
| MOVEMENT | | | | | |
| Subject 1 | 62.12 | 0.50 | 76.51 | 46.75 | 58.57 |
| Subject 2 | 49.37 | 0.53 | 80.13 | 40.68 | 84.07 |
| Subject 3 | 58.37 | 0.47 | 57.06 | 54.05 | 42.33 |
| Subject 4 | 58.78 | 0.61 | 50.53 | 66.67 | 56.55 |
| Subject 5 | 60.88 | 0.46 | 68.78 | 47.69 | 47.16 |
| Subject 6 | 59.57 | 0.61 | 70.20 | 59.71 | 75.07 |
| SPEECH | | | | | |
| Subject 1 | 52.35 | 0.31 | 56.89 | 35.68 | 28.36 |
| Subject 2 | 62.13 | 0.67 | 71.27 | 55.58 | 85.16 |
| Subject 3 | 68.88 | 0.66 | 73.38 | 65.49 | 68.98 |
| Subject 4 | 60.83 | 0.50 | 62.43 | 56.88 | 45.49 |
| Subject 5 | 48.46 | 0.62 | 59.99 | 43.92 | 63.09 |
| Subject 6 | 72.73 | 0.67 | 84.51 | 64.70 | 75.50 |
| REST | | | | | |
| Subject 1 | 63.95 | 0.62 | 60.97 | 64.75 | 63.19 |
| Subject 2 | 63.76 | 0.50 | 64.70 | 25.49 | 45.68 |
| Subject 3 | 74.42 | 0.69 | 81.80 | 65.26 | 75.40 |
| Subject 4 | 55.55 | 0.44 | 81.11 | 35.01 | 70.39 |
| Subject 5 | 63.09 | 0.71 | 44.27 | 70.67 | 72.10 |
| Subject 6 | 79.09 | 0.79 | 85.72 | 74.46 | 84.40 |

Acc, Accuracy; F1, F1 Score; Spc, Specificity; Sen/Rec, Sensitivity/Recall; Prc, Precision.
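For reference, the five metrics in Table 1 follow from the four confusion-matrix counts for a single behavior category. The sketch below assumes binary labels per time window (behavior present or absent); the function name `binary_metrics` and the convention of returning 0 when a denominator is empty are illustrative choices, not taken from the paper. Values are returned as fractions, whereas Table 1 reports all metrics except F1 as percentages.

```python
def binary_metrics(truth, pred):
    """Compare binary manual labels (truth) with automated labels (pred)."""
    pairs = list(zip(truth, pred))
    tp = sum(1 for t, p in pairs if t and p)          # true positives
    tn = sum(1 for t, p in pairs if not t and not p)  # true negatives
    fp = sum(1 for t, p in pairs if not t and p)      # false positives
    fn = sum(1 for t, p in pairs if t and not p)      # false negatives

    def ratio(num, den):
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        # F1 is the harmonic mean of precision and recall.
        "f1": ratio(2 * precision * recall, precision + recall),
        "specificity": ratio(tn, tn + fp),
        "sensitivity": recall,
        "precision": precision,
    }
```

Because accuracy counts true negatives while F1 does not, the two can diverge when a behavior is rare in the labeled segments, which is one reason to report both.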

To assess the significance of the automated labels' accuracy, we compared the F1 scores on each day to F1 scores of randomly shuffled labels. The shuffled labels preserved the relative occurrence of labels and gave an unbiased estimate of chance performance. Figure 5 shows the percentile of the true F1 scores within the randomly shuffled F1 scores at hierarchical clustering level 3. For each category of movement, speech and rest, the median percentile of the true F1 scores is at or near the 99th percentile; in other words, our automatically labeled clusters performed significantly better than chance on most subject days. F1 score percentiles for clustering levels 2 and 4 are shown in Figures S2, S3. We also repeated the analysis considering spectral frequencies up to 105 Hz, which does not substantially change the performance of the automated decoder (Table S2 and Figure S4).
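The shuffle test can be sketched as follows. The manual labels are permuted, which keeps the proportion of each label fixed, and the observed F1 score is ranked within the resulting null distribution. The number of shuffles, the fixed seed, and the function names `f1_score` and `f1_percentile` are assumptions made for this example; the paper does not state these details in this section.

```python
import random

def f1_score(truth, pred):
    """F1 of binary predictions against binary ground truth."""
    tp = sum(1 for t, p in zip(truth, pred) if t and p)
    fp = sum(1 for t, p in zip(truth, pred) if not t and p)
    fn = sum(1 for t, p in zip(truth, pred) if t and not p)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def f1_percentile(truth, pred, n_shuffles=1000, seed=0):
    """Percentile of the observed F1 within F1 scores of shuffled labels."""
    rng = random.Random(seed)
    observed = f1_score(truth, pred)
    shuffled = list(truth)
    below = 0
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)       # permutation preserves label frequencies
        if f1_score(shuffled, pred) < observed:
            below += 1
    return 100.0 * below / n_shuffles
```

A percentile near 99 means that almost none of the shuffled label sets scored as well as the real ones, which is the sense in which performance is described as better than chance.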

3.4. ECoG clusters predict behavior categories without video

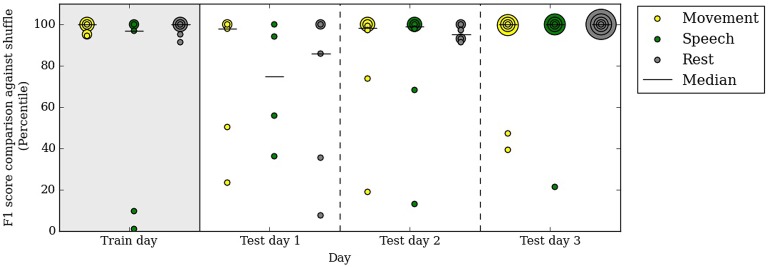

Our automated neural decoding system is able to predict behavior categories for many days after the initial clustering and correlation with video. To assess the stability of ECoG cluster annotations over time, we clustered the ECoG recordings on one day of data (day 3 post surgery, or the first day of analysis) and annotated them automatically with the simultaneously acquired videos and audio. Next, we tested these annotated ECoG clusters on 3 subsequent days without access to the video and audio data. Figure 6 shows that the performance remains relatively stable throughout the test days; the median F1 percentiles are significantly above chance for every category on every day. In fact, the performance on test days is comparable with the performance on the first day.

Figure 6.

A decoder trained on the first day of analysis with video continues to perform well above chance up to 3 days after training. Specifically, on the test days, no video or audio information is used in decoding behavior categories. Percentile of F1 scores are shown, as in Figure 5. Each colored dot corresponds to one subject in each behavioral category on the respective day. All subjects are included in this figure.

The performance on test day 1 is lower than on test days 2 and 3. This difference may be due to chance or to some systematic differences between day 4 post surgery and the rest of the clinical evaluation period.
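One plausible way to apply annotated clusters to later days, once video and audio are no longer used, is to assign each new feature vector the annotation of its nearest cluster centroid. The sketch below assumes this nearest-centroid rule with Euclidean distance and assumes that test-day features have already been projected into the same reduced feature space as the training day. The section does not specify the assignment rule, so both points, along with the function name `decode_with_centroids`, are hypothetical.

```python
def decode_with_centroids(features, centroids, annotations):
    """Label each feature vector with the annotation of its nearest centroid.

    features:    list of feature vectors from a test day
    centroids:   list of cluster centroids found on the training day
    annotations: behavior label of each centroid (e.g. "rest")
    """
    decoded = []
    for row in features:
        dists = [sum((a - b) ** 2 for a, b in zip(row, c)) for c in centroids]
        decoded.append(annotations[dists.index(min(dists))])
    return decoded
```

The decoded labels can then be scored against manual labels from the test days in the same way as on the training day.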

3.5. Neural correlates of behavior as discovered by unsupervised clustering

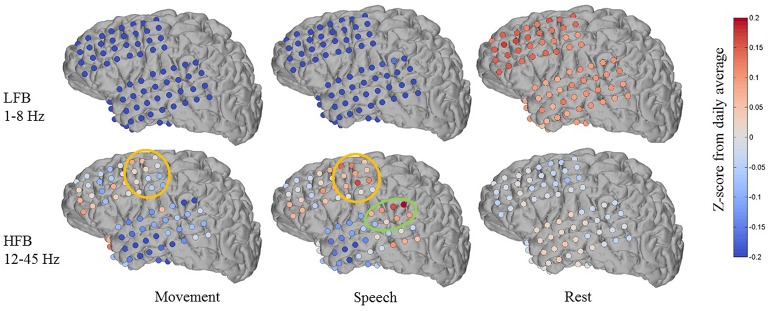

Another way to assess the neural decoder discovered through clustering and automated annotation is to examine the neural patterns identified in this unsupervised approach. We mapped these patterns by projecting the centroids of annotated clusters back to feature space. Next, the features in electrode coordinates on the brain were averaged within frequency bands, including those typically of interest in studies of human ECoG.
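The back-projection and band averaging can be sketched as below, assuming that dimensionality reduction was done by PCA with retained component vectors and feature mean, and that each feature vector stores all frequency bins of one electrode contiguously before moving to the next electrode. The function name `centroid_band_map`, its arguments, and the feature layout are assumptions made for illustration.

```python
import numpy as np

def centroid_band_map(centroid, components, mean, freqs, n_electrodes, band):
    """Map a cluster centroid from PCA space to per-electrode band power.

    centroid:   (n_components,) cluster center in PCA space
    components: (n_components, n_electrodes * n_bins) principal axes
    mean:       (n_electrodes * n_bins,) feature mean removed before PCA
    freqs:      (n_bins,) center frequency of each bin in Hz
    band:       (low_hz, high_hz), inclusive
    """
    freqs = np.asarray(freqs, dtype=float)
    # Undo the PCA projection to recover one spectrum per electrode.
    spectrum = (np.asarray(centroid) @ components + mean).reshape(n_electrodes, len(freqs))
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return spectrum[:, in_band].mean(axis=1)   # one value per electrode
```

Each returned value can be placed at its electrode location on the cortical surface, and values for different clusters can be compared with the daily average to show relative increases or decreases in power.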

Figure 7 shows an example of one subject day's annotated cluster centroids shown as deviations from the daily average in a low frequency band (LFB, 1–8 Hz) and a high frequency band (HFB, 12–45 Hz). The LFB was chosen to include activity in the delta and theta range, while the HFB includes beta and low gamma activity. The accuracy of automated decoding on this subject day (Subject 1, day 6 post implant) was 0.56, 0.69, and 0.63 for movement, speech and rest, respectively. In the LFB, there was a generalized decrease in power across all recording electrodes during movement and speech, accompanied by a corresponding relative increase in power during rest. In contrast, in the HFB during movement and speech, we observe a more spatially specific increase in power that is localized to motor areas (orange circle in Figure 7). There is some overlap in electrodes showing increased HFB power during movement and speech, which may be due to activation of motor areas to produce speech. In addition, during speech but not during movement, there is a localized increase in HFB power at the associated auditory region (green circle in Figure 7).

Figure 7.

Features discovered by automated brain decoding at two different frequency bands are consistent with known functions of cortical areas. Shown for one subject day (Subject 1 on day 6 post implant), the centroids of the movement, speech and rest clusters were back projected to brain-electrode space, and then separately averaged over a low frequency band (LFB, 1–8 Hz) and a high frequency band (HFB, 12–45 Hz). The orange and green circles mark the approximate extent of locations typically considered to be sensorimotor and auditory regions, respectively. The colormap indicates the Z-Score of the power levels as compared to the daily average.

These features are largely consistent with known functions of human cortical areas and ECoG phenomena, as well as the existing ECoG literature on motor activation (Miller et al., 2007, 2009) and speech mapping (Towle et al., 2008; Chang et al., 2010; DeWitt and Rauschecker, 2013; Potes et al., 2014). We must note that these patterns of frequency band-specific changes in power for different behavioral categories were discovered in an entirely unsupervised approach, using continuously acquired naturalistic data, and without the luxury of subtraction of baseline activation immediately before or after movement. It is important to keep in mind that previous studies typically define rest as the time just before an action, whereas we compare to daily averages as well as to sleep. During non-rapid eye movement sleep, the theta and delta bands tend to have high power (Cajochen et al., 1999), a factor that distinguishes our results from those obtained from more controlled experiments. We observed qualitatively similar patterns across the four subjects for whom anatomic reconstructions of the electrode arrays were available (Figures S6–S8).

4. Discussion

Our results represent, to our knowledge, the first demonstration of automated clustering and labeling of human behavior from brain recordings in a naturalistic setting; we achieved annotation without manual labels by leveraging techniques from computer vision and speech processing. Our unsupervised approach discovers clusters for behaviors such as moving, speaking and resting from ECoG data. The discovered cluster labels were verified by comparison to manual labels for a subset of the data. We showed the ECoG clusters were able to predict behavior categories for many days after initial annotation, even without further correlation with video and audio. Projecting the cluster centers back onto the brain provides an interpretation of these clusters and an approach to automated functional brain mapping in natural settings.

Our goal was to develop an approach to decode human brain recordings by embracing the richness and variability of complex naturalistic behavior, while avoiding tedious manual annotation of data and fine tuning of parameters. Our current approach has a number of limitations which can be addressed by improving both the available information streams and the algorithmic processing. One limitation of our movement detection algorithm is lack of specificity to the subject when other people enter the frame of the camera. This is particularly challenging when another person overlaps with the subject, for example, when a nurse examines the patient. We are exploring the potential of better subject segmentation using a depth camera. The depth stream information will also allow us to perform much more detailed pose recognition, including obtaining specific movement information from isolated body parts.

A second limitation is our inability to identify the speaker in speech detection. Speech levels include the subject speaking, the subject listening to another person speaking in the room, and the subject listening to the TV or another electronic audio source. We expect that by placing an additional microphone in the room and using algorithms to distinguish speaker voices, it may be possible to more accurately localize the speaker and speech sources.

The temporal aspect of high-dimensional, long-term ECoG data may be better exploited to improve the clusters discovered by unsupervised pattern recognition techniques. For instance, dimensionality reduction by dynamic mode decomposition (DMD; Brunton et al., 2015) may be able to identify spatio-temporal patterns when repeated trials are not available. Phase synchrony and phase coupling may also serve as important neural correlates of behavior (Mercier et al., 2015).

Overall, these results demonstrate that our method has the practicality and accuracy to passively monitor the brain and decode its state during a variety of activities. In our results, we see some variation in performance and cluster maps across days for the same subject. This variance may be due to changes in brain activity as the patient recovers from surgery, or it may represent natural variation from day to day. Despite these variations, we show that a decoder clustered on one day can continue to be used for decoding in subsequent days in the absence of video and audio.

Functional brain mapping acquired by analyzing neural recordings outside instructed tasks has direct relevance to how an individual brain functions in natural conditions. For instance, neural correlates of a subject repeating a series of specific actions may differ from the full range of neural signatures associated with movements in general. Previous attempts to do more “ethological” mapping based on non-cued activities have identified motor (Breshears et al., 2012; Vansteensel et al., 2013) and speech (Derix et al., 2012, 2014) related areas. The automated approach to ethological functional brain mapping explores the analysis of task-free, naturalistic neural data augmented by information from external monitoring, which ultimately enables us to perform the analysis at much larger scale with long-term data. Although the initial unsupervised brain mapping results we have presented are encouraging, a more in-depth investigation is required to study the applicability and accuracy of our clustering methodology compared to other methods. We are currently exploring ways to improve its accuracy and ability to resolve more detailed behavioral categories. We are also actively investigating other techniques for functional brain mapping using long-term naturalistic ECoG data, including more direct comparisons with clinical brain mapping based on stimulation.

We envision our automated passive monitoring and decoding approach with video and audio as a possible strategy to adjust for natural variation and drift in brain activity without the necessity to retrain decoders explicitly. Such an approach may enable deployment of long-term BCI systems, including clinical and consumer applications. More generally, we believe the exploration of large, unstructured, naturalistic neural recordings will improve our understanding of the human brain in action.

5. Methods

5.1. Subjects and recording

All six subjects had a macro-grid and one or more strips of electrocorticography (ECoG) electrodes implanted subdurally for presurgical clinical epilepsy monitoring at Harborview Medical Center. The study was approved by University of Washington's Institutional Review Board's human subject division; all subjects gave their informed consent and all methods were carried out in accordance with the approved guidelines.

Electrode grids were constructed of 3-mm-diameter platinum pads spaced at 1 cm center-to-center and embedded in silastic (AdTech). Electrode placement and duration of each patient's recording were determined solely based on clinical needs. The number of electrodes ranged from 70 to 104, arranged as grids of 8 × 8, 8 × 4, 8 × 2 or strips of 1 × 4, 1 × 6, 1 × 8. Figures S5–S9 show the electrode placements of each subject. ECoG was acquired at a sampling rate of 999 Hz. All patients had between 6 and 14 days of continuous monitoring with video, audio, and ECoG recordings. During days 1 and 2, patients were generally recovering from surgery and spent most of their time sleeping; in this study, days 3 to 6 post implant were analyzed from each subject.

5.2. Video and audio recordings

Video and audio were recorded simultaneously with the ECoG signals and continuously throughout the subjects' clinical monitoring. The video was recorded at 30 frames per second at a resolution of 640 × 480 pixels. Generally, video was centered on the subject with family members or staff occasionally entering the scene. The camera was also sometimes adjusted throughout the day by hospital staff; for instance, the camera may be centered away during bed pan changes and returned to the patient afterwards. Videos S1–S4 show examples of the video at a few different times of one day. The audio signal was recorded at 48 kHz in stereo. The subject's conversations with people in the hospital room, including people not visible by video monitoring, can be clearly heard, as well as sound from the television or a music player. Some subjects listened to audio using headphones, which were not available to our audio monitoring system. For patient privacy, because voices can be identifiable, we do not make examples of the audio data available in the Supplemental Materials.

5.3. Manual annotation of video and audio

To generate a set of ground-truth labels for assessing the performance of our automated algorithms, we performed manual annotation of behavior aided by ANVIL (Kipp, 2012) on a small subset of the external monitoring data. Two students were responsible for the annotations, and at least 40 min (or 2.78%) of each subject day's recording was manually labeled for a variety of salient behaviors, including the broad categories of movement, speech and rest. Manual labeling was done for 2-min segments of video and audio, distributed randomly throughout each 24-h day. For patient privacy, some small parts of the video (e.g., during bed pan changes) were excluded from manual labeling. These periods were very brief and should not introduce a generalized bias in manual labels. On average, manual labeling of 1 min of monitoring data took approximately 5 min. At least 10 min of each subject day were labeled by both students; agreement between the two labelers was 92.0%, and Cohen's kappa for inter-rater agreement was 0.82.
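For readers unfamiliar with the inter-rater statistic, the following is a minimal sketch of how Cohen's kappa can be computed from two annotators' labels. The function name and the toy labels are hypothetical and are not taken from the study data or from the released code.

```python
import numpy as np

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters who labeled the same items."""
    a = np.asarray(labels_a)
    b = np.asarray(labels_b)
    categories = np.union1d(a, b)
    p_observed = np.mean(a == b)
    # Chance agreement: product of the two raters' marginal frequencies,
    # summed over categories. Undefined (division by zero) if both raters
    # use a single identical category throughout.
    p_chance = sum(np.mean(a == c) * np.mean(b == c) for c in categories)
    return (p_observed - p_chance) / (1.0 - p_chance)

# Toy labels for eight segments (hypothetical, for illustration only).
rater_1 = ["move", "move", "rest", "rest", "speech", "rest", "move", "rest"]
rater_2 = ["move", "rest", "rest", "rest", "speech", "rest", "move", "rest"]
kappa = cohens_kappa(rater_1, rater_2)
```

Kappa discounts the raw agreement by the agreement expected from the raters' marginal label frequencies alone, which is why it is lower than the percent agreement.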

5.4. Automated movement and speech detection

For automated video analysis, we first detected salient features for each frame using Speeded Up Robust Features (SURF), which detects and encodes interesting feature points throughout the frame. The amount of motion in each frame was determined by matching these feature points across successive frames and measuring the magnitude of their change. Since the subject was the only person in the frame a majority of the time, we were able to determine the subject's approximate movement levels. This approach detected gross motor movements of the arms, torso and head, as well as some finer movements of the face and mouth during speaking. To detect speech, we measured the power averaged across the human speech frequency range (100–3500 Hz) from fast Fourier transformed audio data.
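The speech-level measure can be illustrated with a short sketch. It assumes mono audio, non-overlapping 2-s windows, and a plain FFT power estimate; the function name, the window length, and the synthetic test signal are assumptions made for illustration and may differ from the released implementation.

```python
import numpy as np

def speech_band_power(audio, sample_rate, window_s=2.0, band=(100.0, 3500.0)):
    """Mean spectral power inside `band` for each non-overlapping window."""
    n = int(round(window_s * sample_rate))
    n_windows = len(audio) // n
    frames = np.asarray(audio[: n_windows * n], dtype=float).reshape(n_windows, n)
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(n, d=1.0 / sample_rate)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return power[:, in_band].mean(axis=1)

# Synthetic check: a 440 Hz tone (inside the band) followed by 50 Hz hum (outside).
sample_rate = 8000  # toy rate chosen to keep the example small
t = np.arange(2 * sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440 * t)
hum = np.sin(2 * np.pi * 50 * t)
levels = speech_band_power(np.concatenate([tone, hum]), sample_rate)
```

The first window should show far more in-band power than the second, which is the contrast a threshold on this quantity relies on.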

We assessed the performance of the automated algorithms by comparison to manual annotations. The manual annotations for each behavior were binary (i.e., either the behavior was present or not in a time window), whereas the automated speech and movement levels were analog values. Therefore, agreement was computed after applying a threshold to the automated movement and speech levels. The threshold is the average pixel movement, or the speech-band power, above which the algorithm's output was counted as actual movement or speech. Using a small section of video from a few subjects, we chose a movement threshold that corresponded to gross hand-level movement and a sound threshold that captured comprehensible speech. Both thresholds were set by visual inspection of the videos, and the resulting values were consistent across all subjects: an average movement of 1.1 pixels and a mean power of 3 × 10^11 (arbitrary units).

5.5. ECoG preprocessing and feature extraction

All ECoG recordings were bandpass filtered between 0.1 and 160 Hz to reduce noise. The filtered signal was then converted into a set of power spectral features using a short-time Fourier transform with non-overlapping 2-s windows. Each 24-h recording was thus separated into 43,200 samples in time.
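A minimal sketch of the windowed spectral estimate is given below. It covers only the non-overlapping short-time Fourier transform step (the band-pass filter is omitted), and the function name, the round toy sampling rate, and the random input are assumptions for illustration rather than the released code.

```python
import numpy as np

def window_power_spectra(signal, sample_rate, window_s=2.0):
    """Power spectrum of each non-overlapping window.

    signal: array shaped (n_channels, n_samples).
    Returns (freqs, power) with power shaped (n_windows, n_channels, n_freqs).
    """
    signal = np.atleast_2d(signal)
    n = int(round(window_s * sample_rate))
    n_windows = signal.shape[1] // n
    trimmed = signal[:, : n_windows * n]  # drop the incomplete final window
    frames = trimmed.reshape(signal.shape[0], n_windows, n).transpose(1, 0, 2)
    power = np.abs(np.fft.rfft(frames, axis=2)) ** 2
    freqs = np.fft.rfftfreq(n, d=1.0 / sample_rate)
    return freqs, power

sample_rate = 1000  # round toy value, not necessarily the acquisition rate
rng = np.random.default_rng(0)
ecog = rng.normal(size=(4, 10 * sample_rate))  # 4 channels, 10 s of noise
freqs, power = window_power_spectra(ecog, sample_rate)

# Number of 2-s windows in a 24-h recording.
windows_per_day = 24 * 3600 // 2
```

With 2-s windows the frequency resolution is 0.5 Hz, and a full day yields 43,200 windows, matching the count stated above.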

Because subjects engaged in a variety of activities throughout the day, no single frequency band would be solely useful for clustering. We considered power at a range of frequencies between 1 and 52 Hz for each electrode, binning every 1.5 Hz of power for a total of 35 features per electrode per 2-s window. At 82 to 106 electrodes per subject, this process resulted in 2870 to 3710 features for each 2-s window of recording. To normalize the data, we transformed the binned power levels at each frequency bin for each channel by computing the Z-Score. The dimensionality of the feature space was then reduced with principal component analysis (PCA); the cumulative fraction of variance explained as a function of the number of PCA modes for each subject day is shown in Figure S1. These spectra were highly variable, both within and between subjects. For purposes of unsupervised clustering, we truncated each feature space to the first 50 PCs, which generally accounted for at least 40% of the variance in the daily power spectral feature space.
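The normalization and dimensionality reduction can be sketched as follows. This is an illustrative NumPy version that performs PCA through a singular value decomposition of the z-scored feature matrix; the function names and the random toy features (3 electrodes × 35 bins) are assumptions, and the released code may use a different PCA routine.

```python
import numpy as np

def zscore(features):
    """Z-score each feature (column) across time windows (rows)."""
    mu = features.mean(axis=0)
    sd = features.std(axis=0)
    sd[sd == 0] = 1.0  # constant features become zero instead of dividing by zero
    return (features - mu) / sd

def pca_fit(z, n_components):
    """PCA via SVD of centered data.

    Returns scores (n_windows, n_components), basis (n_components, n_features),
    and the fraction of variance explained by each retained component.
    """
    _, s, vt = np.linalg.svd(z, full_matrices=False)
    basis = vt[:n_components]
    scores = z @ basis.T
    explained = (s ** 2) / np.sum(s ** 2)
    return scores, basis, explained[:n_components]

rng = np.random.default_rng(0)
features = rng.lognormal(size=(500, 3 * 35))  # toy data: 500 windows, 105 features
z = zscore(features)
scores, basis, explained = pca_fit(z, n_components=50)
cumulative = np.cumsum(explained)  # analogous to the curves in Figure S1
```

Keeping the PCA basis is what later allows cluster centroids to be mapped back to per-electrode, per-frequency Z-Scores.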

5.6. Hierarchical clustering of ECoG features

We used the 50-dimensional principal component power spectral features of the ECoG data as input to our hierarchical k-means clustering, a variant of the divisive hierarchical clustering method of Lamrous and Taileb (2006). The hierarchical k-means procedure and the annotation of clusters by correlation with movement and speech levels are shown schematically in Figure 8. For each subject on each day, we first performed k-means with k = 20 clusters using the Euclidean distance. Next, we separated the data points into two groups: the single cluster with the largest number of data points, and all remaining clusters combined. This procedure produces the first level of the hierarchical clustering, which has two clusters. For level L of the clustering, this procedure is repeated for each cluster from level L−1 using k = 20/L (rounded down to the nearest integer). Again, the single cluster with the largest number of data points is separated from the rest, so that at level L we end up with 2^L clusters. This process of recursive k-means clustering and aggregation is stopped when there are fewer than 100 data points in each cluster, or when L = 10. In this manuscript, we focused on analyzing annotated clusters in levels 1–4.
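The recursive split described above can be sketched in a few lines. This is an illustrative reading of the procedure, not the released implementation: it uses a plain Lloyd's k-means written in NumPy, numbers the two children of parent p as 2p and 2p+1, and interprets the stopping rule as "do not split a cluster with fewer than 100 points". All names and the random toy data are hypothetical.

```python
import numpy as np

def kmeans(x, k, rng, n_iter=50):
    """Plain Lloyd's k-means with Euclidean distance; returns integer labels."""
    centers = x[rng.choice(len(x), size=k, replace=False)]
    for _ in range(n_iter):
        dist = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for j in range(k):
            members = x[labels == j]
            if len(members):
                centers[j] = members.mean(axis=0)
    return labels

def hierarchical_kmeans(x, max_level=4, k0=20, min_points=100, seed=0):
    """Return an (n_points, max_level) array; column L-1 holds ids at level L."""
    rng = np.random.default_rng(seed)
    ids = np.zeros(len(x), dtype=int)
    per_level = []
    for level in range(1, max_level + 1):
        k = k0 // level              # k = 20 / L, rounded down
        new_ids = 2 * ids            # parent p has children 2p and 2p + 1
        for parent in np.unique(ids):
            idx = np.flatnonzero(ids == parent)
            if len(idx) < max(min_points, k):
                continue             # assumed stopping rule: too small to split
            labels = kmeans(x[idx], k, rng)
            largest = np.bincount(labels, minlength=k).argmax()
            # The largest k-means cluster keeps id 2p; all others merge into 2p + 1.
            new_ids[idx[labels != largest]] += 1
        ids = new_ids
        per_level.append(ids.copy())
    return np.stack(per_level, axis=1)

rng = np.random.default_rng(0)
toy_features = rng.normal(size=(3000, 5))  # stand-in for the 50-dimensional PC scores
assignments = hierarchical_kmeans(toy_features, max_level=3)
```

Because each parent contributes at most two children, level L contains at most 2^L clusters, and the cluster id at any level determines its ancestors by integer division by 2.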

Figure 8. A schematic of the hierarchical clustering and annotation method. Features extracted from ECoG recordings of each subject day were recursively clustered and agglomerated at increasing levels. Annotation consisted of finding the cluster within each level whose time course had the highest correlation with automatically extracted movement and speech levels. For illustration purposes, here we show the annotation of clusters at Level 2, which has 4 total clusters.

5.7. Automated annotation of clusters using video and audio recordings

Results from the clustering analysis were automatically annotated using movement and speech levels. Each type of unsupervised analysis produced time series at a different temporal resolution, so they were all first consolidated into a mean analog value for non-overlapping 16-s windows. For ECoG, each 16-s window was scored by counting how many of the 2-s windows within it were assigned to a particular cluster at the target hierarchy level. For movement and speech detection, we considered what fraction of the 2-s windows within the larger 16-s window exceeded a threshold value, empirically determined by visual inspection to be an average movement of 1.1 pixels and a mean sound power of 3 × 10^11 (arbitrary units).
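The consolidation step amounts to reshaping each 2-s series into blocks of eight and summarizing each block. The sketch below assumes the series are already aligned in time; the function names and toy values are hypothetical.

```python
import numpy as np

def cluster_counts(cluster_ids, target, per_window=8):
    """Number of 2-s windows assigned to `target` in each 16-s block."""
    n_blocks = len(cluster_ids) // per_window
    blocks = np.asarray(cluster_ids[: n_blocks * per_window]).reshape(n_blocks, per_window)
    return (blocks == target).sum(axis=1)

def fraction_above(levels, threshold, per_window=8):
    """Fraction of 2-s windows exceeding `threshold` in each 16-s block."""
    n_blocks = len(levels) // per_window
    blocks = np.asarray(levels[: n_blocks * per_window], dtype=float)
    blocks = blocks.reshape(n_blocks, per_window)
    return (blocks > threshold).mean(axis=1)

# Two 16-s blocks of toy data (eight 2-s windows each).
toy_cluster_ids = [3] * 8 + [1, 3, 1, 3, 1, 1, 1, 1]
toy_movement = [2.0] * 4 + [0.5] * 4 + [0.2] * 8

counts = cluster_counts(toy_cluster_ids, target=3)       # per-block counts for cluster 3
moving = fraction_above(toy_movement, threshold=1.1)     # 1.1-pixel movement threshold
```

Both outputs share the same 16-s time base, so they can be correlated directly.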

After consolidation into windows of 16 s, we computed the Pearson correlation coefficient (r) between each ECoG cluster and the movement and speech levels. The “movement” and “speech” labels were assigned to the clusters for which the correlation was the highest for each behavior. If movement and speech both correlated best with the same cluster, the activity type with the lower correlation was instead assigned to its second-best cluster. The “rest” label was assigned to the cluster with the largest negative correlation with both movement and speech.
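The label assignment can be sketched as below. The tie-breaking follows the rule stated above; the criterion for "rest" is an assumption, since the text does not say how the two negative correlations are combined, and here the cluster with the most negative summed correlation is used. Names and synthetic data are hypothetical.

```python
import numpy as np

def annotate_clusters(cluster_scores, movement, speech):
    """Assign movement, speech and rest labels to clusters.

    cluster_scores: array shaped (n_clusters, n_windows) of 16-s scores.
    Returns a dict mapping each label to a cluster index.
    Note: a cluster with a constant time course yields an undefined (NaN) correlation.
    """
    r_move = np.array([np.corrcoef(c, movement)[0, 1] for c in cluster_scores])
    r_speech = np.array([np.corrcoef(c, speech)[0, 1] for c in cluster_scores])
    move_rank = np.argsort(-r_move)
    speech_rank = np.argsort(-r_speech)
    move_c, speech_c = move_rank[0], speech_rank[0]
    if move_c == speech_c:
        # The behavior with the weaker correlation takes its second-best cluster.
        if r_move[move_c] >= r_speech[speech_c]:
            speech_c = speech_rank[1]
        else:
            move_c = move_rank[1]
    rest_c = np.argmin(r_move + r_speech)  # assumption: most negative summed correlation
    return {"movement": int(move_c), "speech": int(speech_c), "rest": int(rest_c)}

rng = np.random.default_rng(0)
movement = rng.random(200)
speech = rng.random(200)
cluster_scores = np.stack([
    movement + 0.05 * rng.normal(size=200),             # tracks movement
    speech + 0.05 * rng.normal(size=200),               # tracks speech
    -(movement + speech) + 0.05 * rng.normal(size=200), # active when both are low
    rng.random(200),                                    # unrelated
])
labels = annotate_clusters(cluster_scores, movement, speech)
```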

We performed this annotation assignment for clustering levels 1–4 (see Figure 8). At level 1, for which there were only 2 total clusters, labels were simplified to be “rest” and “non-rest” (for movement and speech combined). Level 3, where there are 8 total clusters, appeared to be the most parsimonious level of granularity for the number of categories available automatically.

5.8. Validation with ground truth labels

Ground truth labels were obtained from two students who hand-annotated a small fraction of each subject day (about 40 min, or 3% of each day) for visible and audible behaviors. The hand annotations were distributed randomly throughout each day of each patient. The automated results were compared to manual labels using 16-s windows within the manually annotated times. Each 16-s window was considered to contain an activity if the activity was annotated at any point in the window. Since different clusters have different baseline levels, we considered a cluster to detect the activity if its level was at or above the 25th percentile over the day. Using the manual labels as ground truth, accuracy and F1 scores were computed. The F1 score is the harmonic mean of precision and recall.
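As a worked illustration of the scoring, the sketch below applies a percentile-based detection rule and computes accuracy and F1 against binary ground truth. Function names and the toy vectors are hypothetical; in practice the percentile would be taken over the whole day and the comparison restricted to annotated windows.

```python
import numpy as np

def detect(cluster_level, percentile=25):
    """A window counts as detected if the level is at or above the given percentile."""
    cluster_level = np.asarray(cluster_level, dtype=float)
    return cluster_level >= np.percentile(cluster_level, percentile)

def accuracy_and_f1(predicted, truth):
    """Accuracy and F1 (harmonic mean of precision and recall) for binary labels."""
    predicted = np.asarray(predicted, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(predicted & truth)
    fp = np.sum(predicted & ~truth)
    fn = np.sum(~predicted & truth)
    accuracy = np.mean(predicted == truth)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return float(accuracy), float(f1)

# Toy example: eight annotated 16-s windows.
predicted = [1, 1, 0, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 1, 0, 1, 1, 0]
accuracy, f1 = accuracy_and_f1(predicted, truth)

detected = detect(np.arange(8))  # threshold falls at 1.75 for levels 0..7
```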

To determine the statistical significance of the F1 scores compared to chance, we generated shuffled labels by changing the timing of the ground truth labels of each activity, without changing their overall relative frequency. This shuffling was repeated over 1000 random iterations to determine the distribution of F1 scores assuming chance, and the true F1 score was compared against these shuffled F1 scores. We report the percentile of the true F1 scores for all subject days.
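A shuffle test of this kind can be sketched as follows. The text does not specify how the timing of labels was changed; this sketch assumes a random permutation of the ground-truth vector, which preserves the overall frequency of each label. Function names and the synthetic data are hypothetical.

```python
import numpy as np

def f1_score(predicted, truth):
    """F1 for boolean arrays, written as 2TP / (2TP + FP + FN)."""
    tp = np.sum(predicted & truth)
    denom = 2 * tp + np.sum(predicted & ~truth) + np.sum(~predicted & truth)
    return 2 * tp / denom if denom else 0.0

def shuffle_percentile(predicted, truth, n_shuffles=1000, seed=0):
    """Percentile of the true F1 within the F1 distribution from shuffled labels."""
    rng = np.random.default_rng(seed)
    predicted = np.asarray(predicted, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    true_f1 = f1_score(predicted, truth)
    # Assumption: shuffling is a random permutation of the label sequence.
    null = np.array([f1_score(predicted, rng.permutation(truth))
                     for _ in range(n_shuffles)])
    return float(true_f1), float(100.0 * np.mean(null < true_f1))

rng = np.random.default_rng(1)
truth = rng.random(300) < 0.3          # toy ground truth, about 30% positive
predicted = truth.copy()
predicted[::10] = ~predicted[::10]     # corrupt every tenth prediction
true_f1, pct = shuffle_percentile(predicted, truth)
```

A percentile near 100 indicates that the observed F1 is rarely matched when the temporal alignment between labels and predictions is destroyed.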

5.9. Mapping annotated clusters back to the brain

For each annotated cluster, we projected the centroid values of the cluster back to brain coordinates. The centroid values are 50-dimensional vectors in PCA space, reduced from the power spectral features of all recording electrodes. The inverse PCA transform using the original PCA basis projects the centroid back to brain coordinates, where the relative power in each frequency bin is available at each electrode. Note that because of the Z-Score normalization step before computing the original PCA basis, this back-projection reproduces Z-Score values, not voltages. These Z-Scores can be separately averaged according to frequency bins of interesting bands, including a low-frequency band (LFB, 1–8 Hz) and a high-frequency band (HFB, 12–45 Hz), as shown in Figure 7. Figures S5–S8 also show results of brain maps at 72–100 Hz. Anatomic reconstruction of electrode coordinates on structural imaging of subjects' brains was available for only four of the six subjects, so we were unable to perform this mapping for Subject 2 and Subject 6.
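The back-projection is a single matrix product followed by a reshape. The sketch below assumes that features are ordered electrode by electrode (all frequency bins of electrode 1, then electrode 2, and so on) and uses hypothetical bin centers; both are assumptions for illustration, as are the function names and the random orthonormal basis standing in for the fitted PCA basis.

```python
import numpy as np

def back_project(centroid_pc, basis, n_electrodes, n_bins):
    """Map a centroid from PC space to Z-Scores shaped (n_electrodes, n_bins).

    basis: (n_components, n_features) with orthonormal rows, as returned by PCA.
    No mean is added back because the features were z-scored (zero mean).
    """
    return (centroid_pc @ basis).reshape(n_electrodes, n_bins)

def band_average(z_map, bin_centers, f_lo, f_hi):
    """Average Z-Score per electrode over bins whose centers lie in [f_lo, f_hi]."""
    in_band = (bin_centers >= f_lo) & (bin_centers <= f_hi)
    return z_map[:, in_band].mean(axis=1)

n_electrodes, n_bins, n_components = 4, 35, 50
rng = np.random.default_rng(0)
# Random orthonormal rows stand in for the fitted PCA basis.
q, _ = np.linalg.qr(rng.normal(size=(n_electrodes * n_bins, n_components)))
basis = q.T
centroid = rng.normal(size=n_components)

z_map = back_project(centroid, basis, n_electrodes, n_bins)
bin_centers = 1.0 + 1.5 * (np.arange(n_bins) + 0.5)  # hypothetical bin centers in Hz
low_band = band_average(z_map, bin_centers, 1.0, 8.0)    # one value per electrode
high_band = band_average(z_map, bin_centers, 12.0, 45.0)
```

Each band average gives one value per electrode, which can then be rendered at the electrode's reconstructed position on the cortical surface.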

5.10. Reproducibility and availability of code

The Python code developed for processing and for all analyses presented in this paper is openly available at https://github.com/nancywang1991/pyESig2.git.

Author contributions

NW conceived the experiments with the help of JDO and JGO; NW and RR conducted the experiment; NW, RR, and BB performed the analysis and wrote the manuscript, with input from all authors.

Funding

This research was supported by the Washington Research Foundation (WRF), the National Institutes of Health (NIH) grants NS065186, 2K12HD001097-16 and 5U10NS086525-03, and award EEC-1028725 from the National Science Foundation (NSF).

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Ryan Shean and Sharon Ke for annotating the videos and audio recordings for ground truth labels. We also thank the staff and doctors at Harborview Medical Center, in particular Julie Rae, Shahin Hakimian, and Jeffery Tsai for aiding in data collection as well as research discussions. James Wu contributed to brain reconstructions. We thank Ali Farhadi for giving advice on the computer vision based video processing and Lise Johnson for discussions about unsupervised clustering.

Supplementary material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fnhum.2016.00165

References

- Arya R., Wilson J. A., Vannest J., Byars A. W., Greiner H. M., Buroker J., et al. (2015). Electrocorticographic language mapping in children by high-gamma synchronization during spontaneous conversation: comparison with conventional electrical cortical stimulation. Epilepsy Res. 110, 78–87. doi: 10.1016/j.eplepsyres.2014.11.013

- Ball T., Kern M., Mutschler I., Aertsen A., Schulze-Bonhage A. (2009). Signal quality of simultaneously recorded invasive and non-invasive EEG. Neuroimage 46, 708–716. doi: 10.1016/j.neuroimage.2009.02.028

- Bauer P. R., Vansteensel M. J., Bleichner M. G., Hermes D., Ferrier C. H., Aarnoutse E. J., et al. (2013). Mismatch between electrocortical stimulation and electrocorticography frequency mapping of language. Brain Stimul. 6, 524–531. doi: 10.1016/j.brs.2013.01.001

- Blakely T., Miller K. J., Rao R. P., Holmes M. D., Ojemann J. G. (2008). Localization and classification of phonemes using high spatial resolution electrocorticography (ECoG) grids. Conf. Proc. IEEE Eng. Med. Biol. Soc. 4964–4967. doi: 10.1109/IEMBS.2008.4650328

- Breshears J. D., Gaona C. M., Roland J. L., Sharma M., Bundy D. T., Shimony J. S., et al. (2012). Mapping sensorimotor cortex using slow cortical potential resting-state networks while awake and under anesthesia. Neurosurgery 71, 305. doi: 10.1227/NEU.0b013e318258e5d1

- Brunton B. W., Johnson L. A., Ojemann J. G., Kutz J. N. (2015). Extracting spatial-temporal coherent patterns in large-scale neural recordings using dynamic mode decomposition. J. Neurosci. Methods 258, 1–15. doi: 10.1016/j.jneumeth.2015.10.010

- Cajochen C., Foy R., Dijk D.-J. (1999). Frontal predominance of a relative increase in sleep delta and theta EEG activity after sleep loss in humans. Sleep Res. Online 2, 65–69.

- Chang E. F., Rieger J. W., Johnson K., Berger M. S., Barbaro N. M., Knight R. T. (2010). Categorical speech representation in human superior temporal gyrus. Nat. Neurosci. 13, 1428–1432. doi: 10.1038/nn.2641

- Dastjerdi M., Ozker M., Foster B. L., Rangarajan V., Parvizi J. (2013). Numerical processing in the human parietal cortex during experimental and natural conditions. Nat. Commun. 4, 2528. doi: 10.1038/ncomms3528

- Derix J., Iljina O., Schulze-Bonhage A., Aertsen A., Ball T. (2012). “Doctor” or “darling”? Decoding the communication partner from ECoG of the anterior temporal lobe during non-experimental, real-life social interaction. Front. Hum. Neurosci. 6:251. doi: 10.3389/fnhum.2012.00251

- Derix J., Iljina O., Weiske J., Schulze-Bonhage A., Aertsen A., Ball T. (2014). From speech to thought: the neuronal basis of cognitive units in non-experimental, real-life communication investigated using ECoG. Front. Hum. Neurosci. 8:383. doi: 10.3389/fnhum.2014.00383

- DeWitt I., Rauschecker J. P. (2013). Wernicke's area revisited: parallel streams and word processing. Brain Lang. 127, 181–191. doi: 10.1016/j.bandl.2013.09.014

- Erhan D., Szegedy C., Toshev A., Anguelov D. (2014). Scalable object detection using deep neural networks, in 2014 IEEE Conference on Computer Vision and Pattern Recognition (Columbus: IEEE), 2155–2162.

- Felsen G., Dan Y. (2005). A natural approach to studying vision. Nat. Neurosci. 8, 1643–1646. doi: 10.1038/nn1608

- Fifer M. S., Hotson G., Wester B. A., McMullen D. P., Wang Y., Johannes M. S., et al. (2014). Simultaneous neural control of simple reaching and grasping with the modular prosthetic limb using intracranial EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 695–705. doi: 10.1109/TNSRE.2013.2286955

- Girshick R., Donahue J., Darrell T., Malik J. (2014). Rich feature hierarchies for accurate object detection and semantic segmentation, in 2014 IEEE Conference on Computer Vision and Pattern Recognition (Columbus: IEEE), 580–587.

- He K., Zhang X., Ren S., Sun J. (2015). Delving deep into rectifiers: surpassing human-level performance on ImageNet classification. IEEE Int. Conf. Comput. Vis. 1026–1034. arXiv:1502.01852v1.

- Herff C., Heger D., de Pesters A., Telaar D., Brunner P., Schalk G., Schultz T. (2015). Brain-to-text: decoding spoken phrases from phone representations in the brain. Front. Neurosci. 9:217. doi: 10.3389/fnins.2015.00217

- Hill N. J., Gupta D., Brunner P. (2012). Recording human electrocorticographic (ECoG) signals for neuroscientific research and real-time functional cortical mapping. J. Vis. Exp. 64:3993. doi: 10.3791/3993

- Huang X., Baker J., Reddy R. (2014). A historical perspective of speech recognition. Commun. ACM 57, 94–103. doi: 10.1145/2500887

- Jackson A., Mavoori J., Fetz E. (2007). Correlations between the same motor cortex cells and arm muscles during a trained task, free behavior, and natural sleep in the macaque monkey. J. Neurophysiol. 97, 360–374. doi: 10.1152/jn.00710.2006

- Jordan M. I., Mitchell T. M. (2015). Machine learning: trends, perspectives, and prospects. Science 349, 255–260. doi: 10.1126/science.aaa8415

- Kanas V. G., Mporas I., Benz H. L., Sgarbas K. N., Bezerianos A., Crone N. E. (2014a). Joint spatial-spectral feature space clustering for speech activity detection from ECoG signals. IEEE Trans. Biomed. Eng. 61, 1241–1250. doi: 10.1109/TBME.2014.2298897

- Kanas V. G., Mporas I., Benz H. L., Sgarbas K. N., Bezerianos A., Crone N. E. (2014b). Real-time voice activity detection for ECoG-based speech brain machine interfaces, in 2014 19th International Conference on Digital Signal Processing (Hong Kong: IEEE), 862–865.

- Karpathy A., Toderici G., Shetty S., Leung T., Sukthankar R., Fei-Fei L. (2014). Large-scale video classification with convolutional neural networks, in 2014 IEEE Conference on Computer Vision and Pattern Recognition (Columbus: IEEE), 1725–1732.

- Kellis S., Miller K., Thomson K., Brown R., House P., Greger B. (2010). Decoding spoken words using local field potentials recorded from the cortical surface. J. Neural Eng. 7:056007. doi: 10.1088/1741-2560/7/5/056007

- Kipp M. (2012). Anvil: a universal video research tool, in Handbook of Corpus Phonology, eds Durand J., Gut U., Kristoffersen G. (Oxford, UK: Oxford University Press).

- Lal T., Hinterberger T., Widman G., Michael S., Hill J., Rosenstiel W., et al. (2004). Methods towards invasive human brain computer interfaces, in Eighteenth Annual Conference on Neural Information Processing Systems (NIPS 2004) (Vancouver, BC: MIT Press), 737–744.

- Lamrous S., Taileb M. (2006). Divisive hierarchical k-means, in International Conference on Computational Intelligence for Modelling, Control and Automation, 2006 and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (Sydney: IEEE), 18–18.

- Längkvist M., Karlsson L., Loutfi A. (2012). Sleep stage classification using unsupervised feature learning. Adv. Artif. Neural Syst. 2012:107046. doi: 10.1155/2012/107046

- Leuthardt E. C., Miller K. J., Schalk G., Rao R. P., Ojemann J. G. (2006). Electrocorticography-based brain computer interface–the Seattle experience. IEEE Trans. Neural Syst. Rehabil. Eng. 14, 194–198. doi: 10.1109/TNSRE.2006.875536

- Leuthardt E. C., Gaona C., Sharma M., Szrama N., Roland J., Freudenberg Z., et al. (2011). Using the electrocorticographic speech network to control a brain-computer interface in humans. J. Neural Eng. 8:036004. doi: 10.1088/1741-2560/8/3/036004

- McMullen D. P., Hotson G., Katyal K. D., Wester B. A., Fifer M. S., McGee T. G., et al. (2014). Demonstration of a semi-autonomous hybrid brain-machine interface using human intracranial EEG, eye tracking, and computer vision to control a robotic upper limb prosthetic. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 784–796. doi: 10.1109/TNSRE.2013.2294685

- Mercier M. R., Molholm S., Fiebelkorn I. C., Butler J. S., Schwartz T. H., Foxe J. J. (2015). Neuro-oscillatory phase alignment drives speeded multisensory response times: an electro-corticographic investigation. J. Neurosci. 35, 8546–8557. doi: 10.1523/JNEUROSCI.4527-14.2015

- Miller K. J., Schalk G., Fetz E. E., den Nijs M., Ojemann J. G., Rao R. P. N. (2010). Cortical activity during motor execution, motor imagery, and imagery-based online feedback. Proc. Natl. Acad. Sci. U.S.A. 107, 4430–4435. doi: 10.1073/pnas.0913697107

- Miller K. J., denNijs M., Shenoy P., Miller J. W., Rao R. P., Ojemann J. G. (2007). Real-time functional brain mapping using electrocorticography. Neuroimage 37, 504–507. doi: 10.1016/j.neuroimage.2007.05.029

- Miller K. J., Zanos S., Fetz E. E., den Nijs M., Ojemann J. G. (2009). Decoupling the cortical power spectrum reveals real-time representation of individual finger movements in humans. J. Neurosci. 29, 3132–3137. doi: 10.1523/JNEUROSCI.5506-08.2009

- Moran D. (2010). Evolution of brain-computer interface: action potentials, local field potentials and electrocorticograms. Curr. Opin. Neurobiol. 20, 741–745. doi: 10.1016/j.conb.2010.09.010

- Mugler E. M., Patton J. L., Flint R. D., Wright Z. A., Schuele S. U., Rosenow J., et al. (2014). Direct classification of all American English phonemes using signals from functional speech motor cortex. J. Neural Eng. 11:035015. doi: 10.1088/1741-2560/11/3/035015

- Muller-Putz G., Leeb R., Tangermann M., Hohne J. H., Kubler A. K., Cincotti F., et al. (2015). Towards noninvasive hybrid brain–computer interfaces: framework, practice, clinical application, and beyond. Proc. IEEE 103, 926–943. doi: 10.1109/JPROC.2015.2411333

- Nakanishi Y., Yanagisawa T., Shin D., Fukuma R., Chen C., Kambara H., et al. (2013). Prediction of three-dimensional arm trajectories based on ECoG signals recorded from human sensorimotor cortex. PLoS ONE 8:e72085. doi: 10.1371/journal.pone.0072085

- Pei X., Barbour D. L., Leuthardt E. C., Schalk G. (2011). Decoding vowels and consonants in spoken and imagined words using electrocorticographic signals in humans. J. Neural Eng. 8:046028. doi: 10.1088/1741-2560/8/4/046028

- Pistohl T., Schulze-Bonhage A., Aertsen A. (2012). Decoding natural grasp types from human ECoG. Neuroimage 59, 248–260. doi: 10.1016/j.neuroimage.2011.06.084

- Pluta T., Bernardo R., Shin H. W., Bernardo D. R. (2014). Unsupervised learning of electrocorticography motifs with binary descriptors of wavelet features and hierarchical clustering, in Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Chicago, IL), 2657–2660.

- Poppe R. (2007). Vision-based human motion analysis: an overview. Comput. Vis. Image Underst. 108, 4–18. doi: 10.1016/j.cviu.2006.10.016

- Potes C., Brunner P., Gunduz A., Knight R. T., Schalk G. (2014). Spatial and temporal relationships of electrocorticographic alpha and gamma activity during auditory processing. Neuroimage 97, 188–195. doi: 10.1016/j.neuroimage.2014.04.045

- Ramirez J., Segura J. C., Benitez C., de la Torre A., Rubio A. (2004). Efficient voice activity detection algorithms using long-term speech information. Speech Commun. 42, 271–287. doi: 10.1016/j.specom.2003.10.002

- Rao R. P. N. (2013). Brain-Computer Interfacing: An Introduction. New York, NY: Cambridge University Press.

- Ruescher J., Iljina O., Altenmüller D.-M., Aertsen A., Schulze-Bonhage A., Ball T. (2013). Somatotopic mapping of natural upper- and lower-extremity movements and speech production with high gamma electrocorticography. Neuroimage 81, 164–177. doi: 10.1016/j.neuroimage.2013.04.102

- Ryoo M. S., Matthies L. (2013). First-person activity recognition: what are they doing to me? in 2013 IEEE Conference on Computer Vision and Pattern Recognition (Portland: IEEE), 2730–2737.

- Schalk G., Miller K. J., Anderson N. R., Wilson J. A., Smyth M. D., Ojemann J. G., et al. (2008). Two-dimensional movement control using electrocorticographic signals in humans. J. Neural Eng. 5, 75–84. doi: 10.1088/1741-2560/5/1/008

- Toshev A., Szegedy C. (2014). DeepPose: human pose estimation via deep neural networks, in 2014 IEEE Conference on Computer Vision and Pattern Recognition (Columbus: IEEE), 1653–1660.

- Towle V. L., Yoon H.-A., Castelle M., Edgar J. C., Biassou N. M., Frim D. M., et al. (2008). ECOG gamma activity during a language task: differentiating expressive and receptive speech areas. Brain 131, 2013–2027. doi: 10.1093/brain/awn147

- Vansteensel M. J., Bleichner M. G., Dintzner L., Aarnoutse E., Leijten F., Hermes D., et al. (2013). Task-free electrocorticography frequency mapping of the motor cortex. Clin. Neurophysiol. 124, 1169–1174. doi: 10.1016/j.clinph.2012.08.048

- Vansteensel M. J., Hermes D., Aarnoutse E. J., Bleichner M. G., Schalk G., van Rijen P. C., et al. (2010). Brain–computer interfacing based on cognitive control. Ann. Neurol. 67, 809–816. doi: 10.1002/ana.21985

- Vinje W. E., Gallant J. (2000). Sparse coding and decorrelation in primary visual cortex during natural vision. Science 287, 1273–1276. doi: 10.1126/science.287.5456.1273

- Wang N., Cullis-Suzuki S., Albu A. (2015). Automated analysis of wild fish behavior in a natural habitat, in Environmental Multimedia Retrieval at ICMR (Shanghai).

- Wang P. T., Puttock E. J., King C. E., Schombs A., Lin J. J., Sazgar M., et al. (2013a). State and trajectory decoding of upper extremity movements from electrocorticogram, in 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER) (San Diego, CA: IEEE), 969–972.

- Wang W., Collinger J. L., Degenhart A. D., Tyler-Kabara E. C., Schwartz A. B., Moran D. W., et al. (2013b). An electrocorticographic brain interface in an individual with tetraplegia. PLoS ONE 8:e55344. doi: 10.1371/journal.pone.0055344

- Wang Z., Gunduz A., Brunner P., Ritaccio A. L., Ji Q., Schalk G. (2012). Decoding onset and direction of movements using Electrocorticographic (ECoG) signals in humans. Front. Neuroeng. 5:15. doi: 10.3389/fneng.2012.00015

- Wang Z., Ji Q., Miller K. J., Schalk G. (2010). Decoding finger flexion from electrocorticographic signals using a sparse Gaussian process, in 2010 20th International Conference on Pattern Recognition (Istanbul: IEEE), 3756–3759.

- Williams J. C., Hippensteel J. A., Dilgen J., Shain W., Kipke D. R. (2007). Complex impedance spectroscopy for monitoring tissue responses to inserted neural implants. J. Neural Eng. 4, 410–423. doi: 10.1088/1741-2560/4/4/007

- Wolpaw J., Wolpaw E. W. (2012). Brain-computer interfaces: principles and practice. London: Oxford University Press.

- Yanagisawa T., Hirata M., Saitoh Y., Goto T., Kishima H., Fukuma R., et al. (2011). Real-time control of a prosthetic hand using human electrocorticography signals. J. Neurosurg. 114, 1715–1722. doi: 10.3171/2011.1.JNS101421

- Zander T. O., Kothe C. (2011). Towards passive brain-computer interfaces: applying brain-computer interface technology to human-machine systems in general. J. Neural Eng. 8:025005. doi: 10.1088/1741-2560/8/2/025005

- Zhang D., Gong E., Wu W., Lin J., Zhou W., Hong B. (2012). Spoken sentences decoding based on intracranial high gamma response using dynamic time warping, in Annual International Conference of the IEEE Engineering in Medicine and Biology Society (San Diego, CA), 3292–3295.
