Abstract

Eye-gaze detection and tracking have been an active research field in recent years because they add convenience to a variety of applications. Eye tracking is considered a significant unconventional method of human-computer interaction. Head movement detection has also attracted researchers' interest because it has been found to be a simple and effective interaction method. Both technologies are considered the easiest alternative interface methods, and they serve a wide range of severely disabled people who are left with minimal motor abilities. Several different approaches have been proposed and used to implement algorithms for both eye tracking and head movement detection. Despite the amount of research done on both technologies, researchers are still searching for robust methods that can be used effectively in various applications. This paper presents a state-of-the-art survey of eye tracking and head movement detection methods proposed in the literature. Examples of application fields for both technologies, such as human-computer interaction, driving assistance systems, and assistive technologies, are also investigated.

Keywords: Eye tracking, eye detection, head movement detection

I. Introduction

Eyes and their movements are important in expressing a person's desires, needs and emotional states [1]. The significance of eye movements with regard to the perception of and attention to the visual world is widely acknowledged, since eye movements are the means by which the information needed to identify the characteristics of the visual world is gathered for processing in the human brain. Hence, robust eye detection and tracking are considered to play a crucial role in the development of human-computer interaction, in creating attentive user interfaces and in analyzing human affective states.

Head movement is also found to be a natural, simple and effective way of pointing to objects, interaction and communication. Thus, head movement detection has received significant attention in recent research. One of the various purposes for head movement detection and tracking is to allow the user to interact with a computer. It also provides the ability to control many devices by mapping the position of the head into control signals.

Eye tracking and head movement detection are widely investigated as alternative interface methods. They are considered easier to use than other methods such as voice recognition or EEG/ECG signals, and they have also achieved higher accuracy and performance. In addition, using eye tracking or head movement detection as an alternative interface, control or communication method benefits a wide range of severely disabled people who are left with minimal ability to perform voluntary motion. Eye and head movements are the least affected by disabilities because they are directly controlled by the brain; for example, spinal cord injuries do not affect the ability to control them. Combining eye tracking and head movement detection can provide a larger number of possible control commands for assistive technologies such as a wheelchair.

Many approaches to eye tracking have been introduced in the literature. They can serve as a basis for developing an eye tracking system that achieves the highest accuracy, best performance and lowest cost.

Head movement detection has been receiving growing interest as well, and many approaches have been proposed. Because their algorithms are simple, some approaches may be implemented on low-computational-power hardware such as a microcontroller.

This paper presents a survey of different eye tracking and head movement detection techniques reported in the literature along with examples of various applications employing these technologies.

The rest of the paper is organized as follows. Different methods of eye tracking are investigated in Section II. Fields of application for eye tracking are described briefly in Section III. Section IV discusses several head movement detection algorithms, and the applications of this technology are presented in Section V. Systems that combine eye tracking and head movement detection are described in Section VI. Some commercial eye tracking and head movement detection products are presented in Section VII. The results of using the investigated eye tracking and head movement detection algorithms are reported in Section VIII. Finally, Section IX draws the conclusions.

II. Eye Tracking

The geometric and motion characteristics of the eyes are unique, which makes gaze estimation and tracking important for many applications, such as human attention analysis, human emotional state analysis, interactive user interfaces and human factors.

There are many different approaches for implementing eye detection and tracking systems [2], and many eye tracking methods have been presented in the literature. However, research is still ongoing to find robust eye detection and tracking methods that can be used in a wide range of applications.

A. Sensor-Based Eye Tracking (EOG)

Some eye tracking systems detect and analyze eye movements based on electric potentials measured with electrodes placed around the eyes. The electric signal detected by two pairs of electrodes placed around one eye is known as the electrooculogram (EOG). When the eyes are in their straight-ahead (primary) position, the electrodes measure a steady electric potential field. When the eyes move toward the periphery, the retina approaches one electrode and the cornea approaches the other. This changes the orientation of the corneo-retinal dipole and results in a change in the measured EOG signal. Eye movement can therefore be tracked by analyzing the changes in the EOG signal [3].
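A minimal sketch of how horizontal gaze could be read from such a signal, assuming a single, already filtered horizontal EOG channel and an approximately linear relation between EOG amplitude and gaze angle over a moderate range (a common simplification, not a detail given in [3]). The calibration data, the 10-degree dead zone and the "positive angle means right" convention are hypothetical.

```python
import numpy as np

def fit_eog_calibration(voltages_uv, angles_deg):
    """Least-squares fit of angle = gain * voltage + offset (linear model assumed)."""
    A = np.column_stack([voltages_uv, np.ones(len(voltages_uv))])
    (gain, offset), *_ = np.linalg.lstsq(A, angles_deg, rcond=None)
    return gain, offset

def classify_horizontal_gaze(voltage_uv, gain, offset, dead_zone_deg=10.0):
    """Map one EOG sample to 'left', 'center' or 'right' (positive angle = right, assumed)."""
    angle = gain * voltage_uv + offset
    if angle > dead_zone_deg:
        return "right"
    if angle < -dead_zone_deg:
        return "left"
    return "center"

# Calibration: the user fixates targets at known horizontal angles while the EOG is recorded.
cal_angles = np.array([-30.0, -15.0, 0.0, 15.0, 30.0])
cal_volts = np.array([-480.0, -240.0, 0.0, 240.0, 480.0])  # synthetic data, about 16 uV per degree
gain, offset = fit_eog_calibration(cal_volts, cal_angles)
print(classify_horizontal_gaze(300.0, gain, offset))  # right
```

In practice the EOG baseline drifts, so the calibration would need to be repeated or the offset re-estimated periodically.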

B. Computer-Vision-Based Eye Tracking

Most eye tracking methods presented in the literature use computer vision based techniques. In these methods, a camera is set to focus on one or both eyes and record the eye movement. The main focus of this paper is on computer vision based eye detection and gaze tracking.

There are two main areas investigated in computer-vision-based eye tracking. The first is eye detection in the image, also known as eye localization. The second is eye tracking, the process of estimating the eye gaze direction. The gaze direction is estimated from the data obtained by processing and analyzing the detected eye region; it is then either used directly in the application or, in real-time eye tracking systems, tracked over subsequent video frames.

Eye detection and tracking remain challenging tasks because of the many issues associated with such systems, including the degree of eye openness, variability in eye size and head pose. Different applications that use eye tracking are affected by these issues to different degrees. Several computer-vision-based eye tracking approaches have been introduced.

1. Pattern Recognition for Eye Tracking

Different pattern recognition techniques, such as template matching and classification, have proved effective in the field of eye tracking. Raudonis et al. [4] used principal component analysis (PCA) to find the first six principal components of the eye image, reducing the dimensionality problems that arise when all image pixels are used to compare images. An artificial neural network (ANN) is then used to classify the pupil position. The training data for the ANN are gathered during calibration, when the user is required to look at five points indicating five different pupil positions. The system requires special hardware consisting of glasses and a single head-mounted camera, which might disturb the patient because it is in their field of view. The use of classification slows the system, so it requires some enhancements before it is applicable in practice; in addition, the system is not a real-time eye tracking system. The proposed algorithm was not tested on a known database, so its robustness to changes in lighting conditions, shadows, camera distance, the exact position in which the camera is mounted, etc. has not been established. The algorithm also requires processing that cannot be performed by low-computational-power hardware such as a microcontroller.
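The PCA stage described above can be sketched as follows. To stay dependency-free, this sketch substitutes a nearest-centroid classifier in the six-dimensional PCA space for the ANN used in [4]; the synthetic 12x12 "eye images" with a dark 3x3 pupil at five calibration positions are hypothetical.

```python
import numpy as np

def fit_pca(X, n_components=6):
    """Mean and top principal axes of row-vector eye images X (n_samples x n_pixels)."""
    mean = X.mean(axis=0)
    # Rows of Vt are the principal directions, sorted by decreasing singular value.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def project(X, mean, axes):
    return (X - mean) @ axes.T

def train_centroids(Z, labels):
    classes = np.unique(labels)
    return classes, np.array([Z[labels == c].mean(axis=0) for c in classes])

def predict(Z, classes, centroids):
    # Nearest-centroid rule in PCA space (a stand-in for the ANN used in [4]).
    dists = np.linalg.norm(Z[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(dists, axis=1)]

# Synthetic 12x12 "eye images": a dark 3x3 pupil at five calibration positions.
rng = np.random.default_rng(0)
OFFSETS = {"center": (0, 0), "left": (0, -3), "right": (0, 3), "up": (-3, 0), "down": (3, 0)}

def make_eye(dy, dx):
    img = np.ones((12, 12))
    img[4 + dy:7 + dy, 4 + dx:7 + dx] = 0.0
    return img.ravel() + rng.normal(0.0, 0.05, 144)

def make_set(n_per_class):
    X, y = [], []
    for name, (dy, dx) in OFFSETS.items():
        for _ in range(n_per_class):
            X.append(make_eye(dy, dx))
            y.append(name)
    return np.array(X), np.array(y)

X_train, y_train = make_set(10)                      # calibration samples
mean, axes = fit_pca(X_train, n_components=6)
classes, centroids = train_centroids(project(X_train, mean, axes), y_train)
X_test, y_test = make_set(5)
y_pred = predict(project(X_test, mean, axes), classes, centroids)
print((y_pred == y_test).mean())
```

Reducing 144 pixels to six coefficients is what makes the later classification step cheap; an ANN could be dropped in place of `predict` without changing the PCA stage.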

Tang and Zhang [5] suggested a method that combines a detection algorithm with grey prediction for eye tracking. The GM(1,1) grey model is used to predict the location of the eye in the next video frame, and the predicted location is used as the reference for the eye region to be searched. The method uses low-level image data in order to be fast, but no experimental results evaluating its performance were reported.
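The standard GM(1,1) one-step prediction can be sketched as below, applied to one eye coordinate at a time. How [5] builds the input sequence and sizes the search region is not described here, so the four-frame history and the search half-width are hypothetical.

```python
import numpy as np

def gm11_predict_next(x0):
    """One-step-ahead GM(1,1) grey prediction for a short, positive sequence.

    Assumes the fitted development coefficient a is non-zero
    (a constant sequence gives a = 0 and this closed form breaks down).
    """
    x0 = np.asarray(x0, dtype=float)
    n = len(x0)
    x1 = np.cumsum(x0)                        # accumulated generating operation (AGO)
    z1 = 0.5 * (x1[1:] + x1[:-1])             # background values z1(k), k = 2..n
    B = np.column_stack([-z1, np.ones(n - 1)])
    (a, b), *_ = np.linalg.lstsq(B, x0[1:], rcond=None)  # fit x0(k) + a*z1(k) = b

    def x1_hat(k):                            # time response, 1-based k
        return (x0[0] - b / a) * np.exp(-a * (k - 1)) + b / a

    return x1_hat(n + 1) - x1_hat(n)          # inverse AGO gives the next raw value

# Hypothetical eye-center x-coordinates (pixels) from the last four frames.
history = [100.0, 110.0, 121.0, 133.1]
x_pred = gm11_predict_next(history)
search_half_width = 15                        # hypothetical search-window half-size
search_range = (x_pred - search_half_width, x_pred + search_half_width)
print(x_pred, search_range)
```

Because only a handful of past positions are needed, the prediction costs almost nothing compared with searching the whole frame.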

Kuo et al. [6] proposed an eye tracking system that uses a particle filter, which estimates a sequence of hidden parameters from the observed data. Eye tracking starts after the possible eye positions have been detected. For effective and reliable tracking, the gray-level histogram is selected as the feature of the particle filter. Using low-level image features makes the algorithm fast. The system achieves high accuracy; however, its real-time performance was not evaluated, and the algorithm was tested on still images rather than videos, which were not taken from a known database. The accuracy and performance of the algorithm may therefore decrease when it is used in a real-world application.
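A particle filter driven by gray-level histograms can be sketched as follows. The exact formulation of [6] is not given here; this sketch assumes a random-walk motion model, the Bhattacharyya coefficient as histogram similarity, an exponential weighting with a hypothetical `sharpness` parameter, and multinomial resampling. The moving dark square stands in for an eye.

```python
import numpy as np

BINS, HALF = 16, 5   # 16-bin histograms over a 10x10 patch (assumed values)

def gray_histogram(frame, cy, cx):
    patch = frame[cy - HALF:cy + HALF, cx - HALF:cx + HALF]
    hist, _ = np.histogram(patch, bins=BINS, range=(0, 256))
    return hist / hist.sum()

def track(frames, start, n_particles=300, motion_std=3.0, sharpness=200.0, seed=0):
    """Track an eye patch with a particle filter weighted by histogram similarity."""
    rng = np.random.default_rng(seed)
    h, w = frames[0].shape
    ref = gray_histogram(frames[0], *start)            # reference eye histogram
    particles = np.tile(np.array(start, dtype=float), (n_particles, 1))
    estimates = []
    for frame in frames[1:]:
        # 1) Predict with a random-walk motion model, keeping patches inside the frame.
        particles += rng.normal(0.0, motion_std, particles.shape)
        particles[:, 0] = np.clip(particles[:, 0], HALF, h - HALF)
        particles[:, 1] = np.clip(particles[:, 1], HALF, w - HALF)
        # 2) Weight each particle by the Bhattacharyya coefficient between histograms.
        pos = np.round(particles).astype(int)
        bc = np.array([np.sum(np.sqrt(ref * gray_histogram(frame, y, x))) for y, x in pos])
        weights = np.exp(-sharpness * (1.0 - bc))
        weights /= weights.sum()
        estimates.append(weights @ particles)          # weighted-mean (row, col) estimate
        # 3) Resample in proportion to the weights.
        particles = particles[rng.choice(n_particles, size=n_particles, p=weights)]
    return np.array(estimates)

# Synthetic frames: a dark 8x8 "eye" moving 2 pixels right per frame on a bright background.
rng = np.random.default_rng(1)

def make_frame(cy, cx):
    frame = rng.uniform(200.0, 208.0, size=(60, 60))
    frame[cy - 4:cy + 4, cx - 4:cx + 4] = 30.0
    return frame

truth = [(20, 15 + 2 * t) for t in range(10)]
frames = [make_frame(*p) for p in truth]
est = track(frames, truth[0])
print(np.round(est[-1], 1), truth[-1])
```

Only histograms of small patches are computed, which is consistent with the observation above that low-level features keep the method fast.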

Lui et al. [7] suggested a fast and robust eye detection and tracking method that can be used with rotated facial images. The camera used by the system is not head-mounted. A Viola-Jones face detector, which is based on Haar features, locates the face in the whole image. Template matching (TM) is then applied to detect the eyes, Zernike moments (ZM) are used to extract rotation-invariant eye features, and a support vector machine (SVM) classifies image patches into eye and non-eye patterns. The exact positions of the left and right eyes are determined by selecting the two positions with the highest values among the local maxima found in the eye probability map. Detecting the eye region is helpful as a preprocessing stage before iris/pupil tracking, and in particular it allows eyes to be detected in rotated facial images. This work presented a simple eye tracking algorithm, but the results of the method's evaluation were not reported; the proposed method is therefore unproven and cannot be considered usable as it stands.
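The final selection step, picking the two strongest local maxima of an eye probability map, can be sketched as follows. The 8-neighbour definition of a local maximum and the synthetic map (two eye peaks plus a weaker distractor) are assumptions for illustration; in [7] the map would come from the SVM stage.

```python
import numpy as np

def two_eye_positions(prob_map):
    """(row, col) of the two strongest 8-neighbour local maxima, ordered left to right."""
    p = np.pad(np.asarray(prob_map, dtype=float), 1, constant_values=-np.inf)
    center = p[1:-1, 1:-1]
    is_max = np.ones(center.shape, dtype=bool)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy or dx:
                neighbour = p[1 + dy:p.shape[0] - 1 + dy, 1 + dx:p.shape[1] - 1 + dx]
                is_max &= center >= neighbour
    rows, cols = np.nonzero(is_max)
    top2 = np.argsort(center[rows, cols])[::-1][:2]   # two highest local maxima
    eyes = [(int(rows[i]), int(cols[i])) for i in top2]
    return sorted(eyes, key=lambda rc: rc[1])

# Hypothetical eye probability map: two eye peaks and a weaker false response.
yy, xx = np.mgrid[0:20, 0:30]

def bump(r, c, height):
    return height * np.exp(-((yy - r) ** 2 + (xx - c) ** 2) / 8.0)

prob = bump(10, 8, 0.9) + bump(10, 22, 0.8) + bump(3, 15, 0.3)
print(two_eye_positions(prob))  # [(10, 8), (10, 22)]
```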

Hotrakool et al. [8] introduced an eye tracking method based on gradient orientation pattern matching with automatic template updates. The method detects the iris based on low-level features and motion detection between subsequent video frames. It can be used in applications that require real-time eye tracking with high robustness against changes in lighting conditions during operation. The computational time is reduced by down-sampling the video frames, and the method achieves high accuracy. However, the experiments were performed on videos of a single eye, which eliminates all surrounding noise, and a known database was not used. The method detects the iris but does not classify its position. Among the real-time eye tracking methods investigated in this survey, it requires the least CPU processing time. The motion detection approach discussed in [8] is worth considering in new algorithms to minimize the CPU processing time required in eye tracking applications.
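Gradient orientation pattern matching can be sketched as an exhaustive search that compares gradient directions rather than intensities, which is why it tolerates lighting changes. The similarity used here, the mean cosine of the orientation difference over the template's edge pixels, is an assumption rather than the exact measure of [8]; the synthetic frame and template are hypothetical.

```python
import numpy as np

def gradient_orientation(img):
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    return np.arctan2(gy, gx), np.hypot(gx, gy)

def gop_match(frame, template, mag_thresh=1e-3):
    """Exhaustive search for the window whose gradient orientations best match the template."""
    f_ang, f_mag = gradient_orientation(frame)
    t_ang, t_mag = gradient_orientation(template)
    th, tw = template.shape
    mask = t_mag > mag_thresh                # compare orientations only on template edges
    best_score, best_pos = -np.inf, None
    for r in range(frame.shape[0] - th + 1):
        for c in range(frame.shape[1] - tw + 1):
            valid = mask & (f_mag[r:r + th, c:c + tw] > mag_thresh)
            diff = f_ang[r:r + th, c:c + tw][valid] - t_ang[valid]
            # Mean cosine of the orientation difference over all template edge pixels.
            score = np.cos(diff).sum() / mask.sum()
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score

yy, xx = np.mgrid[0:40, 0:50]
frame = np.full((40, 50), 200.0)
frame[(yy - 20) ** 2 + (xx - 30) ** 2 <= 16] = 40.0     # dark iris-like disk
frame[5:11, 5:11] = 255.0                               # bright distractor
template = frame[14:27, 24:37].copy()                   # eye template from the first frame
pos, score = gop_match(frame, template)
print(pos, round(score, 3))  # (14, 24) 1.0
```

Down-sampling, as in [8], could be approximated by running `gop_match` on `frame[::2, ::2]` and `template[::2, ::2]`, and an automatic template update would replace `template` with the best-matching window after each frame; the update policy used in [8] is not reproduced here.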

Yuan and Kebin [9] presented the Local and Scale Integrated Feature (LoSIF), a new descriptor for extracting eye movement features in a non-intrusive system, which gives some tolerance to head movements. The descriptor uses a two-level Haar wavelet transform, multi-resolution characteristics and an effective dimension reduction algorithm to find local and scale eye movement features. Support vector regression (SVR) is used to map the appearance of the eyes to the corresponding gaze direction. The focus of this method is to locate the eye without classifying its position or gaze direction. The method was found to achieve high accuracy in iris detection, which makes it useful for iris detection and segmentation, an important step in eye tracking. However, its real-time performance was not evaluated.
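The two-level Haar wavelet decomposition at the core of such a descriptor can be sketched as follows. The full LoSIF construction, its dimension reduction and the SVR mapping are not reproduced; the feature vector built here (level-2 approximation plus mean absolute detail per band) is a hypothetical stand-in that only illustrates the multi-resolution idea.

```python
import numpy as np

def haar2d_level(img):
    """One level of an orthonormal 2-D Haar transform: (approximation, three detail bands)."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    approx = (a + b + c + d) / 2.0
    detail_cols = (a - b + c - d) / 2.0    # differences between neighbouring columns
    detail_rows = (a + b - c - d) / 2.0    # differences between neighbouring rows
    detail_diag = (a - b - c + d) / 2.0    # diagonal differences
    return approx, [detail_cols, detail_rows, detail_diag]

def two_level_haar_features(eye_img):
    """Hypothetical multi-scale feature vector: level-2 approximation + detail energies."""
    img = np.asarray(eye_img, dtype=float)
    if img.shape[0] % 4 or img.shape[1] % 4:
        raise ValueError("image height and width must be divisible by 4")
    approx1, details1 = haar2d_level(img)
    approx2, details2 = haar2d_level(approx1)
    energies = [np.mean(np.abs(band)) for band in details1 + details2]
    return np.concatenate([approx2.ravel(), energies])

eye = np.random.default_rng(0).uniform(0, 255, size=(16, 32))   # hypothetical eye crop
features = two_level_haar_features(eye)
print(features.shape)  # (38,)
```

The division by 2 makes each level orthonormal, so the sub-bands preserve the image energy; a 16x32 crop (512 pixels) shrinks to a 38-value vector.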

Fu and Yang [10] proposed a high-performance eye tracking algorithm in which two eye templates, one for each eye, are manually extracted from the first video frame for system calibration. The face region in each captured frame is detected, and a normalized 2-D cross-correlation is computed to match the templates with the image. The eye gaze direction is estimated by detecting the iris using edge detection and the Hough circle transform. The authors used their algorithm to implement a display control application. However, the calibration process is inflexible. The algorithm was not tested on a variety of test subjects and the results were not clearly reported, so it should be investigated carefully before being chosen for implementation.
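Template matching by normalized 2-D cross-correlation, as used above, can be sketched with NumPy alone. This sketch uses the zero-mean form of the correlation, which is invariant to brightness and contrast changes; whether [10] subtracts the mean is not stated here. The frame, the template location and the exposure change are synthetic. In OpenCV the same score is available through `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def ncc_match(image, template):
    """Top-left (row, col) maximizing zero-mean normalized cross-correlation, and the score."""
    image = np.asarray(image, dtype=float)
    t = np.asarray(template, dtype=float)
    t = t - t.mean()
    windows = sliding_window_view(image, t.shape)           # (H-th+1, W-tw+1, th, tw)
    w = windows - windows.mean(axis=(2, 3), keepdims=True)
    num = np.einsum("ijkl,kl->ij", w, t)
    den = np.sqrt((w ** 2).sum(axis=(2, 3)) * (t ** 2).sum())
    score = np.divide(num, den, out=np.zeros_like(num), where=den > 0)  # flat windows score 0
    r, c = np.unravel_index(np.argmax(score), score.shape)
    return (int(r), int(c)), float(score[r, c])

rng = np.random.default_rng(0)
first_frame = rng.uniform(0, 255, size=(48, 64))
eye_template = first_frame[12:20, 30:40].copy()   # template "manually" cut from frame 1
next_frame = 0.8 * first_frame + 20.0             # same scene, different exposure
pos, score = ncc_match(next_frame, eye_template)
print(pos, score)
```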

Mehrubeoglu et al. [11] introduced an eye detection and tracking system that detects the eyes using template matching. The system uses a special customized smart camera, which is programmed to continuously track the user's eye movements until the user stops it. Once the eye is detected, the region of interest (ROI) containing only the eye is extracted to reduce the region that must be processed. Their work suggests that the algorithm is fast with acceptable performance, and it could be a useful feature to add to modern cameras. A drawback is that the experiments were not performed on a database containing different test subjects and conditions, which reduces the reliability of the results. In addition, the algorithm locates the eye coordinates but does not classify the gaze direction as left, right, up or down.

2. Eye Tracking Based on Corneal Reflection Points

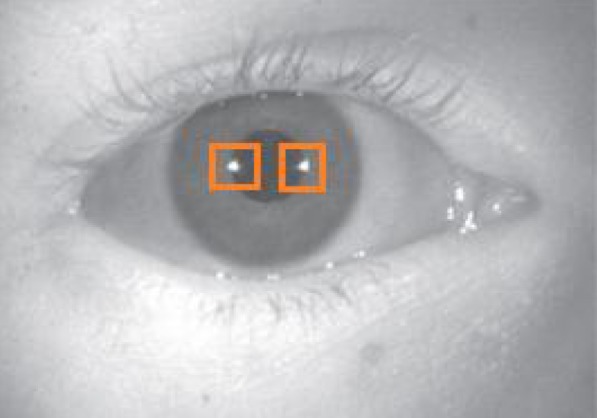

Many computer-vision-based eye trackers use light reflection points on the cornea to estimate the gaze direction. Fig. 1 shows the corneal reflection points in an eye image [12]. The corneal reflection is also known as the first Purkinje image. In this approach, the vector between the center of the pupil and the corneal reflections is used to compute the gaze direction. A simple calibration procedure for each individual is usually needed before the eye tracker is used [2]–[12].

Fig. 1. Corneal reflection points [12].
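The pupil-center/corneal-reflection approach and its per-user calibration can be sketched as a least-squares fit from pupil-glint vectors to screen coordinates. The second-order polynomial mapping is a common choice but an assumption here, as are the 3x3 calibration grid, the glint position and the synthetic "true" mapping used to generate targets.

```python
import numpy as np

def poly_terms(v):
    """Second-order polynomial terms of pupil-glint vectors v = (vx, vy), shape (n, 2)."""
    vx, vy = v[:, 0], v[:, 1]
    return np.column_stack([np.ones_like(vx), vx, vy, vx * vy, vx ** 2, vy ** 2])

def calibrate(pupils, glints, screen_points):
    """Least-squares fit from pupil-center/corneal-reflection vectors to screen coordinates."""
    coeffs, *_ = np.linalg.lstsq(poly_terms(pupils - glints), screen_points, rcond=None)
    return coeffs                                        # shape (6, 2)

def gaze_point(pupil, glint, coeffs):
    return poly_terms(np.atleast_2d(np.subtract(pupil, glint))) @ coeffs

# Hypothetical 9-point calibration: a 3x3 grid of pupil-glint vectors (pixels).
true_coeffs = np.array([[960.0, 540.0], [40.0, 2.0], [3.0, 35.0],
                        [0.5, 0.1], [0.8, 0.0], [0.0, 0.6]])
gx, gy = np.meshgrid([-5.0, 0.0, 5.0], [-4.0, 0.0, 4.0])
vectors = np.column_stack([gx.ravel(), gy.ravel()])
glints = np.tile([320.0, 240.0], (9, 1))
pupils = glints + vectors
screen = poly_terms(vectors) @ true_coeffs             # synthetic calibration targets

coeffs = calibrate(pupils, glints, screen)
print(gaze_point([322.0, 239.0], [320.0, 240.0], coeffs))
```

Using the pupil-glint difference rather than the raw pupil position partly cancels small head translations, because the pupil and the reflection move together.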

Yang et al. [13] proposed a scheme that uses the gray-level differences between the face, the pupils and the corneal reflection points for eye detection. The scheme was tested with a cross-ratio-invariance-based eye tracking system. The test included users wearing glasses and other accessories, and the results showed that the system can eliminate the optical reflections caused by accessories and glasses. The scheme prepares for gaze tracking with a preprocessing stage applied to cropped faces, which is particularly useful in applications that use a camera very close to the user. The results are not detailed and were not obtained on a database containing various test subjects under different conditions, which weakens the case for using the algorithm in real-world applications. In addition, the required CPU time was not addressed, so further evaluation is needed to determine whether the algorithm works in real-time applications.

Yang et al. [14] presented an algorithm based on the difference in gray level between the pupil region and the iris region. The image of the eye region is binarized and an estimated position of the pupil is detected. The exact position of the pupil is then found using the vertical and horizontal integral projections. The projection area contains the corneal glints and the pupil, and the pixels representing reflection points have the highest gray levels of all pixels. To apply the presented gaze tracking method, the corneal reflection points must have known coordinates. The system uses hardware that adds limitations and inflexibility to pupil detection. The algorithm was tested on images containing only part of the face, and it needs to be applied to different test subjects to establish its accuracy and performance. The required CPU time was not reported, so the algorithm's suitability for real-time applications cannot be judged. However, the experiments showed that the algorithm achieves reasonably high accuracy.
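Binarization followed by vertical and horizontal integral projections can be sketched as below. Taking the projection-weighted centroid as the pupil center, the fixed dark threshold and treating the brightest 2% of the intensity range as glints are assumptions, not details from [14]; the synthetic eye image is hypothetical.

```python
import numpy as np

def locate_pupil_and_glints(eye, pupil_thresh=60.0, glint_frac=0.98):
    """Pupil center from integral projections of a binarized eye image, plus glint pixels."""
    eye = np.asarray(eye, dtype=float)
    binary = (eye < pupil_thresh).astype(float)     # 1 = dark pupil pixel
    col_proj = binary.sum(axis=0)                   # vertical integral projection
    row_proj = binary.sum(axis=1)                   # horizontal integral projection
    cx = (col_proj * np.arange(eye.shape[1])).sum() / col_proj.sum()
    cy = (row_proj * np.arange(eye.shape[0])).sum() / row_proj.sum()
    # Corneal glints are taken to be the brightest pixels in the eye region.
    glints = np.argwhere(eye >= glint_frac * eye.max())
    return (float(cy), float(cx)), glints

yy, xx = np.mgrid[0:30, 0:40]
eye = np.full((30, 40), 120.0)                       # iris-level background
eye[(yy - 15) ** 2 + (xx - 20) ** 2 <= 25] = 20.0    # dark pupil, radius 5
eye[10:12, 26:28] = 255.0                            # 2x2 corneal glint
center, glints = locate_pupil_and_glints(eye)
print(center, glints.tolist())  # (15.0, 20.0) [[10, 26], [10, 27], [11, 26], [11, 27]]
```

Projections reduce a 2-D search to two 1-D profiles, which is why this kind of method is cheap; a glint lying inside the pupil would leave a hole in the binary mask and bias the centroid slightly.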

3. Eye Tracking Based on Shape

Another approach for eye detection and tracking is to find the location of the iris or the pupil based on their circular shape or using edge detection.

Chen and Kubo [15] proposed a technique in which face detection is followed by Gabor filtering. The potential face regions in the image are detected based on skin color. The eye candidate region is then determined automatically using the geometric structure of the face. Four Gabor filters with different orientations ($0$, $\pi/4$, $\pi/2$, $3\pi/4$) are applied to the eye candidate region. The pupil of the eye has no dominant orientation, so it can easily be detected by combining the responses of the four Gabor filters with a logical product (AND). The system uses a camera that is not head-mounted. The accuracy of the algorithm was not investigated and the required CPU time was not reported, which makes the algorithm less attractive for real-world applications than other algorithms.
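The logical-product idea can be sketched with four even-symmetric Gabor filters. A dark, roughly circular pupil responds strongly at every orientation, while an oriented structure such as an eyelid edge responds strongly at only some of them, so the AND of the four thresholded responses keeps the pupil. The kernel parameters (wavelength 8, sigma 4), the zero-mean cosine kernel and the relative threshold are assumptions, not values from [15]; the test image is synthetic.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gabor_kernel(theta, wavelength=8.0, sigma=4.0, half=12):
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    xr = x * np.cos(theta) + y * np.sin(theta)          # coordinate along the carrier
    k = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2)) * np.cos(2 * np.pi * xr / wavelength)
    return k - k.mean()                                 # zero mean: flat regions give ~0

def filter_response(img, kernel):
    half = kernel.shape[0] // 2
    windows = sliding_window_view(np.pad(img, half, mode="edge"), kernel.shape)
    # The kernel is point-symmetric, so this correlation equals a convolution.
    return np.einsum("ijkl,kl->ij", windows, kernel)

def pupil_mask(img, frac=0.25):
    thetas = [0.0, np.pi / 4, np.pi / 2, 3 * np.pi / 4]
    # Dark blobs give strongly negative responses; negate so they become large positives.
    responses = [-filter_response(img, gabor_kernel(t)) for t in thetas]
    thresh = frac * max(r.max() for r in responses)
    mask = np.ones(img.shape, dtype=bool)
    for r in responses:
        mask &= r > thresh                              # logical product of the four maps
    return mask

img = np.ones((64, 64))
yy, xx = np.mgrid[0:64, 0:64]
img[(yy - 20) ** 2 + (xx - 20) ** 2 <= 4] = 0.0         # small dark pupil (radius 2)
img[43:48, 12:52] = 0.0                                 # dark horizontal bar (eyelid-like)
mask = pupil_mask(img)
print(mask[20, 20], mask[45, 32])
```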

Kocejko et al. [16] proposed the Longest Line Detection (LLD) algorithm to detect the pupil position. The algorithm assumes that the pupil is approximately circular. The longest vertical and horizontal lines across the pupil are found, and the center of the longest of these lines is taken as the pupil center. The proposed eye tracking system requires inflexible hardware that is relatively difficult to install. The accuracy is not discussed, and the performance of the system might be affected by changes in illumination, shadows, noise and other effects, because the experiments were performed under special conditions without a variety of test samples in different conditions.
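The LLD step can be sketched on an already binarized pupil mask: scan every row and column for its longest run of pupil pixels and return the midpoint of the overall longest run. How [16] binarizes the eye image is not described here, so the synthetic disk mask is hypothetical.

```python
import numpy as np

def longest_run(line):
    """Return (length, start) of the longest run of True values in a 1-D boolean array."""
    best_len, best_start, run_start = 0, 0, None
    for i, v in enumerate(np.append(line, False)):   # sentinel closes a trailing run
        if v and run_start is None:
            run_start = i
        elif not v and run_start is not None:
            if i - run_start > best_len:
                best_len, best_start = i - run_start, run_start
            run_start = None
    return best_len, best_start

def lld_pupil_center(binary_pupil):
    """Center of the longest horizontal or vertical chord of the pupil mask."""
    best_len, best_center = 0, None
    for r, row in enumerate(binary_pupil):           # horizontal lines
        n, s = longest_run(row)
        if n > best_len:
            best_len, best_center = n, (r, s + (n - 1) / 2)
    for c, col in enumerate(binary_pupil.T):         # vertical lines
        n, s = longest_run(col)
        if n > best_len:
            best_len, best_center = n, (s + (n - 1) / 2, c)
    return best_center

yy, xx = np.mgrid[0:25, 0:30]
pupil = (yy - 12) ** 2 + (xx - 17) ** 2 <= 25       # binarized pupil, radius 5
print(lld_pupil_center(pupil))  # (12, 17.0)
```

For a circular pupil the longest chord is a diameter, so its midpoint is the center; an elongated or partly occluded pupil would bias the result, which fits the circularity assumption stated above.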

Khairosfaizal and Nor'aini [17] presented a straightforward eye tracking system based on the Circular Hough transform for eye detection in facial images. The face region is first detected by an existing face detection method; the eye is then searched for based on its circular shape in the two-dimensional image. Their work adds value to academic research but not yet to real-world applications.

Pranith and Srikanth [18] presented a method that detects the inner (pupil) boundary using the Circular Hough transform, whereas the outer iris boundary is detected by circular summation of intensity from the detected pupil center and radius. Because it is applied to cropped eye images, this algorithm can be used for iris localization in an iris recognition system. However, further analysis on a database containing varied images is needed to determine its accuracy and real-time performance.

Sundaram et al. [19] proposed an iris localization method that identifies the outer and inner boundaries of the iris. The procedure includes two basic steps: detection of edge points and the Circular Hough transform. Before these steps are applied, bounding boxes for the iris region and the pupil area are defined to reduce the complexity of the Hough transform, which uses a voting scheme that considers all edge points $(x, y)$ over the image for all possible combinations of center coordinates $(a, b)$ and different possible values of the radius $r$, i.e., all circles $(x - a)^2 + (y - b)^2 = r^2$. The algorithm is applicable where a camera close to the eye is used, such as in iris recognition systems. It was tested on the UBIRIS database, which contains images with different characteristics such as illumination changes. The algorithm is relatively fast for non-real-time applications, but it is not suitable for real-time eye tracking because of its complexity.
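The voting scheme of the Circular Hough transform described above can be sketched directly: every edge point $(x, y)$ votes for all centers $(a, b)$ at distance $r$, and the accumulator peak gives the circle. The angular sampling, the candidate radii and the synthetic edge map are assumptions; the full-image accumulator also shows why the bounding boxes used by Sundaram et al. help, since restricting $(a, b)$ shrinks it. In practice, OpenCV's `cv2.HoughCircles` provides an optimized implementation.

```python
import numpy as np

def circular_hough(edges, radii, n_angles=90):
    """Accumulate votes over centers (a, b) and radii r; return the strongest circle."""
    h, w = edges.shape
    ys, xs = np.nonzero(edges)
    angles = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    acc = np.zeros((len(radii), h * w), dtype=np.int64)
    for i, r in enumerate(radii):
        # Each edge point (x, y) votes for every center at distance r from it.
        a = np.round(xs[:, None] - r * np.cos(angles)).astype(int)
        b = np.round(ys[:, None] - r * np.sin(angles)).astype(int)
        inside = (a >= 0) & (a < w) & (b >= 0) & (b < h)
        np.add.at(acc[i], b[inside] * w + a[inside], 1)
    k, idx = np.unravel_index(np.argmax(acc), acc.shape)
    cy, cx = divmod(int(idx), w)
    return (cy, cx), radii[k], acc.reshape(len(radii), h, w)

# Synthetic edge map: a circle of radius 6 centered at row 20, column 25.
edges = np.zeros((40, 50), dtype=bool)
t = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
edges[np.round(20 + 6 * np.sin(t)).astype(int), np.round(25 + 6 * np.cos(t)).astype(int)] = True
center, radius, _ = circular_hough(edges, radii=[4, 5, 6, 7, 8])
print(center, radius)
```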

Alioua et al. [20] presented an algorithm that handles an important part of eye tracking: analyzing the eye state (open/closed) using iris detection. A gradient image is used to mark the edges of the eye. A horizontal projection is then computed to detect the upper and lower boundaries of the eye region, and the Circular Hough transform is used for iris detection. This algorithm can be useful for eye blink detection, especially since the possible output classes are limited to open, closed or not an eye. When used in a control application, it can increase the number of possible commands. Because eye-state analysis must be fast, reporting the required CPU time would have been useful, but it was not given.

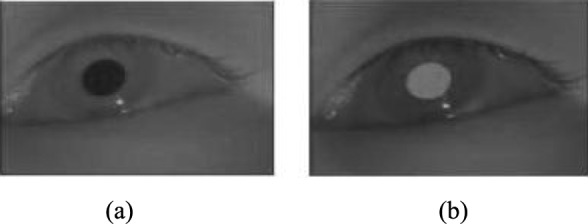

4. Eye Tracking Using Dark and Bright Pupil Effect

There are two illumination methods used in the literature for pupil detection: the dark pupil and the bright pupil method. In the dark pupil method, the location of a black pupil is determined in the eye image captured by the camera. This causes some issues when the user has dark brown eyes because of the low contrast between the brown iris and the black pupil. The bright pupil method uses the reflection of infrared light from the retina which makes the pupil appear white in the eye image. Fig. 2 shows the dark and bright pupil effect.

Fig. 2. (a) Dark and (b) Bright pupil effects [2].

Yoo et al. [21] proposed using cross-ratio-invariance for a gaze tracking algorithm. The proposed algorithm has been found to achieve very low accuracy. To enhance it, a virtual plane tangent to the cornea is added. The enhancement did not solve all issues as the system remains complex due to using two cameras to obtain the difference between dark and bright pupil images for pupil position detection [22].

5. Eye Tracking Using Eye Models

Eye models can also be used for eye tracking. Zhu and Ji [23] proposed two new schemes that allow natural head movement during eye tracking and reduce calibration of the eye tracking system to a single session for each new user. The first scheme estimates the 3-D eye gaze directly. The cornea of the eye is modeled as a convex mirror, and based on the properties of a convex mirror, the eye's 3-D optic axis is estimated. The visual axis represents the actual 3-D gaze direction of the user; it is determined once the angular deviation between the visual axis and the optic axis is known from calibration. The second scheme does not require estimating the 3-D eye gaze: the gaze point is determined implicitly using a gaze mapping function, which is updated automatically upon head movement using a dynamic computational head compensation model. Implementing the proposed schemes requires knowledge of eye anatomy and optics as well as image processing techniques. The schemes are complex and hence not applicable to real-time eye tracking; it would be beneficial if the algorithms, equations and processing phases were optimized. In addition, the system uses inflexible hardware, which is not preferred in real applications, and the schemes were not tested on a database containing various test subjects and conditions.

6. Hybrid Eye Tracking Techniques

A mix of different techniques can be used for eye tracking. Huang et al. [24] suggested an algorithm that detects the eye pupil based on intensity, shape and size. Special infrared (IR) illumination is used so that the eye pupils appear brighter than the rest of the face, and pupil intensity is used as the primary feature in pupil detection. However, other bright objects might exist in the image; to separate the pupils from them, other pupil properties such as size and shape can be used. A support vector machine then selects the eye locations from the detected candidates. The hardware used, including the IR LEDs and the IR camera, is inexpensive. The algorithm has been used in a driver fatigue detection application. It could become a starting point for real-time eye tracking systems if it is tested further with different test subjects and different classification functions to obtain a well-optimized eye tracking algorithm. The required CPU time was not reported, although it is important in driver fatigue detection, which is a real-time application.

Li and Wee [12] proposed an efficient pupil detection method based on corneal reflection and energy-controlled iterative curve fitting. Ellipse fitting is used to obtain the pupil boundary, through a learning algorithm that performs iterative ellipse fitting controlled by a gradient energy function. The method uses special hardware implemented specifically for this algorithm. It has been used in a field-of-view estimation application and can be used in other applications.

Coetzer and Hancke [25] proposed an eye tracking system that uses an IR camera and IR LEDs. It captures the bright and dark pupil images in quick succession, so that they are effectively the same image taken under different illumination conditions. Two groups of infrared LEDs are synchronized with the IR camera: the first is placed close to the camera's lens to obtain the bright pupil effect, and the second about 19.5 cm away from the lens to produce the dark pupil effect. The two images are subtracted from each other, and a binary image is produced by thresholding the difference; this technique was presented by Hutchinson [26]. The white blobs in the binary image are mapped to the original dark pupil image, and the resulting sub-images are potential eye candidates, which are classified as eyes or non-eyes. Artificial neural networks (ANN), support vector machines (SVM) and adaptive boosting (AdaBoost) were considered as classification techniques in this work. The system leaves room for further improvement. It was used in a driver fatigue monitoring system, and it does not require calibration for each user because it uses a dataset for training and feature extraction. The best features to use and a flexible hardware implementation can be investigated further so that the algorithm can become part of a larger eye tracking or eye location classification system. The images used in the experiments were eye images from which the background and noise had been eliminated, which lowers expectations of the algorithm's performance in real applications.
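The difference-image step described above (subtract the dark pupil image from the bright one, threshold, and collect the white blobs as eye candidates) can be sketched as follows. The threshold, the minimum blob area, 4-connectivity and the synthetic frame pair are hypothetical choices; the eye/non-eye classifier (ANN, SVM or AdaBoost in [25]) is not shown.

```python
import numpy as np
from collections import deque

def pupil_candidates(bright, dark, thresh=40.0, min_area=4):
    """Bounding boxes (y0, x0, y1, x1) of white blobs in the thresholded difference image."""
    binary = (np.asarray(bright, dtype=float) - np.asarray(dark, dtype=float)) > thresh
    seen = np.zeros(binary.shape, dtype=bool)
    h, w = binary.shape
    boxes = []
    for sy, sx in zip(*np.nonzero(binary)):
        if seen[sy, sx]:
            continue
        # Flood-fill one 4-connected white blob.
        seen[sy, sx] = True
        queue, ys, xs = deque([(sy, sx)]), [], []
        while queue:
            y, x = queue.popleft()
            ys.append(y)
            xs.append(x)
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and binary[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        if len(ys) >= min_area:             # drop tiny noise blobs
            boxes.append((int(min(ys)), int(min(xs)), int(max(ys)), int(max(xs))))
    return boxes

# Synthetic frame pair: the pupils glow only under on-axis (bright-pupil) illumination.
dark = np.full((40, 40), 80.0)
bright = dark.copy()
bright[9:12, 9:12] = 200.0      # left pupil
bright[9:12, 29:32] = 200.0     # right pupil
bright[30, 5] = 180.0           # single-pixel noise
boxes = pupil_candidates(bright, dark)
print(boxes)  # [(9, 9, 11, 11), (9, 29, 11, 31)]
# Each box would then be cropped from `dark` and passed to an eye/non-eye classifier.
```

Because both frames show the same scene, everything except the retinal reflection cancels in the difference, which is what makes this candidate step so selective.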

III. Eye Tracking Applications

The field of research in eye tracking has been very active due to the significant number of applications that can benefit from robust eye tracking methods. Many application areas employ eye tracking techniques. In the following, some of these areas are described.

A. Eye Control for Accessibility and Assistive Technology

People who have lost control over all their muscles and are no longer able to perform voluntary movements as a result of diseases or accidents can benefit greatly from eye tracking systems to interact and communicate with the world in daily life. Eye tracking systems provide many options for these individuals, such as an eye-typing interface with text-to-speech output. They also enable eye control, for example directing electric wheelchairs or switching on the TV or other devices.

Human computer interaction with graphical user interface actions or events may be classified into two main categories [27]:

- Pointing: moving the pointer over an object on the screen, such as text or an icon;
- Selection: an action interpreted as selection of the object pointed to.

Kocejko et al. [16] introduced the “Eye Mouse” which people with severe disabilities can use. The mouse cursor position is determined based on the acquired information about the eye position towards the screen which provides the ability to operate a personal computer by people with severe disabilities.

Raudonis et al. [4] dedicated their eye tracking system to the assistance of people with disabilities. The system was used with three on-line applications. The first controls a mobile robot in a maze. The second application was “Eye Writer” which is a text-writing program. A computer game was the third application.

Fu and Yang [10] suggested employing information obtained from tracking the eye gaze to control a display based on video. Eye gaze is estimated and the display is controlled accordingly.

Lupu et al. [27] proposed “Asistsys” which is a communication system for patients suffering from neuro-locomotor disabilities. This system assists patients in expressing their needs or desires.

B. E-Learning

E-learning systems are computer-based teaching systems and are now very common. However, despite the fact that users are usually accustomed to machine interactions, the learning experience can be quite different. In particular, the “emotional” part is significant in the interaction between teacher and learner and it is missing in computer based learning processes.

Calvi et al. [28] presented “e5Learning,” an e-learning environment that exploits eye data to track user activities, behaviors and emotional or “affective” states. Two main user states were considered: “high workload or non-understanding” and “tiredness.” The author or teacher of the course can specify how long the user should look at certain parts of the course content, whether textual or non-textual.

Porta et al. [29] sought to build an e-learning platform which determines whether a student is having difficulty understanding some content or is tired or stressed based on the interpreted eye behavior.

C. Car Assistant Systems

Research has been done on applying eye tracking methods in the vehicle industry to develop monitoring and assistance systems for cars. For example, an eye tracker could be used in cars to warn drivers when they start getting tired or fall asleep while driving.

Driver fatigue can be detected by analyzing blink threshold, eye state (open/closed) and for how long the driver's gaze stays in the same direction. Many eye tracking methods were used in this area of application [24], [25], [30].

D. Iris Recognition

Iris recognition is being widely used for biometric authentication. Iris localization is an important and critical step upon which the performance of an iris recognition system depends [18]–[19].

E. Field of View Estimation

Another interesting application of eye tracking systems is that these systems can serve as an effective tool in optometry to assist in identifying the visual field of any individual, especially identifying blind spots of vision.

Li and Wee [12] used eye tracking to estimate the field of view to be used for augmented video/image/graphics display.

IV. Head Movement Detection

The growing popularity of the wide range of applications that involve head movement detection, such as assistive technology, teleconferencing and virtual reality, has increased the amount of research aimed at providing robust and effective techniques for real-time head movement detection and tracking.

During the past decade, real-time head movement detection has received much attention from researchers, and there are many different approaches to head pose estimation. All of the methods investigated require high-performance computing hardware and cannot be implemented on low-computational hardware such as a microcontroller.

A. Computer-Vision-Based Head Movement Detection

One approach to head movement detection is computer-vision-based. Liu et al. [31] introduced a video-based technique for estimating head pose and applied it to a real-world image processing problem: attention recognition for drivers. The technique estimates the relative pose between adjacent views in consecutive video frames. Scale-Invariant Feature Transform (SIFT) descriptors are used to match corresponding feature points between two adjacent views. After the feature points are matched, the relative pose angle is found using two-view geometry. With this mathematical solution, which can be applied in the image-processing field in general, the x, y, and z coordinates of the head position are determined. The accuracy and performance of the algorithm were not reported in the work, so more work is needed to show that the algorithm is applicable in real applications.
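The frame-to-frame structure of this approach can be illustrated with a small sketch: assuming each adjacent-view step has already produced a relative rotation matrix (for example by decomposing an essential matrix, which is not shown), the rotations are chained and converted to Euler angles. The helper names and the Z-Y-X angle convention are assumptions; with a camera whose y axis is vertical, the rotation about y corresponds to a left/right head turn.

```python
import math


def matmul3(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rot_y(degrees):
    """Rotation about the y axis; used here only to build example inputs."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def accumulate(relative_rotations):
    """Chain frame-to-frame rotations R_1, R_2, ... into R_n @ ... @ R_1."""
    total = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    for r in relative_rotations:
        total = matmul3(r, total)
    return total


def euler_zyx_degrees(r):
    """Angles (about z, about y, about x) for R = Rz(a) @ Ry(b) @ Rx(c), ignoring gimbal lock."""
    b = math.asin(max(-1.0, min(1.0, -r[2][0])))
    a = math.atan2(r[1][0], r[0][0])
    c = math.atan2(r[2][1], r[2][2])
    return math.degrees(a), math.degrees(b), math.degrees(c)


# Usage: three adjacent-frame estimates of a 10-degree turn about the y axis.
print(euler_zyx_degrees(accumulate([rot_y(10.0)] * 3)))  # approximately (0.0, 30.0, 0.0)
```

Chaining relative estimates accumulates their errors, which is one reason the accuracy of such a scheme needs to be measured before real use.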

Murphy-Chutorian and Trivedi [32] presented a static head-pose estimation algorithm and a visual 3-D tracking algorithm based on image processing and pattern recognition. The two algorithms are used in a real-time system that estimates the position and orientation of the user's head. The system consists of three modules: it detects the head, provides initial estimates of the pose, and continuously tracks the head position and orientation in six degrees of freedom. The head detection module uses Haar-wavelet AdaBoost cascades. The initial pose estimation module uses support vector regression (SVR) with localized gradient orientation (LGO) histograms as its input. The tracking module estimates the 3-D movement of the head with an appearance-based particle filter. The algorithm was used to implement a driver awareness monitoring application based on head pose estimation, and it should be effective in similar real-world applications. However, the software needs further optimization before it is deployed in real applications. This algorithm can be considered a good addition to head tracking systems.
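The idea behind the tracking module can be sketched with a one-dimensional bootstrap particle filter over head yaw. This is not the authors' appearance-based filter: a Gaussian likelihood around a per-frame yaw measurement stands in for the appearance score, and the particle count, noise levels, and function name are hypothetical.

```python
import math
import random


def track_yaw(measurements, n_particles=500, motion_std=2.0, meas_std=5.0, seed=0):
    """Estimate yaw (degrees) per frame with a bootstrap particle filter."""
    rng = random.Random(seed)
    particles = [rng.uniform(-90.0, 90.0) for _ in range(n_particles)]
    estimates = []
    for z in measurements:
        # Predict: random-walk motion model for head yaw.
        particles = [p + rng.gauss(0.0, motion_std) for p in particles]
        # Weight: Gaussian likelihood standing in for an appearance-match score.
        weights = [math.exp(-0.5 * ((z - p) / meas_std) ** 2) for p in particles]
        total = sum(weights)
        if total == 0.0:
            weights = [1.0] * n_particles  # degenerate case: fall back to uniform weights
            total = float(n_particles)
        weights = [w / total for w in weights]
        # Estimate: posterior mean of the particle cloud.
        estimates.append(sum(w * p for w, p in zip(weights, particles)))
        # Resample in proportion to the weights.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates


# Usage: a head held at 20 degrees of yaw for 30 frames.
print(round(track_yaw([20.0] * 30)[-1], 1))
```

A full 6-DOF tracker would carry position and all three angles in each particle and score particles by comparing rendered or warped appearance with the image.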

Kupetz et al. [33] implemented a head movement tracking system using an IR camera and IR LEDs. It tracks a 2×2 infrared LED array attached to the back of the head. LED motion is processed using light tracking based on a video analysis technique in which each frame is segmented into regions of interest and the movement of key feature points is tracked between frames [34]. The system was used to control a power wheelchair. The LEDs require a power supply, which could be wired or battery-based. The system could be improved to detect as many distinct head movements as possible for use in different applications. More experiments are needed to establish its accuracy, in addition to further theoretical analysis.
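A simplified version of the LED tracking step might threshold each IR frame, take the centroid of the bright pixels as the position of the LED array, and report its displacement between frames. This sketch treats the whole array as a single blob, which simplifies the multi-resolution blob tracking in [34]; the threshold and the helper names are assumptions.

```python
def bright_centroid(frame, threshold=200):
    """Centroid (x, y) of pixels at or above `threshold`, or None if there are none."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


def led_array_motion(prev_frame, cur_frame, threshold=200):
    """Displacement of the LED-array centroid between two IR frames."""
    a = bright_centroid(prev_frame, threshold)
    b = bright_centroid(cur_frame, threshold)
    if a is None or b is None:
        return None  # LEDs not visible in one of the frames
    return b[0] - a[0], b[1] - a[1]


def synthetic_frame(leds, width=10, height=10):
    """Hypothetical test frame: dark background with saturated LED pixels."""
    frame = [[0] * width for _ in range(height)]
    for x, y in leds:
        frame[y][x] = 255
    return frame


# Usage: a 2x2 LED array that moves two pixels to the right.
before = synthetic_frame([(2, 2), (4, 2), (2, 4), (4, 4)])
after = synthetic_frame([(4, 2), (6, 2), (4, 4), (6, 4)])
print(led_array_motion(before, after))  # (2.0, 0.0)
```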

Siriteerakul et al. [35] proposed an effective method that tracks changes in head direction using texture detection in orientation space on low-resolution video. The head direction is determined by using a Local Binary Pattern (LBP) to compare the texture of the head image in the current video frame with several textures obtained by rotating the head image from the previous frame by known angles. The method can be used in real-time applications. However, it can find the rotation about only one axis while the others are fixed: it can determine rotation about the yaw axis (left and right rotations) but not neck flexion.
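The texture comparison can be sketched with the basic 3×3 LBP operator and a chi-square distance between normalized LBP histograms. The candidate textures (the previous head image rotated by known angles) are assumed to be produced elsewhere, and the function names are hypothetical; this is not the authors' implementation.

```python
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(img):
    """Basic 3x3 LBP code for every interior pixel (bit set if neighbor >= center)."""
    codes = []
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            center = img[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(NEIGHBORS):
                if img[y + dy][x + dx] >= center:
                    code |= 1 << bit
            codes.append(code)
    return codes


def lbp_histogram(img):
    """Normalized 256-bin histogram of LBP codes."""
    codes = lbp_codes(img)
    if not codes:
        raise ValueError("image must be at least 3x3")
    hist = [0.0] * 256
    for code in codes:
        hist[code] += 1.0
    return [h / len(codes) for h in hist]


def chi_square(h1, h2):
    """Chi-square distance between two histograms (empty bins are skipped)."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


def best_rotation(current, candidates):
    """Return the angle whose rotated previous-frame texture best matches `current`.

    `candidates` maps a rotation angle (degrees) to the previous head image
    rotated by that angle; producing those images is outside this sketch.
    """
    target = lbp_histogram(current)
    return min(candidates, key=lambda angle: chi_square(target, lbp_histogram(candidates[angle])))


print(lbp_codes([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # [120]
```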

Song et al. [36] introduced a head and mouth tracking method using image processing techniques in which the face is first detected using an AdaBoost algorithm. Head movements are then detected by analyzing the location of the face. Five head motions were defined as the basis of head movements. The geometric center of the detected face area is calculated and taken as the head's central coordinates, which can be analyzed over time to trace the motion of the head. The camera is not head-mounted. The method was found to be fast, which makes it suitable for simple applications for people with disabilities. However, its accuracy and performance were not reported.
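The face-center idea above can be sketched as follows, assuming the face detector returns an (x, y, width, height) box per frame. The five motion labels (still, left, right, up, down) and the dead-zone size are assumptions, since the five motions are not listed here; directions are in image coordinates, so left and right are mirrored relative to a user facing the camera.

```python
def face_center(box):
    """Geometric center of an (x, y, width, height) face box."""
    x, y, w, h = box
    return x + w / 2.0, y + h / 2.0


def classify_head_motion(prev_box, cur_box, dead_zone=5.0):
    """Label the motion between two frames as still, left, right, up or down (image coordinates)."""
    (x0, y0), (x1, y1) = face_center(prev_box), face_center(cur_box)
    dx, dy = x1 - x0, y1 - y0
    if max(abs(dx), abs(dy)) < dead_zone:
        return "still"
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"  # image y grows downward


print(classify_head_motion((100, 100, 80, 80), (120, 102, 80, 80)))  # right
```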

Jian-zheng and Zheng [37] presented a mathematical approach using image processing techniques to trace head movements. They suggested that the pattern of head movements can be determined by tracing facial feature points, such as the nostrils, because the movement of such a feature point closely follows the movement of the head. The feature points are traced with the Lucas-Kanade (LK) algorithm to find the pattern of head movements, and GentleBoost classifiers are trained to identify the head direction from the coordinates of the nostrils in a video frame. This technique is well suited to academic research because it is theoretically grounded, but it requires more testing on different subjects and on a known database containing varied samples in order to establish its reliability in applications.
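For a single feature point such as a nostril, the Lucas-Kanade step reduces to solving a 2×2 least-squares system built from image gradients in a small window. The sketch below is a plain, single-scale version with central-difference gradients, written for illustration; the window size and function name are assumptions, and the GentleBoost classification stage is not shown.

```python
def lucas_kanade_point(prev, cur, cx, cy, half_window=2):
    """Estimate the (u, v) displacement of the point (cx, cy) between two grayscale frames.

    Solves [[sum Ix^2, sum IxIy], [sum IxIy, sum Iy^2]] [u, v]^T = -[sum IxIt, sum IyIt]^T
    over a (2*half_window+1)^2 window. The window plus a one-pixel border must lie inside the image.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half_window, cy + half_window + 1):
        for x in range(cx - half_window, cx + half_window + 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0  # central differences
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0
            it = cur[y][x] - prev[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None  # aperture problem: the window does not constrain both directions
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


# Usage: a smooth synthetic frame shifted half a pixel to the right.
frame0 = [[float(x * x + y * y) for x in range(20)] for y in range(20)]
frame1 = [[(x - 0.5) ** 2 + y * y for x in range(20)] for y in range(20)]
print(lucas_kanade_point(frame0, frame1, 10, 10))  # close to (0.5, 0.0)
```

Practical trackers add image pyramids and iterative refinement so that larger motions can be followed.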

Zhao and Yan [38] proposed a head orientation estimation scheme based on pattern recognition and image processing techniques. It uses artificial neural networks to classify head orientation. The face is first detected by adopting the YCbCr skin detection method. Then k key points that represent different head orientations are extracted and labeled. The coordinates and local textures of the labeled key points are used as the input feature vector of the neural networks. Head orientation estimation systems must have high real-time performance to be applicable in real-time applications. This scheme needs more optimization to be closer to real-time applications.
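The skin-detection step can be sketched as an RGB-to-YCbCr conversion (full-range BT.601 coefficients, as used in JPEG) followed by fixed thresholds on the chrominance channels. The Cb and Cr ranges below are commonly cited values, not ones reported by Zhao and Yan, and would need tuning for a given camera and lighting.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JPEG-style) conversion of 8-bit RGB values."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def is_skin(pixel, cb_range=(77, 127), cr_range=(133, 173)):
    """Chrominance-only skin test; luminance Y is ignored to reduce lighting sensitivity."""
    _, cb, cr = rgb_to_ycbcr(*pixel)
    return cb_range[0] <= cb <= cb_range[1] and cr_range[0] <= cr <= cr_range[1]


def skin_mask(image):
    """Boolean mask for an image given as rows of (R, G, B) tuples."""
    return [[is_skin(p) for p in row] for row in image]


print(skin_mask([[(224, 172, 140), (255, 255, 255)]]))  # [[True, False]]
```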

Berjón et al. [39] introduced a system that combines alternative human-computer interfaces, including head movements, voice recognition and mobile devices. The head movement detection part uses an RGB camera and image processing techniques, combining Haar-like features with an optical flow algorithm. The Haar-like features are used to detect the position of the face, and the optical flow detects changes in the position of the detected face within the image. The analysis of the system is shallow: it provides no mathematical proofs or experimental tests to support its use in real-world applications.
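Haar-like features are usually evaluated with an integral image, so that any rectangle sum costs four lookups. The sketch below shows that mechanism and one two-rectangle feature; the feature geometry and function names are illustrative assumptions, not Berjón et al.'s detector.

```python
def integral_image(img):
    """Summed-area table: ii[y][x] is the sum of img over rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_vertical(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: upper half minus lower half of the window."""
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)


# Usage: a darker band (e.g., an eye region) above a brighter band (e.g., cheeks).
patch = [[10] * 4, [10] * 4, [50] * 4, [50] * 4]
print(two_rect_vertical(integral_image(patch), 0, 0, 4, 4))  # -320
```

A detector such as a Viola-Jones cascade evaluates many such features at many positions and scales and thresholds them in boosted stages.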

Zhao et al. [40] presented another image-processing-based head movement detection method that also uses the Lucas-Kanade algorithm. To identify head movements accurately, the face is first detected, then the nostrils are located, and the Lucas-Kanade algorithm is used to track the optical flow of the nostrils. However, the detected face position in the video may be approximate, so the absolute coordinates of the feature points might not reflect the head movement accurately. The authors therefore used the inter-frame difference in the coordinates of the feature points to represent head movement. This approach is fast and applicable in head motion recognition systems. Before it can be considered for real applications, more experiments are required on datasets containing different subjects and conditions, especially since the work did not address different camera viewing angles or ordinary image noise.
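The inter-frame-difference idea can be sketched by differencing the tracked nostril coordinates between consecutive frames and counting direction reversals along each axis: repeated horizontal reversals suggest a head shake, vertical ones a nod. The gesture labels, step threshold and reversal count are hypothetical choices, not parameters from [40].

```python
def frame_differences(points):
    """Inter-frame differences (dx, dy) of a tracked feature point such as a nostril."""
    return [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(points, points[1:])]


def count_reversals(steps, min_step):
    """Count sign changes among steps whose magnitude is at least `min_step`."""
    signs = [1 if s > 0 else -1 for s in steps if abs(s) >= min_step]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


def classify_gesture(points, min_step=1.0, min_reversals=2):
    """Return 'shake', 'nod' or 'none' from a sequence of (x, y) feature positions."""
    diffs = frame_differences(points)
    horizontal = count_reversals([dx for dx, _ in diffs], min_step)
    vertical = count_reversals([dy for _, dy in diffs], min_step)
    if max(horizontal, vertical) < min_reversals:
        return "none"
    return "shake" if horizontal > vertical else "nod"


# Usage: the nostril position swings left and right across seven frames.
print(classify_gesture([(100, 50), (105, 50), (110, 50), (105, 50), (100, 50), (105, 50), (110, 50)]))  # shake
```

Because only differences are used, a constant offset in the detected face position cancels out, which is the motivation given above.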

Xu et al. [41] presented a method that recovers the 3-D head pose from video using a 3-D cross model in order to track continuous head movement. The model is projected onto an initial template to approximate the head and is used as a reference. Full head motion can then be detected in subsequent video frames using the optical flow method. The camera is not head-mounted. The algorithm has high complexity, which makes it more useful in academic research; further research, and possibly applications, could build on the findings of this work.

B. Acoustic-Signal-Based Methods

Some head direction estimation systems localize the user's voice source (i.e., their mouth) to estimate head direction.

Sasou [42] proposed an acoustic-based head direction detection method. The method uses a microphone array that can localize the source of a sound. For each head orientation, the localized positions of the sounds generated by the user fall within a distinct area. Hence, if the boundaries between these areas are defined, the head direction can be estimated by determining which area the generated sound falls in. The work introduced a novel power wheelchair control system based on head orientation estimation. The system was enhanced with noise removal using back microphones. In real-world applications, this algorithm is very limited and can detect only three movements. Thus, it needs to be combined with other alternative control methods to increase the number of commands available to control the chair. In addition, more tests and experimental analysis are needed to establish the reliability and usability of the system, especially in the presence of real-world noise.
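The region-based decision can be sketched by converting the localized sound position into an azimuth relative to the user and comparing it with fixed boundaries. The coordinate frame (+y straight ahead, +x to the user's right), the three labels and the ±20° boundaries are hypothetical; in [42] the regions come from the measured distribution of localized positions.

```python
import math


def sound_azimuth_deg(source_xy, head_xy=(0.0, 0.0)):
    """Azimuth of a localized sound source: 0 deg straight ahead (+y), positive to the right (+x)."""
    dx = source_xy[0] - head_xy[0]
    dy = source_xy[1] - head_xy[1]
    return math.degrees(math.atan2(dx, dy))


def head_direction(source_xy, head_xy=(0.0, 0.0), left_boundary=-20.0, right_boundary=20.0):
    """Assign the localized position to one of three hypothetical direction regions."""
    azimuth = sound_azimuth_deg(source_xy, head_xy)
    if azimuth < left_boundary:
        return "left"
    if azimuth > right_boundary:
        return "right"
    return "front"


print(head_direction((0.2, 0.1)))  # right
```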

C. Accelerometer and Gyro-Sensor Based Methods

Many sensors such as gyroscopes and accelerometers can be used in head movement detection systems to obtain information on head movements.

King et al. [43] implemented a hands-free head movement classification system that uses pattern recognition techniques, enhanced with mathematical refinements. A Neural Network with the Magnified Gradient Function (MGF) is used. The MGF magnifies the first-order derivative of the activation function to increase the rate of convergence while still guaranteeing convergence. A dual-axis accelerometer mounted inside a hat was used to collect head movement data. Each final data sample is obtained by concatenating the two channels of the dual-axis accelerometer into a single stream, which is fed to the network with no additional pre-processing. The proposed system needs more experiments before it can move from a theoretical result to use in real-world applications in different scenarios. No application based on the proposed method was suggested.
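Our reading of the MGF idea is that the activation derivative f' in the back-propagated error is replaced by (f')^(1/S) with S ≥ 1, which enlarges the small derivatives of a saturated sigmoid. The single-neuron sketch below illustrates that reading together with the channel concatenation described above; the learning rate, S and the function names are assumptions, and this is not the authors' network.

```python
import math


def concat_channels(x_channel, y_channel):
    """Join the two accelerometer channels into one input vector, with no other pre-processing."""
    return list(x_channel) + list(y_channel)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def magnified_derivative(output, s=2.0):
    """Sigmoid derivative f(1 - f) raised to the power 1/S (S >= 1; S = 1 is plain back-propagation)."""
    if s < 1.0:
        raise ValueError("S must be >= 1")
    return (output * (1.0 - output)) ** (1.0 / s)


def train_step(weights, bias, inputs, target, lr=0.5, s=2.0):
    """One delta-rule update of a single sigmoid neuron using the magnified derivative."""
    out = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
    delta = (target - out) * magnified_derivative(out, s)
    new_weights = [w + lr * delta * x for w, x in zip(weights, inputs)]
    return new_weights, bias + lr * delta


# Usage: with S = 2 a near-saturated output still receives a sizeable gradient.
print(magnified_derivative(0.99, s=1.0), magnified_derivative(0.99, s=2.0))
```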

A similar method was presented by Nguyen et al. [44]. It detects the movement of a user's head by applying pattern recognition techniques to data collected from a dual-axis accelerometer. An optimized Bayesian Neural Network (a neural network trained within a Bayesian framework) is used in the classification process, where each head movement is classified into one of four gestures. Using the Bayesian Neural Network improved performance compared with other pattern-recognition-based head movement detection techniques. However, the algorithm still needs more experiments to be usable in real-world applications, and no possible applications were mentioned.

Manogna et al. [45] used an accelerometer fixed on the user's forehead. The accelerometer senses the tilt resulting from the user's head movement. This tilt corresponds to an analog voltage that can be used to generate control signals. The detection method was implemented entirely in simulation, which provides a good proof of concept. However, the work lacks an actual accuracy analysis and an investigation of real-time applicability. The system is very flexible and can be applied in various applications.
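The voltage-to-tilt step can be sketched for an analog accelerometer axis: subtract the zero-g output voltage, divide by the sensitivity to get the gravity component in g, and take the arcsine. The 1.65 V zero-g level, 0.30 V/g sensitivity, 15° threshold and command names are hypothetical values, not parameters from [45].

```python
import math


def voltage_to_tilt_deg(voltage, zero_g_voltage=1.65, volts_per_g=0.30):
    """Tilt angle from one analog accelerometer axis, assuming the head is otherwise still."""
    g = (voltage - zero_g_voltage) / volts_per_g
    g = max(-1.0, min(1.0, g))  # clamp: noise or motion can push readings past 1 g
    return math.degrees(math.asin(g))


def tilt_to_command(pitch_voltage, roll_voltage, threshold_deg=15.0):
    """Turn the dominant tilt into a discrete control signal (hypothetical command set)."""
    pitch = voltage_to_tilt_deg(pitch_voltage)
    roll = voltage_to_tilt_deg(roll_voltage)
    if abs(pitch) < threshold_deg and abs(roll) < threshold_deg:
        return "stop"
    if abs(pitch) >= abs(roll):
        return "forward" if pitch > 0 else "backward"
    return "right" if roll > 0 else "left"


print(tilt_to_command(1.80, 1.65))  # forward
```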

Kim et al. [46] presented a head tracking system in which the head pose is estimated from data acquired with a gyro sensor. By applying an integral operation, the angular velocity obtained from the sensor is converted to angles. The system uses relative coordinates in head pose estimation instead of absolute coordinates. The proposal included a practical solution: a computer mouse built on simple hardware, which makes the system applicable in the real world. However, it needs more theoretical support, more experiments, and an accuracy analysis. The hardware implemented for the system could also be improved, as it is not very comfortable for the user.
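The integration step can be sketched as trapezoidal integration of sampled angular velocity, followed by mapping the change in angle between samples to a relative cursor displacement. The sampling interval, gain, dead zone and sign convention are assumptions. Integrated gyro angles drift over time; relative control and a dead zone reduce, but do not remove, the effect of that drift on the cursor.

```python
def integrate_rates(rates_dps, dt):
    """Trapezoidal integration of angular-velocity samples (deg/s) into angles (deg) relative to the first sample."""
    angles = [0.0]
    for previous, current in zip(rates_dps, rates_dps[1:]):
        angles.append(angles[-1] + 0.5 * (previous + current) * dt)
    return angles


def angles_to_mouse_deltas(yaw_deg, pitch_deg, pixels_per_degree=20.0, dead_zone_deg=0.05):
    """Map the change in head angle between samples to relative cursor steps (dx, dy)."""
    deltas = []
    for i in range(1, len(yaw_deg)):
        d_yaw = yaw_deg[i] - yaw_deg[i - 1]
        d_pitch = pitch_deg[i] - pitch_deg[i - 1]
        dx = 0 if abs(d_yaw) < dead_zone_deg else round(d_yaw * pixels_per_degree)
        # Assumed convention: positive pitch = head up, which moves the cursor up (screen y decreases).
        dy = 0 if abs(d_pitch) < dead_zone_deg else round(-d_pitch * pixels_per_degree)
        deltas.append((dx, dy))
    return deltas


# Usage: a constant 10 deg/s turn sampled at 100 Hz for one second.
yaw = integrate_rates([10.0] * 101, dt=0.01)
print(round(yaw[-1], 6))  # 10.0
```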

D. Hybrid Head Tracking Techniques

A combination of different techniques can be used in head tracking systems. Satoh et al. [47] proposed a head tracking method that uses a gyroscope mounted on a head-mounted display (HMD) and a fixed bird's-eye view camera that observes the HMD from a third-person viewpoint. The gyroscope measures the orientation of the view camera in order to reduce the number of parameters needed for pose estimation. The bird's-eye view camera captures images of the HMD, and, to find the remaining parameters needed for head pose estimation, key feature points are detected and processed to obtain their coordinates. Requiring a fixed camera, a customized marker, a gyroscope sensor and a calibration process makes this proposal impractical for general head tracking tasks. The time complexity of the algorithm has not been investigated, and the paper suggests no application, which leaves it some distance from use in real-world applications.

V. Head Movement Detection Applications

A. Accessibility and Assistive Technology

Head movement has been found to be a natural way of interaction. It can be used as an alternative control method and provides accessibility for users when used in human computer interface solutions.

Sasou [42] introduced a wheelchair controlled by an acoustic-based head direction estimation scheme. The sounds the user makes need only be breathing sounds, and the direction of the head controls the direction of the wheelchair. King et al. [43] also presented a head movement detection system that provides hands-free control of a power wheelchair.

Song et al. [36] mapped combinations of head and mouth movements to different mouse events, such as move, click, and click-and-drag. People with disabilities who cannot use a traditional keyboard or mouse can benefit from this system.

B. Video Surveillance

Video surveillance systems are now an essential part of daily life. Xie et al. [48] presented a video-based system that captures the face or head with a single surveillance camera and applies head tracking to detect humans in the video scene.

C. Car Assistant Systems

Recognizing the driver's attention plays a significant role in driver assistance systems, since it allows the system to detect and help prevent driver distraction. The driver's head direction can indicate where his attention is directed. Liu et al. [31] used head pose detection to recognize driver attention, and Lee et al. [49] introduced a system that detects when the driver becomes drowsy based on his head movement.

VI. Combining Eye Tracking and Head Movement Detection

Some research has been done on combining eye tracking and head movement detection. Kim et al. [50] presented a head and eye tracking system that uses the epipolar method along with feature point matching to estimate the position of the head and its degree of rotation. The feature points are high-brightness LEDs on a helmet. For eye tracking, the system uses an LED for constant illumination and a Kalman filter for pupil tracking. The work needs more theoretical support. In addition, no optimization of the algorithm is mentioned, and the required CPU time is not reported. The system also has substantial hardware requirements, which make it relatively expensive to implement.
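Pupil tracking with a Kalman filter can be sketched with a constant-velocity model applied independently to each image axis. The noise parameters, initial uncertainty and class name are hypothetical, and Kim et al.'s actual state model is not described in the text.

```python
class ConstantVelocityKalman1D:
    """Kalman filter with state [position, velocity] along one image axis."""

    def __init__(self, initial_position=0.0, q=1.0, r=4.0):
        self.x = [initial_position, 0.0]       # state estimate
        self.P = [[100.0, 0.0], [0.0, 100.0]]  # state covariance (large initial uncertainty)
        self.q = q                             # process-noise intensity (hypothetical)
        self.r = r                             # measurement-noise variance in px^2 (hypothetical)

    def predict(self, dt=1.0):
        """Propagate the state with F = [[1, dt], [0, 1]]: P <- F P F^T + Q."""
        pos, vel = self.x
        self.x = [pos + vel * dt, vel]
        (p00, p01), (p10, p11) = self.P
        q = self.q
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + q * dt**4 / 4.0, p01 + dt * p11 + q * dt**3 / 2.0],
            [p10 + dt * p11 + q * dt**3 / 2.0, p11 + q * dt**2],
        ]
        return self.x[0]

    def update(self, measured_position):
        """Correct the state with a position measurement (H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r           # innovation variance
        k0, k1 = p00 / s, p10 / s  # Kalman gain
        innovation = measured_position - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        self.P = [
            [(1.0 - k0) * p00, (1.0 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]
        return self.x[0]


# Usage: one filter per axis; only x is shown for a pupil moving 2 px per frame.
kf_x = ConstantVelocityKalman1D(initial_position=100.0)
for t in range(50):
    kf_x.predict()
    kf_x.update(100.0 + 2.0 * t)
print(round(kf_x.x[0], 2), round(kf_x.x[1], 2))
```

The predicted position can also be used to restrict the search window for the pupil in the next frame.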

Iwata and Ebisawa [51] introduced a flexible eye mouse interface that combines a pupil mouse system with head pose detection. The system detects pupil motion in the video frames by taking the difference between bright-pupil and dark-pupil images. Head direction is detected by tracing key feature points (the nostrils), which appear as darker areas in both the bright and dark images. The resulting information is mapped into cursor motion on a display. Using head pose detection to support the pupil mouse improved the overall performance. However, the head pose detection part increases the complexity of the pupil mouse algorithm and calls for extensive optimization, which may make the approach less suitable for real-world applications.
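The bright/dark pupil step can be sketched as a pixel-wise difference followed by thresholding: the pupil is bright in one image and dark in the other, while features that look the same in both (here a synthetic corneal glint) cancel. The threshold, gain and function names are assumptions.

```python
def pupil_from_difference(bright, dark, threshold=50):
    """Centroid (x, y) of pixels that are much brighter in the bright-pupil image, or None."""
    sum_x = sum_y = count = 0
    for y, (bright_row, dark_row) in enumerate(zip(bright, dark)):
        for x, (b, d) in enumerate(zip(bright_row, dark_row)):
            if b - d >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    return None if count == 0 else (sum_x / count, sum_y / count)


def cursor_step(prev_pupil, cur_pupil, gain=8.0):
    """Relative cursor motion proportional to pupil displacement (hypothetical gain)."""
    return (round((cur_pupil[0] - prev_pupil[0]) * gain),
            round((cur_pupil[1] - prev_pupil[1]) * gain))


# Usage: synthetic 10x10 frames with a glint at (2, 2) present in both images.
dark = [[40] * 10 for _ in range(10)]
dark[2][2] = 255
bright = [row[:] for row in dark]
for x, y in [(5, 5), (6, 5), (5, 6), (6, 6)]:
    bright[y][x] = 200
print(pupil_from_difference(bright, dark))  # (5.5, 5.5)
```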

VII. Eye/Head Tracking in Commercial Products

In the last decade, the advancing applicability of eye tracking technologies led to a significant interest in producing commercial eye tracking products. However, the research on head movement detection is not yet mature enough to be considered for commercial applications.

Commercial eye tracking products have been used in several applications. In this section, some eye tracking products are discussed.

A. EagleEyes

EagleEyes eye tracking system was first developed by Professor Jim Gips at Boston College [52]. It is primarily used for severely disabled children and adults. The eye movement signals are used to control the cursor on the computer screen. The computer then can be used to assist in communication and to help in education. It can also provide entertainment for the disabled and their families. There are different commercial and customized software programs that can be used in the system to provide the user with many options and functionalities.

B. BLiNK

Another product is BLiNK, which is a simplified eye tracking system suitable for everyday use [53]. There are many applications for BLiNK, such as computer input, gaming, taking orders, and learning. Another important application field is health care. It is used as an alternative communication method with patients who have lost the ability to write and speak. BLiNK is also useful for severely disabled people who have no or very limited movement capabilities.

C. SMI

SMI also provides eye trackers that support many application fields [54], one of which is ophthalmology. SMI products are used in medical research and in the investigation of eye-related diseases, where the results help in understanding the human eye and in diagnosing disease.

D. Tobii

Tobii eye trackers, together with the Tobii Studio eye tracking software, provide reliable eye tracking results [55]. Tobii offers many products. One of them is the Tobii C12 AAC device, which is used by a wide range of disabled people to perform tasks independently using optional eye or head control [56]. The Tobii C12 AAC device is suitable for people who have limited or lost function in their hands and other body parts due to stroke, diseases of the nervous system, etc.

The device provides various control options. The flexible AAC software allows the user to choose either text or symbols for generating speech to communicate with the device. This provides the ability to ask and answer questions or use e-mail and text messages. There are multiple mounting systems for the device making it compatible with assistive technology including wheelchairs, walkers and bed frames.

Tobii also has an academic program which supports university classrooms and research labs with easy and cost-effective eye tracking. Tobii products are also used by web designers for usability testing where the designers can get direct feedback on their work by analyzing what a user is looking at.

VIII. Experimental Results of Existing Eye/Head Tracking Techniques

The performance of eye tracking and head movement detection systems is evaluated in terms of accuracy and required CPU processing time. This section compares the results of the methods described earlier in the survey.

A. Performance of Eye Tracking Methods

Table I compares the described eye tracking methods in terms of eye detection accuracy, gaze angle accuracy and required CPU time.

Table I. Comparison of Eye Tracking Methods.

| Method | Detection Accuracy (%) | Gaze Angle Accuracy (°) | CPU Time (ms) |
|---|---|---|---|
| Eye tracking using pattern recognition | | | |
| Raudonis et al. [4] | 100 | N/A | N/A |
| Kuo et al. [6] | 90 | N/A | N/A |
| Yuan and Kebin [9] | N/A | 1 | N/A |
| Liu and Liu [7] | 94.1 | N/A | N/A |
| Khairosfaizal and Nor'aini [17] | 86 | N/A | N/A |
| Hotrakool et al. [8] | 100 | N/A | 12.92 |
| Shape-based eye tracking | | | |
| Yang et al. [13] | N/A | 0.5 | N/A |
| Yang et al. [14] | N/A | Horizontal: 0.327; Vertical: 0.3 | N/A |
| Mehrubeoglu et al. [11] | 90 | N/A | 49.7 |
| Eye tracking using eye models | | | |
| Zhu and Ji [23] (first scheme) | N/A | Horizontal: 1.14; Vertical: 1.58 | N/A |
| Zhu and Ji [23] (second scheme) | N/A | Horizontal: 0.68; Vertical: 0.83 | N/A |
| Eye tracking using hybrid techniques | | | |
| Li and Wee [12] | N/A | 0.5 | N/A |
| Huang et al. [24] | 95.63 | N/A | N/A |
| Coetzer and Hancke [25] | 98.1 | N/A | N/A |

B. Performance of Head Movement Detection Methods

The head detection accuracy, angle accuracy and required CPU time of described head movement detection methods are summarized in Table II.

Table II. Comparison of Head Tracking Methods.

To use eye tracking and head movement detection methods in real-time systems, their time performance must be taken into consideration. The CPU time required for processing and analyzing eye and head movements must be minimized. This has only rarely been investigated in the experimental work of the reported methods.

IX. Conclusion

Eye tracking and head movement detection are considered effective and reliable alternative methods for human-computer interaction and communication. Hence, they have been the subject of many research works, and many approaches for implementing these technologies have been reported in the literature. This paper investigated existing methods and presented a state-of-the-art survey on eye tracking and head movement detection. Many applications can benefit from effective eye tracking and/or head movement detection methods. However, researchers still face challenges in developing robust methods that can detect and track eye or head movements accurately in applications.

Eye tracking studies rarely investigate the required CPU time, yet real-time applications require performance to be measured and optimized. In addition, most studies do not test eye tracking on a known image database containing varied images of different subjects under different conditions, such as lighting, noise and distance. This makes the reported accuracy of a method less reliable, because it may depend on the test conditions.

The head movement detection algorithms reported in the literature require high-performance computing hardware; none of them can be implemented on low-computational hardware such as a microcontroller.

More research is needed to develop eye tracking and head movement detection methods that are reliable and useful in real-world applications.

Biographies

Amer Al-Rahayfeh is currently pursuing the Ph.D. degree in computer science and engineering with the University of Bridgeport, Bridgeport, CT, USA. He received the B.S. degree in computer science from Mutah University in 2000 and the M.S. degree in computer information systems from The Arab Academy for Banking and Financial Sciences in 2004. He was an Instructor at The Arab Academy for Banking and Financial Sciences from 2006 to 2008. He is currently a Research Assistant with the University of Bridgeport. His research interests include eye tracking and head movement detection for super assistive technology, wireless multimedia sensor networks, and multimedia database systems.

Miad Faezipour (S'06–M'10) is an Assistant Professor in the Computer Science and Engineering and Biomedical Engineering programs, University of Bridgeport, CT, and has been the Director of the Digital/Biomedical Embedded Systems and Technology Lab since July 2011. Prior to joining UB, she was a Post-Doctoral Research Associate at the University of Texas at Dallas, collaborating with the Center for Integrated Circuits and Systems and the Quality of Life Technology Laboratories. She received the B.Sc. degree in electrical engineering from the University of Tehran, Tehran, Iran, and the M.Sc. and Ph.D. degrees in electrical engineering from the University of Texas at Dallas. Her research interests lie in the broad area of biomedical signal processing and behavior analysis techniques, high-speed packet processing architectures, and digital/embedded systems. She is a member of the IEEE EMBS and the IEEE Women in Engineering.

References

- [1].Ohno T., “One-point calibration gaze tracking method,” in Proc. Symp. Eye Track. Res. Appl., 2006, pp. 34–34. [Google Scholar]

- [2].Hansen D. W. and Ji Q., “In the eye of the beholder: A survey of models for eyes and gaze,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 32, no. 3, pp. 478–500, Mar. 2010. [DOI] [PubMed] [Google Scholar]

- [3].Gips J., DiMattia P., Curran F. X., and Olivieri P., “Using EagleEyes—An electrodes based device for controlling the computer with your eyes to help people with special needs,” in Proc. Interdisciplinary Aspects Comput. Help. People Special Needs, 1996, pp. 77–84. [Google Scholar]

- [4].Raudonis V., Simutis R., and Narvydas G., “Discrete eye tracking for medical applications,” in Proc. 2nd ISABEL, 2009, pp. 1–6. [Google Scholar]

- [5].Tang J. and Zhang J., “Eye tracking based on grey prediction,” in Proc. 1st Int. Workshop Educ. Technol. Comput. Sci., 2009, pp. 861–864. [Google Scholar]

- [6].Kuo Y., Lee J., and Kao S., “Eye tracking in visible environment,” in Proc. 5th Int. Conf. IIH-MSP, 2009, pp. 114–117. [Google Scholar]

- [7].Liu H. and Liu Q., “Robust real-time eye detection and tracking for rotated facial images under complex conditions,” in Proc. 6th ICNC, 2010, vol. 4, pp. 2028–2034. [Google Scholar]

- [8].Hotrakool W., Siritanawan P., and Kondo T., “A real-time eye-tracking method using time-varying gradient orientation patterns,” in Proc. Int. Conf. Electr. Eng., Electron. Comput. Telecommun. Inf. Technol., 2010, pp. 492–496. [Google Scholar]

- [9].Yuan Z. and Kebin J., “A local and scale integrated feature descriptor in eye-gaze tracking,” in Proc. 4th Int. CISP, 2011, vol. 1, pp. 465–468. [Google Scholar]

- [10].Fu B. and Yang R., “Display control based on eye gaze estimation,” in Proc. 4th Int. CISP, 2011, vol. 1, pp. 399–403. [Google Scholar]

- [11].Mehrubeoglu M., Pham L. M., Le H. T., Muddu R., and Ryu D., “Real-time eye tracking using a smart camera,” in Proc. AIPR Workshop, 2011, pp. 1–7. [Google Scholar]

- [12].Li X. and Wee W. G., “An efficient method for eye tracking and eye-gazed FOV estimation,” in Proc. 16th IEEE Int. Conf. Image Process., Nov. 2009, pp. 2597–2600. [Google Scholar]

- [13].Yang C., Sun J., Liu J., Yang X., Wang D., and Liu W., “A gray difference-based pre-processing for gaze tracking,” in Proc. IEEE 10th ICSP, Oct. 2010, pp. 1293–1296. [Google Scholar]

- [14].Yang X., Sun J., Liu J., Chu J., Liu W., and Gao Y., “A gaze tracking scheme for eye-based intelligent control,” in Proc. 8th WCICA, 2010, pp. 50–55. [Google Scholar]

- [15].Chen Y. W. and Kubo K., “A robust eye detection and tracking technique using gabor filters,” in Proc. 3rd Int. Conf. IEEE Intell. Inf. Hiding Multimedia Signal Process., Nov. 2007, vol. 1, pp. 109–112. [Google Scholar]

- [16].Kocejko T., Bujnowski A., and Wtorek J., “Eye mouse for disabled,” in Proc. IEEE Conf. Human Syst. Interact., May 2008, pp. 199–202. [Google Scholar]

- [17].Khairosfaizal W. and Nor'aini A., “Eye detection in facial images using circular Hough transform,” in Proc. 5th CSPA, Mar. 2009, pp. 238–242. [Google Scholar]

- [18].Pranith A. and Srikanth C. R., “Iris recognition using corner detection,” in Proc. 2nd ICISE, 2010, pp. 2151–2154. [Google Scholar]

- [19].Sundaram R. M., Dhara B. C., and Chanda B., “A fast method for iris Localization,” in Proc. 2nd Int. Conf. EAIT, 2011, pp. 89–92. [Google Scholar]

- [20].Alioua N., Amine A., Rziza M., and Aboutajdine D., “Eye state analysis using iris detection based on circular hough transform,” in Proc. ICMCS, Apr. 2011, pp. 1–5. [Google Scholar]

- [21].Yoo D. H., Kim J. H., Lee B. R., and Chung M. J., “Non-contact eye gaze tracking system by mapping of corneal reflections,” in Proc. 5th IEEE Int. Conf. Autom. Face Gesture Recognit., May 2002, pp. 94–99. [Google Scholar]

- [22].Yoo D. H. and Chung M. J., “A novel nonintrusive eye gaze estimation using cross-ratio under large head motion,” Special Issue on Eye Detection and Tracking, Computer Vision and Image Understanding, vol. 98, no. 1, pp. 25–51, 2005. [Google Scholar]

- [23].Zhu Z. and Ji Q., “Novel eye gaze tracking techniques under natural head movement,” IEEE Trans. Biomed. Eng., vol. 54, pp. 2246–2260, Dec. 2007. [DOI] [PubMed] [Google Scholar]

- [24].Huang H., Zhou Y. S., Zhang F., and Liu F. C., “An optimized eye locating and tracking system for driver fatigue monitoring,” in Proc. ICWAPR, 2007, vol. 3, pp. 1144–1149. [Google Scholar]

- [25].Coetzer R. C. and Hancke G. P., “Eye detection for a real-time vehicle driver fatigue monitoring system,” in Proc. IEEE Intell. Veh. Symp., Jun. 2011, pp. 66–71. [Google Scholar]

- [26].Hutchinson T., Eye movement detection with improved calibration and speed, U.S. 4 950 069, Aug. 21 1990.

- [27].Lupu R., Bozomitu R., Ungureanu F., and Cehan V., “Eye tracking based communication system for patient with major neuro-locomotor disabilities,” in Proc. IEEE 15th ICSTCC, Oct. 2011, pp. 1–5. [Google Scholar]

- [28].Calvi C., Porta M., and Sacchi D., “e5Learning, an e-learning environment based on eye tracking,” in Proc. 8th IEEE ICALT, Jul. 2008, pp. 376–380. [Google Scholar]

- [29].Porta M., Ricotti S., and Pere C. J., “Emotional e-learning through eye tracking,” in Proc. IEEE Global Eng. Educ. Conf., Apr. 2012, pp. 1–6. [Google Scholar]

- [30].Devi M. S. and Bajaj P. R., “Driver fatigue detection based on eye tracking,” in Proc. 1st Int. Conf. Emerg. Trends Eng. Technol., 2008, pp. 649–652. [Google Scholar]

- [31].Liu K., Luo Y. P., Tei G., and Yang S. Y., “Attention recognition of drivers based on head pose estimation,” in Proc. IEEE VPPC, Sep. 2008, pp. 1–5. [Google Scholar]

- [32].Murphy-Chutorian E. and Trivedi M. M., “Head pose estimation and augmented reality tracking: An integrated system and evaluation for monitoring driver awareness,” IEEE Trans. Intell. Transp. Syst., vol. 11, no. 2, pp. 300–311, Jun. 2010. [Google Scholar]

- [33].Kupetz D. J., Wentzell S. A., and BuSha B. F., “Head motion controlled power wheelchair,” in Proc. IEEE 36th Annu. Northeast Bioeng. Conf., Mar. 2010, pp. 1–2. [Google Scholar]

- [34].Francois A. R. J., Real-time multi-resolution blob tracking, IRIS, University of Southern California, Los Angeles, CA, USA, 2004, . [Google Scholar]

- [35].Siriteerakul T., Sato Y., and Boonjing V., “Estimating change in head pose from low resolution video using LBP-based tracking,” in Proc. ISPACS, Dec. 2011, pp. 1–6.

- [36].Song Y., Luo Y., and Lin J., “Detection of movements of head and mouth to provide computer access for disabled,” in Proc. Int. Conf. TAAI, 2011, pp. 223–226.

- [37].Jian-Zheng L. and Zheng Z., “Head movement recognition based on LK algorithm and Gentleboost,” in Proc. 7th Int. Conf. Netw. Comput. Adv. Inf. Manag., Jun. 2011, pp. 232–236.

- [38].Zhao Y. and Yan H., “Head orientation estimation using neural network,” in Proc. ICCSNT, 2011, vol. 3, pp. 2075–2078.

- [39].Berjón R., Mateos M., Barriuso A. L., Muriel I., and Villarrubia G., “Alternative human-machine interface system for powered wheelchairs,” in Proc. 1st Int. Conf. Serious Games Appl. Health, 2011, pp. 1–5.

- [40].Zhao Z., Wang Y., and Fu S., “Head movement recognition based on Lucas-Kanade algorithm,” in Proc. Int. Conf. CSSS, 2012, pp. 2303–2306.

- [41].Xu Y., Zeng J., and Sun Y., “Head pose recovery using 3D cross model,” in Proc. 4th Int. Conf. IHMSC, 2012, vol. 2, pp. 63–66.

- [42].Sasou A., “Acoustic head orientation estimation applied to powered wheelchair control,” in Proc. 2nd Int. Conf. Robot Commun. Coordinat., 2009, pp. 1–6.

- [43].King L. M., Nguyen H. T., and Taylor P. B., “Hands-free head-movement gesture recognition using artificial neural networks and the magnified gradient function,” in Proc. 27th Annu. Conf. Eng. Med. Biol., 2005, pp. 2063–2066.

- [44].Nguyen S. T., Nguyen H. T., Taylor P. B., and Middleton J., “Improved head direction command classification using an optimised Bayesian neural network,” in Proc. 28th Annu. Int. Conf. EMBS, 2006, pp. 5679–5682.

- [45].Manogna S., Vaishnavi S., and Geethanjali B., “Head movement based assist system for physically challenged,” in Proc. 4th ICBBE, 2010, pp. 1–4.

- [46].Kim S., Park M., Anumas S., and Yoo J., “Head mouse system based on gyro- and opto-sensors,” in Proc. 3rd Int. Conf. BMEI, 2010, vol. 4, pp. 1503–1506.

- [47].Satoh K., Uchiyama S., and Yamamoto H., “A head tracking method using bird's-eye view camera and gyroscope,” in Proc. 3rd IEEE/ACM ISMAR, Nov. 2004, pp. 202–211.

- [48].Xie D., Dang L., and Tong R., “Video based head detection and tracking surveillance system,” in Proc. 9th Int. Conf. FSKD, 2012, pp. 2832–2836.

- [49].Lee D., Oh S., Heo S., and Hahn M., “Drowsy driving detection based on the driver's head movement using infrared sensors,” in Proc. Int. Symp. Universal Commun., 2008, pp. 231–236.

- [50].Kim J., et al., “Construction of integrated simulator for developing head/eye tracking system,” in Proc. ICCAS, 2008, pp. 2485–2488.

- [51].Iwata M. and Ebisawa Y., “PupilMouse supported by head pose detection,” in Proc. IEEE Conf. Virtual Environ., Human-Comput. Inter. Meas. Syst., Jul. 2008, pp. 178–183.

- [52].EagleEyes Technology, Salt Lake City, UT, USA: The Opportunity Foundation of America, Jul. 18 2013, [Online]. Available: http://www.opportunityfoundationofamerica.org/eagleeyes/.

- [53].Introducing BLiNK, New York, NY, USA: BLiNK, Jul. 18 2013, [Online]. Available: http://www.blinktracker.com/index.htm.

- [54].SMI Gaze & EYE Tracking Systems, Teltow, Germany: SensoMotoric Instruments, Jul. 18 2013, [Online]. Available: http://www.smivision.com/en/gaze-and-eye-tracking-systems/home.html.

- [55].Eye Tracking Products, Danderyd, Sweden: Tobii Technology, Jul. 20 2013, [Online]. Available: http://www.tobii.com/en/eye-tracking-research/global/products/.

- [56].Tobii C12—AAC Device for Independence, Danderyd, Sweden: Tobii Technology, Jul. 20 2013, [Online]. Available: http://www.tobii.com/en/assistive-technology/north-america/products/hardware/tobii-c12/.