Abstract

The least angle regression (LAR) was proposed by Efron, Hastie, Johnstone and Tibshirani (2004) for continuous model selection in linear regression. It is motivated by a geometric argument and tracks a path along which the predictors enter successively and the active predictors always maintain the same absolute correlation (angle) with the residual vector. Although it gained popularity quickly, its extensions seem rare compared to those of penalty methods. In this expository article, we show that the powerful geometric idea of LAR can be generalized in a fruitful way. We propose a ConvexLAR algorithm that works for any convex loss function and naturally extends to group selection and data adaptive variable selection. After a simple modification, it also yields new exact path algorithms for certain penalty methods, such as a convex loss function with a lasso or group lasso penalty. Variable selection in recurrent event and panel count data analysis, Ada-Boost, and the Gaussian graphical model is reconsidered from the ConvexLAR angle.

Key words and phrases: group lasso, lasso, least angle regression (LAR), ordinary differential equation (ODE), regularization, solution path

1 Introduction

Regularization is a tool to avoid overfitting and obtain parsimonious and interpretable models, especially when the number of parameters exceeds the number of observations. One powerful regularization technique is penalization. In general, a penalty method minimizes the sum of a loss function and a penalty term. The simplest ℓ1 penalty leads to the popular lasso regression (Tibshirani, 1996; Donoho and Johnstone, 1994). Various other penalty methods have been developed since. Each one targets a specific question that arises in applications. For instance, the group penalty (Yuan and Lin, 2006; Meier et al., 2008) aims to select groups of variables, as in factorial data analysis. The adaptive lasso (Zou, 2006) applies a weighted penalty in a data driven fashion that improves the asymptotic properties. All these penalty methods are formulated as optimization problems, and the solution is obtained either by optimizing at a grid of tuning parameter values or by a path algorithm that tracks the solution as a function of the tuning parameter.

In contrast to penalty methods, the least angle regression (LAR) proposed by Efron et al. (2004) is purely motivated by a geometric argument rather than by optimization. Given a response vector y = (y1, y2, …, yn)T ∈ ℝ^n and its corresponding design matrix X = (x1, …, xn)T ∈ ℝ^{n×p} with xi = (xi1, xi2, …, xip)T, let 𝒜t be the active index set at time t. Following Efron et al. (2004), we assume that the covariates x(j) = (x1j, x2j, …, xnj)T have mean 0 and unit length, and that the response y has mean 0. At t = 0, β(0) = 0p and 𝒜0 contains the predictor that is most correlated with the response vector y. At any t > 0, the regression coefficients of the active predictors, namely βj(t) for j ∈ 𝒜t, move along a direction such that their corresponding predictor vectors x(j) share the same absolute correlation (angle) with the residual vector e(t) = y − Xβ(t). Here the correlation is nothing but the scaled score vector of the least squares criterion, with entries

$$c_j(t) = x_{(j)}^\top e(t) = x_{(j)}^\top \big(y - X\beta(t)\big), \qquad j = 1, \dots, p.$$
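As a quick numerical illustration of this identity, the sketch below (Python with NumPy; all helper names are hypothetical, not from the paper) standardizes a random design as assumed above and checks that the LAR correlations x(j)ᵀe(t) are the negative gradient of the least squares loss, taken here to be f(β) = ‖y − Xβ‖²/2 (the factor 1/2 is an assumption about the scaling).

```python
import numpy as np


def standardize(X, y):
    """Center each column of X and scale it to unit Euclidean length; center y."""
    Xc = X - X.mean(axis=0)
    Xc = Xc / np.linalg.norm(Xc, axis=0)
    return Xc, y - y.mean()


def lar_correlations(X, y, beta):
    """LAR 'correlations' c_j = x_(j)^T (y - X beta) with the current residual."""
    return X.T @ (y - X @ beta)


def ls_gradient(X, y, beta):
    """Gradient of the least squares loss f(beta) = ||y - X beta||^2 / 2."""
    return -X.T @ (y - X @ beta)


rng = np.random.default_rng(0)
X, y = standardize(rng.normal(size=(50, 4)), rng.normal(size=50))
beta0 = np.zeros(4)
c = lar_correlations(X, y, beta0)
first = int(np.argmax(np.abs(c)))  # predictor that starts the LAR path at t = 0
```

Because c = −∇f(β), "equal absolute correlation" and "equal absolute gradient" are the same condition, which is what lets the next section replace least squares by a general convex loss.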

LAR has gained wide popularity since its introduction. However, there seem to have been only a few attempts at generalization, in striking contrast to the fast development of penalty methods. Specific versions of group LAR for the least squares problem are mentioned in Yuan and Lin (2006) and Park and Hastie (2006). Wu extends LAR to generalized linear models (Wu, 2011) and the Cox proportional hazards model (Wu, 2012). In this article, we demonstrate that the powerful geometric idea of LAR can be generalized in a fruitful way, potentially leading to many more applications.

The remainder of the paper is organized as follows. In Section 2, we derive a basic ConvexLAR algorithm that performs continuous variable selection for any general convex loss function. For least squares loss, Efron et al. (2004) show that the LAR solution path is piecewise linear. This leads to their efficient path following algorithm, whose computational cost is that of a single ordinary least squares fit. For a general loss function, the piecewise linearity property is lost. However, it can be shown that the solution path is piecewise smooth and, within each path segment, follows a simple ordinary differential equation (ODE). ConvexLAR tracks the solution path by exploiting the rich numerical resources for solving ODEs. Just like the original LAR for least squares, a simple modification of ConvexLAR yields the corresponding lasso solution path.

In Section 3, we show that the geometric idea of LAR can be adapted to various situations. We demonstrate this by incorporating data adaptive weights and group selection into the ConvexLAR algorithm. Compared to their penalization analogs, these extensions avoid repeated optimizations and are computationally attractive. Moreover, a slight modification of ConvexLAR yields the exact solution path of the corresponding penalization method. ConvexLAR and its extensions are illustrated by various numerical examples in Section 4. To the best of our knowledge, no LAR algorithms have been proposed for these examples in the current literature. Finally, we conclude with a brief discussion.

2 ConvexLAR Algorithm and ConvexLASSO Modification

In this section, we derive the ConvexLAR algorithm, which forms the basis of the various extensions presented in the next section. The algorithm is similar to the LAR algorithms developed for the GLM and the Cox model (Wu, 2011, 2012) but is much more general and has a simpler derivation. We consider an arbitrary strictly convex loss function f(β), where β ∈ ℝ^p is the vector of parameters subject to regularization. ∇f(β) = [∇1f(β), …, ∇pf(β)]T ∈ ℝ^p denotes the gradient vector of the loss function and H(β) = d²f(β) ∈ ℝ^{p×p} the Hessian, where ∇jf(β) denotes the partial derivative of f(β) with respect to βj for j = 1, …, p. When f is a negative log-likelihood function, ∇f(β) equals the negative of the score vector and H(β) is the observed information matrix. We use t to index the LAR solution path, with the solution at any t denoted by β(t). The active index set at t is denoted by 𝒜t. For notational simplicity, we drop the subscript t whenever it is clear from the context. For instance, β𝒜(t) and ∇𝒜f(β) are the subvectors of β(t) and ∇f(β), respectively, corresponding to the active predictors at t. Similarly, H𝒜(β(t)) is the submatrix of the Hessian corresponding to 𝒜t.

The key idea of LAR (Efron et al., 2004) is to move the solution in a direction such that the gradient (score) corresponding to each active predictor has the same absolute value. We denote this common value by s(t), where s stands for the score. This prescribes that the active solution vector has to satisfy |∇jf(β)| = sgn(∇jf(β)) · ∇jf(β) = s(t), or equivalently

$$\nabla_j f(\beta(t)) = s(t)\,\mathrm{sgn}\big(\nabla_j f(\beta(t))\big), \qquad j \in \mathcal{A}_t.$$

Note that, for any active predictor j, ∇jf(β) is non-zero (its absolute value equals s(t) > 0) and therefore has a constant sign, denoted by sgn(∇jf(β)), within a segment. In vector form, we have

$$\nabla_{\mathcal{A}} f(\beta(t)) = s(t)\,\mathrm{sgn}\big(\nabla_{\mathcal{A}} f(\beta(t))\big). \tag{1}$$

In general s(t) can be any smooth and monotonic function that decreases from s(0) = maxj |∇jf(0)| to s(tmax) = 0 at some finite tmax > 0. Intuitively s(t) controls how the common absolute score of active predictors decays with respect to solution index t. Different choices of s(t) lead to different indexing systems yet the same solution path. The classical LAR (Efron et al., 2004) sets s(t) = s(0) − t which implies that tmax = s(0) = maxj |∇jf(0)|.

By construction, s(t) is at least as large as the absolute scores of the inactive predictors, whose coefficients are parked at zero. Once the absolute score |∇jf(β(t))| of an inactive predictor j reaches s(t), it joins the club and its score conforms to the ruling s(t) thereafter. Whenever such an event happens, the set of active predictors is updated by adding this new predictor. The index location t corresponding to this event defines a transition point in the sense that the set of active predictors changes (Wu, 2011). A path segment is defined as the solution path between two consecutive transition points. The following result follows from the implicit function theorem and provides the path following direction of the ConvexLAR algorithm within a path segment. See the Appendix for the proof and the following subsection for an operational definition of a path segment.

Theorem 1

For a strictly convex and twice differentiable loss function f(β), the LAR path solution β(t) is continuous and differentiable at t within a path segment. In addition, the solution vector β(t) satisfies the ordinary differential equation

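$$\frac{d\beta_{\mathcal{A}}(t)}{dt} = s'(t)\,\big[H_{\mathcal{A}}(\beta(t))\big]^{-1}\,\mathrm{sgn}\big(\nabla_{\mathcal{A}} f(\beta(t))\big) \tag{2}$$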

and βj(t) = 0 for any j ∉ 𝒜t.
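A minimal sketch of the right-hand side of ODE (2), assuming NumPy and the classical choice s′(t) = −1; the function name `convexlar_direction` is hypothetical. For the least squares loss f(β) = ‖y − Xβ‖²/2, the code checks the defining property of the direction: moving along it lowers every active |∇jf| at the same unit rate.

```python
import numpy as np


def convexlar_direction(hess_active, grad_active, ds_dt=-1.0):
    """Right-hand side of ODE (2): d beta_A/dt = s'(t) H_A^{-1} sgn(grad_A f).

    hess_active: Hessian submatrix H_A(beta(t)), assumed positive definite.
    grad_active: gradient subvector grad_A f(beta(t)).
    ds_dt: derivative s'(t); -1 for the classical choice s(t) = s(0) - t.
    """
    return ds_dt * np.linalg.solve(hess_active, np.sign(grad_active))


rng = np.random.default_rng(1)
X = rng.normal(size=(30, 3))
y = rng.normal(size=30)
beta = np.zeros(3)
grad = -X.T @ (y - X @ beta)          # least squares gradient
hess = X.T @ X                        # constant Hessian for least squares
d = convexlar_direction(hess, grad)
h = 0.5 * np.min(np.abs(grad))        # step small enough to keep all signs fixed
grad_new = -X.T @ (y - X @ (beta + h * d))
```

Solving a linear system with `np.linalg.solve` rather than forming the inverse is the usual numerically safer choice; the Hessian only needs to be positive definite on the active set, as noted in Remark 3.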

2.1 ConvexLAR Algorithm

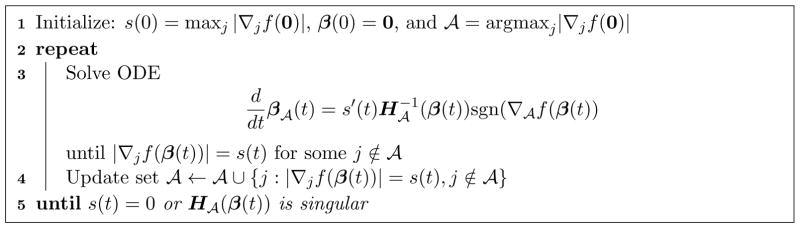

Theorem 1 suggests that the exact solution path of LAR can be obtained by solving the simple ODE system (2) segment by segment. The size of the ODE system within a segment equals the number of active predictors |𝒜|. The ConvexLAR algorithm is summarized in Algorithm 1. We initialize the solution path with β(0) = 0, and the initial active set contains the predictors along which the objective function f changes fastest at 0. That is

$$\mathcal{A}_0 = \arg\max_{j}\, |\nabla_j f(0)|.$$

Algorithm 1.

ConvexLAR.

We then follow the solution path by solving the ODE system (2) until one or more new variables join the active set at some t1 > 0, namely at the moment the active score s(t) matches the largest absolute gradient among the non-active predictors. The active predictor set 𝒜t stays the same for t ∈ [t0, t1), where t0 = 0. At t1, it is updated by adding the predictors that newly join the club. This process continues segment by segment until all the predictors are active. Then, in the final segment, the ConvexLAR solution path moves along a direction such that the absolute values of the first-order partial derivatives decrease at the same speed to zero, which happens at tmax. Under the assumptions of Theorem 1, the solution β(tmax) is the global minimizer of the convex loss function, just as the LAR solution ends at the full ordinary least squares estimate. This completes our ConvexLAR solution path algorithm.
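The segment-by-segment procedure just described can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes a logistic loss without intercept, indexes the path by s itself (so t = s(0) − s), and uses an explicit Euler predictor on ODE (2) followed by a few Newton corrector steps that restore identity (1) on the active set. Entry events are only detected on the grid of s values, so transition points are accurate to about s(0)/`n_steps`; a production version would locate them with an ODE solver's event detection. The names `logistic_derivs` and `convexlar_path` are hypothetical.

```python
import numpy as np


def logistic_derivs(beta, X, y):
    """Gradient and Hessian of f(beta) = sum_i [log(1 + exp(x_i'beta)) - y_i x_i'beta]."""
    prob = 1.0 / (1.0 + np.exp(-X @ beta))
    grad = X.T @ (prob - y)
    hess = X.T @ (X * (prob * (1.0 - prob))[:, None])
    return grad, hess


def convexlar_path(derivs, p, n_steps=2000, newton_iters=3):
    """Approximate ConvexLAR path with s(t) = s(0) - t; derivs(beta) -> (grad, hess)."""
    beta = np.zeros(p)
    grad, _ = derivs(beta)
    s = float(np.max(np.abs(grad)))          # s(0) = max_j |grad_j f(0)|
    active = [int(np.argmax(np.abs(grad)))]  # initial active set A_0
    h = s / n_steps
    path = []
    while s > 0.0:
        s = max(s - h, 0.0)
        A = np.array(active)
        grad, hess = derivs(beta)
        signs = np.sign(grad[A])
        # Euler predictor: d beta_A / ds = H_A^{-1} sgn(grad_A f), and s drops by h.
        beta[A] -= h * np.linalg.solve(hess[np.ix_(A, A)], signs)
        # Newton corrector on grad_A f(beta) = s * signs; inactive coefficients stay 0.
        for _ in range(newton_iters):
            grad, hess = derivs(beta)
            beta[A] -= np.linalg.solve(hess[np.ix_(A, A)], grad[A] - s * signs)
        path.append((s, beta.copy(), list(active)))
        # Entry events: inactive predictors whose |gradient| has caught up with s.
        grad, _ = derivs(beta)
        active += [j for j in range(p) if j not in active and abs(grad[j]) >= s]
    # At s = 0 all predictors are active: finish at the unpenalized minimizer.
    for _ in range(20):
        grad, hess = derivs(beta)
        beta -= np.linalg.solve(hess, grad)
    path.append((0.0, beta.copy(), list(range(p))))
    return path


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
true_beta = np.array([1.5, -1.0, 0.5, 0.0])
y = (rng.random(200) < 1.0 / (1.0 + np.exp(-X @ true_beta))).astype(float)
path = convexlar_path(lambda b: logistic_derivs(b, X, y), p=4)
```

Each stored triple (s, β, 𝒜) satisfies |∇jf(β)| = s for the active predictors, and the last point is the global minimizer, mirroring the statement that β(tmax) minimizes the loss.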

2.2 Remark

In this subsection, we make the following four remarks on the ConvexLAR algorithm.

Remark 1

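For the specific choice of s(t) = s(0) − t, we have t = s(0) − s(t), so the first transition point satisfies

$$t_1 = \max_k |\nabla_k f(0)| - |\nabla_j f(\beta(t_1))|$$

for any j ∈ 𝒜t1. This holds analogously for later transition points. At the end of the m-th LAR segment, the transition point satisfies

$$t_m = \max_k |\nabla_k f(0)| - |\nabla_j f(\beta(t_m))|$$

for any j ∈ 𝒜tm.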

Remark 2

For least squares problems, the loss f(β) = ‖y − Xβ‖²/2 is a quadratic function with a constant Hessian matrix H(β) = XTX. Since sgn(∇jf(β(t))) is constant for all j ∈ 𝒜t in a neighborhood of t, the direction s′(t)(X𝒜ᵀX𝒜)⁻¹ sgn(∇𝒜f(β(t))) in (2) is piecewise constant for the classical choice s(t) = s(0) − t. This leads to the piecewise linear solution path of the original LAR (Efron et al., 2004).

Remark 3 (Non-strictly convex losses)

The strict convexity assumption on the loss f precludes applications with non-convex losses or the n < p least squares case. However, the proof in the Appendix shows that the only essential ingredient is the positive definiteness of H𝒜(β(t)). Therefore, in non-strictly convex cases, we terminate path following as soon as H𝒜(β(t)) becomes singular.

Remark 4 (Partial regularization)

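So far we have assumed that the full parameter vector β is subject to regularization. In many applications, only a subset of the parameters is regularized. Assume that the loss takes the form f(β0, β), where β0 ∈ ℝ^{p0} is the vector of parameters exempt from regularization. Depending on the objective function, it may not be easy to eliminate β0 from the objective analytically. However, we can always define the marginal minimizer of β0 as a function of β

$$\hat\beta_0(\beta) = \arg\min_{\beta_0} f(\beta_0, \beta). \tag{3}$$

If f is strictly convex and thrice differentiable, then this mapping is uniquely defined and twice differentiable by the implicit function theorem. Denote the Jacobian and Hessian of this mapping by Dβ0(β) ∈ ℝ^{p0×p} and Hβ0(β) ∈ ℝ^{p0 p×p}, respectively. Writing g(β) = f(β̂0(β), β) for the profiled loss, the first two derivatives with respect to β required in Theorem 1 can be obtained by the chain rule

$$\nabla g(\beta) = \nabla_{\beta} f + D\beta_0(\beta)^\top \nabla_{\beta_0} f,$$

$$d^2 g(\beta) = d^2_{\beta\beta} f + d^2_{\beta\beta_0} f\, D\beta_0(\beta) + D\beta_0(\beta)^\top d^2_{\beta_0\beta} f + D\beta_0(\beta)^\top d^2_{\beta_0\beta_0} f\, D\beta_0(\beta) + \big(\nabla_{\beta_0} f^\top \otimes I_p\big)\, H\beta_0(\beta),$$

where all partial derivatives of f are evaluated at (β̂0(β), β). Since ∇β0 f = 0 at β̂0(β), the second term of ∇g and the last term of d²g vanish, and the implicit function theorem gives Dβ0(β) = −[d²β0β0 f]⁻¹ d²β0β f, so that d²g(β) = d²ββ f − d²ββ0 f [d²β0β0 f]⁻¹ d²β0β f.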

The Ada-Boost and Gaussian graphical model examples in Section 4 illustrate this strategy.
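To make the chain rule of Remark 4 concrete, here is a minimal sketch for an assumed example that is not in the paper: logistic regression with a scalar unpenalized intercept (p0 = 1). The intercept β̂0(β) is found by one-dimensional Newton steps, and the profiled Hessian is the Schur complement d²ββf − d²ββ0f [d²β0β0f]⁻¹ d²β0βf. The names `profiled_derivs` and `profiled_loss` are hypothetical; the test compares both derivatives with central finite differences.

```python
import numpy as np


def profiled_derivs(beta, X, y, n_newton=50):
    """Profile out an unpenalized intercept b0 from the logistic loss.

    Returns b0_hat(beta) and the gradient and Hessian of g(beta) = f(b0_hat(beta), beta).
    """
    b0 = 0.0
    for _ in range(n_newton):                # Newton iterations for b0_hat(beta)
        prob = 1.0 / (1.0 + np.exp(-(b0 + X @ beta)))
        b0 -= np.sum(prob - y) / np.sum(prob * (1.0 - prob))
    prob = 1.0 / (1.0 + np.exp(-(b0 + X @ beta)))
    w = prob * (1.0 - prob)
    grad = X.T @ (prob - y)                  # D b0^T * (df/db0) term is zero at b0_hat
    h_bb = X.T @ (X * w[:, None])            # d^2 f / d beta d beta^T
    h_b0 = X.T @ w                           # d^2 f / d beta d b0
    D = -h_b0 / np.sum(w)                    # Jacobian of b0_hat (implicit function theorem)
    hess = h_bb + np.outer(h_b0, D)          # = h_bb - h_b0 h_b0^T / sum(w)
    return b0, grad, hess


def profiled_loss(beta, X, y):
    """g(beta) = f(b0_hat(beta), beta) for the logistic loss."""
    b0, _, _ = profiled_derivs(beta, X, y)
    eta = b0 + X @ beta
    return np.sum(np.logaddexp(0.0, eta) - y * eta)


rng = np.random.default_rng(2)
X = rng.normal(size=(100, 3))
y = (rng.random(100) < 0.4).astype(float)
beta = np.array([0.3, -0.2, 0.1])
b0, g, H = profiled_derivs(beta, X, y)
```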

2.3 ConvexLASSO Modification

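Efron et al. (2004) showed that in the least squares case the lasso solution path can be obtained by a slight modification of LAR. The same extension applies to ConvexLAR. Consider the lasso regularized problem

$$\min_{\beta}\; f(\beta) + \lambda \|\beta\|_1. \tag{4}$$

With λ = s(t) = s(0) − t, the optimality condition for the lasso solution is

$$\nabla_j f(\beta) = -\lambda\,\mathrm{sgn}(\beta_j) \ \text{ if } \beta_j \neq 0, \qquad |\nabla_j f(\beta)| \le \lambda \ \text{ if } \beta_j = 0. \tag{5}$$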

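A proof similar to that for Theorem 1 shows that the lasso solution path moves along the direction

$$\frac{d\beta_{\mathcal{A}}(t)}{dt} = -\big[H_{\mathcal{A}}(\beta(t))\big]^{-1}\mathrm{sgn}\big(\nabla_{\mathcal{A}} f(\beta(t))\big) = \big[H_{\mathcal{A}}(\beta(t))\big]^{-1}\mathrm{sgn}\big(\beta_{\mathcal{A}}(t)\big)$$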

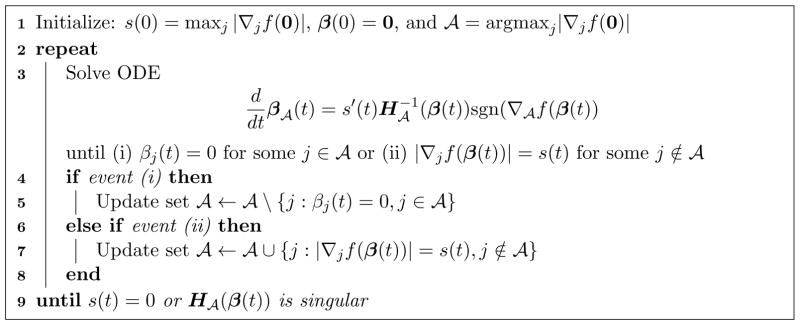

until either (i) βj(t) hits zero for an active predictor j or (ii) |∇jf(β(t))| hits the boundary λ = s(t) for some inactive predictor j. Both events change the active set and redefine the direction. The second equation is based on the fact that, by (5), a lasso regularized solution satisfies sgn(β𝒜(t)) = −sgn(∇𝒜f(β(t))). Similarly, the ConvexLAR algorithm can be modified to obtain the lasso solution path β(λ) with λ = s(t). Observe that the event defining a LAR segment, i.e., the gradient of an inactive predictor hitting λ = s(t), is exactly the same as the second type of event for the lasso path. However, the first type of event, i.e., the coefficient of an active predictor hitting zero, is not tracked in ConvexLAR. We call the modified algorithm, which tracks both types of events, ConvexLASSO; its pseudocode is listed in Algorithm 2. The same argument as in Efron et al. (2004) and Wu (2011) shows that the ConvexLASSO algorithm yields the lasso solution path, under the assumption that only one event can happen at each transition point, namely either one inactive predictor becomes active or one currently active predictor becomes inactive.
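A small sketch of the optimality condition (5), useful for checking any point on a ConvexLASSO path. It assumes NumPy and a least squares loss with orthonormal design, where the lasso solution is known in closed form as the soft-thresholded value of Xᵀy; the helper names `lasso_kkt_ok` and `soft_threshold` are hypothetical. The check also confirms the sign relation sgn(β𝒜) = −sgn(∇𝒜f) used above.

```python
import numpy as np


def lasso_kkt_ok(beta, grad, lam, tol=1e-8):
    """Check condition (5) for min_beta f(beta) + lam * ||beta||_1."""
    nz = beta != 0
    active_ok = np.allclose(grad[nz], -lam * np.sign(beta[nz]), atol=tol)
    inactive_ok = np.all(np.abs(grad[~nz]) <= lam + tol)
    return bool(active_ok and inactive_ok)


def soft_threshold(z, lam):
    """Componentwise soft thresholding sign(z) * max(|z| - lam, 0)."""
    return np.sign(z) * np.maximum(np.abs(z) - lam, 0.0)


rng = np.random.default_rng(3)
Q, _ = np.linalg.qr(rng.normal(size=(40, 5)))   # design with orthonormal columns
y = rng.normal(size=40)
lam = 0.1
beta = soft_threshold(Q.T @ y, lam)             # exact lasso solution when Q^T Q = I
grad = -Q.T @ (y - Q @ beta)                    # least squares gradient at beta
```

Scaling the largest coefficient by a small factor breaks the first condition in (5), which is the kind of violation the ConvexLASSO event checks are designed to prevent.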

3 Generalizations

In this section we first summarize a few essential features of ConvexLAR and then demonstrate how these aspects lead to various generalizations.

- L1: The “influence” of each predictor on the loss function is measured by the magnitude of its score (gradient). Indeed, it is these gradient (score) functions that ConvexLAR operates on. For the ConvexLAR algorithm to work properly, we require the influence function to be a monotone ℝ^p → ℝ^p mapping (Ortega and Rheinboldt, 2000). For instance, convexity of a loss function guarantees that its gradient (score) function is a monotone mapping.

- L2: A certain form of “democratic voting” is enforced among the active predictors. Both the original LAR and ConvexLAR force the influences of individual active predictors to be equal. This equality constraint can be generalized when we want to favor certain predictors over others or to impose a group structure on the predictors.

- L3: The influences of active predictors decrease continuously along the path so that those of inactive predictors can catch up. The assumption in L1 that the gradient (score) function is a monotone mapping guarantees that all influences change continuously.

- L4: The inactive predictors remain parked at zero until their influence meets that of the active ones, at which point they join the club.

- L5: The influences of all active predictors gradually decrease at the same rate and hit zero at the same time, which marks the end of path following.

Algorithm 2.

ConvexLASSO.

3.1 Weighted/Adaptive ConvexLAR

In many applications, there exists prior information about the importance of predictors, or such information can be obtained in a data driven fashion. This motivates the development of the adaptive lasso (Zou, 2006), which enjoys favorable asymptotic properties. To incorporate such information into ConvexLAR, we weight the “influence” of each predictor differentially and consider the weighted “influence” wj|∇jf(β)| of each predictor, where wj ≥ 0 are predictor specific weights. A larger wj implies a higher “influence” and vice versa. A zero weight keeps the corresponding predictor out of the path, since its weighted influence never reaches s(t) > 0, whereas letting wj → ∞ effectively exempts it from regularization. For simplicity, we assume that all weights are positive and finite. In this case s(t) is the common value of wj|∇jf(β)| shared by the active predictors. Setting wj ≡ 1 recovers ConvexLAR. The stationarity condition for the “democratic voting” reads

$$\nabla_{\mathcal{A}} f(\beta(t)) = s(t)\, w_{\mathcal{A}}^{-1} \circ \mathrm{sgn}\big(\nabla_{\mathcal{A}} f(\beta(t))\big),$$

and the ODE system becomes

$$\frac{d\beta_{\mathcal{A}}(t)}{dt} = s'(t)\,\big[H_{\mathcal{A}}(\beta(t))\big]^{-1}\big[w_{\mathcal{A}}^{-1} \circ \mathrm{sgn}\big(\nabla_{\mathcal{A}} f(\beta(t))\big)\big],$$

where ∘ denotes the entrywise product and the vector w𝒜⁻¹ = (1/wj)j∈𝒜 collects the inverse weights of the active predictors. A segment terminates when wj|∇jf(β(t))| hits s(t) for some inactive predictor j ∉ 𝒜. The weighted ConvexLAR is summarized in Algorithm 3, and a similar modification yields the corresponding adaptive ConvexLASSO.
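A minimal sketch of the weighted ODE direction, assuming NumPy, s′(t) = −1 and positive finite weights; `weighted_direction` is a hypothetical name. With a least squares loss the check is exact: along the direction, every weighted influence wj|∇jf| drops at the same unit rate, and unit weights recover the unweighted ConvexLAR direction.

```python
import numpy as np


def weighted_direction(hess_active, grad_active, w_active, ds_dt=-1.0):
    """Weighted ConvexLAR ODE: d beta_A/dt = s'(t) H_A^{-1} (w_A^{-1} o sgn(grad_A f)).

    w_active holds the positive, finite weights w_j of the active predictors.
    """
    return ds_dt * np.linalg.solve(hess_active, np.sign(grad_active) / w_active)


rng = np.random.default_rng(6)
X = rng.normal(size=(40, 3))
y = rng.normal(size=40)
grad = -X.T @ y                       # least squares gradient at beta = 0
hess = X.T @ X
w = np.array([0.5, 1.0, 2.0])
d = weighted_direction(hess, grad, w)
h = 0.25 * np.min(np.abs(grad) * w)   # small step that keeps all signs fixed
grad_new = grad + h * hess @ d        # exact gradient update for a quadratic loss
```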

Algorithm 3.

Weighted ConvexLAR with predictor specific weights wj > 0.

3.2 Group ConvexLAR

In this section we outline a strategy for extending ConvexLAR to incorporate group structure among predictors. Suppose predictors are divided into m groups with group size pi, i = 1, …, m. Slightly abusing notation, we use g to represent both the g-th group and the index set of all predictors belonging to the g-th group. In a similar manner, we use 𝒢 to represent both the set of active groups and the index set of all predictors belonging to current active groups. For an arbitrary matrix H, Hℐ,𝒥 denotes the sub-matrix of H with rows in ℐ and columns in 𝒥.

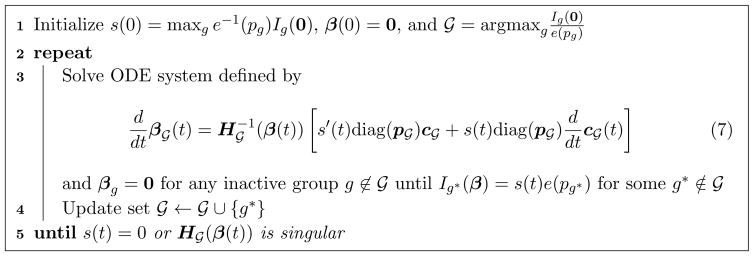

Group ConvexLAR can be devised based on the following considerations. (i) We need a way to gauge the aggregate “influence” of a group g, denoted by Ig(β). For instance, we can use either the ℓ2 norm or the ℓ1 norm of the sub-vector of the gradient corresponding to the group, i.e., Ig(β) = ||∇gf(β)||2 or Ig(β) = ||∇gf(β)||1 = Σj∈g |∇jf(β)|. In principle any ℓp norm, p ≥ 1, can be used. (ii) For all groups in the active group set 𝒢, we use the function s(t) to control the common value of e(pg)−1Ig(β), g ∈ 𝒢, where pg denotes the number of predictors in group g and e(pg) is the effective size of the g-th group. Common choices for e(pg) are √pg or pg. (iii) To make the ODE well defined, we need to assign proportions cg(j) of the group “influence” to each predictor j in group g, i.e., ∇jf(β) = cg(j)Ig(β) for j ∈ g. We require ||cg||p = 1 so that ||∇gf(β)||p = ||cgIg(β)||p holds, where cg is the column vector with entries cg(j), j ∈ g, stacked together. These conditions imply the general group LAR identity

$$\nabla_g f(\beta(t)) = s(t)\, e(p_g)\, c_g(t), \qquad g \in \mathcal{G}. \tag{6}$$

Now the implicit function theorem yields the group LAR updating direction (7) in Algorithm 4. Here c𝒢 is a column vector with cg for g ∈ 𝒢 stacked together and p𝒢 is a column vector with the corresponding effective group size e(pg) for each predictor j in group g stacked together.
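The ingredients (i) to (iii) can be illustrated with a few lines of NumPy. This is a hypothetical sketch, not the authors' code: `group_scores` computes the scaled influences e(pg)⁻¹Ig(β) for either norm, and `proportions` returns cg = ∇gf/Ig, which has unit ℓp norm by construction. Treating the group with the largest scaled influence as the first to enter, by analogy with 𝒜0, is our assumption.

```python
import numpy as np


def group_scores(grad, groups, norm="l2", effective_size=np.sqrt):
    """Scaled group influences e(p_g)^{-1} I_g(beta); norm is "l2" or "l1"."""
    scores = []
    for g in groups:
        sub = grad[g]
        influence = np.linalg.norm(sub) if norm == "l2" else np.sum(np.abs(sub))
        scores.append(influence / effective_size(len(g)))
    return np.array(scores)


def proportions(grad, g, norm="l2"):
    """c_g = grad_g / I_g, so that grad_g f = c_g * I_g and ||c_g||_p = 1."""
    sub = grad[g]
    influence = np.linalg.norm(sub) if norm == "l2" else np.sum(np.abs(sub))
    return sub / influence


grad = np.array([3.0, 4.0, 1.0, 0.5, 2.0])
groups = [np.array([0, 1]), np.array([2, 3]), np.array([4])]
l2_scores = group_scores(grad, groups)                                   # e(p_g) = sqrt(p_g)
l1_scores = group_scores(grad, groups, norm="l1", effective_size=float)  # e(p_g) = p_g
first_group = int(np.argmax(l2_scores))
```

With the ℓ1 norm and e(pg) = pg the scores are the average absolute gradients within each group, which is the quantity behind the GroupConvexLAR-L1 variant discussed below.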

Algorithm 4.

A general scheme for Group ConvexLAR.

Specific choices of the group “influence” Ig(β), effective group size e(pg), and individual “influence” cg(j) lead to different updating directions. We specialize to the following three variants. The first has a close connection to the group lasso. The second and third recover two versions of group LAR algorithms developed in the literature.

-

GroupConvexLAR: We measure the group influence by Ig(β) = ||∇gf(β)||2, which is distributed to individual predictors within the group according to their effect size cg(j) = −βj/||βg||2. Let and assume 𝒢 = 𝒢0 ∪ 𝒢1, where 𝒢0 = {g1, …, ga} denotes the set of active groups with ||βg||2 = 0 and 𝒢1 = {ga+1, …, ga+b} denotes the set of active groups with ||βg||2 > 0.

Theorem 2. For a strictly convex and twice differentiable loss function f(β), the LAR solution β(t) is continuous and differentiable at t within a segment.

-

If 𝒢0 is an empty set, the active solution vector β𝒢(t) satisfies the differential equation

(8) where D is the block diagonal matrix with blocks -

If 𝒢0 is not empty, the solution vector βgi for gi ∈ 𝒢0 satisfies

(9) and the constants ki, i = 1, …, a, and updating direction for the groups in 𝒢1 are jointly determined by(10) where A ∈ IRa×a has entries aij = dgif(β)Hgi,gj(β)∇gj f(β), 1 ≤ i, j ≤ a,andNote that, when 𝒢0 is empty, (10) reduces to (8). The technical proof of Theorem 2 is delegated to the Appendix. Again the strict convexity assumption can be relaxed to the positive definiteness of H𝒢(β(t)) along the path, which guarantees the non-singularity of the matrix involved in (10).

-

- GroupConvexLAR-L1: We choose Ig(β) = ||∇gf(β)||1, e(pg) = pg and , where tg is the time that the group g joins the active set 𝒢. Note that in this case cg(j) is fixed for any j ∈ g once group g joins the active set. Therefore and the group LAR direction (7) reduces to

(11) -

GroupConvexLAR-L2: With the choice Ig(β) = ||∇gf(β)||2, and , the ODE updating direction is same as (11) with the obvious substitute for c𝒢 and p𝒢.

Note that, when all group sizes pg are equal to 1,and for all g. All three variants reduce to the ConvexLAR.

Connection with Previous Group LAR

Consider the variant GroupConvexLAR-L2 in the special case of least squares, i.e., f(β) = ‖y − Xβ‖²/2. In this case, both the Hessian H(β) = XTX and c𝒢(t) are constant within a segment. Thus, the group LAR updating direction (11) is constant within each segment, leading to a piecewise linear solution path with segment-wise slope

This recovers a version of group LAR proposed by Yuan and Lin (2006) for group selection in least squares. Park and Hastie (2006) argue that this version of group LAR tends to select a large group with only a few of its components correlated with the response. To avoid this problem, they propose another version of group LAR by simply replacing the average squared correlation (Σj∈g[∇jf(β)]²/pg) with the average absolute correlation (Σj∈g |∇jf(β)|/pg), which is simply GroupConvexLAR-L1 specialized to least squares.

We emphasize that, for a general convex loss f, the solution paths of GroupConvexLAR-L1 and GroupConvexLAR-L2 are both piecewise smooth rather than piecewise linear, so ODE solving is necessary.

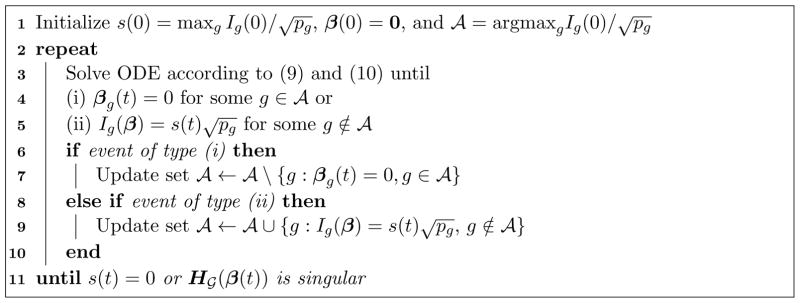

3.3 Group ConvexLASSO Modification

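We show next that a simple modification of the first variant, GroupConvexLAR, yields the solution path of the group lasso penalized problem

$$\min_{\beta}\; f(\beta) + \lambda \sum_{g=1}^m \sqrt{p_g}\, \|\beta_g\|_2.$$

Its solution satisfies the following Karush-Kuhn-Tucker (KKT) conditions

$$\nabla_g f(\beta) + \lambda \sqrt{p_g}\, \frac{\beta_g}{\|\beta_g\|_2} = 0 \quad \text{if } \beta_g \neq 0, \tag{12}$$

$$\|\nabla_g f(\beta)\|_2 \le \lambda \sqrt{p_g} \quad \text{if } \beta_g = 0. \tag{13}$$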

With the choice λ = s(t), the stationarity condition (12) coincides with the group LAR identity (6). This observation, together with the above KKT conditions, implies that the group lasso solution path moves along the same direction (8) as GroupConvexLAR until one of the following two types of event happens. The first type occurs when the coefficient vector βg of an active group hits zero, denoted by event of type (i). The second type occurs when ||∇gf(β)||2 of an inactive group hits the boundary λ√pg, denoted by event of type (ii). Both types of event change the active set and redefine the direction. The second type of event is already considered in the GroupConvexLAR algorithm. Thus a simple modification of GroupConvexLAR that also tracks the first type of event leads to the group lasso solution path. This modification is summarized in Algorithm 5. This exact path following algorithm for the group lasso penalized convex loss seems new. On a side note, there is no obvious connection between GroupConvexLAR-L1, GroupConvexLAR-L2 and the group lasso.
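A minimal sketch for checking the KKT conditions (12) and (13) at a candidate group lasso solution, assuming NumPy, a least squares loss and the penalty weight √pg. With an orthonormal design the exact solution is block soft thresholding of z = Xᵀy, which gives a known answer to test against; `block_soft_threshold` and `group_lasso_kkt_ok` are hypothetical names.

```python
import numpy as np


def block_soft_threshold(z, groups, lam):
    """Group lasso solution when X^T X = I: beta_g = (1 - lam sqrt(p_g)/||z_g||)_+ z_g."""
    beta = np.zeros_like(z)
    for g in groups:
        norm = np.linalg.norm(z[g])
        if norm > 0:
            beta[g] = max(0.0, 1.0 - lam * np.sqrt(len(g)) / norm) * z[g]
    return beta


def group_lasso_kkt_ok(beta, grad, groups, lam, tol=1e-8):
    """Check KKT conditions (12) and (13) group by group."""
    for g in groups:
        bound = lam * np.sqrt(len(g))
        nb = np.linalg.norm(beta[g])
        if nb > 0:
            # (12): grad_g f + lam sqrt(p_g) beta_g / ||beta_g|| = 0 for nonzero groups
            if not np.allclose(grad[g], -bound * beta[g] / nb, atol=tol):
                return False
        elif np.linalg.norm(grad[g]) > bound + tol:
            # (13) violated: this zero group should already be active
            return False
    return True


rng = np.random.default_rng(7)
Q, _ = np.linalg.qr(rng.normal(size=(60, 6)))      # orthonormal columns
y = Q @ np.array([2.0, -1.5, 1.0, 0.05, -0.05, 0.02])
groups = [np.array([0, 1, 2]), np.array([3, 4]), np.array([5])]
lam = 0.5
beta = block_soft_threshold(Q.T @ y, groups, lam)
grad = -Q.T @ (y - Q @ beta)                        # least squares gradient
```

Here the second and third groups sit strictly inside their KKT bounds and stay at zero, while the first group satisfies (12); an event of type (ii) would occur when the left side of (13) reaches the bound.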

Algorithm 5.

Group ConvexLASSO.

4 Examples

We illustrate ConvexLAR and its extensions on various statistical problems. To demonstrate the efficiency of the proposed algorithms, we report the running times of all numerical examples on an Intel Core i7 2.93 GHz machine with 8 GB RAM, averaged over 50 independent runs.

4.1 Recurrent event data

Suppose that we have n independent subjects in a recurrent event study. For each subject i, Ni(t) denotes the number of events that occur over the interval (0, t] and xi ∈ ℝ^p is the corresponding covariate vector. Assume that, given xi, the counting process {Ni(t)} is a non-homogeneous Poisson process with mean function

$$E\{N_i(t) \mid x_i\} = \mu_0(t)\exp(x_i^\top\beta) \tag{14}$$

for some unknown continuous baseline mean function μ0(t) and unknown parameters β ∈ IRp. See Tong et al. (2009b) for a detailed introduction to recurrent event data.

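As is typical in survival studies, each subject may be censored. Let Ci denote the follow-up or dropout time for subject i and Ñi(t) = Ni(min(t, Ci)) be the point process of subject i’s observed events. The observed data are summarized as {(Ñi(t), Ci, xi), i = 1, 2, …, n, 0 ≤ t ≤ T}, where the constant T denotes the maximum potential follow-up time. With the at-risk indicator Yk(t) = I(Ck ≥ t), the log-partial likelihood function based on model (14) is given by

$$\ell(\beta) = \sum_{i=1}^n \int_0^T \Big[x_i^\top\beta - \log \sum_{k=1}^n Y_k(t)\exp(x_k^\top\beta)\Big]\, d\tilde N_i(t). \tag{15}$$

Under mild regularity conditions, the log-partial likelihood ℓ(β) is strictly concave in β. Thus in this example, our objective function is chosen to be f(β) = −ℓ(β). The first two derivatives of f(β) with respect to β are

$$\nabla f(\beta) = -\sum_{i=1}^n \int_0^T \{x_i - \bar x(\beta, t)\}\, d\tilde N_i(t), \qquad H(\beta) = \sum_{i=1}^n \int_0^T V(\beta, t)\, d\tilde N_i(t),$$

where

$$\bar x(\beta,t) = \frac{\sum_k Y_k(t)\, e^{x_k^\top\beta} x_k}{\sum_k Y_k(t)\, e^{x_k^\top\beta}}, \qquad V(\beta,t) = \frac{\sum_k Y_k(t)\, e^{x_k^\top\beta} x_k x_k^\top}{\sum_k Y_k(t)\, e^{x_k^\top\beta}} - \bar x(\beta,t)\,\bar x(\beta,t)^\top.$$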
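The quantities ConvexLAR needs here can be computed event by event. The sketch below assumes the partial likelihood has the standard form ℓ(β) = Σ over observed events (t, i) of [xiᵀβ − log Σk I(Ck ≥ t) exp(xkᵀβ)], with no tied event times, and uses NumPy on simulated data (not the CGD data); `recurrent_loss_derivs` is a hypothetical name. The test compares the analytic gradient and Hessian with central finite differences.

```python
import numpy as np


def recurrent_loss_derivs(beta, X, event_times, subject, C):
    """f(beta) = -ell(beta), its gradient and Hessian; event k belongs to subject[k]."""
    p = X.shape[1]
    eta = X @ beta
    f, grad, hess = 0.0, np.zeros(p), np.zeros((p, p))
    for t, i in zip(event_times, subject):
        risk = C >= t                          # subjects still under follow-up at time t
        e = np.exp(eta[risk])
        f -= eta[i] - np.log(np.sum(e))
        w = e / np.sum(e)                      # risk-set weights
        xbar = w @ X[risk]                     # weighted covariate mean x_bar(beta, t)
        grad -= X[i] - xbar
        hess += (X[risk] * w[:, None]).T @ X[risk] - np.outer(xbar, xbar)
    return f, grad, hess


rng = np.random.default_rng(4)
n, p = 20, 3
X = rng.normal(size=(n, p))
C = rng.uniform(1.0, 3.0, size=n)              # follow-up (censoring) times
counts = rng.poisson(2.0, size=n)              # number of observed events per subject
subject = np.repeat(np.arange(n), counts)
event_times = rng.uniform(size=subject.size) * C[subject]   # events occur before C_i
beta = np.array([0.3, -0.2, 0.1])
f, grad, hess = recurrent_loss_derivs(beta, X, event_times, subject, C)
```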

Tong et al. (2009b) studied Chronic Granulomatous Disease (CGD) data collected from a multicenter placebo-controlled randomized trial of gamma interferon in patients with chronic granulomatous disease. There were 128 patients randomized to two groups, a gamma interferon group (n1 = 63) and a placebo group (n2 = 65). For each patient, the times from the beginning of the study to the initial and any recurrent serious infections are available. Eleven covariates are considered: 1. trtmt=treatment (Yes/No), 2. inherit=pattern of inheritance (autosomal/recessive), 3. age, 4. height, 5. weight, 6. cortico=use of corticosteroids (Yes/No), 7. prophy=use of prophylactic antibiotics (Yes/No), 8. gender=female, 9. hosp1=hosp. (category: US/other), 10. hosp2=hosp. (category: Europe-Amsterdam), and 11. hosp3=hosp. (category: Europe-other). We standardize all continuous covariates (age, height and weight) to have mean 0 and unit length. Furthermore, we also include the six quadratic and interaction terms among the three continuous covariates: 12. age×age, 13. height×height, 14. weight×weight, 15. age×height, 16. age×weight, 17. height×weight.

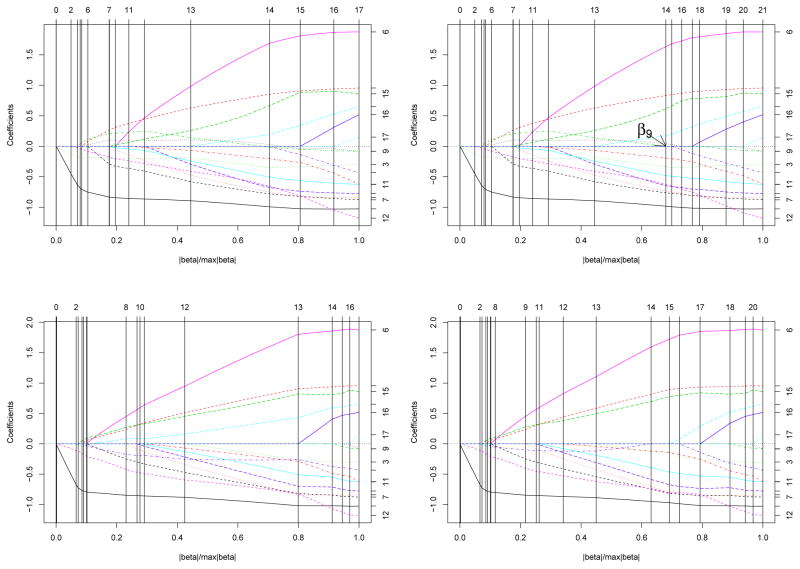

Figure 1 shows the solution paths from different algorithms. Here the numbers on the right-hand side indicate the variable each path corresponds to. In all plots, the x-axes are in units of ||β(t)||1/maxt ||β(t)||1, and vertical lines mark the event times for easy comparison between the solution paths. The top row of Figure 1 displays the ConvexLAR (top left panel) and ConvexLASSO (top right panel) solution paths for the CGD data. They are qualitatively different. For instance, in the ConvexLASSO path, β9 hits zero and then leaves the active set at Step 14. In addition, we apply the weighted ConvexLAR and ConvexLASSO algorithms with weights set at the maximum likelihood estimates based on (15). The weighted paths differ significantly from the unweighted ones. The running times are 4.84, 5.60, 5.34 and 6.37 seconds for the ConvexLAR, ConvexLASSO, weighted ConvexLAR and weighted ConvexLASSO solution paths, respectively.

Figure 1.

Recurrent event example (CGD data). Top left: ConvexLAR; Top right: ConvexLASSO; Bottom left: weighted ConvexLAR; Bottom right: weighted ConvexLASSO.

4.2 Panel Count Data

In the above recurrent event example, we assume that the exact time of each event, if not censored, is observed. Unfortunately this is not the case in many studies. The model for panel count data (Sun and Wei, 2000; Tong et al., 2009a) provides a remedy.

Let Ti1 < Ti2 < ⋯ < Timi be the potential observation times of process Ni(t), and let Hi(t) denote the corresponding observation process. Let H̃i(t) = Hi(min(t, Ci)) be the actual observation process after censoring. Then the observed data for the panel count model are

When Hi and Ci are mutually independent and also independent of Ni and xi, Sun and Wei (2000) propose to estimate the regression parameters by solving the following estimating equation

where . ConvexLAR and its extensions can be applied directly to the influence function

which has a positive definite derivative

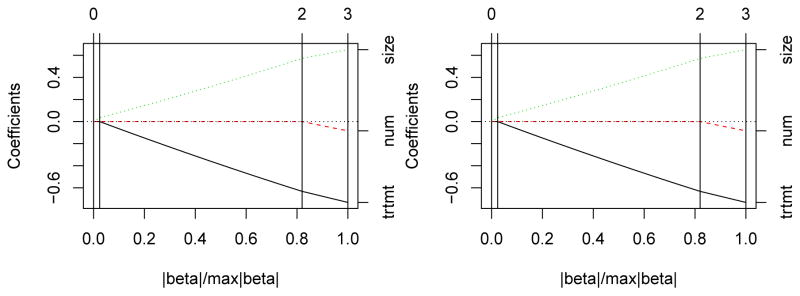

We illustrate with the bladder tumor recurrence data considered in Sun and Wei (2000). A total of 85 bladder tumor patients were randomized into two treatment groups, a placebo group and a thiotepa treatment group. Most patients visited the hospital several times to have their recurrent tumors removed. At each visit, the number of new tumors was recorded and the tumors were removed. The binary treatment indicator (trtmt) is one of our explanatory covariates. In addition, we consider two important baseline covariates, the number of initial tumors (num) and the size of the largest initial tumor (size). All covariates are centered at zero and scaled to have unit length. Figure 2 shows the ConvexLAR and ConvexLASSO solution paths for this example. The two solution paths are identical here because no active predictor returns to zero along the path. The running times are 0.04 and 0.05 seconds for the ConvexLAR and ConvexLASSO solution paths, respectively.

Figure 2.

ConvexLAR and ConvexLASSO solution paths for the panel count data (bladder) example.

4.3 Ada-Boost

Ada-Boost is considered one of the best off-the-shelf classification methods (Hastie et al., 2009). In binary classification, we are given a training data set {(xi, yi) : i = 1, …, n} with xi ∈ ℝ^p and yi ∈ {−1, +1}. The goal is to estimate a function f(x) whose sign is used as the classification rule. For simplicity we consider linear classification, in which f(x) = β0 + x⊤β. Ada-Boost estimates the parameters by solving

$$\min_{\beta_0, \beta}\; \sum_{i=1}^n \exp\{-y_i(\beta_0 + x_i^\top \beta)\}, \tag{16}$$

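which is strictly convex and thus amenable to our ConvexLAR algorithm. Denote 𝒟 = {i : yi = −1}. Define the marginal minimizer of β0 as a function of β

$$\hat\beta_0(\beta) = \frac{1}{2}\Big[\log \sum_{i \notin \mathcal{D}} \exp(-x_i^\top\beta) - \log \sum_{i \in \mathcal{D}} \exp(x_i^\top\beta)\Big]. \tag{17}$$

Thus the first two derivatives of the profiled loss f(β) = Σi exp{−yi(β̂0(β) + xiᵀβ)} with respect to β are

$$\nabla f(\beta) = -\sum_{i=1}^n w_i y_i x_i, \qquad H(\beta) = \sum_{i=1}^n w_i x_i x_i^\top - \frac{\big(\sum_i w_i x_i\big)\big(\sum_i w_i x_i\big)^\top}{\sum_i w_i},$$

where

$$w_i = \exp\{-y_i(\hat\beta_0(\beta) + x_i^\top\beta)\}.$$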

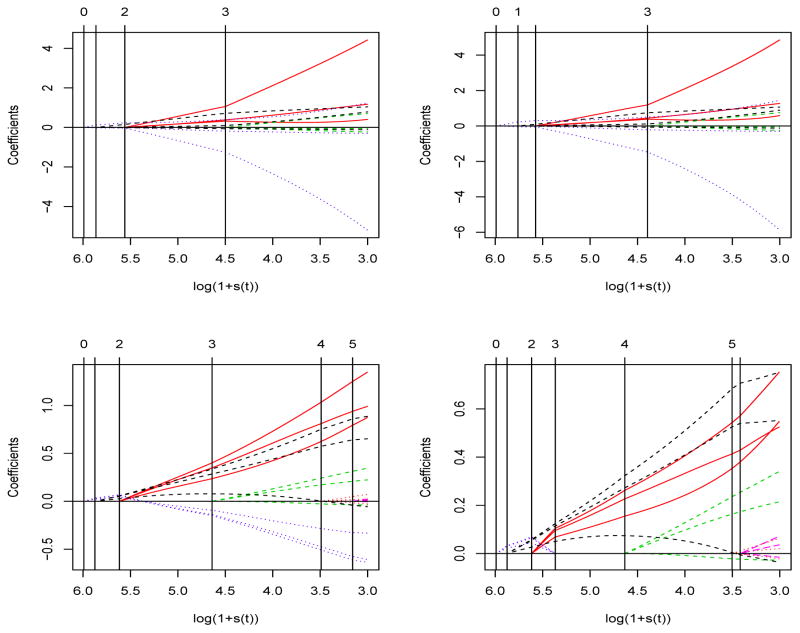

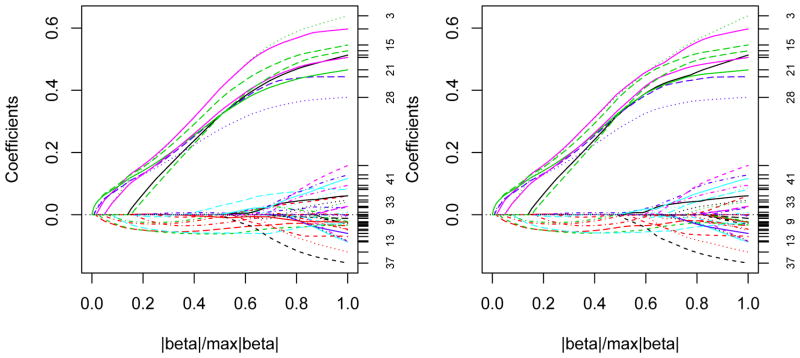
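A minimal sketch of the profiled Ada-Boost loss, assuming NumPy and simulated data (not the WDBC data); `adaboost_profiled` is a hypothetical name. It evaluates the closed-form intercept (17), the gradient (the ∂f/∂β0 term drops out because β̂0 minimizes the loss) and the Schur-complement Hessian of Remark 4. A handy sanity check is that the profiled loss equals 2√(AB), where A and B are the two sums inside the logarithms of (17).

```python
import numpy as np


def adaboost_profiled(beta, X, y):
    """Exponential loss with the intercept profiled out in closed form, as in (17).

    Returns (b0_hat, f, grad, hess) for f(beta) = sum_i exp(-y_i (b0_hat(beta) + x_i'beta)).
    """
    u = X @ beta
    A = np.sum(np.exp(-u[y > 0]))            # sum over i with y_i = +1
    B = np.sum(np.exp(u[y < 0]))             # sum over D = {i : y_i = -1}
    b0 = 0.5 * (np.log(A) - np.log(B))       # marginal minimizer of the intercept
    w = np.exp(-y * (b0 + u))                # observation weights at b0_hat
    f = np.sum(w)                            # equals 2 * sqrt(A * B)
    grad = -X.T @ (w * y)                    # envelope argument: df/db0 = 0 at b0_hat
    wx = X.T @ w
    hess = X.T @ (X * w[:, None]) - np.outer(wx, wx) / np.sum(w)
    return b0, f, grad, hess


rng = np.random.default_rng(5)
X = rng.normal(size=(80, 3))
y = np.where(rng.random(80) < 0.5, -1.0, 1.0)
beta = np.array([0.2, -0.1, 0.3])
b0, f, grad, hess = adaboost_profiled(beta, X, y)
```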

The Wisconsin Diagnostic Breast Cancer (WDBC) data (Frank and Asuncion, 2010) were collected on n = 569 patients from digitized images of a fine needle aspirate (FNA) of their breast mass. The number of predictors is p = 10. The mean, standard error, and “worst” or largest value (mean of the three largest values) of these predictors were computed for each patient, resulting in 30 features forming 10 groups, each of size 3. The response is binary: each patient is diagnosed as either malignant (Y = 1) or benign (Y = −1). Each predictor variable is standardized to have mean zero and variance one. Figure 3 displays the group ConvexLAR solution paths for this example. The x-axis is log(1 + s(t)), where s(t) is the same as λ in Group ConvexLASSO. For clarity, only the portions of the solution paths with log(1 + s(t)) > 3 are plotted. GroupConvexLAR-L2 and GroupConvexLAR-L1 appear quite different from GroupConvexLAR, with larger maxi∈𝒢 |βi(t)| at the same level of s(t). The bottom row of Figure 3 displays the GroupConvexLAR and Group ConvexLASSO solution paths, which are fundamentally different for the WDBC data. The third group (displayed with a dotted line) is the first to become active and then stays active along the whole GroupConvexLAR solution path. In contrast, the same group hits zero at event 3 of the Group ConvexLASSO solution path and leaves the active set thereafter. The running times are 1.53, 1.58, 1.49 and 2.13 seconds for the GroupConvexLAR-L2, GroupConvexLAR-L1, GroupConvexLAR and GroupConvexLASSO solution paths, respectively.

Figure 3.

Group LAR solution paths for the Ada-Boost data (WDBC) example. Top left: GroupConvexLAR-L2; Top right: GroupConvexLAR-L1; Bottom left: GroupConvexLAR; Bottom right: Group ConvexLASSO.

4.4 Gaussian graphical model

Our fourth example concerns LAR for the Gaussian graphical model. Assume we have iid observations x1, x2, …, xn ∈ ℝ^p from N(0, Σ). Denote the sample covariance matrix by Σ̂ = n⁻¹ Σi xi xiᵀ and the precision matrix by Ω = Σ−1. Then, up to an additive constant and a positive scaling factor, the negative log-likelihood for Ω is −ℓ(Ω) = −log det Ω + tr(Σ̂Ω), which is convex. Let ωij denote the (i, j)-element of Ω. ωij = 0, i ≠ j, implies the conditional independence between variables i and j given the remaining variables. We partition the parameters into the sets Ω0 = (ω11, ω22, …, ωpp)⊤ ∈ ℝ^p and Ω1 = (ω12, ω13, …, ω(p−1)p)⊤ ∈ ℝ^{p(p−1)/2}. Only those in Ω1 are subject to regularization. We may rewrite the negative log-likelihood as −ℓ(Ω0, Ω1). For every fixed Ω1, we define Ω0(Ω1) = argminΩ0 {−ℓ(Ω0, Ω1)}. With these notations, we have f(Ω1) = −ℓ(Ω0(Ω1), Ω1).

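To derive the LAR solution path, we need the first two derivatives of the objective function

$$f(\Omega_1) = -\log\det\Omega + \mathrm{tr}(\hat\Sigma\,\Omega), \qquad \Omega = \Omega\big(\Omega_0(\Omega_1), \Omega_1\big),$$

where Ω0 is implicitly determined by Ω1. We first show how to determine Ω0 given Ω1. Setting the partial derivative of f with respect to Ω0

$$\frac{\partial f}{\partial \Omega_0} = \mathrm{diag}(\hat\Sigma) - \mathrm{diag}(\Omega^{-1})$$

to 0 gives the stationarity condition

$$\mathrm{diag}(\Omega^{-1}) = \mathrm{diag}(\hat\Sigma).$$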

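In other words, given Ω1, we need to choose Ω0 such that the diagonal entries of Ω−1 match those of Σ̂. In practice, we can solve for Ω0 given Ω1 with Newton's iteration

$$\Omega_0^{(k+1)} = \Omega_0^{(k)} - \left[\Omega^{-1} \circ \Omega^{-1}\right]^{-1} \left[\operatorname{diag}(\hat{\Sigma}) - \operatorname{diag}(\Omega^{-1})\right], \qquad \Omega = \Omega\big(\Omega_0^{(k)}, \Omega_1\big),$$

where ∘ denotes the Hadamard (entrywise) product. We denote this mapping by Ω0(Ω1). Its Jacobian DΩ0(Ω1) ∈ ℝ^{p×p(p−1)/2} will be of use later. The implicit function theorem gives

$$D\Omega_0(\Omega_1) = -\left[D^2_{\Omega_0} f(\Omega_0, \Omega_1)\right]^{-1} D_{\Omega_1} D_{\Omega_0} f(\Omega_0, \Omega_1). \tag{18}$$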
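The Newton iteration and the Jacobian (18) can be sketched as follows. This is a minimal illustration, not the authors' code. It uses closed forms that follow from differentiating −log det Ω:

- the Hessian of f with respect to Ω0 is Ω−1 ∘ Ω−1;
- the derivative of the Ω0-gradient with respect to ωij, for i < j, has k-th entry 2(Ω−1)ki(Ω−1)kj.

The backtracking (Armijo) step and the diagonally dominant starting point are our own choices, not taken from the text. Helper names are hypothetical.

```python
import numpy as np

def unpack(omega0, omega1, p):
    # Symmetric Omega from its diagonal and row-wise upper-triangular entries.
    Omega = np.zeros((p, p))
    Omega[np.triu_indices(p, k=1)] = omega1
    Omega = Omega + Omega.T
    Omega[np.diag_indices(p)] = omega0
    return Omega

def objective(omega0, omega1, S):
    # -log det Omega + tr(S Omega); +inf if Omega is not positive definite.
    Omega = unpack(omega0, omega1, S.shape[0])
    try:
        L = np.linalg.cholesky(Omega)
    except np.linalg.LinAlgError:
        return np.inf
    return -2.0 * np.log(np.diag(L)).sum() + np.trace(S @ Omega)

def profile_omega0(omega1, S, tol=1e-12, max_iter=100):
    # Damped Newton iteration for diag(Omega^{-1}) = diag(S) with Omega1 fixed.
    p = S.shape[0]
    off = unpack(np.zeros(p), omega1, p)
    # Strictly diagonally dominant start, hence positive definite.
    omega0 = 1.0 / np.diag(S) + np.abs(off).sum(axis=1)
    f_cur = objective(omega0, omega1, S)
    for _ in range(max_iter):
        W = np.linalg.inv(unpack(omega0, omega1, p))
        grad = np.diag(S) - np.diag(W)           # D_{Omega0} f
        if np.max(np.abs(grad)) < tol:
            break
        step, t = np.linalg.solve(W * W, grad), 1.0  # Hessian is W o W
        for _halving in range(60):               # Armijo backtracking
            cand = omega0 - t * step
            f_new = objective(cand, omega1, S)
            if f_new <= f_cur - 0.25 * t * (grad @ step):
                break
            t *= 0.5
        omega0, f_cur = cand, f_new
    return omega0

def d_omega0(omega0, omega1, S):
    # Implicit function theorem (18): -[W o W]^{-1} times the mixed derivative.
    p = S.shape[0]
    W = np.linalg.inv(unpack(omega0, omega1, p))
    a, b = np.triu_indices(p, k=1)
    cross = 2.0 * W[:, a] * W[:, b]              # entry (k, (i,j)) = 2 W_ki W_kj
    return -np.linalg.solve(W * W, cross)

rng = np.random.default_rng(1)
p, n = 4, 50
X = rng.normal(size=(n, p))
S = X.T @ X / n
omega1 = 0.1 * rng.normal(size=p * (p - 1) // 2)
omega0 = profile_omega0(omega1, S)
W = np.linalg.inv(unpack(omega0, omega1, p))
print(np.allclose(np.diag(W), np.diag(S)))       # stationarity condition holds
J = d_omega0(omega0, omega1, S)
h = 1e-4
e = np.zeros_like(omega1)
e[0] = h
fd = (profile_omega0(omega1 + e, S) - profile_omega0(omega1 - e, S)) / (2 * h)
print(np.allclose(J[:, 0], fd, atol=1e-6))
```

The last check compares one column of (18) with a central finite difference of the mapping Ω0(Ω1).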

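Now the first derivative of the objective function f with respect to Ω1 is

$$Df(\Omega_1) = D_{\Omega_1} f(\Omega_0, \Omega_1) + D_{\Omega_0} f(\Omega_0, \Omega_1)\, D\Omega_0(\Omega_1),$$

but the second term vanishes because DΩ0 f(Ω0, Ω1) = 0. Hence

$$Df(\Omega_1) = D_{\Omega_1} f(\Omega_0, \Omega_1) = \Big[\, 2\big(\hat{\Sigma}_{ij} - (\Omega^{-1})_{ij}\big) \Big]_{i<j}.$$

In words, given the current Ω1, calculate Ω−1 at the optimal Ω0. The off-diagonal entries of 2(Σ̂ − Ω−1) then form the gradient of f in terms of Ω1. For the Hessian,

$$D^2 f(\Omega_1) = D^2_{\Omega_1} f(\Omega_0, \Omega_1) + D_{\Omega_0} D_{\Omega_1} f(\Omega_0, \Omega_1)\, D\Omega_0(\Omega_1). \tag{19}$$

Now substituting the expression (18) for DΩ0(Ω1) gives

$$D^2 f(\Omega_1) = D^2_{\Omega_1} f - D_{\Omega_0} D_{\Omega_1} f \left[D^2_{\Omega_0} f\right]^{-1} D_{\Omega_1} D_{\Omega_0} f,$$

where all derivatives are evaluated at (Ω0(Ω1), Ω1).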
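The gradient and Hessian of f can be assembled in the same hypothetical setting. To stay self-contained, the sketch repeats the helpers it needs. It then forms two quantities:

- the gradient, from the off-diagonal entries of 2(Σ̂ − Ω−1);
- the Hessian, from (19) with (18) substituted, which is a Schur complement.

The entrywise second derivatives come from d²(−log det Ω)[E1, E2] = tr(Ω−1E1Ω−1E2). They are our own derivation, checked by finite differences, not formulas quoted from the text.

```python
import numpy as np

def unpack(omega0, omega1, p):
    # Symmetric Omega from its diagonal and row-wise upper-triangular entries.
    Omega = np.zeros((p, p))
    Omega[np.triu_indices(p, k=1)] = omega1
    Omega = Omega + Omega.T
    Omega[np.diag_indices(p)] = omega0
    return Omega

def objective(omega0, omega1, S):
    # -log det Omega + tr(S Omega); +inf outside the positive definite cone.
    Omega = unpack(omega0, omega1, S.shape[0])
    try:
        L = np.linalg.cholesky(Omega)
    except np.linalg.LinAlgError:
        return np.inf
    return -2.0 * np.log(np.diag(L)).sum() + np.trace(S @ Omega)

def profile_omega0(omega1, S, tol=1e-12, max_iter=100):
    # Damped Newton solve of diag(Omega^{-1}) = diag(S) for fixed Omega1.
    p = S.shape[0]
    omega0 = 1.0 / np.diag(S) + np.abs(unpack(np.zeros(p), omega1, p)).sum(axis=1)
    f_cur = objective(omega0, omega1, S)
    for _ in range(max_iter):
        W = np.linalg.inv(unpack(omega0, omega1, p))
        grad = np.diag(S) - np.diag(W)
        if np.max(np.abs(grad)) < tol:
            break
        step, t = np.linalg.solve(W * W, grad), 1.0
        for _halving in range(60):
            cand = omega0 - t * step
            f_new = objective(cand, omega1, S)
            if f_new <= f_cur - 0.25 * t * (grad @ step):
                break
            t *= 0.5
        omega0, f_cur = cand, f_new
    return omega0

def profile_derivatives(omega1, S):
    # Gradient and Hessian of f(Omega1) = -l(Omega0(Omega1), Omega1).
    p = S.shape[0]
    omega0 = profile_omega0(omega1, S)
    W = np.linalg.inv(unpack(omega0, omega1, p))
    a, b = np.triu_indices(p, k=1)                  # pairs (i, j) with i < j
    grad = 2.0 * (S[a, b] - W[a, b])                # off-diagonal of 2(S - W)
    H00 = W * W                                     # second derivative in Omega0
    C = 2.0 * W[:, a] * W[:, b]                     # mixed, shape (p, p(p-1)/2)
    H11 = 2.0 * (W[np.ix_(b, a)] * W[np.ix_(a, b)]
                 + W[np.ix_(b, b)] * W[np.ix_(a, a)])  # second derivative in Omega1
    hess = H11 - C.T @ np.linalg.solve(H00, C)      # (19) with (18) substituted
    return omega0, grad, hess

def f(w1, S):
    # Profile objective evaluated at the optimal Omega0.
    return objective(profile_omega0(w1, S), w1, S)

rng = np.random.default_rng(2)
p, n = 4, 60
X = rng.normal(size=(n, p))
S = X.T @ X / n
omega1 = 0.1 * rng.normal(size=p * (p - 1) // 2)
omega0, g, H = profile_derivatives(omega1, S)
h, E = 1e-4, np.eye(len(omega1))
g_fd = np.array([(f(omega1 + h * e, S) - f(omega1 - h * e, S)) / (2 * h) for e in E])
H_fd = np.column_stack([(profile_derivatives(omega1 + h * e, S)[1]
                         - profile_derivatives(omega1 - h * e, S)[1]) / (2 * h) for e in E])
print(np.allclose(g, g_fd, atol=1e-6), np.allclose(H, H_fd, atol=1e-5))
```

The profile objective is the partial minimum of a strictly convex function. Its Hessian, a Schur complement of a positive definite matrix, should therefore come out positive definite.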

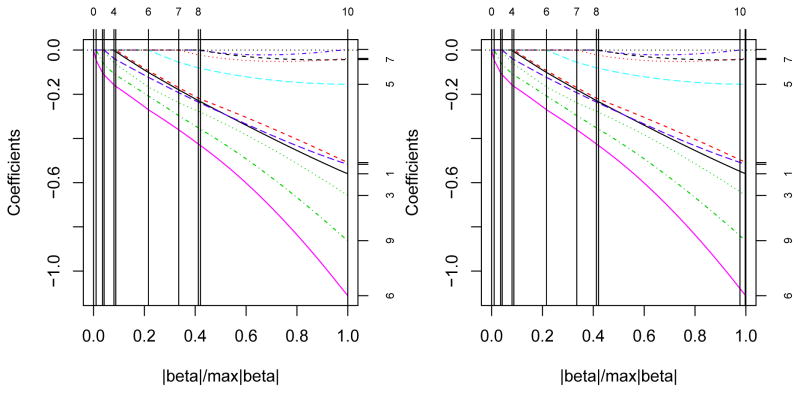

We illustrate our algorithm with two examples. The first data set contains the scores of 88 students on five math courses: mechanics, vector, algebra, analysis and statistics. See Table 1.2.1 of Mardia et al. (1979) for more details. Figure 4 displays the ConvexLAR and ConvexLASSO solution paths. The three most important edges identified by lasso regularization are analysis-algebra, statistics-algebra, and algebra-vector. The ConvexLAR and ConvexLASSO solution paths coincide in this example. The running times are 0.28 and 0.37 seconds for the ConvexLAR and ConvexLASSO solution paths, respectively.

Figure 4.

ConvexLAR and ConvexLASSO solution paths for the graphical model (math score) example.

Our second example uses a simulated data set with p = 10 and n = 200 and illustrates a case where ConvexLAR and ConvexLASSO yield different paths. The true precision matrix Ω = (ωij) has entries ωii = 1, ωi,i−1 = ωi,i+1 = 0.5, and ωij = 0 for |i − j| > 1. There are 45 off-diagonal free parameters. Figure 5 displays the ConvexLAR and ConvexLASSO solution paths, which differ visibly. The running times are 1.63 and 2.62 seconds for the ConvexLAR and ConvexLASSO solution paths, respectively.
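The simulated design can be reproduced in outline as follows. This sketch rests on three assumptions of ours:

- the random seed and NumPy's multivariate normal sampler are our choices, so the draws will not match the data behind Figure 5;
- Σ̂ is taken as X⊤X/n because the mean is known to be zero;
- the function name is hypothetical.

```python
import numpy as np

def tridiagonal_precision(p, off=0.5):
    # omega_ii = 1, omega_{i,i-1} = omega_{i,i+1} = off, zero elsewhere.
    return np.eye(p) + off * (np.eye(p, k=1) + np.eye(p, k=-1))

p, n = 10, 200
Omega = tridiagonal_precision(p)
Sigma = np.linalg.inv(Omega)
Sigma = (Sigma + Sigma.T) / 2                  # symmetrize against round-off
rng = np.random.default_rng(0)                 # arbitrary seed (assumption)
X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)
S = X.T @ X / n                                # Sigma-hat with known zero mean

n_free = p * (p - 1) // 2                      # off-diagonal free parameters
n_true_edges = np.count_nonzero(np.triu(Omega, k=1))
print(n_free, n_true_edges)                    # 45 9
print(np.linalg.eigvalsh(Omega).min())         # approximately 0.0405
```

The smallest eigenvalue of this tridiagonal Ω is 1 + cos(10π/11) = 1 − cos(π/11) ≈ 0.0405. So Ω is positive definite but close to singular, and Σ = Ω−1 is fairly ill-conditioned.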

Figure 5.

ConvexLAR and ConvexLASSO solution paths for the graphical model example with the simulated data.

5 Discussion

Variable selection has become an essential tool for modern data analysis. So far, penalization methods such as the lasso have been the dominant regularization technique, and they have been extended to an ever wider range of applications. In contrast, the original LAR (Efron et al., 2004) has received far fewer generalizations despite its popularity. In this expository article, we show that the simple geometric idea in LAR extends naturally to settings such as convex losses, group structures in predictors, and data adaptive regularization. The classical score function plays an essential role throughout the development. The original "least angle" idea translates into equal contributions of the active predictors to the score function. In our understanding, this is the fundamental idea in LAR, and it underpins the various extensions presented in this article.

This illustrative article is meant to whet readers' appetites, not to satiate them. Much is left undone. For instance, LAR in principle operates on the estimating equation rather than on a loss. It therefore applies naturally to many statistical methods that lack a natural loss function, such as generalized estimating equations (GEE), as the panel count data example in Section 4 suggests. In this article we focus on the algorithmic development of LAR and forgo the theoretical treatment. The asymptotic properties of estimates regularized by penalty methods have been studied intensively in recent years. A similar study for ConvexLAR is worth pursuing. Results that shed light on the difference between the two regularization approaches would be especially valuable.

Supplementary Material

Acknowledgments

The authors thank the editor, the associate editor, and two referees for their helpful suggestions that led to significant improvement of the article. The work is partially supported by grants NSF DMS-1055210 (Wu), NSF DMS-1310319 (Zhou), NIH/NCI R01 CA-149569 (Wu and Xiao), and NIH HG006139 (Zhou).

Appendix

Proof of Theorem 1

The fundamental LAR identity (1) for the active predictors dictates the vector equation

To solve for β𝒜 in terms of t, we apply the implicit function theorem (Lange, 2004). This requires calculating the differential of k with respect to the dependent variables β𝒜 and the independent variable t

Given the non-singularity of H𝒜(β, t), the implicit function theorem applies and shows the continuity and differentiability of β𝒜(t) at t. Furthermore, it supplies the derivative (2).

Derivation of GroupConvexLAR directions (8), (9) and (10)

We use 𝒢0 to denote the set of active groups whose coefficients equal 0. Let 𝒢1 = 𝒢 − 𝒢0, where 𝒢 denotes the set of all active groups. We slightly abuse notation and let 𝒢0, 𝒢1 and 𝒢 denote both the sets of groups and the sets of all predictors belonging to the corresponding groups. Obviously, 𝒢0, 𝒢1 and 𝒢 depend on the time index t.

The derivation of the updating direction hinges on the LAR identity (6), which we restate here for convenience

| (20) |

- When 𝒢0 is empty, differentiating the vector equation (20) with respect to t via the chain rule gives

| (21) |

where . Combining all |𝒢| vector equations and rearranging yields the LAR updating direction

| (22) |

where D is the block diagonal matrix with blocks

- When 𝒢0 is not empty, we assume that 𝒢0 contains a groups, g1, …, ga, and 𝒢1 contains b groups, ga+1, …, ga+b. By rearranging the order of groups, we have , where and . For any group g ∈ 𝒢1, i.e., ‖βg‖2 ≠ 0, the vector equation (21) still holds, which gives

| (23) |

Unfortunately, it does not hold for any g ∈ 𝒢0 because of the singularity ‖βg‖2 = 0. First we show that the updating direction of such groups is proportional to their gradient sub-vectors. By the LAR identity (20) and the fact βg(t) = 0pg, for all δt > 0. Taking the limit δt ↓ 0 yields

| (24) |

where kg are constants to be determined.

Equating the norms of the two summand vectors in the LAR identity (20) shows . Differentiating both sides of this identity with respect to t via the chain rule gives

| (25) |

for any g ∈ 𝒢0. Now substituting (24) into the equations (23) and (25), we obtain

| (26) |

where A ∈ ℝ^{a×a} has entries aij = dgi ∇gi f(β)⊤ Hgi,gj(β) ∇gj f(β), 1 ≤ i, j ≤ a,

Next we show that, when H𝒜(β(t)) = H𝒢,𝒢(β(t)) is positive definite, the linear system (26) is nonsingular and thus admits a unique solution. Rewrite

where

Combining the facts (i) H𝒜 is positive definite, (ii) D̃ is positive semidefinite, and (iii) for all g ∈ 𝒢0, E(H + D̃)E⊤ is positive definite. Thus

| (27) |

Equation (27) coupled with (24) yields the LAR updating direction for all active predictors.
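The positive definiteness step in the argument above rests on an elementary fact. We spell it out under an assumption that is our reading of the argument: E has full row rank, and H denotes the positive definite matrix of fact (i).

```latex
\text{For any } x \neq 0, \text{ we have } E^\top x \neq 0 \text{ (full row rank), hence}
\quad
x^\top E (H + \tilde{D}) E^\top x
= \underbrace{(E^\top x)^\top H (E^\top x)}_{> 0 \text{ since } H \succ 0}
+ \underbrace{(E^\top x)^\top \tilde{D} (E^\top x)}_{\geq 0 \text{ since } \tilde{D} \succeq 0}
> 0 .
```

Positive definiteness of E(H + D̃)E⊤ is exactly what makes the inverse in (27) well defined.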

Footnotes

The MATLAB code used in Section 4 is contained in the zip file matlabcodes.zip, available online. The file main_demo.m demonstrates all examples in Section 4.

Contributor Information

Wei Xiao, Email: Ywxiao@ncsu.edu.

Yichao Wu, Email: wu@stat.ncsu.edu.

Hua Zhou, Email: hzhou3@ncsu.edu.

References

- Donoho DL, Johnstone IM. Ideal spatial adaptation by wavelet shrinkage. Biometrika. 1994;81:425–455.

- Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression. Ann Statist. 2004;32(2):407–499. With discussion, and a rejoinder by the authors.

- Frank A, Asuncion A. UCI Machine Learning Repository. 2010.

- Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. 2nd ed. Springer Series in Statistics. Springer; New York: 2009.

- Lange K. Optimization. Springer-Verlag; New York: 2004. Springer Texts in Statistics.

- Mardia KV, Kent JT, Bibby JM. Multivariate Analysis. Academic Press [Harcourt Brace Jovanovich Publishers]; London: 1979. Probability and Mathematical Statistics: A Series of Monographs and Textbooks.

- Meier L, van de Geer S, Bühlmann P. The group Lasso for logistic regression. J R Stat Soc Ser B Stat Methodol. 2008;70(1):53–71.

- Ortega JM, Rheinboldt WC. Iterative Solution of Nonlinear Equations in Several Variables, volume 30 of Classics in Applied Mathematics. Society for Industrial and Applied Mathematics (SIAM); Philadelphia, PA: 2000. Reprint of the 1970 original.

- Park MY, Hastie T. Regularization path algorithms for detecting gene interactions. Technical report. Stanford University; 2006.

- Sun J, Wei LJ. Regression analysis of panel count data with covariate-dependent observation and censoring times. Journal of the Royal Statistical Society Series B. 2000;62(2):293–302.

- Tibshirani R. Regression shrinkage and selection via the lasso. J Roy Statist Soc Ser B. 1996;58(1):267–288.

- Tong X, He X, Sun L, Sun J. Variable selection for panel count data via non-concave penalized estimating function. Scandinavian Journal of Statistics. 2009a;36(4):620–635.

- Tong X, Zhu L, Sun J. Variable selection for recurrent event data via nonconcave penalized estimating function. Lifetime Data Analysis. 2009b;15(2):197–215. doi: 10.1007/s10985-008-9104-2.

- Wu Y. An ordinary differential equation-based solution path algorithm. Journal of Nonparametric Statistics. 2011;23:185–199. doi: 10.1080/10485252.2010.490584.

- Wu Y. Elastic net for Cox's proportional hazards model with a solution path algorithm. Statistica Sinica. 2012;22:271–294. doi: 10.5705/ss.2010.107.

- Yuan M, Lin Y. Model selection and estimation in regression with grouped variables. J R Stat Soc Ser B Stat Methodol. 2006;68(1):49–67.

- Zou H. The adaptive lasso and its oracle properties. Journal of the American Statistical Association. 2006;101(476):1418–1429.
