Abstract

While all stages of a community-partnered participatory research (CPPR) initiative involve evaluation, the main focus is the evaluation of the action plans, which often involves the most rigorous evaluation activities of the project, from both a community-engagement and scientific perspective. This article reviews evaluation principles for a community-based project, and describes the goals and functions of the Council’s research and evaluation committee. It outlines 10 steps to partnered evaluation, and concludes by emphasizing the importance of asset-based evaluation that builds capacity for the community and the partnership.

Keywords: Community-Partnered Participatory Research, Community Engagement, Community-Based Research, Action Research

Introduction

How can we show that a project is going as planned? Or that what seems to be happening is what we wanted? The key lies in evaluation. Although evaluation might seem like a side dish to the main course of the intervention, it is an essential component of every Community-Partnered Participatory Research initiative. Evaluation shows whether a project is working and whether each of its objectives is being met. It is an ongoing, repetitive process in every phase of the project. Evaluation plays a special role in documenting the value of the main action plans of the “Valley” stage and, from a policy perspective, is often the central task of the initiative (Figure 6.1). The outcomes of evaluation processes are considered evidence of the project’s effectiveness (Figure 6.2).

Fig 6.1. Valley

Fig 6.2. Evaluate

There are different types of evaluations. Formative evaluations are designed to show the progress to date, allowing project leaders to shape the continued direction and implementation of the project.

Research evaluations typically focus on outcomes. They are usually conducted by people outside the project to avoid bias and reflect an objective attempt to measure whether the project or intervention worked. The term research means that scientific standards were used to judge whether events or things were connected in valid ways (for example, whether program actions contributed to outcomes).

In CPPR initiatives, formative and research evaluations are designed to support each other and are equally important. CPPR evaluations should 1) show whether the intervention was effective, and 2) provide insight on the process of the intervention itself. If the intervention was ineffective, the evaluation should show how the implementation and action plans can be improved.

Community and Academic Roles in Evaluation

In all phases of evaluation, community and academic members should participate equally. Community participation will influence the way the evaluation is designed, the questions it asks, and the measures it uses to gauge success. Community participation is also more likely to reflect the values, perceptions, and experiences of the community and therefore to be more relevant to the partnership’s goals. Academic participation helps to maintain scientific rigor during evaluation, which is critical for reaching valid results and helping to build the community’s capacity to provide interventions and services in the future. A CPPR initiative respects and honors the expertise of both community and academic participants.

The remainder of this chapter discusses evaluation principles, the Evaluation Committee, formative evaluation (which is only briefly mentioned here, since it is discussed in more detail in Chapter 3), and outcomes evaluation.

Evaluation Principles

CPPR evaluation activities should:

Draw on community strengths and respect community culture and practices

Foster collaborative thinking and build sustainable, authentic partnerships

Support learning among both the partnership and the community

Ensure equal participation by all partners in decision-making and leadership

Implement the evaluation based on continuous input from all participants

Analyze data collaboratively

Support co-ownership of evaluation activities and findings among all members of the group, along with joint participation in dissemination and publication (see “Celebrate Victory” in Chapter 7)

Build trust between the community group and other entities, such as governmental, political and academic institutions

Stay true to what the community defines as the issue and to the project Vision and partnership principles.

These general principles can be used to support five overall standards for evaluation, listed below along with questions that can be used for group reflection concerning the quality of the evaluation.

Standards for Effective Evaluation

The Evaluation Is Useful. The evaluation should answer the community’s questions and build on a body of community and scientific knowledge. From a CPPR perspective, it should also allow for contributions from community participation. An evaluation is also considered useful if it tells the leadership what needs to be done next (eg, do we need to improve our message before moving on?). How does it allow for contribution from community participation? Does it give us information for what to do next from a scientific perspective?

The Evaluation Is Outcome-Oriented. Outcome-oriented means that the evaluation has concrete outcomes that are important to the stakeholders and community at-large. It is also important to assess whether the project is likely to achieve those outcomes. Does the evaluation identify outcomes that will be important to stakeholders, and is the project likely to achieve those outcomes?

The Evaluation Is Realistic and Feasible. The evaluation must be practical, politically viable and cost-effective. A good question to ask is, “Can we do it ourselves?” If not, other suitable ways need to be explored. The goals of the evaluation also need to make common sense. Do the evaluation goals and conceptual model make common sense?

The Evaluation Is Culturally Sensitive and Ethically and Scientifically Sound. In CPPR, the evaluation needs to be consistent with the group’s vision and tap into the diversity of all the groups involved. Consultants might need to be included to ensure both community and scientific validity. Design decisions should be arrived at through collaboration. To ensure ethical and scientific soundness, the evaluation needs to follow standard procedures of research, such as human subjects review and scientific peer review.

The Evaluation Is Accurate and Reflective. An important consideration when planning evaluation activities is who provides the information from the evaluation and who summarizes the information. People are likely to bring their own experiences to data analysis, especially when working with qualitative data—different people will find different parts of the data interesting or significant. Therefore, it is important to conduct evaluation jointly, and to have final approval by all parties. To facilitate this, summary data should be de-identified to protect privacy and then made accessible to project members as appropriate.

To facilitate the evaluation according to these principles and standards, larger CPPR initiatives have a separate Evaluation Committee. This Committee provides oversight and support to the Steering Council and working groups as they develop action plans and evaluation strategies. Because of the committee’s central importance to guiding the evaluation, we first describe its purposes and functions.

The Evaluation Committee of the Steering Council

The Evaluation Committee reports to and is supported by the Steering Council. In addition to overseeing the project’s outcome evaluation, evaluation leadership ensures that the core values of CPPR are being met and the initiative’s actions are grounded in community and scientific values. This includes ensuring that the relevant stakeholder perspectives, especially those of grassroots members, are reflected equally. The leadership must also: be mindful of community participation, leadership and transparency of technical tasks; develop partnership strengths of the academic members and their capacity to co-lead with community members; build the overall capacity of the group in evaluation activities; find scientific solutions that fit project initiatives and community capacities, strengths and interests; and advance a field of partnered evaluation and science. The evaluation leadership carries out all these functions while keeping in mind the larger goal of community benefit and capacity building. These tasks require flexibility, including the ability to reprioritize traditional academic goals.

Over time the Evaluation Committee may serve strategic and advisory roles, supporting working groups in evaluating their own action plans or helping community-academic teams to develop proposals to fund evaluation plans.

The Evaluation Committee can also initiate broader strategies to build evaluation capacity. For example, we developed a “CPPR methods book club.” The book club, led by a biostatistician and co-led by a grassroots community member, reviewed published CPPR evaluation methods. That group met for a year, resulting in improved relationships and trust and a clearer understanding of evaluation methods.

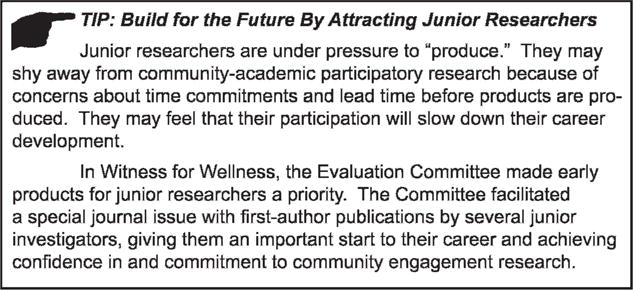

The Evaluation Committee should support logistical issues around the data, such as where and how it is kept, and handle submissions to Institutional Review Boards (explained more fully under “Outcomes Evaluation” below). Other important functions of the Evaluation Committee are to identify opportunities to develop more formal research initiatives, write grant proposals, and support research presentations (which are discussed more fully in Chapter 7). In our partnered projects, the Committee has helped attract and retain promising junior investigators to participate in CPPR projects (Figure 6.3).

Fig 6.3. Tip

An important role for community members of the Evaluation Committee is helping explain scientific issues to community members. For example, community members can explain to others why a control group was designed a particular way. Because community members can provide such explanations based on their active participation in the decisions, they can help build trust with the community at-large.

Membership Recruitment and Support

Membership of the Evaluation Committee should reflect the diversity of the community as well as the diversity of the academic partners. In forming our own Evaluation Committee for the Witness for Wellness project, we sought committed members who understood or were willing to learn about the local histories of community research and to explore options for how to conduct research together. This racially and ethnically diverse group included community and academic members with widely varying experiences and a mix of junior and senior people. In some cases, resources to sustain community participation were secured through our “Community Scholars” program.

Our initial Evaluation Committee for Witness for Wellness included a dozen individuals, including academics from various fields, two project co-leaders, and two ad hoc community members. At times, we invited outside evaluators to provide an independent view of our progress and success.

Having members in both the Council and Evaluation Committee means that the principles of Community-Partnered Participatory Research are well known, so the evaluation can be grounded within those principles.

Structure

The Evaluation Committee typically meets monthly, or more frequently in the first several months, to set up the evaluation structure. During periods when subcommittees or working groups are implementing specific evaluation plans, the Evaluation Committee may meet monthly or quarterly to consult or review work in progress. Additional meetings can be held to support evaluations of particular events or to develop approaches to build community consensus for proposed action plans.

Formative Evaluation

Formative evaluation plays an important role in the overall evaluation of an initiative and of action plans at the Valley stage, particularly as it contributes to the formative goals (shaping the initiative). Information on how things are going helps to ensure that both the partnership and the initiative are pursuing agreed-upon goals and sticking to principles. It allows leadership to gauge the effectiveness of the partnership to allow any needed course corrections to the main action plans. For example, leadership in the Witness for Wellness initiative became aware that an imbalance in knowledge of basic principles of research and evaluation between academic and community members was leading to heavy reliance on academics for decision-making, particularly decisions regarding evaluation. The partnership corrected the imbalance and encouraged shared decision-making by initiating the “CPPR methods book club” discussed above.

Because there is so much overlap between evaluation and intervention activities, the methods of partnership evaluation and tools for conducting them are covered in greater detail in Chapter 3.

Outcomes Evaluation

The heart of the evaluation at the Valley stage is an outcomes evaluation of the main initiative as a whole or for specific working groups’ products. A good evaluation (one that yields valid data that address the goals of the project and are meaningful to different stakeholders) is hard work (that’s why it’s part of the Valley!), but also can be very rewarding. The job of developing an outcomes evaluation consists of 10 main steps.

Step 1: Clarify the Evaluation’s Goals and Main Questions

The evaluation of a CPPR initiative has the goals of determining: 1) whether the intervention reached the intended population; 2) whether the initiative delivered the intervention or processes that it intended to deliver; 3) what the outcomes of the initiative are for different potential stakeholders—both intended and unintended outcomes; while 4) providing feedback to the initiative and to the community along the way. Like all components of the project, the evaluation should be partnered. Sometimes outside evaluators, or persons from outside the team from the community or academic partners, can be brought in to provide a more independent view.

Evaluation questions clarify what the project leaders and community want to know about the effect of the initiative or action plan within the community. Evaluation questions should be stated as clearly as possible in language that all participants, community and academics, understand and find relevant.

Evaluation questions should be useful in that they clarify the community’s questions about the program; outcome-oriented in that they focus on measurable outcomes; realistic in that they can be answered given the project goals and resources; and culturally relevant in that they reflect the norms, values and strengths of the community and the partnership.

Examples of evaluation questions include:

How did this program (such as a new training program for case managers on recognizing a health problem in their clients) affect the knowledge, skills and actions of those participating (case workers, clients and family members)?

Did the new training program increase the ability of case managers to recognize the health problem and did this, in turn, lead to better health outcomes for their clients?

Did the program have any unexpected effects for participants, such as diverting their attention from other important tasks?

Step 2: Define the Intended Population and the Evaluation Sample

The Council and working group leaders should help assure that there is a clear intended population for actions or interventions in the project. Is it the community at-large? A special population within the community? And in practice, what population will the project actually try to reach at this stage? Given the available resources, what priorities will be set? These questions can allow a more precise definition of the intended population for this phase of the initiative, so that you can track how that population is reached.

Given the definition of the intended population set by the Council (overall) and working groups (in their specific action plans), it then becomes important to develop measures of the characteristics of people reached by the project, such as their age, sex, or ethnicity, to document whether the project has reached the intended population.

One common way of tracking who actually is reached is through meeting and event attendance records and sign-in sheets. For example, in the Talking Wellness pilot of Witness for Wellness, we wanted to know whether persons of African descent, whether living in the United States or attending from overseas, were participating in the project events sponsored at the Pan African Film Festival. Thus, in our surveys we obtained information allowing us to identify country of origin and of residence, and self-identified ethnic/cultural status. Sometimes, it may be possible to compare the populations reached with a broader population of interest. For example, it may be possible to use census data from an area to describe the characteristics of persons living in the community, in relation to those participating in project events.

Here are two definitions that might be useful in analyzing your evaluation sample:

A representative sample means that the group being evaluated is similar to the full population in a number of ways (like age, sex and ethnic distribution).

The term systematic refers to a rigorous process for assuring a particular property of the sample, such as a balanced or equal number of persons in different age groups or sex groups in a project. Such balance helps in analyzing how different types of people might respond.

It is often a good idea to have a statistician from the Evaluation Committee consult with the working group to assure that both the intended population, and the strategy to sample participants to represent that population, are valid and documented. Similarly, community members from outside the project can help assure that the intended sample for the intervention and measures of it are valid within the community.
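The comparison between the people reached and the broader community can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's actual analysis: the sign-in records, the age-group categories, the census shares, and the helper name `share_by_group` are all hypothetical and invented for the example.

```python
from collections import Counter

def share_by_group(records, key):
    """Return the proportion of records that fall in each category of `key`."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

# Hypothetical sign-in sheet entries (not real project data).
sign_ins = [
    {"age_group": "18-34"},
    {"age_group": "18-34"},
    {"age_group": "35-64"},
    {"age_group": "65+"},
]

# Hypothetical census shares for the same community.
census = {"18-34": 0.30, "35-64": 0.50, "65+": 0.20}

sample = share_by_group(sign_ins, "age_group")

# Positive gap: the group is over-represented at events; negative: under-represented.
gaps = {g: sample.get(g, 0.0) - census[g] for g in census}
for group, gap in gaps.items():
    print(f"{group}: sample {sample.get(group, 0.0):.0%}, "
          f"census {census[group]:.0%}, gap {gap:+.0%}")
```

Large gaps would suggest that the sample is not representative on that characteristic. Deciding how large a gap matters is a judgment for the statistician and community members to make together.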

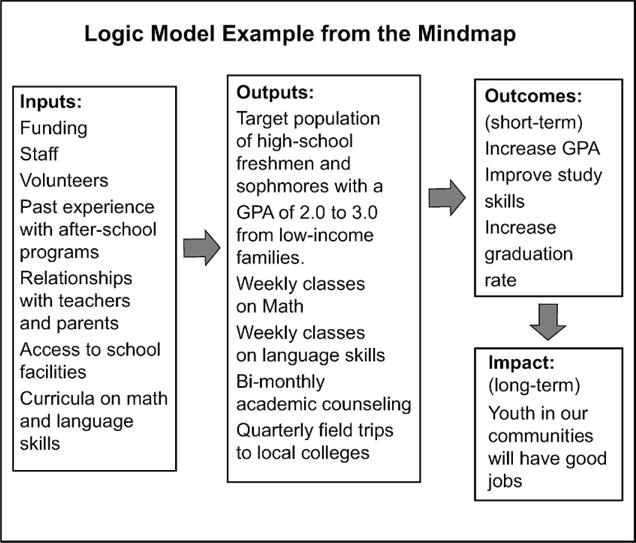

Step 3: Develop a Logic Model

As noted earlier, a logic model is a graphic resembling a flow chart that connects activities to intended outcomes and depicts the pathways by which the initiative is expected to lead to outcomes. There are two kinds of outcomes: intermediate (intermediate steps toward your overall goal) and end (your overall project goal).

To get started, the Evaluation Committee and working group team members should review the Vision and then think about:

What changes or outcomes for the community do we think will result from this project? How will those changes or outcomes occur?

What are we trying to achieve as outcomes? Why are those outcomes important and to whom? (which stakeholders?)

What other kinds of changes might occur as a result of our efforts?

What else might be going on at the same time that might influence our results?

These questions, like the Vision exercises, can be posed during a brainstorming session for the partnership. The ideas should be summarized on a poster or white board. Then the team should try to prioritize outcomes in terms of their overall importance to the project. Interim outcomes should also be specified. For example, if the overall goal is to reduce obesity, an interim outcome might be increasing the availability of fresh fruits and vegetables in the community’s grocery stores. It can also be helpful to get a sense of how likely the team feels that different outcomes will occur as a result of the intervention—some kinds of community health problems may be difficult to change in a short period, or with limited resources.

For example, in the Talking Wellness pilot of Witness for Wellness, community members hoped to use community-generated arts events to reduce the social stigma of depression in the community. But in early discussions, community and academic partners recognized that long-held attitudes may be difficult to change, so the primary outcome shifted from wanting to reduce stigma to one of the intermediate outcomes, to foster the perception that depression is a community concern.

The next step is to link action plans or specific activities of interventions to the prioritized outcomes. Outlining the different outcomes on a white board and drawing arrows (signifying sequence or order of influence) between the project activities and the outcomes creates a logic model.

For example, in the Talking Wellness group’s community arts events at the Pan African Film Festival in Los Angeles, a photography exhibit was used to engage the audience in what it feels like to experience depression. Also, different activities were mapped to different outcomes.
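For teams that want to keep the white-board diagram in a form that can be revised and shared, the arrows of a logic model can be recorded as a simple mapping. The sketch below is illustrative only: the node labels paraphrase the Talking Wellness example, the specific links between them are assumptions made for the example, and the function name `pathways` is hypothetical. It also assumes the model has no loops.

```python
# Each key points to the outcomes it is expected to influence (the arrows).
# The links below are assumed for illustration, not taken from the project.
logic_model = {
    "photography exhibit": ["audience engagement"],
    "audience engagement": ["depression seen as a community concern"],
    "depression seen as a community concern": ["reduced stigma"],
    "reduced stigma": [],  # end outcome: no outgoing arrows
}

def pathways(model, start):
    """List every chain of arrows from `start` to an end outcome."""
    next_steps = model.get(start, [])
    if not next_steps:
        return [[start]]
    paths = []
    for step in next_steps:
        for tail in pathways(model, step):
            paths.append([start] + tail)
    return paths

for path in pathways(logic_model, "photography exhibit"):
    print(" -> ".join(path))
```

Listing the pathways from each activity makes it easier to see which intermediate outcomes an activity depends on, and which outcomes have no activity leading to them.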

At this stage, it can be helpful to review formal theory and conceptual models to suggest other kinds of concepts that might be useful to track as intermediate outcomes. These steps can make the initiative more appealing to funders, who are interested in the scientific and theoretical basis of a project. This step also should be accomplished through a partnered approach, taking the time to share the concepts meaningfully with the group, translating theory and concepts as needed into a common language that everyone in the group can understand, and familiarizing academic members with terms and concepts of meaning in the community. These activities can also build trust within the partnership and in the evaluation activities, and reinforce the principle of equal partnership.

One example of a logic model was developed by community member Eric C. Wat for the Mindmap, a project of the community-based agency Special Services for Groups. Mr. Wat’s logic model (Figure 6.4) illustrates how the Mindmap project aims to arrive at its goal of improving employment outcomes for youth in South Los Angeles.

Fig 6.4. Mindmap logic model

Step 4: Identify the Expected Outcomes for Each Stakeholder Group

Step 3 should also lead to a list of intended outcomes, or an outcomes map, for different stakeholders in the partnership and in the community. Examples of various outcomes include improvements in community attitudes, participant satisfaction, increased access to information or resources, publications, development of a local plan, and sustained participation. Keep in mind that as you design the action steps of the intervention, you will have expected outcomes for those steps. Evaluation is a way to state these outcomes explicitly. Below is a list of possible outcomes for different levels of engaged stakeholders.

Individual: Change in knowledge, attitudes, behavior, skills, self-efficacy (a sense of being capable of reaching a goal).

Groups: Change in interpersonal relationships and practices; feelings of integration and acceptance.

Organizations: Change in way business is done, resource allocation, policies, involvement of team members in leadership roles.

Systems: Change in delivery of services and the creation of new resources to meet competing demands.

Communities: Changes in community action, political climate, integration of groups, redirection of power, social norms, and community identity.

Policies: Changes in laws and regulations; development of initiatives to better serve the community.

Sometimes there are differences in what community members and academic researchers view as important indicators of success for a project. Questions such as “Were the goal(s) met?”, “What was learned?”, “How can we improve or sustain efforts?” should be addressed from both the community and academic perspective. The answers to such questions can help identify the priorities of different members of the partnership for project outcomes.

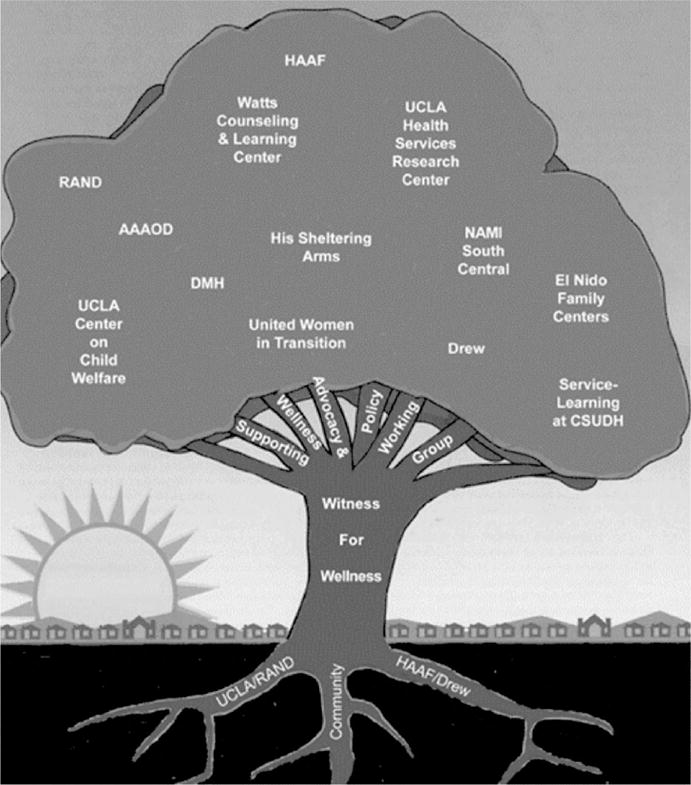

It may help to use an image to visualize the full range of stakeholders for a project or intervention, and their “stake” in the project or issue. For example, Supporting Wellness (one of the Witness for Wellness working groups) used the image of a tree to represent policy leaders (leaves taking in sunlight and oxygen to support growth and direction), community agencies (branches and trunk to serve as a structure and support the initiative), and grassroots members (roots, grass providing nutrients and being fundamental to the growth of the initiative). Visual images can help to demystify concepts and make research and evaluation more community and partnership-friendly. (Figure 6.5)

Fig 6.5. Supporting wellness tree

The final set of outcomes for each stakeholder should be prioritized. Not all outcomes that people may feel are important can or should be tracked for a given project. Rather, group leaders can help the work group develop priorities for the 2–3 most important outcomes, providing direction for what needs to be measured as indicators of success.
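The chapter does not prescribe a procedure for arriving at the short list. One hypothetical option is a simple tally of members' votes, sketched below. The outcome labels are drawn from the examples listed earlier in this step, while the votes themselves are invented for illustration.

```python
from collections import Counter

# Hypothetical votes: each entry is one member's choice of most important outcome.
votes = [
    "sustained participation", "community attitudes", "access to resources",
    "community attitudes", "sustained participation", "community attitudes",
    "publications", "access to resources", "sustained participation",
    "community attitudes",
]

tally = Counter(votes)

# Keep the three outcomes with the most votes as the working priorities.
priorities = [outcome for outcome, _ in tally.most_common(3)]
print(priorities)
```

A tally like this is only a starting point for discussion. The group may still choose to keep an outcome that received few votes if it matters greatly to a particular stakeholder.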

Step 5: Coordinate and Integrate the Expected Outcomes

It is important to have consistency between the Council’s view of outcomes for the project and each working group’s view of outcomes for their action plans.

For this reason, the working group chairs should be designated and participate in the Council’s activities prior to developing broad outcomes, and support from the Council should be available for working groups to review as they develop their own outcomes for their action plans.

Step 6: Design the Evaluation

An evaluation design specifies how the data or information obtained in a project are structured to allow the evaluation questions to be answered. Every design has its strengths and limitations, and there are many different evaluation designs. Developing designs typically involves a number of key decisions. Some of the factors to consider are briefly described below.

Comparison Group or Descriptive?

Comparisons are useful to help determine whether one action or intervention (such as a provider training program) makes a difference compared to an alternative approach (such as usual practice or no special training). There are different kinds of comparison group designs with different implications for resources and different demands on the partnership and community.

The classic “randomized clinical trial,” which is the gold standard for testing the effects of a new medical treatment, assigns participants to one condition or another. Randomized assignment to compared conditions is scientifically desirable, but implementing such designs requires a high degree of community trust in the partnership and in the scientific idea and process, so the opportunity must be carefully prepared.

There are other ways of assigning comparison groups or people, such as matching (choosing people or groups that are similar in their characteristics), or assigning on a voluntary basis (based on people’s preferences). These methods are “nonequivalent” comparison group designs. They are sometimes more feasible to implement in community projects, but raise other kinds of questions about whether the evaluation is valid (are the compared groups truly equivalent in factors other than exposure to the intervention?) and rely on careful control in the analysis for ways in which the groups may not be comparable or equivalent.

So in designing a partnered evaluation, it is important to weigh a more scientifically valid design for comparing groups, such as randomization, against feasibility, the level of prior community trust in the partnership, and the available resources. It may be important, for example, to start off with simpler designs that build trust in evaluation and in the partnership and to work toward more rigorous designs as the partnership matures.
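The difference between randomized and matched comparison groups can be made concrete with a short Python sketch. It is a simplified illustration, not a recommended protocol: the agency labels, participant records, and the function names `randomize` and `match_on` are hypothetical, and real matching would usually consider several characteristics at once.

```python
import random

def randomize(participants, seed=0):
    """Randomly split participants into intervention and comparison groups."""
    rng = random.Random(seed)  # fixed seed so the split can be reproduced
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def match_on(participants, candidates, key):
    """Pair each participant with the first unused candidate sharing `key`."""
    unused = list(candidates)
    pairs = []
    for person in participants:
        for other in unused:
            if other[key] == person[key]:
                pairs.append((person, other))
                unused.remove(other)
                break
    return pairs

# Randomization: chance alone decides which agencies receive the training.
agencies = ["A", "B", "C", "D", "E", "F"]  # hypothetical agencies
intervention, comparison = randomize(agencies, seed=42)

# Matching: people who were trained are paired with similar untrained people.
trained = [{"id": 1, "age_group": "18-34"}, {"id": 2, "age_group": "65+"}]
untrained = [
    {"id": 10, "age_group": "65+"},
    {"id": 11, "age_group": "18-34"},
    {"id": 12, "age_group": "18-34"},
]
pairs = match_on(trained, untrained, "age_group")
```

With randomization, the groups are expected to be similar on both measured and unmeasured characteristics. With matching, they are similar only on the characteristics chosen for the match, which is why the analysis must control for remaining differences.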

The alternative to a comparison group design is a rich description of the roll-out of an intervention in a population. A rich description records an event or thing in detail, providing a wealth of information beyond a definition or a category label. An evaluation built on rich description can lead to important insights into what the effects might be, what they mean to people, and how the intervention roll-out has unfolded.

How do projects know what kind of design might be best? The answer depends in part on the intended audience and what other work has already occurred in the community and in research fields. Descriptive studies or broad explorations are often good both when there are only a few other precedents or prior studies and when there are many other precedents or programs or studies. When there are few precedents, a descriptive study will help you figure out how something works first and make sure that it is acceptable to people. This is known as an exploratory or feasibility study. When there are many prior precedents, there is nothing to prove about cause and effect and the project can concentrate on other aspects, such as building sustainable capacity in the community.

Cross-sectional or Longitudinal?

Other important aspects of design include whether everything is measured at one time and lumped into one analysis (a cross-sectional study) or whether the data are collected at different points in time, so that changes can be measured over time (a longitudinal study).

Generally speaking, longitudinal designs are better than cross-sectional designs for drawing conclusions about cause and effect. But a longitudinal design also requires that data be collected at two or more time points, which generally costs money and takes more time. So one strategy in developing a community-academic partnership may be to start with something cross-sectional and descriptive, and point later to a more rigorous, longitudinal, comparison group evaluation. A movement to a more rigorous design may well be in response to community concerns, such as questions about how participants in the project are affected by the intervention over time, setting the stage for a dialogue in the partnership on the importance of answering that question, and developing a strategy for the resources to answer it using a partnered approach.
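The practical difference between the two designs shows up in what can be computed from the data. The sketch below uses invented scores on a 1 to 10 scale for three hypothetical participants. It is meant only to illustrate the distinction, not to suggest an analysis plan.

```python
from statistics import mean

# Hypothetical scores: the same participants measured at two time points.
baseline = {"p1": 4, "p2": 6, "p3": 5}
follow_up = {"p1": 6, "p2": 6, "p3": 8}

# Cross-sectional view: one summary of everyone at a single time point.
snapshot_mean = mean(follow_up.values())

# Longitudinal view: change within each person who has both measurements.
changes = {pid: follow_up[pid] - baseline[pid]
           for pid in baseline if pid in follow_up}
mean_change = mean(changes.values())

print(f"Follow-up average: {snapshot_mean:.2f}")
print(f"Average change per participant: {mean_change:.2f}")
```

The snapshot describes where participants stand at one moment, while the per-person changes show how each participant moved over time. Only the second view requires that the same people be found and measured again, which is the source of the added cost.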

Quantitative, Qualitative or Mixed?

Quantitative data are reducible to numbers that can be used in a standard statistical analysis. Qualitative data are more narrative- or observation-based and consist largely of text, which then needs to be coded into themes and ideas in order to be analyzed.

Quantitative and qualitative data have different purposes and suitability to different types of analyses and questions. Qualitative data, being more story-based, tell a richer story and are best for identifying ideas and themes among the information recorded or told. These ideas may vary from participant to participant, so the results can be hard to standardize. An example of collecting qualitative data would be having participants share briefly about how their lives have been affected by the project.

Quantitative data, such as census data and information from standard audience surveys, are more standardized across individuals (when collected properly) but are limited to the specific data collected. For example, asking participants to rate a meeting’s productiveness on a scale of 1 to 10 would yield quantitative data. Thus, quantitative data are more suited to testing a hypothesis or theory about the outcomes because you can compare individuals’ answers to each other, whereas qualitative data are generally not scientifically comparable.

Mixed methods involve collecting both quantitative and qualitative data. One looks for some consistency in the major findings across both methods to tell an overall story. Many community-based participatory research projects use mixed methods. However, it then becomes important to know when and how to use different types of data to tell different stories, because there are fundamental differences in structure and purposes that make them complementary.
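A small Python sketch can show how the two kinds of data are handled differently. Everything in it is hypothetical: the ratings, the comments, and the theme labels are invented, and the coding of comments into themes is assumed to have been done by the partnered team beforehand.

```python
from collections import Counter
from statistics import mean

# Quantitative: meeting productiveness rated on a scale of 1 to 10.
ratings = [7, 8, 5, 9]
average_rating = mean(ratings)

# Qualitative: comments that the team has already coded into themes.
coded_comments = [
    {"text": "I felt heard at the meeting.", "themes": ["trust"]},
    {"text": "We learned how surveys work.", "themes": ["capacity"]},
    {"text": "I trust the group more now and learned a lot.",
     "themes": ["trust", "capacity"]},
]
theme_counts = Counter(t for c in coded_comments for t in c["themes"])

print(f"Average rating: {average_rating}")
print(f"Themes mentioned: {dict(theme_counts)}")
```

The ratings can be averaged directly because every respondent answered the same question on the same scale. The comments must first be read and coded, and the resulting counts summarize themes rather than replace the stories themselves.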

Here are some guidelines:

Do you want to prove a point numerically, based on a well-established idea or theory? Then quantitative data may be best.

Do you want to explore how a project unfolds and what people think of it, in their own words? Then qualitative data may be best.

Do you want to test some ideas numerically but have rich explanations of what people think? Then mixed methods data may be best.

The selection of quantitative and qualitative data can be based on the evaluation question (is it exploratory or hypothesis testing?), and readiness and capacity of the partnership to deal with different types of data in a partnered data collection and analysis, as well as what kinds of data will speak to the community stakeholders. Will stories be the most effective, or numbers representing a population, for the intended audience? Does the partnership have the resources for handling the data in the analysis phase? How would the community like to tell its story? Will the findings be publishable, if that is a goal for the partnership?

Step 7: Develop Measures

The next step is to take the outcomes identified earlier and, in light of the design and the general type of data collection planned, develop indicators or measures. Outcomes might be broad: reduce obesity, improve mental health, and so forth. Indicators and measures are specific, such as the percent of participants who lose 3 or more pounds, or the percent of the population with two or more symptoms of depression. Turning concepts into indicators and measures means thinking practically about what the project can achieve and for whom, and about the availability of data on that outcome.
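To make the step from outcome to indicator concrete, the sketch below computes one indicator of the kind described here: the percent of participants who lose 3 or more pounds. It is written in Python; the weights are invented, and the record layout (`baseline`, `follow_up`) is a hypothetical one chosen for illustration.

```python
# Hypothetical baseline and follow-up weights in pounds (invented).
weights = [
    {"id": "A", "baseline": 210.0, "follow_up": 204.5},
    {"id": "B", "baseline": 185.0, "follow_up": 184.0},
    {"id": "C", "baseline": 240.0, "follow_up": 236.0},
    {"id": "D", "baseline": 198.0, "follow_up": 199.5},
]

def percent_meeting_target(records, min_loss=3.0):
    """Percent of participants whose weight loss is at least min_loss pounds."""
    if not records:
        raise ValueError("no participants to evaluate")
    met = sum(1 for r in records if r["baseline"] - r["follow_up"] >= min_loss)
    return 100.0 * met / len(records)

indicator = percent_meeting_target(weights)
print(f"{indicator:.0f}% of participants lost 3 or more pounds")
```

The broad outcome ("reduce obesity") becomes a single number only after the partnership has agreed on the threshold, the time points, and who counts as a participant.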

Developing measures of success in community engagement projects involves learning how outcomes might be measured based on prior research and program evaluation, independently listening to the voice of the community in terms of what the important outcomes are, and then working to blend perspectives by reworking and extending existing measures, or completely starting over to capture outcomes of importance to the community.

Even in starting over, however, the group should be aware of existing expertise on how concepts can best be measured. This kind of approach often involves academic partners letting go of traditional measures, or at least letting go of the letter of the law, that is, of having measures exactly like those in other projects. This is challenging; academic participants must be flexible, and yet the result must be scientifically valid. If it is not, no one, neither the academic nor the community partners, benefits from the research.

Another complication is that funding for follow-on projects will likely depend on the funding organization’s recognition of the initial project as successful (and funders are likely to measure success using traditional yardsticks). Both researchers and community members must work together to convince the funding organization that the project’s measures of success are valid. This can mean having a stage of measures development, or developing formal studies of reliability and validity within the project.

Some definitions:

Reliability: the consistency of a measure, that is, whether it produces the same results when re-administered under the same conditions.

Validity: the truthfulness or correctness of a measure, that is, whether it really captures what it is intended to capture.
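One common way to examine reliability in the sense defined above is to give the same measure to the same people twice and correlate the two sets of scores (test-retest reliability). The Python sketch below assumes that approach and uses invented scores; it says nothing about validity, which requires comparing the measure with what it is meant to capture.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary to compute a correlation")
    return cov / sqrt(var_x * var_y)

# Hypothetical scores from the same five people, two weeks apart (invented).
first_administration = [12, 18, 9, 15, 20]
second_administration = [13, 17, 10, 14, 21]

r = pearson_r(first_administration, second_administration)
print(f"test-retest correlation: {r:.2f}")
```

A correlation close to 1 suggests the measure gives consistent results on re-administration; what counts as acceptable is a judgment for the partnership and its scientific advisors.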

The development of a measure depends on what kind of data source will be used. For example, obesity can be measured by self-report, the report of others, or physical measures like waist circumference or weight and height. Sometimes when data are not available, a proxy may be used. For example, suppose the project needs to know the ethnicity of survey participants, but there are no data in the survey on ethnicity. Perhaps an existing survey, such as the census, is available to give this information about the area in which individuals reside. Outcomes that have to rely on existing data will be constrained by the kinds of issues that have already been measured. For example, claims data from an insurance company might not include data on ethnicity.

This means that along with reviewing what kind of existing measures might be available, and how the community thinks about the issue, it is important to think about the sources of data for the measures. Will there be a survey of participants? Are existing data available on the community, such as census data or agency data? Will interviews of stakeholders be feasible? Will information from community members be available from discussion groups? What other kinds of data sources might be available, such as public data or reports, attendance lists from meetings, or photographs or videotapes?

Typically, one arrives at a data source strategy by starting with the measures or indicators and the priorities for them, and then listing in a table the data sources that would be required or are available to obtain those measures. Decisions are then needed about the most efficient overall strategy: the one that yields information on the greatest number of measures, for the most stakeholders, from the fewest data sources. This can mean dropping some desirable measures in the interest of feasibility. Sometimes entirely new measurement strategies are suggested (like narratives of community members about what it’s like to try to get fresh vegetables that are affordable, or photographs of food actually available in grocery stores). This happens when an outcome is very important but no data source is available, or when obtaining the data in a standard way (such as through surveys) either would not be acceptable to the community or is already known from prior research to be invalid.
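The table of measures and data sources described above can be sketched as a small Python structure, with a simple rule for choosing sources. The rule here is a greedy heuristic (repeatedly pick the source that supplies the most still-uncovered priority measures); it is an assumption made for illustration, is not guaranteed to find the best combination, and ignores cost and community acceptability, which the partnership would weigh in practice. All source and measure names are invented.

```python
# Hypothetical table of which data source can supply which measure (invented).
sources = {
    "participant survey": {"depression symptoms", "weight change", "satisfaction"},
    "meeting attendance lists": {"event participation"},
    "census data": {"neighborhood demographics"},
    "stakeholder interviews": {"satisfaction", "perceived barriers"},
    "photographs of stores": {"food availability"},
}
priority_measures = {
    "depression symptoms", "weight change", "satisfaction",
    "event participation", "perceived barriers",
}

def choose_sources(sources, measures, max_sources):
    """Greedily pick the source that covers the most uncovered measures."""
    remaining = set(measures)
    chosen = []
    while remaining and len(chosen) < max_sources:
        # sorted() makes ties resolve alphabetically, so results are repeatable
        best = max(sorted(sources), key=lambda s: len(sources[s] & remaining))
        if not sources[best] & remaining:
            break  # no source can supply what is left
        chosen.append(best)
        remaining -= sources[best]
    return chosen, remaining

chosen, dropped = choose_sources(sources, priority_measures, max_sources=2)
print("use:", chosen)
print("measures without a source:", sorted(dropped))
```

With a limit of two sources, one priority measure is left uncovered, which mirrors the trade-off described above: either a desirable measure is dropped, or another source is added.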

The process of considering outcomes, measures, and data sources and tolerating not having a final set of outcomes until the whole evaluation strategy has been balanced and is known to be feasible is familiar to most academic partners, but can be unfamiliar and frustrating to community members who are new to a research process. Similarly, taking the time to review existing measures to assure that they are valid and meaningful within the community can be frustrating to academic partners. Together, the members of a partnership can learn how to tolerate these expected frustrations and support each other in developing useful measures that can serve scientific purposes and communicate strongly to the community stakeholders.

To minimize frustration, it can be helpful for the evaluation leaders to set expectations about the process of outcomes evaluation up front. Review the resources for evaluation, what other resources might be brought to the table, and the implications for what kind of data sources might be considered for this project. Explain the process needed to arrive at a good set of outcomes, a rationale for them, and measures and data sources. This will help the whole partnership learn about evaluation, and generate support for its development.

Here again, as in the Visioning exercises described in Chapter 3, we have found it helpful to use engaging strategies, such as stories, puppets, visuals, site visits, and so forth, to make the process of developing (and later implementing) an evaluation engaging. Participatory evaluation is both a method and an art.

Step 8: Submit the Evaluation Design and Proposed Measures to the Institutional Review Board (Human Subjects Protection)

All evaluation designs that potentially can lead to research products will have to go through a human subjects protection process through one or more institutional review boards (IRBs). Collaborative projects in the community might involve one or more academic institutions, each with its own IRB, and various participating community organizations may have their own IRB. Submissions to IRBs require lead time for preparation, for review and for responses and revisions. Data collection involving human subjects can only occur after IRB approval.

Typically (but not always), the academic partners take the lead in the IRB process. Over time, however, we have developed a collaborative process for developing and implementing reviews. Community members in our partnership also serve on the academic IRBs. This helps keep the research from becoming too academically directed. (Because the academic partners are already familiar with the IRB process, there is a risk that they can, with the best of intentions, completely take over the project at this point.) It is also important to build the capacity of community partners to understand and use the IRBs, a process they will need to understand in all subsequent community-academic partnered research projects. For this purpose, we have held IRB presentations in the community and have asked all of our Council members and leaders of the working groups to become IRB-certified. A community engagement perspective implies that findings should be shared regularly with the community before being disseminated more widely, and that the physical data (such as the paper surveys) should be kept in part with trusted community partners. Under the capacity-building goals of a community-academic partnered project, the community should be given priority in employment and training opportunities associated with the project, and their contributions should be recognized and honored in products.

Even apart from IRBs, the importance of attending to human subjects issues and building trust in the community regarding research purposes cannot be overestimated, especially in underserved communities of color that have suffered histories of abuses from research and/or health care. The notorious Tuskegee syphilis experiment is very much a continuing example on the minds of many African Americans in our community, as well as in other minority communities, and this is but one example of many. The history of research abuses is greatly compounded by the legacy of communities that have been subjected to slavery (such as African Americans) or genocide (including Native Americans, for example), such that appearances of experiments and controls can have a particular meaning of potential for harm, even great harm. All research, moreover, involves some risk, even if that risk may be either minor or minor relative to the benefit (to society and possibly to individuals) expected from the knowledge gained. The fear engendered by the history of research abuses is that the potential for harm may not be disclosed or will be forced upon unwilling or unknowing participants.

This background is not intended to deter community-academic partnerships from doing research, but rather to emphasize the importance of transparency in evaluation and research purposes and design, and the need for equal partnership and trust at every stage of the project.

Step 9: Collect the Evaluation Data

Data collection methods should seek to assess success and also be adapted to fit the skills of community participants and available resources. Unless very substantial resources are available, it is best to choose methods that can easily be carried out, take short amounts of time to accomplish, and appeal to those involved. Even with large resources, a portion of the evaluation should use methods with these features. Some successful data collection methods are listed below:

Assemble documentary evidence: examples include meeting attendance and minutes, community newsletters, development of educational pamphlets, pre- and post-intervention opinion surveys, etc.

Monitor event participation: examples include tracking the number of events, along with the number of persons who attend the events and their demographic characteristics.

Interview stakeholders, conduct focus groups, or hold community dialogue sessions: examples include gathering community members to share their views on the extent to which the process is working and if goals are being reached. You might also investigate how the strategy and activities could be improved.

Collect survey data: community members can also be trained as survey researchers, particularly for collecting data at community events (films, marketplaces, etc.). Depending on the circumstances, community members can also partner with trained survey researchers or be supervised in groups.

Take pictures and video: a variety of community-based participatory projects use the method of PhotoVoice or VideoVoice (http://www.photovoice.org/) to capture data and support community members in identifying and taking action for change.
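For the event-monitoring method listed above, a sign-in sheet can be turned into a simple count of attendance by event and demographic group. The Python sketch below uses invented sign-in records; the field names (`event`, `age_group`) are hypothetical, and any real sign-in form would need to follow the approved human subjects protocol.

```python
from collections import defaultdict

# Hypothetical sign-in records (invented); age groups are self-reported.
sign_ins = [
    {"event": "Health fair", "age_group": "18-39"},
    {"event": "Health fair", "age_group": "40-64"},
    {"event": "Health fair", "age_group": "40-64"},
    {"event": "Film night", "age_group": "18-39"},
    {"event": "Film night", "age_group": "65+"},
]

def tally_participation(records):
    """Return attendance per event, broken down by age group."""
    table = defaultdict(lambda: defaultdict(int))
    for r in records:
        table[r["event"]][r["age_group"]] += 1
    return {event: dict(groups) for event, groups in table.items()}

participation = tally_participation(sign_ins)
for event, groups in participation.items():
    print(event, "total:", sum(groups.values()), groups)
```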

Other resource books provide extensive guidance on collaborative data gathering using a variety of methods within community-based research projects.1

In conducting research under a community-partnership framework, community members may be primarily responsible for the data collection. This means that in designing the data collection instruments and protocols for data collection, it is important to include training in data collection. This may require modifying data collection procedures, giving additional time for training of data collectors, and setting up a leadership structure for training that includes community and academic partners. The style of training may also differ, with more emphasis on community engagement and sharing of perspective, role playing and other activities to build experience during the training, and a shared supervision structure that honors not only technical standards of data collection but cultural and community sensitivity and inclusiveness in data collection.

Step 10: Analyze the Data

Perform joint analyses

Once data are gathered, the group should collectively analyze the data to: 1) build consensus on findings and 2) ensure that everyone understands that the data belong to all those involved. Consensus on findings, conclusions and recommendations should be reached within each working group involved in the evaluation. To do this, the working group should:

Analyze the data.

Compare the data to the team’s assumptions or hypotheses.

Summarize what the team learns.

Give feedback on all the primary findings to the community at large.

Modify action plans as needed, including, if necessary, modifying the approach, moving the work into a new area, or institutionalizing the change.
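As a small illustration of comparing the data to the team's assumptions, the Python sketch below computes the average within-person change between pre- and post-intervention scores and checks it against a direction the team stated in advance. The scores are invented, and the comparison is descriptive only: it does not test statistical significance and, without a comparison group, it cannot show that the project caused the change.

```python
from statistics import mean

# Hypothetical pre- and post-intervention scores for the same people (invented).
pre = {"A": 4, "B": 6, "C": 5, "D": 7}
post = {"A": 6, "B": 7, "C": 5, "D": 9}

def mean_change(before, after):
    """Average within-person change, using only people with both scores."""
    paired = [after[p] - before[p] for p in before if p in after]
    if not paired:
        raise ValueError("no one has both a pre and a post score")
    return mean(paired), len(paired)

change, n = mean_change(pre, post)
hypothesized_direction = "increase"  # the team's assumption, stated in advance
observed_direction = "increase" if change > 0 else "decrease or no change"
print(f"mean change {change:+.2f} across {n} people; "
      f"hypothesized {hypothesized_direction}, observed {observed_direction}")
```

A summary of this kind can be brought to the working group as a starting point for discussion before any formal statistical analysis.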

How is joint analysis done? The answer is: by meeting regularly, taking a step at a time, explaining all concepts and terms in plain English (or the appropriate language for the group), and assuming that all aspects of analysis can and should be a subject of partnered discussion.

For example, the Talking Wellness group (one of the working groups in the Witness for Wellness initiative) met one or two times a month for two hours over the course of one year. In those meetings, the most basic aspects of design and analysis were discussed, ranging from developing hypotheses to scale formation and weighting data, along with various forms of statistics. The idea was not to make community members into statisticians, but to make the concepts in analysis transparent. Various analyses were conducted and results reviewed, so that community members became comfortable with tables and numbers. Conceptual models and theories were discussed, and people were invited to give their own thoughts about the particular events that were the subject of this analysis. Brainstorming about cause and effect led to some innovative hypotheses about how community events led to community commitment to take action. This core idea was developed into a formal or causal analysis, which used data to see if the relationships among the data collected were consistent with a specific, formal model or framework. In addition to the intended simple evaluation of the events themselves, this framework was published as its own scientific piece.

At each step of analysis, we have followed several principles:

Bring in scientific experts as needed and ask them to explain technical matters in plain English, using visuals and examples;

Bring in community experts or facilitators to energize community members to contribute ideas;

Balance academic presentations with brainstorming sessions and other forms of community sharing of perspectives, using the community engagement methods discussed for visioning exercises;

As needed, review background material, whether on community history, or methods of analysis, to build an ongoing capacity for partnered analysis; and

Make sure that community members who participate in analysis have a chance to present it in either a community or scientific forum or both. We have found that the joy of presenting something one truly knows, and receiving appreciation for it, can balance the more tedious aspects of data preparation and analysis.

Keep the team and the community informed

Academic and community members involved in the data analysis will develop a detailed understanding of the analysis process. However, other community members may have limited experience and thus not fully understand exactly what the data look like, and they will have questions. Are minutes project data? Are pictures taken at events project data? What exactly are data from surveys like? Are data numbers punched into a machine?

We often find that questions like these emerge as community members wait to see the data, or to know that they are available to be reviewed. Anticipating this can be helpful, as leaders can show examples of data, review human subject issues as to where certain types of data are stored, and what information will or will not be used owing to human subject protection. Further, community members often do not want to see the full data, which might be voluminous, but are likely to be interested in summary tables or key themes. Academic investigators might think that a request about data literally means seeing raw data, when in fact it often refers to seeing key summaries, such as graphs, tables, or tentative conclusions.

Another common problem is that quantitative and qualitative data require time to be prepared for analysis. Coding has to occur, errors in coding corrected, and variables and variable names developed, with scoring rules for measures. Scales made up of different items need to be developed, evaluated and then finalized. When data are missing, some method is usually developed to reduce the effect of the missing data on the analyses, like developing replacement data.
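The preparation steps above can be illustrated with a sketch of scoring a multi-item scale when some items were skipped. The Python example below fills each missing item with the person's own average on the items they did answer, and declines to score the scale when too few items were answered. Both choices are assumptions made for illustration; the article does not specify a replacement method, and other approaches exist. The responses are invented.

```python
def score_scale(items, min_answered=3):
    """Sum a multi-item scale, filling missing items with the person's own mean.

    Returns None when too few items were answered to score the scale.
    """
    answered = [v for v in items if v is not None]
    if len(answered) < min_answered:
        return None
    person_mean = sum(answered) / len(answered)
    filled = [person_mean if v is None else v for v in items]
    return sum(filled)

# Hypothetical 4-item scale, each item scored 0-3; None marks a skipped item.
responses = {
    "A": [2, 3, 1, 2],
    "B": [1, None, 1, 1],
    "C": [None, None, 3, 2],
}
scores = {person: score_scale(items) for person, items in responses.items()}
print(scores)
```

Showing community members a small example like this can help explain why data preparation takes time and what decisions are being made along the way.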

All of these tasks can be time consuming, delaying by months (sometimes many months for large projects) the availability of the data to community members. At times, those delays can seem like stalling and represent another source of trust problems. It can be helpful for experienced project members, both academic and community leaders, to take a proactive approach to explaining these issues with enough detail so that the community members understand what the issues are and what they represent.

Meanwhile, it can also be helpful to have an early feedback session to the project team, with summaries of some descriptive data. This can show good faith and that the data are not being hidden.

Moreover, as main analyses unfold, summary tables and data should be available, for example on a website or in files available for this purpose in the lead community partner’s agency. Community members can also be invited to come into the academic center to review data with an academic investigator.

Capacity Building

Across the different steps for evaluation at the Valley stage, it is helpful to remember that the long-range goal is to build a capacity in the community for evaluating partnered projects. This means carefully balancing the readiness of the partnership, and particularly its strengths, with the evaluation goals, and making the process of evaluation a positive, capacity-building experience for participants.

Our experience is that over time, through a series of steps in a committed partnership, one can build rigorous designs that address questions of importance to the community that also represent scientific advances, while respecting the community that is hosting the partnership. The evaluation is part of the whole process of respectful and equal engagement, which is the goal of Community-Partnered Participatory Research.

Fig 6.6.

Share

Acknowledgments

We would like to thank the board of directors of Healthy African American Families II; Charles Drew University School of Medicine and Science; the Centers for Disease Control and Prevention, Office of Reproductive Health; the Diabetes Working Groups; the Preterm Working Group; the University of California Los Angeles; the Voices of Building Bridges to Optimum Health; Witness 4 Wellness; and the World Kidney Day, Los Angeles Working Groups; and the staff of Healthy African American Families II and the RAND Corporation.

This work was supported by Award Number P30MH068639 and R01MH078853 from the National Institute of Mental Health, Award Number 200-2006-M-18434 from the Centers for Disease Control, Award Number 2U01HD044245 from the National Institute of Child Health and Human Development, Award Number P20MD000182 from the National Center on Minority Health and Health Disparities, and Award Number P30AG021684 from the National Institute on Aging. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or the Centers for Disease Control.

References

- 1. Israel BA, Eng E, Schulz AJ, Parker EA, editors. Methods in Community-Based Participatory Research for Health. San Francisco, Calif: Jossey-Bass; 2005.