Abstract

Dementia is a growing problem that affects elderly people worldwide. More accurate evaluation of dementia diagnosis can help during the medical examination. Several methods for computer-aided dementia diagnosis have been proposed that use magnetic resonance imaging (MRI) scans to discriminate between patients with Alzheimer's disease (AD) or mild cognitive impairment (MCI) and healthy controls (NC). Nonetheless, computer-aided diagnosis is especially challenging because of the heterogeneous and intermediate nature of MCI. We address automated dementia diagnosis by introducing a novel supervised pretraining approach that takes advantage of artificial neural networks (ANN) for complex classification tasks. The proposal initializes an ANN with linear projections that yield more discriminating spaces. Such projections are estimated by maximizing the centered kernel alignment criterion, which assesses the affinity between the MRI data kernel matrix and the label target matrix. As a result, the learned linear embedding accounts for the features that contribute the most to MCI class discrimination. We compare the supervised pretraining approach against two unsupervised initialization methods (autoencoders and Principal Component Analysis) and against the four best-performing classification methods of the 2014 CADDementia challenge. Our proposal outperforms all the baselines (by about 7 percentage points in classification accuracy and area under the receiver-operating-characteristic curve) while reducing the class bias.

1. Introduction

In 2010, the number of people aged over 60 years with dementia was estimated at 35.6 million worldwide, and this figure was expected to double over the following two decades [1]. Indeed, the World Health Organization and Alzheimer's Disease International have declared dementia a public health priority, encouraging the articulation of government policies and promoting actions at international and national levels [2]. Alzheimer's disease (AD) is the most commonly diagnosed dementia-related chronic illness and entails very high costs of care, living arrangements, and therapies. Thus, efforts are underway to improve treatments that may delay AD onset and development by at least one year, which would decrease the number of cases by nine million [3]. AD can be diagnosed early by predicting the conversion to dementia from a state of mild cognitive impairment (MCI), which notably increases the AD risk [4].

In this regard, early diagnosis is directly related to the effectiveness of interventions [5]. Along with clinical history, neuropsychological tests, and laboratory assessment, the joint clinical diagnosis of AD also includes neuroimaging techniques like positron emission tomography (PET) and magnetic resonance imaging (MRI). These techniques are usually incorporated in the routine workup for excluding secondary pathology causes (e.g., tumors) [6, 7]. However, factors related to image quality and radiologist experience may limit their use [8]. For dealing with this issue, the imaging-based automatic assessment of quantitative biomarkers has been proven to enhance the performance for dementia diagnosis. In the particular case of AD, there are two groups of widely studied biomarkers: (i) patterns of brain amyloid-beta, such as low cerebrospinal fluid (CSF) Aβ42 and amyloid PET imaging, and (ii) measures of neuronal injury or degeneration like CSF tau measurement, fluorodeoxyglucose PET, and atrophy on structural MRI [9]. Thus, structural MRI has become valuable for biomarker assessment since this noninvasive technique explains structural changes at the onset of cognitive impairment [10].

For the purpose of automated diagnosis, the first stage is the structure-wise feature extraction from the available MRI data, including voxel-based morphometry, volume, thickness, shape, and intensity relations. Nonetheless, most emphasis is usually placed on the classification approach because of its strong influence on the entire diagnosis system. With regard to neurodegenerative diseases, the reported classifiers range from straightforward approaches (k-Nearest Neighbors [11], Linear Discriminant Analysis [12], Support Vector Machines [13], Random Forests [14], and Regressions [15]) to combinations of classifiers [16]. Most of the above approaches were evaluated in the 2014 CADDementia challenge, which aimed to reproduce the clinical diagnosis of 354 subjects in a multiclass classification problem with three diagnostic groups [17]: patients diagnosed with Alzheimer's disease, subjects with MCI, and healthy controls (NC), given their T1-weighted MRI scans. The best-performing algorithm yielded an accuracy of 63.0% and an area under the receiver-operating-characteristic (ROC) curve of 78.8%. Nonetheless, its reported true positive rates are 96.9% and 28.7% for NC and MCI, respectively, which reveals a class bias.

Generally speaking, dementia diagnosis from MRI remains a challenging task, mainly because of the nature of mild cognitive impairment: it is a heterogeneous and intermediate category between the NC and AD diagnostic groups, from which subjects may convert to AD or return to normal cognition [4]. To overcome this shortcoming, machine learning tools such as artificial neural networks (ANN) have been developed to enhance dementia diagnosis, offering the following advantages [18, 19]: (i) ability to process a large amount of data, (ii) reduced likelihood of overlooking relevant information, and (iii) reduction of diagnosis time.

Nonetheless, an essential procedure for ANN implementation is the initialization of the deep architecture (termed pretraining). It can be carried out by training the deep network to optimize directly only the supervised objective of interest, starting from a set of randomly initialized parameters. However, this strategy performs poorly in practice [20]. To improve on each random initial guess, a local unsupervised criterion is used to pretrain each layer stepwise, trying to produce a useful higher-level description from the low-level representation output by the previous layer. Examples that use unsupervised learning include Restricted Boltzmann Machines [21], autoencoders [22], sparse autoencoders [23], and greedy layer-wise unsupervised learning, the most common approach, which learns one layer of a deep architecture at a time [24]. Although unsupervised pretraining generates hidden representations that are more useful than the input space, many of the resulting features may be irrelevant for the discrimination task [25, 26].

In this paper, we exploit the advantages of ANNs for complex classification tasks to introduce a novel supervised ANN initialization approach devoted to automated dementia diagnosis. The proposed pretraining approach searches for a linear projection into a more discriminating space so that the resulting embedding features and the labels are as strongly associated as possible. Consequently, the obtained ANN architecture should better match the nature of the supervised training data. Since the straightforward hybridization of ANNs with other approaches yields stronger paradigms for solving complex and computationally expensive problems [27, 28], we also incorporate kernel theory for assessing the affinity between projected data and available labels. Kernel approaches offer an elegant functional analysis framework for tasks that gather multiple information sources (e.g., features and labels), such as the minimum variance unbiased estimation of regression coefficients and the least squares estimation of random variables [29]. In particular, we use the centered kernel alignment (CKA) criterion as the affinity measure between a data kernel matrix and a target label matrix [30, 31]. As a result, the linear embedding accounts for the features that contribute the most to class discrimination.

The present paper is organized as follows: Section 2 describes the mathematical background on learning projections using CKA and on ANNs for classification. Section 3 describes the experiments carried out for tuning the algorithm parameters and the evaluation scheme with blinded data. The achieved results are discussed in Section 4. Finally, Section 5 presents the concluding remarks and future research directions.

2. Materials and Methods

2.1. Classification Using Artificial Neural Networks

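Within the classification framework, an $L$-layered ANN is assumed to predict the needed class label set through a battery of feedforward deterministic transformations, implemented by the hidden layers $\mathbf{h}^{l}$, which map the input space $\mathbf{x}$ to the network output $\mathbf{h}^{L}$ as follows [27]:

$$
\mathbf{h}^{l+1} = \phi\left(\mathbf{b}^{l} + \mathbf{W}^{l}\mathbf{h}^{l}\right), \tag{1}
$$

where $\mathbf{b}^{l} \in \mathbb{R}^{m_{l+1}}$ is the $l$th offset vector, $\mathbf{W}^{l} \in \mathbb{R}^{m_{l+1} \times m_{l}}$ is the $l$th linear projection, and $m_{l} \in \mathbb{Z}^{+}$ is the size of the $l$th layer. The function $\phi(\cdot) \in \mathbb{R}$ applies saturating, nonlinear, element-wise operations. Here, we choose the standard sigmoid, $\phi(z) = \operatorname{sigmoid}(z)$, expressed as follows:

$$
\operatorname{sigmoid}(z) = \frac{1}{1 + \exp(-z)}. \tag{2}
$$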

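The first layer in (1) (i.e., $\mathbf{h}^{0} \in \mathbb{R}^{D}$) is conventionally set to the input feature vector. In turn, the output layer $\mathbf{h}^{L} \in [0,1]^{C}$ predicts the class when combined with a provided target $t \in \{1,\ldots,C\}$ into a loss function $\mathcal{L}(\mathbf{h}^{L}, t)$. In practice, the output layer can be implemented by the nonlinear softmax function described as follows:

$$
h_{c}^{L} = \frac{\exp\left(b_{c}^{L} + \mathbf{w}_{c}^{L}\mathbf{h}^{L-1}\right)}{\sum_{j=1}^{C}\exp\left(b_{j}^{L} + \mathbf{w}_{j}^{L}\mathbf{h}^{L-1}\right)}, \tag{3}
$$

where $b_{c}^{L}$ is the $c$th element of $\mathbf{b}^{L}$, $\mathbf{w}_{c}^{L}$ is the $c$th row of $\mathbf{W}^{L}$, $h_{c}^{L}$ is positive, and $\sum_{c} h_{c}^{L} = 1$.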

The rationale behind the choice of the softmax function is that each output $h_{c}^{L}$ can be used as an estimator of $P(t_{i} = c \mid \mathbf{x}_{i})$, where $t_{i}$ is the class associated with the input pattern $\mathbf{x}_{i}$. In this case, the softmax loss function often corresponds to the negative conditional log-likelihood:

$$
\mathcal{L}(\mathbf{h}^{L}, t) = -\log P(t \mid \mathbf{x}) = -\log h_{t}^{L}. \tag{4}
$$

Therefore, the expected value over $(\mathbf{x}, t)$ pairs is minimized with respect to the biases and weighting matrices.
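As an illustration of the classifier described in this subsection, the following NumPy sketch evaluates a one-hidden-layer network with a sigmoid hidden layer, a softmax output, and the negative log-likelihood loss. It is only a minimal sketch under stated assumptions: samples are stored one per row (the transpose of the $D \times N$ convention used later), class labels are 0-based, and the function names, the toy batch, and the random weights are hypothetical rather than taken from the experiments.

```python
import numpy as np

def sigmoid(z):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))

def softmax(a):
    """Softmax over the class axis, shifted for numerical stability."""
    a = a - a.max(axis=-1, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=-1, keepdims=True)

def forward(X, W1, b1, W2, b2):
    """One-hidden-layer network; X holds one sample per row (N x D)."""
    hidden = sigmoid(X @ W1.T + b1)       # N x m1
    return softmax(hidden @ W2.T + b2)    # N x C, each row sums to 1

def nll(probs, targets):
    """Mean negative log-likelihood; targets are 0-based class indices."""
    return -np.mean(np.log(probs[np.arange(len(targets)), targets]))

# Toy batch (hypothetical values); D and C follow the sizes used in the paper.
rng = np.random.default_rng(0)
N, D, m1, C = 8, 324, 14, 3
X = rng.normal(size=(N, D))
t = rng.integers(0, C, size=N)
W1, b1 = 0.01 * rng.normal(size=(m1, D)), np.zeros(m1)
W2, b2 = 0.01 * rng.normal(size=(C, m1)), np.zeros(C)

probs = forward(X, W1, b1, W2, b2)
loss = nll(probs, t)
```

With small random weights the predicted class probabilities are close to uniform, so the loss starts near $\log C$; training then lowers it by adjusting the biases and weighting matrices.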

2.2. ANN Pretraining Using Centered Kernel Alignment

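Let $\mathbf{X} = \{\mathbf{x}_{i} \in \mathbb{R}^{D} : i = 1,\ldots,N\}$ be the input feature matrix of size $D \times N$, which holds $N$ trajectories, where each $\mathbf{x}_{i}$ is drawn from a $D$-dimensional random process $\mathcal{X}$. In order to encode the affinity between a pair of trajectories, $\{\mathbf{x}_{i}, \mathbf{x}_{j}\}$, we define the following kernel function:

$$
\kappa(\mathbf{x}_{i}, \mathbf{x}_{j}) = \langle \varphi(\mathbf{x}_{i}), \varphi(\mathbf{x}_{j}) \rangle, \tag{5}
$$

where $\langle\cdot,\cdot\rangle$ stands for the inner product and $\varphi(\cdot) : \mathbb{R}^{D} \to \mathcal{H}$ maps from the original domain, $\mathbb{R}^{D}$, into a Reproducing Kernel Hilbert Space (RKHS), $\mathcal{H}$. As a rule, it holds that $|\mathcal{H}| \to \infty$, so that $|\mathbb{R}^{D}| \ll |\mathcal{H}|$ can be assumed. Nevertheless, there is no need to compute $\varphi(\cdot)$ directly. Instead, the well-known kernel trick is employed for computing (5) through a positive definite and infinitely divisible kernel function as follows:

$$
\kappa(\mathbf{x}_{i}, \mathbf{x}_{j}) = \kappa\left(d(\mathbf{x}_{i}, \mathbf{x}_{j})\right), \tag{6}
$$

where $d : \mathbb{R}^{D} \times \mathbb{R}^{D} \mapsto \mathbb{R}^{+}$ is a distance operator implementing the positive definite kernel function $\kappa(\cdot)$. A kernel matrix $\mathbf{K} \in \mathbb{R}^{N \times N}$ that results from applying $\kappa$ to each sample pair in $\mathbf{X}$ is assumed to be the covariance estimator of the random process $\mathcal{X}$ over the RKHS.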

With the purpose of improving the system performance in terms of learning speed and classification accuracy, we introduce the prior label knowledge into the initialization process. Thus, we compute the pairwise relations between the feature vectors through the feature similarity kernel matrix $\mathbf{K} \in \mathbb{R}^{N \times N}$, which has elements as follows:

$$
k_{ij} = \kappa_{x}\left(d_{\mathbf{W}}(\mathbf{x}_{i}, \mathbf{x}_{j})\right), \tag{7}
$$

with $d_{\mathbf{W}} : \mathbb{R}^{D} \times \mathbb{R}^{D} \mapsto \mathbb{R}^{+}$ being a distance operator that implements the positive definite kernel function $\kappa_{x}(\cdot)$, and $\{(\mathbf{x}_{i}, t_{i}) : i = 1,\ldots,N\}$ being a set of input-label pairs with $\mathbf{x}_{i} \in \mathbb{R}^{D}$ and $t_{i} \in \{1,\ldots,C\}$, where $C$ is the number of classes to identify.

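Since we look for a suitable weighting matrix for initializing the ANN optimization, we rely on the Mahalanobis distance, which is defined on a $D$-dimensional space by the following inverse covariance matrix $\mathbf{W}^{\top}\mathbf{W}$:

$$
d_{\mathbf{W}}^{2}(\mathbf{x}_{i}, \mathbf{x}_{j}) = (\mathbf{x}_{i} - \mathbf{x}_{j})^{\top}\mathbf{W}^{\top}\mathbf{W}(\mathbf{x}_{i} - \mathbf{x}_{j}), \tag{8}
$$

where the matrix $\mathbf{W} \in \mathbb{R}^{m_{1} \times D}$ holds the linear projection $\mathbf{y}_{i} = \mathbf{W}\mathbf{x}_{i}$, with $\mathbf{y}_{i} \in \mathbb{R}^{m_{1}}$, $m_{1} \leq D$.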
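To make the role of the projection concrete, the sketch below computes the pairwise squared Mahalanobis distances through the projected samples $\mathbf{y}_{i} = \mathbf{W}\mathbf{x}_{i}$ and feeds them to a kernel. The text does not name the kernel function $\kappa_{x}$, so the Gaussian form and its bandwidth `sigma` are assumptions made here for illustration; samples are again stored one per row, and all names and toy values are hypothetical.

```python
import numpy as np

def mahalanobis_sq(X, W):
    """Pairwise squared distances (x_i - x_j)^T W^T W (x_i - x_j).

    X: N x D array with one sample per row; W: m1 x D projection matrix.
    """
    Y = X @ W.T                                  # projected samples, N x m1
    sq = np.sum(Y ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * Y @ Y.T
    return np.maximum(d2, 0.0)                   # clip round-off negatives

def gaussian_kernel(X, W, sigma=1.0):
    """ASSUMPTION: Gaussian kernel exp(-d_W^2 / (2 sigma^2))."""
    return np.exp(-mahalanobis_sq(X, W) / (2.0 * sigma ** 2))

# Toy data (hypothetical): 6 samples with D = 324 features, m1 = 14.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 324))
W = rng.normal(size=(14, 324)) / np.sqrt(324)
K = gaussian_kernel(X, W, sigma=5.0)
```

Working with the projected samples avoids forming the $D \times D$ matrix $\mathbf{W}^{\top}\mathbf{W}$ explicitly, which keeps the cost proportional to $m_{1}$ rather than $D$ for each pair.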

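Based on the already estimated feature similarities, we further propose to learn the matrix $\mathbf{W}$ by adding the prior knowledge about the feasible sample membership (e.g., healthy or diseased groups) enclosed in a matrix $\mathbf{B} \in \mathbb{R}^{N \times N}$ with elements $b_{ij} = \delta(t_{i} - t_{j})$. Thus, we measure the similarity between the matrices $\mathbf{K}$ and $\mathbf{B}$ through the following centered kernel alignment (CKA) function [32]:

$$
\rho(\mathbf{K}, \mathbf{B}) = \frac{\langle \mathbf{H}\mathbf{K}\mathbf{H}, \mathbf{H}\mathbf{B}\mathbf{H} \rangle_{F}}{\|\mathbf{H}\mathbf{K}\mathbf{H}\|_{F}\,\|\mathbf{H}\mathbf{B}\mathbf{H}\|_{F}}, \tag{9}
$$

where $\mathbf{H} = \mathbf{I} - N^{-1}\mathbf{1}\mathbf{1}^{\top}$, with $\mathbf{H} \in \mathbb{R}^{N \times N}$, is a centering matrix, $\mathbf{1} \in \mathbb{R}^{N}$ is an all-ones vector, and $\langle\cdot,\cdot\rangle_{F}$ and $\|\cdot\|_{F}$ stand for the Frobenius inner product and norm, respectively.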

The centered version of the alignment coefficient leads to better correlation estimation than its uncentered version [31]. Therefore, the CKA cost function in (9) highlights relevant features by learning the matrix $\mathbf{W}$ that best matches the relations between the resulting feature vectors and the provided target classes. Consequently, we state the following optimization problem to compute the projection matrix:

$$
\mathbf{W}^{\star} = \arg\max_{\mathbf{W}} \rho(\mathbf{K}_{\mathbf{W}}, \mathbf{B}), \tag{10}
$$

where $\mathbf{K}_{\mathbf{W}}$ denotes the kernel matrix in (7) computed with the distance in (8), and we thus initialize the first layer of the ANN with $\mathbf{W}^{\star}$.
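The alignment measure itself is short to compute. The following sketch builds the label matrix $\mathbf{B}$ from class labels and evaluates the centered alignment between two kernel matrices with the centering matrix $\mathbf{H}$ and Frobenius products. The function names and the toy data are hypothetical, and the linear kernel in the usage lines is only a stand-in for whichever positive semidefinite data kernel is used.

```python
import numpy as np

def label_kernel(t):
    """B with b_ij = 1 when samples i and j share a label, 0 otherwise."""
    t = np.asarray(t)
    return (t[:, None] == t[None, :]).astype(float)

def cka(K, B):
    """Centered kernel alignment between two N x N kernel matrices."""
    N = K.shape[0]
    H = np.eye(N) - np.ones((N, N)) / N          # centering matrix
    Kc, Bc = H @ K @ H, H @ B @ H
    return np.sum(Kc * Bc) / (np.linalg.norm(Kc) * np.linalg.norm(Bc))

# Toy example (hypothetical data): three classes with two samples each.
t = np.array([0, 0, 1, 1, 2, 2])
B = label_kernel(t)
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))
K = X @ X.T                                      # linear kernel as a stand-in
score = cka(K, B)
```

For positive semidefinite inputs the score lies between 0 and 1, and it reaches 1 only when the two centered matrices are proportional, which is why maximizing it over $\mathbf{W}$ pulls the data kernel toward the label structure.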

Additionally, the weighting matrix allows analyzing the contribution of the input feature set to the projection matrix by computing the feature relevance vector $\boldsymbol{\varrho} \in \mathbb{R}^{D}$ in the following form:

$$
\varrho_{d} = \mathbb{E}\left\{ |w_{ud}| : \forall u \in [1, m_{1}] \right\}, \tag{11}
$$

where $w_{ud} \in \mathbb{R}$ is the weight that associates the $d$th feature with the $u$th hidden neuron and $\mathbb{E}\{\cdot\}$ stands for the averaging operator. The main assumption behind the relevance introduced in (11) is that the larger the value of $\varrho_{d}$, the larger the dependency of the estimated embedding on the $d$th input attribute.
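A relevance vector of this kind can be sketched in a few lines. The version below averages the weight magnitudes over the hidden neurons, taking the magnitude to be the absolute value, which is an assumption of this sketch. The grouping helper and the group sizes (70, 42, 72, 70, and 70 features for CV, SV, SA, TA, and TS) further assume that the features are concatenated in that order; the weight matrix is a hypothetical toy example.

```python
import numpy as np

def feature_relevance(W):
    """Average weight magnitude per input feature.

    W: m1 x D matrix whose entry (u, d) links feature d to hidden neuron u.
    ASSUMPTION: the magnitude is measured by the absolute value.
    """
    return np.mean(np.abs(W), axis=0)

def group_relevance(rho, groups):
    """Mean relevance inside consecutive, named feature groups."""
    out, start = {}, 0
    for name, size in groups:
        out[name] = float(rho[start:start + size].mean())
        start += size
    return out

# Hypothetical projection in which the second group has larger weights.
groups = [("CV", 70), ("SV", 42), ("SA", 72), ("TA", 70), ("TS", 70)]
W = np.ones((14, 324))
W[:, 70:112] *= 3.0
rho = feature_relevance(W)
per_group = group_relevance(rho, groups)
```

Grouping the per-feature values in this way gives one number per feature type, which is the kind of summary that a grouped relevance plot displays.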

3. Experimental Setup

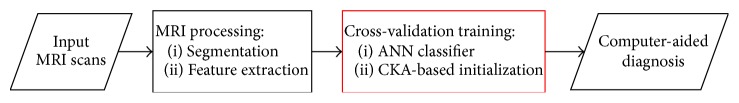

An automated, computer-aided diagnosis system based on artificial neural networks is introduced to classify structural magnetic resonance imaging (MRI) scans in accordance with the following three neurological classes: normal control (NC), mild cognitive impairment (MCI), and Alzheimer's disease (AD). Figure 1 illustrates the methodological development of the proposed approach.

Figure 1.

General processing pipeline: FreeSurfer independently segments and extracts features from given MRIs. Centered kernel alignment is proposed to learn a projection matrix initializing the NN training in a 5-fold cross-validation scheme. Tuned model is used for classification task.

3.1. ADNI Data

Data used in the preparation of this paper were obtained from the Alzheimer's Disease Neuroimaging Initiative (ADNI) database (http://adni.loni.usc.edu/) which was launched in 2003 by the National Institute on Aging (NIA), the National Institute of Biomedical Imaging and Bioengineering (NIBIB), the Food and Drug Administration (FDA), private pharmaceutical companies, and nonprofit organizations. The primary goal of ADNI is to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer's disease (AD). From the ADNI 1, ADNI 2, and ADNI GO phases, we selected a subset of 633 subjects with scans that had been noted with the “best” quality mark. As a result, the selected subset holds N = 1993 images with three class labels described above; C = 3. Besides, a random subset of 70% data was chosen for tuning and training stages, while the remaining 30% is for the test purpose. In addition, 629 images with a “partial” quality mark were selected in order to assess the performance under more complicated imaging conditions. Table 1 briefly describes the demographic information for the ADNI selected cohort.

Table 1.

Demographic and clinical details of the selected ADNI cohort.

| | “best” quality | | | “partial” quality | | |
|---|---|---|---|---|---|---|
| | NC | MCI | AD | NC | MCI | AD |
| N | 655 | 825 | 513 | 465 | 130 | 34 |
| Age (years) | 74.9 ± 5.0 | 74.4 ± 7.4 | 74.0 ± 7.4 | 76.6 ± 6.4 | 76.0 ± 6.3 | 74.3 ± 6.5 |
| Male | 47.5% | 39.5% | 47.6% | 70.1% | 62.3% | 70.6% |
| MMSE | 29.0 ± 1.0 | 27.1 ± 2.5 | 21.9 ± 4.4 | 27.5 ± 2.0 | 21.2 ± 1.6 | 14.4 ± 2.8 |

3.2. Processing of MRI Data

We used FreeSurfer, version 5.1 (http://surfer.nmr.mgh.harvard.edu/), a freely available, widely used, and extensively validated brain MRI analysis software package, to process the structural brain MRI scans and compute the morphological measurements [33]. FreeSurfer morphometric procedures have been shown to have good test-retest reliability across scanner manufacturers and field strengths [34]. The FreeSurfer pipeline is fully automatic and includes the following procedures: watershed-based skull stripping [35], transformation to Talairach space, intensity normalization and bias field correction [36], tessellation of the gray/white matter boundary, topology correction [37], and surface deformation [38]. Consequently, a representation of the cortical surface between white and gray matter, a representation of the pial surface, and a segmentation of white matter from the rest of the brain are obtained. FreeSurfer computes structure-specific volume, area, and thickness measurements. Cortical Volumes and Subcortical Volumes are normalized to each subject's estimated Total Intracranial Volume (eTIV) [39]. Table 2 summarizes the five feature sets extracted for each subject, which are concatenated into the feature matrix $\mathbf{X}$ with dimensions $N = 1993$ and $D = 324$.

Table 2.

FreeSurfer extracted features. # stands for the number of features.

| Type | # features | Units |
|---|---|---|
| Cortical Volumes (CV) | 70 | mm³ |
| Subcortical Volumes (SV) | 42 | mm³ |
| Surface Area (SA) | 72 | mm² |
| Thickness Average (TA) | 70 | mm |
| Thickness Std. (TS) | 70 | mm |
| Total | 324 | |

3.3. Tuning of ANN Model Parameter

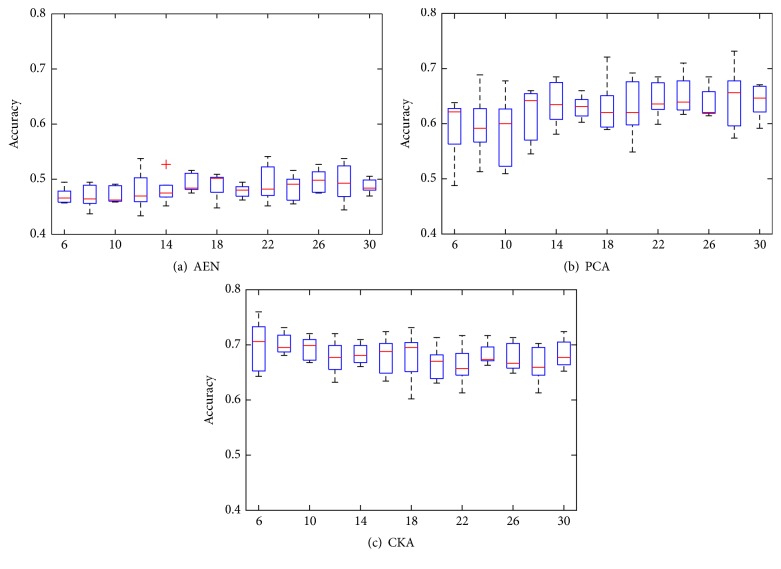

Given the $D = 324$ input MRI features for classification of the 3 neurological classes, we use feedforward ANNs with one hidden layer, 324 input neurons, and 3 output neurons. An exhaustive search is carried out for tuning the single free parameter, namely, the number of neurons in the hidden layer ($m_{1}$). We also compare our proposal against autoencoders (AEN) [20] and the well-known Principal Component Analysis (PCA) for the initialization stage. All of these approaches (AEN, PCA, and CKA) provide a projection matrix whose output dimension, in this case, equals the hidden layer size. Thus, the resulting projections are used as the initial weights of the first layer, whereas the biases and output layer weights are randomly initialized. For different numbers of neurons, Figure 2 shows the accuracy obtained by each initialization strategy using a 5-fold cross-validation scheme. Since we look for the most accurate and stable network configuration, we choose as optimal the network with the highest mean-to-deviation ratio. The resulting search indicates that the best number of hidden neurons is $m_{1} = 20$, $m_{1} = 16$, and $m_{1} = 14$ for the AEN, PCA, and CKA approaches, respectively.

Figure 2.

Artificial neural network performance along the number of nodes in the hidden layer ($m_{1}$) for the three initialization approaches: autoencoder, PCA-based projection, and CKA-based projection. Results are computed under a 5-fold cross-validation scheme.
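The tuning procedure can be sketched as follows. The first function shows how a PCA baseline could provide an $m_{1} \times D$ projection for the first layer, and the second applies the mean-to-deviation rule to per-fold accuracies. This is a sketch under assumptions: samples are stored one per row, the small constant guarding against a zero deviation is an addition of this example, and the accuracy values are invented for illustration, not results from the experiments.

```python
import numpy as np

def pca_projection(X, m1):
    """Top-m1 principal directions as an m1 x D matrix (rows are components)."""
    Xc = X - X.mean(axis=0)                      # center each feature
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Vt[:m1]

def select_hidden_size(fold_acc, eps=1e-12):
    """Pick the hidden size with the highest mean-to-deviation ratio.

    fold_acc maps each candidate m1 to its list of per-fold accuracies.
    """
    ratio = {m: np.mean(a) / (np.std(a) + eps) for m, a in fold_acc.items()}
    return max(ratio, key=ratio.get)

# Hypothetical 5-fold accuracies for three candidate hidden sizes.
fold_acc = {
    10: [0.60, 0.70, 0.65, 0.55, 0.75],
    14: [0.69, 0.70, 0.71, 0.70, 0.70],
    20: [0.72, 0.60, 0.80, 0.65, 0.73],
}
best_m1 = select_hidden_size(fold_acc)

rng = np.random.default_rng(0)
W_pca = pca_projection(rng.normal(size=(50, 10)), m1=3)
```

The ratio favors configurations that are both accurate and stable across folds, so a candidate with a slightly lower mean can still win if its fold-to-fold spread is much smaller.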

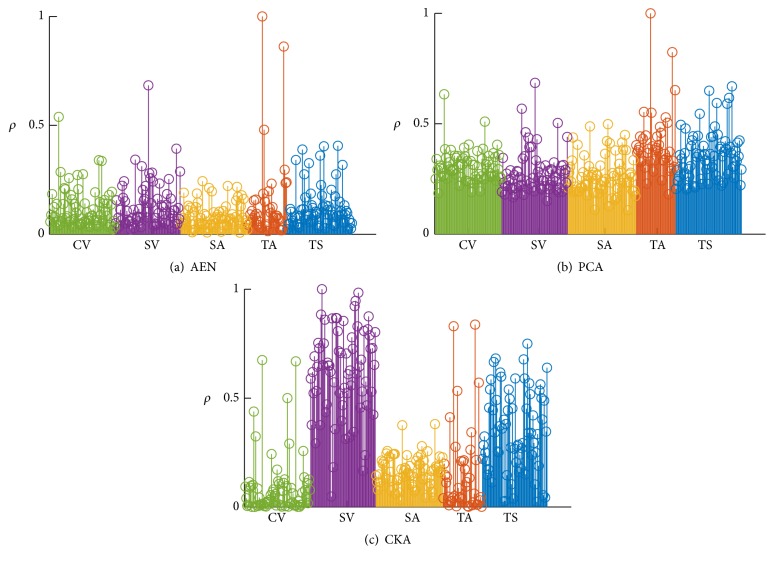

We further analyze the influence of each feature on the initialization process using the relevance criterion introduced in (11). The relevance results in Figure 3 show that the proposed CKA approach enhances the Subcortical Volume features while diminishing the influence of most Cortical Volumes and Thickness Averages. In contrast, the relevance assigned to each feature set by AEN and PCA is practically the same. Hence, CKA allows the selection of relevant biomarkers from MRI.

Figure 3.

Relevance indexes grouped by feature type: Cortical Volume (CV), Subcortical Volume (SV), Surface Area (SA), Thickness Average (TA), and Thickness Std. (TS).

3.4. Classifier Performance of Neurological Classes

As shown in Table 3, the ANN models tuned for the three initialization strategies are contrasted with the four best-performing approaches of the 2014 CADDementia challenge [17]. The compared algorithms are evaluated in terms of accuracy ($\alpha$), area under the receiver-operating-characteristic curve ($\beta$), and class-wise true positive rate ($\tau_{p}^{c}$), which are defined as

| (12) |

where $c \in \{\mathrm{NC}, \mathrm{MCI}, \mathrm{AD}\}$ indexes each class and $N_{c}$, $t_{p}^{c}$, and $t_{n}^{c}$ are the number of samples, true positives, and true negatives for the $c$th class, respectively. The area under the curve $\beta$ is the weighted average of the per-class areas under the ROC curve, $\beta_{c}$. The results presented for the baseline approaches are the ones reported in the challenge for 354 images. Although the testing groups in the challenge and in this paper are not exactly the same, the amount of data, their characteristics, and the blind setup make those two groups equivalent for evaluation purposes.
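For readers who want to reproduce such measures, the sketch below computes the overall accuracy and the class-wise true positive rates from a confusion matrix, together with a class-size-weighted average of per-class AUC values. It assumes the standard definitions (accuracy as the trace of the confusion matrix over the number of samples, true positive rate as correct predictions over class size); the confusion matrix is a hypothetical toy example, and weighting by the cohort class sizes of Table 1 is an assumption, since the exact test-split counts are not listed.

```python
import numpy as np

def accuracy_and_tpr(conf):
    """conf[i, j] counts samples of true class i predicted as class j."""
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)                    # true positives per class
    n_c = conf.sum(axis=1)                # samples per true class
    return tp.sum() / conf.sum(), tp / n_c

def weighted_auc(auc_per_class, n_c):
    """Average of per-class AUC values weighted by class size."""
    n_c = np.asarray(n_c, dtype=float)
    return float(np.dot(auc_per_class, n_c) / n_c.sum())

# Hypothetical confusion matrix for the classes (NC, MCI, AD).
conf = [[8, 2, 0],
        [1, 6, 3],
        [0, 2, 8]]
alpha, tau = accuracy_and_tpr(conf)

# Per-class AUC of NN-CKA (Table 4) weighted by the Table 1 class sizes.
beta = weighted_auc([91.7, 78.4, 88.3], [655, 825, 513])
```

Under that weighting assumption, the last line gives a value close to the 85.3 listed for NN-CKA in Table 4, which is consistent with the weighted-average definition of $\beta$.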

Table 3.

Best performing algorithms in the 2014 CADDementia challenge [17].

| Algorithm | Features | Classifier |
|---|---|---|
| Abdulkadir | Voxel-based morphometry | Support Vector Machine |
| Ledig | Volume and intensity relations | Random Forest classifier |
| Sørensen | Volume, thickness, shape, and intensity relations | Regularized Linear Discriminant Analysis |
| Wachinger | Volume, thickness, and shape | Generalized Linear Model |

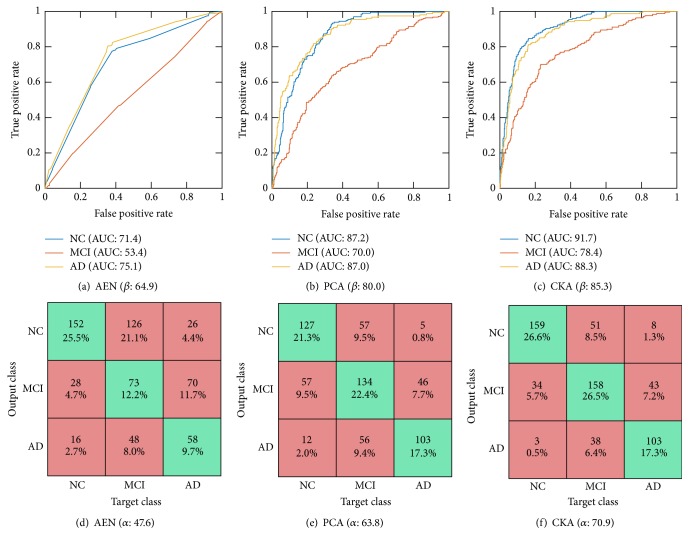

As seen in Table 4 which compares the classification performance on the 30% “best” quality test set for considered algorithms, the proposed approach, besides outperforming other compared approaches of initialization, also performs better than other computer-aided diagnosis methods as a whole. For the “partial” quality images, as expected, the general performance diminishes in all ANN approaches. Nonetheless, the overall accuracy and AUC are still competitive with respect to the challenge winner. Based on the displayed ROC curves and confusion matrices for the ANN-based classifiers with the optimum parameter set (see Figure 4), we also infer that the proposed approach improves MCI discrimination.

Table 4.

Classification performance on the testing groups for considered algorithms under evaluation criteria. Top: baseline approaches. Bottom: ANN pretrainings.

| Algorithm | $\alpha$ | $\tau_{\mathrm{NC}}$ | $\tau_{\mathrm{MCI}}$ | $\tau_{\mathrm{AD}}$ | $\beta$ | $\beta_{\mathrm{NC}}$ | $\beta_{\mathrm{MCI}}$ | $\beta_{\mathrm{AD}}$ |
|---|---|---|---|---|---|---|---|---|
| 2014 CADDementia | | | | | | | | |
| Sørensen | 63.0 | 96.9 | 28.7 | 61.2 | 78.8 | 86.3 | 63.1 | 87.5 |
| Wachinger | 59.0 | 72.1 | 51.6 | 51.5 | 77.0 | 83.3 | 59.4 | 88.2 |
| Ledig | 57.9 | 89.1 | 41.0 | 38.8 | 76.7 | 86.6 | 59.7 | 84.9 |
| Abdulkadir | 53.7 | 45.7 | 65.6 | 49.5 | 77.7 | 85.6 | 59.9 | 86.7 |
| “best” quality testing | | | | | | | | |
| NN-AEN | 47.6 | 73.4 | 33.1 | 38.1 | 64.9 | 71.4 | 53.4 | 75.1 |
| NN-PCA | 63.8 | 70.4 | 56.7 | 66.9 | 80.0 | 87.2 | 70.0 | 87.0 |
| NN-CKA | 70.9 | 78.4 | 66.6 | 68.3 | 85.3 | 91.7 | 78.4 | 88.3 |
| “partial” quality | | | | | | | | |
| NN-AEN | 62.9 | 64.6 | 46.4 | 32.0 | 77.0 | 82.5 | 65.6 | 72.5 |
| NN-PCA | 64.4 | 67.6 | 49.3 | 26.0 | 78.4 | 82.3 | 67.5 | 79.2 |
| NN-CKA | 65.2 | 68.6 | 38.6 | 42.0 | 81.6 | 85.7 | 70.1 | 82.4 |

Figure 4.

Receiver-operating-characteristic curve ((a), (b), and (c)) and confusion matrix ((d), (e), and (f)) on the 30% test data for AEN ((a) and (d)), PCA ((b) and (e)), and CKA ((c) and (f)) initialization approaches at the best parameter set of the ANN classifier.

4. Discussion

From the validation carried out above for MRI-based dementia diagnosis, the following aspects emerge as relevant for the developed proposal of ANN pretraining:

(i) As in state-of-the-art ANN algorithms, the proposed initialization approach has a single free model parameter, namely, the number of hidden neurons. This parameter is tuned heuristically by an exhaustive search so as to reach the highest accuracy in a 5-fold cross-validation (see Figure 2). Thus, 24, 20, and 16 hidden neurons are selected for CKA, AEN, and PCA, respectively. As a result, the suggested CKA approach improves on the other ANN pretraining approaches (by about 10%), with the additional benefit of a lower sensitivity to this parameter.

(ii) We assess the influence of each MRI feature on the pretraining procedure using the relevance criterion introduced in (11). As follows from Figure 3, AEN and PCA weight every feature evenly, which restrains their ability to extract biomarkers. By contrast, CKA enhances the influence of Subcortical Volumes and Thickness Standard deviations while diminishing the contribution of Cortical Volumes and Thickness Averages. Consequently, the proposed approach is also suitable for feature selection tasks.

(iii) For the sake of comparison, we contrast the developed ANN pretraining approach with the four best classification strategies of the 2014 CADDementia challenge, which is devoted especially to dementia classification. From the results summarized in Table 4, it follows that the proposed CKA outperforms the other algorithms in most of the evaluation criteria and imaging conditions, providing the most balanced performance over all classes. Particularly for the 30% testing images, CKA increases the classification accuracy and the average area under the ROC curve by 7 percentage points. It is worth noting that, although Sørensen's approach achieves a $\tau_{\mathrm{NC}}$ value that is 18.5 percentage points higher than that of the proposal, its performance is biased towards NC, yielding a worse $\tau_{\mathrm{MCI}}$ value. That is, CKA achieves a more balanced class-wise performance in dementia classification. In the case of “partial” quality images, in spite of the general performance reduction, CKA remains the best ANN initialization approach. Moreover, the overall measures are still competitive with the results reported for the CADDementia challenge.

(iv) Figure 4 shows the per-class ROC curves and confusion matrices obtained by the contrasted approaches. In all cases, the area under the curve and accuracy for the NC and AD classes are higher than the ones achieved for the MCI class (Figures 4(a)–4(c)). Hence, MCI classification from the incorporated MRI features remains a challenging task for the following reasons: the widely known MCI heterogeneity, MCI being an intermediate class between healthy individuals and those diagnosed with Alzheimer's disease, and the possibility of MCI subjects eventually converting to AD or reverting to NC. Moreover, the confusion matrices displayed in Figures 4(d)–4(f) confirm that NC and AD can be distinguished in most cases. Nevertheless, the MCI class introduces the most errors, both as target and as output class. Therefore, dedicated studies on mild cognitive impairment should improve the diagnosis [5, 40].

5. Conclusion and Future Work

In this paper, we propose a supervised method for initializing the training of artificial neural networks, aiming to improve the computer-aided diagnosis of dementia. Given a set of volume, surface area, and thickness features extracted from the subject's brain MRI, the examined dementia diagnosis task consists of assigning subjects to one of the following neurological groups: normal control (NC), mild cognitive impairment (MCI), or Alzheimer's disease (AD). This dementia classification task is particularly challenging because MCI is a heterogeneous and intermediate category between NC and AD. Also, MCI subjects may convert to AD or revert to NC.

To improve the classification performance, we incorporate a matrix that projects the samples into a more discriminating feature space so that the affinity between projected features and class labels is maximized. Such a criterion is implemented by the centered kernel alignment (CKA) between the feature and target label kernels, providing two key benefits: (i) the only free parameter is the hidden dimension; (ii) a relevance analysis can be introduced to find biomarkers. As a result, our proposal of ANN pretraining outperforms the contrasted algorithms (by about 7 percentage points in classification accuracy and area under the ROC curve) and reduces the class bias, resulting in better MCI discrimination.

Nonetheless, the use of CKA implies a couple of restrictions. Firstly, the number of samples should be larger than input and output dimensions to avoid overfitted linear projections. We cope with this drawback by considering a large enough subset of samples for training purposes (about 1300). Secondly, attained projections must always be of lower dimension compared to the original feature space. In this case, the enhancement on class discrimination is due to the affinity between labels and features, not due to an increase of the dimension.

As future work, we plan to evaluate the discriminative capabilities of CKA in other MRI-based neuropathological tasks, such as predicting Alzheimer's conversion from MCI and classifying attention deficit hyperactivity disorder. We also expect to develop a neural network training scheme using CKA as the cost function.

Acknowledgments

This work was supported by Programa Nacional de Formación de Investigadores “Generación del Bicentenario” 2011 and the research Project no. 111956933522, both funded by COLCIENCIAS. Besides, this research would not have been possible without the funding of the E-health project “Plataforma tecnológica para los servicios de teleasistencia, emergencias médicas, seguimiento y monitoreo permanente de pacientes y apoyo a los programas de prevención” Eje 3-ARTICA.

Appendix

Gradient Descent-Based Optimization of CKA Approach

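The explicit objective function of the empirical CKA in (9) yields [32]

$$
\hat{\rho}(\mathbf{K}_{\mathbf{W}}, \mathbf{B}) = \log\left(\operatorname{tr}(\mathbf{K}_{\mathbf{W}}\mathbf{H}\mathbf{B}\mathbf{H})\right) - \frac{1}{2}\log\left(\operatorname{tr}(\mathbf{K}_{\mathbf{W}}\mathbf{H}\mathbf{K}_{\mathbf{W}}\mathbf{H})\right) + \rho_{0}, \tag{A.1}
$$

with $\rho_{0} \in \mathbb{R}$ being a constant independent of $\mathbf{W}$. We then consider the gradient descent approach to iteratively solve the optimization problem. To this end, we compute the gradient of the explicit function in (A.1) with respect to $\mathbf{W}$ as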

| (A.2) |

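where $\operatorname{diag}(\cdot)$ and $\circ$ denote the diagonal operator and the Hadamard product, respectively. $\mathbf{G} \in \mathbb{R}^{N \times N}$ is the gradient of the objective function with respect to the kernel matrix $\mathbf{K}_{\mathbf{W}}$:

$$
\mathbf{G} = \frac{\mathbf{H}\mathbf{B}\mathbf{H}}{\operatorname{tr}(\mathbf{K}_{\mathbf{W}}\mathbf{H}\mathbf{B}\mathbf{H})} - \frac{\mathbf{H}\mathbf{K}_{\mathbf{W}}\mathbf{H}}{\operatorname{tr}(\mathbf{K}_{\mathbf{W}}\mathbf{H}\mathbf{K}_{\mathbf{W}}\mathbf{H})}. \tag{A.3}
$$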

As a result, the updating rule for W, given the initial guess W 0, becomes

| (A.4) |

with $\mu_{\mathbf{W}}^{t} \in \mathbb{R}^{+}$ being the step size of the updating rule and $\mathbf{W}^{t}$ being the estimated projection matrix at iteration $t$.
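The optimization outlined in this appendix can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' implementation: it assumes a Gaussian kernel $\exp(-d_{\mathbf{W}}^{2}/(2\sigma^{2}))$ with a fixed bandwidth `sigma`, stores samples one per row, drops the constant $\rho_{0}$, and replaces the unspecified step-size schedule with a simple step-halving rule. The gradient expression in `grad_log_cka` is derived for that assumed kernel only, and every function name and the toy data are hypothetical.

```python
import numpy as np

def centering(N):
    return np.eye(N) - np.ones((N, N)) / N

def gaussian_kernel(X, W, sigma):
    """ASSUMED kernel exp(-d_W^2 / (2 sigma^2)); X is N x D, W is m1 x D."""
    Y = X @ W.T
    sq = np.sum(Y ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Y @ Y.T, 0.0)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def log_cka(X, W, B, sigma):
    """log tr(K H B H) - 0.5 log tr(K H K H), without the constant term."""
    H = centering(X.shape[0])
    K = gaussian_kernel(X, W, sigma)
    return np.log(np.sum(K * (H @ B @ H))) - 0.5 * np.log(np.sum(K * (H @ K @ H)))

def grad_log_cka(X, W, B, sigma):
    """Gradient of log_cka with respect to W for the assumed Gaussian kernel."""
    H = centering(X.shape[0])
    K = gaussian_kernel(X, W, sigma)
    HBH, HKH = H @ B @ H, H @ K @ H
    G = HBH / np.sum(K * HBH) - HKH / np.sum(K * HKH)  # derivative w.r.t. K
    P = G * K                                          # Hadamard product
    return (2.0 / sigma ** 2) * W @ X.T @ (P - np.diag(P.sum(axis=1))) @ X

def fit_projection(X, t, W0, sigma=1.0, step=1.0, iters=100):
    """Gradient ascent on log_cka; the step is halved until the objective improves."""
    t = np.asarray(t)
    B = (t[:, None] == t[None, :]).astype(float)
    W, f = W0.copy(), log_cka(X, W0, B, sigma)
    for _ in range(iters):
        g = grad_log_cka(X, W, B, sigma)
        while step > 1e-12:
            W_new = W + step * g
            f_new = log_cka(X, W_new, B, sigma)
            if f_new > f:                              # accept improving steps only
                W, f = W_new, f_new
                break
            step *= 0.5
    return W

# Toy data (hypothetical): only feature 0 carries class information.
rng = np.random.default_rng(0)
t = np.repeat([0, 1, 2], 10)
X = rng.normal(size=(30, 6))
X[:, 0] += 2.0 * t
W0 = 0.1 * rng.normal(size=(2, 6))
W_star = fit_projection(X, t, W0, sigma=1.0)
```

Accepting only improving steps keeps the objective from decreasing between iterations, at the cost of extra kernel evaluations; a fixed or decaying step size would be an equally valid reading of the updating rule.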

Competing Interests

The authors declare that there are no competing financial, professional, or personal interests influencing the performance or presentation of the work described in this paper.

References

- 1. Prince M., Bryce R., Albanese E., Wimo A., Ribeiro W., Ferri C. P. The global prevalence of dementia: a systematic review and metaanalysis. Alzheimer's & Dementia. 2013;9(1):63.e2–75.e2. doi: 10.1016/j.jalz.2012.11.007.

- 2. Wortmann M. Dementia: a global health priority—highlights from an ADI and World Health Organization report. Alzheimer's Research & Therapy. 2012;4(5, article 40). doi: 10.1186/alzrt143.

- 3. Brookmeyer R., Johnson E., Ziegler-Graham K., Arrighi H. M. Forecasting the global burden of Alzheimer's disease. Alzheimer's & Dementia. 2007;3(3):186–191. doi: 10.1016/j.jalz.2007.04.381.

- 4. Klöppel S., Peter J., Ludl A., et al. Applying automated MR-based diagnostic methods to the memory clinic: a prospective study. Journal of Alzheimer's Disease. 2015;47(4):939–954. doi: 10.3233/jad-150334.

- 5. Wolz R., Julkunen V., Koikkalainen J., et al. Multi-method analysis of MRI images in early diagnostics of Alzheimer's disease. PLoS ONE. 2011;6(10):1–9. Article ID e25446. doi: 10.1371/journal.pone.0025446.

- 6. Tijms B. M., Wink A. M., de Haan W., et al. Alzheimer's disease: connecting findings from graph theoretical studies of brain networks. Neurobiology of Aging. 2013;34(8):2023–2036. doi: 10.1016/j.neurobiolaging.2013.02.020.

- 7. Lithfous S., Dufour A., Després O. Spatial navigation in normal aging and the prodromal stage of Alzheimer's disease: insights from imaging and behavioral studies. Ageing Research Reviews. 2013;12(1):201–213. doi: 10.1016/j.arr.2012.04.007.

- 8. Dubois B., Feldman H. H., Jacova C., et al. Advancing research diagnostic criteria for Alzheimer's disease: the IWG-2 criteria. The Lancet Neurology. 2014;13(6):614–629. doi: 10.1016/s1474-4422(14)70090-0.

- 9. McKhann G. M., Knopman D. S., Chertkow H., et al. The diagnosis of dementia due to Alzheimer's disease: recommendations from the National Institute on Aging-Alzheimer's Association workgroups on diagnostic guidelines for Alzheimer's disease. Alzheimer's & Dementia. 2011;7(3):263–269. doi: 10.1016/j.jalz.2011.03.005.

- 10. Jack C. R., Knopman D. S., Jagust W. J., et al. Tracking pathophysiological processes in Alzheimer's disease: an updated hypothetical model of dynamic biomarkers. The Lancet Neurology. 2013;12(2):207–216. doi: 10.1016/s1474-4422(12)70291-0.

- 11. Papakostas G. A., Savio A., Graña M., Kaburlasos V. G. A lattice computing approach to Alzheimer's disease computer assisted diagnosis based on MRI data. Neurocomputing. 2015;150:37–42. doi: 10.1016/j.neucom.2014.02.076.

- 12. Sørensen L., Pai A., Igel C., Nielsen M. Hippocampal texture predicts conversion from MCI to Alzheimer's disease. Alzheimer's & Dementia. 2013;9(4):P581. doi: 10.1016/j.jalz.2013.05.1155.

- 13. Klöppel S., Abdulkadir A., Jack C. R., Koutsouleris N., Mourão-Miranda J., Vemuri P. Diagnostic neuroimaging across diseases. NeuroImage. 2012;61(2):457–463. doi: 10.1016/j.neuroimage.2011.11.002.

- 14. Moradi E., Pepe A., Gaser C., Huttunen H., Tohka J. Machine learning framework for early MRI-based Alzheimer's conversion prediction in MCI subjects. NeuroImage. 2015;104:398–412. doi: 10.1016/j.neuroimage.2014.10.002.

- 15. Eskildsen S. F., Coupé P., Fonov V. S., Pruessner J. C., Collins D. L. Structural imaging biomarkers of Alzheimer's disease: predicting disease progression. Neurobiology of Aging. 2015;36(supplement 1):S23–S31. doi: 10.1016/j.neurobiolaging.2014.04.034.

- 16. Farhan S., Fahiem M. A., Tauseef H. An ensemble-of-classifiers based approach for early diagnosis of Alzheimer's disease: classification using structural features of brain images. Computational and Mathematical Methods in Medicine. 2014;2014:11. Article ID 862307. doi: 10.1155/2014/862307.

- 17. Bron E. E., Smits M., van der Flier W. M., et al. Standardized evaluation of algorithms for computer-aided diagnosis of dementia based on structural MRI: the CADDementia challenge. NeuroImage. 2015;111:562–579. doi: 10.1016/j.neuroimage.2015.01.048.

- 18. Amato F., López A., Peña-Méndez E. M., Vaňhara P., Hampl A., Havel J. Artificial neural networks in medical diagnosis. Journal of Applied Biomedicine. 2013;11(2):47–58. doi: 10.2478/v10136-012-0031-x.

- 19. Chyzhyk D., Savio A., Graña M. Evolutionary ELM wrapper feature selection for Alzheimer's disease CAD on anatomical brain MRI. Neurocomputing. 2014;128:73–80. doi: 10.1016/j.neucom.2013.01.065.

- 20. Vincent P., Larochelle H., Lajoie I., Bengio Y., Manzagol P.-A. Stacked denoising autoencoders: learning useful representations in a deep network with a local denoising criterion. Journal of Machine Learning Research. 2010;11(3):3371–3408.

- 21. Hinton G. E., Osindero S., Teh Y.-W. A fast learning algorithm for deep belief nets. Neural Computation. 2006;18(7):1527–1554. doi: 10.1162/neco.2006.18.7.1527.

- 22. Bengio Y., Lamblin P. Advances in Neural Information Processing Systems 19. MIT Press; 2007. Greedy layer-wise training of deep networks; pp. 153–160.

- 23. Ranzato M., Poultney C., Chopra S., LeCun Y. Efficient learning of sparse representations with an energy-based model. Proceedings of the Advances in Neural Information Processing Systems (NIPS '07); 2007; pp. 1137–1144.

- 24. Bengio Y. Neural Networks: Tricks of the Trade. Vol. 7700. Berlin, Germany: Springer; 2012. Practical recommendations for gradient-based training of deep architectures; pp. 437–478. (Lecture Notes in Computer Science).

- 25. Weston J., Ratle F., Mobahi H., Collobert R. Deep learning via semi-supervised embedding. In: Montavon G., Orr G. B., Müller K.-R., editors. Neural Networks: Tricks of the Trade. Vol. 7700. Berlin, Germany: Springer; 2012. pp. 639–655. (Lecture Notes in Computer Science).

- 26. Mohamed A.-R., Sainath T. N., Dahl G., Ramabhadran B., Hinton G. E., Picheny M. A. Deep belief networks using discriminative features for phone recognition. Proceedings of the 36th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP '11); May 2011; Prague, Czech Republic. pp. 5060–5063.

- 27. Bengio Y. Learning deep architectures for AI. Foundations and Trends in Machine Learning. 2009;2(1):1–127. doi: 10.1561/2200000006.

- 28. Basheer I. A., Hajmeer M. Artificial neural networks: fundamentals, computing, design, and application. Journal of Microbiological Methods. 2000;43(1):3–31. doi: 10.1016/s0167-7012(00)00201-3.

- 29. Xu J.-W., Paiva A. R., Park I., Principe J. C. A reproducing kernel Hilbert space framework for information-theoretic learning. IEEE Transactions on Signal Processing. 2008;56(12):5891–5902. doi: 10.1109/TSP.2008.2005085.

- 30. Orbes-Arteaga M., Cárdenas-Peña D., Álvarez M. A., Orozco A. A., Castellanos-Dominguez G. Image Analysis and Processing—ICIAP 2015: 18th International Conference, Genoa, Italy, September 7–11, 2015, Proceedings, Part I. Vol. 9279. Berlin, Germany: Springer; 2015. Kernel centered alignment supervised metric for multi-atlas segmentation; pp. 658–667. (Lecture Notes in Computer Science).

- 31. Cortes C., Mohri M., Rostamizadeh A. Algorithms for learning kernels based on centered alignment. Journal of Machine Learning Research. 2012;13:795–828.

- 32. Brockmeier A. J., Choi J. S., Kriminger E. G., Francis J. T., Principe J. C. Neural decoding with kernel-based metric learning. Neural Computation. 2014;26(6):1080–1107. doi: 10.1162/NECO_a_00591.

- 33. Fischl B. FreeSurfer. NeuroImage. 2012;62(2):774–781. doi: 10.1016/j.neuroimage.2012.01.021.

- 34. Han X., Jovicich J., Salat D., et al. Reliability of MRI-derived measurements of human cerebral cortical thickness: the effects of field strength, scanner upgrade and manufacturer. NeuroImage. 2006;32(1):180–194. doi: 10.1016/j.neuroimage.2006.02.051.

- 35. Ségonne F., Dale A. M., Busa E., et al. A hybrid approach to the skull stripping problem in MRI. NeuroImage. 2004;22(3):1060–1075. doi: 10.1016/j.neuroimage.2004.03.032.

- 36. Sled J. G., Zijdenbos A. P., Evans A. C. A nonparametric method for automatic correction of intensity nonuniformity in MRI data. IEEE Transactions on Medical Imaging. 1998;17(1):87–97. doi: 10.1109/42.668698.

- 37. Ségonne F., Pacheco J., Fischl B. Geometrically accurate topology-correction of cortical surfaces using nonseparating loops. IEEE Transactions on Medical Imaging. 2007;26(4):518–529. doi: 10.1109/TMI.2006.887364.

- 38. Fischl B., van der Kouwe A., Destrieux C., et al. Automatically parcellating the human cerebral cortex. Cerebral Cortex. 2004;14(1):11–22. doi: 10.1093/cercor/bhg087.

- 39. Buckner R. L., Head D., Parker J., et al. A unified approach for morphometric and functional data analysis in young, old, and demented adults using automated atlas-based head size normalization: reliability and validation against manual measurement of total intracranial volume. NeuroImage. 2004;23(2):724–738. doi: 10.1016/j.neuroimage.2004.06.018.

- 40. Ramírez J., Górriz J. M., Ortiz A., Padilla P., Martínez-Murcia F. J. Innovation in Medicine and Healthcare 2015. Vol. 45. Berlin, Germany: Springer; 2016. Ensemble tree learning techniques for magnetic resonance image analysis; pp. 395–404. (Smart Innovation, Systems and Technologies).