Significance

Modern data analysis requires solving hard optimization problems with a large number of parameters and a large number of constraints. A successful approach is to replace these hard problems by surrogate problems that are convex and hence tractable. Semidefinite programming relaxations offer a powerful method to construct such relaxations. In many instances it was observed that a semidefinite relaxation becomes very accurate when the noise level in the data decreases below a certain threshold. We develop a new method to compute these noise thresholds (or phase transitions) using ideas from statistical physics.

Keywords: semidefinite programming, phase transitions, synchronization, community detection

Abstract

Statistical inference problems arising within signal processing, data mining, and machine learning naturally give rise to hard combinatorial optimization problems. These problems become intractable when the dimensionality of the data is large, as is often the case for modern datasets. A popular idea is to construct convex relaxations of these combinatorial problems, which can be solved efficiently for large-scale datasets. Semidefinite programming (SDP) relaxations are among the most powerful methods in this family and are surprisingly well suited for a broad range of problems where data take the form of matrices or graphs. It has been observed several times that when the statistical noise is small enough, SDP relaxations correctly detect the underlying combinatorial structures. In this paper we develop asymptotic predictions for several detection thresholds, as well as for the estimation error above these thresholds. We study some classical SDP relaxations for statistical problems motivated by graph synchronization and community detection in networks. We map these optimization problems to statistical mechanics models with vector spins and use nonrigorous techniques from statistical mechanics to characterize the corresponding phase transitions. Our results clarify the effectiveness of SDP relaxations in solving high-dimensional statistical problems.

Many information processing tasks can be formulated as optimization problems. This idea has been central to data analysis and statistics at least since Gauss and Legendre’s invention of the least-squares method in the early 19th century (1).

Modern datasets pose new challenges to this centuries-old framework. On one hand, high-dimensional applications require the simultaneous estimation of millions of parameters. Examples span genomics (2), imaging (3), web services (4), and so on. On the other hand, the unknown object to be estimated often has a combinatorial structure: In clustering we aim at estimating a partition of the data points (5). Network analysis tasks usually require identification of a discrete subset of nodes in a graph (6, 7). Parsimonious data explanations are sought by imposing combinatorial sparsity constraints (8).

There is an obvious tension between the above requirements. Although efficient algorithms are needed to estimate a large number of parameters, the maximum likelihood (ML) method often requires the solution of NP-hard (nondeterministic polynomial-time hard) combinatorial problems. A flourishing line of work addresses this conundrum by designing effective convex relaxations of these combinatorial problems (9–11).

Unfortunately, the statistical properties of such convex relaxations are well understood only in a few cases [compressed sensing being the most important success story (12–14)]. In this paper we use tools from statistical mechanics to develop a precise picture of the behavior of a class of semidefinite programming relaxations. Relaxations of this type appear to be surprisingly effective in a variety of problems ranging from clustering to graph synchronization. For the sake of concreteness we will focus on three specific problems.

$\mathbb{Z}_2$ Synchronization

In the general synchronization problem, we aim at estimating $\theta_{0,1}, \dots, \theta_{0,n}$, which are unknown elements of a known group $\mathfrak{G}$. This is done using data that consist of noisy observations of relative positions $Y_{ij} = \theta_{0,i}^{-1}\theta_{0,j} + \text{noise}$. A large number of practical problems can be modeled in this framework. For instance, the case $\mathfrak{G} = SO(3)$ (the special orthogonal group, i.e., the group of rotations in three dimensions) is relevant for camera registration and molecule structure reconstruction in electron microscopy (15).

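$\mathbb{Z}_2$ synchronization is arguably the simplest problem in this class and corresponds to $\mathfrak{G} = \mathbb{Z}_2$ (the group of integers modulo 2). Without loss of generality, we will identify this with the group $(\{+1,-1\}, \cdot)$ (elements of the group are $+1$ and $-1$, and the group operation is ordinary multiplication). We assume observations to be distorted by Gaussian noise; namely, for each $i < j$ we observe $Y_{ij} = (\lambda/n)\,\theta_{0,i}\theta_{0,j} + W_{ij}$, where the $W_{ij} \sim \mathsf{N}(0, 1/n)$ are independent Gaussian random variables. This fits the general definition because $\theta_{0,i}^{-1} = \theta_{0,i}$ for $\theta_{0,i} \in \{+1,-1\}$.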

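In matrix notation, we observe a symmetric matrix $Y \in \mathbb{R}^{n\times n}$ given by

$$Y = \frac{\lambda}{n}\,\theta_0\theta_0^{*} + W. \tag{1}$$

(Note that entries on the diagonal carry no information.) Here $\theta_0 \in \{+1,-1\}^n$, $\theta_0^{*}$ denotes the transpose of $\theta_0$, and $W$ is a random matrix from the Gaussian orthogonal ensemble (GOE), i.e., a symmetric matrix with independent entries (up to symmetry) $W_{ij} \sim \mathsf{N}(0, 1/n)$ for $i < j$ and $W_{ii} \sim \mathsf{N}(0, 2/n)$.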
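To make Eq. 1 concrete, here is a minimal sketch that draws one realization of the $\mathbb{Z}_2$ model with GOE noise. The function name `sample_z2_synchronization` is our own hypothetical choice, not code from the paper; it uses plain Python lists and is therefore meant only for small $n$.

```python
import math
import random


def sample_z2_synchronization(n, lam, rng):
    """Draw (theta0, Y) with Y = (lam/n) theta0 theta0^T + W and W ~ GOE(n).

    GOE convention of the text: W symmetric, W_ij ~ N(0, 1/n) for i < j and
    W_ii ~ N(0, 2/n). The diagonal of Y carries no information on theta0.
    """
    theta0 = [rng.choice((1, -1)) for _ in range(n)]
    Y = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # theta0[i] ** 2 == 1, so the signal on the diagonal is just lam/n
        Y[i][i] = lam / n + rng.gauss(0.0, math.sqrt(2.0 / n))
        for j in range(i + 1, n):
            signal = lam / n * theta0[i] * theta0[j]
            Y[i][j] = Y[j][i] = signal + rng.gauss(0.0, math.sqrt(1.0 / n))
    return theta0, Y


theta0, Y = sample_z2_synchronization(200, 2.0, random.Random(0))
```

Because the observations enter only through $Y$, such a sampler can be reused to compare any of the estimators discussed below.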

A solution of the $\mathbb{Z}_2$ synchronization problem can be interpreted as a bipartition of the set $\{1, \dots, n\}$. Hence, this has been used as a model for partitioning signed networks (16, 17).

$U(1)$ Synchronization

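This is again an instance of the synchronization problem. However, we take $\mathfrak{G} = U(1) = \{z \in \mathbb{C} : |z| = 1\}$. This is the group of complex numbers of modulus one, with the operation of complex multiplication.

As in the previous case, we assume observations to be distorted by Gaussian noise; that is, for each $i < j$ we observe $Y_{ij} = (\lambda/n)\,\theta_{0,i}\overline{\theta_{0,j}} + W_{ij}$, where $\overline{z}$ denotes complex conjugation† and $W_{ij} \sim \mathsf{CN}(0, 1/n)$.

In matrix notation, this model takes the same form as [1], provided we interpret $\theta_0^{*}$ as the conjugate transpose of the vector $\theta_0 \in \mathbb{C}^n$, with components $\theta_{0,i}$, $|\theta_{0,i}| = 1$. We will follow this convention throughout.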
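A corresponding sketch for the $U(1)$ model, under the same caveats (hypothetical function name, small $n$ only). The off-diagonal noise follows the complex normal convention of the footnote, with real and imaginary parts each of variance $1/(2n)$. The distribution of the diagonal noise is not specified in the text, so drawing it as real $\mathsf{N}(0, 1/n)$ is our assumption (the diagonal carries no information anyway).

```python
import cmath
import math
import random


def sample_u1_synchronization(n, lam, rng):
    """Draw (theta0, Y) with Y_ij = (lam/n) theta0_i conj(theta0_j) + W_ij.

    Off-diagonal noise W_ij ~ CN(0, 1/n): independent real and imaginary parts,
    each N(0, 1/(2n)). Y is Hermitian, so Y_ji = conj(Y_ij).
    Assumption (not specified in the text): diagonal noise is real N(0, 1/n).
    """
    theta0 = [cmath.exp(1j * rng.uniform(0.0, 2.0 * math.pi)) for _ in range(n)]
    s = math.sqrt(1.0 / (2.0 * n))
    Y = [[0j] * n for _ in range(n)]
    for i in range(n):
        Y[i][i] = complex(lam / n + rng.gauss(0.0, math.sqrt(1.0 / n)), 0.0)
        for j in range(i + 1, n):
            noise = complex(rng.gauss(0.0, s), rng.gauss(0.0, s))
            Y[i][j] = lam / n * theta0[i] * theta0[j].conjugate() + noise
            Y[j][i] = Y[i][j].conjugate()
    return theta0, Y


theta0, Y = sample_u1_synchronization(100, 2.0, random.Random(1))
```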

$U(1)$ synchronization has been used as a model for clock synchronization over networks (18, 19). It is also closely related to the phase-retrieval problem in signal processing (20–22). An important qualitative difference with respect to the previous example ($\mathbb{Z}_2$ synchronization) lies in the fact that $U(1)$ is a continuous group. We regard this as a prototype of synchronization problems over compact Lie groups [e.g., $SO(3)$].

Hidden Partition

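The hidden (or planted) partition (also known as community detection) model is a statistical model for the problem of finding clusters in large network datasets (see refs. 7, 23, 24 and references therein for earlier work). The data consist of a graph $G = (V, E)$ over the vertex set $V = [n] \equiv \{1, \dots, n\}$ generated as follows. We partition $V = V_+ \cup V_-$ by setting $i \in V_+$ or $i \in V_-$ independently across vertices with $\mathbb{P}(i \in V_+) = \mathbb{P}(i \in V_-) = 1/2$. Conditional on the partition, edges are independent with

$$\mathbb{P}\big((i,j) \in E \,\big|\, V_+, V_-\big) = \begin{cases} a/n & \text{if } \{i,j\} \subseteq V_+ \text{ or } \{i,j\} \subseteq V_-,\\ b/n & \text{otherwise.} \end{cases} \tag{2}$$

Here $a > b > 0$ are model parameters that will be kept of order one as $n \to \infty$. This corresponds to a random graph with bounded average degree and a cluster (also known as block or community) structure corresponding to the partition $V = V_+ \cup V_-$. Given a realization of such a graph, we are interested in estimating the underlying partition.

We can encode the partition $V = V_+ \cup V_-$ by a vector $\theta_0 \in \{+1,-1\}^n$, letting $\theta_{0,i} = +1$ if $i \in V_+$ and $\theta_{0,i} = -1$ if $i \in V_-$. An important insight, which we will further develop below (25, 26), is that this problem is analogous to $\mathbb{Z}_2$ synchronization, with signal strength $\lambda = (a-b)/\sqrt{2(a+b)}$. The parameters' correspondence is obtained, at a heuristic level, by noting that if $A_G$ is the adjacency matrix of $G$ and $d = (a+b)/2$ is its average degree, then $\mathbb{E}\langle \theta_0, A_G\theta_0\rangle/(n\sqrt{d}) \approx \lambda$. (Here and below, $\langle \cdot, \cdot \rangle$ denotes the standard scalar product between vectors.)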
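The following sketch samples the hidden partition model of Eq. 2 and checks the heuristic correspondence numerically. The helper names are hypothetical; the parameters $a = 6$, $b = 2$ are chosen only because they give $d = 4$ and $\lambda = 1$ exactly. The $O(n^2)$ loop over pairs is fine for $n$ in the low thousands but not for large graphs.

```python
import math
import random


def sample_hidden_partition(n, a, b, rng):
    """Draw (theta0, adjacency sets) from the hidden partition model of Eq. 2.

    Each vertex joins V_+ (theta0 = +1) or V_- (theta0 = -1) with probability 1/2;
    each pair {i, j} is an edge with probability a/n (same side) or b/n (otherwise).
    """
    theta0 = [rng.choice((1, -1)) for _ in range(n)]
    adj = [set() for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            p = (a if theta0[i] == theta0[j] else b) / n
            if rng.random() < p:
                adj[i].add(j)
                adj[j].add(i)
    return theta0, adj


def snr_parameters(a, b):
    """Average degree d = (a + b)/2 and signal strength lambda = (a - b)/sqrt(2(a + b))."""
    return (a + b) / 2.0, (a - b) / math.sqrt(2.0 * (a + b))


a, b, n = 6.0, 2.0, 1000
d, lam = snr_parameters(a, b)  # d = 4.0, lam = 1.0
theta0, adj = sample_hidden_partition(n, a, b, random.Random(2))
# <theta0, A_G theta0> = sum over ordered adjacent pairs (i, j) of theta0_i theta0_j
quad = sum(theta0[i] * theta0[j] for i in range(n) for j in adj[i])
ratio = quad / (n * math.sqrt(d))  # should be close to lam
```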

A generalization of this problem to the case of more than two blocks has been studied since the 1980s as a model for social network structure (27), under the name of “stochastic block model.” For the sake of simplicity, we will focus here on the two-blocks case.

Illustrations

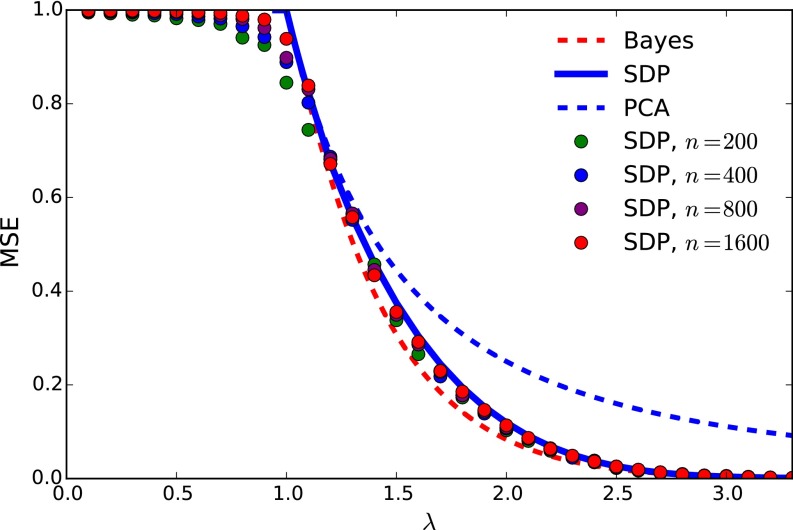

As a first preview of our results, Fig. 1 reports our analytical predictions for the estimation error in the $\mathbb{Z}_2$ synchronization problem, comparing them with numerical simulations using semidefinite programming (SDP). An estimator is a map $\hat\theta: \mathbb{R}^{n\times n} \to \mathbb{R}^n$, $Y \mapsto \hat\theta(Y)$. We compare various estimators in terms of their per-coordinate mean square error (MSE):

$$\mathrm{MSE}_n(\hat\theta) \equiv \frac{1}{n}\,\mathbb{E}\Big\{\min_{s \in \{+1,-1\}} \big\|\hat\theta(Y) - s\,\theta_0\big\|_2^2\Big\}, \tag{3}$$

where expectation is with respect to the noise model [1] and $\theta_0 \in \{+1,-1\}^n$ uniformly random. Note the minimization with respect to the sign $s$ inside the expectation: because of the symmetry of [1], the vector $\theta_0$ can only be estimated up to a global sign. We will be interested in the high-dimensional limit $n \to \infty$ and will omit the subscript $n$ (thus writing $\mathrm{MSE}(\hat\theta)$) to denote this limit. Note that a trivial estimator that always returns 0 has error $\mathrm{MSE}_n(0) = 1$.
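The sign minimization in Eq. 3 is easy to get wrong in simulations, so here is a minimal sketch of the per-realization loss (the function name is hypothetical). Averaging it over independent draws of $(\theta_0, Y)$ gives a Monte Carlo estimate of $\mathrm{MSE}_n$.

```python
def squared_error_up_to_sign(theta_hat, theta0):
    """min over s in {+1, -1} of ||theta_hat - s * theta0||^2 / n, for one realization."""
    n = len(theta0)
    err_plus = sum((x - t) ** 2 for x, t in zip(theta_hat, theta0))
    err_minus = sum((x + t) ** 2 for x, t in zip(theta_hat, theta0))
    return min(err_plus, err_minus) / n


theta0 = [1, -1, -1, 1]
print(squared_error_up_to_sign([0.0] * 4, theta0))       # 1.0: the trivial estimator
print(squared_error_up_to_sign([-1, 1, 1, -1], theta0))  # 0.0: correct up to a global sign
```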

Fig. 1.

Estimating $\theta_0$ under the noisy $\mathbb{Z}_2$ synchronization model of Eq. 1. Curves correspond to (asymptotic) analytical predictions, and dots correspond to numerical simulations (averaged over 100 realizations).

Classical statistical theory suggests two natural reference estimators: the Bayes optimal and the maximum likelihood estimators. We will discuss these methods first, to set the stage for SDP relaxations.

Bayes Optimal Estimator.

The Bayes optimal estimator (also known as minimum MSE) provides a lower bound on the performance of any other approach. It takes the conditional expectation of the unknown signal given the observations:

| [4] |

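Explicit formulas are given in SI Appendix. We note that $\hat\theta^{\mathrm{Bayes}}$ assumes knowledge of the prior distribution. The red dashed curve in Fig. 1 presents our analytical prediction for the asymptotic MSE of $\hat\theta^{\mathrm{Bayes}}$. Notice that $\mathrm{MSE}(\hat\theta^{\mathrm{Bayes}}) = 1$ for all $\lambda \le 1$ and $\mathrm{MSE}(\hat\theta^{\mathrm{Bayes}}) < 1$ strictly for all $\lambda > 1$, with $\mathrm{MSE}(\hat\theta^{\mathrm{Bayes}}) \to 0$ quickly as $\lambda \to \infty$. The point $\lambda_c^{\mathrm{Bayes}} = 1$ corresponds to a phase transition for optimal estimation, and no method can have nontrivial $\mathrm{MSE}(\hat\theta) < 1$ for $\lambda < 1$.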

Maximum Likelihood.

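The maximum likelihood estimator $\hat\theta^{\mathrm{ML}}$ is given by the solution of

$$\hat\theta^{\mathrm{ML}}(Y) = c(\lambda)\,\arg\max_{x \in \{+1,-1\}^n} \langle x, Y x \rangle. \tag{5}$$

Here $c(\lambda)$ is a scaling factor‡ that is chosen according to the asymptotic theory so as to minimize the MSE. As for the Bayes optimal curve, we obtain $\mathrm{MSE}(\hat\theta^{\mathrm{ML}}) = 1$ for $\lambda \le 1$ and $\mathrm{MSE}(\hat\theta^{\mathrm{ML}}) < 1$ (and rapidly decaying to 0) for $\lambda > 1$. (We refer to SI Appendix for this result.)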
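For very small $n$, Eq. 5 can be solved by exhaustive search, which makes its exponential cost explicit. The sketch below (hypothetical function name) returns the unscaled maximizer, i.e., it omits the scaling factor, and fixes $x_1 = +1$ because $x$ and $-x$ have the same objective value.

```python
import itertools


def ml_bruteforce(Y):
    """Exhaustive search for argmax over x in {+1, -1}^n of <x, Y x> (no scaling factor).

    Fixing x_1 = +1 halves the search; the cost is still 2^(n-1) evaluations of
    an O(n^2) quadratic form, so this is usable only for tiny n.
    """
    n = len(Y)
    best_x, best_val = None, float("-inf")
    for tail in itertools.product((1, -1), repeat=n - 1):
        x = (1,) + tail
        val = sum(x[i] * Y[i][j] * x[j] for i in range(n) for j in range(n))
        if val > best_val:
            best_x, best_val = x, val
    return list(best_x), best_val
```

As a sanity check, on the noiseless matrix $Y = \theta_0\theta_0^{*}$ the objective equals $\langle x, \theta_0\rangle^2$, so the search recovers $\pm\theta_0$.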

Semidefinite Programming.

Neither the Bayes nor the maximum likelihood approach can be implemented efficiently. In particular, solving the combinatorial optimization problem in Eq. 5 is a prototypical NP-complete problem. Even worse, approximating the optimum value within a sublogarithmic factor is computationally hard (28) (from a worst-case perspective). SDP relaxations allow us to obtain tractable approximations. Specifically, and following a standard lifting idea, we replace the problem [5] by the following semidefinite program over the symmetric matrix $X \in \mathbb{R}^{n\times n}$ (18, 29, 30):

$$\begin{aligned} &\text{maximize} && \langle Y, X \rangle,\\ &\text{subject to} && X \succeq 0, \quad X_{ii} = 1 \ \ \forall i \in [n]. \end{aligned} \tag{6}$$

We use $\langle A, B \rangle$ to denote the scalar product between matrices, namely, $\langle A, B \rangle \equiv \mathrm{Tr}(A^{*}B)$, and $X \succeq 0$ to indicate that $X$ is positive semidefinite§ (PSD). If we assume $\mathrm{rank}(X) = 1$, the SDP [6] reduces to the maximum-likelihood problem [5]: indeed, $X = xx^{*}$ with $X_{ii} = 1$ forces $x \in \{+1,-1\}^n$, and $\langle Y, xx^{*} \rangle = \langle x, Yx \rangle$. By dropping this condition, we obtain a convex optimization problem that is solvable in polynomial time. Given an optimizer $X_{\mathrm{opt}}$ of this convex problem, we need to produce a vector estimate. We follow a different strategy from standard rounding methods in computer science, which is motivated by our analysis below. We compute the eigenvalue decomposition $X_{\mathrm{opt}} = \sum_{i=1}^n \xi_i v_i v_i^{*}$, with eigenvalues $\xi_1 \ge \xi_2 \ge \dots \ge \xi_n \ge 0$ and eigenvectors $v_i$, with $\|v_i\|_2 = 1$. We then return the estimate

| [7] |

with a certain scaling factor (SI Appendix).
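Off-the-shelf interior-point solvers can be used for Eq. 6. As a dependency-free alternative, the sketch below swaps in a low-rank (Burer–Monteiro-style) parametrization $X = \sigma\sigma^{*}$ with $\sigma \in \mathbb{R}^{n\times m}$, anticipating the reformulation in Eq. 13, and runs block coordinate ascent: each row $\sigma_i$ is replaced by the normalized vector $\sum_{j \ne i} Y_{ij}\sigma_j$, which maximizes the objective over $\sigma_i$ with the other rows fixed. It then returns $\sqrt{n}\,v_1$, i.e., the rounding step with the scaling factor set to one (our assumption, since that factor is specified only in SI Appendix). This is not the solver used in the paper, all names are hypothetical, and coordinate ascent only guarantees a stationary point.

```python
import math
import random


def sdp_low_rank(Y, m, rng, sweeps=100):
    """Block coordinate ascent on sum_{i,j} Y_ij <s_i, s_j> over unit vectors s_i in R^m.

    Returns sigma (n rows of length m); X = sigma sigma^T is feasible for Eq. 6.
    """
    n = len(Y)
    sigma = []
    for _ in range(n):
        row = [rng.gauss(0.0, 1.0) for _ in range(m)]
        norm = math.sqrt(sum(c * c for c in row))
        sigma.append([c / norm for c in row])
    for _ in range(sweeps):
        for i in range(n):
            # With the other rows fixed, the objective is 2 <s_i, g> + const,
            # maximized on the unit sphere by s_i = g / ||g||.
            g = [0.0] * m
            for j in range(n):
                if j != i:
                    yij, row_j = Y[i][j], sigma[j]
                    for k in range(m):
                        g[k] += yij * row_j[k]
            norm = math.sqrt(sum(c * c for c in g))
            if norm > 0.0:  # keep the old row in the degenerate case g = 0
                sigma[i] = [c / norm for c in g]
    return sigma


def top_eigvec_of_gram(sigma, rng, iters=200):
    """Unit principal eigenvector of the PSD matrix X = sigma sigma^T via power iteration."""
    n, m = len(sigma), len(sigma[0])
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        u = [sum(sigma[i][k] * v[i] for i in range(n)) for k in range(m)]  # sigma^T v
        w = [sum(sigma[i][k] * u[k] for k in range(m)) for i in range(n)]  # X v
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    return v


def sdp_estimate(Y, m, rng):
    """sqrt(n) * v_1(X), i.e., the rounding step with the scaling factor set to 1."""
    v1 = top_eigvec_of_gram(sdp_low_rank(Y, m, rng), rng)
    return [math.sqrt(len(Y)) * c for c in v1]
```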

Our analytical prediction for $\mathrm{MSE}(\hat\theta^{\mathrm{SDP}})$ is plotted as the blue solid line in Fig. 1. Dots report the results of numerical simulations with this relaxation for increasing problem dimensions. The asymptotic theory appears to capture these data very well already at the simulated dimensions. For further comparison, alongside the above estimators, we report the asymptotic prediction for $\mathrm{MSE}(\hat\theta^{\mathrm{PCA}})$, the mean square error of principal component analysis (PCA). This method simply returns the principal eigenvector of $Y$, suitably rescaled (SI Appendix).
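PCA can be sketched in the same dependency-free style. Power iteration on $Y$ itself would converge to the eigenvalue of largest magnitude, which need not be the largest one, so the sketch first shifts $Y$ by a Gershgorin bound. The $\sqrt{n}$ normalization stands in for the rescaling described in SI Appendix and is our assumption; the function name is hypothetical.

```python
import math
import random


def pca_estimate(Y, rng, iters=300):
    """sqrt(n) times the principal eigenvector of the real symmetric matrix Y.

    Power iteration runs on Y + shift * I, where shift is the largest absolute row
    sum of Y (a Gershgorin bound on |eigenvalues|). Y + shift * I is then PSD, and
    its top eigenvector is the eigenvector of the largest (algebraic) eigenvalue of Y.
    """
    n = len(Y)
    shift = max(sum(abs(y) for y in row) for row in Y)
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        w = [sum(Y[i][j] * v[j] for j in range(n)) + shift * v[i] for i in range(n)]
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    return [math.sqrt(n) * c for c in v]
```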

Fig. 1 reveals several interesting features.

First, it is apparent that optimal estimation undergoes a phase transition. Bayes optimal estimation achieves nontrivial accuracy as soon as $\lambda > 1$. The same is achieved by a method as simple as PCA (blue dashed curve). On the other hand, for $\lambda < 1$, no method can achieve $\mathrm{MSE}(\hat\theta) < 1$ strictly [whereas $\mathrm{MSE}(\hat\theta) = 1$ is trivially achieved by $\hat\theta = 0$].

Second, PCA is suboptimal at large signal strength. PCA can be implemented efficiently but does not exploit the information $\theta_{0,i} \in \{+1,-1\}$. As a consequence, its estimation error is significantly suboptimal at large $\lambda$ (SI Appendix).

Third, the SDP-based estimator is nearly optimal. The tractable estimator $\hat\theta^{\mathrm{SDP}}$ achieves the best of both worlds. Its phase transition coincides with the Bayes optimal one, $\lambda_c^{\mathrm{SDP}} = \lambda_c^{\mathrm{Bayes}} = 1$, and $\mathrm{MSE}(\hat\theta^{\mathrm{SDP}})$ decays exponentially at large $\lambda$, staying close to $\mathrm{MSE}(\hat\theta^{\mathrm{Bayes}})$ and strictly smaller than $\mathrm{MSE}(\hat\theta^{\mathrm{PCA}})$, for $\lambda > 1$.

We believe that the above features are generic: as shown in SI Appendix, $U(1)$ synchronization confirms this expectation.

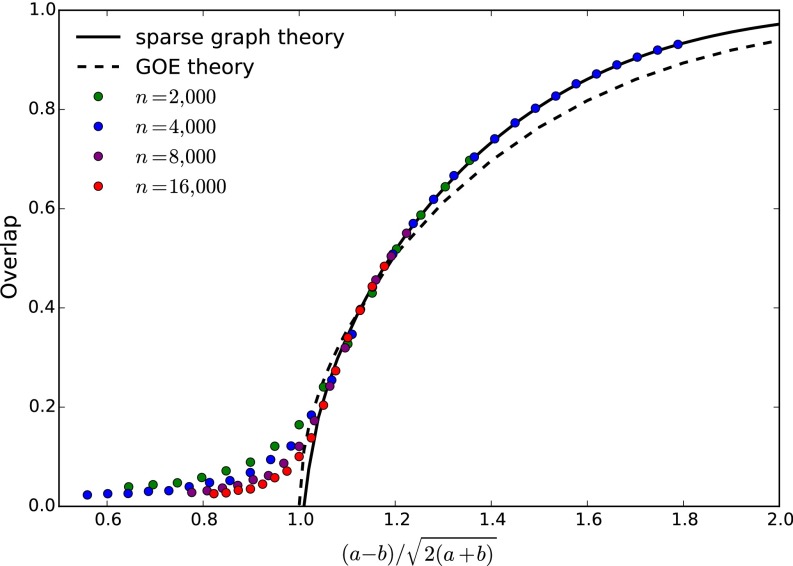

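Fig. 2 illustrates our results for the community detection problem under the hidden partition model of Eq. 2. Recall that we encode the ground truth by a vector $\theta_0 \in \{+1,-1\}^n$. In the present context, an estimator is required to return a partition of the vertices of the graph. Formally, it is a function on the space of graphs with $n$ vertices $\mathcal{G}_n$, namely, $\hat\theta: \mathcal{G}_n \to \{+1,-1\}^n$, $G \mapsto \hat\theta(G)$. We will measure the performance of such an estimator through the overlap,

$$\mathrm{Overlap}_n(\hat\theta) \equiv \frac{1}{n}\,\mathbb{E}\big\{|\langle \hat\theta(G), \theta_0 \rangle|\big\}, \tag{8}$$

and its asymptotic limit $\mathrm{Overlap}(\hat\theta)$ (for which we omit the subscript). To motivate the SDP relaxation, we note that the maximum likelihood estimator partitions $V$ into two sets of equal size to minimize the number of edges across the partition (the minimum bisection problem). Formally,

$$\hat\theta^{\mathrm{ML}}(G) \equiv \arg\max_{x \in \{+1,-1\}^n} \Big\{ \langle x, A_G x \rangle : \langle x, \mathbf{1} \rangle = 0 \Big\}, \tag{9}$$

where $\mathbf{1} = (1, 1, \dots, 1)$ is the all-ones vector. Once more, this problem is hard to approximate (31), which motivates the following SDP relaxation:

$$\begin{aligned} &\text{maximize} && \langle A_G, X \rangle,\\ &\text{subject to} && X \succeq 0, \quad X_{ii} = 1 \ \ \forall i \in [n], \quad \langle X, \mathbf{1}\mathbf{1}^{*} \rangle = 0. \end{aligned} \tag{10}$$

Given an optimizer $X_{\mathrm{opt}} = X_{\mathrm{opt}}(G)$, we extract a partition of the vertices $V$ as follows. As for the synchronization problem, we compute the principal eigenvector $v_1(X_{\mathrm{opt}})$. We then partition $V$ according to the sign of $v_1(X_{\mathrm{opt}})$. Formally,

$$\hat\theta^{\mathrm{SDP}}(G) = \mathrm{sign}\big(v_1(X_{\mathrm{opt}})\big). \tag{11}$$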
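Solving Eq. 10 requires an SDP solver, but the rounding step of Eq. 11 and the overlap of Eq. 8 are simple. The sketch below (hypothetical names) assumes the principal eigenvector $v_1$ has already been computed; ties at zero are broken toward $+1$, an arbitrary choice. The toy check uses the idealized case in which the optimizer is the rank-one matrix $\theta_0\theta_0^{*}$, whose principal eigenvector is $\pm\theta_0/\sqrt{n}$.

```python
import math


def sign_rounding(v1):
    """Eq. 11: theta_hat_i = sign(v1_i); zero entries go to +1 (arbitrary tie-break)."""
    return [1 if c >= 0 else -1 for c in v1]


def overlap(theta_hat, theta0):
    """Single-realization version of Eq. 8: |<theta_hat, theta0>| / n."""
    return abs(sum(x * t for x, t in zip(theta_hat, theta0))) / len(theta0)


theta0 = [1, 1, -1, -1, 1, -1]
v1 = [-t / math.sqrt(len(theta0)) for t in theta0]  # eigenvector sign is arbitrary
print(overlap(sign_rounding(v1), theta0))  # 1.0
```

The absolute value in the overlap makes the global sign of $v_1$ irrelevant, mirroring the sign minimization in Eq. 3.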

Let us emphasize a few features of Fig. 2:

Fig. 2.

Community detection under the hidden partition model of Eq. 2, for average degree . Dots indicate performance of the SDP reconstruction method (averaged over 500 realizations). Dashed curve indicates asymptotic analytical prediction for the Gaussian model (which captures the large-degree behavior). Solid curve indicates analytical prediction for the sparse graph case (within the vectorial ansatz; SI Appendix).

First, both the GOE theory and the cavity method are accurate. The dashed curve of Fig. 2 reports the analytical prediction within the synchronization model, with Gaussian noise (the GOE theory). This can be shown to capture the large degree limit: , with fixed, and is an excellent approximation already for . The continuous curve is our prediction for , obtained by applying the cavity method from statistical mechanics to the community detection problem (see next section and SI Appendix). This approach describes very accurately the empirical data and the small discrepancy from the GOE theory.

Second, SDP is superior to PCA. A sequence of recent papers (ref. 7 and references therein) demonstrates that classical spectral methods, such as PCA, fail to detect the hidden partition in graphs with bounded average degree. In contrast, Fig. 2 shows that a standard SDP relaxation does not break down in the sparse regime. See refs. 25, 32 for rigorous evidence toward the same conclusion.

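Third, SDP is nearly optimal. As proven in ref. 33, no estimator can achieve $\mathrm{Overlap}_n(\hat\theta) \ge \delta > 0$ as $n \to \infty$ if $\lambda \le 1$. Fig. 2 (and the theory developed in the next section) suggests that SDP has a phase transition threshold. Namely, there exists $\lambda_c^{\mathrm{SDP}} = \lambda_c^{\mathrm{SDP}}(d)$ such that if

$$\lambda > \lambda_c^{\mathrm{SDP}}(d), \tag{12}$$

then SDP achieves overlap bounded away from zero: $\mathrm{Overlap}(\hat\theta^{\mathrm{SDP}}) > 0$. Fig. 2 also suggests $\lambda_c^{\mathrm{SDP}}(d) \approx 1$; that is, SDP is nearly optimal.

Below we will derive an accurate approximation for the critical point $\lambda_c^{\mathrm{SDP}}(d)$. The factor $\lambda_c^{\mathrm{SDP}}(d) \ge 1$ measures the suboptimality of SDP for graphs of average degree $d$.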

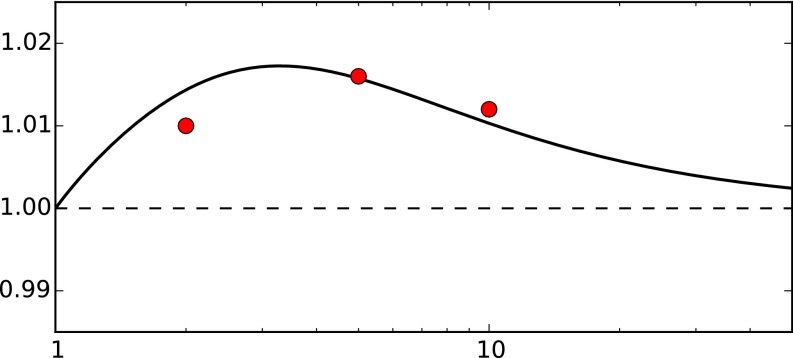

Fig. 3 plots our prediction for the function , together with empirically determined values for this threshold, obtained through Monte Carlo experiments for (red circles). These were obtained by running the SDP estimator on randomly generated graphs with size up to (total CPU time was about 10 y). In particular, we obtain strictly, but the gap is very small (at most of the order of ) for all d. This confirms in a precise quantitative way the conclusion that SDP is nearly optimal for the hidden partition problem.

Fig. 3.

Phase transition for the SDP estimator: for , the SDP estimator has positive correlation with the ground truth; for the correlation is vanishing [here and ]. Solid line indicates prediction from the cavity method (vectorial ansatz; SI Appendix) (compare Eq. 25). Dashed line indicates ideal phase transition . Red circles indicate numerical estimates of the phase transition location for , 5, and 10.

Simulation results are in broad agreement with our predictions but present small discrepancies (below ). These discrepancies might be due to the extrapolation from finite-n simulations to or to the inaccuracy of our analytical approximation.

Analytical Results.

Our analysis is based on a connection with statistical mechanics. The models arising from this connection are spin models in the so-called “large-N” limit, a topic of intense study across statistical mechanics and quantum field theory (34). Here we exploit this connection to apply nonrigorous but sophisticated tools from the theory of mean field spin glasses (35, 36). The paper (25) provides partial rigorous evidence toward the predictions developed here.

We will first focus on the simpler problem of synchronization under Gaussian noise, treating together the $\mathbb{Z}_2$ and $U(1)$ cases. We will then discuss the new features arising within the sparse hidden partition problem. Most technical derivations are presented in SI Appendix. To treat the real ($\mathbb{Z}_2$) and complex [$U(1)$] cases jointly, we will use $\mathbb{F}$ to denote either of the fields of reals or complex numbers, i.e., either $\mathbb{R}$ or $\mathbb{C}$.

Gibbs Measures and Vector Spin Models.

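We start by recalling that a matrix $X \in \mathbb{F}^{n\times n}$ is PSD if and only if it can be written as $X = \sigma\sigma^{*}$ for some $\sigma \in \mathbb{F}^{n\times m}$. Indeed, without loss of generality, one can take $m = n$, and any $m \ge n$ is equivalent.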
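The constructive direction of this equivalence can be illustrated in the real case with a Cholesky-type factorization that tolerates zero pivots; this is one valid choice of $\sigma$ among many (an eigendecomposition would give another). The sketch is our own (hypothetical name, numerical tolerance chosen ad hoc). When $X_{ii} = 1$, as in the SDP [6], the rows of $\sigma$ are unit vectors, which is exactly the reformulation used next.

```python
import math


def psd_factor(X, tol=1e-10):
    """Return a lower-triangular sigma (n x n) with sigma sigma^T = X for a real PSD X.

    Cholesky factorization that tolerates zero pivots: when a pivot vanishes, the
    remaining entries of that column of the Schur complement must also vanish for
    X to be PSD, and the corresponding column of sigma is left at zero.
    Raises ValueError if X is detected not to be PSD.
    """
    n = len(X)
    sigma = [[0.0] * n for _ in range(n)]
    for j in range(n):
        pivot = X[j][j] - sum(sigma[j][k] ** 2 for k in range(j))
        if pivot < -tol:
            raise ValueError("matrix is not positive semidefinite")
        residuals = [X[i][j] - sum(sigma[i][k] * sigma[j][k] for k in range(j))
                     for i in range(j + 1, n)]
        if pivot <= tol:
            if any(abs(r) > math.sqrt(tol) for r in residuals):
                raise ValueError("matrix is not positive semidefinite")
            continue
        sigma[j][j] = math.sqrt(pivot)
        for i, r in zip(range(j + 1, n), residuals):
            sigma[i][j] = r / sigma[j][j]
    return sigma
```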

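Letting $\sigma_1, \dots, \sigma_n \in \mathbb{F}^m$ be the rows of $\sigma$, the SDP [6] can be rewritten as

$$\begin{aligned} &\text{maximize} && \sum_{i,j} Y_{ij}\,\langle \sigma_i, \sigma_j \rangle,\\ &\text{subject to} && \sigma_i \in \mathsf{S}^{m-1} \ \ \forall i \in [n], \end{aligned} \tag{13}$$

with $\mathsf{S}^{m-1} = \{z \in \mathbb{F}^m : \|z\|_2 = 1\}$ the unit sphere in $m$ dimensions. The SDP relaxation corresponds to any case $m \ge n$ or, following the physics parlance, $m = \infty$. Note, however, that cases with bounded (small) $m$ are of independent interest. In particular, for $m = 1$ we have $\sigma_i \in \{+1,-1\}$ (for the real case) or $\sigma_i \in U(1)$ (for the complex case). Hence, we recover the maximum-likelihood estimator by setting $m = 1$. It is also known that (under suitable conditions on $Y$) for $m > \sqrt{2n}$, the problem [13] has no local optima except the global ones (37).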

A crucial question is how the solution of [13] depends on the spin dimensionality $m$, for $m \le n$. Denote by $\mathrm{OPT}_m(Y)$ the optimum value when the dimension is $m$ (in particular, $\mathrm{OPT}_n(Y)$ is also the value of [6] for $m = n$). It was proven in ref. 25 that there exists a constant $C$ independent of $m$ and $n$ such that

$$\Big(1 - \frac{C}{m}\Big)\,\mathrm{OPT}_n(Y) \le \mathrm{OPT}_m(Y) \le \mathrm{OPT}_n(Y), \tag{14}$$

with probability converging to one as $n \to \infty$ (whereby $Y$ is chosen with any of the distributions studied in the present paper). The upper bound in Eq. 14 follows immediately from the definition. The lower bound is a generalization of the celebrated Grothendieck inequality from functional analysis (38).

The above inequalities imply that we can obtain information about the SDP [6] in the $n \to \infty$ limit, by taking $m \to \infty$ after $n \to \infty$. This is the asymptotic regime usually studied in physics under the term "large-N limit."

Finally, we can associate to the problem [13] a finite-temperature Gibbs measure as follows:

| [15] |

where is the uniform measure over the m-dimensional sphere and denotes the real part of z. This allows us to treat in a unified framework all of the estimators introduced above. The optimization problem [13] is recovered by taking the limit (with maximum likelihood for and SDP for ). The Bayes optimal estimator is recovered by setting and (in the real case) or (in the complex case).

Cavity Method: $\mathbb{Z}_2$ and $U(1)$ Synchronization.

The cavity method from spin-glass theory can be used to analyze the asymptotic structure of the Gibbs measure [15] as $n \to \infty$. Below we will state the predictions of our approach for the SDP estimator $\hat\theta^{\mathrm{SDP}}$.

Here we list the main steps of our analysis for the expert reader, deferring a complete derivation to the SI Appendix: (i) We use the cavity method to derive the replica symmetric predictions for the model (15) in the limit . (ii) By setting , (in the real case), or (in the complex case) we obtain the Bayes optimal error : on the basis of ref. 39, we expect the replica symmetric assumption to hold and these predictions to be exact. (See also ref. 40 for related work.) (iii) By setting and we obtain a prediction for the error of maximum likelihood estimation . Although this prediction is not expected to be exact (because of replica symmetry breaking), it should be nevertheless rather accurate, especially for large λ. (iv) By setting and , we obtain the SDP estimation error , which is our main object of interest. Notice that the inversion of limits and is justified (at the level of objective value) by Grothendieck inequality. Further, because the case is equivalent to a convex program, we expect the replica symmetric prediction to be exact in this case.

The properties of the SDP estimator are given in terms of the solution of a set of three nonlinear equations for the three scalar parameters μ, q, and that we state next. Let (in the real case) or (in the complex case). Define as the only nonnegative solution of the following equation in :

| [16] |

Then μ, q, and r satisfy

| [17] |

| [18] |

These equations can be solved by iteration, after approximating the expectations on the right-hand side numerically. The properties of the SDP estimator can be derived from this solution. Concretely, we have

| [19] |

The corresponding curve is reported in Fig. 1 for the real case . We can also obtain the asymptotic overlap from the solution of these equations. The cavity prediction is

| [20] |

The corresponding curve is plotted in Fig. 2.

More generally, for any dimension m and inverse temperature β, we obtain equations that are analogous to Eqs. 17 and 18. The parameters characterize the asymptotic structure of the probability measure defined in Eq. 15, as follows. We assume, for simplicity . Define the following probability measure on unit sphere , parametrized by , :

| [21] |

For ν a probability measure on and R an orthogonal (or unitary) matrix, let be the measure obtained by¶ rotating ν. Finally, let denote the joint distribution of under . Then, for any fixed k, and any sequence of k-uples , we have

| [22] |

Here denotes the uniform (Haar) measure on the orthogonal group, denotes convergence in distribution (note that is a random variable), and with , .

Cavity Method: Community Detection in Sparse Graphs.

We next consider the hidden partition model, defined by Eq. 2. As above, we denote by $d = (a+b)/2$ the asymptotic average degree of the graph $G$ and by $\lambda = (a-b)/\sqrt{2(a+b)}$ the signal-to-noise ratio. As illustrated by Fig. 2 (and further simulations presented in SI Appendix), $\mathbb{Z}_2$ synchronization appears to be a very accurate approximation for the hidden partition model already at moderate $d$.

The main change with respect to the dense case is that the phase transition at $\lambda = 1$ is slightly shifted, as per Eq. 12. Namely, SDP can detect the hidden partition with high probability if and only if $\lambda > \lambda_c^{\mathrm{SDP}}(d)$, for some $\lambda_c^{\mathrm{SDP}}(d) > 1$.

Our prediction for the curve will be denoted by and is plotted in Fig. 3. It is obtained by finding an approximate solution of the RS cavity equations, within a scheme that we name “vectorial ansatz” (see SI Appendix for details). We see that approaches very quickly the ideal value for . Indeed, our prediction implies . Also, as . This is to be expected because the constraints imply , with at . Hence, the problem becomes trivial at : it is sufficient to identify the connected components in G, whence .

More interestingly, our prediction admits a characterization in terms of a distributional recursion, which can be evaluated numerically and is plotted as a continuous line in Fig. 3. Surprisingly, the SDP detection threshold appears to be suboptimal by at most 2%. To state this characterization, consider first the recursive distributional equation (RDE)

$$c \overset{\mathrm{d}}{=} \sum_{i=1}^{L} \frac{c_i}{1 + c_i}. \tag{23}$$

Here $\overset{\mathrm{d}}{=}$ denotes equality in distribution, $L \sim \mathrm{Poisson}(d)$, and $c_1, c_2, \dots$ are independent and identically distributed (i.i.d.) copies of $c$, independent of $L$. This has to be read as an equation for the law of the random variable $c$ (see, e.g., ref. 41 for further background on RDEs). We are interested in a specific solution of this equation, constructed as follows. Set $c_0 = +\infty$ almost surely, and for $t \ge 0$, let $c_{t+1} \overset{\mathrm{d}}{=} \sum_{i=1}^{L} c_{t,i}/(1 + c_{t,i})$, where $c_{t,1}, c_{t,2}, \dots$ are i.i.d. copies of $c_t$. It is proved in ref. 42 that the resulting sequence converges in distribution to a solution of Eq. 23: $c_t \Rightarrow c$.

The quantity $c$ has a useful interpretation. Consider a (rooted) Poisson Galton–Watson tree with branching number $d$, and imagine each edge to be a conductor with unit conductance. Then $c$ is the total conductance between the root and the boundary of the tree at infinity. In particular, $c = 0$ almost surely for $d \le 1$, and $c > 0$ with positive probability if $d > 1$ (see ref. 42 and SI Appendix).
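The limit $c_t \Rightarrow c$ can be approximated numerically by population dynamics, a standard technique for RDEs: the law of $c_t$ is represented by a finite sample that is updated with the recursion. The sketch below is our own (hypothetical names, population size and number of iterations chosen ad hoc), and resampling from a finite population only approximates the i.i.d. copies in Eq. 23. It reproduces the dichotomy just stated: the fraction of positive samples tends to zero for $d \le 1$ and to the survival probability of the Galton–Watson tree for $d > 1$.

```python
import math
import random


def poisson(mean, rng):
    """Poisson(mean) sample by Knuth's multiplication method (adequate for small mean)."""
    threshold = math.exp(-mean)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def conductance_population(d, rng, pop_size=2000, iterations=50):
    """Population-dynamics approximation of c_t, with c_{t+1} = sum_i c_{t,i} / (1 + c_{t,i}).

    Each new sample draws L ~ Poisson(d) and L members of the current population.
    Starts from c_0 = +infinity, for which c / (1 + c) is taken to be 1.
    """
    def series_unit_edge(c):
        # conductance c of a subtree placed in series with a unit conductor
        return 1.0 if math.isinf(c) else c / (1.0 + c)

    population = [math.inf] * pop_size
    for _ in range(iterations):
        population = [
            sum(series_unit_edge(rng.choice(population)) for _ in range(poisson(d, rng)))
            for _ in range(pop_size)
        ]
    return population
```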

Next consider the distributional recursion

| [24] |

where , , and we use initialization . This recursion determines sequentially the distribution of from the distribution of . Here , , and are i.i.d. copies of , independent of . Notice that because , we have . The threshold is defined as the smallest λ such that the diverges exponentially:

| [25] |

This value can be computed numerically, for instance, by sampling the recursion [24]. The results of such an evaluation are plotted as a continuous line in Fig. 3.

Final Algorithmic Considerations

We have shown that ideas from statistical mechanics can be used to precisely locate phase transitions in SDP relaxations for high-dimensional statistical problems. In the problems investigated here, we find that SDP relaxations have optimal thresholds [in $\mathbb{Z}_2$ and $U(1)$ synchronization] or nearly optimal thresholds (in community detection under the hidden partition model). Here near-optimality is to be interpreted in a precise quantitative sense: SDP's threshold is suboptimal by at most 2%. As such, SDPs provide a very useful tool for designing computationally efficient algorithms that are also statistically efficient.

Let us emphasize that other polynomial-time algorithms can be used for the specific problems studied here. In the $\mathbb{Z}_2$ synchronization problem, naive PCA achieves the optimal threshold $\lambda = 1$. In the community detection problem, several authors recently developed ingenious spectral algorithms that achieve the information theoretically optimal threshold (see, e.g., refs. 7, 23, 24, 43, 44).

However, SDP relaxations have the important feature of being robust to model misspecifications (see also refs. 30, 45 for independent investigations of robustness issues). To illustrate this point, we perturbed the hidden partition model as follows. For a perturbation level $\alpha \in [0, 1]$, we draw $\alpha n$ vertices uniformly at random in $G$. For each such vertex $v$ we connect by edges all of the neighbors of $v$. In our case, this results in adding approximately $\alpha n d^2/2$ edges.
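A minimal sketch of this perturbation, on a graph stored as a list of adjacency sets, for a perturbation level $\alpha$ (our notation). The function name is hypothetical, and two details are our reading of the text rather than statements from it: the $\alpha n$ vertices are drawn without replacement, and the neighbor sets used to build the cliques are those of the unperturbed graph.

```python
import itertools
import random


def perturb_with_neighbor_cliques(adj, alpha, rng):
    """Return (perturbed copy of adj, chosen vertices) for a graph given as adjacency sets.

    Draws round(alpha * n) vertices uniformly at random without replacement and, for
    each drawn vertex v, adds an edge between every pair of neighbors of v, using the
    neighbor sets of the unperturbed graph.
    """
    n = len(adj)
    new_adj = [set(nbrs) for nbrs in adj]
    chosen = rng.sample(range(n), round(alpha * n))
    for v in chosen:
        for i, j in itertools.combinations(sorted(adj[v]), 2):
            new_adj[i].add(j)
            new_adj[j].add(i)
    return new_adj, chosen
```

A vertex of degree $k$ contributes at most $k(k-1)/2$ new edges, which is why the perturbation is much denser than the $\alpha n$ vertices it touches.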

In SI Appendix, we compare the behavior of SDP and the Bethe Hessian algorithm of ref. 44 for this perturbed model: although SDP appears to be rather insensitive to the perturbation, the performance of Bethe Hessian is severely degraded by it. We expect a similar fragility in other spectral algorithms.


Acknowledgments

A.M. was partially supported by National Science Foundation Grants CCF-1319979 and DMS-1106627 and Air Force Office of Scientific Research Grant FA9550-13-1-0036. A.J. was partially supported by the Center for Science of Information (CSoI) fellowship.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

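†Here and below, $W \sim \mathsf{CN}(\mu, \sigma^2)$, with $\mu \in \mathbb{C}$ and $\sigma^2 \in \mathbb{R}_{\ge 0}$, denotes the complex normal distribution. Namely, $W \sim \mathsf{CN}(\mu, \sigma^2)$ if $W = U + iV$, with $U \sim \mathsf{N}(\mathrm{Re}\,\mu, \sigma^2/2)$ and $V \sim \mathsf{N}(\mathrm{Im}\,\mu, \sigma^2/2)$ independent Gaussian random variables.

‡In practical applications, $\lambda$ might not be known. We are not concerned by this at the moment because maximum likelihood is used as an idealized benchmark here. Note that, strictly speaking, this is a scaled maximum likelihood estimator. We prefer to scale $\hat\theta^{\mathrm{ML}}$ to keep $\mathrm{MSE}(\hat\theta^{\mathrm{ML}}) \le 1$.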

§Recall that a symmetric matrix is said to be PSD if all of its eigenvalues are nonnegative.

¶Formally, .

See Commentary on page 4238.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1523097113/-/DCSupplemental.

References

- 1. Gauss CF. Theoria motus corporum coelestium in sectionibus conicis solem ambientium auctore Carolo Friderico Gauss. Friedrich Perthes und I. H. Besser; Hamburg, Germany: 1809.

- 2. Ben-Dor A, Shamir R, Yakhini Z. Clustering gene expression patterns. J Comput Biol. 1999;6(3-4):281–297. doi: 10.1089/106652799318274.

- 3. Plaza A, et al. Recent advances in techniques for hyperspectral image processing. Remote Sens Environ. 2009;113(1):S110–S122.

- 4. Koren Y, Bell R, Volinsky C. Matrix factorization techniques for recommender systems. Computer. 2009;42(8):30–37.

- 5. Von Luxburg U. A tutorial on spectral clustering. Stat Comput. 2007;17(4):395–416.

- 6. Girvan M, Newman ME. Community structure in social and biological networks. Proc Natl Acad Sci USA. 2002;99(12):7821–7826. doi: 10.1073/pnas.122653799.

- 7. Krzakala F, et al. Spectral redemption in clustering sparse networks. Proc Natl Acad Sci USA. 2013;110(52):20935–20940. doi: 10.1073/pnas.1312486110.

- 8. Wasserman L. Bayesian model selection and model averaging. J Math Psychol. 2000;44(1):92–107. doi: 10.1006/jmps.1999.1278.

- 9. Tibshirani R. Regression shrinkage and selection with the Lasso. J R Stat Soc, B. 1996;58(1):267–288.

- 10. Chen SS, Donoho DL, Saunders MA. Atomic decomposition by basis pursuit. SIAM J Sci Comput. 1998;20(1):33–61.

- 11. Candès EJ, Tao T. The power of convex relaxation: Near-optimal matrix completion. IEEE Trans Information Theory. 2010;56(5):2053–2080.

- 12. Donoho DL, Tanner J. Neighborliness of randomly projected simplices in high dimensions. Proc Natl Acad Sci USA. 2005;102(27):9452–9457. doi: 10.1073/pnas.0502258102.

- 13. Candès EJ, Tao T. The Dantzig selector: Statistical estimation when p is much larger than n. Ann Stat. 2007;35(6):2313–2351.

- 14. Donoho DL, Maleki A, Montanari A. Message-passing algorithms for compressed sensing. Proc Natl Acad Sci USA. 2009;106(45):18914–18919. doi: 10.1073/pnas.0909892106.

- 15. Singer A, Shkolnisky Y. Three-dimensional structure determination from common lines in cryo-EM by eigenvectors and semidefinite programming. SIAM J Imaging Sci. 2011;4(2):543–572. doi: 10.1137/090767777.

- 16. Cucuringu M. Synchronization over F2 and community detection in signed multiplex networks with constraints. J Complex Networks. 2015;cnu050.

- 17. Abbe E, Bandeira AS, Bracher A, Singer A. Decoding binary node labels from censored edge measurements: Phase transition and efficient recovery. IEEE Trans Network Sci Eng. 2014;1(1):10–22.

- 18. Singer A. Angular synchronization by eigenvectors and semidefinite programming. Appl Comput Harmon Anal. 2011;30(1):20–36. doi: 10.1016/j.acha.2010.02.001.

- 19. Bandeira AS, Boumal N, Singer A. 2014. Tightness of the maximum likelihood semidefinite relaxation for angular synchronization. arXiv:1411.3272.

- 20. Candès EJ, Eldar YC, Strohmer T, Voroninski V. Phase retrieval via matrix completion. SIAM Rev. 2015;57(2):225–251.

- 21. Waldspurger I, d’Aspremont A, Mallat S. Phase recovery, maxcut and complex semidefinite programming. Math Program. 2015;149(1-2):47–81.

- 22. Alexeev B, Bandeira AS, Fickus M, Mixon DG. Phase retrieval with polarization. SIAM J Imaging Sci. 2014;7(1):35–66.

- 23. Massoulié L. Proceedings of the 46th Annual ACM Symposium on Theory of Computing. Association for Computing Machinery; New York: 2014. Community detection thresholds and the weak Ramanujan property; pp. 694–703.

- 24. Mossel E, Neeman J, Sly A. 2013. A proof of the block model threshold conjecture. arXiv:1311.4115.

- 25. Montanari A, Sen S. Proceedings of the 48th Annual ACM Symposium on Theory of Computing. Association for Computing Machinery; New York: 2016. Semidefinite programs on sparse random graphs and their application to community detection.

- 26. Bandeira AS. 2015. Random Laplacian matrices and convex relaxations. arXiv:1504.03987.

- 27. Holland PW, Laskey K, Leinhardt S. Stochastic blockmodels: First steps. Soc Networks. 1983;5(2):109–137.

- 28. Arora S, Berger E, Hazan E, Kindler G, Safra M. 46th Annual IEEE Symposium on Foundations of Computer Science, 2005. FOCS 2005. Inst of Electr and Electron Eng; Washington, DC: 2005. On non-approximability for quadratic programs; pp. 206–215.

- 29. Nesterov Y. Semidefinite relaxation and nonconvex quadratic optimization. Optim Methods Softw. 1998;9(1-3):141–160.

- 30. Feige U, Kilian J. Heuristics for semirandom graph problems. J Comput Syst Sci. 2001;63(4):639–671.

- 31. Khot S. Ruling out PTAS for graph min-bisection, dense k-subgraph, and bipartite clique. SIAM J Comput. 2006;36(4):1025–1071.

- 32. Guédon O, Vershynin R. 2014. Community detection in sparse networks via Grothendieck’s inequality. arXiv:1411.4686.

- 33. Mossel E, Neeman J, Sly A. 2012. Stochastic block models and reconstruction. arXiv:1202.1499.

- 34. Brézin E, Wadia SR. The Large N Expansion in Quantum Field Theory and Statistical Physics: From Spin Systems to 2-Dimensional Gravity. World Scientific; Singapore: 1993.

- 35. Mézard M, Montanari A. Information, Physics, and Computation. Oxford University Press; Oxford, United Kingdom: 2009.

- 36. Mézard M, Parisi G, Virasoro MA. Spin Glass Theory and Beyond. World Scientific; Singapore: 1987.

- 37. Burer S, Monteiro RDC. A nonlinear programming algorithm for solving semidefinite programs via low-rank factorization. Math Program. 2003;95(2):329–357.

- 38. Khot S, Naor A. Grothendieck-type inequalities in combinatorial optimization. Commun Pure Appl Math. 2012;65(7):992–1035.

- 39. Deshpande Y, Abbe E, Montanari A. 2015. Asymptotic mutual information for the two-groups stochastic block model. arXiv:1507.08685.

- 40. Lesieur T, Krzakala F, Zdeborová L. 2015. MMSE of probabilistic low-rank matrix estimation: Universality with respect to the output channel. arXiv:1507.03857.

- 41. Aldous DJ, Bandyopadhyay A. A survey of max-type recursive distributional equations. Ann Appl Probab. 2005;15(2):1047–1110.

- 42. Lyons R, Pemantle R, Peres Y. Classical and Modern Branching Processes. Springer; New York: 1997. Unsolved problems concerning random walks on trees; pp. 223–237.

- 43. Decelle A, Krzakala F, Moore C, Zdeborová L. Asymptotic analysis of the stochastic block model for modular networks and its algorithmic applications. Phys Rev E Stat Nonlin Soft Matter Phys. 2011;84(6 Pt 2):066106. doi: 10.1103/PhysRevE.84.066106.

- 44. Saade A, Krzakala F, Zdeborová L. Advances in Neural Information Processing Systems. Neural Information Processing Systems Foundation; La Jolla, CA: 2014. Spectral clustering of graphs with the Bethe Hessian; pp. 406–414.

- 45. Moitra A, Perry W, Wein AS. Proceedings of the 48th Annual ACM Symposium on Theory of Computing. Association for Computing Machinery; New York: 2015. How robust are reconstruction thresholds for community detection?
