Abstract

A recent study found that auditory and visual information can be integrated even when you are completely unaware of hearing or seeing the paired stimuli — but only if you have received prior, conscious exposure to the paired stimuli.

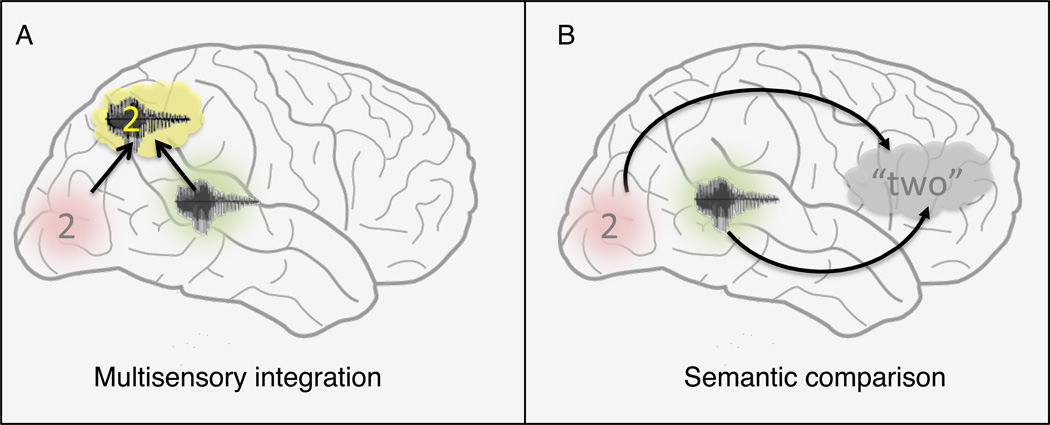

Many of the objects and events we encounter during our everyday lives are made up of distinct blends of auditory and visual information: dogs barking, motors whining, people talking. Even though the physical signals conveying those qualities are fundamentally different — for example, photic energy versus acoustic energy — our brain seamlessly integrates, or ‘binds’, this information into a coherent perceptual Gestalt. The unitary nature of these multisensory perceptual experiences raises an important question in the context of prevailing theories of consciousness [1]: specifically, can such binding take place prior to the emergence of consciousness, or is it an emergent property of consciousness? Earlier work has indicated that audible sounds can impact invisible pictures suppressed from awareness during binocular rivalry [2], but can auditory and visual signals interact when both are presented outside of awareness? A recent study by Faivre et al. [3] provides an answer to this question by unequivocally demonstrating the interaction of subthreshold auditory and visual cues. Left unanswered, however, is whether this interaction represents genuine multisensory integration or, instead, arises from interactions at amodal, semantic levels of analysis (Figure 1).

Figure 1.

Schematic representations of alternative ways in which auditory and visual information may interact in the priming design of Faivre et al. [3].

(A) Multisensory integration involves combination of sensory signals from visual cortex (denoted by red) and auditory cortex (denoted by green), resulting in an integrated representation in regions of multisensory cortex (for example, superior temporal and/or parietal regions, denoted by yellow). (B) With semantic comparison, two independent sensory representations, one auditory (green) and the other visual (red), signify the same object and, thus, activate a common semantic concept (the abstract concept of the number, represented by ‘two’, in this case) within higher level, cognitive areas.

In the new study [3], participants were briefly presented with a priming stimulus made up of a pair of digits, one visual and one auditory, that were sometimes identical (for example, a spoken ‘2’ and a printed ‘2’) and sometimes not (for example, a spoken ‘8’ and a printed ‘2’). This prime was followed by an audio-visual pair of target letters that were likewise either identical or not. Participants had to judge whether this second pair was the same (for example, a spoken and printed ‘b’) or different (for example, a spoken ‘m’ and a printed ‘b’). Crucially, the first audio-visual digit pair (the priming pair) was presented at subthreshold intensities and durations. This clever design meant that the pair of primes and the pair of targets could be either congruent (both pairs ‘same’ or both pairs ‘different’) or incongruent (one pair ‘same’ and the other ‘different’). By comparing reaction times for judging the target relationship when it was congruent versus incongruent with the prime relationship, the authors were able to determine whether the subthreshold primes had been integrated (as evidenced by reduced reaction times on congruent trials). Indeed, a congruency effect depends on successful determination of the semantic relationship between the subliminal auditory and visual digits. The authors also assessed priming under conditions where the auditory and visual digits were suprathreshold.
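To make the logic of the congruency measure concrete, the following is a minimal sketch in Python of how such an effect could be computed. It is not the authors' analysis code: the function names, the trial format, and the reaction times are all hypothetical and chosen only for illustration.

```python
from statistics import mean

def is_congruent(prime_same: bool, target_same: bool) -> bool:
    """A trial is congruent when the prime pair and the target pair share
    the same relationship (both 'same' or both 'different')."""
    return prime_same == target_same

def congruency_effect(trials):
    """Return mean incongruent RT minus mean congruent RT, in ms.

    `trials` is a list of (prime_same, target_same, rt_ms) tuples.
    A positive value means responses were faster on congruent trials.
    """
    congruent = [rt for p, t, rt in trials if is_congruent(p, t)]
    incongruent = [rt for p, t, rt in trials if not is_congruent(p, t)]
    return mean(incongruent) - mean(congruent)

# Invented reaction times, for illustration only (not data from the study).
trials = [
    (True, True, 600.0),    # prime same, target same: congruent
    (False, False, 620.0),  # prime different, target different: congruent
    (True, False, 640.0),   # prime same, target different: incongruent
    (False, True, 650.0),   # prime different, target same: incongruent
]

effect = congruency_effect(trials)
print(f"Congruency effect: {effect:.1f} ms")
```

With these made-up numbers, the congruent trials average 610 ms and the incongruent trials 645 ms, so the sketch reports a 35 ms effect. A reliably positive effect with subthreshold primes is the kind of evidence the design relies on.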

Remarkably, following repeated exposures to primes presented at suprathreshold levels, subliminal pairs were able to impact reaction times for judging the auditory-visual target relationship, an outcome implying that these subliminal auditory and visual signals were integrated outside of awareness. But what is being integrated in such a situation? Is it the low-level visual and acoustic features of the priming stimuli (thus arguing for true multisensory integration)? Or is it the higher-order semantic features of the stimuli, thus arguing for a process based on comparison of congruence of semantic information arising from two sources, rather than on genuine integration?

The results from the Faivre et al. [3] study do not allow us to answer this question unequivocally. They do, however, provide important clues suggesting that the process may be taking place at the semantic level. These clues are founded in one of the hallmark features of multisensory integration, the concept of inverse effectiveness, whereby the multisensory gain is most pronounced when the paired unisensory signals are weak [4, 5]. If the priming signals were being integrated in a multisensory manner, one would expect that the weaker the primes, the greater the gain when they were integrated and, thus, the larger the effect sizes. Conversely, if the results were entirely driven by sensory-independent semantic congruency priming, we would expect that the stronger the priming signal, the bigger the effect size.
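Inverse effectiveness can be illustrated with a short numerical sketch. The index below, which expresses the multisensory response as a percentage gain over the best unisensory response, is one commonly used measure in the single-neuron literature; it is our assumption here and was not computed in the study under discussion. The function name and the response values are hypothetical.

```python
def multisensory_gain(av: float, a: float, v: float) -> float:
    """Percentage gain of the audiovisual response over the larger of the
    two unisensory responses: 100 * (AV - max(A, V)) / max(A, V)."""
    best_unisensory = max(a, v)
    return 100.0 * (av - best_unisensory) / best_unisensory

# Invented response magnitudes (arbitrary units), for illustration only.
weak_gain = multisensory_gain(av=6.0, a=2.0, v=3.0)       # weak unisensory inputs
strong_gain = multisensory_gain(av=24.0, a=15.0, v=20.0)  # strong unisensory inputs

print(f"Gain with weak inputs:   {weak_gain:.0f}%")
print(f"Gain with strong inputs: {strong_gain:.0f}%")
```

In this toy example the weak inputs yield a 100% gain and the strong inputs a 20% gain, which is the pattern that inverse effectiveness describes. Semantic congruency priming would predict the opposite ordering of effect sizes.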

Although inverse effectiveness was not directly tested, there are several informative aspects of the experimental results that bear on the interpretation. The first emerges from a comparison of the results of experiments 1 and 2 with those of experiment 3. In the first two experiments the auditory (experiment 1) and visual (experiment 2) primes were presented at levels sufficiently strong to render them unequivocally suprathreshold, while in experiment 3 they were both presented subliminally. Despite these differences in stimulus effectiveness, the priming effects were comparable in magnitude for each of these three experiments. The second clue emerges from the comparison of results from the first three experiments, where participants were exposed to suprathreshold primes before subliminal testing, to the results from experiment 4, where subliminal testing was not preceded by exposure to suprathreshold prime pairs. Subliminal priming worked in experiments 1–3 but did not work in experiment 4. Framed in the context of inverse effectiveness, it is not at all obvious why prior exposure would be necessary before weak stimuli could be integrated in order to facilitate performance. Thus, this pattern of results also seems incompatible with the concept of inverse effectiveness, but compatible with semantic priming.

We believe that, in addition to effectiveness manipulations, another key set of principles governing multisensory integration may be used in future work to further differentiate between unconscious multisensory integration and unconscious semantic comparison. It is well established that the spatial and temporal structure of paired sensory cues (here, the spoken and written digits) is a major determinant of the probability that these cues will be integrated. Stimuli in close spatial and temporal correspondence have a high likelihood of being integrated [6]. In contrast, semantic priming should be independent of the spatial location at which stimuli are delivered, and should depend more on the relative timing between primes and targets than on the timing between the primes themselves [7].

In our opinion, the jury is still out on the question of the nature of the information being combined when a subliminal auditory digit is presented together with a subliminal visual digit within a priming paradigm. We believe that this question can be resolved by exploiting several of the classic features of multisensory integration. Regardless of the resolution of that question, however, the study by Faivre et al. [3] stands as a provocative contribution to the question of binding and consciousness by definitively showing that the property of congruence between auditory and visual information can be established outside of awareness.

Contributor Information

Jean-Paul Noel, Email: Jean-paul.noel@vanderbilt.edu.

Mark Wallace, Email: Mark.wallace@vanderbilt.edu.

Randolph Blake, Email: Randolph.blake@vanderbilt.edu.

References

- 1. Revonsuo A, Newman J. Binding and consciousness. Conscious. Cogn. 1999;8:123–127. doi: 10.1006/ccog.1999.0393.

- 2. Chen Y-C, Yeh S-L, Spence C. Crossmodal constraints on human perceptual awareness: auditory semantic modulation of binocular rivalry. Front. Psychol. 2011;2:212. doi: 10.3389/fpsyg.2011.00212.

- 3. Faivre N, Mudrik L, Schwartz N, Koch C. Multisensory integration in complete unawareness: Evidence from audiovisual congruency priming. Psychol. Sci. 2014;25:2006–2016. doi: 10.1177/0956797614547916.

- 4. Stein B, Stanford T. Multisensory integration: current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- 5. Murray MM, Wallace MT. The neural bases of multisensory processes. Boca Raton, FL: CRC Press; 2012.

- 6. Wallace MT, Stevenson RA. The construct of a multisensory temporal binding window and its dysregulation in developmental disabilities. Neuropsychologia. 2014;64:105–123. doi: 10.1016/j.neuropsychologia.2014.08.005.

- 7. Vorberg D, Mattler U, Heinecke A, Schmidt T, Schwarzbach J. Different time courses for visual perception and action priming. Proc. Natl. Acad. Sci. USA. 2003;100:6275–6280. doi: 10.1073/pnas.0931489100.