Abstract

Background

Few prospective studies have evaluated theory-driven approaches to the implementation of evidence-based opioid treatment. This study compared the effectiveness of an implementation model (Science to Service Laboratory; SSL) to training as usual (TAU) in promoting the adoption of contingency management across a multi-site opiate addiction treatment program. We also examined whether the SSL affected putative mediators of contingency management adoption (perceived innovation characteristics and organizational readiness to change).

Methods

Sixty treatment providers (39 SSL, 21 TAU) from 15 geographically diverse satellite clinics (7 SSL, 8 TAU) participated in the 12-month study. Both conditions received didactic contingency management training, and those in the pre-determined experimental region received 9 months of SSL-enhanced training. Contingency management adoption was monitored biweekly, while putative mediators were measured at baseline and at 3 and 12 months.

Results

Relative to providers in the TAU region, treatment providers in the SSL region had comparable likelihood of contingency management adoption in the first 20 weeks of the study, and then significantly higher likelihood of adoption (odds ratios = 2.4-13.5) for the remainder of the study. SSL providers also reported higher levels of one perceived innovation characteristic (Observability) and one aspect of organizational readiness to change (Adequacy of Training Resources), although there was no evidence that the SSL affected these putative mediators over time.

Conclusions

Results of this study indicate that a fully powered randomized trial of the SSL is warranted. Considerations for a future evaluation are discussed.

Keywords: opioid, contingency management, adoption, implementation

INTRODUCTION

The gap between research and clinical practice is vast across all fields of healthcare, but few areas are characterized by gaps as large and complex as addiction treatment in general1-3 and opioid addiction treatment in particular.4 Existing research indicates that simply offering counselors didactic training is not sufficient to close the research-to-practice gap,5-7 and that the level and quality of training support affect implementation adoption.8 Over the past 20 years, a number of innovative federal initiatives have promoted the training of opioid addiction treatment counselors in evidence-based practice, including NIDA's Clinical Trial Network,9, 10 SAMHSA's Addiction Technology Transfer Center (ATTC) Network,11 and the joint NIDA-SAMHSA “blending initiative” to distribute the Prescription Opioid Addiction Treatment Study suite of training resources.12 The goal of the current pilot study was to evaluate the effectiveness of a training model that came out of one of these federal initiatives, the ATTC's Science to Service Laboratory (SSL), among opioid addiction treatment providers.

The ATTC developed the SSL in 2003 and it has been described in prior publications.13, 14 When the SSL was first developed, existing training and implementation models were informed primarily by principles of either individual change or organizational change.13 The SSL was designed to integrate principles from both of these levels, thereby addressing a historical gap in the literature. Organizational change principles were informed by Simpson's heuristic model for transferring research to practice,15 while individual change principles were based on the strategies outlined in the ATTC's Change Book: A Blueprint for Technology Transfer.16 At the organizational level, the SSL model targets organizational readiness to change as a putative mediator of intervention adoption. Organizational readiness to change has been broadly defined as “the extent to which organizational members are psychologically and behaviorally prepared to implement organizational change,”17 and it has been recognized as a critical precursor to the effective implementation of evidence-based practice across many disciplines18, 19 including addiction treatment.20 At the individual provider level, the model targets five perceived intervention characteristics that were shown to influence adoption in Rogers'21 seminal work on the diffusion of innovations. In particular, the SSL model attempts to emphasize the perceived Relative Advantage, Observability, Trialability, and Compatibility of the intervention, while minimizing perceived Complexity. To exert change in these mediators, the SSL model relies heavily on supportive interpersonal strategies (described further in Methods) including the use of an external technology transfer specialist, internal change champions, and collaborative group problem solving.

The current investigation was an initial evaluation of the SSL's effectiveness on evidence-based practice adoption and on putative mediators of adoption in a multi-site opioid addiction treatment program. Contingency management was selected as the evidence-based practice because it has proved challenging to sustain in community settings,22 despite having robust support in meta-analytic reviews,23-26 documented effectiveness when delivered by community counselors,27 and high levels of receptivity among opioid addiction treatment providers.28, 29 This study evaluated the additive effects of the SSL over training as usual (TAU), with all clinics receiving TAU (i.e. didactic training in contingency management) and clinics in the experimental region receiving additional SSL support for 9 months. Contingency management adoption was measured biweekly, while putative mediators of implementation effectiveness (organizational readiness to change and perceived intervention characteristics) were measured at baseline, 3- and 12-month follow-ups. We also assessed and controlled for treatment provider characteristics. By tracking outcomes to 12 months, we gained an indication of sustainability after removal of active SSL support at 9 months.

Our first hypothesis was that SSL providers would report greater levels of contingency management adoption than TAU providers over the 12-month period. We expected the SSL's effect to be largest during the period of active external support (months 1-9), but to remain significant throughout the 12-month follow-up. Our second hypothesis was that the SSL would have a significantly greater effect than TAU on putative mediators of implementation effectiveness (organizational readiness to change and perceived intervention characteristics), even when controlling for provider characteristics. Our approach addresses a critical need to promote the implementation of evidence-based practice for opioid addiction treatment providers and is consistent with a recent landmark consolidation of the substance use implementation literature,30 which advises researchers to: a) evaluate the effectiveness of implementation models prospectively; b) measure implementation effects comprehensively by considering the interplay of multiple domains that have been shown to influence implementation effectiveness (i.e., characteristics of individuals involved, intervention characteristics, setting, implementation process); and c) use theory to identify and test potential mechanisms of implementation effectiveness. Because this study was not powered to test mediation, examination of mediators was exploratory.

METHODS

Treatment Sites

Sites were recruited from a comprehensive opioid addiction treatment program spanning six states: Illinois, Indiana, Pennsylvania, Utah, Rhode Island, and Maine. The program had 18 satellite clinics employing over 300 staff and serving 4100 adults annually. Each clinic provided various outpatient services including methadone maintenance, individual, family, and group counseling. In the two years before the study, the program sponsored 28 trainings, only one of which was focused on an EBP (buprenorphine) and none of which addressed CM.

Assignment to Conditions

Assignment of clinics to condition was based on geographic location, such that seven sites in the New England ATTC's catchment area (3 Rhode Island, 4 Maine) received SSL-enhanced training while 11 sites outside of the region (1 Illinois, 1 Indiana, 6 Pennsylvania, 3 Utah) received TAU only. Geographic location was used instead of random assignment for three reasons: a) to prevent contamination associated with staff resource sharing across geographically proximate sites; b) to promote compatibility with procedures of the agency, which frequently held regional trainings; and c) to resemble standard operations across the ATTC network, under which each ATTC had the autonomy to roll out regional training initiatives.

Recruitment

Procedures were approved by the Brown University institutional review board. Clinical directors from the 18 satellite clinics were given an overview of the project during a quarterly leadership meeting and invited to provide informed consent. Inclusion criteria required that providers had been employed for at least 3 months and had active, ongoing caseloads.

Clinical directors supplied current numbers of treatment providers meeting inclusion criteria, resulting in 145 providers (51 SSL, 94 TAU) being invited to participate. Providers received resource packets describing the study, which included return-addressed, stamped postcards indicating interest in participating. Both clinical directors and providers were informed that participation was voluntary and would not affect their employment.

Of the 145 providers, 75 (46 SSL, 29 TAU) returned reply cards, reflecting a 52% initial response. The response rate was 90% in SSL (46/51) and 31% in TAU (29/94), rates that clearly favored the SSL (z(144) = 22.2, p<.001). A primary reason for this differential response was likely the unblinded nature of the study, under which SSL clinics knew they would receive additional support. Although differential enrollment indicates response bias, it stands to reason that bias might have created a more stringent test of the SSL; in essence, SSL providers were being compared to those TAU providers most interested in receiving contingency management training.

Of the 75 responders, 60 (80% of responders) ultimately provided informed consent and completed a baseline assessment. Participation of those interested was 85% in SSL (39/46) and 72% in TAU (21/29), which was not statistically different (z(74)=1.30, p>.05). Clinic directors from all 18 sites agreed to participate, but three TAU sites did not return interest forms. The final sample contained 60 providers across 15 sites (7 SSL, 8 TAU).

Standard Training

Participating treatment providers in both conditions received didactic training in contingency management led by a national expert in the intervention.31 The training protocol encompassed: a) overview of evidence supporting contingency management, b) behavioral principles related to contingency management and how they inform intervention, c) designing monitoring and reward schedules, d) implementation procedures, and e) training and supervision.32 Providers were excused from work and received continuing education credits, consistent with usual program procedures. Costs associated with contingency management reinforcers were not provided through this study; however, shortly after the training, the central organization announced that sites could apply for seed funds to defray the costs of reinforcers. Thirteen of 15 sites (7/7 SSL, 6/8 TAU) subsequently applied and received seed funding of approximately $1,500 per site.

SSL-Enhanced Training

Treatment providers in the SSL region received 9 months of additional support containing four elements. First, sites received access to a technology transfer specialist, an external change agent who provided ongoing technical assistance. The technology transfer specialist had a Master's of Social Work and over 20 years of experience in clinical program management and supervision. Second, each site was assigned an in-house innovation champion to work with the technology transfer specialist to support adoption. Clinical directors were invited to serve as innovation champions or identify alternates and in all cases, the clinical directors volunteered. Third, all employees received specialized training focused on the change process. Innovation champions received a 4-day training focused on principles and theories of organizational change, leadership decisions related to adoption of evidence-based practices, and strategies to integrate evidence-based practices into existing organizational structures. Remaining providers received a 4-hour training led collaboratively by the technology transfer specialist and in-house innovation champions, focused on defining evidence-based practice, reviewing the theory of change, and providing an overview of the adoption process. Additional technology transfer specialist support included separate monthly conference calls for treatment providers and innovation champions, moderation of an online community bulletin board, and scheduled forums to discuss barriers to contingency management utilization. Informal technology transfer specialist support was also available upon request.

Measures

Contingency management adoption was measured biweekly, while other measures were completed at baseline and at 3- and 12-month follow-ups. Providers completed measures using a customized data collection website with a dedicated secure server. Providers received weekly reminders to complete measures and could make retrospective entries up to four weeks. Up to $200 could be earned for completing assessments: $20 for baseline, $30 for 3 months, $50 for 12 months, and a $50 bonus for completing all 26 biweekly entries.

Provider Characteristics

Providers completed a self-report measure at baseline recording their demographic characteristics and clinical experience (i.e., training, years’ experience, caseload).

Individual-Level Putative Mediator: Perceived Attributes Scale (PAS33)

The PAS is a 27-item, customizable scale that measures five aspects of an intervention or innovation (i.e., Relative Advantage, Compatibility, Complexity, Trialability, Observability) that have been shown to influence adoption.21 Items are scored on 7-point Likert scales ranging from 1 (strongly disagree) to 7 (strongly agree). Analyses of the PAS’ psychometric properties have indicated high construct, content, and criterion validity, along with good internal consistency and test-retest reliability.34-36 Reliability coefficients ranged from .75-.90 in this sample.

Organizational-Level Putative Mediator: ORC Scale (ORC-S37)

The ORC-S is a comprehensive assessment tool based on Simpson's model of organizational change.15 The measure consists of 115 Likert-type items, comprising 18 scales across four major domains: Motivation for Change (i.e., need for improvement, training needs, pressure for change), Adequacy of Resources (i.e., offices, staffing, training, equipment, computer/internet), Staff Attributes (i.e., growth, efficacy, influence, adaptability), and Organizational Climate (i.e., mission, cohesion, autonomy, communication, stress, change). We conducted exploratory analyses of all 18 scales, though based on prior SUD studies,38, 39 we expected Staff Attributes and Organizational Climate to be most sensitive to the SSL. Reliability coefficients ranged from .75-.96.

Implementation Outcome: Adoption of Contingency Management

Adoption of contingency management was measured over 4-week intervals using providers’ responses to a biweekly question asking the number of patients with whom they had used incentives over the past two weeks. Providers who reported using incentives with at least one patient across concurrent biweekly reports were coded as adopters in that 4-week period.
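The coding rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the study's actual scoring code: the function name `code_adoption` is hypothetical, "across concurrent biweekly reports" is read here as "in either of the two reports that make up a 4-week interval," and an interval with both reports missing is left uncoded (`None`).

```python
from typing import List, Optional, Sequence


def code_adoption(biweekly_counts: Sequence[Optional[int]]) -> List[Optional[bool]]:
    """Collapse biweekly patient counts into 4-week adoption indicators.

    Each entry is the number of patients with whom a provider reported using
    incentives in one biweekly report (None = report missing).
    ASSUMPTION: a provider is an adopter in a 4-week interval if either of the
    two reports in that interval shows at least one patient.
    """
    if len(biweekly_counts) % 2 != 0:
        raise ValueError("expected an even number of biweekly reports")

    intervals: List[Optional[bool]] = []
    for start in range(0, len(biweekly_counts), 2):
        # Keep only the reports that were actually submitted in this interval.
        observed = [c for c in biweekly_counts[start:start + 2] if c is not None]
        if not observed:
            intervals.append(None)  # ASSUMPTION: no data, so no coding
        else:
            intervals.append(any(c >= 1 for c in observed))
    return intervals


# Four intervals (eight biweekly reports) for one hypothetical provider.
print(code_adoption([0, 0, 2, 0, None, None, None, 1]))
```

Applied to the 26 biweekly entries collected per provider, a function of this kind would yield the 13 consecutive 4-week indicators analyzed in the adoption models.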

Analysis Plan

Preliminary analyses assessed missing data, correlations among measures, and distributional properties of measures. Next, group equivalence on demographics and clinical characteristics was examined to determine which variables to include as covariates.

Primary analyses applied generalized linear mixed models using all available data, consistent with an intent-to-treat approach. Missing data were handled using maximum likelihood estimation, which uses all available data and yields non-biased estimates when data are missing at random.40 The first set of analyses tested the hypothesis that the SSL would be related to higher rates of contingency management adoption (binary outcome) over the 12-month (52-week) follow-up. The model specified random effects for providers within agencies along with a categorical time index using assessed differences in the first 4-week interval as a reference for assessing differences throughout the follow-up period. Odds ratios calculated the odds of contingency management adoption among SSL providers relative to TAU providers over each 4-week period. The second set of analyses tested the hypothesis that the SSL would be associated with higher organizational readiness (ORC-S) and more favorable perceptions of innovation characteristics (PAS; both continuous outcomes) and used data from baseline, 3- and 12-month follow-ups. Each of the PAS and ORC-S scales was tested separately to optimize power to detect an effect. Because of the exploratory nature of our hypotheses, no corrections were made for multiple analyses.

All of the mixed models included a main effect of Training Group and Group*Time interactions. Random effects accounted for the non-independence of providers within agencies. Nested likelihood ratio tests were used to determine the significance of model terms.
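One way to write the adoption model described above is shown below. The notation is an illustrative assumption rather than the authors' own: $Y_{ijt}$ indicates adoption by provider $i$ in agency $j$ during 4-week interval $t$, $I_t$ is an indicator for interval $t$ (with the first interval as the reference), and the normally distributed random intercepts $u_j$ and $v_{ij}$ are a conventional choice that the text does not state explicitly. The covariate terms stand for the provider characteristics that were controlled for.

```latex
\[
\operatorname{logit}\,\Pr(Y_{ijt} = 1)
  = \beta_0
  + \beta_G\,\mathrm{SSL}_j
  + \sum_{t=2}^{13} \tau_t\, I_t
  + \sum_{t=2}^{13} \delta_t\,\bigl(\mathrm{SSL}_j \times I_t\bigr)
  + \boldsymbol{\gamma}^{\top}\mathbf{x}_{ij}
  + u_j + v_{ij},
\qquad
u_j \sim N(0, \sigma_u^2),\quad
v_{ij} \sim N(0, \sigma_v^2)
\]
```

In this sketch, $\beta_G$ is the Training Group main effect, the $\tau_t$ are the Time terms, and the $\delta_t$ are the Group*Time interaction terms whose joint significance would be assessed with a nested likelihood ratio test.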

RESULTS

Treatment Provider Characteristics

Table 1 depicts the providers’ baseline demographic and clinical experience characteristics by condition. Relative to TAU therapists, SSL therapists differed on two clinical characteristics: 1) a greater proportion reported heavy caseloads of 40+ patients per week; and 2) a lower proportion had education beyond a Bachelor's degree. We therefore controlled for caseload and education level in the analyses. Retention rates were high across groups and did not vary by condition: 93% for the 3-month assessment (97% SSL, 86% TAU); 85% for the 26 biweekly assessments (87% SSL, 81% TAU); and 82% for the 12-month assessment (85% SSL, 76% TAU).

Table 1.

Baseline Comparisons of Treatment Providers in the Science to Service Laboratory (SSL) and Training as Usual (TAU) Conditions

| Provider Characteristics | SSL Condition (n = 39) | TAU Condition (n = 21) | Total (n = 60) | p-value |
|---|---|---|---|---|
| Age | 41.9 (11.5) | 41.1 (14.4) | 41.7 (12.4) | .80 |
| Sex | | | | .70 |
| Female | 26 (67%) | 15 (71%) | 41 (68%) | |
| Male | 13 (33%) | 6 (29%) | 19 (32%) | |
| Ethnicity | | | | .93 |
| Hispanic | 4 (10%) | 2 (10%) | 6 (10%) | |
| Non-Hispanic | 35 (90%) | 19 (90%) | 54 (90%) | |
| Race | | | | .95 |
| White | 30 (77%) | 16 (76%) | 46 (77%) | |
| Non-white | 9 (23%) | 5 (24%) | 14 (23%) | |
| Highest Degree | | | | .048* |
| BA or Less | 33 (85%) | 13 (62%) | 46 (77%) | |
| MA or Greater | 6 (15%) | 8 (38%) | 14 (23%) | |
| Years of Experience | | | | 1.00 |
| Less than 1 year | 4 (11%) | 2 (10%) | 6 (10%) | |
| 1-3 years | 11 (28%) | 7 (33%) | 18 (30%) | |
| 3+ years | 24 (61%) | 12 (57%) | 36 (60%) | |
| Years with Agency | | | | .85 |
| Less than 1 year | 17 (44%) | 8 (38%) | 25 (41%) | |
| 1-3 years | 10 (26%) | 9 (43%) | 19 (32%) | |
| 3+ years | 12 (30%) | 4 (19%) | 16 (27%) | |
| Caseload | | | | .013* |
| Standard (≤ 39) | 13 (33%) | 14 (67%) | 27 (45%) | |
| Heavy (40+) | 26 (67%) | 7 (33%) | 33 (55%) | |

Note: Values are n(%) or M(SD). For categorical variables, between group differences in proportions were tested using the z-statistic. For ordinal variables with more than 2 categories, differences in distributions were tested using the independent samples Mann-Whitney U test.

Effects of SSL Training on CM Adoption

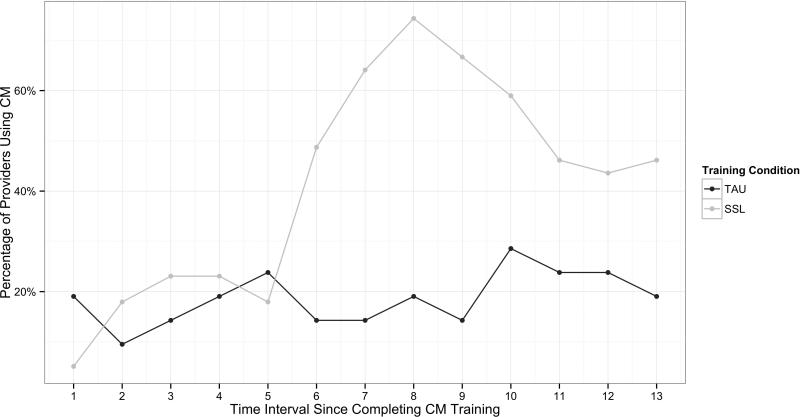

Figure 1 depicts rates of contingency management adoption over 13 consecutive 4-week intervals. The mixed model analyses controlling for covariates indicated a significant main effect of Training Group on the likelihood of contingency management adoption, favoring the SSL (B=1.28, SE=0.62, p<.04, OR=3.6, 95% CI=1.07–12.12). An examination of the Group*Time terms demonstrated that the likelihood of adoption in SSL compared to TAU also differed over time (χ²(12)=38.15, p<0.01).

Figure 1.

Rates of contingency management adoption over time in the Science-to-Service Laboratory (SSL) and Training as Usual (TAU) conditions.

Table 2 presents the rate of contingency management adoption in the SSL relative to the TAU group, along with estimates of the Time and Group*Time terms for each interval. Once Group*Time interactions were added, the Training Group main effect was no longer significant (B=−1.96, SE=1.22, p=.11) and could not be interpreted on its own in the context of significant interactions. Examination of odds ratios indicated that SSL providers were not significantly more likely to use contingency management during the first 5 intervals (20 weeks) after the baseline assessment, but then had between 2.4 and 13.5 times higher odds of using contingency management over the remainder of the follow-up period (weeks 24-52).

Table 2.

Contingency Management Adoption among Providers in the Science to Service Laboratory (SSL) Condition Relative to Training as Usual (TAU)

| 4-Week Period | SSL n | SSL % | TAU n | TAU % | Effect | Group*Time | p | OR | L95CI | U95CI |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 37 | 5.4% | 20 | 20.0% | Reference | -- | -- | 0.23 | 0.04 | 1.38 |
| 2 | 36 | 19.4% | 18 | 11.1% | −0.76 | 2.65 | 0.08 | 1.93 | 0.36 | 10.42 |
| 3 | 36 | 25.0% | 18 | 16.7% | −0.08 | 2.43 | 0.09 | 1.67 | 0.39 | 7.11 |
| 4 | 36 | 25.0% | 18 | 22.2% | 0.48 | 1.87 | 0.18 | 1.17 | 0.30 | 4.47 |
| 5 | 36 | 19.4% | 18 | 27.8% | 0.97 | 0.92 | 0.50 | 0.63 | 0.17 | 2.35 |
| 6 | 36 | 52.8% | 18 | 16.7% | −0.08 | 4.18 | 0.00 | 5.59 | 1.38 | 22.70 |
| 7 | 36 | 69.4% | 18 | 16.7% | −0.08 | 5.22 | 0.00 | 11.36 | 2.72 | 47.40 |
| 8 | 36 | 80.6% | 17 | 23.5% | 0.51 | 5.48 | 0.00 | 13.46 | 3.35 | 54.15 |
| 9 | 36 | 72.2% | 17 | 17.6% | −0.06 | 5.39 | 0.00 | 12.13 | 2.86 | 51.45 |
| 10 | 35 | 65.7% | 17 | 35.3% | 1.49 | 3.35 | 0.01 | 3.51 | 1.04 | 11.85 |
| 11 | 34 | 52.9% | 17 | 29.4% | 1.02 | 2.98 | 0.03 | 2.70 | 0.78 | 9.35 |
| 12 | 34 | 50.0% | 17 | 29.4% | 1.02 | 2.81 | 0.04 | 2.40 | 0.69 | 8.30 |
| 13 | 34 | 52.9% | 17 | 23.5% | 0.51 | 3.49 | 0.01 | 3.66 | 0.99 | 13.52 |

Note: Regression model estimates for the difference between SSL compared to TAU and changes in this effect at each time point include model terms for highest degree and caseload of providers. OR = odds ratio; L95CI = lower 95% confidence interval; U95CI = upper 95% confidence interval.
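For readers who want to check how an odds ratio and its confidence interval relate to the adoption rates in Table 2, the following is a minimal sketch of an unadjusted odds ratio with a Wald interval. It is not the study's mixed-model code. The function name `odds_ratio_wald` is hypothetical, and the adopter counts are assumptions back-calculated from the reported n and percentage for interval 8 (80.6% of 36 is taken as 29 SSL adopters; 23.5% of 17 is taken as 4 TAU adopters).

```python
from math import exp, log, sqrt
from statistics import NormalDist


def odds_ratio_wald(a_yes, a_n, b_yes, b_n, level=0.95):
    """Unadjusted odds ratio of group A vs. group B with a Wald interval."""
    a_no, b_no = a_n - a_yes, b_n - b_yes
    if min(a_yes, a_no, b_yes, b_no) <= 0:
        raise ValueError("every cell of the 2x2 table must be positive")

    odds_ratio = (a_yes * b_no) / (a_no * b_yes)
    # Standard error of the log odds ratio from the four cell counts.
    se_log_or = sqrt(1 / a_yes + 1 / a_no + 1 / b_yes + 1 / b_no)
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(0.5 + level / 2)
    lower = exp(log(odds_ratio) - z * se_log_or)
    upper = exp(log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Interval 8, using the ASSUMED counts described above.
or_, lo, hi = odds_ratio_wald(29, 36, 4, 17)
print(f"OR = {or_:.2f}, 95% CI = {lo:.2f}-{hi:.2f}")
# prints: OR = 13.46, 95% CI = 3.35-54.15
```

Under these assumed counts, the sketch returns values that agree with the interval-8 row of Table 2. Covariate adjustment and random effects are deliberately left out, so the calculation should be read as a plausibility check on the tabulated values and not as a reproduction of the analysis.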

Effects of SSL Training on Putative Mediators

Mixed models also tested the effects of the SSL on two putative mediators of adoption – perceived intervention attributes (PAS scales) and organizational readiness (ORC-S scales). Inclusion of random slopes in the mixed models testing mediators did not improve model fit, so random slopes were removed.

Regarding the five perceived intervention attributes (PAS) scales, random intercept models revealed a significant main effect for Observability (i.e., the dimension indicating how visible the benefits of the intervention are to those in the system) favoring the SSL (B=2.35; SE=0.95; p<.05), but did not indicate a significant Group*Time interaction (B=0.08; SE=0.11; p=0.48). These data suggested that SSL providers perceived the benefits of training as more observable than TAU providers did, though there was no significant change over time. No significant Training Group or Group*Time effects were found for any of the other attributes.

For the organizational readiness (ORC-S) scales, we first examined the Staff Attributes and Organizational Climate domains, as these were expected to be most sensitive to the SSL. No significant differences between SSL and TAU were found on any of these scales. Additional exploratory analyses revealed one significant difference in favor of the SSL on one of the Adequacy of Resources scales: there was a significant Training Group effect for Training (B=1.59; SE=0.69; p<.05), but no Group*Time interaction (B=0.02; SE=0.06; p=0.74).

DISCUSSION

This study evaluated the effectiveness of a theory-driven implementation strategy (SSL) in promoting increased contingency management adoption across a multi-site opioid addiction program. Results provided mixed support for our two hypotheses. Consistent with the first hypothesis, the SSL was associated with both higher likelihood and faster rate of contingency management adoption over the 12-month period. We expected the longitudinal data to reveal two discrete time intervals: a period with large, significant effect sizes favoring the SSL during active support in months 1-9, followed by a period of significant effect sizes favoring the SSL (though less large in magnitude) after support was removed in months 10-12. In the latter half of the study, our results directly supported these predictions: the SSL demonstrated superiority with very large odds ratios in months 6-9 and continued to demonstrate superiority with moderate odds ratios in months 10-12. Furthermore, these changes in odds ratios appeared to be driven by the SSL, since rates of adoption in the TAU condition were relatively stable over the 12-month period.

Unexpected, however, was the identification of another time interval at the start of the study; from weeks 1-20, there was no significant difference between the SSL and TAU conditions. This lack of a significant difference suggests that the upfront didactic training in contingency management had comparable effects on the two groups, and that the SSL did not immediately add incremental benefit. One possible explanation is that didactic training was sufficient to promote adoption for a discrete time period, but that more intensive training was needed to sustain adoption over a longer interval. Another potential interpretation is that the SSL, which relied heavily on interpersonal strategies, required a substantial time investment to begin having beneficial effects.

The attenuation of the SSL's effects after removal of active support in month 9 was consistent with our first hypothesis. Nevertheless, such a trend highlights the need to attend to sustainability throughout the implementation process. A recent review recommended that researchers work to prevent the all too common “voltage drop” as interventions move from efficacy and effectiveness to implementation and sustainability.41 Specific recommendations included in this review were the use of dynamic implementation approaches that encourage continued learning and problem solving, ongoing adaptations of intervention approaches, and expectations of ongoing improvements. The SSL philosophy in which a technology transfer specialist works with designated in-house innovation champions to foster continued learning and collaborative problem solving is well-aligned with these recommendations. Still, our results indicate that further work is needed to optimize the SSL's sustainability. Longer follow-ups are also needed to evaluate the SSL's effects for a prolonged timeframe without external assistance.

Support for our second hypothesis was more equivocal. Only one perceived innovation attribute (Observability) and one organizational readiness to change scale (Training Resources) differed between groups, and there was no indication that the SSL affected these variables over time. These results suggest that, across the entire study period, counselors in the SSL group perceived the benefits of their training as more visible and perceived themselves as having greater access to training resources than counselors in the TAU group; these findings are not surprising, since providers in the SSL condition knew that they would be receiving additional training resources and that the effects of their training would be monitored throughout the organization.

Without evidence supporting putative mediators, it is challenging to speculate what accounted for the beneficial effects of the SSL. Assuming that providers’ perceptions of Observability and Training Resources might have contributed to the observed outcome, it is unclear how stable these factors would be in the absence of other expected mediators. The lack of an effect on the dimension of Relative Advantage is particularly noteworthy, considering that Relative Advantage, defined as perceived benefit over current practice, is typically the strongest predictor of adoption.21 Future tests of the SSL will seek to target Relative Advantage by emphasizing the approach's key advantages throughout the initial training sessions, as well as the ongoing support processes.

Results must be interpreted within the context of several limitations. First, the differential recruitment success between SSL and TAU clinics both in terms of number of clinics (8/11 TAU vs. 7/7 SSL) and percentage of eligible participants (22% TAU vs. 76% SSL) clearly indicated response bias. As noted earlier, differential enrollment was likely an artifact of the design in which SSL providers knew they would receive additional resources. While differential enrollment might have biased the study in favor of SSL, it is equally plausible that the design biased results toward TAU, since only those TAU providers who were most motivated to adopt contingency management were likely to enroll in the study. This theory is bolstered by the fact that TAU providers had more education and higher baseline rates of contingency management adoption than those in the SSL condition.

Additional limitations pertain to the relatively small sample size of treatment agencies and providers. Furthermore, because this was not a randomized trial, it is possible that sites differed in unmeasured ways that influenced our results. Our design mirrored actual ATTC procedures, in which training initiatives are rolled out regionally, thereby optimizing ecological validity but limiting our ability to isolate the effects of the SSL on adoption. Finally, our study measured contingency management adoption using self-report and did not examine the extent to which the intervention was implemented with fidelity or competence. All of these limitations should be addressed in future evaluations of the SSL model.

Despite these limitations, this study advances knowledge by providing a prospective test of a theory-driven implementation approach. Our results have two key implications for researchers and clinicians. First, our results indicate that applying the SSL in a large multi-site, multi-state agency was feasible and had preliminary evidence of effectiveness. Our promising results suggest that a larger scale randomized trial is warranted. To optimize both internal and ecological validity, a stepped wedge cluster randomized trial assigning clinics to conditions appears to be a particularly promising design (see reference 42). Second, our study found that the SSL was associated with increased contingency management adoption despite no effects on putative mediators, highlighting a need for more comprehensive examination of mechanisms. Additional evaluation of mediators can help to support the SSL's uptake in community agencies by elucidating how it promotes adoption of evidence-based practice and which aspects of the model are most essential.

Acknowledgments

FUNDING

This work was supported by grants R21DA021150 (PI: D. Squires) and K23DA031743 (PI: S. Becker) from the National Institute on Drug Abuse and grant 5UD1TI013418 (PI: D. Squires) from the Center for Substance Abuse Treatment, Substance Abuse and Mental Health Services Administration, United States Department of Health and Human Services. The views and opinions contained within this document do not necessarily reflect those of the National Institute on Drug Abuse or the U.S. Department of Health and Human Services, and should not be construed as such.

Footnotes

AUTHOR CONTRIBUTIONS

Drs. Becker and Squires contributed equally to this work. Dr. Becker was responsible for conceptualizing this manuscript and taking the lead on its preparation, while Dr. Squires was the Principal Investigator responsible for conceptualizing the original study design, obtaining funding, overseeing study execution, and contributing to the writing of this manuscript. Dr. Strong was responsible for designing and conducting the statistical analyses. Drs. Barnett and Monti were Co-Investigators of the original study and contributed to study conceptualization, obtaining funding, and overseeing study execution. Dr. Petry was a Consultant on the original study and was responsible for overseeing the development of the contingency management training protocol and delivery. All co-authors reviewed, edited, and approved this manuscript.

REFERENCES

- 1. Institute of Medicine. Bridging the gap between practice and research: Forging partnerships with community-based drug and alcohol treatment. National Academy Press; Washington, DC: 1998.

- 2. McLellan AT, Carise D, Kleber HD. Can the national addiction treatment infrastructure support the public's demand for quality care? J Subst Abuse Treat. 2003;25:117–21.

- 3. Compton WM, Stein JB, Robertson EB, Pintello D, Pringle B, Volkow ND. Charting a course for health services research at the National Institute on Drug Abuse. J Subst Abuse Treat. 2005;29:167–72. doi: 10.1016/j.jsat.2005.05.008.

- 4. Nosyk B, Anglin MD, Brissette S, et al. A call for evidence-based medical treatment of opioid dependence in the United States and Canada. Health Aff (Millwood). 2013;32:1462–9. doi: 10.1377/hlthaff.2012.0846.

- 5. Beidas R, Kendall P. Training therapists in evidence-based practice: A critical review of studies from a systems-contextual perspective. Clin Psychol Sci Pract. 2010;17:1–30. doi: 10.1111/j.1468-2850.2009.01187.x.

- 6. Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clin Psychol Rev. 2010;30:448–66. doi: 10.1016/j.cpr.2010.02.005.

- 7. Sholomskas DE, Syracuse-Siewert G, Rounsaville BJ, Ball SA, Nuro KF, Carroll KM. We don't train in vain: A dissemination trial of three strategies of training clinicians in cognitive–behavioral therapy. J Consult Clin Psychol. 2005;73:106–15. doi: 10.1037/0022-006X.73.1.106.

- 8. Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41:327–50. doi: 10.1007/s10464-008-9165-0.

- 9. Hanson GR, Leshner AI, Tai B. Putting drug abuse research to use in real-life settings. J Subst Abuse Treat. 2002;23:69–70. doi: 10.1016/s0740-5472(02)00269-6.

- 10. Tai B, Straus MM, Liu D, Sparenborg S, Jackson R, McCarty D. The first decade of the National Drug Abuse Treatment Clinical Trials Network: Bridging the gap between research and practice to improve drug abuse treatment. J Subst Abuse Treat. 2010;38:S4–S13. doi: 10.1016/j.jsat.2010.01.011.

- 11. Brown BS, Flynn PM. The federal role in drug abuse technology transfer: A history and perspective. J Subst Abuse Treat. 2002;22:245–57. doi: 10.1016/s0740-5472(02)00228-3.

- 12. Addiction Technology Transfer Center Network. Prescription Opioid Addiction Treatment Study (POATS) Resource List. n.d. Available from: http://www.attcnetwork.org/explore/priorityareas/science/blendinginitiative/documents/poats/POATS_Resource_List.pdf. Accessed October 15, 2015.

- 13. Gumbley S, Duby L, Torch M, Storti S. ATTC New England Science to Service Laboratory: A comprehensive technology transfer model. 2005.

- 14. Squires DD, Gumbley SJ, Storti SA. Training substance abuse treatment organizations to adopt evidence-based practices: The Addiction Technology Transfer Center of New England Science to Service Laboratory. J Subst Abuse Treat. 2008;34:293–301. doi: 10.1016/j.jsat.2007.04.010.

- 15. Simpson DD. A conceptual framework for transferring research to practice. J Subst Abuse Treat. 2002;22:171–82. doi: 10.1016/s0740-5472(02)00231-3.

- 16. Addiction Technology Transfer Centers (ATTC) Network. The Change Book: A Blueprint for Technology Transfer. Addiction Technology Transfer Centers (ATTC), University of Missouri; Kansas City, MO: 2000.

- 17. Weiner BJ, Amick H, Lee SY. Conceptualization and measurement of organizational readiness for change: A review of the literature in health services research and other fields. Med Care Res Rev. 2008;65:379–436. doi: 10.1177/1077558708317802.

- 18. Weiner BJ. A theory of organizational readiness for change. Implement Sci. 2009;4:67. doi: 10.1186/1748-5908-4-67.

- 19. Weiner BJ, Lewis MA, Linnan LA. Using organization theory to understand the determinants of effective implementation of worksite health promotion programs. Health Education Research. 2009;24:292–305. doi: 10.1093/her/cyn019.

- 20. Simpson DD, Flynn PM. Moving innovations into treatment: A stage-based approach to program change. J Subst Abuse Treat. 2007;33:111–20. doi: 10.1016/j.jsat.2006.12.023.

- 21. Rogers EM. Diffusion of Innovations. 3rd ed. Free Press; New York: 1983.

- 22. Hartzler B, Lash SJ, Roll JM. Contingency management in substance abuse treatment: A structured review of the evidence for its transportability. Drug Alcohol Depend. 2012;122:1–10. doi: 10.1016/j.drugalcdep.2011.11.011.

- 23. Dutra L, Stathopoulou G, Basden SL, Leyro TM, Powers MB, Otto MW. A meta-analytic review of psychosocial interventions for substance use disorders. Am J Psychiatry. 2008;165:179–87. doi: 10.1176/appi.ajp.2007.06111851.

- 24. Griffith JD, Rowan-Szal GA, Roark RR, Simpson DD. Contingency management in outpatient methadone treatment: A meta-analysis. Drug Alcohol Depend. 2000;58:55–66. doi: 10.1016/s0376-8716(99)00068-x.

- 25. Lussier JP, Heil SH, Mongeon JA, Badger GJ, Higgins ST. A meta-analysis of voucher-based reinforcement therapy for substance use disorders. Addiction. 2006;101:192–203. doi: 10.1111/j.1360-0443.2006.01311.x.

- 26. Prendergast M, Podus D, Finney J, Greenwell L, Roll J. Contingency management for treatment of substance use disorders: A meta-analysis. Addiction. 2006;101:1546–60. doi: 10.1111/j.1360-0443.2006.01581.x.

- 27. Peirce JM, Petry NM, Stitzer ML, et al. Effects of lower-cost incentives on stimulant abstinence in methadone maintenance treatment: A National Drug Abuse Treatment Clinical Trials Network study. Arch Gen Psychiatry. 2006;63:201–8. doi: 10.1001/archpsyc.63.2.201.

- 28. Fuller BE, Rieckmann T, Nunes EV, et al. Organizational readiness for change and opinions toward treatment innovations. J Subst Abuse Treat. 2007;33:183–92. doi: 10.1016/j.jsat.2006.12.026.

- 29. Hartzler B, Donovan DM, Tillotson CJ, Mongoue-Tchokote S, Doyle SR, McCarty D. A multilevel approach to predicting community addiction treatment attitudes about contingency management. J Subst Abuse Treat. 2012;42:213–21. doi: 10.1016/j.jsat.2011.10.012.

- 30. Damschroder LJ, Hagedorn HJ. A guiding framework and approach for implementation research in substance use disorders treatment. Psychol Addict Behav. 2011;25:194. doi: 10.1037/a0022284.

- 31. Petry NM. Contingency management for substance abuse treatment: A guide to implementing this evidence-based practice. Routledge; New York: 2012.

- 32. Petry NM, Stitzer ML. Contingency management: Using motivational incentives to improve drug abuse treatment. Yale University Psychotherapy Development Center Training Series No. 6. West Haven, Connecticut: 2002. Sponsored by NIDA P50 DA 09241.

- 33. Moore GC, Benbasat I. Development of an instrument to measure the perceived characteristics of adopting an information technology innovation. Information Systems Research. 1991;2:192–221.

- 34. Karahanna E, Straub DW, Chervany NL. Information technology adoption across time: A cross-sectional comparison of pre-adoption and post-adoption beliefs. MIS Quarterly. 1999;23:183–213.

- 35. Plouffe CR, Hulland JS, Vandenbosch M. Richness versus parsimony in behaviour technology adoption decisions -- Understanding merchant adoption of a smart card-based payment system. Information Systems Research. 2001;12:208–22.

- 36. Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: Toward a unified view. MIS Quarterly. 2003;27:425–78.

- 37. Lehman WEK, Greener JM, Simpson DD. Assessing organizational readiness for change. J Subst Abuse Treat. 2002;22:197–209. doi: 10.1016/s0740-5472(02)00233-7.

- 38. Joe GW, Broome KM, Simpson DD, Rowan-Szal GA. Counselor Perceptions of Organizational Factors and Innovations Training Experiences. J Subst Abuse Treat. 2007;33:171–82. doi: 10.1016/j.jsat.2006.12.027.

- 39. Simpson DD, Joe GW, Rowan-Szal GA. Linking the Elements of Change: Program and Client Responses to Innovation. J Subst Abuse Treat. 2007;33:201–9. doi: 10.1016/j.jsat.2006.12.022.

- 40. Enders CK, Bandalos DL. The Relative Performance of Full Information Maximum Likelihood Estimation for Missing Data in Structural Equation Models. Structural Equation Modeling: A Multidisciplinary Journal. 2001;8:430–57.

- 41. Chambers D, Glasgow R, Stange K. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci. 2013;8:117. doi: 10.1186/1748-5908-8-117.

- 42. Hemming K, Haines TP, Chilton PJ, Girling AJ, Lilford RJ. The stepped wedge cluster randomised trial: rationale, design, analysis, and reporting. BMJ. 2015;350:h391. doi: 10.1136/bmj.h391.