Abstract

Attention is thought to be controlled by a specialized frontoparietal network that modulates the responses of neurons in sensory and association cortex. However, the principles by which this network affects the responses of these sensory and association neurons remain unknown. In particular, it remains unclear whether different forms of attention, such as spatial and feature-based attention, independently modulate responses of single neurons. We recorded responses of single V4 neurons in a task that controls both forms of attention independently. We find that the combined effects of spatial and feature-based attention can be described as the sum of independent processes with a small super-additive interaction term. This pattern of effects demonstrates that the spatial and feature-based aspects of the attentional control system can independently affect responses of single neurons. These results are consistent with the idea that spatial and feature-based attention are controlled by distinct neural substrates whose effects combine synergistically to influence responses of visual neurons.

INTRODUCTION

Attention is the process by which sensory stimuli are selected for enhanced perceptual processing (Desimone & Duncan, 1995, Egeth & Yantis, 1997, Reynolds & Chelazzi, 2004). Different forms of attention allow selection of spatial locations (Connor, Preddie, Gallant & Van Essen, 1997, McAdams & Maunsell, 1999, Motter, 1993, Reynolds, Chelazzi & Desimone, 1999), features (Liu, Slotnick, Serences & Yantis, 2003, McAdams & Maunsell, 2000, Motter, 1994, Treue & Martinez Trujillo, 1999), objects (Blaser, Pylyshyn & Holcombe, 2000, Roelfsema, Lamme & Spekreijse, 1998, Serences, Schwarzbach, Courtney, Golay & Yantis, 2004), and points in time (Doherty, Rao, Mesulam & Nobre, 2005, Ghose & Maunsell, 2002, Nobre, Allison & McCarthy, 1998). It is currently unclear whether the mechanism or mechanisms that control these different forms of attention can independently affect the tuning of single neurons in sensory cortex. Such information may shed light on the question of whether different forms of attention are mediated by discrete neural substrates or rather reflect the operation of a single, general-purpose attention system (Doherty et al., 2005, Hayden & Gallant, 2005, Kastner & Ungerleider, 2000, Posner & Dehaene, 1994, Raz & Buhle, 2006, Treue, 2001).

Several studies have addressed this issue by examining the effects of spatial and feature-based attention on visual processing. It has been shown that these two forms of attention have roughly additive effects on the firing rates of single neurons (Hayden & Gallant, 2005, McAdams & Maunsell, 2000, Treue & Martinez Trujillo, 1999), brain metabolic activity (Fink, Dolan, Halligan, Marshall & Frith, 1997), evoked electrical potentials (Doherty et al., 2005, Hillyard & Munte, 1984) and reaction times (Doherty et al., 2005, Maljkovic & Nakayama, 1996). These data have been taken to support the idea that different forms of attention reflect independent processes. However, in the absence of physiological data, the validity of this claim remains unknown.

Other studies have investigated the relationship between spatial and feature-based attention by examining properties of the fronto-parietal attentional control network (Corbetta, Shulman, Miezin & Petersen, 1995, Giesbrecht, Woldorff, Song & Mangun, 2003, Kastner & Ungerleider, 2000, Vandenberghe, Duncan, Dupont, Ward, Poline, Bormans, Michiels, Mortelmans & Orban, 1997, Wojciulik & Kanwisher, 1999). These data suggest that spatial and feature-based attention are mediated by similar or overlapping fronto-parietal networks.

We investigated the relationship between the effects of spatial and feature-based attention by recording responses of single area V4 neurons in a task that controls both forms of attention simultaneously (Hayden & Gallant, 2005). Unlike tasks used in previous physiological studies of visual neurons, our task fully crosses conditions of spatial and feature-based attention. In earlier work we showed that spatial and feature-based attention have different effects on the dynamics of visual responses, suggesting that the neural substrates of these two forms of attention are independent (Hayden & Gallant, 2005). Here we investigate the interaction between these two effects.

We show that spatial and feature-based attention can be described as independent processes with a small super-additive interaction term. These results demonstrate that different forms of attention can independently control the responses of single neurons in visual cortex. This finding is consistent with the idea that these two forms of attention are controlled by independent processes that have mutually synergistic effects on the responses of single V4 neurons. The present results therefore provide constraints on future models of attention, and also point towards the neuronal mechanism of attentional modulation of visual responses.

MATERIALS AND METHODS

Subjects and physiological procedures

All animal procedures were approved by oversight committees at the University of California, Berkeley, and satisfied or exceeded all NIH and USDA regulations. Methods have been reported in detail elsewhere (David, Vinje & Gallant, 2004, Hayden & Gallant, 2005, Mazer & Gallant, 2003). We performed extracellular single-neuron recordings with epoxy-coated tungsten electrodes (FHC, Bowdoinham, ME) from two macaques. We used a spike sorter (Plexon Instruments, Dallas, TX) to amplify, filter, and isolate neuronal responses. We located area V4 by exterior cranial landmarks and by direct visualization of the lunate sulcus, and confirmed these by comparing receptive field properties to those reported previously. We estimated the boundaries of each classical receptive field (CRF) manually and confirmed them by reverse correlation using a dynamic sequence of squares flashed on the monitor. CRF diameters ranged from 3–8 degrees (median 5 degrees) and eccentricities ranged from 7–20 degrees (median 12 degrees).

We monitored eye position with an infrared eye tracker (500 Hz: Eyelink II, SR Research, Toronto, CA). Trials during which eye position deviated by more than 0.5 degrees from the fixation spot were excluded before analysis. We found that eye position does not depend on either spatial attention or feature-based attention for any neuron in our data set (randomized t-test, p>0.05).

Behavioral Task

Trials began when subjects grabbed a capacitive touch bar. A fixation spot then appeared; after fixation was acquired, an image cue (natural image patch) and spatial cue (small red line pointing toward one location) appeared for 150–600 ms. (For approximately half the cells, the spatial cue only appeared on the first trial in the block.) We selected cells and cues so that cues never encroached upon the CRF. Following an 850 ms blank period (350 ms in 30 neurons) two stimulus streams appeared: one in the CRF and one 180 degrees away, in the opposite hemifield. Image patches appeared at a constant rate (3.5–4.5 Hz, varying across cells) and there was no blank period between successive stimuli. To receive reward subjects had to maintain continuous fixation and release the response bar within one second of the appearance of the match in the cued stream.

The stimuli were circular patches selected randomly from black and white digital photographs of natural scenes (Corel Corp.). Our random selection algorithm favored images with broad frequency spectra. Images were roughly matched to RF size and the outer 10% was blended linearly into the gray background. We did not normalize images for contrast or luminance. At the beginning of each day, we chose two match images arbitrarily from the set of all images. These did not differ statistically from the distractors and were chosen without regard to neuronal response properties. To avoid any long-term bias we chose new images each day.

We constructed four conditions of attention by crossing two spatial conditions with two image conditions. Conditions were run in blocks of ten trials. Each block was associated with a single combination of spatial and feature conditions. Thus, there were four block types. We ran block types in sequence and did not randomize their order. On any trial as many as twenty distractor images could appear before the match. After the match, images continued to appear until either the bar was released or until one second had passed. (If the animal did not respond within one second of the appearance of the match, the trial was counted as an error.) A match appeared on all trials.
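The blocked, fully crossed design described above can be illustrated with a short Python sketch. This is not the task-control software used in the study; the condition labels (`spatial_in`, `feature_1`, and so on), the function name, and the particular order of block types are hypothetical, since the text states only that block types were run in a fixed sequence.

```python
from itertools import product

TRIALS_PER_BLOCK = 10  # block length stated in the text


def block_schedule(n_cycles=1):
    """Return a list of (spatial, feature) labels, one entry per trial.

    The four block types come from crossing two spatial conditions with
    two feature conditions. They are run in a fixed (non-randomized)
    sequence; the specific order used here is an assumption.
    """
    block_types = list(
        product(("spatial_in", "spatial_out"), ("feature_1", "feature_2"))
    )
    schedule = []
    for _ in range(n_cycles):
        for spatial, feature in block_types:
            schedule.extend([(spatial, feature)] * TRIALS_PER_BLOCK)
    return schedule


# Two passes through the four block types: 2 * 4 * 10 = 80 trials.
schedule = block_schedule(n_cycles=2)
```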

To ensure that subjects did not adopt a strategy of remembering both possible matches on each trial, the uncued match occasionally appeared in the cued stream (in this case it was called the catch image). To ensure that subjects did not adopt a strategy of searching in both spatial streams on each trial, the match was occasionally shown in the uncued stream (spatial catch image). The catch image and spatial catch were shown with approximately the same probability as the match. Responses to the catches caused the trial to end immediately and were not rewarded. Only data from correct trials were analyzed. Reaction times of both subjects were tightly distributed about 320 ms (subject 1) and 340 ms (subject 2), indicating that they were not adopting a guessing strategy.

Data analysis

The experiment followed a two-by-two design, in which two conditions of spatial attention were crossed with two conditions of feature-based attention. In all analyses presented here, the average response of a neuron to an image was defined as the average firing rate in a window from 50 to 300 ms after the onset of each distractor. Analyses were repeated with several response windows of different sizes; results were nearly identical regardless of window size (data not shown). The entire data set obtained for each neuron consisted of responses to 48–1890 unique patches (median 450) per attention condition.
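As an illustration of the response measure defined above, the following Python sketch counts spikes in the 50 to 300 ms window after a distractor onset and converts the count to a rate. The function name, the half-open window convention, and the example spike times are assumptions made for illustration, not details taken from the study.

```python
def window_rate(spike_times_ms, onset_ms, start_ms=50.0, stop_ms=300.0):
    """Mean firing rate (spikes/sec) in [onset + start, onset + stop).

    spike_times_ms: spike times in milliseconds on the trial clock.
    onset_ms: onset time of one distractor image.
    """
    lo = onset_ms + start_ms
    hi = onset_ms + stop_ms
    n_spikes = sum(1 for t in spike_times_ms if lo <= t < hi)
    window_sec = (stop_ms - start_ms) / 1000.0
    return n_spikes / window_sec


# Hypothetical spike train (ms) and a distractor onset at t = 1000 ms.
# Four spikes fall inside [1050, 1300), giving 4 / 0.25 s = 16 spikes/sec.
spikes = [1010.0, 1060.0, 1120.0, 1180.0, 1290.0, 1310.0]
rate = window_rate(spikes, onset_ms=1000.0)
```

Changing `start_ms` and `stop_ms` corresponds to repeating the analysis with a different response window.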

For each neuron, we used a two-factor ANOVA to assess the main effects of spatial and feature-based attention, and their interaction. (To satisfy the homogeneity of variance assumption of ANOVA, before analysis we performed a square root transformation of response rates obtained on each trial.) Results of the ANOVA were verified using a Kruskal-Wallis test, which produced similar results (data not shown). We used a general linear model (GLM) to obtain quantitative parameter estimates for the relative contributions of spatial and feature-based attention, and their interaction. All analyses were performed in Matlab using the Statistics Toolbox (Mathworks, Natick MA). In the GLM, we defined the baseline firing rate as the average evoked response of the neuron when spatial attention was directed away from the response field and the relatively less effective feature was the target.
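The square-root transform and two-factor ANOVA can be sketched in Python as follows. This is a simplified illustration rather than the Matlab analysis used in the study: it assumes a balanced design (equal numbers of responses in all four conditions), returns only the F statistics, and leaves the conversion to p-values to a statistics library. The function name and example data are hypothetical.

```python
import math
from statistics import mean


def two_by_two_anova(cells):
    """F statistics for a balanced 2x2 design on sqrt-transformed rates.

    cells[s][f] is a list of response rates for spatial level s (0/1)
    and feature level f (0/1). All four lists must have equal length.
    """
    levels = (0, 1)
    # Variance-stabilizing square-root transform, as described in the text.
    x = [[[math.sqrt(r) for r in cells[s][f]] for f in levels] for s in levels]
    n = len(x[0][0])
    if any(len(x[s][f]) != n for s in levels for f in levels):
        raise ValueError("this sketch assumes a balanced design")

    m = [[mean(x[s][f]) for f in levels] for s in levels]   # cell means
    grand = mean(m[s][f] for s in levels for f in levels)   # grand mean
    row = [mean(m[s]) for s in levels]                      # spatial means
    col = [mean(m[s][f] for s in levels) for f in levels]   # feature means

    ss_spatial = 2 * n * sum((row[s] - grand) ** 2 for s in levels)
    ss_feature = 2 * n * sum((col[f] - grand) ** 2 for f in levels)
    ss_inter = n * sum(
        (m[s][f] - row[s] - col[f] + grand) ** 2 for s in levels for f in levels
    )
    ss_error = sum(
        (v - m[s][f]) ** 2 for s in levels for f in levels for v in x[s][f]
    )
    ms_error = ss_error / (4 * (n - 1))  # error df = 4 cells * (n - 1)

    # Each effect has one degree of freedom, so F = SS_effect / MS_error.
    return {
        "spatial": ss_spatial / ms_error,
        "feature": ss_feature / ms_error,
        "interaction": ss_inter / ms_error,
    }


# Hypothetical rates chosen as perfect squares so the arithmetic is exact.
example = [[[9.0, 16.0], [16.0, 25.0]], [[16.0, 25.0], [36.0, 49.0]]]
f_stats = two_by_two_anova(example)
```

With unequal numbers of responses per condition, as in real recordings, the sums of squares are no longer orthogonal and a regression-based ANOVA would be needed instead.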

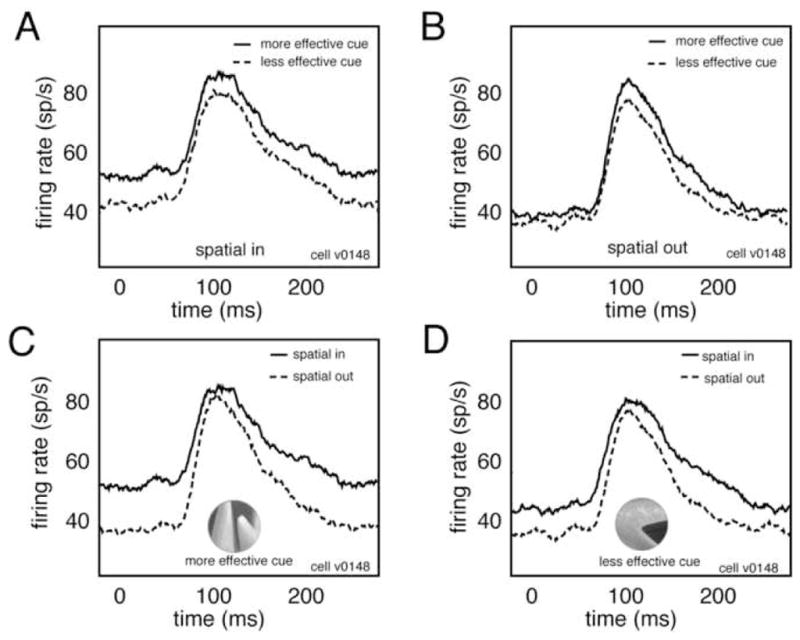

To construct the PSTHs shown in figure 2 we calculated average responses of the example neuron to all distractor images in each of the four crossed attention conditions. Note that the modulation that occurs before the transient response in this figure reflects the influence of the previous stimulus, not delay period modulation.

Figure 2.

Super-additive interaction of spatial and feature-based attention. A. Peri-stimulus time histograms (PSTHs) for one V4 neuron show response to distractors in both feature-based attention conditions when spatial attention is directed toward the receptive field. Zero indicates time of distractor onset. B. Responses of the same neuron in both feature-based attention conditions when spatial attention is directed away from the receptive field. Modulation by feature-based attention in the spatial-in condition (panel A) is stronger than modulation in the spatial-out condition (panel B). This interaction is super-additive. C. Responses of the same neuron in both spatial attention conditions when feature-based attention is directed to the cue that elicits greater activation when it was attended (i.e., the more effective feature), inset. D. Responses in both spatial attention conditions when feature-based attention is directed to the cue that elicited weaker responses (i.e., the less effective feature). Modulation by spatial attention in one feature condition (panel C) is stronger than modulation in the other feature condition (panel D). This interaction is super-additive.

RESULTS

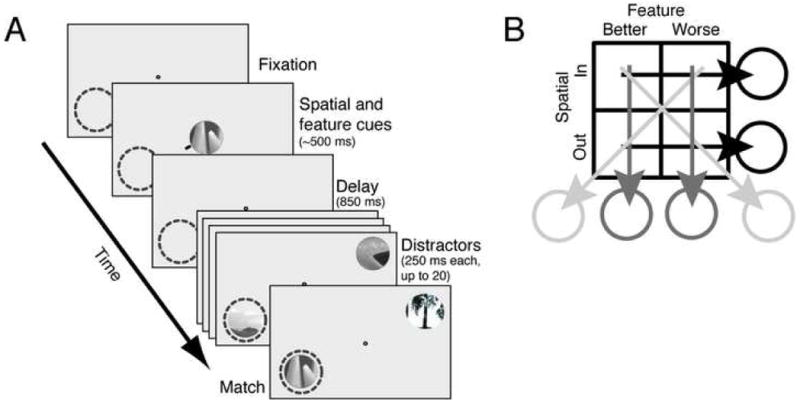

We recorded responses of 110 single neurons in area V4 during performance of a delayed match-to-sample task that controls both spatial and feature-based attention (Figure 1). Each trial began after a response bar was depressed and fixation was acquired. Two cues were then presented simultaneously at fixation. The feature cue was identical to the search target (the match) and served to guide feature-based attention; the spatial cue consisted of a small red line (<1 degree long) and indicated which of the two image streams should be attended. Following a brief delay two rapidly changing streams (~4 Hz) of up to twenty distractor images appeared, one within the receptive field and one in the opposite hemifield. Reward was given if the response bar was released within one second after the match appeared in the spatially cued stream.

Figure 1.

Delayed match-to-sample task and analysis. A. Each frame represents a different portion of the trial. Dashed circle represents the receptive field of the neuron under study. A target (feature cue) appeared at the fixation spot for 150–600 ms. A small red line (spatial cue) appeared on one side of the target to designate the relevant stream. Following an 850 ms delay period, two streams of patches appeared, one in the receptive field and one in the opposite quadrant. Patches were shown at approximately 4 Hz with no blank interval between successive patches. Reward was given for bar release within one second after the target appeared in the cued stream. Failures to release, early releases, and fixation breaks at any time were considered errors. The target and all distractors were circular patches selected from photos and fit to the size of the classical receptive field. B. Four attention conditions were obtained by crossing two spatial and two feature-based conditions. The spatial attention comparison (black lines) was performed by averaging data collected over the two feature conditions; the feature-based attention comparison (gray lines) was performed by averaging data collected over the two spatial conditions.

Fixation breaks, early releases, and late releases were all considered errors. The majority of errors were fixation breaks. Fixation breaks occurred on 13% of trials and 16% of trials for subjects 1 and 2, respectively. Early and late releases were rarer (2% of trials for subject 1 and 4% of trials for subject 2). Most of these errors consisted of releasing the bar when the cued target appeared in the uncued location or when the uncued target appeared at the cued location. There were not enough errors to compare neuronal responses on error trials to those on correct trials, and data from the error trials are not reported here. Because the overall error rate for both subjects was >10%, we believe that the task is relatively difficult for the subjects.

The responses of a single neuron are shown in figure 2. For this neuron, feature-based attention modulates responses by 11.8% when spatial attention is directed toward the neuron’s receptive field (Figure 2a) and by 2.4% when spatial attention is directed away from the receptive field (Figure 2b). Thus, spatial attention enhances the modulatory effect of feature-based attention.

We also compared responses in the two feature-based attention conditions. We typically observed greater neuronal responses in one condition than in the other. We called the cue that elicited greater firing rates when it was attended the more effective cue and the cue that elicited weaker firing rates the less effective cue. We found that spatial attention modulates responses by 21.8% in the more effective cue condition (Figure 2c) and by 12.4% in the less effective cue condition (Figure 2d). Thus, feature-based attention enhances the modulatory effects of spatial attention for this neuron.

To evaluate the prevalence of both spatial and feature-based attention and their interactions in our sample of 110 V4 neurons, we performed a separate 2×2 ANOVA on the responses of each neuron to all distractor images. The states of spatial and feature-based attention were used as factors. (Because the match and catch images had a special behavioral relevance, responses to these images were removed from the data set before analysis.) According to the ANOVA model the independent effects of spatial and feature-based attention should emerge as main effects and any interaction should emerge as an interaction term.

We observe a main effect of spatial attention in 74% of the recorded neurons (n=81/110 cells; randomized t-test, p<0.05). Across all neurons in the sample, spatial attention modulates responses by 13.3% of the baseline firing rate; the size of spatial modulation is 14.1% in the more effective feature condition and 12.4% in the less effective feature condition. This difference is significant (randomized t-test, p<0.01). We observe a main effect of feature-based attention in 69% of neurons (n=76/110; randomized t-test, p<0.05). Across all neurons, feature-based attention modulates responses by 8.9%; the size of feature-based modulation is 10.1% when spatial attention is directed toward the receptive field and 7.6% when spatial attention is directed away from the receptive field. This difference is also significant (randomized t-test, p<0.001). ANOVA also reveals a significant interaction between the effects of spatial and feature-based attention in 42% of neurons in the sample (n=46/110; p<0.01). Thus, for many cells spatial attention is stronger when feature-based attention is directed to the more effective feature, and vice versa. The results of the ANOVA were mentioned in an earlier paper (Hayden & Gallant, 2005), but neither the form nor the size of the interaction was investigated.
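The text does not spell out the randomization procedure behind the randomized t-tests. One common reading is a label-shuffling permutation test on the difference between condition means, sketched below in Python. The function name, the number of permutations, and the use of the absolute mean difference as the test statistic are all assumptions, not details confirmed by the study.

```python
import random
from statistics import mean


def permutation_test(a, b, n_perm=2000, seed=0):
    """Two-sided permutation p-value for a difference in means.

    a, b: responses in two attention conditions.
    Labels are shuffled n_perm times; the p-value is the fraction of
    shuffles whose absolute mean difference is at least as large as the
    observed one (with the usual +1 correction).
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            count += 1
    return (count + 1) / (n_perm + 1)


# Hypothetical firing rates (spikes/sec) with a clear attention effect.
attended = [21, 22, 23, 24, 25, 26, 27, 28]
unattended = [11, 12, 13, 14, 15, 16, 17, 18]
p_value = permutation_test(attended, unattended)
```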

To estimate the form of the interaction independent of the ANOVA, we fit a general linear model (GLM) to neuronal responses obtained under different conditions of attention. The GLM gives separate parameter estimates for visual response in the absence of attention (intercept parameter b), the influence of spatial attention (βs), the influence of feature-based attention (feature parameter, βf) and the interaction (βi). The equation for the GLM is:

$$\text{response} = b + \beta_s s + \beta_f f + \beta_i s f \tag{1}$$

The intercept parameter b approximates a neuron’s response in the absence of attention. The variables s and f are binary indicators of the status of spatial and feature-based attention, respectively. Note that we did not optimize the attended features in this study, so we have probably underestimated the potential magnitude of feature-based attention. Thus, our estimates of βf and βi are conservative, and we cannot know how strong feature-based attention would be with ideal stimuli.
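To make the parameterization concrete, assume Equation 1 has the standard form for a two-factor linear model with an interaction, response = b + βs·s + βf·f + βi·s·f. Because s and f are binary, this model has four parameters for four conditions, so its least-squares estimates can be read directly from the four condition means. The Python sketch below shows this; the function name and the example firing rates are hypothetical, and it is not the Matlab fitting code used in the study.

```python
from statistics import mean


def fit_glm_2x2(responses):
    """Least-squares parameters of the saturated 2x2 model.

    responses[(s, f)] is a list of firing rates (spikes/sec), where
    s = 1 means spatial attention toward the receptive field and
    f = 1 means the more effective feature is the target.
    Returns (b, beta_s, beta_f, beta_i).
    """
    m = {key: mean(vals) for key, vals in responses.items()}
    b = m[(0, 0)]                                   # baseline condition
    beta_s = m[(1, 0)] - b                          # spatial attention alone
    beta_f = m[(0, 1)] - b                          # feature attention alone
    beta_i = m[(1, 1)] - m[(1, 0)] - m[(0, 1)] + b  # departure from additivity
    return b, beta_s, beta_f, beta_i


# Hypothetical responses for one neuron in the four attention conditions.
example = {
    (0, 0): [18.0, 20.0],
    (1, 0): [21.0, 23.0],
    (0, 1): [20.0, 22.0],
    (1, 1): [24.0, 26.0],
}
params = fit_glm_2x2(example)
```

A positive `beta_i` means the response with both forms of attention engaged exceeds the sum of the two separate effects, which is the super-additive case.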

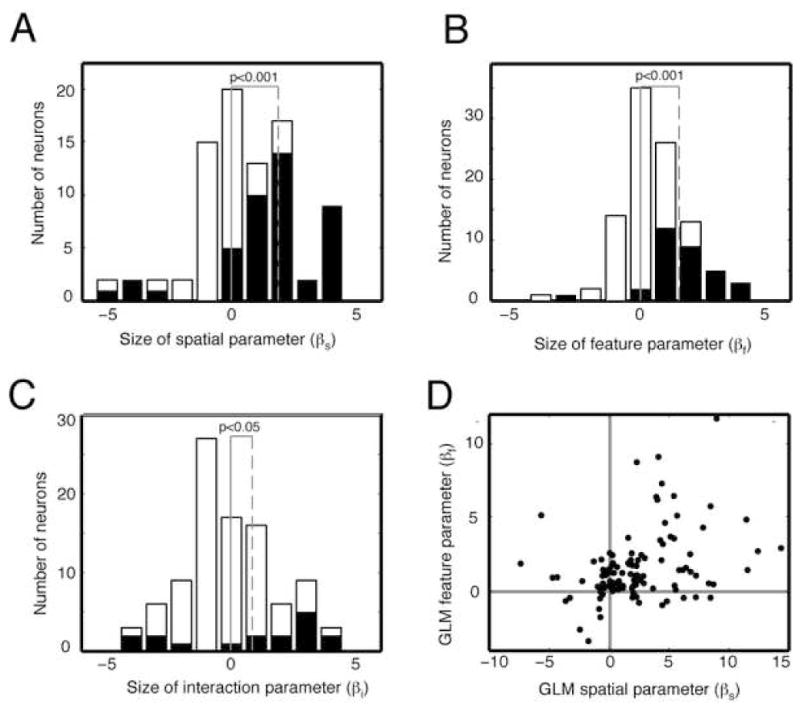

Across all neurons, the average size of the intercept parameter (b) is 18.38 spikes/sec; the average influence of spatial attention (βs) is 2.34 spikes/sec (12.8% of baseline firing rate, figure 3a); and the influence of feature-based attention (βf) is 1.57 spikes/sec (8.5% of baseline firing rate, figure 3b). These modulation ratios are both significant (p<0.001) and within the range of those previously reported for attentional modulation in V4 (Connor et al., 1997, McAdams & Maunsell, 1999, McAdams & Maunsell, 2000, Motter, 1993, Motter, 1994, Reynolds et al., 1999, Treue & Martinez Trujillo, 1999). The average size of the interaction (βi) is 0.75 spikes/sec (4.0% of baseline firing rate, figure 3c). This positive interaction term is small but significant (chi-square test, p<0.05), demonstrating that spatial and feature-based attention generally enhance each other’s effects. The general linear model provides a significantly better fit than chance (F=3.04, p<0.03). Note that these numbers were obtained by averaging across all neurons in the sample, not just those that are modulated significantly. By combining effects across all neurons, we remain agnostic about the way in which information is integrated by downstream neurons. Had we analyzed only those neurons with significant modulation these parameter estimates would be larger.
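As a worked illustration of these population averages, and assuming Equation 1 is the standard two-factor linear model with an interaction term, the predicted mean responses (spikes/sec) in the four attention conditions would be:

$$
\begin{aligned}
s=0,\ f=0:&\quad 18.38\\
s=1,\ f=0:&\quad 18.38 + 2.34 = 20.72\\
s=0,\ f=1:&\quad 18.38 + 1.57 = 19.95\\
s=1,\ f=1:&\quad 18.38 + 2.34 + 1.57 + 0.75 = 23.04
\end{aligned}
$$

A purely additive combination would predict 22.29 spikes/sec when both forms of attention are engaged; the interaction term accounts for the remaining 0.75 spikes/sec. These values describe the average across neurons and need not match any individual cell.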

Figure 3.

Effects of spatial and feature-based attention and their interaction for all neurons. A. Histogram of GLM parameters for spatial attention for all 110 neurons in the sample. Positive numbers indicate neurons whose firing rate is enhanced by spatial attention. Black bars indicate neurons with significant attentional modulation. Vertical gray line indicates zero; vertical dashed line indicates mean of distribution. B. Histogram of GLM parameters for feature-based attention, format same as panel A. C. Histogram of GLM parameters for the interaction of spatial and feature-based attention. Neurons to the right of zero have a super-additive interaction. The distribution is shifted significantly to the right. D. Scatter plot showing relative size of parameters for spatial and feature-based attention. These two variables are significantly correlated (correlation coefficient 0.33, p<0.01, bootstrapped correlation test).

DISCUSSION

We have shown that spatial and feature-based attention both affect the responses of single V4 neurons and that their interaction can be described by a general linear model with a small super-additive interaction term. These results demonstrate that the effects of spatial and feature-based attention on responses of single visual neurons are independent. The roughly additive nature of the two forms of attention we observe is consistent with the results of previous studies using more indirect measures (Doherty et al., 2005, Maljkovic & Nakayama, 1996, McAdams & Maunsell, 2000, Saenz & Boynton, 2006, Treue & Martinez Trujillo, 1999). This additivity is consistent with the idea that spatial and feature-based attention are controlled by separate neural systems (Doherty et al., 2005). The super-additive interaction between spatial and feature-based attention has not been reported in previous studies (Doherty et al., 2005, Maljkovic & Nakayama, 1996, McAdams & Maunsell, 2000, Saenz & Boynton, 2006, Treue & Martinez Trujillo, 1999). This interaction may reflect an increase in the excitability of V4 neurons conferred by one form of attention that increases the influence of the other (for similar ideas see O’Donnell, 2003, Yu & Dayan, 2005).

If spatial and feature-based attention are mediated by distinct mechanisms, we would expect their combined effects to be roughly independent. If, on the other hand, a single mechanism mediates spatial and feature-based attention, we might expect strong interactions. For example, a single control mechanism may have differential access to different neurons, leading to a strong correlation between the magnitudes of spatial and feature-based attention in single neurons. This circumstance would lead to a strong super-additive interaction. Another possibility is that a single control mechanism may affect neuronal responses by allocating either spatial or feature-based attention, but not both. This circumstance would lead to a sub-additive interaction. Our observation that the interaction between the effects of spatial and feature-based attention is weakly super-additive makes these possibilities unlikely.

The present results build upon those presented in a previous report from our group (Hayden & Gallant, 2005). The earlier report (using the same dataset as that analyzed here) demonstrated that spatial and feature-based attention have different timecourses, suggesting that they are mediated by separate processes. That paper also reported a significant interaction between these two forms of attention. However, the earlier report did not evaluate the interaction to determine its form or magnitude. In the current report we show that this significant interaction is small and super-additive. Thus, this report provides further support for the idea that spatial and feature-based attention are mediated by discrete cortical substrates, and suggests that both forms of attention act by enhancing the excitability of visual neurons.

The interaction between spatial and feature-based attention demonstrates that the effect of each form of attention depends upon the state of the other. This dependence underscores the importance of carefully controlling all forms of attention in attention experiments. Synergistic interactions between various forms of attention are likely to be important in naturalistic situations involving simultaneous allocation and control of multiple forms of attention.

The present results suggest that spatial and feature-based attention are controlled by separate cortical networks. However, the anatomical bases of these control networks remain unknown (Kastner & Ungerleider, 2000). It has been argued that spatial attention is a by-product of motor planning that is controlled by structures such as the FEF (Moore, Armstrong & Fallah, 2003, Moore & Fallah, 2004). However, it is difficult to see how feature-based attention could reflect motor planning. It is more likely that feature-based attention is controlled by structures involved in working memory for features and objects. For example, it is possible that dorsal pre-frontal regions control spatial attention while ventral pre-frontal regions control feature-based attention. The present results are therefore consistent with the idea that spatial and feature-based attention reflect distinct cognitive processes.

Acknowledgments

We thank J. Mazer for development of the neurophysiology software suite. J. Mazer, S. David, and K. Gustavsen provided invaluable advice on experimental design and data analysis. This work was supported by grants to JLG from the NEI and NIMH.

Footnotes

The authors declare no competing financial interests.


References

- Blaser E, Pylyshyn ZW, Holcombe AO. Tracking an object through feature space. Nature. 2000;408(6809):196–199. doi: 10.1038/35041567.

- Connor CE, Preddie DC, Gallant JL, Van Essen DC. Spatial attention effects in macaque area V4. J Neurosci. 1997;17(9):3201–3214. doi: 10.1523/JNEUROSCI.17-09-03201.1997.

- Corbetta M, Shulman GL, Miezin FM, Petersen SE. Superior parietal cortex activation during spatial attention shifts and visual feature conjunction. Science. 1995;270(5237):802–805. doi: 10.1126/science.270.5237.802.

- David SV, Vinje WE, Gallant JL. Natural stimulus statistics alter the receptive field structure of V1 neurons. J Neurosci. 2004;24(31):6991–7006. doi: 10.1523/JNEUROSCI.1422-04.2004.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Doherty JR, Rao A, Mesulam MM, Nobre AC. Synergistic effect of combined temporal and spatial expectations on visual attention. J Neurosci. 2005;25(36):8259–8266. doi: 10.1523/JNEUROSCI.1821-05.2005.

- Egeth HE, Yantis S. Visual attention: control, representation, and time course. Annu Rev Psychol. 1997;48:269–297. doi: 10.1146/annurev.psych.48.1.269.

- Fink GR, Dolan RJ, Halligan PW, Marshall JC, Frith CD. Space-based and object-based visual attention: shared and specific neural domains. Brain. 1997;120(Pt 11):2013–2028. doi: 10.1093/brain/120.11.2013.

- Ghose GM, Maunsell JH. Attentional modulation in visual cortex depends on task timing. Nature. 2002;419(6907):616–620. doi: 10.1038/nature01057.

- Giesbrecht B, Woldorff MG, Song AW, Mangun GR. Neural mechanisms of top-down control during spatial and feature attention. Neuroimage. 2003;19(3):496–512. doi: 10.1016/s1053-8119(03)00162-9.

- Hayden BY, Gallant JL. Time course of attention reveals different mechanisms for spatial and feature-based attention in area V4. Neuron. 2005;47(5):637–643. doi: 10.1016/j.neuron.2005.07.020.

- Hillyard SA, Munte TF. Selective attention to color and location: an analysis with event-related brain potentials. Percept Psychophys. 1984;36(2):185–198. doi: 10.3758/bf03202679.

- Kastner S, Ungerleider LG. Mechanisms of visual attention in the human cortex. Annu Rev Neurosci. 2000;23:315–341. doi: 10.1146/annurev.neuro.23.1.315.

- Liu T, Slotnick SD, Serences JT, Yantis S. Cortical mechanisms of feature-based attentional control. Cereb Cortex. 2003;13(12):1334–1343. doi: 10.1093/cercor/bhg080.

- Maljkovic V, Nakayama K. Priming of pop-out: II. The role of position. Percept Psychophys. 1996;58(7):977–991. doi: 10.3758/bf03206826.

- Mazer JA, Gallant JL. Goal-related activity in V4 during free viewing visual search. Evidence for a ventral stream visual salience map. Neuron. 2003;40(6):1241–1250. doi: 10.1016/s0896-6273(03)00764-5.

- McAdams CJ, Maunsell JH. Effects of attention on the reliability of individual neurons in monkey visual cortex. Neuron. 1999;23(4):765–773. doi: 10.1016/s0896-6273(01)80034-9.

- McAdams CJ, Maunsell JH. Attention to both space and feature modulates neuronal responses in macaque area V4. J Neurophysiol. 2000;83(3):1751–1755. doi: 10.1152/jn.2000.83.3.1751.

- Moore T, Armstrong KM, Fallah M. Visuomotor origins of covert spatial attention. Neuron. 2003;40(4):671–683. doi: 10.1016/s0896-6273(03)00716-5.

- Moore T, Fallah M. Microstimulation of the frontal eye field and its effects on covert spatial attention. J Neurophysiol. 2004;91(1):152–162. doi: 10.1152/jn.00741.2002.

- Motter BC. Focal attention produces spatially selective processing in visual cortical areas V1, V2, and V4 in the presence of competing stimuli. J Neurophysiol. 1993;70(3):909–919. doi: 10.1152/jn.1993.70.3.909.

- Motter BC. Neural correlates of attentive selection for color or luminance in extrastriate area V4. J Neurosci. 1994;14(4):2178–2189. doi: 10.1523/JNEUROSCI.14-04-02178.1994.

- Nobre AC, Allison T, McCarthy G. Modulation of human extrastriate visual processing by selective attention to colours and words. Brain. 1998;121(Pt 7):1357–1368. doi: 10.1093/brain/121.7.1357.

- O’Donnell P. Dopamine gating of forebrain neural ensembles. Eur J Neurosci. 2003;17(3):429–435. doi: 10.1046/j.1460-9568.2003.02463.x.

- Posner MI, Dehaene S. Attentional networks. Trends Neurosci. 1994;17(2):75–79. doi: 10.1016/0166-2236(94)90078-7.

- Raz A, Buhle J. Typologies of attentional networks. Nat Rev Neurosci. 2006;7(5):367–379. doi: 10.1038/nrn1903.

- Reynolds JH, Chelazzi L. Attentional modulation of visual processing. Annu Rev Neurosci. 2004;27:611–647. doi: 10.1146/annurev.neuro.26.041002.131039.

- Reynolds JH, Chelazzi L, Desimone R. Competitive mechanisms subserve attention in macaque areas V2 and V4. J Neurosci. 1999;19(5):1736–1753. doi: 10.1523/JNEUROSCI.19-05-01736.1999.

- Roelfsema PR, Lamme VA, Spekreijse H. Object-based attention in the primary visual cortex of the macaque monkey. Nature. 1998;395(6700):376–381. doi: 10.1038/26475.

- Saenz M, Boynton GM. Vision Sciences Society. Sarasota, FL: 2006. Combined Effects of Spatial and Feature-Based Attention in Human Visual Cortex.

- Serences JT, Schwarzbach J, Courtney SM, Golay X, Yantis S. Control of object-based attention in human cortex. Cereb Cortex. 2004;14(12):1346–1357. doi: 10.1093/cercor/bhh095.

- Treue S. Neural correlates of attention in primate visual cortex. Trends Neurosci. 2001;24(5):295–300. doi: 10.1016/s0166-2236(00)01814-2.

- Treue S, Martinez Trujillo JC. Feature-based attention influences motion processing gain in macaque visual cortex. Nature. 1999;399(6736):575–579. doi: 10.1038/21176.

- Vandenberghe R, Duncan J, Dupont P, Ward R, Poline JB, Bormans G, Michiels J, Mortelmans L, Orban GA. Attention to one or two features in left or right visual field: a positron emission tomography study. J Neurosci. 1997;17(10):3739–3750. doi: 10.1523/JNEUROSCI.17-10-03739.1997.

- Wojciulik E, Kanwisher N. The generality of parietal involvement in visual attention. Neuron. 1999;23(4):747–764. doi: 10.1016/s0896-6273(01)80033-7.

- Yu AJ, Dayan P. Uncertainty, neuromodulation, and attention. Neuron. 2005;46(4):681–692. doi: 10.1016/j.neuron.2005.04.026.