Abstract

Hypothesis

Image-guided cochlear implant (CI) programming can improve hearing outcomes for pediatric CI recipients.

Background

CIs have been highly successful for children with severe-to-profound hearing loss, offering potential for mainstreamed education and auditory-oral communication. Despite this, a significant number of recipients still experience poor speech understanding and language delay, and even among the best performers, restoration of normal auditory fidelity is rare. While significant research efforts have been devoted to improving stimulation strategies, few developments have led to significant hearing improvement over the past two decades. Recently introduced techniques for image-guided CI programming (IGCIP) permit creating patient-customized CI programs by making it possible, for the first time, to estimate the position of implanted CI electrodes relative to the nerves they stimulate using CT images. This approach permits identifying electrodes with high levels of stimulation overlap and deactivating them in a patient’s map. Prior studies have shown that IGCIP can significantly improve hearing outcomes for adults with CIs.

Methods

The IGCIP technique was tested in 21 ears of 18 pediatric CI recipients. Participants ranged in age from 5 to 17 years and had long-term experience with their CI (5 months to 13 years). Speech understanding was assessed after approximately 4 weeks of experience with the IGCIP map.

Results

Using a two-tailed Wilcoxon signed-rank test, statistically significant improvement (p<0.05) was observed for word and sentence recognition in quiet and noise as well as pediatric self-reported quality of life (QOL) measures.

Conclusion

Our results indicate that image-guidance significantly improves hearing and QOL outcomes for pediatric CI recipients.

Keywords: Cochlear implant, stimulation overlap, channel interaction, customized programming, pediatric, children

Introduction

Cochlear implants (CIs) are surgically implanted neural prosthetic devices used to treat severe-to-profound hearing loss (1). CIs use implanted electrodes to stimulate spiral ganglion (SG) cells to induce hearing sensation. CIs have been highly successful for children with severe-to-profound hearing loss, offering potential for mainstreamed education and auditory-oral communication. Despite this, a significant number of recipients still experience poor speech understanding and language delay, and, even among the best performers, restoration to normal auditory fidelity is rare (2-3). This is due, in part, to several well-known issues with electrical stimulation that prevent CIs from accurately simulating natural acoustic hearing. Electrode interaction is an example of one such issue that, despite significant improvements made by advances in hardware and signal processing, remains challenging (e.g., (4-5)). In natural hearing, a nerve pathway is activated when the characteristic frequency associated with that pathway is present in the incoming sound. Neural pathways are tonotopically ordered by decreasing characteristic frequency along the length of the cochlear duct, and this finely tuned spatial organization is well known (6). CI electrode arrays are designed such that each electrode should stimulate nerve pathways corresponding to a pre-defined spectral bandwidth. In surgery, however, the array is blindly threaded into the cochlea with its insertion path guided only by the walls of the spiral-shaped intra-cochlear cavities. Since the final positions of the electrodes are generally unknown, the only option when programming has been to assume the electrodes are situated in the correct scala with relatively uniform and independent electrode-to-neuron activation patterns across the array. 
However, many experiments, such as those involving varying the number of active channels (7-8), have indicated that electrode activation patterns are not independent, and that electrode interaction indeed limits or hinders outcomes for most if not all CI patients. Several techniques have been proposed to assess patient-specific electrode interactions, e.g., by using electrically evoked compound action potentials (9) or focused stimulation strategies (10), but programming strategies that account for interactions measured using such techniques have not come into widespread clinical use.

Recently, image processing techniques have been introduced that make it possible, for the first time, to estimate the position of implanted CI electrodes relative to the spiral ganglion nerves they stimulate using CT images (11-12). These methods permit identifying electrodes that are likely to be causing high levels of electrode interaction. Once identified, these electrodes can be deactivated in a patient’s map. We refer to this process as Image-Guided Cochlear Implant Programming (IGCIP) (13). IGCIP has been shown to lead to significant improvement in hearing outcomes for adults with CIs (14). IGCIP could be even more beneficial for pediatric implant recipients, who are developing speech and language and are therefore more reliant on bottom-up processing than postlingually deafened adults (e.g., (15)). Any new technique or technology that can improve hearing outcomes at an earlier age has the potential to significantly improve a child’s development of speech, language, literacy, academic performance, and overall quality of life (QOL) (16-18). In this article, we report the results of the first study quantifying the effect of IGCIP in the pediatric population.

Materials and methods

Demographic information about the research participants is shown in Table 1. Participants were 18 pediatric CI recipients ranging in age from 5 to 17 years (mean 10 years) with a mean duration of CI experience of 4.5 years. Fifteen of the 18 children were bilaterally implanted (Bil); the remaining 3 used a bimodal (Bimod) hearing configuration. Three of the bilateral recipients underwent experimentation for each ear sequentially, for a total of 21 ears. Speech understanding and auditory function were assessed using monosyllabic words from the Lexical Neighborhood Test (LNT) (19), BabyBio sentences in quiet and at +10 dB and +5 dB signal-to-noise ratio (SNR) (20), the BKB-SIN (21), and spectral modulation detection (SMD) measured with the quick SMD (QSMD) test (22). All measures were administered with the clinical map and again with the IGCIP map after approximately 4 weeks of experience with the IGCIP experimental map. The change in performance between the two time points characterizes the benefit of the IGCIP map relative to the clinical map. Group differences between the pre- and post-remapping conditions were evaluated with the two-tailed Wilcoxon signed-rank test (23) applied to the paired scores, with significance set at p<0.05.
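The paired pre/post comparison can be illustrated with a minimal, dependency-free sketch of the two-tailed Wilcoxon signed-rank test. It assumes that zero differences are discarded (Wilcoxon's original convention) and that tied absolute differences receive average ranks, and it computes an exact p-value by counting all sign assignments. The function names and example scores are hypothetical and are not the study's data or analysis code; library routines such as `scipy.stats.wilcoxon` provide the same test with more options for zero handling and large-sample approximations.

```python
def average_ranks(values):
    """1-based ranks of |values|; tied magnitudes share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: abs(values[i]))
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied absolute values starting at i.
        while j + 1 < len(order) and abs(values[order[j + 1]]) == abs(values[order[i]]):
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(pre, post):
    """Exact two-tailed Wilcoxon signed-rank test for paired scores.

    Returns (W_plus, p_value). Zero differences are dropped before ranking.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    ranks = average_ranks(diffs)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)

    # Doubling the ranks turns tied half-integer ranks into integers,
    # so the null distribution can be counted with integer sums.
    doubled = [round(2 * r) for r in ranks]
    total = sum(doubled)
    counts = [0] * (total + 1)  # counts[s]: sign patterns whose doubled W+ equals s
    counts[0] = 1
    for r in doubled:
        for s in range(total, r - 1, -1):
            counts[s] += counts[s - r]

    # The null distribution is symmetric about total/2; count every outcome
    # at least as far from the centre as the observed statistic.
    centre = total / 2
    observed_dev = abs(round(2 * w_plus) - centre)
    extreme = sum(c for s, c in enumerate(counts) if abs(s - centre) >= observed_dev)
    return w_plus, min(1.0, extreme / 2 ** n)


# Hypothetical percent-correct scores for six ears (not study data).
pre = [40, 52, 35, 60, 48, 55]
post = [48, 50, 44, 70, 60, 62]
w, p = wilcoxon_signed_rank(pre, post)
print(f"W+ = {w}, two-tailed p = {p:.4f}")
```

With only six pairs, even five improvements out of six give p = 0.0625, which illustrates why group sizes matter for this test.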

Table 1.

Demographics and individual results for 18 pediatric participants (21 experimental ears). For each experiment, the table reports the tested ear (Side), sex (Gender), device configuration (Config.: bilateral, Bil, or bimodal, Bimod), age, years of CI use in the tested ear (CI use) and in the contralateral CI ear (CI use in contral. ear), the number of electrodes deactivated for IGCIP (# Elect Deact), the percentage of the electrode array's available electrodes that were deactivated (% Elect Deact), and whether the participant elected to keep the experimental map (Y) or not (N). Also reported are the total number of test scores obtained (Total # Scores) and the number of scores that improved (# Imprv) or declined (# Declin), with the number of those changes that were significant in parentheses.

| Exp. # | Subj. # | Side | Gender | Config. | Age (yrs) | CI use (yrs) | CI use in contral. ear (yrs) | # Elect Deact | % Elect Deact | Kept Map | # Imprv (Signif) Scores | # Declin (Signif) Scores | Total # Scores |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | L | M | Bil | 12.2 | 2.3 | 9.4 | 4 | 25 | Y | 7(4) | 3(0) | 10 |
| 2 | 2 | L | F | Bil | 14.1 | 3.3 | 7.5 | 14 | 64 | Y | 4(3) | 3(0) | 8 |
| 3 | 3 | L | F | Bil | 12.7 | 5.4 | 5.4 | 9 | 41 | Y | 5(0) | 5(0) | 10 |
| 4 | 4 | L | M | Bil | 5.6 | 4.0 | 4.0 | 5 | 23 | N | 5(1) | 3(0) | 10 |
| 5 | 5 | L | F | Bil | 6.3 | 4.7 | 5.2 | 7 | 32 | Y | 5(2) | 5(0) | 10 |
| 6 | 6 | L | F | Bil | 7.3 | 5.7 | 5.7 | 7 | 32 | Y | 6(1) | 2(0) | 10 |
| 7 | 7 | L | F | Bil1 | 3.2 | 6.6 | 10.9 | 2 | 9 | Y | 9(4) | 1(0) | 10 |
| 8 | 8 | L | F | Bil | 17.0 | 0.4 | 7.8 | 8 | 36 | Y | 8(6) | 2(0) | 10 |
| 9 | 9 | L | M | Bil | 10.9 | 1.5 | 9.9 | 5 | 31 | N | 5(3) | 1(0) | 9 |
| 10 | 10 | L | F | Bil | 7.4 | 3.8 | 6.1 | 6 | 38 | N | 0(0) | 3(0) | 3 |
| 11 | 11 | L | F | Bimod | 7.4 | 3.1 | n/a | 8 | 50 | Y | 6(3) | 1(0) | 8 |
| 12 | 12 | L | M | Bil | 6.0 | 4.3 | 2.4 | 10 | 45 | Y | 3(1) | 0(0) | 3 |
| 13 | 13 | L | M | Bimod | 17.7 | 13.7 | n/a | 5 | 31 | Y | 5(5) | 0(0) | 5 |
| 14 | 14 | R | M | Bil | 12.5 | 4.4 | 5.7 | 10 | 45 | Y | 4(1) | 3(1) | 8 |
| 15 | 15 | R | F | Bimod | 9.1 | 3.3 | n/a | 10 | 45 | Y | 4(0) | 4(1) | 10 |
| 16 | 4 | R | M | Bil | 5.6 | 3.9 | 3.9 | 10 | 45 | Y | 5(1) | 5(1) | 10 |
| 17 | 16 | R | M | Bil | 9.4 | 3.0 | 1.3 | 6 | 27 | Y | 3(1) | 3(0) | 10 |
| 18 | 3 | R | F | Bil | 12.7 | 5.4 | 5.4 | 10 | 45 | Y | 4(1) | 3(1) | 10 |
| 19 | 17 | R | F | Bil | 8.5 | 3.7 | 3.3 | 4 | 18 | Y | 10(3) | 0(0) | 10 |
| 20 | 12 | R | M | Bil | 6.1 | 2.5 | 4.4 | 9 | 41 | Y | 3(1) | 0(0) | 3 |
| 21 | 18 | R | M | Bil | 14.9 | 9.2 | 11.4 | 5 | 31 | Y | 1(1) | 0(0) | 2 |
| Average | | | | | 10.3 | 4.5 | 6.1 | 7.3 | 36.0 | 85.7% | | | |
| Min | | | | | 5.6 | 0.4 | 1.3 | 2 | 9.1 | | | | |
| Max | | | | | 17.7 | 13.7 | 11.4 | 14 | 63.6 | | | | |
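In Table 1, a cell such as `7(4)` means that seven scores changed in that direction, four of them significantly. The per-ear summary figures quoted in the Results can be recomputed from this notation; the short Python sketch below copies the three score columns of Table 1 and tallies total scores, significant improvements, and ears with at least one significant improvement or decline. The helper names (`parse_cell`, `summarize`, `percent`) are hypothetical choices for illustration, not part of the study's software.

```python
import re

# Columns "# Imprv (Signif) Scores", "# Declin (Signif) Scores" and
# "Total # Scores" of Table 1, in experiment order 1-21.
IMPROVED = ["7(4)", "4(3)", "5(0)", "5(1)", "5(2)", "6(1)", "9(4)", "8(6)", "5(3)", "0(0)", "6(3)",
            "3(1)", "5(5)", "4(1)", "4(0)", "5(1)", "3(1)", "4(1)", "10(3)", "3(1)", "1(1)"]
DECLINED = ["3(0)", "3(0)", "5(0)", "3(0)", "5(0)", "2(0)", "1(0)", "2(0)", "1(0)", "3(0)", "1(0)",
            "0(0)", "0(0)", "3(1)", "4(1)", "5(1)", "3(0)", "3(1)", "0(0)", "0(0)", "0(0)"]
TOTAL = [10, 8, 10, 10, 10, 10, 10, 10, 9, 3, 8, 3, 5, 8, 10, 10, 10, 10, 10, 3, 2]

CELL = re.compile(r"(\d+)\((\d+)\)")


def parse_cell(cell):
    """Split a cell such as '7(4)' into (scores changed, significant changes)."""
    match = CELL.fullmatch(cell)
    if match is None:
        raise ValueError(f"unexpected cell format: {cell!r}")
    return int(match.group(1)), int(match.group(2))


def summarize(improved, declined, totals):
    """Tally significant changes across all experiments (ears)."""
    sig_up = [parse_cell(c)[1] for c in improved]
    sig_down = [parse_cell(c)[1] for c in declined]
    return {
        "ears": len(totals),
        "total_scores": sum(totals),
        "significant_improvements": sum(sig_up),
        "ears_with_significant_improvement": sum(1 for s in sig_up if s > 0),
        "ears_with_significant_decline": sum(1 for s in sig_down if s > 0),
    }


def percent(part, whole):
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * part / whole, 1)


summary = summarize(IMPROVED, DECLINED, TOTAL)
print(summary)
print(percent(summary["significant_improvements"], summary["total_scores"]))   # 24.9
print(percent(summary["ears_with_significant_improvement"], summary["ears"]))  # 85.7
print(percent(summary["ears_with_significant_decline"], summary["ears"]))      # 19.0
```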

The IGCIP maps were created with the process reported in (13), using a software suite that implements a series of automated image analysis techniques. In brief, the positions of the implanted electrodes relative to the modiolus are determined from pre- and post-implantation CT scans. The electrodes are localized in the post-implantation CT using the approach described in (13). The modiolus is localized in the pre-implantation CT using the automated image analysis techniques proposed in (11) because, unlike in the post-implantation CT, anatomical structures there are not distorted by reconstruction artifacts from the metallic electrode array. The pre- and post-implantation CTs are then aligned so that electrode positions can be quantified relative to the modiolus; this approach for quantifying electrode position has been validated in histological studies (24). The spatial relationship between the electrodes and the modiolus is analyzed to estimate each electrode's spread of excitation, with electrodes positioned farther from the modiolus assumed to create greater spread, and the resulting areas of stimulation overlap across electrodes. The active electrode configuration is then selected as the one that includes the largest number of active electrodes while avoiding deleterious levels of stimulation overlap, as described in (13); this strategy has since been formulated as an automated objective function (25). The approach relies on parameters that describe how much spread of excitation an electrode produces given its distance to the modiolus, and on parameters that define how much stimulation overlap is deleterious. In previous studies and in the present one, these parameters were chosen subjectively by the authors and fixed for all experiments. Ongoing work aims to find optimized values for these parameters.
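The selection step can be illustrated with a deliberately simplified, hypothetical model; it is not the algorithm of (13) or the objective function of (25). The sketch assumes that (i) each electrode excites an interval of the spiral ganglion centered on its nearest site, with a half-width that grows linearly with electrode-to-modiolus distance (the `base_mm` and `slope` values are made up), (ii) overlap between two electrodes is the shared length divided by the narrower interval, and (iii) only neighboring active electrodes are checked against a hypothetical `max_overlap` threshold. Under these assumptions, finding the largest admissible set of active electrodes becomes a longest-chain problem that dynamic programming solves directly. The names `Electrode`, `excitation_interval`, `overlap`, and `select_active` are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Electrode:
    number: int          # electrode label on the array
    site_mm: float       # hypothetical: nearest spiral-ganglion site (mm along the SG)
    distance_mm: float   # hypothetical: electrode-to-modiolus distance (mm)


def excitation_interval(e, base_mm=0.5, slope=1.0):
    """Hypothetical linear spread model: farther electrodes excite wider regions."""
    half_width = base_mm + slope * e.distance_mm
    return e.site_mm - half_width, e.site_mm + half_width


def overlap(a, b, **model):
    """Shared extent of two excitation intervals, relative to the narrower one."""
    lo_a, hi_a = excitation_interval(a, **model)
    lo_b, hi_b = excitation_interval(b, **model)
    shared = max(0.0, min(hi_a, hi_b) - max(lo_a, lo_b))
    return shared / min(hi_a - lo_a, hi_b - lo_b)


def select_active(electrodes, max_overlap=0.5, **model):
    """Largest chain of electrodes (ordered by SG site) whose neighbouring
    active members overlap by at most max_overlap; returns active labels."""
    es = sorted(electrodes, key=lambda e: e.site_mm)
    if not es:
        return []
    best = [1] * len(es)   # size of the best chain ending at electrode i
    prev = [-1] * len(es)  # predecessor of electrode i in that chain
    for i in range(len(es)):
        for j in range(i):
            if overlap(es[j], es[i], **model) <= max_overlap and best[j] + 1 > best[i]:
                best[i], prev[i] = best[j] + 1, j
    i = max(range(len(es)), key=best.__getitem__)
    active = []
    while i != -1:
        active.append(es[i].number)
        i = prev[i]
    return sorted(active)


# Hypothetical 5-electrode example: electrode 3 sits far from the modiolus.
array = [Electrode(1, 0.0, 0.2), Electrode(2, 1.5, 0.2), Electrode(3, 3.0, 1.2),
         Electrode(4, 4.5, 0.2), Electrode(5, 6.0, 0.2)]
active = select_active(array)
print("active:", active, "deactivated:", sorted({e.number for e in array} - set(active)))
```

In this toy example, electrode 3's wide excitation overlaps both neighbors by about 64%, so deactivating it leaves a larger admissible set (four electrodes) than keeping it (three).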
Electrodes not included in the selected configuration are then deactivated in the patient’s map. Conveniently, this approach does not conflict with existing signal processing strategies, so reprogramming does not require major processing changes. In our experiments, after the identified electrodes were deactivated, the sound spectrum was automatically reallocated to the remaining active electrodes. In addition, the stimulation rate was manually adjusted to match that of the patient’s original clinical map, because 2 of the 3 FDA-approved devices would otherwise automatically increase the rate when electrodes are deactivated.
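Reallocating the spectrum to fewer channels can be sketched as dividing a fixed analysis range into contiguous, logarithmically spaced bands, one per remaining electrode. The 250-8000 Hz range and the equal log spacing are hypothetical; each manufacturer's clinical software uses its own frequency-allocation tables, which this sketch does not reproduce. The function name `reallocate_bands` is illustrative, and the electrode list is assumed to be ordered from apical (low frequency) to basal (high frequency).

```python
def reallocate_bands(active_electrodes, f_low=250.0, f_high=8000.0):
    """Split [f_low, f_high] into contiguous log-spaced bands, one per active
    electrode. The list is assumed ordered apical (low) -> basal (high)."""
    n = len(active_electrodes)
    if n == 0:
        raise ValueError("at least one active electrode is required")
    ratio = (f_high / f_low) ** (1.0 / n)  # equal bandwidth in octaves
    edges = [f_low * ratio ** k for k in range(n + 1)]
    edges[-1] = f_high  # pin the top edge against floating-point drift
    return {e: (edges[k], edges[k + 1]) for k, e in enumerate(active_electrodes)}


# Hypothetical 5-electrode array with electrode 3 deactivated.
clinical = reallocate_bands([1, 2, 3, 4, 5])
igcip = reallocate_bands([1, 2, 4, 5])
for e, (lo, hi) in igcip.items():
    print(f"electrode {e}: {lo:7.1f}-{hi:7.1f} Hz "
          f"(was {clinical[e][0]:7.1f}-{clinical[e][1]:7.1f} Hz)")
```

Each remaining electrode receives a wider band than before, so the full input spectrum is still conveyed after deactivation.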

In our experiments, all speech and non-speech stimuli were presented at a calibrated level of 60 dBA from a single loudspeaker positioned at 0° azimuth at a distance of 1 meter. Estimates of spectral resolution were obtained using the QSMD task, a non-speech psychoacoustic measure of spectral resolution, i.e., the ability of the auditory system to decompose a complex spectral stimulus into its individual frequency components (22, 26-29). To assess quality of life, each participant completed the child version, and his or her primary caregiver completed the parent version, of the Pediatric Quality of Life Inventory (PedsQL) version 4.0 core scales (30). The PedsQL is a standardized measure of generic quality of life composed of four subscales: (1) physical functioning, (2) emotional functioning, (3) social functioning, and (4) school functioning. For each item, the child and the primary caregiver were asked how much of a problem it had been over the past month. Study data were collected and managed using the REDCap (Research Electronic Data Capture) secure data management tools (31).
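For readers unfamiliar with the PedsQL, the sketch below follows the published 4.0 scoring convention as we understand it: each item is answered on a 0-4 scale (0 = never a problem, 4 = almost always a problem), reverse-scored and linearly transformed to 0-100 (0 = 100, 1 = 75, 2 = 50, 3 = 25, 4 = 0), and a scale score is the mean of the answered items, left uncomputed when more than half of that scale's items are missing. The function names and example responses are hypothetical, and applying the same missing-data rule to the pooled total is our assumption; the official PedsQL scoring manual should govern actual use.

```python
def transform(response):
    """Reverse-score one 0-4 PedsQL response onto 0-100 (0=100, ..., 4=0)."""
    if response is None:  # item left unanswered
        return None
    if response not in (0, 1, 2, 3, 4):
        raise ValueError(f"response out of range: {response!r}")
    return 100 - 25 * response


def scale_score(responses):
    """Mean of the transformed items, or None if more than half are missing."""
    values = [transform(r) for r in responses]
    answered = [v for v in values if v is not None]
    if not answered or 2 * len(answered) < len(values):
        return None
    return sum(answered) / len(answered)


def total_score(scales):
    """Pooled mean over every answered item (same missing-data rule assumed)."""
    return scale_score([r for items in scales.values() for r in items])


# Hypothetical child-report responses (not study data); None marks a skipped item.
child = {
    "physical": [0, 1, 0, 0, 1, 0, 2, 0],
    "emotional": [1, 2, 1, 0, 1],
    "social": [2, 1, None, 1, 0],
    "school": [1, 1, 2, 2, 1],
}
for name, items in child.items():
    print(name, scale_score(items))
print("total", round(total_score(child), 1))
```

Higher transformed scores indicate better quality of life, which is why improvement appears as an increase in Figure 3.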

Results

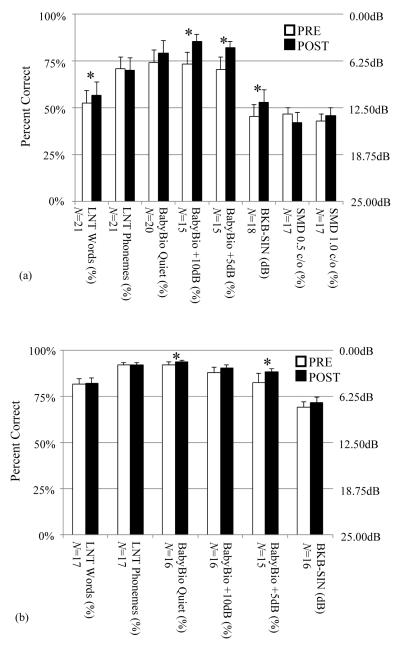

In Figure 1, the bar graphs show the mean and standard error of hearing performance scores across all participants in the pre- and post-remapping conditions, when testing the remapped ear alone (a) and in the best-aided condition (b). Below each bar graph, the number of scores in the dataset (N) is shown, and scores with a statistically significant difference between the pre- and post-remapping conditions are marked with an asterisk. N differs from plot to plot because some participants could not complete all testing due to time constraints or inability to understand the task; for example, one participant did not understand the QSMD task despite training and thus did not complete it for either ear. Because the BKB-SIN is scored as the SNR (in dB) yielding approximately 50% correct (SNR-50), BKB-SIN benefit is shown in dB along the right ordinate. The remaining measures were scored in percent correct, displayed along the left ordinate.
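Because a lower SNR-50 means better performance, one natural convention is to express BKB-SIN benefit as the pre-remapping SNR-50 minus the post-remapping SNR-50, so that positive values indicate improvement. The sketch below estimates SNR-50 from a descending-SNR list with the Spearman-Kärber formula, SNR-50 = start + step/2 - step × (words correct / words per level), and then computes that benefit. The starting SNR, step size, and words per level are hypothetical placeholders, as are the function names; the BKB-SIN's own scoring procedure should be used for actual scoring.

```python
def spearman_karber_snr50(start_snr_db, step_db, words_per_level, total_correct):
    """Estimate the SNR (dB) yielding 50% correct from a descending-SNR list."""
    return start_snr_db + step_db / 2 - step_db * total_correct / words_per_level


def snr50_benefit(pre_snr50_db, post_snr50_db):
    """Positive values mean the new map needed a lower SNR (better performance)."""
    return pre_snr50_db - post_snr50_db


# Hypothetical list: starts at +21 dB SNR, 3-dB steps, 3 scored words per level.
pre = spearman_karber_snr50(21.0, 3.0, 3, total_correct=15)   # 7.5 dB
post = spearman_karber_snr50(21.0, 3.0, 3, total_correct=18)  # 4.5 dB
print(f"benefit = {snr50_benefit(pre, post):.1f} dB")  # prints "benefit = 3.0 dB"
```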

Figure 1.

Pre- and post-remapping hearing performance scores when testing with the re-mapped ear alone (a) and with both ears in the best aided condition (b). Each bar and whisker indicate the average and standard error. Asterisks indicate significantly different results.

As shown in Figure 1a, performance on five of the six speech recognition measures improved on average when listening with the remapped ear alone. Improvements in LNT word recognition and in three measures of sentence recognition in noise (BabyBio +10 dB SNR, BabyBio +5 dB SNR, and BKB-SIN) were statistically significant. Little change was seen for LNT phonemes or for QSMD at 0.5 and 1 cycle/octave. Scores for the best-aided condition (bilateral CI or bimodal) are shown in Figure 1b, where improvements in sentence recognition in quiet (BabyBio quiet) and in noise (BabyBio +5 dB SNR) were statistically significant. Taken together, the two panels show the most consistent gains in sentence recognition in quiet and in noise, with little change in word and phoneme recognition in the best-aided condition. When the new IGCIP map was used together with the contralateral ear, the benefit to overall hearing performance, especially in noise, was clear even within this relatively short 4-week time frame.

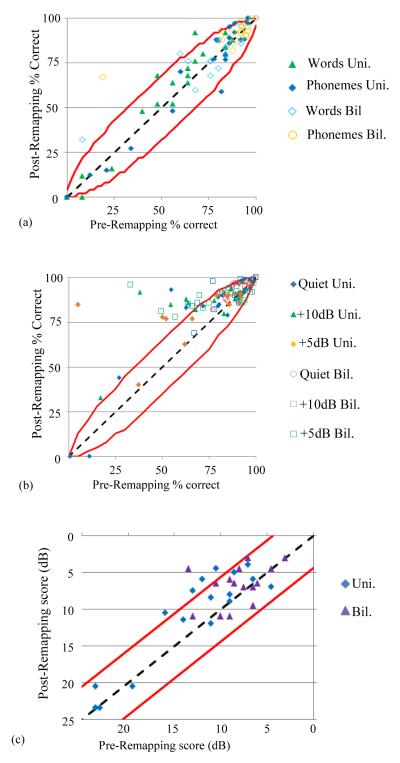

In Figure 2, pre- and post-remapping individual scores are shown as scatter plots for the LNT (panel a), BabyBio (panel b), and BKB-SIN (panel c) speech recognition tests, in both the unilateral (Uni.) condition with the remapped ear alone and the bilateral (Bil.) best-aided condition. The red lines indicate the published confidence intervals for individual scores on these tests (20, 32-33). Points above the upper line are scores that significantly improved at the individual level, points below the lower line significantly declined, and points between the lines show no significant difference. Four LNT word scores (panel a) significantly improved and one significantly declined; five BKB-SIN scores (panel c) significantly improved with none significantly declining; and 33 BabyBio sentence scores (panel b) significantly improved and 4 significantly declined post-remapping across quiet and noisy conditions. Table 1 lists, for each experiment, the number of scores shown in Figure 2 that improved (Imprv) and declined (Declin), with the number of significant changes in parentheses. As shown in Table 1, of all 169 test scores obtained across all participants, 103 (60.9%) improved, 42 (24.9%) of them significantly. In contrast, 47 (27.8%) scores declined, only 5 (3.0%) of them significantly. Of the 21 ears tested, 18 (85.7%) had at least one significantly improved score, whereas 4 (19.0%) had at least one significantly declined score. Significant improvements were observed across the range of participant ages and durations of CI experience, and across the range of the number and percentage of electrodes deactivated.
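The published critical-difference intervals behind the red lines (20, 32-33) come from binomial models of test scores. As a rough, self-contained approximation in the same spirit as Thornton and Raffin's binomial model (33), the sketch below applies a pooled two-proportion z-test that treats each scored word as an independent trial. It is a normal approximation, not the exact tables used to draw Figure 2; the function name, the `z_crit=1.96` threshold, and the 50-item list length in the example are assumptions.

```python
import math


def classify_change(pre_correct, post_correct, n_items, z_crit=1.96):
    """Label a pre/post percent-correct change as 'improved', 'declined' or
    'no significant change' using a pooled two-proportion z-test."""
    p_pre, p_post = pre_correct / n_items, post_correct / n_items
    pooled = (pre_correct + post_correct) / (2 * n_items)
    se = math.sqrt(2 * pooled * (1 - pooled) / n_items)
    if se == 0:  # both scores at floor or both at ceiling
        return "no significant change"
    z = (p_post - p_pre) / se
    if z > z_crit:
        return "improved"
    if z < -z_crit:
        return "declined"
    return "no significant change"


# Hypothetical 50-word list scores (not study data).
for pre, post in [(25, 36), (25, 30), (40, 25)]:
    print(pre, post, classify_change(pre, post, 50))
```

Because the binomial variance peaks near 50% correct, the same raw change is more likely to be significant for scores near floor or ceiling, which is why the red lines in Figure 2 bow outward in the middle of the range.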

Figure 2.

Pre- vs. post-remapping individual scores for the LNT (a), BabyBio (b), and BKB-SIN (c) measures. Confidence intervals are indicated by red lines.

Quality-of-life benefit, measured with the parent and child versions of the PedsQL, is shown in Figure 3. Parent-reported scores changed little, with only one subscale showing significant improvement. In contrast, every subscale of the child-reported scores improved, with two of the four subscales and the overall total score reaching statistical significance. Thus, the children themselves reported significantly better quality of life with the experimental IGCIP map.

Figure 3.

Pre- and post-remapping PedsQL parent-child qualitative survey results for N=18 experiments. Each bar and whisker indicate the average and standard error. Asterisks indicate significantly different results.

At the end of the study, participants elected to keep their experimental map as their primary map, with no changes, for 18 of 21 ears (85.7%), and many of these participants were strongly opposed to returning to their old maps. Many participants who did not show an individually significant speech recognition benefit nonetheless reported improved sound quality, which is reflected in the 85.7% rate of experimental map retention. Comments that participants made about the map were recorded and are shown in Table 2.

Table 2.

Subject comments immediately following the change to the IGCIP experimental map and at the follow up visit. Note that subjects were not required to give a comment regarding the change and, thus, comments were only recorded when provided.

| Exp. # | Immediate comments | Comments after long-term use |
|---|---|---|
| 1 | It's better. I hear more like this. | It's better. I want to keep it. |
| 2 | It sounds the same. | No difference reported. |
| 3 | It's good. I hear better. | I like it. It's good. |
| 4 | You don't sound like you. It's ok if they are both on. | It doesn't sound good. I like my other program. |
| 5 | You sound like a tricky little girl. You sound like a mouse. | |
| 6 | This is clearer. I like it. | It's good. It's a better sound. |
| 7 | It seems louder. | Please don't take this program away. |
| 8 | It is definitely clearer. I can hear everything you are saying without looking. | It has been like night and day with this program change. I used to hear static and noise all the time. Now it's almost completely gone. I love it. |
| 9 | It's different. | It's okay now that I am used to it but I think I like the old one better. |
| 10 | It sounds like a horn. Everyone sounds like boys. | |
| 11 | I like it! | It sounds good! |
| 12 | It sounds silly. | Now my left ear is my favorite ear |
| 13 | It's different, but I like it. | Speech is clearer. |
| 14 | I hear like other kids now! | It sounds a lot better. |
| 15 | It sounds funny. I don't like it. It doesn't sound normal. | It sounds normal. |
| 16 | It's good. | I like it. |
| 17 | It sounds better when I'm singing. | |
| 18 | I like the new program better. | |
| 19 | I like it. My voice sounds different. Clearer different. | I like it |
| 20 | | |
| 21 | I think it sounds better. | It helped, but I think my left CI is now too soft. |

Discussion

The results of our experiments show that the IGCIP approach leads to significantly improved speech recognition in quiet and in noise, as well as improved subjective hearing quality, and thus could improve hearing and QOL for many pediatric CI recipients. Improvement in background noise is especially important considering that speech recognition in noise is one of the most common problems even among the best-performing CI users (8). The improvement in sentence recognition in quiet and in noise also generally agrees with results previously reported for IGCIP in the adult population (13). Improving performance in noise may have even greater impact for pediatric CI recipients than for adults, for several reasons. Children are rarely in quiet listening environments: occupied classroom noise ranges from 48 dBA to 69 dBA, with mean levels approximating 65 dBA in early elementary classrooms (e.g., (34-37)). Also, Clark and Govett (38) reported that children's equivalent continuous sound level over 24 hours (Leq 24-hour) ranges from 87.3 dBA for all students to as high as 95.5 dBA for fifth graders. Moreover, FM systems are rarely used outside the classroom, which makes improved speech understanding in noise all the more valuable.

The information provided by image guidance appears to be critical to the improvements in speech recognition seen in our results, since electrode deactivation based on other criteria has been studied by many groups without consistently improving performance. For example, some groups have deactivated different numbers of electrodes in regular patterns and found little effect on average speech recognition as long as more than 4-8 electrodes remain active (7-8, 39). Other groups have deactivated electrodes based on psychoacoustic criteria, resulting in improvements in some aspects of speech recognition and declines in others (40-41). Our results demonstrate the benefit that the IGCIP deactivation strategy can provide for pediatric CI users.

Because our reprogramming strategy only requires deactivating electrodes, it is simple to integrate with existing sound processing strategies using the clinical software provided by CI manufacturers. Typically, when changes to a program are made, quantitative and qualitative hearing scores tend to favor the original program (42-44). It is therefore remarkable that the majority of participants in our experiments noted substantial improvement in sound quality immediately after reprogramming, and that these improvements are reflected in our quantitative results. These results are further significant because any advancement that improves speech recognition from an earlier age has the potential to substantially improve long-term QOL for children with severe-to-profound hearing loss, and longer experience with the new program may yield further gains in hearing performance. Future studies will explore whether using the IGCIP map from the point of initial activation permits better outcomes and a steeper trajectory to asymptotic performance for pediatric recipients. We believe that the benefit of IGCIP could be greatest for children who are still developing speech and language and are more reliant on bottom-up processing.

Acknowledgments

Sources of Funding: RHG serves on the Audiology Advisory Board for Advanced Bionics, Cochlear, and Med-El. RFL is a consultant for Advanced Bionics, Medtronic, and Ototronix. AJHW serves on the Audiology Advisory Board for Med-El. This work was supported in part by grants R01DC014037, R21DC012620, R01DC008408, and R01DC13117 from the National Institute on Deafness and Other Communication Disorders and UL1TR000445 from the National Center for Advancing Translational Sciences.

Footnotes

Conflicts of Interest

The content is solely the responsibility of the authors and does not necessarily represent the official views of these institutes.

References

- 1.Cochlear Implants. National Institute on Deafness and Other Communication Disorders. 2011. No. 11-4798.

- 2.Huber M, Kipman U. Cognitive skills and academic achievement of deaf children with cochlear implants. Otolaryngology--Head and Neck Surgery. 2012;147(4):763–772. doi: 10.1177/0194599812448352. [DOI] [PubMed] [Google Scholar]

- 3.Sarant JZ, Harris DC, Bennet LA. Academic Outcomes for School-Aged Children With Severe–Profound Hearing Loss and Early Unilateral and Bilateral Cochlear Implants. Journal of Speech, Language, and Hearing Research. 2015:1–16. doi: 10.1044/2015_JSLHR-H-14-0075. [DOI] [PubMed] [Google Scholar]

- 4.Fu QJ, Nogaki G. Noise susceptibility of cochlear implant users: The role of spectral resolution and smearing. J Assoc Res Otolaryngol. 2005;6(1):19–27. doi: 10.1007/s10162-004-5024-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Boex C, de Balthasar C, Kos MI, Pelizzone M. Electrical field interactions in different cochlear implant systems. J Acoust Soc Am. 2003;114:2049–2057. doi: 10.1121/1.1610451. [DOI] [PubMed] [Google Scholar]

- 6.Stakhovskaya O, Spridhar D, Bonham BH, Leake PA. Frequency Map for the Human Cochlear Spiral Ganglion: Implications for Cochlear Implants. J. Assoc. Res. Otol. 2007;8:220–33. doi: 10.1007/s10162-007-0076-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Fishman KE, Shannon RV, Slattery WH. Speech recognition as a function of the number of electrodes used in the SPEAK cochlear implant speech processor. J Speech Lang Hear Res. 1997;40(5):1201–15. doi: 10.1044/jslhr.4005.1201. [DOI] [PubMed] [Google Scholar]

- 8.Friesen LM, Shannon RV, Baskent D, Wang X. Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants. J. Acoust. Soc. Am. 2001;110(2):1150–63. doi: 10.1121/1.1381538. [DOI] [PubMed] [Google Scholar]

- 9.Hughes ML, Stille LJ. Psychophysical and physiological measures of electrical-field interaction in cochlear implants. J. Acoust. Soc. Am. 2009;125(1):247–60. doi: 10.1121/1.3035842. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Goldwyn JH, Bierer SM, Bierer JA. Modeling the electrode-neuron interface of cochlear implants: effects of neural survival, electrode placement, and the partial tripolar configuration. Hear Res. 2010;268(1-2):93–104. doi: 10.1016/j.heares.2010.05.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Noble JH, Gifford RH, Labadie RF, Dawant BM. Ayache N, et al., editors. Statistical Shape Model Segmentation and Frequency Mapping of Cochlear Implant Stimulation Targets in CT. MICCAI 2012, Part II, LNCS 7511. 2012:421–428. doi: 10.1007/978-3-642-33418-4_52. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Noble JH, Labadie RF, Majdani O, Dawant BM. Automatic segmentation of intra-cochlear anatomy in conventional CT. IEEE Trans. on Biomedical. Eng. 2011;58(9):2625–32. doi: 10.1109/TBME.2011.2160262. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Noble JH, Labadie RF, Gifford RH, Dawant BM. Image-guidance enables new methods for customizing cochlear implant stimulation strategies. IEEE Trans. on Neural Systems and Rehabilitiation Engineering. 2013;21(5):820–9. doi: 10.1109/TNSRE.2013.2253333. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Noble JH, Gifford RH, Hedley-Williams AJ, Dawant BM, Labadie RF. Clinical evaluation of an image-guided cochlear implant programming strategy. Audiology & Neurotology. 2014;19:400–11. doi: 10.1159/000365273. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Snedeker J, Trueswell JC. The developing constraints on parsing decisions: The role of lexical-biases and referential scenes in child and adult sentence processing. Cognitive Psychology. 2004;49:238–99. doi: 10.1016/j.cogpsych.2004.03.001. [DOI] [PubMed] [Google Scholar]

- 16.Connor CM, Craig HK, Raudenbush SW, Heavner K, Zwolan TA. The age at which young deaf children receive cochlear implants and their vocabulary and speech-production growth: is there an added value for early implantation? Ear and hearing. 2006;27(6):628–644. doi: 10.1097/01.aud.0000240640.59205.42. [DOI] [PubMed] [Google Scholar]

- 17.Geers AE, Moog JS, Biedenstein J, Brenner C, Hayes H. Spoken language scores of children using cochlear implants compared to hearing age-mates at school entry. Journal of Deaf studies and Deaf education. 2009;14(3):371–385. doi: 10.1093/deafed/enn046. [DOI] [PubMed] [Google Scholar]

- 18.Ruffin CV, Kronenberger WG, Colson BG, Henning SC, Pisoni DB. Long-Term Speech and Language Outcomes in Prelingually Deaf Children, Adolescents and Young Adults Who Received Cochlear Implants in Childhood. Audiology & Neuro-Otology. 2013;18(5):289–296. doi: 10.1159/000353405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Kirk KI, Pisoni DB, Osberger MJ. Lexical effects on spoken word recognition by pediatric cochlear implant users. Ear Hear. 1995;16:470–481. doi: 10.1097/00003446-199510000-00004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Spahr AJ, Dorman MF, Litvak LM, Cook SJ, Loiselle LM, DeJong MD, Hedley-Williams A, Sunderhaus LS, Hayes CA, Gifford RH. Development and Validation of the Pediatric AzBio Sentence Lists. Ear Hear. 2014;35(4):418–22. doi: 10.1097/AUD.0000000000000031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Bench J, Kowal A, Bamford J. The BKB (Bamford-Kowal-Bench) sentences lists for partially-hearing children. Br. J. Audiol. 1979;13:1–12. doi: 10.3109/03005367909078884. [DOI] [PubMed] [Google Scholar]

- 22.Gifford RH, Hedley-Williams A, Spahr AJ. Clinical assessment of spectral modulation detection for cochlear implant recipients: a non-language based measure of performance outcomes. Int J Audiol. 2014;53(3):159–64. doi: 10.3109/14992027.2013.851800. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Wilcoxon F. Individual comparisons by ranking methods. Biometrics Bulletin. 1945;1(6):80–83. [Google Scholar]

- 24.Schuman TA, Noble JH, Wright CG, Wanna GB, Dawant B, Labadie RF. Anatomic Verification of a Novel, Non-rigid Registration Method for Precise Intrascalar Localization of Cochlear Implant Electrodes in Adult Human Temporal Bones Using Clinically-available Computerized Tomography. Laryngoscope. 2010;120(11):2277–2283. doi: 10.1002/lary.21104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Zhao Y, Dawant BM, Noble JH. Automatic electrode configuration selection for image-guided cochlear implant programming. Proceedings of the SPIE Conf. on Medical Imaging, 9415.2015. p. 94150K. [Google Scholar]

- 26.Saoji AA, Litvak LM, Spahr AJ, Eddins DA. Spectral modulation detection and vowel and consonant identifications in cochlear implant listeners. J. Acoust. Soc. Am. 2009;126(3):955–8. doi: 10.1121/1.3179670. [DOI] [PubMed] [Google Scholar]

- 27. Henry BA, Turner CW. The resolution of complex spectral patterns by cochlear implant and normal-hearing listeners. J Acoust Soc Am. 2003;113(5):2861–73. doi: 10.1121/1.1561900.

- 28. Drennan WR, Won JH, Nie K, Jameyson E, Rubinstein JT. Sensitivity of psychophysical measures to signal processor modifications in cochlear implant users. Hear Res. 2010;262:1–8. doi: 10.1016/j.heares.2010.02.003.

- 29. Drennan WR, Anderson ES, Won JH, Rubinstein JT. Validation of clinical assessment of spectral-ripple resolution for cochlear implant users. Ear Hear. 2014;35(3):e92–8. doi: 10.1097/AUD.0000000000000009.

- 30. Varni JW, Seid M, Kurtin PS. PedsQL™ 4.0: Reliability and Validity of the Pediatric Quality of Life Inventory™ Version 4.0 Generic Core Scales in Healthy and Patient Populations. Med Care. 2001;39(8):800–12. doi: 10.1097/00005650-200108000-00006.

- 31. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009 Apr;42(2):377–81. doi: 10.1016/j.jbi.2008.08.010.

- 32. Etymotic Research. Bamford-Kowal-Bench Speech-in-Noise Test (Version 1.03) [audio CD]. 2005.

- 33. Thornton AR, Raffin MJ. Speech-discrimination scores modeled as a binomial variable. J Speech Hear Res. 1978;21:507–518. doi: 10.1044/jshr.2103.507.

- 34. Sanders D. Noise conditions in normal school classrooms. Exceptional Child. 1965;31:344–353. doi: 10.1177/001440296503100703.

- 35. Nober LW, Nober EH. Auditory discrimination of learning disabled children in quiet and classroom noise. Journal of Learning Disabilities. 1975;8:656–659.

- 36. Bess FH, Sinclair JS, Riggs D. Group amplification in schools for the hearing impaired. Ear Hear. 1984;5:138–144. doi: 10.1097/00003446-198405000-00004.

- 37. Finitzo-Hieber T. Classroom acoustics. In: Roeser R, editor. Auditory Disorders in School Children. 2nd ed. New York: Thieme-Stratton; 1988. pp. 221–223.

- 38. Clark WW, Govett SB. School-related noise exposure in children. Paper presented at the Association for Research in Otolaryngology Mid-Winter Meeting; St. Petersburg, FL. 1995.

- 39. Garnham C, O’Driscoll M, Ramsden R, Saeed S. Speech understanding in noise with a Med-El COMBI 40+ cochlear implant using reduced channel sets. Ear Hear. 2002;23(6):540–52. doi: 10.1097/00003446-200212000-00005.

- 40. Garadat SN, Zwolan TA, Pfingst BE. Across-site patterns of modulation detection: Relation to speech recognition. J Acoust Soc Am. 2012;131(5):4030–41. doi: 10.1121/1.3701879.

- 41. Zwolan TA, Collins LM, Wakefield GH. Electrode discrimination and speech recognition in postlingually deafened adult cochlear implant subjects. J Acoust Soc Am. 1997;102(6):3673–85. doi: 10.1121/1.420401.

- 42. Tyler RS, Preece JP, Lansing CR, Otto SR, Gantz BJ. Previous experience as a confounding factor in comparing cochlear-implant processing schemes. J Speech Hear Res. 1986;29:282–7. doi: 10.1044/jshr.2902.282.

- 43. Buechner A, Beynon A, Szyfter W, et al. Clinical evaluation of cochlear implant sound coding taking into account conjectural masking functions, MP3000™. Cochlear Implants International. 2011;12(4):194–204. doi: 10.1179/1754762811Y0000000009.

- 44. Skinner MW, Arndt PL, Staller SJ. Nucleus 24 advanced encoder conversion study: performance versus preference. Ear Hear. 2002;23(1 Suppl):2S–17S. doi: 10.1097/00003446-200202001-00002.