Abstract

Background

The purpose of the Community Alliance for Research Empowering Social change (CARES) training program was to (1) train community members on evidence-based public health, (2) increase their scientific literacy, and (3) develop the infrastructure for community-based participatory research (CBPR).

Objectives

We assessed participant knowledge and evaluated participant satisfaction with the CARES training program to identify learning needs, obtain valuable feedback about the training, and ensure learning objectives were met through mutually beneficial CBPR approaches.

Methods

A baseline assessment was administered before the first training session, and a follow-up assessment and evaluation were administered after the final training session. At each training session, a pretest was administered before the session and a posttest and evaluation were administered at the end of the session. After training session six, a mid-training evaluation was administered. We analyzed results from quantitative questions on the assessments, pre- and post-tests, and evaluations.

Results

CARES fellows' knowledge increased at follow-up (75% of questions were answered correctly on average) compared with the baseline assessment (38% of questions were answered correctly on average); post-test scores were higher than pre-test scores in 9 out of 11 sessions. Fellows enjoyed the training and rated all sessions well on the evaluations.

Conclusions

The CARES fellows training program was successful in achieving participant satisfaction and increasing community knowledge of public health, CBPR, and research methodology. Engaging and training community members in evidence-based public health research can develop an infrastructure for community–academic research partnerships.

Keywords: Community-based participatory research, community–academic partnerships, suburban population, community research training, social change

CBPR has emerged as a promising approach in public health and is often used by universities to engage community stakeholders and address priority public health concerns.1–4 Engaging community members in the research process is often the missing link to improving the quality and outcomes of health promotion activities, disease prevention initiatives, and research studies.1,5 This paradigm is particularly useful for increasing community research capacity (e.g., ability to identify, mobilize) to address a broad array of public health concerns.2,6–8

Training community stakeholders on CBPR and public health increases community capacity and facilitates research partnerships integral for the development of culturally appropriate interventions designed to improve health outcomes.9 When effective, training demystifies research methodologies and develops a common language between community members and researchers while building trust, enhancing knowledge, and addressing community health needs.8 Within this paradigm, there is a co-learning process or reciprocal exchange of information and expertise among researchers and community members.2

Training programs for lay health advisors or community health advocates are a promising health promotion strategy.10–15 Several CBPR projects have used community research training as a mechanism for increasing research capacity among vulnerable, minority and underserved communities. In the Alternatives for Community & Environment project, youth in Roxbury, Massachusetts, were trained to educate the community on the relationship between air pollution and health.16 The Community Action Against Asthma program in Detroit, Michigan, trained outreach workers as “Community Environmental Specialists” to conduct household assessments and personal monitoring of exposure.17 In Brooklyn, New York, El Puente and The Watchperson Project trained community health educators to conduct interviews and facilitate focus groups.18 The West Side Community Asthma Project in the Lower East Side of Buffalo, New York, conducted a training to increase the community’s ability to participate in asthma research.19

CARES

Minority communities in Long Island, a residentially segregated suburb of New York City, experience a disproportionate burden of poor health outcomes. These communities have increased morbidity and mortality from chronic illnesses, older housing stock, poorer school systems, and lower socioeconomic status.20,21 Through community forums called mini-summits on minority health, researchers, practitioners, community health workers, and faith- and community-based organizations worked collaboratively to develop region-specific solutions for the public health problems facing minority communities in the region.22 The recommendations developed through this multifaceted, community-driven approach led to CARES, an academic–community-based research partnership designed to (1) train community members on evidence-based public health, (2) increase their scientific literacy, and (3) develop the infrastructure for CBPR such that local stakeholders can examine and address racial/ethnic health disparities in their communities.23

The CARES training curriculum and goals were designed by the CARES leadership team. The CARES leadership had equal representation from academic and community partners and all members of the CARES leadership team also served as CARES faculty.23 This comprehensive, 15-week, evidence-based public health research course included 11 didactic training sessions and 4 experiential workshops and was based on a standard Masters in Public Health curriculum (see online supplement to Goodman et al23 for detailed curriculum) taught by multidisciplinary faculty from research institutions. Each 3-hour training session was held at a community library and was geared to community health workers, leaders of community-based organizations, and community members.

Nineteen diverse fellows enrolled in the CARES training cohort. The majority of fellows were female (79%) and born in the United States (79%). Ten (52%) were Black, four (21%) were White, three (16%) were Hispanic, and two (10%) were Native American. Fellows ranged in age from 22 to 78, with a mean age of 51. Fellows were members of community-based organizations (32%), community health workers (32%), and community members (36%); all had completed some college coursework. CARES fellows represent diversity of thought, educational backgrounds, and demographics, yet they share a collective vision to use research as a tool to elucidate existing health disparities and become social change agents. Detailed information on the CARES training program, recruitment of participants, leadership team, selection of faculty, participant demographics, and program results are presented elsewhere.23

We assessed participant knowledge and evaluated participant satisfaction with the CARES training program to identify learning needs, obtain valuable feedback about the training, and ensure learning objectives were met through mutually beneficial CBPR approaches.

METHODS

Assessment of Participant Knowledge

Of the 19 fellows enrolled in the CARES training program, 13 (68%) completed the 15-week training course, and 11 (58%) completed both baseline and follow-up assessments. The majority of fellows who completed both the baseline and follow-up assessments were female (73%) and born in the United States (73%); seven (64%) were Black, three (27%) were White, and one (9%) was Hispanic. Fellows ranged in age from 22 to 78, with a mean age of 55. The majority of fellows were members of community-based organizations (45%) or community health workers (36%); all had a college degree (Table 1).

Table 1.

Characteristics of CARES Fellows Who Completed Both Baseline and Follow-Up Assessments (N = 11)

| Characteristic | n | % |
|---|---|---|
| Gender | | |
| Female | 8 | 72.7 |
| Male | 3 | 27.3 |
| Race | | |
| Non-Hispanic Black | 7 | 63.6 |
| Non-Hispanic White | 3 | 27.3 |
| Hispanic | 1 | 9.1 |
| Education | | |
| College Degree | 5 | 45.5 |
| Graduate Degree | 5 | 45.5 |
| Doctoral Degree | 1 | 9.1 |
| Country of birth | | |
| United States | 8 | 72.7 |
| Foreign Born | 3 | 27.3 |
| Affiliation | | |
| Community Health Worker | 4 | 36.4 |
| Community-Based Organization | 5 | 45.5 |
| Community Member | 2 | 18.2 |
| Age (yrs) | | |
| Mean | 54.7 | |
| Standard Deviation | 14.0 | |

Fellows’ baseline and follow-up assessments were linked using ID numbers. Each assessment (baseline and follow-up) consisted of 16 identical questions (see online appendix at http://muse.jhu.edu/journals/progress_in_community_health_partnerships_research_educaton_and_action/v006/6.3.goodman_supp01.pdf). Because of the small sample size (N = 11), we used nonparametric statistical methods to analyze the data. Wilcoxon signed-rank tests (the nonparametric counterpart of the paired t-test) were used to examine differences in participants’ overall scores on the baseline assessment compared with the follow-up assessment. The percentage of CARES fellows who answered each question correctly at baseline was also compared with the percentage who answered the same question correctly at follow-up using the Wilcoxon signed-rank test. To gain better insight into the change in assessment scores, we stratified participant responses to each question into four categories: (1) correct at baseline and incorrect at follow-up, (2) incorrect at baseline and incorrect at follow-up, (3) correct at both baseline and follow-up, and (4) incorrect at baseline and correct at follow-up. This stratification was used to determine whether differences seen between the baseline and follow-up assessments reflect learning.
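The stratification and item-level test described above can be sketched in a few lines of Python. This is an illustrative sketch, not the SAS code used in the study. The function names are hypothetical, and the response vectors are constructed only to match the counts reported for the Belmont Report item in Table 2 (individual-level responses are not published). It also assumes that, for a question scored correct/incorrect, every changed response has the same absolute difference, so the normal-approximation signed-rank statistic (tie-corrected, no continuity correction) reduces to (improved − declined) / √(improved + declined).

```python
import math
from collections import Counter

def stratify(baseline, follow_up):
    """Count fellows in each baseline/follow-up category for one question.

    Both inputs are equal-length lists of 0/1 flags (1 = correct),
    paired by fellow ID.
    """
    labels = {
        (1, 0): "BS1FU0",  # correct at baseline, incorrect at follow-up
        (0, 0): "BS0FU0",  # incorrect at both
        (1, 1): "BS1FU1",  # correct at both
        (0, 1): "BS0FU1",  # incorrect at baseline, correct at follow-up
    }
    counts = Counter(labels[(b, f)] for b, f in zip(baseline, follow_up))
    return {name: counts.get(name, 0) for name in labels.values()}

def signed_rank_z_binary(strata):
    """Signed-rank z and two-sided p for a correct/incorrect item.

    Assumes a normal approximation with tie correction and no
    continuity correction; unchanged responses are dropped.
    """
    improved = strata["BS0FU1"]
    declined = strata["BS1FU0"]
    changed = improved + declined
    if changed == 0:
        return 0.0, 1.0
    z = (improved - declined) / math.sqrt(changed)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return z, p

# Hypothetical paired responses matching the Belmont Report counts:
# 1 fellow correct at both, 3 incorrect at both, 7 improved.
baseline = [1] + [0] * 3 + [0] * 7
follow_up = [1] + [0] * 3 + [1] * 7

strata = stratify(baseline, follow_up)
z, p = signed_rank_z_binary(strata)
print(strata, round(z, 3), round(p, 2))
```

Under these assumptions the sketch gives z ≈ 2.646 and p ≈ .01 for the Belmont Report counts, which agrees with the values reported in Table 2.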

At each of the 11 didactic training sessions, a pretest was administered before the session and a posttest was administered after the session to assess participant knowledge of the training topic. Fellows’ pre- and post-test responses were linked using ID numbers; the pre-test and post-test had the same number of questions but not always the same content (see online appendices at http://muse.jhu.edu/journals/progress_in_community_health_partnerships_research_educaton_and_action/v006/6.3.goodman_supp01.pdf). Ten questions were asked on the pre- and post-tests for session 1; for most of the subsequent sessions (2–6 and 9–11), five questions were asked. Four questions were asked on the pre- and post-tests for sessions 7 and 8. Wilcoxon signed-rank tests were used to compare the percent of correct scores on the pre-test with the post-test for each session.
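For paired percent-correct scores, the signed-rank comparison can be sketched as follows. This is a minimal illustration under stated assumptions rather than the procedure SAS applies: it uses a normal approximation, assigns average ranks to tied absolute differences, drops zero differences, and applies no continuity correction. The function name and the scores are hypothetical.

```python
import math

def wilcoxon_signed_rank_z(pre, post):
    """Normal-approximation Wilcoxon signed-rank test for paired scores.

    Zero differences are dropped, tied absolute differences share their
    average rank, and no continuity correction is applied.
    Returns (z, two-sided p).
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0

    # Rank the absolute differences, averaging ranks within ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    var_w = sum(r * r for r in ranks) / 4  # tie-corrected variance
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p

# Hypothetical percent-correct scores for six fellows on a 5-question test.
pre_scores = [60, 40, 80, 60, 40, 60]
post_scores = [80, 80, 80, 100, 60, 40]
z, p = wilcoxon_signed_rank_z(pre_scores, post_scores)
print(f"z = {z:.3f}, p = {p:.3f}")
```

A positive z indicates that post-test scores tended to exceed pre-test scores; a negative z indicates the reverse, as in the sessions where post-test scores fell.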

Evaluation of Participant Satisfaction

After each session, participants were asked to complete a session evaluation form. Three quantitative questions were included on the session 1 evaluation: (1) exercise learning objectives were met, (2) the group exercises were well facilitated, and (3) overall, how would you rate this session. For all other sessions (2–5, 7–11), seven quantitative questions were asked on the evaluation: (1) exercise learning objectives were met, (2) information learned in this session was useful, (3) group activities in this session were useful, (4) understood the concepts presented in this session, (5) facilitator(s) were well organized, (6) facilitator(s) seemed knowledgeable about the subject, and (7) overall, how would you rate this session. Participants were asked to respond to each question using a 5-point Likert scale; for all questions except the last question on each evaluation, response options were: 1, strongly disagree; 2, disagree; 3, neutral; 4, agree; or 5, strongly agree. For the last question on each evaluation (question 3 on the session 1 evaluation and question 7 on all other session evaluations), the response options were: 1, poor; 2, fair; 3, neutral; 4, good; or 5, excellent. We calculated the mean and standard deviation of each question for each session and computed an overall session evaluation mean score. No session evaluation was conducted for session 6; an overall mid-training evaluation was given at the end of this session to assess participants’ satisfaction with the training up to that point.
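The per-question summaries described above amount to simple descriptive statistics. The sketch below uses hypothetical ratings and makes two assumptions that the text does not specify: the standard deviation is the sample standard deviation (n − 1 denominator), and the overall session score is the mean of the question means.

```python
from statistics import mean, stdev

# Hypothetical ratings: one row per respondent, one column per question (1-5).
ratings = [
    [5, 4, 5],
    [4, 4, 5],
    [5, 5, 4],
    [4, 3, 4],
]

def summarize_session(rows):
    """Return per-question (mean, sample SD) pairs and the overall mean.

    The overall score is taken as the mean of the question means,
    which is an assumption about how the session score was formed.
    """
    columns = list(zip(*rows))  # transpose to one tuple per question
    per_question = [(mean(col), stdev(col)) for col in columns]
    overall = mean(m for m, _ in per_question)
    return per_question, overall

per_question, overall = summarize_session(ratings)
for number, (m, sd) in enumerate(per_question, start=1):
    print(f"Question {number}: mean = {m:.2f}, SD = {sd:.2f}")
print(f"Overall session mean = {overall:.2f}")
```

When every respondent answers every question, the mean of the question means equals the mean of all individual ratings; the two differ only when some responses are missing.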

On the mid-training evaluation, seven quantitative evaluation questions were asked: (1) The facilitator(s) have been prepared and well organized, (2) the facilitator(s) seemed knowledgeable about the subject, (3) the information learned so far in this training was helpful, (4) the CARES project staff is knowledgeable and helpful, (5) I would recommend this training to others, (6) none of the information presented is new to me, and (7) I would prefer distance learning instead of in class training. A 5-point Likert response scale (1, strongly disagree; 2, disagree; 3, neutral; 4, agree, or 5, strongly agree) was used for questions 1 through 3; true/false responses were used for the last four questions.

On the follow-up assessment, nine quantitative evaluation questions were asked: questions 1 through 5 and 7 from the mid-training evaluation, along with three new questions. One new question (an appropriate amount of material was covered during this training) was measured on a 5-point Likert scale (1, strongly disagree; 2, disagree; 3, neutral; 4, agree; or 5, strongly agree). The other two new questions were true/false: the structure and the format of the training were beneficial to the learning process, and the information presented in the training has adequately prepared me for the next phase of the CARES project. Mean values and standard deviations were computed for each Likert response question, and frequencies and percentages were computed for true/false questions on the mid-training and follow-up evaluations.
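Frequencies and percentages for a true/false item can be tabulated as in the short sketch below. The responses and the function name are hypothetical and serve only to illustrate the calculation.

```python
from collections import Counter

def frequency_table(responses):
    """Return {answer: (count, percent)} for a list of True/False responses."""
    counts = Counter(responses)
    total = len(responses)
    table = {}
    for answer in (True, False):
        # Guard against an empty response list to avoid dividing by zero.
        percent = round(100 * counts[answer] / total, 1) if total else 0.0
        table[answer] = (counts[answer], percent)
    return table

# Hypothetical responses from 12 respondents to one true/false item.
responses = [True] * 9 + [False] * 3
table = frequency_table(responses)
print(table)
```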

SAS 9.2 (SAS Institute Inc., Cary, NC) was used to conduct statistical analyses; significance was assessed at p < .05. This study was approved by the Stony Brook University Committee on Research Involving Human Subjects.

RESULTS

Assessment of Participant Knowledge

Overall, there were indications that fellows’ knowledge improved; out of 16 questions, on average fellows answered 6 (38%) questions correctly at baseline (mean, 6.2; SD, 3.3; median, 7.0) and 12 (75%) questions correctly at follow-up (mean, 11.7; SD, 3.0; median, 12.0; p = .01). The three greatest improvements were for defining health literacy (no one got it correct at baseline and 8 [73%] got it correct at follow-up), defining the Belmont Report (1 [9%] answered correctly at baseline versus 8 [73%] at follow-up), and explaining the differences between quantitative and qualitative research methods (4 [36%] correct at baseline and 10 [91%] at follow-up). Based on the Wilcoxon signed-rank tests, these differences were statistically significant (p = .01 for all three; Table 2). Significant differences also existed when participants were asked to define the purpose of focus groups, HIPAA, and ethnography (p ≤ .05 for all three). The three smallest differences were for defining the role of an Institutional Review Board (8 [73%] participants providing correct responses at baseline and 8 [73%] at follow-up), defining the overarching goal of Healthy People 2010 (4 [36%] correct at baseline and 5 [46%] at follow-up), and defining the Tuskegee Experiment (9 [82%] correct at baseline and 11 [100%] at follow-up). Fellows performed poorly when asked to define the role of an Institutional Review Board and the overarching goal of Healthy People 2010 (highest percentage of participants with a correct response at baseline and an incorrect response at follow-up, 18% for both); the most difficult question was defining the term ethnography (the majority of fellows were incorrect at both baseline and follow-up [64%]).

Table 2.

CARES Fellows (N = 11) Percent of Questions Correct at Baseline and Follow-Up

| Assessment Question | Baseline (% correct) | Follow-Up (% correct) | Difference | Wilcoxon Signed-Ranks Test zW | Wilcoxon Signed-Ranks Test p | BS1FU0† n (%) | BS0FU0† n (%) | BS1FU1† n (%) | BS0FU1† n (%) |
|---|---|---|---|---|---|---|---|---|---|
| Informed Consent | 63.6 | 90.9 | 27.3 | 1.342 | .18 | 1 (9.1) | | 6 (54.5) | 4 (36.4) |
| Belmont Report | 9.1 | 72.7 | 63.6 | 2.646 | .01** | | 3 (27.3) | 1 (9.1) | 7 (63.6) |
| Tuskegee Experiment | 81.8 | 100 | 18.2 | 1.414 | .16 | | | 9 (81.8) | 2 (18.2) |
| Health Literacy | 0.0 | 72.7 | 72.7 | 2.828 | .01** | | 3 (27.3) | | 8 (72.7) |
| Evidence-Based Public Health | 36.4 | 63.6 | 27.2 | 1.732 | .08 | | 4 (36.4) | 4 (36.4) | 3 (27.3) |
| Cultural Competency | 54.6 | 81.8 | 27.2 | 1.342 | .18 | 1 (9.1) | 1 (9.1) | 5 (45.4) | 4 (36.4) |
| IRB Role | 72.7 | 72.7 | 0.0 | 0.000 | 1.00 | 2 (18.2) | 1 (9.1) | 6 (54.5) | 2 (18.2) |
| HIPAA | 36.4 | 81.8 | 45.4 | 2.236 | .03* | | 2 (18.2) | 4 (36.4) | 5 (45.4) |
| Differences Between Quantitative and Qualitative Methods | 36.4 | 90.9 | 54.5 | 2.449 | .01* | | 1 (9.1) | 4 (36.4) | 6 (54.5) |
| Differences Between CBPR and Traditional Research | 54.6 | 81.8 | 27.2 | 1.342 | .18 | 1 (9.1) | 1 (9.1) | 5 (45.4) | 4 (36.4) |
| Mixed-Methods Approach | 36.4 | 72.7 | 36.3 | 1.633 | .10 | 1 (9.1) | 2 (18.2) | 3 (27.3) | 5 (45.4) |
| Ethnography | 0.0 | 36.4 | 36.4 | 2.000 | .05* | | 7 (63.6) | | 4 (36.4) |
| Purpose of Focus Groups | 27.3 | 72.7 | 45.4 | 2.236 | .03* | | 3 (27.3) | 3 (27.3) | 5 (45.4) |
| Overarching Goal of Healthy People 2010 | 36.4 | 45.5 | 9.1 | 0.447 | .66 | 2 (18.2) | 4 (36.4) | 2 (18.2) | 3 (27.3) |
| Information Expect to Get From Community Health Assessment | 63.6 | 90.9 | 27.3 | 1.732 | .08 | | 1 (9.1) | 7 (63.6) | 3 (27.3) |
| BEST Health Promotion Planning Model | 9.1 | 45.5 | 36.4 | 1.633 | .10 | 1 (9.1) | 5 (45.4) | | 5 (45.4) |

\*p < .05; \*\*p < .01.

†BS1FU0: correct at baseline and incorrect at follow-up; BS0FU0: incorrect at baseline and incorrect at follow-up; BS1FU1: correct at both baseline and follow-up; BS0FU1: incorrect at baseline and correct at follow-up.

Comparisons of the mean percent of correct scores on pre- and post-tests at each session showed that in 9 out of 11 sessions, post-test scores were higher than pre-test scores; two sessions had average post-test scores that were lower than pre-test scores. Based on the Wilcoxon signed-rank tests, post-test scores for sessions 1, 7, 9, and 11 were significantly higher than pre-test scores (p = .01, .02, .03, and .03, respectively); post-test scores for sessions 5 (p = .02) and 8 (p = .05) were significantly lower than pre-test scores (Table 3).

Table 3.

CARES Fellows Training Pretest and Posttest Scores (Percent of Total Correct at Each Session)

| Session | n | Pretest Score Mean | Pretest Score SD | Posttest Score Mean | Posttest Score SD | Score Difference (post–pre) Mean | Score Difference (post–pre) SD | Wilcoxon Signed-Ranks Test zW | Wilcoxon Signed-Ranks Test p |
|---|---|---|---|---|---|---|---|---|---|
| 1. Introduction to Research | 16 | 67.5 | 16.5 | 83.1 | 7.0 | 15.6 | 18.6 | 2.689 | .01** |
| 2. E-Health and Health Literacy | 18 | 76.7 | 18.5 | 88.9 | 14.1 | 12.2 | 26.7 | 1.866 | .06 |
| 3. Ethics | 18 | 71.1 | 14.1 | 74.4 | 20.4 | 3.3 | 20.9 | 0.676 | .50 |
| 4. Research Methods | 15 | 60.0 | 21.4 | 61.3 | 26.7 | 1.3 | 19.2 | 0.277 | .78 |
| 5. Library Resources/Data/Cultural Competency | 15 | 77.3 | 21.2 | 53.3 | 24.7 | −24.0 | 31.4 | −2.381 | .02* |
| 6. Qualitative Methods | 12 | 50.0 | 30.2 | 61.7 | 24.8 | 11.7 | 32.4 | 1.144 | .25 |
| 7. Census 2010: Stand Up and Be Counted/Quantitative Methods | 12 | 62.5 | 16.9 | 81.3 | 18.8 | 18.8 | 21.7 | 2.310 | .02* |
| 8. Community-Based Participatory Research | 14 | 76.8 | 26.8 | 51.8 | 22.9 | −25.0 | 40.4 | −1.987 | .05* |
| 9. Community Health | 12 | 81.7 | 15.9 | 91.7 | 10.3 | 10.0 | 13.5 | 2.121 | .03* |
| 10. Introduction to Epidemiology | 12 | 75.0 | 21.1 | 80.0 | 17.1 | 5.0 | 21.1 | 0.791 | .43 |
| 11. Workforce Assessment and Health Literacy | 9 | 46.7 | 17.3 | 75.6 | 16.7 | 28.9 | 28.5 | 2.214 | .03* |

\*p < .05; \*\*p < .01.

Evaluation of Participant Satisfaction

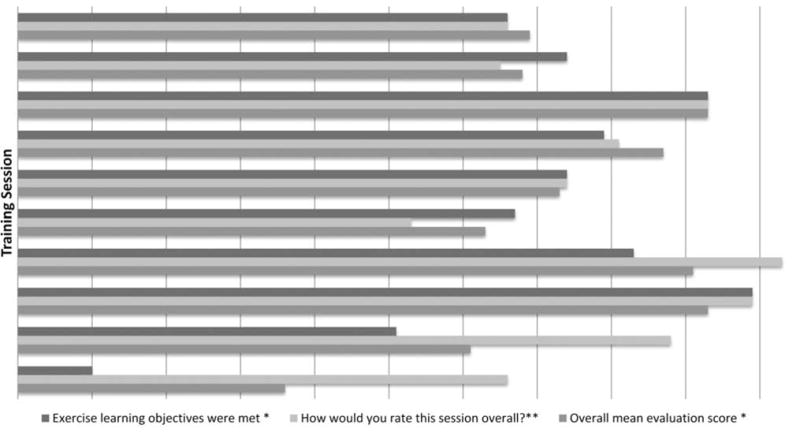

Fellows rated all sessions well on the evaluations; overall evaluation averages ranged from 4.4 to 4.9 (between good and excellent). The mean of the evaluation scores for each session was between 4.3 (session 1) and 4.8 (sessions 3 and 9; Figure 1). The mean of the mid-training evaluation scores was between 4.3 and 4.7, and the mean of the follow-up assessment evaluation scores was between 4.1 and 4.4. CARES fellows all reported that the CARES project team/staff was knowledgeable and helpful, that they would recommend the training to others, that the information presented was new to them, and that the training had adequately prepared them for the next phase of the CARES project; the majority (90% at mid-training, 80% at follow-up) of CARES fellows preferred in-class training over distance learning.

Figure 1. CARES Fellows’ Mean Evaluation Scores for Each Session†.

†Session 6 had no evaluation data;

*Ratings: 1 – Strongly Disagree 2 – Disagree 3 – Neutral 4 – Agree 5 – Strongly Agree

**Ratings: 1 –Poor 2 –Fair 3 – Neutral 4 –Good 5 – Excellent

DISCUSSION

The CARES training was designed to increase research literacy in minority communities and develop the infrastructure for CBPR in Long Island. When CBPR was introduced to this community, one of their primary requests was to be trained in research methodology. Community members requested training as a necessary tool for them to operate as equal partners in research projects. The ability to act as partners in the research process allows for the community to take ownership of the research done in their community and ensure that projects conducted are based on a community-driven research agenda.

The optimal measure of success for the CARES project was the response to the CARES request for proposals and the development of two successful pilot CBPR projects. These projects reflect the true spirit of CBPR, such that the ideas are generated by and are important to the community. Four CARES fellows developed a study in which they conducted door-to-door surveys in a predominantly Hispanic community to gain better insight into the barriers to obtaining health care. Two CARES fellows developed a 12-week (6 sessions) educational obesity intervention for Black women; each educational session was followed by a focus group with participants to elucidate the reasons for the increased prevalence of obesity among Black women and foster a supportive environment for the discussion of successful strategies for incorporating healthy lifestyle changes. The CARES training program prepared fellows to develop CBPR projects using a broad array of research methodologies (quantitative and qualitative) to address health disparities.

We assessed participant knowledge and conducted a comprehensive (formative and summative), mixed-methods evaluation of the CARES training program. Quantitative assessments include baseline and follow-up assessments, and session pre- and post-tests. Quantitative evaluation components include closed-ended evaluation questions from the session evaluations, mid-training evaluation, and follow-up evaluation (questions on the follow-up assessment). Qualitative evaluation components include open-ended questions asked on the session 1 evaluation, mid-training evaluation, and follow-up evaluation, as well as summative semistructured interviews conducted several months after the training was complete. The results from the quantitative evaluation suggest the CARES training program was highly successful and well received by participants. Results of the qualitative evaluation components will be presented elsewhere.

We stratified the assessment results by correct or incorrect response at baseline and follow-up. Ideally, fellows would be in the incorrect at baseline and correct at follow-up group, demonstrating information learned during the training. If fellows already knew material before the training, they would be in the correct at both baseline and follow-up group. There were never more than two respondents (18%) in the correct at baseline and incorrect at follow-up group for any of the assessment questions. However, there were several instances where respondents answered questions incorrectly at both baseline and follow-up. This occurred most often for the questions on ethnography (n = 7 [64%]), evidence-based public health (n = 4 [36%]), and the overarching goal of Healthy People 2010 (n = 4 [36%]). We believe the major contributing factor for fellows being in this group was missed sessions.

Although CARES was a pilot project and the size of the cohort was selected to ensure a manageable first-time implementation, we believe a cohort of approximately 20 fellows is ideal; this size allows the cohort to break into a few small groups of two to five for group activities and CBPR pilot projects. The CARES training cohort became a cohesive unit as fellows’ own experiences brought a great deal to the training; many fellows shared similar interests in change for their communities. We believe the size of the cohort greatly contributed to its cohesiveness and that this was a major factor in fellows’ commitment to completing the program.

The structure of the CARES training program (weekly in-person sessions) was a major reason for attrition of participants. Although the training was scheduled based on fellows’ responses to an availability survey, we could not find a time that worked for everyone, and thus some fellows missed sessions owing to a conflict with the timing of the training sessions. Most of the attrition took place in the first 4 weeks of training. Fellows signed a participant agreement at the orientation session that stated they would not miss more than two training sessions; by week 4 we lost four (21%) participants because of the attendance policy. We lost another three fellows between weeks five and six of the program, and we believe this is because the course started over the summer months but transitioned into the fall months; a few participants had schedule changes and could no longer attend the training as scheduled.

CARES produced a paradigm shift, emphasizing a community-driven research agenda, enhancing community knowledge of research, and uniting key stakeholders into a comprehensive academic–community-based research network. In this setting, community members are fully engaged and instrumental to the development of research conducted in their communities. The CARES training program was instrumental in developing an infrastructure for true CBPR where the projects developed are initiated by the community and lend themselves to community action.


Acknowledgments

The authors thank the CARES fellows for their participation and insight, and the CARES faculty for volunteering their time and going into the community to share their knowledge.

This project was funded by The National Institutes of Health Public Trust Initiative and the National Institute for Child Health and Human Development (1R03HD061220). Dr. Goodman was also supported by funding from the Barnes-Jewish Hospital Foundation, Siteman Cancer Center, National Institutes of Health, National Cancer Institute grant U54CA153460 and Washington University Faculty Diversity Scholars Program.

References

1. Minkler ME, Wallerstein NE. Community based participatory research for health. San Francisco: Jossey-Bass; 2003.

2. Viswanathan M. Community-based participatory research: assessing the evidence. Rockville (MD): Agency for Healthcare Research and Quality; 2004.

3. D’Alonzo KT. Getting started in CBPR: lessons in building community partnerships for new researchers. Nurs Inq. 2010 Dec;17(4):282–8. doi: 10.1111/j.1440-1800.2010.00510.x.

4. Israel BA. Methods in community-based participatory research for health. San Francisco: Jossey-Bass; 2005.

5. Minkler M. Ethical challenges for the “outside” researcher in community-based participatory research. Health Educ Behav. 2004;31(6):684. doi: 10.1177/1090198104269566.

6. Schulz AJ, Krieger J, Galea S. Addressing social determinants of health: community-based participatory approaches to research and practice. Health Educ Behav. 2002;29(3):287. doi: 10.1177/109019810202900302.

7. Israel BA, Coombe CM, Cheezum RR, Schulz AJ, McGranaghan RJ, Lichtenstein R, et al. Community-based participatory research: A capacity-building approach for policy advocacy aimed at eliminating health disparities. Am J Public Health. 2010 Nov;100(11):2094–102. doi: 10.2105/AJPH.2009.170506.

8. Wallerstein NB, Duran B. Using community-based participatory research to address health disparities. Health Promot Pract. 2006;7(3):312. doi: 10.1177/1524839906289376.

9. Tandon SD, Phillips K, Bordeaux B, Bone L, Brown PB, Cagney K, et al. A vision for progress in community health partnerships. Prog Community Health Partnersh. 2007;1(1):11–30. doi: 10.1353/cpr.0.0007.

10. Yu MY, Song L, Seetoo A, Cai C, Smith G, Oakley D. Culturally competent training program: a key to training lay health advisors for promoting breast cancer screening. Health Educ Behav. 2007 Dec;34(6):928–41. doi: 10.1177/1090198107304577.

11. Satcher D, Sullivan LW, Douglas HE, Mason T, Phillips RF, Sheats JQ, et al. Enhancing cancer control programmatic and research opportunities for African-Americans through technical assistance training. Cancer. 2006 Oct 15;107(8 Suppl):1955–61. doi: 10.1002/cncr.22159.

12. Spadaro AJ, Grunbaum JA, Wright DS, Green DC, Simoes EJ, Dawkins NU, et al. Peer reviewed: Training and technical assistance to enhance capacity building between prevention research centers and their partners. Prev Chronic Dis. 2011;8(3).

13. Story L. Training community health advisors in the Mercy Delta Express Project: A case study. Jackson: The University of Mississippi Medical Center; 2009.

14. Kuhajda MC, Cornell CE, Brownstein JN, Littleton MA, Stalker VG, Bittner VA, et al. Training community health workers to reduce health disparities in Alabama’s Black Belt: The Pine Apple Heart Disease and Stroke Project. Fam Community Health. 2006;29(2):89. doi: 10.1097/00003727-200604000-00005.

15. Perez M, Findley SE, Mejia M, Martinez J. The impact of community health worker training and programs in NYC. J Health Care Poor Underserved. 2006;17(1):26–43. doi: 10.1353/hpu.2006.0011.

16. Loh P, Sugerman-Brozan J, Wiggins S, Noiles D, Archibald C. From asthma to AirBeat: Community-driven monitoring of fine particles and black carbon in Roxbury, Massachusetts. Environ Health Perspect. 2002;110(Suppl 2):297. doi: 10.1289/ehp.02110s2297.

17. Keeler GJ, Dvonch T, Yip FY, Parker EA, Israel BA, Marsik FJ, et al. Assessment of personal and community-level exposures to particulate matter among children with asthma in Detroit, Michigan, as part of Community Action Against Asthma (CAAA). Environ Health Perspect. 2002 Apr;110(Suppl 2):173–81. doi: 10.1289/ehp.02110s2173.

18. Corburn J. Combining community-based research and local knowledge to confront asthma and subsistence-fishing hazards in Greenpoint/Williamsburg, Brooklyn, New York. Environ Health Perspect. 2002 Apr;110(Suppl 2):241–8. doi: 10.1289/ehp.02110s2241.

19. Tumiel-Berhalter LM, Mclaughlin-Diaz V, Vena J, Crespo CJ. Building community research capacity: Process evaluation of community training and education in a community-based participatory research program serving a predominately Puerto Rican community. Prog Community Health Partnersh. 2007;1(1):89. doi: 10.1353/cpr.0.0008.

20. Powell JA, editor. ERASE Racism conference on regional equity, race and the challenge to Long Island. Long Island, NY; 2004 May 6.

21. Rusk D, editor. Long Island Little Boxes Must Act as One: Overcoming urban sprawl & suburban segregation. New Horizons for Long Island; Islandia Marriott, Islandia, NY: 2002.

22. Goodman M, Stafford J, Coalition SCMHA. Mini-summits on minority health; 2007, 2008. Suffolk County, NY: Community Engaged Scholarship for Health; 2011. Mini-summit health proceedings.

23. Goodman MS, Dias JJ, Stafford JD. Increasing research literacy in minority communities: CARES fellows training program. J Empirical Res Human Res Ethics. 2010;5(4):33–41. doi: 10.1525/jer.2010.5.4.33.
