Abstract

Vehicle detection has improved significantly over the past decade. Using cameras, vehicles can be detected in Regions of Interest (ROI) in complex environments. However, vision techniques often suffer from false positives and a limited field of view. In this paper, a LiDAR-based vehicle detection approach is proposed that uses the Probability Hypothesis Density (PHD) filter. The approach consists of two phases: a hypothesis generation phase that detects potential objects, and a hypothesis verification phase that classifies them. The performance of the approach is evaluated in complex scenarios and compared with the state-of-the-art.

Keywords: LiDAR, vehicle detection

1. Introduction

Traffic accidents are a major cause of death worldwide. A study by the World Health Organization (WHO) reports that an estimated 1.2 million people die in traffic accidents every year, and up to 50 million people are injured [1]. Autonomous driving has therefore become important as a means of preventing accidents. However, fully autonomous driving in all scenarios remains challenging. Automotive manufacturers continue to refine the technology behind autonomous driving as a long-term goal, whereas Advanced Driver Assistance Systems (ADAS) have been proposed as a short-term development to gradually improve road safety. Numerous ADAS functions have been developed to help drivers avoid accidents, improve driving efficiency, and reduce driver fatigue, with vehicle detection playing an important role.

Most approaches rely on vision techniques to first detect Regions of Interest (ROI) and then classify vehicles [2]. Khammari et al. use AdaBoost classification to detect vehicles [3]. Sun et al. and Paragios et al. use filtering techniques to detect vehicles [4,5]. Vehicle profile symmetry and the corresponding shadows are used in Reference [6]. However, vision techniques are sensitive to lighting conditions.

LiDAR is also widely used in vehicle detection. In contrast to vision sensors, LiDAR is robust to lighting conditions and provides range information [7,8,9,10,11]. Teichman et al. use log-odds estimators to recognize objects and demonstrate the performance in a large-scale environment. Dominguez et al. demonstrate a data fusion platform for tracking vehicles [12]. Unlike vision sensors, however, LiDAR measurements often suffer from data association issues.

This paper extends our previous work on detecting vehicles from LiDAR data, in which objects are represented by position and shape parameters (detailed in Section 2). In Reference [13], we used the Difference of Normals (DoN) operator and the Random Hypersurface Model (RHM) to cluster the point cloud data and estimate the shape parameters [14,15]. To avoid the data association issue, the Probability Hypothesis Density (PHD) filter, which is based on Random Finite Set (RFS) statistics, is proposed here to detect vehicles [16]. Several RFS-based approaches avoid the data association issue, including the PHD filter, the Cardinalized PHD (CPHD) filter [17] and the multi-Bernoulli filter [18]. The PHD filter propagates the probability hypothesis density function over the single-target state space, whereas the CPHD filter also propagates the distribution of the number of targets (cardinality). The CPHD filter therefore requires a more complex implementation but gives more reliable cardinality estimates. As the main goal of this paper is to estimate the states in a speed-critical environment, the PHD filter is chosen. Unlike the PHD and CPHD filters, the multi-Bernoulli filter propagates the posterior target density. Although it has the same complexity as the PHD filter, it performs better in highly nonlinear environments because it does not require an additional clustering step for state estimation [18]. As the proposed RHM can be implemented linearly, the PHD filter is considered the cheapest solution. The estimated states are then classified by a Support Vector Machine (SVM) to eliminate non-vehicle objects.

The contributions are summarized as follows. First and foremost, the proposed solution achieves high performance in environments with unknown data association. Furthermore, the shape parameter is used for object classification for the first time.

This paper is structured as follows: Section 2 describes the random hypersurface model, as well as the probability hypothesis density filter for hypothesis generation. Section 3 introduces the support vector machine for hypothesis verification. Section 4 evaluates the performance in urban environments. Finally, the paper is concluded in Section 5.

2. Hypothesis Generation

In the hypothesis generation phase, LiDAR measurements are filtered based on the Random Hypersurface Model (RHM). To extend the RHM to scenarios with unknown data association, the Gaussian Mixture Probability Hypothesis Density (GMPHD) filter is proposed. Note that objects are tracked and estimated in the 2D Cartesian coordinate system, so depth information is not required.

The result of the generation phase is then utilized for the verification phase to eliminate non-vehicles.

2.1. Random Hypersurface Model (RHM)

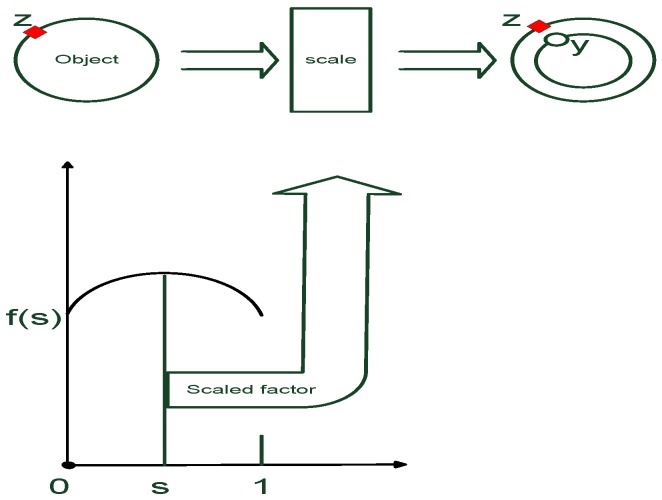

As illustrated in Figure 1, in a 2D Cartesian coordinate system, a point is considered as a scaled point with the factor within the range drawn on the surface. Thus, the RHM is defined as:

Figure 1.

Random hypersurface model.

denotes the surface which consists of both the shape parameter and the center . Each point that lies on the surface is represented as a scaled boundary when s is drawn from :

| (1) |

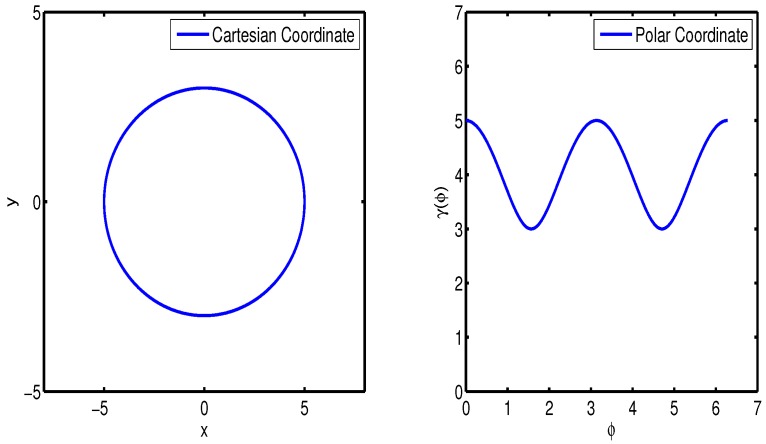

In Figure 2, denotes the distance function calculated from the center to boundary on angle φ in the polar coordinate system.

Figure 2.

Example of star-convex object.

Assuming the state consists of the shape parameter and the center, the surface is represented as

| (2) |

where the two factors are the unit vector in direction φ and the radial function, which is expressed as a Fourier series. Because the radial function is periodic, its Fourier series expansion of a given degree becomes

| (3) |

where is given by

| (4) |

If φ is fixed, Equation (3) is represented as

| (5) |

where

| (6) |

Note that a small number of Fourier coefficients encodes only the rough shape of the surface, whereas a larger number of coefficients captures more detail.
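The effect of the number of coefficients can be illustrated with a minimal Python sketch. It assumes the usual real Fourier series form r(φ) = a0/2 + Σ (a_j cos jφ + b_j sin jφ) and a coefficient ordering [a0, a1, b1, a2, b2, ...]; the function names and the ordering are illustrative assumptions, not the authors' implementation. Under this form, 13 coefficients correspond to a series of degree 6 (1 + 2 × 6 = 13).

```python
import math


def radial_basis(phi, degree):
    """Row vector R(phi) = [1/2, cos(phi), sin(phi), ..., cos(N*phi), sin(N*phi)]."""
    row = [0.5]
    for j in range(1, degree + 1):
        row.append(math.cos(j * phi))
        row.append(math.sin(j * phi))
    return row


def radius(coeffs, phi):
    """Evaluate r(phi) as the inner product of R(phi) and the coefficient vector."""
    degree = (len(coeffs) - 1) // 2
    return sum(r * b for r, b in zip(radial_basis(phi, degree), coeffs))


# A circle of radius 2 needs only the constant term (a0 = 4, since r = a0 / 2).
circle = [4.0] + [0.0] * 12
# Adding a cos(2*phi) term (a2 = 0.5) stretches the shape along the x axis.
oval = [4.0, 0.0, 0.0, 0.5] + [0.0] * 9
```

With only the constant term the shape is a circle; each additional pair of coefficients adds one harmonic of angular detail to the contour.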

Bayes Filter

The RHM represents the shape information by using the Fourier coefficients, and the Bayes filter is utilized to calculate the corresponding parameters.

Process model

Assuming the state consists of the Fourier descriptors and does not drift over time, the process model is described as

| (7) |

where and denote the identity matrix and the process noise, respectively.

Measurement model

As illustrated in Figure 1, a single LiDAR measurement originates from a surface boundary point scaled by the factor s,

| (8) |

where denotes the measurement noise.

Using Equation (2), Equation (8) becomes

| (9) |

which relates the state to the measurement.

Based on Equation (5), the measurement model is represented as

| (10) |

With algebraic manipulation of Equation (10), we get

| (11) |

The measurement model is thus acquired as:

| (12) |

where a pseudo-measurement is used to model the relationship between the state, the scale factor, the measurement and its noise. The state converges to the true Fourier descriptors as it is updated with a large number of measurements.
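The idea of a pseudo-measurement can be sketched in Python as follows. The sketch assumes that a measurement is generated as y = m + s·r(φ)·e(φ) + v, so that taking squared norms on both sides gives the residual s²r² + 2·s·r·e(φ)ᵀv + ‖v‖² − ‖y − m‖², which is zero for a consistent state. This squared-distance form, the coefficient ordering and all function names are assumptions made for illustration; the exact form of Equation (12) may differ.

```python
import math


def radius(coeffs, phi):
    """Fourier-series radial function r(phi) for coeffs [a0, a1, b1, a2, b2, ...]."""
    degree = (len(coeffs) - 1) // 2
    r = 0.5 * coeffs[0]
    for j in range(1, degree + 1):
        r += coeffs[2 * j - 1] * math.cos(j * phi) + coeffs[2 * j] * math.sin(j * phi)
    return r


def measurement(coeffs, center, phi, s, noise=(0.0, 0.0)):
    """Generate y = m + s * r(phi) * e(phi) + v for the boundary point at angle phi."""
    r = radius(coeffs, phi)
    return (center[0] + s * r * math.cos(phi) + noise[0],
            center[1] + s * r * math.sin(phi) + noise[1])


def pseudo_measurement(coeffs, center, y, s, noise=(0.0, 0.0)):
    """Residual s^2 r^2 + 2 s r e.v + |v|^2 - |y - m|^2 (zero for a consistent state)."""
    dx, dy = y[0] - center[0], y[1] - center[1]
    phi = math.atan2(dy, dx)  # the angle is approximated from the measurement itself
    r = radius(coeffs, phi)
    e_dot_v = math.cos(phi) * noise[0] + math.sin(phi) * noise[1]
    return (s * s * r * r + 2.0 * s * r * e_dot_v
            + noise[0] ** 2 + noise[1] ** 2 - (dx * dx + dy * dy))
```

For a noise-free measurement generated from the same shape, center and scale factor, the residual vanishes; a filter can therefore treat the value 0 as the observed quantity and update the Fourier descriptors so that the residual is driven towards zero.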

Note that the proposed measurement model is implemented in the 2D Cartesian coordinate system and is only effective for the rear side of the target. In addition, the depth information from the LiDAR measurements is not required.

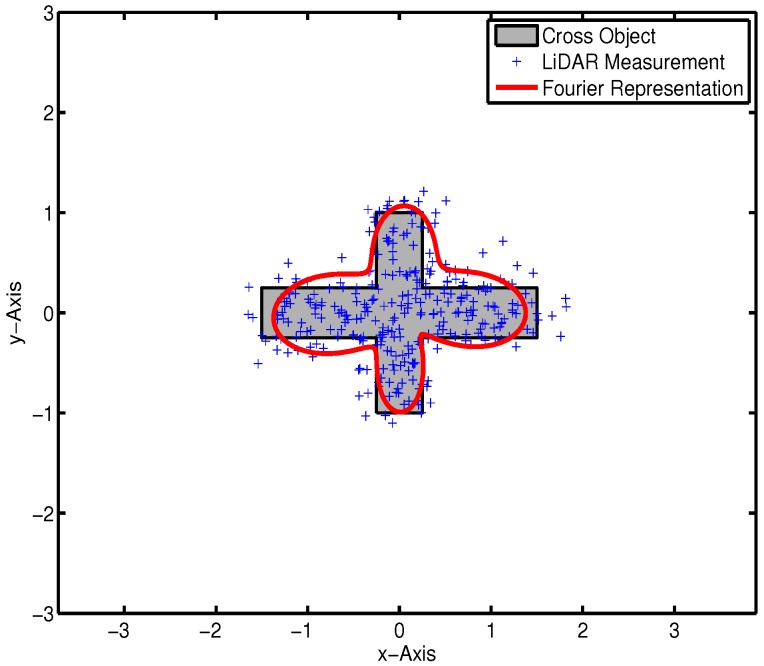

Figure 3 exhibits the estimated result with a cross target, in which the state and measurement y are represented by 13 Fourier coefficients and 2D Cartesian coordinates, respectively.

Figure 3.

Bayesian estimation in a data association known environment.

2.2. Probability Hypothesis Density (PHD) Filter

As illustrated in Figure 3, the RHM estimates the shape information by using the Bayes filter. The key challenge for practical implementation is data association. A traditional estimator operates in scenarios where the measurement-to-target assignment is known. However, LiDAR provides a large number of measurements without any association information. In previous work, the Difference of Normals (DoN) operator was used as a preprocessing step to cluster the measurements. The miss-detection rate was quite high, since the clustering process may also eliminate objects. Hence, the PHD filter is proposed to track objects in scenarios with unknown data association.

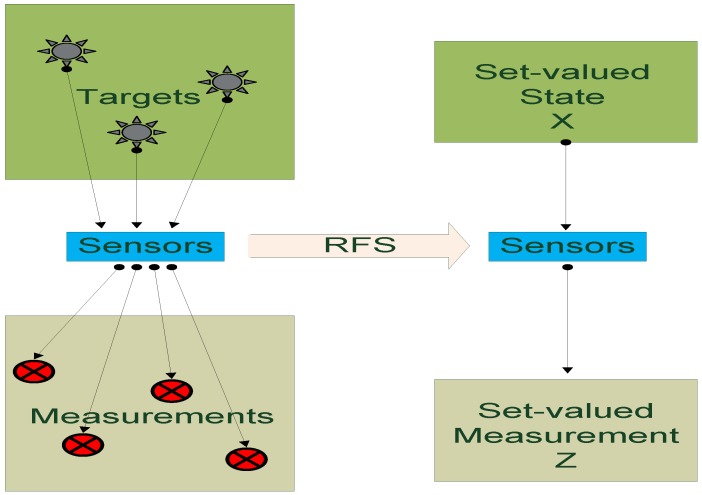

2.2.1. Overview

The PHD filter is represented with the set-valued state and observation for multiple-object Bayesian filtering. All targets and observations are collected and represented in the set space, whereas the data association problem in traditional filtering domain is avoided. Figure 4 is a basic introduction of the RFS statistic. Compared to the single target filtering, the PHD filter relies on the random finite set statistics to process data in the set space level.

Figure 4.

Set-valued states and set-valued observations.

2.2.2. Mathematical Background

Considering the surviving targets, the spontaneous (birth) targets and the spawned targets, the set-valued state is described as:

| (13) |

In addition, the observation set consists of the reflections from the targets and the clutter:

| (14) |

Similar to the Bayesian estimator, the PHD filter is divided into a prediction step and an update step. In the following, D denotes the posterior density, and the transition function describes how the previous state evolves to the current one.

Thus, the prediction is represented as:

| (15) |

The intensity function is updated based on the measurement set :

| (16) |

where and denote the likelihood function and the detection probability, respectively.

Note that the prediction in Equation (15) accounts for targets that enter the scene, targets that survive from the previous time step, and spawned targets.

The update function in Equation (16) corrects the prediction by using innovations from the observations. Notice that the clutter rate is also considered in the update function.
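For reference, the PHD recursion of [16] is commonly written in the form below. The notation is an assumption and may differ from the symbols used in Equations (15) and (16): γ_k is the birth intensity, β the spawning intensity, p_S and p_D the survival and detection probabilities, f the transition density, g the measurement likelihood and κ_k the clutter intensity.

```latex
\begin{align}
D_{k|k-1}(x) &= \gamma_k(x)
  + \int \left[ p_{S}(\xi)\, f_{k|k-1}(x \mid \xi)
  + \beta_{k|k-1}(x \mid \xi) \right] D_{k-1}(\xi)\, d\xi \\
D_{k}(x) &= \left[ 1 - p_{D}(x) \right] D_{k|k-1}(x)
  + \sum_{z \in Z_k}
  \frac{p_{D}(x)\, g_k(z \mid x)\, D_{k|k-1}(x)}
       {\kappa_k(z) + \int p_{D}(\xi)\, g_k(z \mid \xi)\, D_{k|k-1}(\xi)\, d\xi}
\end{align}
```

The first line collects the birth, survival and spawning terms of the prediction; the second line combines a missed-detection term with one correction term per measurement, each normalized by the clutter intensity plus the total detection likelihood.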

| (17) |

Equation (17) shows that the integral of the intensity function gives the expected number of targets. The intensity is therefore not a probability density and does not necessarily integrate to 1 [19].
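This property can be checked numerically with a short Python sketch. It assumes a one-dimensional intensity given by a Gaussian mixture with made-up weights, means and variances; because each Gaussian integrates to 1, the integral of the intensity equals the sum of the weights.

```python
import math


def gaussian(x, mean, var):
    """Density of a scalar Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def intensity(x, components):
    """Gaussian-mixture intensity; components are (weight, mean, variance) tuples."""
    return sum(w * gaussian(x, m, v) for w, m, v in components)


def expected_count(components, lo=-50.0, hi=50.0, steps=20000):
    """Integrate the intensity with the midpoint rule to get the expected target number."""
    h = (hi - lo) / steps
    return sum(intensity(lo + (i + 0.5) * h, components) for i in range(steps)) * h


# Hypothetical intensity with three components; the weights sum to 2.1.
components = [(0.9, -5.0, 1.0), (0.8, 0.0, 2.0), (0.4, 5.0, 1.0)]
```

Here `expected_count(components)` agrees with the weight sum of 2.1 up to integration error, which is why the weights of a Gaussian-mixture intensity can be read directly as expected target counts.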

The PHD recursion involves multiple integrals that have no closed-form solution in general. Thus, the most common approach is to use the Gaussian Mixture (GM) PHD approximation [20]:

| (18) |

| (19) |

where and denote the current measurement and state, whereas denotes the previous state. A Gaussian distribution is represented as with mean and covariance P. and denote the transition matrix and process covariance, respectively. and denote the observation matrix and observation noise covariance. Notice that the detection and survival probabilities are constant values:

| (20) |

Birth targets are modeled as:

| (21) |

where , , and denote the weight, covariance, mean and amount of the Gaussians.

Assuming the posterior intensity at time is a Gaussian mixture:

| (22) |

then the predicted intensity of Equation (15) at time k is also a Gaussian mixture

and the updated intensity of Equation (16) at time k is calculated as

| (23) |

where

In contrast to the standard Bayes filter, the GMPHD filter addresses the data association challenge. The process model in Equation (18) and the measurement model in Equation (19) are the same as those of the RHM Bayes filter in Equations (7) and (8), although the implementation differs.
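A minimal Python sketch of one GM-PHD prediction and update step is given below, following the linear Gaussian recursion of [20] for a scalar state. Spawning, pruning and merging are omitted, and all parameter values (f, q, h, r, p_s, p_d, clutter) are hypothetical defaults chosen for illustration rather than the settings used in this paper.

```python
import math


def gaussian(x, mean, var):
    """Density of a scalar Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def predict(components, births, f=1.0, q=0.1, p_s=0.99):
    """Propagate surviving (weight, mean, variance) components and append births."""
    survived = [(p_s * w, f * m, f * p * f + q) for w, m, p in components]
    return survived + list(births)


def update(components, measurements, h=1.0, r=0.2, p_d=0.9, clutter=0.01):
    """One missed-detection term plus one Kalman-updated term per measurement."""
    updated = [((1.0 - p_d) * w, m, p) for w, m, p in components]
    for z in measurements:
        terms = []
        for w, m, p in components:
            s = h * p * h + r            # innovation variance
            k = p * h / s                # Kalman gain
            weight = p_d * w * gaussian(z, h * m, s)
            terms.append((weight, m + k * (z - h * m), (1.0 - k * h) * p))
        norm = clutter + sum(t[0] for t in terms)
        if norm > 0.0:                   # skip measurements no component explains
            updated += [(wt / norm, m, p) for wt, m, p in terms]
    return updated
```

No measurement is ever assigned to a particular target: every measurement updates every component, and the normalization by the clutter intensity plus the total detection likelihood distributes the weight among the hypotheses.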

2.3. RHM–GM–PHD Filter

Section 2.2 introduced the GMPHD filter for dealing with unknown data association. Note that the standard GMPHD filter assumes that each target produces at most one reflection per frame, which is called “Point Target (PT) tracking”. In the vehicle detection scenario, a large number of measurements are collected from the surface of a single object, which is called “Extended Target (ET) tracking”. Therefore, the GMPHD filter has to be redesigned to handle extended targets while relying solely on LiDAR measurements.

The process model and measurement model are similar to those of the RHM Bayes filter in Equations (7) and (8). The prediction equations of the ET–GM–PHD filter are also the same as those of the standard GMPHD filter. The measurement update of the ET–GM–PHD filter is given by:

| (24) |

and the pseudo-likelihood function is defined as

| (25) |

where is the mean number of clutter measurements, is the spatial distribution of the clutter, notation denotes that p partitions the measurement set into non-empty cells W, notation denotes that W is a cell in the partition p, and denote the non-negative coefficients for each partition and cell, and denotes the same likelihood function for a single measurement in Equation (12).

Here,

| (26) |

and

| (27) |

where δ is the Kronecker delta function and |W| is the number of measurements in cell W. More details of the implementation can be found in [21,22].
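To make the notion of a partition concrete, the following Python sketch enumerates every way of splitting a small measurement set into non-empty cells. It is an illustration only and not the partitioning strategy of [21,22]; the function name is hypothetical.

```python
def partitions(items):
    """Yield every partition of `items` into non-empty cells (lists of lists)."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for smaller in partitions(rest):
        # Put `first` into each existing cell in turn ...
        for i, cell in enumerate(smaller):
            yield smaller[:i] + [[first] + cell] + smaller[i + 1:]
        # ... or give it a cell of its own.
        yield [[first]] + smaller


# Three measurements already admit five partitions, four measurements fifteen.
example = list(partitions(["z1", "z2", "z3"]))
```

The number of partitions grows as the Bell numbers (5, 15, 52, ...), so summing over all partitions quickly becomes intractable; practical implementations typically restrict the sum to a subset of likely partitions.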

Hence, the ET–GM–PHD filter tracks potential objects by relying solely on LiDAR, without any association or clustering step. The estimated states represent the potential objects and are subsequently filtered by the support vector machine to eliminate non-vehicle objects.

3. Hypothesis Verification

To eliminate outliers, a support vector machine is used to classify the Fourier coefficients as vehicle or non-vehicle.

3.1. Support Vector Machine (SVM)

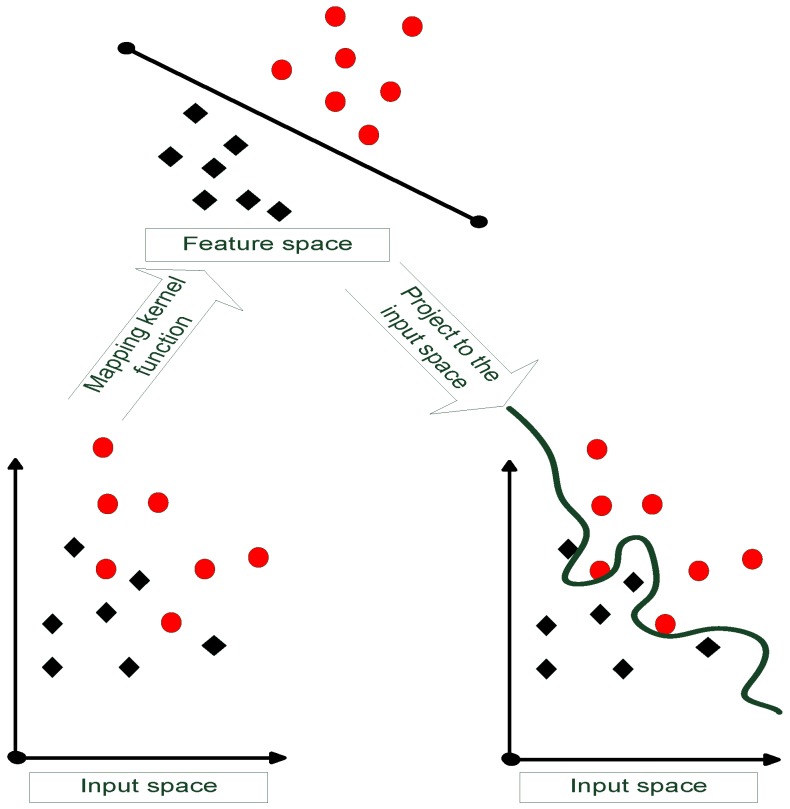

As exhibited in Figure 5, the SVM is proposed to obtain classifiers with good generalization [23]. The mathematical background is introduced as follows:

Figure 5.

The concept of support vector machine (SVM)

For input vectors with corresponding class labels, the hyperplane is defined as:

| (28) |

which linearly separates the data. Here, x, w and b denote the input vector, the weight vector and the bias, respectively. A maximum margin is then found to separate the positive class from the negative class based on

| (29) |

The calculation of the hyperplane is subject to the following constraints:

and the classification performance relies on the optimization as:

Here, the optimization involves the kernel function and the Lagrange multipliers. Note that if the data cannot be separated linearly, the kernel function changes according to

| (30) |

where C denotes the penalty parameter.

3.2. Implementation Detail

Several issues remain to be addressed when implementing both the generation and verification phases.

ET–GM–PHD Implementation

To track objects over multiple frames, the alignment issue must also be considered. The state tracked by the ET–GM–PHD filter therefore combines the state of the object centroid in the 2D Cartesian coordinate system with a shape parameter consisting of 13 Fourier descriptors. Although a higher number of Fourier descriptors captures more detail of the surface, the computational performance and robustness become unsatisfactory. In this paper, 13 Fourier descriptors were selected empirically during the experiments.

Each object follows the linear Gaussian dynamic Equation (18) with the following configurations:

| (31) |

| (32) |

where and denote the identity and zero matrix, respectively. The measurement covariance is given using parameters .

The measurement model follows the nonlinear Gaussian Equation (19) and is described as:

where s is a Gaussian random variable with mean 0.5 and variance 0.03. The birth intensity of the PHD filter is set according to the geometric information. To reduce the computational complexity, only measurements that fall into the road regions are used to initialize vehicles. The birth intensity is thus given by

| (33) |

where the three mean vectors represent vehicles in the middle (0,1), on the left (−5,1) and on the right (5,1), respectively.
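A birth intensity of this kind can be sketched in Python as a three-component Gaussian mixture over the position. Only the three mean positions are taken from the text; the component weight, the isotropic covariance and all names are hypothetical values chosen for illustration.

```python
import math


def gaussian_2d(point, mean, var):
    """Isotropic 2D Gaussian density with variance `var` on both axes."""
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    return math.exp(-0.5 * (dx * dx + dy * dy) / var) / (2.0 * math.pi * var)


# Birth means from the text: middle, left and right of the road.
BIRTH_MEANS = [(0.0, 1.0), (-5.0, 1.0), (5.0, 1.0)]
BIRTH_WEIGHT = 0.1   # hypothetical weight per component
BIRTH_VAR = 1.0      # hypothetical positional variance


def birth_intensity(point):
    """Evaluate the birth intensity at a 2D position."""
    return sum(BIRTH_WEIGHT * gaussian_2d(point, m, BIRTH_VAR) for m in BIRTH_MEANS)


def expected_births():
    """Expected number of new targets per frame (sum of the birth weights)."""
    return BIRTH_WEIGHT * len(BIRTH_MEANS)
```

Positions near one of the three lane centers receive a high birth intensity, whereas positions between the lanes receive a low one, so new vehicle hypotheses are initialized mainly inside the road regions.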

SVM Implementation

The KITTI dataset is used for training the classifier; it provides a set of 5000 training frames with 1893 manually labeled objects (car, van, tram, misc, pedestrian, cyclist, truck and so on). For each object, the original measurements (all objects are labeled with a 2D box, so the measurement-to-target association is known) are projected to the 2D Cartesian coordinate system around the object's origin. The 13 Fourier coefficients are then calculated using the standard nonlinear Kalman filter [15]. Instead of multiple categories, objects are only divided into vehicles and non-vehicles (in practice, cars and non-cars). The SVM is then trained on the Fourier coefficients collected from all processed objects. For further evaluation, another 2000 frames of test data are used to assess the performance both quantitatively and qualitatively. The SVM is applied in both the training and test phases without cross-validation.

Key Parameters and Open Issues

During the ET–GM–PHD process, potential objects are collected in the set-valued state. The Fourier coefficients describe the shape information, and the position vector represents the location. The estimated objects are also shown as bounding boxes, where the center uses the position and the width and height are calculated from the Fourier coefficients (the Fourier coefficients represent the rough shape in the polar coordinate system, and the width and height of the bounding box are obtained by evaluating the radial function at fixed angles such as φ = 0). Since the PHD filter addresses the data association issue, the point cloud data is used directly to estimate the set-valued states, and no further clustering is required. For each single measurement, the probabilities of detection and survival are constant and no greater than 1. In addition, the PHD filter may estimate objects that are close together as a single object, mainly because of the scale factor s. In the RHM, measurements are considered as random draws from boundaries with different scaling factors in the range [0, 1]. When objects are close to each other, it is therefore quite challenging to distinguish them.
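One possible way of deriving a bounding box from the Fourier coefficients is sketched below in Python. It assumes that the width is the sum of the radii at φ = 0 and φ = π and that the height is the sum of the radii at φ = π/2 and φ = 3π/2; these angles, the coefficient ordering [a0, a1, b1, ...] and the function names are assumptions for illustration and are not confirmed by the text.

```python
import math


def radius(coeffs, phi):
    """Fourier-series radial function r(phi) for coeffs [a0, a1, b1, a2, b2, ...]."""
    degree = (len(coeffs) - 1) // 2
    r = 0.5 * coeffs[0]
    for j in range(1, degree + 1):
        r += coeffs[2 * j - 1] * math.cos(j * phi) + coeffs[2 * j] * math.sin(j * phi)
    return r


def bounding_box(center, coeffs):
    """Return (center, width, height) from the radii along the two coordinate axes."""
    width = radius(coeffs, 0.0) + radius(coeffs, math.pi)
    height = radius(coeffs, 0.5 * math.pi) + radius(coeffs, 1.5 * math.pi)
    return center, width, height


# Hypothetical elongated shape: mean radius 2 with a cos(2*phi) term of 0.5.
oval = [4.0, 0.0, 0.0, 0.5] + [0.0] * 9
box = bounding_box((3.0, 10.0), oval)
```

For shapes that are not aligned with the coordinate axes, the extent along the axes underestimates the true bounding box, so this should be read as a rough visualization aid rather than an exact envelope.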

During the SVM process, the calculated coefficients are used to eliminate outliers. Since most cars have a similar width-to-length ratio, the car-labeled objects are treated as vehicles and the rest as non-vehicles. Furthermore, vehicles and non-vehicles were found to be difficult to separate linearly using the 13 Fourier coefficients. To better train the SVM, the radial basis function (RBF) kernel is used:

| (34) |

where σ controls the width of the kernel and thus the complexity of the decision boundary in the feature space.
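The role of the kernel can be illustrated with a small Python sketch. It assumes the common RBF form K(x, y) = exp(−‖x − y‖² / (2σ²)) and a kernel decision function of the form sign(Σ α_i t_i K(x_i, x) + b); the support vectors, multipliers and bias used in the usage example are made-up values, not a trained model.

```python
import math


def rbf_kernel(x, y, sigma=1.0):
    """Radial basis function kernel exp(-|x - y|^2 / (2 * sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def decision(x, support_vectors, alphas, labels, bias, sigma=1.0):
    """Classify x as +1 (vehicle) or -1 (non-vehicle) from a kernel expansion."""
    score = sum(a * t * rbf_kernel(sv, x, sigma)
                for sv, a, t in zip(support_vectors, alphas, labels))
    return 1 if score + bias >= 0.0 else -1


# Hypothetical two-vector model in a 2D feature space.
SUPPORT = [(0.0, 0.0), (4.0, 0.0)]
ALPHAS = [1.0, 1.0]
LABELS = [1, -1]
```

A small σ makes the kernel decay quickly, so each support vector influences only its immediate neighborhood and the decision boundary can become very irregular; a large σ yields a smoother boundary.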

4. Experiment Evaluation

To evaluate the approach quantitatively and qualitatively, the KITTI dataset is used and the results are compared with the state-of-the-art [24]. The proposed approach is implemented in MATLAB on a machine with 2 cores at 3 GHz, and the average processing time is 5 s per frame.

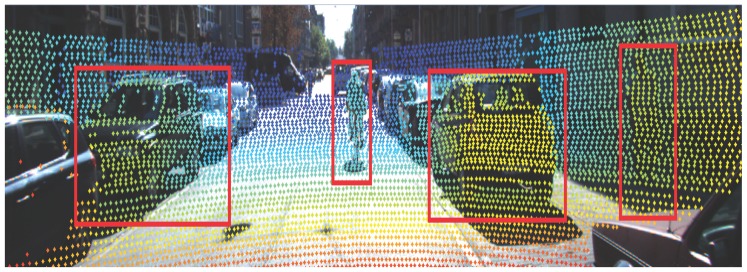

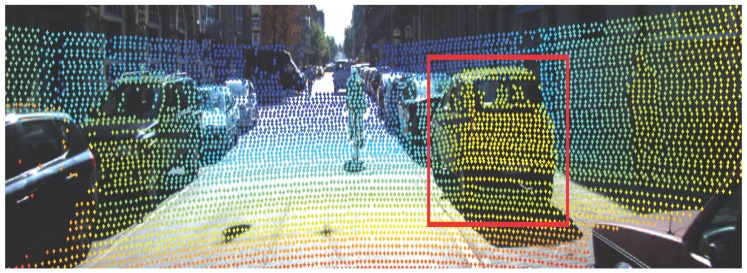

Figure 6, Figure 7 and Figure 8 demonstrate the detection performance based on the proposed approach in one scenario. In Figure 6, a bicycle is fully observed in the middle of the road. On the left side, a parking car is observed with partial occlusion. On the right side, both car and pedestrian are fully observed. As illustrated in Figure 7, potential objects are detected based on the RHM–ET–PHD filter. It is observed that the proposed approach extracts the potential objects based on the geometry of the road, where the birth model plays an important role. The extracted objects are drawn by boundary boxes calculated by the set-valued state (the center point is based on the position, and the width/height are based on the Fourier coefficients). Afterwards, the SVM is utilized to eliminate the non-vehicle objects. Figure 8 shows the results of the verification phase. Due to the occluded issues, it is observed that the left vehicle is also eliminated.

Figure 6.

Original data from image view.

Figure 7.

Result on hypothesis generation phase.

Figure 8.

Result on hypothesis verification phase.

Table 1 demonstrates the overall performance, in contrast to the state-of-the-art, in all scenarios from the KITTI dataset. Although there are also approaches using cameras, the evaluation focuses on the algorithms which only use LiDAR measurements.

Table 1. Performance of the vehicle detection approach compared with the state-of-the-art.

As shown in Table 1, the moderate, easy and hard categories denote the occlusion level of the vehicles: partly occluded, fully visible and difficult to see, respectively. Among the references, the proposed approach achieves high performance in the easy category and poor performance in the moderate and hard categories. In easy scenarios, all vehicles are fully observed and the corresponding reflections on the surfaces are uniformly distributed. Hence, the proposed approach can track and estimate the states successfully, and the final classification has high performance. In moderate and hard scenarios, the performance drops significantly in both the generation and verification processes. The PHD filter still detects the potential objects, but the calculated Fourier coefficients are strongly influenced by the measurements that are missing because of occlusion. The SVM classification, in turn, was trained mainly on Fourier coefficients calculated from visible measurements.

Nevertheless, compared with the overall performance in easy scenarios, the proposed approach still improves almost –.

In summary, the contributions are as follows: first and foremost, the proposed framework relies solely on LiDAR measurements for vehicle detection in environments with unknown data association. Furthermore, the Fourier coefficients are used for object classification for the first time and achieve high performance for fully visible vehicles.

5. Conclusions

Vehicle detection is important for developing driver assistance systems. To address the data association problem that arises with point cloud data, the Probability Hypothesis Density (PHD) filter is proposed in this paper. The proposed scheme uses contour information for classification. The evaluation results show high performance for fully visible vehicles compared with state-of-the-art techniques.

Future work will focus on improving the detection of occluded vehicles.

Acknowledgments

This work was supported by the German Research Foundation (DFG) and the Technische Universität München within the funding program Open Access Publishing.

Author Contributions

All authors have contributed to the manuscript. Feihu Zhang performed the experiments and analyzed the data. Alois Knoll provided helpful feedback on the experimental design.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- 1. Global Status Report on Road Safety 2013. Available online: http://www.who.int/violence_injury_prevention/road_safety_status/2013/en/ (accessed on 1 October 2013).

- 2. Leon L., Hirata R. Vehicle detection using mixture of deformable parts models: Static and dynamic camera; Proceedings of the 25th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI); Ouro Preto, Brazil. 22–25 August 2012; pp. 237–244.

- 3. Khammari A., Nashashibi F., Abramson Y., Laurgeau C. Vehicle detection combining gradient analysis and AdaBoost classification; Proceedings of the 2005 IEEE Intelligent Transportation Systems; Vienna, Austria. 13–15 September 2005; pp. 66–71.

- 4. Sun Z., Bebis G., Miller R. On-road vehicle detection using evolutionary Gabor filter optimization. IEEE Trans. Intell. Transp. Sys. 2005;6:125–137. doi: 10.1109/TITS.2005.848363.

- 5. Paragios N., Deriche R. Geodesic active contours and level sets for the detection and tracking of moving objects. IEEE Trans. Pattern Anal. Mach. Intell. 2000;22:266–280. doi: 10.1109/34.841758.

- 6. Sun Z., Bebis G., Miller R. Monocular precrash vehicle detection: Features and classifiers. IEEE Trans. Image Process. 2006;15:2019–2034. doi: 10.1109/tip.2006.877062.

- 7. Huang L., Barth M. Tightly-coupled LIDAR and computer vision integration for vehicle detection; Proceedings of the 2009 IEEE Intelligent Vehicles Symposium; Xi’an, China. 3–5 June 2009; pp. 604–609.

- 8. Behley J., Kersting K., Schulz D., Steinhage V., Cremers A. Learning to hash logistic regression for fast 3D scan point classification; Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); Taipei, Taiwan. 18–22 October 2010; pp. 5960–5965.

- 9. Li Y., Ruichek Y., Cappelle C. Extrinsic calibration between a stereoscopic system and a LIDAR with sensor noise models; Proceedings of the 2012 IEEE Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI); Hamburg, Germany. 13–15 September 2012; pp. 484–489.

- 10. Xiong X., Munoz D., Bagnell J., Hebert M. 3-D scene analysis via sequenced predictions over points and regions; Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA); Shanghai, China. 9–13 May 2011; pp. 2609–2616.

- 11. Li Y., Ruichek Y., Cappelle C. Optimal Extrinsic Calibration Between a Stereoscopic System and a LIDAR. IEEE Trans. Instrum. Meas. 2013;62:2258–2269. doi: 10.1109/TIM.2013.2258241.

- 12. Dominguez R., Onieva E., Alonso J., Villagra J., Gonzalez C. LIDAR based perception solution for autonomous vehicles; Proceedings of the 11th International Conference on Intelligent Systems Design and Applications (ISDA); Cordoba, Spain. 22–24 November 2011; pp. 790–795.

- 13. Zhang F., Clarke D., Knoll A. LiDAR based vehicle detection in urban environment; Proceedings of the 2014 International Conference on Multisensor Fusion and Information Integration for Intelligent Systems (MFI); Beijing, China. 28–29 September 2014; pp. 1–5.

- 14. Ioannou Y., Taati B., Harrap R., Greenspan M. Difference of normals as a multi-scale operator in unorganized point clouds; Proceedings of the 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission (3DIMPVT); Zurich, Switzerland. 13–15 October 2012; pp. 501–508.

- 15. Baum M., Hanebeck U. Shape tracking of extended objects and group targets with star-convex RHMs; Proceedings of the 14th International Conference on Information Fusion (FUSION); Chicago, IL, USA. 5–8 July 2011; pp. 1–8.

- 16. Mahler R. Multitarget Bayes filtering via first-order multitarget moments. IEEE Trans. Aerosp. Electron. Syst. 2003;39:1152–1178. doi: 10.1109/TAES.2003.1261119.

- 17. Mahler R. Approximate multisensor CPHD and PHD filters; Proceedings of the 13th Conference on Information Fusion (FUSION); Edinburgh, UK. 26–29 July 2010; pp. 1–8.

- 18. Vo B.T., Vo B.N., Hoseinnezhad R., Mahler R. Robust multi-Bernoulli filtering. IEEE J. Sel. Top. Signal Process. 2013;7:399–409. doi: 10.1109/JSTSP.2013.2252325.

- 19. Mahler R. Multitarget Bayes filtering via first-order multitarget moments. IEEE Trans. Aerosp. Electron. Syst. 2003;39:1152–1178. doi: 10.1109/TAES.2003.1261119.

- 20. Vo B.N., Ma W.K. The Gaussian Mixture Probability Hypothesis Density Filter. IEEE Trans. Signal Process. 2006;54:4091–4104. doi: 10.1109/TSP.2006.881190.

- 21. Mahler R. PHD filters for nonstandard targets, I: Extended targets; Proceedings of the 12th International Conference on Information Fusion, FUSION ’09; Seattle, WA, USA. 6–9 July 2009; pp. 915–921.

- 22. Han Y., Zhu H., Han C. A Gaussian-mixture PHD filter based on random hypersurface model for multiple extended targets; Proceedings of the 16th International Conference on Information Fusion (FUSION); Istanbul, Turkey. 9–12 July 2013; pp. 1752–1759.

- 23. Cortes C., Vapnik V. Support-Vector Networks. Mach. Learn. 1995;20:273–297. doi: 10.1007/BF00994018.

- 24. Geiger A., Lenz P., Urtasun R. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite; Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Providence, RI, USA. 16–21 June 2012; pp. 3354–3361.

- 25. Wang D.Z., Posner I. Voting for voting in online point cloud object detection; Proceedings of the Robotics: Science and Systems; Rome, Italy. 13–17 July 2015.

- 26. Plotkin L. Bachelor’s Thesis. Karlsruhe Institute of Technology; Karlsruhe, Germany: Mar, 2015. PyDriver: Entwicklung Eines Frameworks für Räumliche Detektion und Klassifikation von Objekten in Fahrzeugumgebung.

- 27. Behley J., Steinhage V., Cremers A. Laser-based segment classification using a mixture of bag-of-words; Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); Tokyo, Japan. 3–7 November 2013; pp. 4195–4200.