Abstract

Evidence implicates ventral parieto-premotor cortices in representing the goal of grasping independent of the movements or effectors involved [Umilta, M. A., Escola, L., Intskirveli, I., Grammont, F., Rochat, M., Caruana, F., et al. When pliers become fingers in the monkey motor system. Proceedings of the National Academy of Sciences, U.S.A., 105, 2209–2213, 2008; Tunik, E., Frey, S. H., & Grafton, S. T. Virtual lesions of the anterior intraparietal area disrupt goal-dependent on-line adjustments of grasp. Nature Neuroscience, 8, 505–511, 2005]. Modern technologies that enable arbitrary causal relationships between hand movements and tool actions provide a strong test of this hypothesis. We capitalized on this unique opportunity by recording activity with fMRI during tasks in which healthy adults performed goal-directed reach and grasp actions manually or by depressing buttons to initiate these same behaviors in a remotely located robotic arm (arbitrary causal relationship). As shown previously [Binkofski, F., Dohle, C., Posse, S., Stephan, K. M., Hefter, H., Seitz, R. J., et al. Human anterior intraparietal area subserves prehension: A combined lesion and functional MRI activation study. Neurology, 50, 1253–1259, 1998], we detected greater activity in the vicinity of the anterior intraparietal sulcus (aIPS) during manual grasp versus reach. In contrast to prior studies involving tools controlled by nonarbitrarily related hand movements [Gallivan, J. P., McLean, D. A., Valyear, K. F., & Culham, J. C. Decoding the neural mechanisms of human tool use. Elife, 2, e00425, 2013; Jacobs, S., Danielmeier, C., & Frey, S. H. Human anterior intraparietal and ventral premotor cortices support representations of grasping with the hand or a novel tool. Journal of Cognitive Neuroscience, 22, 2594–2608, 2010], however, responses within the aIPS and premotor cortex exhibited no evidence of selectivity for grasp when participants employed the robot. 
Instead, these regions showed comparable increases in activity during both the reach and grasp conditions. Despite equivalent sensorimotor demands, the right cerebellar hemisphere displayed greater activity when participants initiated the robot’s actions versus when they pressed a button known to be nonfunctional and watched the very same actions undertaken autonomously. This supports the hypothesis that the cerebellum predicts the forthcoming sensory consequences of volitional actions [Blakemore, S. J., Frith, C. D., & Wolpert, D. M. The cerebellum is involved in predicting the sensory consequences of action. NeuroReport, 12, 1879–1884, 2001]. We conclude that grasp-selective responses in the human aIPS and premotor cortex depend on the existence of nonarbitrary causal relationships between hand movements and end-effector actions.

INTRODUCTION

Technology fundamentally alters the relationship between our actions and their consequences in the world by enabling goals to be accomplished through otherwise ineffective behaviors. For instance, distant objects may become accessible when a plier is used to extend the range of our reach-to-grasp. Remarkably, with training, neurons in monkey ventral premotor cortex (vPMC; area F5) that code grasping objects with the hands come to represent the same action undertaken with this non-biological effector. Critically, some of these units respond similarly regardless of whether the plier is conventional or reversed, such that opening the hand causes the tool’s jaws to close on the object (Umilta et al., 2008). This result is considered strong evidence for the hypothesis that these premotor neurons are coding the goal of grasping independent of the specific movements or effectors involved in its realization (Rizzolatti et al., 1988). Response properties consistent with goal representation have also been reported within hand manipulation neurons of macaque inferior parietal cortex, specifically in area PFG (Bonini et al., 2011) and the anterior intraparietal region (Gardner et al., 2007).

Cortex located at the junction of the human postcentral and anterior intraparietal sulci (aIPS; Frey, Vinton, Norlund, & Grafton, 2005; Culham et al., 2003; Binkofski et al., 1998) and vPMC (Binkofski et al., 1999) exhibits grasp-selective activity resembling that of hand manipulation neurons. Importantly, responses in the aIPS (Hamilton & Grafton, 2006; Tunik, Frey, & Grafton, 2005) and vPMC (Hamilton & Grafton, 2006; Johnson-Frey et al., 2003) are independent of the specific sensorimotor demands involved in grasp execution. The interpretation of these effects as evidence for goal-dependent action representations is bolstered by data indicating that both regions exhibit increased activity when planning grasping actions that involve use of the hands or of a handheld tool (Gallivan, McLean, Valyear, & Culham, 2013; Martin, Jacobs, & Frey, 2011; Jacobs, Danielmeier, & Frey, 2010).

A potential confound in both macaque and human tool use studies is the existence of a nonarbitrary causal relationship between movements of the hand and the actions of the handheld instrument. By its very nature, a handheld tool must be grasped, and extending or retracting the hand has the same effect on the end-effector of the tool. The same is true for wrist adduction/abduction and forearm pronation/supination. Although transformed by the mechanical properties of the tools (Arbib, Bonaiuto, Jacobs, & Frey, 2009), finger extension or flexion still served to open or close the tools’ end-effectors in these studies (Gallivan et al., 2013; Martin et al., 2011; Jacobs et al., 2010; Umilta et al., 2008). Rather than abstract goal-dependent representations, this nonarbitrary causal relationship between hand movements and tool actions may explain aIPS and vPMC involvement in grasping with the hands or with a tool.

Modern technology enables previously arbitrary movements to be harnessed as control signals for the actions of a wide variety of tools and devices in peripersonal, extrapersonal, and even extraterrestrial space. It is possible, for example, to learn to reach for and grasp objects with a robotic arm controlled through the press of a button, manipulation of a joystick, or even directly through brain activity (Hochberg et al., 2012; Schwartz, Cui, Weber, & Moran, 2006; Andersen, Burdick, Musallam, Pesaran, & Cham, 2004; Wolpaw & McFarland, 2004; Carmena et al., 2003; Nicolelis, 2001). Conversely, these same inputs can be used to control a diversity of tools and actions. The arbitrary causal relationship between our movements and tools’ actions enabled by these technologies provides an unprecedented chance to test the hypothesis that the aIPS and/or vPMC are coding the goal of grasping actions independent of specific sensorimotor demands.

We capitalize on this opportunity by recording whole-brain activity with fMRI during two tasks in which the same participants planned and then performed object-oriented grasp or reach actions. To functionally localize grasp-related areas within the aIPS and potentially also in the premotor cortex, participants undertook a manual task (MT) wherein these behaviors were performed naturally, with the hand in peripersonal space. In the robot task (RT), we eliminate the nonarbitrary causal relationship between hand and tool by requiring participants to use individual fingers to initiate grasp or reach actions of a robotic arm located in remote extrapersonal space through button presses. If the human aIPS and vPMC represent the goal of grasping independent of the demands associated with sensorimotor control, then we expect these areas to exhibit similar grasp-selective responses in both the MT and RT. Alternatively, if the selective involvement of these areas in grasping depends on a nonarbitrary causal relationship between hand movements and tool actions, then we anticipate no differences in activity during grasping versus reaching with the robotic arm. Findings from a recent electroencephalography study suggest that aIPS and premotor activity depends on participants’ perceiving that they are causing subsequently observed grasping actions (Bozzacchi, Giusti, Pitzalis, Spinelli, & Di Russo, 2012). If so, then we predict greater aIPS and premotor activity when participants press a button to launch the robot’s actions and observe the ensuing consequences versus when they press a button known to be nonfunctional and watch the very same actions undertaken autonomously by the robot.

METHODS

Eighteen volunteers (mean age = 24.4 years, range = 18.7–39.5, six men) participated in the study, which was approved by the University of Oregon institutional review board. All participants were right-handed as measured by the Edinburgh Handedness Inventory (Oldfield, 1971) and had normal or corrected-to-normal vision. Two participants completed only the MT.

Stimulus presentation and response recording for the MT were controlled by custom LabView software (www.ni.com/labview/), whereas the RT used Presentation (www.neurobs.com/). Trial orders in both experiments were optimized with Optseq2 (surfer.nmr.mgh.harvard.edu/optseq/). A central white fixation point was visible throughout the entirety of both experiments, and participants were asked to maintain fixation. Compliance was monitored by the experimenters through the live video feed from an MR-compatible eye-tracking camera (www.asleyetracking.com/Site/). If the participant was not fixating or showed signs of drowsiness, verbal feedback was provided as needed by the experimenter between runs.

The MT and RT were undertaken within a single session, and the order of administration was counterbalanced across participants. In both tasks, the workspace consisted of a 30.5 × 61 cm board and a 50-mm diameter, circular opening. Likewise, the target objects for reach or grasp movements were red or white 25 × 25 × 50 mm wooden blocks. In both the MT and RT, the workspace was captured by a digital video camera and projected onto a screen located at the rear of the scanner bore. This screen was viewed through a mirror mounted to the head coil. To ensure that participants were attending similarly to actions in both tasks, a red block replaced the standard white block on approximately 25% of the total number of trials. Participants were instructed to keep count of the number of red blocks used in the Grasp condition only and to verbally report this value after the end of each run. Because of an error, block counts were only recorded for the RT.

Manual Task

The workspace was placed across the participant’s lap. A five-button response pad was positioned at a comfortable distance on the midsagittal plane. Participants viewed a live video of the workspace, captured from bird’s eye view, creating a perspective similar to what they would experience if seated and looking down at their laps. The identity of the premovement cues and the timing of the premovement phase were identical across tasks. However, the execution phases differed in length. During piloting, movements performed at the speed of the MT appeared extremely fast when replicated with the robot, so the RT execution phase was lengthened.

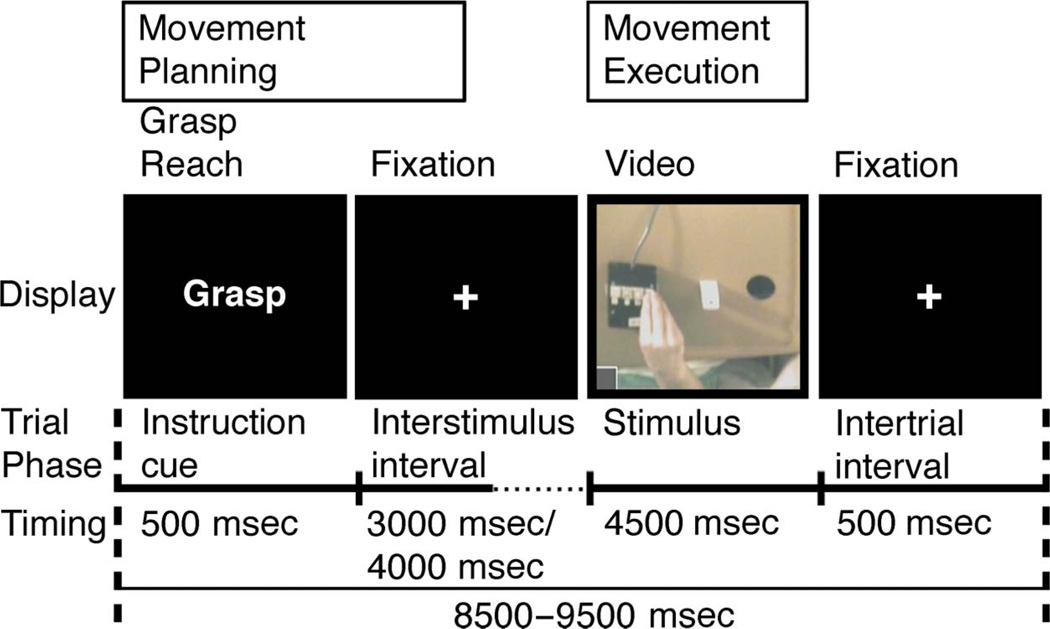

At the onset of each trial, a 500-msec visual instructional word cue (“Reach” or “Grasp”) indicated which movement would be involved. This was followed by a variable 3000- or 4000-msec delay interval. The premovement phase consisted of the 3500-msec period starting with the onset of the instructional cue and included the subsequent 3000-msec delay. Next, a 4500-msec execution phase began with the onset of the live video stream of the workspace. This signaled participants to initiate their movements and included the subsequent 4500-msec movement period (Figure 1).

Figure 1.

Common workspace and the MT. Schematic of the workspace used in both the MT and RT. This board was placed on the participant’s lap during both tasks. In the MT, participants manually reached or grasped objects placed on the workspace. However, during the RT, only the response pad was used. MT: Each trial began with a 500-msec visual instructional cue (“Grasp” or “Reach”). This was followed by a variable duration delay period (3000–4000 msec). For analysis purposes, the premovement phase was defined as the first 3500 msec of the trial, which included the 500-msec instructional cue and the first 3000 msec of the following delay period. Onset of the live video display of the workspace served as the movement cue. The execution phase consisted of the entire 4500-msec period during which participants received visual feedback. See text for details.

In the execution phase, visual feedback of the hands was provided by live video feed. In the Reach condition, the fingertips remained together throughout the movements. On each trial, participants released the start button, reached forward and touched the 6-mm radius circle located on the top of the target block with the fingertips, moved the hand laterally to the circular opening, returned to the start position, and depressed the button. In the Grasp condition, participants released the start button, reached toward and grasped the target object with the fingertips, transported it laterally, and dropped it into the circular opening before returning to the start location and depressing the button.

The session consisted of 90 trials, 30 each of grasp, reach, and null conditions. Null conditions consisted of the fixation cross against a black screen and served both as a rest condition and induced temporal variation necessary for deconvolution of the event-related hemodynamic responses (Buckner, 1998). For each condition, the average trial length was 9 sec. The session was approximately 14 min in length including 15 sec of fixation at the beginning of the session to orient the participant and 15 sec of fixation at the end to capture the delayed hemodynamic response from the final trial. Trials were presented in two optimal orders (see above) that were administered in counterbalanced fashion across participants. Participants practiced the MT for approximately 5 min during the acquisition of the MRI structural scan.
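The session length reported above follows directly from the trial counts and fixation periods. The short sketch below simply reproduces that arithmetic; the variable and function names are illustrative and are not taken from the study's software.

```python
# Session-length check for the manual task, using values stated in the text.
N_TRIALS = 90           # 30 grasp + 30 reach + 30 null trials
MEAN_TRIAL_SEC = 9.0    # average trial length
LEAD_IN_SEC = 15.0      # fixation at the start of the session
LEAD_OUT_SEC = 15.0     # fixation at the end of the session


def session_duration_sec(n_trials, mean_trial_sec, lead_in_sec, lead_out_sec):
    """Total session time: all trials plus the bracketing fixation periods."""
    return n_trials * mean_trial_sec + lead_in_sec + lead_out_sec


total_sec = session_duration_sec(N_TRIALS, MEAN_TRIAL_SEC, LEAD_IN_SEC, LEAD_OUT_SEC)
total_min = total_sec / 60.0
print(f"{total_sec:.0f} s = {total_min:.1f} min")
```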

Robot Task

The procedure was similar to that of the MT (Figure 1).

Training Session

On the day before fMRI testing, participants were shown the robotic arm and introduced to controlling reaching and grasping actions through button presses in our behavioral laboratory. They were told that the next day’s fMRI task would involve controlling the robot remotely. They then practiced controlling the robot live in the following four experimental conditions: (1) Reach: Pressing the button beneath the middle finger caused the robot to perform the same actions as in the MT Reach condition, but with the “fingers” of the tool’s end-effector closed and simply contacting the top of the wooden block and then moving laterally to the circular opening (Figure 2). (2) Grasp: Pressing the button under the index finger caused the robot to pick up the wooden block, transport it laterally, and place it in the circular opening (Figure 2), similar to the MT Grasp condition. (3) Press: Pressing the button beneath the ring finger was ineffective; the blank screen and fixation cross remained visible for the duration of the trial. This condition was a control for activity related to the motor response. (4) Watch: No buttons were pressed, and participants observed the robot performing either the Grasp or Reach actions autonomously. Counterbalanced trial orders in the training session differed from those used during testing on the following day.

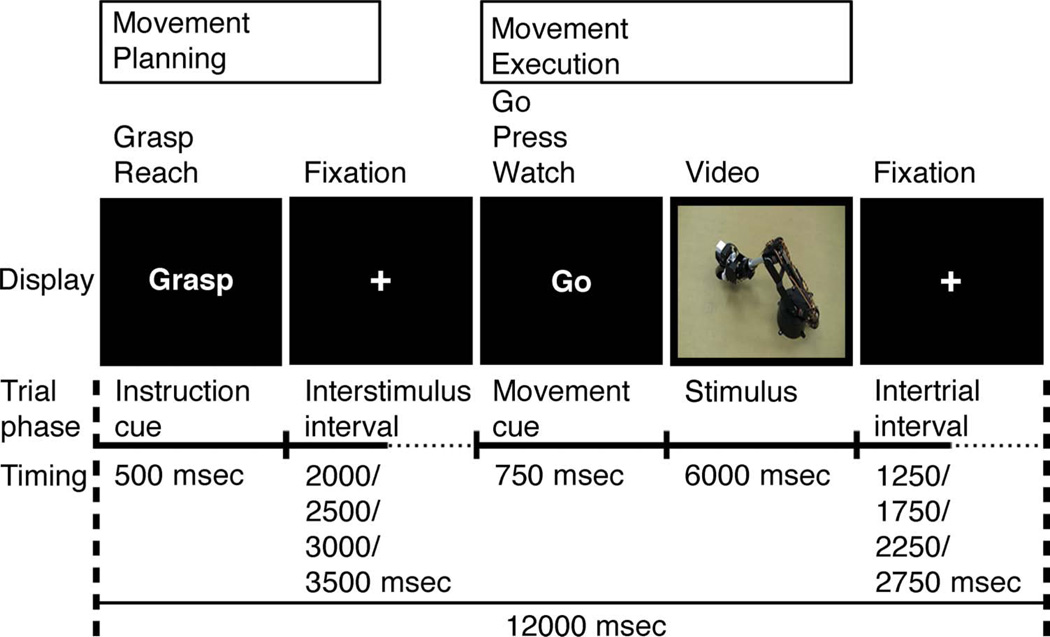

Figure 2.

Robot Task. As in the MT, every trial began with a 500-msec visual instructional cue (“Grasp” or “Reach”). This was followed by a variable duration delay period lasting 2000, 2500, 3000, or 3500 msec, during which participants viewed a black screen with a small white fixation cross in the center (visible throughout the entirety of each run). The premovement phase included the 500-msec instructional cue and the first 2000 msec of the following delay period. Onset of the visual display served as the movement cue. The execution phase consisted of the 750-msec movement cue (“Go”, “Press”, or “Watch”) and the subsequent 6000 msec. See text for details.

fMRI Testing Session

Unbeknownst to participants, during the fMRI experiment, they viewed prerecorded videos of the robot movements rather than an actual live video feed of the robot. In all other respects, the fMRI testing session was identical to the training session. To reinforce the impression of live video, reach and grasp actions of the robot were recorded from four different camera angles to create a total of 16 different videos of the robotic arm: 4 perspectives × 2 movement types (reach, grasp) × 2 block colors (red, white).

Each 12-sec trial began with a 500-msec visual instructional cue consisting of either the word “Grasp” or “Reach.” The instructional cue was followed by a variable duration delay period of 2000, 2500, 3000, or 3500 msec during which time participants were instructed to prepare to press the associated button. During the delay period, the omnipresent white fixation point was displayed against a black background (Figure 2). The 2500-msec premovement phase began with the onset of the instructional cue and concluded at the end of the shortest (2000 msec) delay interval. At the end of the delay interval, a movement cue appeared consisting of either the word “Go”, “Press”, or “Watch.” The 6750-msec execution phase began with the onset of the movement cue and concluded after the end of the video clip in the Go or Watch conditions (or fixation period in the case of the Press condition). After a “Go” movement cue, the participant was instructed to push either the “Grasp” or “Reach” button, depending on the identity of the preceding instructional cue. If issued within 750 msec of movement cue onset, a correct button press response would launch a video of the robot either grasping or reaching as described above. Likewise, issuing a correct “Press” response would result in a blank screen with central fixation cross through the end of the trial. If the participant did not press a button within 750 msec of the movement cue, feedback “too slow” was displayed for 6 sec. For the “Watch” movement cue, the participant was instructed to refrain from issuing any response and instead merely watch the robot autonomously perform the reach or grasp actions as indicated by the preceding instructional cue. To reinforce the sense of control, pressing an incorrect button in the training and experimental sessions resulted in observing the robot perform the corresponding, incorrect action.
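The event timing within an RT trial can be laid out as a simple timeline. The sketch below is illustrative only: the function and label names are hypothetical, and it does not attempt to account for the remainder of each 12-sec trial, which the text does not itemize.

```python
# Timeline of a single robot-task trial, built from durations stated in the text.
CUE_MS = 500                            # instructional cue ("Grasp" or "Reach")
DELAYS_MS = (2000, 2500, 3000, 3500)    # variable delay interval
MOVE_CUE_MS = 750                       # movement cue ("Go", "Press", or "Watch")
VIDEO_MS = 6000                         # video clip (Go/Watch) or fixation (Press)


def trial_events(delay_ms):
    """Return (label, onset_ms, duration_ms) tuples for one trial."""
    if delay_ms not in DELAYS_MS:
        raise ValueError(f"unsupported delay: {delay_ms}")
    move_onset = CUE_MS + delay_ms
    return [
        ("instruction_cue", 0, CUE_MS),
        ("delay", CUE_MS, delay_ms),
        ("movement_cue", move_onset, MOVE_CUE_MS),
        ("video_or_fixation", move_onset + MOVE_CUE_MS, VIDEO_MS),
    ]


# Analysis windows as defined in the text.
PREMOVEMENT_MS = CUE_MS + min(DELAYS_MS)   # cue plus the shortest delay
EXECUTION_MS = MOVE_CUE_MS + VIDEO_MS      # movement cue plus video/fixation

for label, onset, duration in trial_events(3500):
    print(f"{label:18s} onset={onset:5d} ms  duration={duration:5d} ms")
```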

The two instruction cues (Grasp, Reach) and the three movement cues (Go, Press, Watch) defined six unique trial types. The experiment consisted of eight predefined runs presented in counterbalanced order across participants. Every run contained 29 trials in optimally counterbalanced order (12 with the instructional cue reach [4 trials followed by Go, 4 by Watch, and 4 by Press], 12 grasp [4 trials followed by Go, 4 by Watch, and 4 by Press], and 5 null [black screen with central fixation cross]; Figure 2).
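The factorial structure of a run can be enumerated in a few lines. This is a minimal sketch of the run composition only: the trial list it builds is unordered, whereas the actual presentation orders were optimized with Optseq2, and all names are illustrative.

```python
from collections import Counter
from itertools import product

INSTRUCTION_CUES = ("Grasp", "Reach")
MOVEMENT_CUES = ("Go", "Press", "Watch")
TRIALS_PER_TYPE = 4     # per instruction-by-movement combination, per run
NULL_TRIALS = 5         # black screen with central fixation cross

# The 2 x 3 crossing of cues yields the six unique trial types.
trial_types = list(product(INSTRUCTION_CUES, MOVEMENT_CUES))


def build_run():
    """Return the (unordered) list of trials making up one run."""
    run = [trial for trial in trial_types for _ in range(TRIALS_PER_TYPE)]
    run += [("Null", None)] * NULL_TRIALS
    return run


run = build_run()
counts = Counter(instruction for instruction, _ in run)
print(len(trial_types), len(run), dict(counts))
```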

On the day of the fMRI experiment, participants completed a single refresher run using a trial order from the previous day’s training session. At the beginning of each run, a 15-sec fixation screen was presented to allow the participant to become oriented, and a 15-sec fixation screen was shown at the end of each run to capture the BOLD response related to the last trial presented. The total time of each run of trials was 6:03.

MRI Procedure

All MRI scans were performed on a Siemens (Erlangen, Germany) 3T Allegra MRI scanner at the Robert and Beverly Lewis Center for Neuroimaging located at the University of Oregon. BOLD echo-planar images were collected using a T2*-weighted gradient-echo sequence, a standard birdcage radiofrequency coil, and the following parameters: repetition time = 2500 msec, echo time = 30 msec, flip angle = 80°, 64 × 64 voxel matrix, field of view = 200 mm, 42 contiguous axial slices acquired in interleaved order, thickness = 4 mm, in-plane resolution = 3.125 × 3.125 mm, and bandwidth = 2605 Hz/pixel. High-resolution T1-weighted structural images were also acquired using the 3-D MP-RAGE pulse sequence: repetition time = 2500 msec, echo time = 4.38 msec, inversion time = 1100 msec, flip angle = 8.0°, 256 × 256 voxel matrix, field of view = 256 mm, 176 contiguous axial slices, thickness = 1 mm, and in-plane resolution = 1 × 1 mm. DICOM image files were converted to NIFTI format using MRIConvert software (lcni.uoregon.edu/jolinda/MRIConvert/).
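The in-plane resolutions quoted above are the field of view divided by the matrix size, and the slab coverage follows from the slice count and thickness because the slices are contiguous. The sketch below checks those relationships; the function names are illustrative.

```python
def in_plane_resolution_mm(fov_mm, matrix_size):
    """Voxel edge length in the imaging plane: field of view / matrix size."""
    return fov_mm / matrix_size


def slab_coverage_mm(n_slices, thickness_mm):
    """Extent covered by contiguous slices (no inter-slice gap)."""
    return n_slices * thickness_mm


# Functional (EPI) acquisition.
epi_res = in_plane_resolution_mm(200.0, 64)
epi_coverage = slab_coverage_mm(42, 4.0)

# Structural (MP-RAGE) acquisition.
t1_res = in_plane_resolution_mm(256.0, 256)
t1_coverage = slab_coverage_mm(176, 1.0)

print(f"EPI: {epi_res} mm in-plane, {epi_coverage} mm coverage")
print(f"T1:  {t1_res} mm in-plane, {t1_coverage} mm coverage")
```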

fMRI Data Analyses

For both MT and RT, fMRI data processing was carried out using FEAT (FMRI Expert Analysis Tool) Version 4.14, part of FSL (FMRIB’s Software Library, www.fmrib.ox.ac.uk/fsl). The following prestatistics processing was applied: motion correction using MCFLIRT (Jenkinson, Bannister, Brady, & Smith, 2002); nonbrain removal using BET (Smith, 2002); spatial smoothing using a Gaussian kernel of FWHM 5 mm; high-pass temporal filtering (Gaussian-weighted least-squares straight line fitting, with sigma = 100 sec). Time-series statistical analysis was carried out using FILM with local autocorrelation correction (Woolrich, Ripley, Brady, & Smith, 2001). Delays and undershoots in the hemodynamic BOLD response were accounted for by convolving the model with a double-gamma basis function. Registration to high-resolution structural and/or standard space images (MNI-152) was carried out using FNIRT (Smith et al., 2004). Localization of cortical (Eickhoff et al., 2005) and cerebellar (Diedrichsen, Balsters, Flavell, Cussans, & Ramnani, 2009) responses was determined through use of probabilistic atlases in FSLview and visual inspection. For surface visualization, data were mapped to a 3-D brain using CARET’s Population-Average, Landmark- and Surface-based atlas using the Average Fiducial Mapping algorithm (Van Essen, 2005).
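To illustrate what convolving the model with a double-gamma function does, the sketch below builds a boxcar for a single 4500-msec execution phase and convolves it with a generic double-gamma response. It is not FSL's implementation: the shape parameters (6 and 16, with an undershoot ratio of 1/6) are commonly used textbook values assumed here, and the 0.5-sec sampling grid and 5-sec event onset are arbitrary choices for the example.

```python
import math

DT = 0.5  # sampling step in seconds (assumed for this illustration)


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1.0 / 6.0):
    """Positive response minus a smaller, later undershoot (assumed parameters)."""
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)


def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of the signal."""
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += s * k
    return out


n_samples = int(40 / DT)
# Boxcar: one 4.5-sec execution phase starting 5 sec into the window.
boxcar = [1.0 if 5.0 <= i * DT < 9.5 else 0.0 for i in range(n_samples)]
hrf = [double_gamma_hrf(i * DT) * DT for i in range(int(32 / DT))]

predicted = convolve(boxcar, hrf)
peak_time = predicted.index(max(predicted)) * DT
print(f"predicted response peaks about {peak_time} s into the window")
```

The predicted response peaks several seconds after the event and dips below baseline afterwards, which is the delay and undershoot the model is meant to capture.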

Manual Task Analyses

The experimental conditions for each run were modeled separately at the first level of analysis for each individual participant. Five explanatory (i.e., predictor) variables (EVs) were modeled along with their temporal derivatives. Four EVs coded the experimental conditions, Phase (Plan, Execute), and movement Type (Reach, Grasp). A fifth EV coded the 9000-msec null (resting baseline) trials. Orthogonal contrasts (one-tailed t tests) were used to test separately for differences between combinations of the four experimental conditions and between combinations of the four experimental conditions and resting baseline. The resulting first-level contrasts of parameter estimates (COPEs) then served as input to higher-level analyses carried out using FLAME Stage 1 (Woolrich, Behrens, Beckmann, Jenkinson, & Smith, 2004; Beckmann, Jenkinson, & Smith, 2003) to model and estimate random-effects components of mixed-effects variance. Z (Gaussianized T/F) statistic images were thresholded using clusters determined by Z > 2.3 and a (corrected) cluster significance threshold of p = .05 (Worsley, 2001).
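For orientation, the cluster-forming threshold of Z > 2.3 can be translated into an uncorrected one-tailed voxelwise probability under a standard normal distribution. The sketch below shows only that conversion; it does not reproduce the cluster-level correction (p = .05) applied afterwards.

```python
from statistics import NormalDist

Z_THRESHOLD = 2.3  # cluster-forming threshold stated in the text


def one_tailed_p(z):
    """Upper-tail probability of a standard normal variate exceeding z."""
    return 1.0 - NormalDist().cdf(z)


p_voxel = one_tailed_p(Z_THRESHOLD)
print(f"Z > {Z_THRESHOLD} corresponds to uncorrected one-tailed p < {p_voxel:.4f}")
```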

First, a whole-brain analysis was undertaken to identify the cerebral areas that responded significantly to the experimental conditions when compared with resting baseline at the group level. The first-level COPEs were averaged across participants (second level). Second, to test for the main effects of Phase and Type and for the interaction between these two factors, a standard 2 (Phase: plan, execute) × 2 (Type: reach, grasp) repeated-measures ANOVA (F tests) was carried out on first-level COPEs. The sensitivity of this analysis was increased by restricting it to only those voxels that showed a significant increase in activity in at least one of the four experimental conditions compared with resting baseline at the group level in the whole-brain analysis (Z > 2.3, corrected cluster significance threshold of p = .05).
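The main effects and interaction in a 2 × 2 design can be written as orthogonal contrast weights over the four condition estimates. The sketch below is a generic illustration, not the authors' FSL design files: the condition ordering and the example estimates are hypothetical, and the study tested these effects with F tests rather than the signed contrasts shown here.

```python
# Hypothetical ordering of the four condition estimates.
CONDITIONS = ("plan_reach", "plan_grasp", "execute_reach", "execute_grasp")

CONTRASTS = {
    "phase (execute > plan)": (-1, -1, 1, 1),
    "type (grasp > reach)": (-1, 1, -1, 1),
    "phase x type": (1, -1, -1, 1),
}


def dot(a, b):
    """Inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def apply_contrast(weights, estimates):
    """Weighted sum of condition estimates for one contrast."""
    return dot(weights, estimates)


# Made-up estimates, for illustration only: a grasp advantage that
# appears during execution but not during planning.
example_estimates = (0.2, 0.2, 0.5, 0.9)
for name, weights in CONTRASTS.items():
    print(f"{name:24s} {apply_contrast(weights, example_estimates):+.2f}")
```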

Robot Task Analyses

Trials were excluded from the fMRI analysis if participants made any of the following errors: pressed a button when none was expected, pressed an incorrect button or more than one button, or pressed the correct button <200 msec or >750 msec from the onset of the movement cue. The preprocessing and data modeling steps were identical to those described earlier for the MT. The execution phase EVs included the 750 msec that the movement cue was presented and the subsequent 6000 msec of either stimulus video (in the Watch or Go conditions) or fixation point (in the Press condition). A ninth EV coded the 12,000-msec null trials that were used as resting baseline.
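The exclusion criteria can be expressed as a single validity check per trial. The sketch below is an illustration rather than the study's scoring code: the button labels follow the finger assignments described for the training session, the data layout is hypothetical, and treating a missing response on Go or Press trials as an error is an assumption.

```python
MIN_LATENCY_MS = 200
MAX_LATENCY_MS = 750

# Expected button for each (instruction cue, movement cue) pair.
EXPECTED_BUTTON = {
    ("Grasp", "Go"): "index",
    ("Reach", "Go"): "middle",
    ("Grasp", "Press"): "ring",
    ("Reach", "Press"): "ring",
    ("Grasp", "Watch"): None,   # no response expected
    ("Reach", "Watch"): None,
}


def is_valid_trial(instruction, movement_cue, presses):
    """Return True if the trial should be kept for analysis.

    presses: list of (button, latency_ms) tuples, with latency measured
    from movement-cue onset (hypothetical data layout).
    """
    expected = EXPECTED_BUTTON[(instruction, movement_cue)]
    if expected is None:
        # Watch trials: any press is an error.
        return len(presses) == 0
    if len(presses) != 1:
        # More than one press, or (assumed) no press at all.
        return False
    button, latency_ms = presses[0]
    return button == expected and MIN_LATENCY_MS <= latency_ms <= MAX_LATENCY_MS


print(is_valid_trial("Grasp", "Go", [("index", 430)]))
```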

ROI Analysis

To functionally define the aIPS, we compared activation for the manual grasp versus reach conditions of the MT (Frey et al., 2005; Binkofski et al., 1998). A 5-mm radius sphere was centered on the x, y, z coordinates of the group mean peak activation located at the intersection of the left IPS and postcentral sulcus. Mean percent signal change (PSC) for each condition of the RT was then computed using FSL’s Featquery across significantly activated voxels for the contrast of grasp versus reach conditions in the MT that were located within the boundaries of the sphere. This was done separately for each participant and for the planning and execution phases of the RT. Mean PSC was analyzed using a 2 (Instruction cue: Grasp, Reach) × 3 (Movement cue: Go, Press, Watch) repeated-measures ANOVA.
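The logic of the ROI definition, a sphere around the peak restricted to voxels significant in the localizer contrast, can be sketched as follows. This is not Featquery's implementation. The 2-mm isotropic grid is an assumption made for the example, the peak coordinate is the aIPS peak reported in the Results, and the significance set and PSC values are placeholders.

```python
from itertools import product

PEAK_MM = (-54, -30, 48)   # aIPS peak (MNI) reported in the Results
RADIUS_MM = 5.0
VOXEL_MM = 2.0             # assumed isotropic grid spacing


def sphere_voxels(center_mm, radius_mm, voxel_mm):
    """Grid points whose centers lie within radius_mm of center_mm."""
    reach = int(radius_mm // voxel_mm)
    voxels = []
    for i, j, k in product(range(-reach, reach + 1), repeat=3):
        dx, dy, dz = i * voxel_mm, j * voxel_mm, k * voxel_mm
        if dx * dx + dy * dy + dz * dz <= radius_mm ** 2:
            voxels.append((center_mm[0] + dx, center_mm[1] + dy, center_mm[2] + dz))
    return voxels


def mean_psc(psc_by_voxel, roi_voxels, significant_voxels):
    """Average PSC over ROI voxels that were significant in the localizer."""
    selected = [v for v in roi_voxels if v in significant_voxels]
    if not selected:
        raise ValueError("no significant voxels inside the ROI")
    return sum(psc_by_voxel[v] for v in selected) / len(selected)


roi = sphere_voxels(PEAK_MM, RADIUS_MM, VOXEL_MM)
print(f"{len(roi)} grid points fall inside the sphere")

# Placeholder inputs, for illustration only.
significant = set(roi[:2])
psc = {roi[0]: 0.2, roi[1]: 0.4}
print(f"mean PSC = {mean_psc(psc, roi, significant):.2f}")
```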

RESULTS

Manual Task

All trials were completed within the time limits, as indexed by button release at movement initiation and button press when the hand returned to the starting location. Video of the movements was reviewed, and a total of 16 trials across participants were removed before analysis because the grasp or reach actions were incorrectly executed.

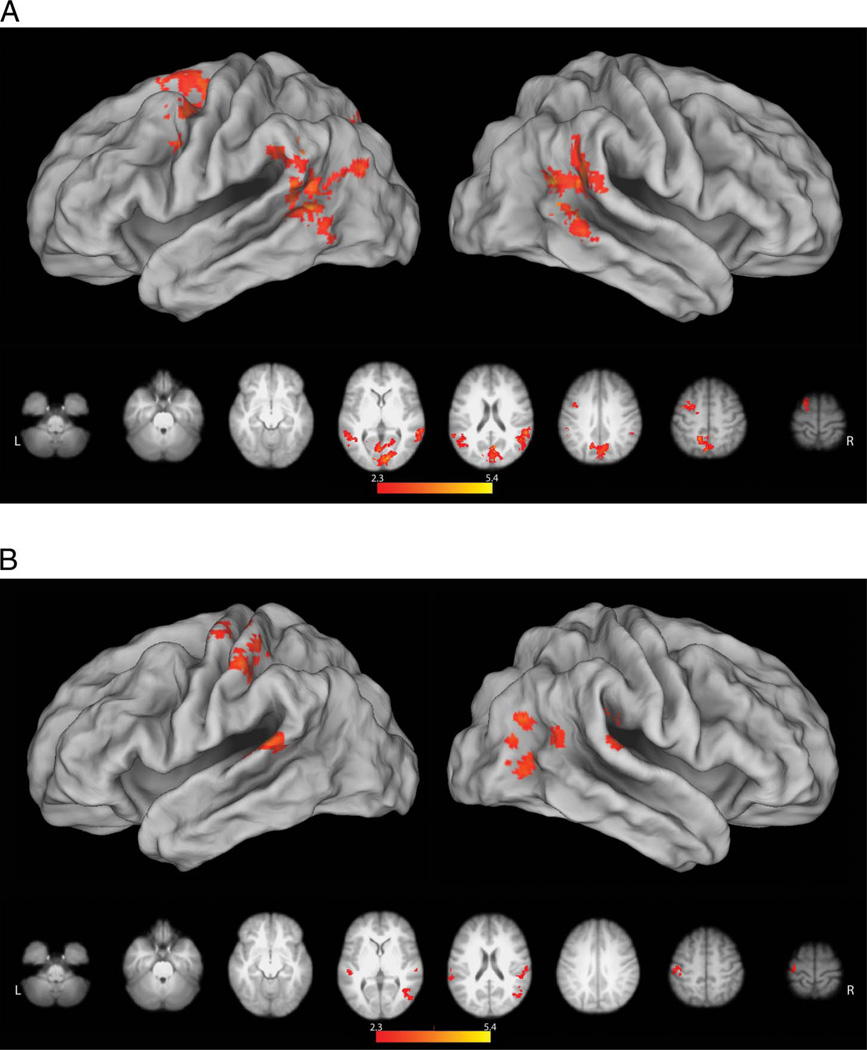

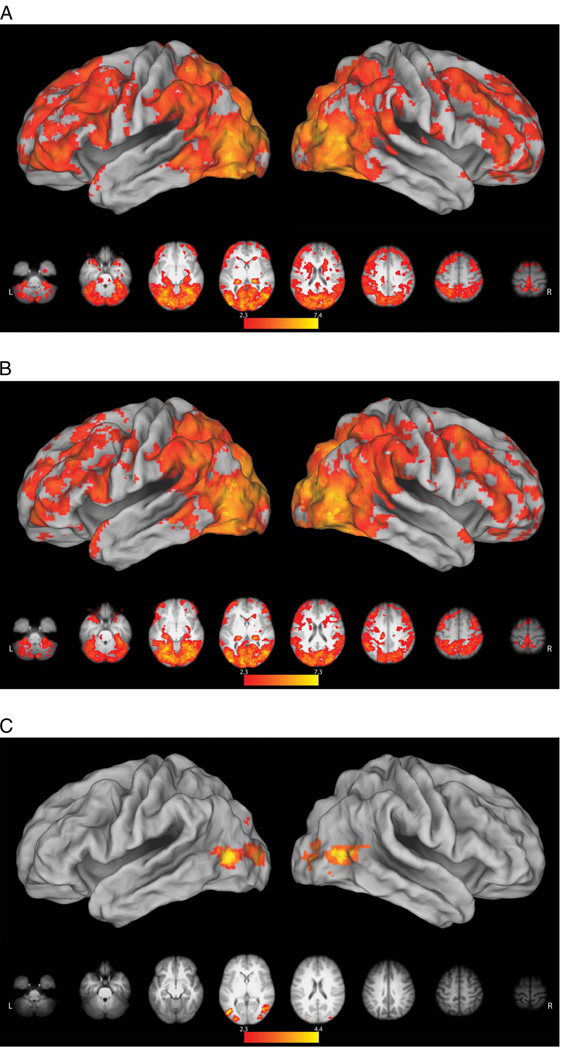

Premovement Phase

A direct comparison between reach and grasp conditions following the onset of the instructional word cues (“Reach” or “Grasp”) failed to detect any areas exhibiting significant differences, and the data were therefore pooled for further analysis. Relative to resting baseline, we detected significant premovement increases in activity within the occipital cortex, extending dorsally into the medial superior parietal lobule, as well as in left vPMC and dorsal premotor cortices (dPMC; Figure 3A). These premotor increases are consistent with prior work reporting similar responses in association with processing action verbs (Pulvermuller, Hauk, Nikulin, & Ilmoniemi, 2005; Hauk, Johnsrude, & Pulvermuller, 2004). Bilateral activity was detected at the temporoparietal junction (TPJ), a region considered to be part of the ventral attention network (Corbetta & Shulman, 2002; Shulman, d’Avossa, Tansy, & Corbetta, 2002) that may also play a role in the prediction of upcoming actions (Carter, Bowling, Reeck, & Huettel, 2012). In the left hemisphere, this cluster of increased activity extended into the caudal left middle temporal gyrus (cMTG), which shows increased activity when planning manual object-oriented actions (Marangon, Jacobs, & Frey, 2011; Martin et al., 2011; Jacobs et al., 2010).

Figure 3.

Planning and execution phase responses in the MT. Here and in subsequent figures, significant results (z > 2.3, p = .05, with clusterwise correction for multiple comparisons) were mapped to a 3-D brain using CARET’s Population-Average, Landmark- and Surface-based atlas using the Average Fiducial Mapping algorithm (Van Essen, 2005). (A) Relative to resting baseline, both types of action planning were associated with significant increases in occipital cortex, extending dorsally into the medial superior parietal lobule, left premotor cortex (dorsal and ventral), bilateral TPJ, and cMTG. No regions exhibited selective responses for Grasp or Reach planning during this planning (premovement) phase. (B) During movement execution, grasp-related increases in activity were found near the intersection of the IPS and postcentral sulcus (i.e., the functionally defined aIPS) contralateral to the hand involved. This cluster extended along the lateral convexity of the postcentral gyrus and into left precentral gyrus. These differences likely reflect the increased motoric demands of shaping the hand to engage the object and/or sensory feedback associated with these movements as well as object contact. Increased sensory stimulation may also account for the finding of bilaterally increased parietal opercular activity (putative secondary somatosensory cortex). We also saw a lateral increase in activity within rostral right lateral occipital cortex (LOC) extending into the cMTG that is likely attributable to greater visual processing of object structure and/or motion in the grasp condition.

Execution Phase

Relative to resting baseline, execution of either the grasp or reach conditions resulted in significant increases in a distributed network associated with closed loop sensorimotor control, including bilateral posterior parietal and premotor cortex and subcortical regions (cerebellum and basal ganglia [BG]). Direct comparisons of grasp versus reach conditions revealed increases in activity in the contralateral aIPS (peak location at MNI coordinates: −54, −30, 48). This cluster extended rostrally along the lateral convexity of the postcentral gyrus, through the central sulcus, and onto the rostral bank of the precentral gyrus (Figure 3B). As in prior fMRI investigations (Frey et al., 2005; Culham et al., 2003; Binkofski et al., 1998), the result of this contrast was used to functionally define the aIPS (see Methods) for ROI analyses elaborated below. These differences likely reflect the increased sensorimotor control demands of the Grasp condition, which include preshaping the hand to engage the object, integrating sensory feedback associated with these movements, as well as with object contact and transport. Increased sensory feedback related to object contact may account for the finding of bilaterally increased activity of the parietal operculum (putative secondary somatosensory cortex) during the grasp condition (−56, −32, 12; 56, −28, 26), as has been reported previously (Frey et al., 2005; Grafton, Fagg, Woods, & Arbib, 1996). We also detected increased activity in the lateral convexity of the right occipital cortex (48, −68, 24). This is the vicinity of area MT+, a complex known to be involved in processing visual motion (Ferber, Humphrey, & Vilis, 2003; Dukelow et al., 2001), including that of the hands (Whitney et al., 2007; Oreja-Guevara et al., 2004). This increase may therefore reflect greater motion of the fingers and object during the grasp, as compared with the reach, condition.
Lateral occipital cortex is also involved in processing object structure (Kourtzi & Kanwisher, 2001), and its greater engagement during the grasp condition could reflect the additional processing needed to derive structural properties for the specification of hand shape. As with the majority of prior studies, the grasp > reach comparison failed to reveal grasp-selective activity within the premotor cortex (Grafton, 2010; Castiello & Begliomini, 2008).

Robot Task

The overall error rate was 7%, and subsequent analyses were based exclusively on correctly performed trials (see Methods for details). Participants correctly identified the number of red blocks in the grasp condition on 91.1% of the runs.

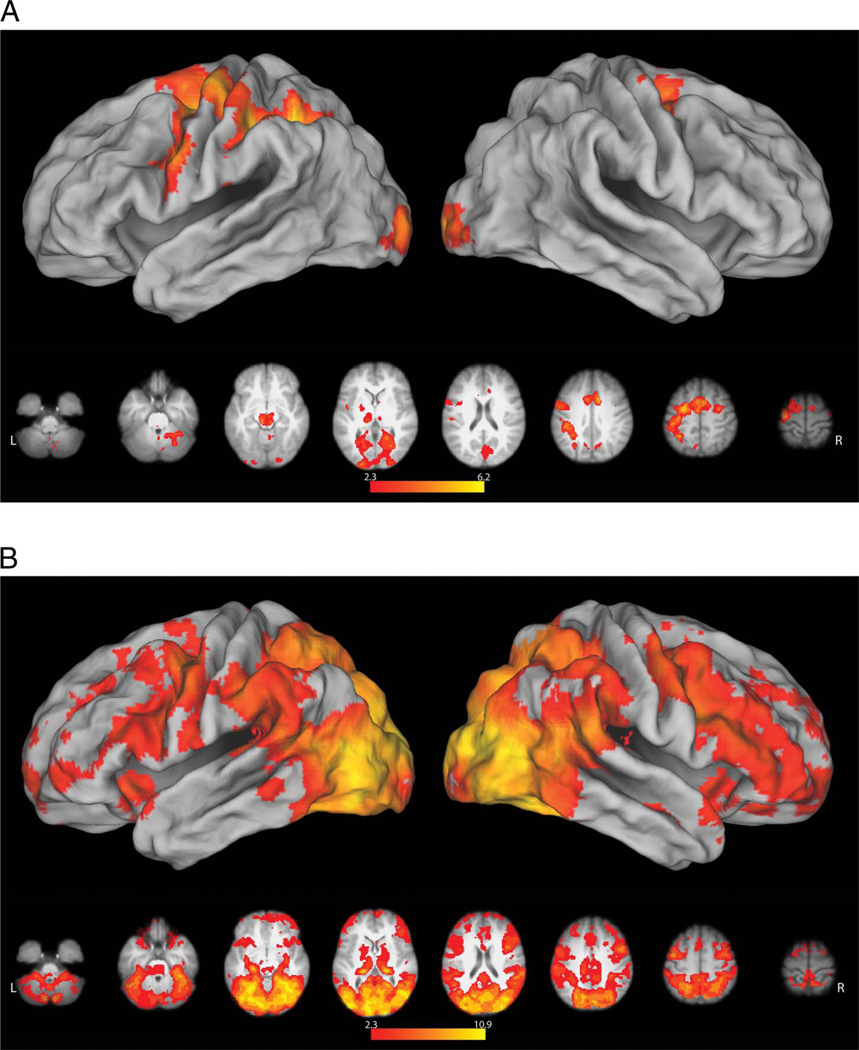

Premovement Phase

As in the MT, direct comparison of grasp versus reach conditions failed to reveal any significant differences, and data were therefore pooled for subsequent analyses. Although subsequent movements only involved pressing a button to launch the reach or grasp actions of the robot, we again detected increased left premotor activity (relative to resting baseline) in response to the instruction cues (Figure 4A). This too is possibly related to the processing of action verbs (Pulvermuller et al., 2005; Hauk et al., 2004). In the RT, however, premovement responses were more expansive, possibly due to the collection of more data. Areas of increased activity included the entirety of the left intraparietal sulcus (IPS), extending rostrally through the postcentral gyrus into the central sulcus and onto the precentral gyrus (Figure 4A). Left-lateralized vPMC and IPS activity has been reported previously during the planning of grasping actions with a handheld novel tool that had a nonarbitrary relationship to hand movements (Martin et al., 2011; Jacobs et al., 2010). In the present case, these increases may be associated with the demands of solving the arbitrary mapping between finger movements (required to depress the correct buttons) and the actions of the tool (reach or grasp). This account may also explain increased activity in the cingulate gyrus extending dorsally into the pre-SMA, regions known to be involved in motor cognition (Macuga & Frey, 2012; Frey & Gerry, 2006) and movement inhibition (Sharp et al., 2010). Subcortical increases were present in the BG and the right cerebellum, which are functionally interconnected with one another and with the cerebral cortex (Bostan, Dum, & Strick, 2010; Bostan & Strick, 2010).
Structures within the BG contribute to a variety of motor-related functions including motor learning and the modulation of reward-related motor activity (Turner & Desmurget, 2010), whereas the cerebellum participates in a variety of cognitive and motor functions including timing and feed-forward control (Manto et al., 2012; Fuentes & Bastian, 2007; Wolpert, Miall, & Kawato, 1998; Ivry & Baldo, 1992; Keele & Ivry, 1990).

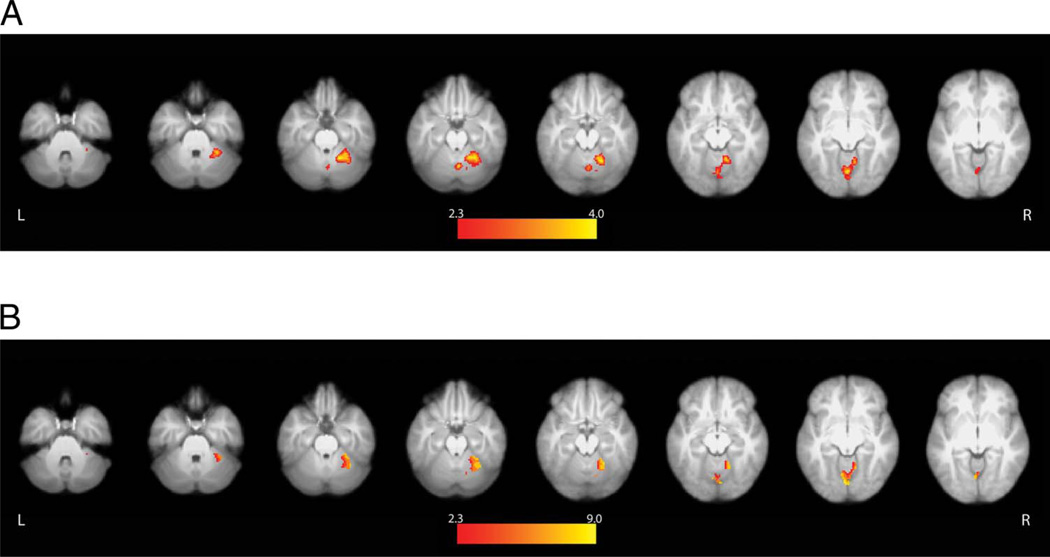

Figure 4.

Planning and execution phase responses in the RT. (A) Similar to the MT, no differences were detected between reach and grasp conditions during the premovement phase. However, relative to resting baseline, both conditions were associated with left-lateralized increases in activity within and along the intraparietal sulcus (including aIPS), extending rostrally across the postcentral and precentral gyri. In the left hemisphere, activity ran the entire length of the precentral sulcus between dPMC and vPMC. In the right hemisphere, only dPMC showed a significant increase in activity. Along the midline, increased activity was detected in the pre-SMA and underlying cingulate cortex. Subcortical increases were present in right cerebellum and bilateral BG. (B) Contrary to what is expected if these regions represent the goal of grasping and in contrast to the MT, no significant differences were detected between activity during grasp versus reach execution (Grasp Go and Reach Go conditions). Pooled together, both execution conditions were associated with widespread increases, relative to pressing an ineffective button followed by fixation of a blank screen (Go > Press conditions). These included bilateral occipital, posterior parietal, premotor, and lateral prefrontal regions that have frequently been reported during observation of manual actions, as well as the BG and cerebellum.

Execution Phase

Relative to pressing an ineffective response button (Press), when participants pressed designated buttons to initiate grasp (Grasp Go) or reach (Reach Go) with the robotic arm and then observed the resulting actions, activity increased throughout bilateral occipital, posterior parietal, premotor, and lateral prefrontal regions that have frequently been reported during observation of manual actions (Macuga & Frey, 2012; Frey & Gerry, 2006; Grafton, Arbib, Fadiga, & Rizzolatti, 1996). Increases in activity were also detected bilaterally in the BG and cerebellum (Figure 4B). Contrary to what is expected if the aIPS selectively represents the goal of grasp, however, we failed to detect any significant differences between grasp and reach execution (Grasp Go < > Reach Go), and the data were therefore pooled for subsequent analysis.
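The pooled and direct comparisons described above can be illustrated with contrast weights applied to per-condition parameter estimates. The following is a minimal sketch only: the condition labels follow the text, but the function name `contrast`, the specific weights, and the example estimates are hypothetical and are not taken from the analysis pipeline used in this study.

```python
# Illustrative sketch; all numbers below are made up for demonstration.

def contrast(weights, betas):
    """Return the weighted sum of per-condition estimates for one voxel."""
    missing = set(weights) - set(betas)
    if missing:
        raise KeyError(f"no estimate for conditions: {sorted(missing)}")
    return sum(weight * betas[name] for name, weight in weights.items())

# Pooled execution versus the ineffective button press (Go > Press).
# The two Go conditions share the positive weight so the weights sum to zero.
GO_VS_PRESS = {"Grasp Go": 0.5, "Reach Go": 0.5, "Press": -1.0}

# Direct comparison of grasp and reach execution (Grasp Go vs. Reach Go).
GRASP_VS_REACH_GO = {"Grasp Go": 1.0, "Reach Go": -1.0}

# Hypothetical estimates for a single voxel (arbitrary units).
example_betas = {
    "Grasp Go": 0.5,
    "Reach Go": 0.5,
    "Press": 0.25,
    "Grasp Watch": 0.375,
    "Reach Watch": 0.25,
}

go_vs_press_value = contrast(GO_VS_PRESS, example_betas)
grasp_vs_reach_value = contrast(GRASP_VS_REACH_GO, example_betas)
```

In this toy voxel, the pooled Go conditions exceed Press while the grasp and reach execution conditions do not differ, which mirrors the qualitative pattern reported for the RT.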

Relative to resting baseline, passively observing the robot autonomously reach (Reach Watch; Figure 5A) or grasp (Grasp Watch; Figure 5B) was associated with a widespread pattern of bilateral cortical and subcortical increases in activity, closely resembling the results of comparing the Go versus Press conditions (cf. Figures 4B and 5A, B). Increases in activity within inferior parietal and ventral premotor regions that are considered part of the mirror neuron system (Rizzolatti & Craighero, 2004) during observation of the robot’s movements are consistent with some prior work (Gazzola, Rizzolatti, Wicker, & Keysers, 2007; Ferrari, Rozzi, & Fogassi, 2005). Together, these findings suggest that involvement of this network in action perception is not exclusive to behaviors involving biological effectors. Likewise, these responses do not appear to depend on the observer perceiving that they have caused these actions. Comparison of the observation of grasping (Grasp Watch) versus reaching (Reach Watch) revealed significant bilateral increases in the LOC and cMTG (Figure 5C). Similar to what was mentioned in regard to the MT, LOC involvement may reflect greater motion of the robot’s hand and/or the object (Whitney et al., 2007; Oreja-Guevara et al., 2004), or greater processing of object structure during the Grasp condition (Kourtzi & Kanwisher, 2001). Given its involvement in processing the motions of objects and tools (Beauchamp, Lee, Haxby, & Martin, 2002, 2003), the adjacent left cMTG responses may also reflect the additional grasp-related motion. However, this cannot explain the presence of the Grasp versus Reach difference in these regions during passive observation, but not when participants initiate the very same actions through button presses (i.e., Grasp Go vs. Reach Go). As will be discussed, this suggests that responses within these areas may be suppressed when the actor causes these behaviors. 
The inverse contrast (Reach Watch > Grasp Watch) failed to reveal any differences.

Figure 5.

Areas exhibiting greater activity during the observation of robot actions. (A) Compared with resting baseline, a widespread pattern of bilateral cortical and subcortical increases in activity was detected during observation of the robot reaching. This was very similar to what was revealed by the comparison of Go versus Press conditions (see Figure 4B). (B) The pattern of activity associated with observation of robot grasping versus resting baseline was very similar to reaching (cf. A and B). Note that, although these actions involved nonbiological effectors, observing either reach or grasp increased activity within inferior parietal and ventral premotor regions that are considered part of the mirror neuron system. (C) Compared with the observation of reaching, viewing the robot grasping was associated with significantly greater bilateral activity in the LOC and cMTG. As detailed in the text, these increases may be related to processing of increased motions of the robot and target object, or they may be associated with the perception of causality in the Grasp condition.

Neither the aIPS nor premotor cortex demonstrated sensitivity to the perception of self-initiated causal actions. Instead, we detected a robust response within the right cerebellum that traversed areas V and VI and a smaller cluster within the vermis. Cerebellar activity was greater when participants pressed a button to initiate movements of the robotic arm and observed the resulting reaching or grasping actions compared with when they passively watched the same actions performed autonomously by the robot (Go conditions > Watch conditions; Figure 6A). Critically, use of an inclusive contrast mask revealed that most voxels in these cerebellar clusters also exhibited significantly greater activity when participants launched and observed the robot’s subsequent actions versus when they pressed the nonfunctional button (Go conditions > Watch conditions and Go conditions > Press conditions; Figure 6B). Responses within cerebellar voxels surviving this conjunction cannot be attributed either to the perception of the robot’s actions (identical in both Go and Watch conditions) or to the motor demands of the button press (identical in the Go and Press conditions). As will be discussed shortly, the right cerebellar response appears to be related to the actors’ perceptions that their pressing the correct button controlled the behavior of the robot, or to the generation of predictions concerning the sensory feedback that is expected to follow pressing of the functional “Grasp” or “Reach” buttons.
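The logic of the inclusive mask can be sketched as a voxelwise conjunction: a voxel is retained only if it exceeds threshold in both contrasts. The sketch below assumes two lists of z statistics with one value per voxel; the function name `conjunction_mask`, the threshold of 3.1, and the example values are hypothetical and do not reproduce the thresholds or software used here.

```python
# Illustrative sketch of a two-contrast conjunction; values are hypothetical.

def conjunction_mask(z_a, z_b, threshold):
    """Mark voxels whose statistic exceeds the threshold in both maps."""
    if len(z_a) != len(z_b):
        raise ValueError("statistic maps must cover the same voxels")
    return [a > threshold and b > threshold for a, b in zip(z_a, z_b)]

# Hypothetical z statistics for four voxels.
z_go_vs_watch = [3.5, 1.0, 4.0, 2.9]
z_go_vs_press = [3.2, 3.8, 0.5, 3.3]

# Only the first voxel exceeds the (assumed) threshold in both contrasts.
surviving = conjunction_mask(z_go_vs_watch, z_go_vs_press, threshold=3.1)
```

A voxel that survives both tests cannot be explained by visual stimulation alone (controlled by Go > Watch) or by the button press alone (controlled by Go > Press), which is the inference drawn in the text.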

Figure 6.

Cerebellum displays greater activity during self-initiated actions. Neither the aIPS nor the premotor cortex showed evidence of selectively coding self-initiated actions. Instead, the right cerebellar hemisphere exhibited significantly increased activity during both (A) Go > Watch and (B) Go > Press conditions. This response cannot be explained either by differences in visual stimulation or motor responses and appears to be related to the self-initiation of the robot’s actions, as elaborated in the text.

ROI Analysis in the Functionally Defined aIPS

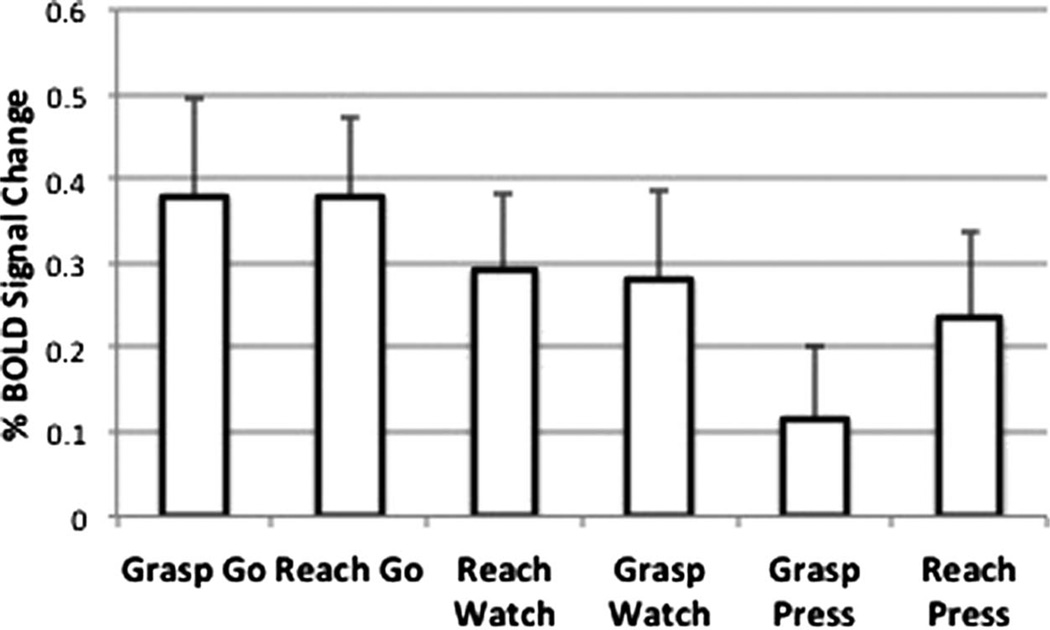

More sensitive ROI analyses were conducted on the mean percent BOLD signal change (PSC) in the aIPS, defined functionally on the basis of results from the grasp > reach execution contrast in the MT (Figure 3B). For the MT, there was a main effect of Movement Phase [F(1, 17) = 5.2168, p < .001], which reflects greater activity during execution (mean PSC = 1.92, SD = 1.01) than planning (mean PSC = −0.062, SD = 0.34). During the RT, the difference between execution (mean PSC = 0.28, SD = 0.37) and planning (mean PSC = 0.29, SD = 0.31) was nonsignificant, p = .94. There was a main effect of Action Type [F(1, 15) = 4.727, p = .046], due to responses during the Go conditions (mean PSC = 0.56, SD = 0.09) being greater than in either the Watch (mean PSC = 0.43, SD = 0.55, p = .04) or Press (mean PSC = 0.29, SD = 0.56, p = .005) conditions (Figure 7). Importantly, unlike the MT, there was no difference between mean responses in the grasp (Grasp Go: mean PSC = 0.378, SD = 0.39) and reach (Reach Go: mean PSC = 0.378, SD = 0.48) execution conditions, p = .99. As will be discussed shortly, this is contrary to what is expected if the aIPS is representing the goal of grasping independent of the movements and effectors involved.
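For readers unfamiliar with the measure, mean PSC within an ROI can be sketched as follows. This is an assumption-laden illustration rather than the procedure used in this study: it assumes PSC is computed per voxel as 100 × (task − baseline) / baseline and then averaged over voxels inside the ROI, and the function names and signal values are hypothetical.

```python
# Illustrative sketch of ROI-averaged percent signal change (PSC).
from statistics import mean

def percent_signal_change(task_signal, baseline_signal):
    """PSC of one voxel relative to its resting-baseline signal."""
    if baseline_signal == 0:
        raise ValueError("baseline signal must be nonzero")
    return 100.0 * (task_signal - baseline_signal) / baseline_signal

def roi_mean_psc(task_by_voxel, baseline_by_voxel, roi_mask):
    """Average PSC over the voxels flagged as inside the ROI."""
    values = [
        percent_signal_change(task, baseline)
        for task, baseline, inside in zip(task_by_voxel, baseline_by_voxel, roi_mask)
        if inside
    ]
    if not values:
        raise ValueError("ROI mask selects no voxels")
    return mean(values)

# Hypothetical signal values for three voxels; the third lies outside the ROI.
task = [1010.0, 1020.0, 990.0]
baseline = [1000.0, 1000.0, 1000.0]
inside_roi = [True, True, False]

example_psc = roi_mean_psc(task, baseline, inside_roi)
```

Because the ROI here was defined from the grasp > reach execution contrast in the MT, testing it on RT conditions uses data that are independent of the selection criterion.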

Figure 7.

Mean percent BOLD signal change in the functionally defined aIPS relative to resting baseline for conditions of the RT. We failed to detect differences between the execution of grasp versus reach (i.e., Grasp Go vs. Reach Go). The absence of this effect is unexpected if the aIPS codes the goal of grasping selectively and independent of the movements involved.

DISCUSSION

Our primary objective was to clarify the roles of the aIPS and/or vPMC in representing the goals of object-oriented actions independent of the demands associated with sensorimotor control. This was approached through use of a task in which grasping and reaching with a robotic arm were initiated with button presses, movements bearing an arbitrary causal relationship to the actions they controlled. Consistent with existing evidence (Frey et al., 2005; Culham et al., 2003; Binkofski et al., 1998), we found that the comparison of manually performed grasp versus reach yielded significantly increased activity at the intersection of the IPS and postcentral sulcus (i.e., the functionally defined aIPS). By contrast, when these actions were undertaken with a robotic arm controlled by button press, the aIPS, vPMC (and indeed all other regions exhibiting increased activity) responded equivalently during both grasp and reach. This is inconsistent with what is expected if these areas represent the goal of grasping independent of the demands associated with sensorimotor control (Tunik, Rice, Hamilton, & Grafton, 2007; Hamilton & Grafton, 2006; Tunik et al., 2005; Johnson-Frey et al., 2003). As will be discussed, these findings instead support the hypothesis that grasp-specific responses in aIPS depend on the existence of a nonarbitrary causal relationship between the sensorimotor demands of hand movements and resulting manual or tool actions.

Grasp-selective Responses in the aIPS Depend on Nonarbitrary Causal Relationships between Hand Movements and End-effectors

Manual reach and grasp actions were associated with significant increases in activity throughout a widespread network of regions involved in sensorimotor control, including bilateral posterior parietal and premotor cortices. As in past research, we detected greater activity when contrasting the execution of manual grasping versus reaching conditions near the intersection of the IPS and postcentral sulcus, the functionally defined aIPS (Frey et al., 2005; Culham et al., 2003; Binkofski et al., 1998). When using the robot, activity in posterior parietal and premotor cortices also increased significantly above resting baseline. However, no brain regions exhibited grasp-selective increases in activity when these actions were undertaken with the robotic arm via button presses.

Prior fMRI research, including from our own lab, reports that grasping with the hands or with a handheld tool engages the aIPS and premotor cortex (Gallivan et al., 2013; Jacobs et al., 2010). As noted in the Introduction, however, the causal relationship between hand movements and the actions of the tools’ end-effectors was nonarbitrary in all of these studies. This is also true of the elegant investigation of F5 neurons by Umilta and colleagues (2008). Regardless of whether pliers are normal or reversed, grasping with the tool still requires finger flexion or extension movements naturally involved in grasping. It is important that the introduction of a truly arbitrary causal relationship between hand movements (single degree-of-freedom button presses) and the actions of the robotic arm abolished any grasp-selective responses in these areas. On the basis of these findings, we conclude that the selective involvement of the aIPS and premotor cortex in grasp depends on hand movements that bear a nonarbitrary causal relationship to natural manual grasping. In the absence of such a relationship, we failed to detect any evidence for grasp-selective responses in the human brain, other than those exhibited during passive observation, as discussed shortly.

An alternative interpretation is that the aIPS does code the goal of grasping, but only when it is the immediately forthcoming (proximal) objective of the actor (Grafton, personal communication). This predicts that the aIPS will be involved not only when manually grasping an object but also when a handheld tool is grasped, as was the case in all previous studies. If, as in the RT, grasp is not involved in wielding and controlling the tool, then the aIPS will not respond selectively even when the ultimate (distal) goal of the action is grasping. Further work is required to determine if these closely related alternatives can be empirically disambiguated.

In this initial investigation, we elected to use a simple button press to launch reach or grasp actions, rather than have participants engage in online sensorimotor control of the robot. This design was chosen to equate the sensorimotor demands between these two movement conditions perfectly. Future research should focus on comparing neural representations involved in controlling such devices through the use of both arbitrary and nonarbitrary control signals. The challenge will be to equate these conditions for their sensorimotor demands. If our hypothesis is correct, then grasp selectivity in the aIPS (and perhaps also premotor cortex) will be apparent only when there is a nonarbitrary causal relationship between hand movements and tool actions.

Lateral Occipital Cortex and cMTG Differentiate between Passively Observed Grasping versus Reaching Actions of the Robot

We did find differences in responses to passive observation of the robot autonomously grasping versus reaching. Relative to resting baseline, watching both types of actions was associated with widespread increases in cortical and subcortical activity that included inferior parietal and premotor regions considered to be part of the mirror neuron system (Rizzolatti & Craighero, 2004). This is contrary to what is expected if this network is exclusive to actions undertaken with biological effectors (Tai, Scherfler, Brooks, Sawamoto, & Castiello, 2004). Instead, it is consistent with prior evidence of responses in macaque F5 neurons during the observation of grasping with tools, actions not in their motor repertoire (Ferrari et al., 2005), and with increased parieto-frontal activity detected in humans during the observation of a robot’s actions (Gazzola et al., 2007).

In both hemispheres, the lateral aspect of the lateral occipital cortex, extending rostrally into cMTG, exhibited increased activity during the observation of the robot grasping versus reaching. One possible reason for this difference concerns the greater motion in the Grasp condition of both the robot’s hand and the target blocks during pickup, transport, and release. The lateral aspect of the occipital lobe (putative MT/MT+) plays a key role in processing visual motion (Whitney et al., 2007; Oreja-Guevara et al., 2004; Ferber et al., 2003; Dukelow et al., 2001) and object form (Kourtzi & Kanwisher, 2001), which may receive greater attention when grasping because of its relevance to end-effector preshaping. Relatedly, Beauchamp and colleagues have demonstrated selective responses within the adjacent cMTG for processing the motions of objects and tools (Beauchamp et al., 2002, 2003). Alternatively, earlier work revealed very similar responses when participants viewed causal interactions between objects. Lateral occipital and caudal temporal activity was increased bilaterally when one ball appeared to cause movements of another through collision, as compared with when similar actions occurred in the absence of contact (Blakemore, Fonlupt, et al., 2001). It is possible that the effects exhibited here are similarly driven by the observation of causal contact between the hand and object during Grasp, but not during Reach. None of these accounts, however, explains the absence of these differences when participants initiated the very same actions through button presses (i.e., Grasp Go vs. Reach Go). It is possible that responses within these areas, whether driven by motion, form, and/or perceived causal interactions between tool and object, may be suppressed when the actor causes these actions. 
We speculate that corollary discharge associated with the button press that initiated these actions modulates responses within these regions and that this could play a role in the perception that these events are causally related to one’s own movements. More work is clearly needed to replicate and clarify this effect.

Cerebellum Responds Selectively to Self-generated Actions of the Robot

Earlier electroencephalographic work suggests that the aIPS and premotor cortex are sensitive to the perception that an observed action is under one’s own control (Bozzacchi et al., 2012). If so, then greater activity should be detected in these areas when participants press a button to launch the robot’s actions and observe the ensuing consequences versus when they press a button known to be nonfunctional and watch the very same actions undertaken autonomously by the robot. Conventional whole-brain analyses failed to detect the predicted effect in any cortical region. A more sensitive ROI analysis within the functionally defined aIPS did, however, reveal significantly greater increases in activity in the Go conditions than in either the Press or Watch conditions. It appears that the aIPS may have modest sensitivity to the perception that an action is self-initiated. This more subtle effect may be responsible for the results reported by Bozzacchi et al. using ERPs and a paradigm that involved videos closely resembling the participants’ own hands.

Unexpectedly, whole-brain analyses did detect greater activity in the right cerebellar hemisphere (V, VI, and vermis) when button presses led to the robot’s actions than during the Watch or Press conditions. It is important to appreciate that this difference was observed in a comparison between conditions with identical motor responses (button presses) and visual stimulation (the same prerecorded video clips of the robot reaching or grasping). There is a considerable literature arguing for involvement of the cerebellum in generating predictions about the sensory feedback that will result from one’s motor commands (Nowak, Topka, Timmann, Boecker, & Hermsdorfer, 2007; Flanagan, Vetter, Johansson, & Wolpert, 2003; Blakemore, Frith, & Wolpert, 2001; Wolpert et al., 1998). From this perspective, this cerebellar increase might reflect the prediction of the forthcoming sensory events that are expected to follow pressing of the “Grasp” or “Reach” buttons. This would explain why a comparable response is not detected during passive observation of the robot’s actions or when the button known to be nonfunctional is pressed. This account is supported by evidence from an elegant study by Blakemore and colleagues that introduced delays between movements of the right hand and the experience of touch on the left palm. They found that responses within the right lateral cerebellar hemisphere increased as a function of the delay interval and concluded that this was attributable to sensory prediction (Blakemore, Frith, et al., 2001). Likewise, damage to the cerebellum impairs the ability to anticipate the sensory consequences of one’s own movements (Diedrichsen, Verstynen, Lehman, & Ivry, 2005).

Alternatively, asymmetric involvement of the cerebellum, ipsilateral to the hand involved in motor or sensory functions, is well documented (Diedrichsen, Wiestler, & Krakauer, 2013; Yan et al., 2006; Sakai et al., 1998), and it is possible that this accounts for the right-lateralized responses observed here. However, this alone cannot explain why this region exhibited greater activity when a button press with the right hand controlled the behavior of the robot versus when an ineffective button was pressed (see Figure 6B).

In conclusion, grasp-selective responses in the aIPS appear to depend on the existence of a nonarbitrary causal relationship between hand movements and consequent actions. When this relationship exists, as in our MT and prior investigations of grasping with the hands or with tools, the aIPS exhibits grasp-selective responses. Conversely, when hand movements bear an arbitrary causal relationship to tool actions, we fail to detect grasp-selective responses in the aIPS. If the aIPS is truly involved in goal-dependent representations of grasp, then we would instead expect grasp-selective responses that are independent of the relationship between hand movements and tool actions. This work may have important implications for our understanding of neural representations involved in the use of advanced technologies including brain-controlled interfaces, neural prosthetics, and assistive technologies, whose control signals can be very flexibly related to their actions. In turn, this understanding may engender neurally inspired control systems that exploit organizing principles of the existing biological architecture for action (Sadtler et al., 2014; Leuthardt, Schalk, Roland, Rouse, & Moran, 2009).

Acknowledgments

This work was supported by grants to S. H. F. from ARO/ARL (49581-LS) and NIH/NINDS (NS053962). The authors thank Bill Troyer for technical assistance, Ken Valyear for thoughtful discussions and input on the manuscript, and anonymous reviewers for constructive feedback.

REFERENCES

- Andersen RA, Burdick JW, Musallam S, Pesaran B, Cham JG. Cognitive neural prosthetics. Trends in Cognitive Sciences. 2004;8:486–493. doi: 10.1016/j.tics.2004.09.009. [DOI] [PubMed] [Google Scholar]

- Arbib MA, Bonaiuto JB, Jacobs S, Frey SH. Tool use and the distalization of the end-effector. Psychological Research. 2009;73:441–462. doi: 10.1007/s00426-009-0242-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beauchamp MS, Lee KE, Haxby JV, Martin A. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 2002;34:149–159. doi: 10.1016/s0896-6273(02)00642-6. [DOI] [PubMed] [Google Scholar]

- Beauchamp MS, Lee KE, Haxby JV, Martin A. fMRI responses to video and point-light displays of moving humans and manipulable objects. Journal of Cognitive Neuroscience. 2003;15:991–1001. doi: 10.1162/089892903770007380. [DOI] [PubMed] [Google Scholar]

- Beckmann CF, Jenkinson M, Smith SM. General multilevel linear modeling for group analysis in fMRI. Neuroimage. 2003;20:1052–1063. doi: 10.1016/S1053-8119(03)00435-X. [DOI] [PubMed] [Google Scholar]

- Binkofski F, Buccino G, Posse S, Seitz RJ, Rizzolatti G, Freund H. A fronto-parietal circuit for object manipulation in man: Evidence from an fMRI-study. European Journal of Neuroscience. 1999;11:3276–3286. doi: 10.1046/j.1460-9568.1999.00753.x. [DOI] [PubMed] [Google Scholar]

- Binkofski F, Dohle C, Posse S, Stephan KM, Hefter H, Seitz RJ, et al. Human anterior intraparietal area subserves prehension: A combined lesion and functional MRI activation study. Neurology. 1998;50:1253–1259. doi: 10.1212/wnl.50.5.1253. [DOI] [PubMed] [Google Scholar]

- Blakemore SJ, Fonlupt P, Pachot-Clouard M, Darmon C, Boyer P, Meltzoff AN, et al. How the brain perceives causality: An event-related fMRI study. NeuroReport. 2001;12:3741–3746. doi: 10.1097/00001756-200112040-00027. [DOI] [PubMed] [Google Scholar]

- Blakemore SJ, Frith CD, Wolpert DM. The cerebellum is involved in predicting the sensory consequences of action. NeuroReport. 2001;12:1879–1884. doi: 10.1097/00001756-200107030-00023. [DOI] [PubMed] [Google Scholar]

- Bonini L, Serventi FU, Simone L, Rozzi S, Ferrari PF, Fogassi L. Grasping neurons of monkey parietal and premotor cortices encode action goals at distinct levels of abstraction during complex action sequences. Journal of Neuroscience. 2011;31:5876–5886. doi: 10.1523/JNEUROSCI.5186-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bostan AC, Dum RP, Strick PL. The basal ganglia communicate with the cerebellum. Proceedings of the National Academy of Sciences, U.S.A. 2010;107:8452–8456. doi: 10.1073/pnas.1000496107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bostan AC, Strick PL. The cerebellum and basal ganglia are interconnected. Neuropsychology Review. 2010;20:261–270. doi: 10.1007/s11065-010-9143-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bozzacchi C, Giusti MA, Pitzalis S, Spinelli D, Di Russo F. Similar cerebral motor plans for real and virtual actions. PLoS One. 2012;7:e47783. doi: 10.1371/journal.pone.0047783. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buckner RL. Event-related fMRI and the hemodynamic response. Human Brain Mapping. 1998;6:373–377. doi: 10.1002/(SICI)1097-0193(1998)6:5/6<373::AID-HBM8>3.0.CO;2-P. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carmena JM, Lebedev MA, Crist RE, O’Doherty JE, Santucci DM, Dimitrov DF, et al. Learning to control a brain-machine interface for reaching and grasping by primates. PLoS Biology. 2003;1:E42. doi: 10.1371/journal.pbio.0000042. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carter RM, Bowling DL, Reeck C, Huettel SA. A distinct role of the temporal-parietal junction in predicting socially guided decisions. Science. 2012;337:109–111. doi: 10.1126/science.1219681. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Castiello U, Begliomini C. The cortical control of visually guided grasping. Neuroscientist. 2008;14:157–170. doi: 10.1177/1073858407312080. [DOI] [PubMed] [Google Scholar]

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3:201–215. doi: 10.1038/nrn755. [DOI] [PubMed] [Google Scholar]

- Culham JC, Danckert SL, DeSouza JF, Gati JS, Menon RS, Goodale MA. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Experimental Brain Research. 2003;153:180–189. doi: 10.1007/s00221-003-1591-5. [DOI] [PubMed] [Google Scholar]

- Diedrichsen J, Balsters JH, Flavell J, Cussans E, Ramnani N. A probabilistic MR atlas of the human cerebellum. Neuroimage. 2009;46:39–46. doi: 10.1016/j.neuroimage.2009.01.045. [DOI] [PubMed] [Google Scholar]

- Diedrichsen J, Verstynen T, Lehman SL, Ivry RB. Cerebellar involvement in anticipating the consequences of self-produced actions during bimanual movements. Journal of Neurophysiology. 2005;93:801–812. doi: 10.1152/jn.00662.2004. [DOI] [PubMed] [Google Scholar]

- Diedrichsen J, Wiestler T, Krakauer JW. Two distinct ipsilateral cortical representations for individuated finger movements. Cerebral Cortex. 2013;23:1362–1377. doi: 10.1093/cercor/bhs120. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dukelow SP, DeSouza JF, Culham JC, van den Berg AV, Menon RS, Vilis T. Distinguishing subregions of the human MT complex using visual fields and pursuit eye movements. Journal of Neurophysiology. 2001;86:1991–2000. doi: 10.1152/jn.2001.86.4.1991. [DOI] [PubMed] [Google Scholar]

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, et al. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034. [DOI] [PubMed] [Google Scholar]

- Ferber S, Humphrey GK, Vilis T. The lateral occipital complex subserves the perceptual persistence of motion-defined groupings. Cerebral Cortex. 2003;13:716–721. doi: 10.1093/cercor/13.7.716. [DOI] [PubMed] [Google Scholar]

- Ferrari PF, Rozzi S, Fogassi L. Mirror neurons responding to observation of actions made with tools in monkey ventral premotor cortex. Journal of Cognitive Neuroscience. 2005;17:212–226. doi: 10.1162/0898929053124910. [DOI] [PubMed] [Google Scholar]

- Flanagan JR, Vetter P, Johansson RS, Wolpert DM. Prediction precedes control in motor learning. Current Biology. 2003;13:146–150. doi: 10.1016/s0960-9822(03)00007-1. [DOI] [PubMed] [Google Scholar]

- Frey SH, Gerry VE. Modulation of neural activity during observational learning of actions and their sequential orders. Journal of Neuroscience. 2006;26:13194–13201. doi: 10.1523/JNEUROSCI.3914-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Frey SH, Vinton D, Norlund R, Grafton ST. Cortical topography of human anterior intraparietal cortex active during visually guided grasping. Brain Research, Cognitive Brain Research. 2005;23:397–405. doi: 10.1016/j.cogbrainres.2004.11.010. [DOI] [PubMed] [Google Scholar]

- Fuentes C, Bastian A. “Motor cognition”—What is it and is the cerebellum involved? The Cerebellum. 2007;6:232–236. doi: 10.1080/14734220701329268. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gallivan JP, McLean DA, Valyear KF, Culham JC. Decoding the neural mechanisms of human tool use. eLife. 2013;2:e00425. doi: 10.7554/eLife.00425. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gardner EP, Babu KS, Reitzen SD, Ghosh S, Brown AS, Chen J, et al. Neurophysiology of prehension. I. Posterior parietal cortex and object-oriented hand behaviors. Journal of Neurophysiology. 2007;97:387–406. doi: 10.1152/jn.00558.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gazzola V, Rizzolatti G, Wicker B, Keysers C. The anthropomorphic brain: The mirror neuron system responds to human and robotic actions. Neuroimage. 2007;35:1674–1684. doi: 10.1016/j.neuroimage.2007.02.003. [DOI] [PubMed] [Google Scholar]

- Grafton ST. The cognitive neuroscience of prehension: Recent developments. Experimental Brain Research. 2010;204:475–491. doi: 10.1007/s00221-010-2315-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grafton ST, Arbib MA, Fadiga L, Rizzolatti G. Localization of grasp representations in humans by positron emission tomography. 2. Observation compared with imagination. Experimental Brain Research. 1996;112:103–111. doi: 10.1007/BF00227183. [DOI] [PubMed] [Google Scholar]

- Grafton ST, Fagg AH, Woods RP, Arbib MA. Functional anatomy of pointing and grasping in humans. Cerebral Cortex. 1996;6:226–237. doi: 10.1093/cercor/6.2.226. [DOI] [PubMed] [Google Scholar]

- Hamilton AF, Grafton ST. Goal representation in human anterior intraparietal sulcus. Journal of Neuroscience. 2006;26:1133–1137. doi: 10.1523/JNEUROSCI.4551-05.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hauk O, Johnsrude I, Pulvermuller F. Somatotopic representation of action words in human motor and premotor cortex. Neuron. 2004;41:301–307. doi: 10.1016/s0896-6273(03)00838-9. [DOI] [PubMed] [Google Scholar]

- Hochberg LR, Bacher D, Jarosiewicz B, Masse NY, Simeral JD, Vogel J, et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485:372–375. doi: 10.1038/nature11076. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ivry RB, Baldo JV. Is the cerebellum involved in learning and cognition? Current Opinion in Neurobiology. 1992;2:212–216. doi: 10.1016/0959-4388(92)90015-d. [DOI] [PubMed] [Google Scholar]

- Jacobs S, Danielmeier C, Frey SH. Human anterior intraparietal and ventral premotor cortices support representations of grasping with the hand or a novel tool. Journal of Cognitive Neuroscience. 2010;22:2594–2608. doi: 10.1162/jocn.2009.21372.

- Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8.

- Johnson-Frey SH, Maloof FR, Newman-Norlund R, Farrer C, Inati S, Grafton ST. Actions or hand-object interactions? Human inferior frontal cortex and action observation. Neuron. 2003;39:1053–1058. doi: 10.1016/s0896-6273(03)00524-5.

- Keele SW, Ivry R. Does the cerebellum provide a common computation for diverse tasks? A timing hypothesis. Annals of the New York Academy of Sciences. 1990;608:179–207; discussion 207–211. doi: 10.1111/j.1749-6632.1990.tb48897.x.

- Kourtzi Z, Kanwisher N. Representation of perceived object shape by the human lateral occipital complex. Science. 2001;293:1506–1509. doi: 10.1126/science.1061133.

- Leuthardt EC, Schalk G, Roland J, Rouse A, Moran DW. Evolution of brain-computer interfaces: Going beyond classic motor physiology. Neurosurgical Focus. 2009;27:E4. doi: 10.3171/2009.4.FOCUS0979.

- Macuga KL, Frey SH. Neural representations involved in observed, imagined, and imitated actions are dissociable and hierarchically organized. Neuroimage. 2012;59:2798–2807. doi: 10.1016/j.neuroimage.2011.09.083.

- Manto M, Bower JM, Conforto AB, Delgado-Garcia JM, da Guarda SN, Gerwig M, et al. Consensus paper: Roles of the cerebellum in motor control—The diversity of ideas on cerebellar involvement in movement. Cerebellum. 2012;11:457–487. doi: 10.1007/s12311-011-0331-9.

- Marangon M, Jacobs S, Frey SH. Evidence for context sensitivity of grasp representations in human parietal and premotor cortices. Journal of Neurophysiology. 2011;105:2536–2546. doi: 10.1152/jn.00796.2010.

- Martin K, Jacobs S, Frey SH. Handedness-dependent and -independent cerebral asymmetries in the anterior intraparietal sulcus and ventral premotor cortex during grasp planning. Neuroimage. 2011;57:502–512. doi: 10.1016/j.neuroimage.2011.04.036.

- Nicolelis MA. Actions from thoughts. Nature. 2001;409:403–407. doi: 10.1038/35053191.

- Nowak DA, Topka H, Timmann D, Boecker H, Hermsdorfer J. The role of the cerebellum for predictive control of grasping. Cerebellum. 2007;6:7–17. doi: 10.1080/14734220600776379.

- Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Oreja-Guevara C, Kleiser R, Paulus W, Kruse W, Seitz RJ, Hoffmann KP. The role of V5 (hMT+) in visually guided hand movements: An fMRI study. European Journal of Neuroscience. 2004;19:3113–3120. doi: 10.1111/j.0953-816X.2004.03393.x.

- Pulvermuller F, Hauk O, Nikulin VV, Ilmoniemi RJ. Functional links between motor and language systems. European Journal of Neuroscience. 2005;21:793–797. doi: 10.1111/j.1460-9568.2005.03900.x.

- Rizzolatti G, Camarda R, Fogassi L, Gentilucci M, Luppino G, Matelli M. Functional organization of inferior area 6 in the macaque monkey. II. Area F5 and the control of distal movements. Experimental Brain Research. 1988;71:491–507. doi: 10.1007/BF00248742.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- Sadtler PT, Quick KM, Golub MD, Chase SM, Ryu SI, Tyler-Kabara EC, et al. Neural constraints on learning. Nature. 2014;512:423–426. doi: 10.1038/nature13665.

- Sakai K, Takino R, Hikosaka O, Miyauchi S, Sasaki Y, Putz B, et al. Separate cerebellar areas for motor control. NeuroReport. 1998;9:2359–2363. doi: 10.1097/00001756-199807130-00038.

- Schwartz AB, Cui XT, Weber DJ, Moran DW. Brain-controlled interfaces: Movement restoration with neural prosthetics. Neuron. 2006;52:205–220. doi: 10.1016/j.neuron.2006.09.019.

- Sharp DJ, Bonnelle V, De Boissezon X, Beckmann CF, James SG, Patel MC, et al. Distinct frontal systems for response inhibition, attentional capture, and error processing. Proceedings of the National Academy of Sciences, U.S.A. 2010;107:6106–6111. doi: 10.1073/pnas.1000175107.

- Shulman GL, d’Avossa G, Tansy AP, Corbetta M. Two attentional processes in the parietal lobe. Cerebral Cortex. 2002;12:1124–1131. doi: 10.1093/cercor/12.11.1124.

- Smith SM. Fast robust automated brain extraction. Human Brain Mapping. 2002;17:143–155. doi: 10.1002/hbm.10062.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Walsh V, et al. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23(Suppl 1):S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Tai Y, Scherfler C, Brooks D, Sawamoto N, Castiello U. The human premotor cortex is ‘mirror’ only for biological actions. Current Biology. 2004;14:117–120. doi: 10.1016/j.cub.2004.01.005.

- Tunik E, Frey SH, Grafton ST. Virtual lesions of the anterior intraparietal area disrupt goal-dependent on-line adjustments of grasp. Nature Neuroscience. 2005;8:505–511. doi: 10.1038/nn1430.

- Tunik E, Rice NJ, Hamilton A, Grafton ST. Beyond grasping: Representation of action in human anterior intraparietal sulcus. Neuroimage. 2007;36(Suppl 2):T77–T86. doi: 10.1016/j.neuroimage.2007.03.026.

- Turner RS, Desmurget M. Basal ganglia contributions to motor control: A vigorous tutor. Current Opinion in Neurobiology. 2010;20:704–716. doi: 10.1016/j.conb.2010.08.022.

- Umilta MA, Escola L, Intskirveli I, Grammont F, Rochat M, Caruana F, et al. When pliers become fingers in the monkey motor system. Proceedings of the National Academy of Sciences, U.S.A. 2008;105:2209–2213. doi: 10.1073/pnas.0705985105.

- Van Essen DC. A Population-Average, Landmark- and Surface-based (PALS) atlas of human cerebral cortex. Neuroimage. 2005;28:635–662. doi: 10.1016/j.neuroimage.2005.06.058.

- Whitney D, Ellison A, Rice NJ, Arnold D, Goodale M, Walsh V, et al. Visually guided reaching depends on motion area MT+. Cerebral Cortex. 2007;17:2644–2649. doi: 10.1093/cercor/bhl172.

- Wolpaw JR, McFarland DJ. Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proceedings of the National Academy of Sciences, U.S.A. 2004;101:17849–17854. doi: 10.1073/pnas.0403504101.

- Wolpert DM, Miall RC, Kawato M. Internal models in the cerebellum. Trends in Cognitive Sciences. 1998;2:338–347. doi: 10.1016/s1364-6613(98)01221-2.

- Woolrich MW, Behrens TEJ, Beckmann CF, Jenkinson M, Smith SM. Multi-level linear modelling for fMRI group analysis using Bayesian inference. Neuroimage. 2004;21:1732–1747. doi: 10.1016/j.neuroimage.2003.12.023.

- Woolrich MW, Ripley BD, Brady JM, Smith SM. Temporal autocorrelation in univariate linear modelling of fMRI data. Neuroimage. 2001;14:1370–1386. doi: 10.1006/nimg.2001.0931.

- Worsley KJ. Statistical analysis of activation images. In: Jezzard P, Matthews PM, Smith SM, editors. Functional MRI: An introduction to methods. Oxford: Oxford University Press; 2001. pp. 251–270.

- Yan L, Wu D, Wang X, Zhou Z, Liu Y, Yao S, et al. Intratask and intertask asymmetry analysis of motor function. NeuroReport. 2006;17:1143–1147. doi: 10.1097/01.wnr.0000230509.78467.df.