Significance

It is widely considered a universal feature of human moral psychology that reasons for actions are taken into account in most moral judgments. However, most evidence for this moral intent hypothesis comes from large-scale industrialized societies. We used a standardized methodology to test the moral intent hypothesis across eight traditional small-scale societies (ranging from hunter-gatherer to pastoralist to horticulturalist) and two Western societies (one urban, one rural). The results show substantial variation in the degree to which an individual’s intentions influence moral judgments of his or her actions, with intentions in some cases playing no role at all. This dimension of cross-cultural variation in moral judgment may have important implications for understanding cultural disagreements over wrongdoing.

Keywords: morality, intentions, cognition, culture, human universals

Abstract

Intent and mitigating circumstances play a central role in moral and legal assessments in large-scale industrialized societies. Although these features of moral assessment are widely assumed to be universal, to date, they have only been studied in a narrow range of societies. We show that there is substantial cross-cultural variation among eight traditional small-scale societies (ranging from hunter-gatherer to pastoralist to horticulturalist) and two Western societies (one urban, one rural) in the extent to which intent and mitigating circumstances influence moral judgments. Although participants in all societies took such factors into account to some degree, they did so to very different extents, varying in both the types of considerations taken into account and the types of violations to which such considerations were applied. The particular patterns of assessment characteristic of large-scale industrialized societies may thus reflect relatively recently culturally evolved norms rather than inherent features of human moral judgment.

Although humans everywhere clearly make moral judgments about others’ behavior, postulated universal features of human moral judgment remain highly contentious (1). One putative universal is embodied in what we call the “moral intent hypothesis.” This hypothesis, which is well-supported in large-scale industrialized societies, holds that consideration of an agent’s reasons for action—their intentions, motivations, and circumstances—is a universal feature of human moral judgment. By this, we mean that it is a species-typical property of humans to take an agent’s reasons for action into account in making most types of moral judgments, especially judgments of moral scenarios involving harm. This hypothesis is supported by work in experimental psychology, brain imaging, cognitive development, evolutionary biology, and surveys of legal systems (2–7). For example, neural imaging studies have found that the right temporoparietal junction, thought to be centrally involved in the representation of beliefs and intentions (8), discriminates between intentional and accidental harms in its patterns of activity, and these patterns correlate with patterns of moral judgment (9). Likewise, developmental studies have found that sociomoral judgments of infants as young as 8 mo old are affected by the intent of an action and not simply the outcome (10). Importantly, prior work suggests that the role of intentions may be stronger in some domains of moral judgment than others, with intentions playing a smaller role for judgments about violations not involving harm, such as food taboos (3, 11).

However, there are reasons to suspect that the role of intentions in moral judgments might not be universal across societies. Anthropologists have suggested that the importance of intentions in moral judgments might vary cross-culturally (1, 12–14), and some prior studies support this possibility. For example, a study comparing American Jews and Christians found that Christians weighed intentions more heavily in moral judgment (15), and a study comparing urban Americans and Japanese found that intentions played a smaller role in the judgments of Japanese than American participants (16).

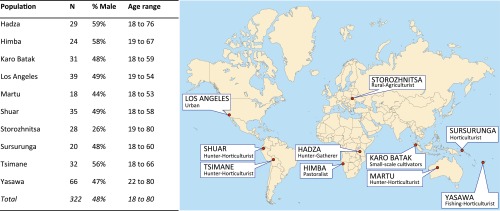

To date, however, the vast majority of studies on the moral intent hypothesis have been carried out in so-called Western, educated, industrial, rich, and democratic (WEIRD) societies (17), and none have been carried out in small-scale non-Western societies. Small-scale societies provide a critical test of potential universality under conditions very distinct from those of modern WEIRD societies, both because of the potential for the recent historical spread of moral and legal ideas between large-scale societies, and because small-scale societies more closely approximate the conditions under which putative universals of moral judgment might have evolved. To this end, we probed moral judgments across a sample of 10 societies on six continents, spanning a diversity of population sizes and cultural traditions (Fig. 1). Importantly, our sample included eight diverse small-scale traditional societies, which were compared with two Western societies (one traditional rural Western society, and one urban industrialized society).

The research performed in this study was approved by The North General Institutional Review Board, Office of the Human Research Protection Program, University of California, Los Angeles; The Office of Research Human Subjects Committee, University of California, Santa Barbara; The Behavioural Research Ethics Board, University of British Columbia; The Cambridge Humanities and Social Sciences Research Ethics Committee, University of Cambridge; The Ethical Committee of the Faculty of Social and Economic Sciences, Comenius University; The Office of Research Integrity, Human Subjects, University of Nevada, Las Vegas; and The Human Subjects Office, University of Washington. Informed consent was obtained from all participants before participation (SI Appendix).

Fig. 1.

Study populations and sample characteristics.

Using standardized vignettes, we sought to measure how a character’s reasons for actions moderate moral judgments about that character’s behavior across multiple contexts and dimensions. Specifically, our vignettes systematically varied whether the character’s behavior was (i) intentional or accidental, (ii) motivated or not, or (iii) potentially justified by mitigating factors such as insanity or self-defense. They also included variations in context and type of potential moral wrongdoing, including degree of harm done (striking another person vs. poisoning an entire village) and type of norm violated (harm, theft, violating a food taboo) (see SI Appendix for complete study design, including full texts of the vignettes, and additional analyses).

Participants responded to two banks of vignettes, the “Intentions Bank” and the “Mitigating Factors Bank.” The Intentions Bank used matched pairs of scenarios that varied information about the perpetrator’s reasons for the actions in the story in two ways: (i) intentional vs. accidental, and (ii) motivated vs. antimotivated. In the intentional condition, the perpetrator was described as committing a particular act (e.g., striking another person, stealing another’s bag) without qualification. The accidental condition held the act constant but described it as accidental (e.g., tripping and accidentally striking another person, mistaking another’s bag for one’s own). In the motivated and antimotivated pairs, language about the perpetrator’s intentions was omitted, and the perpetrator was described as either having an incentive to commit the act (e.g., past negative interactions with the person being struck, seeing expensive items in another’s bag), or having an incentive not to do it (e.g., seeking to make a good impression on the person being struck, seeing that the bag contained nothing of value). Crossed with these intent and motivation variation pairs were four different norm violation scenarios: (i) physical harm (striking another person), (ii) group harm (poisoning a village well), (iii) theft, and (iv) violation of a food taboo.

Each participant heard four vignettes, one each of the intentional, accidental, motivated, and antimotivated scenarios. Each was assigned in pseudorandomized format to one of the four types of norm violation (physical harm, group harm, theft, food taboo). For each vignette, participants made six judgments, each on a five-point scale. Three of these were moral judgment questions designed to assess different aspects of participants’ judgments of the moral valence of the act: “Badness” of the action (judged on a scale from very bad, bad, neutral, good, to very good); “Punishment” (whether the agent should be highly punished, punished, neutral, rewarded, highly rewarded for the action); and “Reputation” (whether others will think the agent a very bad person, bad, neutral, good, or very good in light of the action). In addition, we asked three manipulation check questions, designed to assess participants’ judgments of what happened in the vignettes: “Intentional” (the degree to which the act was intentional), “Victim Outcome” (the degree to which the act affected the victim positively or negatively), and “Victim Reaction” (the degree to which the victim was pleased or angered by the event). A principal-component analysis revealed that the moral judgment variables clustered together and separately from the manipulation check variables (SI Appendix, section 3a). Here, we report analyses of the moral judgment variables and how they were influenced by society and by our experimental manipulations. Additional analyses, including analyses of our manipulation check variables and detailed breakdowns by society and scenario, are reported in SI Appendix.
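The assignment step described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the actual pseudorandomization scheme is given in SI Appendix, and the function name `assign_vignettes`, the seeded shuffle, and the label strings are assumptions introduced here. The sketch only guarantees the property stated in the text, namely that each participant receives each intent condition once and each norm-violation scenario once.

```python
import random

CONDITIONS = ["intentional", "accidental", "motivated", "antimotivated"]
SCENARIOS = ["physical harm", "group harm", "theft", "food taboo"]


def assign_vignettes(participant_seed):
    """Pair each intent condition with a distinct scenario for one participant.

    Hypothetical helper: a seeded shuffle stands in for whatever
    pseudorandomization the study actually used (see SI Appendix).
    """
    rng = random.Random(participant_seed)
    scenarios = SCENARIOS[:]  # copy so the module-level list is untouched
    rng.shuffle(scenarios)
    # zip pairs condition i with the i-th shuffled scenario, so every
    # condition and every scenario appears exactly once.
    return list(zip(CONDITIONS, scenarios))


if __name__ == "__main__":
    for condition, scenario in assign_vignettes(participant_seed=1):
        print(f"{condition:>13} -> {scenario}")
```

Seeding per participant keeps the assignment reproducible, which is one simple way to make a pseudorandomized design auditable after the fact.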

Effects of our experimental manipulations and variation across societies on participant judgments were analyzed with ordered logit models, using a model comparison approach to find the best-fit model for the data (Table 1 and SI Appendix, sections 1 and 2). Model comparison revealed that the effects of the intentional vs. accidental and motivated vs. antimotivated manipulations were statistically comparable and best pooled into a single binary factor, which we call High vs. Low Intent. The ordered logit model provides an estimate of the effect of this factor on the severity of negative moral judgments: across societies, an act committed with High Intent (intentional or motivated) increased the odds of a one-unit increase in severity on our moral judgment scale by a factor of 5, compared with a similar act committed with Low Intent (accidental or antimotivated) (P < 0.001). In other words, the odds of a judgment falling one point higher in severity on our five-point scale were about five times greater for acts of intentional wrongdoing than for equivalent nonintentional acts. The three moral judgment measures (Badness, Punishment, Reputation) clustered together and closely tracked each other, consistent with evolutionary models of cooperation, which show that negative sanctions against norm violators, including reputational damage and punishment, are important in stabilizing cooperation (18, 19).
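To make the odds interpretation concrete, the following sketch works through a cumulative-logit (proportional-odds) model using the fixed-effect estimate reported in Table 1 (β = 1.62). The cutpoints are hypothetical values chosen only for illustration, random effects are omitted, and the parameterization shown (logit P(Y > k) = η − c_k) is one common convention rather than necessarily the one used by the authors' software.

```python
import math

BETA_HIGH_INTENT = 1.62  # fixed-effect estimate from Table 1

# Hypothetical thresholds separating the five ordered response categories
# (least severe ... most severe); NOT estimates from the study.
CUTPOINTS = [-3.0, -1.5, 0.0, 1.5]


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def prob_above(eta):
    """P(judgment is more severe than threshold k), for each threshold k."""
    return [logistic(eta - c) for c in CUTPOINTS]


def odds(p):
    return p / (1.0 - p)


low = prob_above(0.0)                # Low Intent as the reference level
high = prob_above(BETA_HIGH_INTENT)  # High Intent shifts the latent score by beta

for k, (p_lo, p_hi) in enumerate(zip(low, high), start=1):
    ratio = odds(p_hi) / odds(p_lo)
    print(f"threshold {k}: P(low)={p_lo:.3f}  P(high)={p_hi:.3f}  odds ratio={ratio:.2f}")

print(f"exp(beta) = {math.exp(BETA_HIGH_INTENT):.2f}")
```

Under this model the odds ratio is identical at every threshold and equals exp(β), which is the sense in which the odds change "by a factor of 5." It is a ratio of odds rather than of probabilities: the change in probability depends on where the thresholds lie.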

Table 1.

Parameters of best-fit ordered logit models for Intentions Bank

| Intentions Bank | Estimate | exp(β) | Variance | SD | SE | P |
|---|---|---|---|---|---|---|
| Fixed effects | | | | | | |
| High vs. Low Intent | 1.62 | 5.05 | | | 0.428 | <0.001 |
| Sex (1 = male) | −0.209 | 1.23 | | | 0.123 | 0.09 |
| Random effects | | | | | | |
| Society | | | 1.05 | 1.03 | | |
| Scenario | | | 0.848 | 0.921 | | |
| Subject | | | 0.807 | 0.898 | | |
| Scenario × Society | | | 0.638 | 0.799 | | |
| High vs. Low Intent × Society | | | 0.26 | 0.51 | | |
| High vs. Low Intent × Scenario | | | 0.25 | 0.501 | | |
| Question item | | | 0.065 | 0.255 | | |

Effects are reported in descending order of effect size within effect type (fixed, random).

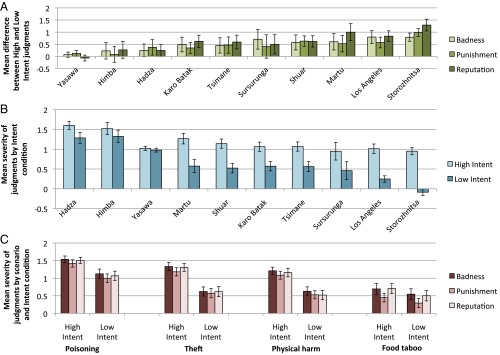

Although the main effect of High vs. Low Intent in the omnibus statistical model is consistent with the moral intent hypothesis, there was substantial variation across societies and scenarios in the effects of intent on moral judgment [consistent with earlier work on differences in the role of intent across moral domains (3)]. Fig. 2 displays effects of the High- vs. Low-Intent manipulation by Society (Fig. 2 A and B), and Scenario (Fig. 2C). Interactions between Society, Scenario, and the High- vs. Low-Intent manipulation are shown in Fig. 3. As illustrated in Fig. 2 A and B, the effect of High vs. Low Intent on overall severity of moral judgment across the scenarios varied across societies (see Intent-by-Society interaction term in Table 1). Indeed, High vs. Low Intent had no significant overall effect on severity of moral judgment in two societies, Yasawa and Himba. Interestingly, Yasawa is a society in the Pacific culture area where mental opacity norms, which proscribe speculating about the reasons for others’ behavior in some contexts, have been reported (13). In addition, the samples that showed the largest effects of High vs. Low Intent on moral judgments were Los Angeles and Storozhnitsa, the two Western societies in our sample.

Fig. 2.

Intentions Bank: summaries of effects of High- vs. Low-Intent manipulation across societies (A and B) and scenarios (C). (A) Mean difference between High- and Low-Intent conditions for three different judgment variables (Badness, Punishment, Reputation). Societies are shown in ascending order of mean difference between High and Low Intent. (B) Mean severity of judgments for High-Intent and Low-Intent conditions, question items pooled (Badness, Punishment, Reputation). Societies are shown in descending order of mean severity of judgment, High and Low Intent pooled. (C) Mean severity of judgments by Scenario for High- vs. Low-Intent conditions, societies pooled. Scenarios are shown in descending order of mean severity of judgment, High and Low Intent pooled. All judgments on a five-point scale, +2 to −2: +2, very bad; 0, neutral; −2, very good. Bars indicate 95% CI. n = 322.
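The quantities summarized in Fig. 2 can be sketched as follows. The response labels follow the Badness scale described above and the +2 to −2 coding follows the caption. The sample responses are made-up toy values, not study data, and the normal-approximation interval (mean ± 1.96 SE) is an assumption, since the text does not state how the 95% CIs were computed.

```python
import math
from statistics import mean, stdev

# Coding from the figure caption: +2 = very bad ... -2 = very good.
SEVERITY = {"very bad": 2, "bad": 1, "neutral": 0, "good": -1, "very good": -2}


def mean_ci(scores, z=1.96):
    """Mean and normal-approximation 95% CI (an assumed method)."""
    m = mean(scores)
    se = stdev(scores) / math.sqrt(len(scores))
    return m, m - z * se, m + z * se


# Toy responses for illustration only; NOT data from the study.
high_intent = ["very bad", "bad", "very bad", "bad", "very bad", "neutral"]
low_intent = ["bad", "neutral", "neutral", "bad", "good", "neutral"]

high_scores = [SEVERITY[r] for r in high_intent]
low_scores = [SEVERITY[r] for r in low_intent]

m_hi, lo_hi, up_hi = mean_ci(high_scores)
m_lo, lo_lo, up_lo = mean_ci(low_scores)
print(f"High Intent: {m_hi:.2f} [{lo_hi:.2f}, {up_hi:.2f}]")
print(f"Low Intent:  {m_lo:.2f} [{lo_lo:.2f}, {up_lo:.2f}]")
print(f"Mean difference (High - Low): {m_hi - m_lo:.2f}")
```

A positive mean difference corresponds to harsher judgments in the High-Intent condition. A difference near zero corresponds to the pattern reported for societies in which intent had no significant overall effect.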

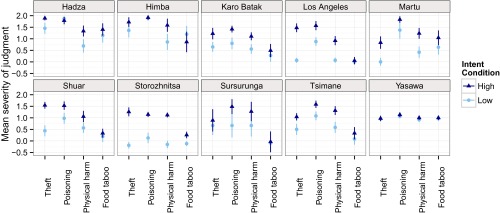

Fig. 3.

Intentions Bank: interactions between Society, Scenario, and High- vs. Low-Intent manipulation. Points show mean severity of moral judgments (Badness, Punishment, Reputation) on a five-point scale, +2 to −2: +2, very bad; 0, neutral; −2, very good. Bars indicate 95% CI. n = 322. Scenarios are ordered left to right by descending amount of cross-society variance in the effect of High vs. Low Intent on moral judgments.

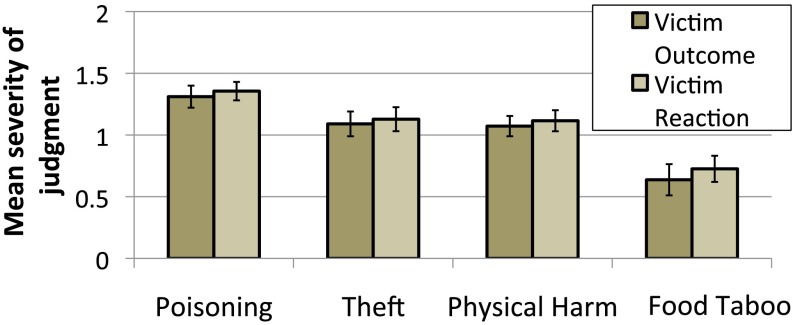

Scenario had the second largest of the random effects in the model, indicating substantial variation in moral wrongness judgments across the four vignettes. As indicated in Fig. 2C, there was variation in the effects of High vs. Low Intent on moral judgments across the scenarios. Effects of intent were smallest for Food Taboo (which only increased the odds of a one-unit increase in severity of moral judgment by a factor of 1.5; P = 0.055) and largest for Theft (where intent increased those odds by a factor of 20; P < 0.0001). Interestingly, although the group harm (Poisoning) scenario showed the most severe moral badness judgments, on average, there was still a substantial effect of Intent on severity of moral judgment (odds increase by a factor of 7; P < 0.0001). As illustrated in Fig. 4, Poisoning was the scenario judged to have the worst effects on the victim, followed by Theft, Physical Harm, and Food Taboo.

Fig. 4.

Mean judgments of severity of Victim Outcome and Victim Reaction by Scenario, pooled across societies. Bars indicate 95% CI. n = 322.

In addition, there were variations in how the scenarios were judged across societies, as illustrated in Fig. 3. Fitting an additional model to the data (SI Appendix, section 3d) revealed a modest three-way interaction between High vs. Low Intent, Scenario, and Society (variance estimate, 0.23). To assess the nature of this interaction, we computed effect sizes of interactions between Society and High vs. Low Intent for each of the scenarios separately, and rank-ordered them from largest to smallest amount of variance explained. The scenario with the highest cross-society variance in differences between High- and Low-Intent judgments was Theft, followed by Poisoning, Physical Harm, and Food Taboo (effect sizes of 1.54, 0.50, 0.45, and 0.00, respectively). Thus, the Theft scenario contributed most to cross-society variation in effects of intent on moral judgment, and Food Taboo contributed least. This replicates and extends prior work in Western societies showing that intentions play a smaller role in judgments of purity violations than those involving harm (3). It also shows that nonpurity scenarios (Theft, Poisoning, Physical Harm) contributed most to the variance in effects of intent on moral judgment across societies, with the purity violation scenario (Food Taboo) contributing least. If the moral intent hypothesis is least applicable in the purity domain (3), then one might expect that this is where one would find the largest amount of cross-society variation, with less cross-society variation in the role of intentions in nonpurity scenarios. In fact, however, we found the reverse, with the purity scenario exhibiting the least amount of variation in the role of intentions.
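The comparison between the expected and observed ordering can be made explicit with a short sketch. The effect sizes are the four values reported in the paragraph above; the variable names and the set `PURITY_SCENARIOS` are illustrative labels introduced here, not part of the original analysis.

```python
# Society x Intent interaction effect sizes reported in the text, per scenario.
effect_sizes = {
    "Theft": 1.54,
    "Poisoning": 0.50,
    "Physical Harm": 0.45,
    "Food Taboo": 0.00,
}
PURITY_SCENARIOS = {"Food Taboo"}  # the text treats Food Taboo as the purity violation

# Rank scenarios from most to least cross-society variance in the intent effect.
ranked = sorted(effect_sizes, key=effect_sizes.get, reverse=True)
most_variable, least_variable = ranked[0], ranked[-1]

print("Rank order:", " > ".join(ranked))
print("Purity scenario varies most across societies:", most_variable in PURITY_SCENARIOS)
print("Purity scenario varies least across societies:", least_variable in PURITY_SCENARIOS)
```

The first check corresponds to the expectation derived from prior work, and the second to what was actually observed.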

In the Mitigating Factors Bank, we investigated five factors that might serve as justifications or excuses for engaging in otherwise norm-violating behavior, thereby potentially reducing the severity of moral judgments about the act. All scenarios involved battery and included an Intentional condition that was comparable to the intentional physical harm scenario in the Intentions Bank. The mitigating factors investigated were (i) Self-Defense (perpetrator strikes someone attacking him), (ii) Necessity (perpetrator strikes someone to access a bucket to put out a fire), (iii) Insanity (perpetrator has a history of mental illness), (iv) Mistake of Fact (perpetrator believes he is intervening in a fight, but the combatants were just playing), and (v) Different Moral Beliefs (perpetrator holds the belief that striking a weak person to toughen him up is praiseworthy, in contrast to the prevailing view of the community). Each participant judged three of these scenarios, using the scales described above.

We again used ordered logit models with model comparison to arrive at a best-fit model for our data (Table 2). As in the Intentions Bank, the effect of question item (Badness, Punishment, Reputation) was small, with these judgments tracking one another across scenarios. As expected, variation in mitigating factors had a large effect on participants’ judgments; Mitigating Factor was the largest of the random effects in the best-fit model, indicating substantial cross-societal consistency in ranking of the factors, although there was also a modest Mitigating Factor-by-Society interaction, indicating some societal variation in the role of mitigating factors in moral judgment.

Table 2.

Parameters of best-fit ordered logit models for Mitigating Factors Bank

| Mitigating Factors Bank | Estimate | exp(β) | Variance | SD | SE | P |
|---|---|---|---|---|---|---|
| Fixed effects | | | | | | |
| Sex (1 = male) | −0.26 | 1.30 | | | 0.173 | 0.133 |
| Random effects | | | | | | |
| Mitigating Factor | | | 3.13 | 1.77 | | |
| Subject | | | 1.6 | 1.27 | | |
| Society × Mitigating | | | 0.725 | 0.851 | | |
| Society | | | 0.369 | 0.608 | | |
| Question item | | | 0.0685 | 0.262 | | |

Effects are reported in descending order of effect size within effect type (fixed, random).
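As an aid to reading the random-effects rows of Table 2, the sketch below checks that each reported SD is the square root of the corresponding variance, and identifies the largest variance component. The values are those printed in the table; the tolerance is an assumed allowance for rounding in the published figures.

```python
import math

# (variance, reported SD) pairs for the random effects listed in Table 2.
RANDOM_EFFECTS = {
    "Mitigating Factor": (3.13, 1.77),
    "Subject": (1.6, 1.27),
    "Society x Mitigating": (0.725, 0.851),
    "Society": (0.369, 0.608),
    "Question item": (0.0685, 0.262),
}

TOLERANCE = 0.01  # assumed allowance for rounding in the published values

for name, (variance, reported_sd) in RANDOM_EFFECTS.items():
    computed_sd = math.sqrt(variance)
    ok = abs(computed_sd - reported_sd) <= TOLERANCE
    print(f"{name:<22} var={variance:<7} sqrt={computed_sd:.3f} reported={reported_sd} match={ok}")

# The component with the largest variance is the one the text calls
# "the largest of the random effects".
largest = max(RANDOM_EFFECTS, key=lambda name: RANDOM_EFFECTS[name][0])
print("Largest random effect:", largest)
```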

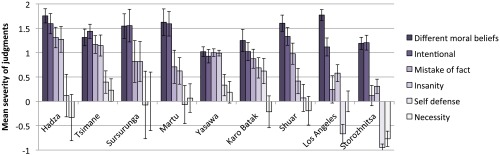

Fig. 5 shows the effect of different mitigating factors on the severity of moral judgments across societies. Across all societies, Intentional and Different Moral Beliefs were judged most harshly; in no society was Different Moral Beliefs mitigating compared with Intentional battery. Slightly below these in severity, but still judged negatively across societies, were the Mistake of Fact and Insanity scenarios, with these being highly mitigating in some societies (e.g., Los Angeles, Storozhnitsa) but not at all mitigating in others (e.g., Yasawa).

Fig. 5.

Mitigating Factors Bank: effects of mitigating factors across societies. Bars show mean severity of judgments for six mitigating factors conditions, question items pooled (Badness, Punishment, Reputation). Societies are shown in descending order of mean severity of judgment (across mitigating factors), and mitigating factors are shown in descending order of mean severity of judgment (across societies). All judgments on a five-point scale, +2 to −2: +2, very bad; 0, neutral; −2, very good. Bars indicate 95% CI. n = 322.

Virtually all societies viewed Self-Defense and Necessity as highly mitigating factors, with negative moral judgments often reduced to zero or near zero (i.e., neutral), and even toward morally positive in Western societies: Self-Defense was judged praiseworthy in Los Angeles and Storozhnitsa, as was Necessity (striking someone to access a bucket to put out a fire) in Storozhnitsa. In addition, Los Angeles and Storozhnitsa showed the largest effects of mitigating factors on moral judgments. Notably, Self-Defense and Necessity were considered mitigating in Yasawa, where we had not seen discrimination in moral judgments between High- and Low-Intent scenarios. In addition to the model reported in Table 2, in which mitigating factor is treated as a random effect, we created a binary fixed factor, High-Mitigating (Self-Defense and Necessity) vs. Low-Mitigating (all other scenarios), to estimate the effects of High vs. Low Mitigation on the odds of increased severity in moral judgments (SI Appendix, section 4). Low-Mitigating situations (i.e., situations other than Self-Defense or Necessity) increased the odds of severe judgments by a factor of 25 compared with High-Mitigating situations (P < 0.0001).
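The binary recoding described above can be sketched as follows. The grouping (Self-Defense and Necessity vs. all other conditions) and the odds factor of 25 come from the text; the function name `mitigation_level` is hypothetical, and because 25 is presumably a rounded figure, the log-odds value computed here is only approximate (the fitted coefficient is reported in SI Appendix, section 4).

```python
import math

HIGH_MITIGATING = {"Self-Defense", "Necessity"}
ALL_CONDITIONS = [
    "Intentional", "Different Moral Beliefs", "Mistake of Fact",
    "Insanity", "Necessity", "Self-Defense",
]


def mitigation_level(condition):
    """Collapse a condition into the binary factor described in the text."""
    return "High-Mitigating" if condition in HIGH_MITIGATING else "Low-Mitigating"


for condition in ALL_CONDITIONS:
    print(f"{condition:<24} {mitigation_level(condition)}")

# The text reports an odds factor of 25 for Low- vs. High-Mitigating.
# On the log-odds scale that corresponds to a coefficient of about ln(25).
ODDS_FACTOR = 25
log_odds = math.log(ODDS_FACTOR)
print(f"ln({ODDS_FACTOR}) = {log_odds:.2f}")
```

Note that the Intentional baseline falls in the Low-Mitigating group under this coding, since the text defines that group as all scenarios other than Self-Defense and Necessity.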

Our results yield several main conclusions. On the one hand, participants in all 10 societies moderated moral judgments in light of agents’ intentions, motivations, or mitigating circumstances in some way. On the other hand, the societies studied exhibited substantial variation not only in the degree to which such factors were viewed as excusing, but also in the kinds of factors taken to provide exculpatory excuses, and in the types of norm violations for which such factors were seen as relevant.

Thus, we can distinguish between two versions of the moral intent hypothesis: a strong version and a weak version. The strong moral intent hypothesis that we outlined above holds that it is a species-typical property of humans to take an agent’s reasons for action into account in making most types of moral judgments, especially judgments of moral scenarios involving harm. On this hypothesis, it would be natural to suppose that reasons for action would play much the same role in moral reasoning across societies, with intentional actions being judged worse than accidental ones, especially in the case of harm. This hypothesis was not supported by our data. There was large variation across societies in the role that intentions played in moral judgment. In some scenarios, such as Poisoning and Theft, intentions played a large role in some societies (Los Angeles, Storozhnitsa) and little or none in others (Hadza, Himba, and Yasawa; Fig. 3), despite these being judged as the most harmful scenarios across societies.

The weak moral intent hypothesis, on the other hand, holds that intentions and other reasons for action play some role in moral psychology in all societies, although that role might vary by society and by context. This version of the moral intent hypothesis was indeed supported by our data. In every society, for example, Self-Defense and Necessity were treated as legitimate excuses that mitigated the moral badness of a harmful act (battery). Moreover, nearly every society, with the exception of Yasawa, showed an effect of High vs. Low Intent on moral judgments in one or more scenarios in the Intentions Bank. Indeed, the High- vs. Low-Intent manipulation explained the largest amount of variance in judgment across societies in the Intentions Bank, with an effect size that was much larger than the Society-by-Intent interaction (Table 1). The support we found for the weak moral intent hypothesis makes sense, as evolutionary considerations support the idea that agents with the ability to monitor mental states might benefit from using intent as a predictive index of future behavior. Interestingly, no society considered different moral beliefs to be a mitigating factor in harmful acts, suggesting that at least a weak form of moral objectivism might be culturally universal or nearly so.

Our findings do not suggest that intentions and other reasons for action are not important in moral judgment. Instead, what they suggest is that the roles that intentions and reasons for action play in moral judgment are not universal across cultures, but rather, variable. One way of interpreting this is that reasoning about the sources of an agent’s action—using theory of mind and other evolved abilities (20)—is universally available as a resource for moral judgments, but it might not always be used in the same way, or even at all, in particular cases. Even in societies where we found a strong role for intentional inference in moral judgments, such as Los Angeles, there are domains where intentions do not matter, such as domains of “strict liability” (e.g., speeding).

Our study was not designed to test particular theories that might explain the societal-level variation we subsequently observed. That said, it is interesting to note that among the societies we sampled, the two that showed the largest overall effects of intentions and mitigating factors were the two most Western societies, Los Angeles and Storozhnitsa. This is consistent with the fact that most prior studies of the moral intent hypothesis, which have found strong effects of intentions on moral judgments, have been conducted in the West. However, the finding that smaller-scale societies showed more modest and variable effects of intentions suggests that the extremity of Westerners in this regard may reflect not an inherent feature of human moral evaluation, but rather the influence of norms generated by cultural evolution within the last few millennia. Although our study design does not allow us to do more than conjecture in this regard, our findings suggest that future research might reveal a relationship between the scale and structure of human societies and their norms of moral judgment. For example, it is possible that in large-scale societies where disputes must often be adjudicated by third parties on the basis of explicit standards of evidence, norms involving reasons for action might become more elaborate via processes of cultural evolution. Other factors may also be important, such as the presence or absence of witchcraft, where the overactive attribution of malevolent feelings (e.g., envy) to others—feelings that are in turn taken to be responsible for much sickness, injury, and death—can lead to cycles of violence and revenge, or notions of corporate responsibility in which members of a group, such as a kin group, are collectively held responsible for wrongdoings of individual members. 
Alternatively, it may be that the best explanation of the patterns of variation we found will take a different form, for example, in terms of evoked culture, or that cross-society variation in norms of moral reasoning is more haphazard, arising from processes of cultural drift in which idiosyncratic historical factors result in different constellations of moral norms and patterns of judgment across societies. Exploration of the question of whether there is meaningful patterning to the differences in moral reasoning we have observed across societies, functional or otherwise, and examination of different explanatory factors and frameworks, are important topics for future work.

We close by reiterating the importance of cross-cultural work, particularly in small-scale, non-WEIRD societies, for the study of human universals (17). Our results were unanticipated given the broad support for the moral intent hypothesis derived from multiple methodologies in large-scale Western societies. By using a set of standardized vignettes across a diverse sample of societies, we were able to document aspects of moral judgment that appear to be universal across societies (e.g., the roles of Self-Defense and Necessity), as well as aspects of moral judgment that appear to be highly variable (e.g., the role of intentions in morally evaluating an extreme harm, the poisoning of a village well). Not only does this kind of comparative work across diverse societies allow us to amend conclusions about human nature based solely on Western samples, it also allows us to produce more nuanced and detailed descriptions of the specific dimensions along which human psychology varies, and does not, across the globe.

Acknowledgments

Thanks to all our participants, to those who made our research possible at our field sites, and to those who helped code our data. J.H. thanks the Canadian Institute for Advanced Research. Funding for this research was provided by the United Kingdom’s Arts and Humanities Research Council (for the AHRC Culture and the Mind Project), the Rutgers University Research Group on Evolution and Higher Cognition, and the Hang Seng Centre for Cognitive Studies, University of Sheffield.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: Data are permanently deposited on the data page of the AHRC Culture and the Mind Project, www.philosophy.dept.shef.ac.uk/culture&mind/Data/IntentionsMorality/IntentionsMorality.csv.

See Commentary on page 4555.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1522070113/-/DCSupplemental.

References

- 1. Zigon J. Morality: An Anthropological Perspective. Berg; New York: 2008.

- 2. Young L, Cushman F, Hauser M, Saxe R. The neural basis of the interaction between theory of mind and moral judgment. Proc Natl Acad Sci USA. 2007;104(20):8235–8240. doi: 10.1073/pnas.0701408104.

- 3. Young L, Saxe R. When ignorance is no excuse: Different roles for intent across moral domains. Cognition. 2011;120(2):202–214. doi: 10.1016/j.cognition.2011.04.005.

- 4. Mikhail J. Elements of Moral Cognition: Rawls’ Linguistic Analogy and the Cognitive Science of Moral and Legal Judgment. Cambridge Univ Press; New York: 2011.

- 5. Krams I, Kokko H, Vrublevska J, Āboliņš-Ābols M, Krama T, Rantala MJ. The excuse principle can maintain cooperation through forgivable defection in the Prisoner's Dilemma game. Proc R Soc London Ser B. 2013;280(1766):20131475. doi: 10.1098/rspb.2013.1475.

- 6. Zelazo PD, Helwig CC, Lau A. Intention, act, and outcome in behavioral prediction and moral judgment. Child Dev. 1996;67(5):2478–2492.

- 7. Gray K, Young L, Waytz A. Mind perception is the essence of morality. Psychol Inq. 2012;23(2):101–124. doi: 10.1080/1047840X.2012.651387.

- 8. Saxe R, Kanwisher N. People thinking about thinking people. The role of the temporo-parietal junction in “theory of mind.” Neuroimage. 2003;19(4):1835–1842. doi: 10.1016/s1053-8119(03)00230-1.

- 9. Koster-Hale J, Saxe R, Dungan J, Young LL. Decoding moral judgments from neural representations of intentions. Proc Natl Acad Sci USA. 2013;110(14):5648–5653. doi: 10.1073/pnas.1207992110.

- 10. Hamlin JK. Failed attempts to help and harm: Intention versus outcome in preverbal infants’ social evaluations. Cognition. 2013;128(3):451–474. doi: 10.1016/j.cognition.2013.04.004.

- 11. Young L, Tsoi L. When mental states matter, when they don’t, and what that means for morality. Soc Personal Psychol Compass. 2013;7(8):585–604.

- 12. Duranti A. The Anthropology of Intentions. Cambridge Univ Press; Cambridge, UK: 2015.

- 13. Robbins J, Rumsey A. Introduction: Cultural and linguistic anthropology and the opacity of other minds. Anth Quart. 2008;81(2):407–420.

- 14. Miller JG, Bersoff DM. Cultural influences on the moral status of reciprocity and the discounting of endogenous motivation. Pers Soc Psychol Bull. 1994;20(5):592–602.

- 15. Cohen AB, Rozin P. Religion and the morality of mentality. J Pers Soc Psychol. 2001;81(4):697–710.

- 16. Hamilton VL, et al. Universals in judging wrongdoing: Japanese and Americans compared. Am Sociol Rev. 1983;48(2):199–211.

- 17. Henrich J, Heine SJ, Norenzayan A. The weirdest people in the world? Behav Brain Sci. 2010;33(2-3):61–83, discussion 83–135. doi: 10.1017/S0140525X0999152X.

- 18. Henrich J, Boyd R. Why people punish defectors. Weak conformist transmission can stabilize costly enforcement of norms in cooperative dilemmas. J Theor Biol. 2001;208(1):79–89. doi: 10.1006/jtbi.2000.2202.

- 19. Panchanathan K, Boyd R. Indirect reciprocity can stabilize cooperation without the second-order free rider problem. Nature. 2004;432(7016):499–502. doi: 10.1038/nature02978.

- 20. Barrett HC, Broesch T, Scott RM, He Z, Baillargeon R, Wu D, Bolz M, Henrich J, Setoh P, Wang J, Laurence S. Early false-belief understanding in traditional non-Western societies. Proc R Soc London Ser B. 2013;280(1755):20122654. doi: 10.1098/rspb.2012.2654.
