Abstract

The potential for academic research institutions to facilitate knowledge exchange and influence evidence-informed decision-making has been gaining ground. Schools of public health (SPHs) may play a key knowledge brokering role, serving as agencies of and for development. Understanding academic-policymaker networks can facilitate the enhancement of links between policymakers and academic faculty at SPHs, as well as assist in identifying academic knowledge brokers (KBs). Using a census approach, we administered a sociometric survey to academic faculty across six SPHs in Kenya to construct academic-policymaker networks. We identified academic KBs using social network analysis (SNA) in a two-step approach: First, we ranked individuals based on (1) number of policymakers in their network; (2) number of academic peers who report seeking them out for advice on knowledge translation and (3) their network position as ‘inter-group connectors’. Second, we triangulated the three scores and re-ranked individuals. Academic faculty scoring within the top decile across all three measures were classified as KBs. Results indicate that each SPH commands a variety of unique as well as overlapping relationships with national ministries in Kenya. Of 124 full-time faculty, we identified 7 KBs in 4 of the 6 SPHs. Those scoring high on the first measure were not necessarily the same individuals scoring high on the second. KBs were also situated in a wide range along the ‘connector/betweenness’ measure. We propose that a composite score, rather than traditional ‘betweenness centrality’, provides an alternative means of identifying KBs within these networks. In conclusion, SNA is a valuable tool for identifying academic-policymaker networks in Kenya. More efforts to conduct similar network studies would permit SPH leadership to identify existing linkages between faculty and policymakers, shared linkages with other SPHs and gaps so as to contribute to evidence-informed health policies.

Keywords: Evidence-informed decision-making, evidence-to-policy, Kenya, knowledge broker, schools of public health, social network analysis

Key Messages

There exist Kenyan academic faculty at schools of public health (SPH) who engage in activities and relationships that place them in unique positions as knowledge brokers and conduits for policy influence.

Using social network analysis to identify knowledge brokers can provide insight into who the advisors/resource persons for faculty in the SPHs are, who have relationships with policy makers, who can be supported and leveraged for bridging the research to policy (and vice versa) divide and which members can convene to collectively influence public health policy.

More efforts to conduct similar network studies would permit leadership at SPHs as well as government policymakers to identify existing linkages between faculty and policymakers, shared linkages with other SPHs and gaps so as to contribute to evidence-informed health policies.

Introduction

The role of academia in policy influence

Policies influenced by sound scientific evidence and best practices can improve public health outcomes (Lavis et al. 2004) and contribute to national development. Although several types of agencies such as donors, think tanks, civil society organizations, the media and research institutes can and do play a role in promoting and supporting the use of evidence in decision-making, the potential of academic institutions as mediums for knowledge exchange has gained explicit attention (van Kammen et al. 2006; Whitchurch 2008; Jansson et al. 2010; Ketelaar et al. 2010; Meyer 2010; Rycroft-Malone et al. 2011). The UK Department for Business Innovation and Skills, for example, stated that ‘[. . .] universities are the most important mechanism we have for generating and preserving, disseminating and transforming knowledge into wider society and economic benefits’ (BIS 2009).

Researchers and decision-makers are often characterized as ‘distinct communities’ whose infrequent interaction, varied priorities and incongruent timelines, amongst others, impede the flow of evidence (Innvaer et al. 2002). The gap between these two communities has been well documented in the literature (Dobbins et al. 2004; Lomas et al. 2005; Davies 2007; Ball and Exley 2010) and highlighted at global meetings (World Health Organisation 2005; WHO 2006). It is important to understand how this gap can be narrowed so that researchers and policymakers are able to nurture productive relationships, therefore contributing to public health policies that are better informed by research evidence.

One way to narrow the gap between researchers and decision-makers is to find and maintain opportunities for interaction. Although the onus of ensuring that evidence reaches decision-making domains has historically been placed upon researchers, there have been two realizations: first, the dearth of researchers’ expertise and capacity to ‘package’ their results for multiple audiences (Feldman et al. 2002; Canadian Health Services Research Foundation 2003; Choi et al. 2003; Lomas 2007; Datta and Jones 2011); second, the potential role of specialists—either dedicated knowledge officers in organizations or intermediaries/knowledge brokers (KBs) that straddle the two domains—as a means of filling this gap (Choi et al. 2005). Past debates raised concerns as to whether KBs are neutral bridges or strategic gatekeepers with advocacy agendas (Bardach 1998). Regardless of the intent of their activities, the fact that KBs lie on the plane between evidence and policy renders them important players.

Hargadon (1998) asserts that organizations that ‘consult to others—have the potential to act as KBs’. The potential therefore for institutions as KBs in the evidence-informed decision-making (EIDM) paradigm has led to experimentation with knowledge translation platforms—‘national- or regional-level institutions which foster linkage and exchange across a (health) system’ (Bennett and Jessani 2011)1. Although the roles of KBs are ambiguous and no one model to date captures the complexity of their positions (Ward et al. 2009), UK higher education institutes are increasingly developing purposeful staff appointments for what they call ‘hybrid’ or ‘blended’ professionals who have ‘mixed backgrounds and portfolios, comprising elements of both professional and academic activity’ (Whitchurch 2008).

This experimentation seems to be emerging in Africa where institutes of higher education and increasingly networks such as the Association of African Universities (AAU) are beginning to participate actively in developmental policymaking (Johnson et al. 2011). In thinking about academic KBs, Meyer’s work would suggest that they exist at the periphery of both worlds they bridge—that of academia as well as that of policy—rendering their meaning and significance unclear to both. As a consequence, academic KBs, although playing a catalytic role in leveraging intellectual capital, may suffer from lack of support, lack of training opportunities (Surridge and Harris 2007) and institutional failure to recognize and value the social processes that provide the undercurrent for successful relations (Johnston et al. 2010) and subsequent informal networks.

The need for more research on the role of individual KBs and their networks as innovative means of bridging the research and policy domains is apparent in a wide body of publications (Lomas 2007; Lairumbi et al. 2008; Johnston et al. 2010; Gagnon 2011). Only one study to date has attempted to explore the role of academic KBs, and it is embedded in the Australian context (Lewis 2006); there are no such studies that feature low-income countries. For the purpose of this study, we define academic KBs as: schools of public health (SPH) faculty who are connected to policymakers as a conduit to policy influence and serve as advisors to academic peers on EIDM or Knowledge Translation (KT).

Context: Kenya

There exist several institutions of higher education in Kenya with the majority located in the capital, Nairobi. These range from universities to vocational training institutes. Of the ∼39 universities in the country (Commission for University Education 2013), several encompass public health training and research. Research, research training, research funding and research to policy initiatives however are not confined to traditional academic bodies. For instance, the primary research arm of the Ministry of Health (MOH) in Kenya is the Kenya Medical Research Institute (KEMRI) and the policy arm of government is an autonomous think tank—Kenya Institute for Public Policy Research and Analysis (KIPPRA)—dedicated solely to assisting the government with using evidence to inform their policies in all sectors. Other organizations have emerged to promote capacity in the production (African Population and Health Research Center (APHRC), the Consortium for National Health Research (CNHR)) as well as utilization (KEMRI, Institute of Policy Analysis and Research (IPAR), African Institute for Development and Policy (AFIDEP)) of research evidence for policy and practice. The EIDM movement therefore is gaining prominence and importance in Kenya. This is further demonstrated by reference to evidence use in several policy documents such as Kenya’s Vision 2030 (Government of the Republic of Kenya 2007) amongst others.

Lairumbi et al. (2008) assert that although formal partnerships between academia and policymakers exist in Kenya, these are suboptimal, resulting in an underappreciation of the social value of research results. Anecdotal evidence from Kenya suggests that faculty from academic institutes such as SPHs have been playing a KB role; however, the extent of their reach, the relative credibility of their influence and the ways in which researchers as well as policymakers leverage them are undocumented.

Study aims

To understand the role of academic institutes in influencing health policy, we focused our study on Kenyan SPHs and the faculty within. We aimed:

To understand the architecture of the various ‘SPH-National Government’ networks in Kenya

To map the individual ‘academic faculty-policymaker’ connections that underlie the institutional networks

To identify individual persons playing a hub role and therefore serving as academic KBs.

Methods

To map and understand the professional networks of academic faculty at Kenyan SPHs, we identified all six institutions that fit the criteria of teaching and conducting research in public health in Kenya: University of Nairobi School of Public Health (SPHUoN), Kenyatta University School of Public Health (KUSPH), Kenya Methodist University (KEMU), Maseno University School of Public Health and Community Development (ESPUDEC), Moi University School of Public Health (MUSOPH), and Great Lakes University of Kisumu-Tropical Institute of Community Health (GLUK).

Data collection

The Deans of each SPH approved the study and facilitated communication with the faculty. A roster of leadership and full-time faculty was requested to estimate the number of sociometric surveys required. All faculty in the various SPHs were contacted first via email followed by text messages and/or phone calls to have a census to the extent possible. Office-bearing individuals such as Chancellors, Vice Chancellors and Departmental Chairs/Directors relevant to the SPH were identified by Deans and invited to participate in the survey. Survey instruments were piloted in advance.

Sociometric survey questions were administered orally in March 2013 by the principal researcher (N.J.) to increase question response rates and minimize problems associated with missing data. The survey instrument included demographic and socioeconomic information on each respondent including age, sex, highest academic degree obtained, countries where degree obtained, organization, years in organization, academic position, administrative position, years in position and prior or current engagement in health policies.

The suggested maximum number of contacts in a respondent’s network (referred to as ‘alters’ in social network parlance) varies from 5 to 7 for each name generator question (Miller 1956). In the context of policy networks, the minimum suggested is five (Crona and Parker 2011). To ensure capture of expected variation in heterogeneity, to consider experiences from the field of policy networks and to be respectful of the respondents’ time, we requested up to seven contacts in each of three categories of relations: A) national level policymakers (members of parliament, ministers, heads of departments) whom they know and with whom they have worked/interacted since 2008²; B) other faculty members (peers) who approach them for assistance on KT activities and C) other faculty members (peers) whom they approach for assistance on KT activities. Rather than assuming a uniform understanding of KT, we provided a list of activities that could be considered KT, which included providing/receiving: KT capacity building, advice on research to policy strategies, peer research results for use in policy discussions, assistance in KT activities such as policy dialogues, policy briefs, etc., and insights on policy priorities for research.

These relationships were selected to provide a sense of the size of the individual faculty networks relevant to evidence-to-policy activities (both with policymakers as well as within peers) as well as the size and structure of the SPH networks by aggregating the individual networks.

Many part-time staff and full-time faculty at one institution were also engaged in part-time teaching at other institutions. For this reason, we excluded part-time faculty from the study. Additionally, policymakers were not subjected to a sociometric survey; ties between faculty and policymakers are therefore unidirectional.

The study was approved by the Johns Hopkins University Bloomberg School of Public Health Institutional Review Board and the Kenyatta National Hospital/University of Nairobi Research Ethics Committee.

Data analysis

Participants were de-identified; their names were replaced with unique numerical identifiers. Responses were entered during the interview into individual data entry forms within Excel, then consolidated and imported into STATA 12 (StataCorp 2011) for descriptive and statistical analysis and into UCINet version 6.217 (Borgatti et al. 2002) for social network analysis (SNA). NetDraw 2.131 (Borgatti 2002) was employed to generate sociograms for network visualization. To overcome the concern about missing data, we retained faculty who declined or were unable to participate as nodes in the network as long as another member mentioned them. However, their personal ‘ego’ networks and their reciprocal relations do not feature in the analysis. Faculty who existed but neither participated nor were mentioned as alters were classified in the analysis as isolates and do not appear in the network maps.

Institutional network structure: SPH-national government connections

The total number of dyadic connections from individual faculty at each SPH to policymakers at each ministry was aggregated into dyadic institutional relations—also referred to as two-mode data analysis (Borgatti and Everett 1997)—to characterize the relationship between SPHs and various national level government institutions. Links between the institutions were ‘weighted’ to visualize the strength and diversity of interlocking institutional connections.
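The aggregation step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the UCINet procedure used in the study: the node identifiers, the `sph_of` and `ministry_of` lookups and the toy ties are all hypothetical.

```python
from collections import Counter

# Hypothetical faculty -> policymaker ties (Category A nominations).
ties = [
    ("F01", "PM1"),
    ("F01", "PM2"),
    ("F02", "PM1"),
    ("F03", "PM3"),
]

# Hypothetical affiliation lookups for each node.
sph_of = {"F01": "SPH_X", "F02": "SPH_X", "F03": "SPH_Y"}
ministry_of = {"PM1": "MOPHS", "PM2": "MoMS", "PM3": "MOPHS"}


def institutional_weights(ties, sph_of, ministry_of):
    """Count individual ties between each (SPH, government institution) pair."""
    weights = Counter()
    for faculty, policymaker in ties:
        weights[(sph_of[faculty], ministry_of[policymaker])] += 1
    return weights


weights = institutional_weights(ties, sph_of, ministry_of)
for (sph, ministry), weight in sorted(weights.items()):
    print(sph, ministry, weight)
```

Each count is the tie weight between one SPH and one government institution, which is what the line thickness in a weighted institutional sociogram would encode.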

Individual network structure: academic faculty-policymaker connections

The reported connections between each faculty respondent and each policymaker mentioned in the study were recorded in a matrix.

We measured the prevalence of academic-policymaker relations at each SPH in two ways: the absolute number of faculty connected to at least one policymaker and the proportion of faculty who reported at least one policymaker contact. Although respondents were restricted to a maximum nomination list of seven per category of relations, we used the maximum nomination by any one member at the SPH as well as average policymaker contacts per faculty, as indicators for breadth of relations. Depth of connections was measured by the extent of overlap of policymaker connections amongst faculty within each SPH in terms of number of shared policymaker contacts as well as the proportion of shared policymaker contacts amongst the total number of policymakers in the SPH network.
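As a rough sketch of how these indicators could be computed for one SPH, the function below takes a mapping from each faculty respondent to the set of policymakers they named. The function name, the data layout and the toy data are hypothetical, and treating a policymaker as "shared" when two or more faculty name them is our reading of the text rather than a stated rule.

```python
def sph_indicators(contacts):
    """contacts maps each faculty respondent to the set of policymakers named."""
    n_faculty = len(contacts)
    degrees = [len(pms) for pms in contacts.values()]
    connected = sum(1 for d in degrees if d >= 1)
    unique_pms = set().union(*contacts.values())
    # Assumption: a policymaker is "shared" when two or more faculty name them.
    shared = {pm for pm in unique_pms
              if sum(pm in pms for pms in contacts.values()) >= 2}
    return {
        "prevalence_n": connected,                         # faculty with >= 1 contact
        "prevalence_pct": 100 * connected / n_faculty,     # share of respondents
        "max_degree": max(degrees),                        # breadth: largest list
        "avg_degree": sum(degrees) / n_faculty,            # breadth: mean contacts
        "shared_n": len(shared),                           # depth: overlapping contacts
        "shared_pct": 100 * len(shared) / len(unique_pms) if unique_pms else 0.0,
    }


# Hypothetical SPH with four respondents.
contacts = {
    "F01": {"PM1", "PM2"},
    "F02": {"PM1"},
    "F03": set(),
    "F04": {"PM3"},
}
result = sph_indicators(contacts)
print(result)
```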

Identification of KBs

There are several methods of identifying key actors in a network using SNA. We used measures of centrality to highlight the relative position within the three categories of relationship (A–C) introduced earlier:

A) Outdegree to policymakers: respondents who mention a large number of policymakers who they know and reach out to are considered to possess high outdegree centrality and are considered influential.

B) Indegree from peers: actors who are named by others as people that they reach out to for introductions to policymakers, for advice on KT and/or for knowledge on the policy process enjoy high indegree centrality.

C) Outdegree to peers: similar to A earlier, captures the size of the peer network a respondent reaches out to for reasons similar to that of category B.

In addition, we also used betweenness centrality as an indicator of the extent to which one actor is connected to others who are not connected to each other. Persons with high betweenness centrality serve as bridges and key players in the flow of ideas between different clusters of people (Freeman 1980).
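For readers less familiar with these measures, the sketch below computes outdegree, indegree and standard Freeman betweenness on a toy network. It is not the modified betweenness calculation used in this study: treating ties as undirected for the betweenness step is an assumption made for illustration, and the node labels are hypothetical.

```python
from collections import deque


def degree_counts(edges):
    """Return (outdegree, indegree) dicts for directed (source, target) ties."""
    out_deg, in_deg = {}, {}
    for src, dst in edges:
        out_deg[src] = out_deg.get(src, 0) + 1
        in_deg[dst] = in_deg.get(dst, 0) + 1
        out_deg.setdefault(dst, 0)
        in_deg.setdefault(src, 0)
    return out_deg, in_deg


def betweenness(edges):
    """Freeman betweenness on the undirected version of the ties (Brandes' algorithm)."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    score = dict.fromkeys(adj, 0.0)
    for s in adj:
        # Breadth-first search from s, counting shortest paths (sigma).
        order, preds = [], {v: [] for v in adj}
        sigma = dict.fromkeys(adj, 0)
        dist = dict.fromkeys(adj, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate pair dependencies from the farthest nodes back to s.
        delta = dict.fromkeys(adj, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    # Each unordered pair was counted once from each endpoint.
    return {v: b / 2 for v, b in score.items()}


# Hypothetical ties: F01 names two policymakers and is named by two peers.
edges = [("F01", "PM1"), ("F01", "PM2"), ("F02", "F01"), ("F03", "F01")]
out_deg, in_deg = degree_counts(edges)
bc = betweenness(edges)
print(out_deg["F01"], in_deg["F01"], bc["F01"])
```

In this toy network F01 sits on every shortest path between the other four nodes, so it holds all of the betweenness while the remaining nodes score zero.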

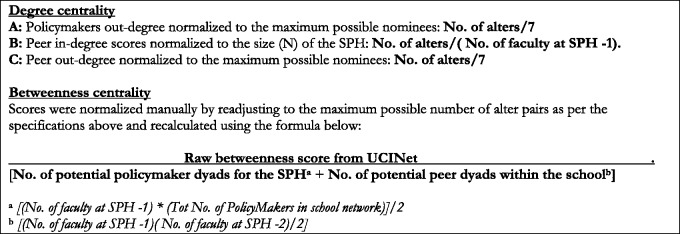

To identify faculty who could be academic KBs, we used all four measures mentioned earlier. Detailed descriptions of our calculations for measures of degree centrality and betweenness centrality are presented in Figure 1.

Figure 1.

Calculations for measures of degree and modified betweenness centrality

We reviewed the distributions of the four different scores, and faculty members were subsequently classified as KBs if their normalized scores fell within the top 10% for Category A, Category B and Betweenness. We reasoned that a combination of a faculty member’s expanse of relations with policymakers (Category A), the reliance his/her peers had on him/her with respect to KT-like expertise (Category B) and his/her relative betweenness position in the network (which considers Categories A, B and C) provided an alternative means of capturing their structural role through their functional role, rather than relying on any one single indicator. This reflects the belief that academic KBs do not assume a structural position in the network only as a conduit for controlling information flow (as measured by betweenness centrality), but that their position is possible due to their popularity amongst peers as well as their influence with policymakers, each of which is captured through direct rather than indirect ties.
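The classification rule can be illustrated with a short sketch. How ties at the cut-off are handled is not specified in the text, so the rule below (keep everyone at or above the score of the person ranked at the 10% mark, and ignore zero scores) is an assumption, as are the function names and the toy scores.

```python
import math


def top_decile(scores):
    """Return the IDs at or above the top-10% cut-off (ties kept, zeros excluded)."""
    ranked = sorted(scores.values(), reverse=True)
    k = max(1, math.ceil(0.10 * len(ranked)))
    cutoff = ranked[k - 1]
    return {fid for fid, s in scores.items() if s >= cutoff and s > 0}


def classify_kbs(out_pm, in_peer, between):
    """Faculty in the top decile on all three normalized measures."""
    return top_decile(out_pm) & top_decile(in_peer) & top_decile(between)


# Hypothetical normalized scores for ten faculty members.
ids = [f"F{i:02d}" for i in range(1, 11)]
out_pm = dict(zip(ids, [100.0, 100.0, 57.14, 28.57, 14.29, 14.29, 0, 0, 0, 0]))
in_peer = dict(zip(ids, [63.89, 25.0, 35.71, 10.0, 5.0, 0, 0, 0, 0, 0]))
between = dict(zip(ids, [22.74, 14.25, 9.86, 1.0, 0, 0, 0, 0, 0, 0]))

kbs = classify_kbs(out_pm, in_peer, between)
print(sorted(kbs))
```

Because tied scores are kept, the top decile on a single measure can contain more than 10% of faculty; only those who clear the cut-off on all three measures are retained.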

Finally, to ensure that the selection of indicators was reflective of our expectations, we used Pearson’s correlation to explore the association between the various centrality scores.
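For reference, Pearson’s correlation between two sets of centrality scores can be computed as in the sketch below. The study used STATA for this step; the function and the toy vectors here are hypothetical and only illustrate the formula.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    # Undefined (division by zero) if either sequence is constant.
    return cov / math.sqrt(var_x * var_y)


# Hypothetical scores for three faculty members on two measures.
r = pearson([1, 2, 3], [1, 3, 2])
print(r)
```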

Results

We interviewed 124 of 157 full-time faculty, or between 81 and 94% of faculty onsite at each institution at the time of the study. For the purposes of the sociometric analyses, all faculty who were mentioned (regardless of employment status, ability to partake in study, academic department or higher leadership beyond the SPH for example Chancellors, Principal, Heads of Research Directorates, etc.) and all policymakers who were mentioned were retained in the network to allow for a complete network analysis. This yielded a total analytical sample of 168 faculty across the six schools (Table 1). There were 204 total mentions of policymakers (109 unique names, 95 recurring names) comprising 16 unique national government institutions including the Office of the President, the Office of the Prime Minister, Kenyan Parliament and 13 ministries. The majority of policymakers mentioned were senior officials ranging from current or former: Prime ministers (1), members of parliament (3), permanent secretaries (6), ministers (5), ministry directors (11), chief officers (11) and department and division heads (31) across the various ministries. Several deputies of the earlier positions were also mentioned (20). Program managers and project officers were amongst the minority (21).

Table 1.

Overview of SPH respondents and associated policymaker connections

| Institution | No. full-time SPH faculty | No. respondents | No. faculty mentioned in the surveys (a) | No. policymaker contacts mentioned by respondents | No. unique policymaker contacts | No. gov’t institutions connected to each SPH |
|---|---|---|---|---|---|---|
| MUSOPH | 27 | 22 | 29 | 43 | 36 | 10 |
| SPHUoN | 17 | 15 | 17 | 34 | 27 | 8 |
| GLUK | 34 | 29 | 37 | 49 | 27 | 8+ |
| ESPUDEC | 29 | 24 | 31 | 21 | 16 | 8 |
| KEMU | 27 | 17 | 31 | 17 | 15 | 7 |
| KUSPH | 23 | 17 | 23 | 40 | 31 | 6 |
| Total | 157 | 124 | 168 | 204 | Unique PMs across all SPHs: 109 | 16 |

(a) The number of faculty in this column differs from that in the previous one to the extent that it includes leadership external to the SPH who were mentioned as relevant to the study (e.g. principal, chancellor, director of research, etc.).

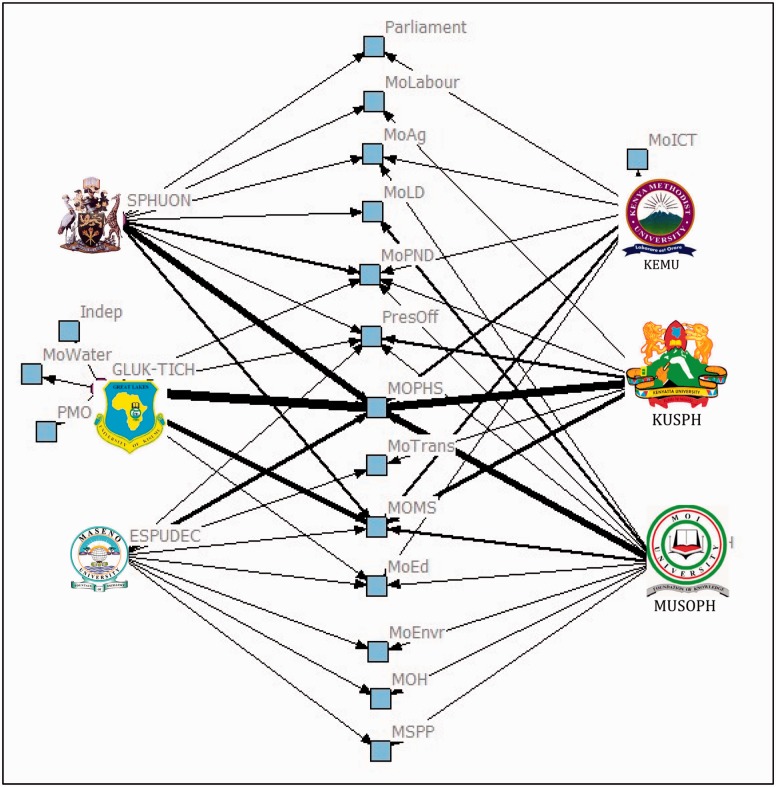

Institutional network structure: SPH-national government connections

As depicted in Figure 2 and Table 2, each SPH commands a variety of relationships with national government policymaking institutions. In the figure, the ties are weighted so that the greater the number of individual faculty-policymaker relations between an SPH and the complement government institution, the thicker the lines between them.

Figure 2.

‘Weighted’ institutional connections between Schools of Public Health (SPHs) and National Government agencies

Table 2.

Characteristics of academic-policymaker relations across Kenyan Schools of Public Health (SPHs)

| Institution | (1) No. full-time faculty respondents | (2) Total no. PMs mentioned | (3) No. unique PMs | (4) Network size | (5) Prevalence of PM relations (a): no. | (5) Prevalence of PM relations (a): % | (6) Diversity of academic-policymaker relations: max degree (b) | (6) Diversity of academic-policymaker relations: avg degree | (7) Diversity of academic-policymaker relations: no. shared (c) PMs | (7) Diversity of academic-policymaker relations: % shared PMs |
|---|---|---|---|---|---|---|---|---|---|---|
| MUSOPH | 22 | 43 | 36 | 60 | 16 | 72 | 7 | 1.95 | 5 | 14% |
| SPHUoN | 15 | 34 | 27 | 42 | 12 | 80 | 4 | 2.27 | 4 | 15% |
| GLUK | 29 | 49 | 27 | 57 | 16 | 55 | 7 | 1.69 | 9 | 33% |
| ESPUDEC | 24 | 21 | 16 | 39 | 13 | 52 | 3 | 0.88 | 4 | 25% |
| KEMU | 17 | 17 | 15 | 34 | 7 | 41 | 6 | 1.00 | 2 | 13% |
| KUSPH | 17 | 40 | 31 | 48 | 12 | 71 | 7 | 2.35 | 5 | 16% |
| TOTAL | 124 | 204 | Unique PMs across all SPHs: 109 | | 76 | 61 | n/a | n/a | | |

(a) Prevalence of academic-policymaker relations: absolute no. of faculty connected to ≥1 policymaker; proportion of same (Col 5/Col 1).

(b) Degree of academic-policymaker relations: maximum no. of policymaker (PM) contacts mentioned by any one faculty at the SPH; avg no. of relations (Col 2/Col 1).

(c) Shared academic-policymaker relations: total no. of shared policymaker (PM) contacts in network; proportion of relations shared (Col 7/Col 3). Bolded entries are rows of cumulative totals so as not to be confused with the rows above.

The number of policymakers connected to an SPH via faculty respondents ranged from 15 to 36 per SPH. Although all SPHs have connections to the Ministry of Public Health and Sanitation (MOPHS) and the Ministry of Medical Services (MoMS), the other government institutions to which each SPH has connections ranged in number (from 6 to 10 ministries) and in type. For instance, GLUK displayed unique connections to the Prime Minister’s Office (PMO) as well as the Ministry of Water (MoWater). The connection to MoWater, however, was rooted in a relationship cultivated during the same policymaker’s tenure at MOPHS. KEMU was the only academic institution demonstrating connections to the Ministry of Information, Communication and Technology (MoICT). Although we witness a variety of unique connections among the SPHs, we also note a number of overlapping networks indicating shared relations and research interests, both within as well as across SPHs. For instance, niche areas with high specialization, such as the integration of human, domestic animal and wildlife disease surveillance and control, manifest as a shared connection between MUSOPH and SPHUoN to the Ministry of Livestock and Development through the OneHealth Initiative East and Central Africa (OHCEA).

Individual network structure: academic faculty-policymaker connections

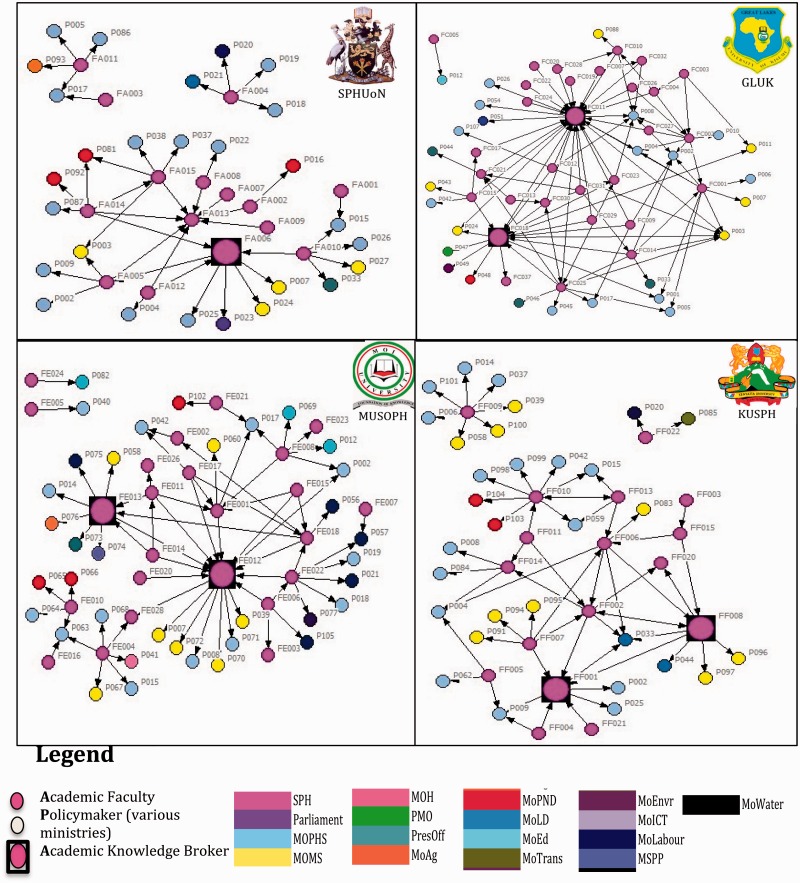

Whole network maps (sociograms) for each SPH were drawn using a combination of all three categories of individual relationships and therefore capture connections between each individual faculty member, their respective peers and policymakers. Isolated faculty were excluded to provide a more complete appreciation of the network. Figure 3 depicts the sociograms of the six SPHs. The various colours delineate SPH academic faculty from policymakers across the various government institutions.

Figure 3.

Academic Knowledge Brokers (KBs) and their position within the academic-policymaker networks

Although all SPHs demonstrated multiple connections to policymakers, the distribution of these relationships varied across schools as depicted in Table 2. The cells representing the highest scores within each indicator are bordered in bold.

Across all SPHs, the average number of policymaker contacts per faculty was 2 (Mean 1.62, SD 1.95, range 0.88–2.35) with a median of 1. Approximately 5% of surveyed faculty listed the maximum allowable of seven policymaker alters. These were faculty at MUSOPH, GLUK and KUSPH. Forty-eight faculty (38%) mentioned not knowing any decision-makers at the national level (Mode = 0).

The absolute prevalence of academic-policymaker relations at each SPH ranged from 7 to 16 and the percentage of faculty possessing policymaker contacts ranged from 41 to 80%. Although some faculty at the various SPHs demonstrate relations with a diversity of policymakers (up to a maximum of seven), this was not uniform, as demonstrated by the average number of relations ranging from 0.88 to 2.35 policymaker contacts per faculty. Duplication of relations by way of overlapping and shared connections with policymakers among faculty within the same SPH shows a smaller range of 13–33%. Of particular note are SPHUoN and GLUK, each of which indicates relations with 27 unique policymakers. The proportions of these that were shared amongst faculty, however, were 15 and 33%, respectively. Different SPHs demonstrated different sources of strength within their academic-policymaker networks, and no one SPH scored the highest or lowest on all indicators.

As depicted in the sociograms in Figure 3, there were pockets of academic faculty within all the SPHs except ESPUDEC, who while externally well connected (outdegree ≥ 4 policymaker contacts), appeared to be internally disconnected (e.g. FF009 at KUSPH). This was heightened at SPHUoN (4/12: 33%) and MUSOPH (4/16: 25%). There were also faculty who exhibited high indegree but no reported policymaker contacts (e.g. FB027 at KEMU).

Identification of KBs

Normalized outdegree centrality scores for Category A (policymaker alters) ranged from 0 to 100 (Absolute number of alters ranged from 0 to 7) with 6/124 (∼5%) of surveyed faculty across the six SPHs indicating knowing seven (or more) policymakers. Twenty-one faculty scored in the top 10% corresponding to ≥4 policymaker alters (normalized scores between 57.14 and 100). The top 10 percentile of normalized indegree centrality scores in Category B (peer alters) fell between 10.71 and 63.89 comprising 17 faculty. The top 10 percentile of normalized betweenness centrality scores fell between 2.97 and 22.74 comprising 17 faculty.

There were seven faculty members who consistently scored in the top 10 percentile across the three measures and were therefore considered KBs by this definition. The 7 KBs are each enlarged and enclosed in a square in Figure 3. They represent the SPHs as follows: SPHUoN (1), MUSOPH (2), GLUK (2) and KUSPH (2). They ranged in age from 44 to 67 years, only one was female, all possessed a medical and/or PhD degree at the minimum, and had been at their respective SPHs between 4 and 23 years. Their academic positions varied from Lecturers to Professors. However, 6 out of 7 KBs had previously held or currently held administrative positions of responsibility and leadership such as department heads, deans, vice chancellors and chancellors. All but one KB pursued one of their degrees in a foreign country. Three of the KBs held current positions of leadership within the SPH: 1 from KUSPH and 2 from GLUK. Of the three KBs currently in leadership positions, all were men who had well-established academic careers (associate or full professors), and had been with their respective institutions for over 10 years. Their highest level of education comprised medical and/or doctorate degrees with qualifications obtained abroad in addition to Kenya. Furthermore, they all indicated having had extensive direct experience working with the various Ministries of Health, either holding previous positions of authority or in an advisory capacity.

Although seven faculty members were identified as KBs through the SNA, their network profiles varied (Table 3). Correlation analysis across the scores (Table 4) yielded a small correlation (0.14) between Categories A and C, indicating that people with a strong network of policymaker relations are less likely to reach out to their peers for assistance in introductions to policymakers, in learning about the policy cycle, or in exploring methods to access or communicate with them. However, we do see a higher correlation between Categories A and B (0.41), whereby those with high policymaker contacts are more likely to have higher demand from their peers for assistance. The lowest correlation was between Categories B and C (0.06); that is, those who rarely seek out their peers for policy-relevant assistance similarly have fewer peers seeking them out for the same. Betweenness centrality scores indicate highest correlation with Category B (0.80) followed by Category A (0.56).

Table 3.

Centrality measures across the seven SNA-identified Academic Knowledge Brokers

| Identification Code | Outdegree to policymaker | Outdegree to policymaker normalized | Indegree from peers | Indegree from peers normalized | Peer and PM betweenness centrality | Peer and PM betweenness centrality (normalized)*100 |
|---|---|---|---|---|---|---|
| FC011 | 7 | 100.00 | 23 | 63.89 | 253.78 | 22.74 |
| FC018 | 7 | 100.00 | 9 | 25.00 | 159.13 | 14.25 |
| FE012 | 7 | 100.00 | 10 | 35.71 | 87.00 | 9.86 |
| FF008 | 4 | 57.14 | 3 | 13.64 | 46.50 | 8.12 |
| FF001 | 4 | 57.14 | 5 | 22.73 | 34.33 | 6.00 |
| FA006 | 4 | 57.14 | 3 | 18.75 | 24.00 | 7.14 |
| FE013 | 6 | 85.71 | 3 | 10.71 | 42.00 | 4.76 |

Outdegree to policymaker (PM) is normalized to 7 potential nominees: #alters/7.

Indegree from peers is normalized to size of school: #alters/(N − 1).

Peer&PM betweenness centrality includes isolates and is normalized to (# potential PM dyads for the SPH [all PMs mentioned by faculty at that SPH] + # dyads within the school).
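The two degree normalizations in these notes can be checked against Table 3 with a few lines of Python. The function names are ours, and the school size of 37 used for FC011 is an assumption: it is the faculty count that reproduces the tabulated value and matches the number of faculty mentioned for GLUK in Table 1.

```python
MAX_PM_NOMINEES = 7  # cap on policymaker nominations per respondent


def normalized_outdegree(n_policymakers):
    """Outdegree to policymakers as a percentage of the seven allowed nominees."""
    return 100 * n_policymakers / MAX_PM_NOMINEES


def normalized_indegree(n_peer_nominations, school_size):
    """Indegree from peers as a percentage of the N - 1 possible nominating peers."""
    return 100 * n_peer_nominations / (school_size - 1)


# FC011 in Table 3: 7 policymaker alters, 23 peer nominations.
# school_size=37 is an assumption (see the lead-in above).
print(round(normalized_outdegree(7), 2))      # 100.0
print(round(normalized_indegree(23, 37), 2))  # 63.89
```

The same arithmetic with 4 policymaker alters gives 57.14, the lowest normalized outdegree among the seven KBs.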

Table 4.

Correlation analysis across the four SNA scores

| | Outdegree to policymaker (Normalized) | Indegree from peers (Normalized) | Outdegree to peers (Normalized) | Peer and PM betweenness centrality (Normalized) |
|---|---|---|---|---|
| Outdegree to policymaker (Normalized) | 1 | | | |
| Indegree from peers (Normalized) | 0.4096 | 1 | | |
| Outdegree to peers (Normalized) | 0.1429 | 0.0603 | 1 | |
| Peer and PM betweenness centrality (Normalized) | 0.5588 | 0.7983 | 0.1997 | 1 |

Discussion

Academic networks, like policy networks, are nebulous and therefore difficult to assess. Relationships are likely to be informal and dynamic in nature (Cross et al. 2002). Due to its potential for making invisible networks visible, and recognizing strategic but under-utilized collaborations (Cross et al. 2002), SNA provided us with a novel method to understand academic-policymaker networks of public health faculty across six SPHs in Kenya. Unlike more prevalent methods of explicit actor identification such as stakeholder mapping or reference to formal organograms (Brugha and Varvasovszky 2000) that assume knowledge on actor role, power and interests, SNA reveals actors and their socially constructed roles through more quantitative methods. Additionally, SNA illustrates the relations between actors, which is important for knowledge brokering. Similar to other studies utilizing SNA to examine research and policy networks (Wonodi et al. 2012; Shearer et al. 2014), we explored the individual relationships between academic faculty and policymakers, depicted how these manifest institutionally for each SPH and identified seven academic KBs.

Individual connections

Given that SNA is used to study networks predominated by individual rather than organizational connections (Drew et al. 2011) as well as networks of communication channels between decision-makers and stakeholders (Ragland et al. 2011), it provided us with some understanding of relationships between academic faculty and those with whom they interact, as well as their relative position in these networks. The existence of academic KBs in some SPHs with relationships spanning several government institutions indicates that communication patterns revolve around key individuals within the network (Dunn and Westbrook 2011; Lewis 2006), regardless of organizational affiliation. Furthermore, it implies that KBs understand the complexities of both arenas, academia and policy, and are able to navigate them. This implication is reinforced by qualitative interviews that not only support identification of those who appeared as KBs in this SNA but also indicate a nuanced understanding of the politics of policymaking and strategies for engaging with policymakers for the purposes of EIDM (Jessani et al., Forthcoming (a)). Moreover, they reveal unique sociodemographic attributes, professional competencies, experiential knowledge, interactive skills and personal disposition amongst KBs (Jessani et al., Forthcoming (b)).

In addition to the SNA-identified academic KBs, a distinct set of academic faculty appeared who were externally influential but not necessarily internally prominent, and vice versa. These individuals may be latent and plausibly ‘potential’ KBs. Although correlational analysis indicated that those with more policymaker contacts were more likely to be in higher demand from their peers for assistance (correlation 0.41, Table 4), it is surprising that this correlation was not higher.

For individuals who have limited opportunities for direct interaction, we see evidence of connectivity through KBs, that is, members of a network who bridge ‘structural holes’ (Burt 1992; 2005; Granovetter 1973). Based on correlations with betweenness centrality, it appears that those who are prominent as KT advisors within the SPH (correlation 0.80) have a greater proclivity to be KBs than those with greater policymaker contacts alone (correlation 0.56). Further research to better understand the drivers of such relationship building (tie formation) and their differential effects would contribute to understanding how to leverage the expertise in an SPH, encourage capacity strengthening and develop expanded networks. This may have implications for the type of social capital (networks) as well as human capital (attributes, skills and capacities) that are required for academic faculty to effectively assist with KT and evidence-to-policy activities. In addition, an exploration of the ‘potential KBs’, that is, those faculty who scored high on two of the three indicators, would assist in understanding what they need in order to fully realize their potential, should they wish to do so.

Geographic proximity to Nairobi, and therefore to policymakers, appears likely to contribute to knowledge brokering in what was still a nationally centralized governance system at the time. MUSOPH and GLUK, although in the Western Provinces, had a campus in the capital Nairobi and therefore were similar to SPHUoN and KUSPH in their access to policymakers and the policy environment in Kenya. ESPUDEC, one of the SPHs that did not appear to have a KB, was focused on rural public health and it is likely that, similar to other geographically distant SPHs, its influence occurred primarily at the local or regional levels. Koon et al. (2013) indicate that while geographical proximity to policymakers may lead to greater ‘embeddedness’ in a network, more distal organizations can enhance their centrality through strong linkages to policymakers. SPHs without representation in the capital should consider exploring more diverse and effective methods of engagement with policymakers if they wish to influence national policy. However, as decentralization unfolds in Kenya, universities with a presence outside of Nairobi may become increasingly important in terms of informing sub-national policies.

Institutional networks

Among the individual connections appeared a web of distinct as well as overlapping collective networks. Although each SPH—by virtue of its faculty connections—demonstrated relative monopoly with some policymakers, the various SPH networks were linked through shared relations with other policymakers. The choice and ability to engage with relevant policymakers was likely driven by, amongst other things, the research priorities of the SPH as well as the relationships cultivated by individual faculty reflecting to some extent Haas’ description of epistemic communities: ‘…a network of professionals with recognized expertise and competence in a particular domain and an authoritative claim to policy relevant knowledge within that domain or issue-area’ (Haas 1992).

Furthermore, SPHs with connections spanning fewer government institutions likely conducted research in niche areas with high specialization, as illustrated for example by KEMU’s links to the Ministry of Information, Communication and Technology. Those whose connections fanned a multitude of organizations reflected perhaps not only the size of the SPH but also their engagement in a greater variety of research topics. The history of SPHUoN as an SPH that absorbed faculty from other health disciplines speaks to this breadth of research interests as well as the diversity of relations that they brought with them. Social network mapping therefore, while capturing dynamic relations in a static format, urges us to consider elements of strategic relations that persist over time in addition to instrumental relations that were pertinent at the time of the study.

Measuring the quantity of shared connections between faculty in an SPH and any one policymaker may cast light on the embeddedness of the policymaker in an SPH network, and the extent of potential institutional influence on the policymaker (and by extension on their organization). For instance, faculty within an SPH who share connections with the same policymakers may illustrate closer institutional ties, such as at GLUK, where faculty members shared relations with 9/27 (33%) of the policymakers mentioned. The persistence of relations with a small pool of policymakers, which in GLUK’s case is the Division of Community Health Services, may also reflect the saliency of a particular interest: community health and human resources for health. The benefits of multiple shared connections render the network less reliant on any one particular individual and therefore more stable (Burt 1992; Burt et al. 1998). However, redundant relations could also subject the network to insularity at the expense of diversity and greater reach. Additionally, similar interests could also stimulate a more competitive environment unless well coordinated by the institutions.
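The share of policymakers with whom faculty at one SPH have shared relations can be computed directly from the nomination lists. The following Python sketch assumes that a ‘shared’ policymaker is one named by at least two faculty members of the same SPH, which is our reading of the 9/27 figure rather than a definition given in the text. The identifiers and nominations are hypothetical.

```python
from collections import Counter


def shared_policymaker_share(nominations):
    """Return (shared, total, share) for one SPH.

    nominations maps each faculty ID to the set of policymaker IDs they named.
    A policymaker counts as shared when named by two or more faculty
    (ASSUMED definition).
    """
    counts = Counter(pm for named in nominations.values() for pm in named)
    total = len(counts)
    shared = sum(1 for times_named in counts.values() if times_named >= 2)
    share = shared / total if total else 0.0
    return shared, total, share


# HYPOTHETICAL nominations for a three-person school (not study data).
example_nominations = {
    "F1": {"PM1", "PM2", "PM3"},
    "F2": {"PM2", "PM4"},
    "F3": {"PM2", "PM3", "PM5", "PM6"},
}

shared, total, share = shared_policymaker_share(example_nominations)
print(f"{shared}/{total} ({share:.0%})")  # 2/6 (33%)
```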

Constructing measures of knowledge brokering

Although the literature suggests using the betweenness score for the identification of KBs (Burt 2004; 2005; Granovetter 1973), we used a multiple-indicator composite that is a function of scores on Category A–C relationships, each normalized on manually recalculated network size. Individuals scoring in the top decile on all three indicators were subsequently identified as KBs.

KBs’ betweenness centrality scores demonstrated great variation and spread (Table 3). Furthermore, deeper analysis of those who scored high only on betweenness centrality demonstrated that they were connected to ‘potential KBs’ (those scoring high on Category A (outdegree to policymakers) or Category B (indegree from peers) only) rather than being KBs themselves under our definition. An example of this is FF013 in Figure 3, who scored relatively high on betweenness centrality and fell within the top 10% of faculty on this metric. Upon further analysis, it appears that this is due to his being on the ‘shortest path’ to FF010 (high Category A score) and FF006 (high Category B score), each of whom scores high on at least one dimension. This further illustrates our hesitancy to depend solely on the betweenness centrality score to identify our academic KBs.
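The pattern described above, in which a sparsely connected individual attains high betweenness merely by lying on the shortest paths between two well-connected colleagues, can be illustrated on a toy network. The Python sketch below computes unnormalized betweenness with Brandes' algorithm on an undirected, unweighted graph. Treating ties as undirected is a simplifying assumption, and the node names (`bridge`, `hub_pm`, `hub_peer` and their neighbours) are hypothetical and do not correspond to any faculty in the study.

```python
from collections import deque


def betweenness(adjacency):
    """Unnormalized betweenness for an undirected, unweighted graph (Brandes)."""
    score = {node: 0.0 for node in adjacency}
    for source in adjacency:
        # Breadth-first search recording shortest-path counts and predecessors.
        order = []
        predecessors = {node: [] for node in adjacency}
        n_paths = {node: 0 for node in adjacency}
        distance = {node: -1 for node in adjacency}
        n_paths[source], distance[source] = 1, 0
        queue = deque([source])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adjacency[v]:
                if distance[w] < 0:
                    distance[w] = distance[v] + 1
                    queue.append(w)
                if distance[w] == distance[v] + 1:
                    n_paths[w] += n_paths[v]
                    predecessors[w].append(v)
        # Accumulate dependencies from the farthest nodes back to the source.
        dependency = {node: 0.0 for node in adjacency}
        for w in reversed(order):
            for v in predecessors[w]:
                dependency[v] += n_paths[v] / n_paths[w] * (1 + dependency[w])
            if w != source:
                score[w] += dependency[w]
    # Every undirected pair was visited from both of its endpoints.
    return {node: value / 2 for node, value in score.items()}


def build_adjacency(edges):
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


# HYPOTHETICAL network: hub_pm knows three policymakers, hub_peer is named by
# three peers, and bridge is tied only to the two hubs.
edges = [
    ("hub_pm", "PM1"), ("hub_pm", "PM2"), ("hub_pm", "PM3"),
    ("hub_peer", "P1"), ("hub_peer", "P2"), ("hub_peer", "P3"),
    ("hub_pm", "bridge"), ("bridge", "hub_peer"),
]
result = betweenness(build_adjacency(edges))
print(result["bridge"], result["hub_pm"], result["hub_peer"])  # 16.0 18.0 18.0
```

In this toy case `bridge` has only two ties and no policymaker contacts of its own, yet its betweenness (16) is close to that of the two hubs (18), which is why betweenness alone can flag individuals who would not meet the other two criteria.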

By normalizing the scores, we can control for the size of the SPHs. By triangulating the three scores, we can better understand which score is the greater driver of KB identification. There is therefore no centrality score per se that can be transposed to other studies to identify KBs, as these scores are a function of a multitude of factors. However, using thresholds (in this case the top decile across normalized scores for outdegree to policymakers, indegree from peers and betweenness centrality) can be a useful way of classifying KBs in a network.
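The threshold rule can be expressed compactly. The Python sketch below is one possible operationalization, not a record of the procedure run in the study: it assumes that the top decile on each score is defined by rank (the highest-scoring 10% of faculty, with ties at the cut-off included and zero scores excluded), labels those meeting all three thresholds as KBs and those meeting two as ‘potential KBs’. The twenty faculty and their scores are hypothetical.

```python
def top_share_cutoff(values, top_percent=10):
    """Lowest score that still ranks within the top `top_percent` of values."""
    k = max(1, len(values) * top_percent // 100)
    return sorted(values, reverse=True)[k - 1]


def classify_faculty(scores, top_percent=10):
    """scores maps faculty ID -> (outdegree to PM, indegree from peers,
    betweenness), all normalized. Returns faculty ID -> label."""
    cutoffs = [
        top_share_cutoff([triple[i] for triple in scores.values()], top_percent)
        for i in range(3)
    ]
    labels = {}
    for faculty_id, triple in scores.items():
        # A zero score never counts as meeting a threshold.
        met = sum(
            1 for value, cutoff in zip(triple, cutoffs)
            if value > 0 and value >= cutoff
        )
        if met == 3:
            labels[faculty_id] = "KB"
        elif met == 2:
            labels[faculty_id] = "potential KB"
        else:
            labels[faculty_id] = "not a KB"
    return labels


# HYPOTHETICAL normalized scores for 20 faculty (not study data).
scores = {f"F{i:02d}": (0.10, 0.05, 0.01) for i in range(1, 21)}
scores["F01"] = (0.86, 0.40, 0.09)  # high on all three measures
scores["F02"] = (0.71, 0.04, 0.02)  # many policymaker contacts, little peer demand
scores["F03"] = (0.14, 0.35, 0.08)  # sought out by peers, few policymaker contacts

labels = classify_faculty(scores)
print(labels["F01"], "|", labels["F02"], "|", labels["F03"])
# KB | not a KB | potential KB
```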

This modified approach provided us with an alternate means of identifying academic KBs within a network. Other methods for identification of KBs could be explored, particularly when multiple disparate networks such as SPHs are connected to common and overlapping networks of policymakers through the strategic position of key actors in the overall web. In addition, future research that validates the structural measure of KB against a set of behavioural traits or observed activities, or the content and quality of knowledge brokering exchanges would be important.

Implications

With academic faculty spanning a national policymaker network of 109 across 16 agencies, it would appear that social capital exists, is fairly large, and is widely distributed. Irrespective of the size of the individual SPH networks and the number of KBs within each, the fact that there are six SPHs with academic-policy networks that, while unique in and of themselves, have a fair amount of overlap is encouraging. As Bennett et al. (2011) assert, research networks serve to ‘strengthen the focus on national research priorities, enhance capacity through bringing together researchers with differing disciplinary skills and facilitate longer-term trust-based’ relations. Combined efforts of networks of multiple actors are likely to increase the chances of policy impact (Greene and Bennett 2007). The six SPHs in Kenya, each with varied specialties, unique experiences and overlapping interests, therefore provide a unique opportunity for unification, coalition building and collective action amongst them with the aim to influence policy as well as respond with research that is relevant for policymakers.

Revealing the existence of academic KBs has implications for SPHs with respect to maintaining their relevance, role and relationships in a systematic way. Recognition, reward and retention of these valuable faculty might be one such consideration. Although qualitative data would reveal the incentives (or disincentives) for faculty to engage in policy influence, it would behoove SPHs and other academic institutions to consider metrics of performance for this cadre and develop mechanisms to encourage skills in KT, brokering and networking.

Limitations

The study focused on faculty at SPHs and their networks with national policymakers. However, it is quite common for research relevant to public health policy to occur in faculties of medicine, nursing, agriculture, economics, etc. Therefore, this study likely does not capture the full gamut of ‘public health’ researchers, and their associated networks, that influence policymakers in Kenya.

Freelisting likely elicits close and recent contacts, thereby serving as a proxy for relationship strength; however, it could also overestimate tie strength, underestimate network density and fail to capture more infrequent and distant alters, also referred to as ‘weak ties’ (Brewer et al. 1999). Concerns about respondents truncating their reported lists of alters due to sensitivity and fear of exposure were addressed by reassuring respondents of confidentiality and creating unique identifiers for all alters. Respondent fatigue, sensitivity towards naming alters and forgetting likely contributed to a limited list of alters in some cases, leading to ‘node-level’ missing data. To improve recall and capture weak ties, we used probes such as role cues (types of relationships), location cues (places where people interact) and chronological cues (prominent events during the period of interest) (Brewer and Garrett 2001). Social desirability bias may have led some respondents to report policymaker contacts based on brief rather than substantial interactions in order to appear more connected. In both instances, incomplete or inflated information may have affected the importance of individuals in the networks and their relative placement and classification as brokers or non-brokers.

We were unable to conduct sociometric surveys with all policymakers mentioned in the network and therefore unable to verify the bidirectionality of relationships. To address the concerns about reciprocal relations, dyadic relations can be imputed in some cases where the ties are directed. However, due to concerns of over or under nominations of relevant alters we decided to rely only on triangulated indications as measures of reciprocity rather than using imputations. This was particularly important when understanding the relations between faculty and policymakers (Category A) where reciprocity cannot be assumed.

The classification of KBs was based on logical but debatable cut-points for SNA scores: the top decile for each of the three scores. Changes in these cut-points may result in a different set of identified KBs. To verify that we were not inadvertently missing any KBs as a result of our criteria, we reviewed faculty who scored above the threshold on two of the three criteria, in the event that recall bias was resulting in their low scores on the third dimension. Of the four additional faculty who appeared in the revised set, three indicated that they were not previously or currently engaged in national health policy discussions, technical working groups or in advisory capacities. The fourth only had one policymaker contact. For this reason, we believe that our method of KB identification, at least in the Kenyan context, has captured this cadre.
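The sensitivity of the identified set to the choice of cut-point can be explored by repeating the selection under alternative thresholds. The Python sketch below does this for a 10% and a 15% cut-point on hypothetical scores. The 15% alternative is purely illustrative and was not examined in the study, and the rank-based definition of the cut-off is an assumption.

```python
def meets_all_three(scores, top_percent):
    """IDs of faculty whose three normalized scores all rank in the top
    `top_percent` (rank-based cut-off, ties included, zeros excluded)."""
    k = max(1, len(scores) * top_percent // 100)
    selected = set(scores)
    for i in range(3):
        ranked = sorted((triple[i] for triple in scores.values()), reverse=True)
        cutoff = ranked[k - 1]
        selected &= {
            faculty_id for faculty_id, triple in scores.items()
            if triple[i] > 0 and triple[i] >= cutoff
        }
    return selected


# HYPOTHETICAL normalized scores for 20 faculty (not study data).
scores = {f"F{i:02d}": (0.10, 0.05, 0.01) for i in range(1, 21)}
scores["F01"] = (0.86, 0.40, 0.09)
scores["F02"] = (0.71, 0.30, 0.02)
scores["F03"] = (0.57, 0.35, 0.08)

for top_percent in (10, 15):
    print(top_percent, sorted(meets_all_three(scores, top_percent)))
# 10 ['F01']
# 15 ['F01', 'F02', 'F03']
```

In this toy example, relaxing the cut-point from 10% to 15% triples the number of individuals classified, which is the kind of dependence on the threshold that the review of faculty meeting two of the three criteria was intended to guard against.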

Quantitative SNA, although useful in depicting places, positions and strength of relations between actors in a network, cannot capture the quality of the relationships within the networks, whether direct or mediated (Ball and Exley 2010), nor the instability of the network, contextual influences on the structure and function of the network, causal mechanisms or its dynamism due to entry and exit of individuals and changing political paradigms. In addition to quality of exchanges or relations not being captured, the outcomes of such exchanges are also elusive, and therefore it is not possible to tell from the mapping whether the engagements were associated with symbolic rather than instrumental use of evidence in policy, as seen in Burkina Faso (Shearer et al. 2014). Network mapping therefore inspires new questions for investigation.

Although this article seeks to identify the networks and the individuals within them using SNA, the broader study complemented this with more qualitative investigation of the characteristics of the KBs (Jessani et al, Forthcoming (b)) as well as the strategies for engagement between academic researchers and policymakers (Jessani et al, Forthcoming (a)). These accompanying results are reported elsewhere.

Conclusion

The results of our study suggest that there are several Kenyan academic faculty who engage in activities and relationships that place them in unique positions as KBs and conduits for policy influence. Using SNA as a heuristic device to identify these academic KBs appears to be valuable and revealing. We therefore recommend using SNA, rather than traditional forms of stakeholder analysis or subjective opinions, to reveal who the actual advisors/resource persons for faculty in the SPHs are, who have relationships with policymakers, who can be supported and leveraged for bridging the research to policy (and vice versa) divide and which members can convene to collectively influence public health policy.

To uncover and benefit from these relations, SPHs should conduct regular SNAs so as to: situate themselves within the larger academic network of SPHs; uncover the prevalence and distribution of individual academic-policymaker connections; demonstrate the potential influence of the SPH through individual academic-policymaker connections; position the SPH in the network of policymakers across government institutions; identify and leverage academic KBs; recognize untapped potential KBs and enhance individual capacity and organizational systems required to realize this potential.

Furthermore, by attributing research interests to each academic faculty in such a network, national government could utilize recurrent SNA studies to: identify the location and distribution of academic expertise in a country; leverage existing relations for the purposes of influencing health systems research and policy decisions; build strategic networks in areas where gaps exist and understand shared interests for the purposes of engaging in multidisciplinary and multi-sectoral governmental collaborations.

Acknowledgements

The authors thank the leadership at the six SPHs and their staff for their support in facilitating the data collection across the six Kenyan academic institutions. Without participant (both faculty as well as policymakers) willingness to be interviewed, it would not have been possible to capture the nuances of relations and strategies for engagement. The authors express their appreciation for the financial support (Grant #H050474) provided by the UK Department for International Development for the Future Health Systems research programme consortium. The views expressed are not necessarily those of the funders.

Conflict of interest statement. None declared.

1 Examples of attempts at KTPs in low- and middle-income countries (LMICs) include the Regional East African Community Health Policy Initiative (REACH-PI), the Zambian Forum for Health Research (ZAMFOHR), the Ebonyi State Health Policy Advisory Committee (ESHPAC) and WHO’s Evidence Informed Policy Network (EVIPnet).

2 Following the creation of a coalition government in 2008, the Kenyan Ministry of Health (MOH) was divided into two ministries each with distinct functions: the Ministry of Public Health and Sanitation (MOPHS) and the Ministry of Medical Services (MOMS). Each drew upon the same budget, which consequently introduced a need for inter-agency coordination as well as set the stage for competing practices for limited resources, duplication of efforts and ambiguous boundaries of responsibilities.

References

- African Institute for Development Policy Research and Dialogue (AFIDEP). http://www.afidep.org/, accessed 14 July 2014.

- African Population and Health Research Center. www.aphrc.org, accessed 18 July 2014.

- Ball SJ, Exley S. 2010. Making policy with ‘good ideas’: policy networks and the ‘intellectuals’ of new labour. Journal of Education Policy 25: 151–69.

- Bardach E. 1998. Getting Agencies to Work Together: The Practice and Theory of Managerial Craftsmanship. Washington, D.C.: Brookings Institution Press.

- Bennett G, Jessani N. (eds). 2011. The Knowledge Translation Toolkit: Bridging the Know-Do Gap: A Resource for Researchers. Delhi, India: IDRC/SAGE.

- Bennett S, Agyepong IA, Sheikh K, Hanson K, Ssengooba F, Gilson L. 2011. Building the field of health policy and systems research: an agenda for action. PLoS Medicine 8(8): e1001081 doi:10.1371/journal.pmed.1001081.

- BIS. 2009. Higher Ambitions: The Future of Universities in a Knowledge Economy. New York, NY: Department for Business, Innovation and Skills.

- Borgatti SP. 2002. NetDraw Software for Network Visualization. Lexington, KY: Analytic Technologies.

- Borgatti SP, Everett MG, Freeman LC. 2002. UCINet for Windows: Software for Social Network Analysis. Harvard, MA: Analytic Technologies.

- Borgatti SP, Everett MG. 1997. Network analysis of 2-mode data. Social Networks 19: 243–69.

- Brewer DD, Garrett SB. 2001. Evaluation of interviewing techniques to enhance recall of sexual and drug injection partners. Sexually Transmitted Diseases 28: 666–77.

- Brewer DD, Garrett SB, Kulasingam S. 1999. Forgetting as a cause of incomplete reporting of sexual and drug injection partners. Sexually Transmitted Diseases 26: 166–76.

- Brugha R, Varvasovszky Z. 2000. Stakeholder analysis: a review. Health Policy and Planning 15: 239–46.

- Burt RS. 1992. Structural Holes: The Social Structure of Competition. Cambridge: First Harvard University Press.

- Burt RS. 2004. Structural holes and good ideas. American Journal of Sociology 110: 349–99.

- Burt RS. 2005. Brokerage and Closure: An Introduction to Social Capital. Oxford: Oxford University Press.

- Burt RS, Jannotta JE, Mahoney JT. 1998. Personality correlates of structural holes. Social Networks 20: 63–87.

- Canadian Health Services Research Foundation. 2003. The Theory and Practice of Knowledge Brokering in Canada’s Health System. Ottawa: CHSRF.

- Choi BCK, McQueen DV, Rootman I. 2003. Bridging the gap between scientists and decision makers. Journal of Epidemiology and Community Health 57: 918.

- Choi BCK, Pang T, Lin V, Puska P, Sherman G, Goddard M, et al. 2005. Can scientists and policy makers work together? Journal of Epidemiology and Community Health 59: 632–7.

- Commission for University Education. 2013. Status of Universities: Universities Authorized to Operate in Kenya, 2013. http://www.cue.or.ke/services/accreditation/status-of-universities, accessed 15 July 2014.

- Consortium for National Health Research (CNHR). http://cnhrkenya.org/, accessed 14 July 2014.

- Crona BI, Parker JN. 2011. Network determinants of knowledge utilization preliminary lessons from a boundary organization. Science Communication 33: 448–71.

- Cross R, Borgatti SP, Parker A. 2002. Making invisible work visible: using social network analysis to support strategic collaboration. California Management Review 44: 25–46.

- Datta A, Jones N. 2011. Linkages between Researchers and Legislators in Developing Countries. London: ODI.

- Davies P. 2007. “Evidence-based Government: how do we make it happen?” Presentation at the Canadian Association of Paediatric Health Centres. October 2007. Montreal, Canada.

- Dobbins M, DeCorby K, Twiddy T. 2004. A knowledge transfer strategy for public health decision makers. Worldviews on Evidence-Based Nursing 1: 120–8.

- Drew R, Aggleton P, Chalmers H, Wood K. 2011. Using social network analysis to evaluate a complex policy network. Evaluation 17: 383–94.

- Dunn AG, Westbrook JI. 2011. Interpreting social network metrics in healthcare organisations: a review and guide to validating small networks. Social Science and Medicine 72: 1064–8.

- Feldman PH, Nadash P, Gursen M. 2002. Researchers from mars, policymakers from venus. Health Affairs 21: 299–300.

- Freeman LC. 1980. The gatekeeper, pair-dependency and structural centrality. Quality and Quantity 14: 585–92.

- Gagnon ML. 2011. Moving knowledge to action through dissemination and exchange. Journal of Clinical Epidemiology 64: 25–31.

- Government of the Republic of Kenya. 2007. Kenya Vision 2030. The Popular Version. Nairobi: GoK.

- Granovetter M. 1973. The strength of weak ties. American Journal of Sociology 78: 1360–80.

- Greene A, Bennett S. 2007. Sound Choices: Enhancing Capacity for Evidence-Informed Health Policy. Geneva: WHO.

- Haas PM. 1992. Introduction: epistemic communities and international policy coordination. International Organization 46: 1–35.

- Hargadon AB. 1998. Firms as knowledge brokers: Lessons in pursuing continuous innovation. Calif Manage Rev 1998(3): 209–227.

- Innvaer S, Vist G, Trommald M, Oxman A. 2002. Health policy-makers’ perceptions of their use of evidence: a systematic review. Journal of Health Services Research & Policy 7: 239–44.

- Institute of Policy Analysis and Research (IPAR). http://www.ipar.or.ke/, accessed 19 July 2014.

- Jansson SM, Benoit C, Casey L, Phillips R, Burns D. 2010. In for the long haul: knowledge translation between academic and nonprofit organizations. Qualitative Health Research 20: 131–43.

- Jessani NS, Kennedy C, Bennett SC. (a) Navigating the academic and political environment: Strategies for engagement between public health faculty and policymakers in Kenya. Forthcoming.

- Jessani NS, Kennedy C, Bennett SC. (b) The human capital of knowledge brokers: An analysis of attributes, capacities and skills of academic faculty at Kenyan schools of public health. Forthcoming.

- Johnson AT, Hirt JB, Hoba P. 2011. Higher education, policy networks, and policy entrepreneurship in Africa: the case of the Association of African Universities. Higher Education Policy 24: 85–102.

- Johnston L, Robinson S, Lockett N. 2010. Recognising “open innovation” in HEI-industry interaction for knowledge transfer and exchange. International Journal of Entrepreneurial Behaviour and Research 16: 540–60.

- KEMRI. REACH-PI Overview. http://www.kemri.org/index.php/centres-a-departments/reach-pi, accessed 14 July 2014.

- Kenya Institute for Public Policy Research and Analysis (KIPPRA). http://www.kippra.org/About-KIPPRA/about-kippra.html, accessed 14 July 2014.

- Kenya Medical Research Institute (KEMRI). http://www.kemri.org/, accessed 14 July 2014.

- Ketelaar M, Harmer-Bosgoed M, Willems M, Russell D, Rosenbaum P. 2010. Moving research evidence into clinical practice: the role of knowledge brokers. Developmental Medicine and Child Neurology 52: 66–7.

- Koon AD, Rao KD, Tran NT, Ghaffar A. 2013. Embedding health policy and systems research into decision-making processes in low- and middle-income countries. Health Research Policy and Systems 11(1): 30–39.

- Lairumbi GM, Molyneux S, Snow RW, Marsh K, Peshu N, English M. 2008. Promoting the social value of research in Kenya: examining the practical aspects of collaborative partnerships using an ethical framework. Social Science & Medicine 67: 734–47.

- Lavis JN, Posada FB, Haines A, Osei E. 2004. Use of research to inform public policymaking. Lancet 364: 1615–21.

- Lewis JM. 2006. Being around and knowing the players: networks of influence in health policy. Social Science and Medicine 62: 2125–35.

- Lomas J. 2007. The in-between world of knowledge brokering. BMJ 334: 129–32.

- Lomas J, Culver T, McCutcheon C, McAuley L, Law S. 2005. Conceptualizing and Combining Evidence for Health System Guidance. Ottawa, Canada: CHSRF.

- Meyer M. 2010. Knowledge brokers as the new science mediators. HERMES 57: 165–71.

- Miller GA. 1956. The magical number seven, plus or minus two: some limits on our capacity for processing information. Psychological Review 63: 81.

- One Health Initiative East and Central Africa (OHCEA). www.ohcea.org, accessed 24 July 2013.

- Ragland CJ, Feldpausch-Parker A, Peterson TR, Stephens J, Wilson E. 2011. “Socio-political dimensions of CCS deployment through the lens of social network analysis”. Energy Procedia 4: 6210–6217.

- Rycroft-Malone J, Wilkinson JE, Burton CR, Andrews G, Ariss S, Baker R, et al. 2011. Implementing health research through academic and clinical partnerships: a realistic evaluation of the Collaborations for Leadership in Applied Health Research and Care (CLAHRC). Implementation Science 6(1): 74–89.

- Shearer JC, Dion M, Lavis JN. 2014. Exchanging and using research evidence in health policy networks: a statistical network analysis. Implementation Science 9: 126.

- StataCorp. 2011. Stata Statistical Software: Release 12. College Station, TX: StataCorp LP.

- Surridge B, Harris B. 2007. Science-driven integrated river basin management: a mirage? Interdisciplinary Science Reviews 32: 298–312.

- van Kammen J, de Savigny D, Sewankambo N. 2006. Using knowledge brokering to promote evidence-based policy-making: the need for support structures. Bulletin of the World Health Organization 84: 608–12.

- Ward V, House A, Hamer S. 2009. Knowledge brokering: the missing link in the evidence to action chain? Evidence and Policy 5: 267–79.

- Whitchurch C. 2008. Shifting identities and blurring boundaries: the emergence of third space professionals in UK higher education. Higher Education Quarterly 62: 377–96.

- WHO. 2006. “Bridging the “know-do” gap” Meeting on knowledge translation in global health. Available at http://www.who.int/kms/WHO_EIP_KMS_2006_2.pdf.

- Wonodi CB, Privor-Dumm L, Aina M, Pate AM, Reis R, Gadhoke P, Levine OS. 2012. Using social network analysis to examine the decision-making process on new vaccine introduction in Nigeria. Health Policy and Planning 27(suppl 2): ii27–38.

- World Health Organisation. 2005. Knowledge for better health: strengthening health systems. Ministerial Summit on Health Research 2004. WHO, 16–20 November 2004.