Abstract

Background Published clinical problem solving exercises have emerged as a common tool to illustrate aspects of the clinical reasoning process. The specific clinical reasoning terms mentioned in such exercises are unknown.

Objective We identified which clinical reasoning terms are mentioned in published clinical problem solving exercises and compared them to clinical reasoning terms given high priority by clinician educators.

Methods A convenience sample of clinician educators prioritized a list of clinical reasoning terms (selecting which terms to include and assigning weight percentages to the top 20 terms). The authors then electronically searched for the terms in the text of published reports in 4 internal medicine journals between January 2010 and May 2013.

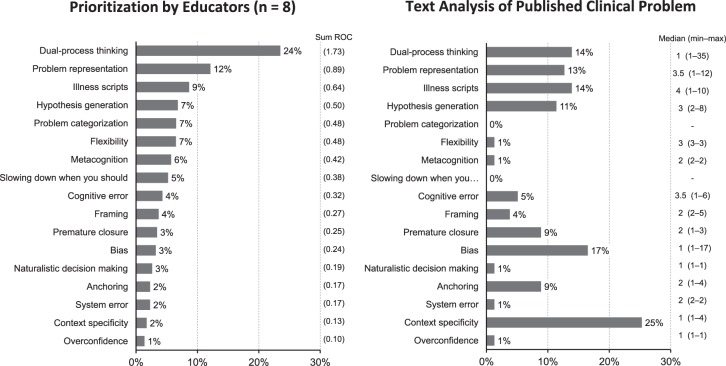

Results The top 5 clinical reasoning terms ranked by educators were dual-process thinking (weight percentage = 24%), problem representation (12%), illness scripts (9%), hypothesis generation (7%), and problem categorization (7%). The top clinical reasoning terms mentioned in the text of 79 published reports were context specificity (n = 20, 25%), bias (n = 13, 17%), dual-process thinking (n = 11, 14%), illness scripts (n = 11, 14%), and problem representation (n = 10, 13%). Context specificity and bias were not ranked highly by educators.

Conclusions Some core concepts of modern clinical reasoning theory ranked highly by educators are mentioned explicitly in published clinical problem solving exercises. However, some highly ranked terms were not used, and some terms that were used were not ranked highly by the clinician educators. Efforts to teach clinical reasoning to trainees may benefit from a common nomenclature of clinical reasoning terms.

What was known and gap

Research in cognitive psychology has advanced understanding of the mental process characteristic of clinical reasoning.

What is new

A convenience sample of clinical educators identified and ranked clinical reasoning terms, which were then compared with the terms used in published exercises.

Limitations

Single-site study; terms were not explored in the context in which they were used.

Bottom line

Core concepts of modern theories of clinical reasoning ranked highly by educators are not mentioned consistently in published clinical problem solving exercises; teaching of clinical reasoning would benefit from the use of common nomenclature.

Editor's Note: The online version of this article contains clinical reasoning terms identified from published reviews, rank order centroid weights attributed to clinical reasoning terms, and steps for text search of clinical reasoning terms.

Introduction

Research in cognitive psychology has advanced the community's understanding of the mental process characteristic of clinical reasoning.1–6 Because clinical reasoning is a fundamental skill in the practice of medicine, how to teach and assess it remains a matter of continued study.7–11 Evidence suggests that residents and students value the clinical reasoning of experienced clinicians.12,13 In fact, the “sharing of attending's thought process” was the highest-rated attribute of successful teaching rounds for residents and medical students in 1 study.12 Similarly, medical students reported learning clinical reasoning from their resident teachers when the behavior was role modeled during clinical encounters.13 However, a common nomenclature of clinical reasoning terms has yet to be established.1

Clinical problem solving exercises have emerged as a written format intended to illustrate aspects of the diagnostic reasoning process.14–17 An expert clinician discusses the clinical information as it is presented sequentially; some journals also include an explicit discussion of clinical reasoning concepts. In this format, the reader is exposed to a “think-out-loud” version of the expert clinician's thought process. Such published exercises offer a venue for learning clinical reasoning, akin to learning clinical medicine from case reports.

To our knowledge, the specific clinical reasoning terms discussed in such clinical problem solving exercises are unknown. In this pilot study, we identified which terms from the clinical reasoning literature, as prioritized by clinician educators, are used in published clinical problem solving exercises.

Methods

Clinical Reasoning Terms

We compiled, narrowed, and prioritized a list of terms. Using an iterative process, 2 authors (J.L.M., C.A.E.) compiled a list of clinical reasoning terms.1,4,18–21 To narrow the list, a convenience sample of 7 educators at a single institution selected the clinical reasoning terms they would include in a review on the subject. Of the 43 potential terms, the top 20 terms, each receiving at least 5 endorsements for inclusion, advanced to the next step (lists are provided as online supplemental material).
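The narrowing step amounts to a simple endorsement filter. The sketch below assumes each educator's selections are recorded as a set of terms, counts how many educators endorsed each term, and keeps terms with at least 5 endorsements, capped at 20. The function name `narrow_terms`, the alphabetical tie-breaking rule, and the toy selections are illustrative assumptions, not the authors' procedure.

```python
from collections import Counter


def narrow_terms(selections, min_endorsements=5, max_terms=20):
    """Return terms endorsed by at least `min_endorsements` educators.

    `selections` holds one collection of chosen terms per educator.
    Terms are ordered by endorsement count; ties are broken alphabetically
    (an assumption made for this sketch). At most `max_terms` are kept.
    """
    # Count each term at most once per educator.
    counts = Counter(term for chosen in selections for term in set(chosen))
    eligible = [term for term, n in counts.items() if n >= min_endorsements]
    eligible.sort(key=lambda term: (-counts[term], term))
    return eligible[:max_terms]


# Hypothetical selections from 7 educators (not study data).
selections = [
    {"illness scripts", "problem representation", "framing"},
    {"illness scripts", "problem representation"},
    {"illness scripts", "problem representation", "anchoring"},
    {"illness scripts", "framing"},
    {"illness scripts", "problem representation"},
    {"illness scripts"},
    {"problem representation"},
]
print(narrow_terms(selections))  # ['illness scripts', 'problem representation']
```

Here "illness scripts" (6 endorsements) and "problem representation" (5) pass the threshold, while "framing" (2) and "anchoring" (1) do not.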

To further prioritize the list, a convenience sample of 8 educators at a single institution independently ranked the terms using a card sort method.22 Manual card sorting incorporates visual, motor, and cognitive dimensions that lend themselves to more accurate portrayals of opinions.22 To combine the rankings of individual educators, we used the rank order centroid method, which translates ordinal rankings into weighted values and provides a reliable means of prioritizing terms among a group of decision makers that is superior to subjective weighting by individuals.23–25 With the rank order centroid method, the weight assigned to each rank depends on the number of terms being ranked. For example, if only 3 terms are ranked first, second, and third, their relative weights are 0.61, 0.28, and 0.11, respectively. For each individual, the weights applied to a set of ranked terms always sum to 1, ensuring a consistent weight distribution across the ordinal ranks submitted by each individual.26 The main measure for prioritizing clinical reasoning terms was the weight percentage (percentage = [sum of weights / number of raters] × 100). Post hoc, we recalculated the weight percentage after combining system 1 (nonanalytic) and system 2 (analytic) into dual-process thinking, as they reflect a unified concept. We also excluded the term diagnosis, as it was mentioned in all published exercises and lacks specificity as a clinical reasoning term.
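The rank order centroid weights follow a closed-form formula. For n ranked terms, the weight for rank i is w_i = (1/n) × (1/i + 1/(i + 1) + ... + 1/n), which reproduces the 0.61, 0.28, and 0.11 example for n = 3 and sums to 1 for each rater. The sketch below applies that formula and then the weight percentage defined above. The function names and toy rankings are hypothetical, and the sketch assumes every rater ranks the same set of terms; it does not attempt the post hoc merging of system 1 and system 2.

```python
from collections import defaultdict


def roc_weights(n):
    """Rank order centroid weights for ranks 1..n (rank 1 = most important)."""
    # w_i = (1/n) * sum_{k=i}^{n} 1/k
    return [sum(1 / k for k in range(i, n + 1)) / n for i in range(1, n + 1)]


def weight_percentages(rankings):
    """Weight percentage per term: (sum of ROC weights / number of raters) * 100.

    `rankings` holds one list per rater, ordered from most to least important.
    """
    totals = defaultdict(float)
    for ranked in rankings:
        for term, weight in zip(ranked, roc_weights(len(ranked))):
            totals[term] += weight
    n_raters = len(rankings)
    return {term: 100 * total / n_raters for term, total in totals.items()}


print([round(w, 2) for w in roc_weights(3)])  # [0.61, 0.28, 0.11]

# Two hypothetical raters ranking the same three terms (not study data).
rankings = [
    ["dual-process thinking", "illness scripts", "framing"],
    ["illness scripts", "dual-process thinking", "framing"],
]
for term, pct in weight_percentages(rankings).items():
    print(f"{term}: {pct:.1f}%")
```

Because each rater's weights sum to 1, the weight percentages across all terms sum to 100 when every rater ranks the full list.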

Review by the Institutional Review Board was not obtained because participants were a convenience sample of clinician educators who worked with the authors teaching clinical reasoning.

Text Search of Clinical Reasoning Terms

To identify published clinical reasoning exercises, we included all articles published between January 2010 and May 2013 and listed in the tables of contents of The New England Journal of Medicine (“Clinical Problem Solving”), Journal of Hospital Medicine (“Clinical Care Conundrum”), Journal of General Internal Medicine (“Exercises in Clinical Reasoning”), and The American Journal of the Medical Sciences (“Clinical Reasoning: A Case-Based Series”). We then searched for the terms in the text of those reports using a semi-automated approach (steps are provided as online supplemental material). The main measures were the percentage of articles in which each clinical reasoning term was mentioned at least once and the number of times each term was mentioned.
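The authors' actual search steps are in the online supplement; the sketch below only illustrates how the two main measures could be computed once article texts are available as plain strings. It assumes case-insensitive whole-word matching and a hand-built list of variant spellings per term (for example, "bias" and "biases"). The names `count_mentions` and `summarize` and the sample texts are hypothetical. Like any keyword search, it counts words without regard to context.

```python
import re


def count_mentions(text, variants):
    """Count case-insensitive, whole-word matches of any variant of a term."""
    pattern = r"\b(?:" + "|".join(re.escape(v) for v in variants) + r")\b"
    return len(re.findall(pattern, text, flags=re.IGNORECASE))


def summarize(articles, terms):
    """For each term, return (articles mentioning it, % of articles, per-article counts).

    `articles` is a list of article texts; `terms` maps a term to its variant spellings.
    """
    summary = {}
    for term, variants in terms.items():
        counts = [count_mentions(text, variants) for text in articles]
        n_with_mention = sum(1 for c in counts if c > 0)
        summary[term] = (n_with_mention, 100 * n_with_mention / len(articles), counts)
    return summary


# Hypothetical article snippets and variant lists (not study data).
articles = [
    "Anchoring and premature closure reflect bias. Biases like this recur; bias matters.",
    "The discussant builds a problem representation early.",
    "Context specificity shaped the reasoning; context-specificity again.",
]
terms = {
    "bias": ["bias", "biases"],
    "context specificity": ["context specificity", "context-specificity"],
}
for term, (n, pct, counts) in summarize(articles, terms).items():
    print(f"{term}: {n} article(s), {pct:.0f}%, counts {counts}")
```

Whole-word matching keeps "bias" from matching inside words such as "unbiased", but it still counts uses of a word outside its clinical reasoning sense.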

Results

A total of 79 clinical problem solving style exercises were published during the study period: 41 (52%) in The New England Journal of Medicine, 22 (28%) in the Journal of Hospital Medicine, 11 (14%) in the Journal of General Internal Medicine, and 5 (6%) in The American Journal of the Medical Sciences.

Clinical Reasoning Terms

The 20 clinical reasoning terms, the weights assigned by each educator, and the prioritization of terms are provided as online supplemental material. The prioritized list of clinical reasoning terms is shown in the figure (left panel); the 10 terms with the greatest weight percentage were dual-process thinking (24%), problem representation (12%), illness scripts (9%), hypothesis generation (7%), problem categorization (7%), flexibility (7%), metacognition (6%), slowing down when you should (5%), cognitive error (4%), and framing (4%). Dual-process thinking includes system 1 (nonanalytic) and system 2 (analytic).

Figure. Clinical Reasoning Terms

Note: Prioritization by educators (left panel) and text analysis of published clinical reasoning exercises (right panel). Left panel: percentage values are weight percentages calculated from the sum of weights of each term using the rank order centroid (ROC) method; values indicate the sum of ROC weights. Right panel: percentage of articles in which each clinical reasoning term was mentioned at least once in the text of published clinical problem solving exercises (n = 79); values indicate the number of times the term was mentioned. Dual-process thinking includes system 1 (nonanalytic) and system 2 (analytic), and the term diagnosis is excluded (see Methods section).

Text Search of Clinical Reasoning Terms

The frequency with which clinical reasoning terms were specifically mentioned in the text of the published clinical problem solving exercises (n = 79) is shown in the figure (right panel). The top 10 terms were context specificity (n = 20, 25%), bias (n = 13, 17%), dual-process thinking (n = 11, 14%), illness scripts (n = 11, 14%), problem representation (n = 10, 13%), hypothesis generation (n = 9, 11%), premature closure (n = 7, 9%), anchoring (n = 7, 9%), cognitive error (n = 4, 5%), and framing (n = 3, 4%). When a term appeared in an article, it was mentioned a median of 1 to 4 times, depending on the term (range 1 to 35), as shown in the figure.
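Per-term medians and ranges like those reported above summarize only the articles in which a term appears. A minimal sketch, assuming per-article mention counts such as those a keyword search would produce; the function name `mention_stats` and the counts are invented for illustration and are not the study data.

```python
from statistics import median


def mention_stats(per_article_counts):
    """Median, minimum, and maximum mentions among articles that mention a term.

    Articles with zero mentions are excluded; returns None if no article mentions it.
    """
    nonzero = [c for c in per_article_counts if c > 0]
    if not nonzero:
        return None
    return median(nonzero), min(nonzero), max(nonzero)


# Hypothetical counts for one term across six articles.
print(mention_stats([0, 3, 1, 0, 35, 2]))  # (2.5, 1, 35)
```

Dropping zero counts before taking the median matches the restriction to articles in which the term was included in the text.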

Discussion

In this study, we identified terms from the clinical reasoning literature that are used in published clinical problem solving exercises. Educators who teach clinical reasoning prioritized the clinical reasoning terms they would include in a review on the subject. The top 5 terms were dual-process thinking (including system 1 and system 2), problem representation, illness scripts, hypothesis generation, and problem categorization. The 5 terms mentioned most frequently in published clinical problem solving cases were context specificity, bias, dual-process thinking, illness scripts, and problem representation.

Three terms ranked highly by educators were also included in the terms mentioned frequently in published exercises: dual-process thinking, problem representation, and illness scripts. At the same time, the top 2 terms mentioned in the text of published exercises were not highly ranked by educators. Context specificity,27 the influence of contextual factors (eg, setting, patient characteristics) on the clinical reasoning process of the clinician, ranked first in published exercises, yet ranked second to last on the list prioritized by educators. Bias ranked second in published exercises and 12th in the list by educators. Premature closure and anchoring also were among the 10 terms most frequently mentioned in published exercises, yet they ranked in the bottom half of the educators' list.

Our study did not formally examine the reasons for these discrepancies. Educators may have ranked these terms lower in favor of broader, more encompassing terms, or, drawing on their clinical experience, may have prioritized terms they considered teachable. Alternatively, the structure and emphasis of the journals may highlight certain terms. For example, terms that explain cognitive errors (bias, anchoring, and premature closure) have received attention in recent literature.

Our findings have implications for educators and future research. First, teaching clinical reasoning, both in person and through published exercises, will likely benefit from a common nomenclature of terms and concepts. Increasingly, efforts are being made to distill what is known about clinical reasoning into a core group of terms readily accessible to clinical educators.1,4,20 As outlined by Norman,1 the clinical reasoning literature is difficult to synthesize because clinical reasoning experts and their publications are scattered across a wide array of journals, which leads to discord over even basic clinical reasoning terms. Our findings contribute to this discourse: the discrepancies between the priorities of educators and the terms mentioned in published exercises are intriguing and worth further exploration.

Second, our list sheds light on potential topics for future clinical problem solving cases. Problem categorization, flexibility, metacognition, and slowing down when you should were rated among the top 10 terms by educators in our study, but were rarely mentioned in published clinical problem solving exercises. New articles discussing these concepts and their application would broaden the range of clinical reasoning terms in the clinical problem solving literature.

Our study has limitations. First, the selection of clinical reasoning terms was challenging. For example, although 1 aspect of metacognition is self-reflection,28 we did not include self-reflection as a component of metacognition. Second, the educators who ranked the terms were from a single institution and may have used a local mental model of clinical reasoning, limiting generalizability. Third, we did not explore the context in which clinical reasoning terms were used. Fourth, we did not examine clinical reasoning concepts that were illustrated but not explicitly named in the text.

Future studies may examine the clinical reasoning concepts, rather than terms, discussed in published exercises or in other types of publications (eg, clinical-pathological conferences or morbidity and mortality reports). Future studies are also needed to test the effectiveness of using published exercises to teach clinical reasoning skills in residency programs, as the cases presented are rich in clinical detail and complexity. For example, exercises from journals that include a case discussion14,16 or an explicit summary of clinical reasoning15,17 could be used in small group discussions or independent study to reinforce clinical reasoning terms learned as part of a broader clinical reasoning curriculum. Cognitive errors, such as bias, premature closure, and anchoring, could be discussed in the context of cases presented in published exercises. Evidence from allied health education suggests that “making thinking visible” enhances the efficacy of communication between teacher and student.29 The clinician's commentary in a published exercise could be viewed as a highly selected and edited version of a think-out-loud case discussion. Residency programs may draw on local clinical expertise to develop clinical problem solving exercises informed by the findings of this pilot study and other resources. For example, tips for presenting a clinical problem solving exercise are available,30 in which clinical information is presented iteratively while the discussant thinks out loud as the case unfolds. Engaging students with exercises that explicitly discuss both diagnostic reasoning and clinical management provides a forum for simulated practice.

Conclusion

Core concepts of modern clinical reasoning theory ranked highly by educators are mentioned explicitly in published clinical problem solving exercises; however, discrepancies exist between several highly ranked terms and their frequency in the literature. This study advances existing efforts to establish a common nomenclature of clinical reasoning concepts while highlighting several potential topics for future clinical problem solving cases.

Supplementary Material

References

- 1. Norman G. Research in clinical reasoning: past history and current trends. Med Educ. 2005;39(4):418–427.

- 2. Croskerry P, Norman G. Overconfidence in clinical decision making. Am J Med. 2008;121(suppl 5):24–29.

- 3. Kassirer JP, Kuipers BJ, Gorry GA. Toward a theory of clinical expertise. Am J Med. 1982;73(2):251–259.

- 4. Bowen JL. Educational strategies to promote clinical diagnostic reasoning. N Engl J Med. 2006;355(21):2217–2225.

- 5. Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med. 2008;121(suppl 5):2–23.

- 6. Bordage G, Lemieux M. Semantic structures and diagnostic thinking of experts and novices. Acad Med. 1991;66(suppl 9):70–72.

- 7. Patel R, Sandars J, Carr S. Clinical diagnostic decision-making in real life contexts: a trans-theoretical approach for teaching: AMEE Guide No. 95. Med Teach. 2015;37(3):211–227.

- 8. Mamede S, van Gog T, Sampaio AM, de Faria RM, Maria JP, Schmidt HG. How can students' diagnostic competence benefit most from practice with clinical cases? The effects of structured reflection on future diagnosis of the same and novel diseases. Acad Med. 2014;89(1):121–127.

- 9. Ilgen JS, Bowen JL, McIntyre LA, Banh KV, Barnes D, Coates WC, et al. Comparing diagnostic performance and the utility of clinical vignette-based assessment under testing conditions designed to encourage either automatic or analytic thought. Acad Med. 2013;88(10):1545–1551.

- 10. Sibbald M, de Bruin AB, van Merrienboer JJ. Checklists improve experts' diagnostic decisions. Med Educ. 2013;47(3):301–308.

- 11. Heist BS, Gonzalo JD, Durning S, Torre D, Elnicki DM. Exploring clinical reasoning strategies and test-taking behaviors during clinical vignette style multiple-choice examinations: a mixed methods study. J Grad Med Educ. 2014;6(4):709–714.

- 12. Roy B, Castiglioni A, Kraemer RR, Salanitro AH, Willett LL, Shewchuk RM, et al. Using cognitive mapping to define key domains for successful attending rounds. J Gen Intern Med. 2012;27(11):1492–1498.

- 13. Karani R, Fromme HB, Cayea D, Muller D, Schwartz A, Harris IB. How medical students learn from residents in the workplace: a qualitative study. Acad Med. 2014;89(3):490–496.

- 14. Kassirer JP. Clinical problem-solving—a new feature in the journal. N Engl J Med. 1992;326(1):60–61.

- 15. Henderson M, Keenan C, Kohlwes J, Dhaliwal G. Introducing exercises in clinical reasoning. J Gen Intern Med. 2010;25(1):9.

- 16. Bump GM, Parekh VI, Saint S. “Above or below?” J Hosp Med. 2006;1(1):36–41.

- 17. Guidry MM, Drennan RH, Weise J, Hamm LL. Serum sepsis, not sickness. Am J Med Sci. 2011;341(2):88–91.

- 18. Rencic J. Twelve tips for teaching expertise in clinical reasoning. Med Teach. 2011;33(11):887–892.

- 19. Eva KW. What every teacher needs to know about clinical reasoning. Med Educ. 2005;39(1):98–106.

- 20. Kassirer JP. Teaching clinical reasoning: case-based and coached. Acad Med. 2010;85(7):1118–1124.

- 21. Nendaz MR, Bordage G. Promoting diagnostic problem representation. Med Educ. 2002;36(8):760–766.

- 22. Rugg G, McGeorge P. The sorting techniques: a tutorial paper on card sorts, picture sorts and item sorts. Expert Systems. 1997;14(2):80–93.

- 23. Dolan JG. Multi-criteria clinical decision support: a primer on the use of multiple criteria decision making methods to promote evidence-based, patient-centered healthcare. Patient. 2010;3(4):229–248.

- 24. Barron FH, Barrett BE. Decision quality using ranked attribute weights. Manage Sci. 1996;42(11):1515–1523.

- 25. Kirkwood CW, Sarin RK. Ranking with partial information: a method and an application. Oper Res. 1985;33:38–48.

- 26. Roberts R, Goodwin P. Weight approximations in multi-attribute decision models. J Multi-Criteria Dec Analysis. 2002;11(6):291–303.

- 27. Durning S, Artino AR Jr, Pangaro L, van der Vleuten CP, Schuwirth L. Context and clinical reasoning: understanding the perspective of the expert's voice. Med Educ. 2011;45(9):927–938.

- 28. Goldszmidt M, Minda JP, Bordage G. Developing a unified list of physicians' reasoning tasks during clinical encounters. Acad Med. 2013;88(3):390–397.

- 29. Delany C, Golding C. Teaching clinical reasoning by making thinking visible: an action research project with allied health clinical educators. BMC Med Educ. 2014;14:20.

- 30. Dhaliwal G, Sharpe BA. Twelve tips for presenting a clinical problem solving exercise. Med Teach. 2009;31(12):1056–1059.
