Abstract

The goal of the Young Scientist Program (YSP) at Washington University School of Medicine in St. Louis (WUSM) is to broaden science literacy and recruit talent for the scientific future. In particular, YSP seeks to expose underrepresented minority high school students from St. Louis Public Schools (SLPS) to a wide variety of careers in the sciences. The centerpiece of YSP, the Summer Focus Program (SFP), is a nine-week, intensive research experience for competitively chosen rising high school seniors (Scholars). Scholars are paired with volunteer graduate student, medical student, or postdoctoral fellow mentors who are active members of the practicing scientific community and serve as guides and exemplars of scientific careers. The SFP seeks to increase the number of underrepresented minority students pursuing undergraduate degrees in science, technology, engineering, and mathematics (STEM) by making the Scholars more comfortable with science and science literacy. The data presented here show the results of the objective, quick, and simple methods that YSP developed to assess the efficacy of the SFP from 2006 to 2013. We demonstrate that the SFP successfully used formative evaluation to continuously improve its activities over several years and, in turn, to enhance student experiences within the program. Additionally, we show that the SFP effectively broadened confidence in science literacy among participating high school students and successfully graduated a high percentage of students who went on to pursue STEM majors at the undergraduate level.

INTRODUCTION

Many minority groups are significantly underrepresented in science, technology, engineering, and mathematics (STEM) fields at the undergraduate level and beyond (25, 26). In 2013, underrepresented minorities earned 7% of doctorates in science and engineering in the United States, and in 2010, women made up 21% of full professors with science and engineering doctorates (25, 26). To attract underrepresented high school students into scientific careers, two MD/PhD students founded the Young Scientist Program (YSP) at the Washington University in St. Louis School of Medicine in 1991. The YSP is almost entirely led by graduate student volunteers (Volunteers) who design, organize, and participate in the different components of the program (3, 5, 7). YSP works in partnership with St. Louis Public Schools (SLPS) to recruit, mentor, and teach students from underrepresented backgrounds (36). As of 2013, over 80% of the students in SLPS were African-American, an underrepresented minority in STEM fields (25), and more than 80% of students in SLPS qualified for free or reduced-price lunch (22). YSP operates a suite of outreach programs that aim to strengthen science literacy and promote interest in scientific careers and STEM undergraduate studies.

Inquiry-based hands-on laboratory research is an exciting and productive introduction to science for both undergraduate (19, 30) and K–12 (12) students. The focal point of YSP outreach is the Summer Focus Program (SFP), a nine-week, paid summer research internship held each summer for 12 to 16 rising high school seniors (Scholars). The goal of the SFP is to broaden scientific literacy and recruit new, diverse talent to scientific professions by making Scholars more comfortable with science and increasing their science literacy. The internship provides an immersive experience in laboratory research, pairing high school students with YSP Volunteer mentors. A STEM pipeline program with similar goals aimed at undergraduate-level students increased minority student matriculation into STEM PhD programs (28). Likewise, science “apprenticeship” programs for middle and high school students have been shown to increase students’ critical thinking skills and interest in scientific careers (1, 4, 9, 14, 15, 16, 21, 24, 29, 32, 37, 38, 39). However, few published studies describe work with urban communities or underrepresented minority students (10, 21).

YSP draws on a workforce of Volunteers (graduate students, medical students, and postdoctoral fellows) who actively conduct scientific research and are thus part of the scientific community of practice. In contrast, SLPS high school students are at the periphery of this community: many receive instruction in the sciences but have little hands-on experience. To bridge this gap, YSP focuses on several key skills needed to be a successful laboratory scientist: basic laboratory skills and safety, understanding scientific articles, peer review, and verbal and written science communication. Scholars work under the guidance of graduate/medical students and postdoctoral fellows who volunteer as research mentors (Mentors). Mentors partner with SFP Scholars on a nine-week independent research project, guiding Scholars through the scientific method and teaching them the concepts and techniques their specific research projects require. Scholars are also paired with a science literacy tutor (Tutor), a YSP Volunteer who meets with the Scholar weekly to help her or him learn concepts and improve her or his scientific writing.

The mentored research component of the SFP is complemented by several auxiliary activities that Scholars participate in over the course of their summer. Prior to entering research labs, all Scholars participate in a Research Boot Camp (BC), a “crash course” in common laboratory tools and techniques. Throughout the summer, Scholars participate weekly in the Science Communication Course (SCC), where they are taught how to read the primary scientific literature. This course also teaches Scholars how to clearly communicate scientific data, culminating in the completion of both a scientific paper and a presentation detailing the results of the Scholar’s summer research project. Lastly, the SCC focuses on peer review, an integral component of the scientific process for both article publication and grant review.

We hypothesized that participation in the SFP could broaden science literacy among Scholars, resulting in graduation of a high percentage of students who went on to pursue STEM majors at the undergraduate level. To continually enhance the SFP experience and to assess whether the program was achieving its goals, YSP developed and implemented a suite of evaluation tools that included pre- and post-program surveys and interviews with Scholars and Volunteers. Here, we report on the successful implementation of these assessment tools during iterative evaluation and improvement cycles for two fundamental SFP activities, the Research Boot Camp (BC) and the Science Communication Course (SCC). Our results indicate that YSP improved the quality and effectiveness of the SFP experience for Scholars over the study period, with lasting influences on Scholar graduation rates, college matriculation, and pursuit of STEM degrees.

METHODS

Survey development and administration

Before 2007, the SFP’s efforts to assess and improve the program lacked formal, efficient, and systematic mechanisms for evaluating program impact and efficiency. To correct these shortcomings, YSP partnered in 2007 with two experienced independent evaluators (Leslie Edmonds Holt and Glen E. Holt of Holt Consulting, presently in Seattle, WA) to identify problems, build assessment tools, analyze results, and implement improvements. From 2007 to 2011, YSP sought to develop an evaluation program that met the following criteria: 1) a fast, easy-to-integrate system of evaluation for a program run by Volunteers; 2) a system in which Volunteers can use feedback immediately; and 3) a flexible system of evaluation for improvement that other volunteer science outreach programs could use as a model to improve their impact.

To fit the needs of YSP and guide development of specific, efficient assessment tools, the evaluators modified existing formative evaluation models (27, 31). The goals of the SFP include teaching Scholars to communicate science as well as improving their general attitudes towards science. YSP needed to learn within a short timeframe whether these goals were being achieved, so formative feedback was chosen to identify areas for improvement (20, 31). YSP used simple pre- and post-activity question sets in which participants were asked, on a modified Likert scale (17), to report their observations about and reactions to the activities and what they had learned from them. YSP also asked Scholars to answer questions about basic scientific knowledge as well as educational and career goals. Similar questions were asked at the end of the SFP in a long-form survey. Survey results were anonymous, with students agreeing to participate after reading the following statement: “This survey is designed to provide us with information to continue improving our science outreach efforts and measure the impact of the Young Scientist Program, in particular the Summer Focus Program, on previous participants. As a recent SFP Scholar, your input is essential for us to continue to improve this program. By filling out the questionnaire, you agree to participate in this survey and release your answers to the Young Scientist Program. Please feel free to contact [the program coordinator] with any questions regarding this survey. With your privacy in mind, we want you to know that this data will be anonymous and that your name will not be shared with outside parties.” An example of the End-of-Summer Survey is shown in Appendix 1. Analysis of the data acquired from this study was approved by the Institutional Review Board at Washington University in St. Louis.
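The survey figures report, for each year, the percentage of Scholars selecting each point on the five-point scale, and the text counts a score of 4 or 5 as a positive response; Figure 4 also plots a response rate. As a minimal sketch of that tabulation (not YSP's actual analysis, which was done in R), the Python function below summarizes one survey item for one year. The function name `summarize_likert`, the use of `None` for a blank answer, computing percentages over answering Scholars only, and the sample responses are all hypothetical assumptions.

```python
from collections import Counter
from typing import Optional, Sequence


def summarize_likert(responses: Sequence[Optional[int]], scale_max: int = 5) -> dict:
    """Summarize one survey item for one year (hypothetical helper).

    Each entry is one Scholar's answer: an integer from 1 to scale_max,
    or None if the item was left blank (an assumed encoding).
    """
    answered = [r for r in responses if r is not None]
    if any(not 1 <= r <= scale_max for r in answered):
        raise ValueError("response outside the Likert scale")
    counts = Counter(answered)
    n = len(answered)

    def pct(count: int, total: int) -> float:
        return 100.0 * count / total if total else 0.0

    return {
        # Share of all Scholars who answered the item at all.
        "response_rate": pct(n, len(responses)),
        # Share of answering Scholars choosing each scale point (bars in the figures).
        "distribution": {s: pct(counts[s], n) for s in range(1, scale_max + 1)},
        # "Positive" = one of the top two scale points (4 or 5 on a 5-point scale).
        "percent_positive": pct(counts[scale_max - 1] + counts[scale_max], n),
    }


# Hypothetical answers from 12 Scholars; two left the item blank.
example = [5, 4, 4, 3, 5, None, 2, 4, 3, 5, None, 4]
summary = summarize_likert(example)
print(round(summary["response_rate"], 1))  # 83.3
print(summary["percent_positive"])  # 70.0
```

Whether blanks belong in the denominator is a design choice; here they lower the response rate but not the answer distribution.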

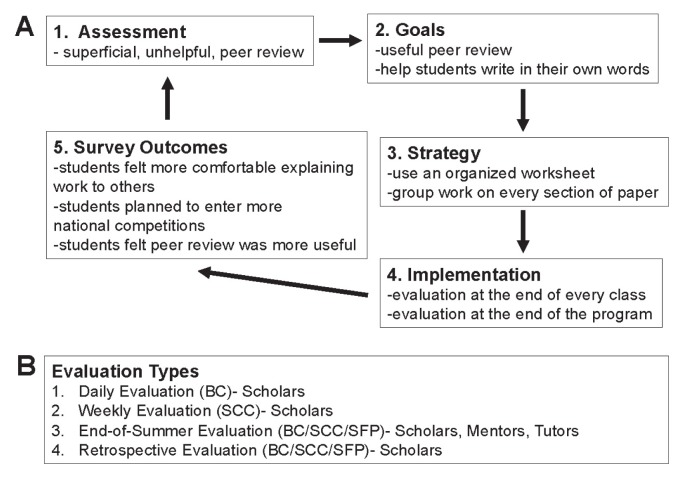

YSP collected and used evaluation data to assess two components of the SFP: the Research Boot Camp (BC) and the Science Communication Course (SCC). This evaluation design is based on continual cycles of evaluation-driven program improvement. Figure 1A depicts the evaluation cycle for SCC peer review: 1) During the assessment, evaluators helped YSP incorporate the needs and suggestions of Volunteers and parse previously acquired informal evaluation data. The assessment indicated that peer review, as then practiced, was not useful and that Scholars often relied on rote memorization rather than explaining their scientific projects in their own words. 2) YSP defined or refined goals and outcomes for the program. 3) YSP developed and implemented strategies to achieve the goals. YSP overhauled the peer review system by giving Scholars specific criteria to focus on and encouraged them to explain their research in their own words to small groups led by Volunteers. 4) YSP evaluated the success of these strategies through informal discussions with Scholars and through surveys given immediately upon component completion (i.e., at the end of each weekly SCC teaching session), at the end of the summer program, and retrospectively via e-mail. 5) Survey results were evaluated, and the assessment (step 1) began again for the next year.

FIGURE 1.

A. Evaluation Model. Strategy to improve mission-driven outcomes of YSP through quick and simple evaluation mechanisms. Bullet points demonstrate how the model was utilized to improve the Science Communication Course (SCC). B. Types and frequency of evaluations conducted and participants for each.

From 2008 to 2013, Scholars completed anonymous surveys about BC both immediately upon its completion and at the end of the summer (Fig. 1B). Over the same period, anonymous surveys after each SCC session assessed whether the Scholars understood that day’s material. If Scholars were unclear on specific concepts, instructors revisited those topics during the next class period. Additionally, Scholars anonymously answered questions about the SCC in the end-of-summer survey. A retrospective anonymous electronic survey of former SFP Scholars (2006–2012) was used to evaluate longer-term effects (Fig. 1B).

The SFP also used more informal evaluation. This included end-of-summer discussions between all SFP Scholars and YSP volunteers who had not been involved in the SFP and therefore served as neutral moderators. These volunteers did not know the students by name and recorded student responses without any identifiers. Students were not required to respond to questions. These informal evaluations, in which the SFP recorded and coded the Scholars’ responses anonymously, were very useful to SFP leaders as a supplement to the more formal surveys. SFP leadership found that students were more likely to give negative feedback in informal discussions than in the surveys, perhaps because they were concerned about SFP leaders’ perceptions of them. Thus, a combination of formal and informal feedback allowed SFP leadership to identify problems and respond to them effectively.

Data analysis

Analysis of the data presented in this manuscript was performed using base functions in the R statistical software suite and programming environment (33). Specifically, we used ANOVA and Tukey’s honestly significant difference (HSD) test to analyze student responses over time, and t-tests and Fisher’s exact tests to compare SFP students with SLPS and Missouri students. P values are presented for t-tests between percentages; we additionally performed Fisher’s exact test on the raw counts.
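In R, these tests are available as `aov()`, `TukeyHSD()`, `t.test()`, and `fisher.test()`. To show what Fisher’s exact test computes on raw counts, here is a minimal Python re-implementation of the standard two-sided test for a 2 × 2 table (for example, students who did or did not enter a STEM major, in two groups). The function name `fisher_exact_2x2` and the counts in the usage line are illustrative assumptions, not data from this study.

```python
from math import comb


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test p-value for the table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell,
        # holding all row and column totals fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Two-sided p: total probability of every table no more likely than the
    # observed one (the small tolerance guards against floating-point ties).
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_observed * (1 + 1e-7))
    return min(1.0, p)


# Hypothetical 2 x 2 counts, for illustration only.
print(round(fisher_exact_2x2(3, 1, 1, 3), 4))  # 0.4857
```

Because the test conditions on the margins and enumerates every possible table, it stays valid for the small cell counts typical of a 12 to 16 Scholar cohort, where a chi-squared approximation would be unreliable.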

RESULTS

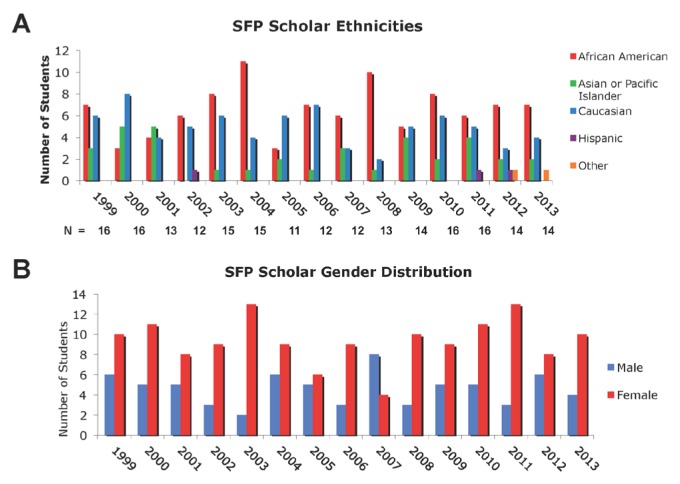

Since 1991, 280 high school students have participated as Scholars in the SFP. Of the Scholars who participated after YSP began collecting demographic data in 1999, 101 (48%) belonged to underrepresented minorities (Fig. 2A), and a majority (140) were women (Fig. 2B).

FIGURE 2.

SFP Demographics. A. Ethnicities of SFP Scholars. Self-reported ethnicities of Scholars from 1999 to 2013. Definitions of ethnicities are from The Common Application. B. Genders of SFP Scholars. Self-reported genders of Scholars from 1999 to 2013. SFP = summer focus program.

Basic laboratory skills and safety

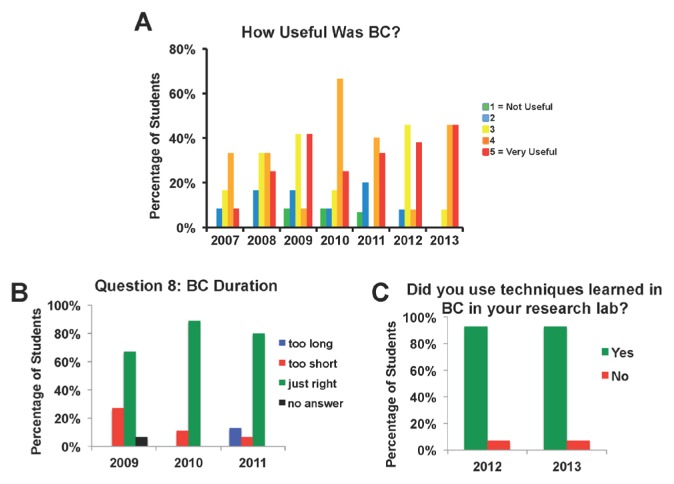

The first key scientific skills that YSP teaches Scholars are basic laboratory techniques and safety, addressed in a Research Boot Camp (BC) before Scholars join their research laboratories. Prior to 2007, Scholars participated in a three-day BC course that took place a few days after they entered their summer research labs. YSP began a two-year assessment of BC in 2007, soliciting feedback from both Scholars and Volunteers via informal discussions and a comprehensive survey completed at the end of the SFP. In the comprehensive end-of-summer survey the Scholars were asked, “How useful was the Boot Camp?” on a modified five-point Likert scale (17) where 1 = not useful and 5 = very useful (Fig. 3A). In 2007, responses to this question were more negative than to any other query in this survey. Verbal and written comments from Scholars criticized the BC timetable and content. Scholars did not like the length of the course or the degree of detail taught, and cited a lack of relevance to the Scholars’ eventual summer research projects.

FIGURE 3.

Assessment of BC. SFP Scholars completed survey questions about their Research Boot Camp (BC) experience. The percentage of Scholars selecting each answer is shown on the y-axis. Questions were ranked on a five-point scale, 1 being the most negative and 5 the most positive. A. Scholar responses to the question, “How useful was BC?”, evaluated in the end-of-summer survey (2007–2013). B. Scholar responses to the question, “Was the BC too long, too short, or just the right length?”, evaluated in the new end-of-BC survey (2009–2011). C. Scholar responses to the question, “Did you use the techniques you learned in BC in your research lab?”, evaluated in 2012–2013. BC = boot camp; SFP = summer focus program.

In response to the assessment data, the BC was refocused and restructured. YSP defined three main goals for BC (Table 1) that provided clear and comprehensive teaching directions. YSP also reorganized and condensed BC into a two-day course held before Scholars entered their research labs, and queried Scholars about BC twice: immediately after completing BC and at the end of the summer. Ratings in 2008 improved over those in 2007, with roughly half of the Scholars rating BC as “useful” (a score of 4 or 5) (Fig. 3A). These assessment data were then used to continue adjusting and improving BC over the next several years to accomplish the stated goals.

TABLE 1.

Research boot camp (BC) goals.

| Boot Camp Goal | Details |
|---|---|
| 1) Ice Breaking | Scholars will get to know each other and become comfortable learning/working/communicating with each other as peers. |
| 2) Gaining Basic Laboratory Skills/Techniques | Scholars will gain valuable skills that 1) enhance their abilities as scientists, 2) prepare them to participate in the research lab, and 3) prepare them for college-level biology courses. Scholars will learn how to prepare a lab notebook; pipet liquids; determine protein concentrations; use sterile technique; perform bacterial transformation, restriction digests, gel electrophoresis, and PCR; and formulate solutions. |
| 3) Developing Active Learning Skills | Scholars will perfect active learning skills that can make them good scientists and prepare each of them for college-level learning experiences: asking and answering questions, developing self-motivation, and proactively studying/questioning/listening. |

PCR = polymerase chain reaction.

From 2009 to 2013, Scholars completed a short evaluation (Appendix 2) immediately after completing BC to determine whether the most recent program changes had fulfilled the goals outlined in Table 1. Questions 1 and 2 addressed whether BC provided an “ice-breaking” environment for the Scholars (Goal 1). Nearly all students felt more comfortable with their colleagues and instructors after completing BC (100% of students answered “yes” to Question 1 in 2009, 88% in 2010, and 100% in 2011–2013). Questions 3 to 5 focused on specific concepts and techniques taught in BC (Goal 2). Question 3 assessed Scholars’ understanding of pipetting technique, Question 4 assessed their understanding of antibiotic selection in cloning techniques, and Question 5 assessed their understanding of sterile technique. In 2009 and 2010, all Scholars correctly answered Question 3, and 96% of Scholars correctly answered Question 5, indicating that Volunteers were successfully teaching Scholars pipetting and sterile techniques. However, half of the responses to Question 4 were incorrect (36.7%) or left blank (13.3%). This information indicated that Volunteers needed to better explain antibiotics and their use in cloning techniques. In response, YSP devoted more BC time to this topic the following year. The 2011 data showed a significant increase in correct answers to Question 4, with more than 90% of Scholars answering correctly (F (2,44) = 3.413, p = 0.042). This significant increase suggests that YSP was able to use its evaluation method to improve BC outcomes.
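The F statistic above comes from an ANOVA across survey years. Assuming a one-way design with year as the only factor, the degrees of freedom are k − 1 and N − k for k groups and N scored answers, so F(2, 44) would correspond to three years and 47 answers. The Python sketch below computes F for answers coded 1 (correct) or 0 (incorrect or blank); that coding, the function name `one_way_anova`, and the per-year counts are hypothetical. It stops at the statistic because the Python standard library has no F distribution; in R, `summary(aov(...))` also reports the p-value.

```python
from statistics import fmean
from typing import Sequence


def one_way_anova(groups: Sequence[Sequence[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [fmean(g) for g in groups]
    grand_mean = fmean(x for g in groups for x in g)
    # Between-group sum of squares: how far each year's mean sits from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: scatter of answers around their own year's mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical Question 4 scores (1 = correct, 0 = incorrect or blank).
year_a = [1] * 8 + [0] * 8   # 16 Scholars
year_b = [1] * 7 + [0] * 8   # 15 Scholars
year_c = [1] * 15 + [0] * 1  # 16 Scholars
f_stat, df1, df2 = one_way_anova([year_a, year_b, year_c])
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```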

Questions 6 and 7 queried the degree of the Scholars’ active participation and learning (Goal 3). Finally, Questions 8 and 9 addressed Scholars’ attitudes toward BC duration and usefulness. When Scholars were asked about the duration of BC following course restructuring (Q8), responses over the subsequent years were positive overall, with 67% to 90% of Scholars indicating that BC was the right length (Fig. 3B). When asked about the merit of BC (Q9), a majority of Scholars ranked its usefulness at 4 or 5 out of 5 (where 5 was “very useful”) from 2009 to 2013. Although responses did not change significantly over time (F (2,42) = 1.123, p = 0.335), SFP Scholars’ responses from 2009 to 2013 were generally more positive about the length and content of BC than the informal feedback collected in 2007 and 2008 that prompted the overhaul of the BC curriculum. Consistent with this, comments regarding this question ranged from “explained/covered a lot that is not taught in my science class” to “helped recall information and techniques learned in the past.”

In 2011, YSP noted a lower ranking of the BC experience in the end-of-summer assessment (Fig. 3A). Feedback from Scholars indicated that they did not feel that the techniques learned in BC were relevant to their eventual summer research projects. YSP Volunteers used this feedback to restructure the BC curriculum again so that it better reflected the skills most Scholars used in their summer laboratory projects. Year-to-year differences in end-of-summer ratings were not statistically significant (F (6,84) = 0.871, p = 0.520). However, in 2012 and 2013 we added a question asking whether Scholars used techniques learned in BC once they started work in their research labs, and nearly all students replied “Yes” (Fig. 3C), although this descriptive result was not tested statistically. In this way, YSP used evaluation cycles to improve the BC experience for students and to directly address its goal of teaching Scholars basic scientific skills in an active-learning setting.

Evaluating the Science Communication Course (SCC)

Besides acquiring laboratory and safety skills, successful scientists must be able to read and understand the primary literature, communicate their novel findings to others, and give and respond to feedback. In the Science Communication Course (SCC), Scholars are taught how to read the primary literature and clearly communicate scientific data in written and oral form. Scholars also engage in peer review to give feedback to one another and learn how to use constructive criticism. Most importantly, the SCC gives Scholars the opportunity to communicate with people of different backgrounds.

YSP initiated the SCC in 2004 to teach Scholars scientific communication, specifically how to write scientific papers about their summer research projects. Assessment began in 2007 with the following informal observations by Volunteers: a) Scholars were not fully engaged, interactive participation in discussions was poor, few questions were asked, and instructor questions were not answered; b) Scholars did not understand scientific vocabulary, could not define specific phrases in their own words, and simply copied phrases they did not comprehend from their mentors or published sources; c) Scholars reviewed each other’s papers weekly but their critiques were superficial and did not critically assess content; and d) Scholars did not understand that peer review could actually improve their writing.

Based on these assessments, YSP redefined goals for the SCC (Table 2) and systematically overhauled the content and format. Assessment and improvement of the SCC followed the outline in Figure 1A: setting goals, crafting a strategy incorporating active learning and peer review, devising and implementing new assessment tools, analyzing outcomes, and changing the SCC curriculum. Volunteers leading the SCC consulted with The Teaching Center at Washington University on course design and peer review implementation (34, 35) and created a syllabus with explicit long-term and lesson-specific goals (see Ref. 7). During each weekly class, Volunteer instructors teach Scholars about one section of a paper (for example, the Introduction). Scholars spend the next week writing an Introduction section, and, in the following class period, they hand this in to instructors before learning how to write the Materials and Methods section. Their assignment for the next week is to write the Materials and Methods section and to review their peers’ Introduction sections. This course design enabled Scholars to learn to write the sections of a scientific paper and receive feedback from both Volunteer instructors and peers. For each Scholar, the final culmination of the SCC is a complete scientific paper documenting the results of their summer research project. The Scholars also prepare formal presentations about their research projects and present these at a symposium attended by faculty, Volunteers, other Scholars, and family and friends.

TABLE 2.

Science communication course (SCC) goals.

| Science Communication Course Goal | Details |
|---|---|
| 1) Understanding | Scholars can describe in their own words and analyze the basic content of scientific papers. Scholars understand different ways in which science can be written or spoken. |
| 2) Communicating | Scholars can communicate their scientific research successfully so that others understand it at a basic level (written paper, oral presentation). They can recognize and apply the appropriate format of scientific papers in their own writing. |
| 3) Peer Review | Scholars can evaluate the work of others constructively. They can identify common problems in composition. |

The SCC uses pedagogical concepts similar to those of the BIO 2010 Initiative (6), focusing on strong Scholar engagement by emphasizing active participation and presenting concepts dynamically, in contrast to a lecture format (passive learning). Active learning, in the form of group activities and discussions, is crucial to scientific teaching (8, 13, 18), especially for minority students (11). To incorporate active learning, we developed a format that gave students the opportunity to hear and visualize new information during a mini-lecture, followed by an activity that prompted them to apply the new concepts they had just absorbed. For example, instructors explained a concept for approximately 15 minutes, encouraging Scholars to both ask and answer questions. For the following 15 to 20 minutes, the Scholars participated in a “Think-Pair-Share” exercise built around a question related to the mini-lecture: Scholars worked on the question first on their own, then with a partner, and finally discussed answers with the entire class. These exercises required students to think independently as well as communicate their ideas to a group: two forms of active learning. To track the efficacy of the new curriculum, a survey was administered to Scholars after each SCC class to determine whether they understood the daily material. If Scholars were unclear on specific concepts, these topics were revisited during the next class period. Additionally, Scholars were queried about the entire SCC experience in the end-of-summer survey, and a retrospective electronic survey of former SFP Scholars (2006–2012) was used to evaluate longer-term impacts (Fig. 1B).

Scholars were asked to rank the importance of the Summer Focus Program in developing skills in the following areas (1 being “most helpful” and 8 being “least helpful”): writing skills, applications of the scientific method, basic job skills, information research skills, presentation skills, laboratory skills, job communication skills, and applying science reasoning to everyday life. Of these categories, the 40 respondents most often ranked laboratory skills, applications of the scientific method, and basic job skills as most helpful or second most helpful (45%, 30%, and 30% of respondents, respectively).
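The percentages above count how many respondents placed a category first or second in their 1-to-8 ranking. Because each respondent fills exactly two top slots, the shares across all eight categories sum to 200%, which is why the three reported shares can add up to more than 100%. A minimal Python sketch of the tally follows; the `ranking` helper and the four respondents are hypothetical.

```python
from typing import Mapping, Sequence

CATEGORIES = (
    "writing skills",
    "applications of the scientific method",
    "basic job skills",
    "information research skills",
    "presentation skills",
    "laboratory skills",
    "job communication skills",
    "applying science reasoning to everyday life",
)


def top_two_share(rankings: Sequence[Mapping[str, int]]) -> dict[str, float]:
    """Percent of respondents ranking each category 1 or 2 (1 = most helpful)."""
    n = len(rankings)
    return {
        cat: 100.0 * sum(1 for r in rankings if r[cat] in (1, 2)) / n if n else 0.0
        for cat in CATEGORIES
    }


def ranking(*top: str) -> dict[str, int]:
    """Hypothetical helper: a full 1-8 ranking with `top` first, others in list order."""
    order = [*top, *(c for c in CATEGORIES if c not in top)]
    return {cat: rank for rank, cat in enumerate(order, start=1)}


# Four hypothetical respondents (the survey described above had 40).
responses = [
    ranking("laboratory skills", "basic job skills"),
    ranking("laboratory skills", "applications of the scientific method"),
    ranking("presentation skills", "laboratory skills"),
    ranking("applications of the scientific method", "writing skills"),
]
shares = top_two_share(responses)
print(shares["laboratory skills"])  # 75.0
print(sum(shares.values()))  # 200.0
```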

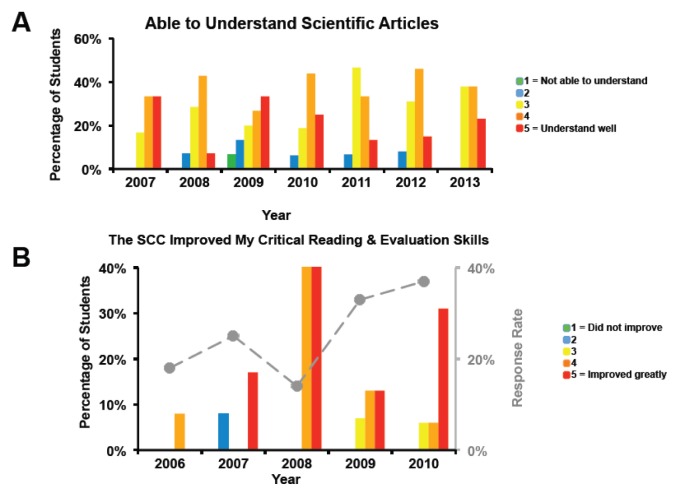

Teaching Scholars to read and understand the primary scientific literature

To assess how effectively the SCC taught Scholars to read and understand the primary scientific literature, Volunteers asked Scholars how well they understood scientific articles (Fig. 4A). We expected that these articles might be challenging for high school students because of their detailed content, specialized terminology, and style. In 2007, 66% of Scholars responded positively (a rank of 4 or 5 on a 5-point Likert scale), and responses remained positive from 2008 to 2010. However, in 2011, only 47% of Scholars responded positively. We addressed this in 2012 by focusing the SCC on dissecting scientific articles and by enlisting the volunteer Tutors to assist Scholars with their analyses. In 2012–2013, more than 65% of Scholars responded positively, suggesting improved understanding of scientific articles. Year-to-year changes were not statistically significant (F (6,87) = 1.245, p = 0.292); however, the share of positive responses recovered after the 2011 decline.

FIGURE 4.

Assessment of scientific literacy in the SCC. SFP Scholars answered survey questions about their SCC experience. The percentage of Scholars selecting each answer is plotted in bars on the y-axis; response rate for each year is plotted as dots on the right-hand y-axis. Questions were ranked on a five-point scale, with 1 being the most negative and 5 the most positive. A. Scholar responses to the question, “How well do you feel you are able to understand scientific articles?”, evaluated in the end-of-summer survey (2007–2013). B. Scholar responses to the statement, “The science communication course improved my critical reading and evaluation skills,” evaluated in the retrospective survey (2006–2010). SCC = science communication course; SFP = summer focus program.

Teaching Scholars to use and respond to peer review

Through informal discussions at the beginning of the evaluation process (2007), Scholars indicated that they had difficulty grasping and applying peer review. Peer review is a crucial scientific skill both for publishing findings in leading journals and for funding scientific projects. Early exposure to the peer review process helps prepare Scholars for a future in science (2, 15). We employed a peer review model developed by the Teaching Center at Washington University to run peer review sessions (34) in which Volunteers engaged the Scholars in guided peer review that used worksheets, small-group work, and discussions. Volunteers coached Scholars to write in their own voice rather than copying the words of their Mentors or published scientific articles. Scholars began practicing peer review through constructive criticism of research papers written by previous SFP participants. Scholars were then assembled into diverse peer review groups (three or four Scholars of different genders and races) to critique each other’s papers using a basic worksheet (see Ref. 7). The worksheet contained questions designed to help Scholars critically evaluate papers and provide useful feedback to their peers. In these small groups, Scholars reached a level of comfort that enabled them to give constructive criticism. When surveyed about the restructured peer review module in the end-of-summer 2007 evaluation, Scholars responded favorably. One Scholar stated, “the peer review of other students’ papers was very useful, and the constructive criticism of my own paper proved helpful in revising the final draft of the paper.” A retrospective survey taken after Scholars had begun college asked whether the SCC improved Scholars’ ability to “critically read and evaluate others’ work” (Fig. 4B). While the response rate for this survey was low and the differences did not reach statistical significance (F (4,12) = 0.241, p = 0.91), Scholars who did respond consistently regarded the SCC as having improved their critical reading and evaluation skills. These responses suggest that restructuring the peer review module helped Scholars become more comfortable with the process and recognize that they benefited directly from gaining proficiency in peer review.

Communicating scientific findings to diverse audiences

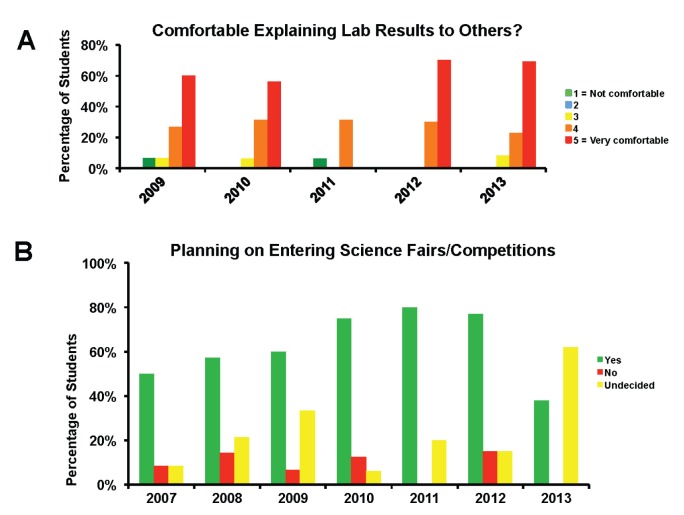

Coherently explaining scientific research findings to others is a crucial scientific skill. While the peer review component of the SCC addresses written communication, we also focus on verbal communication. During the last week of the SFP, Scholars prepared a five-minute digital presentation on their research project and practiced presenting it every day to an audience of their peers and Volunteers to get constructive feedback on presentation content and style. An end-of-summer survey question asked Scholars whether they felt comfortable explaining their research results to others. Although the responses did not change significantly over time (F (4,66) = 0.372, p = 0.828), over 80% of Scholars responded positively to this question from 2009 to 2013 (Fig. 5A; the question was added to the survey in 2009), suggesting that the majority of Scholars felt comfortable presenting their work to others.

FIGURE 5.

Assessment of scientific communication in the SCC. SFP Scholars’ answers to survey questions about their SCC experience. Percentage of Scholars selecting each answer (bars) on the y-axis. Responses were rated on a five-point scale, 1 being the most negative and 5 the most positive. A. Student responses to the question, “How comfortable do you feel explaining your laboratory results to others?”, evaluated in the end-of-summer survey (2009–2013). B. Percentage of students who plan to enter science fairs or competitions per year, evaluated in the end-of-summer survey (2007–2013). SCC = science communication course; SFP = summer focus program.

Requiring Scholars to explain their research to peers improves their own understanding of their research projects and their ability to communicate science to people of different backgrounds. YSP encourages Scholars to submit their research papers to science competitions and tracks the percentage of Scholars who plan to do so (Fig. 5B). There was a trend toward an effect of year on whether Scholars planned to enter a science competition (F (6,81) = 1.123, p = 0.079). Another useful metric of student engagement and success in science communication was participation in the Siemens Competition, a prestigious national competition for high school student research projects in math and science. Before 2009, no SFP Scholars had been semifinalists in the Siemens Competition, so SCC instructors began encouraging Scholars to submit their findings to this competition. Between 2009 and 2012, SFP Scholars accounted for 6 of the 15 Missouri semifinalists. In 2013, more Scholars were “undecided” about submitting their work (Fig. 5B); in response, the following year we provided information about competitions early in the SFP and encouraged students to submit their final papers.

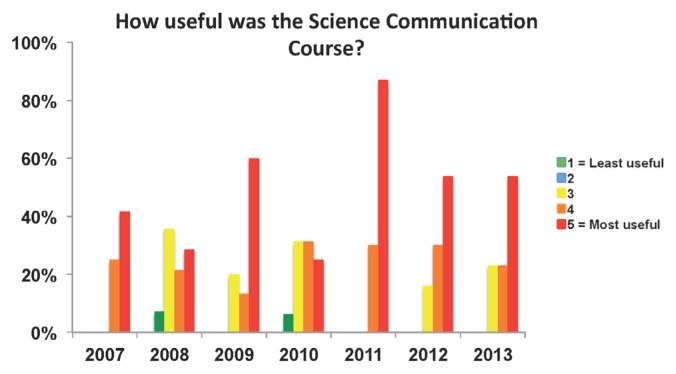

To assess the overall utility of the SCC to Scholars, the end-of-summer survey (5-point scale) asked, “How useful was the Science Communication Course?” (Fig. 6). In 2007, the responses were favorable; however, only 67% of Scholars answered the question. The response rate improved after the 2008 redesign of the SCC, and in 2009, 73% of Scholars ranked the SCC positively, compared with less than 50% in 2008. The change in responses across years was statistically significant (F (6,85) = 3.358, p = 0.005), reflecting a positive response to the active learning approach. Surprisingly, only 56% of Scholars ranked the SCC positively in 2010, with most criticisms suggesting that the SCC was too basic. To address this criticism, YSP increased the frequency and specificity of individual meetings between Scholars and SCC Volunteers to impress upon Scholars the importance of fundamental writing skills, particularly for explaining complex ideas. The 2011 evaluations reflect the success of this continual reworking: 100% of students ranked the SCC positively, and post-hoc analysis indicated a significant improvement between 2010 and 2011 (p = 0.012). These positive evaluations continued in 2012 and 2013. Thus, continual evaluation-driven restructuring of the SCC met its science communication goals while remaining flexible enough to address the changing needs of Scholars.
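The year-to-year comparisons above are reported as F statistics whose degrees of freedom fit a one-way ANOVA with survey year as the only factor (for example, seven years, 2007–2013, give 6 between-group degrees of freedom, and F (6,85) then implies 92 responses in total). The following is a minimal Python sketch of that calculation under two assumptions of ours rather than stated methods of the study: each five-point response is treated as a numeric score, and "positive" means a response of 4 or 5. The function names and the example responses are hypothetical, not the study's data.

```python
from statistics import mean


def percent_positive(scores):
    """Percentage of responses rated 4 or 5 on a 1-5 scale (assumed cutoff)."""
    return 100 * sum(1 for s in scores if s >= 4) / len(scores)


def one_way_anova(groups):
    """One-way ANOVA F statistic for a list of groups (e.g., one list per survey year).

    Returns (F, df_between, df_within). The p-value would come from the
    F distribution with these degrees of freedom (e.g., computed in R or SciPy).
    """
    k = len(groups)
    all_scores = [x for g in groups for x in g]
    n_total = len(all_scores)
    grand_mean = mean(all_scores)
    # Spread of the year means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Spread of individual responses around their own year's mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    if ss_within == 0:
        # Every respondent within each year gave the same answer (e.g., all at ceiling).
        raise ValueError("no within-year variability; F is undefined")
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical responses for three survey years (illustration only).
responses_by_year = {
    2009: [5, 4, 4, 3, 5, 4],
    2010: [3, 4, 2, 3, 5, 3],
    2011: [5, 5, 4, 5, 4, 5],
}
for year, scores in responses_by_year.items():
    print(year, f"{percent_positive(scores):.0f}% positive")
f_stat, df1, df2 = one_way_anova(list(responses_by_year.values()))
print(f"F({df1},{df2}) = {f_stat:.3f}")
```

Treating ordinal five-point responses as interval scores is itself a modeling choice; a rank-based test such as Kruskal–Wallis is a common alternative. The sketch also shows why near-ceiling data are hard to analyze: when nearly every response is a 5, both the within-year and between-year variation shrink toward zero.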

FIGURE 6.

Assessment of long-term impact of the SCC. SFP Scholars’ answers to survey questions about their SCC experience. Percentage of Scholars selecting each answer (bars) on the y-axis. Responses were rated on a five-point scale, 1 being the most negative and 5 the most positive. Scholar responses to the question, “How useful was the SCC?”, evaluated in the end-of-summer survey (2007–2013). SCC = science communication course; SFP = summer focus program.

Overall effect of the SFP on Scholars

In addition to BC- and SCC-specific assessments, we surveyed Scholars at the end of the SFP and retrospectively to determine the effect of the entire SFP experience (Fig. 1B). We compared the high school graduation rate of SFP Scholars from 2011 to 2014 (100%) with SLPS graduation rates during comparable years (mean 83.85%) (21) and found that SFP Scholars graduated high school at a significantly higher rate than SLPS and Missouri students (p = 0.033). Furthermore, SFP Scholars continued on to two- or four-year colleges at a high rate (82.88% of students), significantly higher than would be expected from the average college matriculation rates of SLPS (31.9%; p < 0.001) and Missouri schools (37.86%; p < 0.001). This is especially noteworthy considering that only 36.89% of schools attended by SFP Scholars graduated students with ACT scores above the threshold indicating college readiness (22).

When asked to rate the impact of their SFP experience on their eventual college major (on a scale of 1 to 5, with 5 being “major influence”), 75% of Scholars from 2006 to 2013 answered 4 or 5. Of the 111 SFP Scholars from 2006 to 2013, 92 confirmed that they were enrolled in college, and a majority of these (73%) were pursuing STEM majors. This percentage is significantly higher than the percentages of students pursuing STEM degrees in Missouri (9.7%) and the United States (10.7%) (p < 0.001) (23).
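The comparisons against district, state, and national rates above report p-values without naming the test in this section. One simple way to compare an observed count with a fixed reference rate is an exact one-sided binomial test, sketched below in Python. This is our illustration, not necessarily the authors' procedure: it treats the reference rate as known without error and the Scholars as independent draws, and the cohort size in the graduation example is hypothetical because the number of Scholars graduating from 2011 to 2014 is not given here.

```python
from math import comb


def binom_upper_tail(k, n, p0):
    """Exact one-sided p-value P(X >= k) for X ~ Binomial(n, p0).

    k: number of Scholars with the outcome (e.g., graduated, STEM major)
    n: number of Scholars observed
    p0: reference rate treated as fixed (e.g., a district average)
    """
    return sum(comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(k, n + 1))


# Graduation: if all n Scholars graduate, the tail reduces to p0 ** n.
n_hypothetical = 20  # hypothetical cohort size, not reported in this section
p_grad = binom_upper_tail(n_hypothetical, n_hypothetical, 0.8385)
print(f"all {n_hypothetical} graduate vs 83.85%: p = {p_grad:.4f}")

# STEM majors: 73% of the 92 Scholars confirmed in college is about 67 students.
p_stem = binom_upper_tail(67, 92, 0.107)
print(f"67 of 92 in STEM vs 10.7%: p = {p_stem:.2e}")
```

The graduation case shows why a 100% rate can be significant even in a small cohort: the probability that every one of n students graduates at an 83.85% base rate shrinks geometrically with n.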

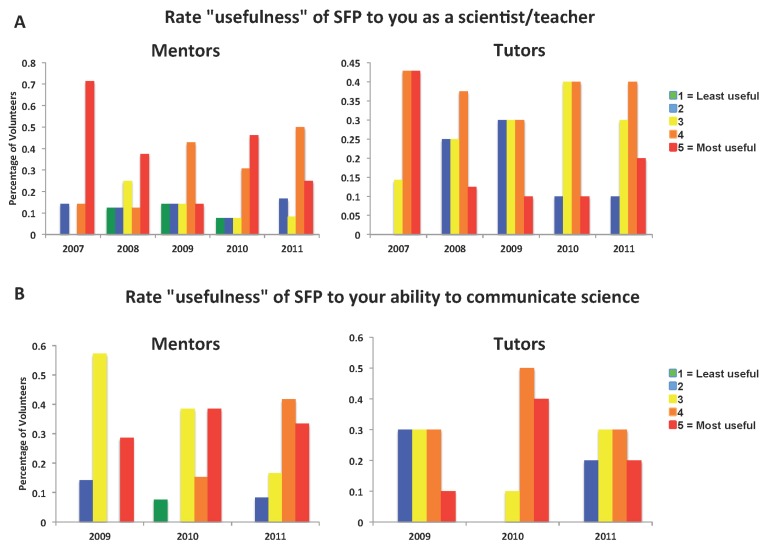

YSP also used its new evaluation tools to track and improve Volunteer outcomes. In 2008 and 2009, fewer YSP Volunteers gave positive anonymous responses to the question “Rate the ‘usefulness’ of the summer to you as a scientist/teacher.” Informal evaluations revealed that Volunteers were unclear about their responsibilities as Mentors and Tutors. In response, SFP leaders defined goals, responsibilities, and benefits for the different volunteer positions in the SFP and held a brief training session for Mentors. Following these changes, Mentor and Tutor responses to the questions “Rate the ‘usefulness’ of the summer to you as a scientist/teacher” and “Rate the ‘usefulness’ of the summer to your ability to communicate science” increased over time, with Tutor responses to the latter question increasing significantly (p = 0.041; Fig. 7).

FIGURE 7.

Assessment of the SFP by Volunteers (Mentors and Tutors). SFP Volunteers’ (Mentors’ and Tutors’) answers to survey questions about their SFP experience. Percentage of Mentors/Tutors selecting each answer (bars) on the y-axis. Responses were rated on a five-point scale, 1 being the most negative and 5 the most positive. A. Responses to the statement, “Rate the usefulness of the SFP to you as a scientist/teacher,” evaluated in the end-of-summer survey (2007–2011). B. Responses to the statement, “Rate the usefulness of the SFP to your ability to communicate science,” evaluated in the end-of-summer survey (2009–2011). SFP = summer focus program.

DISCUSSION

In this manuscript, we have described the use of quick and simple evaluation tools to improve our volunteer-based science outreach program and its effectiveness in recruiting high school students to pursue undergraduate STEM degrees. We focused our efforts on improving two components of the nine-week Summer Focus Program (SFP) research internship: the Research Boot Camp (BC) and the Science Communication Course (SCC). The SFP aims to bring high school students into the scientific community of practice by teaching Scholars key scientific skills, including laboratory and safety skills, reading and understanding the scientific literature, scientific communication, and peer review. We developed and implemented evaluation tools that assess how effectively these goals are achieved and that can easily be modified to focus on new issues as they arise in the SFP. In the case of BC, these evaluation tools led us to streamline the curriculum, making it shorter and more focused on the specific scientific techniques the Scholars would later use in their research projects. In the SCC, evaluation tools were used to assess peer review and scientific writing. These tools have helped the SFP achieve its goals of increasing science literacy and recruiting underrepresented minorities to science, as evidenced by the high number of SFP Scholars who go on to pursue STEM degrees. In our analyses of changes in evaluation results over time, we found that results were positive but that many did not show a statistically significant change. Although surprising, this may be explained by a ceiling effect: many students gave ratings near the top of the scale, leaving too little variability in the responses to detect significant changes with our sample size. Nevertheless, the evaluation results provide useful documentation of our gains and successes that can in turn be shared with government and private funders, as well as with similar outreach programs.
In line with our initial hypotheses, we found that SFP Scholars graduated from high school at substantially higher rates than their peers in the SLPS and the Missouri school system, and nearly 75% of SFP graduates enrolled in college were pursuing STEM majors.

One possible criticism of these outcomes is that self-selection bias might mean that only the most talented and highly motivated students apply for and are accepted into the program. Although highly motivated students likely apply, the selection process for the SFP, carried out by YSP Volunteers, focuses on choosing the students who exhibit the most enthusiasm for science rather than the “best and brightest” from our pool of applicants. Volunteers do not take high school transcripts into account when selecting students for the program, instead focusing on interest in science, ability to work hard, and whether applicants would benefit from the SFP. Evaluations of similar “pipeline” programs that aim to expose underrepresented minority students to science and promote scientific careers have noted many of the shortcomings we mention for our own evaluation, including difficulties with follow-up and the lack of an appropriate “control group” (16, 38). A 2009 survey by the US Department of Health and Human Services found only 24 studies on pipeline programs in the literature, of which only seven addressed high school programs. Winkleby et al. (39) addressed these concerns by matching participants in a pipeline program, academically and socioeconomically, with a cohort of students who were not chosen to participate. Tyler-Wood et al. (38) assessed the impact of an elementary school science program for girls by comparing program participants with “contrasts” (girls who attended the same school but did not participate in the program), as well as with STEM faculty, science majors, and nonscience majors. Building on these studies, YSP is currently developing retrospective surveys of 1) students who were not selected for an interview for the SFP and 2) those interviewed but not chosen for the SFP, to act as a “contrast group” (38, 39).
We plan to assess these students’ college matriculation, post-college education/career, and attitudes toward science and compare these with retrospective surveys of SFP Scholars to determine long-term impacts of the SFP.

The data discussed here come from professionally guided assessment of five years of SFP Scholar and Volunteer feedback but are limited by small sample sizes (12–16 Scholars per year) and brief evaluations. Continuing evaluation will enable YSP to better understand its longer-term impacts in the community, to make course corrections based on YSP’s growing database, and to assess the effectiveness of improvements. Most of our data are self-reported; to assess Scholars’ skills more objectively, YSP plans to have Mentors and Tutors perform pre- and post-program assessments of Scholars, allowing a more objective comparison of Scholars’ skills before and after the SFP. In addition, Tutors will be responsible for evaluating the quality of Scholars’ final papers and presentations over time.

There is a need for extensive “post” evaluation of SFP Scholars during their undergraduate years and beyond to determine the long-term impact of the SFP. As discussed in this manuscript, YSP conducts pre- and post-program surveys to evaluate short-term program impacts. We contact students to ascertain their college plans, but beyond that, we rely on retrospective surveys at five-year intervals. Because we need more detailed and frequent feedback to improve the SFP, we are replacing the five-year survey with surveys at the end of participants’ sophomore year of college to evaluate whether the SFP continues to have an impact on students as they progress through college. Additionally, the low response rates of retrospective surveys have motivated us to offer incentives for completing future retrospective surveys and to use social media to maintain up-to-date e-mail addresses for former SFP participants. In the future, YSP intends to conduct summative evaluations using data from ten or more years of SFP participants. Future directions include tracking the impact of SFP participation on the career choices of former Scholars and Volunteers and the perceived impacts of the SFP on the broader aspects of the careers of past Scholars and Volunteers.

CONCLUSION

YSP successfully implemented objective, quick, and simple methods to assess program efficacy and make program improvements, demonstrating that a volunteer outreach organization can economically and efficiently improve its educational programs targeting underrepresented minorities. Faculty and students at other institutions can benefit from implementing similar assessment methods to begin or enhance their own science education and outreach programs for their target communities. We present this program as a “pipeline” to increase the recruitment of underrepresented minority and disadvantaged students to scientific undergraduate study. Given the short time since Scholars completed the program, the time necessary to observe effects on college and graduate school attendance and career choice, and the small sample size of the current eight-year study, YSP plans to continue assessments for many years, including summative evaluations using data from ten or more years of SFP participants, to determine the full impact of the SFP on Scholars. We also plan to track the impact of SFP participation on the career choices of former Scholars and the perceived impacts of the SFP on broader aspects of the careers of past Scholars. The data presented here show that the SFP uses formative evaluation to continually improve the learning experience for high school students, with significant impacts on Scholar graduation rates, college matriculation, and pursuit of STEM degrees.

SUPPLEMENTAL MATERIALS

Appendix 1: End-of-summer survey

Appendix 2: Research boot camp assessment

ACKNOWLEDGMENTS

The SFP was supported from 2006 to 2013 by funding from the Howard Hughes Medical Institute (HHMI 51006113) and the Washington University Medical Center Alumni Association. These organizations provided funding for the operating budgets of the SFP but had no input in study design, implementation, data collection, analysis, interpretation, manuscript preparation, review, or publication. The authors declare that there are no conflicts of interest.

Footnotes

Supplemental materials available at http://asmscience.org/jmbe

REFERENCES

- 1. Barab SA, Hay KE. Doing science at the elbows of experts: issues related to the science apprenticeship camp. J Res Sci Teach. 2001;38:70–102. doi: 10.1002/1098-2736(200101)38:1<70::AID-TEA5>3.0.CO;2-L.

- 2. Bean J. Engaging ideas: the professor’s guide to integrating writing, critical thinking, and active learning in the classroom. Jossey-Bass Publishers; San Francisco, CA: 1996.

- 3. Beck MR, Morgan EA, Strand SS, Woolsey TA. Volunteers bring passion to science outreach. Science. 2006;314:1246–1247. doi: 10.1126/science.1131917.

- 4. Bencze L, Hodson D. Changing practice by changing practice: toward more authentic science and science curriculum development. J Res Sci Teach. 1999;36:521–539. doi: 10.1002/(SICI)1098-2736(199905)36:5<521::AID-TEA2>3.0.CO;2-6.

- 5. Chiappinelli KB. Fostering diversity in science and public science literacy. ASBMB Today. [accessed 8 October 2011]. [Online.] http://www.asbmb.org/asbmbtoday/asbmbtoday.aspx?id=14604.

- 6. Committee on Undergraduate Biology Education to Prepare Research Scientists for the 21st Century, National Research Council of the National Academies. BIO 2010: Transforming undergraduate education for future research biologists. The National Academies Press; Washington, DC: 2003.

- 7. Danka ES, Malpede BM. Reading, writing, and presenting original scientific research: a nine-week course in scientific communication for high school students. J Microbiol Biol Educ. 2015;16:203–210. doi: 10.1128/jmbe.v16i2.925.

- 8. Deslauriers L, Schelew E, Wieman C. Improved learning in a large-enrollment physics class. Science. 2011;332:862–864. doi: 10.1126/science.1201783.

- 9. Fougere M. The educational benefits to middle school students participating in a student-scientist project. J Sci Educ Technol. 1998;7:25–29. doi: 10.1023/A:1022580015026.

- 10. Fraleigh-Lohrfink KJ, Schneider MV, Whittington D, Feinberg AP. Increase in science research commitment in a didactic and laboratory-based program targeted to gifted minority high-school students. Roeper Rev. 2013;35(1):18–26. doi: 10.1080/02783193.2013.740599.

- 11. Haak DC, HilleRisLambers J, Pitre E, Freeman S. Increased structure and active learning reduce the achievement gap in introductory biology. Science. 2011;332:1213–1214. doi: 10.1126/science.1204820.

- 12. Hanauer DI, Jacobs-Sera D, Pedulla ML, Cresawn SG, Hendrix RW, Hatfull GF. Inquiry learning. Teaching scientific inquiry. Science. 2006;314:1880–1881. doi: 10.1126/science.1136796.

- 13. Handelsman J, et al. Education. Scientific teaching. Science. 2004;304:521–522. doi: 10.1126/science.1096022.

- 14. Hart C, Mulhall P, Berry A, Gunstone R. What is the purpose of this experiment? Or can students learn something from doing experiments? J Res Sci Teach. 2000;37:655–675. doi: 10.1002/1098-2736(200009)37:7<655::AID-TEA3>3.0.CO;2-E.

- 15. Iyengar R, et al. Inquiry learning. Integrating content detail and critical reasoning by peer review. Science. 2008;319:1189–1190. doi: 10.1126/science.1149875.

- 16. Knox KL, Moynihan JA, Markowitz DG. Evaluation of short-term impact of a high school summer science program on students’ perceived knowledge and skills. J Sci Educ Technol. 2003;12:471–478. doi: 10.1023/B:JOST.0000006306.97336.c5.

- 17. Likert R. A technique for the measurement of attitudes. Arch Psychol. 1932;140:1–55.

- 18. Linn MC, Lee HS, Tinker R, Husic F, Chiu JL. Teaching and assessing knowledge integration in science. Science. 2006;313:1049–1050. doi: 10.1126/science.1131408.

- 19. Lopatto D, et al. Undergraduate research. Genomics Education Partnership. Science. 2008;322:684–685. doi: 10.1126/science.1165351.

- 20. Mervis J. Meager evaluations make it hard to find out what works. Science. 2004;304:1583. doi: 10.1126/science.304.5677.1583.

- 21. Miranda RJ, Hermann RS. A critical analysis of faculty-developed urban K–12 science outreach programs. Persp Urban Educ. 2010;7:109–114.

- 22. Missouri Department of Elementary and Secondary Education. Student demographics. 2014 Dec 29.

- 23. Missouri Mathematics and Science Coalition. (n.d.) [Online.] http://dhe.mo.gov/stem/

- 24. Munn M, O’Neill Skinner P, Conne L, Horsma HG, Gregory P. The involvement of genome researchers in high school science education. Genome Res. 1999;9:597–607.

- 25. National Center for Science and Engineering Statistics. Women, minorities, and persons with disabilities in science and engineering. Special Report NSF. Arlington, VA: 2013. [accessed December 2014]. [Online.] http://www.nsf.gov/statistics/wmpd/

- 26. Olson K. Despite increases, women and minorities still underrepresented in undergraduate and graduate S & E education. Sociology Department, Faculty Publications; 1999. Paper 92. [Online.] http://digitalcommons.unl.edu/sociologyfacpub/92.

- 27. Patton MQ. Utilization-focused evaluation. 4th Edition. SAGE Publications; Thousand Oaks, CA: 2008.

- 28. Pender M, Marcotte DE, Domingo MRS, Maton KI. The STEM pipeline: the role of summer research experience in minority students’ graduate aspirations. Educ Policy Anal Arch. 2010;18(30):1–36.

- 29. Rahm J, Miller HC, Hartley L, Moore JC. The value of an emergent notion of authenticity: examples from two student/teacher-scientist partnership programs. J Res Sci Teach. 2003;40:737–756. doi: 10.1002/tea.10109.

- 30. Russell SH, Hancock MP, McCullough J. Benefits of undergraduate research experiences. Science. 2007;316:548–549. doi: 10.1126/science.1140384.

- 31. Scriven M. The methodology of evaluation. In: Stake RE, editor. Curriculum evaluation. Rand McNally, Chicago, IL: American Educational Research Association; 1967. (monograph series on evaluation, no. 1).

- 32. Spencer S, Hudzek G, Muir B. Developing a student-scientist partnership: Boreal Forest Watch. J Sci Educ Technol. 1998;7:32–43. doi: 10.1023/A:1022532131864.

- 33. The R Project for Statistical Computing. [accessed 30 July 2015]. (n.d.) [Online.] https://www.r-project.org/

- 34. The Teaching Center at Washington University in St. Louis. Planning and guiding in-class peer review. [accessed 8 October 2011]. (n.d.) [Online.] https://teachingcenter.wustl.edu/resources/incorporating-writing/planning-and-guiding-in-class-peerreview/

- 35. The Teaching Center at Washington University in St. Louis. Using peer review to help students improve their writing. [accessed 8 October 2011]. (n.d.) [Online.] https://teachingcenter.wustl.edu/resources/incorporating-writing/using-peer-review-to-help-students-improve-their-writing/

- 36. The Young Scientist Program. [accessed 28 February 2015]. (n.d.) [Online.] http://ysp.wustl.edu.

- 37. Tinker RF. Student scientist partnership: shrew maneuvers. J Sci Educ Technol. 1997;6:111–117. doi: 10.1023/A:1025613914410.

- 38. Tyler-Wood T, Ellison A, Lim O, Periathiruvadi S. Bringing Up Girls in Science (BUGS): the effectiveness of an after school environmental science program for increasing female students’ interest in science careers. J Sci Educ Technol. 2012;21:46–55. doi: 10.1007/s10956-011-9279-2.

- 39. Winkleby MA, Ned J, Ahn D, Koehler A, Fagliano K, Crump C. A controlled evaluation of a high school biomedical pipeline program: design and methods. J Sci Educ Technol. 2014;23:138–144. doi: 10.1007/s10956-013-9458-4.
