Abstract

Background

Longitudinal clinical investigations often rely on patient reports to screen for postdischarge adverse outcome events, yet few studies have examined the accuracy of such reports.

Methods and Results

Patients with acute myocardial infarction (MI) in the TRANSLATE‐ACS study were asked during structured interviews at 6 weeks, 6 months, and 12 months postdischarge to report any rehospitalizations. The accuracy of patient‐reported rehospitalizations within 1 year postdischarge was determined using claims‐based medical bill validation as the reference standard. The cumulative incidence of rehospitalizations was compared when identified by patient report versus medical bills. Patients were categorized by their accuracy in reporting events (accurate reporters, under‐reporters, or over‐reporters), and characteristics were compared between groups. Among 10 643 MI patients, 4565 (43%) reported 7734 rehospitalizations. The sensitivity and positive predictive value of patient‐reported rehospitalizations were low, at 67% and 59%, respectively. A higher cumulative incidence of rehospitalization was observed when identified by patient report versus medical bills (43% vs 37%; P<0.001). Overall, 18% of patients over‐reported and 10% under‐reported the number of hospitalizations. Compared with accurate reporters, under‐reporters were more likely to be older, female, African American, unemployed, or a non‐high‐school graduate, and had a greater prevalence of clinical comorbidities such as diabetes and past cardiovascular disease.

Conclusions

The accuracy of patient‐reported rehospitalizations was low with patients both under‐ and over‐reporting events. Longitudinal clinical research studies need additional mechanisms beyond patient report to accurately identify rehospitalization events.

Clinical Trial Registration

URL: https://clinicaltrials.gov. Unique identifier: NCT01088503.

Keywords: myocardial infarction, patient outcome assessment, validation studies

Subject Categories: Quality and Outcomes, Myocardial Infarction

Introduction

Clinical research studies often require accurate assessment of longitudinal patient outcomes. In some countries, linked universal patient health records serve as the source of such data.1, 2 In the United States, where such a resource is not available, both epidemiological and comparative studies often depend on patient self‐report.3, 4 Specifically, patients are asked to report events, such as a hospitalization, which can then often be validated by the collection of source documentation.5 However, the accuracy of patient reporting used in isolation has been questioned in clinical studies.6, 7, 8, 9

The TReatment with ADP receptor iNhibitorS: Longitudinal Assessment of Treatment Patterns and Events after Acute Coronary Syndrome (TRANSLATE‐ACS) study is a multicenter, observational study of more than 12 000 acute myocardial infarction (MI) patients across the United States, with longitudinal follow‐up conducted up to 15 months post‐MI. We used these data to examine the accuracy of patient‐reported rehospitalizations within 1 year post‐MI, using medical bill data for validation. In addition, the cumulative incidence of rehospitalizations was compared when identified by patient report versus medical bills. Finally, we characterized patients who accurately reported, under‐reported, or over‐reported rehospitalizations. We hypothesized that relying solely on patient‐reported events in clinical studies could lead to inaccurate assessment of outcomes, potentially compromising the validity of the research.

Methods

The TRANSLATE‐ACS study (ClinicalTrials.gov number: NCT01088503) is an observational study of 12 365 acute MI patients treated with percutaneous coronary intervention (PCI) and adenosine diphosphate receptor inhibitor therapy.10 Patients were enrolled during the index MI hospitalization at 1 of 233 participating US hospitals from April 2010 to October 2012. At enrollment, all patients provided consent for medical bill and record release, valid for the succeeding 15 months. After discharge, centralized telephone follow‐up interviews were conducted at 6 weeks and at 6, 12, and 15 months by trained personnel at the Duke Clinical Research Institute (Durham, NC).

In the current analysis, we studied all patients with completed 12‐month follow‐up. We excluded patients who died (n=370) or withdrew consent (n=404) within 12 months, as well as those missing both the 12‐ and 15‐month interviews (n=948). If a patient missed the 12‐month interview but was interviewed at 15 months, the 15‐month interview could still be used to assess 12‐month outcomes. We examined only outcomes occurring up to 12 months after the index hospitalization; events occurring between the 12‐ and 15‐month interviews were not included. After exclusions, the final analysis population included 10 643 patients.
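The three exclusions account exactly for the difference between the enrolled and analyzed populations. A minimal Python check using only the counts stated above (the variable names are illustrative):

```python
# Cohort flow for the 12-month analysis (counts taken from the Methods text).
ENROLLED = 12365
EXCLUSIONS = {
    "died within 12 months": 370,
    "withdrew consent within 12 months": 404,
    "missed both the 12- and 15-month interviews": 948,
}

analysis_population = ENROLLED - sum(EXCLUSIONS.values())

for reason, n in EXCLUSIONS.items():
    print(f"excluded, {reason}: {n}")
print(f"final analysis population: {analysis_population}")
```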

During each interview, patients were asked, “Since your hospital discharge/last interview on (date), have you gone back to a hospital as a patient for any reason?” If a patient answered “yes,” then they were instructed to describe when and where they were hospitalized. Patients were asked to categorize the reported rehospitalizations as a “planned admission,” “unplanned admission,” “observation stay,” “emergency room visit only,” “rehab visit,” “nursing home stay,” or “cannot remember”; the categories were mutually exclusive. Patient‐reported rehospitalizations included all reported visits except those reported as “emergency room visit only,” “rehab visit,” or “nursing home stay.” After October 6, 2011, the study interview was modified to also ask patients the main reason for each hospitalization; examples of reasons included “heart attack,” “cath procedure,” “bypass surgery,” “stroke,” or “bleeding.”
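The rule for which interview responses count as a patient‐reported rehospitalization amounts to a simple filter. This Python sketch assumes responses are stored as the category labels quoted above; the function name is hypothetical and not part of any study software:

```python
# Interview categories that, per the Methods, were NOT counted as rehospitalizations.
EXCLUDED_CATEGORIES = {"emergency room visit only", "rehab visit", "nursing home stay"}


def counts_as_rehospitalization(category: str) -> bool:
    """True if a patient-reported encounter category counts as a rehospitalization."""
    return category.strip().lower() not in EXCLUDED_CATEGORIES


responses = ["unplanned admission", "rehab visit", "observation stay",
             "cannot remember", "emergency room visit only", "planned admission"]
kept = [r for r in responses if counts_as_rehospitalization(r)]
print(kept)
```

Note that "cannot remember" responses are retained, consistent with their inclusion among patient‐reported rehospitalizations in Table 1.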

As described previously, hospital bills for all rehospitalizations were collected in TRANSLATE‐ACS to identify events that required collection of medical records for formal independent adjudication of study endpoints by the clinical events committee.10 Medical bills were in the form of the uniform bill‐04 (UB‐04) claims form, which is a common reporting format used by US hospitals. The UB‐04 contains diagnosis and procedure codes that can be used to identify major clinical events and procedures. Hospital bills for any rehospitalization were requested and centrally abstracted. If a patient‐reported hospitalization was not found within the reported dates, we broadened the search to any hospitalizations within 7 days before and after the reported dates in case patients did not exactly recall their hospitalization dates. If, despite broadening the dates, the hospitalization could still not be confirmed at the hospital reported by the patient, then surrounding hospitals located within 60 miles of the city and state of the patient's address were screened for any hospitalizations. Attention was specifically paid to hospitals similar in name to that reported by the patient or where the patient had previously been hospitalized (if applicable). Standard queries of all TRANSLATE‐ACS hospitals at 12 months postenrollment were conducted to screen for rehospitalizations under‐reported by the patient.
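The stepwise date‐matching search can be sketched as follows. This is an illustration only: the `Stay` record, the `match_report` function, and the reading of "within 7 days" as an overlap with the reported dates widened by 7 days on each side are assumptions, and the final 60‐mile geographic search is omitted because it would require geocoded hospital data.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class Stay:
    """Hypothetical record of one hospital stay, either patient reported or billed."""
    hospital_id: str
    admit: date
    discharge: date


def overlaps(report: Stay, bill: Stay, slack: timedelta) -> bool:
    """True if the billed stay overlaps the reported dates widened by `slack` on each side."""
    return (bill.admit <= report.discharge + slack
            and bill.discharge >= report.admit - slack)


def match_report(report: Stay, bills: List[Stay],
                 window: timedelta = timedelta(days=7)) -> Optional[Stay]:
    """Search bills from the reported hospital: exact dates first, then +/- `window`."""
    same_site = [b for b in bills if b.hospital_id == report.hospital_id]
    for slack in (timedelta(0), window):
        for bill in same_site:
            if overlaps(report, bill, slack):
                return bill
    # Not sketched: screening hospitals within 60 miles of the patient's address.
    return None


# Example: the patient recalls 10-12 March, but the bill shows 16-18 March.
report = Stay("H1", date(2011, 3, 10), date(2011, 3, 12))
bills = [Stay("H1", date(2011, 3, 16), date(2011, 3, 18)),
         Stay("H2", date(2011, 3, 10), date(2011, 3, 12))]
print(match_report(report, bills))
```

Searching the exact dates before the widened window means a correctly recalled stay is never displaced by a neighboring one.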

Hospital bills were further categorized as “inpatient status,” “observation status,” or “other.” Bills classified as “observation status” were emergency room or outpatient visits with at least an overnight hospitalization. “Other” bills included situations in which no UB‐04 bill could be obtained from the hospital, but medical records documented at least an overnight hospitalization. Reasons for inability to obtain a bill included hospitalization in foreign hospitals, hospitalization for which a per diem or health maintenance organization nonitemized bill was received, or the hospital's refusal to release a bill. If a medical bill could not be obtained, then the study team requested medical records directly to validate occurrence of a rehospitalization. Medical records were obtained for all hospitalizations with suspected major adverse cardiovascular events (MACEs), based on the screening review of the medical billing data. MACEs included death, recurrent MI, stroke, or unplanned coronary revascularization. These endpoints were independently validated by study physicians at the Duke Clinical Research Institute using prespecified criteria.10 Physicians validated an MI event if the hospital record included a diagnosis of ST‐elevation or non‐ST‐elevation MI, documentation of ischemic symptoms, and at least one of the following: (1) cardiac biomarkers with at least one value in the abnormal range for that laboratory (typically above the 99th percentile upper reference limit of a normal reference population); (2) electrocardiogram changes indicative of new ischemia (new ST‐T changes, new left bundle branch block, development of pathological Q‐waves [or equivalent findings for true posterior MI]); or (3) imaging evidence (eg, echocardiographic, nuclear) of new loss of viable myocardium or new regional wall motion abnormality.
Stroke was defined by a diagnosis of stroke or cerebrovascular accident on the hospitalization report, typically characterized as loss of neurological function caused by an ischemic or hemorrhagic event, with residual symptoms at least 24 hours after onset or leading to death. Unplanned coronary revascularization was defined as any unplanned revascularization of one or more coronary vessels occurring after the index revascularization event. Staged coronary revascularizations that were planned at the time of the index procedure and completed within 60 days were not considered an unplanned revascularization.
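The MI and staged‐revascularization rules reduce to simple Boolean logic. A hedged Python sketch follows: the `MIEvidence` fields are a hypothetical summary of a chart review, the physicians' actual adjudication involved clinical judgment beyond these flags, and treating "within 60 days" as inclusive is an assumption.

```python
from dataclasses import dataclass


@dataclass
class MIEvidence:
    """Hypothetical chart-review summary for one suspected recurrent MI."""
    mi_diagnosis: bool             # STEMI or NSTEMI diagnosis in the hospital record
    ischemic_symptoms: bool        # documented ischemic symptoms
    abnormal_biomarker: bool       # >=1 cardiac biomarker value in the lab's abnormal range
    new_ischemic_ecg: bool         # new ST-T changes, new LBBB, or new pathological Q waves
    new_imaging_abnormality: bool  # new loss of viable myocardium or new wall motion abnormality


def meets_mi_definition(e: MIEvidence) -> bool:
    """Diagnosis AND ischemic symptoms AND at least one of the three supporting criteria."""
    supporting = e.abnormal_biomarker or e.new_ischemic_ecg or e.new_imaging_abnormality
    return e.mi_diagnosis and e.ischemic_symptoms and supporting


def excluded_as_staged(planned_at_index: bool, days_after_index: int) -> bool:
    """Staged revascularization planned at the index procedure and completed within
    60 days (treated here as inclusive) is not counted as unplanned."""
    return planned_at_index and days_after_index <= 60
```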

Statistical Analysis

For the present analysis, we assessed the accuracy of patient‐reported rehospitalizations within the first 12 months after discharge from the index hospitalization, using medical bill validation as the reference standard. Accuracy at the patient level was assessed using sensitivity and positive predictive value (PPV). Sensitivity, the true positive rate of patient report, was defined as the percentage of medical bill–confirmed hospitalizations that were reported by patients. PPV, a measure of the precision of patient report, was defined as the ratio of medical bill–validated hospitalizations that had been reported by patients to all patient‐reported hospitalizations (whether or not confirmed by medical bills). Specificity and negative predictive value could not be calculated, because “true negatives” could not be determined (ie, there is no countable set of hospitalizations that neither occurred according to medical bills nor were reported by patients). In patients with all follow‐up interviews occurring after October 6, 2011 (implementation date for version 2 of the interview questionnaire, which additionally queried the patient on reason for hospitalization), we also assessed the accuracy of patient‐reported rehospitalizations for recurrent MI and stroke. The accuracy of patient report for coronary revascularization could not be determined, because only unplanned coronary revascularization was physician validated, whereas patients were asked to report any coronary revascularization procedure (planned or unplanned). For MI and stroke, sensitivity and PPV were calculated using independent physician event validation as the reference.
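Applied to the hospitalization counts reported in the Results (Table 1), these two definitions reduce to simple ratios. A minimal Python sketch (function names are illustrative):

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of reference-standard (bill-confirmed) events that patients reported."""
    return tp / (tp + fn)


def positive_predictive_value(tp: int, fp: int) -> float:
    """Share of patient-reported events that the reference standard confirmed."""
    return tp / (tp + fp)


# Hospitalization-level counts from Table 1.
reported_and_confirmed = 4579   # true positives
all_bill_confirmed = 6786       # true positives + false negatives
all_patient_reported = 7734     # true positives + false positives

sens = sensitivity(reported_and_confirmed, all_bill_confirmed - reported_and_confirmed)
ppv = positive_predictive_value(reported_and_confirmed,
                                all_patient_reported - reported_and_confirmed)
print(f"sensitivity = {sens:.1%}, PPV = {ppv:.1%}")
```

Specificity and negative predictive value are deliberately absent: without a countable set of true negatives, their denominators are undefined.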

We then compared the cumulative incidence rates of rehospitalizations when identified by patient report versus medical bills. Among patients completing version 2 of the interview questionnaire, which asked the patient to report a reason for rehospitalization, we compared the cumulative incidence of recurrent MI as reported by the patient versus the “true” cumulative incidence of recurrent MI ascertained by independent physician validation of medical records and triggered by all of the follow‐up mechanisms employed in TRANSLATE‐ACS. Group differences were tested using the McNemar test.
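The McNemar test compares two methods applied to the same patients and uses only the discordant pairs. The sketch below is a generic Python implementation; the discordant counts in the example are hypothetical, because patient‐level cross‐classifications are not reported here, and whether the study applied a continuity correction is not stated.

```python
import math
from typing import Tuple


def mcnemar(b: int, c: int, continuity: bool = False) -> Tuple[float, float]:
    """McNemar chi-square test for paired binary classifications.

    b: patients positive by method 1 only (e.g., patient report only)
    c: patients positive by method 2 only (e.g., medical bills only)
    Concordant patients do not enter the statistic. Returns (chi2, p),
    with p from the chi-square distribution with 1 degree of freedom.
    """
    if b + c == 0:
        raise ValueError("no discordant pairs")
    diff = abs(b - c)
    if continuity:
        diff = max(diff - 1, 0)  # Edwards continuity correction
    chi2 = diff ** 2 / (b + c)
    p = math.erfc(math.sqrt(chi2 / 2))  # P(chi-square with 1 df > chi2)
    return chi2, p


# Hypothetical discordant counts, for illustration only.
chi2, p = mcnemar(40, 20)
print(f"chi2 = {chi2:.2f}, p = {p:.4f}")
```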

Characteristics of all patients who accurately, over‐, or under‐reported the number of rehospitalizations were examined. Reporter groups were categorized by the difference between the overall number of reported hospitalizations and the number of medical bill–validated hospitalizations over the 1‐year period of interest (=0 for accurate reporters, >0 for over‐reporters, and <0 for under‐reporters, respectively). For each of the 3 groups (accurate, over‐, or under‐reporters), summary statistics were calculated as counts and percentages for categorical data, and medians and interquartile ranges (IQRs) for continuous data. Pair‐wise comparisons were made with accurate reporters as the reference. Chi‐square tests and Wilcoxon rank‐sum tests were used to compare categorical and continuous variables, respectively. We also reported the cumulative incidence of validated MACEs stratified by reporter groups, using log‐rank tests to compare between groups. Odds ratios (ORs) with 95% CIs were calculated to describe associations between patient‐reporter groups and MACE; these descriptive analyses did not adjust for differences in patient characteristics between reporter groups. A sensitivity analysis was performed only in patients with ≥1 medical bill‐validated hospitalization, again stratified by the predefined patient reporter groups, to further assess the cumulative incidence of MACE.
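The reporter‐group rule compares counts per patient, not individual stays. A minimal Python sketch with hypothetical patient data:

```python
from collections import Counter


def reporter_group(n_reported: int, n_confirmed: int) -> str:
    """Classify a patient by (patient-reported minus bill-confirmed) hospitalizations."""
    margin = n_reported - n_confirmed
    if margin == 0:
        return "accurate"
    return "over" if margin > 0 else "under"


# Hypothetical per-patient counts over 1 year: (patient-reported, bill-confirmed).
patients = [(0, 0), (2, 1), (1, 3), (0, 0), (1, 1)]
groups = Counter(reporter_group(reported, confirmed) for reported, confirmed in patients)
print(groups)
```

Because the rule uses counts only, a patient who reports one stay that cannot be confirmed while having a different bill‐confirmed stay is classified as an accurate reporter; that kind of mismatch is captured instead by the hospitalization‐level sensitivity and PPV.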

We used SAS software (version 9.3; SAS Institute Inc., Cary, NC) for all analyses. The institutional review board of the Duke University Health System approved the study.

Results

Agreement Between Patient Report and Bill Validation

Among 10 643 MI patients, 4565 (43%) reported a total of 7734 rehospitalizations within the 1 year postdischarge and 6078 (57%) reported no rehospitalization. Table 1 shows the timing of patient‐reported rehospitalizations stratified by type. Patients reported 2085 rehospitalizations between discharge from the index hospitalization and the 6‐week interview, 2776 rehospitalizations from the 6‐week to 6‐month interview, and 2873 rehospitalizations from the 6‐ to 12‐month interview. Unplanned hospitalizations (53%) were the most common type of patient‐reported hospitalization, followed by scheduled or planned admissions (30%). Patients reported observation stays in 15% of rehospitalizations.

Table 1.

Patient‐Reported and Medical Bill–Confirmed Rehospitalizations, Stratified by Type and Follow‐up Interval

| Hospitalization Type | 6 Weeks | 6 Months | 12 Months | Total |
|---|---|---|---|---|
| Patient reported | | | | |
| Unplanned admission | 1150 | 1437 | 1489 | 4076 (53%) |
| Planned admission | 558 | 886 | 878 | 2322 (30%) |
| Observation stay | 346 | 387 | 440 | 1173 (15%) |
| Cannot remember | 31 | 66 | 66 | 163 (2%) |
| Total | 2085 | 2776 | 2873 | 7734 |
| Medical bill | | | | |
| Inpatient | 1022 | 1676 | 1677 | 4375 (64%) |
| Observationᵃ | 605 | 751 | 737 | 2093 (31%) |
| Otherᵇ | 80 | 116 | 122 | 318 (5%) |
| Total | 1707 | 2543 | 2536 | 6786 |
| Patient‐reported hospitalizations confirmed by medical bills | 1304 (63%) | 1654 (60%) | 1621 (56%) | 4579 (59%) |
| Confirmed medical bills not patient‐reported | 403 (24%) | 889 (35%) | 915 (36%) | 2207 (33%) |

ᵃ Emergency room or outpatient bill involving overnight hospitalization.

ᵇ No medical bill was obtained, but medical record documentation showed an overnight hospitalization; medical bill was not obtained because either hospital refused release of bill, hospitalization occurred in a foreign hospital, or only a per diem or health maintenance organization nonitemized bill was received.

By comparison, a total of 6786 rehospitalizations among 5015 patients were identified from medical bills in the 12 months postdischarge: 1707 between the index hospitalization and the 6‐week interview, 2543 from the 6‐week to 6‐month interview, and 2536 from the 6‐ to 12‐month interview. As shown in Table 1, inpatient hospitalizations were the most common type of bill‐validated rehospitalization (64%). In contrast to patient report, observation stays accounted for 31% of all bill‐validated rehospitalizations.
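The type‐specific percentages in Table 1 follow directly from its column totals. A quick Python check (the dictionary keys are shorthand labels):

```python
def type_shares(counts: dict) -> dict:
    """Percentage of the column total contributed by each hospitalization type."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}


# Column totals from Table 1.
patient_reported = {"unplanned": 4076, "planned": 2322, "observation": 1173, "cannot remember": 163}
bill_confirmed = {"inpatient": 4375, "observation": 2093, "other": 318}

print(sum(patient_reported.values()), type_shares(patient_reported))
print(sum(bill_confirmed.values()), type_shares(bill_confirmed))
```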

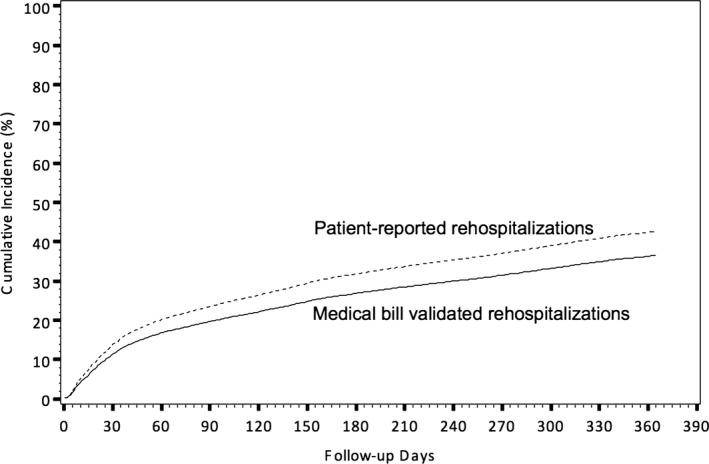

Among the total 7734 patient‐reported rehospitalizations, only 4579 were confirmed by medical billing data; the PPV of patient‐reported rehospitalization was 59%. Among the total 6786 medical bills collected, 33% (n=2207) were not reported by patients, yielding a sensitivity of 67%. Figure 1 shows that the cumulative incidence rates of rehospitalizations by 1 year postdischarge are higher when identified by patient report than when identified by medical bills (43% vs 37%; P<0.001).

Figure 1.

Cumulative incidence of rehospitalizations, stratified by patient‐reported events vs medical bills.

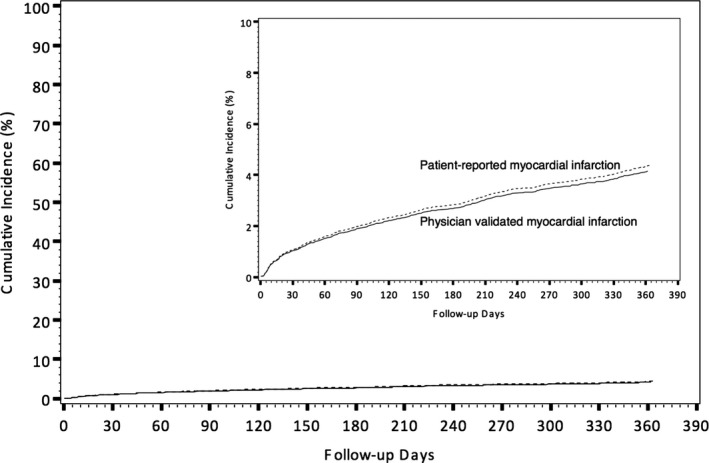

In the subpopulation of 7067 patients (66%) who were asked during the interview the reason for their hospitalization, agreement between patient‐reported and physician‐validated MI and stroke is shown in Table 2. The sensitivity and PPV of patient‐reported MI were both 29%. Similarly, the sensitivity and PPV of patient‐reported stroke were low, at 35% and 25%, respectively. Nevertheless, the incidence of rehospitalization for MI within 1 year postdischarge as reported by the patient was not significantly different from the incidence of recurrent MI as validated by physician review of medical records (4.4% vs 4.2%; P=0.46; Figure 2).

Table 2.

Comparison Between Patient‐Reported Rehospitalization for MI and Stroke and Physician‐Validated Recurrent MI and Stroke

| Patient Report | Physician‐Validated MI: Yes | Physician‐Validated MI: No |
|---|---|---|
| Patient‐reported MI: yes | 103 | 257 |
| Patient‐reported MI: no | 254 | N/A |
| Sensitivity | 29% | |
| Positive predictive value | 29% | |

| Patient Report | Physician‐Validated Stroke: Yes | Physician‐Validated Stroke: No |
|---|---|---|
| Patient‐reported stroke: yes | 19 | 57 |
| Patient‐reported stroke: no | 36 | N/A |
| Sensitivity | 35% | |
| Positive predictive value | 25% | |

MI indicates myocardial infarction; N/A, not applicable.

Figure 2.

Cumulative incidence of recurrent MI, stratified by patient‐reported MI only vs medical bill triggered, physician validated MI. MI indicates myocardial infarction.

Reporter Characteristics

Among all patients, 72% (n=7720) accurately reported their number of rehospitalizations within the next year, 18% (n=1911) over‐reported by a mean +1.3±0.7 hospitalizations with some patients over‐reporting by 7 hospitalizations, and 10% (n=1012) under‐reported by a mean −1.5±1.2 hospitalizations, with some patients under‐reporting as many as 14 hospitalizations.

Patient characteristics, stratified by accuracy in reporting rehospitalizations, are shown in Table 3. Compared with accurate reporters, under‐reporters were more likely to be older (median age, 62 vs 60 years), female (34.6% vs 26.1%), or African American (15.0% vs 7.5%; P<0.001 for all). Under‐reporters were also less likely than accurate reporters to be employed full‐ or part‐time (32.2% vs 53.8%) or to be high school graduates (83.5% vs 89.9%; P<0.001 for both). Among the 3 groups, under‐reporters had the greatest prevalence of clinical comorbidities, such as diabetes, hypertension, ischemic heart disease, cerebrovascular disease, and heart failure. Compared with accurate reporters, under‐reporters were also more likely to have 4 or more comorbid conditions (P<0.001).
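The chi‐square comparisons in Table 3 can be illustrated for female sex. Individual‐level data are not available here, so this Python sketch back‐calculates approximate counts from the published percentages and group sizes; the resulting statistic is illustrative and need not match the study's exact value.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-square without continuity correction for the table [[a, b], [c, d]].

    Returns (chi2, p); p uses the chi-square distribution with 1 degree of freedom.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return chi2, math.erfc(math.sqrt(chi2 / 2))


# Approximate counts back-calculated from Table 3 (not the study's raw data).
n_under, n_accurate = 1012, 7720
female_under = round(0.346 * n_under)        # about 350 women
female_accurate = round(0.261 * n_accurate)  # about 2015 women

chi2, p = chi_square_2x2(female_under, n_under - female_under,
                         female_accurate, n_accurate - female_accurate)
print(f"chi2 = {chi2:.1f}, p = {p:.1e}")
```

Continuous variables such as age were compared with the Wilcoxon rank‐sum test, which requires individual‐level values and is therefore not sketched here.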

Table 3.

Patient Characteristics Stratified by Patient Accuracy in Reporting Hospitalizations

| Characteristic | Under‐reporters (n=1012) | P Valueᵃ | Accurate Reporters (n=7720) | Over‐reporters (n=1911) | P Valueᵇ |
|---|---|---|---|---|---|
| Demographics | | | | | |
| Age, y | 62 (54, 71) | <0.001 | 60 (52, 68) | 60 (52, 68) | 0.69 |
| Female sex | 34.6% | <0.001 | 26.1% | 30.2% | <0.001 |
| Race | | | | | |
| White | 83.2% | <0.001 | 89.6% | 89.5% | 0.87 |
| African American | 15.0% | <0.001 | 7.5% | 8.7% | 0.09 |
| Hispanic ethnicity | 2.9% | 0.43 | 3.3% | 3.5% | 0.7 |
| High school graduate | 83.5% | <0.001 | 89.9% | 88.6% | 0.09 |
| Full‐/part‐time employed | 32.2% | <0.001 | 53.8% | 46.8% | <0.001 |
| Health insurance | | | | | |
| Private | 56.8% | <0.001 | 67.2% | 63.3% | <0.001 |
| Medicare | 47.6% | <0.001 | 31.6% | 35.1% | 0.002 |
| Medicaid | 11.7% | <0.001 | 4.3% | 6.8% | 0.004 |
| Military | 3.9% | <0.001 | 3.4% | 4.2% | <0.001 |
| State‐specific plan | 1.9% | 0.29 | 1.4% | 1.6% | 0.08 |
| No insurance | 12.2% | 0.17 | 13.7% | 14.0% | 0.55 |
| Clinical characteristics | | | | | |
| Past MI | 29.3% | <0.001 | 17.4% | 20.4% | 0.73 |
| Past PCI | 29.5% | <0.001 | 19.9% | 21.4% | 0.003 |
| Past CABG | 16.6% | <0.001 | 7.8% | 10.0% | 0.15 |
| Past stroke or TIA | 9.0% | <0.001 | 4.6% | 6.1% | 0.01 |
| PAD | 13.4% | <0.001 | 4.8% | 6.5% | 0.01 |
| Heart failure | 13.4% | <0.001 | 3.9% | 5.9% | 0.003 |
| AF/flutter | 7.5% | <0.001 | 3.9% | 5.2% | <0.001 |
| Diabetes | 38.4% | <0.001 | 23.4% | 26.3% | 0.01 |
| Hypertension | 78.9% | <0.001 | 65.1% | 69.2% | <0.001 |
| Dyslipidemia | 70.8% | <0.001 | 65.7% | 65.8% | 0.92 |
| Dialysis | 3.8% | <0.001 | 0.6% | 1.0% | 0.04 |
| Current/recent smoker | 38.6% | 0.14 | 36.2% | 35.6% | 0.59 |
| Chronic lung disease | 16.9% | <0.001 | 7.9% | 10.4% | <0.001 |
| Recent GI/GU bleeding | 1.7% | 0.10 | 1.1% | 0.6% | 0.07 |
| Number of comorbidities | | <0.001 | | | 0.005 |
| 0 | 3.8% | | 8.8% | 7.7% | |
| 1 | 12.4% | | 20.3% | 18.5% | |
| 2 | 17.4% | | 24.0% | 22.3% | |
| 3 | 17.1% | | 19.4% | 20.1% | |
| 4 | 14.0% | | 12.7% | 13.6% | |
| ≥5 | 35.3% | | 14.8% | 17.8% | |

Values are median (25th, 75th percentile) or percentage. AF indicates atrial fibrillation; CABG, coronary artery bypass grafting; GI, gastrointestinal; GU, genitourinary; MI, myocardial infarction; PAD, peripheral arterial disease; PCI, percutaneous coronary intervention; TIA, transient ischemic attack.

ᵃ For the comparison between accurate reporters and under‐reporters.

ᵇ For the comparison between accurate reporters and over‐reporters.

When compared with accurate reporters, over‐reporters were also more likely to be female and unemployed (P<0.001, both); nevertheless, there were no significant differences in race, educational level, and uninsured status between these 2 groups. Compared to accurate reporters, over‐reporters were also more likely to have clinical comorbidities, such as diabetes, hypertension, ischemic heart disease, cerebrovascular disease, heart failure, and a greater number of comorbid conditions, although the magnitudes of difference were smaller than those between under‐ and accurate reporters.

The cumulative incidence of rehospitalizations and MACEs, stratified by reporter groups, is shown in Table 4. Accurate reporters had a mean (SD) of 0.4±0.7 rehospitalizations in the 12 months postdischarge. Compared to accurate reporters, both over‐ and under‐reporters were more likely to be rehospitalized; over‐reporters had a mean of 0.7±1.1 rehospitalizations, whereas under‐reporters had a mean of 2.6±2.0 rehospitalizations. Among under‐reporters, 84% of patient‐reported hospitalizations were confirmed by medical bills, whereas 56% of medical bill–identified hospitalizations were not patient reported. Among over‐reporters, 30% of patient‐reported hospitalizations were confirmed by medical bills, whereas 12% of bill‐identified hospitalizations were not patient reported.

Table 4.

Cumulative Incidence of Rehospitalization and Adjudicated MACE Stratified by Patient Accuracy in Reporting Hospitalizations

| Outcome | Under‐reporters (n=1012) | P Valueᵃ | Accurate Reporters (n=7720) | Over‐reporters (n=1911) | P Valueᵇ |
|---|---|---|---|---|---|
| Confirmed rehospitalizations | | | | | |
| Median (IQR) | 2 (1–3) | — | 0 (0–1) | 0 (0–1) | — |
| Mean (SD) | 2.6±2.0 | <0.001 | 0.4±0.7 | 0.7±1.1 | <0.001 |
| 5th, 95th percentile | 1, 6 | — | 0, 2 | 0, 3 | — |
| Margin of over‐/under‐report, mean (SD) | −1.5±1.2 | — | — | +1.3±0.7 | — |
| MACE, n (%) | 458 (45.3%) | <0.001 | 812 (10.5%) | 337 (17.6%) | <0.001 |
| MI | 160 (5.8%) | <0.001 | 203 (2.6%) | 71 (3.7%) | 0.01 |
| Stroke | 39 (3.9%) | <0.001 | 26 (0.3%) | 7 (0.4%) | 0.84 |
| Unplanned coronary revascularization | 390 (38.5%) | <0.001 | 725 (9.4%) | 314 (16.4%) | <0.001 |

IQR indicates interquartile range; MACE, major adverse cardiovascular event; MI, myocardial infarction; SD, standard deviation.

ᵃ For the comparison between accurate reporters and under‐reporters.

ᵇ For the comparison between accurate reporters and over‐reporters.

Observed MACE rates at 1 year post‐MI were 45.3% among under‐reporters versus 10.5% among accurate reporters (OR, 7.03; 95% CI, 6.01–8.12). Frequencies of all individual MACE components (MI, stroke, or unplanned coronary revascularization) were significantly higher among under‐reporters as compared to accurate reporters (P<0.001, all). For over‐reporters, the observed MACE rate by 1 year was 17.6%, with an OR of 1.82 (95% CI, 1.59–2.09) when compared to accurate reporters. Higher rates of confirmed MI and unplanned revascularization were noted in these patients compared to accurate reporters.
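The unadjusted odds ratios can be recomputed from the Table 4 counts. This Python sketch uses a Wald (Woolf) interval on the log‐odds scale; the paper does not state its interval method, so bounds may differ slightly from the published values.

```python
import math


def odds_ratio_wald(events_1: int, n_1: int, events_0: int, n_0: int, z: float = 1.96):
    """Unadjusted odds ratio (group 1 vs reference group 0) with a Wald CI on the log scale."""
    a, b = events_1, n_1 - events_1  # group 1: with / without the event
    c, d = events_0, n_0 - events_0  # reference group: with / without the event
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(or_) - z * se_log)
    upper = math.exp(math.log(or_) + z * se_log)
    return or_, lower, upper


# MACE counts from Table 4; accurate reporters (812 of 7720) are the reference.
under = odds_ratio_wald(458, 1012, 812, 7720)
over = odds_ratio_wald(337, 1911, 812, 7720)
print("under vs accurate: OR %.2f (95%% CI %.2f to %.2f)" % under)
print("over vs accurate:  OR %.2f (95%% CI %.2f to %.2f)" % over)
```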

Because 73% of accurate reporters (n=5628) were never rehospitalized, we repeated the above analysis among the 5015 patients with at least one bill‐confirmed rehospitalization. Among this group, 2092 (42%) were accurate reporters, 1911 (38%) were over‐reporters, and 1012 (20%) were under‐reporters. Under‐reporters remained at higher risk of observed MACEs compared to accurate reporters (45.3% vs 38.8%; OR, 1.30; 95% CI, 1.12–1.52), but over‐reporters were at lower risk of MACE compared to accurate reporters (17.6% vs 38.8%; OR, 0.34; 95% CI, 0.29–0.39).
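The restricted analysis can be checked the same way. This Python sketch assumes, as the reported 38.8% (812/2092) implies, that all 812 MACEs among accurate reporters occurred in the 2092 accurate reporters with a bill‐confirmed rehospitalization:

```python
def odds_ratio(events_1: int, n_1: int, events_0: int, n_0: int) -> float:
    """Unadjusted odds ratio of group 1 versus reference group 0."""
    return (events_1 * (n_0 - events_0)) / ((n_1 - events_1) * events_0)


# Patients with >=1 bill-confirmed rehospitalization, by reporter group (Results text).
subgroup = {"accurate": 2092, "over": 1911, "under": 1012}
total = sum(subgroup.values())
shares = {group: round(100 * n / total) for group, n in subgroup.items()}

# Assumption: all 812 MACEs among accurate reporters fall in these 2092 patients.
or_under = odds_ratio(458, 1012, 812, 2092)
or_over = odds_ratio(337, 1911, 812, 2092)
print(total, shares, round(or_under, 2), round(or_over, 2))
```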

Discussion

This analysis showed: (1) rehospitalizations are common within the first year after an acute MI; (2) patients often inaccurately report rehospitalizations, with both over‐ and under‐reporting of events, when compared to a reference of medical bills; (3) relying on patient report as a means of identifying events would have led to higher rates of rehospitalizations, but not recurrent MI; and (4) significant differences in demographic, clinical characteristics, and risk for future MACEs exist between patients who are over‐ or under‐reporters versus accurate reporters. These findings underscore the challenge of relying on patient‐reported follow‐up: follow‐up in which patient report alone triggers event investigation may lead to major inaccuracies in outcomes assessment.

Patient self‐report forms the foundation for follow‐up in most clinical research studies involving longitudinal follow‐up. In major epidemiological studies, patient self‐report, without further adjudication, has been relied upon for data accrual.3, 4 In clinical trials, patient reporting of an event, such as a hospitalization, usually triggers an attempt to obtain source documentation in order to confirm the event.5 This process of verifying a patient‐reported event is costly and typically requires intensive study resources.11, 12 Moreover, it is unclear whether patient report of an event always leads to definitive documentation of an actual event or whether important events are missed because of inaccurate patient recall.6, 7, 8, 9 A major challenge of pragmatic research trial design is to navigate the tension between the uncertain accuracy of patient self‐report and the need to efficiently utilize study resources and lower trial costs.13, 14, 15 Therefore, the practice of using patient report in clinical studies warrants critical appraisal. In addition, the lack of a single‐payer system or a universal health record in the United States impedes the ability to centrally monitor longitudinal events. Finally, rehospitalization rates remain high in routine community practice, further emphasizing the continued need for accurate accounting of events.16

Our results show that patients often inaccurately report rehospitalizations, adding to past observations of this issue. Klungel et al. showed variable agreement in the identification of cardiovascular disease and risk factors between patient self‐report and medical record review in a population from The Netherlands.7 In contrast, an analysis of the Coronary Artery Risk Development in Young Adults (CARDIA) study demonstrated concordance between patient self‐report and medical records for the reason for hospitalization, although black race and intravenous drug abuse were associated with greater discordance.9 Importantly, some key differences between the CARDIA and TRANSLATE‐ACS study populations must be noted. TRANSLATE‐ACS enrolled an older cohort of community‐treated MI patients who are at substantially higher risk of subsequent cardiovascular and noncardiovascular adverse events (in contrast, the entry criterion in the CARDIA study was age 18–30 years).17 Whereas black race was associated with greater discordance in CARDIA, we found race and other sociodemographic characteristics to be associated more with under‐ than with over‐reporting. Overall, the sensitivity and PPV of patient self‐report, as compared with medical bills, were markedly lower than expected in our analysis. The relatively poor performance of patient self‐report was especially pronounced for rehospitalizations for important clinical diagnoses in this population, such as recurrent MI.

Additionally, we found that ≈10% of patients under‐reported events, whereas almost twice as many (18%) over‐reported. The type of error in patient reporting (under‐ versus over‐reporting) has specific consequences for studies relying on patient report. Under‐reporting may require additional efforts, resources, and costs to ensure that important events are not missed. In contrast, over‐reporting may ultimately lead to unnecessary expenditure of trial resources. Under‐reporters had a greater prevalence of clinical comorbidities and poorer health status than accurate reporters, which may have contributed to recall bias. Under‐reporters also had lower socioeconomic status, which may be predictive of low health literacy.18 Importantly, under‐reporters were more likely to be rehospitalized than accurate reporters and had a higher risk of cardiovascular adverse events, which are often the endpoints that cannot be missed in studies of the post‐MI population.

Most sociodemographic characteristics, such as race, educational level, and uninsured status, did not differ significantly between over‐reporters and accurate reporters. However, over‐reporters were more likely to be female, less likely to be employed, and had a greater prevalence of clinical comorbidities, such as diabetes, past coronary artery disease, and heart failure, than accurate reporters. Many of these differences were also present, and even more pronounced, when comparing under‐reporters with accurate reporters. Nevertheless, outcomes of over‐reporters were more similar to those of accurate reporters, although a modestly higher MACE rate was observed among over‐reporters.

Our results also suggest that relying solely on patient report, rather than also using medical bills, would have led to an overestimation of the number of rehospitalizations, consistent with the greater number of over‐reporters than under‐reporters. Interestingly, though, we found no difference in the observed rates of MI between patient report and medical bill–triggered, physician‐validated MI. Although patients often inaccurately recalled recurrent MI events, the discordance did not significantly change the event curves for recurrent MI. This finding may appear to contrast with our other results showing poor agreement between patient report and medical bills. However, the MIs reported by patients were not necessarily the same MIs that were eventually validated by physicians. The similar MI event‐rate curves for these 2 methods may therefore give a misleading appearance of patient‐report reliability, masking the underlying lack of event‐level agreement.
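The Table 2 counts show how aggregate totals can agree while individual events do not. A short Python sketch (variable names are illustrative):

```python
# Recurrent MI counts from Table 2 (version 2 questionnaire subpopulation).
both = 103            # patient reported AND physician validated
patient_only = 257    # patient reported, not validated
validated_only = 254  # validated, not reported by the patient

reported_total = both + patient_only       # events a patient-report design would count
validated_total = both + validated_only    # events found by bill-triggered validation
all_distinct = both + patient_only + validated_only

print(f"reported: {reported_total}, validated: {validated_total}")
print(f"validated MIs also reported: {both / validated_total:.0%}")
print(f"events identified by both methods: {both / all_distinct:.0%} of {all_distinct}")
```

Patients reported 360 MIs and physicians validated 357, nearly the same total, yet only 103 events appear in both sets.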

Taken together, our findings have implications for the practical deployment of study resources to ascertain patient outcomes comprehensively and accurately when a universal medical record is not available. Given the observed differences in sociodemographic and clinical characteristics between under‐ and accurate reporters, risk prediction methods may be used to identify patients with a high likelihood of under‐reporting. Conceptually, practical study designs might prioritize building “safety nets” around these patients to ensure that important clinical events are not missed; examples include planned queries of electronic health records or health care providers for additional event screening. In contrast, over‐reporters may be more difficult to distinguish from accurate reporters, and current data do not clearly suggest markers of over‐reporting that could be targeted for more‐streamlined data collection. Patient input into trial design may also be warranted, both to facilitate effective and efficient data collection and to understand how patients process adverse events. Finally, given the limitations of patient self‐report described in this study, a universal health care record would be of high value in designing future practical research studies. Ongoing efforts to build clinical data research networks that pool cohorts and data from multiple sources may ultimately help achieve this goal.19

Our analysis has several important limitations that may contribute to over‐ or under‐reporting. First, patients and hospitals may define rehospitalization differently. Patients may have misunderstood or incorrectly recalled the type of their health care encounter; for example, a patient may report an urgent care or emergency room visit lasting less than 24 hours as a hospitalization, or consider a staged PCI procedure to be unplanned. Second, although the TRANSLATE‐ACS consent forms informed patients that data were being collected for research purposes only, uninsured patients may have been reluctant to report events because of concerns about being billed for their hospitalization. However, hospitals generate a medical bill regardless of insurance status, and all enrolling hospitals were screened for rehospitalizations that the patient may not have reported. Third, some medical bills could not be collected from hospitals despite multiple attempts; in these cases, medical records were used to ascertain rehospitalization and identify clinical endpoints of interest. Fourth, with hospital penalties for early readmission,20 hospitals may differ in how they classify inpatient admissions versus observation visits. To address this potential source of misclassification, we conservatively counted all inpatient admissions and all encounters involving at least an overnight stay as rehospitalizations. Fifth, medical bill codes may have been inaccurate or may have included events from past hospitalizations; however, study endpoints were validated by study physicians through independent medical record review. Finally, we cannot exclude the possibility that some rehospitalizations were missed despite extensive screening of enrolling and local hospitals. In that case, some patients may have been misclassified as over‐reporters, and the actual number of hospitalizations may have been underestimated.
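The conservative counting rule for rehospitalizations can be sketched as follows. This is a minimal illustration, assuming a hypothetical `Encounter` record and treating a discharge on a later calendar date than the admission as a proxy for "at least an overnight stay"; the study's actual operational definition may differ.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Encounter:
    # Hypothetical encounter record; field names are illustrative only.
    encounter_type: str  # e.g. "inpatient", "observation", "emergency"
    admit: datetime
    discharge: datetime


def counts_as_rehospitalization(enc: Encounter) -> bool:
    """Count any inpatient admission, plus any other encounter whose
    discharge falls on a later calendar date than the admission
    (assumed here as a proxy for 'at least an overnight stay')."""
    if enc.encounter_type == "inpatient":
        return True
    return enc.discharge.date() > enc.admit.date()


# Hypothetical encounters (not study data)
encounters = [
    Encounter("inpatient", datetime(2011, 3, 1, 14), datetime(2011, 3, 1, 20)),
    Encounter("observation", datetime(2011, 4, 2, 22), datetime(2011, 4, 3, 9)),
    Encounter("emergency", datetime(2011, 5, 7, 8), datetime(2011, 5, 7, 15)),
]
n = sum(counts_as_rehospitalization(e) for e in encounters)
print(n)
```

Here the same‐day inpatient admission and the overnight observation stay are counted, while the same‐day emergency visit is not, which is how a classification‐neutral rule sidesteps interhospital differences in labeling inpatient versus observation status.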

In conclusion, in a large, community‐based study, the accuracy of patient‐reported rehospitalizations was low compared with medical bill validation, with patients both under‐ and over‐reporting events. Relying on patient report alone would have overestimated rehospitalization rates. Certain patient characteristics may identify patients who are more likely to report rehospitalizations erroneously, and methods to best identify these patients warrant further investigation. These findings have important implications for the design of future practical clinical studies.

Sources of Funding

The TRANSLATE‐ACS study (NCT01088503) was sponsored by Daiichi Sankyo, Inc and Lilly USA. The Duke Clinical Research Institute is the coordinating center for this study, which represents a collaborative effort with the American College of Cardiology.

Disclosures

Dr Krishnamoorthy reports research funding from Novartis Pharmaceutical Corporation and travel support from Medtronic, Inc. Dr Peterson reports research funding from the American College of Cardiology, American Heart Association, Eli Lilly & Company, Janssen Pharmaceuticals, and Society of Thoracic Surgeons and consulting for Merck & Co, Boehringer Ingelheim, Genentech, Janssen Pharmaceuticals, and Sanofi‐Aventis. Dr Anstrom reports grants from NHLBI. Dr Effron reports employment with Eli Lilly and Company and shareholding in Lilly USA. Dr Zettler reports employment with Eli Lilly and Company. Dr Baker is a current employee of Daiichi Sankyo, Inc. Dr McCollam reports employment with Eli Lilly and Company. Dr Mark reports grants from NIH, Lilly, AstraZeneca, Bristol‐Myers Squibb, and St Jude; grants and personal fees from Medtronic and Gilead; and personal fees from Janssen Pharmaceuticals and from Milestone Pharmaceuticals. Dr Wang reports research grants to the Duke Clinical Research Institute from AstraZeneca, Boston Scientific, Daiichi Sankyo, Eli Lilly, Gilead Sciences, GlaxoSmithKline, and Regeneron Pharmaceuticals; honorarium for educational activities from AstraZeneca; and consulting for Eli Lilly and AstraZeneca.

Acknowledgments

Erin Hanley, MS, Duke University, provided editorial assistance and prepared the manuscript. Ms. Hanley did not receive compensation for her assistance apart from her employment at the institution where the analysis was conducted.

(J Am Heart Assoc. 2016;5:e002695 doi: 10.1161/JAHA.115.002695)

References

- 1. Schoen C, Osborn R, Squires D, Doty M, Rasmussen P, Pierson R, Applebaum S. A survey of primary care doctors in ten countries shows progress in use of health information technology, less in other areas. Health Aff (Millwood). 2012;31:2805–2816.

- 2. Tu K, Mitiku TF, Ivers NM, Guo H, Lu H, Jaakkimainen L, Kavanagh DG, Lee DS, Tu JV. Evaluation of Electronic Medical Record Administrative data Linked Database (EMRALD). Am J Manag Care. 2014;20:e15–e21.

- 3. Beckett M, Weinstein M, Goldman N, Yu‐Hsuan L. Do health interview surveys yield reliable data on chronic illness among older respondents? Am J Epidemiol. 2000;151:315–323.

- 4. Walker MK, Whincup PH, Shaper AG, Lennon LT, Thomson AG. Validation of patient recall of doctor‐diagnosed heart attack and stroke: a postal questionnaire and record review comparison. Am J Epidemiol. 1998;148:355–361.

- 5. Mahaffey KW, Harrington RA, Akkerhuis M, Kleiman NS, Berdan LG, Crenshaw BS, Tardiff BE, Granger CB, DeJong I, Bhapkar M, Widimsky P, Corbalon R, Lee KL, Deckers JW, Simoons ML, Topol EJ, Califf RM; For the PURSUIT Investigators. Systematic adjudication of myocardial infarction end‐points in an international clinical trial. Curr Control Trials Cardiovasc Med. 2001;2:180–186.

- 6. Barr EL, Tonkin AM, Welborn TA, Shaw JE. Validity of self‐reported cardiovascular disease events in comparison to medical record adjudication and a statewide hospital morbidity database: the AusDiab study. Intern Med J. 2009;39:49–53.

- 7. Klungel OH, de Boer A, Paes AH, Seidell JC, Bakker A. Cardiovascular diseases and risk factors in a population‐based study in The Netherlands: agreement between questionnaire information and medical records. Neth J Med. 1999;55:177–183.

- 8. Oksanen T, Kivimaki M, Pentti J, Virtanen M, Klaukka T, Vahtera J. Self‐report as an indicator of incident disease. Ann Epidemiol. 2010;20:547–554.

- 9. Rahman A, Gibney L, Person SD, Williams OD, Kiefe C, Jolly P, Roseman J. Validity of self‐reports of reasons for hospitalization by young adults and risk factors for discordance with medical records: the Coronary Artery Risk Development in Young Adults (CARDIA) Study. Am J Epidemiol. 2005;162:491–498.

- 10. Chin CT, Wang TY, Anstrom KJ, Zhu B, Maa JF, Messenger JC, Ryan KA, Davidson‐Ray L, Zettler M, Effron MB, Mark DB, Peterson ED. Treatment with adenosine diphosphate receptor inhibitors‐longitudinal assessment of treatment patterns and events after acute coronary syndrome (TRANSLATE‐ACS) study design: expanding the paradigm of longitudinal observational research. Am Heart J. 2011;162:844–851.

- 11. Calvo G, McMurray JJV, Granger CB, Alonso‐García Á, Armstrong P, Flather M, Gómez‐Outes A, Pocock S, Stockbridge N, Svensson A, Van de Werf F. Large streamlined trials in cardiovascular disease. Eur Heart J. 2014;35:544–548.

- 12. Pogue J, Walter SD, Yusuf S. Evaluating the benefit of event adjudication of cardiovascular outcomes in large simple RCTs. Clin Trials. 2009;6:239–251.

- 13. Sugarman J, Califf RM. Ethics and regulatory complexities for pragmatic clinical trials. JAMA. 2014;311:2381–2382.

- 14. Pocock SJ, Gersh BJ. Do current clinical trials meet society's needs? A critical review of recent evidence. J Am Coll Cardiol. 2014;64:1615–1628.

- 15. Ware JH, Hamel MB. Pragmatic trials–guides to better patient care? N Engl J Med. 2011;364:1685–1687.

- 16. Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee‐for‐service program. N Engl J Med. 2009;360:1418–1428.

- 17. Friedman GD, Cutter GR, Donahue RP, Hughes GH, Hulley SB, Jacobs DR Jr, Liu K, Savage PJ. CARDIA: study design, recruitment, and some characteristics of the examined subjects. J Clin Epidemiol. 1988;41:1105–1116.

- 18. Berkman ND, Sheridan SL, Donahue KE, Halpern DJ, Crotty K. Low health literacy and health outcomes: an updated systematic review. Ann Intern Med. 2011;155:97–107.

- 19. Fleurence RL, Curtis LH, Califf RM, Platt R, Selby JV, Brown JS. Launching PCORnet, a national patient‐centered clinical research network. J Am Med Inform Assoc. 2014;21:578–582.

- 20. Joynt KE, Jha AK. A path forward on Medicare readmissions. N Engl J Med. 2013;368:1175–1177.