Abstract

Psychophysical techniques typically assume straightforward relationships between manipulations of real-world events, their effects on the brain, and behavioral reports of those effects. However, these relationships can be influenced by many complex, strategic factors that contribute to task performance. Here we discuss several of these factors that share two key features. First, they involve subjects making flexible use of time to process information. Second, this flexibility can reflect the rational regulation of information-processing trade-offs that can play prominent roles in particular temporal epochs: sensitivity to stability versus change for past information, speed versus accuracy for current information, and exploitation versus exploration for future goals. Understanding how subjects manage these trade-offs can be used to help design and interpret psychophysical studies.

Psychophysics quantifies relationships between physical and psychological events with appealing rigor and simplicity. In studies of perception, memory, preference, and other cognitive capacities, the manipulation of a property in the real world (e.g., the brightness of a visual stimulus to be detected, the number of items to be remembered, or the relative values of items to be selected) causes an “impression” in the brain that “must be liable to more or less accidental derangement at every step of its progress” [1, 2]. This noisy impression leads to probabilistic behavioral reports. Traditional methods combine precise measurements of these reports with models of the derangement process to infer the quality of the signals represented in the brain. In many cases, signal quality can be described using a simple scalar value like discrimination threshold.

However, even for the most carefully controlled psychophysical tasks, this simplicity belies the complexity of the decision processes that are used to convert the manipulated property into a measurable behavioral report [3–5]. Subjects must manage attentional and memory demands, the integration of information from different sources, decision rules, preferences, biases, goals, and other strategic factors. In general, incomplete knowledge of how these factors are managed can affect the interpretation of psychophysical data [6*]. Accordingly, major advances in psychophysical approaches often involve new ways to identify and account effectively for the strategic choices made by subjects. For example, early detection experiments that measured a high probability of detecting a dim visual stimulus potentially conflated a low detection threshold with a tendency to report detections even in the absence of a stimulus. Signal detection theory resolved this issue by distinguishing the internal representation of the stimulus from the subject’s decision rule for making a perceptual judgment [3, 4].
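The separation that signal detection theory provides can be illustrated with a minimal sketch. It assumes the standard equal-variance Gaussian model, which the text does not specify; the function names and the example values of sensitivity and criterion are hypothetical and chosen only for illustration.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal (mean 0, sd 1)


def rates_from(d_prime, criterion):
    """Hit and false-alarm rates under an assumed equal-variance Gaussian model.

    Noise is centered at -d'/2, signal at +d'/2, and the observer reports
    "detected" whenever the internal response exceeds the criterion.
    """
    hit = _STD_NORMAL.cdf(d_prime / 2 - criterion)
    false_alarm = _STD_NORMAL.cdf(-d_prime / 2 - criterion)
    return hit, false_alarm


def dprime_and_criterion(hit, false_alarm):
    """Recover sensitivity (d') and criterion from the two observed rates."""
    z_hit = _STD_NORMAL.inv_cdf(hit)
    z_fa = _STD_NORMAL.inv_cdf(false_alarm)
    return z_hit - z_fa, -0.5 * (z_hit + z_fa)


# Two hypothetical observers with identical sensitivity but different decision rules.
conservative = rates_from(d_prime=1.0, criterion=0.5)
liberal = rates_from(d_prime=1.0, criterion=-0.5)

d_conservative, c_conservative = dprime_and_criterion(*conservative)
d_liberal, c_liberal = dprime_and_criterion(*liberal)
```

In this sketch the liberal observer detects the stimulus more often, yet both observers yield the same recovered sensitivity once false alarms are taken into account. Hit rate alone would therefore misattribute a difference in decision rule to a difference in the internal representation.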

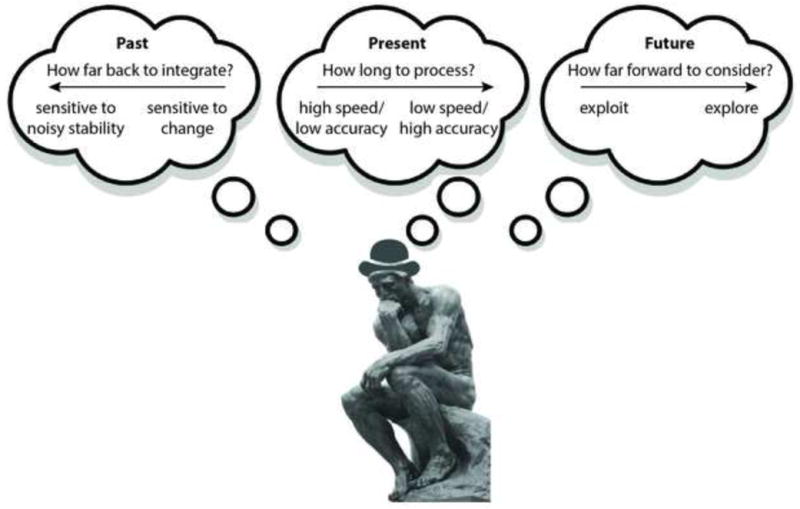

Despite such successes, accounting for the different ways that subjects solve tasks remains a fundamental challenge for psychophysics. To help overcome this challenge, here we review recent work describing several strategic factors that share two key characteristics that can affect performance on a broad range of tasks (Figure 1). First, they involve the flexible use of time in processing information to generate responses. Second, this flexibility reflects inherent trade-offs between competing goals. We focus on three trade-offs that can interact and operate over multiple timescales. Each one also has particular relevance to how subjects process information from a certain temporal window: 1) a change-stability trade-off that governs the historical extent of past information that is relevant for future decisions, 2) a speed-accuracy trade-off that governs the duration of the current information-processing window, and 3) an explore-exploit trade-off that governs the future time horizon used for establishing goals. Studying these trade-offs under relatively simple conditions can yield insights into the kinds of complex choices subjects make more generally, which is useful in psychophysics for designing tasks and interpreting data.

Figure 1.

A psychophysical subject thinking about how to use past, present, and future information to solve a task.

Processing past information and a change-stability trade-off

Psychophysical subjects can make flexible use of information from the past to inform current choices. Under some conditions, such as perceptual tasks in which stimulus presentations are explicitly selected to be independent from trial to trial, subjects often make choices that are also largely independent of past choices, stimuli, or outcomes. However, even under these conditions choices and response times (RTs) can show sequential effects across trials. These effects can result from incomplete knowledge of the independent task structure, innate strategies like probability matching, lower-level motor mechanisms, or other factors [7–10]. In addition, many tasks are designed with across-trial dependencies, and subjects can use information from either the recent or more distant past to guide behavior [11, 12].

This flexible processing of historical data is likely governed by many factors, including task demands and memory limitations. In addition, the choice of how far into the past to look for information that informs future choices may involve principles of effective inference in dynamic environments [13, 14]. These principles include an inherent trade-off between sensitivity to fundamental changes in a relevant signal and sensitivity to the value of the signal when it is stable but noisy [15**]. For example, consider a process that predicts the probability or magnitude of the next expected reward based on past stochastic rewards. Using a narrow time window that takes into account only the most recent rewards can allow such a process to constantly adjust its predictions based on new information. These adjustments are particularly useful for maintaining accurate predictions when the environment can undergo abrupt, fundamental changes (change-points). However, these predictions also tend to follow noise in the environment, giving rise to unstable predictions and potentially suboptimal choices during periods of noisy stability. In contrast, wider windows that include information from further into the past can average out variability but are relatively insensitive to change-points, which are smoothed over.
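The reward-prediction example above can be made concrete with a small simulation. This is a sketch under stated assumptions rather than a model from the cited work: the predictor is a simple running mean, and the environment (Gaussian reward noise, a single change-point at trial 200, window sizes of 2 and 40 trials) is hypothetical.

```python
import random
from statistics import mean


def windowed_predictions(rewards, window):
    """Predict each reward as the mean of the preceding `window` rewards.

    The returned list has one entry per trial starting at trial 1, so
    predictions[i] is the prediction for rewards[i + 1].
    """
    predictions = []
    for t in range(1, len(rewards)):
        start = max(0, t - window)
        predictions.append(mean(rewards[start:t]))
    return predictions


def mean_abs_error(predictions, targets, trials):
    """Average absolute prediction error over a slice of trials."""
    return mean(abs(p - x) for p, x in zip(predictions[trials], targets[trials]))


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Hypothetical environment: mean reward 0 for 200 trials, then an abrupt jump to 5.
true_means = [0.0] * 200 + [5.0] * 200
rewards = [m + rng.gauss(0.0, 1.0) for m in true_means]
targets = true_means[1:]  # aligned with the predictions

narrow = windowed_predictions(rewards, window=2)
wide = windowed_predictions(rewards, window=40)

stable = slice(100, 199)        # trials well before the change-point
after_change = slice(199, 219)  # the 20 trials starting at the change-point

narrow_stable = mean_abs_error(narrow, targets, stable)
wide_stable = mean_abs_error(wide, targets, stable)
narrow_change = mean_abs_error(narrow, targets, after_change)
wide_change = mean_abs_error(wide, targets, after_change)
```

Under these assumptions the narrow window tracks the true mean less accurately during the stable period, because it follows noise, but recovers far more quickly after the change-point. The wide window shows the opposite pattern.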

Under some conditions, human subjects appear to regulate this trade-off adaptively, using wider windows during periods of expected stability and narrower windows during periods of expected change [13, 15**, 16]. Models of choice behavior that ignore these adaptive adjustments can mischaracterize the resulting choice variability as noise or other factors, resulting in potentially misleading interpretations of the underlying computational mechanisms [6*]. However, taking these adjustments into account can also be challenging because they depend not simply on the statistical stability of information presented in a given task, but also on the subject’s own estimate of that stability. For example, even when statistically stable information is presented, subjects can use a narrow window to process historical information, acting as if they expect some level of instability [15**]. This kind of expectation might contribute to forms of imperfect information integration that have been found under a variety of conditions but have been ascribed to other factors such as an urgency to respond [17, 18] or computationally costly or leaky mechanisms [19–21].
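One way to see how an expectation of instability narrows the effective window is to discount accumulated belief by an expected rate of change before adding each new sample. The sketch below is written in the spirit of the normative accumulation models cited above, but the specific functional form, the symbol `hazard` for the subjective probability of a change per sample, and the evidence sequence are assumptions made here for illustration.

```python
import math


def discount_prior_belief(belief, hazard):
    """Discount a log-odds belief by the expected rate of change.

    `hazard` is the assumed subjective probability (strictly between 0 and 1)
    that the source changes between samples. As hazard approaches 0 the belief
    is carried forward intact; at 0.5 it is discarded entirely.
    """
    odds_of_stability = (1.0 - hazard) / hazard
    return (belief
            + math.log(odds_of_stability + math.exp(-belief))
            - math.log(odds_of_stability + math.exp(belief)))


def accumulate(log_likelihood_ratios, hazard):
    """Return the running belief after each sample of evidence."""
    belief = 0.0
    trace = []
    for llr in log_likelihood_ratios:
        belief = discount_prior_belief(belief, hazard) + llr
        trace.append(belief)
    return trace


# Hypothetical evidence: ten samples favoring one source, then three favoring the other.
evidence = [1.0] * 10 + [-1.0] * 3

expects_stability = accumulate(evidence, hazard=0.01)
expects_change = accumulate(evidence, hazard=0.3)
```

With these values, the observer who expects change reverses belief after a single contrary sample, whereas the observer who expects stability still favors the original source after three. Identical inputs can thus produce different choices purely because of different subjective estimates of stability.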

Processing incoming information and the speed-accuracy trade-off

Akin to the flexibility that subjects use to process past information, they also can make flexible use of time when processing incoming information. For many perceptual, memory, and other tasks, decision processes are deliberative and therefore take time [7, 22, 23]. This time is often used to improve accuracy by signal averaging over the incoming inputs or by increasing the number of sequential opportunities to make an effective judgment from those inputs [24, 25]. Under these conditions, choice accuracy tends to increase as a function of experimenter-controlled stimulus durations for up to several hundred milliseconds [26, 27]. For RT tasks, in which the subject chooses when to respond and hence effectively controls the duration of the stimulus-driven decision process, longer RTs also typically correspond to higher accuracy [28, 29]. Thus, the temporal window used to process incoming information governs an inverse relationship between decision speed and decision accuracy, known as the speed-accuracy trade-off.
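A simple simulation can illustrate how a longer processing window buys accuracy at the cost of time. The sketch assumes that evidence is accumulated as a random walk until it reaches one of two symmetric bounds; the drift, the unit-variance Gaussian samples, and the two bound heights are hypothetical values, not estimates from any cited study.

```python
import random
from statistics import mean


def simulate_trial(rng, drift, bound, max_steps=10_000):
    """Accumulate noisy samples until the total reaches +bound or -bound.

    Returns (correct, steps), where `correct` means the positive bound,
    which matches the sign of the drift, was reached first.
    """
    total = 0.0
    for step in range(1, max_steps + 1):
        total += rng.gauss(drift, 1.0)
        if abs(total) >= bound:
            return total > 0, step
    return total > 0, max_steps  # safeguard; rarely reached with these values


def summarize(bound, drift=0.2, n_trials=2000, seed=1):
    """Accuracy and mean number of samples used for a given bound height."""
    rng = random.Random(seed)
    results = [simulate_trial(rng, drift, bound) for _ in range(n_trials)]
    accuracy = mean(1.0 if correct else 0.0 for correct, _ in results)
    mean_steps = mean(steps for _, steps in results)
    return accuracy, mean_steps


fast_accuracy, fast_steps = summarize(bound=1.0)      # emphasizes speed
careful_accuracy, careful_steps = summarize(bound=4.0)  # emphasizes accuracy
```

Raising the bound makes the simulated subject slower and more accurate even though the quality of the incoming evidence (the drift) is unchanged.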

Control of this trade-off is flexible and can be affected by instructions, changes in reward contingencies or task timing, and other factors [30–32]. In the absence of an understanding of how this trade-off is managed, measurements of choice accuracy or RT alone provide an incomplete picture of the encoding and use of task-relevant information. For example, studies of perceptual learning, which involves long-lasting, training-induced improvements in perceptual sensitivity, often estimate perceptual thresholds before and after learning but typically do not also measure RTs [33]. Consequently, a measured increase in perceptual sensitivity could, in principle, result from an improvement in how the brain encodes the relevant sensory variable, an increased emphasis on the accuracy of the decision at the expense of speed, or both [34*].
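The ambiguity described above can be illustrated with the closed-form predictions of a symmetric drift-diffusion model with unit noise, a commonly used description of choice and RT data. The parameter names (`sensitivity`, `strength`, `bound`) and all numerical values below are assumptions made for this sketch.

```python
import math


def ddm_predictions(sensitivity, strength, bound):
    """Accuracy and mean decision time for a symmetric drift-diffusion model.

    Assumes drift = sensitivity * strength, bounds at +bound and -bound,
    an unbiased starting point, and unit diffusion noise.
    """
    drift = sensitivity * strength
    accuracy = 1.0 / (1.0 + math.exp(-2.0 * drift * bound))
    mean_decision_time = (bound / drift) * math.tanh(drift * bound)
    return accuracy, mean_decision_time


# Hypothetical pre-training baseline.
baseline = ddm_predictions(sensitivity=1.0, strength=0.5, bound=1.0)

# Two hypothetical post-training accounts of the same accuracy gain.
better_encoding = ddm_predictions(sensitivity=2.0, strength=0.5, bound=1.0)
more_cautious = ddm_predictions(sensitivity=1.0, strength=0.5, bound=2.0)
```

Doubling sensitivity and doubling the bound produce the same accuracy in this model because accuracy depends only on their product. The two accounts differ in decision time, which shortens with better encoding and lengthens with greater caution, so accuracy-based thresholds alone cannot distinguish them but RT measurements can.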

Ambiguity from an uncharacterized speed-accuracy trade-off might also be relevant for tasks with experimenter-controlled stimulus durations. For example, subjects might use decision rules that can terminate the processing of incoming stimulus information at any time during stimulus presentation. Early termination filters out subsequent, potentially useful information that could have been used to improve accuracy but frees up processing resources for other uses, such as preparing subsequent actions [35]. In these cases, a lack of RT measurements can make it difficult to assess the temporal window used by the subject to process incoming information. Further complicating this problem, information-processing dynamics within the chosen temporal window might not be uniform or predictable. For example, sniffs govern the timing of olfactory processing [21, 36]. Likewise, expectations about environmental changes that arise from processes governing the use of historical information, as discussed above, can cause the impact of incoming information on a subsequent decision to wax and wane over time [15**, 21, 36]. In these cases, a combination of approaches, including RT measurements, variable stimulus durations, and choice-guided reverse correlation of noisy stimulus features, can help infer both the temporal window and the information-processing dynamics the brain uses to process that incoming information [26, 35, 37, 38].
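Choice-guided reverse correlation can be sketched as follows. The simulated observer, who uses only the first half of a fixed-duration noisy stimulus, is hypothetical, as are the frame count, trial count, and variable names; the point is only that the choice-conditioned average of the stimulus noise reveals which frames influenced the decision.

```python
import random
from statistics import mean

rng = random.Random(2)  # fixed seed so the sketch is reproducible

N_FRAMES = 10    # frames per stimulus (assumed)
N_USED = 5       # frames the hypothetical observer actually uses
N_TRIALS = 2000

# Each trial is a sequence of zero-mean Gaussian noise frames.
trials = [[rng.gauss(0.0, 1.0) for _ in range(N_FRAMES)] for _ in range(N_TRIALS)]

# The observer terminates early: choice depends only on the first N_USED frames.
choices = [sum(frames[:N_USED]) > 0 for frames in trials]


def temporal_kernel(trials, choices):
    """Difference in mean noise preceding each of the two choices, per frame."""
    kernel = []
    for t in range(len(trials[0])):
        chose_positive = mean(f[t] for f, c in zip(trials, choices) if c)
        chose_negative = mean(f[t] for f, c in zip(trials, choices) if not c)
        kernel.append(chose_positive - chose_negative)
    return kernel


kernel = temporal_kernel(trials, choices)
used_part = kernel[:N_USED]
ignored_part = kernel[N_USED:]
```

In this sketch the kernel is clearly positive for the frames that drive the choice and close to zero for the ignored frames, recovering the observer's temporal window without any RT measurement.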

Processing future goals and the explore-exploit trade-off

Flexible temporal processing windows can also be applied to expectations about the future [39]. To achieve the goal of maximizing reward in the near term, subjects can exploit the option with the highest expected reward [40]. However, exploitation may neglect the possibility of gaining new information about other options, such as changes in reward contingencies, that is helpful for maximizing reward in the long term. In contrast, exploring might provide new information but at the cost of missing out on available rewards in the near term.

This exploration-exploitation trade-off can confound the interpretation of psychophysical data. Exploratory behavior can be modeled by adding stochasticity to the action-selection process [22, 40]. However, evidence for random choice behavior in dynamic environments is mixed, and many task designs conflate factors that could produce similar effects on behavior [41–43**]. For example, for tasks in which past choices govern the information available for the current choice, information-seeking behavior can be difficult to distinguish from reward-seeking behavior. Thus, decoupling information and reward can help identify exploratory aspects of behavior that affect psychophysical performance [43**]. More generally, over-emphasizing stochastic action selection can minimize sensitivity to other processes, such as adaptive inference to maximize near-term reward, that can contribute to behavioral variability [6*]. Conversely, ignoring a tendency to make stochastic choices can result in overestimates of uncertainty or adaptability in the exploitative decision process.
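Stochastic action selection is often implemented with a softmax (logistic) choice rule, which is one common form and is assumed here for illustration. The parameter name `inverse_temperature` and the option values are hypothetical.

```python
import math


def softmax_choice_probabilities(values, inverse_temperature):
    """Probability of choosing each option under a softmax rule.

    An inverse temperature of 0 gives uniformly random choices; larger
    values concentrate choices on the option with the highest value.
    """
    best = max(values)  # subtract the maximum for numerical stability
    weights = [math.exp(inverse_temperature * (v - best)) for v in values]
    total = sum(weights)
    return [w / total for w in weights]


expected_rewards = [1.0, 0.5]  # hypothetical values of two options

random_chooser = softmax_choice_probabilities(expected_rewards, inverse_temperature=0.0)
mixed_chooser = softmax_choice_probabilities(expected_rewards, inverse_temperature=2.0)
exploiter = softmax_choice_probabilities(expected_rewards, inverse_temperature=10.0)
```

A single parameter controls how often the lower-valued option is chosen. When such a model is fit to data, any unmodeled source of choice variability, such as adaptive inference about changing values, can be absorbed by this parameter and misread as exploration.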

Exploration might also reflect other rational processes. For example, subjects might aim to reduce uncertainty, valuing actions proportional to the uncertainty about their outcomes [44–46], or to satisfy curiosity, valuing actions proportional to the quality of their predictions about the associated outcomes [47, 48]. These more complex strategies can be even more difficult to identify and address in psychophysical data. For example, subjects might appear to seek uncertainty in one context but avoid it in another [49, 50]. To account for these apparently contradictory effects, it can be helpful to identify different possible sources of uncertainty. These sources, which are also central to the change-stability trade-off, include unexpected change-points (uncertainty about the identity of a signal), estimation uncertainty (insufficient samples to provide a reliable estimate of the signal), and noise (intrinsic variability in the signal). Thus, the time window used for establishing goals about future rewards might be modulated by the same factors affecting the processing of past and present information [13–16, 51]. Using task designs that carefully distinguish these and other forms of uncertainty, and possibly the timescales over which they occur, can help to identify their distinct effects on behavior [16, 52*]. Accounting for these effects can, in turn, allow for more precise estimates of how the brain processes other task-relevant variables.
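Uncertainty-driven exploration can be sketched by adding a bonus, proportional to the uncertainty about each option, to its expected reward before choosing. The linear form of the bonus, the name `bonus_weight`, and the example numbers are assumptions for illustration and are not taken from a specific cited model.

```python
def choose_with_uncertainty_bonus(expected_rewards, uncertainties, bonus_weight):
    """Return the index of the option with the highest bonus-adjusted value.

    Each option is scored as expected reward plus bonus_weight times the
    uncertainty about that reward (e.g., the standard deviation of its estimate).
    """
    scores = [reward + bonus_weight * uncertainty
              for reward, uncertainty in zip(expected_rewards, uncertainties)]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical two-option task: option 0 is well known and slightly better on
# average; option 1 has been sampled rarely, so its value is uncertain.
expected_rewards = [1.0, 0.8]
uncertainties = [0.1, 1.0]

exploit_choice = choose_with_uncertainty_bonus(expected_rewards, uncertainties, bonus_weight=0.0)
explore_choice = choose_with_uncertainty_bonus(expected_rewards, uncertainties, bonus_weight=0.5)
avoid_choice = choose_with_uncertainty_bonus(expected_rewards, uncertainties, bonus_weight=-0.5)
```

Unlike stochastic selection, this rule is deterministic and directed: a positive weight steers choices toward the poorly known option, whereas a negative weight produces uncertainty avoidance. The sign of the weight could differ across contexts, which is one way that apparently contradictory seeking and avoiding behaviors might arise.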

Conclusion

The rigor and simplicity of psychophysical tasks are based on the tenuous assumption that potentially complex strategic factors that affect task performance can be ignored, controlled, or addressed effectively. In this article we highlighted several factors that challenge this assumption and are prevalent across tasks. These factors share the common feature of flexibility in the temporal windows used to process information, as applied to the past, present, and future. This flexibility can make it difficult to infer from behavior the mapping between controlled physical events and the psychological responses they elicit. Nevertheless, because control of this flexibility often involves rational trade-offs in processing information, careful experimental design and analysis can help to account for their effects on behavior.

Highlights.

The simplicity of psychophysical measurements belies underlying behavioral complexity

This complexity includes rational trade-offs in using time to process information

Temporal windows on past information govern a change-stability trade-off

Temporal windows on incoming information govern a speed-accuracy trade-off

Temporal windows on future expectations and goals govern an explore-exploit trade-off

Acknowledgments

Funded by NIH R01 EY015260 and NSF 1533623.

Footnotes

Conflict of interest statement

Nothing declared

Bibliography

- 1. Peirce CS, Jastrow J. On small differences of sensation. US Government Printing Office; 1885.

- 2. Thurstone LL. A law of comparative judgment. Psychological review. 1927;34(4):273.

- 3. Green DM, Swets JA. Signal detection theory and psychophysics. New York: John Wiley; 1966.

- 4. Macmillan NA, Creelman CD. Detection theory: A user’s guide. Psychology press; 2004.

- 5. Gold JI, Ding L. How mechanisms of perceptual decision-making affect the psychometric function. Progress in neurobiology. 2013;103:98–114. doi: 10.1016/j.pneurobio.2012.05.008.

- 6*. Nassar MR, Gold JI. A healthy fear of the unknown: Perspectives on the interpretation of parameter fits from computational models in neuroscience. PLoS Comput Biol. 2013;9(4):e1003015. doi: 10.1371/journal.pcbi.1003015. The authors discuss several challenges associated with fitting models to behavioral data from tasks that are susceptible to the kinds of complex strategic factors described here. These challenges include possibly misleading fits, for example suggesting that subjects are exploratory when in fact they are not, when the models are appropriate but incomplete.

- 7. Laming DRJ. Information theory of choice-reaction times. 1968.

- 8. Cho RY, et al. Mechanisms underlying dependencies of performance on stimulus history in a two-alternative forced-choice task. Cognitive, Affective, & Behavioral Neuroscience. 2002;2(4):283–299. doi: 10.3758/cabn.2.4.283.

- 9. Gold JI, et al. The relative influences of priors and sensory evidence on an oculomotor decision variable during perceptual learning. Journal of neurophysiology. 2008;100(5):2653–2668. doi: 10.1152/jn.90629.2008.

- 10. Jones M, et al. Sequential effects in response time reveal learning mechanisms and event representations. Psychological review. 2013;120(3):628. doi: 10.1037/a0033180.

- 11. Herrnstein RJ. On the law of effect. J Exp Anal Behav. 1970;13(2):243–266. doi: 10.1901/jeab.1970.13-243.

- 12. Lau B, Glimcher PW. Dynamic response-by-response models of matching behavior in rhesus monkeys. J Exp Anal Behav. 2005;84(3):555–79. doi: 10.1901/jeab.2005.110-04.

- 13. Behrens TE, et al. Learning the value of information in an uncertain world. Nat Neurosci. 2007;10(9):1214–21. doi: 10.1038/nn1954.

- 14. Wilson RC, Nassar MR, Gold JI. Bayesian online learning of the hazard rate in change-point problems. Neural computation. 2010;22(9):2452–2476. doi: 10.1162/NECO_a_00007.

- 15**. Glaze CM, Kable JW, Gold JI. Normative evidence accumulation in unpredictable environments. Elife. 2015;4:e08825. doi: 10.7554/eLife.08825. The authors present a novel, normative model of the temporal dynamics of information accumulation in changing environments. They show that human subjects exhibit key features of the model, including adaptive adjustment of the time window of accumulation in response to changes in the statistics of the input.

- 16. Nassar MR, et al. An approximately Bayesian delta-rule model explains the dynamics of belief updating in a changing environment. The Journal of Neuroscience. 2010;30(37):12366–12378. doi: 10.1523/JNEUROSCI.0822-10.2010.

- 17. Reddi B, Carpenter R. The influence of urgency on decision time. Nature neuroscience. 2000;3(8):827–830. doi: 10.1038/77739.

- 18. Cisek P, Puskas GA, El-Murr S. Decisions in changing conditions: the urgency-gating model. The Journal of Neuroscience. 2009;29(37):11560–11571. doi: 10.1523/JNEUROSCI.1844-09.2009.

- 19. Usher M, McClelland JL. The time course of perceptual choice: the leaky, competing accumulator model. Psychological review. 2001;108(3):550. doi: 10.1037/0033-295x.108.3.550.

- 20. Drugowitsch J, et al. The cost of accumulating evidence in perceptual decision making. The Journal of Neuroscience. 2012;32(11):3612–3628. doi: 10.1523/JNEUROSCI.4010-11.2012.

- 21. Uchida N, Kepecs A, Mainen ZF. Seeing at a glance, smelling in a whiff: rapid forms of perceptual decision making. Nature Reviews Neuroscience. 2006;7(6):485–491. doi: 10.1038/nrn1933.

- 22. Luce RD. Individual choice behavior: A theoretical analysis. Courier Corporation; 1959.

- 23. Sternberg S. Separate modifiability, mental modules, and the use of pure and composite measures to reveal them. Acta Psychol (Amst). 2001;106(1–2):147–246. doi: 10.1016/s0001-6918(00)00045-7.

- 24. Watson AB. Probability summation over time. Vision research. 1979;19(5):515–522. doi: 10.1016/0042-6989(79)90136-6.

- 25. Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–74. doi: 10.1146/annurev.neuro.29.051605.113038.

- 26. Gold JI, Shadlen MN. The influence of behavioral context on the representation of a perceptual decision in developing oculomotor commands. J Neurosci. 2003;23(2):632–51. doi: 10.1523/JNEUROSCI.23-02-00632.2003.

- 27. Kahneman D, Norman J. The time-intensity relation in visual perception as a function of observer’s task. Journal of Experimental Psychology. 1964;68(3):215. doi: 10.1037/h0046097.

- 28. Wickelgren WA. Speed-accuracy tradeoff and information processing dynamics. Acta psychologica. 1977;41(1):67–85.

- 29. Bogacz R, et al. The neural basis of the speed–accuracy tradeoff. Trends in neurosciences. 2010;33(1):10–16. doi: 10.1016/j.tins.2009.09.002.

- 30. Palmer J, Huk AC, Shadlen MN. The effect of stimulus strength on the speed and accuracy of a perceptual decision. J Vis. 2005;5(5):376–404. doi: 10.1167/5.5.1.

- 31. Simen P, et al. Reward rate optimization in two-alternative decision making: empirical tests of theoretical predictions. Journal of Experimental Psychology: Human Perception and Performance. 2009;35(6):1865. doi: 10.1037/a0016926.

- 32. Liu ASK, et al. Temporal integration of auditory information is invariant to temporal grouping cues. eNeuro. 2015;2(2):ENEURO.0077-14.2015. doi: 10.1523/ENEURO.0077-14.2015.

- 33. Fine I, Jacobs RA. Comparing perceptual learning across tasks: A review. Journal of vision. 2002;2(2):5. doi: 10.1167/2.2.5.

- 34*. Liu CC, Watanabe T. Accounting for speed–accuracy tradeoff in perceptual learning. Vision research. 2012;61:107–114. doi: 10.1016/j.visres.2011.09.007. Perceptual learning was characterized in terms of changes in the parameters of the drift-diffusion model fit to both choice and RT data, in contrast to other studies that typically focus on one or the other data type. The study is the first to emphasize that perceptual learning can involve changes in both perceptual sensitivity and the speed-accuracy tradeoff.

- 35. Kiani R, Hanks TD, Shadlen MN. Bounded integration in parietal cortex underlies decisions even when viewing duration is dictated by the environment. The Journal of Neuroscience. 2008;28(12):3017–3029. doi: 10.1523/JNEUROSCI.4761-07.2008.

- 36. Rinberg D, Koulakov A, Gelperin A. Speed-accuracy tradeoff in olfaction. Neuron. 2006;51(3):351–358. doi: 10.1016/j.neuron.2006.07.013.

- 37. Ahumada AJ. Classification image weights and internal noise level estimation. Journal of Vision. 2002;2(1):8. doi: 10.1167/2.1.8.

- 38. Brunton BW, Botvinick MM, Brody CD. Rats and humans can optimally accumulate evidence for decision-making. Science. 2013;340(6128):95–98. doi: 10.1126/science.1233912.

- 39. Cohen JD, McClure SM, Yu AJ. Should I stay or should I go? How the human brain manages the trade-off between exploitation and exploration. Philosophical Transactions of the Royal Society B: Biological Sciences. 2007;362(1481):933–942. doi: 10.1098/rstb.2007.2098.

- 40. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. Cambridge, MA: MIT Press; 1998.

- 41. Steyvers M, Lee MD, Wagenmakers EJ. A Bayesian analysis of human decision-making on bandit problems. Journal of Mathematical Psychology. 2009;53(3):168–179.

- 42. Lee MD, et al. Psychological models of human and optimal performance in bandit problems. Cognitive Systems Research. 2011;12(2):164–174.

- 43**. Wilson RC, et al. Humans use directed and random exploration to solve the explore–exploit dilemma. Journal of Experimental Psychology: General. 2014;143(6):2074. doi: 10.1037/a0038199. Using a novel task design that manipulated the time horizon of future choices, the authors were able to distinguish information-seeking from stochastic behavior. They show that subjects can control both in the service of exploration.

- 44. Sutton RS. Integrated architecture for learning, planning, and reacting based on approximating dynamic programming. In: Proceedings of the seventh international conference (1990) on Machine learning. Morgan Kaufmann Publishers Inc; 1990.

- 45. Kaelbling LP. Learning in embedded systems. MIT press; 1993.

- 46. Dayan P, Sejnowski TJ. Exploration bonuses and dual control. Machine Learning. 1996;25(1):5–22.

- 47. Gottlieb J, et al. Information-seeking, curiosity, and attention: computational and neural mechanisms. Trends in cognitive sciences. 2013;17(11):585–593. doi: 10.1016/j.tics.2013.09.001.

- 48. Schmidhuber J. Curious model-building control systems. In: 1991 IEEE International Joint Conference on Neural Networks. IEEE; 1991.

- 49. Frank MJ, et al. Prefrontal and striatal dopaminergic genes predict individual differences in exploration and exploitation. Nature neuroscience. 2009;12(8):1062–1068. doi: 10.1038/nn.2342.

- 50. Payzan-LeNestour E, Bossaerts P. Risk, unexpected uncertainty, and estimation uncertainty: Bayesian learning in unstable settings. PLoS computational biology. 2011;7(1):e1001048. doi: 10.1371/journal.pcbi.1001048.

- 51. Averbeck BB. Theory of Choice in Bandit, Information Sampling and Foraging Tasks. PLoS computational biology. 2015;11(3):e1004164. doi: 10.1371/journal.pcbi.1004164.

- 52*. Payzan-LeNestour É, Bossaerts P. Do not bet on the unknown versus try to find out more: estimation uncertainty and “unexpected uncertainty” both modulate exploration. Frontiers in neuroscience. 2012;6. doi: 10.3389/fnins.2012.00150. The authors propose a new model of uncertainty-driven exploratory choice behavior. Their model rests on a dilemma, involving a balance between ambiguity avoidance to limit dangers and novelty seeking to promote new opportunities for reward, that exemplifies the challenges inherent to characterizing choice behavior.