Abstract

Background:

Challenges in the design of electronic health records (EHRs) include designing usable systems that must meet the complex, rapidly changing, and high-stakes information needs of clinicians. The ability to move and assemble elements together on the same page has significant human-computer interaction (HCI) and efficiency advantages, and can mitigate the problems of negotiating multiple fixed screens and the associated cognitive burdens.

Objective:

We compare MedWISE—a novel EHR that supports user-composable displays—with a conventional EHR in terms of the number of repeat views of data elements for patient case appraisal.

Design and Methods:

The study used mixed methods to examine clinical data viewing in four patient cases, comparing use of an experimental user-composable EHR with use of a conventional EHR for case appraisal. Eleven clinicians used the user-composable EHR in a case appraisal task in a laboratory setting. This use was compared with log file analysis of the same patient cases in the conventional EHR. We investigated the number of repeat views of the same clinical information during a session in these two contexts, and compared them using Fisher’s exact test.

Results:

There was a significant difference (p<.0001) in the proportion of case sessions with repeat data element viewing between the user-composable EHR (14.6 percent) and the conventional EHR (72.6 percent).

Discussion and Conclusion:

Users of conventional EHRs repeatedly viewed the same information elements in the same session, as revealed by log files. Our findings are consistent with the hypothesis that conventional systems require that the user view many screens and remember information between screens, causing the user to forget information and to have to access the information a second time. Other mechanisms (such as reduction in navigation over a population of users due to interface sharing, and information selection) may also contribute to increased efficiency in the experimental system. Systems that allow a composable approach that enables the user to gather together on the same screen any desired information elements may confer cognitive support benefits that can increase productive use of systems by reducing fragmented information. By reducing cognitive overload, such systems can also enhance the user experience.

Keywords: Informatics, Human Computer Interaction (HCI), Health Information Technology, Health Technology Assessment

Introduction

The numerous challenges involved in the development of electronic health records (EHRs) include designing usable systems that must meet the complex and rapidly changing information needs of clinicians. We previously described a novel architectural model for how to create such systems, via modular, widget-based, user-composable platforms.1,2 Clinician users can create their own system and tools via the drag and drop method, without having to know how to program.

We first present some definitions. “User-composable” means that the system allows the nonprogrammer end user, such as a doctor or nurse, to drag and drop any desired elements (e.g., a lab panel) of the clinical information system and place them together on the same screen, composing their own display layout. The display layout may be patient specific or designed to meet the needs of a particular clinical unit or specialty. For example, the user may choose from panels that correspond to an aspect of the patient record (e.g., vital signs, lab results), identify what is relevant, and organize them on a display in a way that is meaningful to users and facilitates their workflow processes.

“Working memory” refers to a type of memory that humans use to carry out and plan tasks. For example, if a clinician is documenting a patient record and relying on multiple sources (e.g., paper forms or multiple screens), the clinician will need to occasionally maintain information in working memory to be able to record the patient data in the EHR. One may not remember the information afterward, but working memory fulfilled its temporary function of allowing one to carry out the immediate task. Another familiar example is a waiter keeping all the dinner orders for a table of six in mind long enough to deliver them.

Here we define “overall efficiency” to mean “the amount of work that can be done per unit time.” This means that reducing the effort (for example, effort expended in navigation) for a task involving the EHR, or reducing the time taken for an overall task, would increase overall efficiency.

We propose that a user platform that embraces a composable approach can reduce the time taken for a task and can increase efficiency via several mechanisms. Navigation in order to view relevant information on multiple screens is a substantial part of the use of EHRs. Thus, a reduction in navigation time (through fewer repeat element views or fewer screen views) would increase efficiency. For the present purposes, we define “navigation efficiency” as the “inverse of proportion of repeat views a user makes while carrying out a task.” In other words, each repeat view is akin to introducing a theoretically unnecessary extra move. Navigation efficiency is one component of overall efficiency.

Decreasing the time clinicians take in navigation activities may allow more time to devote to patient assessment and treatment planning. Time and efficiency are important considerations for anyone using an EHR, as they may affect the quality of care, workload, ability to streamline processes, and cost. McGinn et al.’s review of implementation studies found that 32 percent of studies listed time taken as a major stakeholder concern about EHR implementation.3 There are mixed findings on time spent using EHRs. Hripcsak et al. found that their clinical users spent moderate time authoring and reviewing notes, most less than 90 minutes/day, with documentation taking 7–21 percent of clinician time.4 However, Oxentenko et al., in a large survey, found that 68 percent of residents perceived that they spent more than four hours daily on documentation.5 This exceeds the time they reportedly spend in patient contact. Poissant et al.’s review found significant increases (98–328 percent) in physician time spent on documentation following the implementation of an EHR.6

This paper compares the efficiency of clinician data-element gathering for patient case appraisal using a user-composable EHR2 to that of using a conventional EHR through a mixed-methods approach that includes a laboratory study and log file analysis.

Background

MedWISE: a User-Composable EHR

We created a demonstration system, MedWISE, based upon the model whose features are fully described in Senathirajah and Bakken1 and in Senathirajah et al.2 Here we describe only those features pertinent to efficiency, as follows:

Using MedWISE, clinicians can gather any desired elements such as laboratory test results, notes, X-ray reports, RSS feeds, or other information on the same screen, and arrange them into a multicolumn screen layout, by using drag and drop.

They can also create and share custom laboratory panels, timeline or other graphic visualizations, templates, and entire interfaces. The resulting creations may be patient specific or reflect specialty or individual clinician needs and preferences.

These elements can be arranged spatially, marked with colors (e.g., to denote urgency), collapsed showing headers, or expanded to full screen. The screen arrangement can be changed at any time while the user reviews a case.

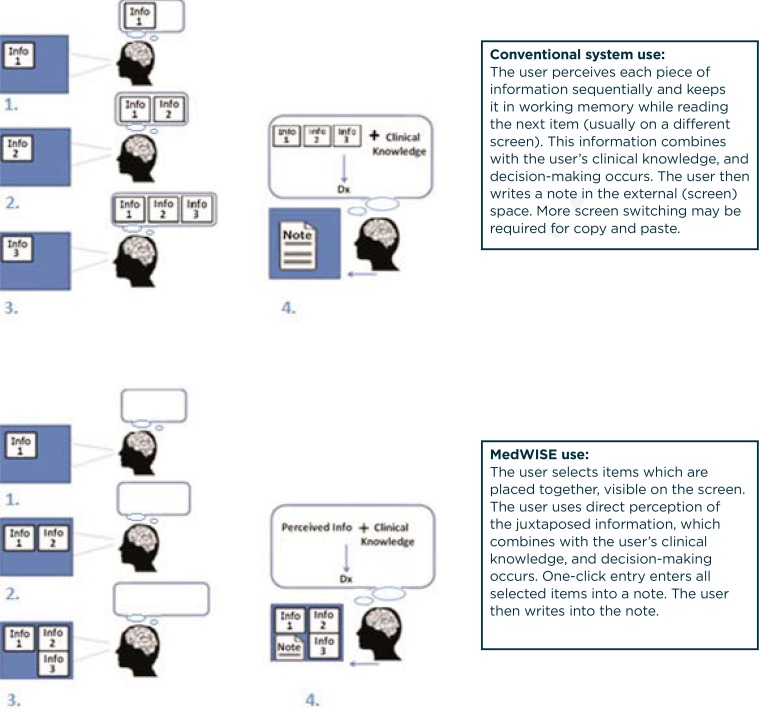

Below, we describe how these features can improve efficiency and facilitate the user’s cognitive processes.18–20 Figure 2 shows the essential interaction differences. MedWISE has been described in greater detail elsewhere.1,7–9 Screenshots are included in the appendix.

Figure 2.

Conventional System (WebCIS) and MedWISE Interaction

HCI and Cognition: Theoretical Bases for MedWISE Interaction

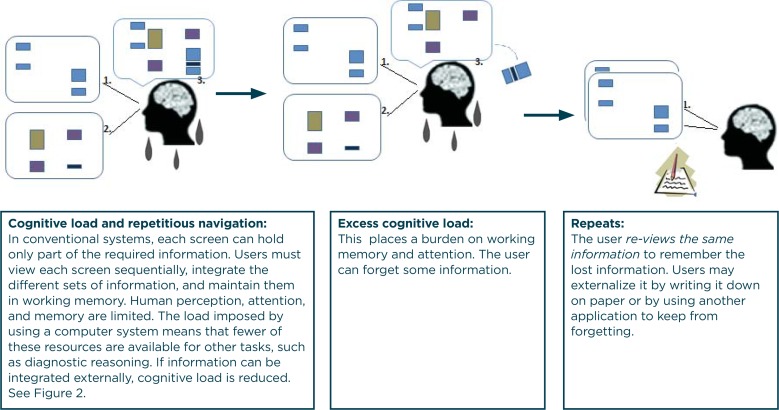

The basic task in clinical case appraisal involves assembling and considering many pieces of information. The use of any complex system such as an EHR necessitates that the user divide his or her attention between negotiating the system (e.g., navigating to the needed screen) and performing the task at hand, for example, characterizing the patient problem.10,11 MedWISE aims to provide a physical manipulable platform that more closely approximates the clinician’s thinking processes and reduces the effort necessary for negotiating inflexible systems. To facilitate explaining the cognition and HCI theory involved, we present concepts as a series of graphics (Figure 1) accompanied by text explanations.

Figure 1.

Cognitive Load and Repetitious Navigation

Woods coined the term “keyhole effect” to describe the problem of trying to obtain access to a large collection of information via a small window, as if looking into a large room via a small keyhole.12 In conventional EHRs, patient information is accessible only in given locations. The clinician must adapt; limited screen size usually means searching and viewing multiple screens to get all the required information, depending on the goal task. This screen switching means the user has to remember items between screens, imposing a load on working memory. As working memory is limited, this may also require re-viewing information before the users can integrate it into their decision-making. We suggest that this is the reason for the repeat views that we see in log files.

Because humans have limited cognitive resources (perception, attention, and memory), whether or not a person has to use mental resources for a task or can use external tools is significant for system usability. The more that the (task-relevant) information is externalized, the fewer are the cognitive resources a person must use to navigate the system and the more that cognitive resources are available for higher-order functions such as clinical reasoning.16 In composable systems, more required elements are external (on screen, not in the user’s memory) during the individual user’s diagnostic reasoning process, putatively improving usability. Reducing repetitious navigation also should affect time taken and, hence, efficiency.

Hybrid Approach to Comparing Systems

Comparing two different systems by using conventional methods often presents difficulties, since the two systems may be deployed in different settings or at different times (such as before and after the go-live of a new system), or research staff or permissions to study the system in situ may not be available. Log files can be used to compare systems on important points of interest. They allow for rich and detailed analysis of user actions throughout a period. They have the additional advantage of being one of the least intrusive methods of capturing user interaction in the clinical setting. In this study, we used a hybrid approach to compare a fully deployed system and an experimental system: (1) log file analysis of a production system EHR that was in wide use at the institution, and (2) video capture of users employing an experimental system.

Methods

Study Design

The observational study used mixed methods (clinician case appraisal in a laboratory setting and log file analysis) to address the hypothesis: there will be a significantly lower proportion of case sessions with repeat views of data elements in MedWISE than in the conventional EHR. Data on repeat views were obtained from log files and video recordings as described below.

Setting

The study setting was New York-Presbyterian Hospital/Columbia University Medical Center (NYP CUMC), a large academic medical center in New York City. At the time of the study, a conventional EHR—a web-based clinical information system (WebCIS)—was used. It is a homegrown system that had been in place for several years. WebCIS aggregates and displays information from dozens of clinical systems.17 It allowed clinicians to view laboratory results and other data and read colleagues’ notes asynchronously as part of the care coordination and consultation processes. Thus, clinicians in our study were familiar with the note and laboratory test formats. They were also acquainted with authors (i.e., colleagues) of clinical documents, and with the hospital service organization. The EHR has conventional navigation with a left-hand pane used to make information elements available. The information location is fixed and elements selected from the menu appear one at a time in the main right-hand pane. Figure 2 compares user interaction in WebCIS with the user-composable EHR, MedWISE.

Sample and Recruitment

The study protocol was approved by the Columbia University Institutional Review Board. A convenience sample of 11 clinicians was recruited via a focus group announcement and email from the hospitalist and nephrology departments of NYP-CUMC.

Data Collection Procedures

Patient Case Studies

The four cases for examination of repeat viewing of data elements were patients with substantial comorbidities. Case 1 involved an elderly patient with a recent left meniscal tear (the reason for the visit), coronary artery disease and hypertension, osteoarthritis, and venous insufficiency with lower extremity edema. Case 2 described a person with hypertension, hyperlipidemia, diminished renal function, cardiac pain, obesity, and insulin resistance, who was in for examination because of hypertension, hyperlipidemia, and cardiac symptoms. Case 3 concerned a person with obesity, diabetes, blindness, and renal problems, who presented with flu symptoms. Case 4 involved a patient with a long history of aortic aneurysm and vascular problems, who had undergone multiple surgeries.

Data Element Viewing Using MedWISE

Users were scheduled for two-hour sessions and compensated $100 for their efforts. Users were told (via oral and printed instructions) to assume that they would be taking over care of the patient and to use MedWISE in any way they wished to familiarize themselves with the patient’s condition and state their assessment, diagnoses, and plan.

Data were recorded using Morae video-analytic software (Techsmith, Okemos, Michigan). Recordings included user screen actions and speech.18 The recorded screen actions provided the data for calculating the number of repeat views of data elements.

Data Element Viewing Using the Conventional EHR

We extracted three years of log files for the same four patient cases and same user roles (e.g., residents) as with MedWISE. WebCIS log files provide granular information about user behavior, including which specific data elements were viewed (at the level of individual laboratory result panels, note types, study reports, etc.). They permit identification of individual users and patients and the point in time an element was accessed, and thus how users viewed data throughout a session. A “session” was defined as “continuous use of the system by a user for a patient case with no more than 30 minutes of inactivity.”
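The session definition above can be illustrated with a minimal Python sketch. This is not the analysis code used in the study; the event tuple layout `(user, patient, timestamp, element)`, the function name `split_into_sessions`, and the sample log entries are all hypothetical, chosen only to show how a 30-minute inactivity threshold partitions a log.

```python
from datetime import datetime, timedelta
from itertools import groupby

# Inactivity threshold taken from the session definition in the text.
SESSION_GAP = timedelta(minutes=30)

def split_into_sessions(events, gap=SESSION_GAP):
    """Group (user, patient, timestamp, element) events into sessions.

    A new session starts when the user or patient changes, or when more
    than `gap` elapses between two consecutive views by the same user
    for the same patient.
    """
    ordered = sorted(events, key=lambda e: (e[0], e[1], e[2]))
    sessions = []
    for _, group in groupby(ordered, key=lambda e: (e[0], e[1])):
        current = []
        for event in group:
            if current and event[2] - current[-1][2] > gap:
                sessions.append(current)
                current = []
            current.append(event)
        sessions.append(current)
    return sessions

# Hypothetical log entries (made-up users, times, and element names).
t0 = datetime(2010, 1, 1, 9, 0)
log = [
    ("u1", "p1", t0, "index note"),
    ("u1", "p1", t0 + timedelta(minutes=5), "chem panel"),
    ("u1", "p1", t0 + timedelta(minutes=50), "index note"),  # 45-minute gap
    ("u2", "p1", t0 + timedelta(minutes=6), "index note"),
]
sessions = split_into_sessions(log)
print([len(s) for s in sessions])
```

In this sketch a gap of exactly 30 minutes keeps two views in the same session, which is one reading of "no more than 30 minutes of inactivity."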

Data Analysis Procedures

Data Element Viewing Using MedWISE

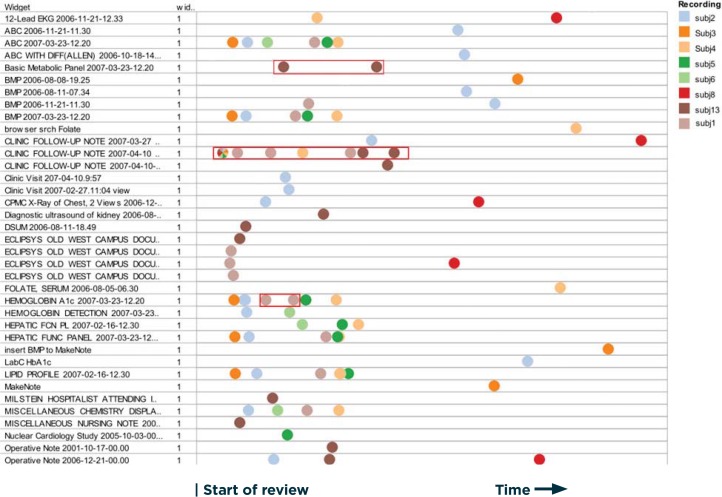

Based on user interactions as captured in Morae, all user data-element viewing for each case was plotted on the same axes (data element versus time) as “swim lanes,” to allow comparison of different user actions for the same case. Here swim lanes show the time course of access for each element as a horizontal row. This allowed us to count the number of repeat views in MedWISE sessions for each user by case (Figure 3) and to tabulate the total number of repeat views.

Figure 3.

Partial Swim Lane Representation of Users’ Paths Through the EHR For Case 2

Notes: The horizontal axis is time; the vertical axis is the list of elements. Each color represents one subject. Each dot represents one view of that information element. By following dots of one color we can see the sequence of what that user viewed. If two dots of the same color appear in the same row, that user viewed the same information more than once. Examples are outlined in red. Subjects 1 and 13 repeated viewing the same element (particularly the index note, Clinic Follow-up Note 2007-04-10).

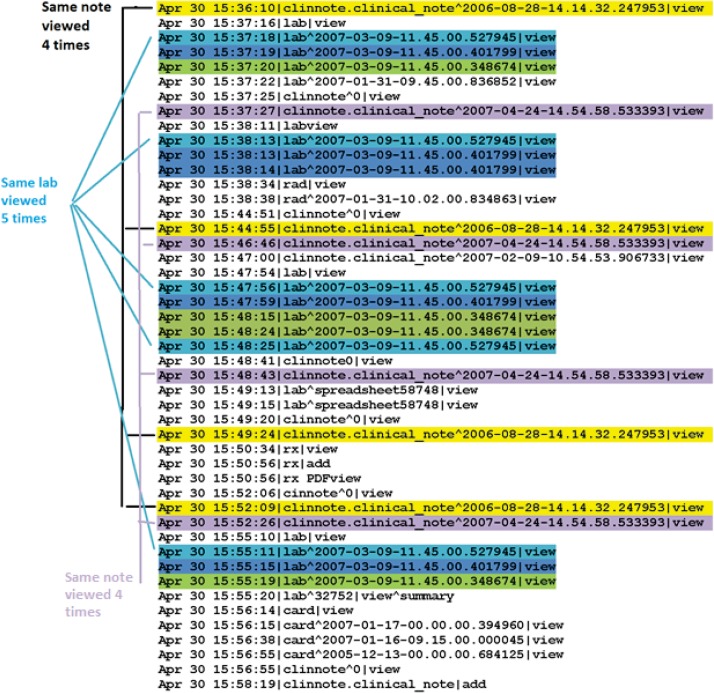

Data Element Viewing Using Conventional EHR

Log files from conventional EHR use were examined, and repeat views during a session were counted manually. Figure 4 shows an example of how log files reveal repeat views of the same elements within a session.
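Although the counting in this study was done manually, the same tally could in principle be automated. The following Python sketch assumes that every view of an element after its first view within a session counts as one repeat view; that counting rule, the function names, and the sample element names are illustrative assumptions rather than the authors' procedure.

```python
from collections import Counter

def count_repeat_views(session_elements):
    """Return (total_views, repeat_views) for one session.

    Assumption: each view of an element after its first view in the
    session counts as one repeat view.
    """
    counts = Counter(session_elements)
    total = sum(counts.values())
    repeats = total - len(counts)
    return total, repeats

def proportion_of_sessions_with_repeats(sessions):
    """Fraction of sessions containing at least one repeat view."""
    with_repeats = sum(1 for s in sessions if count_repeat_views(s)[1] > 0)
    return with_repeats / len(sessions)

# Hypothetical sessions, each an ordered list of viewed elements.
session_a = ["index note", "chem panel", "index note", "CBC", "index note"]
session_b = ["index note", "CBC"]

total_a, repeats_a = count_repeat_views(session_a)
share = proportion_of_sessions_with_repeats([session_a, session_b])
print(total_a, repeats_a, share)
```

The second function corresponds to the session-level measure used for hypothesis testing (the proportion of case sessions with at least one repeat view), while the first gives the view-level tally.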

Figure 4.

WebCIS Log File Extract Showing Repeated Elements Highlighted

Notes: Each color represents a different specific element, and one can see an interdigitating pattern of note and laboratory result viewing. Some elements were viewed four or more times during the 20-minute session.

Hypothesis Testing

The numbers of repeat views in MedWISE and WebCIS sessions were compared using Fisher’s exact test.

Results

Sample

The sample for MedWISE repeat views comprised resident physicians (n=9), one attending physician, and one physician assistant, with an average of 2.5 years of service at NYP-CUMC, 3.3 years of experience in their fields, and 2.4 years of experience using WebCIS. They had an average 2.7 years of experience using other commercial EHRs. Eight of the 11 clinicians rated themselves above average in computer knowledge, with one self-rating as “expert.” All but one used social media. The 11 clinicians produced 41 case sessions. Extraction of log files for the same 4 patients for 3 years yielded 175 case sessions for analysis.

Data Element Viewing Using MedWISE

A total of 589 elements were viewed in the 41 sessions, of which 7 (1.1 percent) were repeat views. The number of data elements viewed per session ranged from 1 for Case 1 to 36 for Case 4 with a mean of 17.03 per case. The number of data elements for all cases varied by clinician ranging from 36 to 107 with an average of 64. The number of case sessions with repeat views was 6 (14.6 percent). By case, the numbers of repeats were as follows: Case 1 (n=1), Case 2 (n=2), Case 3 (n=1), and Case 4 (n=2). Five clinicians had no repeat views.

Data Element Viewing Using Conventional EHR

Log file analysis revealed that conventional EHR users adopt a general pattern of starting with an index note, and then viewing other parts of the record. They often revisited the index note, alternating with viewing other results (e.g., lab panels) repeatedly. The total number of element views was 4,150, of which 718 (17 percent) were repeat views. The number viewed per session ranged from 2 for Case 1 to 117 for Case 3 with a mean of 20.1 per case. The total number of case sessions with repeat views was 127 (72.6 percent). By case, the numbers of repeat views were as follows: Case 1 (n=60), Case 2 (n=31), Case 3 (n=279), and Case 4 (n=348).

Comparing Systems

There was a significant (p<.0001) difference of 58 percentage points in the proportion of case sessions with repeat views between MedWISE (14.6 percent) and the conventional EHR (72.6 percent).
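As an illustrative check, the comparison can be recomputed from the session counts reported in the Results: 6 of 41 MedWISE sessions and 127 of 175 conventional EHR sessions contained repeat views. The Python sketch below implements a two-sided Fisher’s exact test with the standard library only. It is not the authors' analysis code; the function name and the choice of the two-sided "sum of tables no more probable than the observed one" convention are assumptions.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the same
    margins that are no more probable than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(lo, hi + 1)
               if prob(x) <= p_obs * (1 + 1e-7))

# Rows: MedWISE, conventional EHR. Columns: sessions with / without repeats.
medwise_with, medwise_total = 6, 41
webcis_with, webcis_total = 127, 175

p_value = fisher_exact_two_sided(
    medwise_with, medwise_total - medwise_with,
    webcis_with, webcis_total - webcis_with,
)
difference_points = 100 * (webcis_with / webcis_total
                           - medwise_with / medwise_total)
print(f"p = {p_value:.3g}, difference = {difference_points:.1f} points")
```

The resulting p-value falls well below the .0001 threshold, and the difference in proportions rounds to 58 percentage points.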

Discussion

As predicted, we observed a significant difference in repetitious accesses of the same element within the same session with MedWISE as compared to the conventional EHR. The sharp difference in repeat navigation with MedWISE supports the idea that the ability to juxtapose elements on the same screen allows comparisons (for example, comparing note sections with the related laboratory data). It also provides the necessary aggregation of information without the user having to retain information in working memory. It is consistent with the idea that conventional systems have the potential to overload working memory between screens, and that the composable interaction mechanism might serve to reduce cognitive load. In several studies, Kerne et al. found that this type of composition is also a form of information externalization. They found that allowing the user to organize information externally lets the user step back, compare information elements, and often reformulate the problem.19–22

This finding of increased navigation efficiency as measured by fewer repeat views of data elements is in concert with other studies’ findings that including the most relevant information on the same screen increases overall efficiency. Koopman’s comparison of a custom diabetes dashboard with the conventional EHR found significantly reduced average time (from 6.3 to 1.9 minutes, p<.001) for the dashboard, and a higher proportion of needed information retrieved.23 Staggers likewise found increased efficiency without decreased accuracy when all relevant information was presented on one screen.24 Koopman points out that inability to find relevant information within a short time is also a motivation for unnecessary test ordering. Thus efficiency improvements may have an economic impact beyond reducing staff time spent on EHR-related tasks.23

There are several other mechanisms by which a composable approach may contribute to overall efficiency. Information selection (filtering) done by users also increases the amount of relevant information presented in the same interface space. In theory, more cognitive resources (used in perception, attention, memory) should be available for diagnostic reasoning and other patient care cognitive tasks, since fewer are required for negotiating the interface or searching for needed information.

An additional mechanism for increased overall efficiency is, of course, the reduction in needed navigation by a population of users all creating and sharing interfaces for the same patient. Members of a team caring for the same patient re-view the same data elements repeatedly.25 In conventional systems each user session involves searching for those elements again. Thus in a composable system, larger scale efficiencies are possible when only the first user has to search for information, composes the relevant elements together, and shares the interface. Subsequent users can then view those elements presented in the composed interface with little need to search. The potential reduction in navigation actions via this mechanism is substantial (56 percent to 93 percent in our results). Composable approaches can also serve to increase efficiency by diminishing display fragmentation, an identified cause of errors.26

Lessons Learned and Possible Replication Elsewhere

Use of log files to allow comparison of systems that otherwise cannot be tested together constitutes a novel hybrid approach. It allows partial reconstruction of past user actions. In general, log file analysis is a viable approach to discovering patterns of use or interaction. A user-composable system may enhance user experience by reducing extraneous and time-consuming navigation.

Limitations

The limitations of this work include the small number of subjects in the laboratory study and one study setting (though with data from two major medical centers), which limits generalizability. Most clinician subjects were in a training phase (residents). The fact that the majority of subjects were residents may have affected the results inasmuch as residents are generally younger than the general clinician population and were perhaps more familiar with (then) new web-based forms of interaction and social media. It is also possible that there was a self-selection bias since participation was voluntary. As the patients were not those assigned to the clinicians in real life, clinician cognitive investment in using the system may differ from that of clinicians in a real clinical practice situation. Although we assume that the log files offer a realistic basis of comparison, we cannot know the specific context of their use that may influence repeat navigation. On the other hand, the use of real patient records and realistic tasks were strengths. Moreover, the log files reflected actual clinical practice rather than a laboratory experiment.

Conclusion

Clinicians spend an inordinate amount of time in both information-gathering and documentation tasks. We found that the composable interaction approach involves substantially fewer instances of repetitious navigation, with putative increase in efficiency due to time savings. An EHR that minimizes interaction complexity and reduces the amount of effort needed to negotiate the system may not only support more robust clinical reasoning, but may allow for a flourishing of creativity in support of coordinating clinical care. Of course, this hypothesis needs to be tested. However, it does present hope that future EHRs will be less stultifying and more enabling.

Acknowledgments

We greatly appreciate the time and effort of the participants in the study. This research was supported by NLM/RWJ ST15 LM007079-15 Research Training Grant (Senathirajah) and the Irving Institute for Clinical and Translational Research grant UL1RR024156 (Bakken).

Appendix

Table A1.

MedWISE Basic Features, Related Theory, Coding and Relationship to Care Process

| FEATURE/FUNCTIONALITY/MECHANISM | THEORY CONCEPTS | CODES AND BEHAVIOR FACILITATED | RELATIONSHIP TO DIAGNOSTIC AND CARE PROCESSES |
|---|---|---|---|
| Gather and spatially arrange any information elements from the EHR together on the same page, by click and drag. | DC, K, IS, CL, E | Identify information sources; arrange display elements to support procedure and prioritization, juxtaposition, data examination, exploration, explanation, hypothesis evaluation, discrepancy processing, metareasoning and summarization; assign regions for particular purposes. | Make relationships between variables, order according to diagnostic or treatment importance or relevance, communicate significance to self or colleagues, assist thinking, store data |
| Make and share custom lab panels from any user-selected labs. Likewise, share user-created tabs (page interfaces) containing collections of notes, lab panels, plots, RSS feeds, etc. Creators of shared elements are identified in the list for importing elements, so users may choose based on their informal knowledge of the authors’ specialty, expertise level, etc. | DC, P | Create display more exactly fitted to patient case or general needs; share this with colleagues; facilitate data examination, exploration, and discrepancy processing. | Display all elements of a patient case on one screen, facilitating thinking and decision-making without the need to navigate, thus speeding the process. |
| Set a tab containing user-gathered elements as a template so that labs in the page are automatically updated with new information as it becomes available. | P, communication, & collaboration | Automatic information updates, standardization. | Facilitate rapid and up-to-date case review in subsequent sessions; standardize process across sessions, patients, and clinicians; and communicate with colleagues. |
| Create multiaxis plots of any desired types of lab test values together on the same plot (a mashup) encompassing all available patient data; pan, and zoom from a years-long scale to minutes/seconds. | | Data examination, exploration and summarization, discrepancy processing. | Facilitate decision-making |
| Collapse and expand widgets; edit header colors and titles; view widgets full screen. | IS (marking, grouping, perception, choice, etc.) | Data identification, examination, exploration, marking, grouping according to topic or relevance, increasing perceptibility, summarization, data storage. | Facilitate reading notes or full-text journal articles; speed case review and decision-making. |
| Sticky note: A “sticky note” can be added for inserting text into the interface, with a customizable background and header color. | | Allows user to write notes or anything else desired in a widget. | Combining user-created text with other information on same screen. |
| RSS feed widget: Through a multistep process, a user can set up RSS feeds to appear in a widget. | | Inclusion of self-updating (therefore current) information (e.g., standing Medline search results) in a widely used format; and drill-down to full text journals in the interface. | Facilitating EBM and guidelines, alerting, etc. Any RSS feed allowed. Inclusion of diverse external information sources. |
| Mouse over lab results preview: Mousing over the left-hand lab menu link gives a preview of the lab panel. | | Allows user to preview widgets before inserting them into the tab, facilitates selection and mitigates the need to take action to remove unwanted widgets. | Decreases unnecessary actions in widget selection and placement; rapid information overview. |

Notes: DC=distributed cognition, K=Keyhole effect, E=epistemic action, CL=cognitive load, IS=intelligent uses of space, P=produsage, EBM=Evidence-based medicine

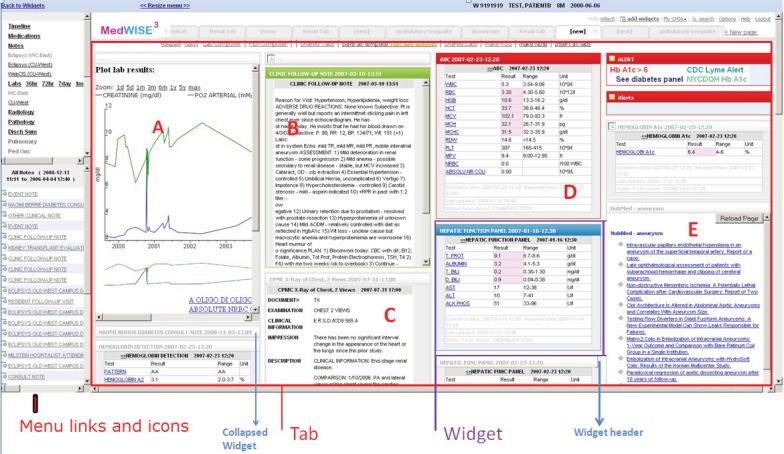

Figure A1.

MedWISE Screenshot

Clicking the left-hand menu inserts data items (as movable rectangles, or widgets) into the right-hand pane (tab). Area A is notes; area B is study reports; area C is laboratory results; area D is orders; and area E is RSS feeds. Users can gather and arrange together on the same page any elements of the clinical record. These interfaces are stored and can be shared. For safety, the usual EHR interaction is available and can be accessed by clicking the icons next to the menu links.

Definitions

A “widget” is a single draggable window containing an information display, such as a note, lab panel, RSS feed listing, or lab results plot. A “tab” is a navigational device that allows access to a single full screen—by clicking tabs at the top of the large right-hand pane. “Creating a tab” means populating it with widgets.

Users can create and share original widgets, for example, custom lab panels, which are created by dragging and dropping the lab tests chosen from the complete list of 908 lab tests that are used at this institution. They can also share the complete screen (tab) of widgets they assembled, which could include lab panels, RSS feeds, notes, user-created notes, user-created mashups of lab plots, orders, and so on. Thus a user could create a tab containing all the relevant information for a particular patient and share it, or set it as a template and share the template.

“Templates” are tabs in which the laboratory panels are self-updating (that is, when new results are available the screen automatically shows the newest information). Teams or specialties can set up templates (e.g., for renal function) that they use and share.

“Importing” a tab or widget is done by bringing up the “shared tabs” or “shared widgets” list and clicking a link; this opens the tab or inserts the widget into the current interface, respectively. Users can control the information density on screen according to their preferences, by distributing widgets over several tabs by dropping widgets onto other tabs.
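The relationships among widgets, tabs, templates, and importing defined above can be summarized as a small data model. The Python sketch below is purely illustrative and is not MedWISE's implementation; all class, field, and function names are hypothetical, and the choice to copy widgets on import (rather than reference the originals) is an assumption.

```python
from dataclasses import dataclass, field, replace
from typing import List

@dataclass
class Widget:
    """A single draggable window holding one information display."""
    kind: str                      # e.g. "note", "lab_panel", "rss_feed", "lab_plot"
    title: str
    collapsed: bool = False
    header_color: str = "default"

@dataclass
class Tab:
    """A full screen of widgets; a template is a tab whose lab panels self-update."""
    name: str
    owner: str
    widgets: List[Widget] = field(default_factory=list)
    is_template: bool = False

    def add(self, widget: Widget) -> None:
        # "Creating a tab" means populating it with widgets.
        self.widgets.append(widget)

def import_shared_tab(shared: Tab, new_owner: str) -> Tab:
    """Open a shared tab for another user, copying its widgets."""
    return Tab(
        name=shared.name,
        owner=new_owner,
        widgets=[replace(w) for w in shared.widgets],
        is_template=shared.is_template,
    )

# Hypothetical usage: one clinician builds a renal template, another imports it.
renal = Tab(name="Renal function", owner="clinician_a", is_template=True)
renal.add(Widget(kind="lab_panel", title="Custom renal panel"))
renal.add(Widget(kind="note", title="Clinic follow-up note"))

mine = import_shared_tab(renal, new_owner="clinician_b")
mine.widgets[0].collapsed = True   # local rearrangement leaves the original untouched
print(mine.owner, len(mine.widgets), renal.widgets[0].collapsed)
```

Copying on import lets each user rearrange or collapse widgets without changing what the original author shared, which is one plausible way to support the sharing behavior described.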

Video examples of MedWISE features in use.

Footnotes

Disciplines

Computational Engineering | Computer and Systems Architecture | Health Information Technology

