Abstract

Multiphoton microscopy has emerged as a ubiquitous tool for studying microscopic structure and function across a broad range of disciplines. As such, the intent of this paper is to present a comprehensive resource for the construction and performance evaluation of a multiphoton microscope that will be understandable to the broad range of scientific fields that presently exploit, or wish to begin exploiting, this powerful technology. With this in mind, we have developed a guide to aid in the design of a multiphoton microscope. We discuss source selection, optical management of dispersion, image-relay systems with scan optics, objective-lens selection, single-element light-collection theory, photon-counting detection, image rendering, and finally, an illustrated guide for building an example microscope.

1. Introduction: Why Multiphoton Microscopy?

In the relatively short two and a half decades since the first demonstration of a multiphoton microscope [1], multiphoton laser scanning microscopy (MPLSM) has grown into a vibrant and productive field. In particular, MPLSM has demonstrated its utility for noninvasive imaging deep within scattering media, such as biological tissue [2–10]. Additionally, multiphoton microscopy provides several contrast mechanisms, including two-photon excitation fluorescence (TPEF) [1,11,12], second-harmonic generation (SHG) [13–17], third-harmonic generation (THG) [18–21], sum-frequency generation (SFG) [22,23], stimulated Raman scattering (SRS) [24], and coherent anti-Stokes Raman spectroscopy (CARS) [25–29]. These contrast modalities are used to extract information about the structure and function of the specimen that is not available from other optical imaging techniques. The application base of multiphoton microscopy continues to grow, encompassing a broad range of basic science applications and clinical diagnostics [3,6,14,30–50].

Because of the utility of MPLSM, one can purchase complete commercial MPLSM systems from the source laser to the data-analysis software. However, this convenience can come at a cost. By making your own home-built MPLSM system, not only can you reduce the expense, you can ensure flexibility in your platform through your understanding of its construction and also by the use of easily replaceable and adjustable off-the-shelf components.

Examples of home-built multiphoton microscopes can be found in the literature [51]. Of particular note is the Parker laboratory’s home-built two-photon microscope [52–55]. Other examples demonstrate how to convert an existing confocal fluorescence microscope into an MPLSM [56].

Because of the broad application base for multiphoton microscopy, this paper will present a guide to designing and building an MPLSM system, well suited for TPEF, SHG, and THG imaging, from the selection of an ultrafast laser source to the image processing of the detected signal. With few exceptions, this microscope system will be assembled entirely from off-the-shelf components.

First, we will provide a brief history of microscopy and the developments that led to multiphoton microscopy. Section 2 will explain the advantages of multiphoton microscopy over previous methods of fluorescence microscopy, namely, confocal microscopy. Then Sections 3–10 will provide a thorough guide to microscope construction, from the selection of a laser source to a brief discussion of data analysis and an example build.

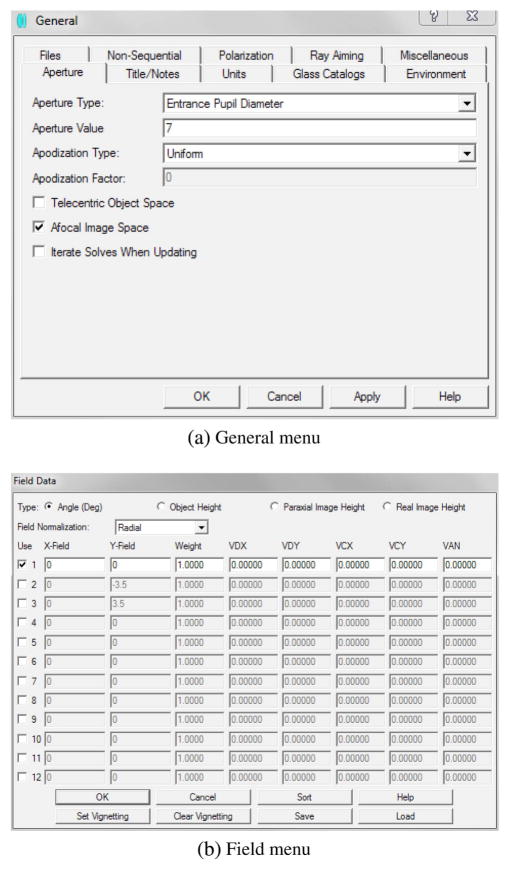

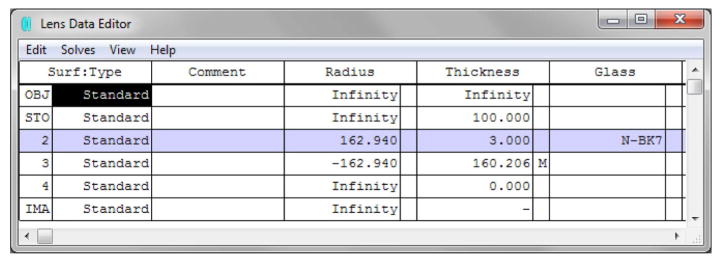

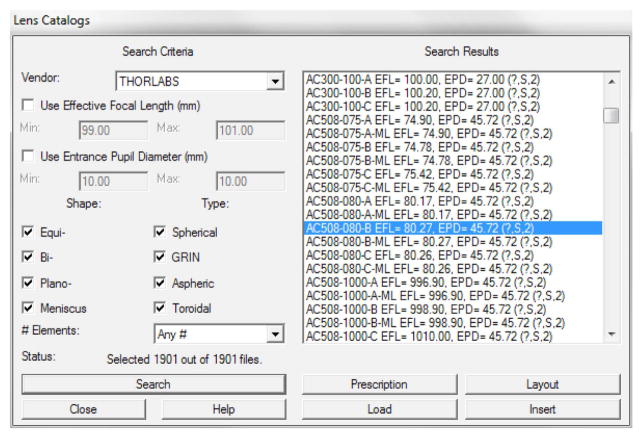

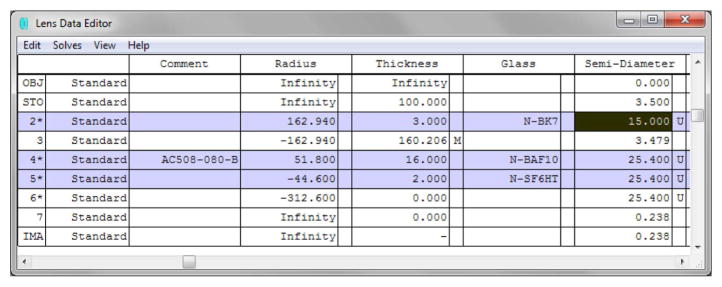

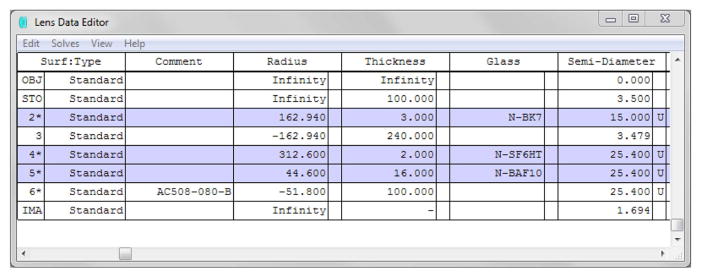

We employ a variety of software applications for designing a multiphoton microscope. Many of them have overlapping functionality, but each has its own advantages and drawbacks relative to the others. We present examples using ZEMAX [57] and Optica [58], two ray-tracing tools that we have used in our laboratory. Besides these, there are many other ray-tracing tools that vary in price and application. Some of these are Code V [59], FRED [60], LensLab and Rayica [58] (packages for Mathematica), OpTaliX [61], OPTIS [62] (a tool set for SolidWorks), OSLO [63], and VirtualLab [64].

2. A Brief History of Microscopy from Light to Multiphoton Microscopy

There are many excellent articles and texts that provide a thorough background on the history of microscopy, help to provide a framework for topics germane to microscopy, and describe how the different forms of the microscope came to be [65–73]. There are also excellent articles specifically about nonlinear [74], multiphoton [26,31,33,34,37,38,40,50,75–83], and ultrafast microscopy [78,84–91].

The field of microscopy began with simple and sometimes novel instruments that provided the ability to view the previously unobservable through improvements to resolution, contrast, and magnification (the ability to discriminate between two objects on the basis of distance, color or intensity, and image size, respectively). The human eye is capable of resolving objects as small as 0.1 mm (about the width of a human hair). The white-light microscope can resolve objects as small as 0.2 μm (a blood cell is about 7.5 μm in width [67,73]).

2.1. The Light Microscope

The development and history of the microscope are replete with contributions, independent developments, advancements, and improvements from many sources and places over the course of a few centuries. However, the use of lenses (or lens analogues) and curved mirrors can be traced as far back as the first century A.D. Interest in the fundamental structure of natural objects drove the development of the optical light microscope in the 16th and 17th centuries [92,93]. The invention of the single-lens microscope is attributed to the draper Antonie van Leeuwenhoek (1632–1723), who developed a technique for making small spherical lenses. These early microscopes were composed of a single lens that created a large virtual image of the sample, analogous to a magnifying glass or loupe [71,73,94,95]. Concurrent with the development of the single-lens microscope, and inseparable from the development of the telescope, was the invention of the bilenticular microscope, for which credit is given to Zacharias Janssen (1587–1638) and Hans Janssen (1534–1592).

Joseph Jackson Lister (1786–1869) developed the achromatic and spherical-aberration-free Lister objective. This was a landmark achievement that finally allowed multiple-element microscopes to produce higher-quality images than their single-lens counterparts. Up to this point, chromatic aberration had been the limiting factor for multilens microscopes. Giovanni Battista Amici (1786–1863) was the first to use an immersion fluid to increase resolution. He also used an ellipsoidal-mirror objective to avoid chromatic aberration, as proposed initially by Christiaan Huygens and Isaac Newton.

Humanity has been aware of, and has attempted to model with varying degrees of success, the phenomenon of refraction since at least the time of Ptolemy (ca. 90–ca. 168). However, early lenses were made by trial and error, so the quality of an optic depended on the skill and experience of the lens designer [96–98]. This trial-and-error process was supplanted by Ernst Abbe (1840–1906), who was the first to establish a theoretical framework for quantifying advances in microscope design and describing the role of diffraction in image formation. Abbe noticed that a larger front aperture in an objective often gave better resolution, though some aberration might be present. This observation led Abbe to formulate the numerical aperture (NA), which, together with the wavelength, yields the classical resolution limit:

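$$\mathrm{NA} = n \sin\theta, \tag{1}$$

$$d = \frac{\lambda}{2\,\mathrm{NA}}, \tag{2}$$

where n is the refractive index of the medium between the specimen and the objective, θ is the half-angle of the cone of light accepted by the objective (Fig. 1), λ is the wavelength, and d is the smallest resolvable separation between features.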

Equations (1) and (2) embody the fundamental notion of Abbe’s theory of image formation. The theory states that sample features, which represent a spectrum of spatial frequencies, behave like diffraction gratings. These features diffract the incident light at angles whose sine is inversely proportional to the feature size (see Fig. 1). To form an image, more than one diffraction order must be collected and focused, and the interference of these orders creates the image. If light is diffracted at too great an angle, it does not enter the objective, and image information below a certain feature size is lost [40,67,73,99–109].
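As a quick numerical illustration of Eqs. (1) and (2), the Python sketch below computes the NA from an immersion index and acceptance half-angle, evaluates the Abbe limit, and checks whether the first diffraction order of a grating-like feature enters the objective. The objective parameters are hypothetical, and the collection test assumes on-axis (normal-incidence) illumination of a grating immersed in the same medium; it is a sketch, not a model of any particular microscope.

```python
import math


def numerical_aperture(n: float, half_angle_deg: float) -> float:
    """Eq. (1): NA = n sin(theta), theta = half-angle of the acceptance cone."""
    return n * math.sin(math.radians(half_angle_deg))


def abbe_limit_nm(wavelength_nm: float, na: float) -> float:
    """Eq. (2): smallest resolvable period d = lambda / (2 NA)."""
    return wavelength_nm / (2.0 * na)


def first_order_collected(period_nm: float, wavelength_nm: float, na: float) -> bool:
    """On-axis illumination: a grating of period d sends its first order to
    n sin(theta_1) = lambda / d (lambda in vacuum). That order enters the
    objective, so the period can be imaged, only if lambda / d <= NA."""
    return wavelength_nm / period_nm <= na


if __name__ == "__main__":
    na = numerical_aperture(1.518, 67.5)  # hypothetical oil-immersion objective
    print(f"NA = {na:.3f}")
    print(f"Abbe limit at 500 nm: {abbe_limit_nm(500.0, na):.0f} nm")
    for period in (300.0, 400.0):
        print(period, first_order_collected(period, 500.0, na))
```

With on-axis illumination the cutoff period is λ/NA; the factor of 2 in Eq. (2) is reached only when the illumination also fills the objective aperture, so that the zero and first orders can sit at opposite edges of the pupil. In the example (NA ≈ 1.40), the 400-nm period is collected and the 300-nm period is not.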

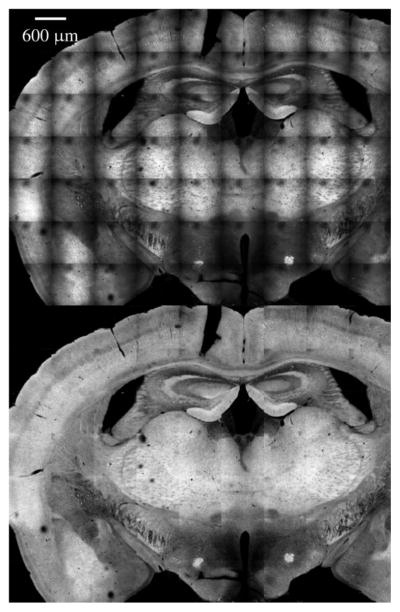

Figure 1.

Abbe’s theory of image formation explains how diffracted light from the specimen is collected by an objective lens. Light diffracted at a larger angle than the acceptance angle (θ) of the objective is lost and, thus, so is the spatial information associated with it.

Improvements to contrast came largely from interference techniques. One of the most notable was phase-contrast microscopy (PCM), developed by Frits Zernike (1888–1966). PCM converts the phase shifts that light acquires on traveling through different parts of the sample (i.e., different optical path lengths) into variations in image brightness [70,72,73,102,110].

2.2. The Fluorescent Microscope

The development of fluorescence microscopy provided additional contrast methods and also a means for improved specificity. Fluorescence microscopy was driven by two advances. The first was the discovery of both intrinsic (or endogenous) and synthetic fluorescent molecules. The second was advances in microscope design that used ultraviolet wavelengths (UV, 200–400 nm) as a means of improving resolution. The contrast of UV fluorescence microscopes was further improved by the addition of dark-field condensers and lateral illumination (a precursor to light-sheet illumination [9,111–116]). These techniques prevented the incident light from entering the microscope objective [67,69,102,117–121].

Fluorescence microscopy faces many of the same issues as all types of microscopy, namely noise, limited resolution, and optical aberration. It also faces challenges from UV damage to the specimen (i.e., absorption, leading to photodamage and photobleaching) and from scattering. Photodamage occurs because living cells readily absorb UV light [122]. This problem is only made worse by adding fluorescent probes. Photobleaching is the destruction of fluorescent molecules, in which the excitation illumination transforms them into nonfluorescent ones [73,123]. Visually, this appears as a continuous decrease in signal intensity. Scattering is a problem in thick samples, where the fluorescent light emitted by the probe is scattered on its way to the detector [6,124–130]. Likewise, the excitation beam may be scattered on its way to the focus. In either case, the result is a blurred image, decreased resolution, and a loss of contrast [5,127,131–133].

Marvin Minsky (1927–) invented the confocal microscope, which prevents out-of-focus fluorescent photons from reaching the detector and also provides improved lateral resolution [40,67,69,73,134–137]. The conventional design of the optical microscope images the entire field of view (FOV) simultaneously and must provide a high-quality image that is uniform across the field. This requirement is relaxed by allowing the microscope to image a single point at a time (for examples at the boundary between point scanning and wide-field imaging, see [138–141]). The trade-off is that the object, detector, or excitation light must now be scanned to build up an image. If the light from the object is collected by an objective and relayed to a single-element detector (e.g., a photodiode or photomultiplier tube), a significant improvement in the resolution of the optical system can be realized. This may be achieved with a pinhole aperture at the object or at a conjugate image plane [134,142]. Incidentally, although a pinhole aperture is not necessary for multiphoton microscopy, adding one can also improve resolution [143,144].

2.3. The Nonlinear Multiphoton Microscope

The obstacles with fluorescence confocal microscopy largely revolve around phototoxicity, limited imaging depth, out-of-focus flare, photobleaching, and difficulties implementing UV-based lasers and optical systems. Some of these problems can be minimized by transitioning to a multiphoton microscope [31,50].

Multiphoton microscopy falls within the broader field of nonlinear optics or nonlinear optical microscopy (NOM) [27,48,74,76,79,83,91,121,145]. Maria Göppert-Mayer established the theoretical foundation of two-photon quantum transitions in her 1931 doctoral dissertation [146]. Annotated English translations of Göppert-Mayer’s theory of two-quantum processes can be found in Masters and So [147].

With single-photon fluorescence microscopy, the energy of the incident photon must match the energy needed to transition a fluorophore from its ground state to an excited state. With multiphoton (in this case, TPEF) microscopy, two near-infrared photons, each carrying half of that energy, can excite the fluorophore provided that they are absorbed within the same quantum event (within $10^{-16}$ s [37,148]). Denk, Strickler, and Webb demonstrated the first two-photon microscope in 1990 [1]. Multiphoton microscopy reduces phototoxicity by using lower-energy photons and limiting their absorption to a small focal region, as defined by the NA of the objective, where a multiphoton quantum transition is probable (see Fig. 2; see also [27,44,50] for additional diagrams of nonlinear optical microscopy modalities). This small focal volume also eliminates the need for the pinhole aperture used in confocal microscopy. The poorer axial sectioning expected from working at longer wavelengths is largely offset by the two-photon process itself, for which the signal declines as $1/r^4$ with distance r from the focus; for a single-photon process, the signal declines as $1/r^2$. For higher-order processes, the signal declines as $1/r^{2N}$, where N is the order [1,69,149].
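The $1/r^{2N}$ law follows from a simple geometric argument: away from the focus, the focused cone spreads so that the intensity falls roughly as $1/r^2$, and an N-photon excitation rate scales as the N-th power of intensity. The Python sketch below, assuming that far-from-focus geometric picture (r in units of a reference distance outside the focal volume; scattering, absorption, and saturation ignored), also integrates over each transverse plane to show why single-photon excitation gives no intrinsic optical sectioning while two-photon excitation does.

```python
def excitation_density(r: float, order: int) -> float:
    """Relative N-photon excitation rate per unit volume at distance r
    from the focus, with intensity I ~ 1/r^2 and rate ~ I^N."""
    intensity = 1.0 / r**2
    return intensity**order


def signal_per_plane(r: float, order: int) -> float:
    """Excitation summed over a transverse plane, whose beam area grows as r^2."""
    return r**2 * excitation_density(r, order)


if __name__ == "__main__":
    for r in (1.0, 2.0, 5.0, 10.0):
        print(f"r = {r:>4}: 1P per plane = {signal_per_plane(r, 1):.3f}, "
              f"2P per plane = {signal_per_plane(r, 2):.3f}")
```

Every plane contributes equally in the single-photon case (the per-plane signal stays at 1), whereas the two-photon per-plane signal falls as $1/r^2$, so out-of-focus planes contribute little.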

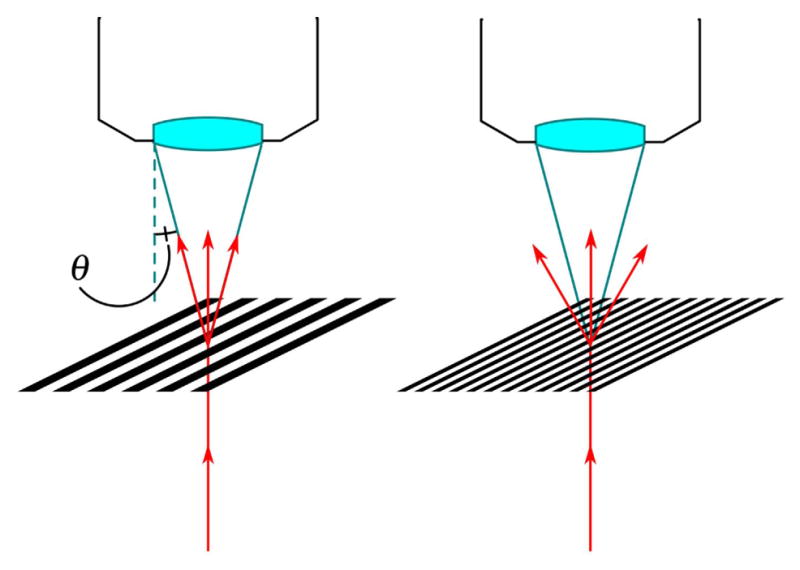

Figure 2.

Jablonski diagram of a two-photon transition and representation of single- and two-photon absorption in a sample.

In addition, photons in the near-infrared penetrate deeper (as far as 500 μm [5,8,150,151]) into the sample and thus provide great utility for deep tissue imaging.

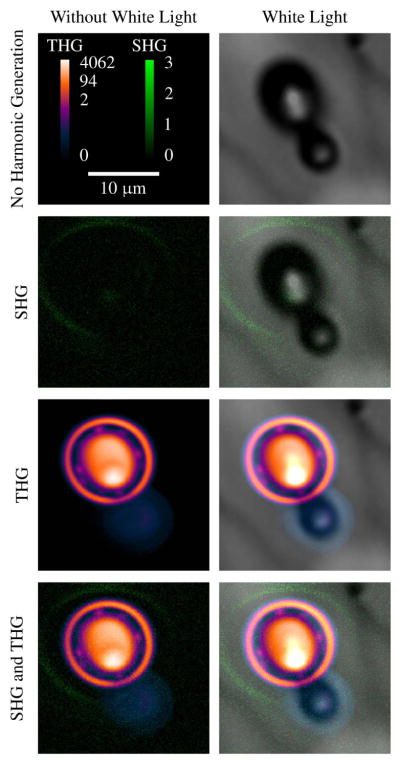

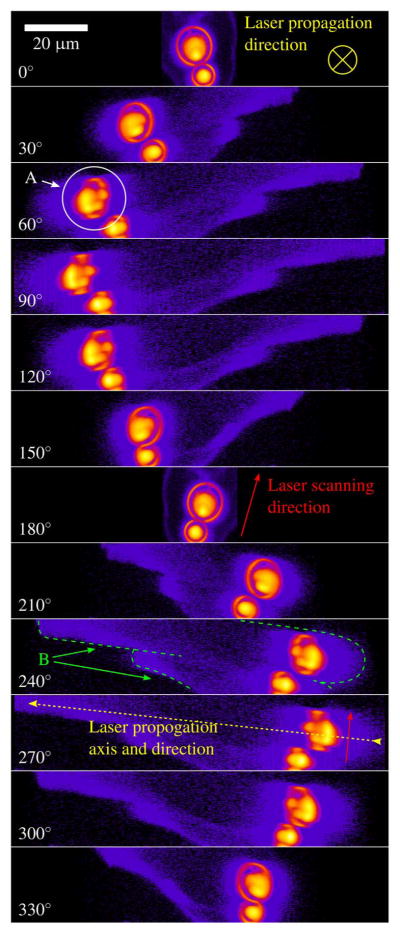

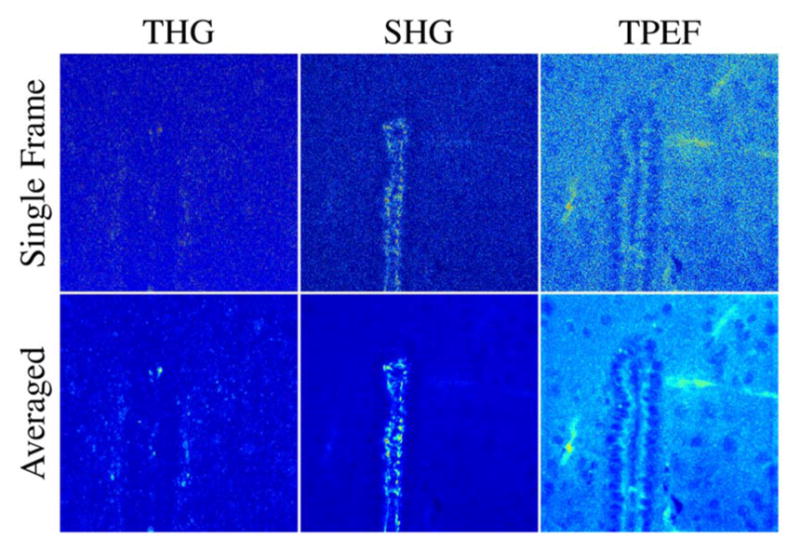

TPEF represents only one nonlinear optical modality. In this paper we will present examples of TPEF and also SHG and THG images. Some other nonlinear optical modalities, as previously mentioned, are SFG, SRS, and CARS.

2.4. Multiphoton Laser Scanning Microscopy

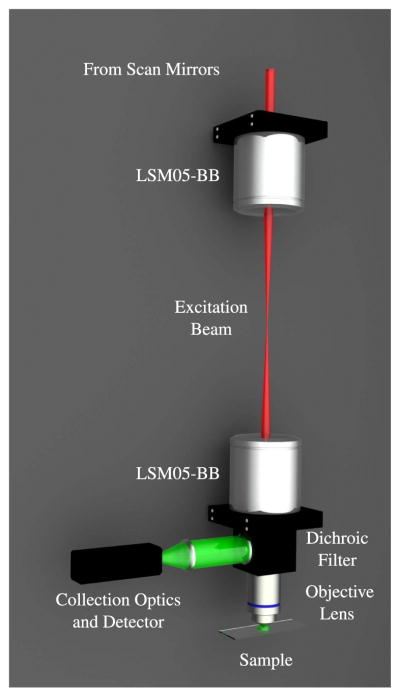

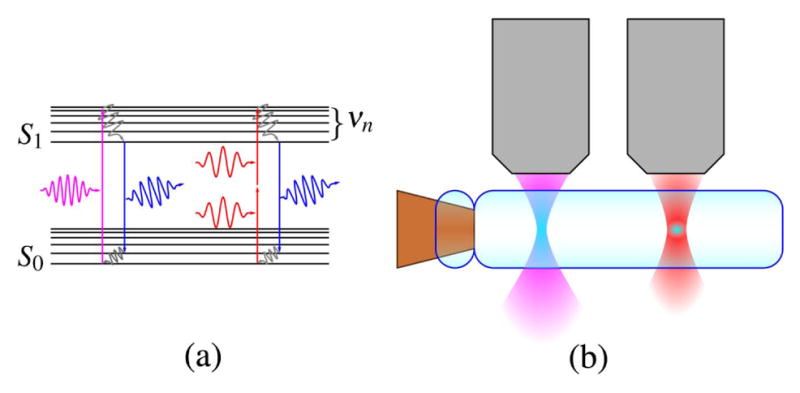

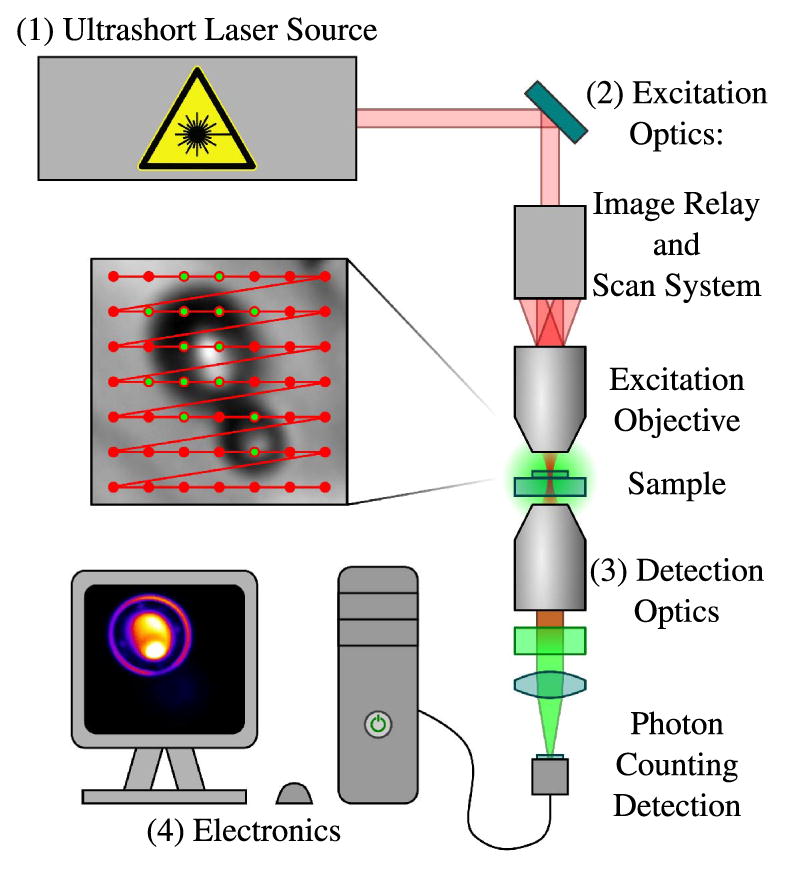

An MPLSM system, as seen in Fig. 3, can be neatly divided into four parts: (1) an ultrafast pulsed-laser source; (2) the excitation optics, which are responsible for beam routing, pulse shaping, and focusing of the pulse train; (3) the detection optics, which are responsible for collecting the emitted contrast signal; and (4) the electronics that quantify the measured signal and store the data for later use.

Figure 3.

Multiphoton laser scanning microscope: (1) an ultrafast pulsed-laser source, (2) excitation optics, which may include scan optics, (3) detection optics, and (4) electronics for data storage and processing.

3. Sources

Nearly concurrent with the first applications of multiphoton laser scanning fluorescence microscopy [1] was the development of stable intracavity mode locking of femtosecond pulses in titanium sapphire (Ti:Al₂O₃ or Ti:sapphire) solid-state lasers [86,87,89,152–156]. This ultimately established Ti:sapphire oscillators as the primary platform for nonlinear microscopy. Often referred to in the literature as the “workhorse” of ultrashort-pulse lasers, the Ti:sapphire laser is still the most widely used source for nonlinear microscopy two decades later [11,126,157,158]. Besides the Ti:sapphire laser, there are many other sources available for multiphoton microscopy that have been reviewed in the literature [33,126,149,158–161]. For imaging modalities like CARS and SRS, a multibeam system, usually with adjustable convergence and wavelength, is required. These techniques and their associated sources are more complex. However, the source laser can often be built on top of an existing multiphoton microscope (Ti:sapphire is a common example [162,163]) through the use of an optical parametric amplifier [164].

The science of ultrashort-pulse laser sources for biological imaging applications has been driven by a number of considerations, including the desire to target fluorophores outside the 800–1000-nm center-wavelength range of a typical Ti:sapphire laser, the need to push to longer wavelengths to increase penetration depth in scattering media, and the demand for portable, rugged, and affordable clinical microscopy platforms.

In the past decade, the Yb³⁺ ion has been shown to be a promising dopant in a variety of crystal hosts used in rare-earth-ion diode-pumped solid-state femtosecond lasers operating in the 1-μm wavelength range [159]. A thorough review of the spectroscopic properties of many Yb³⁺-doped hosts, including aluminates, borates, fluorophosphates, sesquioxides, and tungstates, can be found in [165]. The most common Yb³⁺-doped gain media are the three double tungstates: Yb³⁺:KGd(WO₄)₂, or KGW; KY(WO₄)₂, or KYW; and KLu(WO₄)₂, Yb:KLuW, or KLuW, all of which exhibit similar spectroscopic properties. A significant amount of work has been done in the past decade to fully characterize KGW and KYW crystal properties and growth techniques [166,167]. KLuW, however, was introduced more recently as a promising laser crystal host [168] and is just starting to gain a foothold among research groups exploring Yb³⁺-doped double tungstates.

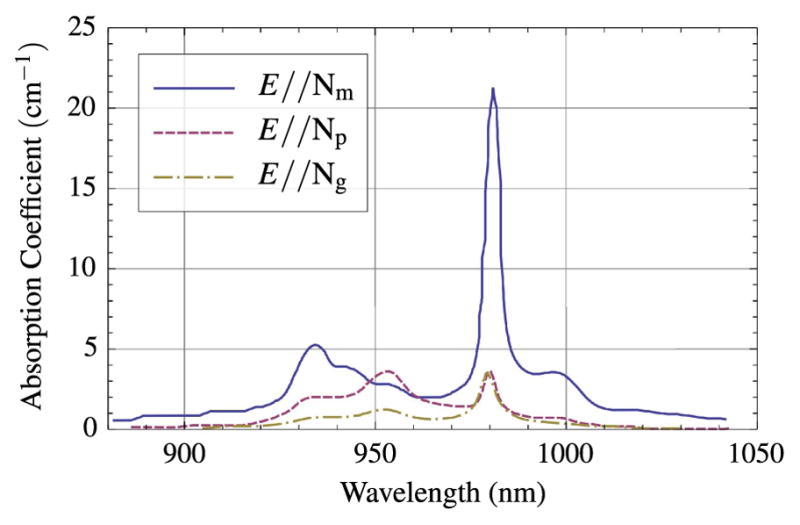

Owing to its broad gain bandwidth, decades of oscillator development, and, most recently, the demonstration of a cost-effective direct diode-pumped system [169,170], Ti:sapphire will likely remain the workhorse of ultrashort-pulse science and nonlinear microscopy for years to come. However, as mentioned above, KGW (and its sister crystal hosts) offers an attractive alternative, or better yet, a complement to Ti:sapphire-based nonlinear microscopy systems, and it opens the door to a very inexpensive means of imaging at a longer excitation wavelength (1020–1040 nm) and at higher average powers directly from the oscillator.

In general, the advantage of Yb³⁺ lasers is that pump light is converted into laser light very efficiently and with relatively little heat production. This efficiency stems from a variety of properties that are typically discussed relative to those of Nd³⁺-based lasers, since these also emit at just over 1-μm wavelength. Advantageous properties of Yb³⁺-doped double tungstates include large absorption and emission cross sections, an emission bandwidth sufficiently broad for ultrashort pulse generation, and a small laser quantum defect (~6%). The quantum defect, $1 - \lambda_p/\lambda_e$, where $\lambda_p$ and $\lambda_e$ are the pump and emission wavelengths, is the fraction of the absorbed pump photon energy that is converted to heat because of the difference between the absorbed and emitted photon energies. Heat generated inside the gain medium can degrade laser output power, beam quality, and oscillator stability [171]. Yb³⁺-doped double tungstates also benefit from the absence of excited-state absorption, cross relaxation, and energy-transfer upconversion [172]. These three mechanisms are well-known challenges with many Nd³⁺-doped materials; they provide alternate pathways for depopulating the upper laser level and thus reduce efficiency. Additionally, a strong absorption line near 980 nm (Fig. 4) means that Yb³⁺-based laser materials can be pumped by InGaAs diode lasers, which are commonly used by the telecommunications industry and are commercially available in compact, high-output-power, relatively inexpensive, turnkey configurations. The development of Yb:KGW-based lasers is an area of active research and development [85,159,165,173–178], and Yb:KGW has been demonstrated as a viable source for nonlinear microscopy [179,180].
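The quantum-defect definition above reduces to one line of arithmetic. The Python sketch below evaluates it for Yb:KGW pumped at its 981-nm absorption line and lasing near 1040 nm, and, purely for illustration, for the familiar Nd:YAG case (808-nm pump, 1064-nm emission), which is not discussed in the text. The heat-load helper assumes ideal conversion (one laser photon per absorbed pump photon, no other loss channels), and the 20-W absorbed power is a hypothetical value.

```python
def quantum_defect(pump_nm: float, laser_nm: float) -> float:
    """Fraction of each absorbed pump photon's energy left as heat: 1 - lambda_p / lambda_e."""
    return 1.0 - pump_nm / laser_nm


def quantum_defect_heat_w(absorbed_pump_w: float, pump_nm: float, laser_nm: float) -> float:
    """Heat deposited by the quantum defect alone, assuming ideal photon conversion."""
    return absorbed_pump_w * quantum_defect(pump_nm, laser_nm)


if __name__ == "__main__":
    print(f"Yb:KGW, 981 -> 1040 nm: {quantum_defect(981, 1040):.1%}")
    print(f"Nd:YAG, 808 -> 1064 nm: {quantum_defect(808, 1064):.1%}")
    # Hypothetical 20 W of absorbed pump power.
    print(f"Quantum-defect heat from 20 W absorbed: {quantum_defect_heat_w(20, 981, 1040):.2f} W")
```

The Yb:KGW value (about 5.7%) matches the ~6% quoted above and is roughly a quarter of the Nd:YAG figure.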

Figure 4.

Room-temperature absorption spectra for Yb³⁺:KGW for polarizations parallel to the principal refractive-index axes Nm, Np, and Ng.

In this section, we discuss the general characteristics of some of the common commercial systems that are available for nonlinear microscopy. The years of optical science and engineering behind today’s commercial systems make them well-suited “black-box” sources for research laboratories that focus exclusively on the biological questions that nonlinear microscopy opens up. That said, many laboratories are finding it advantageous to design and build their own ultrashort-pulse laser sources. Cost, flexibility, and maintenance are a few of the more pragmatic motivations for home-built systems. In addition, the opportunity to take part in the collective effort to advance short-pulse lasers as imaging tools is increasing the number of laboratories that design lasers specifically to fit their research, rather than adapting their research to the output characteristics of their laser. As such, we also present design and construction considerations for building your own source. Specifically, we discuss in detail a direct diode-pumped, home-built Yb:KGW ultrashort-pulse oscillator that is relatively inexpensive and a practical first-time build suitable for a variety of nonlinear imaging applications.

3.1. Commercial Sources

3.1a. Ti:sapphire

There are commercial Ti:sapphire systems available to meet just about any biological imaging need. For example, broadly tunable oscillators with outputs ranging from less than 700 nm to well over 1000 nm can produce average power levels up to 2 W and pulse durations between 70 and 100 fs. These are desirable characteristics for a nonlinear microscopy platform given that they can generate output powers necessary to image more deeply in scattering tissue than early-generation Ti:sapphire lasers. They have short pulse widths for high peak power and they are generally operated through user-friendly interfaces. Though commercial platforms generating sub-10-fs pulses are available, these systems have generally been considered to be less than ideal for nonlinear microscopy applications because, at the requisite bandwidth, dispersion management presents a challenge that in most cases outweighs the significant increase in peak power. However, with careful compensation of higher order dispersion, these very short pulses have produced some significant imaging advantages [85,181,182].
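The link between pulse width and peak power can be made concrete with a few lines of arithmetic. The Python sketch below takes the 2-W average power and 70–100-fs pulse widths quoted above, assumes an 80-MHz repetition rate (a typical oscillator value, not stated in the text), and assumes a sech² pulse shape, for which peak power ≈ 0.88 × pulse energy / FWHM duration. It also evaluates the standard figure of merit for the time-averaged two-photon signal, which scales as $P_{avg}^2/(f_{rep}\,\tau)$.

```python
SECH2_PEAK_FACTOR = 0.88     # P_peak = 0.88 E / tau for a sech^2 pulse (tau = FWHM)
GAUSSIAN_PEAK_FACTOR = 0.94  # the same relation for a Gaussian pulse


def pulse_energy_j(avg_power_w: float, rep_rate_hz: float) -> float:
    """Energy per pulse = average power / repetition rate."""
    return avg_power_w / rep_rate_hz


def peak_power_w(avg_power_w: float, rep_rate_hz: float, fwhm_s: float,
                 shape_factor: float = SECH2_PEAK_FACTOR) -> float:
    """Peak power of a single pulse of the given FWHM duration."""
    return shape_factor * pulse_energy_j(avg_power_w, rep_rate_hz) / fwhm_s


def two_photon_figure_of_merit(avg_power_w: float, rep_rate_hz: float, fwhm_s: float) -> float:
    """Time-averaged two-photon signal scales as P_avg^2 / (f_rep * tau)."""
    return avg_power_w**2 / (rep_rate_hz * fwhm_s)


if __name__ == "__main__":
    p_avg, f_rep = 2.0, 80e6  # 2 W from the text; 80 MHz is an ASSUMED repetition rate
    for tau in (70e-15, 100e-15):
        print(f"tau = {tau * 1e15:.0f} fs: E = {pulse_energy_j(p_avg, f_rep) * 1e9:.0f} nJ, "
              f"P_peak = {peak_power_w(p_avg, f_rep, tau) / 1e3:.0f} kW")
```

At fixed average power, halving the pulse width doubles both the peak power and the expected two-photon signal. This is why short pulses are attractive, provided that dispersion can be managed.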

3.1b. Yb:KGW

Though Ti:sapphire systems clearly dominate the commercial market for ultrashort-pulse sources for nonlinear microscopy, one can purchase a turnkey Yb:KGW oscillator/amplifier system that can generate sub-500-fs pulses with average power of up to 4 W and repetition rates up to 7 kHz [183].

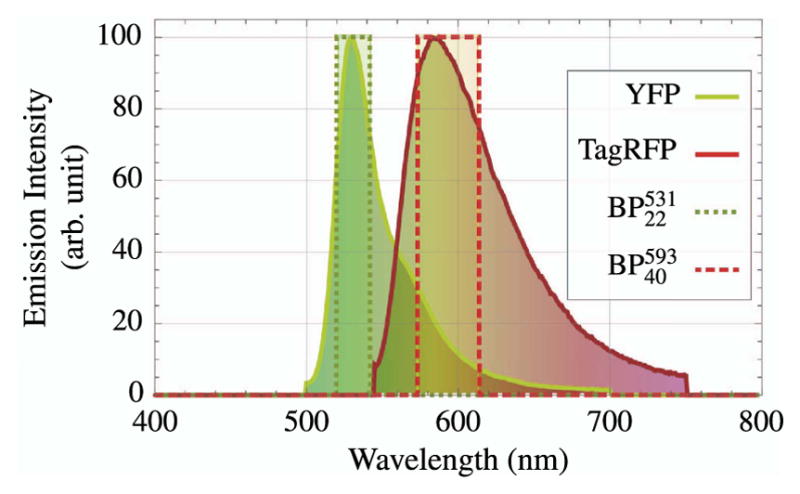

While Yb:KGW lasers are not broadly tunable by themselves (1030–1090 nm [173,184]), the addition of other components can increase the tunability dramatically. Optical parametric amplifiers [80], used in conjunction with Yb:KGW lasers, can be tuned from 620 to 990 nm [185] and from 1380 to 1830 nm [186]. Additionally, nonlinear fibers can create a supercontinuum from 420 to 1750 nm [175], which can in turn be used to build a broadly tunable source that generates sub-65-fs pulses from 600 to 1450 nm [187]. While Yb:KGW by itself is well suited to exciting fluorescent tags such as YFP and even DsRed [188], it has been shown to excite tags such as GFP efficiently through the use of a nonlinear fiber [189]. These tunable capabilities also present an opportunity to implement more imaging modalities, such as CARS and SRS [190].

Not discussed here, but clearly valuable laser sources for microscopy, are femtosecond fiber lasers [156,191–193] and fiber lasers used for SRS [194]. Fiber sources have recently been reviewed by Xu and Wise [161]. We have reproduced the results of Wise [195] with excellent results: our home-built fiber sources based on this design routinely produce 150-fs pulses with average powers greater than 0.5 W at 50–60 MHz.

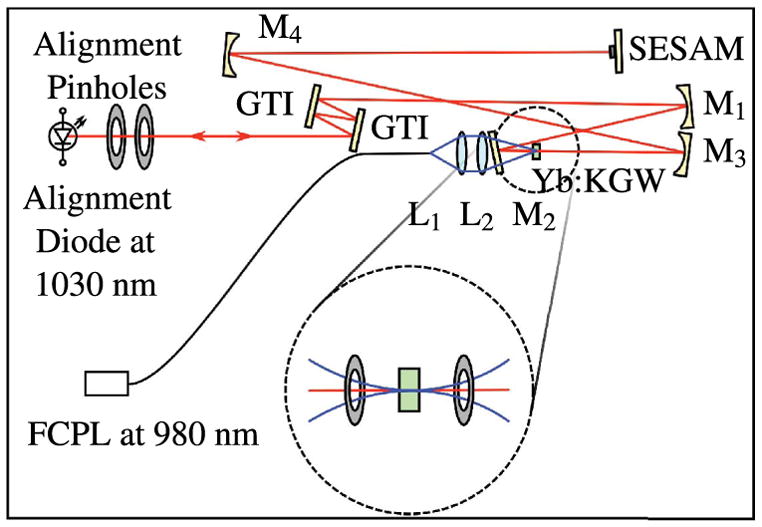

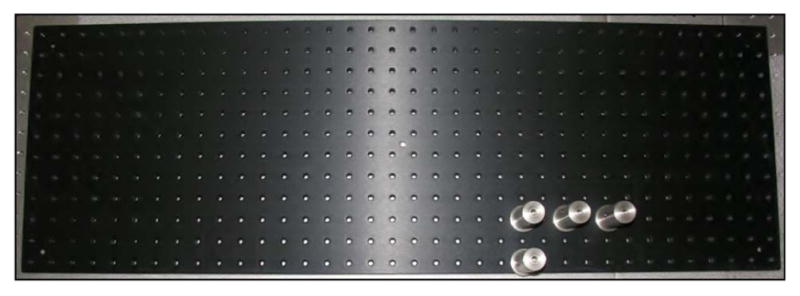

3.2. Building Your Own Source: an Example Home-Built KGW Oscillator

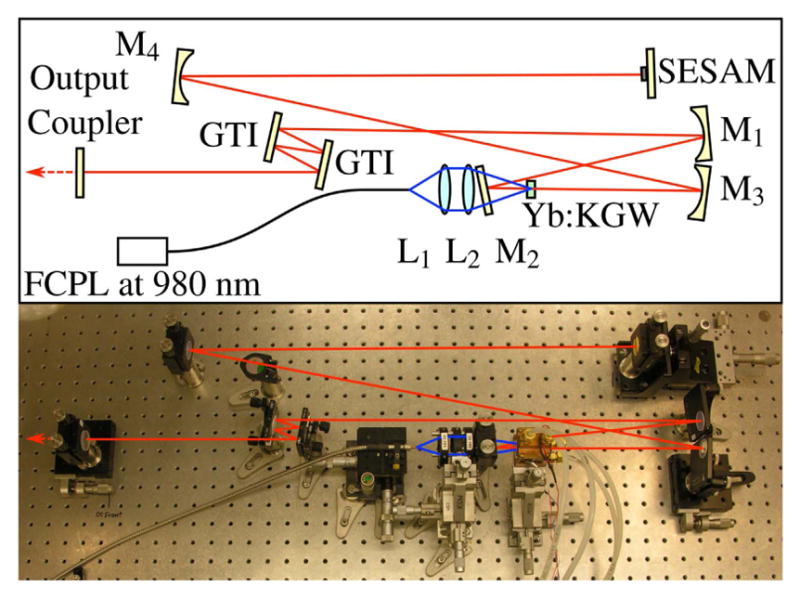

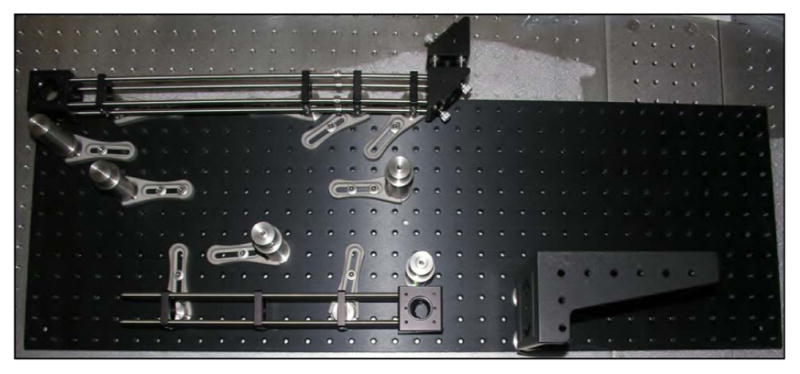

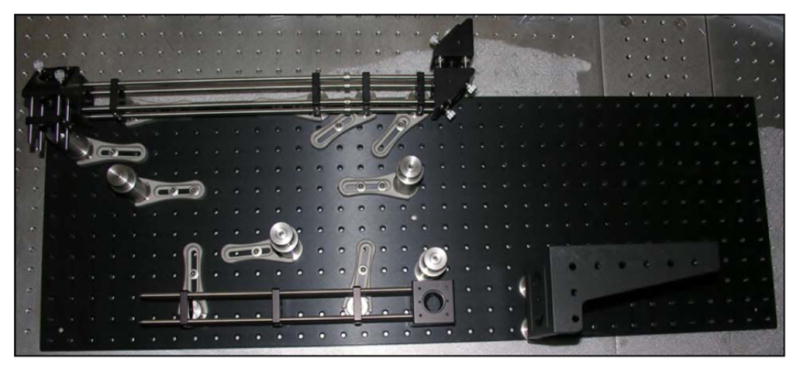

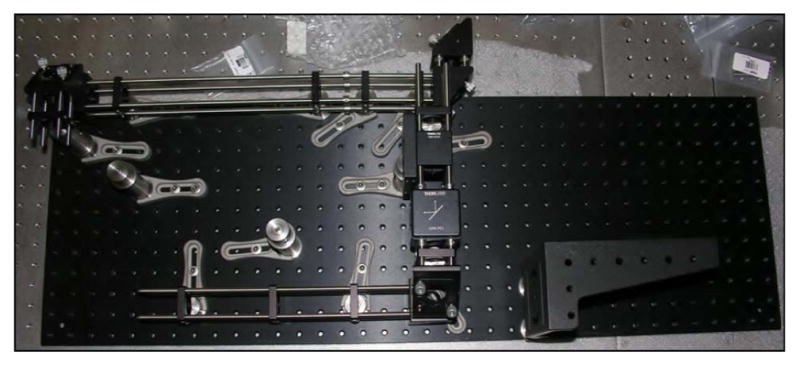

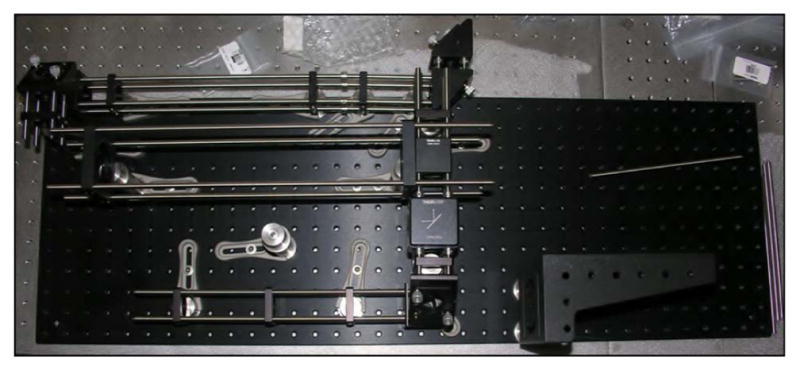

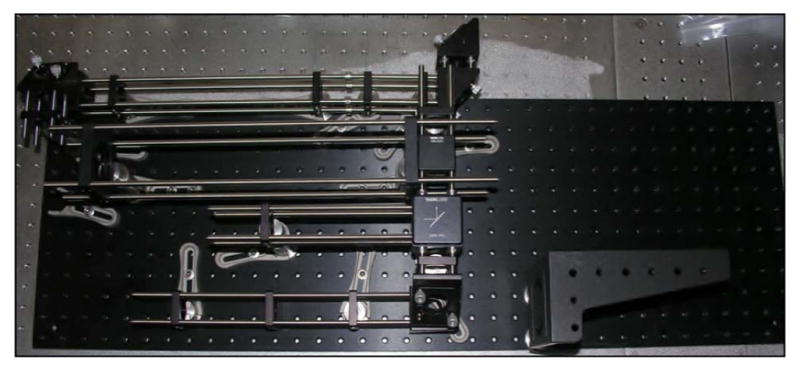

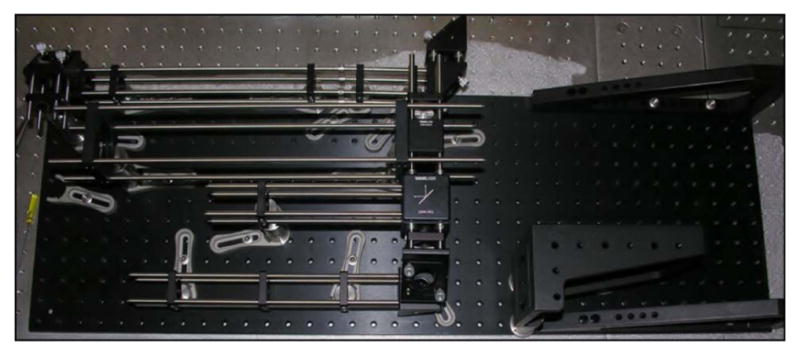

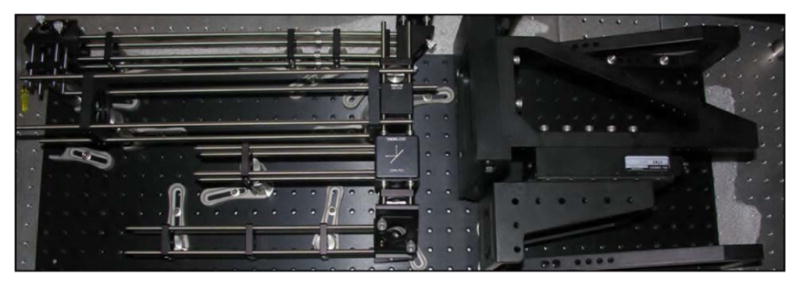

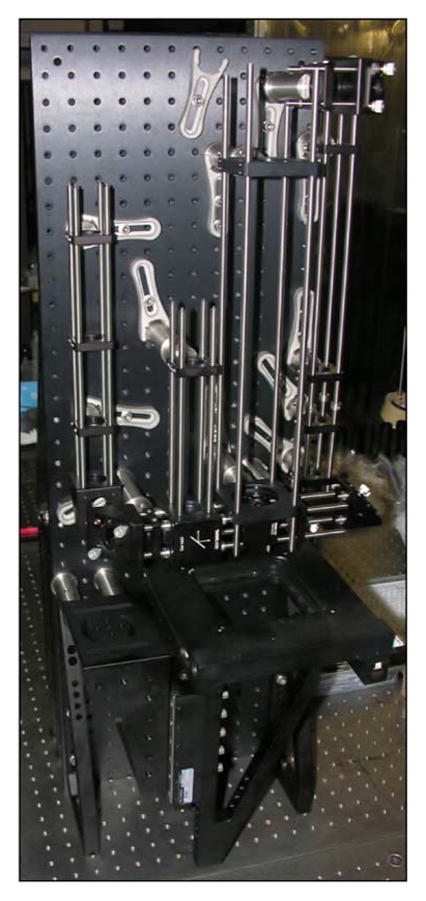

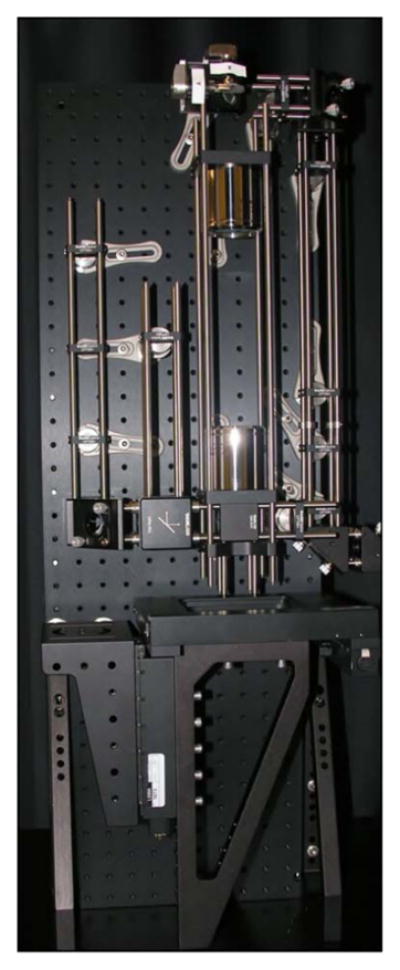

There are clear benefits to purchasing the proven commercial systems discussed above. They are reliable, easy to use, and increasingly tunable to a variety of applications. However, at roughly one-tenth of the cost, designing, building, and maintaining your own system is an option with tremendous advantages. In an optics laboratory equipped with a breadboard (3′ × 5′ is sufficient) or an optics table and a modest suite of general hardware and mounts, one can expect to invest on the order of $30,000 for a complete ultrashort-pulse KGW oscillator. The general design of our example KGW oscillator is based on a laser developed by Major et al. [184]. The layout of the oscillator is shown schematically (top) and in a photograph (bottom) in Fig. 5.

Figure 5.

(Top) Schematic representation of Yb:KGW oscillator layout. Mirrors are labeled M1–M4; GTI, Gires-Tournois interferometer; Output Coupler; SESAM, semiconductor saturable absorber mirror; FCPL, fiber coupled pump laser. (Bottom) Photograph of the oscillator in the laboratory. Lines depicting the pump beam (blue, center) and the laser beam (red) are graphically added to the photo.

3.2a. Gain Medium

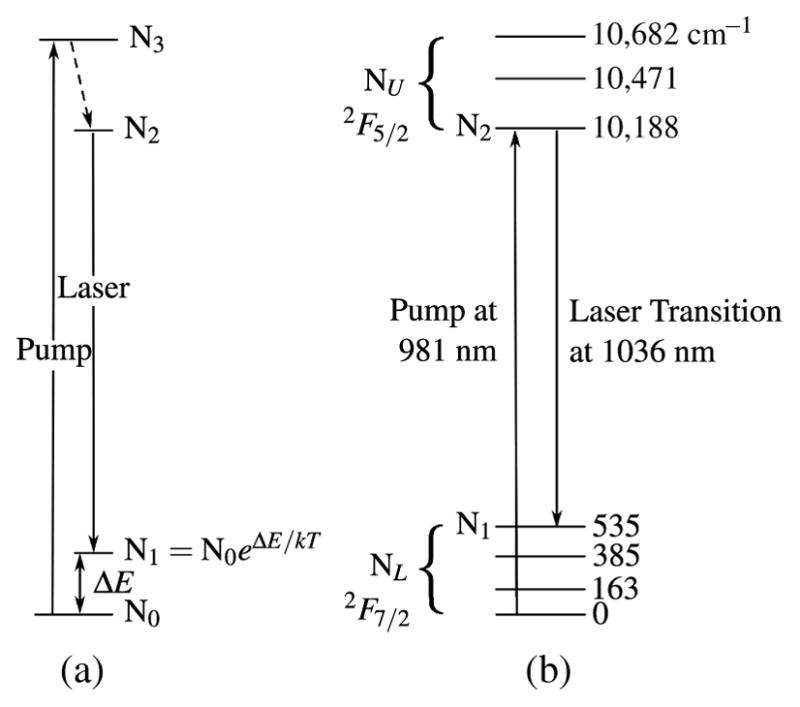

The gain medium in this example oscillator is a 4-mm-long, 5-mm-wide, 2-mm-high, antireflection (AR)-coated, 5% Yb:KGW crystal (EKSMA OPTICS, Vilnius, Lithuania). A 5% doping level is fairly standard among the Yb³⁺-doped double tungstates, but KGW crystals, as well as their nearly interchangeable KYW sister crystals, are available in a variety of orientations and dimensions. For end-pumped configurations, 3–5-mm-long crystals are typically used. Many of the efficiency advantages of Yb³⁺-doped double tungstates discussed above result from the rather simple energy-level scheme, which consists of only two relevant Stark manifolds: ²F₇/₂ and ²F₅/₂. However, this energy scheme also has a downside. The system is described as “quasi-three-level” (see Fig. 6) because the closely spaced Stark levels within each manifold are only “quasi separate” and are connected by a Boltzmann distribution. As a result, the lower laser level of a quasi-three-level system is so close to the ground state that it holds an appreciable population at thermal equilibrium, and this population reabsorbs laser light. Our basic models of quasi-three-level KGW systems (based on [196–198]) show that the ideal crystal length, trading off gain path length against reabsorption, is between 3 and 4 mm.
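The thermal population of the lower laser level follows directly from the Boltzmann distribution over the Stark levels of the ground manifold. The Python sketch below computes those occupation fractions. The level energies are HYPOTHETICAL round numbers chosen for illustration, not the Yb:KGW values of Fig. 6. The sketch relies on the fact that each Stark level of Yb³⁺ is a Kramers doublet, so the degeneracies cancel. It compares room temperature with the 15 °C crystal-mount temperature used in our oscillator.

```python
import math

# Boltzmann constant expressed in wavenumbers: k_B / (h c) ~= 0.695 cm^-1 per kelvin.
K_B_CM = 0.6950348


def manifold_fractions(energies_cm, temperature_k):
    """Boltzmann occupation of each Stark level within one manifold.

    Every Stark level of Yb3+ is a Kramers doublet (degeneracy 2), so the
    degeneracies cancel and only the level energies matter.
    """
    kt = K_B_CM * temperature_k
    weights = [math.exp(-e / kt) for e in energies_cm]
    total = sum(weights)
    return [w / total for w in weights]


# HYPOTHETICAL ground-manifold Stark energies in cm^-1 (not the Fig. 6 values);
# the highest level stands in for the lower laser level.
LEVELS_CM = [0.0, 150.0, 400.0, 550.0]

if __name__ == "__main__":
    for t in (288.15, 300.0):  # 15 degC crystal mount vs. room temperature
        frac = manifold_fractions(LEVELS_CM, t)
        print(f"T = {t:.0f} K: lower-laser-level fraction = {frac[-1]:.2%}")
```

Even a level several hundred cm⁻¹ above the ground state holds a few percent of the ions at room temperature, which is the source of reabsorption. Cooling the crystal lowers that fraction only modestly, so crystal length remains the main design lever.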

Figure 6.

Quasi-three-level energy diagram. (a) General. (b) Yb:KGW with Stark levels shown in cm⁻¹. Arrows indicate pump and laser transitions.

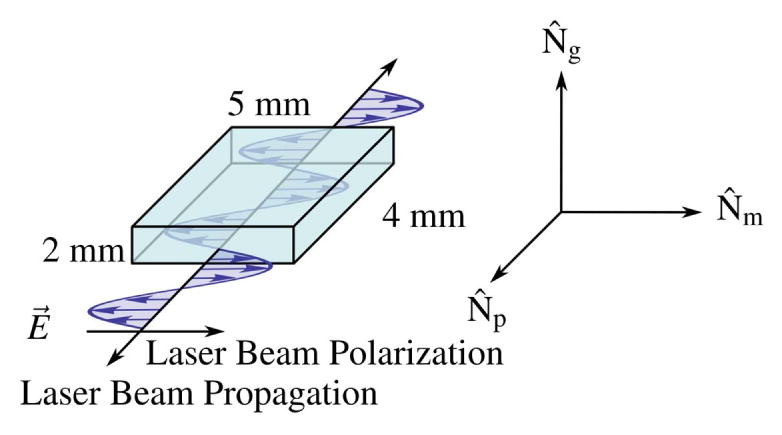

The crystal orientation in the cavity is shown in Fig. 7. The intracavity beam propagates along the Np semi-axis of the refractive-index ellipsoid (the indicatrix; this semi-axis coincides with the b crystallographic axis [199]), and the generated laser radiation is polarized parallel to the Nm semi-axis of the indicatrix. This orientation is chosen because KGW has a larger emission cross section for light polarized parallel to the Nm axis. It is worth noting a study by Hellström et al. [200], who found that an alternative to the standard b-cut for KGW/KYW crystals has better thermal management properties. Called the ad-cut (athermal direction cut), this configuration requires higher doping levels because of its smaller absorption cross sections, but it may be worth considering where thermal lensing and mode quality become problematic.

Figure 7.

KGW crystal orientation in the oscillator cavity. Gain medium is cut along semi-axes of the refractive index ellipsoid (indicatrix).

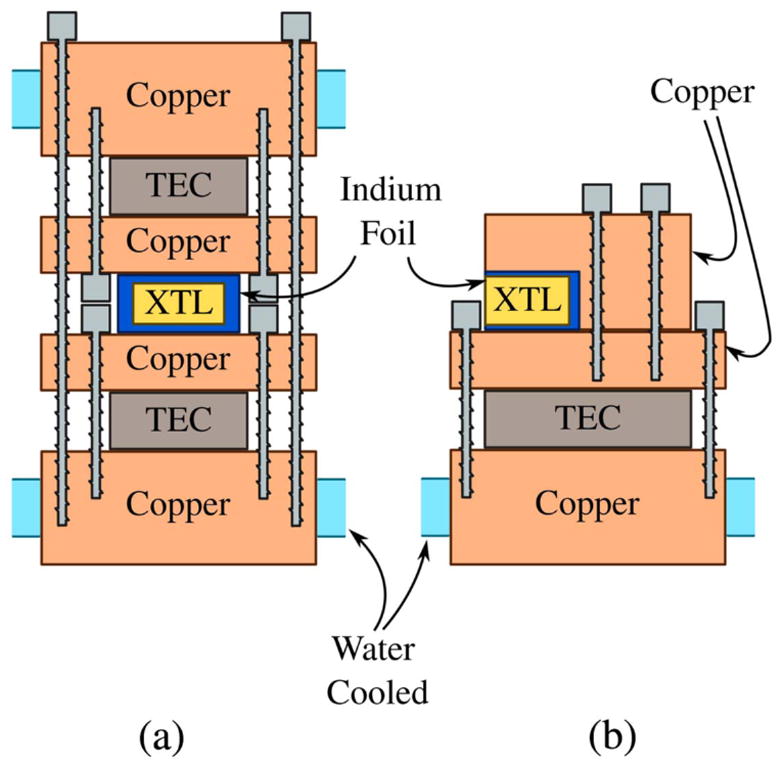

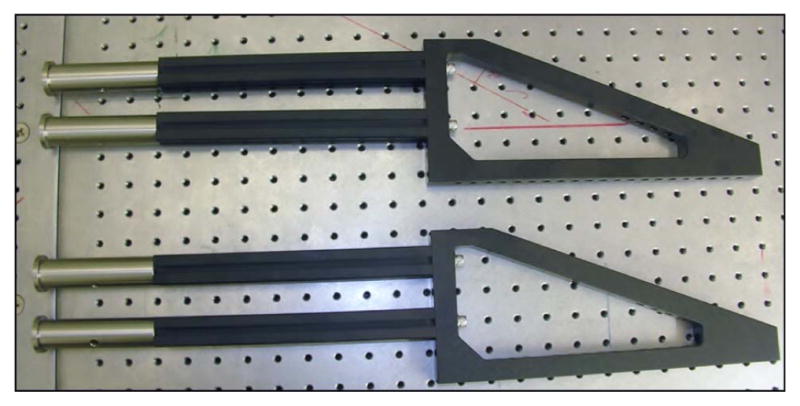

Although the small quantum defect of KGW is beneficial for thermal management, the crystal must still be actively cooled because of the high-intensity pumping. The crystal in Fig. 5 is cooled to 15°C from both sides by thermoelectric coolers (TECs; TE Technology, Inc., Traverse City, Michigan, USA) housed in a home-built water-cooled copper crystal mount, as shown in Fig. 8(a). However, we have also had success with other mount designs, such as that in Fig. 8(b), which cools less aggressively but provides better access to the crystal faces for alignment and cleaning.

Figure 8.

Two crystal-mount designs: (a) mount used in the oscillator shown in Fig. 5 and (b) mount used in another home-built KGW oscillator.

3.2b. Pump Source

There are many commercial vendors producing self-contained turnkey fiber-coupled laser pump modules (e.g., Apollo Instruments, IPG, QPC Lasers, nLight). Generally, the pump laser will constitute between 1/3 and 1/2 of the cost of the KGW oscillator. Many of these systems advertise a central wavelength of 976 nm with anywhere from 2 to 5 nm of bandwidth. Because of the rather narrow absorption line around 981 nm in Yb:KGW (see Fig. 4), we have found significant increases in oscillator performance when operating the pump diodes at the high end of the manufacturer’s acceptable temperature range, thus pushing the emission line toward, or up to, 981 nm. The pump source for our example oscillator is a 25-W fiber-coupled diode module with a 200-μm core diameter emitting at 980 nm (F25-980-2, Apollo Instruments, Inc., Irvine, California, USA). The fiber is imaged 1:1 into the crystal and thus defines the pump- and laser-mode volume. In the system shown in Fig. 5, we image the fiber through two 40-mm singlets, L1 and L2. We have also built systems using a commercial lens assembly designed for low-aberration multimode fiber applications (LL60, Apollo Instruments). Fiber coupling, alignment, and stabilization all benefit from the commercial lens assembly option, although a carefully designed singlet imaging system offers cost savings and can work well.
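The two pump-side calculations implied above are how far to heat the diodes to reach the 981-nm line and how large the imaged pump spot will be. The Python sketch below covers both. The 0.3 nm/K tuning coefficient is an ASSUMED typical value for InGaAs diodes (check the module datasheet), and the relay helper assumes that the two singlets act as a collimating/focusing pair, so the magnification is f₂/f₁.

```python
# ASSUMED typical temperature coefficient of an InGaAs diode emission wavelength;
# check the pump module's datasheet for the real value.
DLAMBDA_DT_NM_PER_K = 0.3


def required_temperature_change_k(current_nm: float, target_nm: float,
                                  coeff_nm_per_k: float = DLAMBDA_DT_NM_PER_K) -> float:
    """Temperature change that shifts the diode emission from current_nm to target_nm."""
    return (target_nm - current_nm) / coeff_nm_per_k


def relay_spot_diameter_um(core_diameter_um: float, f_first_mm: float, f_second_mm: float) -> float:
    """Image of the fiber core through a two-lens relay: magnification = f2 / f1."""
    return core_diameter_um * f_second_mm / f_first_mm


if __name__ == "__main__":
    dt = required_temperature_change_k(976.0, 981.0)
    print(f"Heat the diodes by about {dt:.1f} K (only within the datasheet range)")
    print(f"Pump spot: {relay_spot_diameter_um(200.0, 40.0, 40.0):.0f} um diameter")
```

A shift of several nanometers can require a temperature change of more than 10 K, which is why it matters whether the manufacturer’s operating range reaches that high. Two equal focal lengths reproduce the 200-μm core at the crystal, consistent with the 1:1 imaging described above.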

3.2c. Cavity Elements

The pump light enters the cavity through a short-wave pass flat mirror, M2 (Layertec GmbH, Mellingen, Germany), coated for 98% transmission at the pump wavelength (980 nm) and 99.9% reflection at the laser wavelength (1040 nm). Mirrors M1 and M3 are highly reflective (≥99.98%, Layertec) curved mirrors with radii of curvature r = 500 mm.

A pair of Gires-Tournois interferometer (GTI) mirrors (Layertec), each providing −1300 fs² per bounce (four bounces per round trip per mirror), is used for dispersion compensation. In most early KGW/KYW laser designs, prism pairs were used for intracavity dispersion compensation, which allowed oscillator designers to change the prism insertion distance to vary the center wavelength or bandwidth of the output pulses [173]. In the past few years, GTI mirrors have become a more popular means of dispersion compensation because of their compactness and alignment simplicity. There have been numerous theoretical treatments of the amount of negative group-delay dispersion needed to offset self-phase modulation in the gain material and create a dispersion regime that supports stable mode locking [173,201,202]. Researchers building oscillators for a specific practical application often take a trial-and-error approach, especially with discrete-valued GTI mirrors, and simply increase the negative dispersion until stable mode-locked output is reached. We have found that two bounces per GTI on each pass (eight bounces per cavity round trip) is simple to align and enables a stable mode-locking regime.
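Because GTI mirrors come in discrete GDD values, the round-trip dispersion can only be changed in fixed steps, which is what makes the trial-and-error approach practical. The Python sketch below, assuming a linear (standing-wave) cavity in which the beam crosses the GTI pair twice per round trip, tallies the total GDD for a few bounce counts using the −1300 fs² per bounce quoted above.

```python
GDD_PER_BOUNCE_FS2 = -1300.0  # per GTI bounce, from the text


def round_trip_gdd_fs2(gdd_per_bounce_fs2: float, bounces_per_pass: int,
                       mirrors: int = 2, passes: int = 2) -> float:
    """Total GDD per round trip of a linear (standing-wave) cavity.

    The beam crosses the GTI section twice per round trip (passes=2) and
    hits each of the `mirrors` GTIs `bounces_per_pass` times on each pass.
    """
    return gdd_per_bounce_fs2 * bounces_per_pass * mirrors * passes


if __name__ == "__main__":
    # Discrete steps available when adding bounces one at a time (trial and error).
    for n in (1, 2, 3):
        total_bounces = n * 2 * 2
        total = round_trip_gdd_fs2(GDD_PER_BOUNCE_FS2, n)
        print(f"{n} bounce(s) per GTI per pass: {total_bounces} bounces, {total:.0f} fs^2 per round trip")
```

The configuration described above (two bounces per GTI on each pass, eight per round trip) corresponds to −10,400 fs² per round trip, and each additional bounce per pass adds another −5200 fs².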

Passive mode locking is achieved by focusing the beam with a highly reflective curved mirror, M4 (r = 1000 mm, Layertec), onto a semiconductor saturable absorber mirror (SESAM, SAM-1040-2-25.4g; Batop GmbH, Jena, Germany) with a modulation depth (or maximum change in nonlinear reflectivity) of 1.2%, where the SESAM is used as an end mirror. Passive mode-locking techniques for the generation of ultrashort pulse trains are generally preferred over active techniques due to the ease of incorporating passive devices, such as SESAMs, into laser cavities. A SESAM consists of a Bragg mirror on a semiconductor wafer like GaAs, covered by an absorber layer. Pulses result from the phase locking of the multiple lasing modes supported in continuous-wave laser operation. The absorber becomes saturated at high intensities (i.e., where the multiple lasing modes are in phase at the absorber), thus preferentially allowing the majority of the cavity energy to pass through the absorber to the mirror, where it is reflected back into the laser cavity.

3.2d. Alignment Tools and Considerations

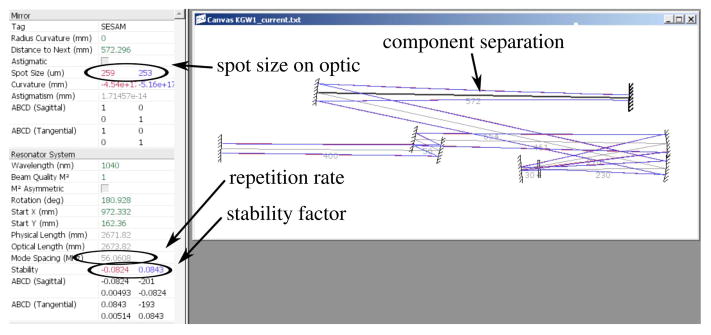

To determine the precise spacing between cavity elements, we used a laser-cavity modeling program (LaserCanvas5, developed by Philip Schlup; contact corresponding author for availability). It employs the ABCD matrix formalism to establish cavity stability and resonant mode sizes for oscillators. For our design criteria, we wanted a relatively low-repetition-rate oscillator (roughly 50 MHz) in order to increase the energy per pulse at a given average power. Figure 9 shows a screenshot of the cavity model as rendered by LaserCanvas5. The cavity design process balances four key parameters: the desired repetition rate, the laser mode size inside the gain medium, the laser mode size on the SESAM, and the cavity stability factor.

Figure 9.

Screen shot of the KGW oscillator as modeled by laser-cavity program LaserCanvas5. A few of the pertinent design parameters, such as distance between optical components, spot size on a selected component, and stability factor, are circled for emphasis.

We have found that a good initial design is to model a cavity geometry that produces a fluence on the SESAM of roughly five times the manufacturer-provided absorber saturation fluence. As mentioned above, our pump-fiber aperture, which has a diameter of 200 μm, is imaged 1/1 into the crystal. We therefore aim for a laser-mode waist (radius) very close to 100 μm. Given this constraint, the desired cavity repetition rate can be achieved by selecting the correct radii for the curved mirrors and by properly placing the flat mirrors in the nearly collimated arms of the cavity. Although the criterion for a stable cavity is a stability factor less than 1, we imposed a criterion of less than 0.1 to ensure long-term stable laser operation.
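The stability factor and mode sizes reported by a cavity modeler come from the round-trip ABCD matrix. The sketch below is not LaserCanvas5's implementation. It assumes normal-incidence thin elements, with no fold-angle astigmatism, crystal, or thermal lens. It also takes the stability factor to be m = (A + D)/2 of the round-trip matrix, which is the usual convention. The two-mirror test cavity is hypothetical and was chosen because its answer is known analytically.

```python
import math

def matmul(m1, m2):
    """Product of two 2x2 matrices written as ((a, b), (c, d))."""
    (a, b), (c, d) = m1
    (e, f), (g, h) = m2
    return ((a * e + b * g, a * f + b * h),
            (c * e + d * g, c * f + d * h))

def propagate(distance_m):
    """Free-space propagation over distance_m."""
    return ((1.0, distance_m), (0.0, 1.0))

def mirror(radius_m):
    """Curved mirror at normal incidence; use math.inf for a flat mirror."""
    return ((1.0, 0.0), (-2.0 / radius_m, 1.0))

def round_trip(elements):
    """Compose element matrices in the order the beam encounters them."""
    total = ((1.0, 0.0), (0.0, 1.0))
    for element in elements:
        total = matmul(element, total)  # each new element acts after the previous ones
    return total

def stability_factor(rt):
    """m = (A + D) / 2; the resonator is stable for |m| < 1."""
    (a, _), (_, d) = rt
    return (a + d) / 2.0

def mode_radius(rt, wavelength_m):
    """1/e^2 radius of the self-consistent Gaussian mode at the reference plane."""
    (_, b), _ = rt
    m = stability_factor(rt)
    if abs(m) >= 1.0:
        raise ValueError("unstable resonator: |m| >= 1")
    return math.sqrt(wavelength_m * abs(b) / (math.pi * math.sqrt(1.0 - m * m)))

# Hypothetical test cavity: flat end mirror, 0.5 m of air, R = 1 m mirror.
rt = round_trip([propagate(0.5), mirror(1.0), propagate(0.5), mirror(math.inf)])
m = stability_factor(rt)
print(f"m = {m:.3f}; meets the |m| < 0.1 criterion: {abs(m) < 0.1}")
print(f"mode radius at the flat mirror: {mode_radius(rt, 1.04e-6) * 1e6:.0f} um")
```

A folded design such as the one in Table 1 needs more elements in the list. It also needs separate tangential and sagittal matrices for mirrors used off-axis, and that bookkeeping is what a dedicated modeler handles.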

The SESAM, which is the optical component selected in the screenshot of Fig. 9, is one of the two components (the other being the gain crystal) where the mode size is of critical importance. To benefit from the full modulation depth of the saturable absorber in continuous-wave mode-locked lasers, the pulse energy must be high enough to bleach the absorber. To meet that condition, the pulse fluence on the SESAM should be approximately five times the manufacturer-provided absorber saturation fluence [203]. Another important parameter of the SESAM is its damage threshold. Because the manufacturer lists it as an intensity value rather than a fluence, it sets a lower bound on the spot size on the SESAM. A recent study by Li et al. [177] examines the range of spot sizes that yields stable mode-locked output for a commonly used SESAM (identical to the one used in our oscillator) in a KGW laser. For our KGW laser, the typical average output power is 2 W. Given our 10% output coupler and a repetition rate of 56 MHz, the intracavity pulse energy is 0.36 μJ. The spot waist on the SESAM is approximately 250 μm, yielding an energy density of 183 μJ/cm², which is 2.6× the saturation fluence of 70 μJ/cm². We also tested a mirror with r = 500 mm for M4 and obtained an energy density of 11.4× the saturation fluence. This configuration also achieved stable mode locking, albeit at lower output power. In this higher-fluence configuration, the system would begin to multi-pulse when operating above about 1.5 W.
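The fluence arithmetic above can be reproduced in a few lines. The sketch below follows the convention the quoted numbers imply: the 250-μm waist is treated as a 1/e² radius w, and the fluence is computed as E/(πw²). The on-axis peak fluence of a Gaussian beam would be twice this.

```python
import math

def sesam_fluence(p_out_w, output_coupling, rep_rate_hz, spot_radius_cm):
    """Return (intracavity pulse energy in uJ, fluence in uJ/cm^2).

    Assumption: fluence = E / (pi * w^2), matching the numbers in the text;
    the on-axis peak fluence of a Gaussian beam is 2 * E / (pi * w^2).
    """
    p_intracavity_w = p_out_w / output_coupling          # circulating power
    energy_uj = p_intracavity_w / rep_rate_hz * 1e6       # J -> uJ
    area_cm2 = math.pi * spot_radius_cm ** 2
    return energy_uj, energy_uj / area_cm2

F_SAT = 70.0  # uJ/cm^2, absorber saturation fluence from the text
energy, fluence = sesam_fluence(2.0, 0.10, 56e6, 250e-4)  # 250 um = 0.025 cm
print(f"E = {energy:.2f} uJ, F = {fluence:.0f} uJ/cm^2, F/F_sat = {fluence / F_SAT:.1f}")
```

The unrounded pulse energy gives about 182 μJ/cm². The text's 183 μJ/cm² comes from first rounding the energy to 0.36 μJ.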

Although the LaserCanvas5 software is a tremendous tool for designing a cavity with the desired characteristics, it can still be quite challenging to align the cavity so that the pump mode and the intracavity laser mode overlap in the crystal. There are several techniques for aligning a cavity, and many practitioners have their own “tricks-of-the-trade” for precise and efficient alignment. We provide one method for ensuring sufficient pump-mode overlap for lasing to occur. Once lasing occurs, one can simply “walk” the laser mode onto the pump mode by using the two end mirrors of the cavity: the output coupler and the SESAM. Figure 10 shows a schematic of the cavity during the alignment stage. Note that the output coupler is removed to maximize the brightness of the alignment beam, which is especially important for low-power alignment diodes. Ideally, the alignment diode wavelength is as close to 1030 nm as possible, but 1064 nm works adequately. It is advantageous to align the pump beam first and then align the cavity mode on top of the established pump line. One challenge in aligning the pump beam is that many of the high-power (25–40 W) commercial turnkey pump systems have a minimum stable operating current, which normally corresponds to about 7–10 W of output power. Focused to 200 μm, the resulting intensity will burn standard pinholes and other alignment tools. High-damage-threshold versions of just about any optical tool can be purchased, but we provide a very simple and effective technique that requires only standard pinholes.

Figure 10.

Alignment technique for a KGW oscillator.

The inset of Fig. 10 shows an arrangement of two identical aperture pinholes equidistant from the focus. Capturing the pump beam at these slightly expanded locations enables one to align the pump beam precisely so that its waist lies directly in the crystal. Once the pump beam is straight, level, and focused in the correct location, the pump can be turned off. The alignment beam can then be brought through the cavity from the output-coupler side, aligned to the same pair of pinholes flanking the crystal, and retro-reflected off the SESAM back upon itself at the entrance pinholes. Finally, one can replace the output coupler and adjust its mount until the alignment beam reflects off the back of the output coupler onto itself. At this point, the pump can be turned back on near its maximum power, and a sensitive power meter can be placed directly outside the output coupler. Lasing will typically “flash” with slight, systematic manipulation of the horizontal and vertical positioning of the output-coupler mount. Lasing efficiency can then be optimized by carefully walking the oscillator mode, iterating between fine adjustments of the output-coupler and SESAM mounts.

Table 1 presents the laser component spacings for our home-built KGW laser oscillator.

Table 1.

KGW Laser Component Spacingsᵃ

| Component Pair | Spacing (cm) |
|---|---|
| L1 to L2 | 3.2 |
| L2 to M2 | 2.5 |
| Output coupler to GTI | 40.0 |
| GTI to GTI | 5.0 |
| GTI to M1 | 46.2 |
| M1 to M2 | 27.0 |
| M2 to crystal front face | 3.0 |
| Crystal back face to M3 | 23.0 |
| M3 to M4 | 65.4 |
| M4 to SESAM | 57.5 |

ᵃSee Fig. 5 for component names.
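As a consistency check on Table 1, the repetition rate of a linear cavity is f_rep = c/(2L), where L is the one-way optical path. The sketch below makes three assumptions. Each intracavity spacing in Table 1 is traversed once per pass; the table does not say how the multiple GTI bounces are laid out. The crystal's own optical length, which is not listed, is ignored. The pump-relay spacings (L1 to L2, L2 to M2) lie outside the cavity and are excluded.

```python
C = 299_792_458.0  # speed of light, m/s

# One-way intracavity spacings from Table 1 (cm), output coupler to SESAM.
# Assumptions: each spacing traversed once per pass; crystal length ignored.
cavity_spacings_cm = {
    "OC-GTI": 40.0,
    "GTI-GTI": 5.0,
    "GTI-M1": 46.2,
    "M1-M2": 27.0,
    "M2-crystal": 3.0,
    "crystal-M3": 23.0,
    "M3-M4": 65.4,
    "M4-SESAM": 57.5,
}

def rep_rate_hz(one_way_length_m):
    """Pulse repetition rate of a linear (standing-wave) cavity."""
    return C / (2.0 * one_way_length_m)

length_m = sum(cavity_spacings_cm.values()) / 100.0
print(f"L = {length_m:.3f} m -> f_rep = {rep_rate_hz(length_m) / 1e6:.1f} MHz")
```

Under these assumptions the estimate lands close to the quoted 56 MHz. Adding the crystal's optical path, or extra passes between the GTIs, would lower it somewhat.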

3.2e. Sample Oscillator Output Characteristics

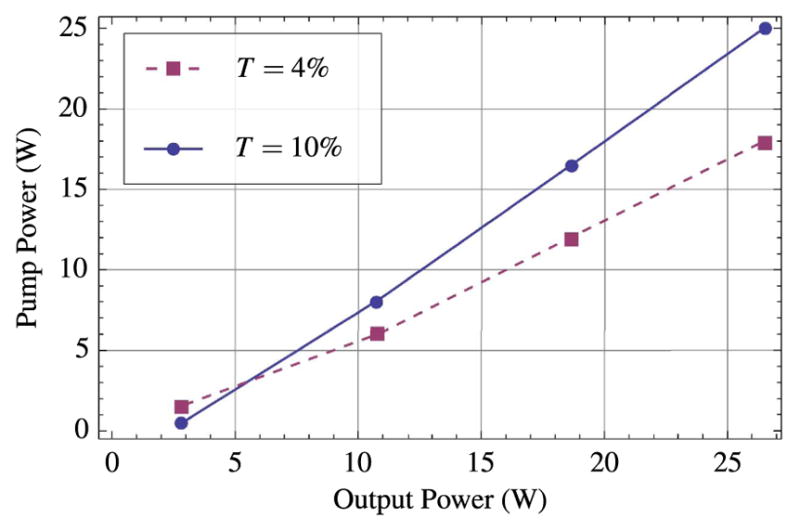

Figure 11 shows plots of the output power of our example KGW oscillator for two different output couplers: T = 4% and T = 10%. The final configuration of the laser uses the 10% output coupler due to slightly better slope efficiency and mode-lock stability.

Figure 11.

Average output power of mode-locked example KGW laser for output couplers with T = 4% and T = 10%.

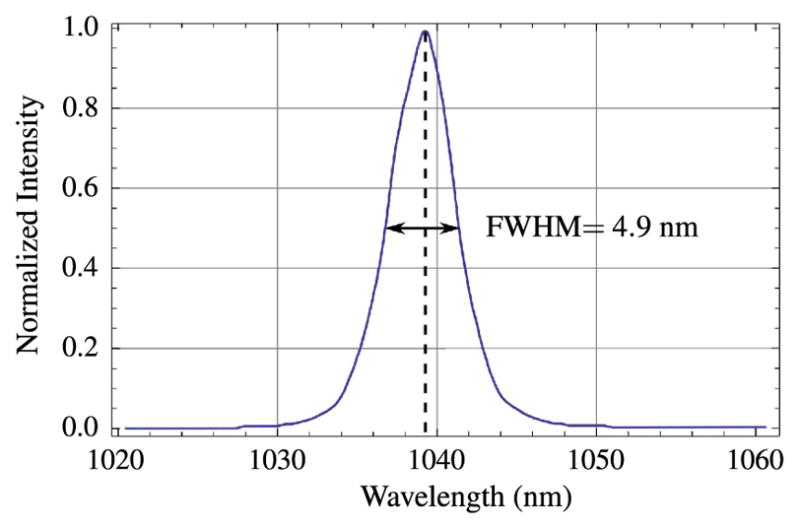

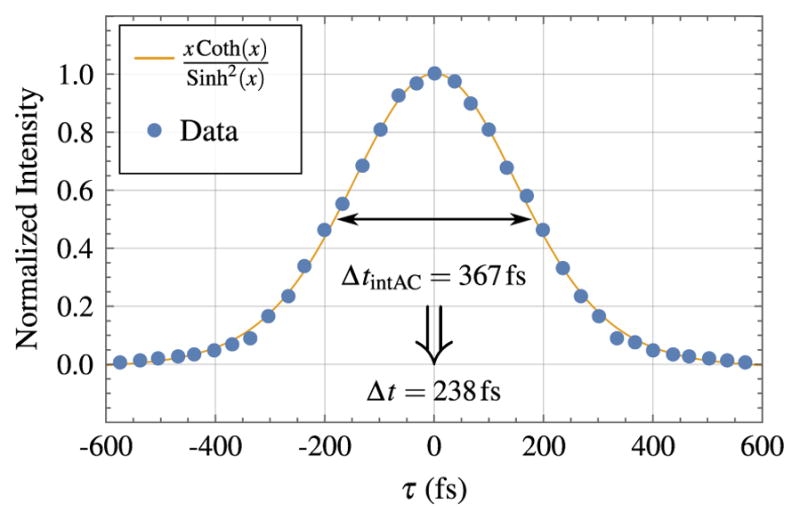

The spectrum shown in Fig. 12 indicates that the pulses are centered at 1039 nm and have a bandwidth of about 4.9 nm. At this bandwidth, the theoretical transform-limited pulse duration, assuming a sech² temporal shape, would be 235 fs. The actual pulse duration, as measured by second-order intensity autocorrelation (see Fig. 13), is 238 fs.
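Both numbers follow from standard sech² pulse relations. The sketch below assumes a time-bandwidth product of 0.315 and an intensity-autocorrelation-to-pulse FWHM ratio of 1.543. It uses the 367-fs autocorrelation width from the Fig. 13 caption.

```python
C = 299_792_458.0        # speed of light, m/s
TBP_SECH2 = 0.315        # delta_nu * delta_t for a transform-limited sech^2 pulse
AC_FACTOR_SECH2 = 1.543  # intensity-autocorrelation FWHM / pulse FWHM for sech^2

def transform_limited_fs(center_nm, bandwidth_nm, tbp=TBP_SECH2):
    """Shortest FWHM duration (fs) supported by a spectrum of FWHM bandwidth_nm."""
    lam_m = center_nm * 1e-9
    dnu_hz = C * bandwidth_nm * 1e-9 / lam_m ** 2   # convert delta_lambda to delta_nu
    return tbp / dnu_hz * 1e15

def pulse_from_autocorrelation_fs(ac_fwhm_fs, factor=AC_FACTOR_SECH2):
    """Deconvolve an intensity-autocorrelation FWHM for an assumed pulse shape."""
    return ac_fwhm_fs / factor

print(f"transform limit: {transform_limited_fs(1039, 4.9):.0f} fs")
print(f"from autocorrelation: {pulse_from_autocorrelation_fs(367):.0f} fs")
```

The transform limit comes out within a few femtoseconds of the quoted 235 fs; the small gap reflects the approximate "about 4.9 nm" bandwidth. The deconvolved autocorrelation reproduces 238 fs. Their ratio (roughly 1.02) indicates pulses very close to transform limited.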

Figure 12.

Pulse spectrum for the home-built KGW laser.

Figure 13.

Intensity autocorrelation for the home-built KGW laser. The blue data points are fitted to a [x coth(x) − 1]/sinh²(x) function. The full width at half-maximum of the intensity autocorrelation (Δt_AC) is 367 fs. For a sech² temporal shape, Δt = 238 fs.

In summary, our example KGW oscillator generates a 56-MHz pulse train with a maximum average power of 2.5 W, yielding pulse energies as high as 45 nJ. The pulses are centered at 1039 nm and have a duration of 238 fs.

4. Dispersion in Optics

In Section 3, a powerful femtosecond laser source was described. The short pulses produced by this source enable multiphoton processes to be driven efficiently at the focus of the microscope objective. Yet short pulses bring challenges such as dispersion. The frequency-dependent index of refraction of the glass in the microscope produces chromatic effects that can affect the pulse shape and thus reduce the excitation efficiency. Generating ever shorter pulses requires progressively larger spectral bandwidths; e.g., the spectrum of a 10-fs Gaussian pulse will require most of the visible spectrum [204].

For normal dispersion, as the femtosecond laser pulse passes through the glass of the microscope, the lower-frequency (longer-wavelength) components arrive ahead of the higher-frequency (shorter-wavelength) components. This positive dispersion reduces the peak amplitude of the laser pulse and broadens it. Dispersion therefore reduces the excitation efficiency and results in a decreased signal intensity. Characterizing and compensating for dispersion can be an important part of an MPLSM [78,87,89,205–209].

To demonstrate the effects of dispersion, we consider a “forward-moving” ultrashort pulse with a Gaussian temporal profile and duration τ, measured as the full width at half-maximum (FWHM) of the temporal intensity profile. The temporal profile of the field is written as

$$E(t) = E_0 \exp\left(-\Gamma t^2\right)\exp\left(i\omega_0 t\right) \tag{3}$$

where the shape factor, Γ, is given as

$$\Gamma = \frac{2\ln 2}{\tau^2} \tag{4}$$

The Fourier transform of Eq. (3) provides the positive spectrum:

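$$\tilde{E}(\omega) = E_0\sqrt{\frac{\pi}{\Gamma}}\exp\left[-\frac{(\omega-\omega_0)^2}{4\Gamma}\right] \tag{5}$$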

Equation (5) may be propagated through the system by multiplying it by the exponential of the spectral phase (the phase of the electric field in the frequency domain), which gives us

$$\tilde{E}_{\mathrm{out}}(\omega) = \tilde{E}(\omega)\exp\left[-i\phi(\omega)\right] \tag{6}$$

The phase (i.e., argument) of the exponential in Eq. (6) may be expanded in a Taylor series, which allows the contribution of each term to be addressed:

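$$\phi(\omega) = \sum_{n=0}^{\infty}\frac{\phi_n}{n!}(\omega-\omega_0)^n, \qquad \phi_n = \left.\frac{d^n\phi}{d\omega^n}\right|_{\omega_0} \tag{7}$$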

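$$\phi(\omega) = \phi_0 + \phi_1(\omega-\omega_0) + \frac{\phi_2}{2}(\omega-\omega_0)^2 + \frac{\phi_3}{6}(\omega-\omega_0)^3 + \frac{\phi_4}{24}(\omega-\omega_0)^4 + \cdots \tag{8}$$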

The zeroth-order term in Eq. (8), ϕ0, is a constant phase offset and does not affect the pulse envelope. All of the higher-order terms, ϕ1, ϕ2, …, multiply powers of the frequency offset from the carrier and therefore affect pulse propagation. ϕ1 is called the group delay (GD); on its own it only shifts the pulse in time without changing its shape. ϕ2 is called the group delay dispersion (GDD). The higher-order dispersive terms ϕ3 and ϕ4 are referred to as third-order dispersion (TOD) and fourth-order dispersion (FOD), respectively.

The spectral phase as a function of optical path length (P) for a pulse propagating through a dispersive medium is

$$\phi(\omega) = \frac{\omega}{c}P(\omega) \tag{9}$$

The dispersive terms from Eq. (8) then may be expressed in terms of P:

$$\phi_1 = \frac{1}{c}\left(P - \lambda\frac{dP}{d\lambda}\right) \tag{10}$$

$$\phi_2 = \frac{\lambda^3}{2\pi c^2}\frac{d^2P}{d\lambda^2} \tag{11}$$

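$$\phi_3 = -\frac{\lambda^4}{4\pi^2 c^3}\left(3\frac{d^2P}{d\lambda^2} + \lambda\frac{d^3P}{d\lambda^3}\right) \tag{12}$$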

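$$\phi_4 = \frac{\lambda^5}{8\pi^3 c^4}\left(12\frac{d^2P}{d\lambda^2} + 8\lambda\frac{d^3P}{d\lambda^3} + \lambda^2\frac{d^4P}{d\lambda^4}\right) \tag{13}$$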
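The GDD of a material follows from its dispersion model via ϕ2 = λ³/(2πc²)·d²P/dλ², with P(λ) = n(λ)L. The sketch below evaluates this numerically. It assumes the Malitson Sellmeier fit for fused silica, which this paper does not give, and uses a central finite difference for the second derivative. For 1 mm of fused silica at 800 nm it gives about 36 fs², in line with commonly tabulated values.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def n_fused_silica(lam_um):
    """Malitson (1965) Sellmeier fit for fused silica; wavelength in micrometres."""
    b = (0.6961663, 0.4079426, 0.8974794)
    c = (0.0684043, 0.1162414, 9.896161)
    lam2 = lam_um ** 2
    return math.sqrt(1.0 + sum(bi * lam2 / (lam2 - ci ** 2) for bi, ci in zip(b, c)))

def gdd_fs2(lam_um, length_mm, n=n_fused_silica, h_um=1e-3):
    """phi_2 = lambda^3 / (2 pi c^2) * d^2P/dlambda^2, with P = n(lambda) * L."""
    d2n = (n(lam_um + h_um) - 2.0 * n(lam_um) + n(lam_um - h_um)) / h_um ** 2  # 1/um^2
    d2p = (length_mm * 1e-3) * d2n * 1e12                                       # 1/m
    lam_m = lam_um * 1e-6
    return lam_m ** 3 / (2.0 * math.pi * C ** 2) * d2p * 1e30                   # s^2 -> fs^2

print(f"1 mm fused silica at 800 nm:  {gdd_fs2(0.8, 1.0):.1f} fs^2")
print(f"1 mm fused silica at 1040 nm: {gdd_fs2(1.04, 1.0):.1f} fs^2")
```

Any other glass can be swapped in by passing a different n(λ) function. Higher derivatives for ϕ3 and ϕ4 can be taken the same way, although finite differences become noisier with each order.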

An important supplemental expression relates GDD to the pulse duration:

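$$\tau_{\mathrm{out}} = \tau_{\mathrm{in}}\sqrt{1 + \left(\frac{4\ln 2\,\phi_2}{\tau_{\mathrm{in}}^2}\right)^2} \tag{14}$$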

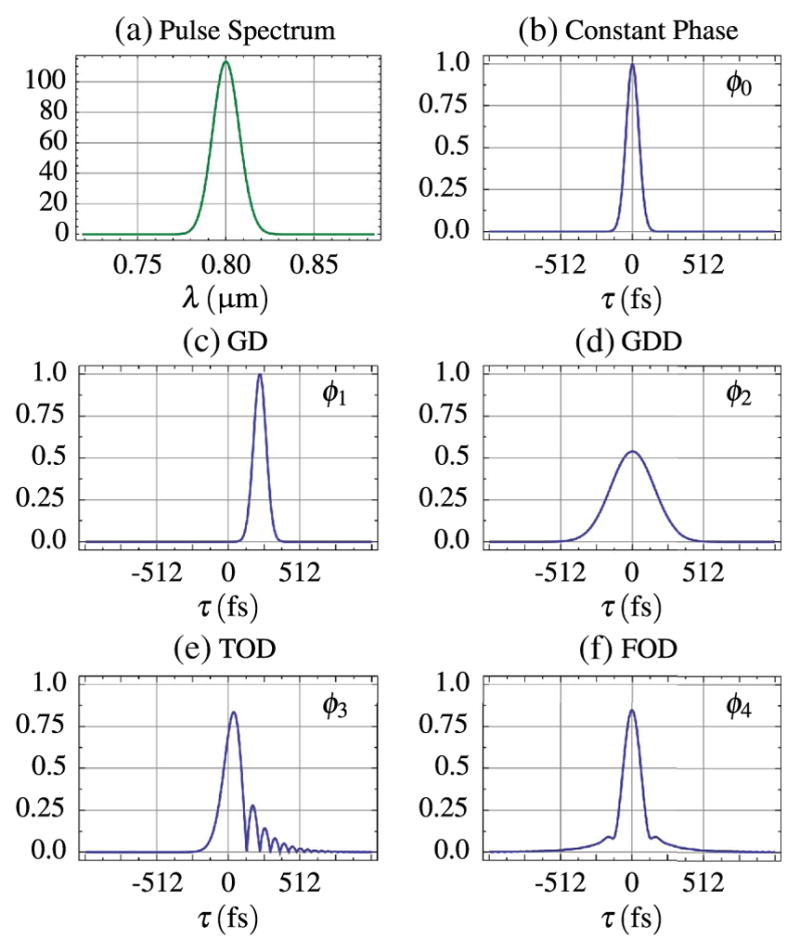

The effects of dispersion from each separate ordered term are shown in Fig. 14. Even-ordered dispersive terms cause symmetric broadening of the pulse. Odd-ordered dispersive terms higher than ϕ2 give the pulse a skewed appearance and add a ringing-like feature that can appear on the leading or trailing edge of the pulse depending on the sign.
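The GDD-only case in Fig. 14(d) can be checked numerically. The sketch below applies a pure quadratic spectral phase, exp(−iϕ2Ω²/2), to the Gaussian envelope spectrum with the carrier removed, and inverse-transforms by direct summation. It then compares the measured FWHM with the closed-form Gaussian result τ_out = τ_in·√(1 + (4 ln2·ϕ2/τ_in²)²). The grid sizes are our choices. A NumPy FFT would be faster, but the direct sum keeps the example dependency-free.

```python
import cmath
import math

def chirped_gaussian_spectrum(omegas, tau_fs, gdd_fs2):
    """Envelope spectrum of exp(-Gamma t^2), Gamma = 2 ln2 / tau^2 (carrier removed),
    multiplied by the pure-GDD spectral phase exp(-i * phi2 * w^2 / 2)."""
    gamma = 2.0 * math.log(2.0) / tau_fs ** 2
    return [math.sqrt(math.pi / gamma) * math.exp(-w ** 2 / (4.0 * gamma))
            * cmath.exp(-0.5j * gdd_fs2 * w ** 2) for w in omegas]

def intensity_trace(ts, omegas, spectrum, d_omega):
    """|E_out(t)|^2 from a direct inverse Fourier sum (slow but dependency-free)."""
    trace = []
    for t in ts:
        field = sum(a * cmath.exp(1j * w * t) for w, a in zip(omegas, spectrum))
        trace.append(abs(field * d_omega / (2.0 * math.pi)) ** 2)
    return trace

def fwhm(ts, values):
    """Full width at half maximum, linearly interpolating both half-max crossings."""
    half = max(values) / 2.0
    above = [i for i, v in enumerate(values) if v >= half]
    i0, i1 = above[0], above[-1]
    left = ts[i0 - 1] + (half - values[i0 - 1]) * (ts[i0] - ts[i0 - 1]) / (values[i0] - values[i0 - 1])
    right = ts[i1] + (values[i1] - half) * (ts[i1 + 1] - ts[i1]) / (values[i1] - values[i1 + 1])
    return right - left

def broadened_fwhm_analytic(tau_fs, gdd_fs2):
    """Closed-form output FWHM of a transform-limited Gaussian after pure GDD."""
    return tau_fs * math.sqrt(1.0 + (4.0 * math.log(2.0) * gdd_fs2 / tau_fs ** 2) ** 2)

tau, gdd = 64.0, 3375.0                           # input FWHM and GDD of Fig. 14(d)
d_omega = 5e-4                                    # spectral step, rad/fs
omegas = [k * d_omega for k in range(-400, 401)]  # +/-0.2 rad/fs spans the spectrum
ts = [float(t) for t in range(-300, 301)]         # 1-fs time grid
intensity = intensity_trace(ts, omegas, chirped_gaussian_spectrum(omegas, tau, gdd), d_omega)
print(f"numerical FWHM: {fwhm(ts, intensity):.1f} fs; "
      f"closed form: {broadened_fwhm_analytic(tau, gdd):.1f} fs")
```

Both routes give roughly a 2.5-fold broadening of the 64-fs input pulse. Adding cubic or quartic terms to the phase in `chirped_gaussian_spectrum` reproduces the asymmetric and ringing envelopes of Figs. 14(e) and 14(f).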

Figure 14.

(a) Spectral bandwidth and (b) temporal profile of the input pulse (τin = 64 fs), and temporal envelopes as affected by each order of dispersion: (c) 225 fs of ϕ1, (d) 3375 fs² of ϕ2, (e) 50,625 fs³ of ϕ3, and (f) 759,375 fs⁴ of ϕ4.

Wollenhaupt et al. present an elucidating example (Table 12.2 in [210]) that tabulates the effect of increasing amounts of GDD on pulses of different durations. A typical multiphoton microscope with an 800-nm source may have as much as 4000 fs² of GDD [211]. This amount of GDD would broaden a 160-fs pulse to 174.4 fs, whereas a 10-fs pulse would broaden to 1109.1 fs. This demonstrates that shorter pulses do not always guarantee an improved multiphoton signal, and it underscores the importance of dispersion compensation.
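The Gaussian GDD broadening relation τ_out = τ_in·√(1 + (4 ln2·ϕ2/τ_in²)²) reproduces both numbers quoted above. Minimizing it over τ_in gives a useful corollary for a fixed, uncompensated GDD. The shortest pulse at focus comes from τ_in = √(4 ln2·ϕ2), and it emerges √2 times longer. This corollary follows from the formula and is not a result stated in [210].

```python
import math

def broadened_fwhm(tau_in_fs, gdd_fs2):
    """Output FWHM of an initially transform-limited Gaussian pulse after pure GDD."""
    return tau_in_fs * math.sqrt(1.0 + (4.0 * math.log(2.0) * gdd_fs2 / tau_in_fs ** 2) ** 2)

def optimal_input_fwhm(gdd_fs2):
    """Input FWHM that minimizes the output FWHM for a fixed GDD."""
    return math.sqrt(4.0 * math.log(2.0) * gdd_fs2)

for tau_in in (160.0, 10.0):
    print(f"{tau_in:5.0f} fs + 4000 fs^2 -> {broadened_fwhm(tau_in, 4000.0):7.1f} fs")

best = optimal_input_fwhm(4000.0)
print(f"best input for 4000 fs^2: {best:.0f} fs -> {broadened_fwhm(best, 4000.0):.0f} fs")
```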

For more discussion of pulse propagation, we recommend [160,204,210].

4.1. Dispersion Compensation

Is dispersion compensation necessary in a microscope? For imaging processes that scale nonlinearly with excitation intensity, dispersion compensation would seem to provide an unambiguous improvement in excitation efficiency (i.e., the ability to generate nonlinear signal photons). However, it is important to calculate the “photon economics” in order to evaluate the net impact of a dispersion-compensation system on signal photon generation. To optimize the excitation efficiency within the microscope it is desirable to maintain a diffraction-limited focal spot that is transform-limited in time. Just as spherical aberration can extend the focal volume spatially and reduce the excitation efficiency, dispersion in beam expanders, scan optics, and microscope objectives can extend the pulse duration and also degrade the pulse quality. There are multiple strategies that can be employed to precompensate for the dispersion of these optics, to ensure a transform-limited, or near-transform-limited, pulse at focus. Notably, the efficiency of the compensation scheme itself should be considered to ensure that there is a realizable gain in the final image. For example, if we assume a simple square pulse shape, the average detected second-order signal can be estimated to scale as

$$S \propto \frac{N E^2}{\tau A} \tag{15}$$

where N is the pulse repetition rate, E is the pulse energy, τ is the pulse duration, and A is the focal area. In this instance, we are looking at a second-order nonlinearity such as TPEF or SHG. Notably, the detected signal scales inversely with pulse duration. If our compensation scheme reduces the pulse duration by a factor of 2, the detected signal will increase by a factor of 2. However, suppose the transmission of our compensation scheme is 50% (E_transmitted = 0.5 × E_incident). Because the signal scales as E², the net detected signal is then actually down by a factor of 2, even with the reduction in pulse duration. Thus, any consideration of a dispersion-compensation scheme, as outlined in this simplistic analysis, needs to include the transmission efficiency. A useful rule of thumb applies for imaging with a second-order nonlinearity. If the transmission efficiency of the compensation system is α and the reduction in pulse width is β, then α²β must be greater than 1 to realize a measurable signal gain:

$$\alpha^2\beta > 1 \tag{16}$$

For example, if we are able to reduce the pulse duration by a factor of 2, β = 2, then the above rule of thumb suggests we need our transmission of the compensator, α, to be greater than 71%.
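The rule of thumb reduces to two one-line functions. The sketch below inherits the simplifying assumptions of the analysis above: square pulses, an unchanged focal area, and a second-order process. It checks the 50%-transmission example and the 71% threshold. It also checks the threshold for a 30% stretch (β = 1.3), the case discussed next for a typical microscope.

```python
import math

def net_signal_gain(alpha, beta):
    """Relative second-order signal after a compensator: alpha^2 * beta.

    alpha: power transmission of the compensator (0..1)
    beta:  pulse-shortening factor, tau_before / tau_after
    """
    return alpha ** 2 * beta

def min_transmission(beta):
    """Smallest transmission that still gives a net gain (alpha^2 * beta > 1)."""
    return 1.0 / math.sqrt(beta)

print(net_signal_gain(0.5, 2.0))          # 50% throughput, pulse halved: net loss
print(f"{min_transmission(2.0):.1%}")     # threshold for halving the pulse
print(f"{min_transmission(1.3):.1%}")     # threshold for undoing a 30% stretch
```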

In a microscope, the combination of scan optics, tube lenses, dichroics, and objectives can result in GDD on the order of 5000 fs². For many users, with a pulse duration of ~100 fs and modest dispersion from the microscope of ~3300 fs², the pulse stretches to ~130 fs. This 30% increase constrains the compensation-arm efficiency to be >88%.

Keeping transmission efficiency in mind, a second decision affecting the choice of compensator is the ability to compensate for higher order dispersion, which can also limit the pulse duration [212]. Table 2 lists the sign of the GDD and TOD for glass, prisms, gratings, and grisms.

Table 2.

Sign Value of Second- and Third-Order Dispersion for Glass, Prisms, Gratings, and Grisms

| | GDD | TOD |
|---|---|---|
| Glass | + | + |
| Grating | − | + |
| Prisms | − | − |
| Grisms | − | − |

Table 2 shows that glass in general exhibits positive GDD and TOD. It is therefore desirable that the compensator match the magnitude of this dispersion but be opposite in sign. Gratings, whose TOD has the same sign as that of glass, can quickly become limiting because the grating's TOD adds to that of the glass. Thus, most compensators used for multiphoton microscopy employ prisms. Prisms can be cut at Brewster's angle, and, consequently, prism compensators have excellent transmission efficiency [213,214]. The choice of prism glass is critical. Glasses like SF10 seem desirable because prisms made from these materials are highly dispersive, which results in a compact prism geometry. However, while the TOD from the prism has the correct sign, its magnitude is incorrect. Consequently, the pulse duration at focus quickly becomes TOD limited as a result of the prism compensator [215]. This has driven the glass choice toward materials such as fused silica. Fused-silica prisms still ultimately limit the TOD compensation, but pulses shorter than 20 fs can be compensated in microscopes, which is adequate for most systems.

The less dispersive glass choice requires a greater prism separation. However, a careful choice of geometry can still yield a compact system, as demonstrated by Akturk et al. [216]. Using a single prism in conjunction with a corner cube and roof mirror, they created a compact dispersion-compensation system with good throughput. With PBH71 glass, they achieved 15,000 fs² of dispersion at 800 nm with a transmission efficiency of 75%. The displacement between the corner cube and the prism in this instance was just 30 cm. A notable advantage of the system is that a single-prism design is much more amenable to systems in which the wavelength is tuned over a broad range. Akturk et al. show that only a 10° rotation of the prism is required to accommodate the wavelength range of 700–1100 nm.

A notable change in dispersion-compensation methods has been the availability of mirror coatings that provide substantial GDD suitable for pulse-width correction while maintaining high reflectivity (>99%) over a broad wavelength range (0.7–1.0 μm). For example, a coating may have −200 fs² of GDD at 800 nm. For a microscope with dispersion on the order of 3000–5000 fs², 15–25 reflections off the coating are required for complete GDD compensation. The net transmission is then 78%–86%. For a 100-fs pulse at 800 nm, this range of dispersion stretches the pulse to roughly 130–170 fs. The signal gain from pulse-width reduction will therefore be almost exactly offset by the transmission losses.
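This budget can be checked by combining the bounce count, the 99% per-bounce reflectivity, and the α²β figure of merit. The sketch uses −200 fs² per bounce and a 100-fs input pulse, following the example above. Three further assumptions are ours: a Gaussian pulse shape, the GDD broadening relation τ_out = τ_in·√(1 + (4 ln2·ϕ2/τ_in²)²), and rounding the bounce count up with `ceil`.

```python
import math

def broadened_fwhm(tau_in_fs, gdd_fs2):
    """Gaussian-pulse FWHM after pure GDD (transform-limited input assumed)."""
    return tau_in_fs * math.sqrt(1.0 + (4.0 * math.log(2.0) * gdd_fs2 / tau_in_fs ** 2) ** 2)

def chirped_mirror_budget(microscope_gdd_fs2, gdd_per_bounce_fs2=-200.0,
                          reflectivity=0.99, tau_in_fs=100.0):
    """Bounces, net throughput alpha, stretch factor beta, and alpha^2 * beta
    for fully cancelling the microscope GDD with chirped-mirror bounces."""
    bounces = math.ceil(microscope_gdd_fs2 / abs(gdd_per_bounce_fs2))
    alpha = reflectivity ** bounces
    beta = broadened_fwhm(tau_in_fs, microscope_gdd_fs2) / tau_in_fs
    return bounces, alpha, beta, alpha ** 2 * beta

for gdd in (3000.0, 5000.0):
    n, alpha, beta, merit = chirped_mirror_budget(gdd)
    print(f"{gdd:.0f} fs^2: {n} bounces, T = {alpha:.0%}, "
          f"stretch x{beta:.2f}, alpha^2*beta = {merit:.2f}")
```

At both ends of the range, α²β stays close to 1. This is the near-exact offset between shorter pulses and reflection losses described above.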

It is interesting to note that the ratio of TOD to GDD is relatively constant for most materials. At 800 nm, this ratio is approximately +0.247 fs. The ratio of TOD to GDD for a prism compensator does not match that of materials, which is why the prism compensator itself ultimately becomes the limiting element in achieving transform-limited pulses at focus. A grating written onto a prism (or a grating and prism separated only by a small air gap) is known as a grism [217]. Grisms not only provide GDD and TOD correction of the correct sign, but they can also be engineered to have the correct ratio of 0.247 fs. Thus, grisms enable quartic-phase-limited dispersion compensation for large material path lengths. They can be configured such that throughput of >70% is achievable [218]. Grisms are the best choice when the glass path length in the microscope becomes significant, corresponding to GDD on the order of 10,000 fs².

4.1a. Dispersion Compensation and Pulse Compressors

There are many examples of dispersion compensation or pulse compression systems. These include the use of diffraction grating pairs [219–221], prism pairs [214,215,222–224], chirped mirrors [225–227], and the use of spatial light modulators (SLMs) or acousto-optic modulators (AOMs) for pulse shaping [89,160,207,228–234].

4.2. Pulse Measurements

It is important to have detailed knowledge of the spatial and temporal characteristics of an ultrashort pulse at the focus of an objective, especially for pulses below ~200 fs. This knowledge ensures optimum resolution and the highest efficiency for nonlinear photon production [230]. Quantitative metrics of the pulse intensity are also necessary for in vivo samples to maintain sample viability, since inefficient pulse shapes can lead to undesirable bleaching. In this section we present interferometric two-photon absorption autocorrelation (TPAA) in a photodiode, along with examples of dispersion for first-, second-, and third-order autocorrelation measurements. The strength of interferometric autocorrelation methods is that they are straightforward to implement and are suitable for optimizing the excitation efficiency for most multiphoton imaging applications. They are, however, fundamentally unable to extract the actual shape and phase of the pulse [210]. As such, a Gaussian or hyperbolic secant (sech) pulse shape is usually assumed. A suite of much more sophisticated pulse-measurement techniques that are well matched to a microscope has therefore been developed. In particular, frequency-resolved optical gating (FROG [235–241]) and spectral phase interferometry for direct electric-field reconstruction (SPIDER [208,240–244]) can provide additional information. Furthermore, multiphoton intrapulse interference phase scan (MIIPS [209,239,240,245–250]) not only measures the pulse but can shape it as well. Many papers detail the utility of performing autocorrelations as a measure of a microscope system’s two-photon imaging performance [149,215,236,251,252].

4.2a. Interferometric Autocorrelations

Autocorrelation measurements are taken by sweeping an identical copy of the pulse across itself. This is accomplished by propagating the pulse through an interferometer where one of the arms has a variable length and is thus capable of providing an adjustable time delay (τ). A balanced autocorrelator provides equal amounts of material and coatings such that each pulse experiences an identical amount of dispersion. Additionally, interferometric autocorrelations can be performed such that the full back aperture of the objective is used—the measurement is taken at the full NA of the objective—and thus gives an accurate representation of performance under imaging conditions [47,253].

To take an autocorrelation of the beam with meaningful pulse-width information, you must have a material at the focus of your objective capable of producing a nonlinear, intensity-dependent signal. This intensity-dependent interaction acts as the ultrafast gating function, which allows a time-dependent measurement of the pulse with a detector that has a bandwidth or frequency response substantially below that of the optical pulse.

A typical and easy method of obtaining the autocorrelation is to measure the TPEF of a sample containing a fluorescent dye. Easier still is to use a GaAsP photodiode, which has a two-photon spectral response from 600 to 1360 nm [252]. This range adequately covers the tunable range of a Ti:sapphire laser and the typical central wavelengths of many other lasers used for multiphoton microscopy. Additionally, GaAsP photodiodes are inexpensive and are not susceptible to the photobleaching or photodamage typical of fluorescent dyes.

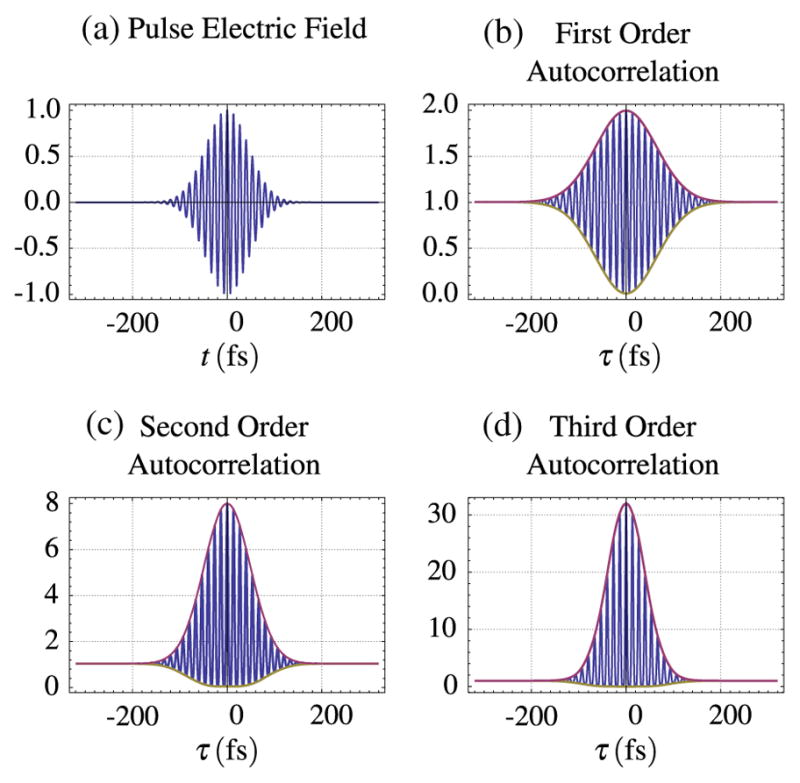

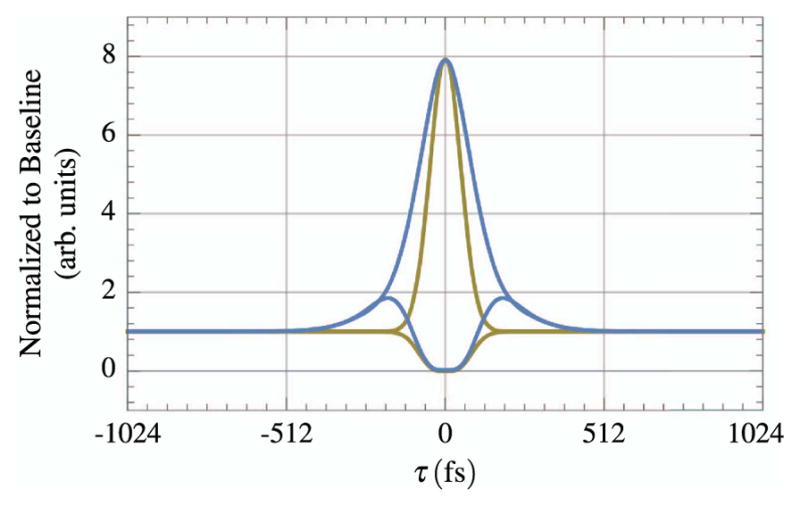

Figure 15 shows an example of three different autocorrelations. The first-order correlation reveals nothing about the pulse width; it measures only the coherence length of the laser. The higher-order autocorrelations, which make use of a nonlinear, intensity-dependent signal, can provide information about the amount and types of dispersion in the pulse. For second-order interferometric autocorrelations, the ratio of the peak of the envelope to the nonzero baseline is 8:1, whereas for a third-order autocorrelation the ratio is 32:1 [149]. Figure 16 presents an example of the effect of GDD on an ultrashort pulse as measured by a second-order autocorrelation.
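The peak-to-baseline ratios follow from the form of an n-th-order interferometric autocorrelation, ∫|E(t) + E(t − τ)|²ⁿ dt. At zero delay the fields add coherently, giving 2²ⁿ times the single-pulse integral. At large delay the two copies no longer overlap, giving 2 times that integral. The ratio is therefore 2²ⁿ⁻¹, which is 2, 8, and 32 for n = 1, 2, 3. The sketch below confirms this numerically for a hypothetical 64-fs Gaussian pulse at 800 nm. The pulse parameters and grid are arbitrary choices, since the ratios do not depend on them.

```python
import cmath
import math

C_NM_PER_FS = 299.792458  # speed of light in nm/fs

def pulse(t_fs, tau_fs=64.0, wavelength_nm=800.0):
    """Transform-limited Gaussian field (intensity FWHM tau_fs) including its carrier."""
    omega0 = 2.0 * math.pi * C_NM_PER_FS / wavelength_nm  # carrier frequency, rad/fs
    return math.exp(-2.0 * math.log(2.0) * (t_fs / tau_fs) ** 2) * cmath.exp(1j * omega0 * t_fs)

def interferometric_ac(delay_fs, order, ts):
    """n-th order interferometric autocorrelation: sum over t of |E(t) + E(t - delay)|^(2n)."""
    return sum(abs(pulse(t) + pulse(t - delay_fs)) ** (2 * order) for t in ts)

ts = [-300.0 + 0.1 * k for k in range(13001)]  # 0.1-fs grid from -300 to +1000 fs
far = 640.0                                    # delay much longer than the pulse: baseline
for n in (1, 2, 3):
    ratio = interferometric_ac(0.0, n, ts) / interferometric_ac(far, n, ts)
    print(f"order {n}: peak/baseline = {ratio:.2f}")
```

Scanning `delay_fs` finely around zero would trace out the full fringe-resolved curves of Fig. 15. Chirping `pulse` would show the reduced fringe contrast in the wings that Fig. 16 illustrates.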

Figure 15.

Autocorrelation orders

Figure 16.

Example of the effect of 3375 fs² of GDD on a second-order autocorrelation of an ultrashort pulse (τin = 64 fs). The initial pulse is in yellow and the dispersed pulse is in blue. The envelopes are normalized to the baseline value.

5. The Image Relay System

Up through Section 4 we have focused on how to produce and maintain short, high-energy laser pulses in a microscope system. While these are essential aspects of an MPLSM system, we have yet to discuss the process of constructing an image by using a raster-scanned focal spot of the laser. In this section, we give a brief description of the image-construction process (Section 5.1) and outline the fundamentals of laser scanning (Section 5.2). We then briefly discuss the limitations of paraxial system design (Section 5.3). We will also discuss the use of computer-aided optical design for optimizing the spatial properties of the focused pulse while scanning (Sections 5.4 and 5.5), as well as improving FOV and field curvature (Sections 5.3a and 5.5). Finally, we expand our discussion to cover multifocal approaches to increase data acquisition rates (Section 5.4). Alignment techniques are not discussed in this section, but Heintzmann [254] presents a basic and accessible introduction to laser-beam alignment from beam collimation to mirror and lens alignment.

5.1. Image Construction in MPLSM

As outlined in Section 2, a significant advantage of MPLSM over other imaging modalities is its relative insensitivity to scattering by turbid (e.g., biological) media. Nonlinear contrast mechanisms limit the excitation to within the laser focal volume, as mentioned in Section 2.3. This enables whole-field detection, eliminating the confocal pinhole: the nonlinear signal is collected and quantified by a nonimaging detector, such as a photomultiplier tube. Since the signal is known to have originated from the focal point, all the collected nonlinear light can be attributed to that location in the specimen.

To form an image, the intensity of the nonlinear signal is quantified for each voxel by scanning the focal point relative to the specimen. While images can be formed by scanning the specimen while the laser focus remains stationary—a relatively simple and straightforward solution—scanning the laser focus across a static sample is often more desirable because of superior image acquisition speed and specimen stability, although it is more difficult to implement. Laser scanning requires that the beam’s incident angle vary while remaining centered on the objective’s back aperture; this prevents vignetting. Thus, the process of scanning not only determines the FOV but also can have a dramatic effect on the efficiency of excitation across the region of the scan.

The simplest version of a multiphoton microscope has a single focal point that is scanned through the region of interest. While numerous multifocal MPLSM systems have been reported [31,139–141,144,255–263], we treat the single-focus system first to illustrate the problem of beam delivery to the specimen. We then broaden the discussion to include multifocal imaging techniques and discuss some of the unique issues that arise from such systems.

5.2. Single Focus System

While systems for scanning 2D planes in the object region with arbitrary orientation have been reported [42,45,82,264–273], we will concentrate on systems that decouple the axial scanning from the lateral scanning. In such a system, volumetric images are collected by the sequential scanning of a lateral plane, oriented perpendicular to the optical axis, as the axial position of the specimen is varied. Lateral scanning of the focal volume is the key to image formation in this configuration.

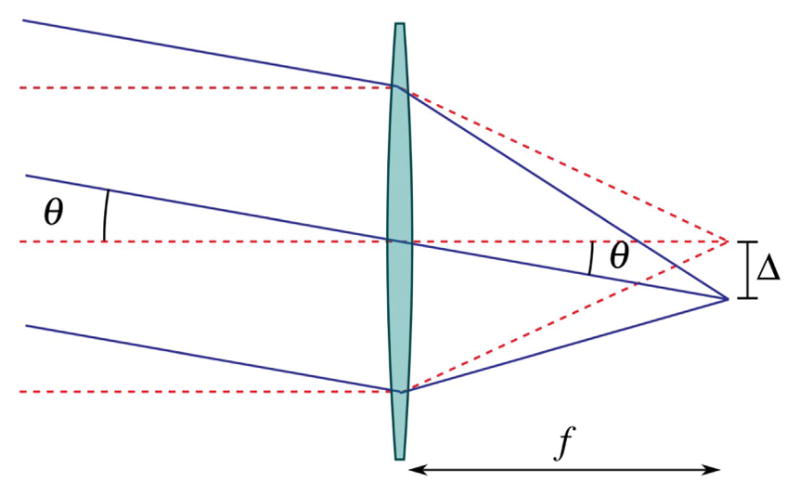

To laterally deflect the focal spot in the object plane, a controlled incident angle is applied to the collimated excitation beam at the back aperture of the objective lens. This is shown schematically in Fig. 17. In the paraxial approximation, the magnitude of the deflection in the object plane (Δ) is proportional to the focal length of the objective lens (f) and the angle of incidence with respect to the optic axis (θ) at the optic’s back aperture. The crux of laser scanning is to design a system to change the angle of incidence of the spatially collimated illumination at the back aperture of the objective lens without vignetting.

Figure 17.

Lateral scanning with an infinity-corrected optic. To deflect the focal point in the object region, the angle of the collimated input beam must be varied with respect to the optic axis without translating the beam position at the input of the lens. The focal point of the illumination is deflected in the object region by Δ = f tan θ ≈ f θ in the small angle approximation.
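The relation Δ = f tan θ sets the scan angle the objective must receive. The sketch below uses the 500-μm field of view and 40× objective from the design example in Section 5.3. It assumes the objective focal length equals a reference tube-lens focal length divided by the magnification. The 200-mm reference is our assumption, since reference tube-lens focal lengths differ between vendors.

```python
import math

def scan_angle_deg(half_fov_um, f_obj_mm):
    """Angle at the objective back aperture that moves the focus by half_fov_um,
    from Delta = f * tan(theta)."""
    return math.degrees(math.atan(half_fov_um * 1e-3 / f_obj_mm))

# Hypothetical: 40x objective designed for a 200-mm tube lens -> f_obj = 5 mm.
f_obj_mm = 200.0 / 40.0
theta = scan_angle_deg(250.0, f_obj_mm)  # half of a 500-um field of view
paraxial = math.degrees(0.25 / f_obj_mm)  # Delta ~ f * theta
print(f"theta_max = {theta:.3f} deg (paraxial: {paraxial:.3f} deg)")
```

At these small angles the exact and paraxial forms agree to better than 0.1%, which is why the paraxial design in Section 5.3 is a reasonable starting point.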

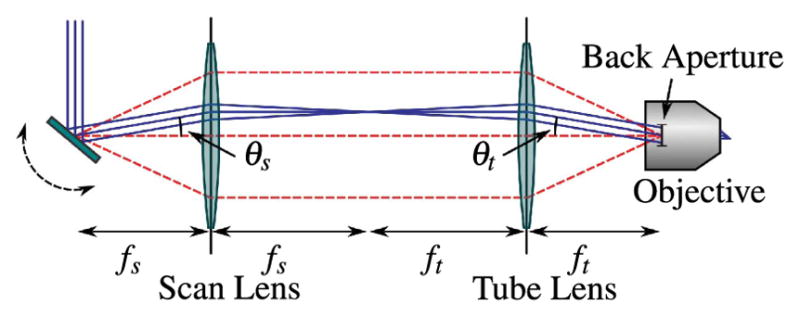

Scanning the angle of a laser beam can be accomplished in a variety of ways, including acousto-optic deflectors (AODs [274–276]), resonant [54,277] and nonresonant [30,33] galvanometric scan mirrors, polygonal scan mirrors [12,278], and microelectromechanical-system mirror (MEMS [279–281]) devices. The predominant method is to use a pair of galvanometric scan mirrors, one for each lateral dimension, to deflect an incoming beam in the lateral plane, and examples of this design are presented in related texts [90,135,282–284]. This is shown for one lateral dimension in Fig. 18, where the optical axis is indicated with a dashed line. At the back aperture of the objective, we require that the beam be collimated and incident, such that it does not walk off the aperture (vignetting), with an angle that varies as the scanners are rotated. The scan optics provides a system for mapping the angle of beam deflection by the scan mirrors to the angle of beam incidence on the objective. A straightforward solution is to image relay the scan mirrors to the back aperture of the objective lens using a double-sided telecentric system.

Figure 18.

Simple laser scanning system in the paraxial approximation. A collimated input beam is deflected by a scanning mechanism, such as a galvanometric mirror. Two lenses are used in a 4f configuration to image the scanning device to the back aperture of the illumination objective, as indicated by the red dashed lines. The lens closest to the scanner is referred to as the scan lens and has focal length f_s, while the other lens is referred to as the tube lens and has focal length f_t.

Image telecentricity is obtained by placing the stop before the optics such that the chief rays for different field angles are parallel at the focal plane. The reverse of image telecentricity is object telecentricity, and a combination of the two systems in series gives double-sided telecentricity [285]. A lens is not inherently telecentric, as telecentricity is a function of stop placement. However, when a scan lens is referred to as telecentric, it usually means two things. First, the lens satisfies the F-theta condition, that is, the image height scales linearly with scan angle. Second, the stop is placed at the scanning device so as to ensure telecentricity.

To build a relay system with double-sided telecentricity, the first relay lens is placed one focal length after the scan mirrors, the second relay lens is placed one focal length before the objective back aperture, and the relay lenses are separated by the sum of their focal lengths. Notice that the telecentric region is between the lenses as opposed to other double-sided telecentric systems in which there is telecentricity on either side of the relay system. This configuration is referred to as a 4f relay system due to the position of the relay lenses. Any difference between their focal lengths results in some magnification, which can be useful as outlined in Sections 5.3 and 5.4. This system ensures that the chief rays for different field, and in our case, scan, angles are parallel between the relay lenses and the beam does not walk off the objective back aperture as it is scanned.
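The magnification from unequal relay focal lengths trades scan angle for beam size. The beam is enlarged by M = f_t/f_s, and the tangent of the scan angle is reduced by the same factor. The sketch below assumes ideal thin lenses in an exact 4f layout. The 2-mm beam, ±10° optical scan, and 50/200-mm focal lengths are hypothetical values, not a recommended design. Note that a mirror's optical deflection is twice its mechanical rotation.

```python
import math

def relay_4f(optical_angle_deg, beam_diameter_mm, f_scan_mm, f_tube_mm):
    """Ideal thin-lens 4f relay from the scan mirror to the objective back aperture.

    Returns (angle at the objective in degrees, beam diameter there in mm).
    The beam is magnified by M = f_tube / f_scan and tan(angle) is divided by M.
    """
    m = f_tube_mm / f_scan_mm
    angle_out = math.degrees(math.atan(math.tan(math.radians(optical_angle_deg)) / m))
    return angle_out, beam_diameter_mm * m

# Hypothetical values: 2-mm beam, 10-deg optical scan, f_s = 50 mm, f_t = 200 mm.
angle, diameter = relay_4f(10.0, 2.0, 50.0, 200.0)
print(f"angle at objective: {angle:.2f} deg, beam diameter: {diameter:.1f} mm")
```

In practice, M is usually chosen so that the expanded beam fills (or slightly overfills) the objective back aperture. The angle reduction that comes with it then sets how far the scanners must rotate for a given field of view.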

5.3. Paraxial Scan System Design

Designing a laser-scanning microscope with an imaging system for the scanners is straightforward in the paraxial approximation. As an example, suppose that we are designing an MPLSM system that requires a 500-μm FOV and a lateral spatial resolution ($d$) of ~1 μm. Given a source wavelength of 1040 nm (corresponding to our Yb:KGW laser oscillator), we want to select an objective lens that will provide the required spatial resolution and a pair of image-relay lenses that will allow image formation over the desired FOV.

First, let us select an objective lens based on the spatial-resolution requirement. While the characteristics of the objective lens will be discussed in detail in Section 6, we briefly note that the lateral spatial resolution under two-photon excitation with tightly focused excitation light is well described by a Gaussian fit to the intensity distribution in the object region. The spatial resolution, expressed as the radius $\omega_{xy}$ at which the square of the illumination point spread function (IPSF²) falls to 1/e of its maximum, is given in [99] (cited by [37,73,286]) as

$$
\omega_{xy} =
\begin{cases}
\dfrac{0.320\,\lambda}{\sqrt{2}\,\mathrm{NA}}, & \mathrm{NA} \le 0.7,\\[6pt]
\dfrac{0.325\,\lambda}{\sqrt{2}\,\mathrm{NA}^{0.91}}, & \mathrm{NA} > 0.7,
\end{cases}
\tag{17}
$$

where λ is the wavelength of the illumination light and NA is the numerical aperture of the objective lens. We define the lateral spatial resolution of the imaging system as the full width at the 1/e²-point of the IPSF²: $d = 2\sqrt{2}\,\omega_{xy}$, which for NA ≤ 0.7 gives $d = 0.640\,\lambda/\mathrm{NA}$. Solving for NA under the assumption that it is less than 0.7, we find that an objective lens with 0.65 NA is sufficient to provide approximately 1 μm spatial resolution with 1040 nm illumination light. Thus we select a 40×/0.65 NA objective lens. Given this objective, we now select a scan lens and tube lens that will provide the desired FOV. Practically, this amounts to selecting a tube lens with an appropriate f-number (f/#): the ratio of the focal length to the aperture of the lens.
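The resolution estimate can be reproduced numerically. This sketch implements the two-branch fit of Eq. (17) and converts the 1/e radius to the 1/e² full width assuming a Gaussian intensity profile of the form $\exp(-r^2/\omega_{xy}^2)$, so that $d = 2\sqrt{2}\,\omega_{xy}$; the function names are illustrative, and the inversion is valid only on the NA ≤ 0.7 branch.

```python
import math


def omega_xy_um(wavelength_um: float, na: float) -> float:
    """1/e radius of the lateral IPSF^2, Eq. (17), in micrometres."""
    if na <= 0.7:
        return 0.320 * wavelength_um / (math.sqrt(2) * na)
    return 0.325 * wavelength_um / (math.sqrt(2) * na ** 0.91)


def lateral_resolution_um(wavelength_um: float, na: float) -> float:
    """Full width at the 1/e^2 point, assuming I ~ exp(-r^2/omega^2): d = 2*sqrt(2)*omega."""
    return 2 * math.sqrt(2) * omega_xy_um(wavelength_um, na)


def na_for_resolution(wavelength_um: float, d_um: float) -> float:
    """Invert d = 0.640 * lambda / NA; only valid when the result is <= 0.7."""
    na = 2 * 0.320 * wavelength_um / d_um
    if na > 0.7:
        raise ValueError("required NA exceeds 0.7; use the NA > 0.7 branch of Eq. (17)")
    return na


if __name__ == "__main__":
    lam = 1.040  # um, Yb:KGW oscillator wavelength
    print(f"NA needed for d = 1 um: {na_for_resolution(lam, 1.0):.3f}")
    print(f"d at NA = 0.65: {lateral_resolution_um(lam, 0.65):.3f} um")
```

Strictly, $d = 1$ μm calls for NA ≈ 0.666, while the 0.65 NA objective gives $d \approx 1.02$ μm, which is the "approximately 1 μm" quoted above.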

The aperture of the tube lens ($A_t$) must be large enough to pass the full diameter of the illumination beam at the maximum scan angle ($\theta_{\max}$). Because the chief ray at the tube lens is displaced by up to $f_t\tan\theta_{\max}$ on either side of the optic axis, the aperture of the tube lens must be greater than or equal to the sum of the beam diameter ($D_b$) and the full excursion of the chief ray:

$$A_t \ge D_b + 2 f_t \tan\theta_{\max} \tag{18}$$

Since the spatial resolution we computed above is achieved only when the back aperture of the objective is filled, we will assume that $D_b$ is equal to the diameter of the back aperture. Under the paraxial approximation, the diameter of the objective back aperture ($A_o$) is

$$A_o = 2 f_o\,\mathrm{NA} \tag{19}$$

where $f_o$ is the focal length of the objective lens. The angle $\theta_{\max}$ is related to $f_o$ and the desired FOV. Once again exploiting the paraxial approximation, this angle is

$$\theta_{\max} \approx \tan\theta_{\max} = \frac{\mathrm{FOV}}{2 f_o} \tag{20}$$

As expected, the aperture of the tube lens is set by the desired FOV and by the focal length and NA of the objective lens:

$$A_t \ge 2 f_o\,\mathrm{NA} + \frac{f_t\,\mathrm{FOV}}{f_o} \tag{21}$$

The focal length of an infinity-corrected objective lens can be determined from the magnification $M$ of the lens and the focal length $f_{TL}$ of the manufacturer-prescribed tube lens (see Section 6). For the UIS-series Zeiss lens (Zeiss, Thornwood, New York, USA) we have selected, the focal length of the tube lens is $f_{TL} = 164.5$ mm, so the focal length of the objective lens is

$$f_o = \frac{f_{TL}}{M} = \frac{164.5\ \mathrm{mm}}{40} \approx 4.11\ \mathrm{mm} \tag{22}$$

Equations (21) and (22) can be used to determine the required aperture of the tube lens in terms of the desired FOV and the parameters obtainable from the objective lens (i.e., the magnification and the NA):

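$$A_t \ge \frac{2\,\mathrm{NA}\,f_{TL}}{M} + \frac{f_t\,M\,\mathrm{FOV}}{f_{TL}} \tag{23}$$

where $M$ is the magnification of the objective, provided that the manufacturer-prescribed focal length of the tube lens, $f_{TL}$, is known.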

While Eq. (23) serves as a quick rule of thumb for selecting the aperture of the tube lens, it is more often the case that the aperture is fixed and the focal length of the tube lens is a free parameter. Therefore, it is more useful to rearrange this expression to

$$f_t \le \frac{f_{TL}}{M\,\mathrm{FOV}}\left(A_t - \frac{2\,\mathrm{NA}\,f_{TL}}{M}\right) \tag{24}$$

For a given aperture, Eq. (24) provides a good rule of thumb for selecting the focal length of the tube lens. In the present example, we will assume that the aperture of the tube lens is 30 mm, requiring that the focal length of the lens be less than or equal to 200 mm.
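The paraxial design steps of Eqs. (19)–(24) can be chained in a few lines. This is a sketch under stated assumptions: a manufacturer tube-lens focal length of 164.5 mm for the 40×/0.65 NA objective, a 500 μm FOV, and a 30 mm tube-lens aperture; the `ScanDesign` container and function names are hypothetical.

```python
from typing import NamedTuple


class ScanDesign(NamedTuple):
    f_o_mm: float         # objective focal length, Eq. (22)
    a_o_mm: float         # back-aperture diameter = D_b, Eq. (19)
    tan_theta_max: float  # paraxial half-field angle at the back aperture, Eq. (20)
    f_t_max_mm: float     # largest tube-lens focal length allowed, Eq. (24)


def design_tube_lens(fov_mm: float, mag: float, na: float,
                     f_tl_mm: float, a_t_mm: float) -> ScanDesign:
    f_o = f_tl_mm / mag
    a_o = 2.0 * f_o * na
    tan_theta_max = fov_mm / (2.0 * f_o)
    f_t_max = (f_tl_mm / (mag * fov_mm)) * (a_t_mm - 2.0 * na * f_tl_mm / mag)
    return ScanDesign(f_o, a_o, tan_theta_max, f_t_max)


def required_aperture_mm(d: ScanDesign, f_t_mm: float) -> float:
    """Minimum tube-lens aperture for a chosen f_t, Eqs. (18) and (21)."""
    return d.a_o_mm + 2.0 * f_t_mm * d.tan_theta_max


if __name__ == "__main__":
    # 500 um FOV, 40x/0.65 NA objective, assumed 164.5 mm tube lens, 30 mm aperture.
    d = design_tube_lens(fov_mm=0.5, mag=40, na=0.65, f_tl_mm=164.5, a_t_mm=30.0)
    print(d)
    print(f"aperture needed with a 200 mm tube lens: {required_aperture_mm(d, 200.0):.2f} mm")
```

Under these assumptions the limit comes out at roughly 203 mm, consistent with choosing a stock tube lens of at most 200 mm; a 200 mm tube lens then needs about 29.7 mm of clear aperture.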

In the simplest case, the focal lengths of the scan and tube lenses are equal. However, in some cases the beam must be magnified to fill the objective back aperture. Appropriate magnification of the beam is achieved by adjusting the ratio of focal lengths between the tube and scan lenses, $f_t/f_s$. With the scan-lens focal length determined, the aperture of the scan lens can be computed to ensure selection of a lens with the proper f/#.

In the present example, a beam diameter of ~5.34 mm is not so large as to warrant beam expansion within the scan system, and it is practical to select a set of identical scan and tube lenses.

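These equations can also be used to determine the scan angle required at the scanner, $\theta_s$; for a 4f relay, $\tan\theta_s = (f_t/f_s)\tan\theta_{\max}$. This check ensures that the scanning mechanism can provide the deflection needed to achieve the prescribed FOV.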
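The scan-angle check and the beam-expansion choice can be sketched together. The objective focal length (4.1125 mm) and back-aperture diameter (5.346 mm) below follow from the 164.5 mm tube-lens assumption used above; the 2.5 mm input beam in the second case is hypothetical.

```python
import math


def scan_angle_deg(fov_mm: float, f_o_mm: float, f_s_mm: float, f_t_mm: float) -> float:
    """Optical scan half-angle at the scanner for a 4f relay.

    tan(theta_max) = FOV / (2 f_o) at the back aperture, and the relay maps it to
    tan(theta_s) = (f_t / f_s) * tan(theta_max) at the scanner.
    """
    tan_theta_max = fov_mm / (2.0 * f_o_mm)
    return math.degrees(math.atan((f_t_mm / f_s_mm) * tan_theta_max))


def scan_focal_length_mm(f_t_mm: float, d_in_mm: float, d_out_mm: float) -> float:
    """Scan-lens focal length that expands a beam from d_in to d_out (f_t / f_s = d_out / d_in)."""
    return f_t_mm * d_in_mm / d_out_mm


if __name__ == "__main__":
    f_o, d_back = 4.1125, 5.346  # mm, from the Section 5.3 example
    # Unit magnification: identical 200 mm scan and tube lenses.
    optical = scan_angle_deg(0.5, f_o, 200.0, 200.0)
    # A galvanometer mirror deflects the beam by twice its mechanical rotation.
    print(f"1:1 relay: optical {optical:.2f} deg, mechanical {optical / 2:.2f} deg")
    # Hypothetical 2.5 mm input beam expanded to fill the back aperture.
    f_s = scan_focal_length_mm(200.0, 2.5, d_back)
    print(f"expanding relay: f_s = {f_s:.1f} mm, "
          f"optical {scan_angle_deg(0.5, f_o, f_s, 200.0):.2f} deg")
```

Beam expansion in the relay comes at the cost of a proportionally larger scan angle at the mirrors, which is one reason to avoid expansion when, as here, the beam is already close to the back-aperture size.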

5.3a. Large Aperture Telecentric Lens for Nonparaxial Approximation

In Section 5.3 we made an initial design of the scan system in the paraxial approximation, meaning that the angle of deflection from the optical axis is assumed to be small. While this is a good first-order approximation for suitable commercially available scan lenses, in realistic scan systems field curvature must also be considered, because it can distort the spot size over the image plane. Moreover, since a large FOV is desirable for faster mosaic imaging, the problem is compounded the farther off-axis we scan.
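A rough sense of how field curvature competes with the depth of focus comes from the Petzval surface of a single thin lens, whose radius is $n f$. The sketch below compares the Petzval sag at a 7.5° scan angle with the Rayleigh range of the focused beam. It assumes a hypothetical thin singlet ($f = 100$ mm, $n = 1.5$), keeps only the Petzval term (astigmatism, which further curves the tangential focal surface, is ignored), and does not model the lenses of Fig. 19.

```python
import math


def petzval_sag_mm(f_mm: float, n: float, theta_deg: float) -> float:
    """Axial departure of the Petzval surface from a flat image plane (thin singlet in air).

    For one thin lens the Petzval radius is |R_p| = n * f, and the sag at image
    height h = f * tan(theta) is approximately h**2 / (2 * |R_p|).
    """
    h = f_mm * math.tan(math.radians(theta_deg))
    return h * h / (2.0 * n * f_mm)


def rayleigh_range_mm(f_mm: float, beam_radius_mm: float, wavelength_mm: float) -> float:
    """Rayleigh range at the focus of a collimated Gaussian beam (1/e^2 radius)."""
    w0 = wavelength_mm * f_mm / (math.pi * beam_radius_mm)  # focused waist radius
    return math.pi * w0 ** 2 / wavelength_mm


if __name__ == "__main__":
    f, n, lam = 100.0, 1.5, 1.04e-3    # hypothetical singlet; 1040 nm expressed in mm
    beam_radius = 5.346 / 2.0          # beam sized to the back aperture of Section 5.3
    sag = petzval_sag_mm(f, n, 7.5)    # 7.5 deg, as in the Fig. 19 spot diagrams
    z_r = rayleigh_range_mm(f, beam_radius, lam)
    print(f"Petzval sag at 7.5 deg: {sag:.3f} mm; Rayleigh range: {z_r:.3f} mm")
```

Even this optimistic estimate places the off-axis focus more than one Rayleigh range from the flat image plane, which is consistent with the degraded off-axis spots of an uncorrected lens.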

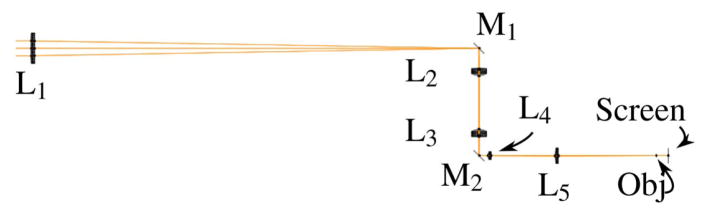

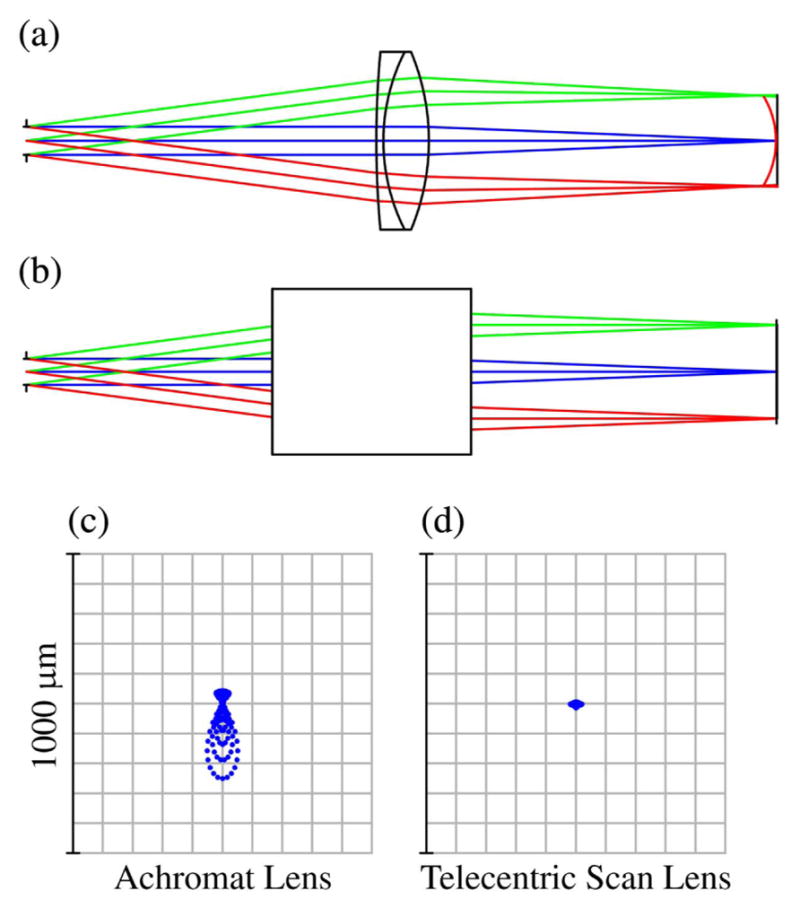

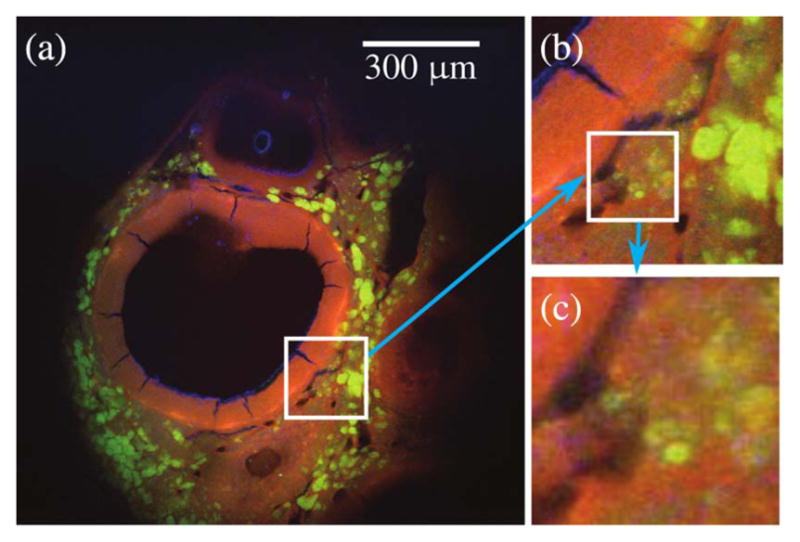

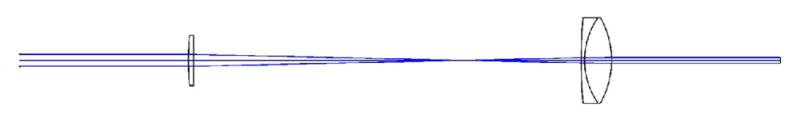

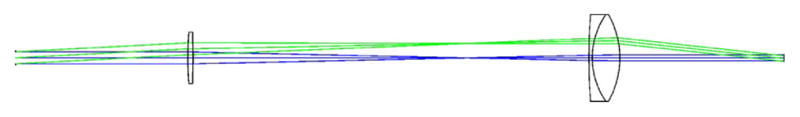

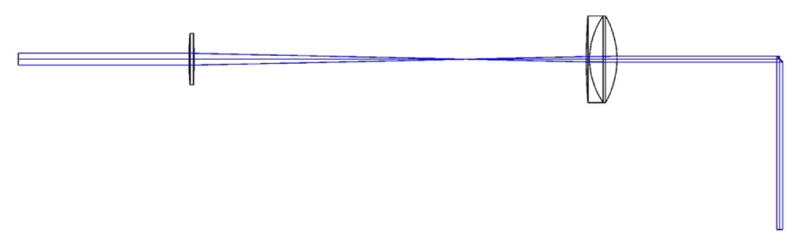

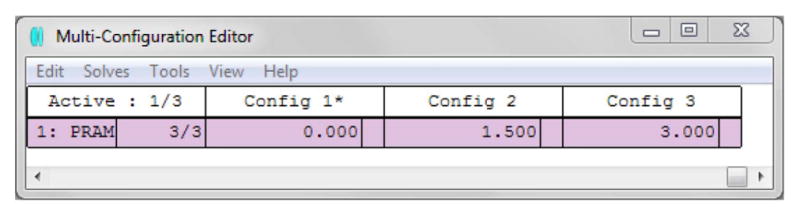

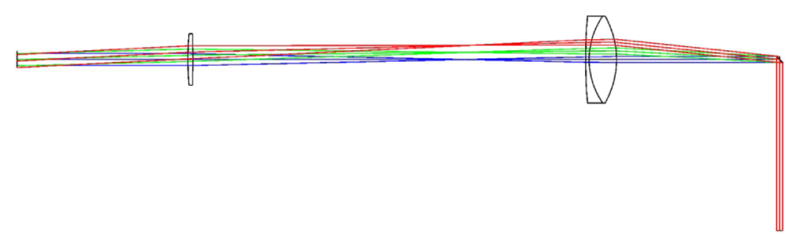

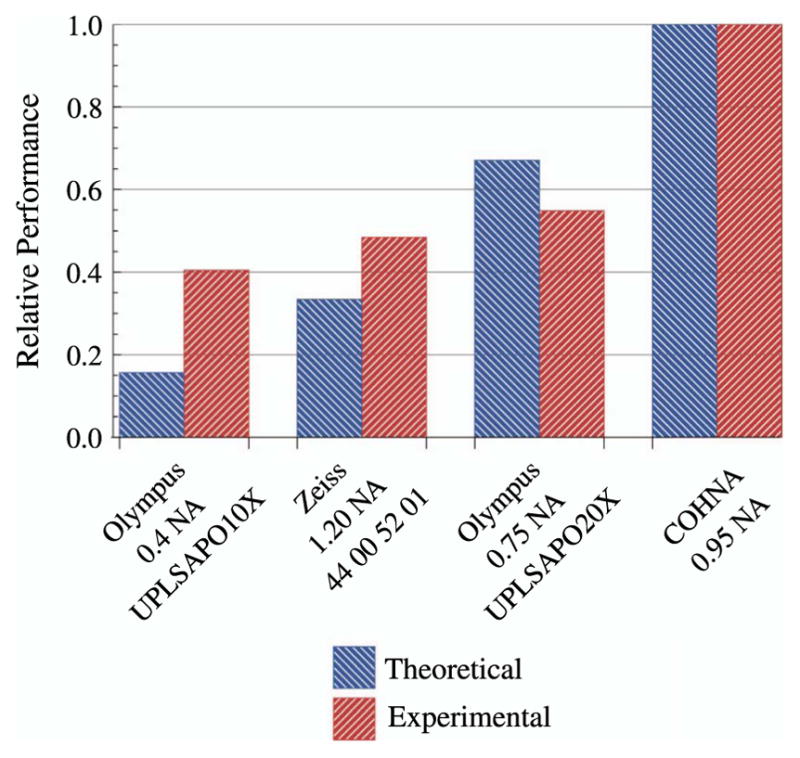

Without the paraxial approximation, even slight deflections can cause the focal spot to deviate from optimal focusing, which is achieved only for on-axis light propagation. Standard achromatic lenses are designed to minimize not only chromatic aberration but, typically, spherical aberration as well. Unfortunately, this optimization is for on-axis light. Consequently, when a collimated laser beam is scanned off-axis through a standard achromatic lens, the beam becomes significantly aberrated; the aberrations include spherical aberration, coma, astigmatism, and field curvature. However, as previously discussed in Section 5.2, certain lenses are designed and optimized for scanning applications. Figure 19 compares an achromatic lens and a scan lens designed for telecentric scanning (both commercially available), showing the quality of focus for both lenses over a scan range and the curvature of the focal plane. Because of the superior performance of the scan lens, two of them will be used for the relay system between the scan mirrors and the objective back aperture (rendered in Fig. 20). Figure 21 demonstrates the ability of the commercial scan lenses to acquire large-FOV images.

Figure 19.

ZEMAX comparisons of off-axis focusing performance between a commercial achromatic lens and a commercial telecentric scan lens. Lens diagrams (a) and (b) are the achromatic and LSM05-BB lenses, respectively. (a) also shows the field curvature of the focal plane in red. Spot diagrams (c) and (d) compare the focal spots of the lenses for 7.5° of deflection from the optical axis.

Figure 20.

Computer rendering of the basic microscope system. The two large optics in the relay system are broadband-coated (850–1050 nm) 1.6× OCT Scan Lenses (LSM05-BB, Thorlabs), which allow for a large FOV and, because they are designed for optical coherence tomography (OCT), satisfy the telecentric condition.

Figure 21.

Large FOV images of fixed murine ovarian tissue obtained with MPLSM using commercial scan lenses. 300 μm scale bar. The objective lens used was a 40 × /0.65 NA Zeiss A-Plan.

5.4. Multiple-Focus Systems

In the past decade, there has been significant effort to develop multifocal multiphoton laser scanning microscopes that parallelize the image acquisition process, enabling high-speed capture of processes in real time and opening a window into dynamic systems [31,139–141,255–259,261–263,287]. The idea of capitalizing on the abundance of power available in the latest ultrashort-pulse laser designs to generate an array of focal points was first introduced in 1998 [31,138]. Sheetz et al. [179] have demonstrated a Yb:KGW oscillator that produces six temporally and spatially separate beams directly from the cavity. For such a lateral array of foci, the scan system must still translate an angular deflection into a lateral shift of the foci. However, the optical configuration needed to transform an array of beamlets into separate foci at the sample plane, and to do so in such a way that the foci can be scanned without vignetting, becomes more complex.

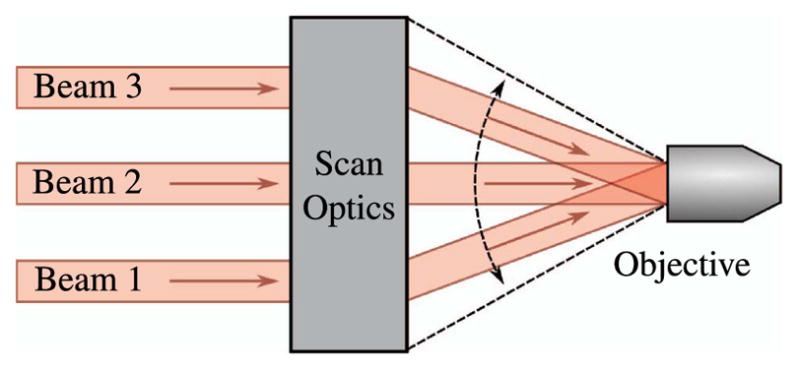

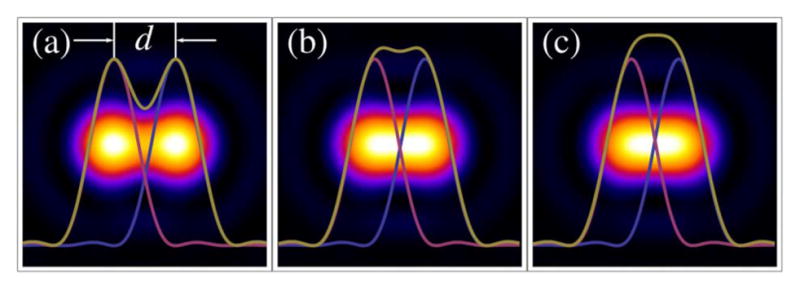

Figure 22 demonstrates what the multifocal scan optics must accomplish. The beamlets must be overlapping and collimated at the back aperture of the objective; the spot formed by the overlapping beamlets must remain fixed on the objective back aperture as they are scanned in two lateral dimensions. In Fig. 22, the angular scan range is indicated by the dashed lines representing the outer marginal rays of the outer beamlets.

Figure 22.

Telecentric scanning of multiple foci.

To achieve overlapping collimated beamlets that are properly sized to slightly overfill the back aperture of the objective lens, the scan optics must be designed with three primary considerations:

1. The lens system must focus the beamlet array to telecentric stops at the scan mirrors and at the back of the objective lens.
2. The lens system must offset the position of the beamlet waists from the focus of the lateral beamlet array; in other words, it must overlap the beamlets on the objective back aperture while maintaining collimation of the individual beamlets, as shown in Fig. 22. This requires that the tube lens act on beamlets that are diverging from a minimum waist position yet have parallel chief rays.
3. The lens system must magnify the beamlets so that the collimated spot size appropriately overfills the objective lens.

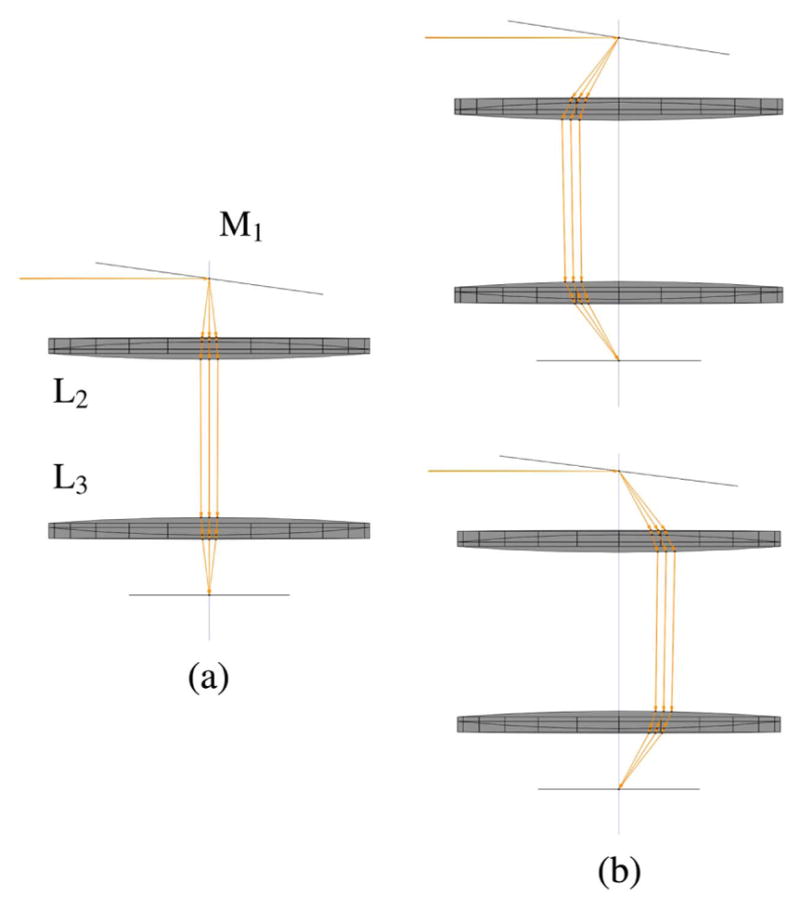

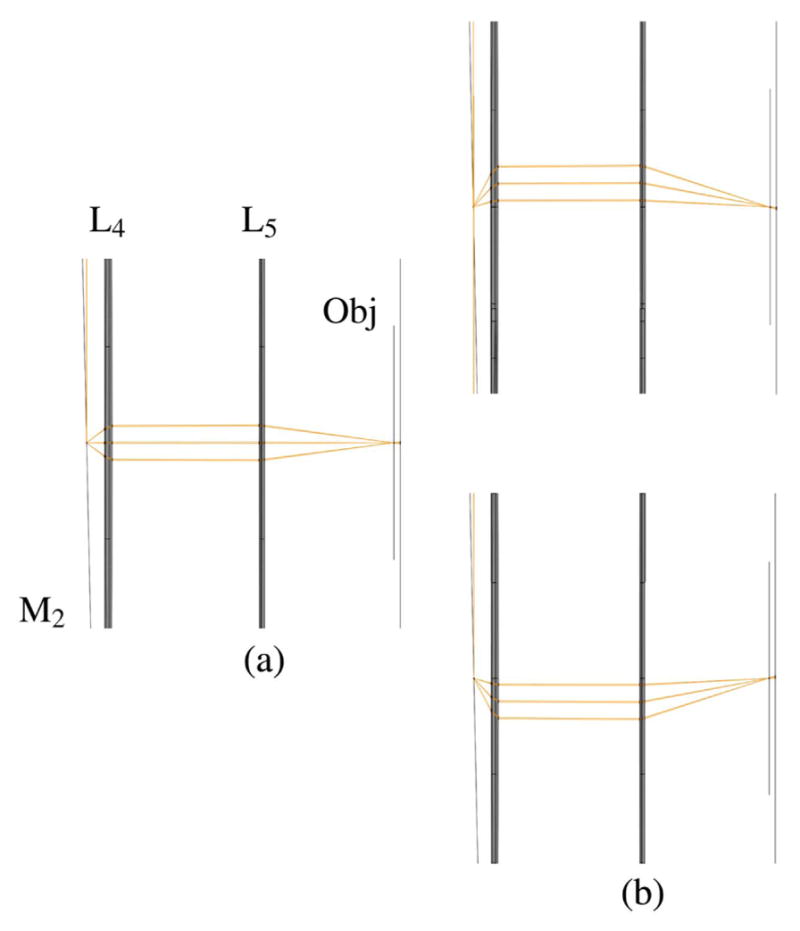

Fittinghoff and Squier [140] present a theoretical treatment of imaging multiple Gaussian beamlets to an objective that gives a good starting point for lens parameters (focal lengths and spacing) that can be refined with a paraxial ray-tracing program. However, their three-lens scan-optics system uses two closely spaced scan mirrors and forms one telecentric stop directly between the two scan mirrors, which is imaged to another telecentric stop at the back of the objective. This is common practice in many scanning-microscopy setups because commercial scan mirrors typically come mounted together in a closely spaced, periscope-type configuration, and the benefits of such simplicity usually outweigh the minor vignetting that results because the telecentric stop does not lie exactly on either scan mirror. This vignetting may be inconsequential for applications that normally require only small scan angles. However, we chose to mount the two scan mirrors separately so that one of them could later be replaced with a rotating polygonal mirror for video-rate imaging applications. Doing so adds to the complexity of the scan-optics system but allows telecentric stops to be placed exactly at each scan-mirror surface, which increases the lateral area that can be scanned without vignetting.
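Why small scan angles make the periscope compromise tolerable can be estimated paraxially. A ray pivoting about a mirror that sits a distance δ from the telecentric stop crosses the stop plane at height δ tan θ, and a 4f relay images that plane onto the objective back aperture with lateral magnification $-f_t/f_s$. The 10 mm mirror separation, unit-magnification relay, and angles below are hypothetical.

```python
import math


def beam_walk_mm(pivot_offset_mm: float, theta_deg: float, f_s_mm: float, f_t_mm: float) -> float:
    """Beam walk at the objective back aperture for a scan pivot located
    pivot_offset_mm from the telecentric stop (front focal plane of the scan lens).

    The ray crosses the stop plane at height offset * tan(theta); the 4f relay
    images that plane onto the back aperture with magnification -f_t / f_s.
    """
    height_at_stop = pivot_offset_mm * math.tan(math.radians(theta_deg))
    return (f_t_mm / f_s_mm) * height_at_stop


if __name__ == "__main__":
    # Hypothetical periscope pair 10 mm apart, stop midway (5 mm from each mirror), 1:1 relay.
    back_aperture = 5.346  # mm, from the Section 5.3 example
    for theta in (1.0, 3.0, 5.0):
        walk = beam_walk_mm(5.0, theta, 200.0, 200.0)
        print(f"{theta:.0f} deg optical: walk {walk:.3f} mm "
              f"({100 * walk / back_aperture:.1f} % of aperture)")
```

The walk scales linearly with both the stop offset and $\tan\theta$, so it stays a small fraction of the back aperture at small angles but grows with the wide scans that separately mounted mirrors are meant to support.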

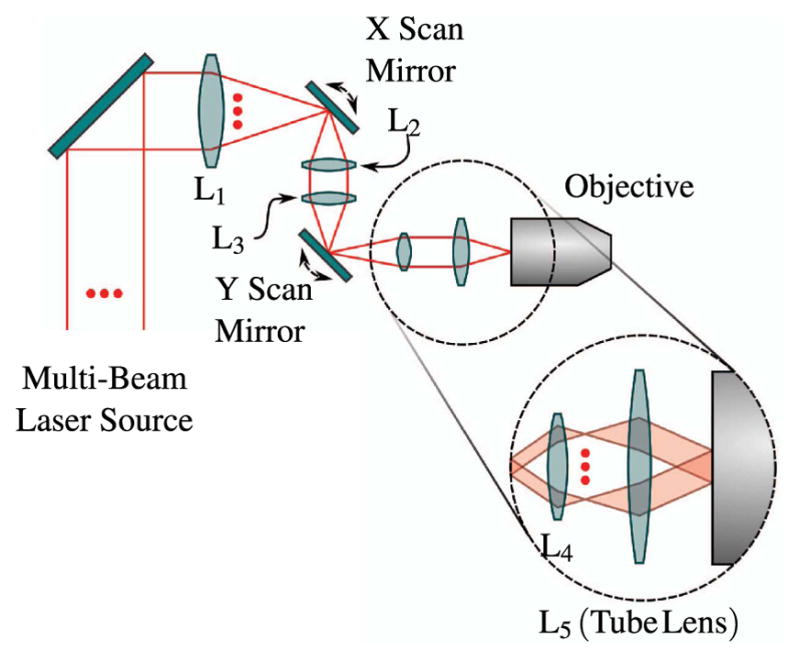

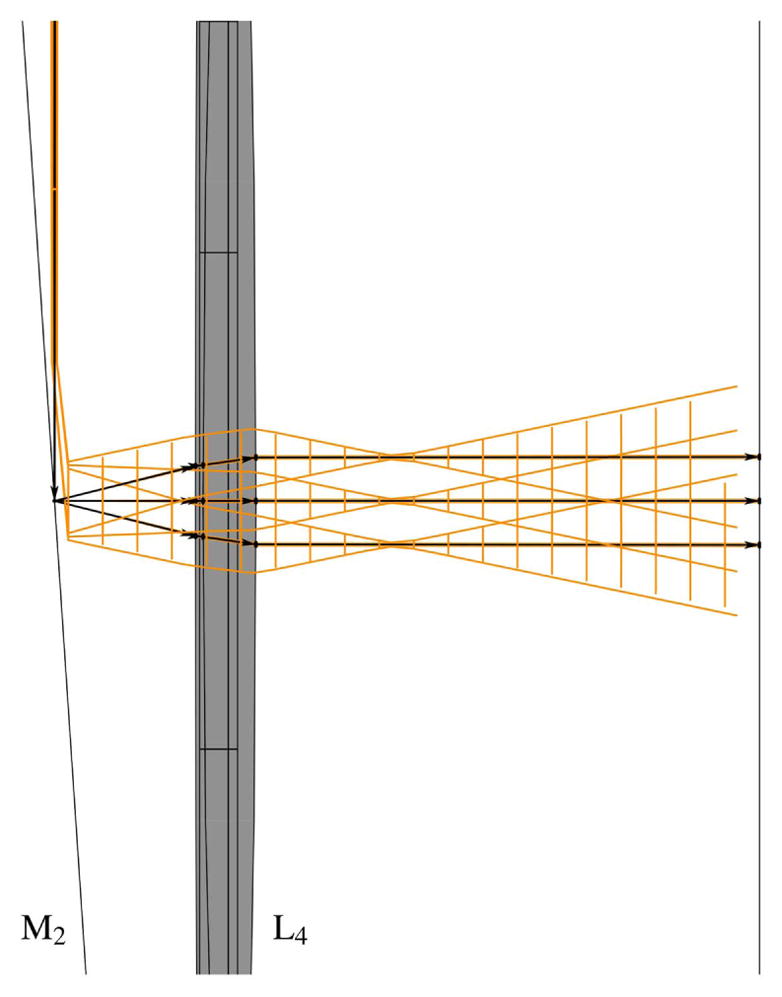

The schematic of an optical system designed to scan multiple beams with separate horizontal and vertical galvanometric scanners is shown in Fig. 23. The system is independent of both the method used to generate multiple foci and the number of foci. However, the details of the system components and geometry, provided in Table 3 and shown in Figs. 24–29, are for the multifocal system used to scan six foci generated directly from the oscillator [179]. Here, a one-dimensional (1D) array of six ~1 mm diameter parallel beams with 5 mm interbeam separation is used. All lenses are achromatic doublets designed and coated for 1-μm light (CVI Laser Optics, Albuquerque, New Mexico, USA). The first lens, L1, has a 2″ aperture and a focal length of 750 mm and focuses the beams onto the first of two 5-mm clear-aperture galvanometric scan mirrors (driven by SC2000; GSI Group, Inc., Bedford, Massachusetts, USA). The focal point of L1 forms the first of three telecentric stops in the optical system. Lenses L2 and L3 are 1″ aperture lenses with 40 mm focal lengths and form a 1:1 telescope that images the telecentric stop at the first scan mirror onto the second scan mirror. Lens L4 has a 1/2″ aperture and a focal length of 19 mm. This lens bends the principal axes (i.e., chief rays) of the beamlets to be mutually parallel while sharply focusing the individual beamlets so that they expand to overfill the back aperture of the objective. Lens L5 is a 1″, 100 mm tube lens that recollimates each magnified beamlet and overlaps the beamlets at the third telecentric stop at the back aperture of the objective, as shown in the magnified inset of Fig. 23.

Figure 23.

Schematic representation of multifocal scan optics. Achromatic doublets are labeled L1–L5. Magnified inset shows beam configuration on the back aperture of the objective required for scanning. For clarity, only the outer two beamlets of the array are shown.

Table 3.

Focal Lengths and Spacings for Five-Lens Multifocal Scanning System for a Home-Built Microscope

| Element | Focal Length (mm) | Distance to Next Element (mm) |
|---|---|---|
| L1 | 750 | 752 (to M1) |
| M1 | ∞ | 45 (to L2) |
| L2 | 40 | 50 (to L3) |
| L3 | 40 | 45 (to M2) |
| M2 | ∞ | 12 (to L4) |
| L4 | 19 | 114 (to L5) |
| L5 | 100 | 110 (to objective) |

L1–L5, lenses as labeled in Fig. 23; M1 and M2, horizontal and vertical scan mirrors.

Figure 24.

Multifocal scan optics: large-scale screen shot of entire five-lens system as modeled in Optica software.

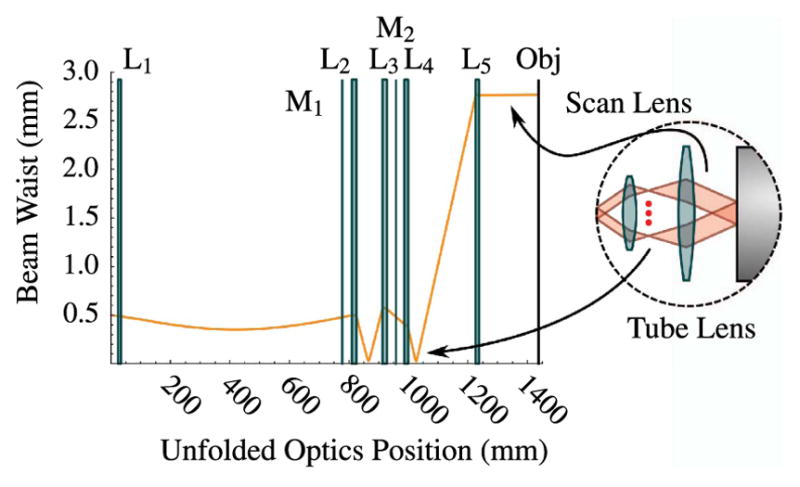

Figure 29.

Multifocal scan optics: beam size of the central beamlet throughout the five-lens scan-optics system.

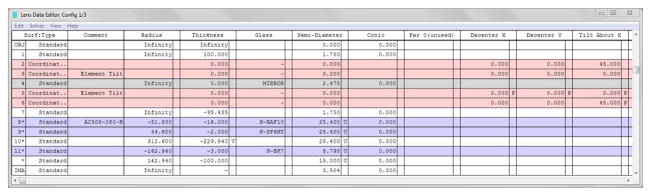

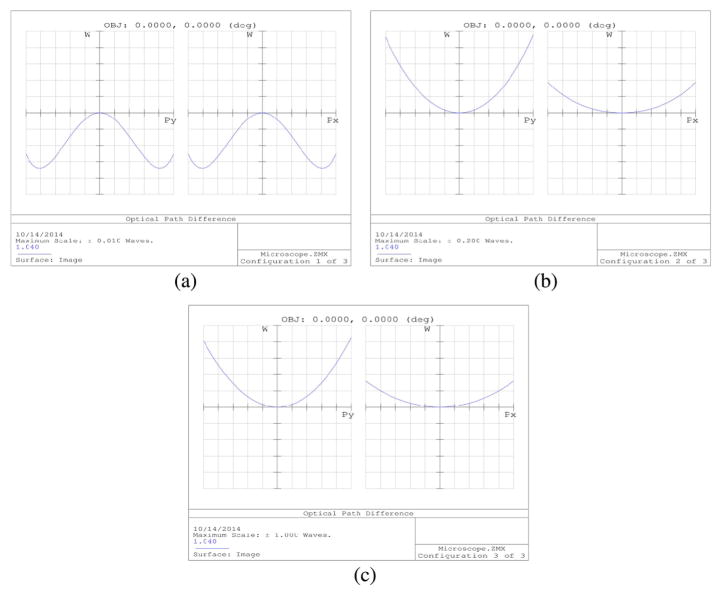

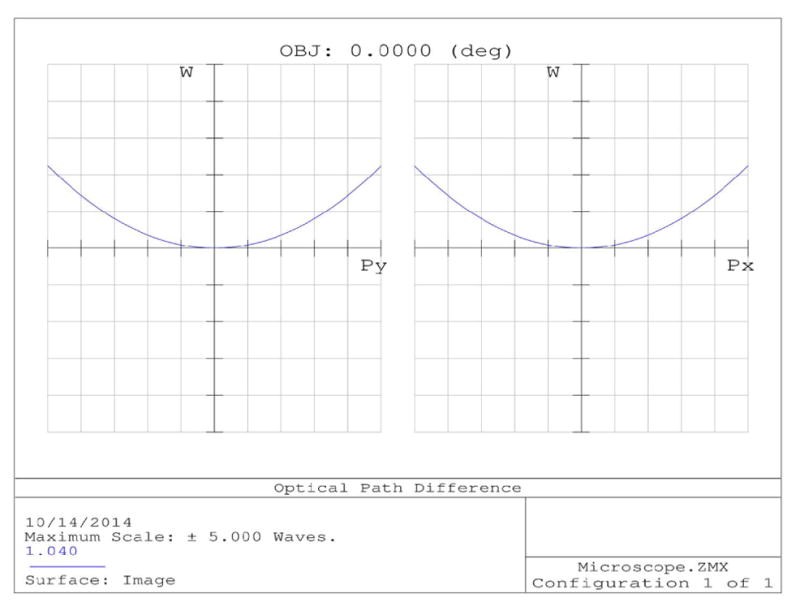

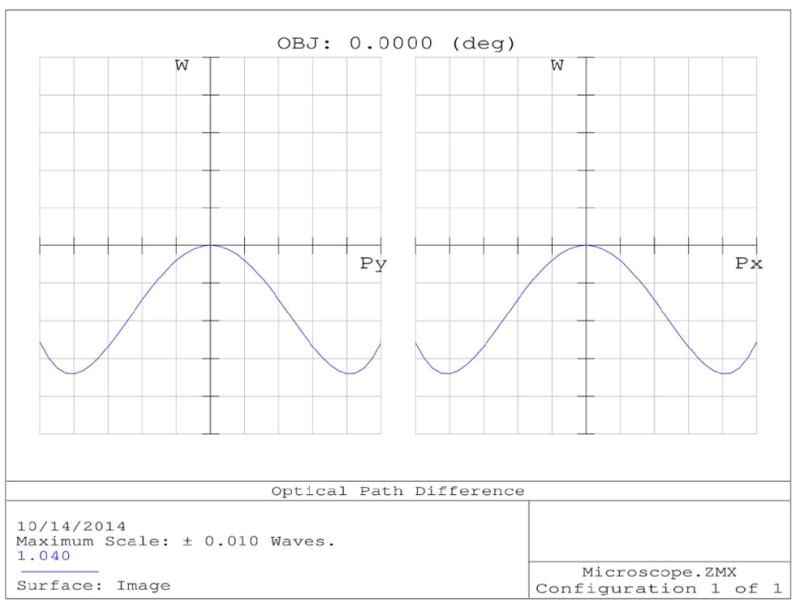

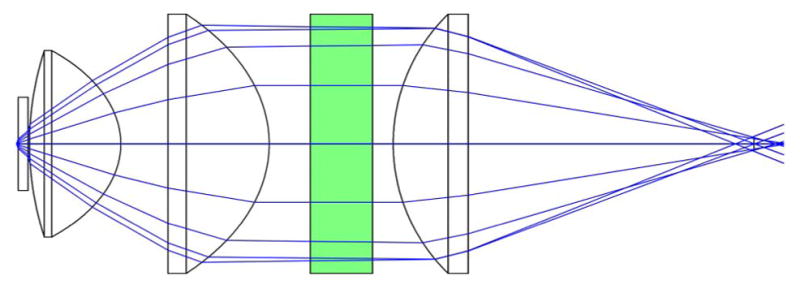

The five-lens scan optics system was modeled using the Rayica-Wavica ray-tracing and Gaussian beam propagation package (Optica Software, Urbana, Illinois, USA) that runs within Mathematica (Wolfram, Champaign, Illinois, USA). Figures 24–29 provide screen shots of the model output at various stages of the five-lens system to illustrate the performance of each lens and the criteria for assessing whether the beams are sufficiently expanded, collimated, and overlapping at the objective such that we can scan without vignetting.

Figure 24 shows a broad view of the entire five-lens scanning model. Exact focal lengths and element separation distances for the scan-optics systems for a home-built microscope are given in Table 3.

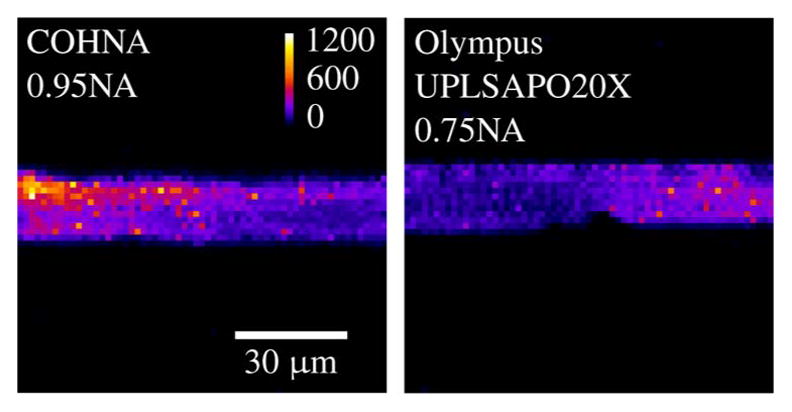

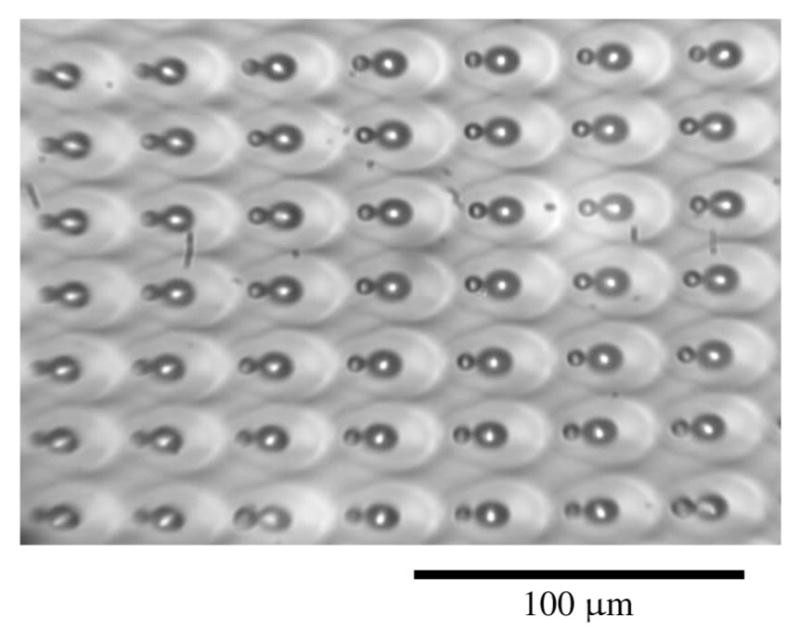

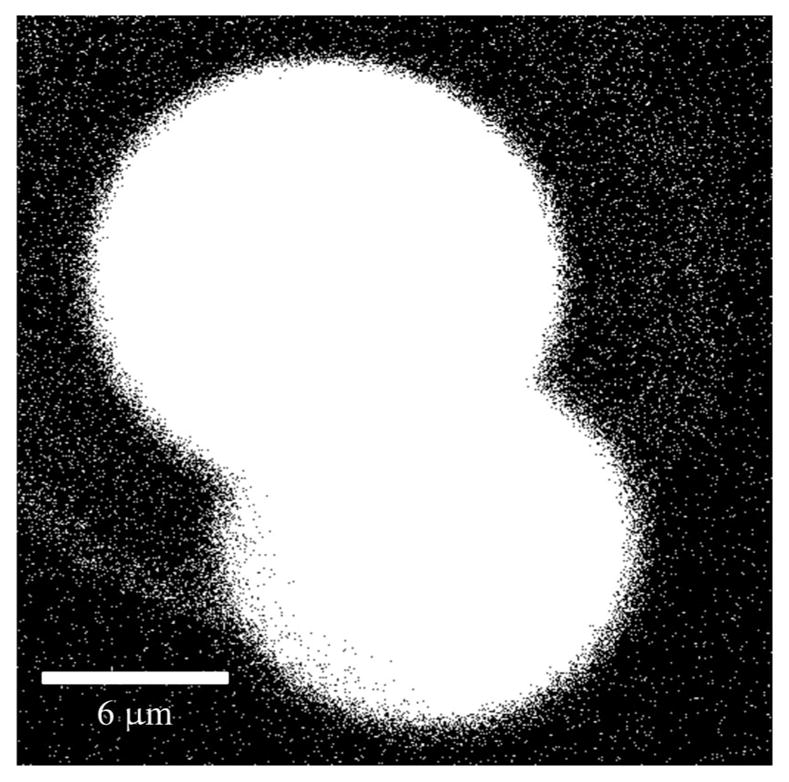

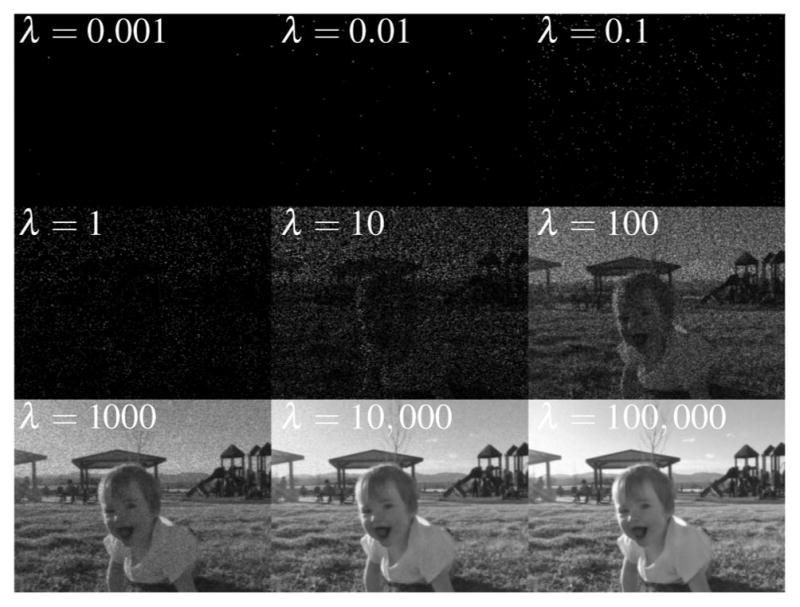

The first lens in the model, L1, simply overlaps the beamlets onto the first scan mirror and maintains collimation by negating the divergent nature of Gaussian beams. This telecentric stop will ultimately be image relayed to the back aperture of the objective.
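The beam-size behavior at L1 can be illustrated with the Gaussian complex beam parameter $q$ and the ABCD law. This sketch assumes that each ~1 mm beamlet has a 1/e² diameter of 1 mm with its waist located at L1, which the text does not state; it then compares the beam radius at M1 (752 mm away, Table 3) with and without the 750 mm lens. The function names are illustrative.

```python
import math


def waist_q(w0_mm: float, wavelength_mm: float) -> complex:
    """Complex beam parameter at a waist: q = i * z_R, with z_R = pi * w0**2 / lambda."""
    return 1j * math.pi * w0_mm ** 2 / wavelength_mm


def thin_lens(q: complex, f_mm: float) -> complex:
    """ABCD law for a thin lens: 1/q' = 1/q - 1/f."""
    return 1.0 / (1.0 / q - 1.0 / f_mm)


def free_space(q: complex, d_mm: float) -> complex:
    """ABCD law for propagation over a distance d: q' = q + d."""
    return q + d_mm


def beam_radius_mm(q: complex, wavelength_mm: float) -> float:
    """1/e^2 radius from Im(1/q) = -lambda / (pi * w**2)."""
    return math.sqrt(-wavelength_mm / (math.pi * (1.0 / q).imag))


if __name__ == "__main__":
    lam = 1.04e-3            # 1040 nm expressed in mm
    q0 = waist_q(0.5, lam)   # assumed: 1 mm (1/e^2) beamlet with its waist at L1
    with_l1 = free_space(thin_lens(q0, 750.0), 752.0)  # L1 -> M1 spacing from Table 3
    without = free_space(q0, 752.0)                    # same path with no lens
    print(f"radius at M1 with L1: {beam_radius_mm(with_l1, lam):.3f} mm")
    print(f"radius after 752 mm free space: {beam_radius_mm(without, lam):.3f} mm")
```

Under these assumptions the input Rayleigh range (~755 mm) is nearly equal to the focal length of L1, so the beamlet reaches M1 with essentially its input radius (~0.50 mm) instead of the ~0.71 mm it would reach by free propagation over the same distance.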