Abstract

In 1997, the National Institute of Standards and Technology (NIST) initiated a process to select a symmetric-key encryption algorithm to be used to protect sensitive (unclassified) Federal information in furtherance of NIST’s statutory responsibilities. In 1998, NIST announced the acceptance of 15 candidate algorithms and requested the assistance of the cryptographic research community in analyzing the candidates. This analysis included an initial examination of the security and efficiency characteristics for each algorithm. NIST reviewed the results of this preliminary research and selected MARS, RC6™, Rijndael, Serpent and Twofish as finalists. Having reviewed further public analysis of the finalists, NIST has decided to propose Rijndael as the Advanced Encryption Standard (AES). The research results and rationale for this selection are documented in this report.

Keywords: Advanced Encryption Standard (AES), cryptography, cryptanalysis, cryptographic algorithms, encryption, Rijndael

“I’ll do the [S]quare thing.”

—G.B. McCutcheon (1917)

1. Overview of the Development Process for the Advanced Encryption Standard and Summary of Round 2 Evaluations

The National Institute of Standards and Technology (NIST) has been working with the international cryptographic community to develop an Advanced Encryption Standard (AES). The overall goal is to develop a Federal Information Processing Standard (FIPS) that specifies an encryption algorithm capable of protecting sensitive (unclassified) government information well into the twenty-first century. NIST expects that the algorithm will be used by the U.S. Government and, on a voluntary basis, by the private sector.

The competition among the finalists was very intense, and NIST selected Rijndael as the proposed AES algorithm at the end of a very long and complex evaluation process. This report describes that process and summarizes many of the characteristics of the algorithms that were identified during the public evaluation periods. The following sections provide an overview of the AES development followed by a discussion of specific analysis details.

1.1 Background

On January 2, 1997, NIST announced the initiation of an effort to develop the AES [31] and made a formal call for algorithms on September 12, 1997 [32]. The call indicated NIST’s goal that the AES would specify an unclassified, publicly disclosed encryption algorithm, available royalty-free, worldwide. At a minimum, the algorithm would have to implement symmetric key cryptography as a block cipher and support a block size of 128 bits and key sizes of 128, 192, and 256 bits.

On August 20, 1998, NIST announced 15 AES candidate algorithms at the First AES Candidate Conference (AES1) and solicited public comments on the candidates [33]. Industry and academia submitters from twelve countries proposed the fifteen algorithms. A Second AES Candidate Conference (AES2) was held in March 1999 to discuss the results of the analysis that was conducted by the international cryptographic community on the candidate algorithms. In August 1999, NIST announced its selection of five finalist algorithms from the fifteen candidates. The selected algorithms were MARS, RC6™, Rijndael, Serpent and Twofish.

1.2 Overview of the Finalists

The five finalists are iterated block ciphers: they specify a transformation that is iterated a number of times on the data block to be encrypted or decrypted. Each iteration is called a round, and the transformation is called the round function. The data block to be encrypted is called the plaintext; the encrypted plaintext is called the ciphertext. For decryption, the ciphertext is the data block to be processed. Each finalist also specifies a method for generating a series of keys from the original user key; the method is called the key schedule, and the generated keys are called subkeys. The round functions take distinct subkeys as input along with the data block.

For each finalist, the very first and last cryptographic operations are some form of mixing of subkeys with the data block. Such mixing of secret subkeys prevents an adversary who does not know the keys from even beginning to encrypt the plaintext or decrypt the ciphertext. Whenever this subkey mixing does not naturally occur as the initial step of the first round or the final step of the last round, the finalists specify the subkey mixing as an extra step called pre- or post-whitening.
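The terms above (round function, key schedule, subkeys, pre- and post-whitening) can be illustrated with a toy sketch. Everything in it is hypothetical and chosen only for brevity: the 32 bit block, the hash-based `key_schedule`, and the add-then-rotate `round_function` are assumptions, not part of any finalist, and the construction offers no security.

```python
import hashlib

MASK32 = 0xFFFFFFFF  # toy 32 bit block; the finalists use 128 bit blocks


def rotl32(x, r):
    """Rotate a 32 bit word left by r bits (0 < r < 32)."""
    return ((x << r) | (x >> (32 - r))) & MASK32


def rotr32(x, r):
    """Rotate a 32 bit word right by r bits (0 < r < 32)."""
    return ((x >> r) | (x << (32 - r))) & MASK32


def key_schedule(user_key: bytes, rounds: int):
    """Hypothetical key schedule: two whitening subkeys plus one subkey per round."""
    subkeys = []
    for i in range(rounds + 2):
        digest = hashlib.sha256(user_key + bytes([i])).digest()
        subkeys.append(int.from_bytes(digest[:4], "big"))
    return subkeys


def round_function(block, subkey):
    """Toy invertible round: add the subkey modulo 2^32, then rotate left."""
    return rotl32((block + subkey) & MASK32, 7)


def inverse_round_function(block, subkey):
    """Undo round_function: rotate right, then subtract the subkey."""
    return (rotr32(block, 7) - subkey) & MASK32


def encrypt(plaintext, subkeys):
    pre, *round_keys, post = subkeys
    block = plaintext ^ pre                  # pre-whitening
    for k in round_keys:                     # the same round function, iterated
        block = round_function(block, k)
    return block ^ post                      # post-whitening


def decrypt(ciphertext, subkeys):
    pre, *round_keys, post = subkeys
    block = ciphertext ^ post                # remove post-whitening
    for k in reversed(round_keys):           # inverse rounds, subkeys in reverse order
        block = inverse_round_function(block, k)
    return block ^ pre                       # remove pre-whitening
```

Decryption applies the inverse operations with the subkeys in reverse order, which is why each step of the toy round had to be invertible.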

There are other common technical features of the finalists. Four of the finalists specify substitution tables, called S-boxes: an A×B bit S-box replaces A bit inputs with B bit outputs. Three of the finalists specify variations on a structure for the round function, called the Feistel structure. In the classic Feistel structure, half of the data block is used to modify the other half of the data block, and then the halves are swapped. The two finalists that do not use a Feistel structure process the entire data block in parallel during each round using substitutions and linear transformations; thus, these two finalists are examples of substitution-linear transformation networks.
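The classic Feistel structure described above can be sketched as follows. This is a minimal illustration on two 32 bit halves; the round function `toy_f` is a hypothetical placeholder and is deliberately not invertible, to show that a Feistel network can be decrypted regardless of the round function chosen. It does not reproduce the modified Feistel variants used by MARS, RC6, or Twofish.

```python
MASK32 = 0xFFFFFFFF


def toy_f(half, subkey):
    """Hypothetical round function; squaring is not a bijection modulo 2^32."""
    return ((half * half) + subkey) & MASK32


def feistel_encrypt(left, right, subkeys):
    for k in subkeys:
        # One half is used to modify the other half, then the halves are swapped.
        left, right = right, left ^ toy_f(right, k)
    return left, right


def feistel_decrypt(left, right, subkeys):
    for k in reversed(subkeys):
        # Undo the swap, then cancel the XOR by recomputing toy_f on the unmodified half.
        left, right = right ^ toy_f(left, k), left
    return left, right
```

Because each round only XORs the output of `toy_f` into one half, decryption never needs to invert `toy_f`; it recomputes the same value and XORs it out again.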

Below is a summary of each of the finalist candidates in alphabetical order; profiles and Round 2 assessments are provided in subsequent sections of this report.

MARS [15] has several layers: key addition as pre-whitening, eight rounds of unkeyed forward mixing, eight rounds of keyed forward transformation, eight rounds of keyed backwards transformation, eight rounds of unkeyed backwards mixing, and key subtraction as post-whitening. The 16 keyed transformations are called the cryptographic core. The unkeyed rounds use two 8×32 bit S-boxes, addition, and the XOR operation. In addition to those elements, the keyed rounds use 32 bit key multiplication, data-dependent rotations, and key addition. Both the mixing and the core rounds are modified Feistel rounds in which one fourth of the data block is used to alter the other three fourths of the data block. MARS was submitted by the International Business Machines Corporation (IBM).

RC6 [75] is a parameterized family of encryption ciphers that essentially use the Feistel structure; 20 rounds were specified for the AES submission. The round function of RC6 uses variable rotations that are regulated by a quadratic function of the data. Each round also includes 32 bit modular multiplication, addition, XOR (i.e., exclusive-or), and key addition. Key addition is also used for pre- and post-whitening. RC6 was submitted to the AES development effort by RSA Laboratories.

Rijndael [22] is a substitution-linear transformation network with 10, 12, or 14 rounds, depending on the key size. A data block to be processed using Rijndael is partitioned into an array of bytes, and each of the cipher operations is byte-oriented. Rijndael’s round function consists of four layers. In the first layer, an 8×8 S-box is applied to each byte. The second and third layers are linear mixing layers, in which the rows of the array are shifted, and the columns are mixed. In the fourth layer, subkey bytes are XORed into each byte of the array. In the last round, the column mixing is omitted. Rijndael was submitted by Joan Daemen (Proton World International) and Vincent Rijmen (Katholieke Universiteit Leuven).

Serpent [4] is a substitution-linear transformation network consisting of 32 rounds. Serpent also specifies non-cryptographic initial and final permutations that facilitate an alternative mode of implementation called the bitslice mode. The round function consists of three layers: the key XOR operation, 32 parallel applications of one of the eight specified 4×4 S-boxes, and a linear transformation. In the last round, a second layer of key XOR replaces the linear transformation. Serpent was submitted by Ross Anderson (University of Cambridge), Eli Biham (Technion), and Lars Knudsen (University of California San Diego).

Twofish [83] is a Feistel network with 16 rounds. The Feistel structure is slightly modified using 1 bit rotations. The round function acts on 32 bit words with four key-dependent 8×8 S-boxes, followed by a fixed 4×4 maximum distance separable matrix over GF(2^8), a pseudo-Hadamard transform, and key addition. Twofish was submitted by Bruce Schneier, John Kelsey, and Niels Ferguson (Counterpane Internet Security, Inc.), Doug Whiting (Hi/fn, Inc.), David Wagner (University of California Berkeley), and Chris Hall (Princeton University).

In announcing the finalists, NIST again solicited public review and comment on the algorithms [34]. These algorithms received further analysis during a second, more in-depth review period, and the Third AES Candidate Conference (AES3) was held in April 2000 to present and discuss much of that analysis. The public comment period for reviewing the finalist algorithms closed on May 15, 2000. At that time, NIST’s AES team conducted a thorough review of all of the public comments and analyses of the finalists.

1.3 Evaluation Criteria

In the September 1997 call for candidate algorithms [32], NIST specified the overall evaluation criteria that would be used to compare the candidate algorithms. These criteria were developed from public comments to Ref. [31] and from the discussions at a public AES workshop held on April 15, 1997 at NIST.

The evaluation criteria were divided into three major categories: 1) Security, 2) Cost, and 3) Algorithm and Implementation Characteristics. Security was the most important factor in the evaluation and encompassed features such as resistance of the algorithm to cryptanalysis, soundness of its mathematical basis, randomness of the algorithm output, and relative security as compared to other candidates.

Cost was a second important area of evaluation that encompassed licensing requirements, computational efficiency (speed) on various platforms, and memory requirements. Since one of NIST’s goals was that the final AES algorithm be available worldwide on a royalty-free basis, public comments were specifically sought on intellectual property claims and any potential conflicts. The speed of the algorithm on a variety of platforms needed to be considered. During Round 1, the focus was primarily on the speed associated with 128 bit keys. During Round 2, hardware implementations and the speeds associated with the 192 bit and 256 bit key sizes were addressed. Memory requirements and other constraints on software implementations of the candidates were also important considerations.

The third area of evaluation was algorithm and implementation characteristics such as flexibility, hardware and software suitability, and algorithm simplicity. Flexibility includes the ability of an algorithm:

To handle key and block sizes beyond the minimum that must be supported,

To be implemented securely and efficiently in many different types of environments, and

To be implemented as a stream cipher or hashing algorithm, and to provide additional cryptographic services.

It must be feasible to implement an algorithm in both hardware and software, and efficient firmware implementations were considered advantageous. The relative simplicity of an algorithm’s design was also an evaluation factor.

During Rounds 1 and 2, it became evident that the various issues being analyzed and discussed often crossed into more than one of the three main criteria headings. Therefore, the criteria of cost and algorithm characteristics were considered together as secondary criteria, after security. This report addresses the criteria listed above, as follows:

| Criterion | Sections |
|---|---|
| Security | Sects. 3.2 and 3.6 |
| Cost | Sects. 3.3, 3.4, 3.5, 3.7, 3.8, 3.10, and 4 |
| Algorithm Characteristics | Sects. 3.3, 3.4, 3.5, 3.6, 3.8, 3.9, and 3.10 |

1.4 Results from Round 2

The Round 2 public review extended from the official announcement of the five AES finalists on August 20, 1999 until the official close of the comment period on May 15, 2000. During Round 2, many members of the global cryptographic community supported the AES development effort by analyzing and testing the five AES finalists.

NIST facilitated and focused the analysis of the finalists by providing an electronic discussion forum and home page. The public and NIST used the electronic forum [1] to discuss the finalists and relevant AES issues, inform the public of new analysis results, etc. The AES home page [2] served as a tool to disseminate information such as algorithm specifications and source code, AES3 papers, and other Round 2 public comments.

Thirty-seven papers were submitted to NIST for consideration for AES3. Twenty-four of those papers were presented at AES3 as part of the formal program, and one of the remaining papers was presented during an informal session at AES3. All of the submitted papers were posted on the AES home page [2] several weeks prior to AES3 in order to promote informed discussions at the conference.

AES3 gave members of the international cryptographic community an opportunity to present and discuss Round 2 analysis and other important topics relevant to the AES development effort. A summary of AES3 presentations and discussions will be available in Ref. [29]. In addition to the AES3 papers, NIST received 136 sets of public comments on the finalists during Round 2 in the form of submitted papers, email comments and letters. All of these comments were made publicly available on the AES home page [2] on April 19, 2000.

NIST performed an analysis of mathematically optimized ANSI C and Java™ implementations of the candidate algorithms that were provided by the submitters prior to the beginning of Round 1. NIST’s testing of ANSI C implementations focused on the speed of the candidates on various desktop systems, using different combinations of processors, operating systems, and compilers. The submitters’ Java™ code was tested for speed and memory usage on a desktop system. NIST’s testing results for the ANSI C and Java™ code are presented in Refs. [7] and [28], respectively. Additionally, extensive statistical testing was performed by NIST on the candidates, and results are presented in Ref. [88].

1.5 The Selection Process

A team of NIST security personnel convened a series of meetings in order to establish the strategy for AES algorithm selection (see Sec. 2). The team then proceeded to evaluate the papers and comments received during the AES development process, compare the results of the numerous studies made of the finalists and finally make the selection of the proposed AES algorithm. There is a consensus by the team that the selected algorithm will provide good security for the foreseeable future, is reasonably efficient and suitable for various platforms and environments, and provides sufficient flexibility to accommodate future requirements.

1.6 Organization of this Report

This report is organized as follows. Section 2 provides details on NIST’s approach to making its selection, and discusses some of the more critical issues that were considered prior to evaluating the algorithms. Section 3 presents the various factors and analysis results that were taken into consideration during the algorithms’ evaluation by NIST; this section presents a number of specific case studies. Section 4 summarizes the intellectual property issue. In Section 5, candidate algorithm profiles summarize the salient information that NIST accrued for each finalist, based on the results summarized in Section 3. Section 6 takes the information from the algorithm profiles and draws comparisons and contrasts, in terms of the advantages and disadvantages identified for each algorithm. Finally, Sec. 7 presents NIST’s conclusion for its selection of Rijndael. Section 8 indicates some of the next steps that will occur in the AES development effort.

2. Selection Issues and Methodology

2.1 Approach to Selection

As the public comment period neared its closing date of May 15, 2000, NIST reconstituted its AES selection team (hereafter called the “team”) that was used for the Round 1 selection of the finalists. This team consisted of cross-disciplinary NIST security staff. The team reviewed the public comments, drafted this selection report and selected the algorithm to propose as the AES.

A few fundamental decisions confronted the team at the beginning of the selection process. Specifically, the team considered whether to:

Take a quantitative or qualitative approach to selection;

Select one or multiple algorithms;

Select a backup algorithm(s); and

Consider public proposals to modify the algorithms.

The following sections briefly address these issues.

2.2 Quantitative vs Qualitative Review

At one of its first meetings to plan for the post Round 2 activities, the team reviewed the possibility of conducting a quantitative approach as proposed in Ref. [87]. Using this process, each algorithm and combination of algorithms would receive a score based on the evaluation criteria [32]. If such a quantitative approach were feasible, it could provide an explicit assignment of values and allow a comparison of the algorithms. The quantitative approach would also provide explicit weighting of each AES selection factor. However, the consensus of the team was that the degree of subjectivity of many of the criteria would result in numeric figures that would be debatable. Moreover, the issue of quantitative review had been raised by the public at various times during the AES development effort (most recently at AES3), and there seemed to be little agreement regarding how different factors should be weighted and scored. Team members also expressed concern that determining a quantitative scoring system without significant public discussion would give the impression that the system was unfair. For those reasons, the team concluded that a quantitative approach to selection was not workable, and decided to proceed as they did after Round 1. Namely, the team decided to review the algorithms’ security, performance, implementation, and other characteristics, and to make a decision based upon an overall assessment of each algorithm—keeping in mind that security considerations were of foremost concern.

2.3 Number of AES Algorithms

During the course of the Round 1 and 2 public evaluation periods, several arguments were made regarding the number of algorithms that should be selected for inclusion in the AES. In addition, the issue was raised about the selection of a “backup” algorithm in the case that a single AES algorithm were selected and later deemed to be unsuitable. This could occur, for example, because of a practical attack on the algorithm or an intellectual property dispute. The team decided that it was necessary to address this issue as early as possible, in part to narrow its scope of options under consideration during the rest of the selection process.

Several arguments made in favor of multiple algorithms (and/or against a single algorithm) included:

In terms of resiliency, if one AES algorithm were broken, there would be at least one more AES algorithm available and implemented in products. Some commenters expressed the concern that extensive use of a single algorithm would place critical data at risk if that algorithm were shown to be insecure [42] [51] [52].

Intellectual property (IP) concerns could surface later, calling into question the royalty-free availability of a particular algorithm. An alternative algorithm might provide an immediately available alternative that would not be affected by the envisioned IP concern [52].

A set of AES algorithms could cover a wider range of desirable traits than a single algorithm. In particular, it might be possible to offer both high security and high efficiency to an extent not possible with a single algorithm [47] [52].

The public also submitted arguments in favor of a single AES algorithm (and/or against multiple algorithms). Some of those arguments suggested that:

Multiple AES algorithms would increase interoperability complexity and raise costs when multiple algorithms were implemented in products [17] [91].

Multiple algorithms could be seen as multiplying the number of potential “intellectual property attacks” against implementers [17] [47] [48].

The specification of multiple algorithms might cause the public to question NIST’s confidence in the security of any of the algorithms [6] [91].

Hardware implementers could make better use of available resources by improving the performance of a single algorithm than by including multiple algorithms [92].

The team discussed these and other issues raised during Round 2 regarding single or multiple AES algorithms. The team recognized the likelihood, as evidenced by commercial products today, that future products will continue to implement multiple algorithms, as dictated by customer demand, requirements for interoperability with legacy/proprietary systems, and so forth. The Triple Data Encryption Standard (Triple DES), which NIST anticipates will remain a FIPS-approved algorithm for the foreseeable future, is expected to be available in many commercial products for some time, as are other FIPS and non-FIPS algorithms. In some regard, therefore, the presence of these multiple algorithms in current products provides a degree of systemic resiliency, as does having multiple AES key sizes. In the event of an attack, NIST would likely assess options at that time, including whether other AES finalists were resistant to such an attack, or whether entirely new approaches were necessary.

With respect to intellectual property issues, vendors noted that if multiple AES algorithms were selected, market forces would likely result in a need to implement all AES algorithms, thus exposing the vendors to additional intellectual property risks.

At the AES3 conference, there was significant discussion regarding the number of algorithms that should be included in the AES. The vast majority of attendees expressed their support—both verbally and with a show of hands—for selecting only a single algorithm. There was some support for selecting a backup algorithm, but there was no agreement as to how that should be accomplished. The above sentiments were reflected in written comments provided to NIST by many of the attendees after the conference.

The team considered all of the comments and factors above before making the decision to propose only a single algorithm for the AES. The team felt that other FIPS-approved algorithms will provide a degree of systemic resiliency, and that a single AES algorithm will promote interoperability and address vendor concerns about intellectual property and implementation costs.

2.4 Backup Algorithm

As noted earlier, intertwined in the discussion of multiple AES algorithms was the issue of whether to select a backup algorithm, particularly in the case of a single AES algorithm. A backup could take a number of forms, ranging from an algorithm that would not be required to be implemented in AES validated products (“cold backup”), to requiring the backup algorithm in AES products as a “hot backup.” It was argued by some commenters that, in many respects, a backup algorithm was nearly equivalent to a two-algorithm AES, since many users would reasonably demand that even a “cold backup” be implemented in products.

Given 1) the vendors’ concerns that a backup algorithm would be a de facto requirement in products (for immediate availability in the future), 2) the complete uncertainty of knowing the potential applicability of future breakthroughs in cryptanalysis, 3) NIST’s interest in promoting interoperability, and 4) the availability of other algorithms (FIPS and non-FIPS) in commercial products, the team decided not to select a backup algorithm.

As with its other cryptographic algorithm standards, NIST will continue to follow developments in the cryptanalysis of the AES algorithm, and the standard will be formally reevaluated every five years. Maintenance activities for the AES standard will be performed at the appropriate time, in full consideration of the situation’s particular circumstances. If an issue arises that requires more immediate attention, NIST will act expeditiously and consider all available alternatives at that time.

2.5 Modifying the Algorithms

During Rounds 1 and 2, NIST received a number of comments that expressed an interest in increasing the number of rounds (or repetitions) of certain steps of the algorithms. Although some comments offered explicit rationale for an increase in the number of rounds (e.g., choosing an algorithm with twice the number of rounds that the currently best known reduced-round analysis requires), many did not. NIST noted that the submitters of the two algorithms that received the most comments regarding an increase in rounds, RC6 and Rijndael, did not choose to increase the number of rounds at the end of Round 1 (when “tweak” proposals were being considered). Additionally, the Rijndael submitters even stated “the number of rounds of Rijndael provides a sufficient margin of security with respect to cryptanalytic attack.” [23]

The following issues and concerns were expressed during the team’s discussions:

For some algorithms, it is not clear how the algorithm would be fully defined (e.g., the key schedule) with a different number of rounds, or how such a change would impact the security analysis.

Changing the number of rounds would impact the large amount of performance analysis from Rounds 1 and 2. All performance data for the modified algorithm would need to be either estimated or performed again. In some cases, especially in hardware and in memory-restricted environments, estimating algorithm performance for the new number of rounds would not be a straightforward process.

There was a lack of agreement in the public comments regarding the number of rounds to be added, and which algorithms should be altered.

The submitters had confidence in the algorithms as submitted, and there were no post-Round 1 “tweaked” proposals for an increased number of rounds.

After much discussion, and given the factors listed above, the team decided that it would be most appropriate to make its recommendation for the AES based on the algorithms as submitted (i.e., without changing the number of rounds).

3. Technical Details of the Round 2 Analysis

3.1 Notes on Sec. 3

The analyses presented in this paper were performed using the original specifications submitted for the finalists prior to the beginning of Round 2. Most of the analysis of MARS considered the Round 2 version [15], in which modifications had been made to the original submitted specifications [100]. Some of the studies—including the NIST software performance analyses [7] [28]—used algorithm source code that was provided by the submitters themselves.

While NIST does not vouch for any particular data items that were submitted, all data was taken into account. In some cases, the data from one study may not be consistent with that of other studies. This may be due, for example, to different assumptions made for the various studies. NIST took these differences into account and attempted to determine the general trend of the information provided. For the various case studies presented in Sec. 3, this report summarizes some of these analyses and results, but the reader should consult the appropriate references for more complete details.

3.2 General Security

Security was the foremost concern in evaluating the finalists. As stated in the original call for candidates [32], NIST relied on the public security analysis conducted by the cryptographic community. No attacks have been reported against any of the finalists, and no other properties have been reported that would disqualify any of them.

The only attacks that have been reported to date are against simplified variants of the algorithms: the number of rounds is reduced, or the algorithm is simplified in other ways. A summary of these attacks against reduced-round variants, and the resources of processing, memory, and information that they require, is discussed in Sec. 3.2.1 and presented in Table 1.

Table 1. Summary of reported attacks on reduced-round variants of the finalists

| Algorithm, Rounds | Reference | Rounds (Key size) | Type of Attack | Texts | Mem. Bytes | Ops. |
|---|---|---|---|---|---|---|
| MARS: 16 Core (C), 16 Mixing (M) | [57] | 11 C | Amp. Boomerang | 2^65 | 2^70 | 2^229 |
| | [58] | 16 M, 5 C | Meet-in-Middle | 8 | 2^236 | 2^232 |
| | | 16 M, 5 C | Diff. M-i-M | 2^50 | 2^197 | 2^247 |
| | | 6 M, 6 C | Amp. Boomerang | 2^69 | 2^73 | 2^197 |
| RC6: 20 | [39] | 14 | Stat. Disting. | 2^118 | 2^112 | 2^122 |
| | [60] | 12 | Stat. Disting. | 2^94 | 2^42 | 2^119 |
| | | 14 (192,256) | Stat. Disting. | 2^110 | 2^42 | 2^135 |
| | | 14 (192,256) | Stat. Disting. | 2^108 | 2^74 | 2^160 |
| | | 15 (256) | Stat. Disting. | 2^119 | 2^138 | 2^215 |
| Rijndael: 10 (128), 12 (192), 14 (256) | [22] | 4 | Truncated Diff. | 2^9 | small | 2^9 |
| | | 5 | Truncated Diff. | 2^11 | small | 2^40 |
| | | 6 | Truncated Diff. | 2^32 | 7*2^32 | 2^72 |
| | [37] | 6 | Truncated Diff. | 6*2^32 | 7*2^32 | 2^44 |
| | | 7 (192) | Truncated Diff. | 19*2^32 | 7*2^32 | 2^155 |
| | | 7 (256) | Truncated Diff. | 21*2^32 | 7*2^32 | 2^172 |
| | | 7 | Truncated Diff. | 2^128 − 2^119 | 2^61 | 2^120 |
| | | 8 (256) | Truncated Diff. | 2^128 − 2^119 | 2^101 | 2^204 |
| | | 9 (256) | Related Key | 2^77 | NA | 2^224 |
| | [63] | 7 (192) | Truncated Diff. | 2^32 | 7*2^32 | 2^184 |
| | | 7 (256) | Truncated Diff. | 2^32 | 7*2^32 | 2^200 |
| | [40] | 7 (192,256) | Truncated Diff. | 2^32 | 7*2^32 | 2^140 |
| Serpent: 32 | [57] | 8 (192,256) | Amp. Boomerang | 2^113 | 2^119 | 2^179 |
| | [62] | 6 (256) | Meet-in-Middle | 512 | 2^246 | 2^247 |
| | | 6 | Differential | 2^83 | 2^40 | 2^90 |
| | | 6 | Differential | 2^71 | 2^75 | 2^103 |
| | | 6 (192,256) | Differential | 2^41 | 2^45 | 2^163 |
| | | 7 (256) | Differential | 2^122 | 2^126 | 2^248 |
| | | 8 (192,256) | Boomerang | 2^128 | 2^133 | 2^163 |
| | | 8 (192,256) | Amp. Boomerang | 2^110 | 2^115 | 2^175 |
| | | 9 (256) | Amp. Boomerang | 2^110 | 2^212 | 2^252 |
| Twofish: 16 | [35] | 6 (256) | Impossible Diff. | NA | NA | 2^256 |
| | [36] | 6 | Related Key | NA | NA | NA |

NA = Information not readily available.

It is difficult to assess the significance of the attacks on reduced-round variants of the finalists. On the one hand, reduced-round variants are, in fact, different algorithms, so attacks on them do not necessarily imply anything about the security of the original algorithms. An algorithm could be secure with n rounds even if it were vulnerable with n−1 rounds. On the other hand, it is standard practice in modern cryptanalysis to try to build upon attacks on reduced-round variants, and, as observed in Ref. [56], attacks get better over time. From this point of view, it would seem to be prudent to try to estimate a “security margin” of the candidates, based on the attacks on reduced-round variants.

One possible measure of the security margin, based on the proposal in Ref. [10], is the degree to which the full number of rounds of an algorithm exceeds the largest number of rounds that have been attacked. This idea and its limitations are discussed in Sec. 3.2.2. There are a number of reasons not to rely heavily on any single figure of merit for the strength of an algorithm; however, this particular measure of the security margin may provide some utility.
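As a rough illustration of such a measure, the sketch below computes the ratio of the full number of rounds to the largest number of rounds attacked, using the round counts from the finalist summaries in Sec. 1.2 and the 256 bit key rows of Table 1. The choice of a ratio (rather than, say, a difference) is an assumption made here for illustration; the report's own treatment is in Sec. 3.2.2. MARS is omitted because its mixing and core rounds are counted separately, and the Rijndael figure of 9 rounds comes from a related-key attack.

```python
# (full rounds, largest number of rounds attacked per Table 1), 256 bit keys.
# The pairing of these numbers into a single ratio is an illustrative assumption.
finalists = {
    "RC6": (20, 15),
    "Rijndael": (14, 9),
    "Serpent": (32, 9),
    "Twofish": (16, 6),
}


def security_margin(full_rounds, attacked_rounds):
    """Ratio of the full round count to the largest attacked round count."""
    return full_rounds / attacked_rounds


margins = {name: security_margin(*rounds) for name, rounds in finalists.items()}

# Print from the smallest margin to the largest.
for name, margin in sorted(margins.items(), key=lambda item: item[1]):
    print(f"{name}: {margin:.2f}")
```

A single ratio of this kind ignores the resources each attack requires, which is one reason not to rely heavily on it.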

NIST considered other, less quantifiable characteristics of the finalists that might conceivably impact upon their security. Confidence in the security analysis conducted during the specified timeframe of the AES development process is affected by the ancestry of the algorithms and their design paradigms as well as the difficulty of analyzing particular combinations of operations using the current framework of techniques. These issues are discussed in Secs. 3.2.3 and 3.2.4. The statistical testing that NIST conducted on the candidates is discussed in Sec. 3.2.5. Various public comments about the security properties of the finalists are discussed in Sec. 3.2.6. NIST’s overall assessment of the security of the finalists is summarized in Sec. 3.2.7.

3.2.1 Attacks on Reduced-Round Variants

Table 1 summarizes the attacks against reduced-round variants of the finalists. For each attack, the table gives a reference to the original paper in which the attack was described, the number of rounds of the variant under attack, the key size, the type of attack, and the resources that are required. The three resource categories that may be required for the attack are information, memory, and processing.

The “Texts” column indicates the information required to effect the attack, specifically, the number of plaintext blocks and corresponding ciphertext blocks encrypted under the secret key. For most of the attacks, it does not suffice for the adversary to intercept arbitrary texts; the plaintexts must take a particular form of the adversary’s choosing. Such plaintexts are called chosen plaintexts. In the discussions of the attacks in Secs. 3.2.1.1–3.2.1.5, it is noted when an attack can use any known plaintext, as opposed to chosen plaintext.

The “Mem. Bytes” column indicates the largest number of memory bytes that would be used at any point in the course of executing the attack; this is not necessarily equivalent to storing all of the required information.

The “Ops.” column indicates the expected number of operations that are necessary to perform the attack. It is difficult to translate such a number into a time estimate, because the time will depend on the computing power available, as well as the extent to which the procedure can be conducted in parallel. The nature of the operations will also be a factor; they will typically be full encryption operations, but the operations may also be partial encryptions or some other operation. Even full encryptions will vary in the required processing time across algorithms. Therefore, the number of operations required for an attack should be regarded only as an approximate basis for comparison among different attacks. The references should be consulted for full details.

A useful benchmark for the processing that is required for the attacks on reduced-round variants is the processing that is required for an attack by key exhaustion, that is, by trying every key. Any block cipher, in principle, can be attacked in this way. For the three AES key sizes, key exhaustion would require 2^127, 2^191, or 2^255 operations, on average. Even the smallest of these is large enough that any attacks by key exhaustion are impractical today and likely to remain so for at least several decades.
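The averages quoted for key exhaustion follow from a simple count: a k bit key has 2^k possible values, and on average half of them must be tried before the correct one is found. The following minimal sketch reproduces the three figures and converts them to years under a purely hypothetical trial rate. The value of `assumed_keys_per_second` is an illustrative assumption and is not taken from this report.

```python
# Expected work for key exhaustion: on average, half of the 2**k keys are tried.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def expected_trials(key_bits: int) -> int:
    """Average number of trial decryptions needed to find a key_bits-bit key."""
    return 2 ** (key_bits - 1)


def years_to_exhaust(key_bits: int, keys_per_second: float) -> float:
    """Average search time in years at a given (assumed) trial rate."""
    return expected_trials(key_bits) / keys_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical rate chosen only for illustration: 10**12 keys per second.
    assumed_keys_per_second = 1e12
    for bits in (128, 192, 256):
        years = years_to_exhaust(bits, assumed_keys_per_second)
        print(f"{bits} bit key: 2^{bits - 1} trials, about {years:.2e} years")
```

Because the search parallelizes readily, the assumed rate can be read as the aggregate rate of all processors taking part in the search.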

Exhaustive key search requires little memory and information and can be readily conducted in parallel using multiple processors. Thus, any attack that required more operations than are required for the exhaustive key search probably would be more difficult to execute than exhaustive key search. For this reason, many of the attacks on reduced-round variants are only relevant to the larger AES key sizes, although the processing requirements are nevertheless impractical today. Similarly, the memory requirements of many of the reported attacks against reduced-round variants are significant.

Practical considerations are also relevant to the information requirements of the reported attacks against reduced-round variants. Almost all of these attacks require more than 2^30 encryptions of chosen plaintexts; in other words, more than a billion encryptions, and in some cases far more are required. Even if a single key were used this many times, it might be impractical for an adversary to collect so much information. For instance, there are linear and differential attacks in Ref. [12] and Ref. [64] on DES that require 2^43 known plaintexts and 2^47 encryptions of chosen plaintexts. However, NIST knows of no circumstance in which those attacks were carried out against DES.

One model for collecting such large amounts of information would require physical access for an adversary to one or more encryption devices that use the same secret key. In that case, another useful benchmark would be the memory that would be required to store the entire “codebook,” in other words, a table containing the ciphertext blocks corresponding to every possible plaintext block. Such a table would require 2^132 bytes of memory for storage.
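The codebook size can be checked with a short calculation: a 128 bit block cipher has 2^128 possible plaintext blocks, and each table entry holds one 16 byte ciphertext block. The sketch below, in which the function name `codebook_bytes` is chosen here only for illustration, performs that multiplication.

```python
def codebook_bytes(block_bits: int) -> int:
    """Bytes needed to store one ciphertext block for every possible plaintext block."""
    entries = 2 ** block_bits          # one entry per possible plaintext block
    bytes_per_entry = block_bits // 8  # each entry is a single ciphertext block
    return entries * bytes_per_entry


if __name__ == "__main__":
    size = codebook_bytes(128)
    # 2^128 entries of 2^4 bytes each give 2^132 bytes in total.
    print(f"codebook size is 2^{size.bit_length() - 1} bytes")
```

The table is assumed to be indexed by plaintext, so the plaintext blocks themselves do not need to be stored.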

The following are comments on the attacks presented in Table 1.

3.2.1.1 MARS

There are many ways to simplify MARS for the purpose of analysis because of the heterogeneous structure consisting of four different types of rounds. The 16 keyed rounds of the cryptographic core are “wrapped” in 16 unkeyed mixing rounds and pre- and post-whitening.

Four attacks on three simplified variants of MARS were presented in Refs. [57] and [58]. The first variant includes 11 core rounds, without any mixing rounds or whitening. The authors attack this variant with a new type of truncated differential attack, called the boomerang-amplifier, extending the methods in Ref. [90]. The second variant includes both the whitening and the full 16 mixing rounds, while reducing the core rounds from 16 to 5. Two different meet-in-the-middle attacks are proposed on this variant; the adversary does not need to choose the plaintexts for these attacks. The third variant includes the whitening, while reducing both the number of mixing rounds and the number of core rounds from 16 to 6.

Another paper reports an impossible differential for 8 of the 16 rounds of the MARS core [11]. The authors imply that the existence of an impossible differential typically leads to an attack that recovers the secret key from a variant that is a couple of rounds longer than the differential. Because no such attack has actually been presented, it is not included in Table 1.

3.2.1.2 RC6

The two papers presenting attacks on variants of RC6 both present a small, but iterative, statistical bias in the round function. The resulting statistical correlations between inputs of a certain form and their outputs can be used to distinguish some number of rounds of RC6 from a random permutation. In other words, the two papers construct “distinguishers.” Both papers assume that the distribution of the subkeys is uniformly random; the attack described in Ref. [39] on a 14 round variant of RC6 also assumes that the variable rotation amounts produced within the round function are random. In Ref. [60], the authors describe a distinguisher that they estimate, based on systematic experimental results, will apply to variants of RC6 with up to 15 rounds. Attacks, i.e., methods for recovering the secret key, are described for 12, 14, and 15 round variants. For a class of weak keys, estimated to be one key in 2^80, the non-randomness is estimated to persist in reduced-round variants employing up to 17 rounds of RC6. In Ref. [76], the RC6 submitters comment on the results in Ref. [60] and observe that those results support their own estimates of the security of RC6.

3.2.1.3 Rijndael

The Rijndael specification describes a truncated differential attack on 4, 5, and 6 round variants of Rijndael [22], based on a 3 round distinguisher of Rijndael. This attack is called the “Square” attack, named after the cipher on which the attack was first mounted. In Ref. [40], truncated differentials are used to construct a different distinguisher on 4 rounds, based on the experimentally confirmed existence of collisions between some partial functions induced by the cipher. This distinguisher leads to a collision attack on 7 round variants of Rijndael.

The other papers that present attacks on variants of Rijndael build directly on the Square attack. In Ref. [63], the Square attack is extended to 7 round variants of Rijndael by guessing an extra round of subkeys. Table 1 indicates the results for the 192 and 256 bit key sizes, where the total number of operations remains below those required for exhaustive search. Similar attacks are described in Ref. [37]. These attacks are improved, however, by a partial summing technique that reduces the number of operations. The partial summing technique is also combined with a technique for trading off operations for information, yielding attacks on 7 and 8 round variants that require almost the entire codebook. The same paper also presents a related key attack on a 9 round variant with 256 bit keys. This attack requires not only encryptions of chosen plaintexts under the secret key, but also encryptions under 255 other keys that are related to the secret key in a manner chosen by the adversary.

3.2.1.4 Serpent

In Ref. [57], the amplified boomerang technique is used to construct a 7 round distinguisher of Serpent, leading to an attack on a variant of Serpent with 8 rounds for the 192 and 256 bit key sizes. In Ref. [58], a refinement based on an experimental observation reduces the texts, memory, and processing required for the attack; an extension to an attack on a 9 round variant is also offered. The same paper also presents a standard meet-in-the-middle attack and differential attacks on 6 and 7 round variants of Serpent, and a standard boomerang attack on an 8 round variant of Serpent that requires the entire codebook.

3.2.1.5 Twofish

The Twofish team has found two attacks on variants of Twofish. In Ref. [35], a 5 round impossible differential is used to attack a 6 round variant of Twofish under 256 bit keys, with the required number of processing operations equivalent to that required for an exhaustive search. If the pre- and post-whitening is removed from the variant, then the attack can be extended to 7 rounds; alternatively, without whitening, 6 round variants can be attacked with a complexity less than that of an exhaustive search for each key size. In Ref. [36], the Twofish team explains why the partial chosen-key and related key attack on a 9 round variant of Twofish that they reported in the Twofish specification does not work. The best such attack of which they are aware applies to a 6 round variant, or a 7 round variant without whitening. The Twofish specification [83] also reports attacks on reduced-round variants of Twofish that are considerably simplified in other ways: for example, by using fixed S-boxes, by removing whitening or subkeys, or by allowing partial key guesses.

Outside of attacks mounted by the Twofish team, NIST knows of no attacks that have been mounted on Twofish by simply reducing the number of rounds. In Ref. [70], differential characteristics on 6 rounds are presented that apply only to certain key dependent S-boxes and thus, only to a fraction of the keys. This particular fraction of the keys could be considered as a class of weak keys, because the authors claim that characteristics like theirs should lead directly to an attack on 7 or 8 round variants of Twofish. Because no such attack has actually been presented, it does not appear in Table 1. In Ref. [59], an attack is mounted on a 4 round variant of Twofish in which 32 bit words are scaled down to 8 bit words; other properties of Twofish are also explored.

3.2.2 Security Margin

NIST wished to evaluate the likelihood that an analytic shortcut attack would be found for the candidate algorithms with all specified rounds in the next several decades, or before attacks by key exhaustion become practical. It is difficult, however, to extrapolate the data for reduced-round variants to the actual algorithms. The attacks on reduced-round variants are generally not even practical at this time because they require huge amounts of resources. In fact, most of these attacks on reduced-round variants are, arguably, more difficult to execute in practice than attacks by exhaustive key search, despite smaller processing requirements, because of their information and memory requirements. Moreover, even if a shortcut attack on a simplified variant were practical, the original algorithm might remain secure.

Nevertheless, attacks will improve in the future, and the resources available to carry them out will be greater, so it might be prudent to favor algorithms that appear to have a greater margin for security. If only a little simplification allows an attack on one algorithm, but a second algorithm has only been attacked after much greater simplification, then that may be an indication that the second algorithm has a greater margin for security. Simplification includes round reductions, which is not surprising, because the most notable frameworks of attacks, differential and linear cryptanalysis, may be effectively resisted if the number of rounds is high enough. Therefore, the full number of rounds specified for the algorithm has been compared to the largest number of rounds at which an attack currently exists. In Ref. [85], the ratio of these numbers was defined as the “safety factor” and calculated for each candidate.

There are several problems with relying heavily on this measure, or on any single figure of merit that is based on the attacks on reduced-round variants. In general, the results will be biased against algorithms that attract greater scrutiny in a limited analysis period. This could plausibly occur, for example, if a particular algorithm is simpler, or at least appears to be simpler, to analyze against certain attacks. Another factor could be the ancestry of the algorithm and its constituent techniques, and the existence of previous attacks upon which to build. The proposed measure would tend to favor novel techniques for resisting attacks, techniques that have not yet stood the test of time. Similarly, the proposed measure may not be a good indicator of the resistance of the algorithms to novel attack techniques.

To develop a measure based on the largest number of rounds that are currently attacked is also technically problematic, as is acknowledged in Ref. [85]. There is no natural definition for the number of analyzed rounds, or even the total number of rounds specified for each algorithm. For example, should the whitening in MARS, Twofish, RC6, and Rijndael count as rounds or partial rounds? MARS has 16 unkeyed mixing rounds and 16 keyed core rounds: is MARS a 16 round or a 32 round algorithm, or something in between? Should attacks that ignore the mixing rounds be considered? Should reduced-round variants of Serpent or Rijndael be required to inherit the slightly modified final round? Another complicating factor is the key size, especially for Rijndael, which varies the number of rounds depending on the key size.

What types of attacks should be included in the definition? Some attacks were successful against only a small fraction of keys; some required encryption operations under related unknown keys; some distinguished outputs from random permutations without an explicit method for recovering the key; and some relied on experimental conjectures. In addition, the attacks required considerably different resources; some even assume that nearly the entire codebook was available to the attacker.

In light of these difficulties, NIST did not attempt to reduce its assessment of the security margins of the finalists to a single measurement. NIST considered all of the reported data, and used the raw number of analyzed rounds out of the total rounds specified for an algorithm as a first approximation. The results are summarized below for each finalist.

Note that the rounds defined for the candidates are not necessarily comparable to each other. For example, the algorithms based on the Feistel construction, MARS, RC6, and Twofish, require two rounds to alter an entire word of data, while a single round of Rijndael or Serpent accomplishes this.

MARS: The results for MARS depend on the treatment of the “wrapper,” i.e., the pre- and post-whitening and the 16 unkeyed mixing rounds that surround the 16 keyed core rounds. Without the wrapper, 11 out of the 16 core rounds have been attacked. With the wrapper, MARS has many more rounds than have been successfully attacked: only 5 out of the 16 core rounds, or 21 out of the 32 total rounds have been attacked. Or, if the wrapper is regarded as a pair of single, keyed rounds, then 7 out of the 18 rounds have been attacked. For any of these cases, MARS appears to offer a high security margin.

RC6: Attacks have been mounted against 12, 14, and 15 out of the 20 rounds of RC6, depending on the key size. The submitters point out in Ref. [78] that these results support their original estimate that as many as 16 out of the 20 rounds may be vulnerable to attack. RC6 appears to offer an adequate security margin.

Rijndael: For 128 bit keys, 6 or 7 out of the 10 rounds of Rijndael have been attacked, the attack on 7 rounds requiring nearly the entire codebook. For 192 bit keys, 7 out of the 12 rounds have been attacked. For 256 bit keys, 7, 8, or 9 out of the 14 rounds have been attacked. The 8 round attack requires nearly the entire codebook, and the 9 round attack requires encryptions under related unknown keys. The submitters point out in Ref. [26] that the incremental round improvements over their own 6 round Square attack come at a heavy cost in resources. Rijndael appears to offer an adequate security margin.

Serpent: Attacks have been mounted on 6, 8, or 9 out of 32 rounds of Serpent, depending on the key size. Serpent appears to offer a high security margin.

Twofish: The Twofish team has mounted an attack on 6 out of the 16 rounds of Twofish that requires encryption operations under related unknown keys. Another attack proposed on 6 rounds for the 256 bit key size is no more efficient than exhaustive key search. Twofish appears to offer a high security margin.
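As an illustration of the raw figures summarized above, the following sketch computes the ratio of specified rounds to the largest number of rounds attacked for each finalist. It is only a first approximation of the kind discussed in this section, not NIST's assessment: the choice of which count to use for each finalist (for example, the MARS core without its wrapper, or the largest attacked round count regardless of the resources or key relations required) is an assumption made here for illustration, and the names `ROUNDS` and `round_ratio` are hypothetical.

```python
from fractions import Fraction

# (total rounds, largest number of rounds attacked), taken from the summaries above.
# Which figure to pick for each finalist is an illustrative assumption.
ROUNDS = {
    "MARS (core only, no wrapper)": (16, 11),
    "RC6": (20, 15),
    "Rijndael, 128 bit keys": (10, 7),
    "Rijndael, 192 bit keys": (12, 7),
    "Rijndael, 256 bit keys": (14, 9),
    "Serpent": (32, 9),
    "Twofish": (16, 6),
}


def round_ratio(total_rounds: int, attacked_rounds: int) -> Fraction:
    """Ratio of specified rounds to the largest number of rounds attacked."""
    return Fraction(total_rounds, attacked_rounds)


if __name__ == "__main__":
    for name, (total, attacked) in ROUNDS.items():
        ratio = round_ratio(total, attacked)
        print(f"{name}: {total}/{attacked} = {float(ratio):.2f}")
```

The ratio ignores every caveat raised in this section, including the differing resource requirements of the attacks and the fact that rounds are not comparable across algorithms, so it should not be read as a ranking.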

3.2.3 Design Paradigms and Ancestry

The history of the underlying design paradigms affects the confidence that may be placed in the security analysis of the algorithms. This also applies to the constituent elements of the design, such as the S-boxes. It may require more time for attacks to be developed against novel techniques, and traditional techniques may tend to attract more analysis, especially if attacks already exist on which to build. For example, the Feistel construction, such as employed by DES, has been well studied, and three of the finalists use variations of this structure. Another element that can affect public confidence is the design of the S-boxes, which can be suspected of containing a hidden “trapdoor” that can facilitate an attack. These considerations are discussed below for each finalist.

MARS: The heterogeneous round structure of MARS appears to be novel. Both the mixing round and the core rounds are based on the Feistel construction, with considerable variation. MARS uses many different operations, most of which are traditional. A product of key material and data is used to regulate the variable rotation operation. The S-box was generated deterministically to achieve certain desired properties; thus, the MARS specification asserts that MARS is unlikely to contain any structure that could be used as a trapdoor for an attack. The MARS specification does not cite any algorithm as an ancestor.

RC6: The design of RC6 evolved from the design of RC5, which has undergone several years of analysis. The security of both algorithms relies on variable rotations as the principal source of non-linearity; there are no S-boxes. The variable rotation operation in RC6, unlike RC5, is regulated by a quadratic function of the data. The key schedules of RC5 and RC6 are identical. The round structure of RC6 is a variation on the Feistel construction. The RC6 specification asserts that there are no trapdoors in RC6 because the only a priori defined part of RC6 is the well known mathematical constants used during key setup.

Rijndael: Rijndael is a byte-oriented cipher based on the design of Square. The submitters’ presentation of the Square attack served as a starting point for further analysis. The types of substitution and permutation operations used in Rijndael are standard. The S-box has a mathematical structure, based on the combination of inversion over a Galois field and an affine transformation. Although this mathematical structure might conceivably aid an attack, the structure is not hidden as would be the case for a trapdoor. The Rijndael specification asserts that if the S-box was suspected of containing a trapdoor, then the S-box could be replaced.

Serpent: Serpent is a byte-oriented algorithm. The types of substitution and permutation operations are standard. The S-boxes are generated deterministically from those of DES to have certain properties; the Serpent specification states that such a construction counters the fear of trapdoors. The Serpent specification does not cite any algorithm as an ancestor.

Twofish: Twofish uses a slight modification of the Feistel structure. The Twofish specification does not cite any particular algorithm as its ancestor, but it does cite several algorithms that share an important feature of Twofish, the key-dependent S-boxes, and weighs the various design approaches to them. The Twofish specification asserts that Twofish has no trapdoors and supports this conclusion with several arguments, including the variability of the S-boxes.
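The mathematical structure of the Rijndael S-box mentioned above, inversion over a Galois field followed by an affine transformation, can be made concrete in a few lines. The sketch below is not taken from this report: the field polynomial x^8 + x^4 + x^3 + x + 1 and the affine constant 0x63 are those given in the published Rijndael specification, and the function names are chosen here for illustration.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply two elements of GF(2^8) modulo x^8 + x^4 + x^3 + x + 1 (0x11B)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:      # reduce as soon as the degree reaches 8
            a ^= 0x11B
        b >>= 1
    return result


def gf_inv(a: int) -> int:
    """Multiplicative inverse in GF(2^8), computed as a^254; 0 is mapped to 0."""
    result = 1
    for _ in range(254):
        result = gf_mul(result, a)
    return result


def rotl8(x: int, n: int) -> int:
    """Rotate an 8 bit value left by n positions."""
    return ((x << n) | (x >> (8 - n))) & 0xFF


def affine(b: int) -> int:
    """Affine transformation over GF(2): XOR of four rotations of b, then add 0x63."""
    return b ^ rotl8(b, 1) ^ rotl8(b, 2) ^ rotl8(b, 3) ^ rotl8(b, 4) ^ 0x63


def sbox(x: int) -> int:
    """Rijndael S-box entry: field inversion followed by the affine transformation."""
    return affine(gf_inv(x))


if __name__ == "__main__":
    table = [sbox(x) for x in range(256)]
    print(" ".join(f"{v:02x}" for v in table[:16]))
```

Because every step is an openly specified algebraic operation, anyone can regenerate and inspect the table, which is the sense in which the structure is not hidden.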

3.2.4 Simplicity

Simplicity is a property whose impact on security is difficult to assess. On the one hand, complicated algorithms can be considered more difficult to attack. On the other hand, results may be easier to obtain on a simple algorithm, and an algorithm that is perceived to be simple may attract relatively more scrutiny. Therefore, during the AES analysis period, it may have been easier to be confident in the analysis of a simple algorithm.

There is no consensus, however, on what constitutes simplicity. MARS has been characterized as complicated in several public comments, but the submitters point out in Ref. [20] that MARS requires fewer lines of C code in the Gladman implementations than Rijndael, Twofish, and Serpent. RC6, by contrast, is generally regarded as the simplest of the finalists, yet the modular multiplication operation it contains is arguably much more complicated than typical cipher operations. In Ref. [49], the MARS team points out that the published linear analysis of RC5 was found to be in error three years after the publication of that analysis, so seemingly simple ciphers are not necessarily easier to analyze.

For standard differential cryptanalysis, the type of operations employed tangibly affects the rigor of the security analysis. If key material is mixed with data only by the XOR operation, as in Serpent and Rijndael, then plaintext pairs with a given XOR difference are the natural inputs, and the security analysis is relatively clean. If key material is mixed with data by more than one operation, as in the other finalists, then there is no natural notion of difference, and the security analysis requires more estimates. Similarly, the use of variable rotations in MARS and RC6 would seem to inhibit the possibility of clean security results against a variety of differential and linear attacks.

Another aspect of simplicity that relates to security analysis is scalability. If a simplified variant can be constructed with a smaller block size, for example, then conducting experiments on the variant becomes more feasible, which in turn may shed light on the properties of the original algorithm. In Ref. [79], it is claimed that the lack of smaller versions of MARS severely hampers analysis and experimentation. Similarly, in Ref. [59], the authors assert that a “realistic” scaled-down variant of Twofish seems difficult to construct. Both claims are plausible, although it should be noted that the MARS and Twofish specifications contain considerable analysis of their individual design elements. The Serpent specification asserts, plausibly, that it would not be difficult to construct scaled-down variants of Serpent. RC6 and Rijndael are scalable by design.

3.2.5 Statistical Testing

NIST conducted statistical tests on the AES finalists for randomness by evaluating whether the outputs of the algorithms under certain test conditions exhibited properties that would be expected of randomly generated outputs. These tests were conducted for each of the three key sizes. In addition, NIST conducted a subset of the tests on reduced-round versions of each algorithm. All of the testing was based on the NIST Statistical Test Suite [80].

For the full round testing, each of the algorithms produced random-looking outputs for each of the key sizes. For the reduced-round testing of each finalist, the outputs of an early round appear to be random, as do the outputs of each subsequent round. Specifically, the output of MARS appears to be random at four or more core rounds, RC6 and Serpent at four or more rounds, Rijndael at three or more rounds, and Twofish at two or more rounds. The test conditions and results are described in Ref. [88]. For comments on the limitations of NIST’s methodology, see Ref. [69].
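To give a flavor of this kind of testing, the sketch below implements the frequency (monobit) test, one of the tests in the NIST Statistical Test Suite. It checks only whether ones and zeros are balanced in a bit sequence. The tests and test conditions actually applied to the finalists are those described in Ref. [88]; here `os.urandom` stands in for cipher output, and the 0.01 threshold is a commonly used significance level, both assumptions made for illustration.

```python
import math
import os


def bytes_to_bits(data: bytes) -> list:
    """Expand bytes into a list of bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def monobit_p_value(bits) -> float:
    """Frequency (monobit) test: p-value for the balance of ones and zeros."""
    n = len(bits)
    # Map each 0 to -1 and each 1 to +1, then sum the sequence.
    s = sum(1 if b else -1 for b in bits)
    s_obs = abs(s) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


if __name__ == "__main__":
    # Stand-in data: operating-system randomness rather than real cipher output.
    sample = bytes_to_bits(os.urandom(4096))
    p = monobit_p_value(sample)
    verdict = "consistent with random" if p >= 0.01 else "non-random"
    print(f"p-value = {p:.4f} ({verdict} at the assumed 0.01 level)")
```

A single passing test says little by itself; a sequence such as 0101... passes this test perfectly, which is why a suite of complementary tests is used.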

Additional testing, as described in Ref. [53] and limited to RC6, confirmed NIST’s results for RC6 on certain statistical tests. Reference [74] presented detailed results from measuring the diffusion properties of full round and reduced round versions of the finalists. The quantities measured—including the degrees of completeness, of the avalanche effect, and of strict avalanche criterion—were “indistinguishable from random permutations after a very small number of rounds,” for all of the finalists.

In summary, none of the finalists was statistically distinguishable from a random function.

3.2.6 Other Security Observations

Many observations have been offered about various properties that might impact the security of the finalists. Because the implications of these observations are generally subjective, they did not play a significant role in NIST’s selection.

MARS: In Ref. [20], the MARS team conjectures that the heterogeneous structure of MARS and its variety of operations constitute a kind of insurance against the unknown attacks of the future. The MARS key schedule requires several stages of mixing; in Ref. [77], key schedules that require the thorough mixing of key bits are cited for security advantages. The estimates in the MARS specification of the resistance of the core to linear cryptanalysis are questioned in Ref. [79]. In Ref. [61], one conjectured estimate from the MARS specification is proven incorrect. In Ref. [14], it is pointed out that the MARS S-box does not have all of the properties that the designers required. No attacks are proposed based on these observations. In Ref. [49], the MARS team offers a clarification of its analysis, supporting the original assessment that MARS is resilient against linear attacks.

RC6: In Ref. [77], the thorough mixing provided by the RC6 key schedule is cited as a security advantage. In Ref. [20], the concern is raised that RC6 relies mainly on data-dependent rotations for its security, constituting a “ ‘single point of failure’ … (as it does not use S-boxes).”

Rijndael: In Ref. [86], the author discusses three concerns about the mathematical structure of Rijndael and the potential vulnerabilities that result. First, he observes that all of the operations of the cipher act on entire bytes of the data, rather than bits; this property allows the Square attack on reduced-round variants. Moreover, the nearly symmetric movement of the bytes troubles him. The only break to the symmetry is the use of different round constants in the key schedule, and for the first eight rounds, these constants are only one bit. If Rijndael were simplified to omit these round constants, then encryption would be compatible with rotating each word of the data and subkeys by a byte.

The second concern discussed in Ref. [86] is that “Rijndael is mostly linear.” He disagrees with the deliberate design decision to avoid mixing the XOR operations with ordinary addition operations. He illustrates how to apply a linear map to the bits within each byte without changing the overall algorithm, by compensating for the linear map in the other elements of the cipher, including the key schedule. Similarly, the Galois field that underlies the S-box can be represented in different basis vectors or can be transformed to other Galois fields with different defining polynomials. In other words, Rijndael’s mathematical structure permits many equivalent formulations. The author suggests that, by performing a series of manipulations to the S-box, an attacker might be able to find a formulation of Rijndael with an exploitable weakness.

The third concern discussed in Ref. [86] is the relatively simple algebraic formula for the S-box, which is given in the Rijndael specification. The formula is a polynomial of degree 254 over the given Galois field, but there are only nine terms in the polynomial, far fewer than would be expected in a typical randomly generated S-box of the same size. The mathematical expression for the iteration of several rounds of Rijndael would be much more complex, but the author asserts that the growth of the expression size as a function of rounds has not been analyzed in detail. He presents some examples of calculations in this setting, including the possible use of a “normal” basis, under which the squaring operation amounts to just a rotation of bits. If the expression for five rounds of Rijndael turned out to contain, say, only a million terms, then the author asserts that a meet in the middle attack could be mounted by solving a large system of linear equations. Such an attack would require the attacker to collect two million plaintext-ciphertext pairs.

In Ref. [86], it is also noted that an attacker that recovers or guesses appropriate bits of Rijndael’s subkeys will be able to compute additional bits of the subkeys. (In the case of DES, this property aided the construction of linear and differential attacks.) Extensions of this observation are discussed in Ref. [37]; its authors deem these properties worrisome and suggest that, contrary to a statement in the Rijndael specification, the key schedule does not have high diffusion.

In Ref. [72], some properties of the linear part of the round function in Rijndael are explored. In particular, the linear mapping within the round function has the property that 16 iterations are equivalent to the identity mapping. The authors suggest that this casts doubt on the claim in the Rijndael submission that the linear mapping provides high diffusion over multiple rounds. In Ref. [24], the Rijndael submitters explain that the observations in Ref. [72] do not contradict their claims about the security of Rijndael. The authors of Ref. [72] offer a further response in Ref. [71].

Serpent: In Ref. [3], the Serpent team asserts that Serpent is the most secure of the finalists. They cite Serpent’s many extra rounds, beyond those needed to resist today’s attacks, as a reason why future advances in cryptanalysis should not break its design. In Ref. [67], a concern is raised about the small size of Serpent’s S-boxes. Although the author views the S-boxes as well designed with respect to linear and differential cryptanalysis, the S-boxes may turn out to exhibit some other properties that are exploitable in an attack. No such properties or attacks have been proposed. In Ref. [86], it is noted that an attacker that recovers or guesses appropriate bits of the subkeys will be able to compute additional bits of the subkeys.

Twofish: Twofish uses an innovative paradigm, in the form of key-dependent S-boxes. This creates an unusual dependency between the security of the algorithm and the structure of the key schedule and S-boxes. In the 128 bit key case (where there are 128 bits of entropy), Twofish may be viewed as a collection of 2^64 different cryptosystems. A 64 bit quantity (representing 64 bits of the original 128 bits of entropy) that is derived from the original key controls the selection of the cryptosystem. For any particular cryptosystem, 64 bits of entropy remain, in effect, for the key. As a result of this partitioning of the 128 bits of entropy derived from the original key, there has been some speculation [66] that Twofish may be amenable to a divide-and-conquer attack. In such an attack, an attacker would determine which of the 2^64 cryptosystems is in use, and then determine the key to the cryptosystem. If a method could be devised to execute these steps, the work factor for each step would presumably be 2^64. However, no general attack along this line has been forthcoming. That is, if an attacker is faced with the task of decrypting ciphertext encrypted with a 128 bit key, it is not clear that the partitioning of the 128 bits of entropy gives the attacker any advantage. On the other hand, if a fixed 128 bit key is used repeatedly, each usage may leak some information about the cryptosystem selected. If an attacker can make repeated observations of the cryptosystem in action, he might conceivably be able to determine which of the 2^64 cryptosystems is in use. Similar remarks apply to higher key sizes (in general, for k bit keys, the cryptosystem is determined by k/2 bits of entropy).
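The arithmetic behind this speculation can be sketched as follows. The sketch assumes, purely hypothetically, that a method existed to carry out the two steps independently; no such method is known, and the function names are chosen here for illustration. It shows only why a two-step search over k/2 bits each would be far cheaper than a single search over k bits.

```python
def exhaustive_work(key_bits: int) -> int:
    """Worst-case number of keys to try in a plain exhaustive search."""
    return 2 ** key_bits


def divide_and_conquer_work(key_bits: int) -> int:
    """Hypothetical two-step work factor: find the cryptosystem, then its key."""
    half = key_bits // 2
    identify_cryptosystem = 2 ** half  # step 1: which of the 2^(k/2) cryptosystems
    recover_remaining_key = 2 ** half  # step 2: the remaining k/2 bits of entropy
    return identify_cryptosystem + recover_remaining_key


if __name__ == "__main__":
    for bits in (128, 192, 256):
        split = divide_and_conquer_work(bits)
        full = exhaustive_work(bits)
        print(f"{bits} bit key: split work 2^{split.bit_length() - 1}, "
              f"exhaustive work 2^{full.bit_length() - 1}")
```

The gap exists only if the first step can be performed without already knowing the remaining key bits, which is exactly the capability that has not been demonstrated.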

This feature of Twofish, called the key separation property of Twofish in Ref. [66], is discussed further in Refs. [55], [68], and [96]. In particular, Ref. [55] notes that the dependence of the S-boxes in Twofish on only 64 bits of entropy in the 128 bit key case was a deliberate design decision. This decision is somewhat analogous to the security/efficiency tradeoff involved in establishing the number of rounds in a system with a fixed round function. The authors note that if the S-boxes had depended on 128 bits of entropy, the number of rounds of Twofish would have had to be reduced in order to avoid an overly negative effect on key agility and/or throughput.

In Ref. [55], the Twofish team asserts that key-dependent S-boxes constitute a form of security margin against unknown attacks.

In Ref. [59], the author explores a variety of properties of Twofish, including the construction of truncated differentials for up to 16 rounds. Although these differentials do not necessarily lead to an attack, the author finds it surprising that non-trivial information can be pushed through all 16 rounds of Twofish.

3.2.7 Summary of Security Characteristics of the Finalists

As noted earlier, no general attacks against any of the finalists are known. Hence, the determination of the level of security provided by the finalists is largely guesswork, as in the case of any unbroken cryptosystem. The following is a summary of the known security characteristics of the finalists.

MARS appears to have a high security margin. A precise characterization of MARS is difficult because MARS employs two different kinds of rounds. MARS has received some criticism based on its complexity, which may have hindered its security analysis during the timeframe of the AES development process.

RC6 appears to have an adequate security margin. However, RC6 has received some criticism because of its low security margin relative to that offered by other finalists. On the other hand, RC6 has been praised for its simplicity, which may have facilitated its security analysis during the specified timeframe of the AES development process. RC6 is descended from RC5, which has received prior scrutiny.

Rijndael appears to have an adequate security margin. The security margin is a bit difficult to measure because the number of rounds changes with the key size. Rijndael has received some criticism on two grounds: that its security margin is on the low side among the finalists, and that its mathematical structure may lead to attacks. However, its structure is fairly simple, which may have facilitated its security analysis during the specified timeframe of the AES development process.

Serpent appears to have a high security margin. Serpent also has a simple structure, which may have facilitated its security analysis during the specified timeframe of the AES development process.

Twofish appears to have a high security margin. Since Twofish uses a key-dependent round function, the notion of security margin may have less meaning for this algorithm than for the other finalists. The dependence of the Twofish S-boxes on only k/2 bits of entropy in the k bit key case has led to speculation that Twofish may be amenable to a divide-and-conquer attack, although no such attack has been found. Twofish has received some criticism for its complexity, which made analysis difficult during the timeframe of the AES development process.

3.3 Software Implementations

Software implementations cover a wide range. In some cases, space is essentially unrestricted; in other cases, RAM and/or ROM may be severely restricted. In some cases, large quantities of data are encrypted or decrypted with a single key. In other cases, the key changes frequently, perhaps with each block of data.

Encryption or decryption speed may be traded off against security, indirectly or directly. That is, the number of rounds specified for an algorithm is a factor in security; encryption or decryption speed is roughly proportional to the number of rounds. Thus, speed cannot be studied independently of security, as noted in Sec. 3.3.6.

There are many other aspects of software implementations. Some of these are explored below, along with the basic speed and cost considerations.

3.3.1 Machine Word Size

One issue that arises in software implementations is the underlying architecture. The platforms on which NIST performed testing were oriented to 32 bit architectures. However, performance on 8 bit and 64 bit machines is also important, as was recognized in the public comments and analyses. It is difficult to project how various architectures will be distributed over the next 30 years (roughly the minimum period in which the AES is expected to remain viable). Hence, it is difficult to assign weights to the corresponding performance figures that accurately represent their importance during this timeframe. Nonetheless, from the information received by NIST, the following picture emerges:

It appears that over the next 30 years, 8 bit, 32 bit, and 64 bit architectures will all play a significant role (128 bit architectures might be added to the list at some point). Although the 8 bit architectures used in certain applications will gradually be supplanted by 32 bit versions, 8 bit architectures are not likely to disappear. Some 32 bit architectures will be supplanted by 64 bit versions at the high end, but 32 bit architectures will become increasingly relevant in low-end applications, so their overall significance will remain high. Meanwhile, 64 bit architectures will grow in importance. Since none of these predictions can be quantified, versatility appears to be of the essence: an AES should exhibit good performance across a variety of architectures.

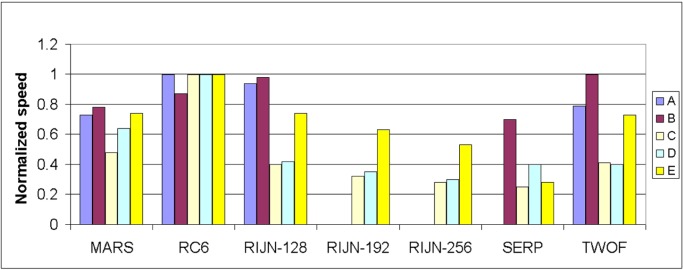

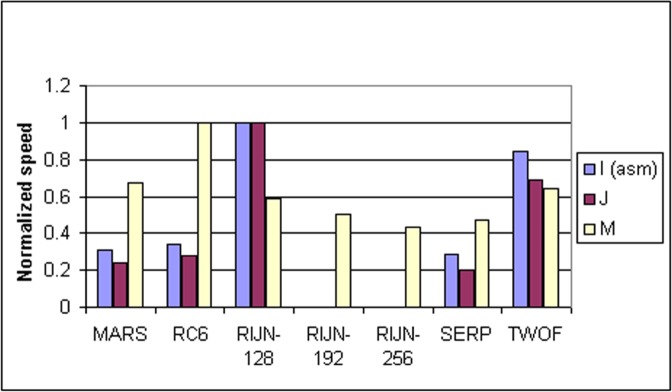

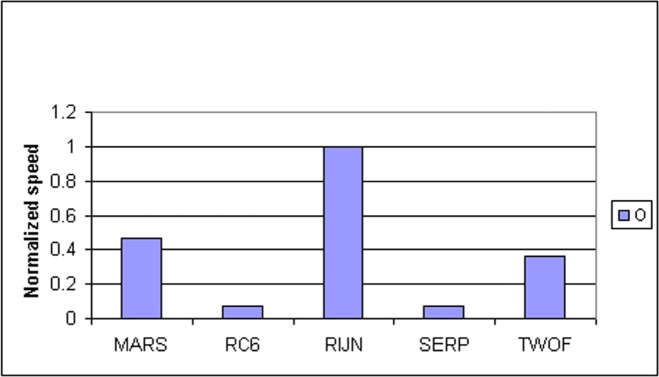

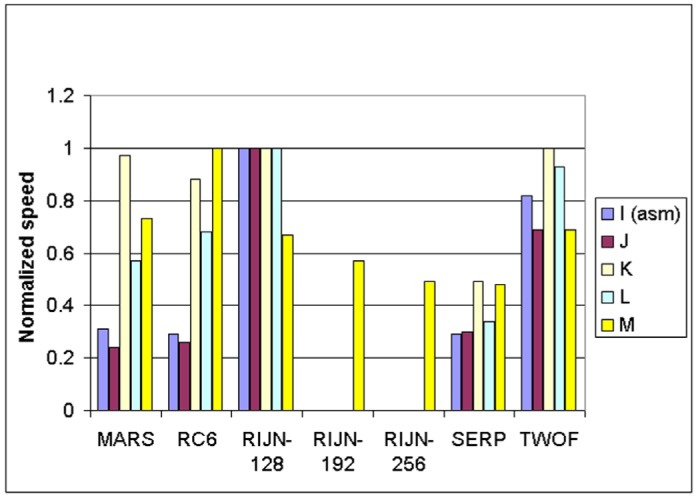

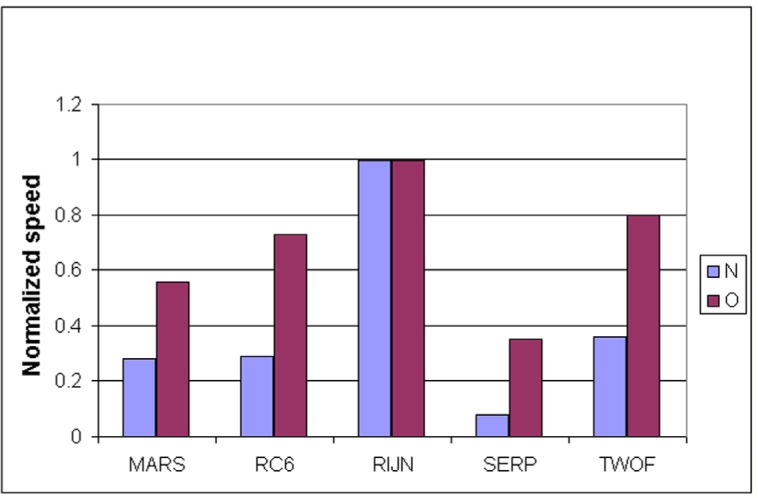

Some information on the performance of the finalists with respect to word size may be gleaned from Tables 16 through 25 of Appendix A. In this appendix, encryption speeds are grouped into four categories: 8 bit, 32 bit C and assembler code, 64 bit C and assembler code, and other (Java, DSPs, etc.). Graphs are also provided to aid the visualization of the table information.

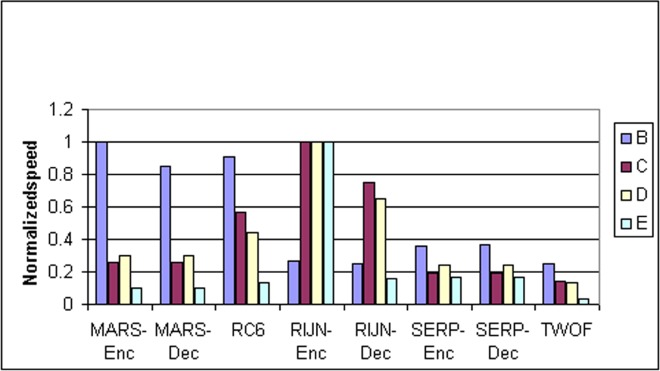

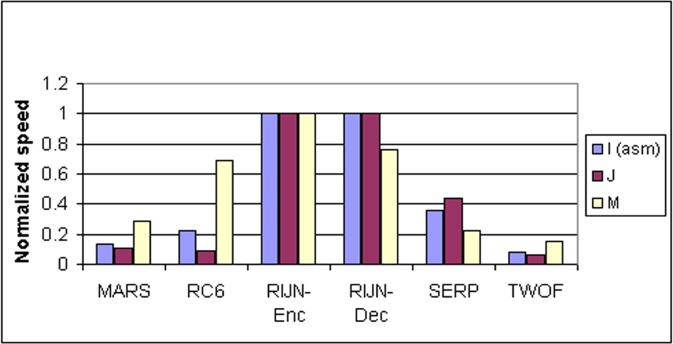

Table 16. Software speeds (encryption): 32 bit processors (C)

| | A Clocks | A Norm. | B Clocks | B Norm. | C Clocks | C Norm. | D Mbits | D Norm. | E Clocks | E Norm. |
|---|---|---|---|---|---|---|---|---|---|---|
| MARS | 306 | 0.73 | 1600 (a) | 0.78 | 656 | 0.48 | 80.6 | 0.64 | 364 | 0.74 |
| RC6 | 223 | 1.00 | 1436 | 0.87 | 318 | 1.00 | 125.9 | 1.00 | 269 | 1.00 |
| RIJN | 237 | 0.94 | 1276 | 0.98 | 805 | 0.40 | 52.6 | 0.42 | 362 | 0.74 |
| | | | | | 981 | 0.32 | 44.3 | 0.35 | 428 | 0.63 |
| | | | | | 1155 | 0.28 | 38.2 | 0.30 | 503 | 0.53 |
| SERP | | | 1800 | 0.70 | 1261 | 0.25 | 50.3 | 0.40 | 953 | 0.28 |
| TWOF | 282 | 0.79 | 1254 | 1.00 | 780 | 0.41 | 50.3 | 0.40 | 366 | 0.73 |

(a) The value is based on the Round 1 version of MARS (with a different key schedule from the Round 2 version).
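The "Norm." columns of Table 16 appear to be consistent with dividing each entry by the best entry in its column, so that the fastest finalist scores 1.00. The following sketch reproduces that calculation for columns A and D under this assumption; the helper name `normalize` is hypothetical, and only the first listed RIJN row is used.

```python
def normalize(values: dict[str, float], higher_is_better: bool) -> dict[str, float]:
    """Scale a column so the best entry is 1.00 (assumed normalization rule)."""
    best = max(values.values()) if higher_is_better else min(values.values())
    return {
        # Clock counts: fewer is better, so divide the best by the value.
        # Throughput: more is better, so divide the value by the best.
        name: round(v / best if higher_is_better else best / v, 2)
        for name, v in values.items()
    }


# Column A of Table 16 (clock cycles; no Serpent entry is listed).
column_a_clocks = {"MARS": 306, "RC6": 223, "RIJN": 237, "TWOF": 282}
# Column D of Table 16 (Mbits).
column_d_mbits = {"MARS": 80.6, "RC6": 125.9, "RIJN": 52.6, "SERP": 50.3, "TWOF": 50.3}

print(normalize(column_a_clocks, higher_is_better=False))
print(normalize(column_d_mbits, higher_is_better=True))
```

Because each column is normalized against its own best value, the normalized figures compare finalists within one platform but not across platforms.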

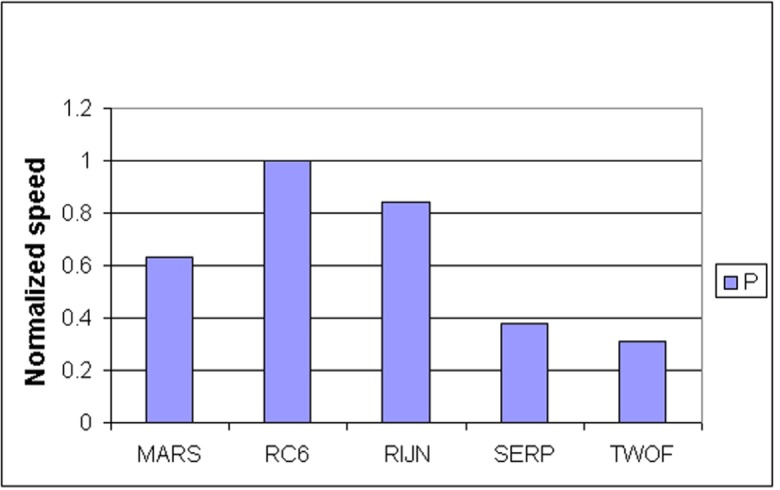

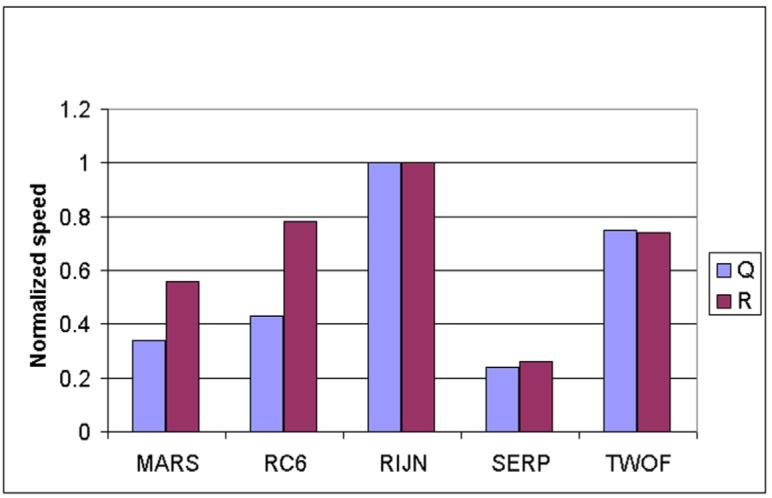

Table 25.

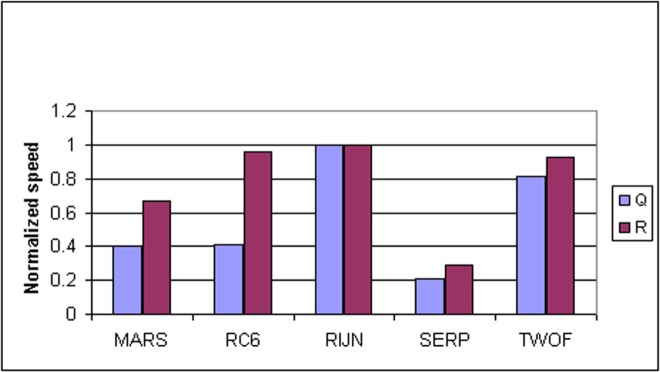

Software speeds (decryption): Digital Signal Processors (DSPs)

| Q | R | |||

|---|---|---|---|---|

| Clocks | Norm. | Clocks | Norm. | |

| MARS | 8 826a | 0.40 | 400 | 0.67 |

| RC6 | 8 487 | 0.41 | 281 | 0.96 |

| RIJN | 3 500 | 1.00 | 269 | 1.00 |

| SERP | 16 443 | 0.21 | 917 | 0.29 |

| TWOF | 4 328 | 0.81 | 290 | 0.93 |

The value is based on the Round 1 version of MARS (with a different key schedule from the Round 2 version).

It should be noted that performance cannot be classified by word size alone. One additional factor is the support provided by software. This is noted (but not systematically explored) in the next section.

3.3.2 Other Architectural Issues

Both MARS and RC6 use 32 bit multiplies and 32 bit variable rotations. These operations, particularly the rotations, are not supported on some 32 bit processors. The 32 bit multiply and rotation operations are both awkward to implement on processors of other word sizes. Moreover, some compilers do not actually use the rotation operations even when they are available in the processor instruction set. Therefore, the relative performance of MARS and RC6, when running the same source code, shows somewhat more variance from platform to platform (where a platform is a specific processor and compiler) than that of the other three finalists.
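To illustrate why these operations are sensitive to the platform, the sketch below expresses a 32 bit variable rotation and a 32 bit multiply using only shifts, ORs, and masks, which is roughly what portable source code must do when no rotate primitive is available. It is an illustration only, not code from MARS or RC6; the names `rotl32` and `mul32` are hypothetical.

```python
MASK32 = 0xFFFFFFFF  # keep results within one 32 bit word


def rotl32(x: int, r: int) -> int:
    """Rotate the 32 bit word x left by r positions (r is reduced modulo 32)."""
    x &= MASK32
    r &= 31  # a variable, data-dependent amount uses only the low five bits
    # Two shifts and an OR; a processor with a rotate instruction needs one
    # operation, but only if the compiler recognizes this pattern.
    return ((x << r) | (x >> (32 - r))) & MASK32


def mul32(a: int, b: int) -> int:
    """Multiply two 32 bit words, keeping the low 32 bits of the product."""
    return (a * b) & MASK32


word = 0x12345678
amount = 0xABCDEF08        # rotation amount taken from data
print(hex(rotl32(word, amount)))
print(hex(mul32(0xFFFFFFFF, 2)))
```

On a processor with narrower words, each shift and multiply above must itself be broken into several operations, which is consistent with the remark that these operations are awkward on other word sizes.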

3.3.3 Software Implementation Languages

The performance of the finalists also depends somewhat on the particular implementation language and tools used (e.g., assembler, compiler, or interpreter). In some cases, the role played by particular software has a strong effect on performance figures. There is a spectrum of possibilities. At one extreme, hand-coded assembly code will generally produce better performance than even an optimizing compiler. At the other extreme, interpreted languages are, in general, poorly adapted to the task of optimizing performance. Compilers are typically in between. In addition, as noted in Sec. 3.3.2, some compilers do a better job than others in making use of the support provided by the underlying architecture for operations such as 32 bit rotations. This increases the difficulty of measuring performance across a variety of platforms. Some finalists benefited from the use of certain compilers on certain processors. However, this type of performance increase on specific platforms does not necessarily translate into high performance results across platforms.

There is no clear consensus on the relative importance of different languages. In Ref. [84], the opinion is expressed that assembler coding is the best means of evaluating performance on a given architecture. The reason provided is that hand-coded assembler will be used when speed is important and a hardware implementation is not available. On the other hand, the use of assembler or another means of optimizing for speed may raise costs. Code development cost may be significant, especially if the goal is maximum speed. For example, optimizations may be effected using hand coding for high-level languages such as C, or by the use of assembly code. This developmental cost may or may not translate into significant monetary cost, depending upon the specific environment. In some environments, the speed at which the code runs is perceived as a paramount consideration in evaluating efficiency, overriding cost considerations. In other cases, the time and/or cost of code development is a more important consideration. In some cases, the speed of key setup is more significant than encryption or decryption speed. This makes it difficult to develop a universal metric for evaluating the performance of the finalists.
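The interplay between key setup and encryption speed can be made concrete with a simple amortized cost model. The sketch below is an assumption-laden illustration: the two ciphers and their cycle counts are hypothetical and do not correspond to any finalist, and `cycles_per_block` is an illustrative name.

```python
def cycles_per_block(setup_cycles: float, block_cycles: float, blocks_per_key: int) -> float:
    """Average cost per block when one key setup is amortized over blocks_per_key blocks."""
    return block_cycles + setup_cycles / blocks_per_key


# Hypothetical cycle counts, chosen only to show the effect.
agile_cipher = {"setup_cycles": 200, "block_cycles": 400}    # cheap key setup
bulk_cipher = {"setup_cycles": 8000, "block_cycles": 300}    # fast bulk encryption

for blocks in (1, 10, 1000):
    agile = cycles_per_block(blocks_per_key=blocks, **agile_cipher)
    bulk = cycles_per_block(blocks_per_key=blocks, **bulk_cipher)
    print(f"{blocks:>5} blocks per key: agile={agile:.1f}, bulk={bulk:.1f}")
```

With these made-up figures, the cipher with cheap key setup is faster when the key changes with every block, while the other is faster once a key is reused for many blocks, so the ranking depends on the usage pattern rather than on a single number.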

Code development cost may need to be traded off against speed. That is, the use of standard reference code may minimize cost, but may not allow significant optimization in a particular environment. On the other hand, the use of non-standard code, such as hand-coded assembler, may optimize speed at the expense of higher development cost.

Optimization spans a broad range. Some optimizations may be made without great effort. Furthermore, some optimizations may be portable across platforms. At the opposite extreme, some optimizations require much effort and/or are restricted to particular platforms. Two related examples are discussed in Refs. [43] and [73], in which optimized implementations of the Serpent S-boxes are obtained. This work involves exhaustive searching through possible instruction sequences. The results improve Serpent’s performance on the targeted platforms. However, this level of optimization involves resource expenditures (e.g., 1000 hours of execution of search programs [43]) far beyond optimizations that may be obtained using hand coding. Optimizations obtained by such searches do not necessarily port to different platforms. Maximal optimization on specific platforms may raise the cost of code development substantially.

In Tables 16–21 of Appendix A, the results were obtained via a mixture of reference code and hand-coded assembler. Some finalists (notably, Rijndael and Twofish) performed better on some platforms when hand-coded assembler was used as opposed to compilers. The results from Refs. [43] and [73] and from other papers dealing with heavily optimized implementations of one finalist have been omitted from these tables. Although such papers would be valuable aids in implementing a finalist in practice, their significance for comparing the finalists is questionable since the papers only address a single algorithm. Without knowing the level of effort applied to optimizing the algorithms in the separate studies, it is impractical to compare studies where a single algorithm was optimized. Choosing an AES algorithm on the basis of heavily optimized implementations would not necessarily be an accurate predictor of the general performance of the algorithm in the field, since extreme optimization may not be feasible or cost-effective in many applications.

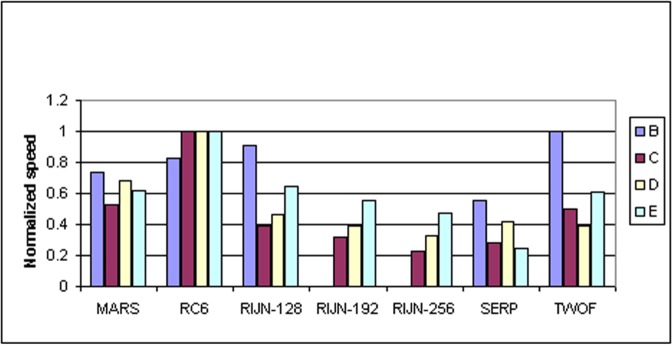

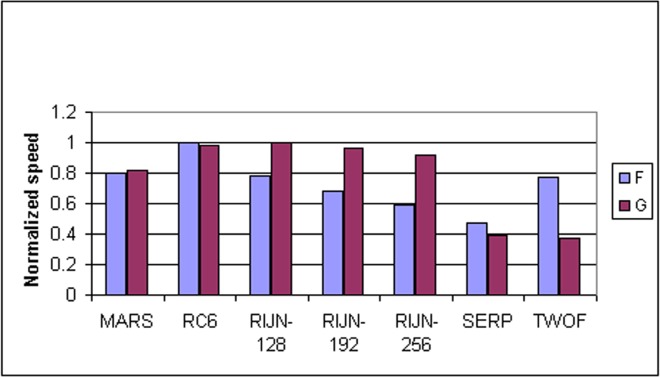

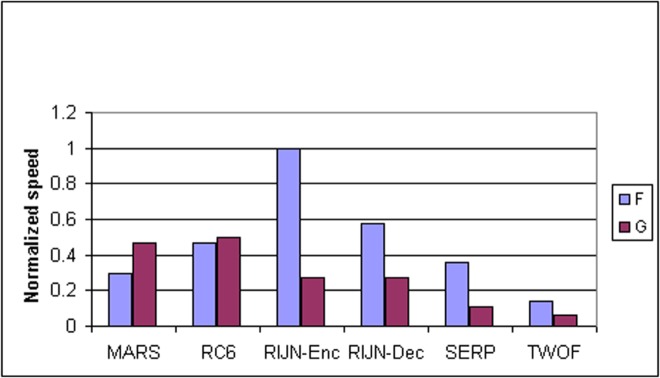

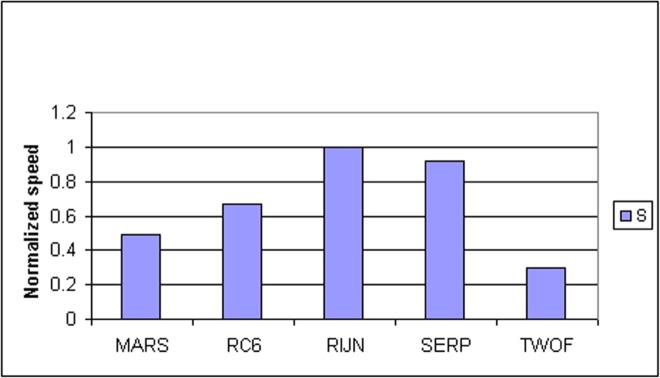

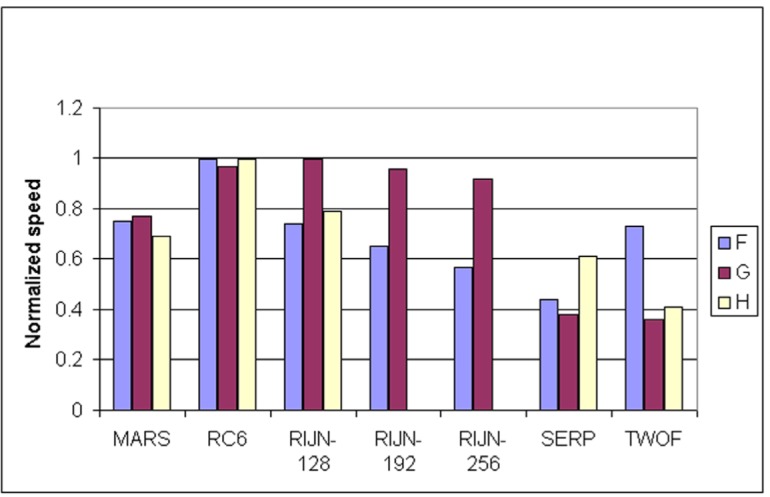

Table 17. Software speeds (encryption): 32 bit processors (Java)

| | F kbits/s | F Norm. | G kbits/s | G Norm. | H Clocks | H Norm. |
|---|---|---|---|---|---|---|
| MARS | 19 718 | 0.75 | 3738 | 0.77 | 8840 (a) | 0.69 |
| RC6 | 26 212 | 1.00 | 4698 | 0.97 | 6110 | 1.00 |
| RIJN | 19 321 | 0.74 | 4855 | 1.00 | 7770 | 0.79 |
| | 16 922 | 0.65 | 4664 | 0.96 | | |
| | 14 957 | 0.57 | 4481 | 0.92 | | |
| SERP | 11 464 | 0.44 | 1843 | 0.38 | 10 050 | 0.61 |
| TWOF | 19 265 | 0.73 | 1749 | 0.36 | 14 990 | 0.41 |