Abstract

Purpose

Our aim was to study how patients and their clinicians evaluated the usability of PatientViewpoint, a webtool designed to allow patient-reported outcome (PRO) measures to be used in clinical practice.

Methods

As part of a two-round quality improvement study, breast and prostate cancer patients and their medical and radiation oncology clinicians completed semi-structured interviews about their use of PatientViewpoint. Patient interviews addressed different phases of the PRO completion workflow: reminders, completing the survey, and viewing the results. Clinician interviews asked about use of PatientViewpoint, integration with the clinical workflow, barriers to use, and helpful and desired features. Responses were recorded, categorized, and reviewed. After each round of interviews, modifications to PatientViewpoint were made or planned.

Results

Across the two rounds, 42 unique patients (n=19 in round 1, n=23 in round 2) and 12 clinicians (all in both rounds) completed interviews. Among patients, the median age was 65 years, 81% were white, 69% were college graduates or higher, 80% had a performance status of 0, 69% had loco-regional disease, and 81% were regular computer users. In the quality improvement interviews, patients identified numerous strengths of the system, including its ability to flag issues for discussion with their provider. Concerns included confusion about how scores were presented and a sense that the value of the system was diminished if the doctor did not look at the results. Requests included tailoring questions to the individual and providing more explanation of score meaning, including having higher scores consistently indicate either better or worse status. Clinicians also provided primarily positive feedback about the system, finding it helpful in some cases and confirmatory in others. Their primary concern was the impact on their workflow.

Conclusions

Systematically collected feedback from patients and clinicians was useful for identifying ways to improve a system for incorporating PRO measures into oncology practice. The findings and evaluation methods should be useful to others seeking to integrate PRO assessments into ambulatory care.

Implications for Cancer Survivors

Systems to routinely collect patient-reported information can be incorporated into oncology practices and provide useful information that promotes partnership between patients and clinicians to improve the quality of care.

Keywords: Patient-reported outcome, Quality of life, Electronic health record, Oncology, Clinical practice, Quality improvement

Introduction

Patient-reported outcome (PRO) measures are used increasingly in clinical practice [1–4]. PRO measures are ratings and reports that come directly from patients about their health, including information about functional status, psychological well-being, health-related quality of life, and symptoms [5]. The rise of information technology has made collection of PRO measures more convenient, allowing for electronic data capture, immediate scoring, and generation of reports [6, 7]. The concurrent upswing of interest in comparative effectiveness research and the widespread adoption of electronic health records (EHRs) are helping to accelerate the integration of PROs into clinical practice [1, 8].

While a number of studies have evaluated the impact of PROs in clinical care and reviewed different electronic PRO (ePRO) systems, there have been few published reports focused specifically on how to optimize the usability of the ePRO technologies that collect the PRO data. In general, clinicians have found PRO data to be useful and not disruptive to their practices [9–11]. Rose and Bezjak [12] and others [4, 13, 14] have described some of the logistics of collecting PROs in clinical practice. Jensen and colleagues reviewed the electronic collection of PRO measures in cancer care and found little consensus on questionnaire administration, integration, or results reporting across the 33 unique systems they identified [15].

We developed PatientViewpoint, a webtool designed to (1) allow clinicians to order PRO measures to be completed by a patient, (2) collect PRO data from patients, and (3) link the PRO data with the EHR to facilitate their use in managing individual patients [16, 17]. A primary goal of PatientViewpoint is to improve patients’ experience of care by facilitating doctor-patient communication. An initial pilot test of PatientViewpoint demonstrated that patients and clinicians are willing to use the website and find the technology helpful [18]. However, this pilot was done in a research setting with a limited number of patients, and with close monitoring by the research team of how, when, and where patients and clinicians used or did not use the system.

In this paper, we report on a further test of PatientViewpoint, conducted as a substudy of a randomized controlled trial (RCT) comparing patient and clinician preferences for the use of different PRO questionnaires in ambulatory oncology practice [19]. While the larger RCT focused on the content of the different questionnaires being tested, the substudy used a quality improvement approach to ascertain the usability of PatientViewpoint to deliver the questionnaires. This two-round, short-cycle substudy elicited patient and clinician comments and suggestions for improvement, followed by adjustments and then reevaluation [20].

Methods

Design

This was a quality improvement (QI) study of the PatientViewpoint webtool, conducted as a substudy of a randomized controlled trial comparing three PRO measures for use with cancer patients and their clinicians [19]. While the larger trial focused on the content of the questionnaires, this study focused specifically on the usability of PatientViewpoint in a subgroup of purposively sampled patients and clinicians. There were two quality improvement rounds, each of which included a cross-sectional, interview-based assessment. This was not a qualitative study in that the data were not collected with the goal of achieving thematic saturation, but rather to inform adjustments. The main trial was conducted between October 25, 2010 and December 9, 2012. The quality improvement substudy began on September 12, 2011 and continued until the end of the study.

PatientViewpoint

PatientViewpoint allows clinicians to assign patients PRO questionnaires to be completed at pre-defined intervals [16, 17]. Patients receive an e-mail reminder, containing a link to the website, to complete the questionnaire. The results from the patient’s current and previous questionnaires are displayed in graphical format. Scores that are poor in absolute terms, or represent a significant worsening from the previous assessment, are highlighted in yellow to alert clinicians. Free-text boxes ask patients to report the issue they are most interested in discussing with their clinician and any other feedback. Links explain what is covered in each domain (“What is this?”) and how the different PROs are scored (“Score Meaning”). An additional “What can I do” link provides clinicians with advice about steps that might be taken in response to poor scores. In addition to the graphical reports on the website, a plain-text table of scores is imported into the EHR; because yellow highlighting cannot be displayed in plain text, flagged scores are marked with an asterisk instead.
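The flagging rule described above can be sketched in a few lines. This is a minimal illustration, not PatientViewpoint's code: the cut-offs `POOR_THRESHOLD` and `WORSENING_THRESHOLD`, the `DomainScore` type, and the functions `is_flagged` and `plain_text_table` are hypothetical, and the sketch assumes that higher scores mean better health in every domain (which, as the interviews show, was not true of the instruments used). It shows the two conditions that trigger a flag and the asterisk substitution used in the plain-text EHR table.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical cut-offs: the paper does not report PatientViewpoint's actual rules.
POOR_THRESHOLD = 40.0       # assumed: below this, a score is "poor in absolute terms"
WORSENING_THRESHOLD = 10.0  # assumed: a drop this large counts as "significant worsening"


@dataclass
class DomainScore:
    domain: str
    current: float
    previous: Optional[float] = None  # None at the patient's first assessment


def is_flagged(s: DomainScore) -> bool:
    """Flag a score that is poor, or markedly worse than the previous assessment.

    Assumes higher scores mean better health in every domain.
    """
    poor = s.current < POOR_THRESHOLD
    worsened = s.previous is not None and (s.previous - s.current) >= WORSENING_THRESHOLD
    return poor or worsened


def plain_text_table(scores: List[DomainScore]) -> str:
    """Render scores as plain text for the EHR; '*' replaces yellow highlighting."""
    rows = [f"{'Domain':<20}{'Score':>6}"]
    for s in scores:
        mark = "*" if is_flagged(s) else ""
        rows.append(f"{s.domain:<20}{s.current:>6.0f}{mark}")
    rows.append("* flagged for clinician review")
    return "\n".join(rows)


if __name__ == "__main__":
    print(plain_text_table([
        DomainScore("Physical function", 72, 75),   # small change: not flagged
        DomainScore("Emotional function", 35, 38),  # poor in absolute terms
        DomainScore("Social function", 60, 80),     # 20-point drop: flagged
    ]))
```

Running it prints a three-row table in which the emotional and social function rows carry an asterisk and the physical function row does not.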

Study setting

Study participants included breast and prostate cancer patients and their clinicians in radiation and medical oncology practice from the East Baltimore campus of the Sidney Kimmel Comprehensive Cancer Center at Johns Hopkins in Maryland. A consecutive sample of eligible patients able to read English was selected. Details of the randomized trial have been reported [19]. The Johns Hopkins School of Medicine IRB approved the study, including the current quality improvement component.

Recruitment

E-mail invitations were sent to the radiation and medical oncologists with substantial patient loads in breast and prostate cancer. If a clinician was willing to participate, she/he was asked to provide written informed consent. We also invited the nurse practitioners who worked with these clinicians to participate. Oncology staff used a flyer to recruit patients of participating clinicians. For eligible patients who agreed to participate, the study coordinator obtained written consent.

Procedures

At enrollment, patients completed brief socio-demographic questionnaires, and basic clinical information (performance status, type of cancer, extent of disease, and past and current treatments) was collected from their providers. During the period of the quality improvement substudy, enrolled patients were encouraged to complete their PRO questionnaires via the PatientViewpoint website from home (or elsewhere). Specifically, enrolled patients were given a letter with a username and password for the website and oriented on how to use the website. Patients without computer/Internet access or who did not complete the questionnaires offsite were given the opportunity to complete the questionnaires in the clinic prior to their visit, using a laptop provided by the research coordinator. Patients were followed for the duration of their treatment or until the study terminated (whichever was shorter).

Enrolled patients received e-mail reminders when it was time for them to complete the PatientViewpoint questionnaire online. The PRO questionnaires used in this study were the EORTC QLQ-C30 [21], the Supportive Care Needs Survey-Short Form [22], and PROMIS Short Forms representing several domains [23]. Whether patients completed the PRO questionnaire at home or in the clinic, they accessed the PatientViewpoint website via the Internet. The research coordinator monitored whether patients completed the PRO questionnaire, either offsite prior to their oncologist visit or using the in-clinic computer, and asked patients who did not complete the questionnaire about their reasons for non-completion (too ill, got tired, lost interest, not enough time, technical problems).
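Table 2 reports two scheduling changes after round 1: a reminder is now sent immediately when a questionnaire is assigned within 3 days of the visit, and the number of reminders was increased to 3. A minimal sketch of that logic follows, assuming (hypothetically) that the first reminder otherwise goes out 3 days before the visit and that later reminders follow at daily intervals; the paper reports neither the default timing nor the spacing, and `reminder_dates` is an illustrative name.

```python
from datetime import date, timedelta
from typing import List

WINDOW_DAYS = 3      # Table 2: the 3-day window before the visit
MAX_REMINDERS = 3    # Table 2: reminder frequency increased to 3
SPACING_DAYS = 1     # hypothetical: the interval between reminders is not reported


def reminder_dates(assigned: date, visit: date) -> List[date]:
    """Sketch of reminder scheduling after the round 1 changes (assumed logic)."""
    window_start = visit - timedelta(days=WINDOW_DAYS)
    # Round 1 fix: a questionnaire assigned inside the window gets its first
    # reminder right away instead of none at all.
    first = max(assigned, window_start)
    dates: List[date] = []
    for i in range(MAX_REMINDERS):
        d = first + timedelta(days=i * SPACING_DAYS)
        if d >= visit:  # assumed: stop once the visit day arrives
            break
        dates.append(d)
    return dates
```

For a visit on March 10 and a questionnaire assigned on March 1, this yields reminders on March 7, 8, and 9; if the questionnaire is assigned on March 9, a single reminder goes out that day rather than none.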

At each clinic visit, for patients who completed the PRO questionnaires, clinicians were provided with an automatically generated report in the EHR. Clinicians could also obtain a printed hard copy of the summary report or access the results via the PatientViewpoint website. All clinicians were trained in the website’s use and the interpretation of the patient PRO data summary reports when they enrolled.

Quality improvement

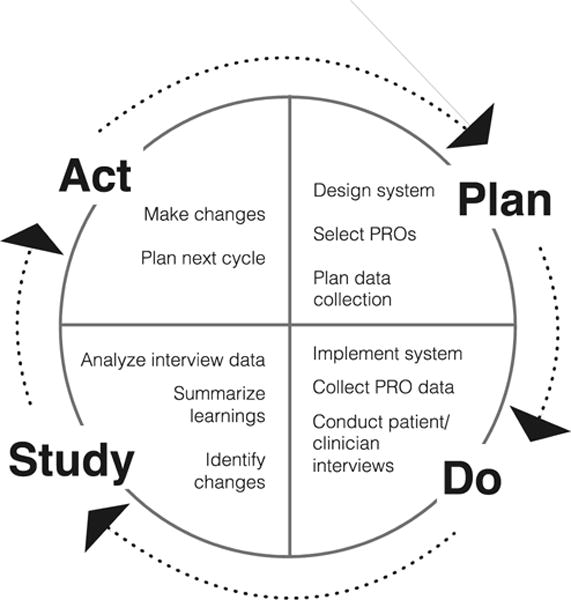

To assess and improve the website’s usability, we applied a quality improvement (QI) approach with two 3-month cycles, adapting the standard Plan-Do-Study-Act (PDSA) model (Fig. 1) [24]. PDSA is an iterative management method used in the continuous quality improvement of processes and products, and it has been widely adopted in medicine as the basis for rapid improvement of clinical processes [25]. Because the product, PatientViewpoint, had already been developed (Plan), we started each cycle with the “Do” phase of implementing it in the clinic. Each “Study” phase collected feedback from patients and clinicians about their experience with the steps in using PatientViewpoint: ordering, data collection/survey completion, viewing results, interpretation, and clinical decision making. The “Act” phase took place at the end of each 3-month cycle, when we used the information gathered from patients and clinicians to adjust PatientViewpoint. At the end of each PDSA cycle, the research team “Planned” improvements to the system based on this feedback and implemented them (Do).

Fig. 1.

Plan-Do-Study-Act (PDSA) model for quality improvement

Patients

The study team conducted semi-structured interviews with patients regarding the use of the website. At the beginning of the interview, patients demonstrated how they used PatientViewpoint and then reported on the parts of the process they found helpful and the barriers they perceived. In each cycle, we aimed to conduct “new user” interviews with 12 patients after they had completed their first questionnaires using PatientViewpoint, and “experienced user” interviews with 12 different patients after they had completed three questionnaires. For each set of interviews, the target sample included three breast medical oncology patients, three prostate medical oncology patients, three breast radiation oncology patients, and three prostate radiation oncology patients. Each patient was asked to participate in only one interview (i.e., as a “new” or an “experienced” user) and was interviewed in only one PDSA cycle. The sample size was estimated to provide enough responses to inform improvements.
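The sampling targets above multiply out as follows: 2 user types × 4 cancer-by-clinic groups × 3 patients gives 24 distinct patients per cycle, and 48 across both cycles, because no patient was interviewed twice. The short tally below is an illustration with variable names of our own choosing; it compares those targets with the 19 and 23 interviews reported in the Results.

```python
from itertools import product

USER_TYPES = ("new", "experienced")
CANCERS = ("breast", "prostate")
CLINICS = ("medical oncology", "radiation oncology")
PER_CELL = 3        # three patients per cancer-by-clinic group
CYCLES = (1, 2)

# Target count for every (cycle, user type, cancer, clinic) combination.
targets = {cell: PER_CELL for cell in product(CYCLES, USER_TYPES, CANCERS, CLINICS)}

# Sum the targets belonging to each cycle (the cycle is the first key element).
per_cycle = {c: sum(n for cell, n in targets.items() if cell[0] == c) for c in CYCLES}
achieved = {1: 19, 2: 23}  # interviews completed, as reported in Results

for c in CYCLES:
    print(f"Cycle {c}: target {per_cycle[c]}, interviewed {achieved[c]}")
```

Across both cycles, the 42 completed interviews fall 6 short of the 48-patient target.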

Clinicians

The study team conducted semi-structured interviews with clinicians regarding the use of the website. We asked clinicians to relate an example in which they used the PatientViewpoint web system during a patient encounter, an example in which they did not use the PatientViewpoint web system during a patient encounter, and their most recent visit with a patient who was enrolled in the study. We asked clinicians to demonstrate how they used the information during the patient encounter, aspects of the system they found informative and helpful, and barriers they perceived in using the system. Clinician interviews occurred after the first month of each cycle so that clinicians had an opportunity to become accustomed to using the updated web system. Enrolled clinicians were interviewed in both PDSA cycles.

Analysis

The characteristics of the study sample of patients and clinicians were summarized descriptively. For the QI interviews, responses were recorded verbatim. The goal was to capture the complete range of reactions to the system, not to indicate which reactions were shared by most patients and which were based on only a few responses. One researcher (CFS) categorized themes from the interviews using a framework based on the semi-structured probes, which corresponded to phases in the questionnaire completion workflow (e-mail reminders, completing the survey, viewing the results, other). Three research team members (AWW, SMW, CFS) then met to discuss the responses and the identified themes. Disagreements were resolved by consensus.

Results

Study sample and clinician and patient characteristics

Twelve of 13 clinicians (92%) approached agreed to participate in the study (5 medical oncologists, 4 radiation oncologists, and 3 nurse practitioners; 8 were male and 4 were female). Between October 25, 2010 and December 9, 2012, study staff approached 301 potentially eligible patients, of whom 224 (74%) were enrolled [19]. The 100 patients enrolled after September 12, 2011 were trained on PatientViewpoint so that they could complete their questionnaires remotely rather than in the clinic. Of these 100 patients, 42 participated in the QI substudy, purposively sampled to represent breast and prostate cancer patients treated in medical or radiation oncology; 19 patients were interviewed in round 1 and 23 in round 2. Among these 42 patients, the median age was 65 years, 81% were white, 69% were college graduates or higher, 17 had breast cancer, 24 had prostate cancer, 80% had a performance status of 0, and 69% had loco-regional disease. Nineteen percent were not regular computer users, and 23% did not have high-speed Internet access (Table 1).

Table 1.

Patient characteristics

| Characteristic | All patients (n=42) |
|---|---|
| Median age (range), years | 65 (32–83) |
| Race, n (%)* | |
| White | 34 (81) |
| Other | 8 (19) |
| Education, n (%) | |
| Less than college | 6 (14) |
| Some college | 7 (17) |
| College graduate or higher | 29 (69) |
| Performance status, n (%) | |
| 0 | 32 (80) |
| 1+ | 8 (19) |
| Unknown | 2 |
| Extent of disease, n (%) | |
| Early stage/loco-regional | 28 (69) |
| Metastatic | 12 (30) |
| Unknown | 2 |
| Computer usage, n (%) | |
| Regular | 34 (81) |
| Occasional | 1 (2) |
| Rare | 3 (7) |
| Never | 4 (10) |
| Internet access, n (%) | |
| High-speed | 30 (77) |
| Dial-up/low-speed | 3 (8) |
| None | 6 (15) |
| Unknown | 3 |
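Table 1 does not state its denominators, but the figures appear to exclude patients with unknown values: the Internet access percentages match 30, 3, and 6 out of the 39 patients with known access, and the 23% without high-speed access quoted in the text is 9 of 39. The sketch below assumes that convention and is not the authors' analysis code; it recomputes those rows and the computer usage rows, which have no unknowns and so use all 42 patients.

```python
def percentages(counts: dict, exclude: tuple = ("Unknown",)) -> dict:
    """Whole-number percentages, leaving 'Unknown' out of the denominator (assumed convention)."""
    known = {k: v for k, v in counts.items() if k not in exclude}
    total = sum(known.values())
    return {k: round(100 * v / total) for k, v in known.items()}


internet = {"High-speed": 30, "Dial-up/low-speed": 3, "None": 6, "Unknown": 3}
computer = {"Regular": 34, "Occasional": 1, "Rare": 3, "Never": 4}

print(percentages(internet))  # 39 patients with known access
print(percentages(computer))  # all 42 patients
# Share without high-speed access, the 23% quoted in the text:
print(round(100 * (3 + 6) / 39))
```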

Patient and clinician quality improvement interviews

The semi-structured interviews conducted with patients and clinicians provided information regarding their perspectives on PatientViewpoint, its features, their experience, and how it fits into the clinical workflow. Table 2 summarizes the results for round 1 and round 2 of interviews for patients and for clinicians. Most patient feedback focused on streamlining survey notification and completion and on understanding the results, whereas most clinician feedback focused on integrating PRO results into their workflow and on improving the interpretability and usefulness of the results.

Table 2.

Patient and clinician feedback about PatientViewpoint functionality

| Topic | Feedback | Action |
|---|---|---|
| Round 1: patient feedback | | |
| E-mail reminders | Unclear that e-mail reminders are from Johns Hopkins; subject line unclear | Add “Hopkins” to e-mail Subject and From lines |
| | Some reminders going to spam | |
| | Reminder not generated if questionnaire assigned within 3 days of visit | Reminder generated immediately if within 3 days of visit |
| | Need more than 1 reminder | Reminder frequency increased to 3 times |
| Completing the survey | No warning that there is a character limit for entering free-text description of important issues | Warning added about limit on free-text characters |
| | Weak WiFi access in clinic | x |
| Viewing the results | Unclear that must hit Submit button to get graphical results | Submit button made clearer |
| | Meaning of numbers is unclear | x |
| | Unaware of option to get explanation of PRO domain | Added explanations on results page |
| | Unclear what yellow highlighting of scores means | |
| | Not obvious that trend in score over time is being displayed | |
| | Unclear if higher score indicates better or worse health | Added arrows to indicate direction better/worse |
| | Display on 0–100 scale difficult to interpret, especially if, e.g., a score of 50 is normal | |
| | Description of score is too technical (e.g., standard deviation) | Study |
| | Want comparison to normal scores for “someone like me” | x |
| | Want tailored description of own score | |
| | Scores should go in same direction for all scales | Study |
| Other comments | Want program to remember their password | x |
| | Want free-text option after each group of questions | x |
| Round 1: clinician feedback | | |
| Comments | Scores don’t really tell them anything new; patients bring up same issues during visit | – |
| | Time required for viewing is a barrier to use | – |
| | Want more lead time to have the survey completed before the visit day | x |
| | Want direct link from website to patient record in EHR without multiple logons | x |
| | Want direct link from email reminder to patient results | x |
| | Prefer to be given hard copy of results | Results can be printed in clinic |
| | Want to be prompted to review results | x |
| | Links to explanations are not obvious | Reference to explanations added on results page |
| | Want simpler language to explain meaning of scores | Study |
| | Scores should go in same direction for all scales | Study |
| | Want free-text comments to be more obvious | Moved text comments to top of page |
| | Like graphs and yellow highlighting of problematic scores | – |
| | Want patients with highlighted results to be highlighted | x |
| | Want customized list of own patients | x |
| | Want program to remember their login/password | x |
| | Results in EHR are more convenient, but PatientViewpoint website provides better information | – |
| | Want easy, one-click printing | x |
| System issues | Need alert when there is missing data | Added alert for missing data |
| | Need to be able to change frequency of completion for already assigned questionnaires | x |
| | Difficulty adjusting ordering frequency if previous survey in a series was already completed | x |
| Round 2: patient feedback | | |
| E-mail reminders | Emails work fine and liked emails | – |
| | Value link from email reminder to survey | – |
| | Adding “Hopkins” to From and Subject lines is helpful | – |
| | Some reminders still go to junk mail | – |
| Completing the survey | Able to complete with no problem | – |
| | Prefer paper to computer completion | – |
| | Appreciate that scrolling not needed within surveys | – |
| | Annoyed with passwords but like ability to change to own choice | – |
| | Survey helps identify issues that might not come up otherwise, reminds of things to discuss with clinician | – |
| | Free-text comment boxes useful | – |
| | Difficult to print results from home | – |
| Viewing the results | Value ability to track scores over time | – |
| | Rely on the arrows to determine which direction is better/worse; variation in direction of scores confusing to some | Study |
| | Yellow highlighting helpful | – |
| | Cutoff scores currently result in too many “yellow” highlighted scores | Study |
| | Use explanatory links infrequently | – |
| | Difficult knowing what the numbers mean | Study |
| Other comments | Several useful features of the system | – |
| | Value completing from home, because it addresses things that come up between visits which they may not necessarily bring up on their own during a visit | – |
| | If doctor does not look at it, feels like it is a waste of time | – |
| | Would like opportunity to comment at the end of each set of questions | x |
| | Would like descriptive comments right next to graphs | Reference to explanations added on results page |
| | Would like directional arrows to be more noticeable | Directional arrows made more prominent |
| | Defer to doctors for suggestions of what to do | – |
| | Want to see complete score history; not just last 5 scores | x |
| | Want more guidance to help navigate through completing the questionnaire, submitting comments, seeing scores | x |
| | Want to know when doctors see scores | x |
| Round 2: clinician feedback | | |
| Comments | Improved ability to find patients | Improved searching function |
| | Would like the graphs to be displayed in EHR | Defer pending Epic migration |
| | Would like to be able to link scores with other clinical information | |
| | Too much work to log on to PatientViewpoint website separately | x |
| | PRO results helpful as a reminder | – |
| | In many cases, scores confirm what they already know; provide verification about how patient is doing | – |
| | Leaves it up to the patient to bring up problems even if scores are low | – |
| | Only looks at the data if patient completed before coming to clinic | – |
| | Helps identify/track issues | – |
| | Like free-text comments | – |
| | Want description of meaning of scores put right next to graph | Study |
| | Like arrows but could be more prominent; should be included in description of what scores mean | Study |
| | Like graphic score report; would like “normal” range shaded | Study |
| | Would like more detailed referrals to specific resources for “What can I do” | x |
| | Would like smartphone app | x |
| System issues | May forget password | x |
| | Learn from the links early on, then do not use as often later | – |

– Not applicable, x not done

EHR electronic health record, PRO patient-reported outcome

Overall, patients provided primarily positive feedback about the system and identified several strengths, including being notified and completing the questionnaires at home, identifying issues that might otherwise be missed, and discussing them with their clinician. In the first round, patients complained that they did not receive sufficient notification about surveys and that they could not recognize that the surveys were being sent from the hospital. Patients valued the opportunity to submit free-text comments. They felt the value of the system was diminished if the clinician did not look at the results; some wanted to be notified when the provider viewed the scores. In viewing the results, patients valued the ability to track scores over time. They found it confusing that higher scores meant better health for some scales but worse health for others. The absolute value of the scores was not well understood. Patients felt that they could answer PRO questions around the time of an office visit, but recommended tailoring questions to the individual and providing more explanation of score meaning, including having higher scores consistently indicate either better or worse status. Even so, some patients thought it was the clinician’s responsibility to review the scores, discuss abnormal findings, and act on them.

A few clinicians were slow to adopt the system initially and had not used it prior to their first interview. However, once engaged, all reported that it could be helpful. Individual respondents wanted more functionality in different respects, but a universal concern was the impact of incorporating PROs into their workflow. Comments indicated that they had not integrated checking of PRO results into their clinic routine. Some described looking at patient results the night or morning before their appointments, with concerns that this would not be possible if patients completed surveys in clinic on the same day. One clinician noted that a patient was seen by a physician assistant, so she/he had not logged in to view the results. Another noted wanting to use appointment time for face-to-face interaction, rather than looking at the computer. E-mail reminders were convenient because clinicians already had an established routine for using them.

Most physicians preferred graphs to tables. Clinicians wanted clearer and more consistent indications for what scores mean, including if a score was in the normal range and whether a higher score indicates better or worse health. Having to log on to more than one site (e.g., both EHR and PatientViewpoint) was viewed as a barrier. Several clinicians stated that they would be willing to sacrifice the graphical presentation of results to avoid the hassle of logging in to an additional system. A few believed that usability and usefulness would improve once PRO results were more fully integrated into the electronic health record. They suggested a number of improvements to the presentation of PRO results and interpretation. However, they also reported that explanatory information presented with the patient results became less necessary over time as they became more accustomed to the system. In using PRO results, one clinician reported that despite being presented with the information, she/he still wanted the patient to mention it before acting. Another expressed distrust of the importance of the scores, preferring that the patient “identify the need directly to me rather than through a score on a survey, which may or may not indicate that it is an issue they would choose to spend time on with me. A number on a survey does not convey to me whether or not the patient really feels the issue is bothersome enough or needs to be addressed with me.”

Based on the feedback from round 1, several modifications were made to PatientViewpoint. These included adding “Hopkins” to the e-mail subject line and to the “From” alias of survey notifications, increasing the frequency of e-mail notifications sent to patients, making the “submit” button more obvious on the patient screens, adding a warning about the limit on free-text characters, and revising instructions. Some modifications, such as creating a direct link from the site to the EHR, were not made because of financial and system constraints. After round 2, additional planned improvements included (1) an improved patient searching function for clinicians, (2) placing the meaning of scores adjacent to the graph for each specific domain, (3) making arrows indicating the direction of “better” scores more prominent, (4) permitting a single login, (5) shading of the normal range on the graphical presentations of scores, (6) improving the “what can I do” function by providing specific referral information, (7) linking PRO scores to other patient data, and (8) developing a smartphone app. Some of these improvements were ultimately delayed pending migration of the health system to Epic. A number of issues remained as questions for further research and development, such as imposing uniform directionality on all scales to indicate better health and individualized tailoring in describing results.
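Uniform directionality was left as an open question. One possible approach is to reflect every “higher is worse” scale so that higher always means better. The sketch below assumes a hypothetical domain-to-direction map (`HIGHER_IS_WORSE`) and illustrative function names; it is not how PatientViewpoint scores PROs. It relies on two scoring conventions: EORTC QLQ-C30 symptom scales run 0–100 with higher meaning more symptoms, and PROMIS T-scores have a mean of 50 and SD of 10 with higher meaning more of the measured concept (e.g., more fatigue).

```python
# Hypothetical direction map: True means a higher raw score indicates worse health
# (for example, EORTC QLQ-C30 symptom scales or a PROMIS fatigue T-score).
HIGHER_IS_WORSE = {
    "Physical function": False,
    "Emotional function": False,
    "Fatigue": True,
    "Pain": True,
}


def reorient(domain: str, score: float) -> float:
    """Return a score on which higher always means better health.

    Reflecting as 100 - x maps a 0-100 scale onto itself, and maps a T-score
    (mean 50, SD 10) to one with the same mean and spread.
    """
    return 100 - score if HIGHER_IS_WORSE[domain] else score


def arrow_label(domain: str) -> str:
    """Direction hint for the raw score, like the arrows added after round 1."""
    return "lower is better" if HIGHER_IS_WORSE[domain] else "higher is better"


if __name__ == "__main__":
    for domain, raw in [("Physical function", 80), ("Fatigue", 65)]:
        print(f"{domain}: raw {raw} ({arrow_label(domain)}), reoriented {reorient(domain, raw)}")
```

A trade-off is that reflected scores no longer match the published scoring conventions of the original instruments, which complicates comparison with the literature; direction arrows, as added to PatientViewpoint, avoid that at the cost of asking users to read the arrows.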

Discussion

We conducted a longitudinal pilot test of PatientViewpoint, a webtool to collect patient-reported outcome measures and deliver them to electronic health records. We improved the tool iteratively using a quality improvement approach. We conducted the research in a practice setting in the context of a larger clinical trial. The results informed improvements to our system and have broader implications for designing PRO data collection and integrating it into clinical settings.

Patients liked several features of the system, including the ability to complete questionnaires at home, identify issues of specific importance, and flag these issues for discussion with their doctor. Patients appreciated being able to view their own results and valued being able to track scores over time. However, they recommended tailoring questions more to the goals and priorities of individual patients. Some were confused by conventions in presenting and scoring of PRO measures. In their opinion, the value was greatly diminished if the clinician did not look at the results. While some appreciated the reminder to bring up issues with their provider, others fell back on the clinician to evaluate their responses, bring them up in the visit, and act on them.

Clinicians also provided primarily positive feedback about the system and found it helpful in some cases, confirmatory and reassuring in others. They valued graphical presentation, but their greatest concern was the impact of incorporating PROs into their workflow. Unsurprisingly, they reported that the informational links (e.g., “What is this?” and “Score Meaning”) became less necessary as they grew accustomed to using the system. Interestingly, one physician reported that despite being presented with information on PROs, she/he still wanted the patient to mention it before acting. Because many patients rely on their physicians to raise such issues, this suggests that simply having the information does not necessarily lead to action. It also indicates that further education of both patients and providers in how to apply PROs in clinical practice would be useful.

The study shows how patient and clinician feedback translated into improvements to the system; for example, it suggests that information about the meaning of scores and guidance on action for scores indicating potential needs can help patients and clinicians. Our method of rapid-cycle improvements based on feedback from patients and clinicians was useful for identifying refinements that improved PatientViewpoint’s feasibility and value. These findings and methods should be useful to others conducting related efforts to integrate PRO measurement into ambulatory clinical practice and within EHRs [4]. The study also has limitations. The patient sample was primarily white and highly educated, which could limit the generalizability of the findings.

Newer systems for PRO data collection integrated with the EHR are being developed. Since the completion of this study, Johns Hopkins Medicine has adopted the Epic EHR, which includes MyChart, a tethered patient portal with the capacity for patients to complete PRO measures [1, 8, 26]. There are several PRO measures built into the 2012 release of Epic, including the PROMIS static adult and child short forms [23, 27] and the PHQ-9 depression measure [26, 28]. Wagner and colleagues have published results using the PROMIS measures for symptom screening in ambulatory cancer care [29]. Other PRO measures can be built into the system. These PROs can be ordered by physicians or others, and the results are integrated into the EpicCare health record viewed by clinicians. The results can be viewed numerically and graphically and can be plotted over time alongside other data elements. Because of this transition to Epic, the planned complete linkage of PatientViewpoint with the Johns Hopkins homegrown “Electronic Patient Record” was cancelled. This reduced the capacity of the system tested to achieve all of its planned functionality.

It is helpful to interpret the results in the context of the study’s design. In particular, we applied a quality improvement approach using a PDSA model. The results capture a range of feedback from 42 patients and 12 clinicians but were not intended to establish the frequency or importance of specific issues, as formal quantitative or qualitative research might. Strengths of the study and the PatientViewpoint webtool include stakeholder and expert input into the features and design from the inception of the project and the capacity for integration with potentially any electronic health record. These advantages were sufficient that the Breast Cancer Program at Johns Hopkins is currently using PatientViewpoint to collect PROs as part of a registry of patients on hormonal therapy, a diet intervention study, and a recently funded Centers for Disease Control and Prevention program to facilitate management of breast cancer survivors diagnosed at <45 years of age.

Implications

Since we began our work, several dedicated patient portals linked to EHRs have become available, the most prominent being Epic MyChart, which can be used to collect PRO data. However, the majority of EHRs do not have their own “tethered” portal for PRO data collection. This suggests that there will be a need for PRO data collection systems like PatientViewpoint that can be “bolted on” to these EHRs. Examples include the EHRs currently used in many small ambulatory care practices (e.g., eClinicalWorks and NextGen [30, 31]). Even for larger EHRs, there may be instances, particularly in research, where it is more efficient to build PRO questionnaires outside of the EHR. In the US Department of Veterans Affairs, the largest managed care system in the USA, the My HealtheVet patient portal allows authenticated users to enter information about their health history and health events, use secure messaging to contact their physician, and use health management tools. Although My HealtheVet is tethered to the EHR, its linkage does not yet include PROs [32, 33]. PatientViewpoint was designed so that an interface to any EHR is straightforward to create.

Although some of the findings are specific to our system, the project offers more general lessons that organizations might apply in developing their own systems. Patient portals help engage patients in their health and health care and may make them more satisfied members of their own health care team [34]. To promote engagement, systems should give patients multiple options to complete questionnaires, view their own results, and identify individual priorities. For the results to be useful, systems should be designed to encourage clinicians to view them, which requires presenting the data in an interpretable and actionable manner. Optimizing the integration of PRO measures within the clinical workflow is critical and may benefit from dedicated work with clinical users to identify and overcome barriers. Several of these findings are supported by other published research [15].

This study also raised a number of questions, including some about the presentation of PRO results to patients and clinicians. We are currently conducting a study to evaluate the presentation of PRO data to patients and clinicians, with the goal of improving understanding and application of the data in practice [35].

Acknowledgments

The PatientViewpoint Scientific Advisory Board includes Neil Aaronson, PhD (Netherlands Cancer Institute, Amsterdam, Netherlands); Michael Brundage, MD, MSc (Queen’s University, Kingston, ON, Canada); Carolyn Gotay, PhD (University of British Columbia, Vancouver, BC, Canada); Michele Halyard, MD (Mayo Clinic, Scottsdale, AZ, USA); Denise Hynes, RN, MPH, PhD (Edward Hines, Jr. VA Hospital, Hines, IL, USA, and University of Illinois, Chicago, IL, USA); J.B. Jones, MBA, PhD (Geisinger Health System, Danville, PA, USA); Susan Yount (Northwestern University, Chicago, IL, USA); and Galina Velikova, BMBS(MD), PhD (St James’s Institute of Oncology, Leeds, UK). We also thank Michelle Campbell, Ray Hamann, and colleagues for their technical development of PatientViewpoint. Finally, we are most grateful to the patients and clinicians who participated in this study.

Financial support PatientViewpoint’s development was supported by the National Cancer Institute (1R21CA134805-01A1; 1R21CA113223-01). The American Cancer Society provided the support for the randomized controlled trial (MRSG-08-011-01-CPPB). The funding sources had no role in study design, data collection, analysis, interpretation, writing, or decision to submit the manuscript for publication.

Appendix

Continuous quality improvement (QI) interview script (PATIENTS)

We would like for you to provide some feedback about how the PatientViewpoint system works for you and could be improved. The information that you give us today will contribute to the ongoing improvement of the PatientViewpoint website. Please provide your honest assessment.

Can you show me how you go through the process of completing the survey using the PatientViewpoint website?

- Email reminders

  - Have you received the email reminders?

  - Are the email reminders useful? Do you use the reminders to connect to the survey?

  - Is there anything in particular that you like about the reminders?

  - Is there anything that you think could be better with the email reminders?

- Completing the survey

  - Show me how you log onto the PatientViewpoint website and complete the survey.

  - What aspects of the process do you find helpful?

  - What problems have you encountered when completing the process?

- Viewing the results

  - What do you like about the presentation of the results?

  - Is there anything in the results section that is hard to understand?

  - What do you think could be done to improve the presentation of the survey results?

- Other suggestions and comments

Continuous quality improvement (QI) interview script (CLINICIANS)

We are interested in your feedback about how the PatientViewpoint system works and how it might be improved. The information you give to us today will contribute to the ongoing improvement of our web system. Please provide your honest assessment.

Have you used PatientViewpoint (PVP) in a patient encounter in the past month?

Tell me about a patient encounter where you used PatientViewpoint:

Tell me about a patient encounter where you had the opportunity to use PatientViewpoint but DID NOT:

Tell me about your most recent visit with your patient Mr./Ms. (insert name of most recently seen patient enrolled in the study). What happened? What was done?

Tell me how the PatientViewpoint web system fits in with what you do in your clinic. When do you view the survey results for your patients? What is your preferred way to view the results (website, EPR or printout)?

Now I would like for you to walk me through how you use the website and the information it provides during the patient encounter.

Notes and observations

Which aspects of the system do you find informative/helpful?

What barriers do you perceive in using the system?

Are there any other features that you would like PatientViewpoint to have?

Do you have any other comments?

Footnotes

Conflict of interest The authors have no other relevant conflicts of interest.

References

- 1. Wu AW, Jensen RE, Salzberg C, Snyder C. Advances in the use of patient reported outcome measures in electronic health records, including case studies. PCORI National Workshop to Advance the Use of PRO Measures in Electronic Health Records; 2013.

- 2. Snyder CF, Aaronson NK. Use of patient-reported outcomes in clinical practice. Lancet. 2009;374(9687):369–70. doi: 10.1016/S0140-6736(09)61400-8.

- 3. Wu AW, Bradford AN, Velanovich V, Sprangers MA, Brundage M, Snyder C. Clinician’s checklist for reading and using an article about patient-reported outcomes. Mayo Clin Proc. 2014;89(5):653–61. doi: 10.1016/j.mayocp.2014.01.017.

- 4. Snyder CF, Aaronson NK, Choucair AK, Elliott TE, Greenhalgh J, Halyard MY, et al. Implementing patient-reported outcomes assessment in clinical practice: a review of the options and considerations. Qual Life Res. 2012;21(8):1305–14. doi: 10.1007/s11136-011-0054-x.

- 5. Acquadro C, Berzon R, Dubois D, Leidy NK, Marquis P, Revicki D, et al. Incorporating the patient’s perspective into drug development and communication: an ad hoc task force report of the patient-reported outcomes (PRO) harmonization group meeting at the Food and Drug Administration, February 16, 2001. Value Health. 2003;6(5):522–31. doi: 10.1046/j.1524-4733.2003.65309.x.

- 6. Snyder CF, Wu AW, Miller RS, Jensen RE, Bantug ET, Wolff AC. The role of informatics in promoting patient-centered care. Cancer J. 2011;17(4):211–8. doi: 10.1097/PPO.0b013e318225ff89.

- 7. Basch E, Abernethy AP. Supporting clinical practice decisions with real-time patient-reported outcomes. J Clin Oncol. 2011;29:954–6. doi: 10.1200/JCO.2010.33.2668.

- 8. Wu AW, Kharrazi H, Boulware LE, Snyder CF. Measure once, cut twice—adding patient-reported outcome measures to the electronic health record for comparative effectiveness research. J Clin Epidemiol. 2013;66(8 Suppl):S12–20. doi: 10.1016/j.jclinepi.2013.04.005.

- 9. Detmar SB, Aaronson NK. Quality of life assessment in daily clinical oncology practice: a feasibility study. Eur J Cancer. 1998;34:1181–6. doi: 10.1016/s0959-8049(98)00018-5.

- 10. Detmar SB, Muller MJ, Schornagel JH, et al. Health-related quality-of-life assessments and patient-physician communications. A randomized clinical trial. JAMA. 2002;288:3027–34. doi: 10.1001/jama.288.23.3027.

- 11. Velikova G, Booth L, Smith AB, Brown PM, Lynch P, Brown JM, et al. Measuring quality of life in routine oncology practice improves communication and patient well-being: a randomized controlled trial. J Clin Oncol. 2004;22:714–24. doi: 10.1200/JCO.2004.06.078.

- 12. Rose M, Bezjak A. Logistics of collecting patient-reported outcomes (PROs) in clinical practice: an overview and practical examples. Qual Life Res. 2009;18:125–36. doi: 10.1007/s11136-008-9436-0.

- 13. Aaronson N, Elliott T, Greenhalgh J, Halyard M, Hess R, Miller D, Reeve B, Santana M, Snyder C. User’s guide to implementing patient-reported outcomes assessment in clinical practice. Version 2. ISOQOL; 2015. http://www.isoqol.org/UserFiles/2015UsersGuide-Version2.pdf. Accessed 16 Feb 2015.

- 14. Jones JB, Snyder CF, Wu AW. Issues in the design of Internet-based systems for collecting patient-reported outcomes. Qual Life Res. 2007;16:1407–17. doi: 10.1007/s11136-007-9235-z.

- 15. Jensen RE, Snyder CF, Abernethy AP, Basch E, Potosky AL, Roberts AC, et al. Review of electronic patient-reported outcomes systems used in cancer clinical care. J Oncol Pract. 2014;10(4):e215–22. doi: 10.1200/JOP.2013.001067.

- 16. Snyder CF, Jensen R, Courtin SO, et al. PatientViewpoint: a website for patient-reported outcomes assessment. Qual Life Res. 2009;18:793–800. doi: 10.1007/s11136-009-9497-8.

- 17. Web-based system helps doctors, patients communicate. https://www.youtube.com/watch?v=S-r4ykaUhfU. Accessed 16 Feb 2015.

- 18. Snyder CF, Blackford AL, Wolff AC, Carducci MA, Herman JM, Wu AW, et al. Feasibility and value of PatientViewpoint: a web system for patient-reported outcomes assessment in clinical practice. Psychooncology. 2013;22(4):895–901. doi: 10.1002/pon.3087.

- 19. Snyder CF, Herman JM, White SM, Luber BS, Blackford AL, Carducci MA, et al. When using patient-reported outcomes in clinical practice, the measure matters: a randomized controlled trial. J Oncol Pract. 2014;10(5):e299–306. doi: 10.1200/JOP.2014.001413.

- 20. Langley GJ, Moen R, Nolan KM, Nolan TW, Norman CL, Provost LP. The improvement guide: a practical approach to enhancing organizational performance. 2nd ed. Hoboken: Jossey-Bass; 2009.

- 21. Aaronson NK, Ahmedzai S, Bergman B, Bullinger M, Cull A, Duez NJ, et al. The European Organization for Research and Treatment of Cancer QLQ-C30: a quality-of-life instrument for use in international clinical trials in oncology. J Natl Cancer Inst. 1993;85(5):365–76. doi: 10.1093/jnci/85.5.365.

- 22. Sanson-Fisher R, Girgis A, Boyes A, et al. The unmet supportive care needs of patients with cancer. Cancer. 2000;88:226–37. doi: 10.1002/(sici)1097-0142(20000101)88:1<226::aid-cncr30>3.3.co;2-g.

- 23. Cella D, Gershon R, Lai JS, et al. The future of outcomes measurement: item banking, tailored short-forms, and computerized adaptive assessment. Qual Life Res. 2007;16(Suppl 1):133–41. doi: 10.1007/s11136-007-9204-6.

- 24. Shewhart WA. Statistical method from the viewpoint of quality control. New York: Dover; 1939.

- 25. Cleghorn GD, Headrick LA. The PDSA cycle at the core of learning in health professions education. Jt Comm J Qual Improv. 1996;22(3):206–12. doi: 10.1016/s1070-3241(16)30223-1.

- 26. Jensen RE, Rothrock NE, DeWitt EM, Spiegel B, Tucker CA, Crane HM, et al. The role of technical advances in the adoption and integration of patient-reported outcomes in clinical care. Med Care. 2015;53(2):153–9. doi: 10.1097/MLR.0000000000000289.

- 27. Varni JW, Magnus B, Stucky BD, Liu Y, Quinn H, Thissen D, et al. Psychometric properties of the PROMIS® pediatric scales: precision, stability, and comparison of different scoring and administration options. Qual Life Res. 2014;23(4):1233–43. doi: 10.1007/s11136-013-0544-0.

- 28. Kroenke K, Spitzer RL, Williams JB. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001;16(9):606–13. doi: 10.1046/j.1525-1497.2001.016009606.x.

- 29. Wagner LI, Schink J, Bass M, Patel S, Diaz MV, Rothrock N, et al. Bringing PROMIS to practice: brief and precise symptom screening in ambulatory cancer care. Cancer. 2015;121(6):927–34. doi: 10.1002/cncr.29104.

- 30. eClinicalWorks: improving healthcare together. www.eClinicalWorks.com. Accessed 1 May 2015.

- 31. NEXTGEN® Healthcare. www.nextgen.com. Accessed 1 May 2015.

- 32. Nazi KM, Hogan TP, Wagner TH, et al. Embracing a health services research perspective on personal health records: lessons learned from the VA My HealtheVet system. J Gen Intern Med. 2010;25(Suppl 1):62–7. doi: 10.1007/s11606-009-1114-6.

- 33. Goldzweig CL, Towfigh AA, Paige NM, Orshansky G, Haggstrom DA, Beroes JM, Miake-Lye IM, Shekelle PG. Systematic review: secure messaging between providers and patients, and patients’ access to their own medical record: evidence on health outcomes, satisfaction, efficiency and attitudes. VA-ESP Project #05-226. 2012. http://www.hsrd.research.va.gov/publications/esp/myhealthevetpdf. Accessed 15 May 2015.

- 34. Kruse CS, Bolton K, Freriks G. The effect of patient portals on quality outcomes and its implications to meaningful use: a systematic review. J Med Internet Res. 2015;17(2):e44. doi: 10.2196/jmir.3171.

- 35. Brundage MD, Smith K, Little EA, Bantug ET, Snyder CF; PRO Data Presentation Stakeholder Advisory Board. Communicating patient-reported outcome scores using graphic formats: results from a mixed-methods evaluation. Qual Life Res. 2015;24:2457–72. doi: 10.1007/s11136-015-0974-y.