Abstract

We propose a high-dimensional classification method that involves nonparametric feature augmentation. Knowing that marginal density ratios are the most powerful univariate classifiers, we use the ratio estimates to transform the original feature measurements. Subsequently, penalized logistic regression is invoked, taking as input the newly transformed or augmented features. This procedure trains models equipped with local complexity and global simplicity, thereby avoiding the curse of dimensionality while creating a flexible nonlinear decision boundary. The resulting method is called Feature Augmentation via Nonparametrics and Selection (FANS). We motivate FANS by generalizing the Naive Bayes model, writing the log ratio of joint densities as a linear combination of those of marginal densities. It is related to generalized additive models, but has better interpretability and computability. Risk bounds are developed for FANS. In numerical studies, FANS is compared with competing methods to provide guidance on its best application domains. Real data analysis demonstrates that FANS performs very competitively on benchmark email spam and gene expression data sets. Moreover, FANS admits an extremely fast implementation via parallel computing.

Keywords: density estimation, classification, high dimensional space, nonlinear decision boundary, feature augmentation, feature selection, parallel computing

1 Introduction

Classification aims to identify the category to which a new observation belongs based on its feature measurements. Applications include spam detection, image recognition, and disease classification (using high-throughput data such as microarray gene expression and SNPs). Well-known classification methods include Fisher’s linear discriminant analysis (LDA), logistic regression, Naive Bayes, k-nearest neighbors, neural networks, and many others. All these methods can perform well in classical low-dimensional settings, in which the number of features is much smaller than the sample size. However, in many contemporary applications, the number of features p is large compared with the sample size n. For instance, the dimensionality p of microarray data is frequently in the thousands or beyond, while the sample size n is typically on the order of tens. Besides computational issues, the central conflict in the high-dimensional setup is that the limited data cannot support the model complexity. In other words, the “variance” of conventional models is high in such settings, and even simple models such as LDA need to be regularized. We refer to Hastie et al. (2009) and Bühlmann and van de Geer (2011) for overviews of the statistical challenges associated with high dimensionality.

In this paper, we propose a classification procedure, FANS (Feature Augmentation via Nonparametrics and Selection). Before introducing the algorithm, we first detail its motivation. Suppose feature measurements and responses are coded by a pair of random variables (X, Y), where X ∈ 𝒳 ⊂ ℝᵖ denotes the features and Y ∈ {0, 1} is the binary response. Recall that a classifier h is a data-dependent mapping from the feature space to the labels. Classifiers are usually constructed to minimize the risk P(h(X) ≠ Y).

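Denote by g and f the class conditional densities for class 0 and class 1, respectively, i.e., (X|Y = 0) ~ g and (X|Y = 1) ~ f. It can be shown that the Bayes rule is 𝟙(r(x) ≥ 1/2), where 𝟙(·) is the indicator function and r(x) = E(Y|X = x). Let π = P(Y = 1); then

$$ r(x) = \frac{\pi f(x)}{\pi f(x) + (1 - \pi)\, g(x)}. $$

Assume for simplicity that π = 1/2; then the oracle decision boundary is

$$ \{x : f(x)/g(x) = 1\} = \{x : \log f(x) - \log g(x) = 0\}. $$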

Denote by g1, ⋯, gp the marginals of g, and by f1, ⋯, fp those of f. Naive Bayes models assume that the features are conditionally independent given the class label, i.e.,

$$ f(x) = \prod_{j=1}^{p} f_j(x_j), \qquad g(x) = \prod_{j=1}^{p} g_j(x_j). \tag{1.1} $$
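Taking logarithms under the independence assumption (1.1) shows how Naive Bayes fits into the family of boundaries considered below: the joint log density ratio splits into a sum of marginal log density ratios, so with π = 1/2 the Naive Bayes boundary is as follows (the label 𝒟_NB is introduced here only for this short derivation):

$$ \log\frac{f(x)}{g(x)} = \sum_{j=1}^{p} \log\frac{f_j(x_j)}{g_j(x_j)}, \qquad \mathcal{D}_{\mathrm{NB}} = \Big\{x : \sum_{j=1}^{p} \log\frac{f_j(x_j)}{g_j(x_j)} = 0\Big\}. $$

In other words, Naive Bayes fixes every coefficient of the marginal log density ratios at 1, which is the starting point for the set (1.2) below.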

Naive Bayes is a simple approach, but it is useful in many high-dimensional settings. Taking a two-class Gaussian model with a common covariance matrix, Bickel and Levina (2004) showed that naively applying Fisher’s discriminant rule performs poorly due to diverging spectra. In addition, the authors argued that the independence rule, which ignores the covariance structure, performs better than Fisher’s rule in some high-dimensional settings. However, correlation among features is usually an essential characteristic of data, and it can help classification under suitable models and with a relative abundance of samples. Examples in bioinformatics can be found in Ackermann and Strimmer (2009). Recently, Fan et al. (2012) showed that the independence assumption can lead to a huge loss in classification power when correlation prevails, and proposed the Regularized Optimal Affine Discriminant (ROAD). ROAD is a linear plug-in rule that directly targets the classification error, and it takes advantage of the unregularized pooled sample covariance matrix.

Relaxing the two-class Gaussian assumption in parametric Naive Bayes gives a general Naive Bayes formulation such as (1.1). However, this model also fails to capture correlation, or dependence among features in general. On the other hand, the marginal density ratios are the most powerful univariate classifiers, and using them as features in multivariate classifiers can yield very powerful procedures. This consideration motivates the following question: are there advantages to combining these transformed features rather than the untransformed ones? More precisely, we would like to learn a decision boundary from the following set

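$$ \mathcal{D} = \Big\{x : \beta_0 + \beta_1 \log\frac{f_1(x_1)}{g_1(x_1)} + \cdots + \beta_p \log\frac{f_p(x_p)}{g_p(x_p)} = 0,\ (\beta_0, \ldots, \beta_p)^T \in \mathbb{R}^{p+1}\Big\}. \tag{1.2} $$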

(All coefficients are 1 in the Naive Bayes model, so optimization is not necessary.) For univariate problems, properly thresholding the marginal density ratio delivers the best classifier. In this sense, the marginal density ratios can be regarded as the best transforms of future measurements, and (1.2) is an effort towards combining those most powerful univariate transforms to build more powerful classifiers.

This is similar in spirit to sure independence screening (SIS) in Fan and Lv (2008), where the best marginal predictors are used as probes for their utility in the joint model. By combining these marginal density ratios and optimizing over their coefficients βj’s, we wish to build a good classifier that takes feature dependence into account. Note that although our target boundary 𝒟 is not linear in the original features, it is linear in the parameters βj’s. Therefore, any linear classifier can be applied to the transformed variables. For example, we can use logistic regression, one of the most popular linear classification rules. Other choices, such as SVM with a linear kernel, are good alternatives, but we use logistic regression for the rest of the discussion.

Recall that logistic regression models the log odds by

$$ \log\frac{P(Y=1 \mid X=x)}{P(Y=0 \mid X=x)} = \beta_0 + \beta_1 x_1 + \cdots + \beta_p x_p, $$

where the βj’s are estimated by maximum likelihood. Note that, without explicitly modeling correlations, logistic regression accounts for the features’ joint effects and learns a good linear combination of the features as the decision boundary. Its performance is similar to that of LDA, but both models can only capture decision boundaries that are linear in the original features.

On the other hand, logistic regression can serve as a building block for the more flexible FANS algorithm. Concretely, if we know the marginal densities fj and gj and run logistic regression on the transformed features {log(fj(xj)/gj(xj))}, we create a decision boundary that is nonlinear in the original features. The use of these transformed features is easily interpretable: one naturally combines the “most powerful” univariate transforms (building blocks of univariate Bayes rules) {log(fj(xj)/gj(xj))} rather than the original measurements. In special cases such as the two-class Gaussian model with a common covariance matrix, the transformed features are affine functions of the original ones. Some caution should be taken: if fj = gj for some j, i.e., the marginal densities for feature j are exactly the same, this feature will not contribute to the classification. Such a deletion might lose power, because features with no marginal contribution on their own might boost classification performance when used jointly with other features. In view of this defect, a variant of FANS augments the transformed features with the original ones.
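To see why the Gaussian case adds nothing, take the j-th marginals to be Gaussian with a common variance, fj = N(μ1j, σj²) and gj = N(μ0j, σj²); the symbols μ1j, μ0j and σj² are introduced here only for this check. The quadratic terms cancel:

$$ \log\frac{f_j(x_j)}{g_j(x_j)} = \frac{(x_j - \mu_{0j})^2 - (x_j - \mu_{1j})^2}{2\sigma_j^2} = \frac{\mu_{1j} - \mu_{0j}}{\sigma_j^2}\, x_j - \frac{\mu_{1j}^2 - \mu_{0j}^2}{2\sigma_j^2}. $$

So each transformed feature is an affine function of xj, and (when μ1j ≠ μ0j) logistic regression on the transformed features spans the same family of decision boundaries as logistic regression on the original features.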

Since marginal densities fj and gj are unknown, we need to first estimate them, and then run a penalized logistic regression (PLR) on the estimated transforms. Note that some regularization (e.g., penalization) is necessary to reduce model complexity in the high dimensional paradigm. This two-step classification rule of feature augmentation via nonparametrics and selection will be called FANS for short. Precise algorithmic implementation of FANS is described in the next section. Numerical results show that our new method excels in many scenarios, in particular when no linear decision boundary can separate the data well.

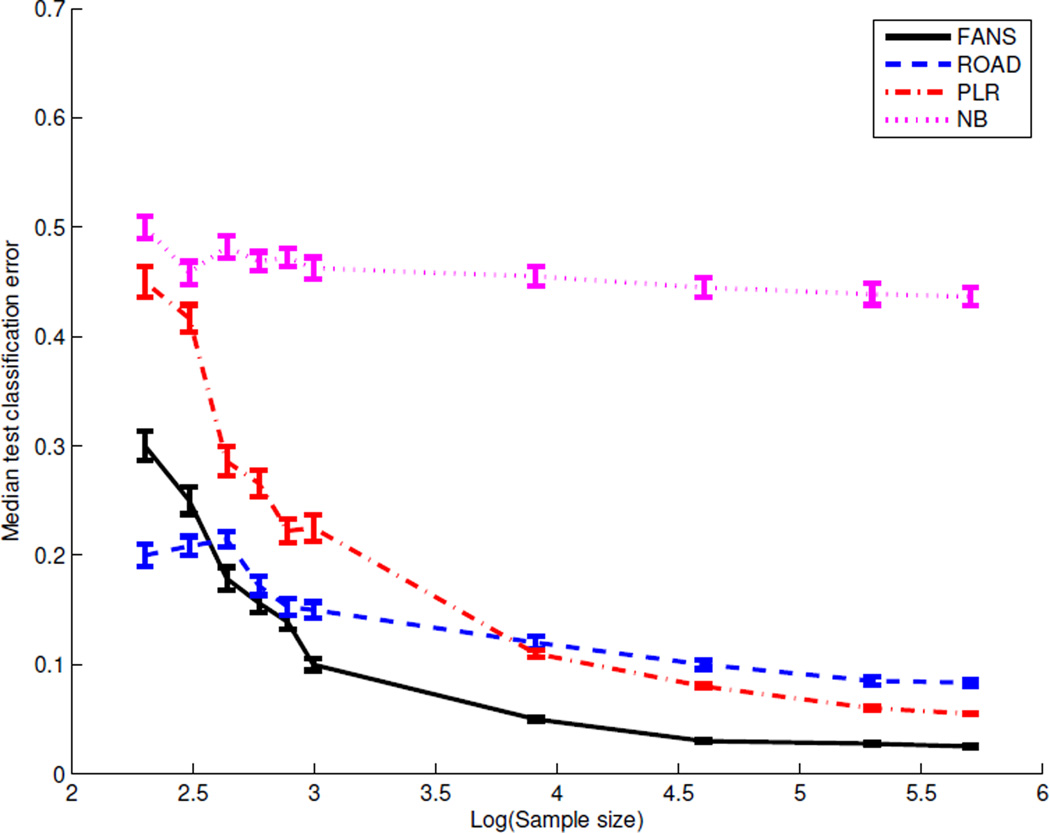

To understand where FANS stands relative to Naive Bayes (NB), penalized logistic regression (PLR), and the regularized optimal affine discriminant (ROAD), we present a simple simulation example in which one class follows a multivariate Gaussian distribution and the other a componentwise mixture of two multivariate Gaussian distributions:

Class 0: ,

Class 1: , where p = 1000, ◦ is the element-wise product between matrices, Σii = 1 for all i = 1, ⋯, p, Σij = 0.5 for all i ≠ j, and w = (w1, ⋯, wp)T, where w1, ⋯, wp are i.i.d. Bernoulli(0.5).

Figure 1 shows the median test classification error over 100 repetitions as a function of the training sample size n, with standard errors shown as error bars. Increasing the sample size does not help NB improve its median classification error, because the NB model is severely biased in the presence of significant correlation. It is interesting to compare PLR with ROAD. ROAD is more efficient when the sample size is small, but PLR eventually performs better once the sample size is large enough. This is not surprising, because the underlying true model is not a two-class Gaussian model with a common covariance matrix, so the less “biased” PLR beats ROAD on larger samples. Nevertheless, even though ROAD uses a misspecified model, it still benefits from its specific model assumption on small samples. Finally, since the oracle decision boundary in this example is nonlinear, the newly proposed FANS performs significantly better than the other methods when the sample size is reasonably large. This suggests that FANS does well as long as there are enough data to construct accurate marginal density estimates. Note also that ROAD beats FANS when the training sample size is extremely small. Figure 1 therefore shows that, even under the same data distribution, the best method in practice largely depends on the available sample size.

Figure 1.

The median test errors for Gaussian vs. mixture of Gaussians when the training data size varies. Standard errors are shown as error bars.

A popular extension of logistic regression and close relative to FANS is the additive logistic regression, which belongs to the generalized additive model (Hastie and Tibshirani, 1990). Additive logistic regression allows (smooth) nonparametric feature transformations to appear in the decision boundary by modeling

$$ \log\frac{P(Y=1 \mid X=x)}{P(Y=0 \mid X=x)} = \beta_0 + h_1(x_1) + \cdots + h_p(x_p), \tag{1.3} $$

where the hj’s are smooth functions. This additive decision boundary is very general and includes FANS and logistic regression as special cases. It works well in small-p-large-n scenarios, and its penalized versions adapt to high-dimensional settings. We will compare FANS with penalized additive logistic regression in numerical studies. The major drawback of additive logistic regression (the generalized additive model) is the heavy computational cost (e.g., of the backfitting algorithm) of searching for the transformation functions hj(·). Moreover, the available algorithms, e.g., the algorithm for penGAM (Meier et al., 2009), fail to give an estimate when the sample size is very small. Compared with FANS, the generalized additive model uses a factor of Kn more parameters, where Kn is the number of knots used to approximate each additive component hj. While this reduces possible biases relative to FANS, it increases the variance of the estimates and the computational cost (see Table 2). Moreover, FANS admits a nice interpretation as an optimal combination of the optimal building blocks of univariate classifiers.

Table 2.

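Computation time (in seconds) comparison for FANS, SVM, ROAD and penGAM, with parallel computing applied. In the FANS column only the L repetitions run in parallel; in the FANS(para) column the marginal density estimates in S2 are also parallelized within each node. Standard errors are in parentheses.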

| Ex(ρ) | FANS | FANS(para) | SVM | ROAD | penGAM |
|---|---|---|---|---|---|
| 1(0) | 12.0(2.6) | 3.8(0.2) | 59.4(12.8) | 99.1(98.2) | 243.7(151.8) |
| 1(0.5) | 12.7(2.1) | 3.5(0.2) | 81.3(19.2) | 100.7(89.3) | 325.8(194.3) |
| 2(0.5) | 16.0(3.1) | 4.0(0.2) | 77.6(18.1) | 106.8(90.7) | 978.0(685.7) |
| 2(0.9) | 22.0(4.6) | 4.5(0.3) | 75.7(17.8) | 98.3(83.9) | 3451.1(3040.2) |
| 3(0) | 12.1(2.1) | 3.4(0.2) | 152.1(27.4) | 96.3(68.8) | 254.6(130.0) |
| 3(0.5) | 11.9(2.0) | 3.4(0.2) | 342.1(58.0) | 95.9(74.8) | 298.7(167.4) |
| 4 | 22.4(3.9) | 6.6(0.4) | 264.3(45.0) | 75.1(54.0) | 4811.9(3991.7) |

Besides the aforementioned references, there is a huge literature on high-dimensional classification. Examples include principal component analysis in Bair et al. (2006) and Zou et al. (2006), partial least squares in Nguyen and Rocke (2002), Huang (2003) and Boulesteix (2004), and sliced inverse regression in Li (1991) and Antoniadis et al. (2003). Recently, there has been a surge of interest in extending linear discriminant analysis to high-dimensional settings, including Guo et al. (2007), Wu et al. (2009), Clemmensen et al. (2011), Shao et al. (2011), Cai and Liu (2011), Mai et al. (2012) and Witten and Tibshirani (2012).

The rest of the paper is organized as follows. Section 2 introduces the FANS algorithm. Section 3 is dedicated to simulation studies and real data analysis. Theoretical results are presented in Section 4. We conclude with a discussion in Section 5. Longer proofs and technical results are relegated to the Appendix.

2 Algorithm

In this section, an efficient algorithm (S1 – S5) for FANS will be introduced. We will also describe a variant of FANS (FANS2), which uses the original features in addition to the transformed ones.

2.1 FANS and its Running Time Bound

- S1. Given n pairs of observations D = {(Xi, Yi), i = 1, ⋯, n}, randomly split the data into two parts L times: Dl = (Dl1, Dl2), l = 1, ⋯, L.

- S2. On each Dl1, l ∈ {1, ⋯, L}, apply kernel density estimation to each feature, separately for the class 1 and class 0 observations, and denote the estimates by f̂ = (f̂1, ⋯, f̂p)T and ĝ = (ĝ1, ⋯, ĝp)T.

- S3. Calculate the transformed observations Ẑi = Zf̂, ĝ(Xi), where Ẑij = log f̂j(Xij) − log ĝj(Xij), for each i ∈ Dl2 and j ∈ {1, ⋯, p}.

- S4. Fit an L1-penalized logistic regression to the transformed data {(Ẑi, Yi), i ∈ Dl2}, using cross-validation to choose the penalty parameter. For a new observation x, compute the transformed features log f̂j(xj) − log ĝj(xj), j = 1, …, p, and plug them into the fitted logistic function to obtain the predicted probability pl.

- S5. Repeat (S2)–(S4) for l = 1, ⋯, L, use the average of p1, ⋯, pL as the final prediction, and assign the observation x to class 1 if this average is at least 1/2, and to class 0 otherwise.
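Steps S1–S5 can be sketched in Python with NumPy as below. This is a minimal illustration under our own assumptions, not the authors' implementation: S2 uses a Gaussian kernel with Silverman's rule-of-thumb bandwidth, S4 replaces the cross-validated glmnet fit by a plain proximal-gradient (ISTA) L1-logistic fit at a fixed, hypothetical penalty `lam`, the splits are simple random halves, and L is kept small. All function names are hypothetical.

```python
import numpy as np

EPS = 1e-2  # Winsorization threshold for the density estimates (Remark 1)


def kde_1d(train, points, h):
    """Gaussian-kernel density estimate built from `train`, evaluated at `points`."""
    u = (points[:, None] - train[None, :]) / h
    return np.exp(-0.5 * u**2).mean(axis=1) / (h * np.sqrt(2.0 * np.pi))


def bandwidth(x):
    """Silverman's rule-of-thumb bandwidth (an assumption; the text does not fix h)."""
    return 1.06 * np.std(x, ddof=1) * len(x) ** (-0.2) + 1e-8


def log_ratio_features(X, X_pos, X_neg):
    """S2-S3: Z_ij = log f_j(X_ij) - log g_j(X_ij), both densities Winsorized at EPS."""
    Z = np.empty(X.shape)
    for j in range(X.shape[1]):
        f = kde_1d(X_pos[:, j], X[:, j], bandwidth(X_pos[:, j]))
        g = kde_1d(X_neg[:, j], X[:, j], bandwidth(X_neg[:, j]))
        Z[:, j] = np.log(np.maximum(f, EPS)) - np.log(np.maximum(g, EPS))
    return Z


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-np.clip(t, -30.0, 30.0)))


def l1_logistic(Z, y, lam, n_iter=500):
    """S4 stand-in: L1-penalized logistic regression via proximal gradient (ISTA).

    Coefficient 0 is an unpenalized intercept.
    """
    n = Z.shape[0]
    A = np.column_stack([np.ones(n), Z])
    step = 4.0 * n / np.linalg.norm(A, 2) ** 2  # 1 / Lipschitz constant of the gradient
    beta = np.zeros(A.shape[1])
    for _ in range(n_iter):
        beta = beta - step * (A.T @ (sigmoid(A @ beta) - y)) / n
        # soft-thresholding = proximal step for lam * ||beta[1:]||_1
        beta[1:] = np.sign(beta[1:]) * np.maximum(np.abs(beta[1:]) - step * lam, 0.0)
    return beta


def fans_predict_proba(X, y, X_new, L=4, lam=0.01, seed=0):
    """S1-S5: average the predicted probabilities over L random half splits."""
    rng = np.random.default_rng(seed)
    probs = []
    for _ in range(L):
        idx = rng.permutation(len(y))
        d1, d2 = idx[: len(y) // 2], idx[len(y) // 2 :]  # D_l1 (densities), D_l2 (PLR)
        X_pos, X_neg = X[d1][y[d1] == 1], X[d1][y[d1] == 0]
        beta = l1_logistic(log_ratio_features(X[d2], X_pos, X_neg), y[d2], lam)
        Z_new = log_ratio_features(X_new, X_pos, X_neg)
        probs.append(sigmoid(beta[0] + Z_new @ beta[1:]))
    return np.mean(probs, axis=0)


if __name__ == "__main__":
    # Toy data: class 1 shrinks features 0 and 1 toward zero (nonlinear oracle boundary).
    rng = np.random.default_rng(1)

    def sample(n, p=5):
        y = rng.integers(0, 2, n)
        X = rng.standard_normal((n, p))
        X[y == 1, :2] *= 0.2
        return X, y

    X, y = sample(400)
    X_test, y_test = sample(400)
    pred = (fans_predict_proba(X, y, X_test) >= 0.5).astype(int)
    print("test accuracy:", np.mean(pred == y_test))
```

In the toy data the Bayes boundary is a circle in the first two coordinates, which no rule linear in the original features can represent, but it is exactly additive in the marginal log density ratios, which is the situation FANS is designed for.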

A few comments on the technical implementation are made as follows.

Remark 1

In S2, if an estimated marginal density is less than some threshold ε (say 10⁻²), we set it to ε. This Winsorization increases the stability of the transformations, because the estimated transformations log f̂j and log ĝj are unstable in regions where the true densities are low.

In S4, we take penalized logistic regression, but any linear classifier can be used. For example, support vector machine (SVM) with linear kernel is also a good choice.

In S4, the L1 penalty (Tibshirani, 1996) was adopted since our primary interest is the classification error. We can also apply other penalty functions, such as SCAD (Fan and Li, 2001), adaptive LASSO (Zou, 2006) and MCP (Zhang, 2010).

In S5, the average predicted probability is taken as the final prediction. An alternative approach is to make a decision on each random split and take a majority vote.
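The two aggregation rules can disagree, as the following minimal sketch shows (plain Python; the function names and the tie-breaking convention of the vote are our own choices). One confident split can outweigh two lukewarm ones under averaging, but not under voting.

```python
def average_rule(probs):
    """S5: average the L predicted probabilities, then threshold at 1/2."""
    return int(sum(probs) / len(probs) >= 0.5)


def majority_vote(probs):
    """Alternative: classify on each split, then take the majority label.

    Ties go to class 1, mirroring the '>= 1/2' convention of S5 (our choice).
    """
    votes = [int(p >= 0.5) for p in probs]
    return int(2 * sum(votes) >= len(votes))


probs = [0.95, 0.45, 0.45]  # one confident split, two lukewarm ones
print(average_rule(probs), majority_vote(probs))  # 1 0
```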

In S1, we split the data multiple times. The rationale behind multiple splitting lies in the two-step nature of FANS, which uses the first part of the data for marginal nonparametric density estimates (in S2) and (the transformation of) the second part for penalized logistic regression (in S4). Multiple splitting and prediction averaging not only make our procedure more robust against arbitrary assignments of data usage, but also make more efficient use of limited data. This idea is related to random forests (Breiman, 2001), where the final prediction is the average over results from multiple bootstrap samples. Other related literature includes Fu et al. (2005), which considers estimation of the misclassification error with small samples via bootstrap cross-validation. The number of splits is fixed at L = 20 throughout all numerical studies; this choice reflects the number of nodes in our computing cluster. Readers with more computing resources can use a larger L; however, we observed that further increasing L leads to similar performance in all simulation examples. We also recommend a balanced assignment that switches the roles of the data used for feature transformation and for feature selection, i.e., D2l = (D(2l−1),2, D(2l−1),1) when D2l−1 = (D(2l−1),1, D(2l−1),2).
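The balanced assignment can be generated as in the following minimal sketch, which uses only the Python standard library. The function name, the exact half splits and the seeding are our assumptions; the pairing requires an even L (L = 20 in the text).

```python
import random


def balanced_splits(n, L, seed=0):
    """Generate L (first, second) index splits of range(n).

    Split 2l-1 is a fresh random half split; split 2l reuses it with the roles
    swapped, so within each pair every observation is used once for density
    estimation and once for the penalized logistic regression.
    """
    if L % 2:
        raise ValueError("balanced assignment needs an even number of splits L")
    rng = random.Random(seed)
    splits = []
    for _ in range(L // 2):
        idx = list(range(n))
        rng.shuffle(idx)
        first, second = idx[: n // 2], idx[n // 2 :]
        splits.append((first, second))  # D_{2l-1} = (D_{(2l-1),1}, D_{(2l-1),2})
        splits.append((second, first))  # D_{2l}   = (D_{(2l-1),2}, D_{(2l-1),1})
    return splits


splits = balanced_splits(n=10, L=20)
print(len(splits))  # 20
```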

It is straightforward to derive a running time bound for our algorithm. Suppose splitting has been done. In S2, we need to perform kernel density estimation for each variable, which costs O(n²p)¹. The transformations in S3 cost O(np). In S4, we call the R package glmnet to implement penalized logistic regression, which runs the coordinate descent algorithm for each penalty level. This step has a computational cost of at most O(npT), where T is the number of penalty levels, i.e., the number of times the coordinate descent algorithm is run (see Friedman et al. (2007) for a detailed analysis). The default setting is T = 100, though other values can be used. Therefore, a running time bound for the whole algorithm is O(L(n²p + np + npT)) = O(Lnp(n + T)).

This bound is not particularly impressive by itself. However, a careful implementation can fully unleash the potential of the FANS procedure. Indeed, not only the L repetitions but also the marginal density estimates in S2 can be parallelized. Suppose L is the number of available nodes and the number of CPU cores in each node is N ≥ n/T. This assumption is reasonable because T = 100 by default, N = 8 in our implementation, and the sample sizes n in many applications are less than a multiple of TN. Under this assumption, the L predicted probabilities can be computed simultaneously and combined later in S5. Moreover, the running time bound of S2 becomes O(n²p/N). Hence, a bound for the whole algorithm is O(npT), the same as that for penalized logistic regression. The exciting message is that, by leveraging modern computer architecture, we can implement our nonparametric classification rule FANS with a running time of the same order as a parametric method. The computation times for various simulation setups are reported in Table 2, where the FANS column reports results when only the L repetitions are parallelized, and the FANS(para) column reports the improvement when the marginal density estimates in S2 are also parallelized within each node.
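The role of the condition N ≥ n/T can be checked directly from the bounds stated above (the rearrangement is ours): under it, the per-node cost of S2 is no larger than the cost of S4,

$$ \frac{n^2 p}{N} \;\le\; \frac{n^2 p}{n/T} \;=\; npT \qquad \text{whenever } N \ge \frac{n}{T}, $$

so the overall bound stays O(npT). With T = 100 and N = 8, as in the text, the condition reads n ≤ TN = 800.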

2.2 Augmenting Linear Features

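As argued in the introduction, features with no marginal discrimination power make no contribution in FANS. One remedy is to run the penalized logistic regression in S4 on both the transformed features and the original ones, which amounts to modeling the log odds by

$$ \log\frac{P(Y=1 \mid X=x)}{P(Y=0 \mid X=x)} = \beta_0 + \sum_{j=1}^{p} \beta_j \log\frac{f_j(x_j)}{g_j(x_j)} + \sum_{j=1}^{p} \beta_{p+j}\, x_j. $$
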
This variant of FANS is named FANS2, and it allows features with no marginal power to enter the model in a linear fashion. FANS2 helps when a linear decision boundary separates data reasonably well.
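A small illustration of the defect FANS2 repairs, under a toy model of our own choosing: let X2 = U and X1 = μY + U with U ~ N(0, 1) independent of Y. The marginal law of X2 is the same in both classes, so its log density ratio is zero and FANS discards it, yet the linear combination X1 − X2 = μY separates the classes perfectly. Stacking the transformed and original columns, as FANS2 does, lets a linear rule reach X1 − X2. The helper name `fans2_design` is hypothetical, and the all-zero `Z_hat` is only a placeholder for the output of S2–S3.

```python
import numpy as np


def fans2_design(Z_hat, X):
    """FANS2 design matrix: transformed features followed by the original ones (n x 2p)."""
    return np.hstack([Z_hat, X])


rng = np.random.default_rng(0)
n, mu = 1000, 1.0
y = rng.integers(0, 2, n)
u = rng.standard_normal(n)
X = np.column_stack([mu * y + u, u])  # feature 2 has identical marginals in both classes

# X1 - X2 equals mu * y, so thresholding it at mu / 2 recovers every label.
rule = (X[:, 0] - X[:, 1] > mu / 2).astype(int)
print("accuracy of the X1 - X2 rule:", np.mean(rule == y))  # 1.0

Z_hat = np.zeros_like(X)  # placeholder for the S2-S3 transformed features
print(fans2_design(Z_hat, X).shape)  # (1000, 4)
```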

3 Numerical Studies

3.1 Simulation

In simulation studies, FANS and FANS2 are compared with competing methods: penalized logistic regression (PLR, Friedman et al. (2010)), penalized additive logistic regression models (penGAM, Meier et al. (2009)), support vector machine (SVM), regularized optimal affine discriminant (ROAD, Fan et al. (2012)), linear discriminant analysis (LDA), Naive Bayes (NB) and feature annealed independence rule (FAIR, Fan and Fan (2008)).

In all simulation settings, we set p = 1000 and the training and test sample sizes of each class to 300. Five-fold cross-validation is conducted when needed, and we repeat each setting 50 times (the relatively small number of replications is due to the long computation time of penGAM; cf. Table 2). Table 1 summarizes the median test errors of each method along with the corresponding standard errors. The table omits Fisher’s classifier (using the pseudo-inverse of the sample covariance matrix), because its test error is around 50%, equivalent to random guessing.

Table 1.

Median test error (in percentage) for the simulation examples. Standard errors are in the parentheses.

| Ex(ρ) | FANS | FANS2 | ROAD | PLR | penGAM | NB | FAIR | SVM |
|---|---|---|---|---|---|---|---|---|
| 1(0) | 6.8(1.1) | 6.2(1.2) | 6.0(1.3) | 6.5(1.2) | 6.6(1.1) | 11.2(1.4) | 5.7(1.0) | 13.2(1.5) |
| 1(0.5) | 16.5(1.7) | 16.2(1.8) | 16.5(5.3) | 15.9(1.7) | 16.9(1.6) | 20.6(1.7) | 17.2(1.6) | 22.5(1.8) |
| 2(0.5) | 4.2(0.9) | 2.0(0.6) | 2.0(0.6) | 2.5(0.6) | 3.7(0.9) | 43.5(11.1) | 25.3(1.6) | 5.3(1.1) |
| 2(0.9) | 3.1(1.1) | 0.0(0.0) | 0.0(0.0) | 0.0(0.0) | 0.2(1.4) | 46.8(8.8) | 30.2(1.9) | 0.0(0.1) |
| 3(0) | 0.0(0.0) | 0.0(0.0) | 49.6(2.4) | 50.0(1.3) | 0.0(0.1) | 50.4(2.2) | 50.2(2.1) | 31.8(2.4) |
| 3(0.5) | 3.4(0.7) | 3.4(0.7) | 49.3(2.4) | 50.0(1.3) | 3.7(0.8) | 50.0(2.1) | 50.2(2.0) | 19.8(2.4) |
| 4 | 0.0(0.0) | 0.0(0.0) | 28.2(1.8) | 50.0(10.7) | 0.0(0.0) | 41.0(1.1) | 34.6(1.4) | 0.0(0.0) |

Example 1

We consider the two-class Gaussian setting where Σii = 1 for all i = 1, ⋯, p and $\Sigma_{ij} = \rho^{|i-j|}$, μ1 = 0₁₀₀₀ and , in which 1d is a length-d vector with all entries 1, and 0d is a length-d vector with all entries 0. Two correlations, ρ = 0 and ρ = 0.5, are investigated.

This is the classical LDA setting. In view of the linear optimal decision boundary, the nonparametric transformations in FANS are not necessary. Table 1 indicates a small efficiency loss due to the more complex FANS model. However, by including the original features, FANS2 is comparable to the methods (e.g., PLR and ROAD) that learn boundaries linear in the original features. In other words, the price to pay for using the more complex FANS (FANS2) is small in terms of classification error.

An interesting observation is that penGAM, which is based on a more general model class than FANS and FANS2, performs worse than our new methods. This is also expected as the complex parameter space considered by penGAM is unnecessary in view of a linear optimal decision boundary. Surprisingly, SVM performs poorly (even worse than NB), especially when all features are independent.

Example 2

The same settings as Example 1, except that the common covariance matrix is an equicorrelation matrix with common correlation ρ = 0.5 or ρ = 0.9.

As in Example 1, FANS2 performs as well as PLR and ROAD, while FANS is somewhat worse (4.2% and 3.1% versus at most 2.5% for PLR and ROAD). Although FAIR works very well in Example 1, where the features are independent or nearly independent, it fails badly when there is significant global pairwise correlation. Similar observations hold for NB. This example shows that ignoring correlation among features can lead to a significant loss of information and deterioration of the classification error.

Example 3

One class follows a multivariate Gaussian distribution, and the other a mixture of two multivariate Gaussian distributions. Precisely,

Class 0: ,

Class 1: ,

where Σii = 1, Σij = ρ for i ≠ j. Correlations ρ = 0 and ρ = 0.5 are considered.

In this example, Class 0 and Class 1 have the same mean but different marginal densities in the first 10 dimensions. Table 1 shows that all methods based on linear boundaries perform like random guessing, because the optimal decision boundary is highly nonlinear. penGAM is comparable to FANS and FANS2, but SVM cannot capture the oracle decision boundary well even though a nonlinear kernel is applied.

Example 4

Two classes follow uniform distributions,

Class 0: Unif (A),

Class 1: Unif (B\A),

where A = {x ∈ ℝᵖ : ‖x‖₂ ≤ 1} and B = [−1, 1]ᵖ. Clearly, the oracle decision boundary is {x ∈ ℝᵖ : ‖x‖₂ = 1}. Again, FANS and FANS2 capture this simple boundary well, while the methods based on linear boundaries fail to do so.
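The two classes of Example 4 can be sampled as in the sketch below; the sampling scheme is our own choice, since the text does not say how the data were generated. Unif(A) uses a uniform direction times a radius U^(1/p), and Unif(B\A) uses rejection sampling from the cube, which is cheap here because the squared norm of a uniform point in [−1, 1]ᵖ averages p/3, so for p = 1000 almost no draw falls inside the unit ball. The function names are hypothetical.

```python
import numpy as np


def sample_ball(n, p, rng):
    """Uniform draws from A = {x : ||x||_2 <= 1}: uniform direction times radius U**(1/p)."""
    g = rng.standard_normal((n, p))
    direction = g / np.linalg.norm(g, axis=1, keepdims=True)
    radius = rng.uniform(size=(n, 1)) ** (1.0 / p)
    return direction * radius


def sample_cube_minus_ball(n, p, rng):
    """Uniform draws from B minus A, with B = [-1, 1]^p, by rejection from the cube."""
    kept = []
    while len(kept) < n:
        x = rng.uniform(-1.0, 1.0, size=(n, p))
        kept.extend(x[np.linalg.norm(x, axis=1) > 1.0])
    return np.array(kept[:n])


def oracle_rule(x):
    """Bayes rule for Example 4: class 1 exactly when x lies outside the unit ball."""
    return (np.linalg.norm(x, axis=1) > 1.0).astype(int)


rng = np.random.default_rng(0)
x0 = sample_ball(500, 2, rng)
x1 = sample_cube_minus_ball(500, 2, rng)
print(oracle_rule(x0).sum(), oracle_rule(x1).sum())  # 0 500
```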

Computation times (in seconds) for the various classification algorithms are reported in Table 2. FANS is extremely fast thanks to parallel computing. While penGAM performs similarly to FANS in the simulation examples, its computational cost is much higher. The similarity in performance is due to the abundance of training examples. We will demonstrate with an email spam classification example that penGAM fails to deliver satisfactory results on small samples.

3.2 Real Data Analysis

We study two real examples, and compare FANS (FANS2) with competing methods.

3.2.1 Email Spam Classification

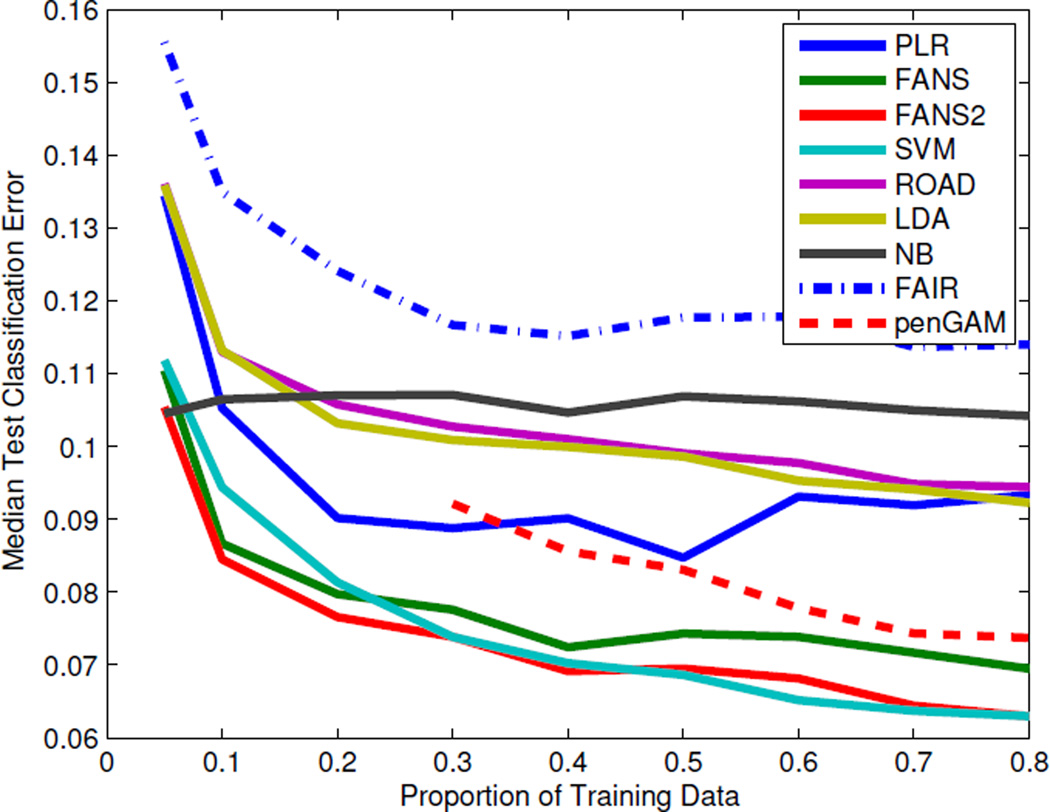

First, we investigate a benchmark email spam data set, which has been studied by Hastie et al. (2009), among others, to demonstrate the power of additive logistic regression models. There are n = 4,601 observations with p = 57 numeric attributes. The attributes include, for instance, the percentages of specific words or characters in an email, the average and maximum run lengths of uppercase letters, and the total number of such letters. To identify suitable application domains for FANS and FANS2, we vary the training proportion over 5%, 10%, 20%, ⋯, 80% of the data while assigning the rest to the test set. Splits are repeated 100 times, and we report the median classification errors.

Figure 2 and Table 3 summarize the results. First, FANS and FANS2 are very competitive when the training sample size is small. As the training sample size increases, SVM becomes comparable to FANS2 and slightly better than FANS. In general, these three methods dominate across the training proportions. The more complex penGAM fails to yield classifiers when the training proportion is below 30%, due to the difficulty of inverting matrices built from the spline basis functions. For larger training samples, penGAM performs better than the linear decision rules, but it is not as competitive as FANS or FANS2. Interestingly, when the training proportion is 5%, Naive Bayes (NB) performs as well as the more sophisticated FANS2 in terms of median classification error, but NB has a larger standard error. Moreover, the median classification error of NB remains almost unchanged as the sample size increases. In other words, NB’s independence assumption allows good training with very few data points, but NB cannot benefit from larger samples due to severe model bias.

Figure 2.

The median test classification error for the spam data set using various proportions of the data as training sets for different classification methods.

Table 3.

Median classification error (in percentage) on e-mail spam data when the size of the training data varies. Standard errors are in the parentheses.

| Training (%) | FANS | FANS2 | ROAD | PLR | penGAM | LDA | NB | FAIR | SVM |
|---|---|---|---|---|---|---|---|---|---|
| 5 | 11.1(2.6) | 10.5(1.1) | 13.6(0.9) | 13.5(1.7) | - | 13.6(1.1) | 10.5(5.0) | 15.6(1.7) | 11.2(0.8) |
| 10 | 8.7(2.4) | 8.5(0.9) | 11.3(0.8) | 10.5(1.1) | - | 11.3(0.9) | 10.7(4.2) | 13.5(0.9) | 9.4(0.7) |
| 20 | 8.0(2.1) | 7.7(0.7) | 10.6(0.6) | 9.0(0.8) | - | 10.3(0.6) | 10.7(5.3) | 12.4(0.7) | 8.1(0.7) |
| 30 | 7.8(1.7) | 7.4(0.5) | 10.3(0.4) | 8.9(0.6) | 9.2(0.6) | 10.1(0.5) | 10.7(4.0) | 11.7(0.4) | 7.4(0.6) |
| 40 | 7.2(2.2) | 6.9(0.5) | 10.1(0.5) | 9.0(0.6) | 8.6(0.5) | 10.0(0.4) | 10.5(5.1) | 11.5(0.6) | 7.0(0.5) |
| 50 | 7.4(2.2) | 7.0(0.5) | 9.9(0.5) | 8.5(0.6) | 8.3(0.5) | 9.9(0.4) | 10.7(4.1) | 11.8(0.6) | 6.9(0.5) |
| 60 | 7.4(2.2) | 6.8(0.5) | 9.8(0.6) | 9.3(0.6) | 7.8(0.6) | 9.5(0.5) | 10.6(4.8) | 11.8(0.7) | 6.5(0.6) |
| 70 | 7.2(1.6) | 6.4(0.6) | 9.5(0.7) | 9.2(0.7) | 7.4(0.7) | 9.4(0.6) | 10.5(4.6) | 11.4(0.7) | 6.4(0.7) |
| 80 | 6.9(1.6) | 6.3(0.7) | 9.4(0.6) | 9.3(0.9) | 7.4(0.8) | 9.2(0.6) | 10.4(4.7) | 11.4(0.8) | 6.3(0.9) |

3.2.2 Lung Cancer Classification

We now evaluate the newly proposed classifiers on a popular gene expression data set, “Lung Cancer” (Gordon et al., 2002), which comes with predetermined, separate training and test sets. It contains p = 12,533 genes for n0 = 16 adenocarcinoma (ADCA) and n1 = 16 mesothelioma training vectors, along with 134 ADCA and 15 mesothelioma test vectors.

Following Dudoit et al. (2002), Fan and Fan (2008), and Fan et al. (2012), we standardize each sample to zero mean and unit variance. The classification results for FANS, FANS2, ROAD, PLR, penGAM, NB, FAIR and SVM are summarized in Table 4. FANS and FANS2 achieve zero test classification error, while the other methods do not.
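The per-sample standardization can be written in a few lines of NumPy. This is a sketch under our reading of “standardize each sample”: each array (a row) is centered and scaled across its genes (the columns); the row orientation and the population standard deviation (ddof = 0) are our assumptions.

```python
import numpy as np


def standardize_samples(X):
    """Standardize each sample (row) across its genes (columns) to mean 0 and variance 1."""
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=1, keepdims=True)
    return centered / centered.std(axis=1, keepdims=True)  # population sd (ddof=0)


# Toy data: 3 samples, 4 genes; the first two rows differ only by a factor of 10.
X = np.array([[1.0, 2.0, 3.0, 4.0],
              [10.0, 20.0, 30.0, 40.0],
              [5.0, 5.5, 4.5, 5.0]])
Xs = standardize_samples(X)
```

Because the transformation removes each array's location and scale, the first two toy rows become identical after standardization.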

Table 4.

Classification error (number of misclassified samples) and number of selected genes on the lung cancer data.

| | FANS | FANS2 | ROAD | PLR | penGAM | NB | FAIR | SVM |
|---|---|---|---|---|---|---|---|---|
| Training error | 0 | 0 | 1 | 0 | 0 | 6 | 0 | 0 |
| Test error | 0 | 0 | 1 | 6 | 2 | 36 | 7 | 4 |
| No. of selected genes | 52 | 52 | 52 | 15 | 16 | 12533 | 54 | 12533 |

4 Theoretical Results

In this section, we derive an oracle inequality for the excess risk of FANS. Denote by f = (f1, ⋯, fp)T and g = (g1, ⋯, gp)T the vectors of marginal densities of the two classes, with f0 = (f0,1, ⋯, f0,p)T and g0 = (g0,1, ⋯, g0,p)T being the true densities. Let {(X1, Y1), ⋯, (Xn, Yn)} be i.i.d. copies of (X, Y), and let the regression function be modeled by

$$ E(Y_1 \mid X_1) = \frac{\exp\{m(Z_1)\}}{1 + \exp\{m(Z_1)\}}, $$

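where Z1 = (Z11, ⋯, Z1p)T, each Z1j = Z1j(X1) = log fj(X1j) − log gj(X1j), and m(·) is a generic function in some function class ℳ that includes the linear functions. Now, let 𝒬 = {q = (m, f, g)} be the parameter space of interest, with constraints on m, f and g to be specified later. Writing Zf,g(X) for the vector of transformed features of X, the loss function we consider is the negative log-likelihood

$$ \rho_q(X, Y) = -Y\, m\big(Z_{f,g}(X)\big) + \log\Big[1 + \exp\big\{m\big(Z_{f,g}(X)\big)\big\}\Big]. $$
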
Let $m_0 = \arg\min_{m \in \mathcal{M}} P\rho(m, f_0, g_0)$. Then the target parameter is q* = (m0, f0, g0). We use a working model with mβ(Z1) = βTZ1 to approximate m0. Under this working model, for a given parameter q = (mβ, f, g), let

$$ \pi_q(X) = \frac{\exp\{\beta^T Z_{f,g}(X)\}}{1 + \exp\{\beta^T Z_{f,g}(X)\}}. \tag{4.4} $$

With this linear approximation, the loss function is the logistic loss

$$ \rho_q(X, Y) = -Y \log \pi_q(X) - (1 - Y) \log\{1 - \pi_q(X)\}. $$

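Denote the empirical loss by $P_n\rho(q) = n^{-1}\sum_{i=1}^{n} \rho_q(X_i, Y_i)$, and the expected loss by Pρ(q) = Eρq(X, Y). In the following, we take ℳ as linear combinations of the transformed features so that m0 = mβ0, where

$$ \beta_0 = \arg\min_{\beta \in \mathbb{R}^p} P\rho(m_\beta, f_0, g_0). $$
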
In other words, q0 = (mβ0, f0, g0) = q*. Hence, the excess risk for a parameter q is

$$ \mathcal{E}(q) = P\rho(q) - P\rho(q_0). \tag{4.5} $$

As described in Section 2, the densities f0 and g0 are unavailable and must be estimated. Theorem 2 will establish the excess risk bound for the base procedure with L = 1, which implies that the logistic regression coefficients and density estimates are close to the corresponding true values. Therefore, we expect that for each l = 1, ⋯, L, the predicted probability pl is close to the oracle πq*(·). This further implies that the averaged prediction $L^{-1}\sum_{l=1}^{L} p_l$ is close to πq*(·). Given the above analysis, we fix L = 1 in the FANS algorithm (i.e., only one random split is conducted) throughout the theoretical development.

Suppose we have labeled samples (used to learn f0) and (used to learn g0; theory carries over for different sample sizes), in addition to an i.i.d. sample {(X1, Y1), ⋯, (Xn, Yn)} (used to conduct penalized logistic regression). Moreover, suppose {(X1, Y1), ⋯, (Xn, Yn)} is independent of and . A simple way to comprehend the above theoretical set up is that the sample size of 2n1 + n has been split into three groups. The notations P and E are regarding the random couple (X, Y). We use the notation Pn to denote the probability measure induced by the sample {(X1, Y1), ⋯, (Xn, Yn)}, and notations P+ and P− for the probability measures induced by the samples and .

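The density estimates f̂ = (f̂1, ⋯, f̂p)T and ĝ = (ĝ1, ⋯, ĝp)T are based on the labeled samples $\{X_i^+\}_{i=1}^{n_1}$ and $\{X_i^-\}_{i=1}^{n_1}$, respectively:

$$\hat f_j(x) = \frac{1}{n_1 h}\sum_{i=1}^{n_1} K\Big(\frac{X_{ij}^+ - x}{h}\Big), \qquad \hat g_j(x) = \frac{1}{n_1 h}\sum_{i=1}^{n_1} K\Big(\frac{X_{ij}^- - x}{h}\Big), \qquad j = 1, \ldots, p,$$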
in which K(·) is a kernel function and h is the bandwidth. With these estimated marginal densities, we have an “oracle estimate” q1 = (mβ1, f̂, ĝ), where

$$\beta_1 = \arg\min_{\beta \in \mathbb{R}^p} P\rho(m_\beta, \hat f, \hat g).$$
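It is the oracle given the marginal density estimates f̂ and ĝ, and it is estimated in FANS by

$$\hat\beta_1 = \arg\min_{\beta \in \mathbb{R}^p}\big\{P_n\rho(m_\beta, \hat f, \hat g) + \lambda\|\beta\|_1\big\}.$$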
Let q̂1 = (mβ̂1, f̂, ĝ). Our goal is to control the excess risk ℰ(q̂1), where ℰ is defined by (4.5). In the following, we introduce technical conditions for this task.
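To make the penalized regression step concrete, here is a minimal sketch of L1-penalized logistic regression on a given transformed design (for instance, Ẑ). It uses plain proximal gradient descent (ISTA) with a fixed step size as a self-contained stand-in; it is not the solver used to compute FANS. The function names, the iteration count and the absence of an intercept (matching the working model mβ(Z1) = βᵀZ1) are our assumptions.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal map of t * ||.||_1: shrink every entry toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def l1_logistic(Z, y, lam, n_iter=500):
    """Minimize (1/n) sum_i [-y_i z_i'b + log(1 + exp(z_i'b))] + lam * ||b||_1 by ISTA."""
    n, p = Z.shape
    # The gradient of the average logistic loss is Lipschitz with constant ||Z||_2^2 / (4n),
    # because the logistic curvature pi * (1 - pi) never exceeds 1/4.
    lip = np.linalg.norm(Z, 2) ** 2 / (4.0 * n)
    step = 1.0 / lip
    beta = np.zeros(p)
    for _ in range(n_iter):
        prob = 1.0 / (1.0 + np.exp(-(Z @ beta)))
        grad = Z.T @ (prob - y) / n
        beta = soft_threshold(beta - step * grad, step * lam)
    return beta
```

A large λ keeps every coefficient at zero, while a small λ lets the informative columns enter; this sparsity is what provides the feature selection in the second step.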

Let Z0 be the n × p design matrix consisting of transformed covariates based on the true densities f0 and g0; that is, $Z^0_{ij} = \log f_{0,j}(X_{ij}) - \log g_{0,j}(X_{ij})$ for i = 1, ⋯, n and j = 1, ⋯, p. In addition, let . Also, denote by |S| the cardinality of a set S, and let ‖D‖max = maxij |Dij| for any matrix D with elements Dij.

Assumption 1 (Compatibility Condition)

The matrix Z0 satisfies the compatibility condition with compatibility constant ϕ(·) if, for every subset S ⊂ {1, ⋯, p}, there exists a constant ϕ(S) > 0 such that for all β ∈ ℝp that satisfy ‖βSc‖1 ≤ 3‖βS‖1, it holds that

$$\|\beta_S\|_1^2 \le \frac{|S|\,\|Z^0\beta\|_2^2}{n\,\phi(S)^2}.$$
A direct application of Corollary 6.8 in Bühlmann and van de Geer (2011) yields a compatibility condition for the estimated transformed matrix Ẑ, in which Ẑij = log f̂j(Xij) − log ĝj(Xij).
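The estimated transform Ẑij = log f̂j(Xij) − log ĝj(Xij) can be sketched as follows. We assume a Gaussian kernel for K(·), a user-supplied bandwidth h, and a lower floor `eps` on the density estimates before taking logs, in the spirit of the truncation at εl in Assumption 7 (the upper truncation is omitted here). All names are hypothetical.

```python
import numpy as np

def kde(train, x, h):
    """Gaussian-kernel density estimate at the points x from the 1-D sample train."""
    u = (x[:, None] - train[None, :]) / h
    return np.exp(-0.5 * u**2).sum(axis=1) / (len(train) * h * np.sqrt(2.0 * np.pi))

def augment(X, X_pos, X_neg, h, eps=1e-3):
    """Z_hat[i, j] = log f_hat_j(X[i, j]) - log g_hat_j(X[i, j]).

    f_hat_j and g_hat_j are marginal KDEs built from column j of the two labeled
    samples; both are floored at eps so the logarithms stay finite."""
    n, p = X.shape
    Z_hat = np.empty((n, p))
    for j in range(p):
        f_hat = np.maximum(kde(X_pos[:, j], X[:, j], h), eps)
        g_hat = np.maximum(kde(X_neg[:, j], X[:, j], h), eps)
        Z_hat[:, j] = np.log(f_hat) - np.log(g_hat)
    return Z_hat
```

Each column is handled separately, which is what keeps the nonparametric step one-dimensional and lets the p transforms be computed in parallel.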

Lemma 1

Denote by E = Ẑ − Z0 the estimation error matrix of Z0. If the compatibility condition is satisfied for Z0 with a compatibility constant ϕ(·), and the following inequalities hold

| (4.6) |

the compatibility condition holds for Ẑ with a new compatibility constant .

The compatibility condition can be interpreted as a condition that bounds the restricted eigenvalues from below. The irrepresentable condition (Zhao and Yu, 2006) and the Sparse Riesz Condition (SRC) (Zhang and Huang, 2008) are similar in spirit. Essentially, these conditions rule out high correlation among the subsets of features on which the signals are concentrated, since such high correlation may cause difficulty in parameter estimation and risk prediction.

To help theoretical derivation, we introduce two intermediate L0-penalized estimates. Given the true densities f0 and g0, consider a penalized theoretical solution , where

| (4.7) |

in which H(·) is a strictly convex function on [0,∞) with H(0) = 0, sβ = |Sβ| is the cardinality of Sβ = {j : βj ≠ 0}, and ϕ(·) is the compatibility constant for Z0. Throughout the paper, we consider the specific quadratic function H(υ) = υ²/(4c) (see footnote 2), whose convex conjugate is G(u) = supυ{uυ − H(υ)} = cu². Then equation (4.7) defines an L0-penalized oracle:

| (4.8) |
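For completeness, the conjugate G(u) = cu² of H(υ) = υ²/(4c) follows from a one-line calculation. We take u ≥ 0, so that the maximizer 2cu lies in the domain [0, ∞) of H; since c > 0, the objective is concave in υ and its stationary point is the maximizer.

```latex
G(u) = \sup_{\upsilon \ge 0}\Big\{u\upsilon - \frac{\upsilon^{2}}{4c}\Big\}, \qquad
\frac{d}{d\upsilon}\Big(u\upsilon - \frac{\upsilon^{2}}{4c}\Big) = u - \frac{\upsilon}{2c} = 0
\;\Longrightarrow\; \upsilon^{*} = 2cu,
\qquad
G(u) = u\,(2cu) - \frac{(2cu)^{2}}{4c} = 2cu^{2} - cu^{2} = cu^{2}.
```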

Similarly, with density estimate vectors f̂ and ĝ, we define an L0-penalized oracle estimate , where

| (4.9) |

To study the excess risk ℰ(q̂1), we consider its relationship with and .

Assumption 2 (Uniform Margin Condition)

There exists η > 0 such that for all (mβ, f, g) satisfying ‖β − β0‖∞ + max1≤j≤p ‖fj − f0,j‖∞ + max1≤j≤p ‖gj − g0,j‖∞ ≤ 2η, we have

| (4.10) |

where c is the positive constant in (4.8).

The uniform margin condition is related to the one defined in Tsybakov (2004) and van de Geer (2008). It is a type of “identifiability” condition. Basically, near the target parameter q0 = (mβ0, f0, g0), the functional value needs to be sufficiently different from the value on q0 to enable enough separability of parameters. Note that we impose the uniform margin condition in both the neighborhood of the parametric component β0 and the nonparametric components f0 and g0, because we need to estimate the densities, in addition to the parametric part. A related concept in binary classification is called “Margin Assumption”, which was first introduced in Polonik (1995) for densities.

To study the relationship between ℰ(q̂1) and , we define

Denote by

Set M* = ε*/λ0 (λ0 to be specified in Theorem 1) and

The idea here is to choose λ0 such that the event 𝒥1 has high probability.

We introduce a few more notations to facilitate the discussion. Let τ > 0. Denote by ⌊τ⌋ the largest integer strictly less than τ. For any x, x′ ∈ ℝ and any ⌊τ⌋ times continuously differentiable real valued function u on ℝ, we denote by ux its Taylor polynomial of degree ⌊τ⌋ at the point x:

$$u_x(x') = \sum_{k=0}^{\lfloor\tau\rfloor} \frac{u^{(k)}(x)}{k!}\,(x' - x)^k.$$

For L > 0, the (τ, L, [−1, 1])-Hölder class of functions, denoted by Σ(τ, L, [−1, 1]), is the set of functions u : ℝ → ℝ that are ⌊τ⌋ times continuously differentiable and satisfy, for any x, x′ ∈ [−1, 1], the inequality:

$$|u(x') - u_x(x')| \le L\,|x - x'|^{\tau}.$$

The (τ, L, [−1, 1])-Hölder class of densities is defined as

$$\mathcal{P}_\Sigma(\tau, L, [-1,1]) = \Big\{p : p \ge 0,\ \int p = 1,\ p \in \Sigma(\tau, L, [-1,1])\Big\}.$$
Assumption 3

Assume that β1 is in the interior of some compact set 𝒞p. There exists an ε0 ∈ (0, 1) such that for all β ∈ 𝒞p and fj, gj ∈ 𝒫Σ(2, L, [−1, 1]), j = 1, ⋯, p, ε0 < π(mβ,f,g)(·) < 1 − ε0.

Assumption 4

‖Z0‖max ≤ K for some absolute constant K > 0, and ‖β0‖∞ ≤ C1 for some absolute constant C1 > 0.

Assumption 5

The penalty level λ is in the range of (8λ0, Lλ0) for some L > 8. Moreover, the following holds

where η is as in the uniform margin condition.

Assumption 3 is a regularity condition on the probability of the event that the observation belongs to class 1. Since the FANS estimator is based on the estimated densities, we impose the constraints in a neighborhood of the oracle estimate β1 (when using f̂ and ĝ). Assumption 4 bounds the maximum absolute entry of the design matrix as well as the maximum absolute true regression coefficient. Assumption 5 posits a proper range of the penalty parameter λ to guarantee that the penalized estimator mimics the un-penalized oracle.

Assumption 6

Suppose the feature measurement X has a compact support [−1, 1]p, and f0,j, g0,j ∈ 𝒫Σ(2, L, [−1, 1]) for all j = 1, ⋯, p, where 𝒫Σ denotes a Hölder class of densities.

Assumption 7

Suppose there exists εl > 0 such that for all j = 1, ⋯, p, εl ≤ f0,j, . Also we truncate estimates f̂j and ĝj at εl and .

Assumption 8

and,

for some constant α > 7/15.

Assumption 6 imposes constraints on the support of X and a smoothness condition on the true densities f0 and g0, which help control the estimation error incurred by the nonparametric density estimates. Assumption 7 requires that the marginal densities and the kernel be strictly positive on [−1, 1]p. Assumption 8 restricts the growth of the dimensionality p in terms of the sample size n1.
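Since ⌊τ⌋ is the largest integer strictly less than τ, the case τ = 2 used in Assumption 6 makes ux the first-order Taylor polynomial, and the Hölder condition bounds the remainder |u(x′) − ux(x′)| by L|x − x′|². The sketch below (hypothetical helper names) evaluates this ratio on a grid for u(x) = 0.75(1 − x²), the Epanechnikov density on [−1, 1]. Its remainder is exactly −0.75(x′ − x)², so the smallest admissible L is 0.75; for a general u, the grid maximum is only a lower bound on that constant.

```python
import math
import numpy as np

def floor_strict(tau):
    """Largest integer strictly less than tau, so floor_strict(2) == 1."""
    return math.ceil(tau) - 1

def holder_constant_tau2(u, du, grid):
    """Grid estimate (a lower bound) of the smallest L with
    |u(x') - u(x) - u'(x)(x' - x)| <= L |x' - x|^2 for x, x' in grid."""
    x, xp = np.meshgrid(grid, grid, indexing="ij")
    off = x != xp  # exclude the diagonal to avoid 0 / 0
    remainder = u(xp) - (u(x) + du(x) * (xp - x))
    return float(np.max(np.abs(remainder[off]) / (xp - x)[off] ** 2))

def epanechnikov(x):
    return 0.75 * (1.0 - x**2)

def d_epanechnikov(x):
    return -1.5 * x

grid = np.linspace(-1.0, 1.0, 201)
print(floor_strict(2), holder_constant_tau2(epanechnikov, d_epanechnikov, grid))
```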

We now provide a lemma to bound the uniform deviation between f̂j and f0,j for j = 1, ⋯, p.

Lemma 2

Under Assumptions 6–8, taking the bandwidth , for any δ1 > 0, there exists such that if ,

for , and C2 is an absolute constant.
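A small simulation can illustrate the kind of uniform deviation that Lemma 2 controls, although it does not verify the lemma's constants or rates. The sketch draws samples from the Epanechnikov density f0(x) = 0.75(1 − x²) on [−1, 1] (via X = 2B − 1 with B ~ Beta(2, 2)) and forms a Gaussian-kernel estimate with the hypothetical bandwidth h = n1^(−1/5), a common rate for twice-differentiable densities rather than the bandwidth prescribed in Lemma 2. It reports the sup-norm error on an interior grid, which sidesteps the boundary bias of an unadjusted kernel estimate.

```python
import numpy as np

def kde(train, x, h):
    """Gaussian-kernel density estimate at the points x from the 1-D sample train."""
    u = (x[:, None] - train[None, :]) / h
    return np.exp(-0.5 * u**2).sum(axis=1) / (len(train) * h * np.sqrt(2.0 * np.pi))

def f0(x):
    """Epanechnikov density on [-1, 1]."""
    return 0.75 * (1.0 - x**2)

def sup_error(n1, rng, grid):
    """Sup-norm error over grid of a KDE built from n1 draws of f0, with h = n1**(-1/5)."""
    sample = 2.0 * rng.beta(2.0, 2.0, size=n1) - 1.0  # 2*Beta(2,2) - 1 has density f0
    h = n1 ** (-0.2)
    return float(np.max(np.abs(kde(sample, grid, h) - f0(grid))))

rng = np.random.default_rng(0)
grid = np.linspace(-0.8, 0.8, 161)
err_small = sup_error(100, rng, grid)
err_large = sup_error(10_000, rng, grid)
print(f"n1=100: {err_small:.3f}   n1=10000: {err_large:.3f}")
```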

Denote by

where η is the constant in the uniform margin condition. It is straightforward from Lemma 2 that

The next lemma can be derived similarly to Lemma 2, so its proof is omitted.

Lemma 3

Under Assumptions 6–8, taking the bandwidth , for any δ > 0, there exists such that if ,

where E is the estimation error matrix as defined in Lemma 1 and for some absolute constant C3.

Corollary 1

Under Assumptions 6–8, take the bandwidth . On the event (regarding labeled samples) with P+−(𝒥3) > 1 − δ, there exists and C4 > 0 such that if uniformly for k, l = 1, ⋯, p, where . Denote by

On the event 𝒥4, inequality (4.6) holds, and hence, by Lemma 1, the compatibility condition is satisfied for Ẑ under Assumption 1. Moreover, taking a specific δ in Lemma 3, one can derive

where Ap = maxS⊂{1,⋯,p}|S|/ϕ(S)². Combining Lemma 2 with the uniform margin condition, we see that for given estimators f̂ and ĝ, the margin condition holds for the estimated transformed matrix Ẑ involved in the FANS estimator β̂1. The following theorem can be obtained along the lines of van de Geer (2008), so its formal proof is omitted.

Theorem 1 (Oracle Inequality)

In addition to Assumptions 1–8, assume and . Then on the event 𝒥1 ∩ 𝒥2 ∩ 𝒥3 ∩ 𝒥4, we have

Moreover, when and under the normalization condition that ‖Z1j‖∞ ≤ 1 for all j = 1, ⋯, p, it holds that

for

where 𝕡 is the probability with respect to all the samples and

Theorem 1 shows that with high probability, the excess risk of the FANS estimator can be controlled in terms of the excess risk of when using the estimated density functions f̂ and ĝ plus a term of explicit order. Next, we will study the excess risk of .

Assumption 9

Let be the subvector of corresponding to the nonzero components of β1, and . Assume sβ1 ≤ an1 for some deterministic sequence {an1}, and an1 · bn1 = o(1). In addition, , for some absolute constant C5.

Assumption 9 allows the number of nonzero elements of β1 to diverge slowly with n1. It also requires a lower bound on the restricted eigenvalue of the submatrix of Z0 corresponding to the nonzero components of β1.

Lemma 4

Let Q(β) = Pρ(mβ, f̂, ĝ) + λ‖β‖0, and β̄1 = min{|β1,j| : j ∈ Sβ1}. Under Assumptions 3, 6, 7, 8 and 9, on the event 𝒥3, there exists a constant such that, if and the penalty parameter , the L0 penalized solution coincides with the unpenalized version; that is .

Theorem 2 (Oracle Inequality)

In addition to Assumptions 1–9, suppose , the penalty parameter λ ∈ (8λ0, min(Lλ0, 0.5C5ε0(1 − ε0) · (minj:β1,j≠0 |β1,j|)²)), where C5 is defined in Assumption 9, and . Taking the bandwidth , on the event 𝒥1 ∩ 𝒥2 ∩ 𝒥3 ∩ 𝒥4 as in Theorem 1, we have

Then in view of Theorem 1, we have

Theorem 2 requires a number of conditions. We now unpack them by describing, at a high level, the motivation behind them. Because FANS is essentially a two-step procedure, both steps must perform well for the theoretical guarantee to hold. The first step estimates the transformed features. In this step, we need regularity conditions on the class-conditional densities f0 and g0, and on the components of the kernel density estimates, such as the kernel K. The sample size also needs to be large enough for the kernel density estimates to be close to the truth. The second step is penalized logistic regression on the estimated transformed features. In this step, the usual conditions on the penalty level, the design matrix and the signal strength are needed. Finally, conditions that link the nonparametric and parametric components, i.e., the first and second steps, such as the uniform margin condition, must also be in place.
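Because the admissible range for the penalty level in Theorem 2 can be empty, it may help to evaluate it for given constants. The hypothetical helper below computes the interval; following the condition λ < 0.5C5ε0(1 − ε0)β̄1² used in the proof of Lemma 4 (via Lemma 5), it squares the smallest nonzero coefficient. Here L is the constant from Assumption 5, and the numbers in the usage example are arbitrary, not quantities from the paper.

```python
import numpy as np

def penalty_interval(lam0, L, C5, eps0, beta1):
    """Open interval (lower, upper) of admissible penalty levels, or None if it is empty.

    lower = 8 * lam0
    upper = min(L * lam0, 0.5 * C5 * eps0 * (1 - eps0) * beta_bar**2),
    where beta_bar is the smallest nonzero |beta1_j|.
    """
    beta1 = np.asarray(beta1, dtype=float)
    nonzero = np.abs(beta1[beta1 != 0])
    if nonzero.size == 0:
        raise ValueError("beta1 has no nonzero components")
    beta_bar = nonzero.min()
    lower = 8.0 * lam0
    upper = min(L * lam0, 0.5 * C5 * eps0 * (1.0 - eps0) * beta_bar**2)
    return (lower, upper) if lower < upper else None

print(penalty_interval(0.001, 10, 1.0, 0.1, [0.0, 0.5, -1.0]))
```

With λ0 = 0.01 the same signal gives an empty range, since 8λ0 = 0.08 already exceeds the signal-strength bound 0.01125; weak signals therefore require a small λ0.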

From Theorem 2, the excess risk of the FANS estimator naturally decomposes into two parts: one is due to the nonparametric density estimation, and the other is due to the regularized logistic regression on the estimated transformed covariates. When both the penalty parameter λ and the bandwidth h of the nonparametric density estimates f̂ and ĝ are chosen appropriately, the FANS estimator has a diminishing excess risk with high probability. One can make λ explicit to obtain a bound on the excess risk in terms of the sample sizes n and n1 and the dimensionality p. It is also worth noting that the oracle inequality for the FANS procedure β̂1 is established via an important bridge, the L0-regularized estimator defined in (4.9).

The oracle inequality for FANS2 can be developed along similar lines. In particular, the parameter under the working model will be changed to q2 = (m(β,γ), f, g) and the success probability given X1 will be modeled by a modified logistic function

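$$\pi_{q_2}(X_1) = P(Y_1 = 1 \mid X_1) = \frac{\exp\{\beta^T Z_1 + \gamma^T X_1\}}{1 + \exp\{\beta^T Z_1 + \gamma^T X_1\}}, \tag{4.11}$$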

where we note that in addition to the transformed features, the original features are also included. We would like to emphasize that X1 is observed and therefore there is no need to control its estimation error as we did for Z1. The conditions for the theory of FANS can be adapted to establish an oracle inequality for FANS2. We omit the details to avoid duplication of similar conditions and arguments.
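In code, FANS2 amounts to widening the design. The sketch below assumes that the score in (4.11) is βᵀZ1 + γᵀX1, which is our reading of "in addition to the transformed features, the original features are also included"; the helper names are hypothetical. A penalized logistic fit on the stacked design [Ẑ, X] would then estimate (β, γ) jointly.

```python
import numpy as np

def fans2_design(Z, X):
    """Augmented design [Z, X]: transformed features followed by the original ones."""
    return np.hstack([Z, X])

def fans2_prob(beta, gamma, Z, X):
    """Success probability: logistic function of beta'Z + gamma'X for each row."""
    score = Z @ beta + X @ gamma
    return 1.0 / (1.0 + np.exp(-score))
```

Only the Z-block carries density-estimation error; the X-block is observed exactly, which is why the FANS theory needs only minor adaptation.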

5 Discussion

We propose a new two-step nonlinear rule, FANS (and its variant FANS2), to tackle binary classification problems in high-dimensional settings. FANS first augments the original feature space by leveraging the flexibility of nonparametric estimators, and then achieves feature selection through regularization (penalization). It linearly combines the best univariate transforms, which essentially augment the original features for classification. Since nonparametric techniques are applied only to each dimension separately, we enjoy a flexible decision boundary without suffering from the curse of dimensionality. An array of simulated and real data examples, supported by an efficient parallelized algorithm, demonstrates the competitive performance of the new procedures.

To assess the robustness of our methods to model misspecification, we evaluate the classifiers on the following example, whose optimal decision boundary is non-additive. As in Example 4, FANS and FANS2 perform best among all competing methods (Table 5), while penGAM performs slightly worse, with a larger standard error.

Example 5

Non-additive decision boundary. In particular, for x ~ N(0p, Ip), let .

A practical question in applications is whether to use FANS or FANS2. While we do not have a universal rule, a rule of thumb may offer some guidance. The simulation examples suggest that FANS is preferable to FANS2 when the sample size is small and/or the decision boundary is highly nonlinear; otherwise, FANS2 is recommended. Admittedly, in real data applications it is often impossible to know a priori what the oracle decision boundary looks like. Data abundance can serve as a rough guideline in these scenarios.

A few extensions are worth further investigation. For example, extending FANS to multi-class classification is an interesting direction for future work. Beyond a specific procedure, FANS establishes a general two-step classification framework. For the first step, one can use other types of marginal density estimators, e.g., local polynomial density estimates. For the second step, one might rely on other classification algorithms, e.g., the support vector machine or k-nearest neighbors. Searching for the best two-step combination is an important but difficult task, and we believe the answer depends mainly on the specific application.

We can further augment the features by adding pairwise bivariate density ratios, where the bivariate densities can be approximated by bivariate kernel density estimates. Alternatively, we can restrict attention to bivariate ratios of the features selected by FANS, which involves significantly fewer features.

Data sets in many contemporary applications (e.g., SNPs) can have dimensions in the millions. In such ultra-high dimensional scenarios, directly applying FANS (or FANS2) could be problematic due to high computational complexity and instability of the estimation. It would be beneficial to have a preliminary step that reduces the dimensionality of the original data. Notable theoretical work in this direction includes Fan and Lv (2008), which introduced sure independence screening (SIS) and its sure screening property to screen out marginally unimportant variables. Subsequently, Fan et al. (2011) proposed nonparametric independence screening (NIS), an extension of SIS to additive models.

Table 5.

Median test error (%) for Example 5. Standard errors are in parentheses.

| Example | FANS | FANS2 | ROAD | PLR | penGAM | NB | FAIR | SVM |
|---|---|---|---|---|---|---|---|---|
| 5 | 6.7 (1.1) | 6.9 (1.1) | 50.2 (2.1) | 50.0 (1.4) | 8.0 (2.3) | 50.2 (2.3) | 49.7 (2.1) | 50.0 (2.0) |

Acknowledgments

Financial support from National Institutes of Health grants R01-GM072611 and R01GM100474-01 and National Science Foundation grants DMS-1206464 and DMS-1308566 is gratefully acknowledged. The authors thank the editor, the associate editor, and the referees for their constructive comments.

Appendix

The appendix contains technical proofs and Lemma 5.

Proof of Lemma 2

For any r, m > 0,

Since we assumed that all f̂j and f0,j are uniformly bounded by , ‖f̂j − f0,j‖∞ is bounded by for all j ∈ {1, ⋯, p}. This, coupled with Lemma 1 in Tong (2013), which provides a high-probability bound for ‖f̂j − f0,j‖∞, gives rise to the following inequality,

where δ2 plays the role of ε in Lemma 1 of Tong (2013) (taking the constant C = 1 for simplicity).

Finding the optimal order for r does not seem to be feasible. So we plug in and , then

where in the last inequality we have used the bandwidth .

The results are derived by taking (so , and by invoking Assumption 8. Note that we need to introduce α > 0 because the consistency conditions do not hold for α = 0; in fact, we need α > 7/15. Under this assumption, there exists a positive integer such that if ,

Therefore, for ,

Lemma 5

For any vector θ0 = (θ0,1, ⋯, θ0,p)T, let Sθ0 = {j : θ0,j ≠ 0}, and let the minimum signal level be θ̄0 = min{|θ0,j| : j ∈ Sθ0}. Let g(θj) = cj(θj − θ0,j)² + λ‖θj‖0, where cj > 0. If λ < cjθ̄0², then g(θj) achieves its unique minimum at θj = θ0,j.

Proof of Lemma 5

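For θ0,j = 0, the result is obvious, since g(θj) = cjθj² + λ‖θj‖0 > 0 = g(0) for every θj ≠ 0. For θ0,j ≠ 0, we have j ∈ Sθ0, so |θ0,j| ≥ θ̄0 and, by the condition λ < cjθ̄0², cjθ0,j² > λ.

If θj = 0, then g(0) = cjθ0,j² > λ. If θj ≠ 0, then g(θj) = cj(θj − θ0,j)² + λ ≥ λ, with equality only at θj = θ0,j.

Since g(θ0,j) = λ‖θ0,j‖0 = λ, the lemma follows.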
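Lemma 5 is a scalar hard-thresholding statement: the minimizer is θ0,j when cjθ0,j² > λ and 0 otherwise. The sketch below (hypothetical names) compares that closed form with a brute-force grid search, with 0 and θ0 appended to the grid so that both candidates are represented exactly.

```python
import numpy as np

def lemma5_objective(theta, theta0, c, lam):
    """c * (theta - theta0)^2 + lam * 1{theta != 0}."""
    return c * (theta - theta0) ** 2 + lam * (theta != 0)

def lemma5_minimizer(theta0, c, lam):
    """Closed form: keep theta0 if its squared-error cost c*theta0^2 exceeds lam, else 0."""
    if theta0 == 0:
        return 0.0
    return theta0 if c * theta0**2 > lam else 0.0

for theta0, c, lam in [(1.5, 1.0, 1.0), (0.5, 1.0, 1.0), (-2.0, 0.3, 1.0), (0.0, 2.0, 0.5)]:
    grid = np.append(np.linspace(-3.0, 3.0, 6001), [0.0, theta0])
    best = grid[np.argmin(lemma5_objective(grid, theta0, c, lam))]
    print(theta0, c, lam, best, lemma5_minimizer(theta0, c, lam))
```

The second case (θ0 = 0.5, c = λ = 1) violates λ < cθ̄0², and the minimizer indeed jumps to 0.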

Proof of Lemma 4

Denote Q0(β) = Pρ(mβ, f̂, ĝ). Then β1 = arg minβ∈ℝp Q0(β). Since ∇Q0(β1) = 0 and

By Taylor’s expansion of Q0(β) at β1,

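$$Q_0(\beta) = Q_0(\beta_1) + \tfrac{1}{2}(\beta - \beta_1)^T\,\nabla^2 Q_0(\tilde\beta)\,(\beta - \beta_1), \tag{6.12}$$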

where β̃ lies between β and β1. Let M̂ = P{Ẑ1(β1)Ẑ1(β1)T}, where Ẑ1(β1) is the subvector of Ẑ1 corresponding to the nonzero components of β1, and M = P{Z1(β1)Z1(β1)T}, where Z1(β1) is the subvector of Z1 corresponding to the nonzero components of β1. Let F = M̂ − M (a symmetric matrix). From the uniform deviation result of Lemma 3, with probability 1 − δ regarding the labeled samples, there exists a constant C4 > 0 such that |Fkl| ≤ C4bn1 uniformly for k, l = 1, ⋯, sβ1, where .

Hence, ‖F‖2 ≤ ‖F‖F ≤ C4sβ1bn1 ≤ C4an1bn1. For any eigenvalue λ(M̂), by the Bauer-Fike inequality (Bhatia, 1997), we have min1≤k≤sβ1 |λ(M̂) − λk(M)| ≤ ‖F‖2 ≤ C4an1bn1, where λk(A) denotes the k-th largest eigenvalue of A. In addition, in view of Assumption 9, there exists k ∈ Sβ1 such that

Since an1bn1 = o(1), there exists such that when , we have λmin(M̂) > 0.
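The matrix-perturbation step can be checked numerically. For symmetric M and F, Weyl's inequality gives |λk(M + F) − λk(M)| ≤ ‖F‖2 for eigenvalues sorted in the same order, which implies the Bauer-Fike statement used above; the chain ‖F‖2 ≤ ‖F‖F ≤ s·maxkl|Fkl| then converts an entrywise bound into a spectral one. The matrices below are random and purely illustrative, and the helper name is hypothetical.

```python
import numpy as np

def perturbation_check(M, F):
    """Return (shift, spec, frob, entry_bound) for symmetric M and F, where shift is the
    largest change between the sorted eigenvalues of M and of M + F."""
    s = M.shape[0]
    shift = float(np.max(np.abs(np.linalg.eigvalsh(M + F) - np.linalg.eigvalsh(M))))
    spec = float(np.linalg.norm(F, 2))        # spectral norm ||F||_2
    frob = float(np.linalg.norm(F, "fro"))    # Frobenius norm ||F||_F
    entry_bound = float(s * np.max(np.abs(F)))  # s * max_kl |F_kl|
    return shift, spec, frob, entry_bound

rng = np.random.default_rng(0)
A = rng.normal(size=(6, 6))
M = A @ A.T / 6 + np.eye(6)      # symmetric with smallest eigenvalue at least 1
B = rng.normal(size=(6, 6))
F = 0.01 * (B + B.T)             # small symmetric perturbation
print(perturbation_check(M, F))
```

When the entrywise bound is small relative to λmin(M), the smallest eigenvalue of M̂ = M + F stays positive, mirroring the conclusion λmin(M̂) > 0.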

Let be the subvector of β1 consisting of the nonzero components. Then, by (6.12) and Lemma 5, for each j ∈ Sβ1 with λ < 0.5C5ε0(1 − ε0)β̄1², we have

| (6.13) |

where βj and β1,j are the j-th components of β and β1, respectively. For ,

By (6.12), we have

Therefore, β1 is a local minimizer of Q(β). It then follows from the convexity of Q(β) that β1 is the global minimizer .

Proof of Theorem 2

For simplicity, denote by ρ(m(Z1), Y1) the loss function ρq(X1, Y1) = −Y1m(Z1) + log(1 + exp(m(Z1))). Note that

and

By the second-order Taylor expansion, we obtain that

| (6.14) |

where m* lies between mβ(Ẑ1) and . Since

| (6.15) |

and 0 < ∂²ρ(m*, Y1)/∂[mβ(Z1)]² < 1, taking expectations, we obtain that

Hence, from Corollary 1, on the event 𝒥3,

where sβ = |Sβ| is the cardinality of Sβ = {j : βj ≠ 0}. Naturally, .

In addition, by the definition of β1, Pρ(mβ1(Ẑ1), Y1) = minβ Pρ(mβ(Ẑ1), Y1). As a result, Pρ(mβ1(Ẑ1), Y1) ≤ Pρ(mβ0(Ẑ1), Y1). Thus, we have

| (6.16) |

In addition, by (6.14) and (6.15), for any β we have . Then, setting β = β1 on the left side leads to

| (6.17) |

Combining (6.16) and (6.17) leads to

| (6.18) |

As a result, we have

| (6.19) |

Combining (6.19) with Lemma 4 leads to

| (6.20) |

Recall the oracle estimator

Then by Theorem 1,

| (6.21) |

Therefore, by (6.20) and (6.21),

Footnotes

Approximate kernel density estimates can be computed faster, see e.g., Raykar et al. (2010).

The following theoretical results can be derived for a generic strictly convex function H(·) along the same lines.

Contributor Information

Jianqing Fan is Frederick L. Moore Professor of Finance, Department of Operations Research and Financial Engineering, Princeton University, Princeton, NJ, 08544 (jqfan@princeton.edu).

Yang Feng is Assistant Professor, Department of Statistics, Columbia University, New York, NY, 10027 (yangfeng@stat.columbia.edu).

Jiancheng Jiang is Associate Professor, Department of Mathematics and Statistics, University of North Carolina at Charlotte, Charlotte, NC, 28223 (jjiang1@uncc.edu).

Xin Tong is Assistant Professor, Department of Data Sciences and Operations, University of Southern California, Los Angeles, CA, 90089 (xint@marshall.usc.edu).

References

- Ackermann M, Strimmer K. A general modular framework for gene set enrichment analysis. BMC Bioinformatics. 2009;10:47. doi: 10.1186/1471-2105-10-47.

- Antoniadis A, Lambert-Lacroix S, Leblanc F. Effective dimension reduction methods for tumor classification using gene expression data. Bioinformatics. 2003;19:563–570. doi: 10.1093/bioinformatics/btg062.

- Bair E, Hastie T, Paul D, Tibshirani R. Prediction by supervised principal components. J. Amer. Statist. Assoc. 2006;101:119–137.

- Bickel PJ, Levina E. Some theory for Fisher’s linear discriminant function, ‘naive Bayes’, and some alternatives when there are many more variables than observations. Bernoulli. 2004;10:989–1010.

- Boulesteix A-L. PLS dimension reduction for classification with microarray data. Stat. Appl. Genet. Mol. Biol. 2004;3: Art. 33 (electronic). doi: 10.2202/1544-6115.1075.

- Breiman L. Random forests. Machine Learning. 2001;45:5–32.

- Bühlmann P, van de Geer S. Statistics for High-Dimensional Data. Springer; 2011.

- Cai T, Liu W. A direct estimation approach to sparse linear discriminant analysis. J. Amer. Statist. Assoc. 2011;106:1566–1577.

- Clemmensen L, Hastie T, Witten D, Ersbøll B. Sparse discriminant analysis. Technometrics. 2011;53:406–413.

- Dudoit S, Fridlyand J, Speed TP. Comparison of discrimination methods for the classification of tumors using gene expression data. J. Amer. Statist. Assoc. 2002;97:77–87.

- Fan J, Fan Y. High-dimensional classification using features annealed independence rules. Ann. Statist. 2008;36:2605–2637. doi: 10.1214/07-AOS504.

- Fan J, Feng Y, Song R. Nonparametric independence screening in sparse ultra-high dimensional additive models. J. Amer. Statist. Assoc. 2011;106:544–557. doi: 10.1198/jasa.2011.tm09779.

- Fan J, Feng Y, Tong X. A road to classification in high dimensional space: the regularized optimal affine discriminant. Journal of the Royal Statistical Society. Series B (Statistical Methodology). 2012;74:745–771. doi: 10.1111/j.1467-9868.2012.01029.x.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Amer. Statist. Assoc. 2001;96:1348–1360.

- Fan J, Lv J. Sure independence screening for ultrahigh dimensional feature space (with discussion). J. Roy. Statist. Soc., Ser. B: Statistical Methodology. 2008;70:849–911. doi: 10.1111/j.1467-9868.2008.00674.x.

- Friedman J, Hastie T, Höfling H, Tibshirani R. Pathwise coordinate optimization. Annals of Applied Statistics. 2007;1:302–332.

- Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software. 2010;33:1–22.

- Fu WJ, Carroll RJ, Wang S. Estimating misclassification error with small samples via bootstrap cross-validation. Bioinformatics. 2005;21:1979–1986. doi: 10.1093/bioinformatics/bti294.

- Gordon GJ, Jensen RV, Hsiao L-L, Gullans SR, Blumenstock JE, Ramaswamy S, Richards WG, Sugarbaker DJ, Bueno R. Translation of microarray data into clinically relevant cancer diagnostic tests using gene expression ratios in lung cancer and mesothelioma. Cancer Research. 2002;62:4963–4967.

- Guo Y, Hastie T, Tibshirani R. Regularized linear discriminant analysis and its application in microarrays. Biostatistics. 2007;8:86–100. doi: 10.1093/biostatistics/kxj035.

- Hastie T, Tibshirani R. Generalized Additive Models. Chapman & Hall/CRC; 1990.

- Hastie T, Tibshirani R, Friedman JH. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. 2nd edition. Springer-Verlag Inc; 2009.

- Huang X, Pan W. Linear regression and two-class classification with gene expression data. Bioinformatics. 2003;19:2072–2078. doi: 10.1093/bioinformatics/btg283.

- Li K-C. Sliced inverse regression for dimension reduction. J. Amer. Statist. Assoc. 1991;86:316–342. With discussion and a rejoinder by the author.

- Mai Q, Zou H, Yuan M. A direct approach to sparse discriminant analysis in ultra-high dimensions. Biometrika. 2012;99:29–42.

- Meier L, van de Geer S, Bühlmann P. High-dimensional additive modeling. Ann. Statist. 2009;37:3779–3821.

- Nguyen DV, Rocke DM. Tumor classification by partial least squares using microarray gene expression data. Bioinformatics. 2002;18:39–50. doi: 10.1093/bioinformatics/18.1.39.

- Polonik W. Measuring mass concentrations and estimating density contour clusters – an excess mass approach. Annals of Statistics. 1995;23:855–881.

- Raykar V, Duraiswami R, Zhao L. Fast computation of kernel estimators. Journal of Computational and Graphical Statistics. 2010;19:205–220.

- Shao J, Wang Y, Deng X, Wang S. Sparse linear discriminant analysis by thresholding for high dimensional data. Ann. Statist. 2011;39:1241–1265.

- Tibshirani R. Regression shrinkage and selection via the lasso. J. Roy. Statist. Soc., Ser. B. 1996;58:267–288.

- Tong X. A plug-in approach to anomaly detection. Journal of Machine Learning Research. 2013;14:3011–3040.

- Tsybakov A. Optimal aggregation of classifiers in statistical learning. Ann. Statist. 2004;32:135–166.

- van de Geer S. High-dimensional generalized linear models and the lasso. Ann. Statist. 2008;36:614–645.

- Witten D, Tibshirani R. Penalized classification using Fisher’s linear discriminant. Journal of the Royal Statistical Society Series B. 2012;73:753–772. doi: 10.1111/j.1467-9868.2011.00783.x.

- Wu MC, Zhang L, Wang Z, Christiani DC, Lin X. Sparse linear discriminant analysis for simultaneous testing for the significance of a gene set/pathway and gene selection. Bioinformatics. 2009;25:1145–1151. doi: 10.1093/bioinformatics/btp019.

- Zhang C-H. Nearly unbiased variable selection under minimax concave penalty. Ann. Statist. 2010;38:894–942.

- Zhang C-H, Huang J. The sparsity and bias of the LASSO selection in high-dimensional linear regression. Ann. Statist. 2008;36:1567–1594.

- Zhao P, Yu B. On model selection consistency of lasso. Journal of Machine Learning Research. 2006;7:2541–2563.

- Zou H. The adaptive lasso and its oracle properties. J. Amer. Statist. Assoc. 2006;101:1418–1429.

- Zou H, Hastie T, Tibshirani R. Sparse principal component analysis. J. Comput. Graph. Statist. 2006;15:265–286.