Significance

Reconsolidation-updating theory suggests that existing memory traces can be modified, or even erased, by postretrieval new learning. Compelling empirical support for this claim could have profound theoretical, clinical, and ethical implications. However, demonstrating reconsolidation-mediated memory updating in humans has proved particularly challenging. In four direct and three conceptual replication attempts of a prominent human reconsolidation study, we did not observe any reconsolidation effects when testing either procedural or declarative recall of sequence knowledge. These findings suggest that the considerable theoretical weight attributed to the original study is unwarranted and that postretrieval new learning does not reliably induce human memory updating via reconsolidation.

Keywords: reconsolidation, sequence learning, memory updating, forgetting, replication

Abstract

Reconsolidation theory proposes that retrieval can destabilize an existing memory trace, opening a time-dependent window during which that trace is amenable to modification. Support for the theory is largely drawn from nonhuman animal studies that use invasive pharmacological or electroconvulsive interventions to disrupt a putative postretrieval restabilization (“reconsolidation”) process. In human reconsolidation studies, however, it is often claimed that postretrieval new learning can be used as a means of “updating” or “rewriting” existing memory traces. This proposal warrants close scrutiny because the ability to modify information stored in the memory system has profound theoretical, clinical, and ethical implications. The present study aimed to replicate and extend a prominent 3-day motor-sequence learning study [Walker MP, Brakefield T, Hobson JA, Stickgold R (2003) Nature 425(6958):616–620] that is widely cited as a convincing demonstration of human reconsolidation. However, in four direct replication attempts (n = 64), we did not observe the critical impairment effect that has previously been taken to indicate disruption of an existing motor memory trace. In three additional conceptual replications (n = 48), we explored the broader validity of reconsolidation-updating theory by using a declarative recall task and sequences similar to phone numbers or computer passwords. Rather than inducing vulnerability to interference, memory retrieval appeared to aid the preservation of existing sequence knowledge relative to a no-retrieval control group. These findings suggest that memory retrieval followed by new learning does not reliably induce human memory updating via reconsolidation.

Reconsolidation theory proposes that retrieval of existing memory traces causes them to destabilize, triggering a transient molecular restabilization (“reconsolidation”) process during which they are open to modification (1, 2). If reconsolidation enables memory modification in humans, it could have profound theoretical (3), clinical (4), and ethical (5) implications. For example, the ability to erase “pathological” memory traces that contribute to posttraumatic stress disorder, addiction, and phobias offers the potential of permanent relief from these conditions (4).

Proponents of reconsolidation theory suggest that there is broad empirical support across a range of species, tasks, and memory types (2, 4, 6), but several authors have expressed skepticism about the extent to which existing studies rule out alternative explanations (7–9). Extending reconsolidation investigations to human participants has proved particularly challenging. Support for the theory is largely based on nonhuman animal studies in which invasive interventions, such as electroconvulsive shock or pharmacological treatment, are delivered following retrieval of an established memory trace (2, 6). By contrast, ethical constraints have led to the use of new learning as a postretrieval intervention in many investigations with human participants (e.g., refs. 10–12; for review, see ref. 13). In both cases, the observation of substantial trace-dependent performance impairments on a subsequent test is taken as evidence that the intervention has disrupted the reconsolidation of the memory trace, resulting in its modification or destruction. Although physiological interventions are intended to directly disrupt the putative molecular substrates of reconsolidation, considerable ambiguity surrounds the envisioned mechanism by which a behavioral intervention might influence these same processes. Nevertheless, there are prevalent claims about the functional role of reconsolidation as a memory “updating” mechanism (14–16) whereby existing memory traces are selectively “rewritten” by postretrieval new learning (12, 17).

It is worth noting that the reconsolidation controversy is only the latest chapter in an enduring historical debate about the locus of interference and forgetting effects (18). On the one hand, amnesia for previously recallable information has been attributed to storage deficits: the permanent physical modification of memory traces by postencoding and postretrieval interventions [e.g., “consolidation” (19); “unlearning” (20); “destructive updating” (21); “reconsolidation” (2)]. On the other hand, amnesia has been attributed to mechanisms operating during trace retrieval that temporarily modulate trace-dependent performance without necessarily influencing the underlying memory trace [e.g., “response competition” (22); “cue-dependent forgetting” (23); “state-dependent retrieval” (24); “context-dependent forgetting” (25)]. These retrieval deficit accounts can explain experimentally induced amnesia without invoking claims about physical trace disruption that cannot be directly observed. They also provide a more convincing account of the widespread finding that impairments of trace-dependent performance are often temporary and show high propensity for recovery under favorable retrieval conditions (26). This debate is particularly pertinent to the evaluation of reconsolidation studies because retrieval deficit explanations are often overlooked (7–9).

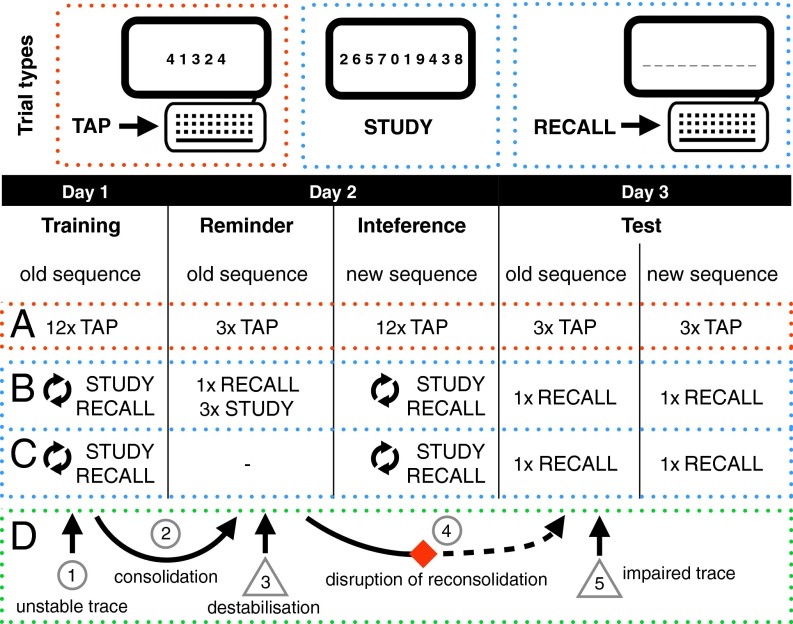

A particularly prominent finding reported by Walker et al. (ref. 27, group 7, hereafter referred to as the original study) is widely cited as a convincing demonstration of reconsolidation-mediated memory updating in humans (e.g., refs. 2, 4, 6, 13, and 14). The results are especially compelling because the experiment conformed to the canonical 3-day reconsolidation protocol (Fig. 1) typically used in nonhuman animal studies, thus meeting several key criteria necessary for a robust investigation of reconsolidation (2, 4, 6). On day 1, participants used a computer keyboard to repeatedly tap a simple sequence of on-screen digits (e.g., 41342). Speed and accuracy improvements were observed as participants learned this initial (“Old”) sequence. On day 2, participants in the Reminder group (n = 16) practiced the Old Sequence immediately before learning a New Sequence. The No-Reminder group did not practice the Old Sequence before new learning. The No-Intervention group practiced the Old Sequence but did not learn a New Sequence. On day 3, sequence performance was tested for all groups. The key finding was that the Reminder group’s Old Sequence accuracy suffered a substantial decline (∼57%) between the Reminder stage and the Test stage, although only minor decrements were observed on the speed measure (∼2%). By contrast, improvements in accuracy and speed between Training and Test stages were observed in the No-Reminder and No-Intervention groups. Therefore, it would appear that the accuracy impairment in the Reminder group was contingent on the time-dependent interaction of the reminder and intervention as demonstrated in similar nonhuman animal studies (1) and widely accepted as evidence for reconsolidation (2, 4, 6). 
Consistent with the view that the Old Sequence memory trace had been rewritten by the new learning (12, 17), the authors suggested that reconsolidation may have “functional significance,” allowing the “continued refinement and reshaping of previously learned movement skills” (ref. 27, p. 618).

Fig. 1.

Study design for Walker et al. (27) and direct replications (A, ··· red boundary), conceptual replications (··· blue boundary) with reminder condition (B) and without reminder condition (C), and hypothesized underlying mechanisms and events predicted by reconsolidation theory (D, ··· green boundary). Critical time points for calculation of the reconsolidation score (RS) are indicated by triangle symbols. See main text for details.

However, from the perspective of the aforementioned storage–retrieval debate (18), this interpretation should be viewed with caution, especially as retrieval deficit explanations were not explored. For example, it was not clear whether the effect endured beyond the 3-day study period, or showed propensity for recovery under favorable retrieval conditions (26), effects that have been observed in several investigations of reconsolidation with nonhuman animals (e.g., refs. 28–30). In the present study, we initially sought to replicate and extend the reported reconsolidation effect (ref. 27, group 7) by examining whether it could be accounted for by retrieval deficits rather than the storage deficit mechanisms outlined under reconsolidation theory (our investigation does not address other findings, unrelated to reconsolidation, reported in the same article). We conducted a replication battery (31) consisting of both “direct replications” (32) that followed the methodology of the original study as closely as possible, and “conceptual replications” (33) that manipulated key task parameters to explore the broader validity of the reconsolidation-updating theory.

To foreshadow our findings, the complete absence of a reconsolidation effect in any of our experiments precluded any further investigation of a retrieval deficit account. Instead, we made several attempts to reproduce the effect in repeated direct replications (n = 64) using our own software (experiment 1), software provided by the original researchers (experiment 2), and under conditions intended to increase task difficulty (experiments 3 and 4). In our conceptual replications (n = 48), we used “declarative” recall conditions more consistent with the wider human reconsolidation literature (e.g., refs. 10 and 11). These experiments also involved sequence learning within a 3-day reconsolidation protocol (Fig. 1), but used sequences similar in length and structure to phone numbers (experiments 5 and 7) or computer passwords (experiment 6). A No-Reminder control group (experiment 7) enabled us to ascertain whether performance impairments were contingent on retrieval-induced vulnerability as predicted by reconsolidation theory.

Results

All data (Datasets S1 and S2) and analysis scripts are publicly available on the Open Science Framework (https://osf.io/gpeq4/). All experiments and measures are reported. Unequal variances in between-subject comparisons were addressed by using Welch t tests. Statistical significance was defined at the 0.05 level.

Direct Replications (Experiments 1–4).

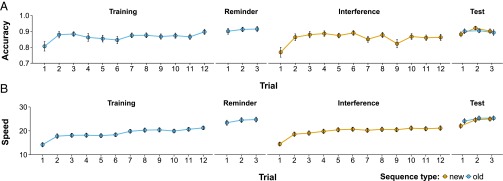

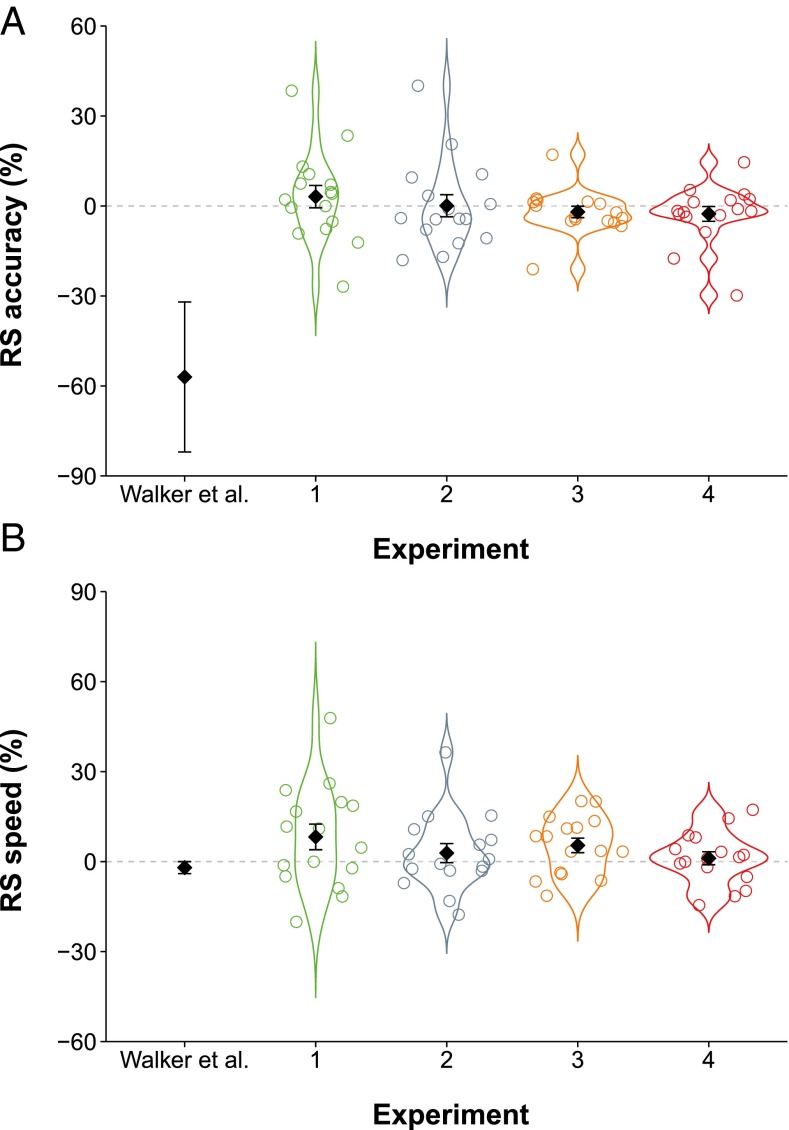

Consistent with the original study, we observed time-dependent improvements in accuracy and speed across the course of the Training and Interference stages, and overnight between stages (Fig. 2 and SI Results). The critical index of a reconsolidation effect (the percentage difference between Old Sequence performance at the Reminder stage and the Test stage; hereafter the Reconsolidation Score, or RS; Fig. 1, triangles) completely contradicted the finding of the original study (27): we observed only small fluctuations around zero for both accuracy (Fig. 3A) and speed (Fig. 3B) in all four direct replication attempts (experiments 1–4). Additional analyses ruled out minor procedural differences between the replications and the original study (variability in participant age and time of testing) as potential confounds (SI Results). Averaged across experiments, mean RS declined by <1% for accuracy, compared with ∼57% in the original study, and increased by ∼4% for speed. One-sample t tests (one-tailed) indicated that none of the RS values (Table 1) obtained in the direct replications were significantly less than zero.
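As a minimal sketch of how an RS of this kind can be computed, the Python below assumes that each stage is summarized by the mean of its trials and that higher scores indicate better performance, so that a negative RS is the impairment predicted by reconsolidation theory. The function names and example values are hypothetical; this is not the authors' analysis script (which is available on OSF).

```python
from statistics import mean


def percent_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`; negative values are declines."""
    return 100.0 * (after - before) / before


def reconsolidation_score(reminder_trials, test_trials) -> float:
    """Hypothetical RS helper: % change in Old Sequence performance from the
    Reminder stage (day 2) to the Test stage (day 3).

    Assumes each stage is summarized by the mean of its trials and that
    higher scores mean better performance, so RS < 0 indicates impairment.
    """
    return percent_change(mean(reminder_trials), mean(test_trials))


# Hypothetical speed scores (complete sequences per trial) for one participant
reminder_speed = [20, 22, 24]
test_speed = [24, 25, 23]
print(round(reconsolidation_score(reminder_speed, test_speed), 2))
```

This hypothetical participant improves by about 9%; a decline of the size reported in the original study would correspond to an RS of about −57.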

Fig. 2.

Full study timeline showing mean accuracy (A; number of errors made relative to the number of complete sequences achieved) and mean speed (B; number of complete sequences achieved) by stage (Training, Reminder, Interference, and Test), trial, and sequence type, for experiments 1–4 (pooled). A full definition of these dependent variables is available in SI Methods. Error bars show ±SEM.

Fig. 3.

Accuracy (A) and speed (B) reconsolidation scores (RSs) for Walker et al. (27; n = 16) and experiments 1–4 (N = 64). Black diamonds represent means, and error bars show SEM. Where raw data are available (experiments 1–4), individual participant scores (circles) and kernel density distributions are also depicted.

Table 1.

Direct replication RS statistics for accuracy and speed

| Exp | DV | RS | SD | t(15) | P | BF01 |
|---|---|---|---|---|---|---|
| 1 | Accuracy | 3.13 | 14.95 | 0.84 | 0.79 | 8.97 |
| 1 | Speed | 8.24 | 17.14 | 1.92 | 0.96 | 13.59 |
| 2 | Accuracy | 0.08 | 14.75 | 0.02 | 0.51 | 5.38 |
| 2 | Speed | 2.85 | 12.74 | 0.89 | 0.81 | 9.23 |
| 3 | Accuracy | −1.98 | 7.58 | −1.04 | 0.16 | 1.90 |
| 3 | Speed | 5.41 | 9.75 | 2.22 | 0.98 | 14.68 |
| 4 | Accuracy | −2.64 | 9.88 | −1.07 | 0.15 | 1.85 |
| 4 | Speed | 1.12 | 8.70 | 0.52 | 0.69 | 7.52 |

BF01, Bayes factor quantifying evidence in favor of the null hypothesis (RS = 0) relative to the reconsolidation hypothesis (RS < 0); DV, dependent variable; Exp, experiment; RS, mean reconsolidation score.

As the inherent limitations of null-hypothesis significance testing constrain the degree to which one can determine the strength of evidence in favor of the null hypothesis (34), we also conducted a Bayesian analysis that enabled us to quantify the evidence in favor of the null hypothesis H0 (RS = 0) relative to the reconsolidation hypothesis H1 (RS < 0). Specifically, we calculated directional Bayes factors (35) using an “objective” JZS prior (Cauchy distribution with scale r = 1). H1 was based on the general prediction of reconsolidation theory that trace-dependent performance should be reduced following disrupted reconsolidation of the reactivated trace (2, 4, 6). In all experiments, Bayes factors (BF01) (Table 1) were larger than 1, indicating greater evidentiary support for H0 relative to H1.
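The directional Bayes factor can be sketched as a ratio of marginal likelihoods of the observed one-sample t statistic. The stdlib-only Python below is an illustration under stated assumptions, not the authors' analysis script: it places a Cauchy(0, r) prior on the standardized effect size δ, truncates it to δ < 0 for H1, and evaluates the noncentral t likelihood (noncentrality δ√n) by numerical integration. The function names, grid sizes, and integration scheme are our own choices.

```python
import math


def t_pdf(t, df, ncp=0.0, steps=600):
    """Density at `t` of T = (Z + ncp) / sqrt(V / df), Z ~ N(0, 1), V ~ chi2(df).

    Conditional on V = v (with s = sqrt(v / df)), T has density s * phi(t*s - ncp),
    so we integrate over the chi-square density with Simpson's rule.
    Assumes df > 2 (the chi-square density is then 0 at v = 0).
    """
    upper = df + 20.0 * math.sqrt(2.0 * df)  # far into the chi-square tail
    h = upper / steps                         # `steps` must be even
    log_norm = (df / 2.0) * math.log(2.0) + math.lgamma(df / 2.0)
    total = 0.0
    for i in range(1, steps + 1):
        v = i * h
        w = 1.0 if i == steps else (4.0 if i % 2 else 2.0)  # Simpson weights
        chi2 = math.exp((df / 2.0 - 1.0) * math.log(v) - v / 2.0 - log_norm)
        s = math.sqrt(v / df)
        z = t * s - ncp
        total += w * chi2 * s * math.exp(-0.5 * z * z)
    return total * h / (3.0 * math.sqrt(2.0 * math.pi))


def directional_bf01(t, n, r=1.0, n_theta=300):
    """BF01 for H0: delta = 0 versus H1: delta < 0 in a one-sample t test,
    with a Cauchy(0, r) prior on delta truncated to negative values."""
    df = n - 1
    # Substituting delta = -r * tan(theta), theta in (0, pi/2), turns the
    # half-Cauchy prior into a uniform density 2/pi on theta.
    h = (math.pi / 2.0) / n_theta
    marginal_h1 = 0.0
    for k in range(n_theta):
        delta = -r * math.tan((k + 0.5) * h)  # midpoint rule avoids pi/2
        marginal_h1 += t_pdf(t, df, ncp=delta * math.sqrt(n))
    marginal_h1 *= (2.0 / math.pi) * h
    return t_pdf(t, df) / marginal_h1


# Experiment 1, accuracy (Table 1): t(15) = 0.84, n = 16
print(round(directional_bf01(0.84, 16), 2))
```

For experiment 1 (accuracy) this should land in the vicinity of the tabulated BF01 of 8.97; small discrepancies are expected from rounding of the reported t values and from the numerical approximation.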

A primary goal of replication attempts is to facilitate more precise estimates of effect-size magnitude (36). However, in light of the stark discrepancy between the finding observed in the original experiment (ref. 27, n = 16) and the four direct replications (n = 64), we focused on assessing the extent to which the collated evidence indicated that the phenomenon exists at all. That is to say, we aimed to establish whether the effect is qualitatively reproducible, as nonreplication would preclude attempts to derive greater quantitative precision in the estimation of the effect’s magnitude.

Directional metaanalytic Bayes factors using t values for experiments 1–4 (Table 1) indicated greater evidentiary support for the null hypothesis (RS = 0) relative to the alternative hypothesis (RS < 0) for both accuracy (BF01 = 5.743) and speed (BF01 = 36.027). This pattern remained after incorporating an estimated t value for the original study (accuracy: BF01 = 2.080; speed: BF01 = 31.317). The complete absence of predicted outcomes across these four experiments suggests that the reconsolidation effect reported in group 7 of the original study (27) is not robust.
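A metaanalytic directional Bayes factor can be sketched by assuming that all experiments share a single effect size δ, so that the likelihood under each hypothesis becomes a product over studies. The self-contained Python below is an illustration under that assumption, not the authors' code: it uses a Cauchy(0, r) prior truncated to δ < 0 and a numerically integrated noncentral t likelihood. Function names and grid sizes are hypothetical choices.

```python
import math


def t_pdf(t, df, ncp=0.0, steps=400):
    """Noncentral t density via Simpson integration over V ~ chi2(df), df > 2."""
    upper = df + 20.0 * math.sqrt(2.0 * df)
    h = upper / steps  # `steps` must be even
    log_norm = (df / 2.0) * math.log(2.0) + math.lgamma(df / 2.0)
    total = 0.0
    for i in range(1, steps + 1):
        v = i * h
        w = 1.0 if i == steps else (4.0 if i % 2 else 2.0)
        chi2 = math.exp((df / 2.0 - 1.0) * math.log(v) - v / 2.0 - log_norm)
        s = math.sqrt(v / df)
        z = t * s - ncp
        total += w * chi2 * s * math.exp(-0.5 * z * z)
    return total * h / (3.0 * math.sqrt(2.0 * math.pi))


def meta_directional_bf01(t_values, n_values, r=1.0, n_theta=200):
    """BF01 for H0: delta = 0 versus H1: one delta < 0 shared by all studies,
    with a Cauchy(0, r) prior on delta truncated to negative values."""
    studies = list(zip(t_values, n_values))
    null_likelihood = math.prod(t_pdf(t, n - 1) for t, n in studies)
    h = (math.pi / 2.0) / n_theta
    marginal_h1 = 0.0
    for k in range(n_theta):
        # delta = -r * tan(theta) makes the half-Cauchy prior uniform in theta
        delta = -r * math.tan((k + 0.5) * h)
        marginal_h1 += math.prod(
            t_pdf(t, n - 1, ncp=delta * math.sqrt(n)) for t, n in studies
        )
    marginal_h1 *= (2.0 / math.pi) * h
    return null_likelihood / marginal_h1


# Accuracy t values for experiments 1-4 (Table 1), n = 16 each
print(round(meta_directional_bf01([0.84, 0.02, -1.04, -1.07], [16] * 4), 2))
```

With the accuracy t values from Table 1 this should come out close to the reported BF01 of 5.743, again up to rounding and integration error.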

Conceptual Replications (Experiments 5–7).

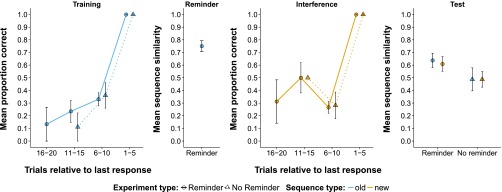

In the second component of the replication battery, we aimed to evaluate the broader validity of reconsolidation-updating theory. These experiments also involved sequence learning within a 3-day reconsolidation protocol (Fig. 1), but used sequences similar in length and structure to phone numbers (experiments 5 and 7) or computer passwords (experiment 6), and required declarative (rather than procedural) recall at the Test stage. Performance was assessed using a string-matching algorithm that provided an index of similarity between the target sequence and the sequence entered by the user (SI Methods). This afforded a sensitive measure of partial (or “chunked”) sequence knowledge. As the pattern of performance did not vary significantly between experiment 5 and experiment 6 (“Reminder experiments”), these data were pooled for display (Fig. 4) and subsequent analyses. Participants learned either a number (experiment 5) or letter (experiment 6) sequence to a criterion on day 1 (Training stage). On day 2, these sequences were recalled (Reminder stage) before new learning (Interference stage), and on day 3, recall of the sequences was evaluated (Test stage). In the No-Reminder control group (experiment 7), there was no Reminder stage, permitting a comparison of day 3 recall in the presence or absence of the day 2 reminder.
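The actual string-matching algorithm is specified in SI Methods and is not reproduced here. Purely as a hypothetical stand-in, the Python sketch below scores similarity as one minus the Levenshtein edit distance divided by the length of the longer string. Like the measure described above, it equals 1.0 for an errorless recall and gives partial credit to partially correct (e.g., chunked) entries. Function names and example strings are illustrative.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or
    substitutions needed to turn `a` into `b` (dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def sequence_similarity(target: str, entered: str) -> float:
    """Hypothetical similarity index: 1.0 for an exact match, lower as edits accumulate."""
    longest = max(len(target), len(entered))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(target, entered) / longest


target = "1463295087"  # a number sequence of the kind used, without separators
print(sequence_similarity(target, "1463925087"))  # two adjacent digits swapped
print(sequence_similarity(target, "14632"))       # only the first chunk recalled
```

A scheme like this rewards partial knowledge, which is why a similarity index is more sensitive than a simple correct/incorrect score.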

Fig. 4.

Full study timeline showing performance in experiments 5 and 6 pooled (Reminder groups; n = 32), and experiment 7 (No-Reminder group; n = 16). The Training and Interference panels show mean proportion correct on RECALLFeedback trials across five trial bins plotted relative to participants’ final response of the stage. All participants reached the performance criterion (five correct trials in a row) but required a different number of trials to do so (SI Results). The small number of participants who took more than 20 trials to reach criterion (Training: n = 2, maximum trials = 29; Interference: n = 1, maximum trials = 22) contribute to all relevant analyses. The Reminder and Test panels show mean sequence similarity between the target sequence and the user-entered sequence assessed on a single RECALLNoFeedback trial for each previously learned sequence (Old and New). Error bars show SEM.

During the Training and Interference stages, all participants successfully reached the criterion of five consecutive errorless sequence recalls (i.e., a maximum similarity score of 1.0), indicating successful learning of both the Old and New Sequences (SI Results). A one-way repeated-measures ANOVA indicated significant changes across stages (Training, Reminder, Test) for the Reminder experiments [F(2,90) = 8.68, P < 0.001]. Follow-up paired t tests (one-tailed) showed a significant decline from the Training stage (1.0) to the Reminder stage [mean (M) = 0.750, SD = 0.246; t(31) = 5.742, P < 0.001] and from the Reminder stage to the Test stage [M = 0.638, SD = 0.315; t(31) = 2.645, P = 0.006]. The No-Reminder control group (experiment 7) enabled us to ascertain whether the observed recall impairments could be causally attributed to the time-dependent interaction of memory reactivation and interference as predicted by reconsolidation theory (2, 4, 6). Despite the absence of a Reminder stage, these participants also showed a substantial performance decrement from Training (1.0) to Test (M = 0.488, SD = 0.363). A paired-samples t test (two-tailed) confirmed that this decline was significant [t(15) = 5.646, P < 0.001].

These findings imply that at least some of the recall impairment observed in the Reminder experiments was not contingent on a reminder-triggered reconsolidation process. Furthermore, Test stage recall was numerically poorer in the No-Reminder experiment (M = 0.488, SD = 0.363) than in the Reminder experiments (M = 0.638, SD = 0.315), and a one-tailed Welch two-sample t test in the direction predicted by reconsolidation theory (poorer recall after a reminder) was not significant [t(26.59) = −1.409, P = 0.915]. Rather than inducing a state of increased susceptibility to interference, memory reactivation resulted in numerically less recall impairment of the Old Sequence at the Test stage relative to no memory reactivation, an effect in the opposite direction to that predicted by reconsolidation theory.
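Because Welch's test needs only group means, SDs, and sample sizes, the between-group comparison above can be checked from the reported summary statistics. A minimal sketch using the standard Welch-Satterthwaite approximation (the function name is hypothetical):

```python
import math


def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's two-sample t statistic and Welch-Satterthwaite df from summary stats."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2  # squared standard errors of each mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Old Sequence Test-stage similarity: No-Reminder (exp. 7) vs. Reminder (exps. 5 and 6)
t, df = welch_t(0.488, 0.363, 16, 0.638, 0.315, 32)
print(f"t({df:.2f}) = {t:.3f}")
```

This gives roughly t(26.6) = −1.409, matching the reported statistic up to rounding of the summary values; the one-tailed P value then follows from the t distribution with these fractional degrees of freedom.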

SI Text

SI Results

Direct Replications (Experiments 1–4).

Old Sequence Training.

To establish whether there were performance gains during the Old Sequence Training stage, we used two separate 4 × 12 mixed-factorial ANOVAs for accuracy and speed with one between-subjects variable (experiment: 1–4) and one within-subjects variable (trial: 1–12).
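Linear and quadratic trends over 12 trials are conventionally tested with orthogonal polynomial contrasts. As an illustration of that standard technique (not a claim about the authors' scripts), the sketch below derives integer contrast weights for 12 equally spaced trials; weighting a participant's trial-by-trial scores with them yields the per-participant trend scores on which tests of this kind are based.

```python
import math
from fractions import Fraction


def polynomial_contrasts(k):
    """Integer linear and quadratic contrast weights for k equally spaced levels.

    Uses math.lcm and multi-argument math.gcd (Python 3.9+).
    """
    x = [Fraction(i) for i in range(1, k + 1)]
    mean_x = sum(x) / k
    linear = [xi - mean_x for xi in x]  # deviations from the mean level
    squares = [c * c for c in linear]
    mean_sq = sum(squares) / k
    # Subtracting the mean makes the quadratic weights sum to zero; they are
    # orthogonal to the linear weights because the levels are symmetric.
    quadratic = [s - mean_sq for s in squares]

    def as_integers(weights):
        scale = math.lcm(*(w.denominator for w in weights))
        ints = [int(w * scale) for w in weights]
        divisor = math.gcd(*ints)
        return [v // divisor for v in ints]

    return as_integers(linear), as_integers(quadratic)


linear, quadratic = polynomial_contrasts(12)
print(linear)
print(quadratic)
```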

Accuracy (Fig. 2A, Training) increased numerically between trial 1 (M = 0.806, SD = 0.238) and trial 12 (M = 0.879, SD = 0.132), although neither the linear [F(1,60) = 2.96, P = 0.091] nor quadratic [F(1,60) = 0.105, P = 0.747] trend reached significance. There was no significant interaction between experiment and either linear [F(3,60) = 0.580, P = 0.630] or quadratic [F(3,60) = 1.203, P = 0.316] trend of trial.

Speed (Fig. 2B, Training) increased numerically between trial 1 (M = 14.200, SD = 6.40) and trial 12 (M = 21.238, SD = 6.356). Linear [F(1,60) = 95.398, P < 0.001] and quadratic [F(1,60) = 12.908, P = 0.001] trends both reached statistical significance. There was no significant interaction between experiment and linear trend of trial [F(3,60) = 1.371, P = 0.260], but the interaction between experiment and quadratic trend of trial reached significance [F(3,60) = 3.209, P = 0.029].

New Sequence Interference.

The same ANOVA design was used to assess changes in New Sequence performance across the Interference stage.

Accuracy (Fig. 2A, Interference) increased numerically between trial 1 (M = 0.769, SD = 0.246) and trial 12 (M = 0.862, SD = 0.155). Across trials, there was a significant quadratic trend [F(1,60) = 7.651, P = 0.008]. The linear trend was not significant [F(1,60) = 1.087, P = 0.301]. There was no significant interaction between experiment and quadratic [F(3,60) = 1.254, P = 0.274] or linear [F(3,60) = 1.327, P = 0.274] trend of trial.

Speed (Fig. 2B, Interference) increased numerically between trial 1 (M = 14.466, SD = 6.593) and trial 12 (M = 21.128, SD = 7.588). Linear [F(1,60) = 83.075, P < 0.001] and quadratic [F(1,60) = 58.072, P < 0.001] trends both reached significance. There was no interaction between experiment and linear [F(3,60) = 1.137, P = 0.342] or quadratic [F(3,60) = 1.362, P = 0.263] trend.

Overnight performance changes.

In the original study (27), the following comparisons were made to examine overnight changes in sequence performance:

• Overnight Score Old (OSO) was the percentage change between Old Sequence Training (trials 10–12 only) and Old Sequence Reminder (all three trials).

• Overnight Score New (OSN) was the percentage change between New Sequence Interference (trials 10–12 only) and New Sequence Test (all three trials).

Only the final three trials of the Training and Interference stages have been used because calculating an average across all 12 trials could attenuate the true time-dependent performance changes achieved by the end of these stages (after ref. 27). To establish whether the overnight scores varied between experiments, we used a series of one-way ANOVAs with experiment (1–4) as a between-subjects factor and overnight score (separately for old/new and separately for accuracy/speed) as a dependent variable.
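The two overnight scores can be sketched as percentage changes in the same way. This minimal illustration takes the mean of trials 10–12 of a 12-trial learning stage as the baseline and the mean of all three next-day trials as the comparison, which is our reading of the definitions above. It assumes that higher scores indicate better performance; the function name and example data are hypothetical.

```python
from statistics import mean


def percent_change(before, after):
    """Percentage change from `before` to `after`; positive values are gains."""
    return 100.0 * (after - before) / before


def overnight_score(stage_trials, next_day_trials):
    """Hypothetical OSO/OSN helper: % change from the final three trials of a
    12-trial learning stage to the mean of the next-day trials."""
    if len(stage_trials) != 12:
        raise ValueError("expected 12 learning-stage trials")
    end_of_stage = mean(stage_trials[9:12])  # trials 10-12 (1-based)
    return percent_change(end_of_stage, mean(next_day_trials))


# OSO example: Old Sequence Training speed (12 trials) -> day 2 Reminder (3 trials)
training_speed = list(range(10, 22))
reminder_speed = [22, 23, 24]
print(overnight_score(training_speed, reminder_speed))
```

Using only the final three trials keeps slow early trials from diluting the end-of-stage baseline, which is the rationale given above.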

For accuracy, there was no significant main effect of experiment for OSO [F(3,60) = 1.287, P = 0.287] or OSN [F(3,60) = 0.986, P = 0.406]. However, for speed, there was a significant main effect of experiment for both OSO [F(3,60) = 3.426, P = 0.023] and OSN [F(3,60) = 5.126, P = 0.003]. Consequently, we report follow-up tests for the data pooled across experiments (accuracy) or for each experiment individually (speed). One-sample t tests (one-tailed) were used to assess whether any performance changes between time points were significantly greater than zero.

Consistent with the original study, we observed significant overnight accuracy improvements for OSO [OSO = 4.649, SD = 15.089, t(63) = 2.465, P = 0.008] and OSN [OSN = 5.638, SD = 15.081, t(63) = 2.991, P = 0.002] when data were pooled across experiments. In most cases, improvements in speed were larger and more in keeping with the original study for both OSN and OSO (Table S1).

Table S1.

Overnight scores for direct replications

| Experiment | Sequence | OS | SD | t(15) | P |
|---|---|---|---|---|---|
| 1 | Old | 5.93 | 20.81 | 1.14 | 0.14 |
| 1 | New | 2.89 | 12.86 | 0.90 | 0.19 |
| 2 | Old | 16.72 | 14.16 | 4.72 | <0.001 |
| 2 | New | 15.82 | 15.66 | 4.04 | <0.001 |
| 3 | Old | 19.72 | 16.87 | 4.68 | <0.001 |
| 3 | New | 13.63 | 9.25 | 5.89 | <0.001 |
| 4 | Old | 26.00 | 19.90 | 5.23 | <0.001 |
| 4 | New | 21.71 | 16.60 | 5.23 | <0.001 |

Speed dependent variable only. OS, overnight score.

Impact of counterbalancing.

Counterbalanced conditions were sequence order (X or Y; i.e., whether the Old Sequence was designated as 4–1–3–2–4 or 2–3–1–4–2, with the remaining sequence being assigned as the New Sequence) and test order (A or B; i.e., whether the Old or New Sequence was tested first on the day 3 Test). Conditions were balanced in all experiments (n = 8 per condition), except in experiment 2, where researcher error led to unbalanced conditions (test order: A = 12, B = 4; sequence order: X = 7, Y = 9). To establish whether the counterbalancing procedures influenced the RS, we used a series of one-way ANOVAs with either test order (A, B) or sequence order (X, Y) as the between-subjects factor and RS (separately for accuracy and speed) as the dependent variable.

There was no significant main effect of sequence order on RS accuracy [F(1,62) = 0.004, P = 0.948] or RS speed [F(1,62) = 0.224, P = 0.638]. There was also no significant main effect of test order on RS accuracy [F(1,62) = 0.655, P = 0.421]; however, test order did influence RS speed significantly [F(1,62) = 5.320, P = 0.024]. Follow-up one-sample t tests (two-tailed) indicated that RS speed was significantly higher than zero for test order A [RS = 7.482, SD = 13.479, t(35) = 3.331, P = 0.002] and did not differ significantly from zero for test order B [RS = 0.448, SD = 10.044, t(27) = 0.236, P = 0.815], confirming that there was no reconsolidation effect in either condition.

Impact of training accuracy.

To address concerns about ceiling effects in the accuracy data, we conducted a median split on the pooled data across all experiments and repeated the RS analysis for accuracy. The pooled data were split based on the median accuracy score achieved on the final three trials of Old Sequence Training (0.894). In the above-median group, there were minor improvements in accuracy [RS = 1.98, t(31) = 0.825, P = 0.792], and the Bayes factor (BF01 = 12.47) indicated greater evidentiary support for the null hypothesis (RS = 0) against the alternative hypothesis (RS < 0). In the below-median group, there were minor nonsignificant decrements in accuracy [RS = −2.689, t(31) = −1.496, P = 0.072], and the Bayes factor (BF01 = 1.374) indicated that the data are inconclusive when comparing the null hypothesis (RS = 0) against the alternative hypothesis (RS < 0). Therefore, even when below-average performers were examined in isolation, reconsolidation effects were not observed.

Extracting and estimating statistics from the original study.

As neither raw nor summary-level data for the original study were available, we used plot-digitizing software to extract RS values for accuracy (M = −57, SEM = 25) and speed (M = −2, SEM = 2) from the relevant graphs published in the original article (figure 4C in ref. 27). Values were rounded to the nearest whole number. As t values were not reported in the original article, we used these means and SEMs to estimate them (t = M/SEM) for use in metaanalysis.
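The estimation step amounts to simple arithmetic: a one-sample t statistic against zero is the mean divided by its SEM, and the standard one-sample conversion to Cohen's d divides t by the square root of the sample size. A minimal sketch (function names hypothetical; n = 16 is the original Reminder group size):

```python
import math


def t_from_mean_sem(mean, sem):
    """One-sample t statistic against zero: the mean divided by its SEM."""
    return mean / sem


def cohens_d_from_t(t, n):
    """One-sample Cohen's d recovered from t: d = t / sqrt(n)."""
    return t / math.sqrt(n)


N_ORIGINAL = 16  # Reminder group size in the original study
for label, m, sem in [("accuracy", -57, 25), ("speed", -2, 2)]:
    t = t_from_mean_sem(m, sem)
    print(label, round(t, 2), round(cohens_d_from_t(t, N_ORIGINAL), 2))
```

This yields t = −2.28 and d = −0.57 for accuracy, and t = −1 and d = −0.25 for speed, consistent with the effect sizes reported under Statistical power.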

Statistical power.

Cohen’s d effect sizes for RSs in the original study (27) were calculated for accuracy (d = −0.57) and speed (d = −0.25). This was achieved using the estimated t values (Extracting and estimating statistics from the original study) in the following equation (48):

$$d = \frac{t}{\sqrt{n}},$$

where t is the one-sample t statistic and n is the sample size (n = 16 in the original study).

Given these effect sizes, the use of directional one-sample t tests, and an α level of 0.05, the power for any one of our experiments taken individually (n = 16) was 0.70. The combined power of all of the direct replications (n = 64) was 0.99. As the direct replications overall had high statistical power to detect the effect size reported in the original study, it seems unlikely that our findings reflect a false-negative or “type II error.” In addition, the metaanalytic Bayesian analysis (main text), which accounts for sample size, indicated greater evidentiary support for the null hypothesis relative to the reconsolidation hypothesis.
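These power values can be approximated without specialized software. For a one-tailed one-sample t test, power is the probability that a noncentral t variable with n − 1 degrees of freedom and noncentrality |d|√n exceeds the central critical value. The stdlib-only sketch below evaluates both tail probabilities by numerical integration over the chi-square distribution. It is an illustration, not the software the authors used, and it plugs in the accuracy effect size (|d| = 0.57), which we assume is the basis of the reported values.

```python
import math


def t_sf(c, df, ncp=0.0, steps=800):
    """P(T > c) for T = (Z + ncp) / sqrt(V / df), Z ~ N(0, 1), V ~ chi2(df).

    Given V = v, T > c exactly when Z > c * sqrt(v / df) - ncp, so the tail
    probability is a one-dimensional integral over the chi-square density
    (Simpson's rule; assumes df > 2 so the density is 0 at v = 0).
    """
    upper = df + 20.0 * math.sqrt(2.0 * df)
    h = upper / steps  # `steps` must be even
    log_norm = (df / 2.0) * math.log(2.0) + math.lgamma(df / 2.0)
    total = 0.0
    for i in range(1, steps + 1):
        v = i * h
        w = 1.0 if i == steps else (4.0 if i % 2 else 2.0)
        chi2 = math.exp((df / 2.0 - 1.0) * math.log(v) - v / 2.0 - log_norm)
        z = c * math.sqrt(v / df) - ncp
        total += w * chi2 * 0.5 * math.erfc(z / math.sqrt(2.0))  # normal upper tail
    return total * h / 3.0


def t_crit(alpha, df):
    """Upper-tail critical value of the central t distribution, by bisection."""
    lo, hi = 0.0, 20.0
    for _ in range(40):
        mid = (lo + hi) / 2.0
        if t_sf(mid, df) > alpha:
            lo = mid  # tail still too heavy: critical value lies further out
        else:
            hi = mid
    return (lo + hi) / 2.0


def one_sample_power(d, n, alpha=0.05):
    """Power of a one-tailed one-sample t test to detect an effect of size |d|."""
    df = n - 1
    return t_sf(t_crit(alpha, df), df, ncp=abs(d) * math.sqrt(n))


print(round(one_sample_power(0.57, 16), 3), round(one_sample_power(0.57, 64), 3))
```

The first value should be close to 0.70 and the second above 0.99, in line with the figures reported above.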

Influence of variability in session times and participant age range.

Two minor procedural differences between the original study and the direct replications (participant age range and time of testing) were evaluated to see whether they influenced the findings. Time of testing in the direct replications (rounded to the nearest hour: median = 15.00 h, SD = 1.833) differed only slightly from Walker et al. (13.00 h) and was not significantly correlated with RSs (r = 0.12, P = 0.355). Therefore, time of testing cannot account for the absence of a reconsolidation effect.

Participant age in the direct replications (median, 22 y; range, 18–54 y) covered a larger range than in Walker et al. (median unknown; range, 18–27 y). A reanalysis of RSs for only those participants who fell within the 18–27 age bracket (n = 48) showed that there was still no substantial impairment [mean = −2.05, SD = 10.51; t(47) = −1.35, P = 0.092]. Therefore, participant age cannot account for the absence of a reconsolidation effect.

Conceptual Replications (Experiments 5–7).

Old Sequence Training.

All participants were trained until they reached a performance criterion of five consecutive errorless recalls of the Old Sequence, and recall failure resulted in additional study trials. This ensured that, regardless of idiosyncratic learning strategies, all participants robustly encoded both the Old and New sequences. There is some evidence from nonhuman animal studies to suggest that stronger memories are less amenable to reconsolidation than weaker ones (49). However, the specific parameters under which this potential moderator might operate are not well defined (2, 4). Furthermore, this seems unlikely to be an influential factor in this case, as participants demonstrated below-ceiling performance at the Reminder stage (M = 0.750, SD = 0.246; see Old Sequence performance between stages below). More trials were required to reach criterion in experiment 6 (Letters; M = 13.00, SD = 6.623) than in experiment 5 (Numbers; M = 8.688, SD = 2.676) and experiment 7 (Numbers No Reminder; M = 7.813, SD = 3.124). A one-way ANOVA indicated that the number of trials required to reach criterion varied significantly between experiments [F(2,45) = 6.089, P = 0.005]. Follow-up Welch two-sample t tests indicated that experiments 5 and 7 did not differ significantly [t(29.31) = 0.851, P = 0.402]. However, participants required significantly more trials to reach criterion in experiment 6 compared with experiment 5 [t(19.77) = −2.42, P = 0.026]. No participants failed to reach the performance criterion.
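The learn-to-criterion rule can be expressed as a running count of consecutive correct recalls. The sketch below is hypothetical in its details. It assumes that a recall counts as correct only if it exactly matches the target (similarity 1.0) and that the reported trial counts include the five criterion trials themselves. It also does not model the study trials inserted after failed recalls.

```python
def trials_to_criterion(outcomes, run_length=5):
    """Return the 1-based trial on which `run_length` consecutive correct
    recalls were first completed, or None if the criterion was never met.

    `outcomes` is a sequence of booleans, one per recall trial.
    """
    streak = 0
    for trial, correct in enumerate(outcomes, start=1):
        streak = streak + 1 if correct else 0  # any error resets the run
        if streak == run_length:
            return trial
    return None


# Hypothetical participant: an error on trial 4 resets the run of correct recalls
history = [False, True, True, False, True, True, True, True, True]
print(trials_to_criterion(history))
```

Under these assumptions, five trials is the minimum possible count, and any error restarts the run.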

New Sequence Interference.

The same learn-to-criterion procedure was used in the Interference stage as in the Training stage. Although more trials were required to reach criterion in experiment 6 (Letters; M = 10.375, SD = 4.938) than in experiment 5 (Numbers; M = 8.563, SD = 4.397) and experiment 7 (Numbers No Reminder; M = 7.125, SD = 2.363), a one-way ANOVA indicated that these differences were not statistically significant [F(2,45) = 2.583, P = 0.087]. No participants failed to reach the performance criterion.

Old Sequence performance between stages.

Following the Training stage baseline (1.0), there were performance decrements in Old Sequence performance at the subsequent Reminder (M = 0.756, SD = 0.253) and Test stages (M = 0.606, SD = 0.315) in experiment 5 (Numbers). A similar pattern was observed in experiment 6 (Letters) with performance declining at the Reminder (M = 0.744, SD = 0.248) and Test stages (M = 0.669, SD = 0.322).

A 2 × 3 mixed-factorial ANOVA (experiment: 5, 6; stage: Training, Reminder, Test) showed that this performance decline across stages was statistically significant [F(2,87) = 8.629, P < 0.001]. There was no main effect of experiment [F(1,87) = 1.122, P = 0.292] and no interaction between experiment and stage [F(2,87) = 0.672, P = 0.513]. As the overall pattern did not vary between experiments 5 and 6 (“Reminder experiments”), we pooled their data for subsequent analysis (see main text).

New Sequence performance between stages.

New Sequence performance declined from the Interference stage baseline (1.0) to the subsequent Test stage in experiment 5 (M = 0.625, SD = 0.317), experiment 6 (M = 0.594, SD = 0.342), and experiment 7 (M = 0.488, SD = 0.245). A 3 × 2 mixed-factorial ANOVA (experiment: 5, 6, 7; stage: Interference, Test) indicated that there was a main effect of stage [F(1,88) = 15.425, P < 0.001] and no main effect of experiment [F(2,88) = 0.548, P = 0.580] or interaction between experiment and stage [F(2,88) = 0.548, P = 0.580].

Impact of counterbalancing.

We counterbalanced sequence order, i.e., whether the Old Sequence (X) was designated as 1–4–6–3–2–9–5–0–8–7/2–6–5–7–0–1–9–4–3–8 (experiments 5 and 7) or l–p–k–s–f–q–j–d–x–h/j–f–l–d–q–x–k–h–p–s (experiment 6), with the remaining sequence being assigned as the New Sequence (Y). We also counterbalanced test order (A or B; i.e., whether the Old or New Sequence was tested first on the Day 3 Test). Conditions were balanced in all experiments (n = 8 per condition). To establish whether the counterbalancing procedures influenced sequence similarity scores at the Test stage, we conducted two separate two-way ANOVAs with experiment (5, 6, 7) and either test order (A, B) or sequence order (X, Y) as between-subjects factors. There was no significant main effect of test order [F(1,42) = 0.466, P = 0.498] or interaction between test order and experiment [F(2,42) = 0.723, P = 0.491], and no main effect of sequence order [F(1,42) = 0.714, P = 0.403] or interaction between sequence order and experiment [F(2,42) = 0.162, P = 0.851].

SI Methods

Direct Replications.

Procedural variations.

Each experiment had minor variations from the general procedure outlined in the main text. Unlike the other experiments, in experiment 1, the sequence remained on screen during rest phases, there was no countdown timer, and the background color was invariant throughout. Key presses were acknowledged with the transient display of white dots arranged in a row that corresponded to the horizontal order of the physical keys. Experiments 1, 3, and 4 were executed in Python code developed by T.E.H., whereas experiment 2 was run from an executable file provided by the original research team.

In the original study (27) and experiments 1 and 2, participants were instructed to tap the sequence, “as quickly and accurately as possible.” In experiments 3 and 4, this instruction was modified to read “as quickly as you can. Try not to make errors, but overall you should emphasize speed over accuracy.” The phrase “tap as quickly as you can!” was also displayed continuously on screen during test phases in experiments 3 and 4. In experiment 4, the keyboard was positioned in an adapted box file such that participants were unable to view their hand during task performance. Tactile markers were placed on the response keys to prevent participants’ hands from shifting onto incorrect keys. Participants were allowed to lift the lid of the box file during rest phases so they could stretch their fingers and ensure the hand was correctly positioned before closing the lid and starting the next trial.

Operationalizing accuracy and speed.

The precise operationalization of the dependent measures reported in the original study (27) was ambiguous: Performance measures were the number of complete sequences achieved (“speed”), and the number of errors made relative to the number of complete sequences achieved (“accuracy”). The senior author of the original research team confirmed the following definitions:

• speed was the number of complete sequences achieved during a 30-s trial plus any partial sequence the participant was completing when the trial was terminated. For example, a participant who performed 15 complete sequences, and had just entered two correct items when the trial terminated, would receive a speed score of 15.4 (15 + 2/5);

• accuracy was 1 − (errors/speed), where a single error was defined as any string of up to five contiguous incorrect items that did not match the target sequence. For example, three contiguous incorrect items would constitute a single error, but six contiguous incorrect items would constitute two errors.

Note that, under this scheme, a participant can technically incur a negative accuracy score on an individual trial if the number of errors exceeds speed. Because accuracy scores should range only from 0 to 1, such values could substantially bias between-stage comparisons. Across the four experiments reported here, five trials with negative accuracy scores were identified (<0.3% of total trials) and converted to zero. This did not affect the qualitative pattern of the results.
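The scoring rules above can be expressed compactly. The sketch below assumes that per-trial tallies (complete sequences, correctly entered items in the unfinished final sequence, and the lengths of contiguous runs of incorrect items) have already been extracted from the key-press log; how the original software segmented raw key presses into these tallies is not specified here. The function and constant names are hypothetical, and treating a zero speed score as zero accuracy is an assumption, since the definitions above leave that case undefined.

```python
import math
from typing import Iterable

SEQUENCE_LENGTH = 5  # items per sequence in the direct replications
MAX_ERROR_RUN = 5    # a run of up to this many contiguous incorrect items is one error


def speed_score(complete_sequences: int, partial_correct_items: int) -> float:
    """Complete sequences plus the fraction of the unfinished sequence entered correctly."""
    return complete_sequences + partial_correct_items / SEQUENCE_LENGTH


def count_errors(incorrect_run_lengths: Iterable[int]) -> int:
    """Each contiguous run of incorrect items counts as ceil(run / 5) errors."""
    return sum(math.ceil(run / MAX_ERROR_RUN) for run in incorrect_run_lengths if run > 0)


def accuracy_score(complete_sequences: int, partial_correct_items: int,
                   incorrect_run_lengths: Iterable[int]) -> float:
    """1 - errors/speed, with negative values converted to zero."""
    speed = speed_score(complete_sequences, partial_correct_items)
    if speed == 0:
        return 0.0  # assumption: accuracy is undefined when speed is 0; treated as zero here
    return max(0.0, 1 - count_errors(incorrect_run_lengths) / speed)
```

For the worked example above, `speed_score(15, 2)` gives 15 + 2/5 = 15.4, and runs of three and six incorrect items count as one and two errors, respectively.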

Conceptual Replications.

Operationalizing sequence similarity.

The similarity between the target (Old/New) and user-entered sequences was measured using a normalized ratio of the Damerau–Levenshtein edit distance: a metric that indicates the minimum number of “fundamental” operations (substitution, deletion, insertion, or transposition of adjacent characters) required to convert one character string into another, and thus reflects the “similarity” of the two sequences (50).
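As an illustration only, the following Python sketch computes the unrestricted Damerau–Levenshtein distance with the Lowrance–Wagner dynamic program and converts it to a similarity score. It is not the R stringdist package cited above, and the normalization used here, 1 − distance / max(length of target, length of entry), is an assumption that may differ from the exact ratio used in the study. Both function names are hypothetical. Under this normalization a perfect recall scores 1.0, matching the baseline used in the SI Results.

```python
def damerau_levenshtein(a: str, b: str) -> int:
    """Unrestricted Damerau-Levenshtein distance (Lowrance-Wagner algorithm)."""
    inf = len(a) + len(b)  # larger than any possible distance
    last_row = {}  # character -> last row in `a` where it occurred
    # Table is offset by one: d[i + 1][j + 1] is the distance between a[:i] and b[:j]
    d = [[0] * (len(b) + 2) for _ in range(len(a) + 2)]
    d[0][0] = inf
    for i in range(len(a) + 1):
        d[i + 1][0] = inf
        d[i + 1][1] = i
    for j in range(len(b) + 1):
        d[0][j + 1] = inf
        d[1][j + 1] = j
    for i in range(1, len(a) + 1):
        last_match_col = 0  # last column in this row where a[i-1] matched
        for j in range(1, len(b) + 1):
            i1 = last_row.get(b[j - 1], 0)
            j1 = last_match_col
            cost = 0 if a[i - 1] == b[j - 1] else 1
            if cost == 0:
                last_match_col = j
            d[i + 1][j + 1] = min(
                d[i][j] + cost,       # substitution (or match)
                d[i + 1][j] + 1,      # insertion
                d[i][j + 1] + 1,      # deletion
                d[i1][j1] + (i - i1 - 1) + 1 + (j - j1 - 1),  # transposition
            )
        last_row[a[i - 1]] = i
    return d[len(a) + 1][len(b) + 1]


def sequence_similarity(target: str, entered: str) -> float:
    """Assumed normalization: 1 - distance / length of the longer string."""
    longest = max(len(target), len(entered))
    if longest == 0:
        return 1.0
    return 1 - damerau_levenshtein(target, entered) / longest
```

For example, entering the 10-digit Old Sequence with its first two digits swapped is a single transposition, which scores 0.9 under this normalization.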

Sequence construction.

Sequences were generated with relatively unique grammars but used the same items to ensure a degree of old–new competition. To do this, we first defined a “base set” of 10 items, which were either randomly selected consonants (l p k s f q j d x h; experiment 6) or single digits (0–9; experiments 5 and 7). The first sequence was generated by randomly shuffling the order of these items. The second sequence was generated by repeatedly shuffling the first sequence until (i) all relative item positions (i.e., pairwise forward and backward transitions) were unique, and (ii) all absolute item positions were unique. The same two sequences were used for all participants.
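The construction procedure can be sketched as rejection sampling. The code below interprets constraint (i) as "no adjacent pair of items, in either direction, is shared between the two sequences" and constraint (ii) as "no item occupies the same position in both sequences"; this reading is an assumption, although both published sequence pairs satisfy it. The function names and the seeded generator are hypothetical, and a given seed will not reproduce the specific sequences used in the study.

```python
import random
from typing import FrozenSet, List, Sequence, Set, Tuple


def transitions(seq: Sequence[str]) -> Set[FrozenSet[str]]:
    """Adjacent item pairs, unordered so forward and backward transitions match."""
    return {frozenset(pair) for pair in zip(seq, seq[1:])}


def satisfies_constraints(first: Sequence[str], second: Sequence[str]) -> bool:
    """(i) no shared pairwise transition and (ii) no item in the same absolute position."""
    no_shared_transitions = transitions(first).isdisjoint(transitions(second))
    no_shared_positions = all(x != y for x, y in zip(first, second))
    return no_shared_transitions and no_shared_positions


def make_sequence_pair(base_set: Sequence[str], rng: random.Random,
                       max_attempts: int = 100_000) -> Tuple[List[str], List[str]]:
    """Shuffle the base set once, then reshuffle it until both constraints hold."""
    first = list(base_set)
    rng.shuffle(first)  # first sequence: a random ordering of the base set
    candidate = list(first)
    for _ in range(max_attempts):
        rng.shuffle(candidate)  # rejection sampling over random permutations
        if satisfies_constraints(first, candidate):
            return first, list(candidate)
    raise RuntimeError("no valid second sequence found; increase max_attempts")
```

A call such as `make_sequence_pair(list("0123456789"), random.Random(0))` returns two orderings of the digits that share no position and no adjacent transition.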

Discussion

Reconsolidation-updating theory suggests that retrieval of an existing trace in the human memory system can render that trace vulnerable to modification from postretrieval new learning. In the present investigation, we attempted to replicate and extend a critical finding (27) widely considered to provide a compelling demonstration of reconsolidation-mediated memory updating in humans. In four direct-replication attempts involving procedural recall and three conceptual-replication attempts involving declarative recall, we did not observe the critical impairment effects reported in the original study and predicted by reconsolidation theory (2, 4, 6).

The findings of our direct replications are consistent with several recent investigations that used variations of the original paradigm (27), with either transcranial magnetic stimulation (37, 38) or new learning (39) interventions. Although the findings of these studies were interpreted as favorable evidence for reconsolidation theory, the expected Reminder–Test performance decrement was actually absent in most conditions, including close replications of the original study. When trace-dependent performance decrements were observed at short reminder lengths, they were modest, and rapidly recovered within the test session (39). It is difficult to reconcile the absence of performance impairments and the presence of recovery effects with the prediction of permanent trace modification (2). Thus, the outcomes of all three studies (37–39) are inconsistent with both the original study (27) and reconsolidation theory in general (2, 4, 6).

The findings of our conceptual replications cast further doubt on the veracity of claims that memory updating can be mediated by reconsolidation processes (12, 14–17). These experiments adhered to the canonical 3-day reconsolidation protocol and aimed to increase external validity through the use of sequences similar in structure to phone numbers or computer passwords. In addition, consistent with several studies in the human reconsolidation literature (e.g., refs. 10–12), participants completed a declarative recall task. Under these conditions, performance impairments occurred in both the presence and absence of memory retrieval. Rather than triggering a state of heightened trace vulnerability, retrieval actually led to numerically higher performance than in the no-retrieval control group. This finding is consistent with previous investigations of reconsolidation that found retrieval practice can afford some protection against interference (e.g., ref. 40), and with a considerable body of evidence suggesting that retrieval aids rather than impairs subsequent recall (41).

Two notable aspects of human reconsolidation research are not directly addressed by the present investigation. First, there is evidence that postretrieval pharmacological interventions can attenuate emotional responding in a fear-conditioning paradigm (42). However, the reliability of these effects has also recently come under scrutiny (43). Similarly, initially promising findings based on postretrieval extinction to disrupt reconsolidation in a fear-conditioning paradigm (17) have proved elusive in subsequent replication attempts (44, 45). It is striking that declarative recall of the conditioned stimulus–unconditioned stimulus contingency remains intact across these fear-conditioning studies, whether or not effects on emotional responding are observed.

Additionally, it has been suggested that “prediction error” is a necessary reconsolidation trigger (11, 46). If this were the case, it could explain the absence of reconsolidation effects in the present replications. However, it would then be difficult to explain why a reconsolidation effect was observed in the original study, because the reminder protocol required participants to practice the Old Sequence in its entirety and thus presumably did not invoke prediction error. To justify an auxiliary theoretical assumption about prediction error, one would need to reconcile a considerable amount of contradictory evidence. For example, the aforementioned prediction-error studies (11, 46) observed no impairment of declarative recall relative to controls, only attenuation of emotional responding (46) or ambiguous null effects on an indirect measure of trace integrity (retrieval-induced forgetting; ref. 11). Furthermore, reconsolidation-like effects have been reported when the reminder involves reinforced trials (and thus no prediction error) in both nonhuman animals (47) and humans (12), and impairment effects are absent even in studies where prediction error would be expected (44, 45). At present, therefore, it is unclear whether prediction error is either necessary or sufficient for reconsolidation effects to emerge.

Taken together, our findings cast doubt on the efficacy of new-learning interventions as a means for disrupting the reconsolidation of procedural or declarative memory in humans. The absence of reconsolidation effects in all four direct replications suggests that the considerable theoretical weight attributed to the original study (2, 4, 6, 13, 14) is unwarranted. Furthermore, the absence of retrieval-contingent impairment in the conceptual replications is inconsistent with the purported functional role of reconsolidation as an adaptive mechanism that underlies memory updating (12, 14–17). Replication will be an essential tool in future reconsolidation investigations as researchers seek to verify the reliability of existing findings, identify genuine boundary conditions, and foster theoretical progress.

Methods

All experimental programs and verbatim materials are publicly available on the Open Science Framework (https://osf.io/gpeq4/). Participants were recruited from the University College London (UCL) mixed-occupation subject pool and received either monetary compensation or course credits. All participants reported that they were right-handed and had no history of neurological, psychiatric, or sleep disorder. All participants provided informed consent and the study was approved by the local UCL ethics committee.

Direct Replications (Experiments 1–4).

Participants.

Sixteen participants were randomly allocated to each of the four direct-replication experiments, affording a total sample size of 64 individuals (49 females; median age, 22 y; age range, 18–54 y). Two additional participants were excluded for typing an incorrect sequence at the Reminder stage, and four additional participants did not complete all three stages of the study.

Design.

Participants performed a “finger-tapping” sequence learning task in three discrete sessions taking place on consecutive days (Fig. 1). Two five-digit sequences (X: 4–1–3–2–4; Y: 2–3–1–4–2) were assigned to be the Old Sequence and the New Sequence in counterbalanced order. On day 1, participants completed 12 Old Sequence trials (Training). On day 2, participants performed three Old Sequence trials (Reminder) immediately before 12 New Sequence trials (Interference). On day 3, participants completed three trials of both the Old Sequence and the New Sequence in counterbalanced order (Test). The dependent variables (see SI Methods for details) were the number of sequences completed during each 30-s trial (“speed”) and one minus the ratio of errors to speed [“accuracy”; 1 − (errors/speed)].
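The three-day design can be encoded as a simple trial schedule, which makes the two counterbalancing factors explicit. This is a minimal sketch: the `Block` type and `direct_replication_schedule` function are hypothetical names, and the boolean parameters stand in for the counterbalancing labels, whose exact coding in the study is not given here.

```python
from dataclasses import dataclass
from typing import List

SEQ_X = "41324"  # X: 4-1-3-2-4
SEQ_Y = "23142"  # Y: 2-3-1-4-2


@dataclass(frozen=True)
class Block:
    day: int
    stage: str
    sequence: str
    n_trials: int  # number of 30-s tapping trials


def direct_replication_schedule(old_is_x: bool, old_tested_first: bool) -> List[Block]:
    """Trial blocks for one participant under the two counterbalancing factors."""
    old, new = (SEQ_X, SEQ_Y) if old_is_x else (SEQ_Y, SEQ_X)
    test = [Block(3, "Test", old, 3), Block(3, "Test", new, 3)]
    if not old_tested_first:
        test.reverse()  # New Sequence tested first on day 3
    return [
        Block(1, "Training", old, 12),
        Block(2, "Reminder", old, 3),
        Block(2, "Interference", new, 12),
        *test,
    ]
```

Under this encoding, each participant completes 33 trials across the three days, whichever counterbalancing condition they are assigned to.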

Procedure.

Unless otherwise stated (SI Methods), the following procedures were used in all direct replications and precisely matched those reported in the original study (27). Ambiguous or missing information was clarified through contact with the senior author of the original research team. Participants were seated in front of a computer screen in a quiet room and used the four fingers of their left (nondominant) hand to respond using the four top-row numeric keys 1, 2, 3, and 4 of a standard keyboard. The task involved repeatedly tapping a five-element sequence that was displayed on the screen for 30 s (including on “test” trials), followed by 30 s of rest during which the sequence was absent. Key presses were acknowledged with white dots that accumulated on screen, but there was no feedback regarding response accuracy. A 30-s countdown timer was displayed during the rest phase to signal the approaching test phase. During the tapping phase, the screen background was green, and during the rest phase it was red. Participants were instructed to “tap out the sequence as quickly and accurately as possible.” There was no within- or between-subjects timing variability in the original study because all sessions were conducted at 1:00 PM. In the present experiments, there was also no within-subject variability: participants completed sessions at precise 24-h intervals (±15 min); however, session times varied between participants (9:00 AM to 6:00 PM).

Conceptual Replications.

Participants.

Sixteen participants were randomly allocated to each of the three conceptual-replication experiments, affording a total sample size of 48 individuals (38 females; median age, 22 y; age range, 18–52 y). Three additional participants were excluded as they did not complete all three stages of the study.

Design.

Participants performed a sequence-learning task in three discrete sessions taking place on consecutive days (Fig. 1). Two 10-item sequences with independent grammars (SI Methods) were assigned to be the Old Sequence and the New Sequence in counterbalanced order. For experiments 5 and 7, the sequences were numbers (X: 1–4–6–3–2–9–5–0–8–7; Y: 2–6–5–7–0–1–9–4–3–8). For experiment 6, the sequences were letters (X: l–p–k–s–f–q–j–d–x–h; Y: j–f–l–d–q–x–k–h–p–s). On day 1, an adaptive test-feedback protocol was used to ensure that all participants could recall the Old Sequence unassisted five times in a row (Training). On day 2, participants in experiments 5 and 6 recalled and restudied the Old Sequence immediately before new learning (Reminder). All participants learned the New Sequence in the same manner as Old Sequence Training (Interference). On day 3, participants were asked to recall both sequences in counterbalanced order (Test). The dependent variable was a metric of the similarity between the target (Old/New) sequence at a given stage and the sequence entered by the user (“sequence similarity”; see SI Methods for details).

Procedure.

Participants were seated in front of a computer screen in a quiet room and responded using a standard keyboard. On STUDY trials, participants were instructed to memorize the sequence while it was displayed on screen for 5 s. No response was required. On RECALLFeedback trials, participants were asked to enter the sequence from memory into 10 blank placeholders (_). Correctly entered items appeared in green. Entering an item in an incorrect order caused that item to flash in red and black (4 × 0.5-s flashes over 2 s) followed by replacement with the correct item, which flashed in green and black (4 × 0.5-s flashes over 2 s), and early termination of the trial. On RECALLNoFeedback trials, participants also had to enter the sequence from memory; however, the trial was not interrupted if they entered items in an incorrect order and they could make corrections if they wished. All items appeared in black so there was no feedback on these trials.
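The accept/terminate logic of a RECALLFeedback trial can be sketched as follows. This models only how entries are judged, not the display timing or flashing feedback; it assumes the participant's entries arrive as a string of keystrokes, and `check_recall_feedback` is a hypothetical name. RECALLNoFeedback trials are not modeled here, because they allow corrections and are scored by sequence similarity instead.

```python
from typing import Optional, Tuple


def check_recall_feedback(target: str, keystrokes: str) -> Tuple[bool, Optional[int]]:
    """Return (trial_completed, index of the first out-of-order item or None)."""
    for position, key in enumerate(keystrokes[: len(target)]):
        if key != target[position]:
            # Wrong item: feedback shows the correct item and the trial ends early
            return False, position
    # No wrong items so far; the trial succeeds only if every placeholder was filled
    return len(keystrokes) >= len(target), None
```

For example, entering 1–4–6–2 when the target begins 1–4–6–3 would end the trial at the fourth placeholder (index 3).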

The Training and Interference stages involved iterative cycles of STUDY and RECALLFeedback trials starting with the former. Accurately entering the whole sequence on a RECALLFeedback trial led to additional RECALLFeedback trials. Failure to complete a RECALLFeedback trial resulted in a STUDY trial, and the counter of consecutive accurate RECALLFeedback trials was reset. When the participant had achieved five accurate RECALLFeedback trials in a row, the stage was terminated.
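The learn-to-criterion cycle described above amounts to a loop with a consecutive-success counter. In this sketch, `recall_succeeds` is a hypothetical stand-in for the participant's performance on each RECALLFeedback attempt, and the function returns STUDY and RECALLFeedback counts separately because it is not stated here exactly which trials were tallied as "trials to criterion" in the SI Results.

```python
from typing import Callable, Tuple


def learn_to_criterion(recall_succeeds: Callable[[int], bool], criterion: int = 5,
                       max_recall_trials: int = 1000) -> Tuple[int, int]:
    """Simulate STUDY/RECALLFeedback cycles; return (study_trials, recall_trials)."""
    study_trials = 1  # each stage opens with a STUDY trial
    recall_trials = 0
    streak = 0  # consecutive accurate RECALLFeedback trials
    while streak < criterion:
        if recall_trials >= max_recall_trials:
            raise RuntimeError("criterion not reached")
        recall_trials += 1
        if recall_succeeds(recall_trials):
            streak += 1
        else:
            streak = 0
            study_trials += 1  # a failed recall is followed by another STUDY trial
    return study_trials, recall_trials
```

A participant who never errs needs one STUDY and five RECALLFeedback trials, the minimum possible; a single failure on the third attempt raises this to two STUDY and eight RECALLFeedback trials.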

The Reminder stage involved a single RECALLNoFeedback trial followed by two STUDY trials. The Test stage involved two RECALLNoFeedback trials where participants were asked to “Recall the OLD sequence from day one and enter it on the next screen” and, separately, “Recall the NEW sequence from day two and enter it on the next screen.” Participants completed sessions at precise 24-h intervals (±15 min); however, session times varied between participants (9:00 AM to 6:00 PM).

Supplementary Material

Acknowledgments

We thank the UCL Institute of Making for assistance with apparatus for experiment 4. This work was supported by Economic and Social Research Council Grant ES/J500185/1.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1601440113/-/DCSupplemental.

References

- 1. Nader K, Schafe GE, Le Doux JE. Fear memories require protein synthesis in the amygdala for reconsolidation after retrieval. Nature. 2000;406(6797):722–726. doi: 10.1038/35021052.

- 2. Nader K, Hardt O. A single standard for memory: The case for reconsolidation. Nat Rev Neurosci. 2009;10(3):224–234. doi: 10.1038/nrn2590.

- 3. Nadel L, Land C. Memory traces revisited. Nat Rev Neurosci. 2000;1(3):209–212. doi: 10.1038/35044572.

- 4. Schwabe L, Nader K, Pruessner JC. Reconsolidation of human memory: Brain mechanisms and clinical relevance. Biol Psychiatry. 2014;76(4):274–280. doi: 10.1016/j.biopsych.2014.03.008.

- 5. Hui K, Fisher CE. The ethics of molecular memory modification. J Med Ethics. 2015;41(7):515–520. doi: 10.1136/medethics-2013-101891.

- 6. Tronson NC, Taylor JR. Molecular mechanisms of memory reconsolidation. Nat Rev Neurosci. 2007;8(4):262–275. doi: 10.1038/nrn2090.

- 7. Cahill L, McGaugh JL, Weinberger NM. The neurobiology of learning and memory: Some reminders to remember. Trends Neurosci. 2001;24(10):578–581. doi: 10.1016/s0166-2236(00)01885-3.

- 8. Riccio DC, Millin PM, Bogart AR. Reconsolidation: A brief history, a retrieval view, and some recent issues. Learn Mem. 2006;13(5):536–544. doi: 10.1101/lm.290706.

- 9. Millin PM, Moody EW, Riccio DC. Interpretations of retrograde amnesia: Old problems redux. Nat Rev Neurosci. 2001;2(1):68–70. doi: 10.1038/35049075.

- 10. Hupbach A, Gomez R, Hardt O, Nadel L. Reconsolidation of episodic memories: A subtle reminder triggers integration of new information. Learn Mem. 2007;14(1-2):47–53. doi: 10.1101/lm.365707.

- 11. Forcato C, Argibay PF, Pedreira ME, Maldonado H. Human reconsolidation does not always occur when a memory is retrieved: The relevance of the reminder structure. Neurobiol Learn Mem. 2009;91(1):50–57. doi: 10.1016/j.nlm.2008.09.011.

- 12. Chan JCK, LaPaglia JA. Impairing existing declarative memory in humans by disrupting reconsolidation. Proc Natl Acad Sci USA. 2013;110(23):9309–9313. doi: 10.1073/pnas.1218472110.

- 13. Schiller D, Phelps EA. Does reconsolidation occur in humans? Front Behav Neurosci. 2011;5:24. doi: 10.3389/fnbeh.2011.00024.

- 14. Lee JLC. Reconsolidation: Maintaining memory relevance. Trends Neurosci. 2009;32(8):413–420. doi: 10.1016/j.tins.2009.05.002.

- 15. Dudai Y. Predicting not to predict too much: How the cellular machinery of memory anticipates the uncertain future. Philos Trans R Soc Lond B Biol Sci. 2009;364(1521):1255–1262. doi: 10.1098/rstb.2008.0320.

- 16. Hardt O, Einarsson EÖ, Nader K. A bridge over troubled water: Reconsolidation as a link between cognitive and neuroscientific memory research traditions. Annu Rev Psychol. 2010;61(1):141–167. doi: 10.1146/annurev.psych.093008.100455.

- 17. Schiller D, et al. Preventing the return of fear in humans using reconsolidation update mechanisms. Nature. 2010;463(7277):49–53. doi: 10.1038/nature08637.

- 18. Miller RR, Matzel LD. Retrieval failure versus memory loss in experimental amnesia: Definitions and processes. Learn Mem. 2006;13(5):491–497. doi: 10.1101/lm.241006.

- 19. McGaugh JL. Memory—a century of consolidation. Science. 2000;287(5451):248–251. doi: 10.1126/science.287.5451.248.

- 20. Melton AW, Irwin JM. The influence of degree of interpolated learning on retroactive inhibition and the overt transfer of specific responses. Am J Psychol. 1940;53(2):173–203.

- 21. Loftus EF. The malleability of human memory. Am Sci. 1979;67(3):312–320.

- 22. McGeoch JA. The Psychology of Human Learning: An Introduction. Longmans; New York: 1942.

- 23. Tulving E. Cue-dependent forgetting: When we forget something we once knew, it does not necessarily mean that the memory trace has been lost; it may only be inaccessible. Am Sci. 1974;62(1):74–82.

- 24. Eich JE. The cue-dependent nature of state-dependent retrieval. Mem Cognit. 1980;8(2):157–173. doi: 10.3758/bf03213419.

- 25. Capaldi EJ, Neath I. Remembering and forgetting as context discrimination. Learn Mem. 1995;2(3-4):107–132. doi: 10.1101/lm.2.3-4.107.

- 26. Bouton ME. Context, ambiguity, and unlearning: Sources of relapse after behavioral extinction. Biol Psychiatry. 2002;52(10):976–986. doi: 10.1016/s0006-3223(02)01546-9.

- 27. Walker MP, Brakefield T, Hobson JA, Stickgold R. Dissociable stages of human memory consolidation and reconsolidation. Nature. 2003;425(6958):616–620. doi: 10.1038/nature01930.

- 28. Power AE, Berlau DJ, McGaugh JL, Steward O. Anisomycin infused into the hippocampus fails to block “reconsolidation” but impairs extinction: The role of re-exposure duration. Learn Mem. 2006;13(1):27–34. doi: 10.1101/lm.91206.

- 29. Lattal KM, Abel T. Behavioral impairments caused by injections of the protein synthesis inhibitor anisomycin after contextual retrieval reverse with time. Proc Natl Acad Sci USA. 2004;101(13):4667–4672. doi: 10.1073/pnas.0306546101.

- 30. Eisenberg M, Dudai Y. Reconsolidation of fresh, remote, and extinguished fear memory in Medaka: Old fears don’t die. Eur J Neurosci. 2004;20(12):3397–3403. doi: 10.1111/j.1460-9568.2004.03818.x.

- 31. Rosenthal R. Replication in behavioral research. J Soc Behav Pers. 1990;5(4):1–30.

- 32. Simons DJ. The value of direct replication. Perspect Psychol Sci. 2014;9(1):76–80. doi: 10.1177/1745691613514755.

- 33. Schmidt S. Shall we really do it again? The powerful concept of replication is neglected in the social sciences. Rev Gen Psychol. 2009;13(2):90–100.

- 34. Dienes Z. Bayesian versus orthodox statistics: Which side are you on? Perspect Psychol Sci. 2011;6(3):274–290. doi: 10.1177/1745691611406920.

- 35. Rouder JN, Speckman PL, Sun D, Morey RD, Iverson G. Bayesian t tests for accepting and rejecting the null hypothesis. Psychon Bull Rev. 2009;16(2):225–237. doi: 10.3758/PBR.16.2.225.

- 36. Cumming G. Understanding the New Statistics: Effect Sizes, Confidence Intervals, and Meta-analysis. Routledge; New York: 2012.

- 37. Censor N, Horovitz SG, Cohen LG. Interference with existing memories alters offline intrinsic functional brain connectivity. Neuron. 2014;81(1):69–76. doi: 10.1016/j.neuron.2013.10.042.

- 38. Censor N, Dimyan MA, Cohen LG. Modification of existing human motor memories is enabled by primary cortical processing during memory reactivation. Curr Biol. 2010;20(17):1545–1549. doi: 10.1016/j.cub.2010.07.047.

- 39. de Beukelaar TT, Woolley DG, Wenderoth N. Gone for 60 seconds: Reactivation length determines motor memory degradation during reconsolidation. Cortex. 2014;59:138–145. doi: 10.1016/j.cortex.2014.07.008.

- 40. Potts R, Shanks DR. Can testing immunize memories against interference? J Exp Psychol Learn Mem Cogn. 2012;38(6):1780–1785. doi: 10.1037/a0028218.

- 41. Roediger HL, Butler AC. The critical role of retrieval practice in long-term retention. Trends Cogn Sci. 2011;15(1):20–27. doi: 10.1016/j.tics.2010.09.003.

- 42. Kindt M, Soeter M, Vervliet B. Beyond extinction: Erasing human fear responses and preventing the return of fear. Nat Neurosci. 2009;12(3):256–258. doi: 10.1038/nn.2271.

- 43. Bos MGN, Beckers T, Kindt M. Noradrenergic blockade of memory reconsolidation: A failure to reduce conditioned fear responding. Front Behav Neurosci. 2014;8:412. doi: 10.3389/fnbeh.2014.00412.

- 44. Golkar A, Bellander M, Olsson A, Öhman A. Are fear memories erasable?—reconsolidation of learned fear with fear-relevant and fear-irrelevant stimuli. Front Behav Neurosci. 2012;6:80. doi: 10.3389/fnbeh.2012.00080.

- 45. Kindt M, Soeter M. Reconsolidation in a human fear conditioning study: A test of extinction as updating mechanism. Biol Psychol. 2013;92(1):43–50. doi: 10.1016/j.biopsycho.2011.09.016.

- 46. Sevenster D, Beckers T, Kindt M. Prediction error demarcates the transition from retrieval, to reconsolidation, to new learning. Learn Mem. 2014;21(11):580–584. doi: 10.1101/lm.035493.114.

- 47. Duvarci S, Nader K. Characterization of fear memory reconsolidation. J Neurosci. 2004;24(42):9269–9275. doi: 10.1523/JNEUROSCI.2971-04.2004.

- 48. Lakens D. Calculating and reporting effect sizes to facilitate cumulative science: A practical primer for t-tests and ANOVAs. Front Psychol. 2013;4:863. doi: 10.3389/fpsyg.2013.00863.

- 49. Suzuki A, et al. Memory reconsolidation and extinction have distinct temporal and biochemical signatures. J Neurosci. 2004;24(20):4787–4795. doi: 10.1523/JNEUROSCI.5491-03.2004.

- 50. Van der Loo M. The stringdist package for approximate string matching. R J. 2014;6(1):111–122.
