Abstract

Aim of Study:

To evaluate the ability of ancillary health staff to use a novel smartphone imaging adapter system (EyeGo, now known as Paxos Scope) to capture images of sufficient quality to exclude emergent eye findings. Secondary aims were to assess user and patient experiences during image acquisition, interuser reproducibility, and subjective image quality.

Materials and Methods:

The system captures images using a macro lens and an indirect ophthalmoscopy lens coupled with an iPhone 5S. We conducted a prospective cohort study of 229 consecutive patients presenting to L. V. Prasad Eye Institute, Hyderabad, India. Primary outcome measure was mean photographic quality (FOTO-ED study 1–5 scale, 5 best). 210 patients and eight users completed surveys assessing comfort and ease of use. For 46 patients, two users imaged the same patient's eyes sequentially. For 182 patients, photos taken with the EyeGo system were compared to images taken by existing clinic cameras: a BX 900 slit-lamp with a Canon EOS 40D Digital Camera and an FF 450 plus Fundus Camera with VISUPAC™ Digital Imaging System. Images were graded post hoc by a reviewer blinded to diagnosis.

Results:

Nine users acquired 719 usable images and 253 videos of 229 patients. Mean image quality was ≥4.0/5.0 (able to exclude subtle findings) for all users. All eight users surveyed and 189/210 patients surveyed were comfortable with the EyeGo device on a 5-point Likert scale. For 21 patients imaged with the anterior adapter by two users, a weighted κ of 0.597 (95% confidence interval: 0.389–0.806) indicated moderate reproducibility. A high level of agreement between EyeGo and existing clinic cameras (92.6% anterior, 84.4% posterior) was found.

Conclusion:

The novel ophthalmic imaging system is easily learned by ancillary eye care providers, is well tolerated by patients, and captures high-quality images of eye findings.

Keywords: EyeGo, mobile health, Paxos Scope, smartphone anterior and posterior imaging, teleophthalmology, triage

In rural India, individuals with eye problems are often first seen by technicians or primary care physicians at local primary vision centers that cater to populations of >50,000 individuals.[1] These centers have limited eye equipment to aid diagnosis. Any suspected pathology at primary centers necessitates a referral and possibly long distance travel to secondary service centers where patients are evaluated and treated by comprehensive ophthalmologists.[1,2,3] More complex cases may require travel from remote villages into tertiary centers or centers of excellence such as L. V. Prasad Eye Institute (LVPEI), for the opinions of specialist ophthalmologists.[1]

Affordable, mobile, and user-friendly remote imaging has the potential to increase accessibility of higher-level ophthalmic services to underserved populations in rural India and potentially decrease travel. The smartphone-based ophthalmic imaging system used in this study was an anterior and posterior imaging adapter, which the authors called “EyeGo” and which served as the prototype for the device now known as Paxos Scope by DigiSight Technologies (San Francisco, CA, USA). It was developed at Stanford University with the goal of facilitating remote triage imaging using existing equipment and is now registered with the Food and Drug Administration as a Class II 510(k) exempt ophthalmic camera.[4] It is a lightweight, simple system that turns a smartphone into a portable camera capable of capturing and uploading high-quality pictures of the anterior and posterior segments of the eye via direct macro imaging and indirect ophthalmoscopy (with an indirect ophthalmoscopy lens), respectively. The nurse or technician can then transmit the images to an ophthalmologist for review through secure, encrypted institutional e-mail or a HIPAA-compliant messaging application.[5] Originally designed for the iPhone 4 through 5S, the EyeGo system prototype tested includes two components to facilitate appropriate magnification and illumination of the eye: (1) an anterior adapter consisting of a macro lens and light-emitting diode (LED) light [Fig. 1a] and (2) a three-dimensional-printed posterior adapter to help align a 20D lens with the phone's camera [Figs. 1b and 2].[5,6]

Figure 1.

The EyeGo system including (a) an anterior adapter consisting of a macro lens and LED light and (b) a three-dimensional printed posterior adapter to help align a 20D lens with the phone's camera

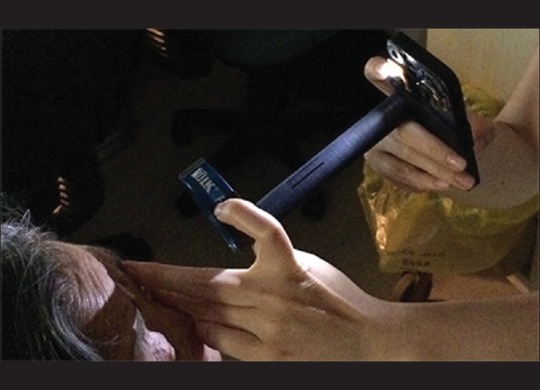

Figure 2.

An ophthalmologist is recording a movie of the posterior segment using the EyeGo three-dimensional-printed posterior adapter in conjunction with an iPhone 5. The right hand stabilizes the smartphone and captures the image while the left hand stabilizes the lens and lifts the upper eyelid

Myung et al. demonstrated EyeGo's ability to capture useful images of the anterior and posterior segments of the eye when used by ophthalmology residents, but the ability of users with varying medical training to capture images and videos using the EyeGo adapters is currently unknown.[5,6] Therefore, our primary aim was to evaluate the ability of eye care technicians and optometrists as well as ophthalmologist trainees to take images of sufficient quality to rule out emergent findings. The primary outcome measure was mean photographic quality on a previously validated 5-point scale as described in the FOTO-ED study: (1) inadequate for any diagnostic purpose; (2) unable to exclude all emergent findings; (3) only able to exclude emergent findings; (4) not ideal but still able to exclude subtle findings; and (5) ideal quality.[7] Our secondary aims were to assess user and patient experiences during imaging with a simple survey rating ease of use and tolerability of the EyeGo, to evaluate the reproducibility of two users imaging the same patient, and to compare EyeGo smartphone camera images with existing clinic slit-lamp and fundus imaging.

Materials and Methods

Research was performed after obtaining approval from the Stanford University Institutional Review Board (IRB) and LVPEI IRB. Informed consent was obtained from either the participant or parent of a minor participant. All image and video acquisition and transmittal were handled with strict attention to the confidentiality of personal data in accordance with the Data Protection Act of 1998 and Access to Health Records Act of 1990. All research adhered to the tenets of the Declaration of Helsinki.

The phone used to capture images was encrypted using the Stanford University Mobile Device Management application. Images and movies were uploaded from the phone to Research Electronic Data Capture (REDCap) tools hosted at Stanford University, which were also used to enter and manage the study data.[8] REDCap is a secure, web-based application designed to support data capture for research studies, providing (1) an intuitive interface for validated data entry; (2) audit trails for tracking data manipulation and export procedures; (3) automated export procedures for seamless data downloads to common statistical packages; and (4) procedures for importing data from external sources. All figures and analyses were produced using R 3.1.1 and SAS Enterprise Guide version 6.1 (SAS Institute Inc., Cary, North Carolina, USA).

The EyeGo user study included 229 patients presenting to the Cornea and Retina Departments at a quaternary eye care center, LVPEI.[2] After informed consent was obtained, patients were imaged using the EyeGo system. This was done during a waiting period in their regular hospital visit. The study personnel did not dilate the eyes of patients. However, the patients had already been examined in the clinic and were dilated (if a dilated examination was required) by the clinical staff after ascertaining safety of dilation as per the routine practice in the hospital. Patients were excluded from anterior and/or posterior imaging if they opted out due to time constraint, were <5 years of age, or did not give consent for the study. In the case of a time constraint permitting imaging with only one adapter, patients being seen in the cornea department underwent anterior segment imaging alone while those being seen in the retina department underwent posterior segment imaging alone. Patients were excluded from posterior imaging if their eyes were not dilated or if pathology impeded visualization of the retina (e.g., cataract, corneal opacity). Dilation was considered insufficient if the pupils were dilated to <6 mm. Demographic data including age, gender, ocular history, and past medical history were recorded from patient charts.

Patients were imaged by one of nine EyeGo users with varying medical backgrounds (one technician, one medical student, three cornea optometrists, three retina optometrists, one retina fellow). All users reported feeling somewhat to very comfortable using a smartphone (four or five on a 5-point Likert scale). Users were randomly selected based on availability during their normal workday. Ophthalmic technicians undergo 2 years of optometric training and work with optometrists to assist in performing eye examinations. Optometrists at LVPEI undergo 4 years of optometric training, perform eye examinations, and are trained to image patients in the retina or cornea diagnostics centers using slit lamp and fundus cameras. The technician had no prior medical training and was recruited outside of LVPEI.

Each user self-determined whether multiple images should be taken based on whether images on the smartphone screen were in focus. Users received brief training describing the goal of viewing the entire posterior pole (nerve, arcade vessels, macula). In some cases (40/331 eyes), multiple images were obtained for each patient (maximum = 4) and the highest quality image, normal or with pathology, was used for the analysis as determined by the grader. Each user also determined whether to stop imaging if the patient opted out of one part of imaging due to time constraint, if the patient's eyes were not sufficiently dilated or if pathology impeded visualization of the retina.

One hundred and sixty-seven patients (334 eyes) underwent ocular anterior segment imaging using the EyeGo anterior chamber smartphone adapter and an iPhone 5S (Apple Inc., Cupertino, CA, USA) [Fig. 3a]. Three eyes were missed, one due to time constraint, one from an error resulting in no recorded photo, and one patient declining imaging in one eye resulting in n = 331 eyes for analysis. Individuals with no prior experience using the anterior adapter of the EyeGo system were given brief (<5 minutes) standard instructions on how to take photographs using the EyeGo.[5] With the patient sitting upright, users were instructed to hold the iPhone with two hands: one hand holding the phone sideways with the third digit and thumb while the fourth and fifth digits rested on the patient's forehead in order to stabilize the phone, and the other hand used to further stabilize the phone and push the button to capture a photo using the index finger. Care was taken in all cases to prevent contact between the phone and adapter and the patient's skin. In the event contact was made, the adapter and phone were wiped clean with an alcohol swab, and the adapter itself was submerged in soap and water or another cleaning solution. Users were given real-time feedback on their first ten images by an experienced user (CAL) before independently capturing images to be included in the study.

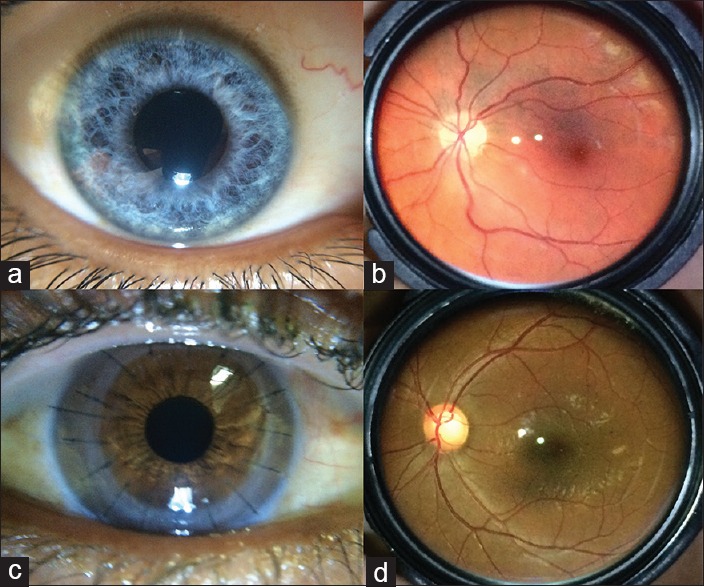

Figure 3.

Examples of normal (a) anterior and (b) posterior segment images and of subtle findings including (c) detail of all sutures in corneal transplant and (d) optic nerve cupping

One hundred and forty-four patients (253 eyes of 288 total) underwent nonstereoscopic, mydriatic movie recording of the ocular fundus using the EyeGo lens attachment (Stanford, CA, USA), an iPhone 5S and a Panretinal 2.2 lens (Volk Optical Inc., Mentor, OH, USA) [Fig. 3b]. Twenty-two patients only underwent right eye imaging, and 13 only underwent left eye imaging. Of these, 32 were insufficiently dilated for imaging, two had pathology that impeded visualization of the retina and one opted out of one eye due to time constraint. Thus, 253 videos of 253 eyes were included in the analysis. The iPhone application Filmic Pro (Cinegenix LLC, Seattle, WA, USA; http://filmicpro.com/) was used to provide constant adjusted illumination and video capture in conjunction with the EyeGo.[9] Individuals with no prior experience using the posterior adapter of the EyeGo system were given brief (<5 minutes) instructions on standard use and told to (1) video-capture the optic disc, macula, superior vascular arcade, and inferior vascular arcade and (2) video-capture the fundus while each patient, seated upright, looked up, down, left, right, and straight. This allowed for up to a 55° field of view with the Volk Panretinal 2.2 lens. Users were given feedback on their first ten recording attempts by an experienced user (CAL). Users were instructed to limit the attempt to capture a good video to 30 seconds for each eye to avoid fatigue from the flash illumination source. Videos were taken at 24 fps at 1080p resolution.

Anterior photographs, posterior movies, and gold standard slit lamp and fundus camera imaging (EyeGo and standard image comparison) were deidentified and graded by one medical student grader (CAL) using a 5-point scale previously validated for nonmydriatic imaging in the FOTO-ED study (weighted κ for two neuro-ophthalmologist graders of 0.84–0.87) as described below.[7] Images and videos were graded according to the following criteria: (1) Inadequate for any diagnostic purpose; (2) unable to exclude all emergent findings; (3) only able to exclude emergent findings; (4) not ideal but still able to exclude subtle findings; and (5) ideal quality. This scale was appropriate for determining the utility of the EyeGo in excluding emergent findings.
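The grading scale lends itself to a small lookup structure. The following Python sketch is illustrative only and is not part of the study's software; the names `PhotoGrade`, `can_exclude_emergent`, and `best_grade` are hypothetical. It encodes the five grades listed above, treats Grade 3 or higher as sufficient to exclude emergent findings, and keeps the highest-quality attempt when several images of one eye were graded.

```python
from enum import IntEnum


class PhotoGrade(IntEnum):
    """5-point quality scale as listed in the text (hypothetical names)."""

    INADEQUATE = 1               # inadequate for any diagnostic purpose
    CANNOT_EXCLUDE_EMERGENT = 2  # unable to exclude all emergent findings
    EXCLUDES_EMERGENT_ONLY = 3   # only able to exclude emergent findings
    EXCLUDES_SUBTLE = 4          # not ideal but still able to exclude subtle findings
    IDEAL = 5                    # ideal quality


def can_exclude_emergent(grade: int) -> bool:
    """Return True when the grade is 3 or higher."""
    return PhotoGrade(grade) >= PhotoGrade.EXCLUDES_EMERGENT_ONLY


def best_grade(grades: list[int]) -> PhotoGrade:
    """Return the highest grade among several attempts on the same eye."""
    return max(PhotoGrade(g) for g in grades)
```

Using an ordered enumeration keeps the comparison against the Grade 3 threshold explicit and rejects values outside the 1–5 range.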

Images and videos were reviewed by a single grader (CAL) on a 15.4-inch backlit display monitor with in-plane switching technology—technology allowing for a broad angle at which images can be viewed without deterioration of color and brightness (MacBook Pro, resolution: 2880 × 1800 at 220 pixels/inch). The reviewer could zoom in on the images and adjust monitor contrast and brightness for image review. To measure intraobserver reliability, the grader reassessed a random subset of 50 consecutive images and 50 consecutive videos 1 year following the initial grading. For anterior segment images, we found an overall agreement of 86.0% with a weighted κ of 0.784 (95% confidence interval [CI]: 0.627–0.941) indicating substantial agreement. For posterior segment videos, we found an overall agreement of 86.0% with a weighted κ of 0.816 (95% CI: 0.663–0.970) indicating almost perfect agreement.
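As a rough illustration of how the two reliability figures above can be computed, the Python sketch below calculates overall percent agreement and Cohen's weighted κ from two lists of grades. It is a minimal sketch under stated assumptions: the study does not report whether linear or quadratic weights were used, so the `power` parameter (1 for linear, 2 for quadratic) and the function names are assumptions, and the confidence interval calculation is not reproduced.

```python
def percent_agreement(first, second):
    """Percentage of paired grades that are identical."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty, equal-length grade lists")
    return 100.0 * sum(a == b for a, b in zip(first, second)) / len(first)


def weighted_kappa(first, second, categories=(1, 2, 3, 4, 5), power=1):
    """Cohen's weighted kappa for two equal-length lists of ordinal grades.

    power=1 uses linear disagreement weights, power=2 quadratic weights
    (which one the study used is not stated, so this is an assumption).
    """
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty, equal-length grade lists")
    k = len(categories)
    index = {c: i for i, c in enumerate(categories)}
    n = len(first)

    # Observed joint proportions and the marginal proportions of each rating.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(first, second):
        observed[index[a]][index[b]] += 1.0 / n
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    # Disagreement weight grows with the distance between the two grades.
    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            w = (abs(i - j) / (k - 1)) ** power
            weighted_observed += w * observed[i][j]
            weighted_expected += w * row[i] * col[j]
    if weighted_expected == 0:
        raise ValueError("kappa is undefined when all grades fall in one category")
    return 1.0 - weighted_observed / weighted_expected
```

Identical grade lists give a κ of 1, and κ falls toward 0 as the observed weighted disagreement approaches what the marginal grade distributions would produce by chance.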

Anterior chamber photographs were graded on the 5-point quality scale. Emergent findings for anterior imaging include corneal abrasions/lacerations/ulcers, episcleritis, scleritis, chemical burns, foreign bodies, hyphema, hypopyon, and traumatic injury. An example of a visible subtle finding for anterior imaging includes detail of all sutures in corneal transplant [Fig. 3c].

Videos were graded using the same 5-point scale for quality assessment. The grader was able to pause the video to improve video assessment. Emergent findings for posterior imaging include optic disc edema, optic disc pallor, retinal white spots, and retinal hemorrhages. Examples of visible subtle findings for posterior imaging would include the microaneurysms of background diabetic retinopathy, macular changes of age-related macular degeneration, and optic nerve cupping [Fig. 3d].

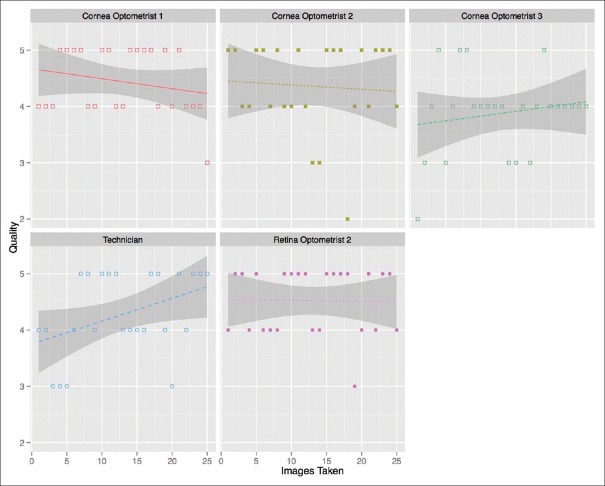

The mean quality of imaging captured using the anterior and posterior EyeGo attachments was compared across users. This analysis included the initial 25 images taken by each user (23 for the retina fellow due to time constraints for study participation). The medical student was excluded from this analysis as the medical student trained the other eight EyeGo users.

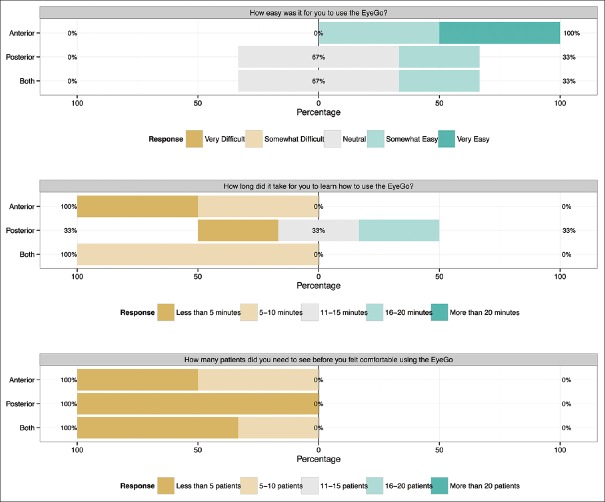

After imaging 25 eyes (23 for the retina fellow), users were asked three survey questions: (1) How easy was it for you to use the EyeGo? (2) How long did it take for you to learn how to use the EyeGo? and (3) How many patients did you need to see before you felt comfortable using the EyeGo? Responses [Fig. 4] were grouped by users who performed imaging with the anterior attachment, posterior attachment, or both. The medical student was again excluded from this analysis.

Figure 4.

User survey response (n = 8). Five-item Likert scale reporting each user's response to three survey questions following imaging using either the anterior EyeGo imaging attachment (n = 2), posterior EyeGo imaging attachment (n = 3), or both (n = 3)

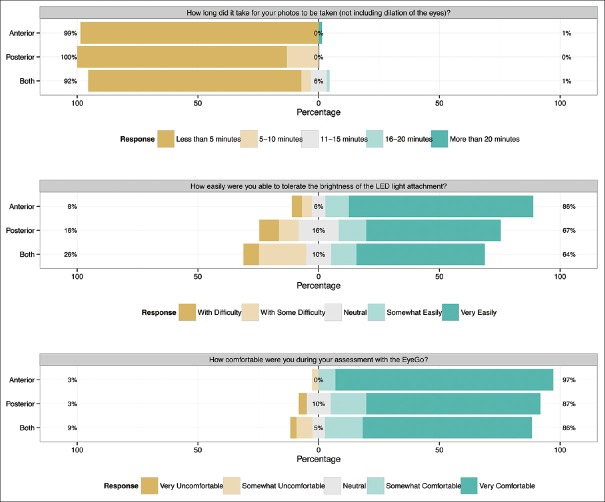

Following imaging, all patients were asked three survey questions: (1) How long did it take for your photos to be taken (not including dilation of the eyes)? (2) How easily were you able to tolerate the brightness of the LED light attachment? and (3) How comfortable were you during your assessment with the EyeGo? Responses [Fig. 5] were grouped depending on whether patients had undergone anterior imaging, posterior imaging, or both. Nineteen of 229 patients declined survey response (91.7% response rate).

Figure 5.

Patient survey responses (n = 210). Five-item Likert scale reporting each patient's response to three survey questions following EyeGo imaging with either the anterior imaging attachment (n = 72), posterior imaging attachment (n = 61), or both (n = 77)

Two users imaged a subset of 46 patients within 10 minutes of each other. Users were picked based on their availability for additional imaging. For the medical student and cornea optometrist pair, only anterior imaging was compared. For the medical student and technician pair, patients underwent imaging on both eyes, anteriorly and posteriorly unless exclusion criteria were met.

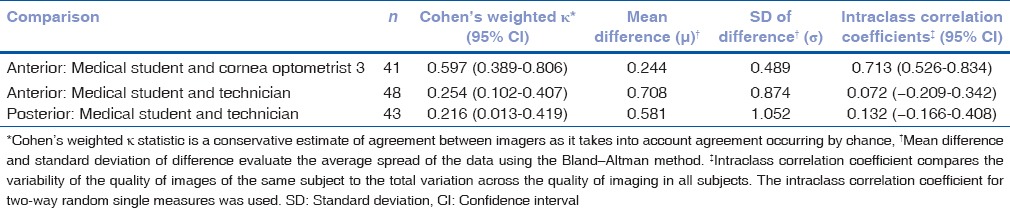

Cohen's weighted κ statistic was used as a conservative estimate of agreement between imagers as it takes into account agreement occurring by chance. Mean difference and standard deviation of difference were used to evaluate the average spread of the data using the Bland-Altman method. Intraclass correlation coefficient (ICC) for two-way random single measures was used.[10] This statistic compares the variability of the quality of images of the same subject to the total variation across the quality of imaging in all subjects.
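The mean difference and ICC described above can be sketched in a few lines of Python. This is an illustrative sketch, not the study's analysis code (the analyses were run in R and SAS): the function names are hypothetical, and it assumes that the "two-way random single measures" ICC corresponds to the absolute-agreement form commonly written ICC(2,1).

```python
from statistics import mean, stdev


def bland_altman(first, second):
    """Mean and sample SD of the paired differences (first minus second)."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    diffs = [a - b for a, b in zip(first, second)]
    return mean(diffs), stdev(diffs)


def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single measures.

    `ratings` holds one row per eye, each row containing one grade per user.
    """
    n, k = len(ratings), len(ratings[0])
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need at least 2 eyes and 2 users, with complete rows")

    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Two-way ANOVA sums of squares: eyes (rows), users (columns), residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
```

Because this form of the ICC includes the between-user mean square in the denominator, a systematic offset between two users lowers the coefficient even when their grades rise and fall together.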

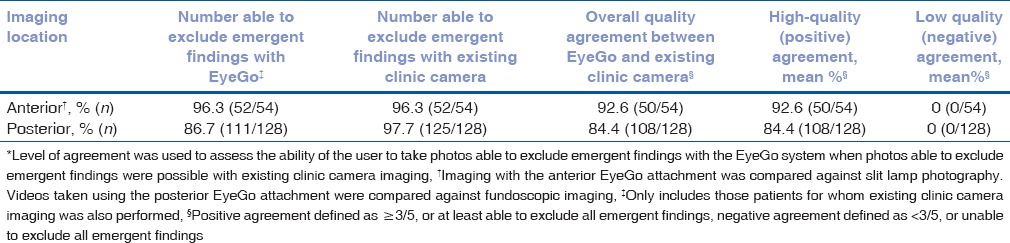

The quality of photographs taken using the EyeGo adapter for anterior imaging was compared with the quality of photographs taken using a BX 900 slit-lamp (Haag-Streit USA Inc., Mason, OH, USA) and Canon EOS 40D Digital Camera (Canon USA Inc., Melville, New York, USA). Only diffuse illumination photos taken with the slit-lamp and camera system were used for comparison. Patients included were those already undergoing slit lamp imaging; no additional slit lamp images were taken for study purposes alone. Level of agreement (LOA) was used to assess the ability of the user to take photos able to exclude emergent findings with the EyeGo system when photos able to exclude emergent findings were possible with the slit lamp camera. Therefore, in contrast to the FOTO-ED study in which high quality was defined as Grades 4 or 5, we defined positive agreement as Grades 3, 4, or 5 on the 1–5 scale, or at least able to exclude all emergent findings.[3]

The quality of photographs taken using the EyeGo lens attachment was compared with the quality of photographs taken using an FF 450 plus Fundus Camera with VISUPAC™ Digital Imaging System (Carl Zeiss Meditec Inc., Oberkochen, Germany). Patients included were those already undergoing fundoscopic imaging; no additional fundoscopic images were taken for study purposes alone. LOA was calculated with positive agreement defined as ≥3 on the 1–5 scale, or at least able to exclude all emergent findings.
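The agreement calculation can be sketched in Python as follows. This is a minimal sketch with a hypothetical function name, and it assumes that an eye counts toward positive agreement when both the EyeGo image and the clinic camera image are graded ≥3, and toward negative agreement when both are graded <3, with all compared eyes as the denominator.

```python
def agreement_rates(eyego_grades, reference_grades, threshold=3):
    """Positive and negative agreement between paired quality grades.

    Each position in the two lists refers to the same eye. Percentages are
    taken over all compared eyes (an assumption about the denominator).
    """
    if len(eyego_grades) != len(reference_grades) or not eyego_grades:
        raise ValueError("need two non-empty, equal-length grade lists")
    pairs = list(zip(eyego_grades, reference_grades))
    n = len(pairs)
    both_adequate = sum(a >= threshold and b >= threshold for a, b in pairs)
    both_inadequate = sum(a < threshold and b < threshold for a, b in pairs)
    return {
        "n": n,
        "positive_pct": 100.0 * both_adequate / n,
        "negative_pct": 100.0 * both_inadequate / n,
        "discordant_pct": 100.0 * (n - both_adequate - both_inadequate) / n,
    }
```

The discordant percentage covers eyes in which only one of the two systems produced an image graded ≥3.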

Results

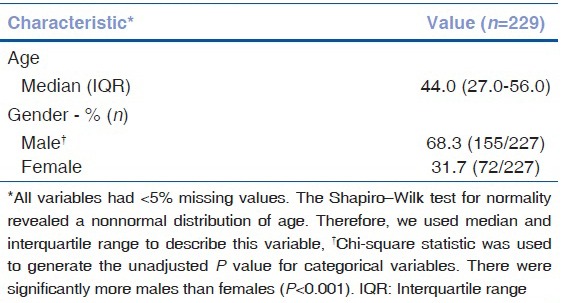

Nine different users at LVPEI imaged 229 patients. The baseline characteristics of the imaged cohort are included in Table 1a. The cohort included 68.2% (155/229) males and 31.7% (72/229) females (χ2 = 30.25, P < 0.0001) with an average age of 42.4 years (minimum = 7.0, maximum = 79.0, median = 44.0, interquartile range [IQR] = 27.0–56.0). On chart review of patient ocular history, 62.3% (144/231) of eyes imaged with the anterior EyeGo adapter had a history of anterior segment pathology [Table 1b]. Similarly, 63.6% (161/253) of eyes recorded with the posterior EyeGo adapter had a history of posterior segment pathology.

Table 1a.

Demographic characteristics of patients imaged in the EyeGo user study

Table 1b.

History of pathology in eyes imaged in the EyeGo user study as reported in the patient chart (n=229)

For each eye imaged with the anterior EyeGo imaging device, the mean image quality of the technician was 4.00 (n = 48 eyes, median = 4, IQR = 3–5, σ = 0.90), that of the retina optometrist was 4.54 (n = 26 eyes, median = 5, IQR = 4–5, σ = 0.58), and that of each cornea optometrist was 4.23 (n = 44 eyes, median = 4, IQR = 4–5, σ = 0.80), 4.35 (n = 26 eyes, median = 4.5, IQR = 4.5–5, σ = 0.80) and 4.07 (n = 41 eyes, median = 4, IQR = 4–5, σ = 0.72). Fig. 6 shows the quality of imaging as a function of number of images taken for each user's first 25 eyes.

Figure 6.

Quality of imaging done using the anterior EyeGo attachment compared to number of images taken by users of various medical backgrounds. Notably, the figure includes the initial 25 images taken by each user

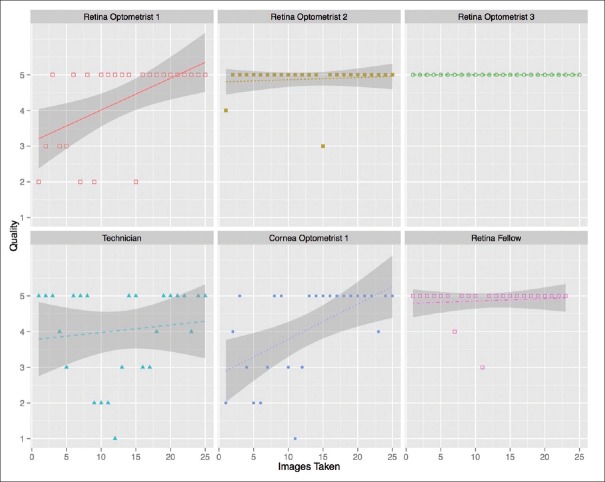

For each eye recorded using the posterior EyeGo adapter, the mean image quality of the technician was 4.26 (n = 43 eyes, median = 5, IQR = 4–5, σ = 1.09), that of the cornea optometrist was 4.08 (n = 25 eyes, median = 5, IQR = 3–5, σ = 1.29), that of each retina optometrist was 4.31 (n = 26 eyes, median = 5, IQR = 3–5, σ = 1.20), 4.89 (n = 28 eyes, median = 5, IQR = 5–5, σ = 0.42) and 5.00 (n = 25 eyes, median = 5, IQR = 5–5, σ = 0.00), and that of the retina fellow was 4.87 (n = 23 eyes, median = 5, IQR = 5–5, σ = 0.46). Fig. 7 shows the quality of imaging as a function of number of images taken for each user's first 25 eyes (with the exception of the retina fellow who only recorded 23 eyes).

Figure 7.

Quality of imaging done using the posterior EyeGo attachment compared to number of images taken by users of various medical backgrounds. Notably, the figure includes the initial 25 images taken by each user (23 for the retina fellow)

All (8/8) users completed the post-imaging survey [Fig. 4]. On a 5-point scale from “Very Easy” to “Very Difficult” to use, users reported neutral to high levels of ease of use of both the anterior and posterior EyeGo imaging devices. In addition, all reported that it took ten or fewer patients to learn how to use the EyeGo. Seven of the eight users (87.5%) reported that it took 15 minutes or less to learn how to use the EyeGo.

Of the 229 patients, 210 (91.7%) completed the post-imaging survey [Fig. 5]. Frequencies of patients reporting that imaging took <5 minutes were 98.6% (71/72) anterior imaging only, 86.9% (53/61) posterior recording only, and 88.3% (68/77) both. Notably, the average measured recording time of the 253 fundi was 71 seconds (σ = 51, minimum = 10, maximum = 302). Frequencies of patients reporting the ability to tolerate the brightness of the LED light as somewhat to very easily were 86.1% (62/72) anterior imaging only, 67.2% (41/61) posterior recording only, and 63.6% (49/77) both. Frequencies of patients reporting being somewhat to very comfortable during the exam were 97.2% (70/72) anterior imaging only, 86.9% (53/61) posterior recording only, and 85.7% (66/77) both.

A medical student and optometrist experienced in anterior segment diagnostics imaged the same 41 eyes using the anterior EyeGo adapter. In addition, a medical student and technician imaged a different set of 48 eyes using the anterior EyeGo adapter. The lower mean difference in image quality (0.244; σ = 0.489) was found between the medical student and optometrist [Table 2]. The same pair had a weighted κ coefficient of 0.597 (95% CI: 0.389–0.806) and ICC of 0.713 (95% CI: 0.526–0.834) [Table 2]. In comparison, the medical student and technician had a mean difference in image quality of 0.708 (σ = 0.874). This pair had a weighted κ coefficient of 0.254 (95% CI: 0.102–0.407) and ICC of 0.072 (95% CI: −0.209–0.342).

Table 2.

Inter-user reproducibility of image quality

Both a medical student and technician recorded 43 eyes using the posterior EyeGo adapter. The mean difference in video quality was 0.581 (σ = 1.052) [Table 2]. The pair had a weighted κ coefficient of 0.216 (95% CI: 0.013–0.419) and ICC of 0.132 (95% CI: −0.166–0.408) [Table 2].

Fifty-four eyes imaged using the EyeGo adapter for anterior imaging were also imaged using existing clinic slit lamp photography [Fig. 8]. Of the images taken with the EyeGo adapter, 96.3% (52/54) were given a grade of ≥3/5 (at least able to exclude all emergent findings), as were 96.3% (52/54) of those taken with slit lamp photography [Table 3]. Positive agreement between imaging using the EyeGo adapter and slit lamp photography, defined as a grade of ≥3/5 with both methods, was 92.6% (50/54). Negative agreement, defined as a grade of <3/5 (unable to exclude all emergent findings) with both methods, was 0% (0/54).

Figure 8.

Image of corneal abscess taken with (a) anterior chamber EyeGo imaging and (b) slit lamp imaging shows similar level of detail

Table 3.

EyeGo and standard image quality comparison*

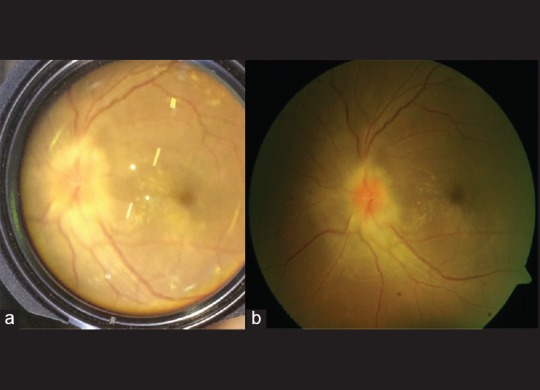

One hundred twenty-eight eyes recorded using the EyeGo posterior adapter were also imaged using an existing fundoscopic-imaging device [Fig. 9]. Of the videos taken with the EyeGo adapter, 86.7% (111/128) were given a grade of ≥3/5 (at least able to exclude all emergent findings), as were 97.7% (125/128) of the images taken with fundoscopic photography [Table 3]. Positive agreement between recording using the EyeGo adapter and fundoscopic photography, defined as a grade of ≥3/5 with both methods, was 84.4% (108/128). Negative agreement, defined as a grade of <3/5 (unable to exclude all emergent findings) with both methods, was 0% (0/128).

Figure 9.

Images of posterior uveitis taken with (a) the posterior EyeGo adapter and (b) the existing clinic fundoscopic imaging device show a similar level of detail

Discussion

We found that technicians, optometrists, and fellows alike could learn to use the EyeGo system quickly and effectively with minimal (<5 minutes) training in addition to real-time feedback on their first ten imaging attempts. On average, the quality of images and videos of the anterior (mean = 4.31/5.00, median = 4.00/5.00, σ = 0.80) and posterior (mean = 4.19/5.00, median = 5.00/5.00, σ = 1.24) segments taken by all users was sufficient to exclude emergent findings. Furthermore, all users of the EyeGo reported neutral to high levels of ease of use of the EyeGo system (mean = 3.63/5.00). Similarly, overall patient-reported comfort (mean = 4.60/5.00) and LED tolerance (mean = 4.10/5.00) with the EyeGo examination were high, with lower levels of comfort and LED tolerance reported by patients who received posterior recording only or both anterior and posterior recording. All patients who received posterior recording of their fundi had undergone dilation prior to both anterior and posterior imaging and were therefore exposed to more light than those who received anterior segment imaging only. The safety of the LED on the iPhone is discussed at length elsewhere.[11] However, in response to this, the FDA-registered, commercially available version of the EyeGo device (Paxos Scope by DigiSight Technologies) provides an external, battery-powered, variable-intensity LED that can be titrated precisely to a patient's level of comfort, unlike the internal flash of the iPhone, which cannot be titrated from 0% to 100% intensity.

EyeGo users reproduced images of comparable quality on the same patients. The κ statistic demonstrated moderate agreement (weighted κ = 0.597, 95% CI: 0.389–0.806) between image quality for images taken by the medical student and optometrist using the anterior EyeGo adapter.[12] However, the κ statistic only demonstrated fair agreement (anterior: weighted κ = 0.254, 95% CI: 0.102–0.407; posterior: weighted κ = 0.216, 95% CI: 0.013–0.419) between the medical student and technician with no medical training. While the medical student had imaged >50 eyes by the time of reliability testing, the optometrist and technician had only undergone a 5-minute training period. Therefore, the learning process each user underwent may account for variation in image quality between users.
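The descriptive labels attached to the κ values in this paper ("fair", "moderate", "substantial", "almost perfect") are consistent with the widely used Landis and Koch bands. The Python sketch below maps a κ value to those bands; that this is the convention behind the labels is an assumption, since the text does not spell out the cut-offs, and the function name is hypothetical.

```python
def kappa_label(kappa: float) -> str:
    """Map a kappa value to the commonly used Landis and Koch descriptors."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa < 0:
        return "poor"
    bands = (
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
        (1.00, "almost perfect"),
    )
    # Return the label of the first band whose upper bound covers the value.
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"
```

Under these bands, `kappa_label(0.597)` returns "moderate" and `kappa_label(0.254)` returns "fair", matching the wording used for the two anterior imaging pairs.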

Finally, our study reports high positive agreement and low negative agreement between the EyeGo system and the equivalent existing clinic camera system. High positive agreement indicates that patients who successfully underwent imaging with existing clinic camera systems were also likely to have successfully undergone EyeGo imaging. Low negative agreement indicates that patients who were difficult to image using the EyeGo may have otherwise undergone successful imaging with existing clinic camera systems. Therefore, patients for whom a high-quality EyeGo photo cannot be taken will likely benefit instead from existing clinic imaging.

Our image quality data are limited by the variability in patient pathology as well as patient cooperation that may have affected image quality regardless of EyeGo user technique. In addition, iris color affects the quality of photos taken of the anterior chamber, with increased pigmentation decreasing the visibility of pathology. Our survey data are limited by the small sample size of eight users and by use of a subjective Likert scale. However, this study serves mainly as a proof-of-concept pilot study for larger deployment of the EyeGo.

Despite study limitations, our study leveraged a population of socioeconomically diverse individuals with varying pathologies to show that the EyeGo is an accessible device, able to be easily and quickly taught to healthcare providers of varying eye experience, and comfortable for patients. Additional studies have simultaneously been completed to test actionable decision making with the EyeGo system by looking for a specific disease (e.g., proliferative diabetic retinopathy) when doing mass screenings (e.g., of diabetes mellitus).

Available options for remote imaging include nonmydriatic fundus photography, slit lamp adaptors for smartphones, panoptic portable ophthalmoscopy, and the use of smartphones with handheld lenses. Unfortunately, each of these options has limitations in expense, mobility, and expertise required.

Nonmydriatic fundus photography demonstrates high diagnostic capability in emergency triage but is limited in global usage by its expense.[13] Adapters such as the EyePhotoDoc (eyephotodoc.com) allow for high-quality imaging, but require a slit lamp, decreasing mobility and use outside of ophthalmology clinics. Similarly, the iExaminer (Welch Allyn, Skaneateles Falls, NY, USA) includes a panoptic portable ophthalmoscope that is more portable than a slit lamp, but requires direct ophthalmoscope skill and purchase of a panoptic unit and does not allow for easy and instantaneous sharing of images. Manually aligning a smartphone in conjunction with indirect ophthalmoscopy condensing lenses requires additional expertise in lens placement that the EyeGo attachment may reduce.[9,14,15]

In contrast with current adapters, the EyeGo system is lightweight, easy to use, and can be used in conjunction with the smartphones many medical personnel already possess.[16,17] With the addition of the EyeGo system, smartphones transform into tools for documentation of eye pathology in areas with limited access to ophthalmologic care. Smartphone-based ophthalmic imaging with this system can be learned quickly by ancillary healthcare providers to capture cornea and retina images of sufficient quality to rule out emergent findings. Moreover, patients are comfortable throughout imaging when being photographed with this system. Of note, the EyeGo system used in this study was the prototype for the device that is now available as Paxos Scope in the USA (through DigiSight Technologies). It now features a variable-intensity external LED light source that can be titrated readily to a patient's comfort, and a universal, spring-loaded mounting and alignment system to adapt to virtually any smartphone. Paxos Scope is also coupled with a software app developed by DigiSight that enables ease of capture and HIPAA-compliant storage of anterior and posterior segment images.

Financial support and sponsorship

The project described herein was conducted with support for Cassie A. Ludwig from the TL1 component of the Stanford Clinical and Translational Science Award to Spectrum (NIH TL1 TR 001084) and the Medical Scholars Research Grant for Stanford University Medical Students. David J. Myung, M.D., Ph.D. and Robert T. Chang, M.D. received support from the Stanford Society of Physician Scholars Grant in addition to the Stanford SPECTRUM/Biodesign Research Grant.

Conflicts of interest

Robert T. Chang, M.D., Alexandre Jais, M.S., and David J. Myung, M.D., Ph.D. are patent holders of the smartphone ophthalmic imaging system discussed. David J. Myung, M.D., Ph.D. is a consultant to DigiSight Technologies.

Acknowledgments

Thank you to the faculty and staff at L. V. Prasad Eye Institute for your general support and enthusiasm for this project. A special thanks to Nazia Begum, Vinay Kumar, Vaibhav Sethi, Hari Kumar, Lalitha Yalagala, Ashwin Kumar Goud, Jonna Dula Karthik, and Arvind Bady for making time to consent and image patients. This research would not have been possible without the help of the Healthy Scholars Foundation, Raman and Srini Madala, Lily Truong, the Madala Charitable Trust, and CCD Varni in coordinating research. We gratefully acknowledge the contributions of Brian Toy to this research. Thanks to Andrew Martin at the Stanford Center for Clinical Informatics, who provided database management and developed a streamlined database collection tool. The REDCap database tool hosted by Stanford University is maintained by the Stanford Center for Clinical Informatics with grant support (Stanford CTSA award number UL1 RR025744 from NIH/NCRR).

References

- 1. Rao GN, Khanna RC, Athota SM, Rajshekar V, Rani PK. Integrated model of primary and secondary eye care for underserved rural areas: The L V Prasad Eye Institute experience. Indian J Ophthalmol. 2012;60:396–400. doi: 10.4103/0301-4738.100533.

- 2. LVPEI's Mission is to Provide Equitable and Efficient Eye Care to All Sections of Society. Hyderabad, India: L V Prasad Eye Institute; 2015. [Last cited on 2016 Jan 09]. Available from: http://www.lvpei.org/aboutus.php .

- 3. Kovai V, Rao GN, Holden B. Key factors determining success of primary eye care through vision centres in rural India: Patients' perspectives. Indian J Ophthalmol. 2012;60:487–91. doi: 10.4103/0301-4738.100558.

- 4. Paxos Scope. [Last cited on 2015 Jan 18]. Available from: https://www.digisight.us/digisight/paxos-scope.php .

- 5. Myung D, Jais A, He L, Chang RT. Simple, low-cost smartphone adapter for rapid, high quality ocular anterior segment imaging: A photo diary. J Mob Technol Med. 2014;3:2–8.

- 6. Myung D, Jais A, He L, Blumenkranz MS, Chang RT. 3D printed smartphone indirect lens adapter for rapid, high quality retinal imaging. J Mob Technol Med. 2014;3:9–15.

- 7. Lamirel C, Bruce BB, Wright DW, Newman NJ, Biousse V. Nonmydriatic digital ocular fundus photography on the iPhone 3G: The FOTO-ED study. Arch Ophthalmol. 2012;130:939–40. doi: 10.1001/archophthalmol.2011.2488.

- 8. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) – A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–81. doi: 10.1016/j.jbi.2008.08.010.

- 9. Haddock LJ, Kim DY, Mukai S. Simple, inexpensive technique for high-quality smartphone fundus photography in human and animal eyes. J Ophthalmol. 2013;2013:518479. doi: 10.1155/2013/518479.

- 10. Shrout PE, Fleiss JL. Intraclass correlations: Uses in assessing rater reliability. Psychol Bull. 1979;86:420–8. doi: 10.1037//0033-2909.86.2.420.

- 11. Kim DY, Delori F, Mukai S. Smartphone photography safety. Ophthalmology. 2012;119:2200–1. doi: 10.1016/j.ophtha.2012.05.005.

- 12. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–74.

- 13. Lamirel C, Bruce BB, Wright DW, Delaney KP, Newman NJ, Biousse V. Quality of nonmydriatic digital fundus photography obtained by nurse practitioners in the emergency department: The FOTO-ED study. Ophthalmology. 2012;119:617–24. doi: 10.1016/j.ophtha.2011.09.013.

- 14. Lord RK, Shah VA, San Filippo AN, Krishna R. Novel uses of smartphones in ophthalmology. Ophthalmology. 2010;117:1274.e3. doi: 10.1016/j.ophtha.2010.01.001.

- 15. Chhablani J, Kaja S, Shah VA. Smartphones in ophthalmology. Indian J Ophthalmol. 2012;60:127–31. doi: 10.4103/0301-4738.94054.

- 16. Smith L. Pharmas Need to Take a Targeted Approach to Reach Physicians in China, Brazil and Australia, Study Shows. Burlington, Massachusetts, USA: 2014. [Last updated on 2014 Apr 28; Last cited on 2014 Oct 30]. Available from: http://www.prnewswire.com/news-releases/pharmas-need-to-take-a-targeted-approach-to-reach-physicians-in-china-brazil-and-australia-study-shows-256993321.html .

- 17. Maximizing Multi-Screen Engagement among Clinicians. San Francisco, CA, USA: Epocrates, Inc.; 2013. [Last cited on 2014 Oct 30]. Available from: http://www.epocrates.com/oldsite/statistics/2013.Epocrates.MobileTrends.Report_FINAL.pdf .