Abstract

Orienting our eyes to a light, a sound, or a touch occurs effortlessly, despite the fact that sound and touch have to be converted from head- and body-based coordinates to eye-based coordinates to do so. We asked whether the oculomotor representation is also used for localization of sounds even when there is no saccade to the sound source. To address this, we examined whether saccades introduced similar errors in localization judgments for both visual and auditory stimuli. Sixteen subjects indicated the direction of a visual or auditory apparent motion seen or heard between two targets presented either during fixation or straddling a saccade. Compared with the fixation baseline, saccades introduced errors in direction judgments for both visual and auditory stimuli: in both cases, apparent motion judgments were biased in the direction of the saccade. These saccade-induced effects across modalities raise the possibility of shared, cross-modal location coding for perception and action.

Keywords: auditory localization, eye movements, multisensory processing, remapping, spatial perception

Orienting to stimuli improves the processing speed and precision necessary for optimal motor actions. In humans, saccadic eye movements are the dominant form of orienting, yet sound and touch also convey information about space and can attract saccades. This poses an interesting challenge because each sensory modality is coded in its own reference frame: auditory location is in head-based coordinates (e.g., Hofman and Van Opstal 1998; Middlebrooks 1992; Middlebrooks and Green 1991; Wightman and Kistler 1989) and touch is somatotopic or body based (e.g., Björnsdotter et al. 2009; Walshe 1948), whereas vision is eye based (retinotopic; e.g., Head and Holmes 1911). Clearly, these different reference frames must be converted into eye-based coordinates every time a saccade is made to a nonvisual target (tactile: Diederich et al. 2003; Meredith and Stein 1986; Munoz and Wurtz 1995a, 1995b; auditory: Collins et al. 2010; Jay and Sparks 1987a, 1987b; Lee and Groh 2012; Russo and Bruce 1994). In this study we ask how the position of a nonvisual stimulus is represented when no saccade is planned. For example, the location of an auditory stimulus could be coded predominantly in the standard head-based coordinates if no saccade were involved. If this were the case, there should be no influence of eye position or eye movements on auditory localization. However, it may also be the case that the locations of sensory targets, whatever the modality, are coded on a map that serves overt orienting. Perceptual location would then emerge from the coding of a target on a common location map, and as a consequence, there should be interactions between eye movements and auditory localization if that common map is an oculomotor control map.

Indeed, there are several investigations demonstrating that auditory locations are influenced by static eye position (Harrar and Harris 2009; Lewald 1997, 1998; Lewald and Ehrenstein 1998; Pritchett and Harris 2011). For example, Lewald (1998) showed that localization of sound sources is biased during eccentric fixation such that sounds are repelled from fixation (see also Lewald 1997; Lewald and Ehrenstein 1998). However, Klingenhöfer and Bremmer (2009) proposed that it is the presence of visual stimuli that causes the auditory shift in this case. They replicated the previous findings by Lewald (1998) and Lewald and Ehrenstein (1998) but added a control condition in which visual targets were removed and fixation was held at a memorized location. In this control, there was no influence of fixation position on auditory localization, raising the possibility that such mislocalizations only arise when eye position is combined with a visual stimulus.

More recently, interest has arisen in localization of sound across changes in eye position, rather than static eye position. Collins et al. (2010) showed an effect of saccades on auditory localization. Their observers made saccades to auditory or visual targets, and during each saccade the target was displaced toward the original fixation. After several trials, this saccadic adaptation produced shortened saccades, compensating for the target displacement. Critically, the perceived location of visual or auditory probes tested at the saccade target location following the adaptation was also biased in the direction of adaptation. Collins et al. (2010) showed that the mislocalizations were correlated across the modalities; that is, subjects with a large shift for visual probes also showed a large shift for auditory probes, pointing further toward a shared reference frame.

Klingenhöfer and Bremmer (2009) came to the opposite conclusion based on position shifts seen for brief probes around the time of a saccade. When a visual stimulus is flashed briefly within 50 ms of a saccade, there is a dramatic mislocalization of the flash toward the saccade target: the perisaccadic compression effect (Honda 1989; Ross et al. 1997, 2001). Klingenhöfer and Bremmer (2009) found that auditory stimuli are also mislocalized if presented just before a saccade but are only shifted in one direction whatever the test location, rather than showing shifts always toward the saccade target. The authors concluded that auditory and visual locations do not share the same map.

In contrast, a study by Pavani et al. (2008) favors eye-based coding of auditory targets. The authors employed a same-different delay procedure where observers judged whether the second of two sounds came from the same location as the first. Participants' performance diminished if the second sound moved in the direction opposite to the intervening eye movement(s) between the two stimulus presentations. One interpretation of this result was that the first, presaccadic auditory target was mislocalized in the direction opposite to that of the saccade, degrading displacement judgments in that direction.

The observation of Pavani et al. (2008) is reminiscent of that by Szinte and Cavanagh (2011) in the visual domain. They reported that the direction of a vertical apparent motion is altered by a horizontal eye movement occurring between the first and second stimulus of the apparent motion. That is, a downward visual motion straddling a leftward saccade is perceived to be tilted to the left, suggesting that the first stimulus was mislocalized in the direction opposite to the saccade, similar to the shift possibly underlying the findings of Pavani et al. (2008) for auditory stimuli. Therefore the two investigations give rise to the possibility that auditory and visual locations are coded on a common map.

To sum up, Klingenhöfer and Bremmer (2009) dismissed the idea of common location coding for auditory and visual targets by showing that saccadic compression of visual space is not translated into auditory space perception. Yet, multiple studies indicate common coding for visual and auditory locations, both in designs with static eye position (Harrar and Harris 2009, 2010; Lewald 1997, 1998; Lewald and Ehrenstein 1998; Pritchett and Harris 2011) and in designs with eye movements (Collins et al. 2010; Pavani et al. 2008). Importantly, however, in the study by Collins et al. (2010), coordinate transformations necessarily had to occur because subjects made an eye movement to the auditory saccade target, requiring this auditory location to be converted to eye-based coordinates. Pavani et al. (2008), on the other hand, showed saccade-induced mislocalizations of auditory targets even when no eye movements to the auditory stimuli were made. Furthermore, taken together with the findings of Szinte and Cavanagh (2011), the observation of Pavani et al. (2008) suggests similar saccade-induced mislocalization across saccades for both auditory and visual targets. However, a study that directly compares auditory and visual mislocalization is lacking [but see Klingenhöfer and Bremmer (2009) for mislocalizations at earlier time intervals relative to the saccade]. To address this, we examined localization judgments of auditory and visual locations across saccades when no eye movement is planned to the auditory (or visual) probes. This allowed us to assess whether auditory and visual locations show a similar, stable mislocalization bias even when no oculomotor transformation is applied to the stimuli.

Healthy adult participants had to report the direction of a horizontal apparent motion between two successively presented targets, either visual or auditory. If both visual and auditory locations were coded on a common eye-centered map, then we would expect similar transsaccadic mislocalization and a correlation between visual and auditory mislocalization. In other words, observers with a large effect in one modality should show a large effect in the other modality, as well.

METHODS

Participants

Seventeen subjects (7 female, age 20–35 yr) participated in the experiment. Participants were paid for their time and signed consent forms. Experiments were carried out according to the ethical standards specified in the Declaration of Helsinki. A prescreening test was performed to assess the suitability of subjects for the auditory task (see below); one subject was excluded on this basis, leaving 16 participants.

Apparatus and Stimuli

Auditory conditions.

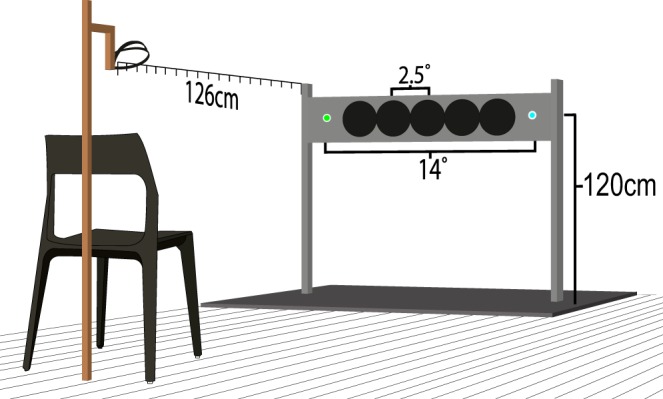

Stimuli were presented in a soundproof chamber. Five speakers (6-cm diameter) were mounted side by side on the horizontal bar of a frame 120 cm above the floor. At a viewing distance of ∼126 cm, the speakers were 2.5 visual degrees (°) apart, center to center. The sounds were broadband clicks presented at 54 dB, with a duration of 7 ms at 6,400 samples/s. Two multicolor light-emitting diodes (LEDs), one at each end of the speaker array, were placed 14° apart. At any time, each LED emitted either blue or green light. Placing a light at both ends of the array was intended to counteract capture effects, that is, the capture of the auditory stimulus by the visual stimulus. Using two locations does not eliminate the possibility of capture but at least avoids biasing localization toward one side over the other. The LEDs were controlled via an Arduino Uno 3, which in turn was directly controlled via the MATLAB toolbox ArduinoIO (The MathWorks). Responses were recorded with an Apple keyboard placed on the subject's lap. Refer to Figs. 1 and 2, A and B, for more details.

Fig. 1.

Auditory setup. Refer to text for more details.

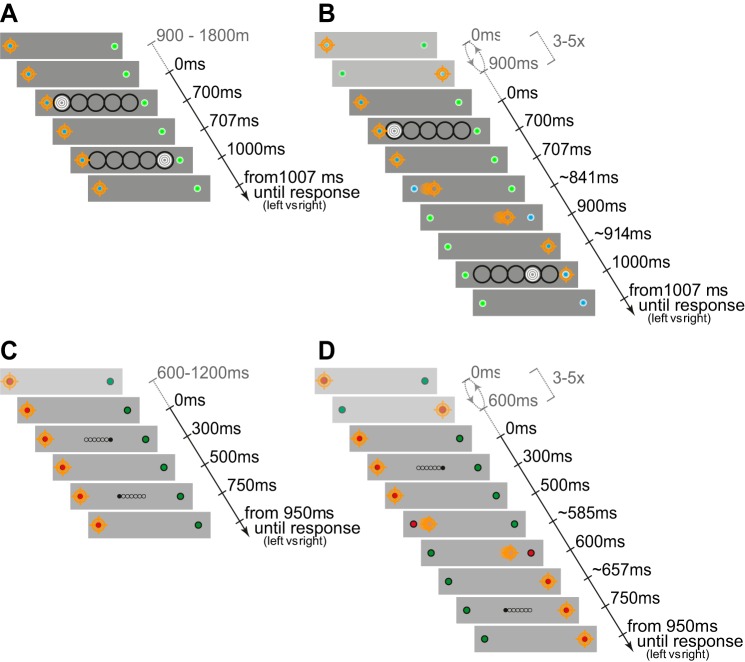

Fig. 2.

A: trial sequence for auditory fixation trials. The orange crosshair corresponds to eye position in this and all figures. In this example, the sound moved to the right. B: saccade trials with the sound moving to the right. C and D: trial sequence for visual fixation and saccade trials, respectively, with the target moving right to left. B and D show the average saccadic latencies. Note: all probe locations are also illustrated, but only one probe at a time was presented in the experiment. Scaling is altered for the purposes of illustration. Saccade starting and landing times are mean values obtained across participants.

We also ran an auditory control condition with three of the participants from the main auditory condition. In this control condition, we tracked eye movements with an EyeLink II (SR Research) for online detection of fixation and eye movements. The eye-tracking procedure and analysis were the same as described below for the visual conditions, except that we used a three-point calibration that was repeated every 20 trials.

Visual conditions.

Stimuli were presented in a separate testing chamber. A Power Mac G5 controlled the stimuli and displayed them on a Sony cathode ray tube monitor (refresh rate 100 Hz) against a uniform gray background. The saccade targets were red and green circles (0.7° in diameter), and the probe stimuli were two black circles (0.4° in diameter). The probes could appear at seven different locations and were always equidistant from the center, on opposite sides of it (Fig. 2, C and D). Responses were recorded with an Apple keyboard.

Design.

Eye movements of the right eye were monitored in the visual condition with an EyeLink 1000 at a 1,000-Hz sampling rate. At the beginning of a session, the EyeLink was calibrated with the standard five-point EyeLink procedure. Fixation was checked before each trial; if the gaze position measured during this check deviated by more than 1.5° from the calibrated position, a new calibration was initiated. Calibration was also automatically renewed every 30 trials.

Online analysis of fixation location was based on the standard EyeLink algorithm, with the standard velocity, acceleration, and displacement thresholds of 30°/s, 8,000°/s², and 0.15°, respectively (EyeLink User Manual; SR Research 2005). The timing of the gaze-contingent checks is described below under Procedure. Experiments were programmed using the Psychophysics Toolbox (Brainard 1997; Pelli 1997), ArduinoIO (MATLAB), and the EyeLink Toolbox (Cornelissen et al. 2002).
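How velocity, acceleration, and displacement thresholds of this kind separate saccades from fixation can be illustrated with a deliberately simplified detector. This is a minimal sketch, assuming a one-dimensional horizontal gaze trace in degrees and plain finite-difference derivatives; it is not SR Research's proprietary EyeLink parser, which filters the signal and applies the 0.15° motion threshold differently. The function names are hypothetical.

```python
from typing import List, Tuple

# Threshold values quoted in the text (illustrative use only).
VEL_THRESH = 30.0    # deg/s
ACC_THRESH = 8000.0  # deg/s^2
MIN_DISP = 0.15      # deg


def _derivative(sig: List[float], dt: float) -> List[float]:
    """Central-difference derivative; one-sided differences at the two ends."""
    n = len(sig)
    out = [0.0] * n
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        if hi > lo:
            out[i] = (sig[hi] - sig[lo]) / ((hi - lo) * dt)
    return out


def detect_saccades(x: List[float], fs: float = 1000.0) -> List[Tuple[int, int]]:
    """Return (start, end) sample indices of candidate saccades in a gaze trace.

    A sample is flagged when |velocity| > VEL_THRESH or |acceleration| > ACC_THRESH;
    a run of flagged samples is kept only if gaze moved at least MIN_DISP over it.
    """
    dt = 1.0 / fs
    vel = _derivative(x, dt)
    acc = _derivative(vel, dt)
    flagged = [abs(v) > VEL_THRESH or abs(a) > ACC_THRESH for v, a in zip(vel, acc)]

    events = []
    i, n = 0, len(x)
    while i < n:
        if flagged[i]:
            start = i
            while i + 1 < n and flagged[i + 1]:
                i += 1
            if abs(x[i] - x[start]) >= MIN_DISP:
                events.append((start, i))
        i += 1
    return events


# Synthetic 1,000-Hz trace: fixate at 0°, move to 14° over 50 ms, then fixate again.
trace = [0.0] * 100 + [14.0 * k / 50 for k in range(1, 51)] + [14.0] * 100
print(detect_saccades(trace))  # -> [(98, 150)]
```

The acceleration criterion flags the sample just before and just after the ramp, so the detected event is slightly wider than the movement itself; a real parser handles such onset and offset details with additional rules.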

Procedure

Participants were instructed to indicate the direction of an auditory and visual apparent motion in two different conditions: fixation and saccade. Fixation and saccade conditions are described below, and the differences between auditory and visual conditions are presented in Table 1.

Table 1.

Differences between auditory and visual conditions

| | Auditory | Visual |
|---|---|---|
| Prestimulus fixation interval, ms | 900–1,800 | 600–1,200 |
| Probe stimulus duration, ms | 7 | 200 |
| Jump size | 0°–10°, in steps of 2.5° | 0°–2.4°, in steps of 0.4° |
| Interstimulus interval, ms | 300 | 250 |
| Intersaccade interval, ms | 900 | 600 |
| No. of trials (fixation + saccade) | 200 + 200 | 208 (104)* + 208 |
| Eye tracking | 3 subjects | All subjects |
| Recycled trials, % | | |
| Participant rejected trial | 2.4 | 2.6 |
| Fixation error | 3.2 (SE 0.8) | 16.2 (SE 3.8) |
| Erroneous eye movements | 29.7 (SE 5.5) | 24.3 (SE 8.1) |

*Because the psychometric functions in the visual fixation condition proved to be steep and stable, the number of trials was reduced from 208 to 104 after the fifth subject, in the fixation condition only. This did not alter the slopes of the psychometric functions [t(14) = 0.18; P > 0.861].

Prescreening test.

Participants performed 25 trials of a simple auditory direction discrimination task to assess their basic ability to discriminate the direction of sound motion. Each stimulus was an apparent motion either to the left or to the right. The two sounds were 10° apart (maximum speaker separation; see Fig. 1), with a 200-ms stimulus onset asynchrony (SOA) followed by a 900-ms interstimulus interval (ISI), and the sequence was repeated until the participant pressed the space bar and indicated a leftward or rightward motion with the arrow keys. A feedback sound informed subjects whether their choice was correct. Participants who scored below 80% correct were excluded from further participation in the experiment (1 subject). After the first practice round, participants were asked whether they would like another (3 subjects requested a second round).
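The 80% criterion lies well above guessing. Assuming independent trials and a 50% chance of a correct guess on each (an assumption for illustration, not stated in the text), the probability of reaching 20 of 25 correct by guessing alone is about 0.2%, as this short binomial calculation shows:

```python
from math import comb


def p_at_least(k: int, n: int, p: float = 0.5) -> float:
    """P(X >= k) for X ~ Binomial(n, p): the chance of k or more correct by guessing."""
    return sum(comb(n, j) * p ** j * (1 - p) ** (n - j) for j in range(k, n + 1))


# 80% of the 25 prescreening trials is 20 correct responses.
print(f"{p_at_least(20, 25):.4f}")  # -> 0.0020
```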

Fixation trials.

In the fixation conditions, subjects had to fixate one of two possible peripheral fixation locations throughout the trial. Fixation location varied between trials such that half of the trials required fixation on the left and the other half fixation on the right. After the prestimulus fixation interval, the apparent motion stimulus was presented such that the second of the two probe stimuli appeared to the left of, to the right of, or at the same location as the first probe stimulus. Subjects indicated the direction (left or right) by pressing the left or right arrow key on the keyboard. During eye tracking (auditory control condition and visual condition), trials were recycled if a saccade was detected or if gaze deviated outside a predefined area (1.5° around fixation). In the visual condition an error message was displayed, whereas in the auditory condition the response was recorded and then an error sound was played.

Saccade trials.

Saccade trials began similarly with the instruction to fixate one of the two peripheral locations. After a brief intersaccade interval, the two peripheral locations changed color, instructing the subject to make a saccade to the opposite peripheral location. This was repeated several times until the first of the two apparent motion stimuli appeared briefly before a saccade and the second briefly after it (refer to Fig. 2 and Table 1 for more details). During eye tracking (auditory control condition and visual condition), gaze had to remain at the correct fixation location (within ±2°) until the end of the first probe presentation and then had to be detected at the new fixation location (within ±2°) before the onset of the second probe stimulus. If these criteria were not met, the trial was either completed but recycled at a later stage (auditory control condition) or aborted with an error message informing the participant about the type of error made (i.e., the eye movement was executed too late or too early) and then recycled at a later stage (visual condition). In both the fixation and saccade conditions, participants were also instructed to press the space bar whenever they felt distracted and judged that their response did not reflect the instructions.
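The two gaze-contingent criteria can be written as a single check on the gaze samples of a trial. The sketch below is hypothetical: the sample format, the function names, the ±7° fixation positions, and the reading of the second criterion as "the last sample before the second probe lies within 2° of the new location" are all assumptions, and samples are assumed to be sorted by time.

```python
from dataclasses import dataclass
from typing import List

TOL_DEG = 2.0  # ±2° window around each fixation location, as in the text


@dataclass
class GazeSample:
    t_ms: float   # time relative to onset of the first probe
    x_deg: float  # horizontal gaze position


def straddles_saccade(samples: List[GazeSample], old_fix: float, new_fix: float,
                      probe1_off_ms: float, probe2_on_ms: float,
                      tol: float = TOL_DEG) -> bool:
    """Hypothetical check that the two probes straddle the saccade."""
    # 1) Gaze must stay at the presaccadic location until the first probe ends.
    if any(s.t_ms <= probe1_off_ms and abs(s.x_deg - old_fix) > tol for s in samples):
        return False
    # 2) The last sample before the second probe must be at the new location.
    before = [s for s in samples if s.t_ms < probe2_on_ms]
    return bool(before) and abs(before[-1].x_deg - new_fix) <= tol


def synthetic_trial(sacc_on: int, sacc_dur: int = 72, old: float = -7.0,
                    new: float = 7.0, t_end: int = 600) -> List[GazeSample]:
    """1-ms samples with a linear 'saccade' from old to new starting at sacc_on."""
    out = []
    for t in range(t_end):
        if t < sacc_on:
            x = old
        elif t < sacc_on + sacc_dur:
            x = old + (new - old) * (t - sacc_on) / sacc_dur
        else:
            x = new
        out.append(GazeSample(t, x))
    return out


# Visual-condition timing, assuming the 250-ms ISI runs from the offset of the
# 200-ms first probe to the onset of the second probe (onset at 450 ms).
for onset in (150, 285, 420):
    print(onset, straddles_saccade(synthetic_trial(onset), -7.0, 7.0, 200, 450))
```

With these settings, a saccade starting at 150 ms fails the first criterion (too early), one starting at 420 ms fails the second (too late), and one starting at 285 ms passes.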

All participants were tested in the same order: auditory fixation, auditory saccade, visual fixation, and visual saccade. This order was chosen so that subjects who were unable to perform the auditory task could be excluded at an early stage. The fixation conditions additionally served as practice for the respective modality, which could have favored veridical performance in the saccade conditions.

Analysis

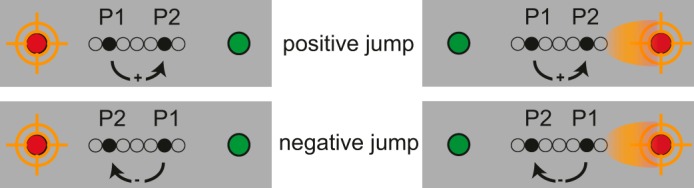

The first 10% of trials were practice trials and were excluded from the data analysis. The jump size between the two stimuli creating an apparent motion was coded relative to the (presaccadic) eye position. For example, on a trial with the instruction to fixate left, a jump was coded as positive if the second of the two probes was farther to the right, that is, if the direction of motion was away from fixation (see Fig. 3, top left). For each jump size, we calculated the proportion of trials in which subjects reported motion away from the (presaccadic) fixation location, which on saccade trials is the direction of the saccade. (Note that participants were asked to indicate the direction of the apparent motion in space, independent of fixation location and saccade direction.)
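The recoding described above can be sketched as follows. The trial format (presaccadic fixation side, physical jump with rightward positive, reported direction) and the function names are hypothetical; the sign convention follows Fig. 3, so on saccade trials the same code applies with the presaccadic fixation side.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def code_trial(fix_side: str, jump_deg: float, response: str) -> Tuple[float, bool]:
    """Recode one trial relative to the (presaccadic) fixation side.

    fix_side : 'left' or 'right'
    jump_deg : physical displacement of P2 relative to P1 (positive = rightward)
    response : reported direction in space, 'left' or 'right'
    """
    sign = 1.0 if fix_side == "left" else -1.0   # away from a left fixation is rightward
    coded_jump = sign * jump_deg + 0.0           # + 0.0 turns -0.0 into 0.0
    reported_away = (response == "right") == (fix_side == "left")
    return coded_jump, reported_away


def proportion_away(trials: Iterable[Tuple[str, float, str]]) -> Dict[float, float]:
    """Proportion of 'away from fixation' reports at each coded jump size."""
    counts = defaultdict(lambda: [0, 0])  # coded jump -> [n_away, n_total]
    for fix_side, jump, resp in trials:
        coded, away = code_trial(fix_side, jump, resp)
        counts[coded][0] += int(away)
        counts[coded][1] += 1
    return {j: a / n for j, (a, n) in sorted(counts.items())}


trials = [("left", 2.5, "right"), ("right", -2.5, "left"), ("left", -2.5, "left"),
          ("right", 0.0, "left"), ("left", 0.0, "left")]
print(proportion_away(trials))  # -> {-2.5: 0.0, 0.0: 0.5, 2.5: 1.0}
```

A leftward jump seen from a right fixation and a rightward jump seen from a left fixation both map to the same positive code, which is what allows left- and right-fixation trials to be pooled into one psychometric function.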

Fig. 3.

Coding used to analyze the data. Left, in fixation trials an apparent motion was marked as a positive jump if it moved away from the fixation location (top) and as negative if it moved toward the fixation (bottom). Right, in saccade trials the same coding applied, taken from the presaccadic fixation location: a jump size was positive if the apparent motion moved away from the presaccadic fixation location (top) and negative if it moved toward it (bottom). P1 and P2, first and second probes.

The data were fitted with a logistic function given by the formula:

In this formulation, the point of subjective equality (PSE) is given by the fitted value of B, the x-value at which the curve crosses 0.5 (both response alternatives are equally likely at this point). The slope of the best-fitting function is determined by the value of C, which scales the slope nonlinearly. The parameters were estimated by nonlinear least-squares fitting (lsqnonlin from the MATLAB Optimization Toolbox).
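As an illustration only, the sketch below assumes the common two-parameter logistic p(x) = 1/(1 + exp(−(x − B)/C)), in which B is the jump size at which p = 0.5 (the PSE) and C > 0 sets the steepness; the exact parametrization used in the study may differ. It minimizes the squared error between fitted and observed proportions with a small Levenberg-Marquardt loop in plain Python, as a stand-in for MATLAB's lsqnonlin rather than a reproduction of it. All names are hypothetical.

```python
import math
from typing import List, Tuple


def logistic(x: float, B: float, C: float) -> float:
    """Assumed form p(x) = 1 / (1 + exp(-(x - B) / C)); p(B) = 0.5, C > 0 sets steepness."""
    z = (x - B) / C
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)            # numerically stable branch for large negative z
    return e / (1.0 + e)


def sse(xs: List[float], ys: List[float], B: float, C: float) -> float:
    return sum((logistic(x, B, C) - y) ** 2 for x, y in zip(xs, ys))


def fit_logistic(xs: List[float], ys: List[float], B: float = 0.0, C: float = 1.0,
                 iters: int = 200) -> Tuple[float, float]:
    """Least-squares estimate of (B, C) with a small Levenberg-Marquardt loop."""
    lam, cost = 1e-3, sse(xs, ys, B, C)
    for _ in range(iters):
        # Build J^T J and J^T r for residuals r_i = p(x_i) - y_i.
        a11 = a12 = a22 = g1 = g2 = 0.0
        for x, y in zip(xs, ys):
            p = logistic(x, B, C)
            d = p * (1.0 - p)
            jB = -d / C                  # dp/dB
            jC = -d * (x - B) / C ** 2   # dp/dC
            r = p - y
            a11 += jB * jB
            a12 += jB * jC
            a22 += jC * jC
            g1 += jB * r
            g2 += jC * r
        # Solve the damped 2 x 2 normal equations for the step (Cramer's rule).
        m11, m22 = a11 * (1.0 + lam), a22 * (1.0 + lam)
        det = m11 * m22 - a12 * a12
        if det == 0.0:
            break
        dB = (-g1 * m22 + g2 * a12) / det
        dC = (-g2 * m11 + g1 * a12) / det
        if C + dC > 0.0:
            new_cost = sse(xs, ys, B + dB, C + dC)
            if new_cost < cost:           # accept: move toward Gauss-Newton
                B, C, cost, lam = B + dB, C + dC, new_cost, lam * 0.1
                if abs(dB) < 1e-12 and abs(dC) < 1e-12:
                    break
                continue
        lam *= 10.0                       # reject: move toward gradient descent
        if lam > 1e12:
            break
    return B, C


# Noise-free check with hypothetical jump sizes (deg) and a known PSE and slope.
xs = [-2.4 + 0.4 * i for i in range(13)]
ys = [logistic(x, -1.13, 0.4) for x in xs]
B_hat, C_hat = fit_logistic(xs, ys)
print(round(B_hat, 3), round(C_hat, 3))
```

Under this parametrization the slope at the PSE is 1/(4C), so smaller values of C correspond to steeper psychometric functions.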

To obtain confidence intervals for the estimated parameters for each subject, we computed bootstrap estimates by resampling data sets of equal size (with replacement) from the original data (1,000 resamples per subject). Sampling was performed across trials while the distribution of trials across stimulus conditions was maintained. Confidence intervals (CI) of the PSE were then computed as the variability of the PSE bootstrap estimates, and 1 SE was equal to the standard deviation of the PSE bootstrap estimates. To support the correlations within and across modalities, we performed a weighted linear regression, taking the noise (SE of the PSE) in x and y into account (York et al. 2004).
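The stratified resampling described above (trials resampled within each stimulus level so that the number of trials per level is preserved) can be sketched as follows. To keep the example short, the PSE is estimated by linear interpolation of the 0.5 crossing rather than by the logistic fit, and the interval uses the 2.5th and 97.5th percentiles of the bootstrap estimates; both are assumptions for illustration, and the function names and data are hypothetical.

```python
import math
import random
import statistics
from typing import Dict, List, Tuple


def pse_interp(resp_by_jump: Dict[float, List[int]]) -> float:
    """Stand-in PSE: linear interpolation of the first 0.5 crossing of the proportion curve."""
    levels = sorted(resp_by_jump)
    props = [sum(resp_by_jump[x]) / len(resp_by_jump[x]) for x in levels]
    for x0, p0, x1, p1 in zip(levels, props, levels[1:], props[1:]):
        if p0 != p1 and (p0 - 0.5) * (p1 - 0.5) <= 0:
            return x0 + (0.5 - p0) * (x1 - x0) / (p1 - p0)
    return math.nan


def bootstrap_pse(resp_by_jump: Dict[float, List[int]], n_boot: int = 1000,
                  seed: int = 0) -> Tuple[float, Tuple[float, float]]:
    """Bootstrap SE and 95% percentile interval of the PSE.

    Trials are resampled with replacement separately at each jump size, so the
    number of trials per stimulus level matches the original data set.
    """
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_boot):
        sample = {x: rng.choices(r, k=len(r)) for x, r in resp_by_jump.items()}
        pse = pse_interp(sample)
        if not math.isnan(pse):          # skip resamples with no 0.5 crossing
            estimates.append(pse)
    estimates.sort()
    se = statistics.stdev(estimates)     # 1 SE = SD of the bootstrap estimates
    lo = estimates[int(0.025 * (len(estimates) - 1))]
    hi = estimates[int(0.975 * (len(estimates) - 1))]
    return se, (lo, hi)


# Hypothetical responses (1 = "away from fixation") at three jump sizes (deg).
data = {-2.5: [1] * 2 + [0] * 18, 0.0: [1] * 12 + [0] * 8, 2.5: [1] * 19 + [0] * 1}
print(pse_interp(data))
print(bootstrap_pse(data))
```

Resampling within levels, rather than across the pooled trial list, keeps every bootstrap data set on the same stimulus design as the original, which is the constraint stated in the text.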

RESULTS

Auditory Trials with Fixation

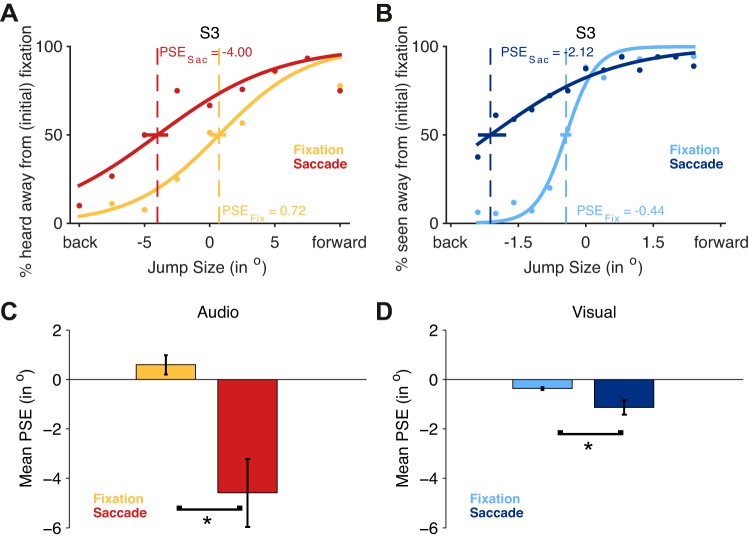

Overall, participants were 74.5% correct in judging the direction of motion, with 93.8% (SE 2.95%) correct responses at the easiest level (i.e., maximal speaker separation). Figure 4A shows the psychometric function for one subject (S3), and Fig. 4C shows the mean PSE across all subjects. The mean PSE (0.61°; SE 0.39°) did not differ from zero [t(15) = 1.588; P > 0.133], indicating that performance was close to veridical.

Fig. 4.

Results for the auditory and visual conditions. A: data for sample subject S3 in the auditory task. The orange line reflects the psychometric function for fixation trials, the red line that for saccade trials. As shown, the PSE is shifted for saccade trials (PSESac) in the direction of the saccade. PSEFix, PSE for fixation condition. B: data for the same subject (S3) in the visual condition. Light blue reflects the fit for fixation trials, dark blue that for saccade trials. Again, the PSE in the saccade condition is shifted such that even a jump size of over 2° in the direction opposite to the saccade is reported 50% of the time as occurring in the direction of the saccade. Colored horizontal bars indicate bootstrapped CIs of the PSEs. Note the different scaling of the horizontal axes of A and B. C and D: group means for PSEs, reflecting the jump size that appears stationary (light colors reflect fixation conditions, darker colors saccade conditions). Error bars are SE. *P < 0.05, significant difference.

Auditory Trials with Saccades

Overall, participants were 66.3% correct, with 94.8% (SE 2.65%) correct responses at the easiest level. The mean PSE of the psychometric curves for saccade trials (−4.52°; SE 1.39°) was significantly shifted in the direction opposite to the eye movement [t(15) = 3.25; P < 0.005], indicating that subjects perceived a jump of ∼4.5° in the direction opposite to the saccade as equally likely to be moving to the right or to the left.

Auditory Trials: Fixation vs. Saccades

The difference in PSE between saccade and fixation trials differed significantly from zero [t(15) = 3.434; P < 0.004]. The PSEs of fixation and saccade trials were not correlated [unweighted r(14) = −0.141; P > 0.602], although the weighted linear regression was significant (slope: −3.242; uncertainty interval: ±1.6). The slopes of the psychometric functions also differed significantly [t(15) = 3.023; P < 0.009], being steeper in the fixation condition than in the saccade condition. Slope steepness in the fixation condition predicted slope steepness in the saccade condition [r(11) = 0.604; P < 0.013]. Bootstrapped CIs for each subject's fixation and saccade PSEs showed a significant shift (no overlap) for 11 of the 16 subjects (68.75%).

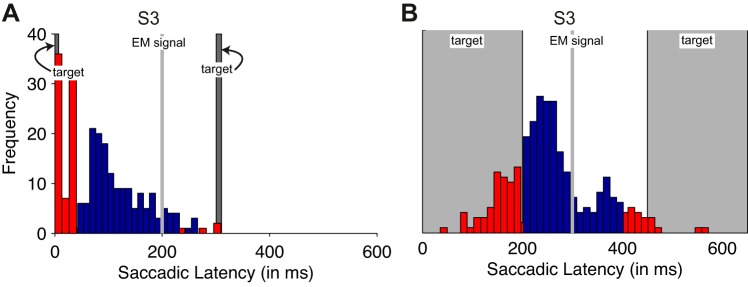

In the eye movement control condition, the gaze-contingent screening ensured that the two probe sounds straddled the saccade, so that they were never both heard before or both heard after the saccade. Trials with saccades failing these criteria were rerun; overall, 29.67% of trials had saccades that began too soon or landed too late. We assume that a similar percentage of trials would have failed these criteria in the main condition as well. The critical saccade occurred on average 140.8 ms (SE 28.5 ms) after the onset of the first probe sound. Fixation at the postsaccadic location was detected on average 86.8 ms (SE 30.6 ms) before the onset of the second probe sound. A histogram of sample participant S3's saccadic latencies is shown in Fig. 5A.

Fig. 5.

Saccade timings for the auditory saccade condition (A) and the visual saccade condition (B) for subject S3. The critical saccade is plotted relative to the first of the 2 apparent motion stimuli (but note that the saccades were executed relative to the saccade signal). The dark gray rectangle on the left shows the onset and duration of the presaccadic probe, and the rectangle on the right for the postsaccadic probe. Blue bars are included eye movements; red bars are excluded eye movements. EM signal,

Given these control condition results, the main condition almost certainly also included a percentage of trials in which the two probe sounds were both heard before the saccade or both after it. Nevertheless, the results in the control condition, where all trials with early or late saccades were excluded, were quite similar to those in the main experiment. Specifically, the two participants who showed a significant difference in PSEs between saccade and fixation conditions in the main session also showed a significant difference in the control (the bootstrapped SEs of the PSEs for the fixation and saccade conditions did not overlap). The one participant with no significant difference in the control (overlapping fixation and saccade SEs) also showed no significant difference in the main condition. These results confirm that saccade-induced mislocalizations of auditory space occur even when eye movements are carefully controlled.

Visual Trials with Fixation

Overall, participants were 88.4% correct in judging the direction of the motion, with 99.6% (SE 0.05%) correct responses at the easiest level (maximum probe separation). Figure 4B shows the psychometric function for sample subject S3, and Fig. 4D shows the mean PSE across all subjects. The mean PSE (−0.27°; SE 0.05°) differed significantly from zero [t(15) = 7; P < 0.001], indicating that subjects would perceive two stimuli with no offset as making a jump of ∼0.3° away from fixation.

Visual Trials with Saccades

Overall, participants were 75.0% correct, with 97.3% (SE 0.03) correct responses at the easiest level. On average, the critical saccade occurred 284.7 ms (SE 4.7 ms) after the onset of the first probe stimulus. The average duration of the critical saccade was 72.1 ms (SE 4.2 ms), such that the saccade ended on average 56.7 ms (SE 3.9 ms) after the color switch, ∼90 ms before the onset of the second probe stimulus (refer to Fig. 5).

In saccade trials, the mean PSE (−1.13°; SE 0.08°) was significantly shifted in the direction opposite to the saccade [t(15) = 3.867; P < 0.002], indicating that subjects perceived two stimuli with an offset of ∼1.1° in the direction opposite to the saccade as equally likely to be moving left or right (Fig. 4, B and D).

Visual Trials: Fixation vs. Saccades

The PSEs for fixation and saccade trials differed significantly [t(15) = 2.336; P < 0.034] and were correlated across subjects [r(14) = −0.576; P < 0.02], such that a large shift in the fixation condition was associated with a small shift in the saccade condition. The slopes of the psychometric functions also differed significantly between fixation and saccade trials [t(15) = 5.809; P < 0.001], but slopes in fixation trials did not predict slopes in saccade trials [r(14) = 0.007; P > 0.978]. Individually bootstrapped CIs of the PSEs revealed significant individual shifts (no overlap in CIs) for 8 of 16 subjects (50%).

Auditory vs. Visual Conditions

A repeated-measures ANOVA with the within-subject factors modality (auditory, visual) and motion (fixation, saccade) was conducted. In line with the individual analyses reported above, the main effect of motion was significant [F(1,15) = 12.3; P < 0.004]: the PSE was shifted more in the saccade conditions (mean −2.83°; SE 0.08°) than in the fixation conditions [mean 0.12°; SE 0.2°; t(15) = 3.507; P < 0.004]. The main effect of modality was marginally significant [F(1,15) = 3.494; P = 0.082]. However, the interaction between modality and motion [F(1,15) = 10.294; P < 0.006] and subsequent planned comparisons suggest that shifts in PSE were larger in auditory than in visual saccade trials [t(15) = 2.675; P < 0.017] and that the fixation conditions also differed between auditory and visual trials [t(15) = 2.518; P < 0.024]. Importantly, and of main interest to this investigation, the interaction did not abolish the main effect of motion that is visible in Fig. 4, C and D, and was tested in the individual analyses above: direction judgments were shifted more in the saccade conditions than in the fixation conditions for both auditory and visual stimuli.

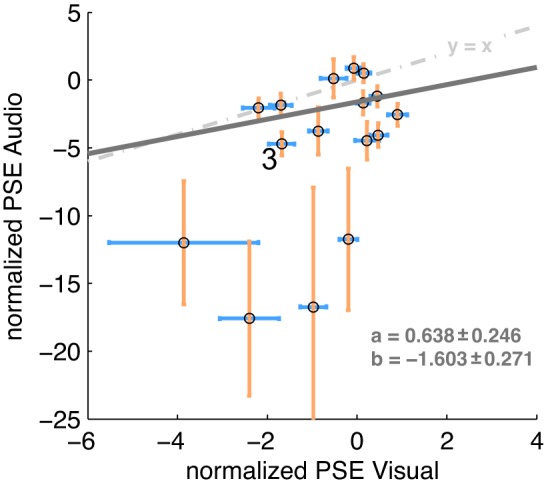

Finally, we asked whether the saccade-induced effects were correlated across participants. If locations were coded on a common map, then we would expect the auditory and visual mislocalization judgments to be associated across modalities. A Pearson correlation revealed a significant unweighted correlation between the normalized PSE values (saccade-fixation) for visual probes and for auditory probes [r(14) = 0.497; P = 0.050; bootstrap 95% CI 0.023–0.774]. The relationship was further supported by a weighted linear regression that took the errors of both variables (visual and auditory) into account. The slope of the regression differed from zero (slope: 0.638 ± 0.246). This suggests that subjects with a large saccade-induced displacement of auditory probes also had a large saccade-induced displacement of visual probes (Fig. 6).
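A regression of this kind, with uncertainty in both x and y, can be sketched with the iterative solution of York et al. (2004). This is a minimal illustration assuming uncorrelated x and y errors, not the authors' analysis code; in this application, x and y would be the normalized visual and auditory PSEs and sx, sy their bootstrapped SEs. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def _york_terms(b: float, x: Sequence[float], y: Sequence[float],
                wx: List[float], wy: List[float]):
    """Weights, weighted means and beta_i of York et al. (2004) for slope b (no x-y error correlation)."""
    W = [wxi * wyi / (wxi + b * b * wyi) for wxi, wyi in zip(wx, wy)]
    sw = sum(W)
    xbar = sum(w * xi for w, xi in zip(W, x)) / sw
    ybar = sum(w * yi for w, yi in zip(W, y)) / sw
    U = [xi - xbar for xi in x]
    V = [yi - ybar for yi in y]
    beta = [w * (u / wyi + b * v / wxi) for w, u, v, wxi, wyi in zip(W, U, V, wx, wy)]
    return W, xbar, ybar, U, V, beta


def york_fit(x: Sequence[float], y: Sequence[float], sx: Sequence[float],
             sy: Sequence[float], tol: float = 1e-12,
             max_iter: int = 100) -> Tuple[float, float, float, float]:
    """Fit y = a + b*x given per-point SEs sx, sy. Returns (a, b, se_a, se_b)."""
    wx = [1.0 / s ** 2 for s in sx]
    wy = [1.0 / s ** 2 for s in sy]
    mx, my = sum(x) / len(x), sum(y) / len(y)
    # Start from the ordinary least-squares slope.
    b = (sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
         / sum((xi - mx) ** 2 for xi in x))
    for _ in range(max_iter):
        W, _, _, U, V, beta = _york_terms(b, x, y, wx, wy)
        b_new = (sum(w * bt * v for w, bt, v in zip(W, beta, V))
                 / sum(w * bt * u for w, bt, u in zip(W, beta, U)))
        done = abs(b_new - b) < tol
        b = b_new
        if done:
            break
    W, xbar, ybar, _, _, beta = _york_terms(b, x, y, wx, wy)
    a = ybar - b * xbar
    # Standard errors from the adjusted x values x_i = xbar + beta_i.
    sw = sum(W)
    xadj = [xbar + bt for bt in beta]
    xm = sum(w * xi for w, xi in zip(W, xadj)) / sw
    se_b = math.sqrt(1.0 / sum(w * (xi - xm) ** 2 for w, xi in zip(W, xadj)))
    se_a = math.sqrt(1.0 / sw + xm ** 2 * se_b ** 2)
    return a, b, se_a, se_b


x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [1.0 + 2.0 * xi for xi in x]
print(york_fit(x, y, [0.1] * 5, [0.2] * 5))
```

Unlike ordinary least squares, which treats x as exact, this weighting lets subjects with noisy PSEs in either modality pull less on the fitted slope.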

Fig. 6.

Cross-modal relationship for the normalized PSEs; each point shows the normalized PSE (fixation-saccade) for 1 subject in the auditory (y-axis) and visual (x-axis) conditions. Error bars are SEs of the visual and auditory PSEs for each subject. The dark gray line shows the weighted linear regression, and its slope a and intercept b are shown (means ± SE). The light gray dashed line shows the identity relationship between x and y. Note that the axis scales differ between x and y. The label 3 marks data for sample subject S3. Refer to text for further details.

DISCUSSION

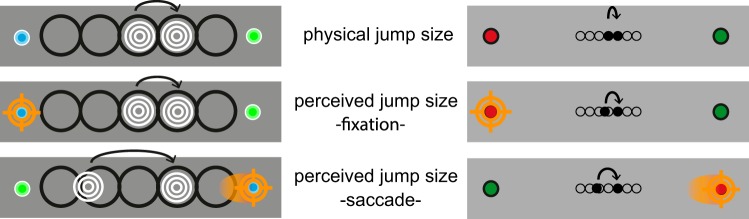

When a saccade intervened between the presentations of two stimuli, the perceived direction of motion between the two stimuli was biased in the direction of the saccade. This systematic misperception occurred regardless of the modality (auditory or visual) in which the probe stimuli were presented. A visualization of the effect is shown in Fig. 7.

Fig. 7.

Schematic representation of the visual and auditory mislocalization judgments. Left, auditory conditions. Shown is a representation of the speaker setup. Top, the physical jump size is shown as a motion to the right. Middle, the hypothetical perception of this physical jump size during fixation based on the shift of the PSE of the mean psychometric fit for fixation. Bottom, the extrapolation of the perceived jump size as a rightward saccade crosses the rightward apparent motion. The jump size is perceptually expanded by more than one stimulus level (i.e., 1 speaker separation). Right, visual conditions. Top, the physical jump size of the shortest stimulus separation is shown. Middle, the perceptual shift extrapolated from the PSE of the mean psychometric fit at fixation: the perceived jump size is somewhat elongated. Bottom, the perceptual shift of the rightward apparent motion across a rightward saccade. The perceived jump size is elongated by more than one stimulus separation, similar to what is observed in the auditory condition.

The size of this perceptual shift was furthermore correlated between modalities, suggesting that auditory and visual saccade-induced displacement illusions covary across subjects: subjects with a large displacement in one modality tended to show a large displacement in the other. However, because this correlation only just reached significance (P = 0.05), this interpretation should be treated with caution.

We examined the possibility that auditory and visual targets are maintained on a common, eye-centered map. For an apparent motion straddling a saccade, the PSE was shifted in the direction opposite to the saccade, indicating a horizontal shift between the remembered stimulus location (presaccadic stimulus) and the reference probe (postsaccadic stimulus). These mislocalizations were observed for both visual and auditory targets, suggesting that saccades interfere with the representation of location in both sensory modalities. Importantly, these mislocalizations occurred in the auditory condition even though no eye movement to the auditory target was planned.

The current results confirm and replicate previous observations in both the visual (Szinte and Cavanagh 2011) and the auditory domain (Pavani et al. 2008). Szinte and Cavanagh (2011) showed that horizontal saccades make a vertical motion appear tilted, as if the first location were shifted back in the direction opposite to the saccade, suggesting a hypermetric correction for the saccade. We extend this finding by showing that these hypermetric visual corrections also occur for stimuli appearing in apparent motion along the horizontal midline between the saccadic endpoints. Pavani et al. (2008) showed that auditory location judgments were unaffected during fixation but that, with an intervening saccade, the sound was mislocalized in the direction opposite to the saccade, reducing discrimination between the pre- and postsaccadic locations. We extend this finding by showing that systematic misjudgments of sound location can reverse the perceived direction of an auditory apparent motion. Importantly, by combining the two previous paradigms, we show that the two separately examined phenomena may have a common origin: in both modalities, saccades induce a similar location judgment error for stimuli presented just before and just after the saccade.

The current findings are also in line with those of Collins et al. (2010), who showed that saccadic adaptation biases the localization of both visual and auditory stimuli. We extend this finding by showing that auditory localization judgments are influenced by saccades even when no eye movements are made to the auditory targets.

Despite these similarities between the auditory and visual conditions, visual and auditory stimuli revealed different effects in the fixation condition. In the visual, but not the auditory, fixation condition, direction judgments were shifted such that an apparent motion was more often seen as going away from fixation. Because we did not measure absolute localization, we cannot tell whether the first probe was attracted toward fixation or the second probe was repelled from it. Attention is known to affect position judgments of nearby stimuli (Au et al. 2013; Suzuki and Cavanagh 1997), so attention drawn to the first of the two probes may have altered the perceived position of the second probe.

Furthermore, in the visual modality, the size of this fixation-condition shift predicted the strength of the shift in the saccade condition: a large shift in the saccade condition was associated with a small shift in the fixation condition. Potentially, the memory of the initial location is attracted toward fixation, and this effect is diminished when a saccade straddles the pre- and postsaccadic stimulus presentations. No such relationship was observed in the auditory modality.

It is unlikely that the findings for the visual condition reported here are caused by saccadic compression (Honda 1989). Saccadic compression occurs for stimuli presented between 100 ms before the onset of the saccade and 50 ms after its end. In the current experiment, the first stimulus was presented on average ∼280 ms before the eye movement, and the second stimulus ∼40 ms after the end of the saccade. Furthermore, saccadic compression is observed for briefly presented stimuli, typically lasting no longer than 50 ms, whereas in the current experiment the stimuli were presented for 200 ms. Although the effect of stimulus duration on saccadic compression has not been investigated systematically, it is highly unlikely that stimuli of such long duration are compressed (Sogo and Osaka 2001; Watanabe et al. 2005).

Lewald and colleagues have reported a repulsion of auditory locations away from fixation that might contribute to our effects, given that the two sequential auditory stimuli are each heard from a different fixation location (Lewald 1997, 1998; Lewald and Ehrenstein 1996). However, repulsion from eye position would predict that an auditory motion is perceived in the direction opposite to the intervening saccade, whereas we find that the perceived direction is biased in the direction of the saccade. Others have shown attraction (capture) of auditory stimuli toward visual fixation targets (Razavi et al. 2007; Weerts and Thurlow 1971). Capture toward the fixation location is consistent with the direction of our results: if the presaccadic stimulus is captured by the presaccadic fixation and the postsaccadic stimulus by the postsaccadic fixation, the apparent motion will be perceived as biased in the direction of the saccade, as we report. However, our fixation control condition indicates that the saccade increases the mislocalization beyond what is seen during fixation, and Szinte and Cavanagh (2011) likewise observed larger mislocalizations across saccades than during fixation. We therefore do not believe that the kind of capture reported by others (e.g., Weerts and Thurlow 1971) is sufficient to explain these saccade-induced mislocalizations.
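The sign argument above can be made explicit with a deliberately simple linear model. This is a sketch under our own assumptions, not a model fitted to the present data: each stimulus's perceived position is pulled toward (capture, `w` > 0) or pushed away from (repulsion, `w` < 0) whichever fixation point is current when it is presented. The weight `w`, the function names, and the example geometry are all hypothetical; positions are horizontal azimuths in degrees, with positive values in the direction of the saccade.

```python
def perceived(x: float, fixation: float, w: float) -> float:
    """Perceived azimuth (deg) of a stimulus at x while gaze is at `fixation`.

    Hypothetical linear model: w > 0 pulls the percept toward fixation
    (capture), w < 0 pushes it away (repulsion), w = 0 is veridical.
    """
    return x + w * (fixation - x)


def perceived_jump(x1: float, x2: float, fix1: float, fix2: float, w: float) -> float:
    """Perceived displacement from the first to the second stimulus.

    x1 is presented while fixating fix1 (presaccadic) and x2 while
    fixating fix2 (postsaccadic). Algebraically this equals
    (1 - w) * (x2 - x1) + w * (fix2 - fix1).
    """
    return perceived(x2, fix2, w) - perceived(x1, fix1, w)


# Example: a physically stationary pair at 0 deg straddling a
# hypothetical rightward 10-deg saccade from -5 deg to +5 deg.
for w in (0.2, 0.0, -0.2):
    jump = perceived_jump(0.0, 0.0, -5.0, 5.0, w)
    print(f"w={w:+.1f}: perceived jump = {jump:+.1f} deg")
```

With `w = 0.2` the stationary pair is perceived as a +2-deg jump in the saccade direction, and with `w = -0.2` as a -2-deg jump against it. The closed form in the docstring shows why: the fixation-dependent term `w * (fix2 - fix1)` carries the sign of `w` multiplied by the saccade vector, so capture and repulsion predict opposite directional biases.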

Instead, Szinte and Cavanagh (2011) suggested that the mislocalization of visual stimuli across saccades is induced by the location corrections that occur at the time of the saccade. This “remapping” (Duhamel et al. 1992; Rolfs et al. 2011; Wurtz 2008) has been proposed to dynamically update attended target locations across eye movements, compensating for the shift of retinal coordinates caused by the saccade. According to Szinte and Cavanagh (2011), remapping slightly but consistently overcompensates for the saccade. As a consequence, remapped locations are mislocalized across saccades: the first probe location is shifted back in the direction opposite the saccade, which biases the perceived direction of the apparent motion (an illusory tilt in their vertical-motion paradigm). Given this interpretation of the transsaccadic mislocalizations, the current findings point to a modality-independent remapping of location.
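The overcompensation account can be illustrated in the same way. In this hypothetical sketch, the remembered first-probe location is stored in eye-centered coordinates and updated at the saccade by subtracting the saccade vector scaled by a remapping gain `gain`. A gain of exactly 1 is veridical; the value slightly above 1 used in the example is an illustrative assumption, not an estimate from our data or from Szinte and Cavanagh (2011). Horizontal positions are in degrees, positive in the saccade direction, and all names are ours.

```python
def remap(eye_centered_pos: float, saccade: float, gain: float) -> float:
    """Update a remembered eye-centered location across a saccade.

    Veridical updating subtracts the saccade vector (gain = 1);
    gain > 1 models a small, stable overcompensation (hypothetical).
    """
    return eye_centered_pos - gain * saccade


def perceived_direction(x1_world: float, x2_world: float, fix1: float,
                        saccade: float, gain: float) -> float:
    """Perceived jump from probe 1 (presaccadic) to probe 2 (postsaccadic).

    Returned in postsaccadic eye-centered coordinates; positive values
    mean motion perceived in the direction of the saccade.
    """
    fix2 = fix1 + saccade
    # Probe 1 is encoded relative to the presaccadic fixation, then remapped.
    p1 = remap(x1_world - fix1, saccade, gain)
    # Probe 2 is seen after the saccade, relative to the new fixation.
    p2 = x2_world - fix2
    return p2 - p1


for gain in (1.0, 1.05):
    jump = perceived_direction(0.0, 0.0, fix1=-5.0, saccade=10.0, gain=gain)
    print(f"gain={gain:.2f}: perceived jump = {jump:+.2f} deg")
```

With veridical updating a stationary probe pair yields no perceived motion, whereas a 5% overcompensation shifts the remembered first probe 0.5 deg opposite the 10-deg saccade, so the pair appears to jump +0.5 deg in the saccade direction. Nothing in `remap` depends on the sensory modality of the probe, which is the sense in which a single, modality-independent remapping stage would bias visual and auditory probes alike.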

Why would this remapping error affect targets in other modalities, even when they are not saccade targets? Cavanagh et al. (2010) proposed that the remembered location of the presaccadic stimulus is maintained as an attentional placeholder. Because this placeholder marks a location of interest, it is independent of the effector that drew attention to the location. It follows that the locations of attended stimuli in any sensory modality should be updated across saccades and show the same stable localization errors as the remapped visual locations studied by Szinte and Cavanagh (2011). This is in line with the current findings.

Coordinate transformations between different sensory modalities are necessary when sensory input is used for orienting, such as when bringing a stimulus into the center of vision with a saccadic eye movement. Whereas auditory stimuli are neurally represented in head-based coordinates at stimulus onset (e.g., Jay and Sparks 1987b), the motor code for a saccade to an auditory target is partially transformed into eye-centered coordinates (Jay and Sparks 1984, 1987a; Lee and Groh 2012). In the present study we show that auditory and visual stimuli are subject to similar biases across eye movements, suggesting a common coding of location for these modalities even when no eye movement to the visual or auditory probes is planned or executed. One potential explanation is that the probes reflexively evoked a motor plan, thereby engaging the transformation otherwise observed only for saccade targets (Lee and Groh 2012). If this were the case, one could argue that all behaviorally relevant stimuli evoke a reflexive motor plan in the form of an attentional pointer (e.g., Rizzolatti et al. 1987) and that coding on the common saccade map is reserved for these attentional targets, as suggested by the idea that perceptual stability is achieved through the updating of attentional placeholders (Cavanagh et al. 2010).
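The head-to-eye conversion discussed above can be sketched for the horizontal dimension. This assumes a stationary head and additive horizontal angles, which is a simplification. The `weight` parameter is a hypothetical knob for illustrating a partial ("hybrid") transformation; it is not a quantity estimated by Lee and Groh (2012) or in this study.

```python
def head_to_eye(azimuth_head: float, eye_in_head: float, weight: float = 1.0) -> float:
    """Convert a horizontal sound azimuth from head- to eye-centered degrees.

    Assumes a stationary head and additive horizontal angles.
    `weight` is hypothetical: 1.0 gives a full eye-centered code,
    0.0 leaves the head-centered code untouched, and intermediate
    values mimic a partial (hybrid) transformation.
    """
    return azimuth_head - weight * eye_in_head


# A sound fixed at +10 deg relative to the head, before and after
# a hypothetical 15-deg rightward saccade.
before = head_to_eye(10.0, eye_in_head=0.0)
after = head_to_eye(10.0, eye_in_head=15.0)
print(f"eye-centered azimuth: {before:+.1f} deg -> {after:+.1f} deg")
```

The head-centered azimuth of the sound never changes, but its eye-centered azimuth jumps by the saccade amplitude (from +10 to -5 deg here). Any location code held on an eye-centered map must therefore be updated at each saccade, which is where a remapping error of the kind sketched for visual probes could enter for sounds as well.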

GRANTS

The research leading to these results has received funding from Open Research Area Project ANR-12-ORAR-0001 (to P. Cavanagh and T. Collins) and from the European Research Council (ERC) under the European Union's Seventh Framework Programme FP7/2007-2013/ERC grant agreement no. AG324070 (to P. Cavanagh).

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

H.M.K., T.C., and P.C. conception and design of research; H.M.K. performed experiments; H.M.K. and B.E. analyzed data; H.M.K., T.C., and P.C. interpreted results of experiments; H.M.K. and B.E. prepared figures; H.M.K. drafted manuscript; H.M.K., T.C., and P.C. edited and revised manuscript; H.M.K., T.C., and P.C. approved final version of manuscript.

REFERENCES

- Au RK, Ono F, Watanabe K. Spatial distortion induced by imperceptible visual stimuli. Conscious Cogn 22: 99–110, 2013.

- Björnsdotter M, Löken L, Olausson H, Vallbo Å, Wessberg J. Somatotopic organization of gentle touch processing in the posterior insular cortex. J Neurosci 29: 9314–9320, 2009.

- Brainard DH. The Psychophysics Toolbox. Spat Vis 10: 433–436, 1997.

- Cavanagh P, Hunt AR, Afraz A, Rolfs M. Visual stability based on remapping of attention pointers. Trends Cogn Sci 14: 147–153, 2010.

- Collins T, Heed T, Röder B. Eye-movement-driven changes in the perception of auditory space. Atten Percept Psychophys 72: 736–746, 2010.

- Cornelissen FW, Peters EM, Palmer J. The Eyelink Toolbox: eye tracking with MATLAB and the Psychophysics Toolbox. Behav Res Methods Instrum Comput 34: 613–617, 2002.

- Diederich A, Colonius H, Bockhorst D, Tabeling S. Visual-tactile spatial interaction in saccade generation. Exp Brain Res 148: 328–337, 2003.

- Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movements. Science 255: 90–92, 1992.

- Harrar V, Harris LR. Eye position affects the perceived location of touch. Exp Brain Res 198: 403–410, 2009.

- Harrar V, Harris LR. Touch used to guide action is partially coded in a visual reference frame. Exp Brain Res 203: 615–620, 2010.

- Head H, Holmes G. Sensory disturbances from cerebral lesions. Brain 34: 102–254, 1911.

- Hofman PM, Van Opstal AJ. Spectro-temporal factors in two-dimensional human sound localization. J Acoust Soc Am 103: 2634–2648, 1998.

- Honda H. Perceptual localization of visual stimuli flashed during saccades. Percept Psychophys 45: 162–174, 1989.

- Jay MF, Sparks DL. Auditory receptive fields in primate superior colliculus shift with changes in eye position, 1984.

- Jay MF, Sparks DL. Sensorimotor integration in the primate superior colliculus. I. Motor convergence. J Neurophysiol 57: 22–34, 1987a.

- Jay MF, Sparks DL. Sensorimotor integration in the primate superior colliculus. II. Coordinates of auditory signals. J Neurophysiol 57: 35–55, 1987b.

- Klingenhöfer S, Bremmer F. Perisaccadic localization of auditory stimuli. Exp Brain Res 198: 411–423, 2009.

- Lee J, Groh JM. Auditory signals evolve from hybrid- to eye-centered coordinates in the primate superior colliculus. J Neurophysiol 108: 227–242, 2012.

- Lewald J. Eye-position effects in directional hearing. Behav Brain Res 87: 35–48, 1997.

- Lewald J. The effect of gaze eccentricity on perceived sound direction and its relation to visual localization. Hear Res 115: 206–216, 1998.

- Lewald J, Ehrenstein WH. The effect of eye position on auditory lateralization. Exp Brain Res 108: 473–485, 1996.

- Lewald J, Ehrenstein WH. Auditory-visual spatial integration: a new psychophysical approach using laser pointing to acoustic targets. J Acoust Soc Am 104: 1586–1597, 1998.

- Meredith MA, Stein BE. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J Neurophysiol 56: 640–662, 1986.

- Middlebrooks JC. Narrow-band sound localization related to external ear acoustics. J Acoust Soc Am 92: 2607–2624, 1992.

- Middlebrooks JC, Green DM. Sound localization by human listeners. Annu Rev Psychol 42: 135–159, 1991.

- Munoz DP, Wurtz RH. Saccade-related activity in monkey superior colliculus. I. Characteristics of burst and buildup cells. J Neurophysiol 73: 2313–2333, 1995a.

- Munoz DP, Wurtz RH. Saccade-related activity in monkey superior colliculus. II. Spread of activity during saccades. J Neurophysiol 73: 2334–2348, 1995b.

- Pavani F, Husain M, Driver J. Eye-movements intervening between two successive sounds. Exp Brain Res 189: 435–449, 2008.

- Pelli DG. The Video Toolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10: 437–442, 1997.

- Pritchett LM, Harris LR. Perceived touch location is coded using a gaze signal. Exp Brain Res 213: 229–234, 2011.

- Razavi B, O'Neill WE, Paige GD. Auditory spatial perception dynamically realigns with changing eye position. J Neurosci 27: 10249–10258, 2007.

- Rizzolatti G, Riggio L, Dascola I, Umiltà C. Reorienting attention across the horizontal and vertical meridians: evidence in favor of a premotor theory of attention. Neuropsychologia 25: 31–40, 1987.

- Rolfs M, Jonikaitis D, Deubel H, Cavanagh P. Predictive remapping of attention across eye movements. Nat Neurosci 14: 252–256, 2011.

- Ross J, Morrone MC, Burr DC. Compression of visual space before saccades. Nature 386: 598–601, 1997.

- Ross J, Morrone MC, Goldberg ME, Burr DC. Changes in visual perception at the time of saccades. Trends Neurosci 24: 113–121, 2001.

- Russo GS, Bruce CJ. Frontal eye field activity preceding aurally guided saccades. J Neurophysiol 71: 1250–1253, 1994.

- Sogo H, Osaka N. Perception of relation of stimuli locations successively flashed before saccade. Vision Res 41: 935–942, 2001.

- SR Research. EyeLink User Manual. Mississauga, ON, Canada: SR Research, 2005.

- Suzuki S, Cavanagh P. Focused attention distorts visual space: an attentional repulsion effect. J Exp Psychol Hum Percept Perform 23: 443–463, 1997.

- Szinte M, Cavanagh P. Spatiotopic apparent motion reveals local variations in space constancy. J Vis 11: 4–10, 2011.

- Walshe FM. Critical Studies in Neurology. London: Livingstone, 1948.

- Watanabe J, Noritake A, Maeda T, Tachi S, Nishida SY. Perisaccadic perception of continuous flickers. Vision Res 45: 413–430, 2005.

- Weerts TC, Thurlow WR. The effects of eye position and expectation on sound localization. Percept Psychophys 9: 35–39, 1971.

- Wightman FL, Kistler DJ. Headphone simulation of free-field listening. II: Psychophysical validation. J Acoust Soc Am 85: 868–878, 1989.

- Wurtz RH. Neuronal mechanisms of visual stability. Vision Res 48: 2070–2089, 2008.

- York D, Evensen N, Martinez M, Delgado J. Unified equations for the slope, intercept, and standard errors of the best straight line. Am J Phys 72: 367–375, 2004.