Abstract

Neuroimaging research has identified category-specific neural response patterns to a limited set of object categories. For example, faces, bodies, and scenes evoke activity patterns in visual cortex that are uniquely traceable in space and time. It is currently debated whether these apparently categorical responses truly reflect selectivity for categories or instead reflect selectivity for category-associated shape properties. In the present study, we used a cross-classification approach on functional MRI (fMRI) and magnetoencephalographic (MEG) data to reveal both category-independent shape responses and shape-independent category responses. Participants viewed human body parts (hands and torsos) and pieces of clothing that were closely shape-matched to the body parts (gloves and shirts). Category-independent shape responses were revealed by training multivariate classifiers on discriminating shape within one category (e.g., hands versus torsos) and testing these classifiers on discriminating shape within the other category (e.g., gloves versus shirts). This analysis revealed significant decoding in large clusters in visual cortex (fMRI) starting from 90 ms after stimulus onset (MEG). Shape-independent category responses were revealed by training classifiers on discriminating object category (bodies and clothes) within one shape (e.g., hands versus gloves) and testing these classifiers on discriminating category within the other shape (e.g., torsos versus shirts). This analysis revealed significant decoding in bilateral occipitotemporal cortex (fMRI) and from 130 to 200 ms after stimulus onset (MEG). Together, these findings provide evidence for concurrent shape and category selectivity in high-level visual cortex, including category-level responses that are not fully explicable by two-dimensional shape properties.

Keywords: category selectivity, visual cortex organization, body representations

Functional magnetic resonance imaging (fMRI) studies have shown that multivoxel response patterns in high-level visual cortex reliably discriminate between object categories (Haxby et al. 2001) and that these patterns show a meaningful categorical organization (e.g., an animate-inanimate distinction; Kriegeskorte et al. 2008). Similarly, signatures of category-specific processing in the time domain have been identified using magneto- and electroencephalography (MEG/EEG), with MEG sensor patterns across the scalp allowing for reliable classification of object categories (Carlson et al. 2013; Cichy et al. 2014).

However, it is unclear whether such categorical responses truly reflect category membership, independent of specific visual features, or whether they are instead driven by visual properties of objects that systematically covary with category membership. For example, the face-selective fusiform face area (Kanwisher et al. 1997) responds preferentially to round, nonface stimuli with a higher spatial concentration of elements in the upper half, even when these stimuli are not recognized as faces (Caldara et al. 2006), and the occipital face area (Gauthier et al. 2000) has been shown to be causally involved in the perception of stimulus symmetry (Bona et al. 2015). Furthermore, large-scale response patterns in monkey inferior temporal cortex can be well explained by the shape similarity of the objects without reference to category membership (Baldassi et al. 2013). Such findings prompt the hypothesis that closely matching the shape properties of objects from different categories would largely abolish category-specific response patterns.

We tested this prediction by investigating how matching for two-dimensional (2D) shape properties affects neural responses to a specific category, the human body. Previous studies have characterized distinct spatiotemporal signatures of body perception: bodies recruit specific regions in occipitotemporal and fusiform cortices and evoke specific electrophysiological waveform components (for review, see Peelen and Downing 2007). Furthermore, bodies can be reliably distinguished from other categories based on MEG and fMRI response patterns (Cichy et al. 2014; Kriegeskorte et al. 2008). It is unknown whether these body-specific fMRI and MEG responses reflect selectivity for particular shape properties of bodies (e.g., symmetry) or whether they reflect, at least partly, a truly categorical response.

Participants were tested in separate fMRI and MEG experiments with largely identical experimental procedures. Multivariate classification techniques were used to characterize category representations in space (fMRI) and time (MEG). The stimulus set consisted of human body parts (hands and torsos) and pieces of clothing (gloves and shirts) that were closely shape-matched to the body part stimuli. To reveal category-independent shape responses, classifiers were trained to discriminate between different shapes within one category (e.g., hands versus torsos) and tested to discriminate these shapes within the other category (e.g., gloves versus shirts). To reveal shape-independent category responses, classifiers were trained to discriminate between the categories (bodies and clothes) within one shape (e.g., hands versus gloves) and tested to discriminate these categories within the other shape (e.g., torsos versus shirts).
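The 2 × 2 structure of the design (category × shape) can be made explicit in a few lines. The Python sketch below is illustrative only: the condition encoding and the `cross_decoding_splits` helper are hypothetical and not part of the authors' analysis pipeline. For either factor, it derives the two train/test directions in which the other factor is held constant within each pair.

```python
from itertools import permutations

# Hypothetical encoding of the 2 x 2 design: condition -> (category, shape)
DESIGN = {
    "hand": ("body", "hand-shape"),
    "glove": ("clothes", "hand-shape"),
    "torso": ("body", "torso-shape"),
    "shirt": ("clothes", "torso-shape"),
}


def cross_decoding_splits(target):
    """Return (train_pair, test_pair) tuples for decoding 'category' or 'shape'."""
    if target not in ("category", "shape"):
        raise ValueError("target must be 'category' or 'shape'")
    decoded = 0 if target == "category" else 1  # factor the classifier must discriminate
    held = 1 - decoded                           # factor held constant within each pair
    pairs = []
    for level in sorted({cell[held] for cell in DESIGN.values()}):
        members = [name for name, cell in DESIGN.items() if cell[held] == level]
        # Order by the decoded factor so that label 0/1 means the same thing in every pair
        members.sort(key=lambda name: DESIGN[name][decoded])
        pairs.append(tuple(members))
    # Both train/test directions (the analyses average over them)
    return list(permutations(pairs, 2))


print(cross_decoding_splits("category"))
print(cross_decoding_splits("shape"))
```

Ordering each pair by the level of the decoded factor keeps the labels aligned across pairs (e.g., body parts always map to the first label), which is what allows a classifier trained on one pair to be scored on the other.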

MATERIALS AND METHODS

Participants.

Twenty-four healthy adults (eleven men; mean age 24.2 yr, SD = 3.4) took part in the fMRI experiment, and twenty-one healthy adults (fourteen men; mean age 25.0 yr, SD = 3.2) took part in the MEG experiment. One participant completed both experiments. All participants had normal or corrected-to-normal visual acuity. All procedures were carried out in accordance with the Declaration of Helsinki and were approved by the ethical committee of the University of Trento.

Stimuli and procedure.

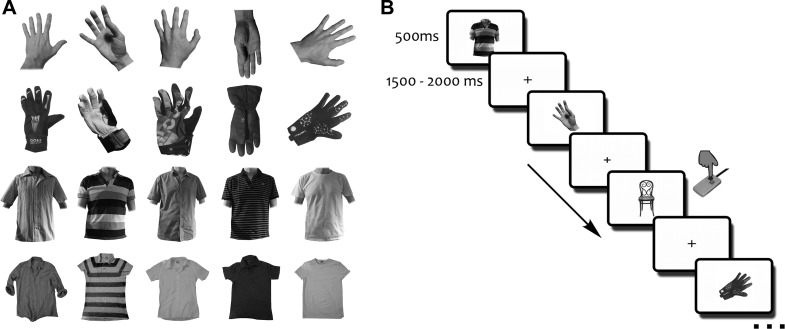

Unless otherwise noted, all aspects of the design were identical between the fMRI and MEG experiments. The full stimulus set consisted of nine categories (hands, gloves, torsos, shirts, brushes, pens, trees, vegetables, and chairs) with twenty-one exemplars per category. Four of these categories (brushes, pens, trees, and vegetables) were related to a different research question and are not analyzed here. Chairs served as target stimuli (Fig. 1B) and were also excluded from all analyses. Our analyses focused on the comparison between stimuli depicting human body parts (hands and torsos, i.e., shirts with a human upper body inside) and stimuli depicting only pieces of clothing that were closely matched in shape to the body parts (gloves and shirts; Fig. 1A).

Fig. 1.

Stimuli and paradigm. A: the stimulus set contained 2 human body parts (hands and torsos; see 1st and 3rd rows for examples) and 2 pieces of clothing that are highly similar in their shape (gloves and shirts; 2nd and 4th rows). B: stimuli were presented for 500 ms separated by a variable 1,500- to 2,000-ms fixation interval. Participants were instructed to maintain central fixation and to respond manually to chairs.

Both experiments consisted of multiple runs in which participants viewed grayscale images of the different categories for 500 ms each in randomized order (Fig. 1B), with stimuli separated by a fixation interval varying randomly between 1,500 and 2,000 ms (in discrete steps of 50 ms). Participants were instructed to maintain central fixation and to press the response button whenever they saw a chair (chair trials appeared as often as trials of every other category, i.e., 21 times per run). In the MEG experiment, participants were additionally instructed to restrict eye blinks to the chair trials. Each run contained every exemplar of every category exactly once, for a total of 189 trials per run and an average run duration of 7.1 min. In the fMRI experiment, every run additionally contained a 10-s fixation period at the beginning and end. Participants completed 6 runs in the fMRI experiment (for 1 participant, only 5 runs were collected due to a technical problem) and 10 runs in the MEG experiment (1 participant performed 11 runs). Stimulus presentation was controlled using the Psychtoolbox (Brainard 1997). In the MRI scanner, stimuli were back-projected onto a screen at the end of the scanner bore and viewed via a tilted mirror mounted on the head coil; in the MEG, stimuli were back-projected onto a translucent screen located in front of the participant.
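As a quick consistency check on the reported timing, the Python sketch below recomputes the average run duration. It assumes (this is not stated above) that the 11 possible fixation intervals between 1,500 and 2,000 ms were equally likely.

```python
# Fixation interval: 1,500-2,000 ms in 50-ms steps (assumed uniformly sampled)
iti_ms = list(range(1500, 2001, 50))
mean_iti_s = sum(iti_ms) / len(iti_ms) / 1000      # 1.75 s

n_categories, n_exemplars = 9, 21
trials_per_run = n_categories * n_exemplars        # 189 (each exemplar once per run)
mean_trial_s = 0.5 + mean_iti_s                    # 500-ms stimulus + fixation = 2.25 s

run_min = trials_per_run * mean_trial_s / 60                  # without fixation periods
fmri_run_min = (trials_per_run * mean_trial_s + 2 * 10) / 60  # plus 10 s at start and end

# Trials per analyzed condition before artifact rejection (6 fMRI runs, 10 MEG runs)
fmri_trials_per_condition = 6 * n_exemplars        # 126
meg_trials_per_condition = 10 * n_exemplars        # 210

print(f"{run_min:.2f} min (MEG), {fmri_run_min:.2f} min (fMRI)")
```

The 189 × 2.25 s = 425.25 s per run rounds to the reported 7.1 min; fMRI runs are about 20 s longer because of the added fixation periods.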

fMRI data acquisition and preprocessing.

MR imaging was conducted using a Bruker BioSpin MedSpec 4T head scanner (Bruker BioSpin, Rheinstetten, Germany) equipped with an eight-channel head coil. During the experimental runs, T2*-weighted gradient-echo echo-planar images were collected [repetition time (TR) = 2.0 s, echo time (TE) = 33 ms, 73° flip angle, 3 × 3 × 3-mm voxel size, 1-mm gap, 34 slices, 192-mm field of view, 64 × 64 matrix size]. Additionally, a T1-weighted image (MP-RAGE; 1 × 1 × 1-mm voxel size) was obtained as a high-resolution anatomic reference. All resulting data were preprocessed using MATLAB and SPM8. The functional volumes were realigned and coregistered to the structural image. Additionally, structural images were spatially normalized to the Montreal Neurological Institute MNI305 template (as included in SPM8) to obtain normalizing parameters for each participant. These parameters were later used to normalize individual participants' searchlight result maps before entering them into statistical analysis.
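The functional acquisition parameters above are internally consistent. The short Python check below recovers the in-plane resolution from the field of view and matrix size and estimates the slab coverage; it assumes that the 1-mm gap separates adjacent slices only.

```python
# Functional (EPI) geometry from the stated parameters
fov_mm, matrix = 192, 64
inplane_mm = fov_mm / matrix                  # 3.0 mm, matching the 3 x 3-mm in-plane voxels

n_slices, thickness_mm, gap_mm = 34, 3, 1
slice_spacing_mm = thickness_mm + gap_mm      # 4 mm between slice centres
# Assumes gaps lie only between adjacent slices
coverage_mm = n_slices * thickness_mm + (n_slices - 1) * gap_mm   # 135 mm

print(inplane_mm, slice_spacing_mm, coverage_mm)
```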

fMRI decoding analysis.

Multivariate pattern analysis (MVPA) was carried out at the level of individual TRs using the CoSMoMVPA toolbox (http://www.cosmomvpa.org/). To reveal areas yielding above-chance decoding throughout the brain, a searchlight analysis was conducted in which a spherical neighborhood of 40 voxels (6.4-mm average radius) was moved across the whole brain. For each voxel belonging to a given neighborhood, TRs corresponding to the conditions of interest were selected by shifting the voxelwise time course of activation by 3 TRs (to account for the hemodynamic delay). Subsequently, for each run separately, activation values were extracted from the unsmoothed echo-planar image volumes for each TR coinciding with the onset of a specific condition. For the cross-decoding analysis, linear discriminant analysis (LDA) classifiers were trained on discriminating two conditions (e.g., hands versus gloves) and tested on two different conditions (e.g., torsos versus shirts); all available trials were used in the training and test sets. Classification accuracy for every searchlight sphere was assessed by comparing the labels predicted by the classifier with the true labels; chance performance was always 50%. Individual-subject searchlight maps were normalized to MNI space before they were entered into statistical analyses. Above-chance classification was identified using a threshold-free cluster enhancement (TFCE) procedure (Smith and Nichols 2009), in which the observed decoding accuracy was tested against a simulated null distribution (generated from 10,000 bootstrapping iterations). The resulting statistical maps were thresholded at P < 0.05 (1-tailed).
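A minimal NumPy sketch of the train-on-one-pair, test-on-the-other logic is shown below. It is not CoSMoMVPA's implementation: the ridge regularization (`reg`), the hypothetical `toy_pair` data generator, and all sizes are assumptions chosen for illustration (126 samples per condition mirrors 6 runs × 21 exemplars; 40 columns mirror one searchlight sphere). The essential points are that labels are coded in a shared space (here 0 = body part, 1 = clothing) so that predictions on the held-out pair can be scored, and that the two train/test directions are averaged.

```python
import numpy as np


def train_lda(X, y, reg=0.01):
    """Two-class LDA with a ridge-regularized pooled within-class covariance."""
    classes = np.unique(y)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    resid = np.concatenate([X[y == c] - means[i] for i, c in enumerate(classes)])
    cov = resid.T @ resid / (len(X) - len(classes))
    # Shrink toward a scaled identity so the matrix stays invertible
    cov += reg * np.trace(cov) / cov.shape[0] * np.eye(cov.shape[0])
    w = np.linalg.solve(cov, means[1] - means[0])
    b = -w @ (means[0] + means[1]) / 2
    return classes, w, b


def predict_lda(model, X):
    classes, w, b = model
    return np.where(X @ w + b > 0, classes[1], classes[0])


def cross_decode(X_a, y_a, X_b, y_b):
    """Train on pair A, test on pair B, then the reverse; return the mean accuracy."""
    acc_ab = np.mean(predict_lda(train_lda(X_a, y_a), X_b) == y_b)
    acc_ba = np.mean(predict_lda(train_lda(X_b, y_b), X_a) == y_a)
    return float((acc_ab + acc_ba) / 2)


def toy_pair(rng, pattern, n_per_class=126):
    """Hypothetical searchlight data: rows = TRs, columns = voxels.
    Label 0 = body part, 1 = clothing; `pattern` is the shared category signal."""
    y = np.repeat([0, 1], n_per_class)
    X = rng.normal(size=(2 * n_per_class, pattern.size))
    X += np.where(y == 0, 0.5, -0.5)[:, None] * pattern
    return X, y


rng = np.random.default_rng(1)
pattern = rng.normal(size=40)  # 40 "voxels", as in one searchlight sphere
print(cross_decode(*toy_pair(rng, pattern), *toy_pair(rng, pattern)))
```

With a shared category pattern the cross-decoding accuracy should lie far above 0.5, whereas with `pattern = np.zeros(40)` it should hover around chance.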

MEG acquisition and preprocessing.

Electromagnetic brain activity was recorded using an Elekta Neuromag 306 MEG system (Elekta, Helsinki, Finland), composed of 204 planar gradiometers and 102 magnetometers. Signals were sampled continuously at 1,000 Hz and band-pass filtered online between 0.1 and 330 Hz. Offline preprocessing was done using MATLAB and the FieldTrip analysis package (Oostenveld et al. 2011). Data were concatenated for all runs, high-pass filtered at 1 Hz, and epoched into trials ranging from −100 to 500 ms with respect to stimulus onset. Based on visual inspection, trials containing eye blinks and other movement-related artifacts were completely discarded from all analyses. Data were then baseline-corrected with respect to the prestimulus window and downsampled to 100 Hz to increase the signal-to-noise ratio of the multivariate classification analysis (see Carlson et al. 2013).
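The final two preprocessing steps can be sketched as follows. This is a simplified stand-in rather than the FieldTrip code: it subtracts each channel's mean over the prestimulus samples and then downsamples from 1,000 to 100 Hz by averaging non-overlapping 10-sample blocks, whereas dedicated resampling routines (e.g., in FieldTrip) typically apply an explicit anti-aliasing filter. It shows why a −100 to 500 ms epoch yields the 60 time points used in the MEG decoding analysis.

```python
import numpy as np


def baseline_and_downsample(epoch, times_ms, factor=10):
    """epoch: (n_channels, n_samples) sampled at 1,000 Hz; times_ms: sample times re onset."""
    epoch = np.asarray(epoch, dtype=float)
    times_ms = np.asarray(times_ms)
    # Subtract each channel's mean over the prestimulus samples
    baseline = epoch[:, times_ms < 0].mean(axis=1, keepdims=True)
    corrected = epoch - baseline
    # Average non-overlapping blocks of `factor` samples (1,000 Hz -> 100 Hz for factor=10)
    n_keep = (corrected.shape[1] // factor) * factor
    blocks = corrected[:, :n_keep].reshape(corrected.shape[0], -1, factor)
    # Each output sample is labeled with the time of the first sample in its block
    return blocks.mean(axis=2), times_ms[:n_keep:factor]


times = np.arange(-100, 500)                                      # 600 samples at 1 ms
epoch = np.random.default_rng(0).normal(size=(102, times.size))   # e.g., 102 magnetometers
down, t_down = baseline_and_downsample(epoch, times)
print(down.shape, t_down[0], t_down[-1])                          # 60 time points
```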

MEG decoding analysis.

MVPA was carried out on single-trial data using the CoSMoMVPA toolbox. Only magnetometers were used, as these sensors allowed for the most reliable classification in previous work in our laboratory (Kaiser et al. 2015). Classification was performed using LDA classifiers. For the shape cross-decoding analysis, classifiers were trained on one category-matched shape comparison (i.e., hands versus torsos or gloves versus shirts) and tested on the other comparison (i.e., gloves versus shirts or hands versus torsos). For the category cross-decoding analysis, classifiers were trained on one shape-matched category comparison (i.e., hands versus gloves or torsos versus shirts) and tested on the other comparison (i.e., torsos versus shirts or hands versus gloves). To increase the reliability of the data supplied to the classifiers, new, “synthetic” trials were created separately for every condition and chunk by randomly picking 25% of the trials and averaging them. This procedure was repeated 100 times (with the constraint that no trial was used more than once more often than any other trial), so that 100 synthetic trials were available for classification for every condition and chunk. Classification accuracy was then assessed as the percentage of correctly classified trials in the test chunk, with chance performance being 50%. Classification was repeated for every possible combination of training and testing time points, yielding a 60 × 60 matrix of classification accuracies (600 × 600 ms at 100-Hz temporal resolution). Individual-subject accuracy maps were smoothed using a 3 × 3 time point averaging filter (i.e., 30 ms in training and testing time).
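The synthetic-trial procedure can be sketched as below. This is a hypothetical reimplementation, not the authors' code. One way to honor the constraint that no trial is used more than once more often than any other is to always draw the required 25% from the least-used trials, breaking ties at random. The function would be applied separately to each condition and chunk.

```python
import numpy as np


def make_pseudo_trials(trials, n_pseudo=100, fraction=0.25, rng=None):
    """Average random 25% subsets of one condition/chunk's trials into pseudo-trials.

    trials: array (n_trials, ...), e.g., (n_trials, n_sensors, n_times).
    Returns the pseudo-trials and how often each original trial was used.
    """
    rng = np.random.default_rng(rng)
    n = trials.shape[0]
    k = max(1, int(round(fraction * n)))
    counts = np.zeros(n, dtype=int)
    pseudo = np.empty((n_pseudo,) + trials.shape[1:])
    for i in range(n_pseudo):
        # Sort by usage count, breaking ties randomly, and take the k least-used trials
        order = np.lexsort((rng.random(n), counts))
        pick = order[:k]
        counts[pick] += 1
        pseudo[i] = trials[pick].mean(axis=0)
    return pseudo, counts


trials = np.random.default_rng(0).normal(size=(40, 102, 60))  # 40 trials, 102 sensors, 60 times
pseudo, counts = make_pseudo_trials(trials, rng=1)
print(pseudo.shape, counts.min(), counts.max())
```

Because each draw takes the k least-used trials, usage counts can never differ by more than one, which is the balance constraint described above; the returned `counts` make this easy to verify.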
To identify time periods of significant above-chance classification, similar to the fMRI analysis, a TFCE procedure was used, where the observed decoding accuracy was tested against a simulated null distribution (generated from 10,000 bootstrapping iterations). The baseline (prestimulus) interval was not taken into account for statistical testing. The resulting statistical maps were thresholded at P < 0.05 (1-tailed).
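For readers unfamiliar with TFCE, the score at each point p integrates cluster extent over all thresholds up to that point's height, TFCE(p) = ∫ e(h)^E h^H dh, where e(h) is the size of the cluster containing p at threshold h; Smith and Nichols (2009) recommend E = 0.5 and H = 2. The NumPy sketch below approximates this integral in steps of `dh` on a 2D map such as the MEG time-time matrix, using 4-connectivity. The step size, the connectivity, and the input statistic (e.g., accuracy minus chance, or a t map) are assumptions; the construction of the null distribution, against which observed TFCE values are compared, is not shown.

```python
import numpy as np


def label_clusters(mask):
    """Label 4-connected clusters in a 2D boolean mask (simple flood fill)."""
    labels = np.zeros(mask.shape, dtype=int)
    n_clusters = 0
    rows, cols = mask.shape
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or labels[r, c]:
                continue
            n_clusters += 1
            labels[r, c] = n_clusters
            stack = [(r, c)]
            while stack:
                i, j = stack.pop()
                for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
                    if 0 <= ni < rows and 0 <= nj < cols and mask[ni, nj] and not labels[ni, nj]:
                        labels[ni, nj] = n_clusters
                        stack.append((ni, nj))
    return labels, n_clusters


def tfce(stat, dh=0.1, E=0.5, H=2.0):
    """Approximate TFCE(p) as the sum over thresholds h <= stat[p] of e(h)**E * h**H * dh."""
    stat = np.asarray(stat, dtype=float)
    out = np.zeros_like(stat)
    if stat.max() <= 0:
        return out  # only positive (above-chance) effects are enhanced here
    for h in np.arange(dh, stat.max() + dh / 2, dh):
        labels, n_clusters = label_clusters(stat >= h)
        for k in range(1, n_clusters + 1):
            cluster = labels == k
            out[cluster] += cluster.sum() ** E * h ** H * dh
    return out


stat = np.zeros((6, 6))
stat[1, 1] = 1.0        # isolated point
stat[3:5, 3:5] = 1.0    # 2 x 2 cluster of the same height
scores = tfce(stat)
print(scores[1, 1], scores[3, 3])
```

A 2 × 2 cluster at the same height as an isolated point receives twice the score (4^0.5 = 2), which is how TFCE rewards extended effects without a fixed cluster-forming threshold.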

RESULTS

Shape cross-decoding.

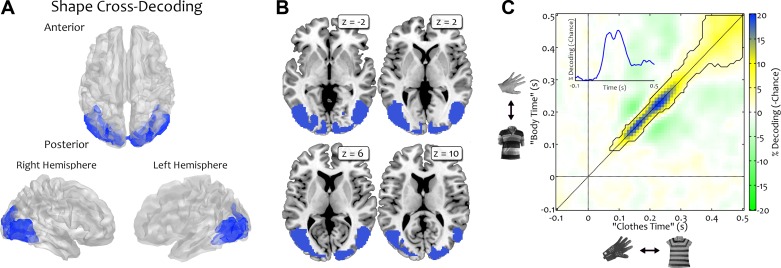

Brain regions representing object shape across categories were identified by training classifiers on discriminating shape within one category (e.g., hands versus torsos) and testing these classifiers on discriminating shape within the other category (e.g., gloves versus shirts). Results from both possible train/test directions were averaged. An fMRI searchlight using this approach revealed regions in right (33,128 mm3; peak MNI coordinate: x = 48, y = −68, z = −4; t23 = 8.5) and left (30,368 mm3; peak MNI coordinate: x = −6, y = −94, z = −12; t23 = 9.6) visual cortex, spanning early visual areas and regions of lateral occipitotemporal cortex (Fig. 2, A and B). The MEG data showed above-chance decoding of shape, starting at 90 ms after stimulus onset and peaking along the diagonal at 170 and 240 ms (467 time points in total, maximum decoding accuracy: 70.2%; t20 = 11.4; Fig. 2C).

Fig. 2.

Shape cross-decoding analysis. To reveal shape-selective mechanisms, classifiers were trained to discriminate shape within 1 category (e.g., hands versus torsos) and tested on the other category (e.g., gloves versus shirts). Results from both train/test directions were averaged. A and B: fMRI decoding was significantly above chance in large areas of visual cortex, spanning primary visual areas and regions of occipitotemporal cortex. C: MEG decoding was significantly above chance along the diagonal, starting from 90 ms after stimulus onset and peaking at 170 and 240 ms. Note that the axes here reflect time with respect to the 2 possible train and test comparisons, independently of the actual train/test direction. The connected area indicates above-chance decoding.

Category cross-decoding.

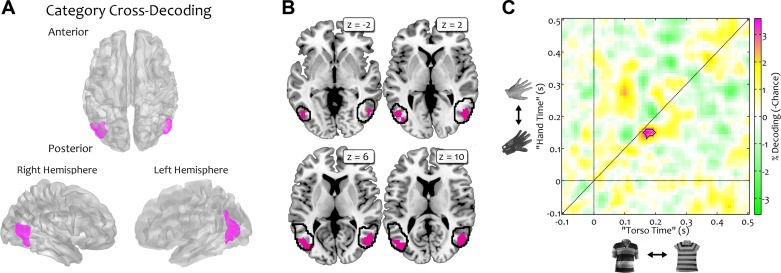

A second cross-decoding analysis was conducted to test for responses that reflect object category (body parts versus clothes) independently of shape properties. To detect such shape-independent responses, classifiers were trained to discriminate bodies and clothes for one shape-matched comparison (e.g., hand versus glove) and subsequently tested on the other comparison (e.g., torso versus shirt). Results from both possible train/test directions were averaged.

In the fMRI searchlight analysis, clusters in right (3,664 mm3; peak MNI coordinate: x = 52, y = −70, z = 6; t23 = 6.5) and left (5,752 mm3; peak MNI coordinate: x = −44, y = −78, z = 10; t23 = 5.8) lateral occipitotemporal cortex were identified (Fig. 3A). These clusters overlapped with the extrastriate body area (EBA; Fig. 3B; coordinates of Downing et al. 2001: x = ±51, y = −72, z = 5). Performing the same cross-classification analysis on the MEG data revealed a specific temporal signature associated with shape-independent category responses: classifiers could reliably discriminate between bodies and clothes between 130 and 160 ms with respect to the hand-glove comparison and 160 and 200 ms with respect to the torso-shirt comparison (12 time points in total, maximum decoding accuracy: 53.6%; t20 = 6.9; Fig. 3C).

Fig. 3.

Category cross-decoding analysis. To reveal generalization across the 2 body-clothes pairs, classifiers were trained on 1 comparison (e.g., hands versus gloves) and tested on the other (e.g., torsos versus shirts). Results from both train/test directions were averaged. A: fMRI decoding was significantly above chance in bilateral regions of lateral occipitotemporal cortex. B: the clusters obtained in this searchlight analysis fell within regions previously reported as body-selective: the black outline represents the boundaries of a group map of body selectivity in occipitotemporal cortex (taken from http://web.mit.edu/bcs/nklab/GSS.shtml; Julian et al. 2012). C: MEG decoding revealed a temporally specific window of successful cross-classification ranging from 130 to 160 ms with respect to the hand-glove comparison (“Hand Time”) and from 160 to 200 ms with respect to the torso-shirt comparison (“Torso Time”). Note that the axes here reflect time with respect to the 2 possible train and test comparisons, independently of the actual train/test direction. The connected area indicates above-chance decoding.
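The note on the axes implies that the two train/test directions must be re-indexed before they are averaged: an accuracy obtained by training on hand-glove data at time t1 and testing on torso-shirt data at time t2 belongs at the same matrix position as one obtained by training on torso-shirt data at t2 and testing on hand-glove data at t1. The Python sketch below (hypothetical function names; a −100 to 500 ms epoch at 100 Hz is assumed, so index i corresponds to −100 + 10·i ms) transposes one direction before averaging and then applies the 3 × 3 averaging filter described in Materials and Methods, here with a window that shrinks at the matrix edges, which is an assumption.

```python
import numpy as np


def combine_directions(acc_hand_to_torso, acc_torso_to_hand):
    """Average the two train/test directions on common (hand time, torso time) axes.

    acc_hand_to_torso[i, j]: trained on hand-glove at time i, tested on torso-shirt at time j.
    acc_torso_to_hand[i, j]: trained on torso-shirt at time i, tested on hand-glove at time j.
    """
    return (np.asarray(acc_hand_to_torso) + np.asarray(acc_torso_to_hand).T) / 2


def box_smooth(acc, size=3):
    """size x size moving average; the window shrinks at the edges (assumption)."""
    acc = np.asarray(acc, dtype=float)
    r = size // 2
    padded = np.pad(acc, r, mode="constant", constant_values=np.nan)
    out = np.empty_like(acc)
    for i in range(acc.shape[0]):
        for j in range(acc.shape[1]):
            out[i, j] = np.nanmean(padded[i:i + size, j:j + size])
    return out


# Hypothetical example: index i corresponds to -100 + 10 * i ms
a = np.full((60, 60), 0.5)
b = np.full((60, 60), 0.5)
a[23, 28] = 0.6   # train hand-glove at 130 ms, test torso-shirt at 180 ms
b[28, 23] = 0.6   # train torso-shirt at 180 ms, test hand-glove at 130 ms
combined = box_smooth(combine_directions(a, b))
```

Because a symmetric 3 × 3 box filter commutes with transposition and averaging is linear, it makes no difference whether the maps are smoothed before or after the two directions are combined.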

DISCUSSION

Here, we asked whether categorical representations in visual cortex are fully driven by category-associated visual features or whether they (at least partly) reflect category membership. Unlike previous studies investigating category selectivity, the stimuli presented in the current study were matched for shape properties, including object-part structure (e.g., hands and gloves both have 5 “fingers”), outline similarity, and symmetry. We found that large clusters in visual cortex are sensitive to shape differences (i.e., “hand/glove” shape versus “upper body” shape): classifiers trained on discriminating hands and torsos successfully discriminated gloves and shirts (and vice versa) in both early visual areas and occipitotemporal cortex. These shape differences were reliably decodable from MEG response patterns as early as 90 ms after stimulus onset.

Crucially, we also found evidence for shape-independent category responses: classifiers trained on discriminating hands and gloves successfully discriminated torsos and shirts (and vice versa) in bilateral clusters in the occipitotemporal cortex. These large clusters likely encompass body-, motion-, and object-selective regions of visual cortex, which closely overlap both at the group level and within individual subjects (Downing et al. 2007). Interestingly, the MEG data showed a specific temporal profile associated with such shape-independent body representations. Response patterns between 130 and 200 ms after stimulus onset allowed for successful cross-classification, in line with previous electrophysiological findings showing that bodies can be differentiated from other categories based on scalp distributions from 130 to 230 ms (Thierry et al. 2006). These fMRI and MEG results thus confirm previous studies on body-selective responses but additionally show that this selectivity is not fully explicable by 2D shape properties.

A particular strength of the cross-decoding approach used here is that it provides a rigorous control of possible visual differences between the two categories (bodies and clothes) beyond the shape matching of the two body-clothing pairs: uncontrolled visual differences in one comparison (e.g., the presence of a neck in torsos, not shirts) would also need to be present in the other comparison (e.g., hand versus glove) for these differences to lead to successful decoding. Thus successful decoding in this analysis likely reflects genuine category membership rather than visual or shape properties. Similarly, it is unlikely that differences in the deployment of spatial attention could account for the results: classifiers picking up on such differences between the two training stimuli (e.g., a preferential allocation of attention to the upper part of torsos, but not shirts) are unlikely to benefit from this when tested on the other comparison. It is still possible, in principle, that there are remaining visual differences, such as skin texture or 3D volume, that are shared by the body conditions but not the clothes conditions. However, we think it is unlikely that such features would drive body-selective responses, considering previous work showing body-selective responses to highly schematic depictions of the body lacking these cues (e.g., point-light motion, stick figures, and silhouettes; Peelen and Downing 2007). Nevertheless, further studies are needed to identify and rule out any such remaining differences.
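The logic of this control can be illustrated with a toy simulation. Everything in it is synthetic: the features, effect sizes, and the nearest-centroid classifier (standing in for LDA) are assumptions. Feature 0 carries a category difference shared by both pairs; feature 1 carries a hypothetical “neck” difference that exists only between torsos and shirts. Such a pair-specific feature supports within-pair decoding but should leave cross-pair decoding near chance, whereas a shared category signal generalizes across pairs.

```python
import numpy as np


def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Classify test rows by the closer class mean (a simple stand-in for LDA)."""
    m0 = X_train[y_train == 0].mean(axis=0)
    m1 = X_train[y_train == 1].mean(axis=0)
    pred = (((X_test - m1) ** 2).sum(axis=1) < ((X_test - m0) ** 2).sum(axis=1)).astype(int)
    return float(np.mean(pred == y_test))


def simulate(category_effect, neck_effect, n=500, n_features=100, seed=0):
    """Toy data: feature 0 = category signal shared by both pairs,
    feature 1 = hypothetical 'neck' difference present only in torso vs shirt."""
    rng = np.random.default_rng(seed)
    y = np.repeat([0, 1], n)                 # 0 = body part, 1 = clothing
    sign = np.where(y == 0, 0.5, -0.5)

    def make_pair(pair_specific_effect):
        X = rng.normal(size=(2 * n, n_features))
        X[:, 0] += category_effect * sign
        X[:, 1] += pair_specific_effect * sign
        return X

    X_hand = make_pair(0.0)           # hand vs glove: no neck difference
    X_torso = make_pair(neck_effect)  # torso vs shirt
    # Within-pair decoding: train on even-numbered trials, test on odd-numbered trials
    within = nearest_centroid_accuracy(X_torso[::2], y[::2], X_torso[1::2], y[1::2])
    cross = (nearest_centroid_accuracy(X_hand, y, X_torso, y)
             + nearest_centroid_accuracy(X_torso, y, X_hand, y)) / 2
    return within, cross


print(simulate(category_effect=0.0, neck_effect=2.0))  # confound only
print(simulate(category_effect=2.0, neck_effect=0.0))  # shared category signal only
```

In the confound-only case, `within` should lie well above 0.5 while `cross` stays close to 0.5; in the category-only case, `cross` should be clearly above chance.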

We interpret the present findings as showing that the presence of particular visual or shape features is not necessary for evoking a body-selective response. Rather, these responses appear to reflect (or follow from) the categorization of an object as being a body part, a category that is associated with specific perceptual and conceptual properties, such as bodily actions/movements, social relevance, and agency (Sha et al. 2015). Different cues can support the inference that a perceived object is a body. These cues are often part of the object itself (e.g., characteristic body shapes or movements) but may also come from the surrounding context (Cox et al. 2004), from other modalities, or from expectations and knowledge (e.g., knowing that a mannequin in a shopping window is not a human). Our results show that body-selective responses in lateral occipitotemporal cortex, emerging at around 130–200 ms, follow from this categorical inference rather than reflecting a purely stimulus-driven response to the visual features of the object.

Interestingly, clusters exhibiting category-independent shape responses overlapped with clusters exhibiting shape-independent category responses. This observation is congruent with previous studies highlighting both visual (Andrews et al. 2015; Baldassi et al. 2013) and semantic (Huth et al. 2012; Sha et al. 2015) dimensions as organizational principles of high-level visual cortex. Response patterns in inferotemporal cortex seem to be best explicable by models using a combination of visual feature attributes and category membership (Khaligh-Razavi and Kriegeskorte 2014), suggesting that in high-level visual cortex these representations coexist.

Whereas the fMRI data demonstrated that shape and category responses are spatially entwined, the MEG results revealed differing temporal dynamics of these responses: although shape-specific responses could be decoded early and across a relatively long time interval, shape-independent category responses showed a specific temporal signature between 130 and 200 ms. We interpret this as a temporally restricted period where cortical responses reflect processing of category membership: successful decoding in the category cross-decoding analysis requires not only shape independence of body-specific responses, but also generalization across different body parts. This generalization might be restricted to the specific time window revealed here, with earlier computations reflecting stimulus-specific attributes (related to individual body parts) and later processing reflecting more sophisticated stimulus analysis that diverges for different body parts (e.g., hands carry social and action-related information different from torsos). Hence, the temporally specific generalization across body parts observed here might reflect a unique timestamp of category-level recognition. Interestingly, this category-level recognition occurred at different time points for the two body parts included in the study, with slightly faster categorization of the hands (130–160 ms) than the torso (160–200 ms). This later discriminability of torsos and shirts may reflect the greater similarity of these two stimuli on a perceptual level (Fig. 1A), leading to relatively delayed recognition of the torsos as being a body part.

To conclude, the present study characterizes the spatial and temporal profiles of shape-independent categorical neural responses by showing that MEG and fMRI response patterns distinguish between body parts and closely matched control stimuli. The patterns that distinguished each of the two body parts from their respective shape-matched controls showed sufficient commonality to allow for cross-pair decoding of object category. These generalizable category-selective response patterns were localized in space (lateral occipitotemporal cortex) and time (130–200 ms after stimulus onset).

GRANTS

The research was funded by the Autonomous Province of Trento, Call “Grandi Progetti 2012,” Project “Characterizing and improving brain mechanisms of attention (ATTEND).”

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

D.K., D.C.A., and M.V.P. conception and design of research; D.K. and D.C.A. performed experiments; D.K. and D.C.A. analyzed data; D.K., D.C.A., and M.V.P. interpreted results of experiments; D.K. prepared figures; D.K. and M.V.P. drafted manuscript; D.K., D.C.A., and M.V.P. edited and revised manuscript; D.K., D.C.A., and M.V.P. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank Nick Oosterhof for help with data analysis.

REFERENCES

- Andrews TJ, Watson DM, Rice GE, Hartley T. Low-level properties of natural images predict topographic patterns of neural responses in the ventral visual pathway. J Vis 15: 3, 2015.

- Baldassi C, Alemi-Neissi A, Pagan M, DiCarlo JJ, Zecchina R, Zoccolan D. Shape similarity, better than semantic membership, accounts for the structure of visual object representations in a population of monkey inferotemporal neurons. PLoS Comput Biol 9: e1003167, 2013.

- Bona S, Cattaneo Z, Silvanto J. The causal role of the occipital face area (OFA) and lateral occipital (LO) cortex in symmetry perception. J Neurosci 35: 731–738, 2015.

- Brainard DH. The Psychophysics Toolbox. Spat Vis 10: 433–436, 1997.

- Caldara R, Seghier ML, Rossion B, Lazeyras F, Michel C, Hauert CA. The fusiform face area is tuned for curvilinear patterns with more high-contrasted elements in the upper part. Neuroimage 31: 313–319, 2006.

- Carlson T, Tovar DA, Alink A, Kriegeskorte N. Representational dynamics of object vision: the first 1000 ms. J Vis 13: 1–19, 2013.

- Cichy RM, Pantazis D, Oliva A. Resolving human object recognition in space and time. Nat Neurosci 17: 455–462, 2014.

- Cox D, Meyers E, Sinha P. Contextually evoked object-specific responses in human visual cortex. Science 304: 115–117, 2004.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science 293: 2470–2473, 2001.

- Downing PE, Wiggett AJ, Peelen MV. Functional magnetic resonance imaging investigation of overlapping lateral occipitotemporal activations using multi-voxel pattern analysis. J Neurosci 27: 226–233, 2007.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. The fusiform “face area” is part of a network that processes faces at the individual level. J Cogn Neurosci 12: 495–504, 2000.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293: 2425–2430, 2001.

- Huth AG, Nishimoto S, Vu AT, Gallant JL. A continuous semantic space describes the representation of thousands of object and action categories across the human brain. Neuron 76: 1210–1224, 2012.

- Julian JB, Fedorenko E, Webster J, Kanwisher N. An algorithmic method for functionally defining regions of interest in the ventral visual pathway. Neuroimage 60: 2357–2364, 2012.

- Kaiser D, Oosterhof NN, Peelen MV. The temporal dynamics of target selection in real-world scenes. J Vis 15: 740, 2015.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for the perception of faces. J Neurosci 17: 4302–4311, 1997.

- Khaligh-Razavi SM, Kriegeskorte N. Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS Comput Biol 10: e1003915, 2014.

- Kriegeskorte N, Mur M, Ruff DA, Kiani R, Bodurka J, Esteky H, Tanaka K, Bandettini P. Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron 60: 1126–1141, 2008.

- Oostenveld R, Fries P, Maris E, Schoffelen JM. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci 2011: 156869, 2011.

- Peelen MV, Downing PE. The neural basis of visual body perception. Nat Rev Neurosci 8: 636–648, 2007.

- Sha L, Haxby JV, Abdi H, Guntupalli JS, Oosterhof NN, Halchenko YO, Connolly AC. The animacy continuum in the human ventral vision pathway. J Cogn Neurosci 27: 665–678, 2015.

- Smith SM, Nichols TE. Threshold-free cluster enhancement: addressing problems of smoothing, threshold dependence and localisation in cluster inference. Neuroimage 44: 83–98, 2009.

- Thierry G, Pegna AJ, Dodds C, Roberts M, Basan S, Downing PE. An event-related potential component sensitive to images of the human body. Neuroimage 32: 871–879, 2006.