Abstract

The growing availability of efficient and relatively inexpensive virtual auditory display technology has provided new research platforms to explore the perception of auditory motion. At the same time, deployment of these technologies in command and control as well as in entertainment roles is generating an increasing need to better understand the complex processes underlying auditory motion perception. This is a particularly challenging processing feat because it involves the rapid deconvolution of the relative change in the locations of sound sources produced by rotations and translations of the head in space (self-motion) to enable the perception of actual source motion. The fact that we perceive our auditory world to be stable despite almost continual movement of the head demonstrates the efficiency and effectiveness of this process. This review examines the acoustical basis of auditory motion perception and a wide range of psychophysical, electrophysiological, and cortical imaging studies that have probed the limits and possible mechanisms underlying this perception.

Keywords: auditory motion perception, auditory velocity threshold, minimum audible movement angle

Introduction

We live in a world that moves. On the one hand, animate objects can present as threats or opportunities and tracking their course of movement is critical to responding appropriately. On the other hand, listeners are also in motion, so physically stationary sound sources move relative to the listener. The challenge here is twofold: first, understanding how motion per se is encoded in the auditory nervous system and, second, understanding how the listener is able to disambiguate the actual motion of a source from the apparent motion. Despite almost constant head motion, the fact that we still perceive the world around us as stable may provide some important clues as to how the nervous system performs this complicated deconvolution (Brimijoin & Akeroyd, 2014). Furthermore, a fair bit is known about this ubiquitous perceptual property in vision, and there is undoubtedly much there to inform our understanding in audition. There are, however, material differences in the encoding of space between the two systems: visual space largely reflects the spatiotopic coding of the receptors on the retina, while auditory space is necessarily computational and relies on acoustic cues that arise at each ear and between the ears. In the course of this review, we will also examine some of the important consequences of these differences.

The study of the perception and physiological processing of moving sound sources has been complicated to some extent by the technical difficulty in generating adequately controlled stimuli. Mechanically moving a sound source soundlessly is challenging and the practical trajectories are usually simple linear and rotational movements with respect to the listener. Perrott and Strybel (1997) pointed out that one of the earliest reports exploiting simulated auditory motion, using binaural beat stimuli, appeared in the literature almost 100 years ago (Peterson, 1916). In marking this centenary, it is timely to take stock of our current understanding and what major outstanding questions or themes remain. Aside from the work of Rayleigh (1907), Perrott’s paper also documented a spurt of activity in this area in the early part of the 20th century and from the late 1960s a very rapid growth in the number of studies, some using real motion but the majority (∼70%) using forms of movement simulation.

In this review, we will consider the major themes that have emerged in those 100 years. We will argue that many of the anomalies and contradictions in the literature can be traced back to the limitations of the stimulus paradigms with both real and simulated movement. Another reason we believe that such a review is timely is the increasing access to the advanced virtual and augmented reality technologies that can flexibly generate the full set of veridical acoustic cues that drive this perception. Coupled with precise human movement tracking, such systems can be used to examine not just the question of the perception of auditory motion but the disambiguation of source and self-motion, together with the cross-modal and audio-motor interactions that undoubtedly play an important role in our everyday perceptual experiences.

Acoustic Cues to Spatial Perception

A sound source will have three different perceptual attributes that are relevant to this discussion. The first is whether it is moving or stationary; the second is the location and trajectory of the motion; and the third is the velocity of motion relative to that of the head. At the most fundamental level, perception is dependent on the acoustic cues used in the spatial localization of a sound source, so we will begin with a brief review of the physical and psychophysical basis of the perception of stationary sound sources.

Binaural Cues to Sound Location

The placement of the two ears on roughly opposite sides of the head allows the auditory system to simultaneously sample the sound field from two different spatial locations and, for sounds located away from the listener’s midline, under slightly different acoustic conditions. The information from both ears constitutes the binaural cues to location (for reviews, see Carlile, 1996, 2014).

For sound locations off the midline, the path length differences between the sound source and each ear produce a difference in the time of arrival (onset) of the sound to each ear: the interaural time difference (ITD) cue to azimuth or horizontal location. The magnitude of the ITD roughly follows a sine function of horizontal angle (0° directly ahead; Shaw, 1974) so that small displacements from the midline produce much larger changes in ITD than do the same displacements at more lateral locations. The auditory system is also sensitive to the instantaneous phase of low-frequency sounds (<1.5 kHz) and the amplitude envelopes of high frequencies (e.g., Bernstein & Trahiotis, 2009; Ewert, Kaiser, Kernschmidt, & Wiegrebe, 2012).
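The consequence of the sine relationship can be illustrated numerically. The following is a minimal sketch, assuming a simple path-length model in which ITD = (d/c)·sin(azimuth); the effective ear separation `EAR_SEPARATION` and the speed of sound `SPEED_OF_SOUND` are illustrative assumptions, not values taken from the studies cited here.

```python
import math

# Illustrative assumptions (not from the cited studies)
SPEED_OF_SOUND = 343.0   # m/s, air at roughly 20 degrees C
EAR_SEPARATION = 0.18    # m, assumed effective interaural path difference at 90 degrees

def itd_seconds(azimuth_deg):
    """ITD under a simple sine model; 0 degrees is directly ahead."""
    return (EAR_SEPARATION / SPEED_OF_SOUND) * math.sin(math.radians(azimuth_deg))

def itd_change_us(azimuth_deg, step_deg=1.0):
    """Change in ITD (microseconds) for a small displacement from azimuth_deg."""
    delta = itd_seconds(azimuth_deg + step_deg) - itd_seconds(azimuth_deg)
    return delta * 1e6

if __name__ == "__main__":
    for az in (0, 30, 60, 85):
        print(f"azimuth {az:2d} deg: ITD change per 1 deg step = {itd_change_us(az):.2f} us")
```

Under these assumptions, a 1° step at the midline changes the ITD by roughly twice as much as the same step at 60°, and the change becomes very small near the interaural axis.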

The reflection and refraction of the sound by the head for wavelengths smaller than the head also give rise to an interaural level difference cue (ILD), which is also dependent on the horizontal location of the source. The pinna and concha also boost and spectrally filter these wavelengths, particularly for locations in space contralateral to the ear. The ILD calculated for a spherical head shows a maximum not for locations on the interaural axis, but for locations 45° on either side of that axis (Shaw, 1974). Furthermore, the acoustical axis of the pinna is orientated toward the frontal field over this frequency range (Middlebrooks, Makous, & Green, 1989). The combination of these two acoustic properties causes the ILDs for the mid to high-frequency range to increase to a maximum for locations in the anterior field, off the midline, which varies in a frequency-dependent manner due to the spectral filtering characteristics of the pinna and concha (Shaw, 1974).

The observation that the ILD cues are predominant at the middle to high-frequency range of human hearing, and the ITD cues are particularly important for low frequencies was first made by Lord Rayleigh (1907); this is referred to as the duplex theory of localization (see also Mills, 1958, 1972). Despite a range of limitations in its interpretation, the theory still holds considerable influence over the way many researchers think about the utilization of these acoustic cues.

One generally recognized limitation is that these binaural cues are ambiguous because of the symmetrical placement of the ears on the head. That is, any particular ITD/ILD combination can only specify the sagittal plane containing the source. This ambiguity has been referred to as the “cone of confusion” for specific binaural intervals (Carlile, Martin, & McAnally, 2005; but see also Shinn-Cunningham, Santarelli, & Kopco, 2000). This ambiguity can be resolved using the so-called monaural spectral cues provided by the location-dependent filtering of the outer ear (see below), but this requires that the sound source contains energy covering a relatively broad range of frequencies.

For a repeated sound or one of moderate duration, moving the head can help resolve the cone of confusion by multiply sampling the sound source and integrating that information with information about the movement of the head (van Soest, 1929, as quoted in Blauert, 1997; Brimijoin & Akeroyd, 2012; Perrett & Noble, 1997; Pollack & Rose, 1967; Wallach, 1940; Wightman & Kistler, 1999). This integration of motor and sensory information in spatial hearing is very important, but largely neglected, a theme to which we will return.

Spectral or Monaural Cues to Sound Location

The complexly convoluted shape of the outer ear produces a complex pattern of sound resonances and diffractions that boost and cut different frequencies. The specific spectral pattern depends on the coupling of the various acoustic mechanisms with the sound field which in turn is dependent on the relative angle of incidence of the wave front (see for instance Teranishi & Shaw, 1968; reviews Carlile, 1996; Shaw, 1974). Many of the more prominent spectral features (>4 kHz) that vary with location are likely represented in the neural code (Carlile & Pralong, 1994).

Quite a number of studies have demonstrated superior levels of accuracy (e.g., Carlile, Leong, & Hyams, 1997; Makous & Middlebrooks, 1990b) and precision (Mills, 1958) in auditory localization performance for the anterior region of space. This is likely to be due at least in part to more spatially detailed acoustic cues as a consequence of the anteriorly directed pinna acoustic axis and the diffractive effects of pinna aperture. The specific frequencies of the spectral gains and notches are highly dependent on the spatial location of the source for this region of space.

One important perceptual outcome of the filtering of the sound by the outer ear is the emergent perception of an externalized sound image. Head movements can also play a role in the emergent perception of an externalized sound image (Brimijoin, Boyd, & Akeroyd, 2013; Loomis, Hebert, & Cicinelli, 1990).

Accuracy and Resolution in Auditory Localization

In ecological terms, localizing a sound source entails identifying its spatial location relative to the listener. While this is patently obvious, it is not necessarily the way that performance has been measured. Many early studies of the processing of the acoustic cues to location were focused on the absolute sensitivity to these cues and how they might vary with overall magnitude (the so-called Weber fraction). In the classic study of Mills (1958), the spatial resolution or the minimum audible angle (MAA) was measured for a wide range of frequencies for different locations on the anterior audio-visual horizon. In a two-alternative forced choice (2AFC) task, the subject identified if the second of two short tone bursts, separated by a second of silence, appeared to the right or left of the first. For locations around the midline, the MAA ranged from 1° to 3° as a function of frequency (1.5 kHz and 10 kHz had the highest thresholds) and the MAA increased with increasingly more lateral locations (7° or much greater for some frequencies at 75° azimuth).

The MAA is a measure of the precision or the just noticeable difference in spatial location. Importantly, the direction of the change in location between the two test stimuli is necessary to ensure that the just noticeable difference is related to spatial location as opposed to some other change in the percept. A large number of other studies have examined absolute localization accuracy (e.g., Butler, Humanski, & Musicant, 1990; Gilkey & Anderson, 1995; Good & Gilkey, 1996; Hofman & Van Opstal, 1998, 2003; Makous & Middlebrooks, 1990a; Oldfield & Parker, 1984; Wightman & Kistler, 1989) including work from our own laboratory (see in particular Carlile et al., 1997). These data are best considered in terms of the overall accuracy (bias), represented by the average localization response to repeat stimuli, and precision, as indicated by the variance of those responses. The MAA is much smaller (at least fivefold) than the precision exhibited by the absolute localization response and this difference is likely due to a number of factors. The MAA is a perceptual task while the different approaches used to indicate the perceived absolute location of the stimulus will each have a different error associated with the method (pointing with the nose or hand to the spatial location, using a pointer on an interface or calling out a spatial coordinate estimate, etc.). On the other hand, the differences between the MAA and absolute localization are also likely to reflect the differences in the nature of the task: the MAA is a just noticeable difference (JND) task, where there is a standard or anchor that a second stimulus is judged by, and the localization task is a single-interval absolute judgement task; these two tasks rely on entirely different domains of judgement, even though they share the same perceptual cues.

Sensory–Motor Integration in Auditory Spatial Perception

Before we consider in detail the research that has focussed particularly on the perception of auditory motion, it is important to consider the related question of the role of non-auditory information in auditory spatial processing. In humans, the ears are symmetrically placed on the head and immobile so that the acoustic cues to location are head-centred. Of course, the relative position of the head is constantly changing and needs to be accounted for in the generation of the perceived location of a sound source. Using an audio-visual localization paradigm, Goossens and van Opstal (1999) have shown that auditory targets are encoded in a body-centred, rather than a head-centred, coordinate system. This indicates that the accurate perception of the location of a sound source requires the integration of information about the relative position of the head with respect to the body. Not surprisingly then, they also found that head orientation influenced the localization of a stationary auditory target.

This finding has been corroborated by other studies: Lewald and Ehrenstein (1998) reported that the perceived midline was influenced by the direction of gaze or the horizontal orientation of the head. This has been extended to both horizontal and vertical dimensions using a laser pointing task (Lewald & Getzmann, 2006). Moreover, the spatial shift induced by eye position has also been shown to occur in the absence of a visual target (Cui, Razavi, O’Neill, & Paige, 2010; Razavi, O’Neill, & Paige, 2007). Whether the source of this postural information is afferent proprioceptive information or efference copy from the motor system is not known, although vestibular stimulation has been shown to influence auditory spatial perception in the absence of changes in the posture of the head (DiZio, Held, Lackner, Shinn-Cunningham, & Durlach, 2001; Lewald & Karnath, 2000).

In summary, the emerging picture suggests that non-auditory information about the relative location of the head is important in converting the head-centred, auditory spatial cues into body-centred coordinates. This may well provide the basis for both integrated action in response to auditory spatial information and for the generation of the perception of a stable auditory world.

The Perception of Source Motion

The perception of motion can represent either the actual movement of the source through space or the apparent motion produced by the translation or rotation of the head in space. It is probably fair to say that the majority of motion events that we experience are those that result from the self-motion generated by an active, ambulatory listener with a highly mobile head. By contrast, with very few exceptions, it is motion perception with a static head that has been the focus of the research in this area to date.

It is also important to note that, in the latter part of the last century, much of the research and subsequent debate have revolved around conceptions of motion processing that have their roots in visual motion processing. In particular, the contributions of Hassenstein and Reichardt to the understanding of low-level motion processing have been extremely influential (discussed in Borst, 2000). In vision, low-level motion detectors can selectively respond to velocity and direction with monocular input, using a simple neural circuitry first proposed by Reichardt (1969). First, although the auditory system lacks a spatiotopic receptor epithelium (a feature central to the idea of low-level motion processing in the retina), the search for auditory motion detectors focused on cues that could vary continuously as a function of the variation in spatial location, such as dynamic changes in interaural phase (e.g., Spitzer & Semple, 1991; discussed in detail later). A major limitation of such approaches is that actual motion induces a coherent variation in each of the location cues reviewed earlier. We will review work below demonstrating that the use of individual cues results in a much reduced perception of motion in the listener, a reduced auditory motion aftereffect, and a significantly reduced motion response in imaging and electroencephalography (EEG) studies when compared with a veridical combination of cues.

Second, there has been much debate about whether low-level motion detectors exist in the auditory system. Such motion detectors, first modeled by Reichardt (1969) in vision, would be characterized by their monaural responses and peripheral location. Yet, as discussed in the Neurophysiology section, such low-level motion detectors have not been found in the auditory system. This suggests that auditory motion perception may be subserved by a higher level system, similar to that of third-order motion detectors in vision, which are centrally located, binocular in nature, and heavily modulated by attention (Boucher et al., 2004). In audition, such a third-order system would likely take as inputs positional information resolved from binaural cues and form the basis for a “snap-shot” style model.

To date, this has not led to the sort of rigorous modeling that gave rise to many of the most useful predictions around visual motion detection (as discussed in detail in Borst, 2000) and has remained at a qualitative level of explanation. Clearly, we can perceive auditory motion. There are many EEG and functional imaging studies demonstrating the cortical areas responding to moving auditory stimuli, which are complemented by lesion case studies demonstrating perceptual deficits (Griffiths et al., 1996). Positing these as evidence for motion detectors per se, however, adds nothing to the discussion about the nature and processing of the relevant cues.

One final introductory point is the nature of a moving stimulus. By definition, movement represents a change in location over time. The emergent property of speed or velocity can be derived from the duration of the movement and the distance traveled. Is velocity per se (a certain distance in a certain time) or distance or time the critical factor in movement threshold? How does sensitivity to these elements covary in an ecologically or psychophysically meaningful way? This covariation of distance, time, and velocity is a complication that needs to be managed both in terms of the experimental design and the interpretation of the nature of the effective stimulus underlying the perception of velocity and direction of motion – an issue we will return to below.
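The covariation described above follows directly from velocity = distance / duration. As a minimal sketch (the reference and test velocities, duration, and arc below are arbitrary illustrative values, not taken from any study), two stimuli that differ in velocity must also differ either in distance traveled (if duration is fixed) or in duration (if distance is fixed):

```python
def arc_distance_deg(velocity_deg_s, duration_s):
    """Angular distance traveled at constant velocity."""
    return velocity_deg_s * duration_s

def duration_s_for(velocity_deg_s, distance_deg):
    """Time needed to travel a fixed arc at constant velocity."""
    return distance_deg / velocity_deg_s

if __name__ == "__main__":
    reference, test = 30.0, 40.0  # deg/s, arbitrary illustrative velocities

    # Fixed duration: the faster stimulus travels farther (a distance cue).
    fixed_duration = 0.5  # s
    print("fixed duration:",
          arc_distance_deg(reference, fixed_duration), "deg vs",
          arc_distance_deg(test, fixed_duration), "deg")

    # Fixed distance: the faster stimulus is shorter (a duration cue).
    fixed_distance = 15.0  # deg
    print("fixed distance:",
          duration_s_for(reference, fixed_distance), "s vs",
          duration_s_for(test, fixed_distance), "s")
```

In either design a listener could discriminate the two stimuli without encoding velocity at all, which is why distance and duration need to be controlled or randomized.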

Detection of Motion

The subjective perception of motion can be induced using the sequential presentation of discrete auditory events (also referred to as “saltation”; Phillips & Hall, 2001). For relatively short (50 ms) bursts of sound, the perception of motion is determined by the stimulus onset asynchrony (SOA): that is, the time from the onset of the leading stimulus to the onset of the following burst. For locations arranged about the anterior midline, the optimal SOA was between 30 ms and 60 ms. In this range, subjects were able to correctly discriminate the direction of motion and the relative spatial separation of the sources (6°, 40°, or 160°) did not affect the perception of motion (Strybel, Manligas, Chan, & Perrott, 1990). The optimal SOA also depended on the duration of the first stimulus (see Strybel et al., 1992 and Perrott & Strybel, 1997 for discussion). Following monaural ear plugging, listeners still reported motion for these stimuli but were at chance levels in identifying the direction of motion (Strybel & Neale, 1994). This suggests that the spectral changes associated with the change in location were sufficient to induce the perception of motion; however, at least for the horizontal dimension tested, the binaural cues appear necessary to determine the direction of motion. The SOA measures using noise bursts are broadly similar to findings reported using click stimuli where the strength of the perception of motion increased with binaural presentation and where binaural level differences were less than 30 dB (Phillips & Hall, 2001). Notably, these two studies focused on saltation or the perception of sequential stepwise movements. In contrast, Harris and Sergeant (1971) found that the MAA for a real moving sound source was similar between monaural and binaural listening with white noise, while that for a pure tone increased substantially, presumably due to the loss of spectral cues.

At the other end of the continuum, the upper limits of rotational motion perception have been examined under anechoic and reverberant conditions (Feron, Frissen, Boissinot, & Guastavino, 2010). Using a 24-speaker array, listeners were able to perceive simulated horizontal rotation up to 2.8 rotations/s, that is, close to 1000°/s. Listeners were slightly more sensitive to accelerating compared with decelerating noise, as well as band-limited and harmonic complexes (12–32 harmonics). Listeners were more sensitive to motion of harmonic complexes with low fundamental frequencies, presumably because of the greater availability of ITD information. Surprisingly, threshold velocities were even faster for all stimuli when testing in highly reverberant conditions (a concert hall). It was suggested that this might be the consequence of a broader source image in the reverberant compared with the dry conditions (but see Sankaran, Leung, & Carlile, 2014 for lower velocities).

The main focus of this review is on rotational trajectories. However, it is also instructive to briefly discuss looming and linear motion, both of which are common in a natural environment. In a series of psychophysical tasks, Lutfi and Wang (1999) examined the relative weighting of the overall intensity, ITD, and Doppler shift cues that are involved in a sound that moved linearly in front of a listener. They reported that intensity and ITDs correlated most with displacement discrimination, at least at slower velocities (10 m/s), while Doppler cues dominated at the faster velocities (50 m/s). Not surprisingly, velocity and acceleration discrimination were highly correlated with the Doppler effects. A looming stimulus describes an object that is moving toward the listener, such as that of an approaching threat. Unlike rotational motion which requires interaural cues, a “looming” percept can be produced from increasing intensity with a diotic stimulus (Seifritz et al., 2002). Its opposite, receding motion, can be generated by a decrease in intensity. A number of studies have examined the perception of looming motion (Bach, Neuhoff, Perrig, & Seifritz, 2009; Ghazanfar, Neuhoff, & Logothetis, 2002; Gordon, Russo, & MacDonald, 2013; Maier & Ghazanfar, 2007; Neuhoff, 2001), in particular by comparing the salience of looming versus receding sounds (Hall & Moore, 2003; Rosenblum, Wuestefeld, & Saldaña, 1993). It has been shown that subjects consistently overestimate the intensity of a looming stimulus and underestimate the corresponding time to target (Neuhoff, 1998). This is not surprising, given the evolutionary advantages this can afford for threat evasion. Seifritz et al. (2002) explored the neural mechanisms subserving this perceptual bias using functional magnetic resonance imaging. They found that a looming stimulus activated a wider network of circuitry than a receding stimulus. Furthermore, consistent with horizontal and vertical auditory motion, they also found greater activation in the right temporal plane in both looming and receding motion compared with stationary sounds.
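To give a sense of scale for the Doppler cue at the two linear velocities mentioned above (10 m/s and 50 m/s), the following minimal sketch applies the standard Doppler formula for a source approaching a stationary listener head-on. The 1 kHz source frequency and the speed of sound are illustrative assumptions, and the stimuli actually used in the cited study may have differed.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed (air at roughly 20 degrees C)

def doppler_frequency_hz(source_hz, approach_speed_m_s):
    """Frequency heard by a stationary listener for a source approaching
    head-on at approach_speed_m_s (negative values mean receding)."""
    return source_hz * SPEED_OF_SOUND / (SPEED_OF_SOUND - approach_speed_m_s)

if __name__ == "__main__":
    source_hz = 1000.0  # Hz, arbitrary illustrative source frequency
    for speed in (10.0, 50.0):
        heard = doppler_frequency_hz(source_hz, speed)
        shift_percent = 100.0 * (heard - source_hz) / source_hz
        print(f"{speed:4.0f} m/s: heard {heard:7.1f} Hz ({shift_percent:+.1f}%)")
```

Under these assumptions the shift is about 3% at 10 m/s and about 17% at 50 m/s, which is consistent with Doppler cues becoming more prominent at the faster velocity.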

Direction of Motion and the Minimum Audible Movement Angle

The MAA is presumed to be the limiting condition for motion perception. In the latter quarter of the 20th century, work focused on the measurement of the minimum audible movement angle (MAMA), which was defined as the minimum distance that a stimulus needed to be moved to be distinguished from a stimulus that was stationary. The first studies had huge disparities in the velocities tested, which reflected the different ways in which the movement was generated. Harris and Sergeant (1971) used a loudspeaker on a trolley moving linearly at 2.8°/s and, for a 500 Hz pure tone, reported a MAMA of around 2°. Perrott and Musicant (1977), who attached a loudspeaker to the end of a rapidly rotating boom and examined velocities from 90°/s to 360°/s, reported that the MAMA for a 500 Hz tone increased from 8.3° to 59° with increasing velocity. Grantham (1986) used stereo balancing to simulate the motion of a 500 Hz tone at velocities of 22°/s to 360°/s. The MAMA data from eight studies spanning 1971 to 2014 are plotted in Figure 1 for locations around the frontal midline.

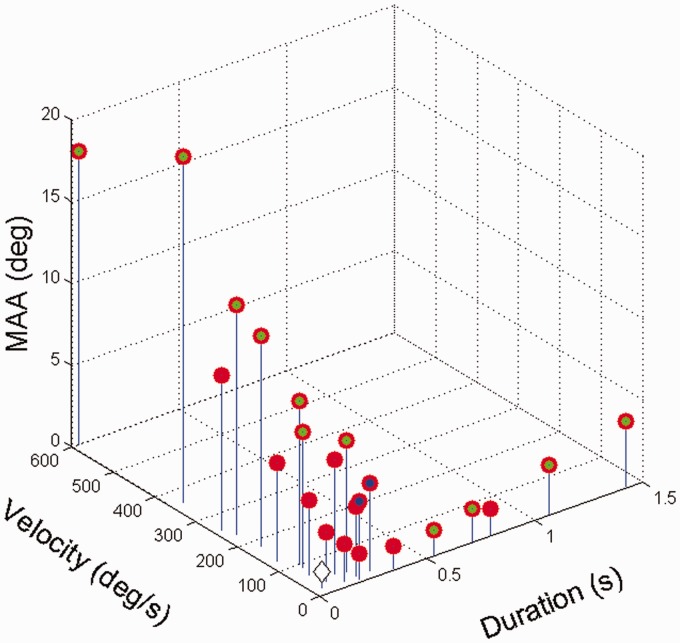

Figure 1.

The relationship between horizontal MAMA at 0° azimuth, velocity, and stimulus duration at MAMA threshold for eight studies. The green filled symbols indicate pure tone stimuli (usually 500 Hz), the blue filled symbols band limited stimuli (>6 kHz or <2 kHz) and the open diamond symbol MAMA threshold following many weeks of training (Data from Brimijoin & Akeroyd, 2014; Grantham, 1986; Grantham et al., 2003; Harris & Sergeant, 1971; Perrott & Marlborough, 1989; Perrott & Musicant, 1977; Strybel, Manligas, et al., 1992; Strybel, Witty, & Perrott, 1992).

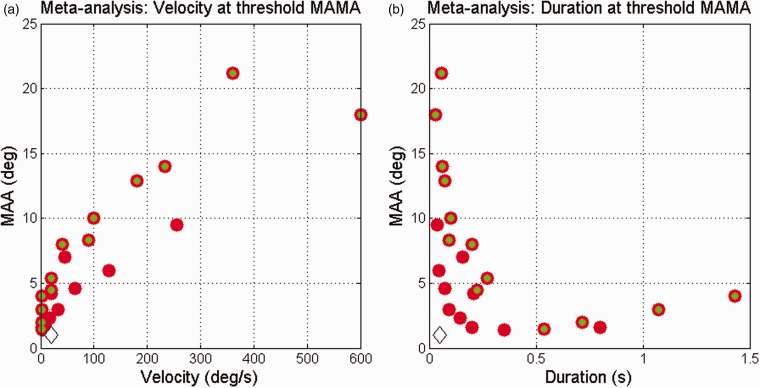

Despite the large differences in the stimulation procedures, stimulus characteristics and measurement protocols, a number of general observations can be made: (a) MAMA is a strong function of velocity (see also Figure 2a); (b) wide band stimuli (filled red circle) show smaller MAMAs than pure tones (filled green circle); (c) training has a significant effect on the MAMA (open black diamond; see Perrott & Marlborough, 1989 and Strybel, Manligas, & Perrott, 1992). The variation in MAMA with velocity is also consistent with the increase in MAA with decreasing interstimulus interval (ISI; see Grantham, 1997). When collapsed across velocity (Figure 2b) and the effects of training (open diamond) are ignored, the MAMA appears to asymptote at around 1.5° for durations greater than 200 ms. This observation was first made by Grantham (1986) who originally estimated this as ∼5° MAMA at ∼150 ms duration for a 500 Hz tone and suggested that this reflected a minimum integration time for optimal performance.

Figure 2.

Data from Figure 1 collapsed across duration (a) and velocity (b). All other details as per Figure 1.
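Because the MAMA is a distance, each threshold implies a stimulus duration at threshold: duration = MAMA / velocity. The following minimal sketch applies this to the 500 Hz thresholds quoted earlier in this section for Harris and Sergeant (1971) and Perrott and Musicant (1977). The calculation assumes constant velocity over the threshold arc, and the data structure is purely illustrative.

```python
def duration_at_threshold_ms(mama_deg, velocity_deg_s):
    """Stimulus duration (ms) needed to sweep the MAMA at constant velocity."""
    return 1000.0 * mama_deg / velocity_deg_s

# (label, velocity in deg/s, MAMA in deg), values as quoted in the text
THRESHOLDS = [
    ("Harris & Sergeant (1971)", 2.8, 2.0),
    ("Perrott & Musicant (1977), slowest", 90.0, 8.3),
    ("Perrott & Musicant (1977), fastest", 360.0, 59.0),
]

if __name__ == "__main__":
    for label, velocity, mama in THRESHOLDS:
        ms = duration_at_threshold_ms(mama, velocity)
        print(f"{label}: {mama} deg at {velocity} deg/s -> {ms:.0f} ms")
```

Although the MAMA grows about sevenfold across the Perrott and Musicant velocities, the implied durations stay within roughly 90 ms to 165 ms, which fits the idea that a minimum stimulus duration, rather than distance alone, limits performance at high velocities.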

Using broad-band pulses, Saberi and Perrott (1990) simulated a wide range of velocities and showed a U-shaped function for horizontal MAMA: data from two subjects showed an increase in the MAMA for very slow movement of less than 1°/s. This suggests that there is an optimal velocity for movement detection of between 1°/s and around 20°/s. Figure 1 also illustrates that there is a minimum amount of perceptual “information” required before the sound is judged to be moving, and this could be the stimulus duration or the distance traveled. Distance was not simply about the locations of the end points of the motion, as continuously sounding stimuli are associated with a significant (albeit small) improvement in MAMA compared with short stimuli marking the end points (Perrott & Marlborough, 1989). Moreover, accelerating and decelerating stimuli can be discriminated over relatively short durations (90 ms and 310 ms for 18° and 9° of arc movement, respectively; Perrott, Costantino, & Ball, 1993), indicating sensitivity to sound events during the course of the movement (Grantham, 1997).

The MAMA for horizontal movement varies as a function of the azimuth location (Grantham, 1986; Harris & Sergeant, 1971; Strybel, Manligas, et al., 1992) and follows much the same pattern as the MAA, increasing two- to threefold from 0° to 60° from the midline and increasing substantially for locations behind the subject (Saberi, Dostal, Sadralodabai, & Perrott, 1991). For horizontal movement at different elevations, Strybel, Manligas, et al. (1992) reported that the MAMA increased slightly with elevation but not substantially until the elevation was greater than 70°. The MAMA also increased marginally for diagonal trajectories but was significantly larger for vertical trajectories (Grantham, Hornsby, & Erpenbeck, 2003; Saberi & Perrott, 1990). The vertical MAMA was not substantially different for anterior or posterior locations (Saberi et al., 1991). These latter studies also suggested that the diagonal MAMA is defined by the relative contributions of the binaural (horizontal) and monaural (vertical) cues.

In general, for horizontal moving stimuli around the midline, the MAMA was 2 to 3 times larger than the MAA for static stimuli when measured under the same conditions (Brimijoin & Akeroyd, 2014; Grantham, 1986; Grantham et al., 2003; Harris & Sergeant, 1971; Saberi & Perrott, 1990; but see Grantham, 1986, footnote 2). This was also the case for measures along diagonal or vertical orientations (Grantham et al., 2003; Saberi & Perrott, 1990). The “dynamic” MAA has also been measured using moving sound sources and found to agree with that measured using static sources, indicating that the larger MAMA is not simply the result of “blurred” localization (Perrott & Musicant, 1981). With extensive training, however, the MAMA has been shown to approach the previously published MAA for horizontal broad-band sounds around the midline (Saberi & Perrott, 1990; Perrott & Marlborough, 1989; cf. Perrott & Saberi, 1990). From these data, however, it is not clear if this represents perceptual or procedural learning.

Velocity

The perception of the rate of motion of a sound source (its velocity) has been examined psychophysically in only a handful of studies. Altman and colleagues have used dynamic variation in the ITD (Altman & Viskov, 1977) and ILD (Altman & Romanov, 1988) of trains of clicks in a 2AFC discrimination task with a reference velocity of 14°/s. Using only ITD, the difference limens were surprisingly large at 10.8°/s, whereas ILD variation produced difference limens of around 2°/s (see Figure 3). Although these stimuli are both impoverished and conflicted (real motion produces a covariation of each of the localization cues), this result suggests that ILD may provide a more salient cue for velocity discrimination, a difficult finding for those physiological studies that have used binaural beat or other temporally varying stimuli in the search for low-level motion detectors (e.g., Spitzer & Semple, 1991, 1993; see also below). These experiments involved radial motion. When the movement trajectory is linear, intensity changes and Doppler shifts provide the most salient cues for velocity and acceleration discrimination (see Lutfi & Wang, 1999).

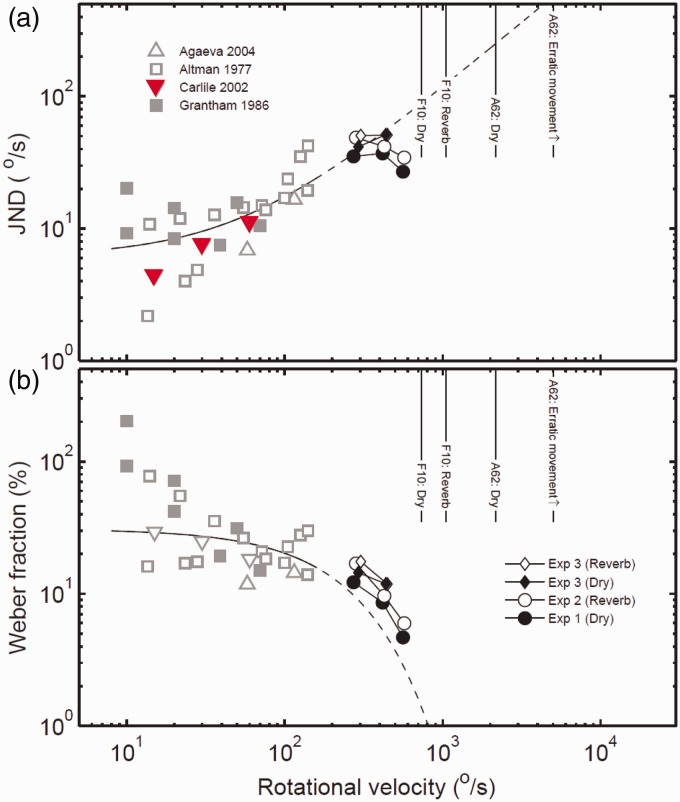

Figure 3.

(a) JNDs and (b) Weber fractions for all studies to date examining velocity perception (Agaeva, 2004; Altman & Viskov, 1977; Carlile & Best, 2002; Frissen et al., 2014; Grantham, 1986). Adapted from Frissen et al., 2014 with permission.

Taking a different approach, Grantham (1986) used stereo balancing to generate the illusion of a moving 500 Hz tone. Difference limens in this study were also very large and of the order of 10°/s (a Weber fraction of 1!) and may have reflected the relative brevity of the stimuli (<1 s). He did note, however, that threshold discrimination may have been on the basis of the difference in the distance traveled between the reference and the test stimuli and calculated this threshold as between 4° and 10°. As discussed earlier, the discrimination between two stimuli with different velocities could rely on difference in the distance traveled for fixed duration stimuli or in the case of a fixed distance, the relative duration of the stimulus.
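The confound between velocity, distance, and duration described above follows directly from distance = velocity × duration. The sketch below makes that arithmetic explicit; the function name and the example values (a 10°/s reference, a 20°/s test, a 0.5 s duration, and a 10° arc) are illustrative assumptions and are not taken from any of the cited studies.

```python
def confounding_cue(v_ref, v_test, duration=None, distance=None):
    """Secondary cue difference that accompanies a velocity difference.

    Velocities are in deg/s, duration in s, distance in deg.
    Exactly one of `duration` or `distance` must be given: it is the
    quantity held fixed across the reference and test stimuli.
    """
    if (duration is None) == (distance is None):
        raise ValueError("fix exactly one of duration or distance")
    if duration is not None:
        # Fixed duration: the faster stimulus sweeps a longer arc.
        return {"distance_difference_deg": (v_test - v_ref) * duration}
    # Fixed distance: the faster stimulus lasts for a shorter time.
    return {"duration_difference_s": distance / v_ref - distance / v_test}


# Illustrative values only (hypothetical, not from the cited experiments).
fixed_duration = confounding_cue(10.0, 20.0, duration=0.5)
fixed_distance = confounding_cue(10.0, 20.0, distance=10.0)
print(fixed_duration)
print(fixed_distance)
```

Unless one of these secondary cues is randomized, a listener could in principle perform a nominal velocity discrimination using the arc or the duration difference alone.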

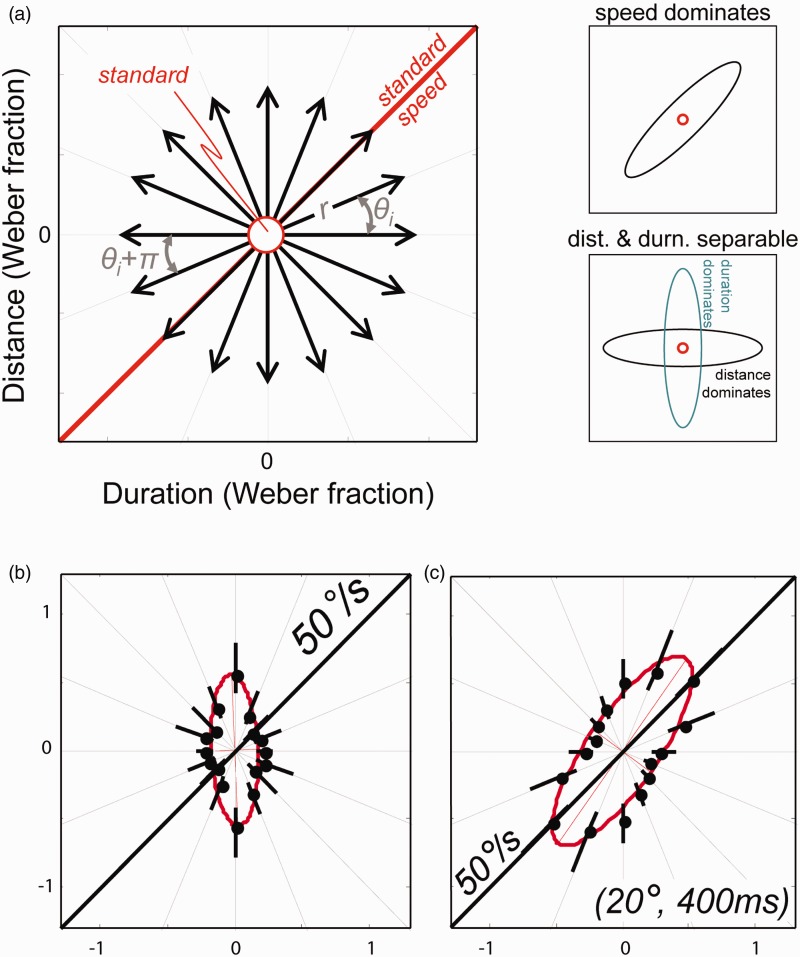

We tackled this problem in our own laboratory in two different experiments. In the first, we randomized the duration and distance (i.e., the arc of movement) of the stimuli, making these cues uninformative (Carlile & Best, 2002). In that experiment, JNDs increased with increasing velocity with median Weber fractions of 0.37, 0.3, and 0.24 for reference velocities of 15°/s, 30°/s, and 60°/s, respectively. This indicated that subjects were sensitive to velocity per se but curiously and possibly counterintuitively, the Weber fractions decreased with increasing velocity. When distance cues were made available by using constant duration stimuli, thresholds improved significantly in a manner that was related to the magnitude of the distance cue. In that study, the subject’s attention was drawn to stimulus velocity by the nature of the 2AFC task so in a second, more recent study (Freeman et al., 2014) we used a 3AFC odd-ball task and measured discrimination contours for stimuli lying in the distance-duration plane (see Figure 4). The odd-ball task will identify the cues being used in the discrimination task without biasing the observer to focus on any particular cue. The discrimination contour method produces a set of motion thresholds from the manipulation of the distance, time, and speed cues. When thresholds are plotted on the distance-duration plane, the orientation of the contour should be along the dimension which is most informative: that is, when the dimension which identifies the difference between the standards and the odd-ball is equivalent, this should result in a larger threshold. For instance, on a distance-duration plane, if velocity sensitivity was dependent on velocity detectors alone, then the contour orientation should be on the diagonal, where velocity and distance covary. On the other hand, if the system relies more on difference of duration, then the contours should orientate along that dimension. 
Without roving the duration and distance (so that these cues remained informative), the detection of the odd-ball was dependent primarily on duration and to a lesser extent on distance. When the stimuli were randomly roved (as in the Carlile & Best, 2002, study), thresholds were higher and contours were orientated along the diagonal velocity plane. This provided very strong evidence that the auditory system relies on duration and distance over speed; however, when these cues are unavailable or unreliable (as is the case when distance and duration were randomized), the auditory system used the velocity cues but was much less sensitive than when distance and duration cues were available. The reduction in Weber fraction with increasing velocity was also evident over the range 12.5°/s to 200°/s in that study.
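The pattern of a rising JND alongside a falling Weber fraction can be checked with the median values quoted above for Carlile and Best (2002). This is a minimal sketch that assumes the usual definition of the Weber fraction as JND divided by reference velocity; the variable and function names are illustrative.

```python
# Median Weber fractions quoted in the text for Carlile & Best (2002),
# keyed by reference velocity in deg/s.
weber_by_reference = {15.0: 0.37, 30.0: 0.30, 60.0: 0.24}


def jnd_from_weber(reference_velocity, weber_fraction):
    """JND in deg/s implied by a Weber fraction (JND / reference)."""
    return weber_fraction * reference_velocity


jnds = {v: jnd_from_weber(v, w) for v, w in weber_by_reference.items()}
for v in sorted(jnds):
    print(f"reference {v:5.1f} deg/s  "
          f"Weber {weber_by_reference[v]:.2f}  JND {jnds[v]:.2f} deg/s")
```

The implied JNDs grow with the reference velocity even though each Weber fraction is smaller than the one before it.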

Figure 4.

(a) Motion discrimination contours, defined in the duration (x axis) – distance (y axis) plane. Sensitivity thresholds were measured along the orientations θi using a three-interval oddity task consisting of two identical standard stimuli and one test stimulus, presented in a random order (see Freeman et al., 2014). When the perception of speed dominates performance, the resultant ellipse will be oriented along θ = 45° (top right). If duration and distance are separable and individually dominate performance, the ellipses will be aligned along the cardinal axes (bottom right). (b) Motion discrimination contour for a single naïve subject for speed = 50°/s, duration = 400 ms. In this task, the stimuli contained veridical distance and duration cues without additive noise. If auditory motion were encoded by specialized velocity detectors, we would expect a resultant ellipse along 45°. Instead, the results suggest that subjects were most sensitive to the duration cues. (c) Same as (b) but noise was added to the distance and duration cues in the stimuli, rendering them less salient. The results showed a discrimination contour with an ellipse along 45°, suggesting that subjects were sensitive to speed when distance and duration cues were made uninformative.

In another quite recent study, Frissen, Féron, and Guastavino (2014) have reported that velocity discrimination persists up to 720°/s. In that study, spectral information at lower frequencies produced better JNDs for velocities from 288°/s to 576°/s and the Weber fractions also reduced over that range (see Figure 4, Frissen et al., 2014). Most interestingly, velocity sensitivity was also shown to be unaffected by relatively high levels of reverberation—in contrast to the deleterious effects of reverberation for static localization accuracy (e.g., Devore, Ihlefeld, Hancock, Shinn-Cunningham, & Delgutte, 2009).

Despite the wide range of paradigms (dichotic stimulation, stereo-balance (500 Hz), virtual space presentation, discrete speaker switching) the data present a quite coherent picture of sensitivity across a wide range of velocities. Note that the solid lines and (interpolated) dotted lines indicate that while the JND increases with velocity the Weber fraction actually decreases. (Figure 4 adapted from Frissen et al., 2014).

The Relationship Between Velocity Perception and MAMA

In a very recent experiment in our laboratory, we have focused on the relationship between the perception of velocity and the MAMA (Locke, Leung, & Carlile, 2015). Recall that the MAMA represents the threshold angle that a source needs to travel before it is perceived as moving. If the perception of velocity is dependent on the MAMA, then it is a reasonable prediction that if the velocity of a source changes during its motion, then the threshold arc of motion to detect a change in velocity will be the MAMA for the new velocity. In a two-interval velocity-discrimination experiment with large step changes in velocity (×2 to ×4), Leung, Locke, and Carlile (2014) reported that the threshold arcs were 2 to 5 times larger than would have been predicted by the MAMA. Moreover, the threshold angles required to discriminate the velocity in the second interval were significantly influenced by the velocity in the first, where small changes were associated with larger thresholds (Locke et al., 2015). The velocity JND for a step change in the velocity was also reported to be many times larger than that previously reported for a discontinuous comparison of velocity (Carlile & Best, 2002). Taken together, these results indicate that the recent history of a temporally continuous or a spatially continuous source plays an important role in the perception of the velocity of the source (Locke et al., 2015; see in particular Senna, Parise, & Ernst, 2015).

These results are far more consistent with the idea of a leaky integrator process underlying velocity perception than with a “snap shot” model of motion perception (e.g., Middlebrooks & Green, 1991). For instance, when the velocity changes instantaneously, the integrator contains mainly previous velocity information and it takes time for a new mean output to settle. As time progresses, the integrator begins to fill up with the new velocity information. If the new velocity is very different, then the mean output of the detector will change sooner than if there is a smaller change: that is, the process will have a lower threshold for larger changes. Short intervals of silence between stimuli will affect the integrator but do not provide a complete reset. On the other hand, large changes in spatial location (as in Carlile & Best, 2002) do seem to have the capacity to reset the process underlying velocity perception, possibly because difference in location is a strong cue to a discontinuous or new target. Such a model is also consistent with the role of onset asynchrony in generating the perception of apparent motion (Perrott & Strybel, 1997), the dependence of auditory saltation on interstimulus interval (Phillips & Hall, 2001), and the finding that accelerating and decelerating sound sources result in detection thresholds that are different from the MAMA for the equivalent average velocity (Perrott et al., 1993).
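The qualitative behavior of such an integrator can be illustrated with a first-order leaky integrator driven by a step change in velocity. This is only a toy sketch: the time constant, the fixed detection criterion, and the time step are assumptions chosen for illustration, and nothing here is fitted to the data of the studies cited above.

```python
def time_to_detect(v_old, v_new, tau=0.3, criterion=5.0, dt=0.001, t_max=5.0):
    """Time (s) for the integrator output to move `criterion` deg/s from v_old.

    tau (s), criterion (deg/s), dt (s) and t_max (s) are illustrative
    assumptions. Returns None if the criterion is not reached by t_max.
    """
    y = v_old  # the integrator has settled on the old velocity
    steps = int(round(t_max / dt))
    for n in range(1, steps + 1):
        y += (dt / tau) * (v_new - y)  # output leaks toward the new input
        if abs(y - v_old) >= criterion:
            return n * dt
    return None


small_step = time_to_detect(30.0, 40.0)   # 10 deg/s change
large_step = time_to_detect(30.0, 90.0)   # 60 deg/s change
sub_criterion = time_to_detect(30.0, 33.0)  # change smaller than criterion
print(small_step, large_step, sub_criterion)
```

Under these assumptions the larger step reaches the criterion sooner than the smaller one, and a change smaller than the criterion is never detected, which mirrors the verbal account of lower thresholds for larger changes.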

The Auditory Motion After effect

Using the well-known dictum “if you can adapt it, it’s there” (Mollon, 1974), demonstrations of an auditory motion after effect (AMAE), analogous to the visual motion after effect or “waterfall effect,” have been used as evidence that the auditory system employs motion detectors similar to the first order motion detectors in vision (Freeman et al., 2014). Headphone presentation of a tone with dynamic variation in the inter-aural phase and a complementary ramping of ILD results in the perception of a source moving between the ears. Repetitive exposure to such a tone at 500 Hz (the adapting stimulus) was reported to produce apparent motion of a stationary source (the probe stimulus at the same frequency) in the direction opposite to the adapting stimulus but only when moving at 200°/s and not for a 2 kHz tone at any velocity up to 200°/s (Grantham & Wightman, 1979). A similar finding was reported for 500 Hz low-pass noise from a source rotated around the listener (Grantham, 1989), but only when the adaptor and probe were spectrally matched. In contrast, Ehrenstein (1984; reported in Grantham, 1998) used narrowband (0.5-octave) noise adaptors moving in the free field, or simulated motion using dynamically varying ILD or ITDs, and found no AMAE. Rather, subsequent localization of stationary stimuli was displaced in the direction opposite that of the adaptor, but only for stimuli around 6 kHz with varying ITD.

In their well-regarded review of auditory spatial perception, Middlebrooks and Green (1991) observed that the auditory after effects were somewhat smaller than those observed in vision and, together with the contradictory previous findings (summarized earlier), suggested that there was little evidence to support the notion of dedicated motion processors in the auditory system. More recent work has demonstrated, however, much more robust auditory after effects. Dong, Swindale, Zakarauskas, and Hayward (2000) measured AMAEs using broad-band stimuli rotated at relatively slower velocities (20°/s or slower) as a percentage of the adapting velocity and found them comparable to those demonstrated in the visual system at similar velocities (see also Carlile, Kurilowich, & Leung, 1998). Using units of sensitivity (JNDs), Deas, Roach, and McGraw (2008) demonstrated comparable auditory and visual motion after effects in the same individuals using stimuli with similar movement dynamics. Of some interest, they have also shown that shifts of up to two JNDs can occur in auditory localization following adaptation to moving visual stimuli!

As with the MAMA, it appears that spectrally rich stimuli with a full set of veridical cues (actual free field; Dong et al., 2000, or individualized virtual auditory space (VAS); Carlile et al., 1998; Kurilowich, 2008) produce the most robust AMAEs. In addition to the latter two studies, Grantham (1998) used non-individualized VAS and collectively these data indicate that spectral match and spatial overlap between probes and adaptors produce a strong AMAE that is invariant with azimuth location (unlike the MAA). The duration of the adaptation period also appears to play a role in the magnitude of the AMAE (as it does in vision), with the early studies reporting weaker AMAE using no preadaptation (Grantham & Wightman, 1979) or a relatively short period (30 seconds; Grantham, 1989; Grantham, 1992, 1998). Studies employing longer periods of adaptation (2 minutes; Dong et al., 2000, 3 minutes; Carlile et al., 1998; Kurilowich, 2008), with “top-up” exposure to the adaptor between probe stimuli, all showed much larger and more robust AMAE. Kurilowich (2008) systematically degraded the adaptor presented in individualized VAS by holding the ILD or ITD constant and found (a) that the AMAE was smaller when the cues were conflicting (e.g., monaural spectral cues and ILD change systematically but ITD is held at 0) and (b) that ILD was a much stronger driver of the AMAE than ITD. One study examined the early time course of the AMAE (Neelon & Jenison, 2004) and reported that adaptor periods as brief as 1 s produced an AMAE that increased in strength for adaptors increasing in duration up to 5 s. In the former case, probe stimuli needed to be presented immediately after the adaptor, while in the latter case, the AMAE was strongly evident up to 1.7 s later, indicating that more adaptation produces longer lasting AMAE. 
These shorter adaptation periods only produced an AMAE for sounds moving toward the midline, and this was argued to reflect the short-term dynamics of the neural response reported in animal models.

All of the studies above have used or simulated motion that rotated around the listener (as when the head rotates). However, a significant fraction of moving sounds will move linearly and largely tangentially with the head. Such motion also produces a variation in distance and angular velocity; however, AMAE produced by such simulated motion was not different from that produced by a comparable rotating stimulus at 76°/s (Neelon & Jenison, 2003). The effect of motion in depth to produce AMAE has been examined in a number of recent studies from Andreeva and colleagues (Andreeva & Malinina, 2010, 2011; Andreeva & Nikolaeva, 2013; Malinina, 2014a, 2014b; Malinina & Andreeva, 2013). This group has simulated the approach or recession of a moving target by level balancing between two loudspeakers directly ahead at 1 m and 4.5 m. In general, 5 s of adaptation using a broad-band stimulus produces an AMAE in the direction opposite to that of the adaptor (approaching or receding), which was more effective for “velocities” of 3.4 m/s or slower and was stronger when the adaptor and probe were matched in distance and spectral content. Control experiments indicated that some of the perceptual biases might be accounted for by loudness adaptation effects (see also Ehrenstein, 1994) but that this was an inadequate explanation of the overall effect.

Auditory Representational Momentum

Early studies of the MAMA also noted that the perception of the locations of the end points of the motion was often displaced in the direction of the motion (Perrott & Musicant, 1977). The term “representational momentum (RM)” was coined in the visual research literature to describe a similar observation for a moving visual stimulus, where a significant amount of work has been done examining both the drivers and potential mechanisms (see, e.g., Hubbard, 2005; Hubbard, Nijhawan, & Khurana, 2010). The link between target velocity and the magnitude of the RM is well established in the visual domain; however, in the auditory domain, there are only a handful of studies, and there is significant contradiction in the results. The initial auditory observations report RM over a range from −11° to 25° (Perrott & Musicant, 1977) and an influence of target velocity (600°/s to 90°/s) for the onset but not for the offset location. Using a pointing task, Getzmann, Lewald, and Guski (2004) report displacement in the direction of motion of up to 7° to 8° and an inverse influence of velocity for the quite slow speeds of 16°/s and 8°/s. Schmiedchen, Freigang, Rübsamen, and Richter (2013) demonstrated comparable RM to visual and auditory targets in the same subjects over a wide range of velocities (160°/s to 13°/s), with an effect of velocity only for targets moving from the periphery to the midline and not for motion away from the midline. The large differences in the velocities tested between studies may explain some of the differences in the results, as might the methods of measuring the effect (being perceptual estimates in the first and pointing in the latter; see also Leung, Alais, & Carlile, 2008).

In a recent experiment in our laboratory, we examined auditory RM using horizontally moving (25°/s to 100°/s) broad-band stimuli presented in individualized VAS, with the eyes fixed to one of three fixation points and a perceptual task to measure the perceived end points of the motion (Feinkohl, Locke, Leung, & Carlile, 2014). We found a significant effect of velocity (RM of 2.3° at 100°/s cf. 0.9° at 25°/s) with endpoint displacement in the direction of the motion and comparable to that reported for visual RM. We also found a significant and substantial additive effect of fixation, which is consistent with previous studies of the effects of eye position on the localization of stationary targets (e.g., Cui et al., 2010). In the previous studies of auditory RM, eye position was not controlled for and may have resulted in the substantial variation in the results between studies. Although the magnitude of the RM is relatively small over quite a wide range of velocities, it does underscore the influence of the recent history of a moving stimulus in its perception (see also section The Relationship Between Velocity Perception and MAMA).

Auditory Self-Motion

Head Movements

Apart from source motion, self-motion due to body and head movements can also lead to similar changes in acoustical cues. Such cue changes are fundamentally limited by our range of motion. Numerous studies have examined the biomechanics and neural feedback loop underlying head movements (Peterson, 2004; Zangemeister, Stark, Meienberg, & Waite, 1982). For a normal adult, the head moves commonly along the horizon, with a maximal extent of approximately 70° off the midline in either direction. Neck muscle spindles are highly innervated with proprioceptive inputs, allowing constant feedback of positional information and control. However, the head-neck musculoskeletal system is over-complete, in that the number of muscles available to control the articulations is greater than the minimum required for activation. This contributes to the large variations of velocity and acceleration profile, not only between individuals but also within each subject, since it is possible that different muscles are activated for the same task at different times. Some common scenarios are ballistic head movements from a startle reflex, an auditory “search light” behavior to refine the location of a source stimulus, or gaze orienting and tracking to bring and maintain an object of interest into foveal space (Brimijoin et al., 2013; Vliegen, Van Grootel, & Van Opstal, 2004; Wightman & Kistler, 1994). Each of these situations elicits different temporal, velocity, and acceleration profiles, thus posing an additional level of complexity in experiments where head movements need to be controlled.

Head movements are commonly used to refine localization accuracy, especially when binaural cues become ambiguous along a cone of confusion. In these situations, head movements by listeners can help resolve such ambiguities, as hypothesized by Wallach (1940). Wightman and Kistler (1999) further showed a significant reduction of front-back errors in the localization of static sound sources when subjects were allowed to move their heads. They also examined the opposite: whether source motion, either independently or via subject control, had the same effect when head movements were restricted. Here they found that only when source motion was controlled by the subjects did front-back errors disappear. Recent work has confirmed that even small head rotations can facilitate front-back localization in stimuli with weak spectral information (Macpherson, 2011; Martens, Cabrera, & Kim, 2011). This suggests that head movements were supplementing the reduced spectral cues. Results from Brimijoin and Akeroyd (2012) and Macpherson (2011) also suggest that as the amount of spectral information increased, the stability of location percept from the Wallach cue decreased. Using simulated motion that contained binaural cues corresponding to a stationary source in the hemisphere opposite to the motion, a low-pass sound (500 Hz) was perceived as static. However, as the stimulus spectral content increased, it came into increasing conflict with the self-motion cues, and subjects reported an unstable location percept that flickered between the front and back, reaching a guess rate of ∼50% with a low-pass cut-off greater than 8 kHz (Brimijoin & Akeroyd, 2012). Brimijoin and Akeroyd (2014) also showed that the moving MAA from self-motion (subjects moving their heads) was 1° to 2° smaller than if an external sound source moved along the same trajectory, even though the changes in acoustical cues would have been identical relative to the head. 
These results are consistent with the idea, discussed above, that efferent feedback from the head motor commands or vestibular information may play a role in auditory perception.

Evidence of such sensorimotor interaction was further demonstrated by Leung et al. (2008), where ballistic head movements along the horizon resulted in a compression of auditory space toward the target position, with maximal distortion occurring 50 ms prior to onset of motion, similar to the presaccadic interval in vision. In the visual system, this ensures a smooth temporal representation of space, despite the discontinuities and rapid changes in gaze. Such compression of auditory space was also observed by Teramoto, Sakamoto, Furune, Gyoba, and Suzuki (2012), during forward body motion using a motorized wheelchair moving at 0.45 to 1.35 m/s. Interestingly, as the subjects were seated and the wheelchair was remotely controlled, only vestibular feedback cues were present, suggesting that auditory spatial shifts can also be affected by vestibular sensory afferents. In another study, Cooper, Carlile, and Alais (2008) examined localization accuracy during rapid head turns using very short broad-band stimuli, in effect causing a “smearing” of the acoustical cues. There, they found a systematic mislocalization of lateral angles that was maximal in the rear. Critically, such mislocalizations only occurred when the sounds were presented in the later part of the head turn. This suggests that a “multiple look” strategy was being employed by the participants in localizing a target during rapid head movements. Interestingly, the smallest localization error occurred when subjects turned toward the point of attentional focus, strongly suggesting that attention could modulate the effect of cue smearing.

A methodological consideration when conducting motion experiments is whether the stimuli should be delivered in free field or virtually over headphones. Virtual sound delivery systems are not limited by speaker placements, since spatial interpolation of veridical head-related transfer functions (HRTFs) can render realistic motion in any trajectory. However, most HRTFs are recorded in anechoic environments, and the lack of reverberation cues or room acoustics may reduce the sense of spaciousness, so subjects may perceive the moving stimuli as closer to their heads. Furthermore, when self-motion is integrated, excessive delays in updating the next spatial location during playback can substantially reduce the veracity of the resultant VAS. As examined in Brungart, Kordik, and Simpson (2006), the latency from head-tracking feedback can affect localization accuracy. Subjects were able to detect latencies >60 ms, and a delay >73 ms led to an increase in mislocalization of static targets. It was recommended that a delay of less than 30 ms be maintained in fast-moving and complex listening situations.
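The latency figures quoted above can be turned into a simple budget check for a head-tracked VAS system. This is a hedged sketch: the three thresholds are the values quoted in the text from Brungart, Kordik, and Simpson (2006), whereas the split of total latency into tracker, rendering, and audio-buffer components, the function names, and the example values are assumptions made for illustration.

```python
# Thresholds (ms) quoted in the text from Brungart, Kordik, and Simpson (2006).
DETECTABLE_MS = 60.0
MISLOCALIZATION_MS = 73.0
RECOMMENDED_MS = 30.0


def buffer_latency_ms(buffer_frames, sample_rate_hz):
    """Delay contributed by one audio output buffer, in milliseconds."""
    return 1000.0 * buffer_frames / sample_rate_hz


def classify_latency(total_ms):
    """Label a total head-tracking-to-audio latency against the thresholds."""
    if total_ms > MISLOCALIZATION_MS:
        return "static mislocalization likely"
    if total_ms > DETECTABLE_MS:
        return "detectable"
    if total_ms < RECOMMENDED_MS:
        return "within recommended budget"
    return "above recommended budget"


# Hypothetical system: 8 ms tracker delay, 5 ms rendering, 512-frame buffer.
total = 8.0 + 5.0 + buffer_latency_ms(512, 44100)
label = classify_latency(total)
print(f"{total:.1f} ms: {label}")
```

A real system would need each component measured end to end, since operating-system and driver buffering often add delay that is not visible in the nominal buffer size.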

Studies described so far have examined auditory motion in either frame of reference—source or self movements. However, we also encounter situations where both our heads and sources are moving, such as when tracking a moving object and few studies have examined such complex acoustical–sensory feedback interactions.

Head Tracking

Head tracking of moving sounds by pointing the nose or orientating the face toward a moving sound source has not been examined extensively. Beitel (1999) studied the dynamics of auditory tracking in cats by recording their head motion when tracking a series of clicks emitted by a speaker rotating at 12°/s or 16°/s. In cats with sectioned optic nerves (to eliminate visual involvement), the animals reacted to moving sounds in two phases: (a) a rapid head-orienting response to localize the target followed by (b) a tracking response that consisted of a succession of stepwise movements involving cycles of overshoot-and-pause, which ensured the target was maintained around the midline. This response has a passing resemblance to that of visual pursuit of acoustical targets and is suggestive of a series of stepwise localization tasks. In a methodological study (Scarpaci, 2006), the head-tracking accuracy of auditory stimuli was examined in humans as a means to verify the accuracy of a real-time VAS rendering system. The subjects were asked to track a band-limited stimulus filtered with non-individualized HRTF that moved around the head in a pseudo-random manner. The time lag of the head tracker and the method of phase reconstruction were varied as independent variables, showing that the tracking error increased as a function of head-tracker delay.

These two studies provided insights into the mechanics of auditory motion tracking and highlighted various methodological requirements. Yet, many of the behavioral norms and biological constraints involved remain unknown. In a subsequent study by Leung, Wei, Burgess, and Carlile (2016), auditory head-tracking behavior was examined over a wide range of stimulus velocities in order to establish the basic underlying limits of the sensory feedback loop. Using a real-time virtual auditory playback system, subjects were asked to follow a moving stimulus by pointing their nose. The stimulus moved along the frontal horizon between ±50° azimuth with speeds ranging from 20°/s to 110°/s and was compared against a visual control condition where subjects were asked to track a similarly moving visual stimulus. Based on a root mean square error analysis averaging across the trajectory, head-eye-gaze tracking was substantially more accurate than auditory head tracking across all velocities. Not surprisingly, tracking accuracy was related to velocity, where at speeds >70°/s, auditory head-tracking error diverged significantly from visual tracking. One interpretation is that at the higher velocities, subjects were performing a ballistic style movement, with little positional feedback adjustments; while at the slower velocities, subjects were able to make use of the neck proprioceptive feedback information in the sensory–motor feedback loop.
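A root mean square error of the kind referred to above can be computed from paired samples of target and head azimuth. The sketch below is illustrative only: the 100 Hz sampling rate, the constant 0.2 s head lag, and the toy trajectory are assumptions and do not reproduce the analysis pipeline of the cited study.

```python
import math


def rms_error(target_deg, head_deg):
    """Root mean square difference between target and head azimuth (deg)."""
    if len(target_deg) != len(head_deg) or not target_deg:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(t - h) ** 2 for t, h in zip(target_deg, head_deg)]
    return math.sqrt(sum(squared) / len(squared))


# Toy trajectory: target sweeps from -50 deg at 20 deg/s, sampled at 100 Hz.
velocity = 20.0   # deg/s, lowest speed mentioned in the text
lag = 0.2         # s, hypothetical constant tracking lag
times = [i * 0.01 for i in range(500)]
target = [-50.0 + velocity * t for t in times]
head = [-50.0 + velocity * (t - lag) for t in times]

error = rms_error(target, head)
print(f"RMS tracking error: {error:.2f} deg")
```

For a constant-velocity target and a constant lag, the error equals velocity × lag at every sample, so in this toy case the same lag produces a proportionally larger RMS error at higher stimulus velocities.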

Neural Encoding of Auditory Motion

Animal Neurophysiology

Neurophysiological studies of various animals have examined motion sensitivity at successive nuclei along the central auditory pathway by quantifying the changes in spatial receptive fields (SRF) and response patterns of these neurons to moving stimuli. The assumption is that motion processing based on the snapshot hypothesis would only need location sensitive neurons, making motion sensitive neurons unnecessary and improbable (Wagner, Kautz, & Poganiatz, 1997). So far, no motion sensitive neurons have been found in the brainstem nuclei of medial superior olive and the lateral superior olive, one of the first major binaural integrative sites. In the midbrain, shifts in the SRF have been reported in bats, guinea pigs, and owls (Ingham, Hart, & McAlpine, 2001; McAlpine, Jiang, Shackleton, & Palmer, 2000; Wilson & O’Neill, 1998; Witten, Bergan, & Knudsen, 2006). Here, the neurons typically responded much more robustly to sounds entering their receptive fields, shifting the SRF opposite the direction of motion. Importantly, Witten et al. (2006) found that such shifts in the SRF can predict where the target will be in 100 ms and are scaled linearly with stimulus velocity. They further note that this is the approximate delay for an owl’s gaze response to a target.
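One simple reading of a receptive-field shift that predicts target position 100 ms ahead and scales linearly with velocity is that the shift equals velocity multiplied by the prediction horizon. The sketch below encodes only that reading; the 100 ms horizon is the value quoted above, while the function name and the example velocities are illustrative assumptions rather than values from the cited recordings.

```python
PREDICTION_HORIZON_S = 0.1  # the 100 ms horizon quoted in the text


def predictive_shift_deg(velocity_deg_s, horizon_s=PREDICTION_HORIZON_S):
    """SRF shift (deg) that would compensate for a fixed sensorimotor delay.

    Assumes a strictly linear relation between shift and stimulus velocity.
    """
    return velocity_deg_s * horizon_s


# Hypothetical stimulus velocities in deg/s.
shifts = {v: predictive_shift_deg(v) for v in (10.0, 20.0, 40.0)}
for v, s in shifts.items():
    print(f"{v:5.1f} deg/s -> shift of {s:.1f} deg")
```

Under this linear assumption, doubling the stimulus velocity doubles the shift, which is what would be required to cancel a fixed delay in the sensorimotor loop.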

These data and further computational modeling strongly suggest that auditory space maps are not static and that such shifts are behaviorally critical to compensate for delays in the sensorimotor feedback loop. No studies, however, have conclusively demonstrated the existence of neurons that are actually motion sensitive. While a number of early studies have reported a small number of neurons in the inferior colliculus of cats and gerbils to be selective to stimulus direction (Altman, 1968; Spitzer & Semple, 1993; Yin & Kuwada, 1983), McAlpine et al. (2000) demonstrated that these neurons were not directly sensitive to the motion cues per se but rather to their previous response to stimulation. In other words, instead of motion-specialized cells in a similar vein to that of the visual system, these were spatially tuned neurons that were adapted by the direction and rate of motion.

Higher level recordings have been made in monkeys, rats, and cats in the auditory cortex and the surrounding anterior ectosylvian cortex (Ahissar, Ahissar, Bergman, & Vaadia, 1992; Doan & Saunders, 1999; Firzlaff & Schuller, 2001; Poirier, Jiang, Lepore, & Guillemot, 1997; Stumpf, Toronchuk, & Cynader, 1992; Toronchuk, Stumpf, & Cynader, 1992). While directional sensitivity, shifts in SRF, and velocity sensitivities have been reported, the significant majority of neurons that responded to motion were also sensitive to static location and, as such, may not be “pure” motion detectors.

Stimulus differences could also play a role in the diversity of responses reported, and an important consideration in this regard is what actually constitutes an adequate auditory motion stimulus. Dichotic stimuli, such as those used in Witten et al. (2006) and Toronchuk et al. (1992), relied on changes in one parameter of auditory cues to elicit apparent movement, be it interaural phase or amplitude differences. Such sounds are not externalized in space, with a range of motion limited to between the ears. Even so, the neuronal responses were strongly dependent upon changes in the parameters of these stimuli, which Spitzer and Semple (1993) attributed to selective sensitivity to the dynamic phase information. Others, such as Poirier et al. (1997) and Ingham et al. (2001), used speaker arrays in free field for a richer set of acoustical cues and cautioned that the majority of responses were not directionally selective and that responses to stationary and moving sounds were similar.

Human Imaging and EEG

A number of studies have explored the cortical pathways involved in auditory motion processing in humans. Early work implicated the parietal cortex and superior temporal gyrus (specifically the planum temporale, PT) as regions of interest (Alink, Euler, Kriegeskorte, Singer, & Kohler, 2012; Baumgart, Gaschler-Markefski, Woldorff, Heinze, & Scheich, 1999; Ducommun et al., 2004; Griffiths et al., 1996; Krumbholz, Hewson-Stoate, & Schönwiesner, 2007; Lewald, Staedtgen, Sparing, & Meister, 2011; Poirier et al., 2005; Smith, Hsieh, Saberi, & Hickok, 2010; Smith, Okada, Saberi, & Hickok, 2004; Smith, Saberi, & Hickok, 2007; Warren, Zielinski, Green, Rauschecker, & Griffiths, 2002). Yet, it is unclear whether these structures are explicitly motion sensitive or rather simply sensitive to spatial changes. Smith et al. (2004, 2007) have in particular examined the PT and found no significant differences between moving and spatially varying but non-moving sounds. In a more recent study, Alink et al. (2012) used multivoxel pattern analysis to examine directional sensitivity of moving sounds and found that the left and right PT provided the most reliable activation pattern for detection, while a trend toward an increased activation pattern in both the left and right primary auditory cortex was also observed. Other studies explored the cortical dynamics in response to moving stimuli with EEG, examining mismatch negativity and classifying onset responses. There is strong evidence that there are significant differences in responses between moving and static sounds (Altman, Vaitulevich, Shestopalova, & Petropavlovskaia, 2010; Krumbholz et al., 2007; Shestopalova et al., 2012), yet again, such dynamics are not necessarily representative of explicit motion sensitivity but rather more general changes in spatial position (Getzmann & Lewald, 2012).

Numerous case studies have reported deficits in auditory motion perception after lesions. In a case report, Griffiths, Bates, et al. (1997) discussed a patient with a central pontine lesion who presented with difficulty in sound localization and movement detection. Using a 500 Hz phase-ramped stimulus presented over headphones, it was shown that the subject was unable to perceive sound movement, even when the phase change was equivalent to a 180° azimuthal displacement. Repeated testing using an interaural amplitude-modulated stimulus confirmed that the subject was significantly worse than untrained controls. Interestingly, the subject exhibited normal performance in detecting fixed interaural phase differences (static targets). Magnetic resonance imaging revealed that the lesion involved the trapezoid body but spared the midbrain, suggesting an early delineation of spatial and temporal processing in the auditory processing chain. In another case study, Griffiths, Rees, et al. (1997) reported a subject with spatial and temporal processing deficits, including a deficit in perceiving apparent sound source movement, after a right hemispheric infarction affecting the right temporal lobe and insula.

Subsequently, Lewald, Peters, Corballis, and Hausmann (2009) examined the effects of hemispherectomy versus temporal lobectomy in static localization and motion perception and found evidence supporting different processing pathways between the two tasks. Here, a subject with right-sided temporal lobectomy was able to perceive auditory motion with the same precision as controls but had significantly worse performance in static localization tasks. However, two subjects with hemispherectomy exhibited selective motion deafness, “akineatocousis,” similar to that described in Griffiths, Rees, et al. (1997), while static localization abilities were normal.
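To make the phase-ramped headphone stimulus mentioned in the pontine lesion case report more concrete, the following is a minimal sketch of how such a dichotic tone might be synthesized. Only the 500 Hz carrier comes from the text; the function names, the duration, the sample rate, and the ramp limits of -90° to +90° are illustrative assumptions and are not the parameters of the original study.

```python
import math


def phase_ramped_tone(freq_hz=500.0, duration_s=1.0,
                      start_ipd_deg=-90.0, end_ipd_deg=90.0,
                      sample_rate=44100):
    """Return (left, right) sample lists for a dichotic tone.

    The interaural phase difference (IPD) ramps linearly from
    start_ipd_deg to end_ipd_deg over the stimulus duration.
    All default values except freq_hz are hypothetical.
    """
    n = int(round(duration_s * sample_rate))
    if n < 2:
        raise ValueError("need at least two samples to define a ramp")
    left, right = [], []
    for i in range(n):
        t = i / sample_rate
        frac = i / (n - 1)  # 0.0 at onset, 1.0 at offset
        ipd_deg = start_ipd_deg + frac * (end_ipd_deg - start_ipd_deg)
        carrier = 2.0 * math.pi * freq_hz * t
        left.append(math.sin(carrier))                           # reference ear
        right.append(math.sin(carrier + math.radians(ipd_deg)))  # phase-shifted ear
    return left, right


def ipd_to_itd_us(ipd_deg, freq_hz):
    """Convert an IPD in degrees to the equivalent time delay in microseconds."""
    return (ipd_deg / 360.0) / freq_hz * 1e6


left, right = phase_ramped_tone()
```

Setting `start_ipd_deg` equal to `end_ipd_deg` yields a fixed interaural phase difference, which corresponds to the static-target condition in which the patient performed normally. At 500 Hz, a 90° phase difference is equivalent to an interaural delay of 500 µs.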

Spatial Motion Perception and Hearing Loss

To date, no experiments have directly addressed the impact of hearing loss on spatial motion perception. A number of studies, however, have examined localization and discrimination performance in a static auditory space for the aging population and for patients with hearing loss (Abel, Giguere, Consoli, & Papsin, 2000; Dobreva, O’Neill, & Paige, 2011; Freigang, Schmiedchen, Nitsche, & Rübsamen, 2014; Kerber & Seeber, 2012; Seeber, 2002; for a recent review see Akeroyd, 2014). Performance is generally poorer in people with hearing impairment. This is likely due, first, to the overall decrease in the availability of location cues (e.g., reduced sensitivity to the mid- to high-frequency information important for both monaural and interaural level difference cues) and, second, to a decrease in the fidelity of the residual cues produced by degradation of timing information (for a recent review see Moon & Hong, 2014) and reduced frequency selectivity (e.g., Florentine, Buus, Scharf, & Zwicker, 1980). Given the importance of these cues in motion perception discussed above, it is likely that motion sensitivity will be similarly affected in individuals with hearing loss.

Concluding Remarks

The early studies used a wide range of different sound stimuli and means for producing or simulating motion. The survey of those data presented here, however, identifies the general findings that the MAMA is strongly dependent on velocity and signal bandwidth, increasing with velocity and decreasing with bandwidth. Likewise, other work indicates that a combination of veridical cues is a far stronger driver of physiological responses and motion aftereffects than the manipulation of a single cue to spatial location. Another common theme is that velocity perception, particularly the perception of changes in velocity, is likely to be affected by a prior state and is perhaps better characterized by a leaky integrator process than by a more static “snapshot” model of changes in location.
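The contrast between a leaky integrator and a "snapshot" account can be illustrated with a minimal sketch. This is not a model taken from any of the studies reviewed; the function names, the time step, and the time constant `tau` are hypothetical values chosen only to show how a leaky integrator makes the current velocity estimate depend on the prior state, whereas a snapshot estimate depends only on two location samples.

```python
def leaky_velocity_estimate(velocities, dt, tau, initial=0.0):
    """First-order leaky integrator (discrete low-pass filter).

    Each output is pulled toward the current input velocity by a
    fraction alpha, so earlier inputs decay with time constant tau.
    dt and tau are in seconds; both values are illustrative.
    """
    alpha = dt / (tau + dt)  # backward-Euler update weight
    estimate = initial
    out = []
    for v in velocities:
        estimate += alpha * (v - estimate)
        out.append(estimate)
    return out


def snapshot_velocity(positions, dt):
    """Snapshot estimate: displacement between the first and last
    location samples divided by the elapsed time."""
    return (positions[-1] - positions[0]) / (dt * (len(positions) - 1))


# Hypothetical source velocity: 30 deg/s for 1 s, then a step to 60 deg/s.
dt = 0.01
tau = 0.1
true_velocity = [30.0] * 100 + [60.0] * 100
perceived = leaky_velocity_estimate(true_velocity, dt, tau, initial=30.0)
```

Immediately after the step, the leaky estimate remains close to the earlier 30 deg/s and only gradually approaches 60 deg/s, which is the kind of prior-state dependence described above. A snapshot estimate computed over a window that lies entirely after the step would report the new velocity with no such lag.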

When considering the complexity of the self-motion and source-motion deconvolution task, the picture emerging is one of a process involving a dynamic interaction of various sources of sensory and motor information, with all the likely attendant loop delays, reliability, and noise issues. The more recent work suggests that a wide range of mechanisms are likely to play a role in this ecologically important perceptual process, including prior state, prediction, dynamic updating of perceptual representation, and attentional modulation of processing characteristics. A range of sophisticated technical approaches is now emerging that couple virtual space stimulation techniques with fast and accurate kinematic tracking, providing powerful new platforms for systematically examining these processes.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors received no financial support for the research, authorship, and/or publication of this article.

References

- Abel S. M., Giguere C., Consoli A., Papsin B. C. (2000) The effect of aging on horizontal plane sound localization. The Journal of the Acoustical Society of America 108(2): 743–752. doi: 10.1121/1.429607.

- Agaeva M. (2004) Velocity discrimination of auditory image moving in vertical plane. Hearing Research 198(1–2): 1–9. doi:10.1016/j.heares.2004.07.007.

- Ahissar M. V., Ahissar E., Bergman H., Vaadia E. (1992) Encoding of sound-source location and movement: Activity of single neurons and interactions between adjacent neurons in the monkey auditory cortex. Journal of Neurophysiology 67(1): 203–215.

- Akeroyd M. A. (2014) An overview of the major phenomena of the localization of sound sources by normal-hearing, hearing-impaired, and aided listeners. Trends in Hearing 18 pii:2331216514560442.

- Alink A., Euler F., Kriegeskorte N., Singer W., Kohler A. (2012) Auditory motion direction encoding in auditory cortex and high-level visual cortex. Human Brain Mapping 33(4): 969–978.

- Altman J. A. (1968) Are there neurons detecting direction of sound source motion? Experimental Neurology 22: 13–25.

- Altman J. A., Romanov V. P. (1988) Psychophysical characteristics of the auditory image movement perception during dichotic stimulation. International Journal of Neuroscience 38(3–4): 369–379.

- Altman J. A., Vaitulevich S. P., Shestopalova L. B., Petropavlovskaia E. A. (2010) How does mismatch negativity reflect auditory motion? Hearing Research 268(1–2): 194–201. doi:10.1016/j.heares.2010.06.001.

- Altman J. A., Viskov O. V. (1977) Discrimination of perceived movement velocity for fused auditory image in dichotic stimulation. The Journal of the Acoustical Society of America 61(3): 816–819.

- Andreeva I., Malinina E. (2010) Auditory motion aftereffects of approaching and withdrawing sound sources. Human Physiology 36(3): 290–294.

- Andreeva I., Malinina E. (2011) The auditory aftereffects of radial sound source motion with different velocities. Human Physiology 37(1): 66–74.

- Andreeva I., Nikolaeva A. (2013) Auditory motion aftereffects of low- and high-frequency sound stimuli. Human Physiology 39(4): 450–453.

- Bach D. R., Neuhoff J. G., Perrig W., Seifritz E. (2009) Looming sounds as warning signals: The function of motion cues. International Journal of Psychophysiology 74(1): 28–33.

- Baumgart F., Gaschler-Markefski B., Woldorff M. G., Heinze H.-J., Scheich H. (1999) A movement-sensitive area in auditory cortex. Nature 400(6746): 724–726.

- Beitel R. E. (1999) Acoustic pursuit of invisible moving targets by cats. The Journal of the Acoustical Society of America 105(6): 3449–3453.

- Bernstein L. R., Trahiotis C. (2009) How sensitivity to ongoing interaural temporal disparities is affected by manipulations of temporal features of the envelopes of high-frequency stimuli. The Journal of the Acoustical Society of America 125(5): 3234–3242. doi:10.1121/1.3101454.

- Blauert J. (1997) Spatial hearing: The psychophysics of human sound localization, Cambridge, MA: MIT Press.

- Borst A. (2000) Models of motion detection. Nature Neuroscience 3: 1168.

- Brimijoin W. O., Akeroyd M. A. (2012) The role of head movements and signal spectrum in an auditory front/back illusion. i-Perception 3(3): 179–182. doi:10.1068/i7173sas.

- Brimijoin W. O., Akeroyd M. A. (2014) The moving minimum audible angle is smaller during self motion than during source motion. Frontiers in Neuroscience 8: 273 doi:10.3389/fnins.2014.00273.

- Boucher L., Lee A., Cohen Y. E., Hughes H. C. (2004) Ocular tracking as a measure of auditory motion perception. Journal of Physiology-Paris 98(1–3): 235–248. doi:10.1016/j.jphysparis.2004.03.010.

- Brimijoin W. O., Boyd A. W., Akeroyd M. A. (2013) The contribution of head movement to the externalization and internalization of sounds. PLoS One 8(12): e83068 doi:10.1371/journal.pone.0083068.

- Brungart D., Kordik A. J., Simpson B. D. (2006) Effects of headtracker latency in virtual audio displays. Journal of the Audio Engineering Society 54(1/2): 32–44.

- Butler R. A., Humanski R. A., Musicant A. D. (1990) Binaural and monaural localization of sound in two-dimensional space. Perception 19: 241–256.

- Carlile S. (1996) The physical and psychophysical basis of sound localization. In: Carlile S. (ed.) Virtual auditory space: Generation and applications. (Ch 2), Austin, TX: Landes.

- Carlile S. (2014) The plastic ear and perceptual relearning in auditory spatial perception. Frontiers in Neuroscience 8: 237 doi:10.3389/fnins.2014.00237.

- Carlile S., Best V. (2002) Discrimination of sound source velocity by human listeners. The Journal of the Acoustical Society of America 111(2): 1026–1035.

- Carlile S., Kurilowich R., Leung J. (1998) An auditory motion aftereffect demonstrated using broadband noise presented in virtual auditory space. Paper presented at the Association for Research in Otolaryngology, St Petersburg, Florida.

- Carlile S., Leong P., Hyams S. (1997) The nature and distribution of errors in sound localization by human listeners. Hearing Research 114(1–2): 179–196. doi:10.1016/s0378-5955(97)00161-5.

- Carlile S., Martin R., McAnally K. (2005) Spectral information in sound localisation. In: Irvine D. R. F., Malmierca M. (eds) Auditory spectral processing vol. 70, San Diego, CA: Elsevier, pp. 399–434.

- Carlile S., Pralong D. (1994) The location-dependent nature of perceptually salient features of the human head-related transfer function. The Journal of the Acoustical Society of America 95(6): 3445–3459.

- Cooper J., Carlile S., Alais D. (2008) Distortions of auditory space during rapid head turns. Experimental Brain Research 191(2): 209–219.

- Cui Q. N., Razavi B., Neill W. E., Paige G. D. (2010) Perception of auditory, visual, and egocentric spatial alignment adapts differently to changes in eye position. Journal of Neurophysiology 103(2): 1020–1035. doi:10.1152/jn.00500.2009.

- Deas R. W., Roach N. W., McGraw P. V. (2008) Distortions of perceived auditory and visual space following adaptation to motion. Experimental Brain Research 191(4): 473–485. doi:10.1007/s00221-008-1543-1.

- Devore S., Ihlefeld A., Hancock K., Shinn-Cunningham B., Delgutte B. (2009) Accurate sound localization in reverberant environments is mediated by robust encoding of spatial cues in the auditory midbrain. Neuron 62(1): 123–134. doi:10.1016/j.neuron.2009.02.018.

- DiZio P., Held R., Lackner J. R., Shinn-Cunningham B., Durlach N. (2001) Gravitoinertial force magnitude and direction influence head-centric auditory localization. Journal of Neurophysiology 85(6): 2455–2460.

- Doan D. E., Saunders J. C. (1999) Sensitivity to simulated directional sound motion in the rat primary auditory cortex. Journal of Neurophysiology 81(5): 2075–2087.

- Dobreva M. S., O’Neill W. E., Paige G. D. (2011) Influence of aging on human sound localization. Journal of Neurophysiology 105(5): 2471–2486. doi:10.1152/jn.00951.2010.

- Dong C. J., Swindale N. V., Zakarauskas P., Hayward V. (2000) The auditory motion aftereffect: Its tuning and specificity in the spatial and frequency domains. Perception & Psychophysics 62(5): 1099–1111. doi:10.3758/bf03212091.

- Ducommun C. Y., Michel C. M., Clarke S., Adriani M., Seeck M., Landis T., Blanke O. (2004) Cortical motion deafness. Neuron 43(6): 765–777.

- Ehrenstein W. H. (1984) Richtungsspezifische adaption des raum- und bewegungshorens [Direction specific auditory adaption]. In: Spillmann L., Wooten B. R. (eds) Sensory experience, adaption and perception, Hillsdale, NJ: Lawrence Erlbaum, pp. 401–419.

- Ehrenstein W. H. (1994) Auditory aftereffects following simulated motion produced by varying interaural intensity or time. Perception 23: 1249–1255.