Abstract

Much evidence from distinct lines of investigation indicates the involvement of angular gyrus (AnG) in the retrieval of both episodic and semantic information, but the region's precise function and whether that function differs across episodic and semantic retrieval have yet to be determined. We used univariate and multivariate fMRI analysis methods to examine the role of AnG in multimodal feature integration during episodic and semantic retrieval. Human participants completed episodic and semantic memory tasks involving unimodal (auditory or visual) and multimodal (audio-visual) stimuli. Univariate analyses revealed the recruitment of functionally distinct AnG subregions during the retrieval of episodic and semantic information. Consistent with a role in multimodal feature integration during episodic retrieval, significantly greater AnG activity was observed during retrieval of integrated multimodal episodic memories compared with unimodal episodic memories. Multivariate classification analyses revealed that individual multimodal episodic memories could be differentiated in AnG, with classification accuracy tracking the vividness of participants' reported recollections, whereas distinct unimodal memories were represented in sensory association areas only. In contrast to episodic retrieval, AnG was engaged to a statistically equivalent degree during retrieval of unimodal and multimodal semantic memories, suggesting a distinct role for AnG during semantic retrieval. Modality-specific sensory association areas exhibited corresponding activity during both episodic and semantic retrieval, which mirrored the functional specialization of these regions during perception. The results offer new insights into the integrative processes subserved by AnG and its contribution to our subjective experience of remembering.

SIGNIFICANCE STATEMENT Using univariate and multivariate fMRI analyses, we provide evidence that functionally distinct subregions of angular gyrus (AnG) contribute to the retrieval of episodic and semantic memories. Our multivariate pattern classifier could distinguish episodic memory representations in AnG according to whether they were multimodal (audio-visual) or unimodal (auditory or visual) in nature, whereas statistically equivalent AnG activity was observed during retrieval of unimodal and multimodal semantic memories. Classification accuracy during episodic retrieval scaled with the trial-by-trial vividness with which participants experienced their recollections. Therefore, the findings offer new insights into the integrative processes subserved by AnG and how its function may contribute to our subjective experience of remembering.

Keywords: fMRI, memory, multivoxel pattern analysis, parietal lobe, recollection

Introduction

Left lateral parietal cortex, in particular angular gyrus (AnG), is commonly associated with changes in neural activity during recollection of episodic memories (Vilberg and Rugg, 2008; Wagner et al., 2005; Cabeza et al., 2008; Levy, 2012). Insights into the role AnG may play during recollection are provided by studies examining memory in patients with lateral parietal lesions. Although such patients are not amnesic and can retrieve memories successfully, the richness and vividness of those memories, and patients' confidence in them, are often diminished (Berryhill et al., 2007; Simons et al., 2010; Hower et al., 2014). These results implicate lateral parietal cortex in the subjective experience of remembering episodic memories as rich, vivid, multisensory events (Simons et al., 2010; Moscovitch et al., 2016). A traditionally separate literature suggests that AnG may also be a key region for semantic memory (Binder et al., 2009). Patients with lesions that include left AnG often exhibit difficulties with semantic and conceptual processes involving auditory or written language (Damasio, 1981). Neuroimaging studies involving healthy volunteers have observed activity in this region during performance of various episodic (Vilberg and Rugg, 2008) and semantic (Binder et al., 2009) memory tasks. Recent work using resting-state functional connectivity approaches suggests that AnG might not be a single, homogeneous area, but rather may comprise several functionally and anatomically separable subregions (Nelson et al., 2010; Nelson et al., 2013), leading to the question of whether AnG supports the same cognitive processes during episodic and semantic retrieval or if different mechanisms are recruited, perhaps localized to distinct subregions of AnG.

A key feature of episodic memory is its coherent, holistic nature, allowing the recollection of many different aspects of a previous experience, such as the sights and sounds comprising the event. Little is currently known about how mnemonic information relating to multiple sensory modalities is integrated during episodic memory retrieval. AnG, a connective hub linking sensory association cortices and other processing systems (Seghier, 2013), is an ideal candidate for supporting such multimodal integration. The region has also been proposed as a convergence zone in the semantic memory literature, largely on the basis of activity reported during performance of semantic tasks involving unisensory stimuli of different modalities (Bonner et al., 2013). However, existing studies have not successfully demonstrated an involvement of AnG in the processing of multimodal stimuli (e.g., concurrent auditory and visual presentation), a key test of whether the region plays an integrative role in semantic memory processing. It remains possible that AnG processes semantic information similarly regardless of sensory modality rather than playing a specific role in the production of coherent, integrated multimodal semantic representations.

Participants in the current study completed an episodic and a semantic memory task. We used univariate fMRI analyses to test for task-induced changes in mean activity levels to provide information about differential recruitment of brain areas during the processing of unisensory and multisensory stimuli. In addition to considering such general processing differences between multimodal and unimodal stimuli, we used a multivariate decoding approach [multivoxel pattern analysis (MVPA); Haynes and Rees, 2006; Norman et al., 2006] to discriminate between individual memories of different modalities (auditory, visual, and audio-visual) by decoding unique aspects of individual memory representations from the brain areas of interest. We hypothesized that, if AnG is involved in the processing of multimodal features of episodic and/or semantic memories, then this function should be reflected in increased activity (as detected by univariate analysis) for multimodal compared with unimodal memories in this area. Moreover, to the extent that multimodal features are recombined within AnG, multivoxel patterns of neural activity that discriminate between individual multimodal memories should be distinguishable in AnG. In contrast, distinct patterns for individual unisensory memories should only be observed in auditory and visual sensory association areas, consistent with the idea of distributed modality-specific representations.

Materials and Methods

Participants.

Sixteen healthy, right-handed participants (8 female, 8 male) took part in the experiment (mean age = 25.9 years, SD = 4.5, range = 19–34). All had normal or corrected-to-normal vision and gave informed written consent to participation in a manner approved by the Cambridge Psychology Research Ethics Committee.

Stimuli selection.

Both the episodic and the semantic memory tasks used in the current study required nouns that were associated with (1) auditory, (2) visual, or (3) audio-visual features. We first undertook an online pilot procedure in which 87 adults rated 150 words for their auditory and visual features. Of these words, nine words (which are shown in Fig. 2) were selected for the episodic memory task: three auditory dominant words, rated as having significantly more auditory than visual features (t(2) = 25.07, p = 0.002); three visual dominant words, rated as having significantly more visual than auditory features (t(2) = −12.424, p = 0.006); and three audio-visual dominant words, rated as being equal for auditory and visual properties (t(2) = −0.907, p = 0.460). Seventy-two words were selected for the semantic memory task on a similar basis: 24 auditory dominant words, rated as having significantly more auditory than visual features (t(23) = 12.769, p < 0.001); 24 visual dominant words, rated as having significantly more visual than auditory features (t(23) = −39.986, p < 0.001); and 24 audio-visual dominant words, which were no different for auditory and visual properties (t(23) = −1.011, p = 0.321).

Figure 2.

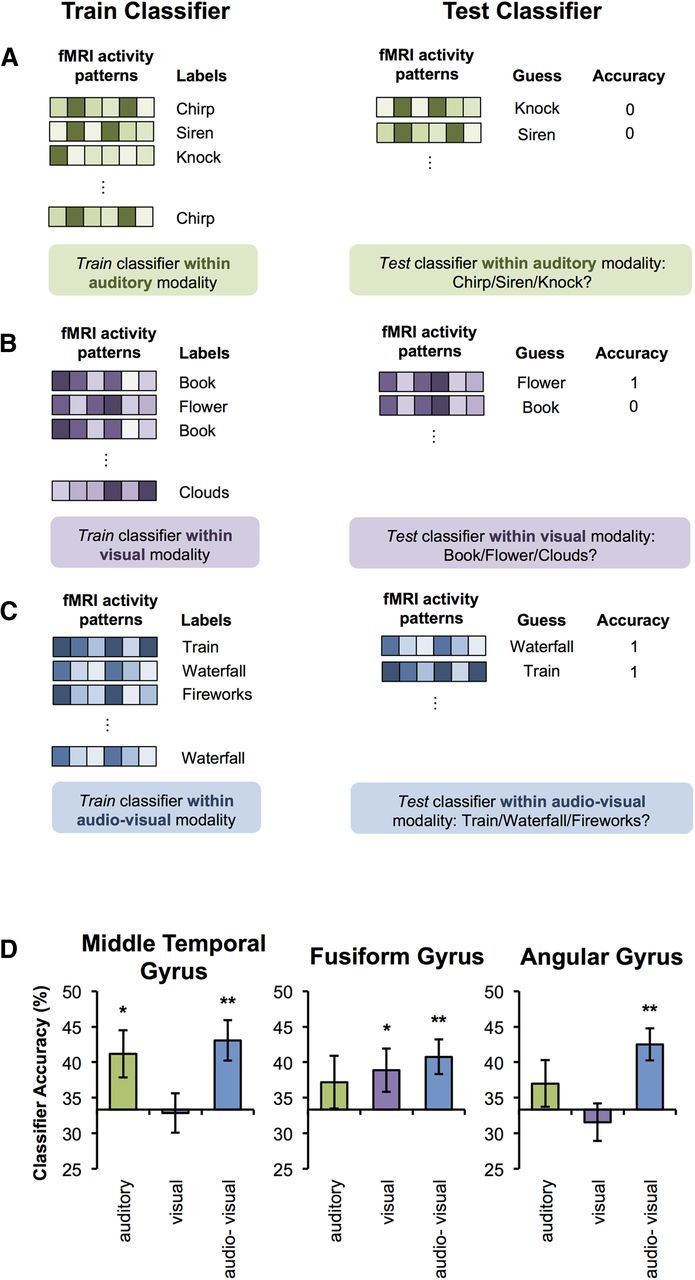

Decoding of episodic memories. Individual memories were decoded using a 10-fold cross-validation approach. Importantly, classification was undertaken separately within the auditory (A, green), within the visual (B, purple), and within the audio-visual (C, blue) condition. For example, the classifier was trained to differentiate the auditory memories (A) for “chirp,” “siren,” and “knock” using a subset of the data. It was then tested on a left-out set of auditory trials (that was not included in the training data set). A corresponding approach was used for trials of the visual memories (B), as well as the audio-visual memories (C). D, Three-way classification accuracies in each of the ROIs: MTG, FG, and AnG. Note: Chance = 33.33%. Error bars indicate SEM. *p < 0.05, **p < 0.005, one-tailed t test.

Episodic memory task.

During a prescan training session for the episodic task, participants were presented with the episodic stimuli that they were later to recall in the scanner. They were presented with three short auditory clips (heard through headphones), three silent visual clips (viewed on a computer screen), and three audio-visual clips that were presented concurrently both auditorily and visually. The clips were each 6 s long and were representations of the word stimuli described above. For example, the clip for the audio-visual word “train” comprised a film depicting a steam train chugging through the countryside while emitting a piercing whistle. The participants were presented with each clip six times and, after each presentation, they practiced recalling the clip as vividly and accurately as possible regardless of how long it took them to remember the complete clip. Participants were then instructed and trained to recall each clip within a 6 s recall period (so that the memory would be the same length as the clip) in response to the associated word cue. There were six training trials per clip. Participants were encouraged to recall the clip as vividly as possible and to maintain the quality and consistency of their recall on each subsequent recall trial for that clip. At the end of the training sessions, participants performed a practice session of the scanning task (Fig. 1A).

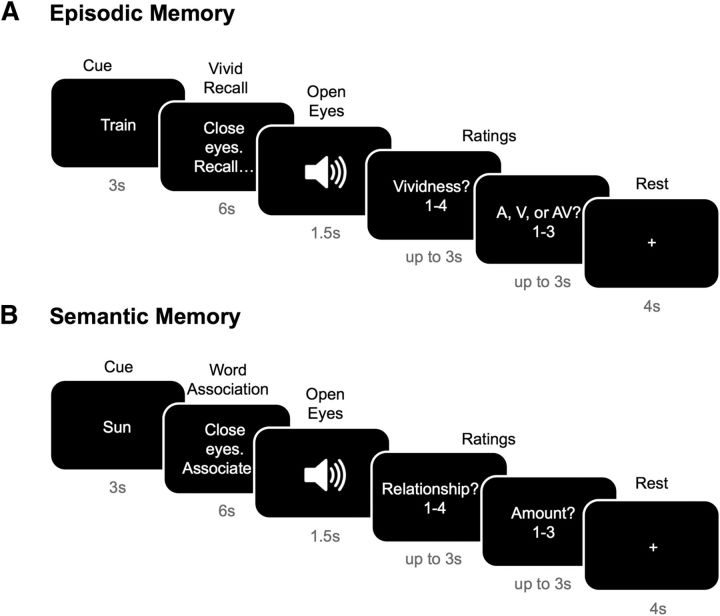

Figure 1.

Episodic and semantic memory tasks performed inside the scanner. A, Episodic memory: Participants completed 72 trials of the episodic task. In each trial, participants first saw a cue telling them which clip to recall. They then closed their eyes for 6 s and recollected the clip as vividly as possible. Once the 6 s had elapsed, they heard a tone signaling them to open their eyes. They then rated the vividness of the recollection as well as stating in which of the three modalities the clip had originally been presented. They then had a 4 s fixation pause before the next trial commenced. B, Semantic memory. Participants completed 72 trials of the semantic task. Each trial started with a cue denoting the word to which they were to associate words. They then closed their eyes for 6 s and thought of as many words associated with the cue as possible. Once the 6 s had elapsed, they heard a tone signaling them to open their eyes and to rate how related the words they thought of were to the cue word, as well as the number of words that they came up with in the 6 s (1 meaning none, 2 up to three words, and 3 >3 words). They then had a 4 s fixation pause before the next trial commenced.

During scanning, each clip was recalled eight times, consistent with similar MVPA studies (Chadwick et al., 2010), resulting in a total of 72 trials. The clips were presented in pseudorandom order, ensuring that the same clip was not repeated twice (or more) in a row. For each trial, participants were first shown the verbal cue of the clip to recollect, which was presented for 3 s. They were then presented with instructions to close their eyes and recall the clip for 6 s, after which they heard a tone in their headphones signaling to them to open their eyes. They were then asked to rate the quality of their recollection of the clip in terms of vividness on a scale of 1 to 4, where 1 signified “poor vividness” and 4 signified “very vivid,” and to decide whether the clip recollected had originally been presented auditorily, visually, or audio-visually. Participants had up to 3 s to provide each of these ratings using a 4-button box keypad. The vividness ratings were used to select the most vividly (ratings of 3 or 4) recollected trials for inclusion in the MVPA analysis. Of the eight trials for each condition, a comparable number of trials was selected for each condition with a mean of 6.02 (SD = 1.41) trials for the auditory clip recollections, 6.15 (SD = 1.53) trials for the visual clip recollections, and 5.90 (SD = 1.72) trials for the audio-visual recollections (F(2,30) = 0.298, p = 0.744). There followed a 4 s fixation pause before the next trial commenced.
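The vividness-based trial selection described above can be illustrated with a minimal sketch. The trial records and their ratings below are hypothetical (only the cue words and the threshold of 3 come from the text), and the function name `select_vivid_trials` is an assumption introduced for illustration.

```python
from collections import Counter

def select_vivid_trials(trials, min_rating=3):
    """Keep trials whose in-scan vividness rating (1 to 4) meets the threshold."""
    return [t for t in trials if t["vividness"] >= min_rating]

# Hypothetical trial records; the ratings are invented for illustration only.
trials = [
    {"clip": "siren", "vividness": 4},
    {"clip": "siren", "vividness": 2},
    {"clip": "chirp", "vividness": 3},
    {"clip": "knock", "vividness": 1},
    {"clip": "knock", "vividness": 3},
]

kept = select_vivid_trials(trials)
# Number of retained trials per clip, which can then be compared across conditions.
kept_per_clip = Counter(t["clip"] for t in kept)
```

Counting the retained trials per clip makes it straightforward to check that the selection leaves a comparable number of trials in each condition.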

After scanning, during a short debrief session, participants rated on a five-point scale the effort required to recall their memories, the level of detail of their recollections for each memory, and how vivid their recollection of each clip was (see Table 1 and discussion below).

Table 1.

Mean (SD) vividness ratings to episodic memories recorded during scanning and mean (SD) ratings of vividness, effort of recall, and level of detail obtained in the postscan debriefing session

| | Auditory | Visual | Audio-visual |
|---|---|---|---|
| Within-scan rating | | | |
| Vividness | 3.03 (0.45) | 3.06 (0.51) | 3.03 (0.49) |
| Debrief ratings | | | |
| Vividness | 4.27 (0.49) | 4.25 (0.55) | 4.29 (0.50) |
| Effort of recall | 1.50 (0.44) | 1.75 (0.73) | 1.73 (0.57) |
| Level of detail | 4.23 (0.43) | 4.40 (0.53) | 4.15 (0.62) |

Semantic memory task.

During a prescan training session, participants were trained on the word association semantic task that they would be performing in the scanner (Fig. 1B). The participants' task was to try to generate as many associations as possible to a trial-specific cue word in a 6 s period. To maximize the associations produced, participants were instructed to refer back to the cue word continuously during the association phase, thus focusing their attention on features of the critical modality. For example, the word “sun” might produce a list of responses such as “sky,” “light,” and “yellow.” At the end of the training session, participants practiced the task with the timing used in the scanner. A different set of words was used during training and during the scan.

During scanning, participants were first shown a word cue for 3 s and were then instructed to close their eyes and silently come up with words associated with the cue word, as they had done during the training session. After 6 s, they heard a tone through their headphones providing a signal for them to open their eyes. The participants were then asked to rate how related to the cue word they felt the words they had generated during the 6 s were. Relatedness was indicated on a scale from 1 to 4, where 1 denoted “not at all related” and 4 denoted “very related.” They were then asked to provide an estimate of the number of words they had come up with in those 6 s, where button 1 denoted no words at all, 2 denoted that they had thought of up to 3 words, and 3 signified that they had thought of >3 words. These ratings were then used to remove any trials in which participants thought of no words or words not related to the cue word from the analysis of the fMRI data. Using this criterion, an average of 7 trials (SD = 8.79) was excluded per participant. There followed a 4 s fixation pause before the next trial commenced. Note that each stimulus was presented once only during the semantic memory task, precluding MVPA analysis of these data. To provide temporal consistency across participants between the encoding and retrieval phases of the episodic memory task, the episodic and semantic tasks were performed consecutively, with the episodic task preceding the semantic task for all participants.

Image acquisition.

Using a 3T whole-body MRI scanner (Magnetom TIM Trio; Siemens) operated with a 32-channel head receive coil, functional data were acquired using an echoplanar imaging (EPI) sequence in a single session for each participant (in-plane resolution = 3 × 3 mm²; matrix = 64 × 64; field of view = 192 × 192 mm; 32 slices acquired in descending order; slice thickness = 3 mm with 25% gap between slices; echo time TE = 30 ms; asymmetric echo shifted forward by 26 PE lines; echo spacing = 560 μs; repetition time TR = 2 s; flip angle α = 78°). Finally, T1-weighted (MPRAGE) images were collected for coregistration (resolution = 1 mm isotropic; matrix = 256 × 240 × 192; field of view = 256 × 240 × 192 mm; TE = 2.99 ms; TR = 2.25 s; flip angle = 9°).

ROIs.

For mass univariate analyses, a priori ROIs in the AnG were defined based on the coordinate of peak AnG activation from previous univariate meta-analyses of episodic and semantic memory. The coordinate for episodic memory (−43, −66, 38) was obtained from Vilberg and Rugg (2008) and the coordinate for semantic memory (−46, −75, 32) from Binder et al. (2009). ROIs were also defined for modality-specific sensory association regions. To target auditory processing areas, a left middle temporal gyrus (MTG) coordinate (−58, −24, 3) was obtained from Schirmer et al. (2012). Visual processing activity was examined using a left fusiform gyrus (FG) peak coordinate (−45, −64, −14) reported by Wheeler et al. (2000). For all ROIs, the signal was averaged across voxels within 6-mm-radius spheres centered on the reported peak coordinates. All coordinates conformed to MNI coordinate space, except for the semantic memory coordinate, which was estimated from the ALE meta-analysis map of general semantic foci in Figure 4 of Binder et al. (2009) and transformed from Talairach to MNI space (using the function “tal2mni” obtained from http://imaging.mrc-cbu.cam.ac.uk/imaging/MniTalairach).
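As a rough illustration of the spherical ROI construction described above, the following sketch lists the grid points that fall within 6 mm of a peak coordinate. It assumes a 3 mm isotropic voxel grid aligned to multiples of 3 mm, which is a simplification introduced here; the function name `sphere_voxels` is likewise hypothetical and does not correspond to any SPM routine.

```python
import math
from itertools import product

def sphere_voxels(center_mm, radius_mm=6.0, voxel_mm=3.0):
    """List grid points (multiples of voxel_mm) lying within radius_mm of center_mm."""
    # Range of grid indices along each axis that could fall inside the sphere.
    axes = []
    for c in center_mm:
        lo = math.ceil((c - radius_mm) / voxel_mm)
        hi = math.floor((c + radius_mm) / voxel_mm)
        axes.append(range(lo, hi + 1))
    voxels = []
    for i, j, k in product(*axes):
        point = (i * voxel_mm, j * voxel_mm, k * voxel_mm)
        # Compare squared distances to avoid an unnecessary square root.
        dist_sq = sum((p - c) ** 2 for p, c in zip(point, center_mm))
        if dist_sq <= radius_mm ** 2:
            voxels.append(point)
    return voxels

# Episodic AnG coordinate taken from the text (Vilberg and Rugg, 2008).
episodic_roi = sphere_voxels((-43.0, -66.0, 38.0))
# A sphere centred exactly on a grid point, used to check the voxel count.
centered_roi = sphere_voxels((0.0, 0.0, 0.0))
```

Under these assumptions, the ROI signal would then be the mean of the parameter estimates across the returned voxels.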

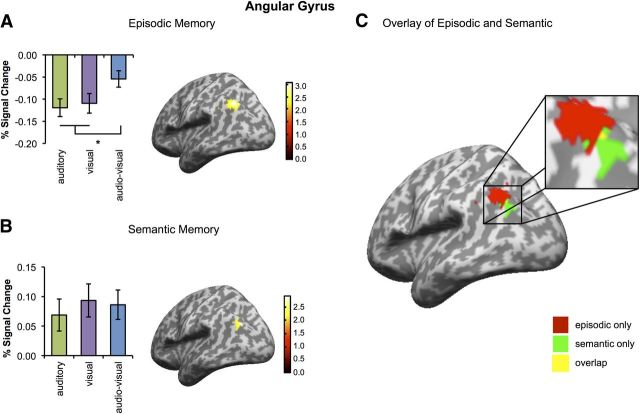

Figure 4.

Modality-specific regional signal change during episodic and semantic memory. A, Left, Percentage signal change for auditory (green), visual (purple), and audio-visual (blue) memories in the peak AnG voxel (−42, −66, 42) for the contrast “audio-visual − audio + visual” during episodic memory in the predefined spherical 6 mm ROI. Right, Left AnG activation for the same contrast. B, Left, Same as in A but for the peak AnG voxel (−42, −78, 36) for the main effect of audio-visual trials during semantic memory. Right, Left AnG activation for the same contrast. C, Overlay of activation maps shown in A and B. Red indicates activations selective to episodic memory, green indicates activations selective to semantic memory, and yellow indicates an overlap in activations. The overlap shown in yellow comprised 1 voxel (−45, −72, 36) and did not reach significance in a conjunction analysis (t(15) = 1.85, p = 0.160). Note: Peak voxels were identified within the predefined spherical 6 mm ROIs using the contrasts specified above. Error bars indicate SEM. *p < 0.05, two-tailed t tests. Activations are shown at a threshold of p < 0.05 (whole-brain, uncorrected) and for the purposes of visualization are masked to the AAL mask for the ROI.

MVPA analysis involved characterizing information from patterns of activity across voxels. To ensure sufficient data in each analysis, anatomically defined ROIs based on the Automated Anatomical Labeling (AAL) template were used for the MVPA analysis (in contrast to the smaller spherical ROIs used for the univariate contrasts). The Wake Forest University (WFU) PickAtlas toolbox in SPM8 (Maldjian et al., 2003) was used to obtain anatomical ROIs for the left AnG, the left MTG, and the left FG.

Mass univariate analysis.

Image preprocessing was performed using SPM8 (http://www.fil.ion.ucl.ac.uk/spm). The first six EPI volumes were discarded to allow for T1 equilibration effects (Frackowiak et al., 2004). The remaining EPI images were coregistered to the T1-weighted structural scan, realigned and unwarped using field maps (Andersson et al., 2001), and spatially normalized to MNI space using the DARTEL toolbox (Ashburner, 2007). Data were then smoothed using an 8 mm³ FWHM Gaussian function.

Statistical analysis was performed in an event-related manner using the general linear model. Separate regressors were included for auditory trials, visual trials, audio-visual trials, and fixation trials. Each of these regressors was generated with boxcar functions convolved with a canonical hemodynamic response function. The duration specified for the regressors was 6 s for the auditory, visual, and audio-visual trials and 4 s for fixation trials. Subject-specific movement parameters were included as regressors of no interest. Subject-specific parameter estimates pertaining to each regressor were calculated for each voxel. Group-level activation was determined by performing one-sample t tests on the linear contrasts of the statistical parametric maps (SPMs) generated during the first-level analysis. We report significant fMRI results for each 6 mm ROI sphere at a voxel-level threshold of p < 0.05, corrected for the number of voxels within the ROI.
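To make the regressor construction concrete, the sketch below convolves a 6 s boxcar with a double-gamma response function and samples the result at TR = 2 s. The double-gamma parameters (shapes 6 and 16, undershoot ratio 1/6) are an assumption chosen to resemble a commonly used canonical form, and the function names are hypothetical; this is an illustration of the idea, not a reproduction of the SPM8 implementation.

```python
import math

def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma response at time t (s); the parameter values are assumptions."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    under = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - ratio * under

def boxcar_regressor(onsets_s, duration_s, n_scans, tr_s=2.0, dt=0.1):
    """Convolve a boxcar with the HRF on a fine time grid, then sample once per TR."""
    n_fine = int(round(n_scans * tr_s / dt))
    box = [0.0] * n_fine
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = int(round((onset + duration_s) / dt))
        for i in range(start, min(stop, n_fine)):
            box[i] = 1.0
    # HRF kernel covering 32 s, scaled by dt to approximate the convolution integral.
    kernel = [double_gamma_hrf(i * dt) * dt for i in range(int(round(32.0 / dt)))]
    fine = [0.0] * n_fine
    for i, b in enumerate(box):
        if b:
            for k, h in enumerate(kernel):
                if i + k < n_fine:
                    fine[i + k] += h
    step = int(round(tr_s / dt))
    return [fine[s * step] for s in range(n_scans)]

# One hypothetical 6 s recall period starting 10 s into a 20-scan run.
regressor = boxcar_regressor([10.0], duration_s=6.0, n_scans=20)
```

The predicted response is zero before the trial onset and peaks several seconds after it, which is why the recall period rather than the cue is modeled with the 6 s duration.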

MVPA.

Image preprocessing was performed as reported in the previous section; however, for the MVPA analysis of the episodic memory task, we used unsmoothed data, consistent with previous studies that involved stimuli with highly overlapping features (cf. Chadwick et al., 2012). For each trial, we then modeled a separate regressor for the recall period of that trial and convolved it with the hemodynamic response function. This created participant-specific parameter estimates for each recall trial.

We used a standard MVPA procedure that has been described previously (Bonnici et al., 2012b; Bonnici et al., 2012a; Chadwick et al., 2012; Chadwick et al., 2010). The overall classification procedure involved splitting the fMRI data into two segments: a “training” set used to train a classifier with fixed regularization hyperparameter C = 1 to identify response patterns related to the episodic memories being discriminated and a “test” set used to test classification performance independently (Duda et al., 2001) using a 10-fold cross-validation procedure. The classification approach used in the current study is illustrated in Figure 2, A–C. Specifically, in each ROI, we used MVPA to distinguish between the three individual auditory memories (Fig. 2A), between the three individual visual memories (Fig. 2B), and between the three individual audio-visual memories (Fig. 2C). That is, in contrast to the univariate analysis in which we contrast auditory, visual, and audio-visual trials, the MVPA analysis attempts to differentiate trials within a given modality to test whether information specific to the individual memories can be detected. The classification analyses resulted in accuracy values for each ROI based on the percentage of correctly classified trials. These values were then tested at the group level against a chance level of 33.33% using one-tailed t tests because we were only interested in whether results were significantly above chance (all other t tests reported are two-tailed). A threshold of p < 0.05 was used throughout.
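The group-level test against chance described above can be sketched as follows. The accuracy values are hypothetical and the helper names are assumptions; rather than computing a p value, the sketch compares the t statistic with 1.753, the one-tailed critical value for α = 0.05 with 15 degrees of freedom.

```python
import math
import statistics

CHANCE_PERCENT = 100.0 / 3.0        # three-way classification
T_CRIT_ONE_TAILED_DF15 = 1.753      # alpha = 0.05, one-tailed, df = 15

def accuracy_percent(true_labels, predicted_labels):
    """Percentage of test trials whose predicted label matches the true label."""
    hits = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return 100.0 * hits / len(true_labels)

def t_against_chance(accuracies, chance=CHANCE_PERCENT):
    """One-sample t statistic for group accuracies against the chance level."""
    n = len(accuracies)
    sem = statistics.stdev(accuracies) / math.sqrt(n)
    return (statistics.fmean(accuracies) - chance) / sem

# Hypothetical accuracies (%) for 16 participants in one ROI and one modality.
group = [41.7, 37.5, 33.3, 45.8, 29.2, 37.5, 41.7, 33.3,
         50.0, 37.5, 29.2, 41.7, 45.8, 33.3, 37.5, 41.7]
t_value = t_against_chance(group)
above_chance = t_value > T_CRIT_ONE_TAILED_DF15
```

Because only above-chance performance is of interest, the test is directional: a large negative t value would not count as evidence of decodable information.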

Results

Behavioral results

Examining participants' vividness ratings during scanning revealed no significant differences between modalities (F(2,30) = 0.05, p = 0.951; Table 1). During a debrief session after scanning, participants rated the nine memories for vividness, effort to recall, and level of detail. Again, all variables were matched across modalities (all F(2,30) < 1.42, p > 0.26; Table 1).

Neuroimaging results

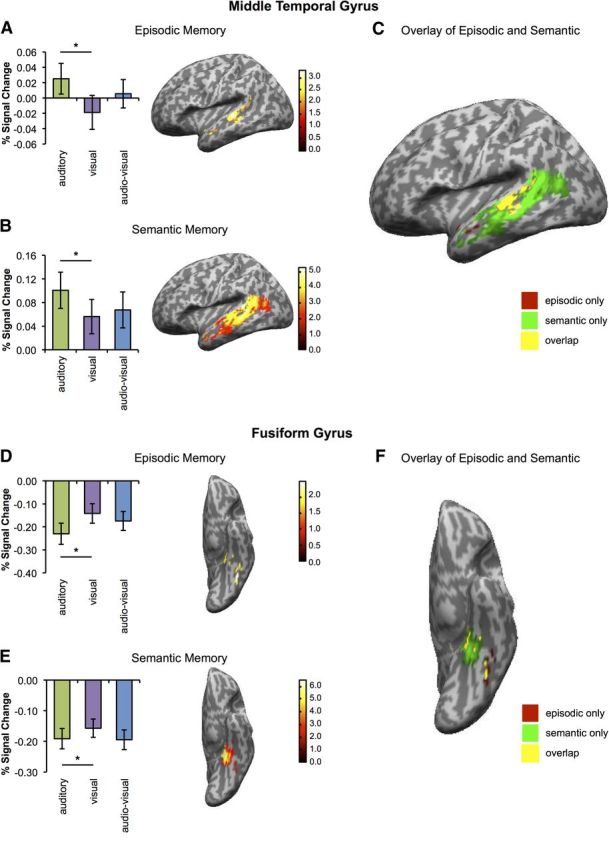

Modality-specific regions in episodic memory

We first considered functional activity in a priori modality-specific ROIs (see Materials and Methods). We hypothesized that the left MTG would be predominantly engaged during recall of auditory memories, and that the left FG would show greater activity for visual memories. Consistent with these predictions, auditory memories were associated with significantly greater activity than visual memories in the left MTG ROI (peak: −57, −30, 3; t(15) = 3.09, p = 0.029; Fig. 3A). Visual memories elicited significantly greater activity than auditory memories in the left FG ROI (peak: −45, −63, −9; t(15) = 3.40, p = 0.018; Fig. 3D). A repeated-measures ANOVA with factors region (MTG vs FG) and modality (auditory vs visual) on the percentage signal change in the respective peak voxels confirmed a significant interaction (F(1,15) = 30.46, p < 0.001). As shown in Figure 3, A and D, multimodal audio-visual memories were associated with levels of activity intermediate between those of auditory and visual trials.

Figure 3.

Modality-specific regional signal change during episodic and semantic memory. A, Left, Percentage signal change for auditory (green), visual (purple), and audio-visual (blue) memories in the peak voxel for the contrast “auditory − visual” in MTG during episodic memory. Right, Left MTG activation for the same contrast. B, Same as A but for semantic memory. C, Overlay of activation maps shown in A and B. Red indicates activations selective to episodic memory, green indicates activations selective to semantic memory, and yellow indicates an overlap in activations. D, Left, Percentage signal change for auditory (green), visual (purple), and audio-visual (blue) memories in the peak voxel for the contrast “visual − auditory” in the FG during episodic memory. Right, Left FG activation for the same contrast. E, Same as D but for semantic memory. F, Overlay of activation maps shown in D and E. Note: Peak voxels were identified within the predefined spherical 6 mm ROIs using the contrasts specified above. Error bars indicate SEM. *p < 0.05, two-tailed t tests. Activations are shown at a threshold of p < 0.05 (whole-brain, uncorrected) and for the purposes of visualization are masked to the AAL mask for the ROI.

Examining whether individual memories within each modality could be distinguished based on classifying the patterns of activity across voxels in modality-specific regions (Fig. 2A,B,D), results revealed that it was possible to discriminate between individual auditory memories (t(15) = 2.46, p = 0.013) and between each of the audio-visual memories (t(15) = 3.53, p = 0.002) in MTG, but, as expected, not between individual visual memories (t(15) = −0.05, p = 0.521). Therefore, specific features of auditory and audio-visual memories, but not of visual memories, appear to be represented within MTG, consistent with what would be expected based on modality-specific processing accounts.

In contrast, activity patterns in the FG enabled the classifier to distinguish reliably between individual visual memories (t(15) = 1.93, p = 0.037) as well as between individual audio-visual memories (t(15) = 3.18, p = 0.003), but not between individual auditory memories (t(15) = 1.13, p = 0.138), again suggesting that information about individual memories was processed in a modality-specific manner. A repeated-measures ANOVA with factors region (MTG vs FG) and modality (auditory vs visual vs audio-visual) conducted on the classification performance data confirmed a significant interaction between these factors (F(2,30) = 5.20, p = 0.012). Classification accuracy for audio-visual memories was not significantly higher than for auditory memories in MTG (t(15) = 0.70, p = 0.49) or for visual memories in FG (t(15) = 0.43, p = 0.68).

Modality-specific regions in semantic memory

Turning to semantic memory, we hypothesized that, as in the episodic memory task, modality-specific regions would be particularly involved during semantic retrieval of unimodal word trials. This was indeed observed, with significantly greater activity in the left MTG ROI (peak: −60, −27, 0; t(15) = 4.15, p = 0.006; Fig. 3B) during retrieval of auditory versus visual words and significantly greater activity in the left FG ROI (peak: −45, −63, −9; t(15) = 3.47, p = 0.019; Fig. 3E) for retrieval of visual versus auditory words. Multimodal memories again displayed activity levels that were intermediate between those elicited by the two unimodal memory types.

Direct comparison of the results using conjunction analysis confirmed that there was considerable overlap in activations between the episodic and the semantic task during the unimodal auditory (peak: −57, −30, 3; t(15) = 3.09, p = 0.029) and visual (peak: −45, −63, −9; t(15) = 3.40, p = 0.018) conditions (areas of overlap are visualized in yellow in Fig. 3C,F), suggesting a degree of commonality in the modality-specific sensory processing regions involved during both episodic and semantic memory retrieval.

Episodic memories in the angular gyrus

Having established that common modality-specific processing regions are involved during episodic and semantic retrieval, the key question in this experiment was to determine whether AnG was more engaged during retrieval of integrated multimodal memories than during retrieval of unimodal memories. Consistent with the idea of AnG as a multimodal integration hub, audio-visual episodic memories were associated with significantly greater activity in AnG than were unimodal auditory or visual memories (peak: −42, −66, 42; t(15) = 2.98, p = 0.033; Fig. 4A).

To determine whether AnG was simply more engaged during retrieval of multimodal memories or, alternatively, if activity patterns in this region represent details of individual audio-visual memories (as would be expected from a region that integrates multimodal features of retrieved episodes), we used MVPA classifiers to differentiate between individual auditory, individual visual, and individual multimodal audio-visual memories in this region (Fig. 2C,D). A repeated-measures ANOVA analyzing the classifier's ability to distinguish between trials within each of the three modalities (auditory, visual, audio-visual) revealed a main effect of modality (F(2,30) = 6.15, p = 0.006). Classification performance for multimodal trials was significantly greater than for unimodal trials (t(15) = 2.95, p = 0.01); moreover, classification of multimodal memories (t(15) = 4.09, p = 0.0004), but not unimodal memories, was significantly above chance (auditory memories: t(15) = 1.11, p = 0.14; visual memories: t(15) = −0.68, p = 0.26). These results suggest selective involvement of AnG in representing multimodal, compared with unimodal, episodic memories, consistent with a role for this region in the integration of multimodal features. Therefore, whereas unimodal memories were only distinguishable from each other in sensory association regions, features of multimodal memories were also represented in AnG.

An important follow-up question is whether the information that the classifier decoded to differentiate between the individual memories might relate to the subjective experience of the memories as vivid events. To shed some light on this question, we computed the relationship between classifier accuracy (whether the classifier correctly guessed which memory was being retrieved) and the in-scan vividness rating that participants assigned to the retrieved memory on each trial. For this analysis, trials with the full range of vividness ratings from 1 to 4 were included. To obtain a classification accuracy value for each trial, a leave-one-trial-out cross-validation procedure was used in which the classifier was trained on 23 of the 24 trials of each modality (auditory, visual, or audio-visual) and tested on the left-out trial. This procedure was repeated until a classification accuracy value for each trial was obtained. The difference in subjects' vividness ratings for accurately and inaccurately classified trials was then calculated separately for target and nontarget stimuli for each ROI (MTG: target = auditory, nontarget = visual; FG: target = visual, nontarget = auditory; AnG: target = audio-visual, nontarget = auditory or visual). A repeated-measures ANOVA with factors ROI (MTG, FG, and AnG) and stimulus type (target vs nontarget) on this difference in vividness ratings revealed a significant main effect of stimulus type (F(1,15) = 16.138, p = 0.001) such that target stimuli were, on average, associated with a greater difference in vividness scores between correctly and incorrectly classified trials (mean = 0.121, SD = 0.181) than nontarget stimuli (mean = −0.087, SD = 0.210). Exploratory analysis revealed that the effect of stimulus type (target vs nontarget) on the difference in vividness ratings reached significance in AnG (t(15) = 4.362, p = 0.0006), but not in FG or MTG (t(15) = 1.22, p = 0.24, and t(15) = 0.76, p = 0.46, respectively). These findings suggest that the information that the classifier decoded indeed contributed to participants' experiencing of these memories as vivid events.
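The leave-one-trial-out procedure and the vividness comparison described above can be sketched as follows. This is an illustration under stated assumptions rather than the study's pipeline: a simple nearest-centroid classifier is swapped in for whatever classifier the authors used, the voxel patterns and vividness ratings are synthetic, and the split of the 24 trials into two hypothetical memories is assumed. All function names are illustrative.

```python
import random
from statistics import mean


def nearest_centroid_predict(train_x, train_y, test_x):
    """Predict the label whose training centroid lies closest to test_x."""
    best_label, best_dist = None, float("inf")
    for label in sorted(set(train_y)):
        rows = [x for x, y in zip(train_x, train_y) if y == label]
        centroid = [mean(col) for col in zip(*rows)]
        dist = sum((a - b) ** 2 for a, b in zip(test_x, centroid))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def leave_one_trial_out(patterns, labels):
    """Train on all trials but one, test on the held-out trial, repeat for every trial."""
    correct = []
    for i in range(len(patterns)):
        train_x = patterns[:i] + patterns[i + 1:]
        train_y = labels[:i] + labels[i + 1:]
        predicted = nearest_centroid_predict(train_x, train_y, patterns[i])
        correct.append(predicted == labels[i])
    return correct


def vividness_difference(correct, vividness):
    """Mean vividness of correctly classified trials minus that of misclassified trials."""
    hits = [v for c, v in zip(correct, vividness) if c]
    misses = [v for c, v in zip(correct, vividness) if not c]
    if not hits or not misses:
        return None  # undefined when every trial falls on one side
    return mean(hits) - mean(misses)


# Synthetic data: 24 trials of one modality, 20 hypothetical voxels per pattern.
rng = random.Random(0)
labels = [0, 1] * 12  # assumed: two memories, 12 retrieval trials each
patterns = [[rng.gauss(label, 1.0) for _ in range(20)] for label in labels]
vividness = [rng.randint(1, 4) for _ in labels]  # in-scan ratings on the 1-4 scale

correct = leave_one_trial_out(patterns, labels)
print(sum(correct), "of", len(correct), "trials classified correctly")
print("vividness difference:", vividness_difference(correct, vividness))
```

Each trial contributes exactly one accuracy value because it is held out exactly once, which is what allows accuracy to be related to that trial's vividness rating.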

Semantic memory in the angular gyrus

Having observed that both mean activity and distributed memory-specific information were significantly greater in the AnG for multimodal than unimodal episodic memories, we next examined semantic retrieval using mass univariate analysis. Based on previous findings (Bonner et al., 2013; Simanova et al., 2014; Noonan et al., 2013) that AnG activity during semantic retrieval was observed for a range of modality-specific associations, we hypothesized that AnG would exhibit significant activation for all word modalities in the current task. Consistent with this prediction, evidence of common involvement during semantic retrieval was observed in AnG, with significant activity for visual trials (peak: −42, −78, 36; t(15) = 3.62, p = 0.016) and audio-visual trials (peak: −42, −78, 36; t(15) = 3.75, p = 0.014) reflected in significant main effects in the AnG ROI. Activity for auditory word trials showed a strong trend toward significance (peak: −45, −75, 33; t(15) = 2.85, p = 0.053). However, in contrast to the results observed in the episodic memory task, AnG did not exhibit significantly greater activation during retrieval of multimodal audio-visual semantic associations compared to the retrieval of unimodal auditory (t(15) = 1.21, p = 0.243) or visual (t(15) = 0.632, p = 0.54) memories.

To compare AnG activation associated with episodic and semantic memory more directly, we extracted brain activity from the same literature-derived coordinates (Vilberg and Rugg, 2008; Binder et al., 2009) for episodic and semantic memory that were the basis of our univariate analysis. Activity for the two single-voxel coordinates was normalized to ensure comparability between voxels and a “multimodality index” was computed, defined as the difference in activation between multimodal and unimodal trials at these voxels. A repeated-measures ANOVA with variables task (episodic vs semantic) and coordinate (literature-based coordinate for episodic vs semantic memory) on this multimodality measure revealed a significant interaction (F(1,15) = 21.74, p < 0.001). Episodic memories displayed a significantly larger multimodality index than semantic memories at the coordinate for episodic memory (t(15) = 3.17, p = 0.006), but not at the semantic memory coordinate (t(15) = 0.21, p = 0.838). The multimodality index for episodic memory trials was also significantly higher at the coordinate for episodic memory than at the coordinate for semantic memory (t(15) = 3.28, p = 0.005). Note that the voxels used in this analysis were ∼11 mm apart, meaning that smoothing may have produced a degree of overlap in the data used to compute the activity level at each voxel. However, such an overlap should, if anything, reduce the likelihood of detecting the reported interaction.
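As a sketch of how the multimodality index and the task-by-coordinate interaction relate, the code below computes the index and then tests the interaction as a one-sample t test on each participant's difference of differences. For a 2 × 2 within-subject design this is equivalent to the interaction F test, with F equal to t squared on 1 and n − 1 degrees of freedom. The function names and the dictionary layout are assumptions for illustration, treating "unimodal" as the mean of the auditory and visual conditions is also an assumption, and the normalization step mentioned in the text is omitted.

```python
import math
from statistics import mean, stdev


def multimodality_index(audiovisual, auditory, visual):
    """Multimodal activity minus the mean of the two unimodal conditions (assumed definition)."""
    return audiovisual - (auditory + visual) / 2.0


def interaction_f(cells):
    """Interaction F for a 2 x 2 within-subject design.

    `cells` holds one dict per participant, keyed by (task, coordinate),
    where each value is that participant's multimodality index.
    Returns (F, df1, df2).
    """
    contrasts = [
        (c[("episodic", "episodic")] - c[("semantic", "episodic")])
        - (c[("episodic", "semantic")] - c[("semantic", "semantic")])
        for c in cells
    ]
    n = len(contrasts)
    t = mean(contrasts) / (stdev(contrasts) / math.sqrt(n))
    return t * t, 1, n - 1


# Hypothetical indices for three participants (NOT the study's data).
example_cells = [
    {("episodic", "episodic"): 1.0, ("semantic", "episodic"): 0.0,
     ("episodic", "semantic"): 0.0, ("semantic", "semantic"): 0.0},
    {("episodic", "episodic"): 2.0, ("semantic", "episodic"): 0.0,
     ("episodic", "semantic"): 0.0, ("semantic", "semantic"): 0.0},
    {("episodic", "episodic"): 3.0, ("semantic", "episodic"): 0.0,
     ("episodic", "semantic"): 0.0, ("semantic", "semantic"): 0.0},
]
f_value, df1, df2 = interaction_f(example_cells)
print(f"F({df1},{df2}) = {f_value:.2f}")
```

With 16 participants the same computation yields 1 and 15 degrees of freedom.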

Supporting the suggestion of a possible functional distinction within AnG for episodic and semantic retrieval, a conjunction analysis between the contrasts specified above revealed no significant overlap in activity, consistent with the observation that the activation for episodic memory appeared to be localized more dorsally than the activation for semantic memory (Fig. 4C). The results thus indicate that the preferential processing of multimodal memories in AnG was selective to the episodic task and that this processing was observed in a more dorsal AnG subregion than the area active during the semantic task. Together, these results provide preliminary support for the idea that functionally separable subregions of AnG may be recruited during the retrieval of multimodal episodic and semantic memories. However, the fixed order of task presentation might have influenced processing in the two tasks. Further studies will therefore be needed to confirm this observation. Furthermore, it should be noted that all analyses focused on a small number of a priori selected regions. No activations outside of the ROIs survived appropriate statistical correction (p < 0.05, whole-brain corrected).

Discussion

The present study investigated the role played by AnG during the retrieval of unimodal and multimodal episodic and semantic memories. The results indicated that AnG is involved in feature integration processes that support the retrieval of multimodal rather than unimodal episodic memories, a distinction that does not extend to semantic memory, where AnG exhibited statistically equivalent levels of activity during multimodal and unimodal trials. Unimodal episodic and semantic memories were associated with modality-specific activity in sensory association regions, confirming that the dissociation between multimodal and unimodal episodic memories in AnG was not simply due to unimodal memories eliciting less activation than multimodal memories overall, but instead was consistent with the proposed role of this region in the integration of multimodal episodic memories.

Our multivariate analyses extended these results, revealing clear differences in the kinds of mnemonic information represented in AnG and sensory association areas. MVPA classifiers successfully discriminated between individual audio-visual episodic memories in AnG, whereas unimodal episodic memories could not be distinguished from each other in that region. Instead, unimodal auditory and visual memories were successfully decoded from activity patterns in their respective sensory association cortices, suggesting that inability to decode these memories in AnG reflected specific sensitivity of this region to multimodal episodic information, rather than any intrinsic difficulty decoding unimodal memories.

Angular gyrus and episodic memory

Our data indicated a central role for AnG in the multimodal integration of episodic memories. The results may help explain recent seemingly counterintuitive findings that “visual” memories could be decoded in AnG but not ventral temporal areas (Kuhl and Chun, 2014), which the investigators speculated may be because the episodic memories in their task were to some extent multimodal, comprising visual features and those from other sensory modalities. Our analyses built on that prediction, testing the multimodality account via the inclusion of unimodal (auditory and visual) and multimodal (audio-visual) stimuli. The observation that multivoxel patterns in AnG distinguished individual multimodal episodic memories suggests that, in addition to being more active overall during multimodal trials, AnG represents specific features unique to individual audio-visual memories. Moreover, the observed link between classification accuracy and trial-by-trial vividness ratings indicates that the information the classifier decodes is associated with the experience of multisensory memories as vivid and detailed events, consistent with previous neuropsychological findings (Berryhill et al., 2007; Simons et al., 2010).

The findings thus indicate that AnG exhibits characteristics of an integration area—or “convergence zone” (Damasio, 1989; Shimamura, 2011)—for multimodal memories, which could be the function that underlies its role in the subjective experience of remembering (Simons et al., 2010; Moscovitch et al., 2016). Different theoretical accounts have discussed the contribution of lateral parietal areas (including AnG) to memory, proposing, for example, a role as a working memory “buffer” (Vilberg and Rugg, 2008), a region that may “accumulate” information for mnemonic decisions (Wagner et al., 2005), or an area involved in attentional processes in memory (Cabeza et al., 2008). Of these, the mnemonic buffer hypothesis is most compatible with our data, accommodating the finding that specific aspects of individual multimodal memories can be decoded from AnG. The accumulator and attention accounts, in contrast, do not suggest that retrieved memories themselves are represented in AnG and are therefore less compatible with the finding that individual memories can be decoded in AnG.

Other regions have also been proposed to support integration processes during episodic retrieval, most prominently the hippocampus (Staresina et al., 2013; Davachi, 2006), which may support the “re-experiencing” of memories via pattern completion (Horner et al., 2015). Exploratory analysis using Horner et al.'s (2015) hippocampal peak coordinate (30, −31, −5) revealed a marginally significant increase in hippocampal activation for multimodal compared with unimodal episodic memories in the present data (33, −33, −9; t(15) = 2.59, p = 0.059). It has been suggested previously that, relative to the hippocampus, AnG may be more involved in the processing of egocentric than allocentric information (Zaehle et al., 2007; Ciaramelli et al., 2010; cf. Yazar et al., 2014). The vivid recall that participants engaged in during the current study might be considered to have favored the kind of first-person perspective remembering associated with egocentric processing. Moreover, whereas the hippocampus may integrate elements of an episodic memory such as space and time (Burgess et al., 2002; Eichenbaum, 2014), AnG may integrate sensory elements of multimodal memories into a complete and vivid recollection. Examining the possible functional dissociation between these two regions is a critical next step for future research.

Angular gyrus and semantic memory

In addition to episodic retrieval, we investigated activity in AnG during retrieval of semantic memories based on previous evidence implicating AnG in semantic processing. Previous studies have reported that AnG exhibits increased activity during semantic memory tasks involving unisensory stimuli compared with a baseline task (Bonner et al., 2013). The current results echo these findings (in the form of main effects) by showing that unimodal and multimodal trials led to increased activity in AnG. Consistent with one of the possible accounts proposed in the introduction, and in contrast to our findings for episodic memory, unimodal and multimodal semantic memories activated AnG to a statistically equivalent degree, suggesting that AnG processes semantic information similarly regardless of sensory modality.

One possible alternative explanation for this finding is that semantic memories may be inherently more complex than episodic memories, in that even retrieval of “unimodal” semantic memories might lead to the reactivation of a large set of (possibly multimodal) associations, reducing differences between unimodal and multimodal conditions (Patterson et al., 2007; Yee et al., 2014). Therefore, participants might have generated words of different modalities in response to modality-specific cues during the semantic task, which may have decreased differences between conditions. Alternatively, semantic memories may lack some of the sensory–perceptual features associated with episodic memories, represented using a more abstract “amodal” code. Accordingly, participants may have performed the semantic memory task without retrieving sensory information to the extent that they did in the episodic condition (Ryan et al., 2008).

However, these accounts do not explain why unimodal semantic memories were associated with differential activation in sensory regions, indicating that sufficient modality-distinct sensory information was present for semantic memories. Moreover, activity levels for audio-visual memories lay between those for auditory and visual memories in these areas, mirroring the pattern of results observed during the episodic memory task in these brain regions. Therefore, although we cannot be certain that the modality manipulation affected both tasks equivalently, the differential multimodal effect in AnG for episodic and semantic retrieval may reflect distinctions in the recruitment of AnG during the retrieval of these memories, consistent with the observation that functionally separable AnG subregions appear to be involved in the processing of multimodal episodic and semantic memories. A proposed segregation of AnG into anterior/dorsal and posterior/ventral subregions has been discussed in the recent literature (Seghier, 2013; Nelson et al., 2010, 2013). In our data, the semantic task activated more posterior/ventral parts of AnG that connect to parahippocampal regions, which are considered part of a network involved in storage and retrieval of semantic knowledge (Binder et al., 2009). The more anterior region of AnG activated in the episodic task exhibits stronger connections with anterior prefrontal regions, potentially reflecting the need for controlled retrieval processes in the episodic task.

Unimodal memories

Brain activity elicited by unimodal memories revealed modality-specific processing regions involved in episodic and semantic retrieval. Our univariate and multivariate results converged in finding that MTG was primarily involved in the processing of auditory compared with visual memories, whereas FG demonstrated a preference for visual compared with auditory memories, consistent with the notion that activity in sensory association areas during retrieval mirrors their functional specialization during perception.

The results of the decoding analysis are noteworthy in that limited evidence currently exists that activity in sensory association regions reflects the representation of unique aspects of individual memories. Our findings suggest that sensory association regions not only exhibit an increase in overall brain activity in response to the retrieval of different unimodal stimuli (which could reflect increased processing demands for content of different modalities), but that features of the original memories are represented there, consistent with the view that brain activity in sensory areas may contribute to vivid remembering by reinstating “perceptual” aspects of the original episode (Buchsbaum et al., 2012; Wheeler et al., 2000). To our knowledge, this is the first demonstration that individual memories can be decoded in sensory association areas using multivariate analysis techniques.

Conclusion

Converging evidence from univariate and multivariate fMRI analyses indicates that AnG contributes to the multimodal integration of episodic memories, but is not sensitive to the distinction between unimodal and multimodal semantic memories. The present results support a functionally distinct contribution of AnG subregions during the retrieval of episodic and semantic memories. These distinctions may stem from differences in the nature of episodic and semantic memories themselves or in the retrieval processes that operate upon them. Either way, the link between our neuroimaging data and the vividness of participants' recollections demonstrates the central role of AnG in the experiencing of complex memories as rich, multisensory events.

Footnotes

This work was supported by a James S. McDonnell Foundation Scholar Award (J.S.S.) and was carried out within the University of Cambridge Behavioural and Clinical Neuroscience Institute, which is funded by a joint award from the Medical Research Council (MRC) and the Wellcome Trust. We thank the staff of the MRC Cognition and Brain Sciences Unit MRI facility for scanning assistance.

This is an Open Access article distributed under the terms of the Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution and reproduction in any medium provided that the original work is properly attributed.

References

- Andersson JL, Hutton C, Ashburner J, Turner R, Friston K. Modeling geometric deformations in EPI time series. Neuroimage. 2001;13:903–919. doi: 10.1006/nimg.2001.0746.

- Ashburner J. A fast diffeomorphic image registration algorithm. Neuroimage. 2007;38:95–113. doi: 10.1016/j.neuroimage.2007.07.007.

- Berryhill ME, Phuong L, Picasso L, Cabeza R, Olson IR. Parietal lobe and episodic memory: bilateral damage causes impaired free recall of autobiographical memory. J Neurosci. 2007;27:14415–14423. doi: 10.1523/JNEUROSCI.4163-07.2007.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055.

- Bonner MF, Peelle JE, Cook PA, Grossman M. Heteromodal conceptual processing in the angular gyrus. Neuroimage. 2013;71:175–186. doi: 10.1016/j.neuroimage.2013.01.006.

- Bonnici HM, Chadwick MJ, Lutti A, Hassabis D, Weiskopf N, Maguire EA. Detecting representations of recent and remote autobiographical memories in vmPFC and hippocampus. J Neurosci. 2012a;32:16982–16991. doi: 10.1523/JNEUROSCI.2475-12.2012.

- Bonnici HM, Kumaran D, Chadwick MJ, Weiskopf N, Hassabis D, Maguire EA. Decoding representations of scenes in the medial temporal lobes. Hippocampus. 2012b;22:1143–1153. doi: 10.1002/hipo.20960.

- Buchsbaum BR, Lemire-Rodger S, Fang C, Abdi H. The neural basis of vivid memory is patterned on perception. J Cogn Neurosci. 2012;24:1867–1883. doi: 10.1162/jocn_a_00253.

- Burgess N, Maguire EA, O'Keefe J. The human hippocampus and spatial and episodic memory. Neuron. 2002;35:625–641. doi: 10.1016/S0896-6273(02)00830-9.

- Cabeza R, Ciaramelli E, Olson IR, Moscovitch M. The parietal cortex and episodic memory: an attentional account. Nat Rev Neurosci. 2008;9:613–625. doi: 10.1038/nrn2459.

- Chadwick MJ, Hassabis D, Weiskopf N, Maguire EA. Decoding individual episodic memory traces in the human hippocampus. Curr Biol. 2010;20:544–547. doi: 10.1016/j.cub.2010.01.053.

- Chadwick MJ, Bonnici HM, Maguire EA. Decoding information in the human hippocampus: a user's guide. Neuropsychologia. 2012;50:3107–3121. doi: 10.1016/j.neuropsychologia.2012.07.007.

- Ciaramelli E, Rosenbaum RS, Solcz S, Levine B, Moscovitch M. Mental space travel: damage to posterior parietal cortex prevents egocentric navigation and reexperiencing of remote spatial memories. J Exp Psychol Learn Mem Cogn. 2010;36:619–634. doi: 10.1037/a0019181.

- Damasio AR. The brain binds entities and events by multiregional activation from convergence zones. Neural Comput. 1989;1:123–132.

- Damasio H. Cerebral localization of the aphasias. In: Sarno MT, editor. Acquired aphasia. New York: Academic; 1981. pp. 27–50.

- Davachi L. Item, context and relational episodic encoding in humans. Curr Opin Neurobiol. 2006;16:693–700. doi: 10.1016/j.conb.2006.10.012.

- Duda RO, Hart PE, Stork DG. Pattern classification. New York: Wiley; 2001.

- Eichenbaum H. Time cells in the hippocampus: a new dimension for mapping memories. Nat Rev Neurosci. 2014;15:732–744. doi: 10.1038/nrn3827.

- Frackowiak RS, Friston KJ, Frith CD, Dolan RJ, Mazziotta JC. Human brain function. New York: Elsevier; 2004.

- Haynes JD, Rees G. Decoding mental states from brain activity in humans. Nat Rev Neurosci. 2006;7:523–534. doi: 10.1038/nrn1931.

- Horner AJ, Bisby JA, Bush D, Lin WJ, Burgess N. Evidence for holistic episodic recollection via hippocampal pattern completion. Nat Commun. 2015;6:7462. doi: 10.1038/ncomms8462.

- Hower KH, Wixted J, Berryhill ME, Olson IR. Impaired perception of mnemonic oldness, but not mnemonic newness, after parietal lobe damage. Neuropsychologia. 2014;56:409–417. doi: 10.1016/j.neuropsychologia.2014.02.014.

- Kuhl BA, Chun MM. Successful remembering elicits event-specific activity patterns in lateral parietal cortex. J Neurosci. 2014;34:8051–8060. doi: 10.1523/JNEUROSCI.4328-13.2014.

- Levy DA. Towards an understanding of parietal mnemonic processes: Some conceptual guideposts. Front Integr Neurosci. 2012;6:41. doi: 10.3389/fnint.2012.00041.

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage. 2003;19:1233–1239. doi: 10.1016/S1053-8119(03)00169-1.

- Moscovitch M, Cabeza R, Winocur G, Nadel L. Episodic memory and beyond: the hippocampus and neocortex in transformation. Annu Rev Psychol. 2016;67:105–134. doi: 10.1146/annurev-psych-113011-143733.

- Nelson SM, Cohen AL, Power JD, Wig GS, Miezin FM, Wheeler ME, Velanova K, Donaldson DI, Phillips JS, Schlaggar BL, Petersen SE. A parcellation scheme for human left lateral parietal cortex. Neuron. 2010;67:156–170. doi: 10.1016/j.neuron.2010.05.025.

- Nelson SM, McDermott KB, Wig GS, Schlaggar BL, Petersen SE. The critical roles of localization and physiology for understanding parietal contributions to memory retrieval. Neuroscientist. 2013;19:578–591. doi: 10.1177/1073858413492389.

- Noonan KA, Jefferies E, Visser M, Lambon Ralph MA. Going beyond inferior prefrontal involvement in semantic control: evidence for the additional contribution of dorsal angular gyrus and posterior middle temporal cortex. J Cogn Neurosci. 2013;25:1824–1850. doi: 10.1162/jocn_a_00442.

- Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn Sci. 2006;10:424–430. doi: 10.1016/j.tics.2006.07.005.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 2007;8:976–987. doi: 10.1038/nrn2277.

- Ryan L, Cox C, Hayes SM, Nadel L. Hippocampal activation during episodic and semantic memory retrieval: comparing category production and category cued recall. Neuropsychologia. 2008;46:2109–2121. doi: 10.1016/j.neuropsychologia.2008.02.030.

- Schirmer A, Fox PM, Grandjean D. On the spatial organization of sound processing in the human temporal lobe: a meta-analysis. Neuroimage. 2012;63:137–147. doi: 10.1016/j.neuroimage.2012.06.025.

- Seghier ML. The angular gyrus: multiple functions and multiple subdivisions. Neuroscientist. 2013;19:43–61. doi: 10.1177/1073858412440596.

- Shimamura AP. Episodic retrieval and the cortical binding of relational activity. Cogn Affect Behav Neurosci. 2011;11:277–291. doi: 10.3758/s13415-011-0031-4.

- Simanova I, Hagoort P, Oostenveld R, van Gerven MA. Modality-independent decoding of semantic information from the human brain. Cereb Cortex. 2014;24:426–434. doi: 10.1093/cercor/bhs324.

- Simons JS, Peers PV, Mazuz YS, Berryhill ME, Olson IR. Dissociation between memory accuracy and memory confidence following bilateral parietal lesions. Cereb Cortex. 2010;20:479–485. doi: 10.1093/cercor/bhp116.

- Staresina BP, Cooper E, Henson RN. Reversible information flow across the medial temporal lobe: the hippocampus links cortical modules during memory retrieval. J Neurosci. 2013;33:14184–14192. doi: 10.1523/JNEUROSCI.1987-13.2013.

- Vilberg KL, Rugg MD. Memory retrieval and the parietal cortex: a review of evidence from a dual-process perspective. Neuropsychologia. 2008;46:1787–1799. doi: 10.1016/j.neuropsychologia.2008.01.004.

- Wagner AD, Shannon BJ, Kahn I, Buckner RL. Parietal lobe contributions to episodic memory retrieval. Trends Cogn Sci. 2005;9:445–453. doi: 10.1016/j.tics.2005.07.001.

- Wheeler ME, Petersen SE, Buckner RL. Memory's echo: vivid remembering reactivates sensory-specific cortex. Proc Natl Acad Sci U S A. 2000;97:11125–11129. doi: 10.1073/pnas.97.20.11125.

- Yazar Y, Bergström ZM, Simons JS. Continuous theta burst stimulation of angular gyrus reduces subjective recollection. PLoS One. 2014;9:e110414. doi: 10.1371/journal.pone.0110414.

- Yee E, Chrysikou EG, Thompson-Schill SL. The cognitive neuroscience of semantic memory. In: Ochsner KN, Kosslyn S, editors. The Oxford handbook of cognitive neuroscience. Oxford: OUP; 2014.

- Zaehle T, Jordan K, Wüstenberg T, Baudewig J, Dechent P, Mast FW. The neural basis of the egocentric and allocentric spatial frame of reference. Brain Res. 2007;1137:92–103. doi: 10.1016/j.brainres.2006.12.044.