Abstract

Neuroimaging studies have identified three scene-selective regions in human cortex: parahippocampal place area (PPA), retrosplenial complex (RSC), and occipital place area (OPA). However, precisely what scene information each region represents is not clear, especially for the least studied, more posterior OPA. Here we hypothesized that OPA represents local elements of scenes within two independent, yet complementary scene descriptors: spatial boundary (i.e., the layout of external surfaces) and scene content (e.g., internal objects). If OPA processes the local elements of spatial boundary information, then it should respond to these local elements (e.g., walls) themselves, regardless of their spatial arrangement. Indeed, we found that OPA, but not PPA or RSC, responded similarly to images of intact rooms and these same rooms in which the surfaces were fractured and rearranged, disrupting the spatial boundary. Next, if OPA represents the local elements of scene content information, then it should respond more when more such local elements (e.g., furniture) are present. Indeed, we found that OPA, but not PPA or RSC, responded more to multiple than single pieces of furniture. Taken together, these findings reveal that OPA analyzes local scene elements, both in spatial boundary and scene content representation, while PPA and RSC represent global scene properties.

Keywords: OPA, TOS, PPA, RSC, scene perception, fMRI

1.1 Introduction

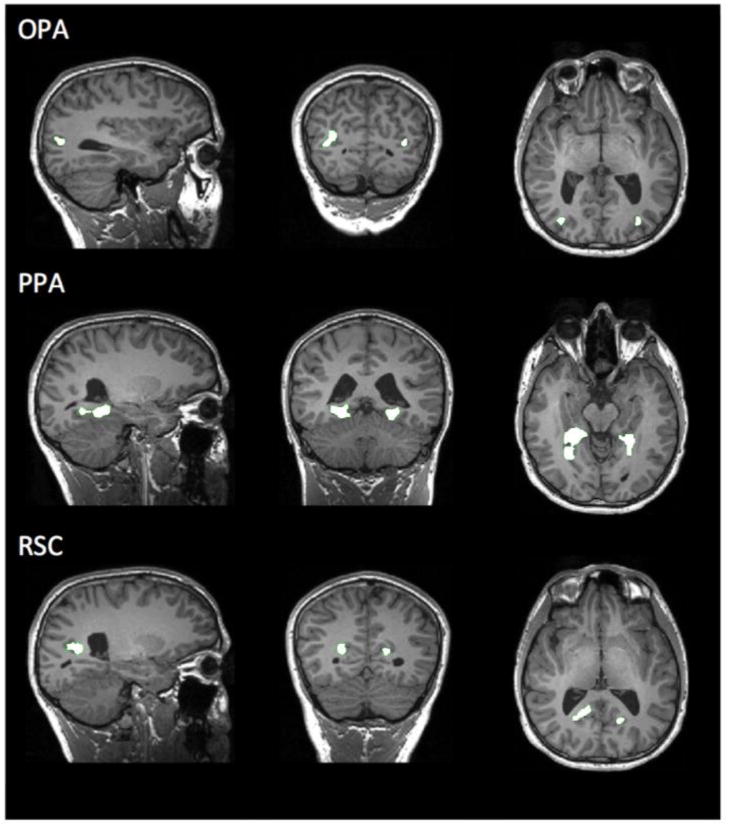

Functional magnetic resonance imaging (fMRI) studies have reliably identified three scene-selective regions in human cortex: the parahippocampal place area (PPA) (Epstein and Kanwisher, 1998), the retrosplenial complex (RSC) (Maguire, 2001), and the occipital place area (OPA) (Dilks et al., 2013), also known as transverse occipital sulcus (TOS) (Grill-Spector, 2003) (Figure 1). However, precisely what information each region extracts from scenes is far from clear—particularly for the least studied OPA.

Figure 1.

Scene-selective regions of interest (ROIs) in a sample participant. Occipital Place Area (OPA), Parahippocampal Place Area (PPA), and Retrosplenial Complex (RSC), labeled accordingly. Using ‘Localizer’ scans, these ROIs were selected as those regions responding significantly more to scenes than objects (p < 0.0001, uncorrected). Responses of these ROIs to the experimental conditions were then tested using an independent set of data (Experimental scans).

More is known about information processing in face- and body-selective cortical systems. In face processing, the more posterior occipital face area (OFA) responds strongly to face parts (i.e., eyes, nose, mouth) regardless of their spatial arrangement, whereas the more anterior fusiform face area (FFA) represents face parts and the typical spatial arrangement of these parts (e.g., two eyes above a nose above a mouth) (Yovel and Kanwisher, 2004; Pitcher et al., 2007; Liu et al., 2009). Similarly, among body-selective regions, the more posterior extrastriate body area (EBA) represents body parts, with the response to body parts rising gradually as more of the body is visible (e.g., a single finger versus a hand with five fingers). By contrast, the more anterior fusiform body area (FBA) is sensitive to the whole body, not the amount of body shown (Taylor et al., 2007). Here we ask whether the scene processing system exhibits a similar functional division of labor. In particular, we hypothesize that the more posterior OPA represents scenes at the level of local elements, while the more anterior PPA and RSC represent the global properties of scenes.

But what are the local elements of a scene? Initial clues can be found in behavioral and computational work suggesting that scenes are represented by two independent, yet complementary descriptors: i) spatial boundary, or the external shape, size, and scope of the space, and ii) scene content, or the internal features of the scene encompassing objects, textures, colors, and materials (Oliva and Torralba, 2001, 2002). Within spatial boundary representation, local scene elements may be the major surfaces and planes that together comprise the spatial boundary (i.e., walls, floors, and ceilings). Evidence for this possibility comes from the finding that PPA responds significantly more to images of intact, empty apartment rooms than to these same rooms in which the walls, floors, and ceilings were fractured and rearranged, such that they no longer defined a coherent space (Epstein and Kanwisher, 1998). This possibility also dovetails with one approach in robotic mapping that assumes elements of the environment consist of large, flat surfaces (e.g., ceiling and walls) (Thrun, 2002). Next, within scene content representation, local scene elements may be the individual objects, textures, colors, and materials that make up the internal content of a scene (Oliva and Torralba, 2001, 2002). For example, a piece of furniture might be considered a local scene element insofar as furniture is an object that is typically associated with particular places or contexts (e.g., a sofa is typically found in a living room) (Bar and Aminoff, 2003), and is different from other ‘objects’ because it is generally large and not portable (Mullally and Maguire, 2011; Konkle and Oliva, 2012; Troiani et al., 2014).

To test whether the more posterior OPA represents local scene elements within both spatial boundary and scene content representation, we examined responses in the OPA (as well as PPA and RSC) to images of 1) empty rooms; 2) these same rooms ‘fractured’ and rearranged such that the walls, floors, and ceilings no longer defined a coherent space; 3) single, nonfurniture objects; 4) single pieces of furniture; and 5) multiple pieces of furniture (Figure 2). Within spatial boundary representation, if the more posterior OPA processes scenes at the level of local elements, then it should not represent the coherent spatial arrangement of the elements, but rather the local elements themselves. As such, we predicted that OPA would respond similarly to the empty and the fractured rooms. By contrast, if the more anterior PPA and RSC encode global representations of the spatial boundary, then they should respond more to images of empty rooms that depict a coherent layout than to images of fractured and rearranged rooms in which the spatial boundary is disrupted. Within scene content representation, if OPA is sensitive to the local elements of scenes (i.e., furniture), then it should respond more when more such elements are presented. As such, we predicted that OPA would respond more to images of multiple pieces of furniture than to images of single pieces of furniture. By contrast, if PPA and RSC represent global properties of scene content, then their responses should be independent of the amount of content (i.e., furniture) presented.

Figure 2.

Example stimuli used in the Experimental scans. From top row to bottom row: 1) intact, empty apartment rooms (intact rooms); 2) indoor rooms whose walls, floors, and ceilings were fractured and rearranged such that they no longer defined a coherent space (fractured rooms); 3) single, non-furniture objects (single objects); 4) single pieces of furniture (single furniture); and 5) multiple pieces of furniture (multiple furniture).

1.2 Methods

1.2.1 Participants

Twenty-five participants (Age: 18-25; 12 from Emory University, 13 from MIT; 13 females, 12 males) were recruited for this experiment. Two participants were excluded from further analyses because of nonsignificant localizer results, and one for excessive motion during scanning, yielding a total of 22 participants reported here. All participants gave informed consent and had normal or corrected-to-normal vision.

1.2.2 Design

We used a region of interest (ROI) approach in which we localized category-selective regions (Localizer runs) and then used an independent set of runs to investigate their responses to a variety of stimulus categories (Experimental runs). For both Localizer and Experimental runs, participants performed a one-back task, responding every time the same image was presented twice in a row.

For the Localizer scans, ROIs were identified using a standard method described previously (Epstein and Kanwisher, 1998). Specifically, a blocked design was used in which participants viewed images of scenes, objects, faces, and scrambled objects. Each participant completed 2-3 localizer runs. Each run was 336 s long and consisted of 4 blocks per stimulus category. The order of blocks in each run was palindromic (e.g., faces, objects, scenes, scrambled objects, scrambled objects, scenes, objects, faces) and the order of blocks in the first half of the palindromic sequence was pseudorandomized across runs. Each block contained 20 images from the same category and lasted 16 s. Each image was presented for 300 ms, followed by a 500 ms interstimulus interval, and subtended 8 × 8 degrees of visual angle. We also included five 16 s fixation blocks: one at the beginning, three in the middle interleaved between each palindrome, and one at the end of each run.
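The localizer timing described above can be checked with a short sketch. This is an illustration only, not the presentation code used in the study. The exact placement of the three middle fixation blocks is an assumption (here: one in the middle of each palindrome and one between the two palindromes), and the function name `localizer_run` is hypothetical.

```python
import random

BLOCK_S = 16                  # block duration in seconds
IMAGES_PER_BLOCK = 20
IMAGE_MS, ISI_MS = 300, 500   # image duration and interstimulus interval
CATEGORIES = ["faces", "objects", "scenes", "scrambled objects"]


def localizer_run(seed=0):
    """Return one run's block order (fixation placement is an assumption)."""
    rng = random.Random(seed)
    order = ["fixation"]
    for _ in range(2):  # two palindromes give 4 blocks per category
        half = CATEGORIES[:]
        rng.shuffle(half)  # pseudorandomize the first half of the palindrome
        order += half + ["fixation"] + half[::-1] + ["fixation"]
    return order


run = localizer_run()
block_ms = IMAGES_PER_BLOCK * (IMAGE_MS + ISI_MS)  # 20 x 800 ms
run_duration_s = len(run) * BLOCK_S                # 21 blocks x 16 s
print(run)
print(block_ms / 1000, "s per block;", run_duration_s, "s per run")
```

Under these assumptions, 16 stimulus blocks plus 5 fixation blocks give 21 blocks of 16 s each, which matches the stated run length of 336 s.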

For the Experimental scans, participants viewed runs during which 16 s blocks (20 stimuli per block) of either 8 (at Emory) or 12 (at MIT) categories of images were presented. Five of the categories were common between Emory and MIT, and tested the central hypotheses described here; the additional categories tested unrelated hypotheses. Each image was presented for 300 ms, followed by a 500 ms interstimulus interval, and subtended 8 × 8 degrees of visual angle. At Emory, participants viewed 8 runs, and each run contained 21 blocks (2 blocks of each condition, plus 5 blocks of fixation), totaling 336 s. At MIT, participants viewed 12 runs, and each run contained 16 blocks (one block of each of the 12 different stimulus categories, and 4 blocks of fixation), totaling 256 s.

For the five categories of interest, we used the same stimuli presented in Epstein and Kanwisher (1998; indicated with an asterisk), as well as one other category (Figure 2): (1*) photographs of apartment rooms with all furniture and objects removed (intact rooms); (2*) the same rooms but fractured into their component surfaces and rearranged such that they no longer defined a coherent space (fractured rooms); (3*) single non-furniture objects (single objects); (4) single items of furniture (single furniture); and (5*) arrays of all of the objects from one of the furnished rooms cut out from the original background and rearranged in a random configuration (multiple furniture).

1.2.3 fMRI scanning

All scanning was performed on a 3T Siemens Trio scanner. At Emory, scans were conducted in the Facility for Education and Research in Neuroscience. Functional images were acquired using a 32-channel head matrix coil and a gradient-echo single-shot echoplanar imaging sequence (28 slices, TR = 2 s, TE = 30 ms, voxel size = 1.5 × 1.5 × 2.5 mm, and a 0.25 interslice gap). At MIT, scans were conducted at the Athinoula A. Martinos Imaging Center at the McGovern Institute for Brain Research. Functional images were acquired using a 32-channel head matrix coil and a gradient-echo single-shot echoplanar imaging sequence (28 slices, TR = 2 s, TE = 30 ms, voxel size = 1.4 × 1.4 × 2.0 mm, and a 0.2 interslice gap). For all scans, slices were oriented approximately between perpendicular and parallel to the calcarine sulcus, covering the occipital and temporal lobes. Whole-brain, high-resolution anatomical images were also acquired for each participant for purposes of registration and anatomical localization (see Data analysis).

1.2.4 Data analysis

fMRI data analysis was conducted using the FSL software (FMRIB's Software Library; www.fmrib.ox.ac.uk/fsl) (Smith et al., 2004) and the FreeSurfer Functional Analysis Stream (FS-FAST; http://surfer.nmr.mgh.harvard.edu/). ROI analysis was conducted using the FS-FAST ROI toolbox. Before statistical analysis, images were motion corrected (Cox and Jesmanowicz, 1999). Data were then detrended and fit using a double gamma function. Localizer data, but not experimental data, were spatially smoothed (5-mm kernel). After preprocessing, scene-selective regions OPA, PPA, and RSC were bilaterally defined in each participant (using data from the independent localizer scans) as those regions that responded more strongly to scenes than objects (p < 10⁻⁴, uncorrected), as described previously (Epstein and Kanwisher, 1998) (Figure 1). OPA, PPA, and RSC were identified in at least one hemisphere in all participants. We further defined two additional ROIs as control regions (again, using data from the localizer scans). First, we functionally defined a bilateral foveal confluence (FC) ROI, the region of cortex responding to foveal stimulation (Dougherty et al., 2003), as the region that responded more strongly to scrambled objects than to intact objects (p < 10⁻⁴, uncorrected), as described previously (MacEvoy and Yang, 2012; Linsley and MacEvoy, 2014; Persichetti et al., 2015). FC was identified in at least one hemisphere in all participants. Second, we functionally defined the object-selective lateral occipital complex (LOC) as the region responding more strongly to objects than scrambled objects (p < 10⁻⁴, uncorrected), as described previously (Grill-Spector et al., 1998). LOC was identified in at least one hemisphere in all participants. Within each ROI, we then calculated the magnitude of response (percent signal change, or PSC) to the five categories of interest, using the data from the experimental runs.
A 2 (hemisphere: Left, Right) × 5 (condition: intact rooms, fractured rooms, single objects, single furniture, multiple furniture) repeated-measures ANOVA for each scene ROI was conducted. We found no significant hemisphere × condition interaction in OPA (p = 0.62), PPA (p = 0.08), or RSC (p = 0.43). Thus, both hemispheres were collapsed for further analyses.
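To make the response measure concrete, the sketch below computes percent signal change for one ROI and then collapses across hemispheres. It is a simplified illustration: the formula (condition mean relative to a fixation baseline), the use of a simple average to collapse hemispheres, the function names, and all numbers are assumptions, and the FS-FAST implementation may differ in detail.

```python
from statistics import mean


def percent_signal_change(condition_signal, baseline_signal):
    """PSC = 100 * (condition mean - baseline mean) / baseline mean."""
    base = mean(baseline_signal)
    if base == 0:
        raise ValueError("baseline mean must be non-zero")
    return 100.0 * (mean(condition_signal) - base) / base


def collapse_hemispheres(left_psc, right_psc):
    """Average both hemispheres; use the available one if an ROI is unilateral."""
    values = [v for v in (left_psc, right_psc) if v is not None]
    if not values:
        raise ValueError("ROI not defined in either hemisphere")
    return mean(values)


# Made-up raw signal values for one ROI (arbitrary scanner units).
fixation = [100.0, 100.0, 100.0, 100.0]
intact_rooms = [101.0, 102.0, 101.0, 102.0]

left = percent_signal_change(intact_rooms, fixation)
print(left)                              # PSC for the left-hemisphere ROI
print(collapse_hemispheres(left, None))  # ROI found in one hemisphere only
print(collapse_hemispheres(1.0, 2.0))    # ROI found in both hemispheres
```

The fallback to a single hemisphere reflects the fact that each ROI was identified in at least one, but not necessarily both, hemispheres in every participant.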

In addition to the ROI analysis described above, we also performed a group-level analysis to explore responses to the experimental conditions across the entire slice prescription. This analysis was conducted using the same parameters as were used in the ROI analysis, with the exceptions that the experimental data were spatially smoothed with a 5-mm kernel, and registered to standard stereotaxic (MNI) space. For each contrast, we performed a nonparametric one-sample t-test using the FSL randomise program (Winkler et al., 2014) with default variance smoothing of 5 mm, which tests the t value at each voxel against a null distribution generated from 5,000 random permutations of group membership. The resultant statistical maps were then corrected for multiple comparisons (p < 0.05, FWE) using threshold-free cluster enhancement (TFCE) (Smith and Nichols, 2009).
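The logic of a nonparametric one-sample test can be illustrated at a single voxel. One-sample permutation tests are commonly implemented by randomly flipping the sign of each participant's contrast value, and the sketch below follows that approach. It is deliberately simplified: it omits variance smoothing, TFCE, and family-wise error correction, and the function names and contrast values are made up for illustration.

```python
import math
import random


def one_sample_t(values):
    """One-sample t statistic against zero."""
    n = len(values)
    m = sum(values) / n
    var = sum((v - m) ** 2 for v in values) / (n - 1)
    if var == 0:
        return math.copysign(math.inf, m)
    return m / math.sqrt(var / n)


def sign_flip_test(values, n_perm=5000, seed=0):
    """One-sided p value from a sign-flipping null distribution."""
    rng = random.Random(seed)
    observed = one_sample_t(values)
    exceed = 0
    for _ in range(n_perm):
        flipped = [v if rng.random() < 0.5 else -v for v in values]
        if one_sample_t(flipped) >= observed:
            exceed += 1
    # Count the observed statistic itself so the p value is never zero.
    return observed, (exceed + 1) / (n_perm + 1)


# Made-up contrast values at one voxel for 22 hypothetical participants.
contrast = [0.21, 0.35, 0.10, 0.42, 0.18, 0.27, 0.31, 0.05, 0.38, 0.22, 0.16,
            0.29, 0.33, 0.12, 0.25, 0.19, 0.40, 0.08, 0.36, 0.24, 0.14, 0.30]
t_obs, p_value = sign_flip_test(contrast)
print(t_obs, p_value)
```

In a whole-brain analysis the same idea is applied at every voxel, with the correction for multiple comparisons derived from the permutation distribution rather than from a parametric assumption.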

1.3 Results

1.3.1 Local elements of spatial boundary

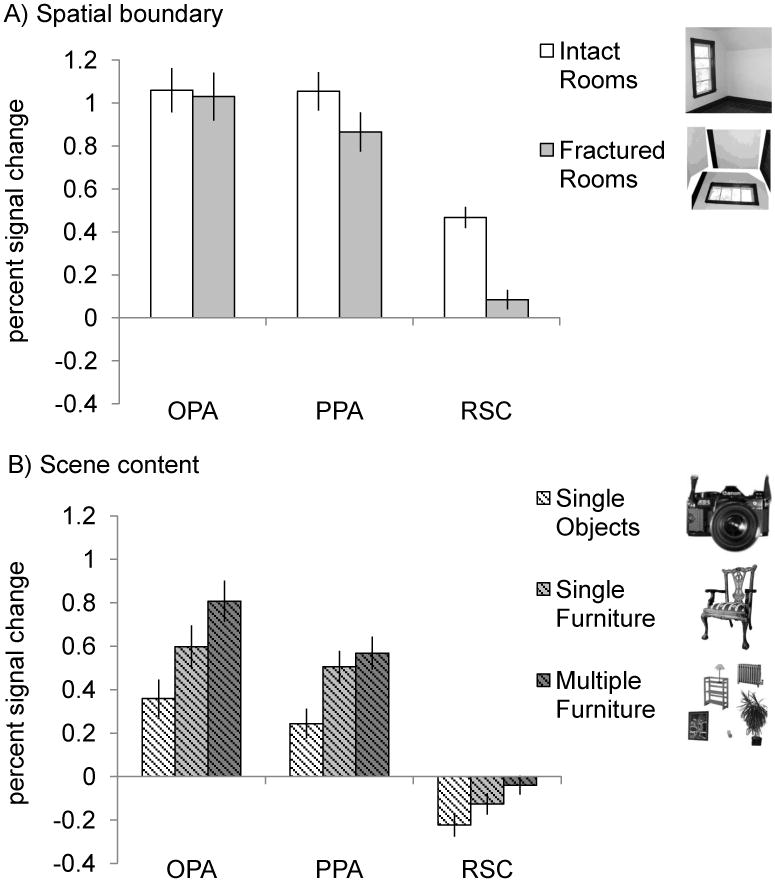

If OPA represents local scene elements (i.e., walls, floors, and ceilings) within spatial boundary representation, then it should respond to those elements regardless of how they are arranged relative to one another. To test this prediction, we first compared responses to the intact and fractured rooms in each scene-selective region individually (Figure 3A). For OPA, a paired t-test revealed no significant difference between intact and fractured rooms (t(21) = 0.89, p = 0.39), consistent with the local scene elements hypothesis for OPA. For PPA, a paired t-test revealed a significantly greater response to intact than fractured rooms (t(21) = 5.68, p < 0.001), replicating previous findings (Epstein & Kanwisher, 1998). Finally, for RSC, a paired t-test revealed a significantly greater response to intact rooms than fractured rooms (t(21) = 8.81, p < 0.001), consistent with previous reports of spatial boundary representation in RSC (Harel et al., 2012).
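The within-region comparisons above are paired tests across participants, which is why the statistics are reported with 21 degrees of freedom for 22 participants. A minimal sketch of the computation follows; the function name and the PSC values are made up for illustration and are not data from the study.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples."""
    if len(a) != len(b):
        raise ValueError("samples must have the same length")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Made-up PSC values for four hypothetical participants in one ROI.
intact = [1.0, 1.2, 0.9, 1.1]
fractured = [0.8, 0.9, 0.8, 0.7]
t, df = paired_t(intact, fractured)
print(round(t, 2), df)  # t is about 3.87 with 3 degrees of freedom
```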

Figure 3.

Average percent signal change in OPA, PPA, and RSC to the five conditions. Error bars indicate the standard error of the mean. (A) Spatial boundary representation in OPA, PPA, and RSC. A 3 (ROI: OPA, PPA, RSC) × 2 (room type: intact, fractured) repeated measures ANOVA revealed a significant interaction (F(2, 42) = 33.66, p < 0.001), with OPA responding significantly more to fractured rooms relative to intact rooms, compared with both PPA and RSC (interaction contrasts, both p values < 0.001). (B) Scene content representation in OPA, PPA, and RSC. A 3 (ROI: OPA, PPA, RSC) × 3 (condition: single objects, single furniture, multiple furniture) repeated-measures ANOVA revealed a significant interaction (F(4, 84) = 14.579, p < 0.001). In particular, both OPA and PPA responded more to single furniture than to single objects, relative to RSC (interaction contrasts, both p values < 0.005), and OPA responded more to multiple furniture than to single furniture, relative to both PPA and RSC (interaction contrasts, both p values < 0.05).

The analyses above suggest that the three scene-selective regions represent spatial boundary information differently, so next we directly compared their response profiles. A 3 (ROI: OPA, PPA, RSC) × 2 (room type: intact, fractured) repeated measures ANOVA revealed a significant interaction (F(2, 42) = 33.66, p < 0.001), with OPA responding significantly more than both PPA and RSC to fractured rooms relative to intact rooms (interaction contrasts, both p values < 0.001). This result shows that the scene-selective regions represent spatial boundary information differently, with OPA representing the local elements (e.g., walls, floors, ceilings) that compose the spatial boundary, and PPA and RSC representing the global spatial arrangement of these elements relative to one another.
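An interaction contrast between two ROIs can be understood as a test on a difference of differences: for each participant, the fractured-minus-intact response in one ROI is compared with the same difference in the other ROI. The sketch below illustrates this reading under the assumption that the contrast is evaluated as a one-sample t-test on those per-participant scores; the function name and all values are hypothetical.

```python
import math
from statistics import mean, stdev


def interaction_contrast(a_intact, a_fractured, b_intact, b_fractured):
    """t statistic for (fractured - intact in ROI A) minus the same in ROI B."""
    scores = [
        (af - ai) - (bf - bi)
        for ai, af, bi, bf in zip(a_intact, a_fractured, b_intact, b_fractured)
    ]
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t, n - 1


# Made-up PSC values for four hypothetical participants.
opa_intact, opa_fractured = [1.0, 1.1, 0.9, 1.2], [1.0, 1.0, 1.0, 1.1]
ppa_intact, ppa_fractured = [1.5, 1.6, 1.4, 1.7], [1.0, 1.2, 0.9, 1.1]

t, df = interaction_contrast(opa_intact, opa_fractured, ppa_intact, ppa_fractured)
print(t, df)  # positive t: ROI A responds relatively more to fractured rooms
```

A positive value here does not require ROI A to prefer fractured rooms in absolute terms; it only requires a smaller drop from intact to fractured rooms than in ROI B.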

1.3.2 Local elements of scene content

Next, if OPA represents local scene elements (i.e., furniture) within scene content representation, then it should be sensitive to the number of such elements in a scene. To test this prediction, we first examined responses to images of single objects, single furniture, and multiple furniture in each scene-selective region individually (Figure 3B). For OPA, a three-level repeated-measures ANOVA revealed a significant main effect (F(2, 42) = 75.80, p < 0.001), with OPA responding significantly more to both single and multiple furniture than single objects – suggesting that furniture may indeed be considered a local element of a scene – and, as predicted, significantly more to multiple furniture than single furniture (Bonferroni corrected post hoc comparisons, all p's < 0.001).
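The degrees of freedom reported for the three-level analysis follow from the repeated-measures design: with k = 3 conditions and n = 22 participants, the effect has k − 1 = 2 and the error term has (k − 1)(n − 1) = 42 degrees of freedom. The sketch below shows a one-way repeated-measures ANOVA under these definitions; the function name and the example values are made up, and no sphericity correction is applied.

```python
from statistics import mean


def rm_anova_oneway(data):
    """data[s][c] is the response of subject s in condition c.

    Returns (F, df_effect, df_error) for the main effect of condition.
    """
    n, k = len(data), len(data[0])
    grand = mean(v for row in data for v in row)
    cond_means = [mean(row[c] for row in data) for c in range(k)]
    subj_means = [mean(row) for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_error = ss_total - ss_cond - ss_subj  # subject-by-condition residual
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_effect) / (ss_error / df_error)
    return f, df_effect, df_error


# Made-up PSC values: rows are participants, columns are
# single objects, single furniture, multiple furniture.
example = [
    [0.2, 0.6, 1.0],
    [0.3, 0.8, 1.1],
    [0.1, 0.5, 1.2],
    [0.4, 0.7, 0.9],
]
f, df1, df2 = rm_anova_oneway(example)
print(f, df1, df2)
```

Removing the between-subject sum of squares from the error term is what distinguishes this analysis from a between-subjects one-way ANOVA on the same numbers.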

But might OPA be sensitive to the amount of any object information more generally, rather than the amount of scene content information in particular? To address this question, in ten of our participants, we included an additional condition – images of multiple non-furniture objects (multiple objects). Importantly, the number and position of objects in the multiple object images was matched with the number and position of pieces of furniture in the multiple furniture images. A paired t-test revealed a significantly greater response to multiple furniture than multiple objects (t(9) = 4.34, p < 0.01) in OPA, suggesting that OPA does not simply represent the number of any sort of objects, but rather scene-related objects in particular. This finding dovetails with a recent study that also found a significantly greater response to images of multiple furniture than multiple objects in OPA (referred to as TOS in that study) (Bettencourt and Xu, 2013).

For PPA, a three-level repeated-measures ANOVA revealed a significant main effect (F(2, 42) = 52.93, p < 0.001), with PPA responding significantly more to both multiple and single furniture than single objects (Bonferroni corrected post hoc comparisons, both p's < 0.001) – consistent with previous findings (Bar and Aminoff, 2003; Mullally and Maguire, 2011; Harel et al., 2012) – but similarly to multiple and single furniture (Bonferroni corrected post hoc comparison, p = 0.19). Finally, RSC did not respond above baseline to any object or furniture condition, consistent with previous findings that RSC is not sensitive to scene content (Harel et al., 2012).

The above analyses suggest that the three scene-selective regions encode scene content information differently, so next we directly tested this suggestion. A 3 (ROI: OPA, PPA, RSC) × 3 (condition: single objects, single furniture, multiple furniture) repeated measures ANOVA revealed a significant interaction of ROI and condition (F(4, 84) = 14.58, p < 0.001), with both OPA and PPA responding significantly more to single furniture than single objects, relative to RSC, and critically with OPA responding more to multiple furniture than to single furniture relative to both PPA and RSC (interaction contrasts, all p values < 0.01). These results suggest that the three scene-selective regions represent scene content information differently, with OPA more sensitive than both PPA and RSC to the amount of content.

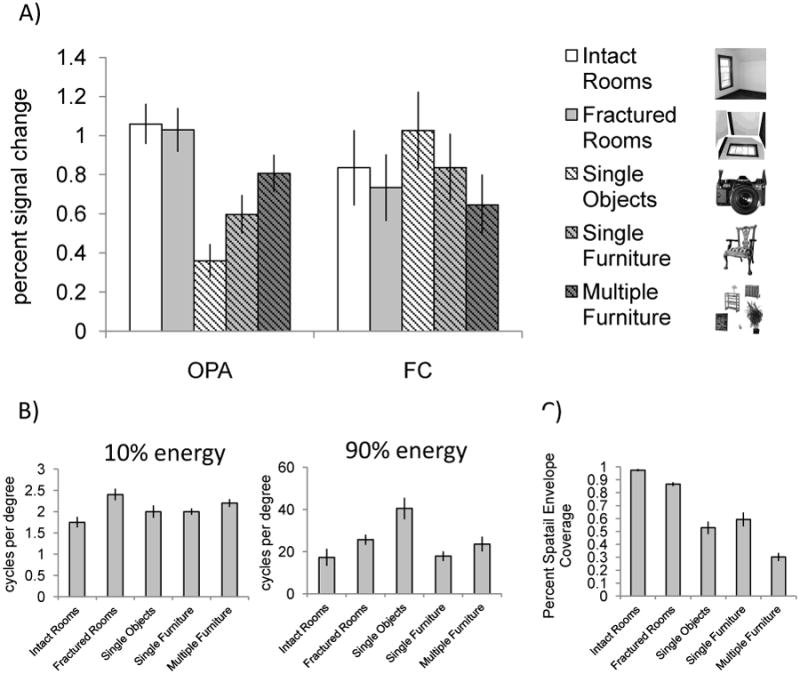

1.3.3 Local scene element representation in OPA does not reflect low-level visual information representation

But might the more posterior OPA, adjacent to early retinotopic regions, be responding to low-level visual information in our stimuli, like an early retinotopic region? We addressed this concern in two ways. First, given studies showing increased sensitivity to high versus low spatial frequency information in scene-selective cortex (Rajimehr et al., 2011; Kauffmann et al., 2015), we performed a power spectrum analysis on our stimuli to explore differences between the five conditions across the range of spatial frequencies (Figure 4B) (Park et al., 2014). At low spectral energy levels (10% power), a one-way ANOVA revealed significant differences between the five conditions (F(4,84) = 4.39, p < 0.005). Post hoc analyses indicated that this effect was driven by the difference in low spatial frequencies between the intact and fractured rooms (Tukey HSD, p = 0.001). No other conditions significantly differed from one another (all p values > 0.05). At high spectral energy levels (90% power), a one-way ANOVA also revealed significant differences between the five conditions (F(4,84) = 6.84, p < 0.001). Post hoc analyses indicated that this effect was driven by the difference in high spatial frequency between images of single objects and all other conditions (Tukey HSD, all p values < 0.05). No other conditions significantly differed from one another (all p values > 0.45). While this analysis indicates that our stimuli were not precisely matched for spatial frequency information, crucially, neither of these patterns of results can explain the pattern observed in OPA (or PPA or RSC), ruling out the possibility that differential sensitivity to spatial frequency information can explain our findings. Second, we compared responses in OPA to those in FC, an early retinotopic region sensitive to such low-level differences (Figure 4A).
A 2 (ROI: OPA, FC) × 5 (condition: intact rooms, fractured rooms, single objects, single furniture, multiple furniture) repeated measures ANOVA revealed a significant interaction of ROI and condition (F(4,84) = 62.03, p < 0.001), indicating that the pattern of responses in OPA was not as expected for an early retinotopic region. In particular, whereas OPA responded as predicted for a region sensitive to local elements of scenes, FC responded as expected for a low-level visual region sensitive to foveal information in two ways. First, FC should respond more to conditions with more information presented at the fovea compared to those conditions with less information. To quantify the amount of visual information presented at the fovea in each condition, we calculated the percentage of non-white pixels in each image (in other words, how much of the image's spatial envelope was covered) (Figure 4C). A one-way ANOVA revealed significant differences in spatial envelope coverage across the five conditions (F(4,95) = 56.78, p < 0.001), with both single object and single furniture conditions covering significantly more of the spatial envelope than the multiple furniture condition (Tukey's HSD, both p's < 0.001). Not surprisingly then, FC responded more to single objects and single furniture than it did to multiple furniture, consistent with the differing amounts of information contained in each image across the categories. Second, FC should respond more to conditions with more information in the higher spatial frequency range, given that foveal cells are tuned to higher spatial frequencies than parafoveal cells (De Valois et al., 1982). Analysis of high spatial frequency information across our stimuli revealed that the single object condition had more high spatial frequency information than the single furniture or the multiple furniture conditions (Figure 4B). 
Again, not surprisingly then, FC responded more to the non-furniture objects than either furniture condition, consistent with differences in higher spatial frequency information across the conditions. Taken together, the above analyses demonstrate that OPA is indeed scene selective, rather than retinotopic.
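The spatial envelope coverage measure described above, the percentage of non-white pixels in each image, can be sketched as follows. The representation of an image as rows of RGB tuples, the exact-match definition of "white", and the function name are assumptions made for illustration; the tolerance used for near-white pixels in the original analysis is not stated.

```python
WHITE = (255, 255, 255)  # assumed background color


def coverage_percent(image, background=WHITE):
    """Percentage of pixels that differ from the background color.

    `image` is a list of rows, each row a list of (R, G, B) tuples.
    """
    total = sum(len(row) for row in image)
    if total == 0:
        raise ValueError("empty image")
    covered = sum(1 for row in image for px in row if px != background)
    return 100.0 * covered / total


# Toy 2 x 2 image with a single non-white pixel.
toy = [
    [WHITE, (120, 80, 40)],
    [WHITE, WHITE],
]
print(coverage_percent(toy))  # 25.0
```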

Figure 4.

(A) Average percent signal change in OPA and FC to the five conditions. Error bars indicate the standard error of the mean. A 2 (ROI: OPA, FC) × 5 (condition: intact rooms, fractured rooms, single objects, single furniture, multiple furniture) repeated measures ANOVA revealed a significant interaction (F(4,84) = 62.03, p < 0.001), indicating that the pattern of responses in OPA was not as expected for a retinotopic region. (B) Distribution of low (10% energy) and high (90% energy) spatial frequency information across the five conditions. (C) Average percent spatial envelope coverage in each condition. Importantly, while our stimuli were not precisely matched for spatial frequency content or spatial envelope coverage, none of these sources of low-level visual information predicts the pattern of responses observed in OPA.

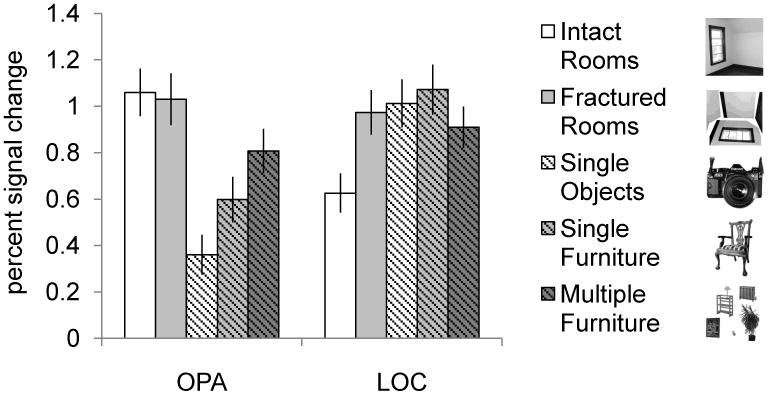

1.3.4 Local scene elements representation in OPA does not reflect general object representation

How does the observed sensitivity to furniture information in OPA compare to that in an object-selective region? To address this question, we compared the magnitude of responses in OPA with those in object-selective lateral occipital complex (LOC) across all five conditions (Figure 5). A 2 (ROI: OPA, LOC) × 5 (condition: intact rooms, fractured rooms, single objects, single furniture, multiple furniture) repeated-measures ANOVA revealed a significant interaction (F(4, 84) = 151.59, p < 0.001), suggesting that OPA and LOC are functionally distinct, and consistent with previous reports that OPA is selectively involved in scene, not object processing (Dilks et al., 2013). The differential information processing in these regions was particularly clear in the difference between OPA and LOC responses to single objects and single furniture, where OPA responses were significantly lower to single objects than to single furniture, compared with LOC (interaction contrast, p < 0.002). This finding indicates that whereas LOC shows a general sensitivity to object information (i.e., responding similarly to the single object and single furniture conditions), OPA responds selectively to scene-related objects in particular (i.e., furniture). Next, OPA responded significantly more to images of multiple furniture than single furniture, relative to LOC (interaction contrast, p < 0.001). The reduced response to multiple furniture versus single furniture in LOC is consistent with an fMRI study showing that LOC responds less to arrays of multiple objects than single objects (Nasr et al., 2015), as well as with neurophysiological studies suggesting that responses in monkey temporal cortex to multiple objects are generally equal to or less than the strongest response to one stimulus (Rousselet et al., 2004). Finally, LOC responded significantly more to fractured rooms than intact rooms, relative to OPA (interaction contrast, p < 0.001).
This finding suggests that LOC treats the major surfaces as “objects” when they are fractured from the background that defines the scene, but not when they are properly configured as part of the spatial boundary. By contrast, OPA responds similarly to local scene elements regardless of their coherent spatial arrangement. Taken together, the above analyses reveal that local scene element representation in OPA is distinct from general object representation in LOC.

Figure 5.

Average percent signal change in OPA and object-selective LOC for all five conditions. Error bars depict the standard error of the mean. A 2 (ROI: OPA, LOC) × 5 (Condition: Intact rooms, Fractured Rooms, Single Objects, Single Furniture, Multiple Furniture) repeated-measures ANOVA revealed a significant interaction (F(4, 84) = 151.59, p < 0.001), indicating that the pattern of activity in LOC across these conditions was indeed distinct from that in OPA.

1.3.5 Do other regions beyond OPA represent local elements of scenes?

To investigate whether cortical regions beyond the functionally defined OPA might represent local elements of scenes, we performed a group-level analysis exploring responses to our experimental conditions across the entire slice prescription. This analysis focused on two contrasts testing the central claims of the local elements hypothesis: “intact vs. fractured rooms” and “multiple vs. single furniture”. If a region represents local scene elements, then it should respond similarly to intact and fractured rooms, and more to multiple than single furniture. We found three regions exhibiting this pattern of results, including i) a region in right lateral superior occipital lobe that overlapped with a group-defined right OPA (contrast = “intact rooms vs. single objects”), consistent with the ROI analysis; ii) a set of regions in the peripheral portion of the calcarine sulcus, which likely reflect low-level visual differences between the stimuli; and iii) a previously unreported region in superior parietal lobe (see Supplemental Figure 1, Supplemental Table 1). Intriguingly, this superior parietal region showed overlapping activation with a region responding more to “intact rooms vs. single objects”, suggesting that it may further be scene-selective. We did not observe increased responses to “intact vs. fractured rooms” within the group-defined PPA or RSC; however, this lack of findings in the group analysis may reflect anatomical variability in these regions across subjects (Fedorenko et al., 2010). Thus, the present group analysis is consistent with our ROI analysis showing that OPA represents local scene elements, and provides evidence for another region in superior parietal lobe that may likewise represent local elements of scenes. Importantly, our slice prescription primarily targeted the ventral visual pathway, leaving open the possibility that regions outside of this slice prescription may likewise represent local elements of scenes. 
Further, it is possible that other regions even within this slice prescription represent local scene elements, but are too anatomically variable to be detected in the present group analysis (Saxe et al., 2006; Fedorenko et al., 2010).

1.4 Discussion

Here we asked whether the more posterior OPA represents local elements of scenes, while the more anterior PPA and RSC represent global scene properties. We tested this hypothesis within two independent, yet complementary scene descriptors: spatial boundary information and scene content information. For spatial boundary representation, OPA responded similarly to images of intact rooms and these same rooms fractured and rearranged, such that they no longer defined a coherent space, suggesting that OPA is sensitive to the presence of these surfaces independent of their coherent spatial arrangement. By contrast, PPA and RSC responded significantly more to the empty than the fractured rooms, indicating that these regions encode global representations of spatial boundary. For scene content representation, OPA and PPA, but not RSC, responded more strongly to furniture than other objects. Only OPA, however, was sensitive to the amount of furniture, suggesting that OPA represents local elements of scene content individually, while PPA encodes global representations of scene content that are independent of the number of such elements that make it up. Importantly, the pattern of responses in OPA was distinct from that in an early visual region (FC), was not explained by low-level visual information (i.e., high or low spatial frequency information, spatial envelope coverage), and further was distinct from the pattern of responses in object selective cortex (LOC). These findings are consistent with the hypothesis that OPA analyzes scenes at the level of local elements—both in spatial boundary and scene content representation—while PPA and RSC represent global properties of scenes. 
This work may provide a missing piece of evidence for an overarching functional organization of information processing in ventral visual cortex, where more posterior regions (OPA, OFA, EBA) are sensitive to local elements, while more anterior regions (PPA, FFA, FBA) represent global configurations (Yovel and Kanwisher, 2004; Pitcher et al., 2007; Taylor et al., 2007; Liu et al., 2009). Note that this functional division of labor does not necessarily imply a particular hierarchy of processing (i.e., parts processed before wholes), but rather simply reflects a common division of labor across category-selective cortex in the ventral visual pathway.

Our findings showing that PPA represents both spatial boundary and scene content are consistent with many other studies (Epstein and Kanwisher, 1998; Janzen and van Turennout, 2004; Kravitz et al., 2011; MacEvoy and Epstein, 2011; Mullally and Maguire, 2011; Park et al., 2011; Harel et al., 2012; Bettencourt and Xu, 2013). Further, our finding that RSC responded to intact over fractured rooms, and not at all to objects or furniture, is consistent with previous reports of spatial boundary sensitivity, but not scene content sensitivity, in RSC (Maguire, 2001; Ino et al., 2002; Epstein et al., 2007; Park and Chun, 2009; Harel et al., 2012). Finally, while some recent studies have focused on retinotopic and low-level visual functions in these regions (Rajimehr et al., 2011; Nasr et al., 2014; Watson et al., 2014; Kauffmann et al., 2015; Silson et al., 2015; Watson et al., 2016), our findings suggest that scene-selective cortex represents properties of scenes independent of such lower-level visual representations—even in the case of OPA, which lies immediately adjacent to the retinotopically defined area V3A (Grill-Spector, 2003; Nasr et al., 2011).

In contrast to PPA and RSC, little is known about information processing in OPA. For example, this is the first study to our knowledge to explore spatial boundary processing in OPA. One other study has explored scene content processing in OPA, and found that OPA was sensitive to multiple properties of objects presented in isolation, including physical size, fixedness, “placeness”, and the degree to which the object is “space defining” (Troiani et al., 2014), consistent with our finding that OPA responds more to furniture than non-furniture objects. Note that the increased response to furniture in OPA does not necessarily indicate that OPA represents the object category of “furniture” per se, nor the precise identity of the local elements (e.g., a “chair”); rather, OPA may be sensitive to multiple mid-level object properties commonly captured by the category of furniture (e.g., furniture tends to be larger and more fixed compared with other non-furniture objects). Finally, while the present study tested the local elements hypothesis in the context of indoor scenes only, this hypothesis is readily extended to outdoor scenes as well, where OPA may likewise represent major surfaces that make up the spatial boundary (e.g., the ground plane, the side of a building) and large, fixed objects that make up the scene content (e.g., a parked car, a bench).

Beyond spatial boundary and content information, two recent studies have reported that OPA encodes ‘sense’ (left/right) (Dilks et al., 2011) and egocentric distance information (Persichetti and Dilks, under revision), suggesting a role for OPA in navigation. At first glance, our finding that OPA is relatively insensitive to spatial boundary appears inconsistent with the role of OPA in navigation, insofar as fractured rooms do not imply a navigable space. However, we propose that while OPA may not represent allocentric spatial relationships between local components of scenes, such as how floors are arranged relative to walls, it may nevertheless represent egocentric spatial information about local scene elements, such as the distance and direction of boundaries (e.g., walls) and obstacles (e.g., furniture) relative to the viewer. Thus, our hypothesis that OPA represents the local elements of scenes is compatible with the proposed role of OPA in navigation, and may point to a role for OPA in locally guided navigation and obstacle avoidance.

In conclusion, we found differential representation of spatial boundary and scene content information across scene-selective cortex. Unlike PPA and RSC, OPA does not represent the spatial boundary of a scene per se, but rather responds to the surfaces that make up that spatial boundary regardless of their arrangement. Further, OPA represents both scene content and the amount of such content; this sensitivity to amount was not found in PPA or RSC, suggesting that OPA encodes local, individual elements of scene content. Together, these findings support the hypothesis that OPA represents the local elements of scenes, while PPA and RSC represent global scene properties.

Supplementary Material

Acknowledgments

We would like to thank the Facility for Education and Research in Neuroscience (FERN) Imaging Center in the Department of Psychology, Emory University, Atlanta, GA, as well as the Athinoula A. Martinos Imaging Center at the McGovern Institute for Brain Research, MIT, Cambridge, MA. We would also like to thank Samuel Weiller and Andrew Persichetti for technical support and insightful comments. The work was supported by Emory College, Emory University (DD), National Institute of Child Health and Human Development grant T32HD071845 (FK), and National Institutes of Health grant EY013455 (NK). Jonas Kubilius currently is a research assistant of the Research Foundation—Flanders (FWO).

Footnotes

The authors declare no competing financial interests.


References

- Bar M, Aminoff E. Cortical analysis of visual context. Neuron. 2003;38:347–358. doi: 10.1016/s0896-6273(03)00167-3.

- Bettencourt KC, Xu YD. The Role of Transverse Occipital Sulcus in Scene Perception and Its Relationship to Object Individuation in Inferior Intraparietal Sulcus. J Cogn Neurosci. 2013;25:1711–1722. doi: 10.1162/jocn_a_00422.

- Cox RW, Jesmanowicz A. Real-time 3D image registration for functional MRI. Magn Reson Med. 1999;42:1014–1018. doi: 10.1002/(sici)1522-2594(199912)42:6<1014::aid-mrm4>3.0.co;2-f.

- De Valois RL, Albrecht DG, Thorell LG. Spatial frequency selectivity of cells in macaque visual cortex. Vision research. 1982;22:545–559. doi: 10.1016/0042-6989(82)90113-4.

- Dilks DD, Julian JB, Paunov AM, Kanwisher N. The occipital place area is causally and selectively involved in scene perception. J Neurosci. 2013;33:1331–1336a. doi: 10.1523/JNEUROSCI.4081-12.2013.

- Dilks DD, Julian JB, Kubilius J, Spelke ES, Kanwisher N. Mirror-image sensitivity and invariance in object and scene processing pathways. J Neurosci. 2011;31:11305–11312. doi: 10.1523/JNEUROSCI.1935-11.2011.

- Dougherty RF, Koch VM, Brewer AA, Fischer B, Modersitzki J, Wandell BA. Visual field representations and locations of visual areas V1/2/3 in human visual cortex. Journal of vision. 2003;3:586–598. doi: 10.1167/3.10.1.

- Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402.

- Epstein RA, Parker WE, Feiler AM. Where am I now? Distinct roles for parahippocampal and retrosplenial cortices in place recognition. J Neurosci. 2007;27:6141–6149. doi: 10.1523/JNEUROSCI.0799-07.2007.

- Fedorenko E, Hsieh PJ, Nieto-Castanon A, Whitfield-Gabrieli S, Kanwisher N. New method for fMRI investigations of language: defining ROIs functionally in individual subjects. Journal of neurophysiology. 2010;104:1177–1194. doi: 10.1152/jn.00032.2010.

- Grill-Spector K. The neural basis of object perception. Curr Opin Neurobiol. 2003;13:159–166. doi: 10.1016/s0959-4388(03)00040-0.

- Harel A, Kravitz DJ, Baker CI. Deconstructing visual scenes in cortex: gradients of object and spatial layout information. J Mol Neurosci. 2012;48:S50–S50. doi: 10.1093/cercor/bhs091.

- Ino T, Inoue Y, Kage M, Hirose S, Kimura T, Fukuyama H. Mental navigation in humans is processed in the anterior bank of the parieto-occipital sulcus. Neuroscience letters. 2002;322:182–186. doi: 10.1016/s0304-3940(02)00019-8.

- Janzen G, van Turennout M. Selective neural representation of objects relevant for navigation. Nature neuroscience. 2004;7:673–677. doi: 10.1038/nn1257.

- Kauffmann L, Ramanoel S, Guyader N, Chauvin A, Peyrin C. Spatial frequency processing in scene-selective cortical regions. NeuroImage. 2015;112:86–95. doi: 10.1016/j.neuroimage.2015.02.058.

- Konkle T, Oliva A. A Real-World Size Organization of Object Responses in Occipitotemporal Cortex. Neuron. 2012;74:1114–1124. doi: 10.1016/j.neuron.2012.04.036.

- Kravitz DJ, Peng CS, Baker CI. Real-world scene representations in high-level visual cortex: it's the spaces more than the places. J Neurosci. 2011;31:7322–7333. doi: 10.1523/JNEUROSCI.4588-10.2011.

- Linsley D, MacEvoy SP. Evidence for participation by object-selective visual cortex in scene category judgments. Journal of vision. 2014;14 doi: 10.1167/14.9.19.

- Liu J, Harris A, Kanwisher N. Perception of face parts and face configurations: an FMRI study. J Cogn Neurosci. 2009;22:203–211. doi: 10.1162/jocn.2009.21203.

- MacEvoy SP, Epstein RA. Constructing scenes from objects in human occipitotemporal cortex. Nature neuroscience. 2011;14:1323–1329. doi: 10.1038/nn.2903.

- MacEvoy SP, Yang Z. Joint neuronal tuning for object form and position in the human lateral occipital complex. NeuroImage. 2012;63:1901–1908. doi: 10.1016/j.neuroimage.2012.08.043.

- Maguire EA. The retrosplenial contribution to human navigation: a review of lesion and neuroimaging findings. Scand J Psychol. 2001;42:225–238. doi: 10.1111/1467-9450.00233.

- Mullally SL, Maguire EA. A new role for the parahippocampal cortex in representing space. J Neurosci. 2011;31:7441–7449. doi: 10.1523/JNEUROSCI.0267-11.2011.

- Nasr S, Echavarria CE, Tootell RBH. Thinking Outside the Box: Rectilinear Shapes Selectively Activate Scene-Selective Cortex. J Neurosci. 2014;34:6721–6735. doi: 10.1523/JNEUROSCI.4802-13.2014.

- Nasr S, Stemmann H, Vanduffel W, Tootell RB. Increased Visual Stimulation Systematically Decreases Activity in Lateral Intermediate Cortex. Cerebral cortex. 2015;25:4009–4028. doi: 10.1093/cercor/bhu290.

- Nasr S, Liu N, Devaney KJ, Yue X, Rajimehr R, Ungerleider LG, Tootell RB. Scene-selective cortical regions in human and nonhuman primates. J Neurosci. 2011;31:13771–13785. doi: 10.1523/JNEUROSCI.2792-11.2011.

- Oliva A, Torralba A. Modeling the shape of a scene: a holistic representation of the spatial envelope. International Journal of Computer Vision. 2001;42:145–175.

- Oliva A, Torralba A. Scene-centered description from spatial envelope properties. Lect Notes Comput Sc. 2002;2525:263–272.

- Park S, Chun MM. Different roles of the parahippocampal place area (PPA) and retrosplenial cortex (RSC) in panoramic scene perception. NeuroImage. 2009;47:1747–1756. doi: 10.1016/j.neuroimage.2009.04.058.

- Park S, Konkle T, Oliva A. Parametric Coding of the Size and Clutter of Natural Scenes in the Human Brain. Cerebral cortex. 2014 doi: 10.1093/cercor/bht418.

- Park S, Brady TF, Greene MR, Oliva A. Disentangling scene content from spatial boundary: complementary roles for the parahippocampal place area and lateral occipital complex in representing real-world scenes. J Neurosci. 2011;31:1333–1340. doi: 10.1523/JNEUROSCI.3885-10.2011.

- Persichetti AS, Aguirre GK, Thompson-Schill SL. Value Is in the Eye of the Beholder: Early Visual Cortex Codes Monetary Value of Objects during a Diverted Attention Task. J Cogn Neurosci. 2015;27:893–901. doi: 10.1162/jocn_a_00760.

- Pitcher D, Walsh V, Yovel G, Duchaine B. TMS evidence for the involvement of the right occipital face area in early face processing. Curr Biol. 2007;17:1568–1573. doi: 10.1016/j.cub.2007.07.063.

- Rajimehr R, Devaney KJ, Bilenko NY, Young JC, Tootell RB. The “parahippocampal place area” responds preferentially to high spatial frequencies in humans and monkeys. PLoS biology. 2011;9:e1000608. doi: 10.1371/journal.pbio.1000608.

- Rousselet GA, Thorpe SJ, Fabre-Thorpe M. How parallel is visual processing in the ventral pathway? Trends in cognitive sciences. 2004;8:363–370. doi: 10.1016/j.tics.2004.06.003.

- Saxe R, Brett M, Kanwisher N. Divide and conquer: a defense of functional localizers. NeuroImage. 2006;30:1088–1096; discussion 1097–1099. doi: 10.1016/j.neuroimage.2005.12.062.

- Silson EH, Chan AW, Reynolds RC, Kravitz DJ, Baker CI. A Retinotopic Basis for the Division of High-Level Scene Processing between Lateral and Ventral Human Occipitotemporal Cortex. J Neurosci. 2015;35:11921–11935. doi: 10.1523/JNEUROSCI.0137-15.2015.

- Smith SM, Nichols TE. Threshold-free cluster enhancement: Addressing problems of smoothing, threshold dependence and localisation in cluster inference. NeuroImage. 2009;44:83–98. doi: 10.1016/j.neuroimage.2008.03.061.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang YY, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage. 2004;23:S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Taylor JC, Wiggett AJ, Downing PE. Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. Journal of neurophysiology. 2007;98:1626–1633. doi: 10.1152/jn.00012.2007.

- Thrun S. Robotic Mapping: A survey. In: Lakemeyer G, Nebel B, editors. Exploring Artificial Intelligence in the New Millennium. Morgan Kaufmann; 2002.

- Troiani V, Stigliani A, Smith ME, Epstein RA. Multiple Object Properties Drive Scene-Selective Regions. Cerebral cortex. 2014;24:883–897. doi: 10.1093/cercor/bhs364.

- Watson DM, Hartley T, Andrews TJ. Patterns of response to visual scenes are linked to the low-level properties of the image. NeuroImage. 2014;99:402–410. doi: 10.1016/j.neuroimage.2014.05.045.

- Watson DM, Hymers M, Hartley T, Andrews TJ. Patterns of neural response in scene-selective regions of the human brain are affected by low-level manipulations of spatial frequency. NeuroImage. 2016;124:107–117. doi: 10.1016/j.neuroimage.2015.08.058.

- Winkler AM, Ridgway GR, Webster MA, Smith SM, Nichols TE. Permutation inference for the general linear model. NeuroImage. 2014;92:381–397. doi: 10.1016/j.neuroimage.2014.01.060.

- Yovel G, Kanwisher N. Face perception: domain specific, not process specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018.
