Abstract

Is thought possible without language? Individuals with global aphasia, who have almost no ability to understand or produce language, provide a powerful opportunity to find out. Astonishingly, despite their near-total loss of language, these individuals are nonetheless able to add and subtract, solve logic problems, think about another person’s thoughts, appreciate music, and successfully navigate their environments. Further, neuroimaging studies show that healthy adults strongly engage the brain’s language areas when they understand a sentence, but not when they perform other nonlinguistic tasks like arithmetic, storing information in working memory, inhibiting prepotent responses, or listening to music. Taken together, these two complementary lines of evidence provide a clear answer to the classic question: many aspects of thought engage distinct brain regions from, and do not depend on, language.

Keywords: language, syntax, semantics, functional specificity, numerical cognition, cognitive control, executive functions, theory of mind, music, navigation, fMRI, neuropsychology, aphasia

“My language to describe things in the world is very small, limited. My thoughts when I look at the world are vast, limitless and normal, same as they ever were. My experience of the world is not made less by lack of language but is essentially unchanged.”

Tom Lubbock1 (from a memoir documenting his gradual loss of language as a result of a brain tumor affecting language cortices)

Introduction

What thinking person has not wondered about the relationship between thought and language? When we express a thought in language, do we start with a fully formed idea and then “translate” it into a string of words? Or is the thought not fully formed until the string of words is assembled? In the former view, it should be possible to think even if we did not have language. In the latter view, thought completely depends on, and is not distinct from, language. Here we argue that data from human cognitive neuroscience provide a crisp and clear answer to this age-old question about the relationship between thought and language.

One might argue that we already know the answer, from the simple fact that myriad forms of complex cognition and behavior are evident in nonhuman animals who lack language,a from chimpanzees2–7 and bonobos8,9 to marine mammals10–12 and birds.13,14 On the other hand, intuition and evidence suggest that human thought encompasses many cognitive abilities that are not present in animals (in anything like their human form), from arithmetic to music to the ability to infer what another person is thinking. Are these sophisticated cognitive abilities, then, dependent on language? Here we use two methods from cognitive neuroscience to ask whether complex quintessentially human thought is distinct from, and possible without, language.

The first method is functional magnetic resonance imaging (fMRI), which can be used to ask whether language and thought are distinct in the brain. If a brain region supports both linguistic processing and, say, musical processing, then it should be active during both. If, on the other hand, a brain region selectively supports linguistic processing, then it should be active when people process language, and much less so, or not at all, when they listen to music. The second method relies on individuals with global aphasia due to brain damage, enabling us to ask whether damage to the language system affects performance on various kinds of thought. If the language system, or some of its components, is critical for performing arithmetic or appreciating music, then damage to these brain regions should lead to deficits in these abilities. If, on the other hand, the language system is not necessary for nonlinguistic forms of thought, then focal damage to the language system should only affect language comprehension and/or production, leaving intact performance on nonlinguistic tasks.

We review evidence from these two methods, occasionally drawing on data from other approaches, focusing on the relationship between language and five other cognitive abilities that have been argued—over the years—to share cognitive and neural machinery with language: arithmetic processing, executive functions, theory of mind, music processing, and spatial navigation. The nature of and the reasons for the alleged overlap between linguistic and other processes have varied across domains. In particular, the hypothesized overlap comes in at least two flavors. In some cases, language has been argued to share representations and/or computations with other domains. For example, language, music and arithmetic all rely on structured representations characterized by features like compositionality and recursion18 or complex hierarchical structure.21–23 In the case of theory of mind, some aspects of linguistic syntax have been argued by some to constitute a critical component of our representations of others’ mental states.24 Language also shares some cognitive requirements with domain-general executive functions like inhibition.25

However, in other cases, linguistic representations have been hypothesized to play key roles in domains that share little similarity in representations or computations. In particular, language has been argued to serve as a medium for integrating information across various specialized systems.26,27 Thus, in addition to enabling communication between people, language may enable communication between cognitive systems within a person. This kind of relationship was, for example, hypothesized to hold between language and spatial navigation.26

We argue, based on the available evidence, that in a mature human brain a set of regions—most prominently those located on the lateral surfaces of the left frontal and temporal cortices—selectively support linguistic processing, and that damage to these regions affects an individual’s ability to understand and produce language, but not to engage in many forms of complex thought.

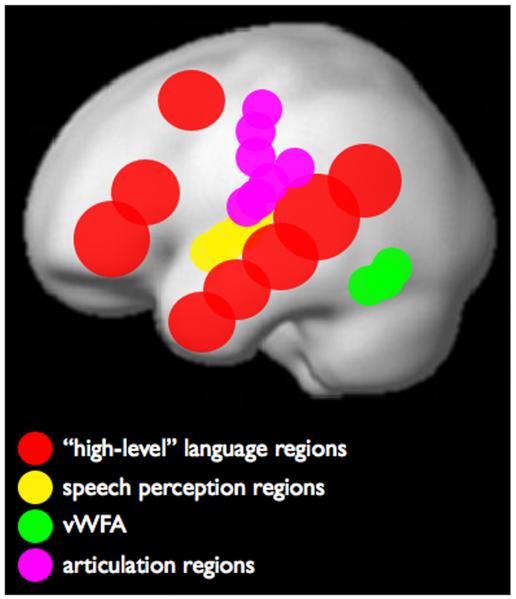

Before we proceed, it is important to clarify what we mean by “language.” There are two points to make here. First, we are focusing on high-level language processing, which includes extracting meaning from linguistic utterances and generating meaningful linguistic utterances when communicating with others28 (regions schematically marked in red in Fig. 1, adapted from Ref. 29). We are thus excluding from consideration (1) auditory and visual regions concerned with the perceptual analysis of speech sounds or visual orthography (marked in yellow and green in Fig. 1, respectively), and (2) articulatory motor regions concerned with the latest stages of speech production (marked in pink in Fig. 1). Of course, the question we ask here about the high-level language processing regions (i.e., to what extent do they overlap with brain regions that support non-linguistic abilities?) can be—and has been—asked with respect to those lower-level perceptual and motor regions. Briefly, it appears that some degree of specificity characterizes both auditory30–32 and visual33,34 perceptual regions. The answer is somewhat equivocal for the motor regions, and the degree of functional specificity of parts of motor/premotor cortex for speech production over other motor behaviors, like non-speech oral movements, remains unclear. Some have argued for such specificity in parts of the speech articulation system—specifically, the superior precentral gyrus of the insula—on the basis of patient evidence35 (but compare with Ref. 36), but findings from fMRI generally do not support this claim.29,37 However, dissociations between speech production and the production of non-speech oral movements have been reported.38 Furthermore, a recent fMRI study39 has reported selectivity for letters over non-letter symbols in written production. Thus, the question clearly deserves further investigation.

Figure 1.

A schematic illustration of the approximate locations of brain regions that support perceptual (yellow, green), motor articulation (pink), and high-level (red) aspects of language processing. Adapted from Ref. 29.

Second, although high-level language processing subsumes many potentially distinct computations, we here talk about it holistically. To elaborate, language comprehension and production can each be broken down into many mental operations (e.g., during comprehension, we need to recognize the words, understand how the words relate to one another by analyzing the morphological endings and/or word order, and construct a complex meaning representation). These operations must, at least to some extent, be temporally separable, with some preceding others,40–42 although the top-down effects of predictive processing are well accepted.43–50 It is also possible that these different operations are spatially separable, being implemented in distinct parts of the language network. Indeed, some results from the neuropsychological patient literature suggest that this must be the case51 (but compare with Ref. 52). However, no compelling evidence exists, in our opinion, for either (1) a consistent relationship between particular brain regions and particular mental operations in the patient literature or (2) the spatial separability of different components of high-level language processing in fMRI.53–55,b Moreover, the language-processing brain regions form a deeply integrated functional system, as evidenced by both (1) strong anatomical connectivity56 and (2) high correlations in neural activity over time during both rest and naturalistic cognition.57,58 Thus, we here consider the high-level language processing system as a whole, without discussing particular brain regions within it.

We now proceed to review the evidence for the separability of the brain regions that support high-level language processing from those that support complex thought.

Review of the evidence

Language versus arithmetic processing

Previous work in numerical cognition has identified two distinct core systems underlying different aspects of numerical competence: (1) a small exact number system, which is based on attention and allows the tracking of small quantities of objects with exact information about position and identity;63–65 and (2) a large approximate number system (sometimes referred to as the analog magnitude-estimation system), which provides noisy estimates of large sets.66 These core abilities are shared across species67,68 and are present in prelinguistic infants.65 Consequently, the autonomy of language from these core numerical abilities has not been controversial.

However, in addition to these evolutionarily conserved systems, humans have developed means to represent exact quantities of arbitrary set size, using verbal representations (i.e., words for numbers). Although not universal,69–71 this ability to represent exact quantities is present in most cultures. Because these representations are verbal in nature, it has been proposed that exact arithmetic relies on the neural system that underlies linguistic processing.72 Indeed, neuroimaging studies and studies in bilingual speakers provided some evidence in support of this view.73–77 For example, Dehaene and colleagues74 had participants perform an exact versus approximate arithmetic addition task. The exact > approximate contrast produced activation in a number of brain regions, including parts of the left inferior frontal cortex (although the observed region fell quite anteriorly to Broca’s area, as defined traditionally). Based on the fact that other studies have found inferior frontal activations for verbal/linguistic tasks, Dehaene et al.74 argued that the regional activations they observed reflected engagement of the language system in exact calculations. Such indirect inferences can be dangerous, however: similar activation locations across studies, especially when operating at the level of coarse anatomy (e.g., talking about activations landing within the inferior frontal gyrus, the superior temporal sulcus, or the angular gyrus, each of which encompasses many cubic centimeters of brain tissue), cannot be used to conclude that the same brain region gave rise to the relevant activation patterns. For instance, both faces and bodies produce robust responses within the fusiform gyrus, yet clear evidence exists of category selectivity for each type of stimulus in distinct, though nearby, regions.78 To make the strongest case for overlap, one would therefore need to, at the very least, directly compare the relevant cognitive functions within the same study, and ideally, within each brain individually,28 because interindividual variability can give rise to apparent overlap at the group level even when the activations are entirely non-overlapping in any given individual.59
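The point about interindividual variability can be made concrete with a toy simulation. The sketch below is not taken from any of the cited studies. It assumes a one-dimensional strip of 100 "voxels", two adjacent but strictly non-overlapping regions in every simulated participant, and a random shift in where that pair of regions falls from one participant to the next. All names and parameter values (`simulate_subject`, `group_maps`, `overlap_summary`, the 10% group threshold) are hypothetical choices made for illustration.

```python
import random

N_VOXELS = 100  # hypothetical 1-D strip of cortex


def simulate_subject(rng, width=5, jitter=10):
    """Return two adjacent, non-overlapping regions for one simulated subject.

    The pair of regions sits at a location that varies from subject to
    subject, mimicking interindividual anatomical variability.
    """
    center = N_VOXELS // 2 + rng.randint(-jitter, jitter)
    region_a = set(range(center - width, center))  # e.g., "language"
    region_b = set(range(center, center + width))  # e.g., "arithmetic"
    return region_a, region_b


def group_maps(subjects, threshold=0.10):
    """Voxels that fall inside a region in at least `threshold` of subjects."""
    n = len(subjects)
    count_a = [0] * N_VOXELS
    count_b = [0] * N_VOXELS
    for region_a, region_b in subjects:
        for v in region_a:
            count_a[v] += 1
        for v in region_b:
            count_b[v] += 1
    group_a = {v for v in range(N_VOXELS) if count_a[v] / n >= threshold}
    group_b = {v for v in range(N_VOXELS) if count_b[v] / n >= threshold}
    return group_a, group_b


def overlap_summary(n_subjects=200, seed=0):
    """Compare within-subject overlap with overlap of the group-level maps."""
    rng = random.Random(seed)
    subjects = [simulate_subject(rng) for _ in range(n_subjects)]
    max_within = max(len(a & b) for a, b in subjects)
    group_a, group_b = group_maps(subjects)
    return max_within, len(group_a & group_b)


if __name__ == "__main__":
    within, group = overlap_summary()
    print("largest within-subject overlap (voxels):", within)
    print("group-level overlap (voxels):", group)
```

By construction, no simulated participant has a single voxel shared between the two regions, yet the thresholded group maps share voxels simply because the regions land in different places across participants. This is the logic behind comparing functions within each brain individually rather than at the group level.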

A neuropsychological investigation that is characterized by a similar problematic inference was reported by Baldo and Dronkers,79 who examined a large set of individuals with left hemisphere strokes and found (1) a correlation in performance between a language comprehension task and an arithmetic task and (2) overlap in brain regions whose damage was associated with linguistic and arithmetic deficits (including in the left inferior frontal gyrus). As has been discussed extensively in the literature in the 1980s and 1990s,80,81 however, dissociations are more powerful than associations because an association can arise from damage to nearby but distinct regions. Curiously, Baldo and Dronkers79 actually observed a dissociation in their data, with some patients being impaired on the language comprehension task but not arithmetic comprehension, and other patients showing the opposite pattern of results. However, they took their overall results as evidence of overlap in the mechanisms for processing language and arithmetic.

A major challenge to the view that the language system underlies our exact arithmetic abilities came from a study where patients with extensive damage to left-hemisphere language regions and with consequent severe aphasia were shown to have preserved ability to perform exact arithmetic.82 In particular, three such patients were able to solve a variety of mathematical problems that involved addition, subtraction, multiplication, and division; small and large numbers; whole numbers and fractions; and expressions with brackets. Particularly astonishing was the dissociation in these patients between their lack of sensitivity to structural information in language versus mathematical expressions: although profoundly agrammatic in language, they retained knowledge of features such as the embedded structure of bracket expressions and the significance of order information in non-commutative math operations of subtraction and division. This study strongly suggested that brain regions that support linguistic (including grammatical) processing are not needed for exact arithmetic.

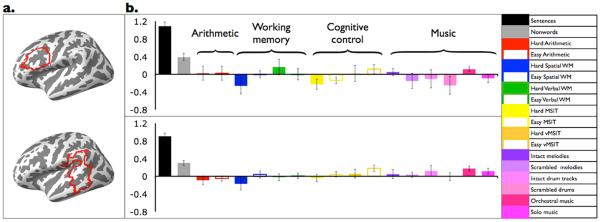

A number of brain imaging studies have provided converging evidence for this view. An early positron emission tomography (PET) study83 examined the activation patterns during simple digit reading, retrieval of simple arithmetic facts, and arithmetic computations and failed to observe any activation in the perisylvian cortices. More recently, Fedorenko, Behr, and Kanwisher60 evaluated this question more directly. Participants performed a language-understanding task in fMRI, which was used to localize language-responsive regions of interest in each participant individually. The responses of these brain regions were then examined while participants engaged in solving easier (with smaller numbers) or harder (with larger numbers) arithmetic addition problems. The language regions responded during the arithmetic conditions at the same level as, or below, a low-level fixation baseline condition (Fig. 2), strongly suggesting that the language system is not active when we engage in exact arithmetic. Similarly, Monti, Parsons, and Osherson84 found that linguistic, but not algebraic, syntax produced activations in the inferior frontal cortex. The latter instead produced responses in bilateral parietal brain regions. Finally, Maruyama et al.23 manipulated the syntactic complexity of algebraic operations and also found activations in parietal (and occipital) regions, but not within the frontotemporal language system.

Figure 2.

Functional response profiles of two high-level language processing brain regions. (A) Two functional “parcels” derived from a group-level representation of language activations (the LIFG and the LMidPostTemp parcels from Ref. 28) and used to constrain the selection of subject-specific regions of interest (ROIs). Individual ROIs were functionally defined: each parcel was intersected with the individual activation map for the language-localizer contrast (sentences > non-word lists28), and the top 10% of voxels were taken to be that participant’s ROI. (B) Responses to the language-localizer conditions and a broad range of nonlinguistic tasks. Responses to the sentences and non-word conditions were estimated using across-runs cross validation,59 so that the data to define the ROIs and to estimate their responses were independent. The data for the arithmetic, working memory (WM) and cognitive control (MSIT; Multi-Source Interference Task) tasks were reported in Ref. 60 and the data for the music conditions come from Ref. 61 (see also Refs. 60 and 62).
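The ROI-definition procedure described in the caption can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' analysis code: voxels are ranked by the raw sentences minus non-words difference (the statistic actually used for ranking is not given here), cross-validation is implemented as leave-one-run-out, and the data are simulated. The function names (`define_froi`, `cross_validated_responses`, `simulate_runs`) and all numeric values other than the 10% cutoff are hypothetical.

```python
import random


def define_froi(parcel_mask, contrast, top_fraction=0.10):
    """Pick the top `top_fraction` of voxels inside the parcel by contrast value."""
    in_parcel = [v for v, inside in enumerate(parcel_mask) if inside]
    n_keep = max(1, round(len(in_parcel) * top_fraction))
    ranked = sorted(in_parcel, key=lambda v: contrast[v], reverse=True)
    return sorted(ranked[:n_keep])


def cross_validated_responses(parcel_mask, runs, top_fraction=0.10):
    """Leave-one-run-out estimate of the ROI response to each condition.

    `runs` is a list of dicts mapping a condition name to per-voxel values.
    For each held-out run, the ROI is defined from the sentences > non-words
    contrast averaged over the other runs, and the response is read out from
    the held-out run only, so definition and estimation data stay independent.
    """
    n_voxels = len(parcel_mask)
    per_fold = {"sentences": [], "nonwords": []}
    for held_out, test_run in enumerate(runs):
        train = [run for k, run in enumerate(runs) if k != held_out]
        contrast = [
            sum(run["sentences"][v] - run["nonwords"][v] for run in train) / len(train)
            for v in range(n_voxels)
        ]
        roi = define_froi(parcel_mask, contrast, top_fraction)
        for condition in per_fold:
            values = [test_run[condition][v] for v in roi]
            per_fold[condition].append(sum(values) / len(values))
    return {c: sum(vals) / len(vals) for c, vals in per_fold.items()}


def simulate_runs(n_runs=4, n_voxels=100, seed=0):
    """Made-up data: voxels 10-19 respond more to sentences than to non-words."""
    rng = random.Random(seed)
    runs = []
    for _ in range(n_runs):
        sentences = [rng.gauss(2.0 if 10 <= v < 20 else 0.0, 0.3) for v in range(n_voxels)]
        nonwords = [rng.gauss(0.5 if 10 <= v < 20 else 0.0, 0.3) for v in range(n_voxels)]
        runs.append({"sentences": sentences, "nonwords": nonwords})
    return runs


if __name__ == "__main__":
    parcel = [v < 50 for v in range(100)]  # hypothetical parcel: first 50 voxels
    print(cross_validated_responses(parcel, simulate_runs()))
```

Holding out a run matters because selecting the most responsive voxels and measuring their response in the same data would inflate the estimated sentences > non-words difference.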

In summary, it appears that brain regions that respond robustly during linguistic processing are not generally (but see Ref. 85) active when we solve arithmetic problems. Furthermore, damage—even extensive damage—to the language regions appears to leave our arithmetic abilities intact. We therefore conclude that linguistic processing occurs in brain circuits distinct from those that support arithmetic processing.

Language versus logical reasoning and other executive functions

In addition to our ability to exchange thoughts with one another via language, humans differ from other animals in the complexity of our thought processes.86 In particular, we are experts in organizing our thoughts and actions according to internal goals. This structured behavior has been linked to a large number of theoretical constructs, including working memory, cognitive control, attention, and fluid intelligence.87–89 What is the relationship between these so-called “executive functions” and the language system?

There are at least two reasons to suspect an important link. The first concerns the anatomical substrates of executive control. In particular, the prefrontal cortex has long been argued to be important.87 Although, over the years, additional brain regions have been incorporated into the cognitive control network, including regions in the parietal cortices, the frontal lobes continue to figure prominently in any account of cognitive control and goal-directed behavior. Critically, as has long been known, some of the language-responsive regions occupy parts of the left inferior frontal cortex. One possibility, therefore, is that language processing at least partially relies on domain-general circuits in the left frontal lobe.25,90

The second reason concerns the functional importance of cognitive control and working memory for language. We have long known that these domain-general mechanisms play a role in language processing (e.g., see Ref. 91 for a recent review). For example, super-additive processing difficulties have been reported when participants perform a language task at the same time as a demanding working memory or inhibitory task.69,92 And in fMRI studies, a number of groups have reported activation in these domain-general frontal and parietal circuits for some linguistic manipulations, especially for manipulations of linguistic difficulty.93–95 These findings suggest that cognitive control mechanisms can and do sometimes support language processing, much as they support the processing of information in other domains.

So, how is this relationship between language and cognitive control implemented? Is there partial or even complete overlap between these functions in the left frontal lobe, or does language rely on brain regions that are distinct from those that support cognitive control?

In one fMRI study,60 we identified language-responsive brain regions and then examined the responses of those regions when participants performed several classic working memory/inhibitory tasks. As expected, the language regions in the temporal lobe showed no response during these executive tasks (Fig. 2). However, importantly, the language regions in the left frontal lobe (including in and around Broca’s area) showed a similar degree of selectivity, in spite of the fact that executive tasks robustly activated left frontal cortex in close proximity to the language-responsive regions.96

Other fMRI studies provided additional support for the idea that language regions, including those in the inferior frontal cortex, are highly selective in function. For example, Monti et al.97,98 examined the relationship between linguistic processing and logical reasoning, another ability that strongly draws on domain-general cognitive control resources,99 and found largely nonoverlapping responses, with the language regions responding strongly during the processing of language stimuli and much less so during the processing of logical expressions.

Data from patients with brain damage generally support the conclusions drawn from brain imaging studies. For example, Varley and Siegal100 report a severely agrammatic aphasic man who was able to perform well on complex causal reasoning tasks. Furthermore, anecdotally, some of the severely aphasic patients that Varley and colleagues have studied over the years continue to play chess in spite of experiencing severe comprehension/production difficulties. Chess is arguably the epitome of human intelligence/reasoning, with high demands on attention, working memory, planning, deductive reasoning, inhibition, and other faculties. Conversely, Reverberi et al.101 found that patients with extensive lesions in the prefrontal cortex and preserved linguistic abilities exhibited impairments in deductive reasoning. Thus, an intact linguistic system is not sufficient for reasoning.

It is worth noting that at least one patient investigation has argued that language is, in fact, necessary for complex reasoning. In particular, using the Wisconsin Card Sorting Task,102 Baldo et al.103 reported impairments in aphasic individuals, but not in patients with left-hemisphere damage but without aphasia. A plausible explanation for this pattern of results is that language regions lie in close proximity to domain-general cognitive control regions. This is true not only in the left frontal cortex, as discussed above,96 but also in the left temporoparietal cortex. Thus, brain damage that results in aphasia is more likely to affect these nearby cognitive control structures than brain damage that does not lead to aphasia (and is thus plausibly further away from the cognitive control regions). As noted above, dissociations are more powerful than associations,80,81 so the fact that there exist severely aphasic individuals who have intact executive functions constitutes strong evidence for the language system not being critical to those functions.

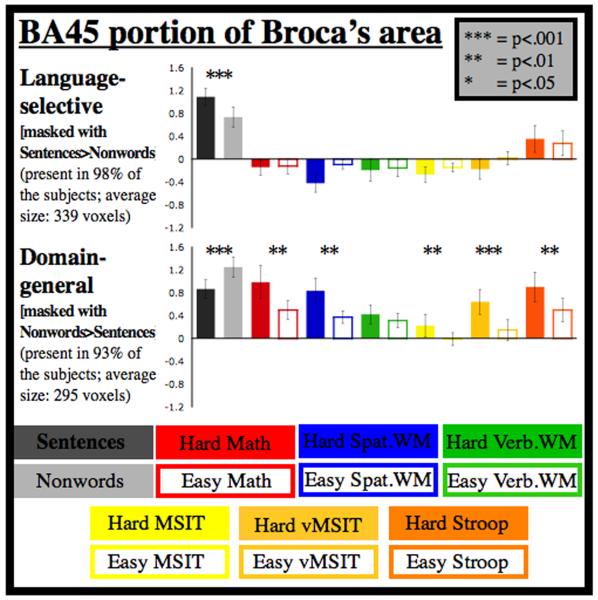

In summary, although both executive functions and language processing robustly engage brain structures in the left frontal cortex, they appear to occupy nearby but distinct regions within that general area of the brain (Fig. 3), as evidenced by clear dissociations observed in fMRI studies and the preserved abilities of at least some severely aphasic individuals to engage in complex non-linguistic reasoning tasks.

Figure 3.

Functional response profiles of language-selective and domain-general regions within Broca’s area (adapted from Ref. 96). Language-selective regions were defined by intersecting the anatomical parcel for BA45 with the individual activation maps for the language-localizer contrast (sentences > non-word lists28). Domain-general regions were defined by intersecting the same parcel with the individual activation maps for the non-word lists > sentences contrast. All magnitudes shown are estimated from data independent of those used to define the regions; responses to the sentences and non-words are estimated using a left-out run.

Language versus theory of mind

A sophisticated ability to consider the subtleties of another’s mental states when acting in the world, theory of mind (ToM) is yet another defining characteristic of humans.104 Some have argued that certain linguistic (specifically, grammatical) representations are necessary for thinking about others’ minds.24,105 Indeed, some evidence seems to support this contention. First, linguistic abilities (including both syntax and understanding meanings of mental state verbs like “think” and “believe”) correlate with success on false-belief tasks.106–110 Furthermore, training children with no understanding of false beliefs on certain linguistic constructions allows them to pass the false-belief task.111–114 However, we are concerned here with adult brains, and even if linguistic representations were critical for the development of (at least some aspects of) ToM, it is still possible that, in a mature brain, linguistic representations are no longer necessary.

Recent research in social neuroscience has identified a set of brain regions that appear to play a role in representing others’ internal states, including thoughts, preferences, and feelings.115–121 These regions include the right and left temporoparietal junction (TPJ), the precuneus, and regions in the medial prefrontal cortex. The right TPJ, in particular, is highly selective for thinking about someone else’s thoughts and beliefs,122–127 in line with both (1) early patient studies showing that damage to this region led to deficits in ToM reasoning128,129 and (2) recent “virtual lesion” TMS experiments.130,131

The fact that the apparently core (most functionally selective) region within the ToM network, the right TPJ, is located in the non-language-dominant hemisphere already suggests that the language system is probably not critical for ToM reasoning. However, the left TPJ is still an important component of the network,132 and a recent study reported overlap between the left TPJ and the language regions.133 Even so, numerous experiments with aphasic patients who suffered extensive damage to the left TPJ indicate retained ToM reasoning and residual insights into the knowledge states of others.100,134–137 Typical probes of ToM, such as the changed-location or changed-contents tasks, involve inferences regarding the beliefs of others. In standard formats, these tasks place heavy demands on linguistic processing. For example, the participant must detect the third-person reference of the probe question and make fine semantic discriminations between verbs such as “think/know.” However, when people with severe agrammatic aphasia are given cues as to the purpose of the probe questions, they reveal retained ability in inferring the beliefs (both true and false) of others.100 Willems et al.137 extended these observations to people with global aphasia. They employed a nonlinguistic task in which there was a mismatch in knowledge between participants as to the location and orientation of two tokens on a grid. The informed participant (the “sender”) had to recognize the knowledge state of the naive “receiver” and then, using their own token, signal the location/orientation of the receiver’s token. Participants with severe aphasia were able to adopt both sender and receiver roles: as senders, they recognized the receiver’s need for information and designed a message to convey the necessary knowledge. As receivers, they were able to interpret the intentions behind movement of a token in order to correctly locate and orient their tokens. Thus, although the potential theoretical significance of the overlap observed between language comprehension and ToM tasks in the left TPJ remains to be investigated, it appears that the language system is not critical for mentalizing, at least once the mentalizing abilities have developed.

Language versus music processing

Language and music—two universal cognitive abilities unique to humans138—share multiple features. Apart from the obvious surface-level similarity, with both involving temporally unfolding sequences of sounds with a salient rhythmic and melodic structure,139,140 there is a deeper parallel: language and music exhibit similar structural properties, as has been noted for many years (e.g., Riemann141, as cited in Refs. 142 and 143–150). In particular, in both domains, relatively small sets of elements (words in language, notes and chords in music) are used to create a large, perhaps infinite, number of sequential structures (phrases and sentences in language and melodies in music). And in both domains, this combinatorial process is constrained by a set of rules, such that healthy human adults can judge the well-formedness of typical sentences and melodies.

Inspired by these similarities, many researchers have looked for evidence of overlap in the processing of structure in language and music. For example, a number of studies have used a structural-violation paradigm where participants listen to stimuli in which the presence of a structurally unexpected element is manipulated. Some early studies used event-related potentials (ERPs) and showed that structural violations in music elicit components that resemble those elicited by syntactic violations in language. These include the P600 (Refs. 151–153; see Refs. 154 and 155 for the original reports of the P600 response to syntactic violations in language) and the early anterior negativity, present more strongly in the right hemisphere (eRAN152,156–158; see Refs. 159 and 160 for the original reports of the eLAN in response to syntactic violations in language; see Ref. 161 for a recent critical evaluation of the eLAN findings). Later studies observed a similar effect in MEG and suggested that it originates in or around Broca’s area and its right hemisphere homologue.21 Subsequently, fMRI studies also identified parts of Broca’s area as among the generators of the effect162–164 (see Ref. 165 for similar evidence from rhythmic violations), although other regions were also implicated, including the ventrolateral premotor cortex,166 the insular cortex, parietal regions,162,163 and superior temporal regions162 (see also Refs. 167 and 168 for evidence from intracranial EEG recordings).

A number of behavioral dual-task studies have also argued for language/music overlap based on super-additive processing difficulty when musical violations coincided with syntactic violations in language169–171 (compare to Ref. 172). Some patient studies have also been taken to support overlap, notably those investigating musical processing in aphasic patients with lesions in Broca’s area. Patel et al.173 found subtle deficits in processing musical structure, which—as the authors acknowledge—could also be attributed to lower-level auditory processing deficits. Sammler et al.174 observed an abnormal scalp distribution of the eRAN component and subtle behavioral deficits in patients with IFG lesions.

However, in spite of the intuitive appeal of the music/language overlap idea, we will argue that there is an alternative interpretation of the results summarized above, which a few of the studies have already alluded to.164 In particular, a note or word that is incongruent with the preceding musical or syntactic context is a salient event. As a result, the observed responses to such deviant events could reflect a generic mental process—such as attentional capture, detection of violated expectations, or error correction—that (1) applies equally to language, music, and other, nonmusical and nonlinguistic domains; and (2) does not necessarily have to do with processing complex, hierarchically structured materials. A closer look at the available evidence supports this interpretation.

The P600 ERP component that is sensitive to syntactic violations in language and music is also sensitive to violations of expectations in other domains, including arithmetic175,176 and sequential learning of complex structured sequences.177 For example, Niedeggen and Rosler175 observed a P600 in response to violations of multiplication rules, and Núñez-Peña and Honrubia176 observed a P600 to violations of a sequence of numbers that were generated following an easy-to-infer rule (e.g., adding 3 to each preceding number, as in 3, 6, 9, 12, 15, 19, where the final number breaks the rule). Furthermore, although studies manipulating both syntactic and semantic structure in language argued that structural processing in music selectively interferes with syntactic processing in language,170,178 more recent studies suggest that structural processing in music can interfere with both syntactic and semantic processing in language,171,179 arguing against a syntax-specific interpretation.

Given that language, music, and arithmetic all rely on complex structured representations, responses to violations in these domains could nonetheless index some sort of cross-domain, high-level structural processing. However, unexpected events that do not violate structural expectations also appear to elicit similar ERP components. For example, Coulson and colleagues180,181 argued that the P600 component is an instance of another, highly domain-general ERP component, the P300 component (also referred to as the P3), which has long been known to be sensitive to rare and/or informative events irrespective of high-level structure.182 Kolk and colleagues have also argued for a domain-general interpretation of the P600 component.183 For example, Vissers et al.184 observed a P600 for spelling errors (“fone” instead of “phone”), which seems unlikely to involve anything we might call abstract structural processing.

Some uncertainty also exists with respect to the relationship between the eRAN component156 and the mismatch negativity (MMN) component. The MMN component is observed when a stimulus violates a rule established by the preceding sequence of sensory stimuli185 (see Refs. 186 and 187 for recent overviews). Most of the work on the MMN has focused on the auditory domain (e.g., see Ref. 188 for a review), but several studies have reported a visual MMN.189–191 In the auditory domain, although early studies employed relatively low-level manipulations (e.g., a repeated tone in a sequence of ascending tones192 or a switch in the direction of a within-pair frequency change193), later studies observed the MMN component for more abstract manipulations, such as violations of tonal194–196 or rhythmic197,198 patterns, raising questions about how this component might relate to the eRAN. Some ERP studies have explicitly argued that eRAN is distinct from the MMN, with eRAN exhibiting a longer latency and a larger amplitude than the MMN199 (compare with Ref. 200, which reports a longer latency for the MMN than for eRAN), and with different dominant sources (posterior IFG for eRAN and primary auditory cortex for the MMN201). However, a number of other studies have reported multiple sources for the MMN, including both temporal and frontal components (see Ref. 202 for the patient evidence implicating the frontal source). According to one proposal203 (see also Refs. 204 and 205), two mental processes contribute to the MMN: (1) a sensory memory mechanism (located in the temporal lobe206), and (2) an attention-switching process (located in the frontal lobes), which has been shown to peak later than the temporal component.207

In summary, two ERP components (the P600 and the early anterior negativity) have been linked to structural processing in music and language, and controversy exists for both of them regarding their interpretation and their relationship to components driven by relatively low-level deviants (P3 and MMN, respectively). This raises the possibility that responses thought to be the signature of structural processing in music and language may instead reflect domain-general cognitive processes that have little to do specifically with processing structure in music and other domains.

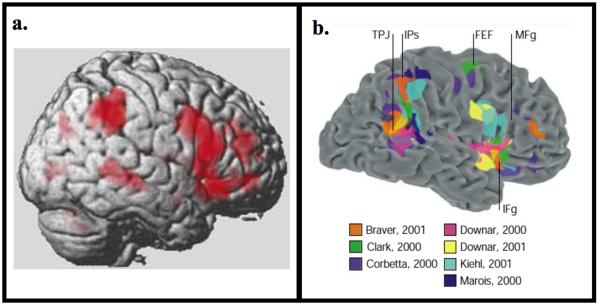

A similar picture emerges in neuroimaging studies. For example, Koelsch et al.208 demonstrated that timbre violations activate regions in the posterior IFG and superior temporal cortices that are similar to those activated by violations of tonal structure (see also Refs. 163, 209, and 210). Furthermore, a meta-analysis of activation peaks from fMRI studies investigating brain responses to unexpected sensory events211 revealed a set of brain regions that closely resemble those activated by structural violations in music (Fig. 4).

Figure 4.

The similarity between activations for violations of musical structure and low-level unexpected events. (A) The fMRI activation map for a contrast of structural violation versus no structural violations in music from Ref. 208. (B) The results of a meta-analysis of brain imaging studies examining low-level unexpected events from Ref. 211.

The frontal regions (including parts of Broca’s area96) and parietal regions that are present in both the activation map for the presence versus absence of a structural violation in music and Corbetta and Shulman’s211 meta-analysis of activation peaks for unexpected events have long been implicated in a wide range of cognitive demands, as discussed above.88,89

In summary, evidence from the structural-violation paradigm is at present largely consistent with an interpretation in which the effects arise within domain-general brain regions that respond to unexpected events across domains (compare with Ref. 212), including cases where the violations presumably have little to do with combinatorial processing or with complex hierarchical relationships among elements.

The structural-violation paradigm, albeit popular, has not, however, been the only paradigm used to study structural processing; another paradigm in music research that has been used to examine sensitivity to different types of structure involves comparing brain responses to intact and “scrambled” music. Scrambled variants of music are obtained by randomly rearranging segments of sound or elements of music, disrupting different types of musical structure depending on how the scrambling is performed. Comparisons of brain activity elicited by intact and scrambled music can thus be used to coarsely probe neural sensitivity to musical structure.

Using fMRI, Levitin and Menon213,214 compared brain responses to intact music and scrambled music generated by randomly reordering short segments of the musical sound waveform. They reported activation in the inferior frontal gyrus, around BA47, for the contrast of intact versus scrambled music. Based on previous reports of high-level linguistic manipulations activating parts of BA47,215–217 Levitin and Menon argued that the linguistic processes that engage parts of BA47 also function to process musical structure. However, they did not directly compare the processing of structure in music and language, leaving open the possibility that language and music manipulations could activate nearby but nonoverlapping regions in the anterior parts of the inferior frontal gyrus.

Later studies that directly compared structured and unstructured language and music stimuli60,218 in fact found little or no response to music in brain regions that are sensitive to the presence of structure in language, including regions in the left frontal lobe60,61 (Fig. 2). Furthermore, in our recent work62 (see also Ref. 32), we reported several brain regions in the temporal cortices that respond more strongly to structured than unstructured musical stimuli (we randomly reordered the notes within pieces of music, disrupting most aspects of musical structure) but do not show sensitivity to the presence of structure in language stimuli. It therefore appears that distinct sets of brain regions support high-level linguistic versus music processing.

This nonoverlap is consistent with the dissociation between linguistic and musical abilities that has frequently been reported in the neuropsychological literature. In particular, patients who experience some difficulty with aspects of musical processing as a result of an innate or acquired disorder appear to have little or no trouble with high-level linguistic processing219–234 (see Refs. 235 and 236 for reviews). And conversely, aphasic patients, even those with severe language deficits, appear to have little or no difficulty with music perception.29,220,237–239 Perhaps the most striking case is that of the Russian composer Shebalin, who suffered two left hemisphere strokes, the second of which left him severely aphasic. Following his strokes, Shebalin nevertheless continued to compose music that was deemed to be comparable in quality to the music he composed before sustaining brain damage.240

In summary, recent brain imaging studies suggest that nonoverlapping sets of brain regions are sensitive to the presence of structure in language versus music.60,62,218 These findings are consistent with evidence from brain-damaged populations. We therefore conclude that linguistic processing occurs in brain circuits distinct from those that support music processing.

Language versus spatial navigation

The claim for a role for language in cross-domain integration has been explored in the areas of navigation and reorientation. The environment provides a number of cues to location, including both geometric and landmark information. If these cues are processed by separate mechanisms (such as those dedicated to visuospatial processing and object recognition), it might be that only in the presence of relevant language forms can the two informational streams be integrated, creating a capacity for flexible reorienting behavior. Initial experimental findings supported this claim. Cheng241 reported that rats navigate on the basis of geometric information alone. Similarly, young children who had not yet mastered spatial language of the type “right/left of X” also relied on the geometry of the environment.242 Furthermore, in a striking demonstration of the possible role of language, healthy adults engaged in verbal shadowing failed to combine available landmark and geometric cues and attempted to reorient on the basis of geometric information alone.26 The capacity to incorporate landmark information into reorientation performance appeared to require linguistic resources.

Subsequent experiments have not always replicated these findings. For example, investigations with nonhuman species, such as monkeys and fish, revealed the capacity to combine landmarks and geometry.243,244 Learmonth, Newcombe, and Huttenlocher245 found no effect of verbal shadowing when the dimensions of the search space were increased, indicating that reorientation in small search spaces is particularly vulnerable to disruption. Patients with global aphasia who had difficulties in comprehension and the use of spatial terms, both in isolation and in sentences, were indistinguishable in reorientation performance from healthy controls.246 These individuals were unable to produce terms such as “left” or “right,” and made errors in understanding simple spatial phrases such as “the match to the left of the box.” Despite these linguistic impairments, they were able to integrate landmark information (e.g., the blue wall) with ambiguous geometric information in order to locate hidden objects. One possibility is that, while language can be used to draw attention to particular aspects of an environment, other forms of cue can also perform this role. Shusterman, Lee, and Spelke247 undertook a detailed exploration of the impact of different forms of verbal cues on the reorientation behavior of 4-year-old children. They observed that a nonspatial linguistic cue that served only to direct a child’s attention to landmark information was as effective in improving reorientation performance as verbal cues incorporating spatial information. This result suggests that, rather than language representations being a mandatory resource for informational integration, they provide more general scaffolding to learning. Furthermore, language is not the only resource to support attention to significant cues. Twyman, Friedman, and Spetch248 report that nonlinguistic training also supports children by drawing their attention to landmark information and enabling its combination with geometry in reorientation.

In a functional neuroimaging study of neural mechanisms that are associated with reorientation, Sutton, Twyman, Joanisse, and Newcombe249 observed bilateral hippocampal activation during reorientation in virtual reality environments. Hippocampal activity increased in navigation of smaller spaces, confirming behavioral observations that reorientation in environments without distant visual cues is particularly challenging. Sutton et al.249 also report activations of perisylvian language regions including the left superior temporal and supramarginal gyri in conditions where environments contained ambiguous geometric information but no landmark cues. One interpretation of this result is that language resources are employed by healthy adults under conditions of cognitive challenge in order to support performance in intrinsically nonlinguistic domains. For example, through encoding into linguistic form, subelements of a problem can be represented and maintained in phonological working memory. However, the finding that informational integration is possible in profoundly aphasic adults indicates that language representations are not a mandatory component of reorientation reasoning. Klessinger, Szczerbinski, and Varley250 provide a similar demonstration of the use of language resources in support of calculation in healthy adults. Whereas competent calculators showed little evidence of phonological mediation in solving two-digit plus two-digit addition problems, less competent calculators displayed phonological length effects (i.e., longer calculation times on problems with phonologically long versus short numbers). Thus, across a range of cognitive domains, language representations might be used in support of reasoning, particularly under conditions of high demand.

Functional specificity places constraints on possible mechanisms

The key motivation for investigating the degree of functional specialization in the human mind and brain is that such investigations critically constrain the hypothesis space of possible computations of each relevant brain region.251 If only a particular stimulus or class of stimuli produce a response in some brain region, we would entertain fundamentally different hypotheses about what this region does, compared to a case where diverse stimuli produce similarly robust responses. For example, had we found a brain region within the high-level language processing system that responded with similar strength during the processing of linguistic and musical stimuli, we could have hypothesized that this region is sensitive to some abstract features of the structure present in both kinds of stimuli (for example, dependencies among the relevant elements, such as words in language or tones and chords in music, or perhaps the engagement of a recursive operation). That would tell us that, at some level, we extract these highly abstract representations from stimuli that are very different on the surface. The importance of these abstract representations/processes could then be evaluated in understanding the overall cognitive architecture of music and language processing. Similar kinds of inferences could be made in cases of observed overlap between language and other cognitive processes.

The fact that high-level language processing brain regions appear to not be active during a wide range of nonlinguistic tasks suggests that these regions respond to some features that are only present in linguistic stimuli. We hypothesize that the language system stores our language knowledge representations. The precise nature of linguistic representations is still a matter of debate in the field of language research, although most current linguistic frameworks assume a tight relationship between the lexicon and grammar252–260 (compare with earlier proposals like Refs. 261 and 262). Whatever their nature, detecting matches between the input and stored language knowledge is what plausibly leads to neural activity within the language system during language comprehension, and searching for and selecting the relevant language units to express ideas is what plausibly leads to neural activity during language production.

Issues that often get conflated with the question of functional specialization

The question of whether in a mature human brain there exist brain regions that are specialized for linguistic processing is sometimes conflated with and tainted by several issues that—albeit interesting and important—are nonetheless orthogonal (see Ref. 263 for earlier discussions). We here attempt to briefly clarify a few such issues.

First, the existence of specialized language machinery does not imply the innateness of such machinery (e.g., see Refs. 263 and 264 for discussion). Functional specialization can develop as a function of our experience with the world. A clear example is the visual word-form area (vWFA), a region in the inferior temporal cortex that responds selectively to letters in one’s native script.33 Recent experiments with macaques have also suggested that specialized circuits can develop via an experiential route.265 Given that language is one of the most frequent and salient stimuli in our environment from birth (and even in utero) and throughout our lifetimes, it is computationally efficient to develop machinery that is specialized for processing linguistic stimuli. In fact, if our language system stores linguistic knowledge representations, as we hypothesize above, it would be difficult to argue that this system is present at birth given that the representations we learn are highly dependent on experience.

What do brain regions selective for high-level language processing in the adult brain do before or at birth? This remains an important open question. A number of studies have reported responses to human speech in young infants characterized by at least some degree of selectivity over non-speech sounds and, in some cases, selectivity for native language over other languages266–269 (compare with Ref. 270). However, it is not clear whether these responses extend beyond the high-level auditory regions that are selective for speech processing in the adult brain but are not sensitive to the meaningfulness of the signal.32 In any case, as noted above, infants have exposure to speech in the womb,271 and some studies have shown sensitivity to sounds experienced prenatally shortly after birth.272 As a result, even if speech responses in the infants occur in what later become high-level language processing regions, it is possible that these responses are experientially driven. Humans are endowed with sophisticated learning mechanisms and acquire a variety of complex knowledge structures and behaviors early in life. As a result, in order to postulate an innate capacity for language, or any other cognitive ability, strong evidence is required.

Second, the specificity of the language system does not imply that the relevant brain regions evolved specifically for language. This possibility cannot be excluded, but the evidence available to date does not unequivocally support it. In particular, although a number of researchers have argued that some brain regions in the human brain are not present in nonhuman primates,274,275 many others have argued for homologies between human neocortical brain regions and those in nonhuman primates, including Broca’s area encompassing Brodmann areas 44 and 45.276–278 Some have further suggested that a human brain is simply a scaled-up version of a nonhuman primate brain.279 Regardless of whether or not the human brain includes any species-specific neural circuitry, relative to the brains of our primate relatives, humans possess massively expanded association cortices in the frontal, temporal, and parietal regions.280 However, these association cortices house at least three spatially and functionally distinct large-scale networks: (1) the frontotemporal language system that we have focused on here, (2) the frontoparietal domain-general cognitive control system,88 and (3) the so-called “default mode network”281 that overlaps with the ToM network104 and has been also implicated in introspection and creative thinking. The latter two systems are present in nonhuman primates and appear to be structurally and functionally similar.88,282 How exactly the language system emerged against the backdrop of these other, not human-specific, high-level cognitive abilities remains a big question critical to understanding the evolution of our species.

And third, the question of the existence of specialized language machinery is orthogonal to whether and how this system interacts with other cognitive and neural systems. Most researchers these days—ourselves included—do not find plausible the idea that the language system is in some way encapsulated (but see also Ref. 273). However, (1) how the language system exchanges information with other large-scale neural networks and (2) the precise nature and scope of such interactions remain important questions for future research. With respect to the latter, it is important to consider both the role of language in other cognitive abilities and the role of other cognitive abilities in language processing.

For example, in this review we have discussed a couple of possible roles that language may play in nonlinguistic cognition: a role in the development of certain capacities (such as our ability to explicitly represent others’ mental states), and a role as a kind of mental “scratchpad” that can be used to store and manipulate information in a linguistic format, which may be especially helpful when the task at hand is demanding and additional representational formats can ease the cognitive load. To further investigate the role of language in the development of nonlinguistic human capacities, one can (1) look at the developmental time courses of the relevant abilities to see if mastering particular linguistic devices leads to the emergence of the relevant nonlinguistic ability or (2) examine the nonlinguistic abilities in question in children who are delayed in their linguistic development, due to either a neurodevelopmental language disorder or lack of early linguistic input.134,283

Regarding the role of nonlinguistic capacities in language processing, a number of linguistic manipulations have been shown to recruit the regions of the frontoparietal executive system (see Ref. 91 for additional discussion), suggesting that domain-general resources can aid language comprehension/production. That said, it remains unclear how frequently, and under what precise circumstances, these domain-general mechanisms get engaged when we understand and produce language, as well as whether these mechanisms are causally necessary for language processing.91

Conclusions

Evidence from brain imaging investigations and studies of patients with severe aphasia shows that language processing relies on a set of specialized brain regions, located in the frontal and temporal lobes of the left hemisphere. These regions are not active when we engage in many forms of complex thought, including arithmetic, solving complex problems, listening to music, thinking about other people’s mental states, or navigating in the world. Furthermore, all these nonlinguistic abilities appear to remain intact following damage to the language system, suggesting that linguistic representations are not critical for much of human thought.

We may someday discover aspects of thought that do in fact depend critically on the language system, but repeated efforts to test the candidates that seemed most likely have shown that none of these produce much activation of the language system, and none of these abilities are absent in people who are globally aphasic.

The evidence that the language regions are selectively engaged in language per se suggests that these regions store domain-specific knowledge representations that mediate our linguistic comprehension and production abilities. The specificity of these regions further makes it possible to use their activity as a functional marker of the activation of linguistic representations, thus enabling us to test the role of language processing in a broader space of cognitive tasks. Most importantly, the research reviewed here provides a definitive answer to the age-old question: language and thought are not the same thing.

Acknowledgments

We are grateful to Nancy Kanwisher, Steve Piantadosi, and two anonymous reviewers for their comments on this manuscript. We thank Sam Norman-Haignere and Josh McDermott for their extensive comments on the “Language versus music processing” section. We also thank Zach Mineroff for his help with references and formatting, and Terri Scott for her help with Figure 2. E.F. is grateful to the organizers and attendees of the CARTA symposium “How language evolves” held at the University of California, San Diego in February 2015, for helpful comments on her views and many great discussions of language and its place in the architecture of human cognition. E.F. was supported by NICHD Award R00 HD-057522. R.V. was supported by AHRC “Language in Mental Health” Award AH/L004070/1.

Footnotes

Conflicts of interest

The authors declare no conflicts of interest.

Although all animal species exchange information with one another,15 human language is unparalleled in the animal kingdom in its complexity and generative power.16–20

Of course, it is possible that evidence may come along in the future revealing clear relationships between different aspects of language processing and particular brain regions, but repeated efforts to find such have failed to date.

It is worth noting that effect sizes are sometimes not appreciated enough in fMRI studies, which often focus on the significance of the effects. In some cases, two manipulations, A and B, may produce significant effects in a particular brain region, but if manipulation A produces a response that is several times stronger than manipulation B, this is critical for interpreting the role of the region in question in the cognitive processes targeted by the two manipulations.

References

- 1.The Guardian [Accessed February 15, 2016];Tom Lubbock: a memoir of living with a brain tumour. 2016 Nov 6; 2010. http://www.theguardian.com/books/2010/nov/07/tom-lubbock-brain-tumour-language.

- 2.Call J. Chimpanzee social cognition. Trends In Cogn. Sci. 2001;5:388–393. doi: 10.1016/s1364-6613(00)01728-9.

- 3.Tomasello M, Call J, Hare B. Chimpanzees understand psychological states - the question is which ones and to what extent. Trends In Cogn. Sci. 2003;7:153–156. doi: 10.1016/s1364-6613(03)00035-4.

- 4.Hurley S, Nudds M. Rational Animals? Oxford University Press; Oxford, UK: 2006.

- 5.Penn D, Povinelli D. Causal Cognition in Human and Nonhuman Animals: A Comparative, Critical Review. Annu. Rev. Psychol. 2007;58:97–118. doi: 10.1146/annurev.psych.58.110405.085555.

- 6.Matsuzawa T. The chimpanzee mind: in search of the evolutionary roots of the human mind. Anim. Cogn. 2009;12:1–9. doi: 10.1007/s10071-009-0277-1.

- 7.Whiten A. The scope of culture in chimpanzees, humans and ancestral apes. Philos. T. Roy. Soc. B. 2011;366:997–1007. doi: 10.1098/rstb.2010.0334.

- 8.Hare B, Yamamoto S. Bonobo Cognition and Behaviour. Brill Academic Publishers; Leiden, Netherlands: 2015.

- 9.Roffman I, Savage-Rumbaugh S, Rubert-Pugh E, et al. Preparation and use of varied natural tools for extractive foraging by bonobos (Pan Paniscus) Am. J. Phys. Anthropol. 2015;158:78–91. doi: 10.1002/ajpa.22778.

- 10.Herman L, Pack A, Morrel-Samuels P. In: Language and Communication: Comparative Perspectives. Roitblat H, Herman L, Nachtigall P, editors. Lawrence Erlbaum; Hillsdale, NJ: 1993. pp. 273–298.

- 11.Reiss D, Marino L. Mirror self-recognition in the bottlenose dolphin: A case of cognitive convergence. Proc. Natl. Acad. Sci. 2001;98:5937–5942. doi: 10.1073/pnas.101086398.

- 12.Schusterman R, Thomas J, Wood F. Dolphin cognition and behavior: A Comparative Approach. Taylor & Francis Group; Hillsdale, NJ: 2013.

- 13.Bluff L, Weir A, Rutz C, et al. Tool-Related Cognition in New Caledonian Crows. Comparative Cognition & Behavior Reviews. 2007;2:1–25.

- 14.Taylor A, Hunt G, Holzhaider J, et al. Spontaneous Metatool Use by New Caledonian Crows. Curr. Bio. 2007;17:1504–1507. doi: 10.1016/j.cub.2007.07.057.

- 15.Kaplan G. Animal communication. Wiley Interdisciplinary Reviews: Cognitive Science. 2014;5:661–677. doi: 10.1002/wcs.1321.

- 16.Snowdon C. Language capacities of nonhuman animals. Am. J. Phys. Anthropol. 1990;33:215–243.

- 17.Deacon TW. The Symbolic Species. W.W. Norton; New York, NY: 1997.

- 18.Hauser M, Chomsky N, Fitch W. The Faculty of Language: What Is It, Who Has It, and How Did It Evolve? Science. 2002;298:1569–1579. doi: 10.1126/science.298.5598.1569.

- 19.Premack D. Human and animal cognition: Continuity and discontinuity. Proc. Natl. Acad. Sci. 2007;104:13861–13867. doi: 10.1073/pnas.0706147104.

- 20.Kinsella A. Language evolution and syntactic theory. Cambridge University Press; Cambridge, MA: 2009.

- 21.Maess B, Koelsch S, Gunter TC, et al. Musical syntax is processed in Broca's Area: An MEG study. Nat. Neurosci. 2001;4:540–545. doi: 10.1038/87502.

- 22.Patel A. Language, music, syntax and the brain. Nat. Neurosci. 2003;6:674–681. doi: 10.1038/nn1082.

- 23.Maruyama M, Pallier C, Jobert A, et al. The cortical representation of simple mathematical expressions. Neuroimage. 2012;61:1444–1460. doi: 10.1016/j.neuroimage.2012.04.020.

- 24.de Villiers J, de Villiers P. In: Children's Reasoning and the Mind. Mitchell P, Riggs KJ, editors. Psychology Press; Hove, UK: 2000.

- 25.Novick JM, Trueswell JC, Thompson-Schill SL. Cognitive control and parsing: Reexamining the role of Broca's area in sentence comprehension. Cogn. Affect. & Behavioral Neuroscience. 2005;5:263–281. doi: 10.3758/cabn.5.3.263.

- 26.Hermer-Vazquez L, Spelke ES, Katsnelson AS. Sources of flexibility in human Cognition: Dual-Task Studies of Space and Language. Cognitive Psychol. 1999;39:3–36. doi: 10.1006/cogp.1998.0713.

- 27.Carruthers P. In: Distinctively human thinking: Modular precursors and components. Carruthers P, Laurence S, Stitch S, editors. Oxford University Press; Oxford, UK: 2005. pp. 69–88.

- 28.Fedorenko E, Hsieh P-J, Nieto-Castañon A, et al. A new method for fMRI investigations of language: Defining ROIs functionally in individual subjects. J. of Neurophysiol. 2010;104:1177–1194. doi: 10.1152/jn.00032.2010.

- 29.Fedorenko E, Thompson-Schill S. Reworking the language network. Trends In Cogn. Sci. 2014;18:120–126. doi: 10.1016/j.tics.2013.12.006.

- 30.Overath T, McDermott J, Zarate J, et al. The cortical analysis of speech-specific temporal structure revealed by responses to sound quilts. Nat. Neurosci. 2015;18:903–911. doi: 10.1038/nn.4021.

- 31.Peretz I, Vuvan D, Lagrois M, et al. Neural overlap in processing music and speech. Philos. T. Roy. Soc. B. 2015;370:20140090. doi: 10.1098/rstb.2014.0090.

- 32.Norman-Haignere S, Kanwisher N, McDermott J. Distinct Cortical Pathways for Music and Speech Revealed by Hypothesis-Free Voxel Decomposition. Neuron. 2015;88:1281–1296. doi: 10.1016/j.neuron.2015.11.035.

- 33.Baker CI, Liu J, Wald LL, et al. Visual word processing and experiential origins of functional selectivity in human extrastriate cortex. Proc. Natl. Acad. Sci. 2007;104:9087–9092. doi: 10.1073/pnas.0703300104.

- 34.Hamame CM, Szwed M, Sharman M, et al. Dejerine's reading area revisited with intracranial EEG: Selective responses to letter strings. Neurology. 2013;80:602–603. doi: 10.1212/WNL.0b013e31828154d9.

- 35.Dronkers N. A new brain region for coordinating speech articulation. Nature. 1996;384:159–161. doi: 10.1038/384159a0.

- 36.Hillis A, Work M, Barker P, et al. Re-examining the brain regions crucial for orchestrating speech articulation. Brain. 2004;127:1479–1487. doi: 10.1093/brain/awh172.

- 37.Bonilha L, Rorden C, Appenzeller S, et al. Gray matter atrophy associated with duration of temporal lobe epilepsy. Neuroimage. 2006;32:1070–1079. doi: 10.1016/j.neuroimage.2006.05.038.

- 38.Whiteside SP, Dyson L, Cowell PE, et al. The Relationship Between Apraxia of Speech and Oral Apraxia: Association or Dissociation? Arch. Clin. Neuropsych. 2015;30:670–682. doi: 10.1093/arclin/acv051.

- 39.Longcamp M, Lagarrigue A, Nazarian B, et al. Functional specificity in the motor system: Evidence from coupled fMRI and kinematic recordings during letter and digit writing. Hum. Brain Mapp. 2014;35:6077–6087. doi: 10.1002/hbm.22606.

- 40.Swinney DA. Lexical access during sentence comprehension: (Re)consideration of context effects. J. Verb. Learn. Verb. Behav. 1979;18:645–659.

- 41.Fodor J. The Modularity of Mind. MIT Press; Cambridge, MA: 1983.

- 42.Marslen-Wilson WD. Functional parallelism in spoken word-recognition. Cognition. 1987;25:71–102. doi: 10.1016/0010-0277(87)90005-9.

- 43.Marslen-Wilson WD. Sentence perception as an interactive parallel process. Science. 1975;189:226–228. doi: 10.1126/science.189.4198.226.

- 44.Altmann GTM, Kamide Y. Incremental interpretation at verbs: restricting the domain of subsequent reference. Cognition. 1999;73:247–264. doi: 10.1016/s0010-0277(99)00059-1.

- 45.Hale J. A Probabilistic Earley Parser as a Psycholinguistic Model. Proceedings of the Second Meeting of the North American Chapter of the Association for Computational Linguistics. 2001.

- 46.DeLong KA, Urbach TP, Kutas M. Probabilistic word pre-activation during language comprehension inferred from electrical brain activity. Nat. Neurosci. 2005;8:1117–1121. doi: 10.1038/nn1504.

- 47.Van Berkum JJA, Brown CM, Zwitserlood P, et al. Anticipating upcoming words in discourse: evidence from ERPs and reading times. J. Exp. Psychol. Learn. 2005;31:443. doi: 10.1037/0278-7393.31.3.443.

- 48.Dikker S, Van Lier EH. The interplay between syntactic and conceptual information: agreement domains in FDG. Studies in Functional Discourse Grammar. 2005;26:83.

- 49.Levy R. Expectation-based syntactic comprehension. Cognition. 2008;106:1126–1177. doi: 10.1016/j.cognition.2007.05.006.

- 50.Smith NJ, Levy R. The effect of word predictability on reading time is logarithmic. Cognition. 2013;128:302–319. doi: 10.1016/j.cognition.2013.02.013.

- 51.Caramazza A, Hillis AE. Spatial representation of words in the brain implied by studies of a unilateral neglect patient. Nature. 1990;346:267–269. doi: 10.1038/346267a0.

- 52.Plaut DC. Double dissociation without modularity: evidence from connectionist neuropsychology. J. Clin. Exp. Neuropsychol. 1995;17:291–321. doi: 10.1080/01688639508405124.

- 53.Fedorenko E, Nieto-Castañon A, Kanwisher N. Lexical and syntactic representations in the brain: An fMRI investigation with multi-voxel pattern analyses. Neuropsychologia. 2012;50:499–513. doi: 10.1016/j.neuropsychologia.2011.09.014.

- 54.Blank I, Balewski Z, Mahowald K, et al. Syntactic processing is distributed across the language system. Neuroimage. 2016;127:307–323. doi: 10.1016/j.neuroimage.2015.11.069.

- 55.Bautista A, Wilson AM. Neural responses to grammatically and lexically degraded speech. Lang. Cogn. Neurosci. 2016;31:1–8. doi: 10.1080/23273798.2015.1123281.

- 56.Saur D, Kreher BW, Schnell S, et al. Ventral and dorsal pathways for language. Proc. Natl. Acad. Sci. 2008;105:18035–18040. doi: 10.1073/pnas.0805234105.

- 57.Blank I, Kanwisher N, Fedorenko E. A functional dissociation between language and multiple-demand systems revealed in patterns of BOLD signal fluctuations. J. Neurophysiol. 2014;112:1105–1118. doi: 10.1152/jn.00884.2013.

- 58.Tie Y, Rigolo L, Norton IH, et al. Defining language networks from resting-state fMRI for surgical planning: a feasibility study. Hum. Brain Mapp. 2013;35:1018–1030. doi: 10.1002/hbm.22231.

- 59.Nieto-Castañón A, Fedorenko E. Subject-specific functional localizers increase sensitivity and functional resolution of multi-subject analyses. Neuroimage. 2012;63:1646–1669. doi: 10.1016/j.neuroimage.2012.06.065.

- 60.Fedorenko E, Behr M, Kanwisher N. Functional specificity for high-level linguistic processing in the human brain. Proc. Natl. Acad. Sci. 2011;108:16428–16433. doi: 10.1073/pnas.1112937108.

- 61.Norman-Haignere S, Kanwisher N, McDermott J. Distinct Cortical Pathways for Music and Speech Revealed by Hypothesis-Free Voxel Decomposition. Neuron. 2015;88:1281–1296. doi: 10.1016/j.neuron.2015.11.035.

- 62.Fedorenko E, McDermott J, Norman-Haignere S, et al. Sensitivity to musical structure in the human brain. J. Neurophysiol. 2012;108:3289–3300. doi: 10.1152/jn.00209.2012.

- 63.Wynn K. Children's understanding of counting. Cognition. 1990;36:155–193. doi: 10.1016/0010-0277(90)90003-3.

- 64.Xu F, Carey S, Quint N. The emergence of kind-based object individuation in infancy. Cognitive Psychol. 2004;49:155–190. doi: 10.1016/j.cogpsych.2004.01.001.

- 65.Feigenson L, Dehaene S, Spelke E. Core systems of number. Trends Cogn. Sci. 2004;8:307–314. doi: 10.1016/j.tics.2004.05.002.

- 66.Whalen J, Gallistel C, Gelman R. Nonverbal Counting in Humans: The Psychophysics of Number Representation. Psychol. Sci. 1999;10:130–137.

- 67.Gallistel C. The organization of learning. MIT Press; Cambridge, MA: 1990.

- 68.Hauser M, Carey S. Spontaneous representations of small numbers of objects by rhesus macaques: Examinations of content and format. Cognitive Psychol. 2003;47:367–401. doi: 10.1016/s0010-0285(03)00050-1.

- 69.Gordon PC, Hendrick R, Levine WH. Memory-load interference in syntactic processing. Psychol. Sci. 2002;13:425–430. doi: 10.1111/1467-9280.00475.

- 70.Pica P, Lemer C, Izard V, et al. Exact and Approximate Arithmetic in an Amazonian Indigene Group. Science. 2004;306:499–503. doi: 10.1126/science.1102085.

- 71.Frank M, Everett D, Fedorenko E, et al. Number as a cognitive technology: Evidence from Pirahã language and cognition. Cognition. 2008;108:819–824. doi: 10.1016/j.cognition.2008.04.007.

- 72.Dehaene S. The neural basis of the Weber–Fechner law: a logarithmic mental number line. Trends Cogn. Sci. 2003;7:145–147. doi: 10.1016/s1364-6613(03)00055-x.

- 73.Dehaene S, Cohen L. Cerebral Pathways for Calculation: Double Dissociation between Rote Verbal and Quantitative Knowledge of Arithmetic. Cortex. 1997;33:219–250. doi: 10.1016/s0010-9452(08)70002-9.

- 74.Dehaene S, Spelke E, Pinel P. Sources of mathematical thinking: Behavioral and brain-imaging evidence. Science. 1999;284:970–974. doi: 10.1126/science.284.5416.970.

- 75.Stanescu-Cosson R, Pinel P, van de Moortele P, et al. Understanding dissociations in dyscalculia: A brain imaging study of the impact of number size on the cerebral networks for exact and approximate calculation. Brain. 2000;123:2240–2255. doi: 10.1093/brain/123.11.2240.

- 76.Van Harskamp N, Cipolotti L. Selective Impairments for Addition, Subtraction and Multiplication. Implications for the Organisation of Arithmetical Facts. Cortex. 2001;37:363–388. doi: 10.1016/s0010-9452(08)70579-3.

- 77.Delazer M, Girelli L, Granà A, et al. Number Processing and Calculation – Normative Data from Healthy Adults. Clin. Neuropsychol. 2003;17:331–350. doi: 10.1076/clin.17.3.331.18092.

- 78.Schwarzlose R, Baker C, Kanwisher N. Separate Face and Body Selectivity on the Fusiform Gyrus. J. Neurosci. 2005;25:11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005.

- 79.Baldo JV, Dronkers NF. Neural correlates of arithmetic and language comprehension: a common substrate? Neuropsychologia. 2007;45:229–235. doi: 10.1016/j.neuropsychologia.2006.07.014.

- 80.Coltheart M. In: Cognitive neuropsychology and the study of reading. Posner MI, Marin OSM, editors. Lawrence Erlbaum Associates; Hillsdale, NJ: 1985. pp. 3–37.

- 81.Shallice T. From Neuropsychology to Mental Structure. Cambridge University Press; Cambridge, UK: 1988.

- 82.Varley R, Klessinger N, Romanowski C, et al. Agrammatic but numerate. Proc. Natl. Acad. Sci. 2005;102:3519–3524. doi: 10.1073/pnas.0407470102.

- 83.Zago L, Pesenti M, Mellet E, et al. Neural correlates of simple and complex mental calculation. Neuroimage. 2001;13:314–327. doi: 10.1006/nimg.2000.0697.

- 84.Monti M, Parsons L, Osherson D. Thought Beyond Language: Neural Dissociation of Algebra and Natural Language. Psychol. Sci. 2012;23:914–922. doi: 10.1177/0956797612437427.

- 85.Trbovich PL, LeFevre JA. Phonological and visual working memory in mental addition. Mem. Cognition. 2003;31:738–745. doi: 10.3758/bf03196112.

- 86.Gray J, Thompson P. Neurobiology of intelligence: science and ethics. Nat. Rev. Neurosci. 2004;5:471–482. doi: 10.1038/nrn1405.

- 87.Miller E, Cohen J. An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- 88.Duncan J. The multiple-demand (MD) system of the primate brain: Mental programs for intelligent behaviour. Trends Cogn. Sci. 2010;14:172–179. doi: 10.1016/j.tics.2010.01.004.

- 89.Duncan J, Schramm M, Thompson R, et al. Task rules, working memory, and fluid intelligence. Psychon. Bull. Rev. 2012;19:864–870. doi: 10.3758/s13423-012-0225-y.

- 90.Kaan E, Swaab TY. The brain circuitry of syntactic comprehension. Trends Cogn. Sci. 2002;6:350–356. doi: 10.1016/s1364-6613(02)01947-2.

- 91.Fedorenko E. The role of domain-general cognitive control in language comprehension. Front. Psychol. 2014;5:335. doi: 10.3389/fpsyg.2014.00335.

- 92.Fedorenko E, Gibson E, Rohde D. The nature of working memory capacity in sentence comprehension: Evidence against domain-specific working memory resources. J. Mem. Lang. 2006;54:541–553.

- 93.Rodd JM, Davis MH, Johnsrude IS. The neural mechanisms of speech comprehension: fMRI studies of semantic ambiguity. Cereb. Cortex. 2005;15:1261–1269. doi: 10.1093/cercor/bhi009.

- 94.Novais-Santos S, Gee J, Shah M, et al. Resolving sentence ambiguity with planning and working memory resources: Evidence from fMRI. Neuroimage. 2007;37:361–378. doi: 10.1016/j.neuroimage.2007.03.077.

- 95.January D, Trueswell JC, Thompson-Schill SL. Co-localization of Stroop and syntactic ambiguity resolution in Broca's area: Implications for the neural basis of sentence processing. J. Cogn. Neurosci. 2009;21:2434–2444. doi: 10.1162/jocn.2008.21179.

- 96.Fedorenko E, Duncan J, Kanwisher N. Language-Selective and Domain-General Regions Lie Side by Side within Broca's Area. Curr. Biol. 2012;22:2059–2062. doi: 10.1016/j.cub.2012.09.011.

- 97.Monti MM, Osherson D, Martinez M, et al. Functional neuroanatomy of deductive inference: A language-independent distributed network. Neuroimage. 2007;37:1005–1016. doi: 10.1016/j.neuroimage.2007.04.069.

- 98.Monti M, Parsons L, Osherson D. The boundaries of language and thought in deductive inference. Proc. Natl. Acad. Sci. 2009;106:12554–12559. doi: 10.1073/pnas.0902422106.

- 99.Goel V. Anatomy of deductive reasoning. Trends Cogn. Sci. 2007;11:435–441. doi: 10.1016/j.tics.2007.09.003.