Abstract

Objective

To pilot public health interventions aimed at women potentially interested in maternity care, using campaigns on social media (Twitter), an online social network (Facebook), and an online search engine (Google Search).

Data Sources/Study Setting

Primary data from Twitter, Facebook, and Google Search on users of these platforms in Los Angeles between March and July 2014.

Study Design

Observational study measuring the responses of targeted users of Twitter, Facebook, and Google Search who were exposed to our sponsored messages inviting them to begin engaging by clicking through to a study website with maternity care quality information for the Los Angeles market.

Principal Findings

Campaigns reached a little more than 140,000 consumers each day across the three platforms and generated a little more than 400 engagements each day. Facebook and Google Search had broader reach, better engagement rates, and lower costs than Twitter. Costs to reach 1,000 targeted users were roughly in the same range as those of less well‐targeted radio and TV advertisements, while initial engagements (a user clicking through an advertisement) cost less than $1 each on Facebook and Google Search.

Conclusions

Our results suggest that commercially available online advertising platforms in wide use by other industries could play a role in targeted public health interventions.

Keywords: Social media, social networks, maternity care quality

Widespread attempts have been made to publicize clinical performance measures aimed at improving the quality of delivered care (Agency for Healthcare Research and Quality, 2011). Such “report cards” exist for a number of reasons: to educate consumers and referrers, to illustrate and enhance potential choices of provider, to allow greater autonomy, and to improve efficiency in decision making.

The National Quality Forum currently endorses 743 standards (National Quality Forum, 2011). Public report cards administered by health insurance plans are similarly available nationwide; for example, the National Committee for Quality Assurance lists 136 national plans reporting some measure of physician‐ or hospital‐specific performance (National Committee for Quality Assurance, 2011). At the federal level, CMS launched the Hospital Compare public reporting website in 2005.

Given how much potentially valuable information is now accessible, stakeholders clearly need and want to inform the public of these resources. Traditional marketing campaigns may be less potent in an increasingly digital, technology‐enabled society, where home broadband and high‐bandwidth mobile Internet on ubiquitous smartphones allow real‐time searches for online information and live interactions on social media and social networks. Consumers want to search for and create content online and to interact with like‐minded others. The Pew Internet and American Life Project finds that more than 50 percent of online adults between the ages of 18 and 55 years use social networking sites, while one in four Americans report that the Internet has helped them deal with at least one major health‐related life decision (Pew Research Internet Project, 2014).

However, public health messaging that does use these virtual channels is typically highly passive. Merely tweeting broadly about the importance of seasonal flu vaccination is unlikely to be sufficient. Likewise, static websites offering comparative hospital quality information will not work unless consumers become aware of the information and have confidence in it (Huesch, Currid‐Halkett, and Doctor 2014).

It is reasonable to ask whether we have fully exploited technology‐based solutions to empower and better inform patients. In other industries, digital advertising through social media, online social networks, and Internet search engines is premised on the valuable data that such online platforms build up on their users.

Objective

In this article, we describe a pilot study of an intervention to provide information on maternity care quality to Los Angeles consumers plausibly interested in such information using three commercial campaigns on social media, an online social network, and an Internet search engine. Our objective is to understand whether we can quickly and cheaply reach out to prospective consumers of public health information using new digital technology. This pilot explicitly did not seek to actually change the health‐related behavior of consumers nor did it ascertain whether consumers understood and acted on such public health information.

Empirical Setting

We chose to focus on the maternity care setting for a number of important reasons, aside from the obvious aspect that maternity care is a highly “shoppable” condition in which consumers have substantial time to acquire information and make decisions on health care utilization.

Maternity care is the second most common reason for hospitalization and the fourth most common reason for seeking ambulatory care (Sakala and Corry 2008). It includes the top three procedures billed to Medicaid or private payors and accounts for more than a fourth of all Medicaid‐billed hospital charges and nearly a sixth of all hospital charges billed to private insurers (Agency for Healthcare Research and Quality, 2008).

Yet on key objective, evidence‐based metrics such as the appropriate use of Cesarean sections (Main et al. 2011), the proportion of women who received antenatal care within the first trimester, low birth weight infant deliveries, infant mortality, and maternal death rates, progress has either moved away from federal targets or fallen short of them (U.S. Department of Health and Human Services, 2006).

Reflecting this quality imperative, a number of easily accessible websites run by state government, federal government, and commercial entities provide substantial data on local, regional, and national maternity care quality by named hospital. Our study website provided links to these online report cards; the first author will provide, on request, detailed additional information on the quality metrics listed on each of these report cards.

Conceptual Framework

We have employed three complementary frameworks in this study, each of which represents a different perspective on what we seek to do. At the highest level, we see this pilot study as being a classical public health intervention. The theoretical underpinnings of this intervention are in social cognitive theory, while the practical bases lie in standard commercial marketing management and sales management.

Public Health Intervention Perspective

Using a classic public health model (Keller et al. 1998), our study is a population‐based, individual‐focused, primary prevention, public health intervention which combines elements of Outreach, Social Marketing, and Health Teaching.

As an Outreach intervention, we seek to locate individuals at risk of receiving less than optimal maternity care and to ensure their access to information that can improve their maternity and delivery care by helping them choose a hospital of higher quality, as reported by a public hospital reporting website. As a Social Marketing intervention, we seek to use commercial marketing tools and techniques to influence these individuals and their beliefs and decisions. As a Health Teaching intervention, we intend to communicate facts and ideas to change the beliefs and behaviors of those individuals.

Social Cognitive Theoretical Perspective

The theoretical grounding of our intervention is in social cognitive theory. A target's susceptibility to outside influences can be understood in light of three goals fundamental to rewarding human functioning (Cialdini and Goldstein 2004): targets are motivated to form accurate perceptions of reality and react accordingly, to develop and preserve meaningful social relationships, and to maintain a favorable self‐concept. Of particular importance are social norms, that is, behavioral expectations within a particular group that can influence the behavior of group members through a desire to conform to actual behavior (the descriptive norm) or to sanctioned behavior (the injunctive norm).

Previous research has demonstrated that social norms of appropriate behavior can exert a stronger effect on behavior than modest economic incentives or self‐interest (Heyman and Ariely 2004; Griskevicius, Cialdini, and Goldstein 2008; Nolan et al. 2008). We are especially likely to follow the lead of others whom we perceive to be similar to ourselves (Hornstein, Fisch, and Holmes 1968; Murray et al. 1984; White, Hogg, and Terry 2002).

From an economic perspective, social norms may convey information concerning appropriate behavior or social consequences of failing to conform; however, behavioral studies find that these effects persist even when behavior is unobservable (e.g., littering when nobody is around) and social information is not particularly informative to one's own preferences (e.g., towel recycling).

We expect that engaging with consumers to provide accurate perceptions of the reality of differences in hospital quality, and providing information regarding the actions of geographically and psychosocially similar consumers in choosing high‐quality hospitals for maternity care (a descriptive social norm), as well as providing information that there exist nationally recommended guidelines for avoiding unnecessary Cesarean sections (an injunctive norm) will lead consumers to choose to visit the recommended website (i.e., the study website) and click‐through to existing sources of publicly reported data on hospital quality.

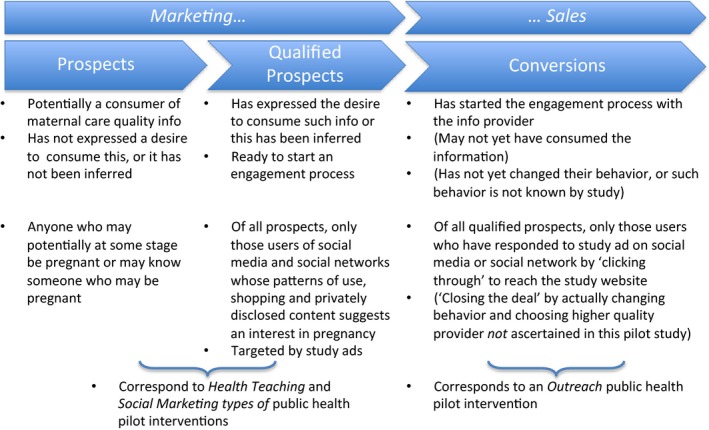

Marketing Perspective

Well‐known sales management terms can be applied to electronic patient education and information provision. We regard patient education as an actual sales process, in which information is being sold even when it is being given away. In line with this sales process, potential customers are initially prospects, then become qualified prospects, and are then converted to actual customers (see Figure 1).

Figure 1.

Conceptual Framework

We compete for some share of the customer's mind in an environment where a patient's fixed attention is increasingly divided among many screens (e.g., TV, PC, and mobile) and many attention‐seekers. Online consumers in the United States are already estimated (eMarketer, 2012) to be exposed to nearly $22 billion worth of small advertisements that appear on paid search engine results (e.g., Google, Bing, Yahoo), and another $21 billion in display advertisements (e.g., on almost any website and on online social media and social networks).

Although we may offer our information for free, the potential customer or prospect faces noncash costs in acquiring it: the prospect must invest time and effort in accessing and understanding the information, and that time and effort usually carry opportunity costs. Accordingly, we must sell the patient on the worth of these investments, partly through attractive and eye‐catching advertisements and partly through the intrinsic value of the information that we seek to make available.

Qualified prospects are those who have expressed an interest in the product or service offered. We estimated that our total qualified prospects would be approximately 500,000–750,000 women and close friends, relatives, and partners at any point in time in Los Angeles county. We based this estimate on the 300,000 annual births in the county and the simplifying assumption of persisting interest throughout a typical 9‐month pregnancy. This estimate was in line with recent findings of more than 1 million searches per month on Google in Los Angeles regarding pregnancy, more than 50,000 monthly searches for maternity care providers, and around 20,000 monthly searches for hospital quality information (Huesch, Currid‐Halkett, and Doctor 2014).
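The arithmetic behind this estimate can be written out explicitly. The sketch below is a minimal illustration, assuming a steady birth rate, so that the number of ongoing pregnancies at any moment is about annual births × 9/12. The text does not state how many partners, relatives, and friends it counts per pregnancy, so the code back‐solves that multiplier from the 500,000–750,000 range rather than asserting it.

```python
ANNUAL_BIRTHS = 300_000   # annual births in Los Angeles County (from the text)
PREGNANCY_MONTHS = 9      # assumed months of persisting interest per pregnancy


def concurrent_pregnancies(annual_births: int, months: int = PREGNANCY_MONTHS) -> float:
    """Steady-state number of ongoing pregnancies: births per month times months of interest."""
    return annual_births * months / 12


def implied_multiplier(prospects: float, pregnancies: float) -> float:
    """Interested people per ongoing pregnancy (the woman plus partners, relatives, friends)."""
    return prospects / pregnancies


pregnant_now = concurrent_pregnancies(ANNUAL_BIRTHS)
low = implied_multiplier(500_000, pregnant_now)
high = implied_multiplier(750_000, pregnant_now)
print(f"{pregnant_now:,.0f} ongoing pregnancies; {low:.1f}-{high:.1f} interested people each")
```

On these assumptions, the estimate corresponds to roughly two to three interested people per ongoing pregnancy, the pregnant woman included.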

In our setting, the process of qualification is predominantly owned by the advertising platforms we access, which serve us prospects that they have deemed to be interested in our information on maternity care quality. Each of these platforms has different strengths and weaknesses. For example, a young woman in Los Angeles who uses Google to privately search the web regarding early pregnancy care options may have left a digital footprint with Google that could include every search she has ever made (if she has signed up for Gmail, uses Google's social network, Google+, or uses the same home computer for all her searches). If she mentions pregnancy concerns privately to her Facebook friends, publicly follows a maternity care account on Twitter, or privately clicks through Tweets to maternity care websites, then Facebook and Twitter can similarly infer relevant interests.

Finally, qualified prospects are incented to undertake some behavior that closes the deal. By navigating to a website, liking or following a Facebook page, following a Twitter account, or retweeting a Tweet, our qualified prospects have been converted to actual customers in this conceptual framework. Clearly, the ultimate objective of public health interventions that seek to educate patients on health care quality is in effecting actual change in decision making and health care utilization. However, in this pilot study, we sought merely to achieve a proof of concept of being able to reach and provide such information to a large number of consumers.

Methods

We used three online platforms, each a leading example of a type of value‐added Internet service. We contracted with Facebook, a platform that allows users to form social networks online, with Twitter, a platform that allows users to post brief 140‐character messages online, and with Google Search, a platform that allows users to search the Internet.

Our study team has produced detailed guides (see Appendices SA2 and SA3), including step‐by‐step recordings of the platform websites, on how these commercial arrangements are set up, maintained and adjusted, and ultimately wound down.

Our terms of trade with all three platforms were generally similar, although the individual technical details and terms varied. We sought to purchase access to qualified prospects for information provision on maternity care quality. This qualification is important to the platform: too many poorly targeted advertisements can affect user loyalty. It was therefore in each platform's interest—as well as ours—to present our advertisements only to those users who were likely to be interested.

We provided overall requested demographics (women, Los Angeles city + 25 miles or Los Angeles county, aged 18–49 if available, including Spanish speaking if available and broken out separately) and customer interest information (e.g., keywords such as pregnant, dar a luz) to the platforms to facilitate their qualification. Platforms drew from their pool of users but did not make detailed lists of exposed users available to us for privacy reasons. We thus relied completely on the integrity of the platform's respective user databases. Especially with regard to Twitter, where users provide limited account information on location and demographics and interests, the pool of users may have been selected partially based on Twitter's inferences of user behavior and interests.

We wished to limit our financial risk by paying only per converted customer. In this study, “conversion” means an initial engagement in which the customer clicks through an advertisement on Facebook or Google Search and arrives at our test website. For Twitter, conversion was measured in two ways. The first is analogous to the narrow definition of conversion used for Facebook and Google Search. The second is a broader, looser measure of engagement tailored to Twitter's social media business model: in addition to click‐throughs, it includes following our account, expanding an advertisement to read the full copy, favoriting the Tweet, retweeting, or replying. Twitter insists on payment on the basis of this broader measure, so our comparable costs per click‐through for Twitter include the costs paid for users who did not click through to our website but otherwise engaged with our message as Twitter defines engagement.
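Because Twitter bills on the broad engagement measure, the effective price of a click‐through is total spending divided by click‐throughs alone. The sketch below uses only the Twitter campaign totals reported in the Results to show how far apart the two unit prices are; the helper name `cost_per` is illustrative.

```python
def cost_per(spend: float, events: int) -> float:
    """Average cost per counted event (engagement or click-through)."""
    if events <= 0:
        raise ValueError("events must be positive")
    return spend / events


twitter_spend = 20_542       # total Twitter spending (USD)
broad_engagements = 30_858   # clicks, follows, expands, favorites, retweets, replies
click_throughs = 3_798       # narrow measure: clicks through to the study website

per_engagement = cost_per(twitter_spend, broad_engagements)
per_click = cost_per(twitter_spend, click_throughs)
print(f"${per_engagement:.2f} per broad engagement vs ${per_click:.2f} per click-through")
```

The roughly eightfold gap is why the narrow measure is the fair basis for comparing Twitter with Facebook and Google Search.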

For each platform, we negotiated prices per customer and/or set bids to reach such customers, and were able to coarsely tune such prices and/or bids throughout the campaign to test whether reach or conversion increased. However, it is important to understand that we are bidding in a “sealed bid” auction for position in search results and in Facebook feeds so that reach and conversion are jointly determined by the competitive actions of very many marketers, each seeking to reach similar sets of customers.

In general, bids were a function of our advertisement's popularity with users, our desired placement within a user's view, and our desired reach. We paid monthly on electronic invoices, using credit cards as the payment mechanism.
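To make the auction setting concrete, the sketch below implements a textbook generalized second‐price auction with a quality weight, one common simplified model of sponsored‐search auctions. It is an assumption for illustration only: the actual Google, Facebook, and Twitter mechanisms are proprietary and differ from this, and the advertiser names, bids, and quality scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    advertiser: str
    max_cpc: float   # sealed maximum bid per click (USD)
    quality: float   # hypothetical 0-1 score standing in for the ad's popularity with users


def run_auction(bids: list[Bid], slots: int) -> list[tuple[str, float]]:
    """Rank sealed bids by bid x quality; each winner pays the lowest price per click
    that keeps its rank above the next ad (generalized second-price rule)."""
    ranked = sorted(bids, key=lambda b: b.max_cpc * b.quality, reverse=True)
    results = []
    for i, winner in enumerate(ranked[:slots]):
        if i + 1 < len(ranked):
            nxt = ranked[i + 1]
            price = nxt.max_cpc * nxt.quality / winner.quality
        else:
            price = 0.0  # no competitor below; simplified zero reserve price
        results.append((winner.advertiser, round(min(price, winner.max_cpc), 2)))
    return results


# Hypothetical bidders competing for two sponsored positions.
bids = [Bid("study", 1.00, 0.8), Bid("clinic_a", 1.50, 0.4), Bid("clinic_b", 0.80, 0.5)]
allocation = run_auction(bids, slots=2)
print(allocation)
```

In this toy example, the hypothetical study ad wins the top slot and pays less per click than the competitor ranked below it despite bidding less, because its higher quality weight raises its rank; this mirrors the point above that bids interact with an advertisement's popularity.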

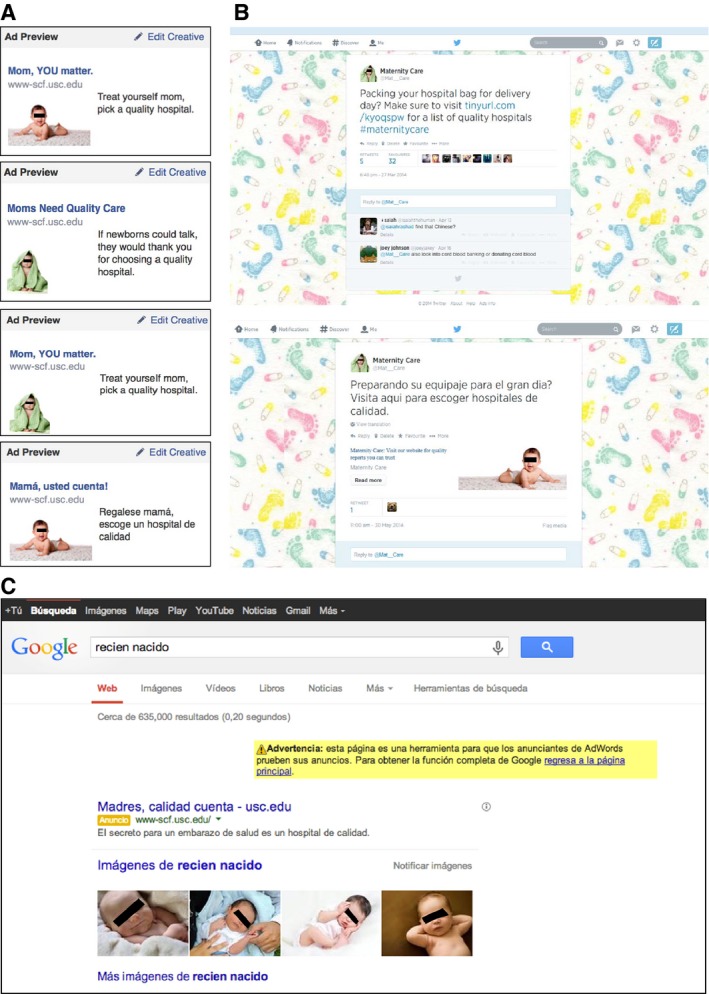

We provided each platform with advertising materials consisting of pictures and text copy embedding a URL (a hyperlink) to click through (Figures 2A, B, and C). We chose standard, neutral commercial images of a baby or of a mother and baby and, within dramatically limited word or character counts, used neutral, easy‐to‐understand language to “pitch” our messages. The lead author took overall responsibility for approving the team's pictures and text copy.

Figure 2.

- Notes: (A) Study website blinded. Copy and its location on the user's Facebook page were varied throughout the study; examples shown are representative of those used during the campaign. (B) Study website blinded. Copy was varied throughout the study; examples shown are representative of those used during the campaign. (C) Study website blinded. Copy was varied throughout the study; the example shown is representative of those used during the campaign. The position of this advertisement as the highest in the list of sponsored search positions on the first search page reflects a particular bid price. Other positions and pages were possible. Advertising text translates as “Mothers, quality counts. The secret for a healthy pregnancy lies in a high‐quality hospital.”

The platforms supplied us with detailed data on a daily frequency of how many users had been exposed to the advertisement (the number of impressions or the reach), and what behavior had resulted (whether the user had clicked through to our test website, as well as for Twitter only, the other measures of engagement listed above). With some restrictions and prior permission by the platforms, we were able to modify and fine‐tune advertising copy to test different responses.

Finally, we also used a fluent Latin American Spanish speaker to translate our promotional materials into Spanish for the large number of Hispanic prospects in our geographical area. We used ethnicity‐appropriate images and culturally appropriate text, including Central and South American slang terms where appropriate. We ran this part of the intervention separately from the English‐language campaigns.

We created a visually attractive study website that explained the study objective and that encouraged arriving users to consult the collated sources of local, state, or federal data on maternity care quality. For the Spanish‐language campaign, we similarly translated this website into Spanish. We enabled Google Analytics on this website to analyze the origin of incoming web traffic to ensure that the platforms' reports of outgoing traffic for which we were billed matched our analysis of incoming traffic. This match proved to be almost exact, with some additional organic traffic (<1 percent) coming to our website from repeat visitors who could have bookmarked our site and subsequently returned to consult it.
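The traffic check described above amounts to comparing, platform by platform, the clicks each platform billed for with the sessions the study website recorded from that source. A minimal sketch follows; the source labels and all counts are made‐up placeholders, not study data, and in practice the two counts rarely match exactly (for example, when a user clicks but leaves before the page loads).

```python
def reconcile(billed_clicks: dict[str, int], analytics_sessions: dict[str, int]) -> dict[str, float]:
    """Compare platform-billed clicks with sessions the website recorded per traffic source.

    Returns, for each billed platform, sessions / billed clicks (1.0 = exact match),
    plus 'unmatched_share': the fraction of all sessions from sources with no paid
    campaign (e.g., direct visits from bookmarks).
    """
    report = {
        platform: analytics_sessions.get(platform, 0) / clicks
        for platform, clicks in billed_clicks.items()
        if clicks > 0
    }
    total = sum(analytics_sessions.values())
    unmatched = sum(n for source, n in analytics_sessions.items() if source not in billed_clicks)
    report["unmatched_share"] = unmatched / total if total else 0.0
    return report


# Made-up placeholder counts for illustration only (not study data).
billed = {"facebook": 500, "google": 400}
sessions = {"facebook": 498, "google": 401, "(direct)": 5}
report = reconcile(billed, sessions)
print({source: round(value, 4) for source, value in report.items()})
```

A ratio close to 1 for each platform and a small unmatched share correspond to the near‐exact match and the under‐1‐percent organic traffic described above.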

We designed the campaign to use master's students as research assistants, one acting as “channel manager” for each of the three platforms and one administering the study website. The lead author supervised and managed the channel managers and approved all commercial negotiations, text copy, bid prices, and changes in website design. All statistics reported are purely descriptive, and no statistical comparisons or extrapolations to the population were attempted. This study was approved by the Institutional Review Board of the lead author's home institution.

Results

The summary performance measures across the three channels are described in terms of daily metrics and overall study performance in Table 1.

Table 1.

Daily and Overall Campaign Performance Measures

| Measure | Google Search | Facebook | Twitter | Total |
|---|---|---|---|---|
| Daily (all nonunique) | | | | |
| Reach | 92,100 | 33,685 | 17,507 | 143,292 |
| Engagements | 232 | 149 | 30 | 411 |
| Campaign (all nonunique) | | | | |
| Reach | 10,959,961 | 4,480,119 | 2,223,493 | 17,663,573 |
| Engagements | 27,676 | 19,923 | 3,798 | 51,397 |
| Spending | $25,177 | $13,689 | $20,542 | $59,408 |
| Cost per 1,000 reached | $2.30 | $3.06 | $9.24 | |
| Cost per click‐through | $0.91 | $0.69 | $5.41 | |

Reach (or impressions) is the number of users who saw or potentially saw the advertisement because they were exposed to it. Engagements is the number of users who responded to the advertisement by clicking through the website link it contained.
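The derived rows of Table 1 follow from the campaign totals: cost per 1,000 reached (CPM) is 1,000 × spending / reach, cost per click‐through is spending / click‐throughs, and the engagement rate is click‐throughs / reach. The Python sketch below recomputes them from the Table 1 totals so the rounding can be checked; the pooled row is an illustration and is not reported in the table.

```python
# Campaign totals from Table 1: (nonunique reach, click-through engagements, spending in USD).
CAMPAIGN = {
    "Google Search": (10_959_961, 27_676, 25_177),
    "Facebook": (4_480_119, 19_923, 13_689),
    "Twitter": (2_223_493, 3_798, 20_542),
}


def metrics(reach: int, clicks: int, spend: float) -> dict[str, float]:
    """Standard campaign ratios for one row of totals."""
    return {
        "cpm": 1000 * spend / reach,       # cost per 1,000 impressions
        "cpc": spend / clicks,             # cost per click-through
        "rate_pct": 100 * clicks / reach,  # engagement (click-through) rate, percent
    }


results = {name: metrics(*row) for name, row in CAMPAIGN.items()}
# Pooled totals: sum reach, clicks, and spending column by column.
pooled = metrics(*(sum(col) for col in zip(*CAMPAIGN.values())))

for name, m in {**results, "Pooled": pooled}.items():
    print(f"{name:14s} CPM ${m['cpm']:.2f}  per click ${m['cpc']:.2f}  rate {m['rate_pct']:.2f}%")
```

Pooled across platforms, this gives roughly $3.36 per 1,000 impressions and $1.16 per click‐through, in line with the Discussion's figure of a little more than $1 per qualified consumer.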

The individual platform performance measures are further described below at a summary level, with detailed platform performance data, website designs, advertising copy, and other details available from the lead author on request.

Our campaign on Facebook ran on consecutive days between March 20 and July 30, 2014. We spent a total of $13,689 to reach a nonunique total of 4,480,119 Facebook users. Of these, our total number of click‐through engagements achieved was 19,923 nonunique clicks and 17,764 unique user clicks.

Our overall unique engagement rate was thus 0.4 percent of those reached. On average, we reached 33,685 nonunique users each day, solicited 134 unique clicks each day, and spent $0.77 per unique user engaged. Overall, reaching 1,000 users (the CPM metric) cost us $3.06.

In the subset of our results in which we ran our Spanish‐language pilot, results were similar. Over 28 days we reached 1,496,818 nonunique Facebook users in Los Angeles and solicited 5,752 unique engagements at an average cost of $0.75 per unique user. The Spanish‐language unique engagement rate of 0.38 percent was similar to the overall campaign results.

Our campaign on Google Search ran on consecutive days between April 1 and July 28, 2014. We spent a total of $25,177 to reach a nonunique total of 10,959,961 Google Search users. Of these, we achieved 27,676 click‐through engagements, with no data on how many came from unique individuals.

Our overall engagement rate was thus 0.25 percent of those reached. On average, we reached 92,100 nonunique searchers each day, solicited 232 clicks each day, and spent $0.91 per click‐through. Overall, reaching 1,000 users cost us the least, at $2.30.

In the subset of our results in which we ran our Spanish‐language pilot, activity was different. Over 21 days, we reached only 203,463 nonunique Google Search users in Los Angeles and solicited 1,054 click‐through engagements at an average cost of $4.65 per click‐through. The Spanish‐language engagement rate of 0.52 percent was higher than that of the overall campaign.

Our campaign on Twitter ran between March 26 and July 31, 2014. We spent a total of $20,542 to reach a nonunique total of 2,223,493 Twitter users. Based on Twitter's broad measure of engagement, we achieved a total of 30,858 engagements for an engagement rate of 1.4 percent.

However, to compare our Twitter results properly with those from Facebook and Google, we focus on the comparable and far narrower measure of nonunique click‐through engagements. Here, our total was far lower, at 3,798 nonunique engagements, for an overall engagement rate of 0.17 percent of those reached. On average, we reached 17,507 nonunique users each day, solicited 29.9 nonunique clicks each day, and spent $5.41 per click‐through. Overall, reaching 1,000 users cost us the most, at $9.24.

In the subset of our results in which we ran our Spanish‐language pilot, results were similar. Over 17 days, we reached 336,439 nonunique Twitter users in Los Angeles and solicited 4,030 broad engagements and 430 nonunique click‐throughs at an average cost of $7.12 per click‐through. The Spanish‐language click‐through rate of 0.13 percent was slightly lower than that of the overall campaign.
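The Spanish‐language comparisons above can be put on one scale by dividing each subset's engagement rate by the corresponding overall rate. The sketch uses only counts reported in this section; following the text, Facebook rates use unique clicks, while Google Search and Twitter rates use nonunique click‐throughs.

```python
# (engagements, nonunique reach) from this section. Facebook uses unique clicks;
# Google Search and Twitter use nonunique click-throughs, as in the text.
SPANISH = {"Facebook": (5_752, 1_496_818), "Google Search": (1_054, 203_463), "Twitter": (430, 336_439)}
OVERALL = {"Facebook": (17_764, 4_480_119), "Google Search": (27_676, 10_959_961), "Twitter": (3_798, 2_223_493)}


def rate_pct(clicks: int, reach: int) -> float:
    """Engagement rate as a percentage of (nonunique) reach."""
    return 100 * clicks / reach


ratios = {}
for platform in OVERALL:
    spanish, overall = rate_pct(*SPANISH[platform]), rate_pct(*OVERALL[platform])
    ratios[platform] = spanish / overall  # 1.0 means the Spanish subset matched the overall rate
    print(f"{platform:14s} Spanish {spanish:.2f}%  overall {overall:.2f}%  ratio {ratios[platform]:.2f}")
```

A ratio near 1 means the Spanish‐language subset performed like the campaign overall; the Google Search ratio of about 2 corresponds to the doubled engagement rate noted above.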

Discussion

This study used Twitter, Facebook, and Google Search to reach out to consumers with potential interest in maternity care quality information. Contracting on commercial terms, we spent a little more than $500 a day across these three platforms to obtain engagements from a little more than 400 consumers each day to our study website containing relevant information.

As an initial proof of concept, we believe that this pilot has shown that it is possible to drive consumer interest toward a static website for a little more than $1 per qualified consumer on average. This intervention was also relatively simple to design and launch, and was greatly facilitated by the professional counterparties at each of the three platforms.

It is important to put our results into perspective and understand other options for reaching consumers. These options depend on whether the patient is identified or not, and whether in‐person or remote channels are used.

On one hand, if such patients are not yet identified, the total cost of in‐person outreach to unidentified high‐risk, low‐income pregnant women to enroll them in high‐risk antenatal care using case workers has previously been estimated in one study as $850 per enrollee. That study sought to enroll women at welfare offices, clinics, and in high‐potential residential areas in an urban environment (Brooks‐Gunn et al. 1989).

On the other hand, if such consumers are already identified by name, location, or phone number, then direct outreach to enroll them (i.e., to achieve a conversion) into a counseling or educational program is possible. Historically, the direct costs of in‐person outreach to female low‐income patients are around $50 per patient (typically 2–3 labor hours of staff time at $20/hour), falling to $3 per patient in labor costs for a phone call and to as little as $1 per patient in labor and postage costs for a letter to a known patient (Wagner et al. 2007).
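For orientation, the per‐person costs quoted above can be lined up against the pooled online cost per click‐through from Table 1. This is a rough sketch only: the outcomes differ (an enrollment, a completed contact, and a click‐through are not equivalent), and the $50 in‐person figure is taken as the midpoint of 2–3 staff hours at $20/hour.

```python
# Unit costs quoted in the text, in USD. Outcomes differ by row (enrollment,
# contact, or click-through), so this is an orientation aid, not a like-for-like comparison.
UNIT_COST_USD = {
    "case-worker outreach, unidentified women (per enrollee)": 850.0,  # Brooks-Gunn et al. 1989
    "in-person outreach, identified patient": 2.5 * 20.0,  # assumed midpoint: 2-3 staff hours at $20/hour
    "phone call, identified patient": 3.0,                  # Wagner et al. 2007
    "letter, identified patient": 1.0,                      # Wagner et al. 2007
    "online click-through, pooled (Table 1)": 59_408 / 51_397,  # total spending / total click-throughs
}

# List channels from most to least expensive per person.
for channel, cost in sorted(UNIT_COST_USD.items(), key=lambda item: item[1], reverse=True):
    print(f"{channel:58s} ${cost:8.2f}")
```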

Beyond in‐person outreach, cheaper health education and social marketing through traditional media channels have different cost structures. Here, almost all cost data concern reach to prospects or qualified prospects, not actual conversions. The relevant cost metric is CPM, or cost per thousand impressions, where one impression corresponds to reaching one prospect. It costs more than $30 to reach a thousand prospects using newspaper advertisements, around $20 for magazines, about $7 for radio, and $5 for TV advertisements (Flannagan 2015). Other, less well‐targeted modalities are cheaper still, such as billboard marketing, with an estimated CPM of $3–$5 (Grunert 2015).

Our CPM was comparable to these figures, lying between $2 and $10, although the quality of our impressions is likely to have been better than that of the less targeted media listed above because the platforms have better information on their users. Reassuringly, the CPM we achieved on social media and the social network is also very similar to the CPM reported for these digital channels by other relatively unsophisticated small business marketers, who tend to pay around $4–$20 on average (Grunert 2015).
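Because CPM is a price per 1,000 impressions, a fixed budget buys 1,000 × budget / CPM impressions. The sketch below applies that formula to the CPMs quoted in this section and in Table 1; the $1,000 budget is an arbitrary illustrative figure, and the newspaper value uses the lower bound of “more than $30”.

```python
# CPMs (USD per 1,000 impressions) quoted in this section and in Table 1.
CPM_USD = {
    "newspaper (lower bound)": 30.0,
    "magazine": 20.0,
    "Twitter (this study)": 9.24,
    "radio": 7.0,
    "TV": 5.0,
    "billboard (upper bound)": 5.0,
    "billboard (lower bound)": 3.0,
    "Facebook (this study)": 3.06,
    "Google Search (this study)": 2.30,
}


def impressions_for_budget(budget_usd: float, cpm_usd: float) -> float:
    """Impressions a budget buys at a given cost per 1,000 impressions."""
    return 1000 * budget_usd / cpm_usd


BUDGET_USD = 1_000  # arbitrary illustrative budget
for medium, cpm in sorted(CPM_USD.items(), key=lambda item: item[1]):
    print(f"{medium:28s} CPM ${cpm:5.2f} -> {impressions_for_budget(BUDGET_USD, cpm):>9,.0f} impressions")
```

This ranking reflects only the price per impression; as noted above, how well each impression is targeted differs across media.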

Limitations

We readily acknowledge several well‐known and important limitations of such campaigns. We do not know whether such information provision did or even can affect users' decision making and thus lead to a health benefit, and this was explicitly not a study objective or ascertainable in this design.

Future studies should track user behavior, identifying follow‐on behavior (e.g., which websites were visited subsequently), satisfaction (e.g., with the depth and breadth of information obtained), and actions taken (e.g., whether the choice of provider changed). Relatively simple trackers added to the website can record online behavior. These include outbound link trackers, for example, www.[insert your site URL here]/linktracker.php?link=[insert the URL of a subsequent site you wish to track outward traffic to], or the built‐in functionality of Google Analytics (https://www.google.com/analytics/) on your site. Such tools allow researchers to understand how effective a marketing campaign is at driving qualified consumers to destination sites.
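An outbound link tracker like the linktracker.php placeholder above works by routing each outbound click through the study site, logging it, and then redirecting the visitor. The sketch below shows that pattern with Python's standard library rather than PHP; the /linktracker path, the handling of the link parameter, and the allowlisted host reportcard.example.org are hypothetical, and the allowlist is there so the tracker cannot be abused as an open redirect.

```python
import logging
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Hypothetical allowlist: only the report-card sites linked from the study website.
ALLOWED_HOSTS = {"reportcard.example.org"}


def outbound_target(request_path: str) -> Optional[str]:
    """Return the validated destination from /linktracker?link=..., or None."""
    parsed = urlparse(request_path)
    if parsed.path != "/linktracker":
        return None
    links = parse_qs(parsed.query).get("link")  # parse_qs also percent-decodes the value
    if not links:
        return None
    target = urlparse(links[0])
    # Refuse unknown destinations so the tracker is not an open redirect.
    if target.scheme in ("http", "https") and target.hostname in ALLOWED_HOSTS:
        return links[0]
    return None


class LinkTracker(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        target = outbound_target(self.path)
        if target is None:
            self.send_error(404)
            return
        # Log the outbound click (visible once logging is configured at INFO level).
        logging.info("outbound click to %s", target)
        self.send_response(302)
        self.send_header("Location", target)
        self.end_headers()


def serve(port: int = 8000) -> None:
    """Run the tracker locally until interrupted."""
    HTTPServer(("localhost", port), LinkTracker).serve_forever()
```

To try it locally, one could call logging.basicConfig(level=logging.INFO) and then serve(), and link study pages to /linktracker?link= followed by the percent‐encoded destination URL.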

We also know that much reach is repetitive: identical users are repeatedly exposed to the same advertisement, so that overall reach must be interpreted as being nonunique. Except for Facebook, where unique user engagements are tracked by Facebook, we have to assume that engagements achieved from Twitter and Google include nonunique user behavior.

Conclusions

In our results, Google and Facebook appeared to hold greater potential for other researchers and public health stakeholders undertaking similar interventions. Compared with Twitter, overall reach was greater, click‐throughs were more numerous and cheaper to obtain, CPM was lower, and click‐through rates were higher. We assume that the greater knowledge Facebook and Google have built up about their members and users could be better leveraged to serve up more qualified customers.

Similarly, the more private nature of information exchange of those two properties (i.e., completely privately in Google searches, and semiprivately among friends in Facebook) compared to the more public nature of Twitter probably led to more genuine interest. Our results are directionally consistent with the relative amounts of per user revenue earned each year by these three platforms. Google is estimated to earn $45, Facebook $7.24, and Twitter $3.55 in advertising revenues per user per year (Meeker 2014).

In interesting but only preliminary results, Spanish‐language users of Google Search appeared substantially less likely than English‐language users to be exposed to our campaign: daily reach was only a tenth, although engagement rates were double. It is not clear whether this indicates that Spanish speakers use this platform less often, perhaps because of insufficient access to the Internet or to desktop computers, or whether Spanish speakers run English‐language searches. In the Twitter and Facebook campaigns, however, there were essentially no differences between English‐ and Spanish‐language campaign responses.

Public health interventions can reach qualified prospects through many possible communication channels. Yet overall, traditional channels remain overrepresented in marketing communications: 45 percent of Americans' media time last year was spent on the Internet and on mobile devices, yet only 26 percent of media spending went to those new channels (Meeker 2014). In health care marketing communications specifically, the entire health care industry accounts for just 2.5 percent of total online digital advertising (eMarketer, 2013), and much of that is direct‐to‐consumer advertising by the pharmaceutical industry.

This disproportionately small share of increasingly important communication channels highlights an opportunity for better, more tailored, closer, and more cost‐effective outreach. While such campaigns often require substantial budgets, lower‐cost options exist too. For example, Google offers in‐kind advertising space (through https://www.google.com/grants/), similar to the free public service announcements offered on traditional media.

This study showed that it was possible to reach qualified consumers and at least initiate engagement with them at marketing costs comparable to those of traditional media. Still lacking are data on whether such initial engagement, or the overall impressions, actually predispose users to obtain, understand, and internalize such health‐related information and to act on it appropriately by choosing high‐quality health care providers.
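The cost comparisons above rest on two standard advertising metrics: cost per thousand users reached (CPM) and cost per engagement, where an engagement is a click‐through to the study website. The following is a minimal Python sketch of those definitions. The function names and the spend, reach, and engagement figures in the usage example are hypothetical and are not data from this study.

```python
"""Minimal sketch of two standard online-advertising cost metrics.

All numbers in the usage example are hypothetical, not study results.
"""


def cost_per_thousand(spend: float, reach: int) -> float:
    """CPM: advertising spend per 1,000 users reached."""
    if reach <= 0:
        raise ValueError("reach must be positive")
    return spend / reach * 1000


def cost_per_engagement(spend: float, engagements: int) -> float:
    """Spend per engagement (e.g., one click-through to a website)."""
    if engagements <= 0:
        raise ValueError("engagements must be positive")
    return spend / engagements


def engagement_rate(engagements: int, reach: int) -> float:
    """Share of reached users who engaged."""
    if reach <= 0:
        raise ValueError("reach must be positive")
    return engagements / reach


if __name__ == "__main__":
    # Hypothetical campaign-day figures, for illustration only.
    spend, reach, engagements = 250.0, 50_000, 200
    print(f"CPM: ${cost_per_thousand(spend, reach):.2f}")
    print(f"Cost per engagement: ${cost_per_engagement(spend, engagements):.2f}")
    print(f"Engagement rate: {engagement_rate(engagements, reach):.2%}")
```

Normalizing spend per 1,000 users or per engagement is what allows channels with very different budgets and audience sizes, such as online platforms and broadcast media, to be compared on a common footing.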

Some leading examples of similar outreach exist. Directly targeting smokers through carefully placed online advertising has proved to be an inexpensive way of attracting traffic to the California Department of Public Health's TobaccoFreeCA website (personal communication, Valerie Quinn, June 12, 2014). Similar campaigns could target vaccine skeptics or users of complementary and alternative medicine. More broadly, however, we are not aware of large‐scale uses of such platforms beyond relatively passive uses by hospitals, such as maintaining a Facebook page or a Twitter feed, or placing Google Search advertisements for their care delivery business.

We hope that this study conveys the relative ease and simplicity, the relatively low cost, and the circumscribed financial risk of such campaigns to market public report cards. For our health system to overcome the serious fiscal challenges that threaten our entire nation, we need better‐informed patients who take charge of and participate in their own health care and wellness. Understanding the role that Internet‐ and social media‐based custom education approaches could play in informing patient decision making, and possibly in incentivizing behavioral change, appears to be an important next step for public health stakeholders.

Supporting information

Appendix SA1: Author Matrix.

Appendix SA2: Building the Back‐End – The Study Website and Its Analytics Function.

Appendix SA3: Building the Front‐End – The Facebook Channel and Its Advertising Function.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: The authors gratefully acknowledge financial support by the Agency for Healthcare Research and Quality (AHRQ) under grant R21 HS21868, and helpful project advice and ongoing support by Brent Sandmeyer and Galen Gregor at AHRQ. The authors also acknowledge the excellent research assistance of the following graduate students at USC over the period 09/30/2012 – 12/31/2014: Brent Costa, Shakeh Missian, Natalie Abidjian, Don Marshall, and Shyamala Shastri.

Disclosures: None.

Disclaimers: None.

References

- Agency for Healthcare Research and Quality. 2008. HCUPnet, Healthcare Cost and Utilization Project. Rockville, MD: AHRQ [accessed on March 30, 2016]. Available at http://hcupnet.ahrq.gov/

- Agency for Healthcare Research and Quality. 2011. “Health Care Report Card Compendium” [accessed on March 30, 2016]. Available at https://cahps.ahrq.gov/consumer-reporting/talkingquality/

- Brooks‐Gunn, J., McCormick M. C., Brooks‐Gunn J., Shorter T., Holmes J. H., Wallace C. Y., and Heagarty M. C. 1989. “Outreach as Case Finding: The Process of Locating Low‐Income Pregnant Women.” Medical Care 27 (2): 95–102.

- Cialdini, R. B., and Goldstein N. J. 2004. “Social Influence: Compliance and Conformity.” Annual Review of Psychology 55: 591–621.

- eMarketer. 2012. “US Digital Ad Spending to Top $37 Billion in 2012 as Market Consolidates” [accessed on June 12, 2014]. Available at http://www.emarketer.com/newsroom/index.php/digital-ad-spending-top-37-billion-2012-market-consolidates/

- eMarketer. 2013. “The US Healthcare & Pharmaceutical Industry 2013: Digital Ad Spending Forecast and Key Trends” [accessed on June 12, 2014]. Available at https://www.emarketer.com/Coverage/HealthcarePharma.aspx

- Flannagan, R. 2015. The Cost to Reach 1,000 People. Nuanced Media Analysis [accessed on March 30, 2016]. Available at http://nuancedmedia.com/cost-reach-1000-people/

- Griskevicius, V., Cialdini R. B., and Goldstein N. J. 2008. “Peer Influence: An Underestimated and Underemployed Lever for Change.” MIT Sloan Management Review 49: 84–8.

- Grunert, J. 2015. “What Is a Typical CPM?” Chronicle Small Business article [accessed on March 30, 2016]. Available at http://smallbusiness.chron.com/typical-cpm-74763.html

- Heyman, J., and Ariely D. 2004. “Effort for Payment: A Tale of Two Markets.” Psychological Science 15 (11): 787–93.

- Hornstein, H., Fisch E., and Holmes M. 1968. “Influence of a Model's Feelings about His Behavior and His Relevance as a Comparison Other on Observers' Helping Behavior.” Journal of Personality and Social Psychology 10: 220–6.

- Huesch, M. D., Currid‐Halkett E., and Doctor J. N. 2014. “Public Hospital Quality Report Awareness, Popularity, and Sentiment.” BMJ Open 4: e004417 [accessed on March 30, 2016]. Available at http://bmjopen.bmj.com/content/4/3/e004417.full

- Keller, A., Strohschein F., Lia‐Hoagberg B., and Schaffer M. 1998. “Population‐Based Public Health Nursing Interventions: A Model from Practice.” Public Health Nursing 15 (3): 207–15.

- Main, E. K., Morton C. H., Hopkins D., Giuliani G., Melsop K., and Gould J. B. 2011. Cesarean Deliveries, Outcomes, and Opportunities for Change in California: Toward a Public Agenda for Maternity Care Safety and Quality. CMQCC White Paper 2011 [accessed on June 12, 2014]. Available at http://www.cmqcc.org/resources/2079

- Meeker, M. 2014. Internet Trends 2014 – Code Conference. Kleiner Perkins Caufield & Byers [accessed on June 12, 2014]. Available at http://www.kpcb.com/internet-trends

- Murray, D. M., Luepker R. V., Johnson C. A., and Mittelmark M. B. 1984. “The Prevention of Cigarette Smoking in Children: A Comparison of Four Strategies.” Journal of Applied Social Psychology 14: 274–88.

- National Committee for Quality Assurance. 2011. “Physician and Hospital Quality Report Card” [accessed on March 30, 2016]. Available at http://reportcard.ncqa.org/phq/external/phqsearch.aspx

- National Quality Forum. 2011. “NQF Endorsed Performance Measures” [accessed on March 30, 2016]. Available at http://www.qualityforum.org/Measures_List.aspx

- Nolan, J. P., Schultz P. W., Cialdini R. B., Goldstein N. J., and Griskevicius V. 2008. “Normative Social Influence Is Underdetected.” Personality and Social Psychology Bulletin 34: 913–23.

- Pew Research Internet Project. 2014. “Pew Research Center” [accessed on June 12, 2014]. Available at http://www.pewinternet.org/

- Sakala, C., and Corry M. P. 2008. Evidence‐Based Maternity Care. Milbank Report [accessed on March 30, 2016]. Available at http://www.milbank.org/reports/0809MaternityCare/0809MaternityCare.html#US

- U.S. Department of Health and Human Services. 2006. Healthy People 2010 Midcourse Review. Washington, DC: U.S. Government Printing Office [accessed on March 30, 2016]. Available at http://www.cdc.gov/nchs/healthy_people/hp2010/hp2010_final_review.htm

- Wagner, T. H., Engelstad L. P., McPhee S. J., and Pasick R. J. 2007. “The Costs of an Outreach Intervention for Low‐Income Women with Abnormal Pap Smears.” Preventing Chronic Disease [accessed on March 30, 2016]. Available at http://www.cdc.gov/pcd/issues/2007/jan/06_0058.htm

- White, K. M., Hogg M. A., and Terry D. J. 2002. “Improving Attitude‐Behavior Correspondence through Exposure to Normative Support from a Salient Ingroup.” Basic and Applied Social Psychology 24: 91–103.
