Comprehension during spoken communication relies on accurate speech perception. In many cases, speech perception seems simple and effortless. However, noisy listening conditions can severely impoverish the integrity of the speech signal and lead to difficulties in perceiving the intended message. Contextual sources of information available to the listener can mitigate the negative impact of a severely degraded speech signal on perception and facilitate comprehension. Information derived from the linguistic context, as well as information sources generated internally from prior knowledge (e.g., lexical status, sentence meaning), bias perception towards real words and coherent sentence meanings.

In the present study, we explore effects of meaning that go beyond that of a pair of single words or coherence of a set of words within-sentence, and investigate the effect of conceptual relationships between two different sentences on the perception of acoustically degraded speech. In other words, we ask whether one sentence facilitates perception of a different acoustically degraded sentence related in meaning, but comprising a different set of content words. Such facilitation requires that the overall meaning of the first sentence influences access to multiple levels of processing including the sounds, words, and ultimately meaning of the degraded target sentence.

Investigating differences in brain activity has elucidated some of the mechanisms that underlie context effects on perception. To determine associated changes in brain activity, studies have compared semantic contexts that facilitate perception of degraded speech to ones that do not. At the sentence level, these semantic contexts have included manipulations of cloze probability, sentence predictability, and within-sentence coherence (coherent vs. anomalous) (Davis, Ford, Kherif, & Johnsrude, 2011; Gow, Segawa, Ahlfors, & Lin, 2008; Guediche, Salvata, & Blumstein, 2013; Obleser & Kotz, 2010; Obleser, Wise, Dresner, & Scott, 2007). Results of these studies suggest that semantic facilitation in the perception of degraded speech engages some or all components of a frontal-temporo-parietal network. Nonetheless, there is some inconsistency in the areas reported across different studies and how they are modulated by semantic context and intelligibility.

Intelligibility can have effects on activity in temporal, frontal, and parietal areas, depending on the context. In temporal areas, although activity is generally modulated by intelligibility (Obleser & Kotz, 2010, 2011), contextual constraints have been shown to influence the extent of activity (Obleser et al., 2007). Using high and low cloze probability sentences, Obleser et al. (2007) showed that intelligibility modulates activity in the mid-superior temporal gyrus (STG), whereas in low cloze probability sentences, effects of intelligibility also extend into anterior and posterior portions of the temporal lobe. A recent study in our lab (Guediche et al., 2013) examined the effects of semantic bias of a sentence context on the perception of an acoustically ambiguous or good exemplar of a word target. Results showed a crossover interaction between context and phonetic category ambiguity in the left middle temporal and superior temporal gyrus (MTG/STG). In this case, Sentence Context (biased, neutral) modulated brain activity in different directions depending on the Target Type (ambiguous, unambiguous). Changes in activation for the biasing sentence context increased for the unambiguous word target and decreased for the ambiguous target. The cluster showing the interaction was distinct from other clusters in temporal lobe areas that showed sensitivity only to sentence context or only to acoustic modification.

In the inferior frontal gyrus (IFG), increased intelligibility correlates with activity (BA 44) for low but not high cloze probability sentences (Obleser et al., 2007). In the angular gyrus (AG), effects of intelligibility on brain activity have been shown for manipulations of either predictability or signal quality. Golestani et al. (2013) showed an interaction between semantic context and distortion severity in AG. Thus, intelligibility modulates activity in temporal, frontal, and/or parietal areas, but the degree to which specific regions are involved appears to depend on the nature of the context.

In addition, high and low predictability of acoustically degraded sentences also affects functional connectivity (simple correlations in brain activity) among frontal, temporal, and parietal regions. For example, Obleser et al. (2007) showed greater functional correlations for the more predictable sentences (e.g., “His boss made him work like a slave” vs. “Sue discussed the bruise”).

Taken together, prior studies show that perception of degraded speech is influenced by within-sentence meaning manipulations, e.g., semantic context (Gow et al., 2008; Guediche et al., 2013), cloze probability (Obleser et al., 2007), and coherence (Davis et al., 2011). Facilitation mediated by within-sentence meaning manipulations appears to recruit one or more components of a frontal-temporal-parietal network, resulting in an increase in functional connections among these regions. In the current study, we investigate whether the overall conceptual meaning of a sentence, made up of one set of words, influences perception of a second acoustically degraded sentence, made up of a different set of words. Integration of meaning across sentences happens in the normal course of discourse or conversation. What is unclear is whether integration of meaning between sentences influences perception of acoustically degraded speech and whether such integration relies on the network of frontal, parietal, and temporal areas found in studies that examine integration of meaning and acoustically degraded speech within a sentence.

The meaning representation of a sentence is more than the sum of the individual words in a sentence. Rather, it is compositional – the meaning of a sentence requires the integration of the meanings of the individual words while taking into account other factors that affect their specific meaning in the given sentence such as their syntactic roles. For example, the meaning of each of the content words in the sentences “she babysat her niece” and “she took care of her sister’s daughter” differ from one another, but the combined meaning of the set of words in each sentence is related. In order for a semantic relationship to exist between two sentences, the individual words that make up each of the sentences need not be semantic associates.

In the current study, we manipulate the conceptual relationship between an acoustically degraded sentence (in speech babble) and a preceding acoustically clear (undegraded) sentence. In particular, we investigate potential differences in perception and brain activity when the acoustically degraded sentence is preceded by a sentence that is either conceptually related or unrelated.

Consistent with earlier studies, which examine effects of within-sentence meaning on perception, we expect that the IFG, MTG, and AG will be engaged. In addition, relating the conceptual meanings between two sentences may require increased reliance on those regions that build conceptual meaning. In particular, the middle frontal gyrus (MFG) has been implicated in processing conceptual information (Jackson, Hoffman, Pobric, & Lambon, 2015) and other semantic processing tasks (Binder, Desai, Graves, & Conant, 2009). There is also evidence suggesting that the anterior superior temporal gyrus (aSTG) integrates components of a sentence into a coherent meaning (Lau, Phillips, & Poeppel, 2008), a role also ascribed to the AG (Lau et al., 2008; A. Price, Bonner, Peelle, & Grossman, 2015; Seghier, Fagan, & Price, 2010). Recent work (Jackson et al., 2015) specifically examines the processing of different types of meaning, namely, semantic associations as well as conceptual information and shows that the anterior portion of the superior temporal gyrus (aSTG) is involved in processing both semantic associations as well as conceptual information. Thus, we expect that a functional network consisting of a set of areas including the MFG, aSTG, and AG will be recruited in addition to the IFG and the MTG.

Methods

Stimuli

Sentences

Sentence pairs consisted of an acoustically clear prime followed by a degraded target sentence, which was either Related in meaning, Unrelated in meaning, or the Same (see Appendix for stimulus set). The Related sentence pairs were conceptually related and typically consisted of different content words.1 The Unrelated sentence pairs were unrelated in meaning and in content words. The Same Sentence pairs were exactly the same.

The length of the sentence pairs for each condition did not significantly differ in terms of number of total words: Related (M = 13.6, SD = 2.8), Unrelated (M = 13.2, SD = 2.4), Same (M = 13.2, SD = 2.1). The duration of the sentence pairs did not significantly differ for the Related (3.85 s) compared to the Unrelated condition (3.61 s); however, the duration of the Same sentence pairs was shorter (3.33 s) than the other two conditions. Although the difference was not large, it was significant. For this reason, stimulus duration was accounted for during convolution with a gamma function in estimating the hemodynamic response function (see below).

A measure of between-sentence coherence using Latent Semantic Analysis (lsa.colorado.edu) was then utilized in order to assess the degree of relatedness between the Related sentence pairs and the Unrelated sentence pairs. Coherence provides a measure of the meaning similarity between two sentences. Using the topic space “General_Reading_up_to_1st_year_college (300 factors)”, the score for the Related sentence pairs ranged from 60–94% coherence, whereas the Unrelated sentence pairs were all below 40% coherence. The Same-sentence pairs were all predictable sentences taken from a speech-in noise experiment conducted by Kalikow et al. (1977).
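As a rough illustration of what a between-sentence coherence score captures, the sketch below computes the cosine between two sentence vectors. It assumes that each sentence is represented as the sum of its word vectors and that coherence is the cosine of the two sums; the `toy_vectors` table and its three-dimensional values are invented for illustration and are not taken from the lsa.colorado.edu topic space.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def sentence_vector(words, word_vectors):
    """Sum the vectors of all words found in a (hypothetical) lookup table."""
    dims = len(next(iter(word_vectors.values())))
    total = [0.0] * dims
    for w in words:
        vec = word_vectors.get(w)
        if vec is not None:
            total = [t + x for t, x in zip(total, vec)]
    return total

# Toy 3-dimensional vectors, invented for illustration only.
toy_vectors = {
    "babysat": [0.9, 0.1, 0.0],
    "niece": [0.8, 0.3, 0.1],
    "care": [0.7, 0.2, 0.1],
    "daughter": [0.8, 0.4, 0.0],
    "bruise": [0.0, 0.2, 0.9],
}

prime = sentence_vector(["care", "daughter"], toy_vectors)
related = sentence_vector(["babysat", "niece"], toy_vectors)
unrelated = sentence_vector(["bruise"], toy_vectors)

coherence_related = cosine_similarity(prime, related)
coherence_unrelated = cosine_similarity(prime, unrelated)
```

With these toy values, the Related pair yields a much higher cosine than the Unrelated pair even though the two sentences share no content words, which is the property the stimulus selection relied on.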

Two stimulus lists were prepared such that the targets for one condition (e.g. Related) in one version (Version 1) served as the Target for the other condition (Unrelated) in the other version (Version 2). The two versions of the experiment were counter-balanced across participants. In this way, all of the sentences that were targets in the Related condition were also targets in the Unrelated condition across participants and also across the two tasks.

Degraded speech

The degraded stimuli were created using speech babble taken from a 10-minute recording of six simultaneous talkers (mertus.org). Portions of speech babble were extracted from the recording equivalent to the duration of each individual sentence, and were added to the original waveform with a fixed S/N ratio of −5 dB using a half Hamming window ramp up of 20 ms and ramp down of 50 ms. After adding the speech babble, all of the sentences were normalized to an amplitude of −3dB SNR. Different segments of the speech babble were used for each sentence pair to prevent adaptation to identical segments of speech babble over time2.
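The mixing step can be sketched as follows. This is a minimal illustration, not the procedure actually used: it assumes that the SNR is defined on RMS amplitudes, that the ramps are applied to the babble rather than to the mixture, and it omits the final amplitude normalization. All function names and the synthetic signals are hypothetical.

```python
import math
import random

def rms(x):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(s * s for s in x) / len(x))

def scale_noise_to_snr(speech, noise, snr_db):
    """Scale noise so that 20*log10(rms(speech)/rms(noise)) equals snr_db."""
    gain = rms(speech) / (rms(noise) * 10 ** (snr_db / 20))
    return [gain * n for n in noise]

def half_hamming(n, rising=True):
    """Rising (or falling) half of a Hamming window over n samples."""
    if n <= 0:
        return []
    if n == 1:
        return [1.0]
    ramp = [0.54 - 0.46 * math.cos(math.pi * i / (n - 1)) for i in range(n)]
    return ramp if rising else ramp[::-1]

def mix_with_babble(speech, babble, fs, snr_db=-5.0, up_ms=20, down_ms=50):
    """Add ramped babble to speech at the requested SNR (assumed procedure)."""
    if len(speech) != len(babble):
        raise ValueError("babble segment must match the sentence duration")
    noise = scale_noise_to_snr(speech, babble, snr_db)
    n_up = int(fs * up_ms / 1000)
    n_down = int(fs * down_ms / 1000)
    if n_up + n_down > len(noise):
        raise ValueError("signal is shorter than the two ramps")
    for i, w in enumerate(half_hamming(n_up, rising=True)):
        noise[i] *= w
    for i, w in enumerate(half_hamming(n_down, rising=False)):
        noise[len(noise) - n_down + i] *= w
    return [s + n for s, n in zip(speech, noise)]

# Synthetic stand-ins for a sentence and a babble segment (1 s at 8 kHz).
fs = 8000
rng = random.Random(0)
speech = [math.sin(2 * math.pi * 220 * t / fs) for t in range(fs)]
babble = [rng.uniform(-1, 1) for _ in range(fs)]

scaled = scale_noise_to_snr(speech, babble, -5.0)
mixture = mix_with_babble(speech, babble, fs)
```

A negative SNR means the babble is scaled to be louder than the speech; at −5 dB the babble RMS is roughly 1.8 times the speech RMS before the ramps are applied.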

Varying amounts of silence were added to the clear-degraded sentence pairs at the beginning of the prime sentence so that all trials would be of equal duration and time-locked to the same time-point at the end of each trial.

Participants

Twenty participants (6 female) volunteered for the study and were paid for their participation. Participants gave informed consent in accordance with the Human Subjects Policies of Brown University. Participants had a mean age of 24.1 years (SD = 4.9; range 18–34 years). Participants lay supine in the MR scanner, and additional padding was placed around each participant’s head to minimize movement. Data from four participants were excluded from the fMRI analyses: one participant moved more than 6 mm, one performed the task incorrectly, one performed poorly (0% accuracy on the Same condition in the Production Task, described below), and one was excluded for technical reasons (scanner noise output was too loud to hear the spoken response data). Thus, fMRI analysis was conducted using the data from the remaining 16 participants.

Experimental Design

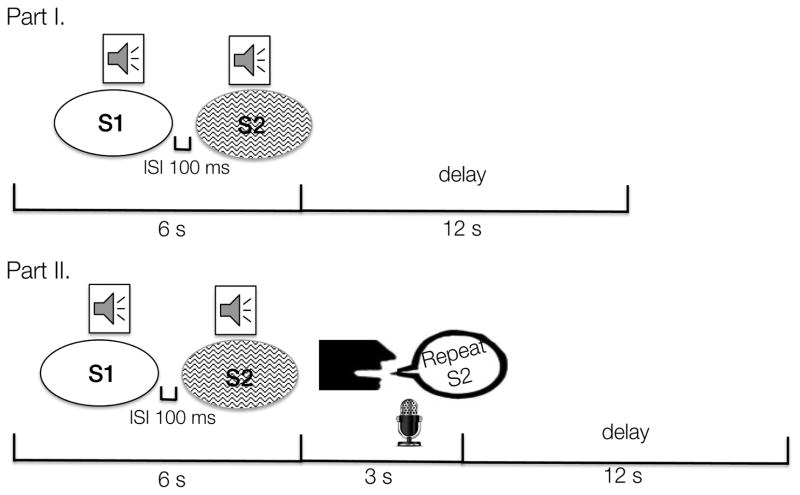

The experiment consisted of two parts. The first part was a Perception Task and the second part was a Production Task, the latter designed to allow for a behavioral measure of perceptual effects of conceptual information on the perception of degraded speech. In both parts, a prime sentence was presented without noise followed by a target sentence in speech babble; the interval between the two sentences (ISI) was 100 msec. Figure 1 illustrates the experimental design.

Figure 1.

Experimental Design. Part I depicts the Perception task and Part II depicts the Production task.

Perception Task

Participants passively listened to the sentence pairs. On three trials per run (one for each condition type), the Target Sentence was followed by a tone appended to the end of the sentence. Participants were asked to respond with a button press when they heard the tone. This target detection task was used to ensure that participants remained engaged during the experiment.

Production Task

Participants listened to the sentence pairs and were asked to repeat the target sentence or any of the words they heard from the target sentence immediately after the sentence was complete. They were instructed to guess even if they were unsure.

In both tasks, a slow event-related fMRI design was utilized. Participants heard sentence pairs through MR-compatible Avotec SS-3100 headphones with a built-in microphone, using BLISS as the stimulus presentation software (mertus.org).

The Perception Task consisted of three runs of 8 min and 21 s each. Each run consisted of a total of 27 trials consisting of nine sentence pairs of each type (Same, Related, Unrelated). There was an ITI of 18 s for the passive trials and 21 s for the target detection trials. The extra TR in the target detection trials was included for tone presentation and response.

The Production Task consisted of three runs of nine sentence pairs of each type (Same, Related, Unrelated), totaling 9 min and 39 sec. There was an ITI of 21 s. If the target was in the Related condition in one run in the Perception Task, it was in the Unrelated condition in the same run in the Production Task. Three different orders of the runs were used across participants. The same randomization of the trials within each run was used for all participants. Participant responses were recorded via the built-in microphone on the Avotec headphones to an Edirol R-09 24 bit digital recorder located in the control room of the fMRI suite. In addition to the extra padding around participants’ heads, the headphones with the built-in microphone were taped onto the head coil to improve collection of the production data and to further minimize movement.

Scanning Protocol

A 3-T Tim Trio fMRI scanner equipped with a 12-receiver channel head coil was used to collect the MRI data. For each subject, high-resolution anatomical scans were acquired for anatomical coregistration (repetition time 1900 ms; echo time 2.98 ms; inversion time 900 ms; field of view 256 mm; 1 mm³ isotropic voxels). Six runs of functional images were acquired, 3 for the Perception Task and 3 for the Production Task, using an echoplanar sequence (repetition time 3000 ms; echo time 28 ms; field of view 192 mm; 3 mm isotropic voxels) with 44 slices of 3-mm thickness. Each of the three runs in the Perception Task consisted of 167 EPI volumes, yielding a total of 501 volumes. Each of the three runs in the Production Task consisted of 191 EPI volumes, yielding a total of 573 volumes.

Behavioral Analysis for Production

Behavioral pilot

A behavioral pilot experiment (N = 8) was conducted including all three conditions (Same, Related, Unrelated). In all trials, a clear prime sentence was followed by a target sentence in speech babble (−5 dB); the interval between the two sentences (ISI) was 100 msec. A different random selection of trials was used for each participant. In order to determine whether there were differences in the perception of degraded speech as a function of the preceding context, participants were instructed to provide a written response that consisted of any of the words they heard (or thought they heard) in the target sentence immediately after the end of the target sentence. The proportion of correct content words from the target sentence was calculated for each trial. An analysis of variance with Condition (Same, Related, Unrelated) as a factor and accuracy as the dependent measure was conducted. A significant effect of Condition was found, F(2, 14) = 140.22, p < .001. Follow-up pairwise t-test comparisons showed a significant priming effect of the prior context on the degraded sentence targets. Listeners performed significantly better on the Related condition (M = 0.51, SEM = 0.05) than on the Unrelated condition (M = 0.38, SEM = 0.03), t(7) = 4.79, p = .002, and significantly better on the Same condition (M = 0.93, SEM = 0.02) than on both the Related, t(7) = 10.06, p < .001, and Unrelated conditions, t(7) = 16.91, p < .001.

There were some differences in syntactic structure between the sentences in the Related/Unrelated conditions and those in the Same condition. In particular, the Related/Unrelated sentences typically had active human agents (e.g., “he”, “she”), whereas 11 out of 27 of the Same sentences from Kalikow et al. (1977) have inanimate subjects. As mentioned, pronouns and articles were not included in the accuracy measure, and, thus, did not give an ‘unfair’ advantage to the sentences in the Related and Unrelated conditions compared to the Same condition. It is possible that the syntactic differences among the sentences could have affected the results; however, despite this, greater activity in the STG for the Same condition compared to the Related and Unrelated conditions is consistent with the view that increased reliance on lower level phonological processing may be used to facilitate perception of the words in acoustically degraded target sentences when they are the same as the prime (see discussion for possible alternative interpretations).

FMRI Behavioral Analysis

In order to determine the accuracy of the production of the participants in the Production Task, a noise canceling procedure was utilized (see http://pakl.net/code/echocancel/ for details). The productions were then analyzed by a naïve listener (CS) who transcribed all spoken responses. Only the content words were used for measures of accuracy. Responses that included the correct content word but differed morphologically from the original stimulus (e.g. runs vs. run, scored vs. score, drank vs. drink) were accepted as correct responses (the pattern and significance of the results was the same with or without these responses). Percentages of the correct content words in the response for the target sentences were then calculated and a one-way ANOVA was conducted using Condition (Same, Related, Unrelated) as a within-subject factor and accuracy as the dependent measure. Follow-up post-hoc pairwise t-tests between each pair of conditions were conducted.
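The scoring rule described above can be sketched as a small function. This is an illustrative approximation only: the choice of content words for the example sentence, the `variants` table of accepted morphological forms, and the function name are assumptions made for the sketch, not the scoring materials used in the study.

```python
def proportion_content_words_correct(target_content_words, response, variants=None):
    """Proportion of target content words present in a transcribed response.

    `variants` optionally maps a target word to other accepted forms
    (e.g. "run" -> ["runs"]); it is a hypothetical lookup table.
    """
    if not target_content_words:
        raise ValueError("target must contain at least one content word")
    variants = variants or {}
    response_words = {w.strip(".,!?;:\"'").lower() for w in response.split()}
    response_words.discard("")
    hits = 0
    for word in target_content_words:
        accepted = {word.lower()} | {v.lower() for v in variants.get(word, ())}
        if accepted & response_words:
            hits += 1
    return hits / len(target_content_words)

# Example: content words assumed for "His boss made him work like a slave".
target = ["boss", "made", "work", "slave"]
accepted_forms = {"work": ["works", "worked"]}
example_score = proportion_content_words_correct(
    target, "the boss works", accepted_forms
)
```

In this example two of the four content words are credited ("boss" directly, "work" through the accepted form "works"), so the trial score is 0.5; pronouns and articles never enter the denominator.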

Image Analysis

Imaging data were analyzed using AFNI (Cox, 1996). Functional images were corrected for head motion by aligning all volumes to the fourth collected volume using a six-parameter rigid body transform (Cox & Jesmanowicz, 1999). They were aligned with the structural images and resampled to 3 mm³. Spatial smoothing was performed using a 6-mm FWHM Gaussian kernel. Each individual’s brain was normalized to Talairach space and used to generate a group mask.

Stimulus presentation times (for the onset of the target sentence) were used with duration modulation (using the length of each sentence pair, i.e., prime duration + target duration) to estimate the hemodynamic response function (Ward, 1998). The convolved function for each trial in each condition type was used in a general linear model (GLM) analysis on the EPI data for each condition, and the six motion parameters were included as nuisance regressors. Separate GLM analyses were conducted for the Perception and the Production parts of the fMRI experiment. For the Perception Task, the tone-target (target detection) trials were censored from the analysis. For the Production Task, TRs of the spoken response for each trial were censored from the analysis. The coefficients from the GLM analysis were then converted to percent signal change units, which were used in the ANOVA analysis.
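To make the idea of duration modulation concrete, the sketch below builds a regressor by convolving a boxcar of each trial's duration with a gamma-variate response and sampling the result once per TR. It is a simplified stand-in, not AFNI's implementation: the gamma parameters (b = 8.6, c = 0.547), the 0.1 s integration step, the 25 s kernel length, and the baseline-ratio definition of percent signal change are all assumptions made for illustration.

```python
import math

def gamma_hrf(t, b=8.6, c=0.547):
    """Gamma-variate response t**b * exp(-t/c), scaled to peak at 1 (t = b*c)."""
    if t <= 0:
        return 0.0
    peak = (b * c) ** b * math.exp(-b)
    return (t ** b) * math.exp(-t / c) / peak

def duration_modulated_regressor(onsets, durations, n_vols, tr, dt=0.1):
    """Convolve per-trial boxcars with the gamma response; sample every TR."""
    n_fine = int(round(n_vols * tr / dt))
    boxcar = [0.0] * n_fine
    for onset, dur in zip(onsets, durations):
        start = int(round(onset / dt))
        stop = int(round((onset + dur) / dt))
        for i in range(max(start, 0), min(stop, n_fine)):
            boxcar[i] = 1.0
    kernel = [gamma_hrf(k * dt) for k in range(int(round(25.0 / dt)))]
    fine = [0.0] * n_fine
    for i, value in enumerate(boxcar):
        if value:
            for k, h in enumerate(kernel):
                if i + k < n_fine:
                    fine[i + k] += h * dt
    step = int(round(tr / dt))
    return [fine[v * step] for v in range(n_vols)]

def percent_signal_change(beta, baseline):
    """Express a GLM coefficient relative to the baseline signal (assumed form)."""
    return 100.0 * beta / baseline

# One trial each, using the mean pair durations reported for Same and Related.
reg_same = duration_modulated_regressor([0.0], [3.33], n_vols=20, tr=3.0)
reg_related = duration_modulated_regressor([0.0], [3.85], n_vols=20, tr=3.0)
```

Because longer sentence pairs produce a larger predicted response, the duration difference between conditions is absorbed by the regressor rather than by the estimated coefficient.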

FMRI ANOVAs

Three separate 2x2 ANOVAs were conducted consisting of Condition and Task (Perception, Production) as factors, and participant as a random factor. Condition was a combination of two of the conditions, i.e. Related vs. Unrelated, Related vs. Same, or Unrelated vs. Same, using percent signal change as the dependent measure (see FMRI results below).

Brain/Behavior Correlation Analysis

A correlation analysis was conducted to examine the relationship between the individual average activity in the Production task and individual accuracy measures for the Related condition. To this end, we extracted the averaged activity for each individual, for each condition from the significant regions identified in the main effect of Condition for the Related vs. Unrelated contrast.

Functional Connectivity Analysis

To gain a better understanding of the neural areas that are a part of this fronto-temporo-parietal network, we conducted a functional connectivity analysis using the left MFG as a seed. We selected the MFG because, in contrast to other studies, we found evidence that this area is sensitive to degraded speech as a function of the conceptual relationships between the sentence pairs. In order to examine potential functional connections in neural areas within the fronto-temporo-parietal network, a generalized form of context-dependent psychophysiological interaction (gPPI) analysis (Friston et al., 1997; McLaren et al., 2012) was conducted. The left MFG showed a difference between Related and Unrelated in the univariate ANOVA analysis described above. The timeseries from the left MFG seed region was extracted, and interaction terms between the timeseries and each trial of each condition in the Perception Task were created. A general linear model (GLM) analysis that included the timeseries, the interaction terms, and the convolved function for each trial in each condition type was used on the EPI data, including the six motion parameters as nuisance regressors. The beta coefficients from the individual-level regressions for the interaction terms were then entered into an analysis of variance, with Condition (Related, Unrelated, Same) as a within-subject factor and subject as a random factor, to determine regions that showed significant functional connections for each condition (and differences between conditions). Monte Carlo simulations were performed using 6 mm FWHM and a voxelwise threshold of p < .05. The cluster extent at an alpha of .05 was determined to be 82 contiguous voxels.
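The structure of the gPPI design can be illustrated with a deliberately simplified sketch: one seed column, one task column per condition, and one seed-by-condition interaction column per condition. It is not the pipeline used here. In particular, it multiplies the mean-centered seed signal directly by each task regressor and skips the deconvolution step that full PPI implementations typically apply; the function names and toy values are hypothetical.

```python
def mean_center(x):
    """Subtract the mean from a time series."""
    m = sum(x) / len(x)
    return [v - m for v in x]

def gppi_design(seed_ts, condition_regressors):
    """Return design columns: seed, each condition, each seed x condition term."""
    seed = mean_center(seed_ts)
    design = {"seed": seed}
    for name, reg in condition_regressors.items():
        if len(reg) != len(seed):
            raise ValueError("regressor length must match the seed time series")
        design[name] = list(reg)
        design["ppi_" + name] = [s * r for s, r in zip(seed, reg)]
    return design

# Toy four-volume example with two conditions (values invented).
toy_seed = [1.0, 2.0, 3.0, 4.0]
toy_conditions = {
    "Related": [0.0, 1.0, 1.0, 0.0],
    "Unrelated": [1.0, 0.0, 0.0, 1.0],
}
toy_design = gppi_design(toy_seed, toy_conditions)
```

Modeling every condition with its own interaction column, rather than a single contrast-weighted term, is what distinguishes the generalized form; the per-condition interaction coefficients are the values carried forward to the group-level analysis.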

Behavioral Results

fMRI Behavior

A one-way ANOVA with Condition as a factor (Same, Related, Unrelated) and accuracy as the dependent measure was conducted. A main effect of Condition was found, F(2, 30) = 81.80, p < .001. Follow-up pairwise t-test comparisons showed similar findings to those of the pilot behavioral experiment, although, not surprisingly, performance in the scanner was significantly reduced overall compared to the behavioral pilot. In particular, performance was significantly higher for the Related condition (M = .15, SEM = .03) compared to the Unrelated condition (M = .05, SEM = .01), t(15) = 4.97, p < .001, and significantly higher for the Same condition (M = .55, SEM = .06) compared to the Related condition, t(15) = 8.78, p < .001, and the Unrelated condition, t(15) = 9.78, p < .001. The acoustic noise generated by the continuous scanning resulted in poorer performance in the scanner compared to the behavioral pilot results outside the scanner. The scanner noise may not only impact performance but may also affect how the speech is processed. However, despite potential differences in the processing of speech in the presence or absence of scanner noise, similar patterns of word recognition performance emerged across the three conditions both in and outside the scanner.
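For readers who want to see where the degrees of freedom come from, the following sketch computes a one-way within-subject ANOVA from a participants-by-conditions table. It is a textbook formulation offered for illustration, with invented toy scores, and is not the software used for the reported analysis.

```python
def repeated_measures_anova(scores):
    """One-way within-subject ANOVA.

    scores: one list per participant, one value per condition.
    Returns (F, df_condition, df_error).
    """
    n = len(scores)
    k = len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((v - grand) ** 2 for row in scores for v in row)
    # Error is what remains after removing condition and participant effects.
    ss_error = ss_total - ss_cond - ss_subj
    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error

# Toy data: 3 participants x 3 conditions (values invented).
toy_scores = [[1.0, 2.0, 3.0], [2.0, 3.0, 5.0], [3.0, 5.0, 6.0]]
toy_f, toy_df1, toy_df2 = repeated_measures_anova(toy_scores)
```

With three conditions, the error degrees of freedom are 2(n − 1), which gives 30 for the 16 scanned participants and 14 for the 8 pilot participants, matching the F statistics reported for the two experiments.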

Of importance, in both behavioral tasks, word recognition performance was better in the Related compared to the Unrelated condition. One possible explanation for this is that the semantic associations between individual words across the prime-target sentence pairs, rather than the overall semantic relationship between sentences, facilitated perception and drove the improved production accuracy of the listener. If this were the case, then the more semantically associated a word in the target sentence is to words in the prime sentence, the more likely that word would be produced in the participant’s response. To examine this possibility, an additional analysis for the Related condition was conducted. Semantic word-to-word association values between each of the words in the target sentence and each of the words in the prime sentence were determined using latent semantic analysis measures of word-to-word semantic associations across prime and target sentences (lsa.colorado.edu). The maximum semantic association value for each target word was then selected. The average accuracy for each word in the target sentences was computed. A correlation analysis was conducted using the maximum semantic association and average accuracy score. Results failed to show a correlation between the maximum semantic association and accuracy, R = −0.15, p = .16. Similar results were obtained even if target tokens that had 0% accuracy were removed from the analysis, R = 0.1, p = .57. These findings suggest that word-to-word semantic associations were not the factor contributing to performance accuracy in the Related condition, despite the fact that accuracy was significantly different between the Related and Unrelated conditions.
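The logic of this control analysis can be sketched in a few lines: take the maximum prime-to-target association for each target word, then correlate those maxima with per-word accuracy. The association values, word lists, and accuracy scores below are invented for illustration, and the `lookup` function is a hypothetical stand-in for the LSA word-to-word measure; the sketch computes only the correlation coefficient, not its p-value.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def max_association(target_word, prime_words, association):
    """Largest association between one target word and any prime word."""
    return max(association(target_word, p) for p in prime_words)

# Toy association table (values invented for illustration).
toy_assoc = {
    ("niece", "sister"): 0.6,
    ("niece", "daughter"): 0.7,
    ("babysat", "care"): 0.5,
}

def lookup(target_word, prime_word):
    """Hypothetical stand-in for an LSA word-to-word association score."""
    return toy_assoc.get((target_word, prime_word), 0.0)

prime_words = ["care", "sister", "daughter"]
target_words = ["babysat", "niece"]
max_values = [max_association(w, prime_words, lookup) for w in target_words]

# Correlate maximum association with per-word accuracy (toy numbers).
toy_max_assoc = [0.5, 0.7, 0.2, 0.4]
toy_accuracy = [0.1, 0.2, 0.3, 0.0]
toy_r = pearson_r(toy_max_assoc, toy_accuracy)
```

If word-level associations were driving the priming effect, target words with higher maximum association should be reported more accurately, producing a reliably positive correlation.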

fMRI Results

Effects of Conceptual Meaning Between Sentences on Degraded Speech

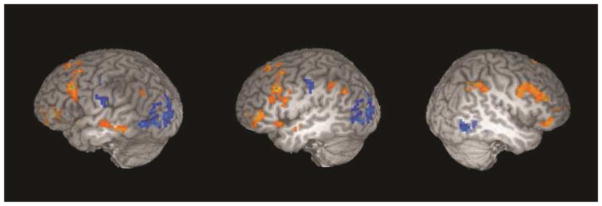

In order to determine the neural regions involved in effects of integrating conceptual meaning between sentences on perception, differences in brain activity that emerged between the Related and Unrelated sentence pairs were examined. To this end, we conducted a 2x2 ANOVA with Condition (Related, Unrelated) and Task (Perception, Production) as within-subject factors. There was a main effect of Condition in several regions (see Table 1a and Figure 2) as well as a main effect of Task (see Table 1b, corresponding Figure in Supplementary Materials). No significant clusters showed an interaction between the two factors. The main effect of Condition showed significant activity differences in several clusters at a voxelwise p-value of .05 and an alpha of .05. Greater activity for the Related compared to the Unrelated condition was found in the middle frontal gyrus (MFG), two clusters in the left inferior frontal gyrus (BA 45/46; BA 45/47), the inferior parietal lobe (left hemisphere peak in AG, right hemisphere peak in supramarginal gyrus [SMG]), and the left middle temporal gyrus (MTG). Deactivation emerged in the middle occipital gyri, with relatively more deactivation for the Related compared to the Unrelated condition.

Table 1.

Condition (Related, Unrelated) X Task (Perception, Production)

a) Main effect of Condition: Related vs. Unrelated

| Regions | x | y | z | Maximum t-value | No. of voxels |
|---|---|---|---|---|---|
| Left middle occipital cortex* | −34 | −88 | 8 | −4.63 | 241 |
| Inferior occipital | −40 | −82 | −3 | −2.16 | |
| Inferior temporal | −49 | −61 | 0 | −2.16 | |
| Lingual gyrus | −31 | −73 | 0 | −2.17 | |
| Right middle frontal gyrus BA 10/46* | 35 | 35 | 20 | 3.99 | 229 |
| Precentral gyrus | 43 | −1 | 35 | 3.71 | |
| Left middle frontal gyrus BA 8/9** | −43 | 17 | 41 | 5.21 | 145 |
| Precentral gyrus | −43 | −1 | 32 | 3.60 | |
| Right inferior parietal BA 40 | 50 | −49 | 44 | 4.32 | 144 |
| Supramarginal gyrus | 31 | −49 | 38 | 3.79 | |
| Left angular gyrus BA 39** | −31 | −58 | 35 | 3.93 | 109 |
| Left insula and inferior frontal gyrus (BA 13/45/47)** | −40 | 17 | −1 | 3.63 | 79 |
| Left inferior frontal gyrus (BA 46/45) and middle frontal gyrus (BA 10) | −49 | 38 | 5 | 4.28 | 74 |
| Left middle temporal gyrus (uncorrected) | −58 | −40 | −4 | 3.91 | 62 |
| Subcortical Structures | | | | | |
| Left thalamus* | −4 | −22 | −4 | 4.58 | 191 |
| Left caudate | −10 | 8 | 8 | 3.29 | 41 |
| Right cerebellum Crus I/II | 23 | −70 | −31 | 3.33 | 28 |
| Right cerebellum Lobule V | 14 | −46 | −13 | 3.27 | 23 |

b) Main effect of Task: Perception vs. Production

| Regions Production > Perception | x | y | z | Maximum t-value | No. of voxels |
|---|---|---|---|---|---|
| Right medial frontal gyrus | 8 | 8 | 47 | 12.70 | 449 |
| Left precentral gyrus | −43 | −7 | 35 | 11.59 | 168 |
| Right superior temporal gyrus | 47 | −19 | 2 | 11.13 | 148 |
| Right lentiform/thalamus | 14 | −7 | 2 | 10.55 | 124 |
| Right precentral gyrus | 47 | −13 | 35 | 8.88 | 123 |
| Right cerebellum (lobule VI) | 38 | −55 | −25 | 10.43 | 122 |
| Left lentiform nucleus/putamen | −19 | 2 | −1 | 9.45 | 104 |
| Left insula | −28 | 23 | 5 | 9.6 | 80 |
| Left transverse temporal gyrus | −49 | −22 | 11 | 9.15 | 67 |
| Right superior temporal gyrus | 50 | 2 | 2 | 9.15 | 48 |

Note. Regions reported at the maximum t-value peak Talairach coordinates at a p-value of .05 and a corrected cluster size at an alpha of .05 (> 171 voxels) are denoted with *. For the largest clusters we also report subpeak Talairach coordinates within those clusters. Regions that were significant for a restricted search space in a mask of language areas (LSTG, LMTG, LIFG, LMFG, LIPL) and have been corrected at a cluster size threshold p of .05 and an alpha of .05 (> 78 voxels) are denoted with **. The caudate and cerebellum clusters are uncorrected for whole brain.

No significant interactions between condition and task were found for the ANOVA (Related, Unrelated) X (Perception, Production). At a p-value of .05, a large area showing greater activation for Production compared to Perception encompassed multiple brain regions, making it difficult to report individual brain areas. Therefore, the results presented in part b of the Table below are reported at a threshold of p = .00001.3

Figure 2.

Regions showing differences in activity between Related and Unrelated. Sagittal view at x = −60, −47, and 45, corrected at a voxelwise threshold of p < .05. Orange scale depicts positive t-values (greater activity for Related). Blue scale depicts negative t-values (more deactivation for Related).

We also determined a cluster threshold for brain areas previously implicated in speech perception and in effects of semantic processing on perception. To this end, we constructed an anatomical mask (4,786 voxels), which consisted of the left inferior frontal gyrus, the left inferior parietal lobe, the left middle frontal gyrus, and the left middle and superior temporal gyri. Using a minimum voxel-wise threshold at a p-value of .05 and a cluster size threshold at an alpha of .05, significant areas within this restricted search space are denoted with ** in Tables 1, 2, and 3.

Table 2.

Condition (Related, Same) X Task (Perception, Production)

a) Main effect of Condition: Related vs. Same

| Regions | x | y | z | Maximum t-value | No. of voxels |
|---|---|---|---|---|---|
| Right superior temporal gyrus (STG)* | 62 | −25 | 8 | −8.24 | 1204 |
| Left insula/STG* | −40 | −16 | −1 | −7.61 | 964 |
| Left inferior frontal gyrus BA 45/47** | −49 | 26 | 5 | 3.99 | 194 |
| Left middle temporal gyrus (uncorrected) | −58 | −13 | −10 | 3.92 | 41 |
| Left anterior superior temporal gyrus/38 (uncorrected) | −42 | 11 | −19 | 3.2 | 27 |

b) Main effect of Task: Perception vs. Production

| Regions (Production > Perception) | x | y | z | Maximum t-value | No. of voxels |
|---|---|---|---|---|---|
| Right thalamus and right superior temporal gyrus | 14 | −10 | 2 | 12.78 | 772 |
| Left cingulate gyrus | −4 | 20 | 32 | 15.82 | 733 |
| Left precentral gyrus and left transverse temporal gyrus | −43 | −7 | 32 | 10.69 | 344 |
| Right cerebellum (Lobule VI) | 35 | −52 | −25 | 10.39 | 129 |
| Left thalamus | −7 | −10 | 2 | 9.30 | 89 |
| Left lentiform nucleus/putamen | −16 | 5 | 5 | 10.57 | 73 |
| Left insula | −28 | 23 | 5 | 9.79 | 58 |

c) Interaction: Condition (Related, Same) X Task (Perception, Production)

| Regions | x | y | z | Maximum t-value | No. of voxels |
|---|---|---|---|---|---|
| Right medial frontal gyrus* | 5 | −7 | 59 | 8.81 | 2926 |
| Left precentral gyrus* and superior temporal gyrus | −43 | −10 | 29 | 25.81 | 1315 |

Regions reported at the maximum t-value peak Talairach coordinates at a p-value of .05 and a corrected cluster size at an alpha of .05 (> 246 voxels) are denoted with *. Regions that were significant for a restricted search space in a mask of language areas (LSTG, LMTG, LIFG, LMFG, LIPL) and have been corrected at a cluster size threshold p of .05 and an alpha of .05 (> 100 voxels) are denoted with **.

The results presented in the Table below are reported at a reduced threshold of p = .00001 (see Footnote 3).

Regions reported at the maximum t-value peak Talairach coordinates at a p-value of .05 and a corrected cluster size at an alpha of .05 (see Figure in Supplementary materials, on last page of main document).

Table 3.

Condition (Unrelated, Same) X Task (Perception, Production)

a) Main effect of Condition: Unrelated vs. Same

| Regions | x | y | z | Maximum t-value | No. of voxels |
|---|---|---|---|---|---|
| Right superior temporal gyrus * | 62 | −25 | 8 | −6.8 | 1472 |
| Left insula/superior temporal gyrus BA 41 * | −40 | −25 | 14 | −6.39 | 771 |
| Right parahippocampal gyrus * | 32 | −40 | −4 | 5.17 | 579 |
| Right thalamus | 5 | −10 | 5 | −4.08 | 560 |
| Left middle occipital cortex * | −34 | −88 | 8 | 5.87 | 405 |
| Left supramarginal gyrus ** | −43 | −55 | 35 | −5.11 | 201 |
| Left mid-medial temporal gyrus ** | −43 | −34 | −4 | 4.12 | 137 |
| Left anterior superior temporal gyrus (uncorrected) | −40 | 17 | −28 | 4.22 | 56 |
| Subcortical Structures | | | | | |
| Left cerebellum * Crus II/I | −34 | −55 | −34 | −4.38 | 165 |
| Right caudate and cingulate gyrus * | 21 | −25 | 26 | 3.02 | 153 |

b) Main effect of Task: Perception vs. Production

| Regions (Production > Perception) | x | y | z | Maximum t-value | No. of voxels |
|---|---|---|---|---|---|
| Right lentiform nucleus | 17 | −10 | 2 | 13.85 | 807 |
| Left cingulate gyrus | −4 | 17 | 32 | 16.65 | 689 |
| Left precentral gyrus | −43 | −7 | 32 | 12.96 | 253 |
| Left lentiform nucleus/putamen | −19 | 5 | 2 | 10.29 | 183 |
| Left insula | −28 | 23 | 5 | 9.21 | 142 |
| Right cerebellum (Lobule VI) | 35 | −55 | −25 | 12.06 | 119 |
| Left transverse temporal gyrus | −49 | −19 | 11 | 9.79 | 112 |
| Left middle frontal gyrus | −34 | 32 | 29 | 11.60 | 56 |

c) Interaction: Condition (Unrelated, Same) X Task (Perception, Production)

| Regions | x | y | z | Maximum t-value | No. of voxels |
|---|---|---|---|---|---|
| Right cingulate gyrus and precentral gyrus and superior temporal gyrus | 16 | −28 | 35 | 30.46 | 2985 |
| Right postcentral gyrus and precentral gyrus and superior temporal gyrus | 53 | −13 | 14 | 31.82 | 1584 |

Regions reported at the maximum t-value peak Talairach coordinates at a p-value of .05 and a corrected cluster size at an alpha of .05 (> 329 voxels) are denoted with *. Regions that were significant for a restricted search space in a mask of language areas (LSTG, LMTG, LIFG, LMFG, LIPL) and have been corrected at a cluster size threshold p of .05 and an alpha of .05 (> 124 voxels) are denoted with **.

The results presented in the Table below are reported at a reduced threshold of p = .00001 (see Footnote 3).

Regions reported at the maximum t-value peak Talairach coordinates at a p-value of .05 and a corrected cluster size at an alpha of .05.

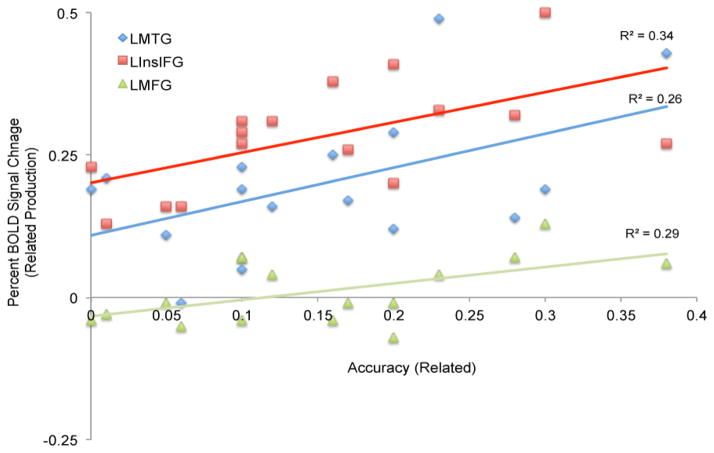

To examine how conceptually related sentences engaged specific areas to influence perception, we performed a correlation analysis between individual accuracy (the proportion of correct content words reported in the Production Task for each individual) and activation (% BOLD signal change) in the Related condition. This analysis was performed for each of the cerebral cortical regions that showed a significant difference between the Related and Unrelated conditions in the ANOVA within the restricted search space of the mask applied above. These included two IFG clusters, the MFG, AG, and MTG. Significant correlations were found in the left MFG (R = 0.58, p = .025), the left IFG (BA 13/45/47) (R = 0.56, p = .019), and the left MTG (R = 0.51, p = .042) (see Figure 3; no correction for multiple comparisons). The correlations between accuracy and activity in the left AG and in the left IFG (BA 46/45) did not reach significance, p > .05. None of the correlations between activity and performance on the Related condition in any of the right hemisphere clusters reached significance.
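As a minimal sketch of the brain-behavior correlation described above, the following computes a Pearson correlation coefficient between per-participant accuracy and per-participant % BOLD signal change in one cluster, along with the t statistic (n − 2 degrees of freedom) commonly used to test it. The function names and the example values are hypothetical and are not data from the study; the study's own analysis software is not specified here.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two sequences of equal length with n >= 3")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom.

    Undefined when |r| == 1 (division by zero).
    """
    return r * math.sqrt((n - 2) / (1.0 - r * r))


if __name__ == "__main__":
    # Hypothetical values for illustration only (one entry per participant).
    accuracy = [0.42, 0.55, 0.61, 0.48, 0.70, 0.66]       # proportion correct
    signal_change = [0.10, 0.18, 0.25, 0.12, 0.31, 0.22]  # % BOLD change
    r = pearson_r(accuracy, signal_change)
    print(f"r = {r:.2f}, t({len(accuracy) - 2}) = {t_from_r(r, len(accuracy)):.2f}")
```

Because one such test is run per cluster, the resulting p-values would be uncorrected across clusters unless a multiple-comparison adjustment is applied separately.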

Figure 3.

Correlation plots for regions that showed a significant correlation between individual performance and brain activity on the Related condition in the Production task.

Effects of Same Sentence Pairs on Degraded Speech

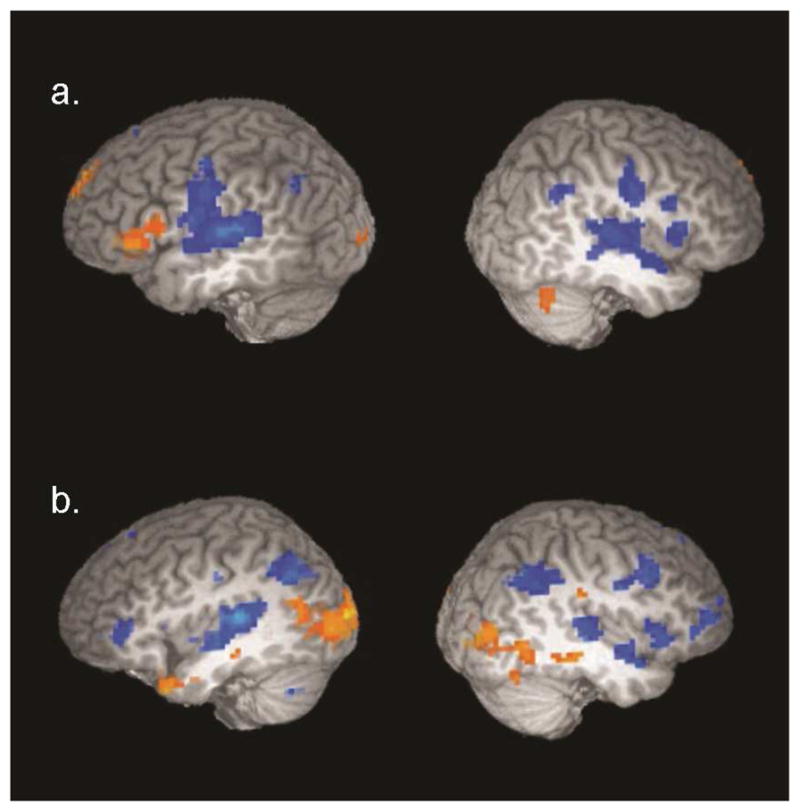

To determine potential differences between matching stimulus pairs and stimulus pairs that were conceptually related or unrelated, we conducted two Condition X Task ANOVAs: (Related, Same) X (Perception, Production), and (Unrelated, Same) X (Perception, Production). We report the main effects of Condition and Task and their interaction (see Tables 2a, 2b, and 2c and Tables 3a, 3b, and 3c, respectively, and the corresponding Figures in Supplementary Materials). Here, we focus on areas that showed a significant main effect for Condition (Related vs. Same and Unrelated vs. Same).

Results for the Related vs. Same contrast revealed increased activity in the Related compared to the Same condition in the left inferior frontal gyrus (BA 45/47), and decreased activity in the bilateral superior temporal gyri (see Table 2a and Figure 4). Results for the Unrelated vs. Same contrast showed increased activity for the Unrelated compared to Same condition in the bilateral medial temporal lobe encompassing the parahippocampal gyrus and the left middle occipital gyrus, and decreases in activity for the Unrelated compared to Same in the bilateral superior temporal gyri (encompassing parts of the left transverse temporal gyrus), and the left inferior parietal lobe (see Table 3a and Figure 4). Thus, in both analyses, the STG showed increased activation for matched sentence pairs compared to either conceptually related or conceptually unrelated sentence pairs.

Figure 4.

Top panel a. Regions showing differences in activity between Related and Same sentence pairs. Sagittal view at x = −53, 43, corrected at a voxelwise threshold of p < .05. Bottom panel b. Regions showing differences in activity between Unrelated and Same sentence pairs. Sagittal view at x = −40, 40, corrected at a voxelwise threshold of p < .05. Orange scale depicts positive t-values (greater activity for Related or Unrelated compared to Same). Blue scale depicts negative t-values (greater activity for Same).

Functional Connectivity Results

A gPPI functional connectivity analysis was conducted to probe whole brain connectivity. The seed region was the left middle frontal gyrus (LMFG) cluster from the Related/Unrelated contrast. The connectivity analysis revealed a network that included frontal (middle and medial), parietal (supramarginal and angular gyri), and temporal (left superior temporal gyrus) areas (see Table 4).
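The general structure of a gPPI model can be sketched as follows. This is a deliberately simplified illustration, not the pipeline used in the study: full gPPI implementations typically deconvolve the seed time series to an estimated neural signal and reconvolve the interaction terms with a hemodynamic response function, and both steps are omitted here. The function name `gppi_columns`, the column labels, and the toy inputs are hypothetical. The sketch only shows that the model contains the seed time series, one task regressor per condition, and one seed-by-condition interaction regressor per condition.

```python
def gppi_columns(seed, condition_regressors):
    """Build simplified gPPI design-matrix columns.

    seed: seed-region time series (one value per volume).
    condition_regressors: dict mapping a condition name to its task
        regressor (same length as seed).
    Returns a dict of named columns: the mean-centred seed, one
    psychological column per condition, and one interaction (PPI)
    column per condition (centred seed multiplied by the task regressor).
    """
    n = len(seed)
    mean_seed = sum(seed) / n
    seed_centred = [s - mean_seed for s in seed]

    columns = {"seed": seed_centred}
    for name, regressor in condition_regressors.items():
        if len(regressor) != n:
            raise ValueError(f"regressor for {name!r} has the wrong length")
        columns[f"psych_{name}"] = list(regressor)
        columns[f"ppi_{name}"] = [s * p for s, p in zip(seed_centred, regressor)]
    return columns


if __name__ == "__main__":
    # Toy example: four volumes, two conditions (values are illustrative).
    seed_ts = [1.0, 2.0, 3.0, 4.0]
    task = {"Related": [1, 1, 0, 0], "Unrelated": [0, 0, 1, 1]}
    for label, column in gppi_columns(seed_ts, task).items():
        print(label, column)
```

In a model of this form, connectivity effects are read from the interaction columns: a voxel whose coupling with the seed differs between conditions will show different weights on the corresponding interaction regressors.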

Table 4.

Connectivity results for functionally defined LMFG seed region

| Regions (LMFG seed region) | x | y | z | Maximum t-value | No. of voxels |
|---|---|---|---|---|---|
| Main Effect | | | | | |
| Right supramarginal gyrus * | 56 | −52 | 26 | 16.63 | 439 |
| Right middle frontal gyrus * | 32 | 17 | 44 | 12.92 | 186 |
| Right medial frontal gyrus (BA 9)* | 2 | 41 | 32 | 7.56 | 143 |
| Left superior and middle frontal gyrus (BA 8)* | −28 | 20 | 50 | 9.5 | 132 |
| Left angular gyrus* | −46 | −61 | 32 | 9.5 | 126 |
| Right fusiform gyrus* | 26 | −79 | −16 | 7.15 | 120 |
| Left precuneus | 8 | −55 | 56 | 7.62 | 79 |
| Left superior and transverse temporal gyrus | −40 | −19 | 8 | 7.58 | 67 |

Regions reported at the maximum t-value peak Talairach coordinates at a p-value of .05. Those corrected for cluster size at an alpha of .05 (> 82 voxels) are denoted with *.

Discussion

Prior research has focused on effects of meaning on the perception of degraded speech at a single word level or within a sentence. The current study investigated effects of conceptual relationships between two different sentences and their influence on the perception of acoustically degraded speech. Here, participants heard a clearly presented auditory sentence followed by an acoustically degraded sentence that was either conceptually related, conceptually unrelated, or was the same as the preceding sentence.

Importantly, both the conceptually related and conceptually unrelated prime-target sentence pairs consisted of different content words. Any difference between the two conditions was, thus, contingent upon the relationship between the overall sentence meanings of the prime and target sentences. Such a relationship requires building a conceptual structure from the individual words within each of the prime and target sentences and ultimately integrating the abstract conceptual structures between the two sentences.

Results showed that listeners’ word recognition accuracy for the acoustically degraded sentences was significantly higher in conditions where the target sentence was preceded by a conceptually related sentence compared to when it was preceded by a conceptually unrelated sentence. Of importance, facilitation of perception was not due to semantic associations between individual words across the prime-target sentence pairs, as evidenced by a failure to show a significant correlation between word recognition accuracy and the maximum semantic associations between the words of the two sentences. Thus, the overall meaning between the sentences, not the semantic association between individual words, mediated the observed facilitation effects on perception.

As expected, the difference between the two conditions was associated with recruitment of a number of cortical regions in a fronto-temporo-parietal network. In particular, frontal clusters emerged in the L IFG and the MFG bilaterally, temporal clusters emerged in the L MTG, and parietal clusters emerged in the L AG and the R SMG. When perceptual performance on the degraded sentences in the Related condition was correlated with activity in these areas, the L IFG (BA 13/45/47), L MFG, and L MTG showed a significant correlation between individual performance and brain activity. Therefore, the effect of two different but conceptually related sentences on the perception of degraded speech does appear to modulate BOLD signal responses in many of the same areas previously found in studies examining semantic effects of within-sentence meaning, including IFG, MTG, and AG. In contrast to prior studies, however, a cluster in the MFG emerged showing sensitivity to the perception of degraded speech influenced by whether the sentences are conceptually related or unrelated. Additionally, in contrast to other studies, this area showed greater activation for the Related compared to the Unrelated sentence pairs. We will first consider how activity within each of the clusters that emerged in this fronto-temporo-parietal network was modulated by the effect and then turn to how these patterns of activity may inform the functional architecture of the network.

Contributions from Frontal Cortex

The relative increase in activity in IFG for the Related compared to Unrelated sentence pairs is consistent with earlier research showing greater activity for effects of semantically biased and other predictive contexts on the perception of degraded speech (e.g., Sohoglu et al. 2012; Guediche et al., 2013). Greater activity in IFG (BA 45/47) has been used to argue for its role in providing greater modulatory feedback for a related (predictive or biased) sentence context compared to an unrelated one. This interpretation is also consistent with the increased activity in BA 45/47 found in the current study for the Related compared to the Same condition. In contrast to the Related condition, in the Same condition, both phonological and semantic information match in the two sentences. Thus, it is not necessary to ‘compute’ the extent of the conceptual relationship between the two sentences, since they are the same in acoustic speech properties, phonological information, words, and meaning. As a result, there is less reliance on frontal structures in the Same condition to identify the words in the degraded sentence.

Although the MFG has shown consistent activation across a number of studies examining semantic processing (Binder et al., 2009), has been implicated in word retrieval (Price, 2012), and linked to cognitive effects on speech perception related to working memory (Zekveld, Rudner, Johnsrude, Heslenfeld, & Ronnberg, 2012), increased activity in the L MFG has not been previously associated with effects of semantic context on degraded speech. That significant differences in activity were found in the MFG, and the degree of activation in the L MFG positively correlated with behavioral measures of accuracy in the related condition, suggest that this area not only encodes semantic relationships between sentence pairs but that its activity is related to the modulatory effects on speech perception.

Prior research has shown semantic priming effects with reduced activation in the MFG for acoustically unambiguous semantically related prime-target word pairs (e.g. cat-dog) compared to semantically unrelated prime-target pairs (e.g. shoe-dog) (Rissman, Eliassen, & Blumstein, 2003). Other neural areas also show reduced activation in a semantic priming task, e.g. the STG and inferior parietal lobe (Rissman et al., 2003). Enhanced activity in the MFG in the current study associated with effects of conceptually related compared to unrelated sentence meanings on the perception of degraded speech stands in contrast to these findings.

Effects of semantic priming have been interpreted as reflecting activation of a semantic network shared by the prime and target, hence requiring fewer neural resources to access the target stimulus given the preceding prime stimulus. Unlike most studies of semantic priming where one word facilitates recognition or processing of an acoustically unambiguous but semantically related word target, in this study, the target sentence was acoustically degraded. Hence, perception of the degraded input was modulated by the integration of acoustic and conceptual information in the target sentence with the conceptually related information in the preceding sentence. As shown in prior research (e.g., Calvert, Campbell, & Brammer, 2000; Tesink et al., 2009), integration of converging sources of information may enhance activity. Indeed, studies of context effects on degraded sentence perception often show increased activity in a predictive context (Clos, 2012; Wild et al., 2012; but cf. Obleser et al., 2010) or an interaction between predictability and intelligibility (e.g., Wild et al., 2012; Guediche et al, 2013; Gow et al., 2008).

Taken together, these findings support the view that frontal areas are sensitive to the conceptual relationships between sentences, even when this relationship depends on resolving degraded speech input. Whether the IFG and MFG have different functional roles cannot be determined from the current experiment. However, different functions have been attributed to these regions (Binder et al., 2009; Lau et al., 2008). In particular, it has been proposed that the MFG plays a critical role in tasks that require “self-guided semantic retrieval” (Binder et al., 2009, p. 2777). For example, damage to the MFG impairs the ability to generate responses that are not fully specified, such as generating words within a category, but leaves intact the ability to repeat words and/or name objects (Binder et al., 2009). Lau et al. (2008) attributed different functions in sentence processing to different portions of the IFG. They proposed that the anterior ventral portions of the IFG are involved in controlled semantic retrieval, whereas the mid and mid-posterior portions of the IFG are involved in more general selection mechanisms. The frontal clusters identified in the Related/Unrelated contrast included portions of both the anterior and posterior IFG as well as the MFG. Therefore, the functional role for these areas may draw upon all of these functions.

It is possible that modulation of activation in frontal areas reflects differences in the difficulty of processing the stimuli across the stimulus conditions. Performance is highest for Same sentence pairs, followed by Related and then Unrelated sentence pairs. U-shaped response profiles have been observed as a function of intelligibility and difficulty in both frontal and temporal areas (Poldrack et al., 2001; Hutchison et al., 2008). Thus, the recruitment of frontal areas including the LMFG may reflect difficulty differences among the conditions. However, it is important to note that in the current experiment, performance is a function of the nature of the relationships between the prime and target sentences. Therefore, any differences in activity between the Related and Unrelated sentence pairs or between the Related and Same pairs that may be due to difficulty are necessarily a result of the relationships between the sentences within the pair. What is not clear is whether increased difficulty might invoke the activation of frontal areas not seen for less difficult stimuli. Because the current study does not investigate the effect of different levels of degradation, we cannot address this issue. Nonetheless, in our view, this potential explanation seems unlikely given prior results in the literature. In particular, Obleser et al. (2007) showed increased involvement of a fronto-temporo-parietal network for conditions in which better perception of degraded speech was achieved for a more predictive sentence compared to a less predictive sentence. Integrative semantic priming of word pairs (e.g., cherry-cake) has also been associated with enhanced activation in frontal areas (Feng et al., 2015).

Contributions from Temporal Cortex

The current study revealed a number of temporal lobe clusters. Significant clusters emerged in the MTG and STG. According to models of language processing, the MTG has been linked to semantic processing and storage of lexical representation (e.g. Hickok and Poeppel, 2007; Binder et al., 2009). Not surprisingly, this area is sensitive to effects of context (Golestani et al., 2013) and interactions between sentence context and ambiguity of acoustic phonetic information have also been shown (Guediche et al., 2013; Gow et al., 2008). Brouwer and Hoeks (2013) propose that the MTG is a hub for the retrieval of word meaning, and is modulated by the convergence of semantic/conceptual and other information such as its sound structure (also see Visser, Jefferies, Embleton, & Lambon, 2012). Indeed, the significant differences in activity between Related and Unrelated sentence pairs shown in the MTG, as well as the correlation between activity and behavior for Related sentence pairs, suggest its direct involvement in integrating conceptual and acoustic information.

Of interest, the superior temporal gyri (STG) did not show any significant differences between the Related and Unrelated conditions. However, increases in activity for the Same condition compared to either the Related or the Unrelated conditions were found in the STG bilaterally. The behavioral results for the Same condition showed substantially better performance on word recognition compared to either the Related or Unrelated conditions. Therefore, the increased activation in STG for the Same stimulus pairs compared to the Related or the Unrelated pairs appears to reflect the perceptual similarity between the two sentences and the resulting increase in perceptual clarity (see Wild et al., 2012), rather than the integration of meaning between the sentence pairs.

As described earlier, the Same sentence pairs differed from the Related/Unrelated sentence pairs in a number of linguistic parameters; the Same sentence pairs tended to be syntactically simpler than the Related/Unrelated pairs, and the majority of the Same sentence pairs had inanimate subjects, whereas the Related/Unrelated sentence pairs had predominantly animate subjects. It is possible then that differences between the Same and Related/Unrelated conditions in the STG reflect the linguistic differences inherent in the sentence stimuli. Indeed, prior research has shown that sentence complexity and animacy both involve the STG (Kaan and Swaab, 2002; Just et al., 1996; Friederici, 2002, 2010; Caplan et al., 2016). However, the results obtained in the current study suggest that these factors cannot account for the pattern of activity obtained in the STG. In contrast to the findings in the literature showing that increased syntactic complexity increases activity in STG areas (Kaan and Swaab, 2002; Just et al., 1996), our results showed increased activity for the syntactically simpler Same sentence pairs. With respect to animacy, prior results showed that comparison of subject-initial versus object-initial sentences modulated activity in frontal cortex, not in the STG (Grewe et al., 2006, 2007), and sentences containing inanimate subjects and animate objects showed increased STG activity compared to sentences containing animate subjects and inanimate objects (Grewe et al., 2007). Here again, the results of the current study showed that the Same sentence pairs, which began with inanimate subjects, resulted in increased, not decreased, activation in the STG.

Surprisingly, no significant clusters emerged in regions in the anterior superior temporal gyrus (aSTG), associated in earlier research with meaning integration (e.g., Feng et al., 2015; Lau and Poeppel, 2008) and with modulation of degraded speech as a function of sentence meaning (anomalous vs. coherent) (Davis et al., 2011). It is possible that the failure to show differences in activation in the aSTG in the current study reflects signal dropout due to its location near the sinus cavity. Nonetheless, uncorrected clusters in the aSTG (see Tables 2 and 3) did show significant differences in activity between the Same condition and the Related and the Unrelated conditions. The lack of enhanced activity in the Related compared to the Unrelated condition in the anterior temporal lobe (ATL), unlike the enhancement found in the MTG, suggests that the ATL may only be involved in integrating the words into the meaning of a sentence, but not in integrating overall meaning relationships between two different sentences. Consistent with this interpretation is the reduced aSTG activity for the Same condition relative to both the Related and Unrelated conditions. In the Same condition, where the prime and target sentences have identical words, neither the overall meaning within a sentence nor the meaning relationship between the sentences needs to be computed. Therefore, the reduced activity in the aSTG for the Same condition compared to either the Related or the Unrelated conditions may reflect its role in assembling words into the overall meaning within a sentence, but not in relating meaning across sentences.

Contributions from Parietal Cortex

Prior research has suggested multiple roles for the AG in language processing. In particular, it is implicated in processing meaning and more recently has been shown to represent amodal conceptual information (Seghier et al., 2010). It has been proposed that this area is involved in integrating semantic information into context (Lau et al., 2008), assembling combinatorial semantics (Price et al., 2015), and semantic control (Noonan, Jefferies, Visser, & Lambon, 2013). A number of studies investigating context effects on speech perception have also found that activity in the inferior parietal cortex is modulated by both intelligibility and semantic context (Golestani et al., 2013; McGettigan et al., 2012; Obleser & Kotz, 2010). For example, Golestani et al. (2013) examined the effect of semantically related and unrelated word primes in a forced-choice recognition task, manipulating the noise level in which the second target word was presented. They found a significant interaction between the semantic manipulation and the distortion level only in the inferior parietal lobe and specifically in the angular gyrus.

Results of the current study are consistent with these findings, and extend them to the integration of conceptual meaning across sentences under conditions of degraded speech. In particular, the AG showed increased activation in the Related compared to the Unrelated condition.

Activation in Visual Cortex

In addition to the enhanced activity in frontal, temporal, and parietal areas, deactivation was shown in middle occipital cortex with a relative decrease in activity (more deactivation) for the Related compared to Unrelated sentence pairs. Although differential activity between these two auditory conditions was not expected in visual areas, studies examining context effects on speech perception have previously reported differences in activation as a function of context in occipital areas (e.g., Obleser et al., 2007; Sohoglu et al., 2012). The functional significance for this difference is not clear. Suppression of visual activity can be modulated by attention (Smith et al. 2000). One study suggests that selective attention to speech perception engages top-down control mechanisms to suppress activity in all visual areas, except regions involved in visual word form recognition due to the associations between spoken and written word recognition (Yoncheva et al., 2010). Thus, differences in visual activity between conditions may be explained by increased selective attention to the auditory speech stimuli for the Related compared to Unrelated sentence pairs. This interpretation is consistent with the functional connectivity results, which show connections to occipital areas from the LMFG seed, an area associated with selective attention (e.g., Lepsien and Pollman, 2002).

Network Connections

Thus far, we have described potential contributions of each cortical region. However, anatomical and functional connections among these regions in frontal, temporal, and parietal cortex have also been established. Turken and Dronkers (2011) showed the MTG is both structurally and functionally connected to the pars orbitalis of the IFG (BA47), the AG (BA 39), STG (BA 22) and functionally connected to the MFG (DLPFC/BA 46). The anatomical connections provide the architecture for interactions among these regions. Indeed, the strength of functional correlations may depend on task demands (Obleser & Kotz, 2011). Obleser et al. (2007) showed that connections among frontal, parietal, and temporal areas are modulated during speech perception depending on the predictability of the semantic context within individual sentences; functional connections among these areas increased when semantic context facilitated the perception of degraded speech.

The results of the current study build on previous findings that show that a fronto-temporo-parietal network is involved in the processing of meaning (e.g., Binder, 2009), is sensitive to word and sentence context, and is modulated by the acoustic input. For the first time, these findings suggest that modulation of activity in this network extends to the integration of conceptual information between sentences, and that the nature of the conceptual relationship between the sentences (conceptually related, conceptually unrelated, or the same) affects the perception of degraded speech.

The connectivity results show that the LMFG seed (which is more active for Related than Unrelated sentences) is part of a fronto-temporo-parietal network. Of interest, the LMFG has not typically been implicated in the processing of degraded speech as a function of conceptual meaning. Therefore, this area appears to be part of a functional network that integrates degraded acoustic information with the conceptual meaning of a sentence.

Several hypotheses have been proposed concerning the mechanism by which sensory information and prior knowledge are integrated. One view is that integration occurs at a post-perceptual stage of processing (feedforward only model). An alternative view is that higher level information influences early stages of sensory processing (feedback model). In a recent paper, Sohoglu et al. (2012) showed reduced activity in the STG when a matching text cue provides context. This effect emerges across degradation conditions. The authors conclude that their findings are consistent with a predictive coding model. However, the results of the current study suggest another possibility. In particular, integration of sensory information and conceptual meaning occurs simultaneously throughout a fronto-temporo-parietal network. This interpretation is based on the current findings that prior knowledge of the conceptual meaning of a sentence influences the perception of degraded speech. Increased activity was shown for sentences that were conceptually related compared to sentences that were conceptually unrelated in both frontal (IFG, MFG) and temporal areas (MTG/STG). The similar pattern that emerged in both frontal and temporal areas suggests that these areas are part of a common functional network.

At least two potential architectures could account for this functional network. Whereas the STG receives sensory input and preserves acoustic fine detail, the MTG, AG, IFG and MFG are recruited for higher level linguistic processing (lexical, syntactic, semantic) needed to derive the conceptual meaning of a sentence. What is unclear is whether acoustic fine detail is preserved in these other areas or not. One possibility is that the areas within the network are heteromodal, preserving details of the incoming acoustic signal from the STG and integrating this information with higher levels of language. In this view, both acoustic and conceptual information are integrated throughout the processing stream. Another possibility is that the ‘goodness’ of the sensory input produces graded activation of the more abstract lexical, syntactic, and semantic information, but the sensory information per se is no longer preserved at higher levels of abstraction. In other words, high quality acoustic speech input fully activates information at higher levels of language abstraction (e.g., lexical, semantic, conceptual), whereas poorer quality acoustic speech input more weakly activates these higher levels of language. As a result, the integration of meaning would be based only on abstract linguistic properties corresponding to different linguistic information (i.e., lexical, syntactic, and semantic), and acoustic fine details would not be preserved throughout the processing stream. In either case, modulation of activation as a function of the conceptual relationship between the two sentences would emerge in a network of fronto-temporo-parietal areas, and enhance activity as evidence accumulates and propagates through the speech system.

These results are consistent with a highly interactive speech processing system, in which sources of information that constrain and provide consistent interpretations of the sensory input mutually activate one another (McClelland, Mirman, Bolger, & Khaitan, 2014). In such a model, there may be functional differences in the ‘weighting’ of information in different areas within the fronto-temporo-parietal network, with the MTG involved in accessing semantic/conceptual properties of words, the AG being particularly sensitive to lexical-semantic properties of words and their integration with each other, and the IFG and MFG recruited in both integration and selection processes. Whether there is differential weighting cannot be determined by the results of the current experiment. Nonetheless, the evidence suggests that a fronto-temporo-parietal network serves to build and integrate conceptual relations within and between sentences derived from the sensory input, the meanings of words, and their compositional structure.

Potential role of subcortical structures

In addition to the clusters identified in regions of the cerebral cortex, several clusters emerged in subcortical structures. A significant cluster in the thalamus showed increased activity for the Related compared to the Unrelated condition. Two additional subcortical regions of interest, in the cerebellum and caudate, also showed significant differences in activity, p < .05 at an uncorrected cluster size threshold (see Table 1). Most language research focuses on the cortical structures that form the ‘language network’; however, it is worthwhile considering the contribution of subcortical structures to language processing.

The thalamus receives inputs from many different sensory modalities and serves as a relay to the cerebral cortex. It has been referred to as a “hub” and a site of integration for input from multiple modalities (Cappe, Rouiller, & Barone, 2012). Thus, it may be involved in feedforward-feedback interactions (Alitto & Usrey, 2003) that regulate the processing of sensory input. There is some evidence that it participates in adaptive functions in speech perception; Erb et al. (2013) showed that thalamo-cortical interactions are upregulated during successful adaptation to distorted sentences. Therefore, the increased activity observed in the thalamus may also reflect differences in the perception of the degraded sentence between the Related and Unrelated conditions.

Adaptive functions of the cerebellum have been cited across many domains of motor, language, and cognitive processes (e.g., Callan, Callan, & Jones, 2014; Guediche, Holt, Laurent, Lim, & Fiez, 2014; Schmahmann, Macmore, & Vangel, 2009; Stoodley & Schmahmann, 2009). The subregion of the cerebellum reported in the current study for the Related/Unrelated contrast, in Crus I, has been previously linked to language processes and adaptive processes (e.g., Schmahmann et al., 2009; Guediche et al., 2014) and may reflect the encoding of discrepancies between expected and actual sensory input (Rothermich & Kotz, 2013; Schlerf, Ivry, & Diedrichsen, 2012).

The role of the basal ganglia likely reflects reward processes involved in the successful retrieval and perception of degraded speech (see Schwarze, Bingel, Badre, & Sommer, 2013). This view is consistent with the finding that striatal connections to the IFG during sentence-level unification support retrieval from LpMTG (Snijders, Petersson, & Hagoort, 2010). Furthermore, a recent adaptation study showed that basal ganglia involvement in reinforcement learning signals is linked to adaptive changes in speech perception (Lim, Fiez, & Holt, 2014).

Summary

The current study investigated differences in brain activity between different types of clear-degraded sentence pairs, which were conceptually Related, Unrelated, or the Same. A network of regions in frontal, parietal, temporal, and subcortical structures showed enhanced activity for the Related compared to Unrelated sentence pairs. Activity in the left inferior and middle frontal and middle temporal areas also correlated with individual word recognition performance. In addition to frontal areas and MTG, the angular gyrus and subcortical structures including the thalamus, caudate, and cerebellum also showed greater activity for conceptually related compared to unrelated sentence pairs.

The collection of areas identified as showing enhanced activity for Related sentence contexts is consistent with a mechanism in which integration occurs simultaneously throughout a fronto-temporo-parietal functional network. There are two possible instantiations of this network. In one case, all of the components of this network are involved in integrating the related sentence meaning with the acoustic signal. In their neuroimaging meta-analysis of semantic processing studies, Binder et al. (2009) distinguish an ‘intrinsic’ semantic network from an ‘extrinsic’ perceptual network. Interestingly, the overlap between the two networks includes many of the areas identified in this study, including the IFG, MFG, AG, and MTG (Binder et al., 2009, p. 2783, Figure 9), which suggests that these regions integrate external acoustic input with internally generated semantic information. Another possibility is that semantic and acoustic information are integrated with one another in one region, which then modulates activity throughout the network but in relation to functionally specific regions in the network. Regardless of the exact mechanism, this fronto-temporo-parietal network, which includes the thalamus and other subcortical areas in the caudate and the cerebellum, appears to consolidate information sources across multiple levels of language (acoustic, lexical, syntactic, semantic) to build and ultimately integrate conceptual meaning across sentences and facilitate the perception of a degraded speech signal.

Supplementary Material

Appendix

Sentences used for the Related and Unrelated conditions

| Prime (Version 1) | Target | Condition |
|---|---|---|
| She wasted half the day waiting for the electrician. | She expected the repairman to come all morning. | Related |
| He squished the beetle under his foot. | He killed the bug with his shoe. | Related |
| She won an award for her journalism. | She was recognized for her news article. | Related |
| He pressed his button down. | He ironed his favorite shirt. | Related |
| The suspect escaped arrest. | The criminal got away from the police. | Related |
| He decided to marry his sweetheart. | He proposed to his girlfriend. | Related |
| She lost her bifocals. | She misplaced her glasses. | Related |
| The youth went swimming because it was hot. | The kids went to the pool to cool down. | Related |
| She went shopping for dress shoes. | She looked for a pair of heels. | Related |
| She could tell it was him by his outfit. | She recognized her friend by his shirt. | Related |
| She had to get a passing score to get her diploma. | She needed a good grade to graduate. | Related |
| She doubted his faithfulness. | She suspected he was cheating. | Related |
| He was unable to give up cigarettes. | He had a difficult time quitting smoking. | Related |
| She sewed a fastener on the blazer. | She replaced a button on her jacket. | Related |
| She had bad motor coordination. | She was clumsy. | Related |
| Her feet hurt after the hike. | Her legs were sore from the long walk. | Related |
| He was not on time because he got lost. | He arrived late because he took a wrong turn. | Related |
| He gave his manuscript to his agent. | He sent his novel to his publisher. | Related |
| The dog gnawed the stocking. | The puppy chewed the sock. | Related |
| She put her clothes in a suitcase for vacation. | She packed a bag for her trip. | Related |
| He requested that his boss speak on his behalf. | He asked a superior to vouch for him. | Related |
| She pruned her garden. | She tended to her flowers. | Related |
| She ran out of medicine. | She needed a refill on her prescription. | Related |
| They ordered a bottle of wine with dinner. | They had a drink with their meal. | Related |
| She craved ice cream after her meal. | She wanted a frozen dessert after dinner. | Related |
| She works out daily at the track. | She goes for a run every morning. | Related |
| Her colleagues frequently played jokes on her. | Her coworkers enjoyed pranks at her expense. | Related |
| She read a book about cooking. | She was a double agent for the enemy. | Unrelated |
| He was looking for his watch. | She could not wait to open her presents. | Unrelated |
| He looked like a famous person. | She was a terrible artist. | Unrelated |
| He wrote a short story. | She dove into the pool. | Unrelated |
| Reading in the dark is not fun. | Going to nice restaurants is her favorite hobby. | Unrelated |
| She dreamed of meeting movie stars. | She served a refreshing beverage to her friends. | Unrelated |
| She drove to the mall. | He scored a goal in soccer. | Unrelated |
| The ball was rolling down the hill. | She cooked a big dinner for her friends. | Unrelated |
| She ate all of the food in the refrigerator. | She was punished for coming home late. | Unrelated |
| She wears high heels when she goes out. | He gains weight when the season is cold. | Unrelated |
| He helped an old man climb the stairs. | She took care of her sister’s daughter. | Unrelated |
| The faster she ran the closer she got. | The harder he tried the less he got done. | Unrelated |
| He went home early. | She found a ticket on her windshield. | Unrelated |
| He works at a restaurant sometimes. | She drinks a pot of tea in the evening. | Unrelated |
| Commuting to the new job made him exhausted. | Studying ballet increased her flexibility. | Unrelated |
| He fell into a ditch. | She forgot an umbrella and got soaked. | Unrelated |
| He slept in his car. | He recognized the tune. | Unrelated |
| He holds the door open. | She pays her bills on time. | Unrelated |
| He put the pencil on the table. | He screwed the cap on the bottle. | Unrelated |
| He filled the tank. | She was behind in her work. | Unrelated |
| The teacher cleaned the erasers. | The singer wrote music. | Unrelated |
| She fell off the bridge into the water below. | She taught her son manners. | Unrelated |
| She knew the subject matter. | She was taught to skydive. | Unrelated |
| There was enough food for the party. | There were insufficient funds for her check. | Unrelated |
| She found the children at the park. | She bought broccoli and carrots at the grocery store. | Unrelated |
| She tripped over the pair of red shoes. | She went to the salon to get a haircut. | Unrelated |
| He went to the beach. | She moved out of her apartment. | Unrelated |

| Prime (Version 2) | Target | Condition |
|---|---|---|
| Visiting eateries is her most popular pastime. | Going to nice restaurants is her favorite hobby. | Related |
| She was excited to unwrap the packages. | She could not wait to open her presents. | Related |
| She was in trouble for missing the curfew. | She was punished for coming home late. | Related |
| She jumped in the water. | She dove into the pool. | Related |
| She gave lemonade to her guests. | She served a refreshing beverage to her friends. | Related |
| He kicked a ball into the net during the game. | He scored a goal in soccer. | Related |
| She drew bad pictures. | She was a terrible artist. | Related |
| She was a spy for a military foe. | She was a double agent for the enemy. | Related |
| She made a three course meal for her guests. | She cooked a big dinner for her friends. | Related |
| Practicing dance made her limber. | Studying ballet increased her flexibility. | Related |
| She babysat her niece. | She took care of her sister’s daughter. | Related |
| He gets heavier in the winter. | He gains weight when the season is cold. | Related |
| She always mails her checks by the deadline. | She pays her bills on time. | Related |
| The more he worked the worse his accomplishment. | The harder he tried the less he got done. | Related |
| She brews a hot beverage after dinner. | She drinks a pot of tea in the evening. | Related |
| She had a parking fine on her car. | She found a ticket on her windshield. | Related |
| He was familiar with the song. | He recognized the tune. | Related |
| She had nothing to keep rain off of her. | She forgot an umbrella and got soaked. | Related |
| She purchased vegetables at the supermarket. | She bought broccoli and carrots at the grocery store. | Related |
| She treated herself to a makeover. | She went to the salon to get a haircut. | Related |
| She relocated to a new residence. | She moved out of her apartment. | Related |
| She had tasks piling up. | She was behind in her work. | Related |
| He put the lid on the container. | He screwed the cap on the bottle. | Related |
| The musician composed songs. | The singer wrote music. | Related |
| Her bank account was overdrawn. | There were insufficient funds for her check. | Related |
| She learned how to jump out of an airplane. | She was taught to skydive. | Related |
| She raised her boy to be polite. | She taught her son manners. | Related |
| He retracted the statement. | He proposed to his girlfriend. | Unrelated |
| He watched television for a long time. | She expected the repairman to come all morning. | Unrelated |
| He bought a brand new car. | She needed a refill on her prescription. | Unrelated |
| The boys had school in the fall. | The kids went to the pool to cool down. | Unrelated |
| He accidentally smashed the light bulb. | She misplaced her glasses. | Unrelated |
| The athlete completed the tournament. | The criminal got away from the police. | Unrelated |
| He answered the phone. | He ironed his favorite shirt. | Unrelated |
| She put a jacket on. | He killed the bug with his shoe. | Unrelated |