Abstract

Protein remote homology detection is one of the central problems in bioinformatics. Although a number of computational methods have been proposed, the problem is still far from being solved. In this paper, we propose an ensemble classifier for protein remote homology detection, called SVM-Ensemble, which uses a weighted voting strategy. SVM-Ensemble combines three basic classifiers built on different feature spaces: Kmer, ACC, and SC-PseAAC. These features describe proteins from different perspectives, incorporating both the sequence composition and the sequence-order information along the protein sequences. Experimental results on a widely used benchmark dataset showed that SVM-Ensemble improves the predictive performance over each of its basic classifiers. Moreover, it achieved a higher average ROC score than the other state-of-the-art methods it was compared with.

1. Introduction

In computational biology, protein remote homology detection is the classification of proteins into structural and functional classes given their amino acid sequences, especially when sequence identities are low. It is a critical step for both basic research and practical applications, such as protein 3D structure and function prediction [1, 2]. Although remotely homologous proteins have similar structures and functions, they lack easily detectable sequence similarities, because protein structures are more conserved than protein sequences. When the protein sequence similarity is below 35% at the amino acid level, the alignment score usually falls into a twilight zone [3, 4]. Therefore, computational approaches based only on protein sequence features often fail to detect remote homology. To improve the specificity and sensitivity of the detection, we propose an ensemble learning method that combines basic classifiers based on different feature spaces.

To date, many methods for protein remote homology detection have been proposed, which can be categorized into three groups [5]: pairwise alignment algorithms, generative models, and discriminative classifiers. The earliest computational approaches are pairwise alignment methods, which detect sequence similarities between two given protein sequences by using the Needleman-Wunsch global alignment algorithm [6, 7] or the Smith-Waterman local alignment algorithm [8]. Later, heuristic methods such as BLAST [9] and FASTA [10] were proposed, which trade some accuracy for improved efficiency. PSI-BLAST [11] iteratively builds a probabilistic profile of a query sequence, from which a more sensitive sequence comparison score can be calculated [12]. Generative algorithms subsequently improved the predictive accuracy significantly over pairwise alignment methods. Generative models are iteratively trained on positive samples of a protein family or superfamily; for example, HHblits [13] generates a profile hidden Markov model (profile-HMM) [14, 15] from the query sequence and iteratively searches through a large database.

Currently, discriminative methods achieve the state-of-the-art performance [16–19]. Unlike pairwise alignment algorithms and generative methods, discriminative methods can easily embed various characteristics of protein sequences and learn from both positive and negative samples in a given benchmark dataset. A key requirement of discriminative methods is that their input consists of fixed-length feature vectors. Researchers have therefore proposed various feature vectors for protein representation. Some methods, such as SVM-DR [25], are based on sequence information, physical and chemical properties of proteins [20–22], or secondary structure information [23, 24]. Others are based on kernel methods, such as SVM-Pairwise [5], SVM-LA [26], motif kernel [27], mismatch [28], SW-PSSM [29], and profile kernel [30]. The performance of discriminative approaches was later further improved by Top-n-gram, which transforms protein profiles into pseudo protein sequences that contain evolutionary information [31–33].

Although many discriminative methods for protein remote homology detection have been proposed based on various feature extraction techniques, no attempt has been made to combine these methods with ensemble learning to improve predictive performance. An ensemble classifier [34, 35] is built by combining a set of basic classifiers with a weighted voting strategy to reach a final decision when classifying a query sample. Ensemble classifiers have achieved great success in many fields, including protein-protein interaction sites [36], protein fold pattern recognition [22, 37], tRNA detection [38, 39], microRNA identification [40–44], DNA binding protein identification [45], and eukaryotic protein subcellular location prediction [46], because they are able to learn a more expressive concept than a single classifier and reduce the variance caused by a single classifier.

In this study, inspired by the success of ensemble classifiers in other fields, we propose an ensemble classifier for protein remote homology detection, called SVM-Ensemble, which combines three state-of-the-art discriminative methods with a weighted voting strategy. The three basic classifiers SVM-Kmer, SVM-ACC, and SVM-SC-PseAAC were constructed with Kmer, auto-cross covariance (ACC), and series correlation pseudo amino acid composition (SC-PseAAC), respectively. Experimental results on a widely used benchmark dataset [5] showed that SVM-Ensemble improves the predictive performance by combining various features. Moreover, SVM-Ensemble achieved an average ROC score of 0.943, outperforming the other state-of-the-art methods, indicating that it would be a useful computational tool for protein remote homology detection.

2. Materials and Methods

2.1. Benchmark Dataset

A widely used superfamily benchmark [5] was used to evaluate the performance of our method for protein remote homology detection. The classification problem definition and benchmark dataset are available at http://noble.gs.washington.edu/proj/svm-pairwise/. The same dataset has been used in a number of earlier studies [26, 47–50], allowing direct performance comparisons with those methods.

The benchmark contains 54 families and 4352 proteins, which are derived from version 1.53 of the SCOP database; the similarity between any two sequences is below an E-value of $10^{-25}$. Remote homology detection can be treated as a superfamily classification problem. For each family, the proteins within the family were regarded as positive test samples, and the proteins outside the family but within the same superfamily were taken as positive training samples. Negative samples were selected from outside of the fold and split into training and testing sets. This process was repeated until each family had been tested. This yielded 54 families with at least 10 positive training examples and 5 positive test examples.

2.2. Profile-Based Protein Representation

Although some methods have achieved a certain degree of success by using amino acid sequence information alone, their performance is not satisfactory. Recent studies demonstrated that methods operating on profile-based protein sequences perform better, because a profile carries richer evolutionary information than an individual sequence [50, 53].

The frequency profile 𝕄 for protein P with L amino acids can be represented as

$$
\mathbb{M} = \begin{bmatrix} m_{1,1} & m_{1,2} & \cdots & m_{1,L} \\ m_{2,1} & m_{2,2} & \cdots & m_{2,L} \\ \vdots & \vdots & \ddots & \vdots \\ m_{20,1} & m_{20,2} & \cdots & m_{20,L} \end{bmatrix} \tag{1}
$$

where $m_{i,j}$ ($0 \le m_{i,j} \le 1$) is the target frequency, which reflects the probability of amino acid $i$ ($i = 1, 2, \ldots, 20$) occurring at sequence position $j$ ($j = 1, 2, \ldots, L$) in protein $P$ during evolutionary processes. For each column in 𝕄, the elements add up to 1. Each column can therefore be regarded as an independent multinomial distribution. The target frequencies were calculated from the multiple sequence alignments generated by running PSI-BLAST [11] against the NCBI NR database with default parameters, except that the number of iterations was set to 10 in the current study. The details of how to build a frequency profile can be found in [50].

Given the frequency profile 𝕄 for protein P, we can find the amino acid with maximum frequency in each column of 𝕄. These amino acids are combined to produce the profile-based protein representation. In a frequency profile 𝕄, the target frequencies reflect the probabilities of the corresponding amino acids appearing in the specific sequence positions. The higher the frequency is, the more likely the corresponding amino acid occurs. Thus, the produced profile-based protein sequence contains evolutionary information in the frequency profile. We convert the frequency profiles into a series of profile-based proteins. The existing sequence-based methods can therefore be directly performed on the protein representations for further processing.
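The column-wise selection described above can be sketched in a few lines of Python. This is only an illustrative sketch: the row order `AMINO_ACIDS`, the function name `profile_to_sequence`, and the toy profile are assumptions made for the example, and ties between equally frequent amino acids are broken by row order, which the text does not specify.

```python
# Assumed row order of the 20 x L frequency profile (not specified in the text).
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def profile_to_sequence(profile):
    """Return the profile-based sequence: the most frequent amino acid per column.

    `profile` is a list of 20 rows (one per amino acid), each holding L
    target frequencies.
    """
    if len(profile) != len(AMINO_ACIDS):
        raise ValueError("expected one row per amino acid")
    length = len(profile[0])
    residues = []
    for j in range(length):
        column = [profile[i][j] for i in range(len(AMINO_ACIDS))]
        # Index of the largest frequency; the first maximum wins on ties.
        best_row = max(range(len(column)), key=column.__getitem__)
        residues.append(AMINO_ACIDS[best_row])
    return "".join(residues)


# Toy profile with three columns (hypothetical frequencies, each column sums to 1).
toy = [[0.0] * 3 for _ in AMINO_ACIDS]
toy[AMINO_ACIDS.index("M")][0] = 0.7
toy[AMINO_ACIDS.index("A")][0] = 0.3
toy[AMINO_ACIDS.index("K")][1] = 1.0
toy[AMINO_ACIDS.index("W")][2] = 0.6
toy[AMINO_ACIDS.index("Y")][2] = 0.4

print(profile_to_sequence(toy))  # MKW
```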

2.3. Feature Vector Representations for Protein Sequences

In this study, three kinds of features have been employed to construct the SVM-Ensemble predictor, including Kmer, auto-cross covariance (ACC), and series correlation pseudo amino acid composition (SC-PseAAC).

Suppose a protein sequence P with L amino acid residues can be represented as

$$
P = R_1 R_2 R_3 \cdots R_L \tag{2}
$$

where $R_i$ represents the amino acid residue at sequence position $i$: $R_1$ is the residue at position 1, $R_2$ is the residue at position 2, and so on. The three representation methods used are described as follows.

2.3.1. Kmer

Kmer [56] is the simplest approach to represent the proteins, in which the protein sequences are represented as the occurrence frequencies of k neighboring amino acids.
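As an illustration, a Kmer feature vector can be computed as sketched below. The function names, the alphabetical amino acid ordering, and the normalization by the total number of counted windows are assumptions made for this example; the exact normalization used by the authors' tooling is not stated here. Windows containing non-standard residues are simply skipped.

```python
from itertools import product

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def kmer_vocabulary(k):
    """All 20**k possible k-mers, in a fixed (assumed) lexicographic order."""
    return ["".join(p) for p in product(AMINO_ACIDS, repeat=k)]


def kmer_features(sequence, k=2):
    """Occurrence frequencies of k neighboring amino acids in `sequence`."""
    vocabulary = kmer_vocabulary(k)
    counts = dict.fromkeys(vocabulary, 0)
    total = 0
    for i in range(len(sequence) - k + 1):
        word = sequence[i:i + k]
        if word in counts:  # skip windows with non-standard residues
            counts[word] += 1
            total += 1
    if total == 0:
        return [0.0] * len(vocabulary)
    return [counts[word] / total for word in vocabulary]


vec = kmer_features("MKMKW", k=2)
print(len(vec))                             # 400
print(vec[kmer_vocabulary(2).index("MK")])  # 0.5
```

With k = 2 the vector has 20² = 400 dimensions, so the dimensionality grows quickly with k.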

2.3.2. Auto-Cross Covariance (ACC)

The ACC transformation [60–62] builds two signal sequences and then calculates the correlation between them. It yields two kinds of variables: autocovariance (AC) and cross covariance (CC). The AC variable measures the correlation of the same property between two residues separated by a distance of lag along the sequence. The CC variable measures the correlation of two different properties between two residues separated by lag along the sequence.

Autocovariance (AC) Transformation. Given a protein sequence P in (2), the AC variable can be calculated by

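$$
AC(u, \mathrm{lag}) = \frac{1}{L - \mathrm{lag}} \sum_{i=1}^{L-\mathrm{lag}} \left( P_u(R_i) - \bar{P}_u \right) \left( P_u(R_{i+\mathrm{lag}}) - \bar{P}_u \right) \tag{3}
$$

where $u$ is a physicochemical index, $L$ is the length of the protein sequence, $P_u(R_i)$ is the numerical value of the physicochemical index $u$ for the amino acid $R_i$, and $\bar{P}_u$ is the average value of physicochemical index $u$ along the whole sequence:

$$
\bar{P}_u = \frac{1}{L} \sum_{j=1}^{L} P_u(R_j) \tag{4}
$$

In this way, the length of the AC feature vector is $N \times \mathrm{LAG}$, where $N$ is the number of physicochemical indices and LAG is the maximum value of lag (lag = 1, 2, …, LAG).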

Cross Covariance (CC) Transformation. Given a protein sequence P in (2), the CC variable can be calculated by

$$
CC(u_1, u_2, \mathrm{lag}) = \frac{1}{L - \mathrm{lag}} \sum_{i=1}^{L-\mathrm{lag}} \left( P_{u_1}(R_i) - \bar{P}_{u_1} \right) \left( P_{u_2}(R_{i+\mathrm{lag}}) - \bar{P}_{u_2} \right) \tag{5}
$$

where $u_1$ and $u_2$ are two different physicochemical indices, $L$ is the length of the protein sequence, and $P_{u_1}(R_i)$ and $P_{u_2}(R_{i+\mathrm{lag}})$ are the numerical values of the physicochemical indices $u_1$ and $u_2$ for the amino acids $R_i$ and $R_{i+\mathrm{lag}}$, respectively. $\bar{P}_{u_1}$ and $\bar{P}_{u_2}$ are the average values of the physicochemical indices $u_1$ and $u_2$ along the whole sequence, and they can be calculated by (4).

In this way, the length of the CC feature vector is $N \times (N - 1) \times \mathrm{LAG}$, where $N$ is the number of physicochemical indices and LAG is the maximum value of lag (lag = 1, 2, …, LAG).

Therefore, the length of the ACC feature vector is $N \times N \times \mathrm{LAG}$. In the current implementation, three physicochemical properties were employed, namely hydrophobicity, hydrophilicity, and mass (see Table S1 in Supplementary file, available online at http://dx.doi.org/10.1155/2016/5813645), extracted from AAindex [57, 63].
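The following Python sketch illustrates how AC and CC variables of this kind could be computed. It assumes the commonly used ACC form in which the product of mean-centered property values is averaged over the L − lag residue pairs. The function names and the toy property tables `p1` and `p2` are hypothetical placeholders, not the AAindex values used in the study.

```python
def mean_property(seq, prop):
    """Average value of one physicochemical index along the sequence."""
    return sum(prop[r] for r in seq) / len(seq)


def cc(seq, prop1, prop2, lag):
    """Cross covariance between prop1 at position i and prop2 at position i + lag."""
    if not 0 < lag < len(seq):
        raise ValueError("lag must satisfy 0 < lag < len(seq)")
    mean1 = mean_property(seq, prop1)
    mean2 = mean_property(seq, prop2)
    n = len(seq) - lag
    total = sum(
        (prop1[seq[i]] - mean1) * (prop2[seq[i + lag]] - mean2) for i in range(n)
    )
    return total / n


def ac(seq, prop, lag):
    """Autocovariance: the cross covariance of a property with itself."""
    return cc(seq, prop, prop, lag)


def acc_vector(seq, props, max_lag):
    """Concatenate all AC variables, then all CC variables, for lag = 1..max_lag."""
    names = sorted(props)
    lags = range(1, max_lag + 1)
    features = [ac(seq, props[u], lag) for u in names for lag in lags]
    features += [
        cc(seq, props[u1], props[u2], lag)
        for u1 in names
        for u2 in names
        if u1 != u2
        for lag in lags
    ]
    return features


# Hypothetical property values over a two-letter toy alphabet.
p1 = {"A": 1.0, "C": -1.0}
p2 = {"A": 0.5, "C": 2.0}

print(ac("ACAC", p1, 1))  # -1.0
print(ac("ACAC", p1, 2))  # 1.0
print(len(acc_vector("ACACAC", {"u1": p1, "u2": p2}, max_lag=2)))  # 8
```

With N = 2 toy properties and LAG = 2 the vector has 2 × 2 × 2 = 8 entries, matching the N × N × LAG count stated in the text.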

2.3.3. Series Correlation Pseudo Amino Acid Composition (SC-PseAAC)

SC-PseAAC [64] is an approach incorporating the contiguous local sequence-order information and the global sequence-order information into the feature vector of the protein sequence. Given a protein sequence P in (2), the SC-PseAAC [64] feature vector of P is defined:

| (6) |

where

| (7) |

where $f_i$ ($i = 1, 2, \ldots, 20$) is the normalized occurrence frequency of the 20 native amino acids in the protein $P$; the parameter $\lambda$ is an integer, representing the highest counted rank (or tier) of the correlation along a protein sequence; $w$ is the weight factor ranging from 0 to 1; and $\tau_j$ is the $j$-tier sequence-correlation factor that reflects the sequence-order correlation between all of the most contiguous residues along a protein sequence, which is defined as

| (8) |

where $H^1_{i,j}$, $H^2_{i,j}$, and $M_{i,j}$ are the hydrophobicity, hydrophilicity, and mass correlation functions given by

| (9) |

where the correlation functions are computed from the substituted values of hydrophobicity, hydrophilicity, and mass for amino acid $R_i$. These values are all subjected to a standard conversion as described by the following equation:

| (10) |

where we use $\mathbb{R}_i$ ($i = 1, 2, \ldots, 20$) to represent the 20 native amino acids. The symbols $h^1(R_i)$, $h^2(R_i)$, and $m(R_i)$ represent the original hydrophobicity, hydrophilicity, and mass values (see Table S1 in Supplementary file) of the amino acid $R_i$.
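As a rough illustration of such a standard conversion, the sketch below rescales a property table so that its values have zero mean and unit standard deviation over the 20 native amino acids. This is an assumption based on the conversion commonly used with pseudo amino acid composition, and it may differ in detail from the authors' equation. The function name and the placeholder input values are hypothetical and are not the values of Table S1.

```python
from statistics import mean, pstdev

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def standard_conversion(raw):
    """Rescale property values to zero mean and unit (population) standard deviation."""
    values = list(raw.values())
    mu = mean(values)
    sd = pstdev(values)
    if sd == 0:
        raise ValueError("property values must not all be identical")
    return {aa: (value - mu) / sd for aa, value in raw.items()}


# Placeholder property values, one per amino acid (NOT real physicochemical data).
raw_values = {aa: float(index) for index, aa in enumerate(AMINO_ACIDS)}
converted = standard_conversion(raw_values)

print(round(mean(converted.values()), 6))    # 0.0
print(round(pstdev(converted.values()), 6))  # 1.0
```

After conversion, properties measured on very different scales (for example, mass versus hydrophobicity) contribute on a comparable scale to the correlation factors.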

These features can be generated by a web server called Pse-in-One [56], which generates the desired feature vectors for protein/peptide and DNA/RNA sequences according to the needs of users' studies. It covers a total of 28 different modes, of which 14 are for DNA sequences, 6 are for RNA sequences, and 8 are for protein sequences.

2.4. Support Vector Machine

Support vector machine (SVM) is a supervised machine learning technique for classification tasks based on statistical learning theory [65, 66]. Given a set of fixed-length training vectors with labels (positive and negative input samples), SVM learns a linear decision boundary to discriminate the two classes. The result is a linear classification rule that can be used to classify new test samples. When the samples are not linearly separable, a kernel function can be used to map the samples to a higher-dimensional feature space in which the optimal hyperplane can be found as the decision boundary. SVM has exhibited excellent performance in practice [54, 58, 67–73] and has a strong theoretical foundation in statistical learning.

In this study, the publicly available Gist SVM package (http://www.chibi.ubc.ca/gist/) is employed. The default parameters of the Gist package are used, except that the kernel function is set to the radial basis function.
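For readers unfamiliar with the radial basis function kernel, a minimal sketch of its textbook form, K(x, y) = exp(−γ‖x − y‖²), is given below. The function name and the `gamma` parameter are illustrative assumptions; the Gist package may parameterize the kernel width differently, and this sketch does not reproduce Gist itself.

```python
import math


def rbf_kernel(x, y, gamma=1.0):
    """Textbook RBF kernel: exp(-gamma * squared Euclidean distance)."""
    if len(x) != len(y):
        raise ValueError("feature vectors must have the same length")
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


print(rbf_kernel([0.0, 0.0], [0.0, 0.0]))  # 1.0
print(rbf_kernel([1.0, 0.0], [0.0, 0.0]) == math.exp(-1.0))  # True
```

The kernel value is 1 for identical feature vectors and decays towards 0 as they move apart, which is what lets the SVM draw a nonlinear boundary in the original feature space.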

2.5. Ensemble Classifier

The ensemble classifier is able to learn a more expressive concept in classification compared to a single classifier and reduces the variance caused by a single classifier. Therefore, it was employed in many fields and achieved great success [36, 37].

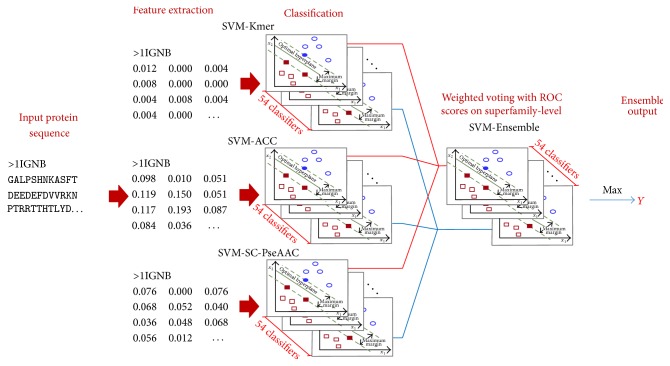

In this paper, we proposed a weighted voting strategy for protein remote homology detection, as shown in Figure 1. The ensemble framework of SVM-Ensemble was constructed by combining SVM-Kmer, SVM-ACC, and SVM-SC-PseAAC with weighted factors. The processing can be formulated as below.

Figure 1.

Flowchart showing how the ensemble classifier is formed by combining three basic classifiers at the superfamily level. The ensemble strategy is first applied at the superfamily level, and the query protein P is then predicted to belong to the superfamily for which its score is the highest.

Suppose the ensemble classifier is expressed by

| (11) |

| (12) |

where $\mathbb{C}_{iS_j}$ represents the $i$th basic SVM classifier on superfamily $S_j$ ($1 \le j \le 54$). That is, $\mathbb{C}_{1S_1}$ represents the classifier SVM-Kmer that operates on superfamily $S_1$, $\mathbb{C}_{2S_1}$ represents the classifier SVM-ACC that operates on superfamily $S_1$, and $\mathbb{C}_{3S_1}$ represents the classifier SVM-SC-PseAAC that operates on superfamily $S_1$. $\mathbb{C}_{S_j}$ is the average performance of the three basic classifiers on superfamily $S_j$ with the weighted voting strategy. In (12), the symbol ⊕ denotes the weighted voting operator.

The three basic classifiers can be combined by using the following equation:

| (13) |

where $\mathbb{C}_{iS_j}(P, S_j)$ is the belief function, or supporting degree, for $P$ belonging to $S_j$ as predicted by the $i$th basic classifier, and $w_{iS_j}$ is the weight factor, set to the average ROC score of the $i$th basic classifier on superfamily $S_j$.
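A minimal Python sketch of a weighted voting scheme of this kind is shown below. It assumes the combined score for a superfamily is the plain weighted sum of the basic classifiers' supporting degrees, without further normalization, which may differ from the authors' exact formulation. The function names, the superfamily labels `S1` and `S2`, and all numbers are hypothetical.

```python
def weighted_vote(support, weights):
    """Combine supporting degrees per superfamily as a weighted sum.

    support[clf][sf]: supporting degree of classifier `clf` for superfamily `sf`.
    weights[clf][sf]: weight of `clf` on `sf` (e.g. its average ROC score).
    """
    combined = {}
    for clf, per_superfamily in support.items():
        for sf, degree in per_superfamily.items():
            combined[sf] = combined.get(sf, 0.0) + weights[clf][sf] * degree
    return combined


def predict_superfamily(support, weights):
    """Return the superfamily with the highest combined score."""
    combined = weighted_vote(support, weights)
    return max(combined, key=combined.get)


# Hypothetical supporting degrees and weights for one query protein.
support = {
    "SVM-Kmer": {"S1": 0.9, "S2": 0.2},
    "SVM-ACC": {"S1": 0.1, "S2": 0.8},
    "SVM-SC-PseAAC": {"S1": 0.6, "S2": 0.5},
}
weights = {
    "SVM-Kmer": {"S1": 0.9, "S2": 0.9},
    "SVM-ACC": {"S1": 0.8, "S2": 0.8},
    "SVM-SC-PseAAC": {"S1": 0.9, "S2": 0.9},
}

print(predict_superfamily(support, weights))  # S1
```

In this toy case SVM-ACC favors `S2`, but its lower weight and the agreement of the other two classifiers lead the ensemble to predict `S1`.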

2.6. Performance Metrics for Evaluation

We evaluated the performance of different methods by using receiver operating characteristic (ROC) scores [55, 74–78]. Because the test sets contain more negative than positive samples, simply measuring error rates does not give a good evaluation of performance. In this case, the ROC score is a better way to evaluate the trade-off between specificity and sensitivity. The ROC score is the normalized area under a curve that plots true positives as a function of false positives for varying classification thresholds. A ROC score of 1 indicates perfect separation of positive samples from negative samples, whereas a ROC score of 0.5 indicates random separation. The ROC50 score is the area under the ROC curve up to the first 50 false positives.
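The ROC and ROC50 scores described above can be computed from a ranked list of predictions, as in the following sketch. It assumes labels are 1 for positive and 0 for negative samples, normalizes the truncated area by the number of false positives considered times the number of positives, and does not treat tied scores specially. The function name `roc_n_score` and the toy data are illustrative.

```python
def roc_n_score(scores, labels, max_fp=None):
    """Normalized area under the ROC curve up to the first `max_fp` false positives.

    With max_fp=None the full ROC score is returned; max_fp=50 gives ROC50.
    """
    if max_fp is not None and max_fp < 1:
        raise ValueError("max_fp must be a positive integer")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    n_pos = sum(1 for _, label in ranked if label == 1)
    n_neg = len(ranked) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    limit = n_neg if max_fp is None else min(max_fp, n_neg)
    true_positives = 0
    false_positives = 0
    area = 0
    for _, label in ranked:
        if label == 1:
            true_positives += 1
        else:
            false_positives += 1
            # Each false positive adds a column whose height is the number
            # of true positives ranked above it.
            area += true_positives
            if false_positives == limit:
                break
    return area / (limit * n_pos)


scores = [0.9, 0.8, 0.7, 0.6]
labels = [1, 0, 1, 0]
print(roc_n_score(scores, labels))            # 0.75
print(roc_n_score(scores, labels, max_fp=1))  # 0.5
```

Because ROC50 only looks at the top of the ranking, it rewards methods that place true homologs ahead of the first few false positives, which is why a method can rank differently under ROC and ROC50.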

3. Results and Discussion

3.1. The Influence of Parameters on the Predictive Performance of Basic Predictors

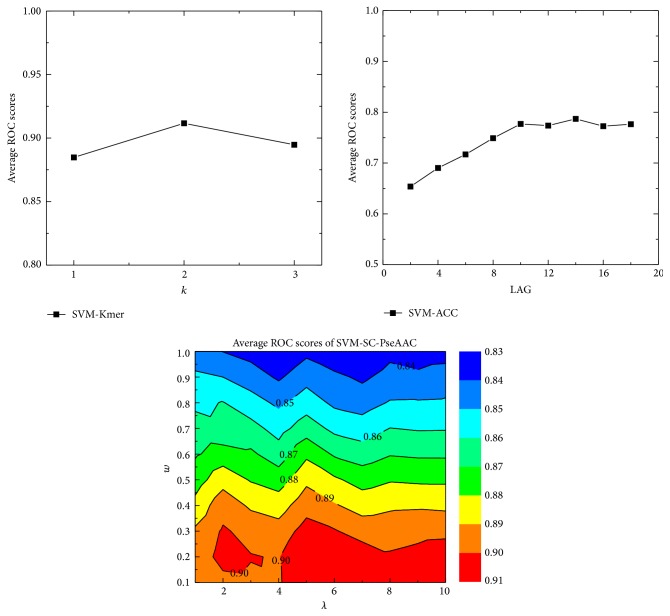

There are several parameters for each basic predictor that should be optimized. For more information on these parameters, please refer to Materials and Methods. In this study, we optimized them by grid search. The influence of these parameters on the performance is shown in Figure 2, and the optimized parameter values and their results are shown in Table 1, from which we can see that SVM-Kmer achieved the best performance, followed by SVM-SC-PseAAC.

Figure 2.

The performance of the three basic predictors with all parameter combinations. A k value of 2 and a LAG value of 14 were used in SVM-Kmer and SVM-ACC, respectively. SVM-SC-PseAAC achieves the best performance with λ = 5 and w = 0.2. Parameter w is the main factor affecting performance, whereas parameter λ has only a minor impact.

Table 1.

The performance of three basic predictors with optimal parameters on benchmark dataset.

| Methods | Optimal parameters | ROC[a] | ROC50[a] |
|---|---|---|---|
| SVM-Kmer | k = 2 | 0.912 | 0.785 |
| SVM-ACC | LAG = 14 | 0.787 | 0.483 |
| SVM-SC-PseAAC | λ = 5, w = 0.2 | 0.911 | 0.657 |

[a]Average ROC and ROC50 scores.

3.2. Performance of Ensemble Classifier Based on Various Feature Combinations with Weighted Voting Strategy

As discussed above, predictors based on different feature sets showed different performance. To further improve the performance of protein remote homology detection, we employed an ensemble learning approach to combine the predictors. The performance of the ensemble classifier with various feature combinations is shown in Table 2. The highest ROC score (0.943, with ROC50 = 0.744) was achieved by combining all three basic predictors, exceeding the ROC score of each basic predictor (Table 1). Its ROC50 score exceeds those of SVM-ACC and SVM-SC-PseAAC, although Table 1 reports a higher ROC50 score (0.785) for SVM-Kmer alone. The improvement in ROC score is not surprising: the three basic predictors are based on different features, and their predictive results are complementary, so combining them with an ensemble learning method can improve performance.

Table 2.

Performance of ensemble classifier combining various predictors with weighted voting. The best performance was achieved by combining SVM-Kmer, SVM-ACC, and SVM-SC-PseAAC. The symbol ⊕ denotes the weighted voting operator.

| Ensemble methods with superfamily-level strategy | ROC[a] | ROC50[a] |
|---|---|---|
| SVM-Kmer ⊕ SVM-ACC | 0.929 | 0.767 |
| SVM-Kmer ⊕ SVM-SC-PseAAC | 0.937 | 0.715 |
| SVM-ACC ⊕ SVM-SC-PseAAC | 0.922 | 0.691 |
| SVM-Kmer ⊕ SVM-ACC ⊕ SVM-SC-PseAAC | 0.943 | 0.744 |

[a]Average ROC and ROC50 scores.

3.3. Feature Analysis for Discriminative Power

To further study the discriminative power of features in the three basic predictors, we employed a feature extraction method, called principal component analysis (PCA) [79], to calculate the discriminative weight vectors in the feature space. The process of PCA for extracting significant features can be found in [32, 80].

For each basic predictor, the top 10 most discriminative features in the feature space were shown in Table 3, from which we can see that, for the Kmer features, six of the most discriminative features contain the amino acid M, indicating the importance of this amino acid. For ACC features, the hydrophobicity (h 1) has important impact on the feature discrimination. For SC-PseAAC features, the amino acid M has the most discriminative power and features with small λ value are more important. Both ACC and SC-PseAAC features with strong discriminative power incorporate the sequence-order effects. These three kinds of features consider both sequence composition and sequence order effects. Therefore, SVM-Ensemble can further improve the performance by combining them in an ensemble learning approach.

Table 3.

Top 10 most discriminative features in three feature spaces. These features describe the characteristics of proteins from various perspectives.

| Rank | Kmer | ACC | SC-PseAAC |
|---|---|---|---|
| 1 | MH | CCh1h2,lag=9 | M |
| 2 | WC | ACh1,lag=5 | Y |
| 3 | IM | CCh1h2,lag=8 | τ h2,λ=1 |
| 4 | MC | ACh1,lag=4 | τ h2,λ=4 |
| 5 | MY | CCh1h2,lag=7 | H |
| 6 | VM | ACh1,lag=14 | τ h1,λ=4 |
| 7 | YW | ACm,lag=13 | G |
| 8 | YR | CCh1m,lag=13 | τ h1,λ=1 |
| 9 | HW | CCh1h2,lag=10 | τ m,λ=1 |
| 10 | MQ | ACh1,lag=8 | τ m,λ=3 |

Note: the subscript indexes in ACC features and SC-PseAAC features mean hydrophobicity (h 1), hydrophilicity (h 2), and mass (m).

3.4. Comparison with Other Related Predictors

Some state-of-the-art methods for protein remote homology detection were selected to compare with the proposed SVM-Ensemble. SVM-Pairwise [5] represents each protein as a vector of pairwise similarities to all proteins in the training set. The kernel of SVM-LA [26] measures the similarity between a pair of proteins by taking into account all the optimal local alignment scores with gaps between all possible subsequences. Mismatch kernel [28] is calculated based on occurrences of (k, m)-patterns in the data. Monomer-dist [47] constructs the feature vectors by the occurrences of short oligomers. SVM-DR is based on the distance-pairs; PseAACIndex is based on the pseudo amino acid composition (PseAAC). disPseAAC constructs the feature vectors by combining the occurrences of amino acid pairs within Chou's pseudo amino acid composition.

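Experimental results of the various methods on the SCOP 1.53 benchmark dataset are shown in Table 4. SVM-Ensemble achieved the highest average ROC score (0.943), which supports combining different predictors via an ensemble learning approach. In terms of ROC50, Table 4 lists a higher score for SVM-Top-n-gram-combine-LSA (0.767) than for SVM-Ensemble (0.744).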

Table 4.

Performance comparison of different methods on the benchmark dataset.

| Methods | ROC[a] | ROC50[a] | Source |
|---|---|---|---|
| SVM-Ensemble | 0.943 | 0.744 | This study |
| SVM-Pairwise | 0.896 | 0.464 | Liao and Noble, 2003 [5] |
| SVM-LA (β = 0.5) | 0.925 | 0.649 | Saigo et al., 2004 [26] |
| Mismatch | 0.925 | 0.649 | Leslie et al., 2004 [28] |
| Monomer-dist | 0.919 | 0.508 | Lingner and Meinicke, 2006 [47] |
| SVM-WCM | 0.904 | 0.445 | Lingner and Meinicke, 2008 [51] |
| SVM-Ngram-LSA | 0.859 | 0.628 | Dong et al., 2006 [48] |
| SVM-Pattern-LSA | 0.879 | 0.626 | Dong et al., 2006 [48] |
| SVM-Motif-LSA | 0.859 | 0.628 | Dong et al., 2006 [48] |
| SVM-Top-n-gram-combine-LSA | 0.939 | 0.767 | Liu et al., 2008 [4] |
| PseAACIndex (λ = 5) | 0.880 | 0.620 | Liu et al., 2013 [31, 52] |
| PseAACIndex-Profile (λ = 5) | 0.922 | 0.712 | Liu et al., 2013 [31, 52] |
| SVM-DR | 0.919 | 0.715 | Liu et al., 2014 [50, 53–55] |
| disPseAAC | 0.922 | 0.721 | Liu et al., 2015 [2, 32, 44, 45, 56–59] |

[a]Average ROC and ROC50 scores.

4. Conclusions

In this study, we have proposed an ensemble classifier for protein remote homology detection, called SVM-Ensemble. It was constructed by combining three basic classifiers with a weighted voting strategy. Experimental results on a widely used benchmark dataset showed that our method achieved an average ROC score of 0.943, which is higher than those of the three basic predictors (0.912 for SVM-Kmer, 0.787 for SVM-ACC, and 0.911 for SVM-SC-PseAAC). Compared with other state-of-the-art methods, SVM-Ensemble achieved the highest ROC score. Furthermore, by analyzing the discriminative power of these features, some interesting patterns were discovered.

In future work, more effective features and machine learning techniques will be explored. Evolutionary computation [81], ensemble learning techniques, and neural-like computing models [82–87] could also be applied to other bioinformatics problems, such as gene-disease relationship prediction [52, 88–92] and DNA motif identification [59, 93].

Supplementary Material

The amino acids physicochemical indices and corresponding values for hydrophobicity, hydrophilicity and mass.

Acknowledgments

This work was supported by Development Program of China (863 Program) [2015AA015405], the National Natural Science Foundation of China (nos. 61300112, 61573118, and 61272383), the Scientific Research Foundation for the Returned Overseas Chinese Scholars, State Education Ministry, the Natural Science Foundation of Guangdong Province (2014A030313695), and Shenzhen Foundational Research Funding (Grant no. JCYJ20150626110425228).

Competing Interests

The authors declare that they have no competing interests.

Authors' Contributions

Bingquan Liu conceived of the study, designed the experiments, and participated in drafting the paper and performing the statistical analysis. Junjie Chen participated in coding the experiments and drafting the paper. Dong Huang participated in performing the statistical analysis. All authors read and approved the final paper.

References

- 1.Bork P., Koonin E. V. Predicting functions from protein sequences—where are the bottlenecks? Nature Genetics. 1998;18(4):313–318. doi: 10.1038/ng0498-313. [DOI] [PubMed] [Google Scholar]

- 2.Liu B., Chen J., Wang X. Application of learning to rank to protein remote homology detection. Bioinformatics. 2015;31(21):3492–3498. doi: 10.1093/bioinformatics/btv413. [DOI] [PubMed] [Google Scholar]

- 3.Rost B. Twilight zone of protein sequence alignments. Protein Engineering. 1999;12(2):85–94. doi: 10.1093/protein/12.2.85. [DOI] [PubMed] [Google Scholar]

- 4.Liu B., Wang X., Lin L., Dong Q., Wang X. A discriminative method for protein remote homology detection and fold recognition combining Top-n-grams and latent semantic analysis. BMC Bioinformatics. 2008;9, article 510 doi: 10.1186/1471-2105-9-510. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Liao L., Noble W. S. Combining pairwise sequence similarity and support vector machines for detecting remote protein evolutionary and structural relationships. Journal of Computational Biology. 2003;10(6):857–868. doi: 10.1089/106652703322756113. [DOI] [PubMed] [Google Scholar]

- 6.Needleman S. B., Wunsch C. D. A general method applicable to the search for similarities in the amino acid sequence of two proteins. Journal of Molecular Biology. 1970;48(3):443–453. doi: 10.1016/0022-2836(70)90057-4. [DOI] [PubMed] [Google Scholar]

- 7.Zou Q., Hu Q., Guo M., Wang G. HAlign: fast multiple similar DNA/RNA sequence alignment based on the centre star strategy. Bioinformatics. 2015;31(15):2475–2481. doi: 10.1093/bioinformatics/btv177. [DOI] [PubMed] [Google Scholar]

- 8.Smith T. F., Waterman M. S. Identification of common molecular subsequences. Journal of Molecular Biology. 1981;147(1):195–197. doi: 10.1016/0022-2836(81)90087-5. [DOI] [PubMed] [Google Scholar]

- 9.Altschul S. F., Gish W., Miller W., Myers E. W., Lipman D. J. Basic local alignment search tool. Journal of Molecular Biology. 1990;215(3):403–410. doi: 10.1006/jmbi.1990.9999. [DOI] [PubMed] [Google Scholar]

- 10.Pearson W. R. Searching protein sequence libraries: comparison of the sensitivity and selectivity of the Smith-Waterman and FASTA algorithms. Genomics. 1991;11(3):635–650. doi: 10.1016/0888-7543(91)90071-l. [DOI] [PubMed] [Google Scholar]

- 11.Altschul S. F., Madden T. L., Schäffer A. A., et al. Gapped BLAST and PSI-BLAST: a new generation of protein database search programs. Nucleic Acids Research. 1997;25(17):3389–3402. doi: 10.1093/nar/25.17.3389. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Liu B., Wang X., Lin L., Dong Q., Wang X. Exploiting three kinds of interface propensities to identify protein binding sites. Computational Biology and Chemistry. 2009;33(4):303–311. doi: 10.1016/j.compbiolchem.2009.07.001. [DOI] [PubMed] [Google Scholar]

- 13.Remmert M., Biegert A., Hauser A., Söding J. HHblits: lightning-fast iterative protein sequence searching by HMM-HMM alignment. Nature Methods. 2012;9(2):173–175. doi: 10.1038/nmeth.1818. [DOI] [PubMed] [Google Scholar]

- 14.Eddy S. R. Profile hidden Markov models. Bioinformatics. 1998;14(9):755–763. doi: 10.1093/bioinformatics/14.9.755. [DOI] [PubMed] [Google Scholar]

- 15.Karplus K., Barrett C., Hughey R. Hidden Markov models for detecting remote protein homologies. Bioinformatics. 1998;14(10):846–856. doi: 10.1093/bioinformatics/14.10.846. [DOI] [PubMed] [Google Scholar]

- 16.Ding H., Lin H., Chen W., et al. Prediction of protein structural classes based on feature selection technique. Interdisciplinary Sciences: Computational Life Sciences. 2014;6(3):235–240. doi: 10.1007/s12539-013-0205-6. [DOI] [PubMed] [Google Scholar]

- 17.Ding H., Liu L., Guo F.-B., Huang J., Lin H. Identify golgi protein types with modified mahalanobis discriminant algorithm and pseudo amino acid composition. Protein and Peptide Letters. 2011;18(1):58–63. doi: 10.2174/092986611794328708. [DOI] [PubMed] [Google Scholar]

- 18.Lin H., Liu W. X., He J., Liu X. H., Ding H., Chen W. Predicting cancerlectins by the optimal g-gap dipeptides. Scientific Reports. 2015;5 doi: 10.1038/srep16964.16964 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Liu B., Wang X., Chen Q., Dong Q., Lan X. Using amino acid physicochemical distance transformation for fast protein remote homology detection. PLoS ONE. 2012;7(9) doi: 10.1371/journal.pone.0046633.e46633 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Zhao X., Zou Q., Liu B., Liu X. Exploratory predicting protein folding model with random forest and hybrid features. Current Proteomics. 2014;11(4):289–299. [Google Scholar]

- 21.Song L., Li D., Zeng X., Wu Y., Guo L., Zou Q. nDNA-prot: Identification of DNA-binding proteins based on unbalanced classification. BMC Bioinformatics. 2014;15, article 298 doi: 10.1186/1471-2105-15-298. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Lin C., Zou Y., Qin J., et al. Hierarchical classification of protein folds using a novel ensemble classifier. PLoS ONE. 2013;8(2) doi: 10.1371/journal.pone.0056499.e56499 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Wei L., Liao M., Gao X., Zou Q. An improved protein structural classes prediction method by incorporating both sequence and structure information. IEEE Transactions on Nanobioscience. 2015;14(4):339–349. doi: 10.1109/TNB.2014.2352454. [DOI] [PubMed] [Google Scholar]

- 24.Wei L., Liao M., Gao X., Zou Q. Enhanced protein fold prediction method through a novel feature extraction technique. IEEE Transactions on Nanobioscience. 2015;14(6):649–659. doi: 10.1109/tnb.2015.2450233. [DOI] [PubMed] [Google Scholar]

- 25.Xu J., Zou Q., Xu R., Wang X., Chen Q. Using distances between Top-n-gram and residue pairs for protein remote homology detection. BMC Bioinformatics. 2014;15(supplement 2):p. S3. doi: 10.1186/1471-2105-15-S2-S3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Saigo H., Vert J.-P., Ueda N., Akutsu T. Protein homology detection using string alignment kernels. Bioinformatics. 2004;20(11):1682–1689. doi: 10.1093/bioinformatics/bth141.

- 27. Ben-Hur A., Brutlag D. Remote homology detection: a motif based approach. Bioinformatics. 2003;19(supplement 1):i26–i33. doi: 10.1093/bioinformatics/btg1002.

- 28. Leslie C. S., Eskin E., Cohen A., Weston J., Noble W. S. Mismatch string kernels for discriminative protein classification. Bioinformatics. 2004;20(4):467–476. doi: 10.1093/bioinformatics/btg431.

- 29. Rangwala H., Karypis G. Profile-based direct kernels for remote homology detection and fold recognition. Bioinformatics. 2005;21(23):4239–4247. doi: 10.1093/bioinformatics/bti687.

- 30. Kuang R., Ie E., Wang K., et al. Profile-based string kernels for remote homology detection and motif extraction. Journal of Bioinformatics and Computational Biology. 2005;3(3):527–550. doi: 10.1142/S021972000500120X.

- 31. Liu B., Wang X., Zou Q., Dong Q., Chen Q. Protein remote homology detection by combining Chou's pseudo amino acid composition and profile-based protein representation. Molecular Informatics. 2013;32(9-10):775–782. doi: 10.1002/minf.201300084.

- 32. Liu B., Chen J., Wang X. Protein remote homology detection by combining Chou's distance-pair pseudo amino acid composition and principal component analysis. Molecular Genetics and Genomics. 2015;290(5):1919–1931. doi: 10.1007/s00438-015-1044-4.

- 33. Zhang Y., Liu B., Dong Q., Jin V. X. An improved profile-level domain linker propensity index for protein domain boundary prediction. Protein and Peptide Letters. 2011;18(1):7–16. doi: 10.2174/092986611794328717.

- 34. Dietterich T. G. Ensemble methods in machine learning. In: Multiple Classifier Systems. Berlin, Germany: Springer; 2000. pp. 1–15.

- 35. Lin C., Chen W., Qiu C., Wu Y., Krishnan S., Zou Q. LibD3C: ensemble classifiers with a clustering and dynamic selection strategy. Neurocomputing. 2014;123:424–435. doi: 10.1016/j.neucom.2013.08.004.

- 36. Deng L., Guan J., Dong Q., Zhou S. Prediction of protein-protein interaction sites using an ensemble method. BMC Bioinformatics. 2009;10(1, article 426). doi: 10.1186/1471-2105-10-426.

- 37. Shen H.-B., Chou K.-C. Ensemble classifier for protein fold pattern recognition. Bioinformatics. 2006;22(14):1717–1722. doi: 10.1093/bioinformatics/btl170.

- 38. Zou Q., Guo J., Ju Y., Wu M., Zeng X., Hong Z. Improving tRNAscan-SE annotation results via ensemble classifiers. Molecular Informatics. 2015;34(11-12):761–770. doi: 10.1002/minf.201500031.

- 39. Liu B., Liu F., Fang L., Wang X., Chou K.-C. repRNA: a web server for generating various feature vectors of RNA sequences. Molecular Genetics and Genomics. 2016;291(1):473–481. doi: 10.1007/s00438-015-1078-7.

- 40. Wang C. Y., Hu L., Guo M. Z., Liu X. Y., Zou Q. imDC: an ensemble learning method for imbalanced classification with miRNA data. Genetics and Molecular Research. 2015;14(1):123–133. doi: 10.4238/2015.january.15.15.

- 41. Wei L., Liao M., Gao Y., Ji R., He Z., Zou Q. Improved and promising identification of human microRNAs by incorporating a high-quality negative set. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2014;11(1):192–201. doi: 10.1109/tcbb.2013.146.

- 42. Chen J., Wang X., Liu B. iMiRNA-SSF: improving the identification of MicroRNA precursors by combining negative sets with different distributions. Scientific Reports. 2016;6:19062. doi: 10.1038/srep19062.

- 43. Liu B., Fang L., Liu F., Wang X., Chou K.-C. iMiRNA-PseDPC: microRNA precursor identification with a pseudo distance-pair composition approach. Journal of Biomolecular Structure and Dynamics. 2016;34(1):220–232. doi: 10.1080/07391102.2015.1014422.

- 44. Liu B., Fang L., Wang S., Wang X., Li H., Chou K.-C. Identification of microRNA precursor with the degenerate K-tuple or Kmer strategy. Journal of Theoretical Biology. 2015;385:153–159. doi: 10.1016/j.jtbi.2015.08.025.

- 45. Liu B., Wang S., Wang X. DNA binding protein identification by combining pseudo amino acid composition and profile-based protein representation. Scientific Reports. 2015;5:15479. doi: 10.1038/srep15479.

- 46. Li L., Zhang Y., Zou L., et al. An ensemble classifier for eukaryotic protein subcellular location prediction using gene ontology categories and amino acid hydrophobicity. PLoS ONE. 2012;7(1):e31057. doi: 10.1371/journal.pone.0031057.

- 47. Lingner T., Meinicke P. Remote homology detection based on oligomer distances. Bioinformatics. 2006;22(18):2224–2231. doi: 10.1093/bioinformatics/btl376.

- 48. Dong Q.-W., Wang X.-L., Lin L. Application of latent semantic analysis to protein remote homology detection. Bioinformatics. 2006;22(3):285–290. doi: 10.1093/bioinformatics/bti801.

- 49. Liao L., Noble W. S. Combining pairwise sequence similarity and support vector machines for remote protein homology detection. Proceedings of the 6th Annual International Conference on Computational Biology (RECOMB '02); April 2002; Washington, DC, USA. pp. 225–232.

- 50. Liu B., Zhang D., Xu R., et al. Combining evolutionary information extracted from frequency profiles with sequence-based kernels for protein remote homology detection. Bioinformatics. 2014;30(4):472–479. doi: 10.1093/bioinformatics/btt709.

- 51. Lingner T., Meinicke P. Word correlation matrices for protein sequence analysis and remote homology detection. BMC Bioinformatics. 2008;9(1, article 259):13. doi: 10.1186/1471-2105-9-259.

- 52. Liu B., Yi J., Sv A., et al. QChIPat: a quantitative method to identify distinct binding patterns for two biological ChIP-seq samples in different experimental conditions. BMC Genomics. 2013;14(supplement 8, article S3). doi: 10.1186/1471-2164-14-S8-S3.

- 53. Liu B., Liu B., Liu F., Wang X. Protein binding site prediction by combining hidden Markov support vector machine and profile-based propensities. The Scientific World Journal. 2014;2014, article 464093, 6 pages. doi: 10.1155/2014/464093.

- 54. Liu W.-X., Deng E.-Z., Chen W., Lin H. Identifying the subfamilies of voltage-gated potassium channels using feature selection technique. International Journal of Molecular Sciences. 2014;15(7):12940–12951. doi: 10.3390/ijms150712940.

- 55. Liu B., Xu J., Lan X., et al. iDNA-Prot|dis: identifying DNA-binding proteins by incorporating amino acid distance-pairs and reduced alphabet profile into the general pseudo amino acid composition. PLoS ONE. 2014;9(9):e106691. doi: 10.1371/journal.pone.0106691.

- 56. Liu B., Liu F., Wang X., Chen J., Fang L., Chou K.-C. Pse-in-One: a web server for generating various modes of pseudo components of DNA, RNA, and protein sequences. Nucleic Acids Research. 2015;43(1):W65–W71. doi: 10.1093/nar/gkv458.

- 57. Liu B., Xu J., Fan S., Xu R., Zhou J., Wang X. PseDNA-Pro: DNA-binding protein identification by combining Chou's PseAAC and physicochemical distance transformation. Molecular Informatics. 2015;34(1):8–17. doi: 10.1002/minf.201400025.

- 58. Liu B., Fang L., Chen J., Liu F., Wang X. MiRNA-dis: MicroRNA precursor identification based on distance structure status pairs. Molecular BioSystems. 2015;11(4):1194–1204. doi: 10.1039/c5mb00050e.

- 59. Liu B., Liu F., Fang L., Wang X., Chou K.-C. repDNA: a Python package to generate various modes of feature vectors for DNA sequences by incorporating user-defined physicochemical properties and sequence-order effects. Bioinformatics. 2015;31(8):1307–1309. doi: 10.1093/bioinformatics/btu820.

- 60. Cao D.-S., Xu Q.-S., Liang Y.-Z. Propy: a tool to generate various modes of Chou's PseAAC. Bioinformatics. 2013;29(7):960–962. doi: 10.1093/bioinformatics/btt072.

- 61. Dong Q., Zhou S., Guan J. A new taxonomy-based protein fold recognition approach based on autocross-covariance transformation. Bioinformatics. 2009;25(20):2655–2662. doi: 10.1093/bioinformatics/btp500.

- 62. Liu X., Zhao L., Dong Q. Protein remote homology detection based on auto-cross covariance transformation. Computers in Biology and Medicine. 2011;41(8):640–647. doi: 10.1016/j.compbiomed.2011.05.015.

- 63. Kawashima S., Kanehisa M. AAindex: amino acid index database. Nucleic Acids Research. 2000;28(1):p. 374. doi: 10.1093/nar/28.1.374.

- 64. Chou K.-C. Using amphiphilic pseudo amino acid composition to predict enzyme subfamily classes. Bioinformatics. 2005;21(1):10–19. doi: 10.1093/bioinformatics/bth466.

- 65. Chang C.-C., Lin C.-J. LIBSVM: a library for support vector machines. ACM Transactions on Intelligent Systems and Technology. 2011;2(3, article 27). doi: 10.1145/1961189.1961199.

- 66. Fang L., Liu F., Wang X., Chen J., Chou K.-C., Liu B. Identification of real microRNA precursors with a pseudo structure status composition approach. PLoS ONE. 2015;10(3):e0121501. doi: 10.1371/journal.pone.0121501.

- 67. Ding H., Deng E.-Z., Yuan L.-F., et al. iCTX-Type: a sequence-based predictor for identifying the types of conotoxins in targeting ion channels. BioMed Research International. 2014;2014, article 286419, 10 pages. doi: 10.1155/2014/286419.

- 68. Ding H., Guo S.-H., Deng E.-Z., et al. Prediction of Golgi-resident protein types by using feature selection technique. Chemometrics and Intelligent Laboratory Systems. 2013;124:9–13. doi: 10.1016/j.chemolab.2013.03.005.

- 69. Guo S.-H., Deng E.-Z., Xu L.-Q., et al. iNuc-PseKNC: a sequence-based predictor for predicting nucleosome positioning in genomes with pseudo k-tuple nucleotide composition. Bioinformatics. 2014;30(11):1522–1529. doi: 10.1093/bioinformatics/btu083.

- 70. Lin H., Deng E.-Z., Ding H., Chen W., Chou K.-C. iPro54-PseKNC: a sequence-based predictor for identifying sigma-54 promoters in prokaryote with pseudo k-tuple nucleotide composition. Nucleic Acids Research. 2014;42(21):12961–12972. doi: 10.1093/nar/gku1019.

- 71. Lin H., Ding H., Guo F.-B., Zhang A.-Y., Huang J. Predicting subcellular localization of mycobacterial proteins by using Chou's pseudo amino acid composition. Protein and Peptide Letters. 2008;15(7):739–744. doi: 10.2174/092986608785133681.

- 72. Yuan L.-F., Ding C., Guo S.-H., Ding H., Chen W., Lin H. Prediction of the types of ion channel-targeted conotoxins based on radial basis function network. Toxicology in Vitro. 2013;27(2):852–856. doi: 10.1016/j.tiv.2012.12.024.

- 73. Liu B., Fang L., Long R., Lan X., Chou K.-C. iEnhancer-2L: a two-layer predictor for identifying enhancers and their strength by pseudo k-tuple nucleotide composition. Bioinformatics. 2016;32(3):362–369. doi: 10.1093/bioinformatics/btv604.

- 74. Fawcett T. An introduction to ROC analysis. Pattern Recognition Letters. 2006;27(8):861–874. doi: 10.1016/j.patrec.2005.10.010.

- 75. Ding H., Feng P.-M., Chen W., Lin H. Identification of bacteriophage virion proteins by the ANOVA feature selection and analysis. Molecular BioSystems. 2014;10(8):2229–2235. doi: 10.1039/c4mb00316k.

- 76. Ding H., Luo L., Lin H. Prediction of cell wall lytic enzymes using Chou's amphiphilic pseudo amino acid composition. Protein and Peptide Letters. 2009;16(4):351–355. doi: 10.2174/092986609787848045.

- 77. Liu B., Wang X., Lin L., Tang B., Dong Q., Wang X. Prediction of protein binding sites in protein structures using hidden Markov support vector machine. BMC Bioinformatics. 2009;10, article 381. doi: 10.1186/1471-2105-10-381.

- 78. Liu B., Fang L. Identification of microRNA precursor based on gapped n-tuple structure status composition kernel. Computational Biology and Chemistry. 2016. doi: 10.1016/j.compbiolchem.2016.02.010.

- 79. Wold S., Esbensen K., Geladi P. Principal component analysis. Chemometrics and Intelligent Laboratory Systems. 1987;2(1–3):37–52. doi: 10.1016/0169-7439(87)80084-9.

- 80. Du Q.-S., Jiang Z.-Q., He W.-Z., Li D.-P., Chou K.-C. Amino acid principal component analysis (AAPCA) and its applications in protein structural class prediction. Journal of Biomolecular Structure and Dynamics. 2006;23(6):635–640. doi: 10.1080/07391102.2006.10507088.

- 81. Zhang X., Tian Y., Jin Y. A knee point driven evolutionary algorithm for many-objective optimization. IEEE Transactions on Evolutionary Computation. 2015;19(6):761–776. doi: 10.1109/tevc.2014.2378512.

- 82. Song T., Pan L. On the universality and non-universality of spiking neural P systems with rules on synapses. IEEE Transactions on NanoBioscience. 2015;14(8):960–966. doi: 10.1109/TNB.2015.2503603.

- 83. Zeng X., Zhang X., Song T., Pan L. Spiking neural P systems with thresholds. Neural Computation. 2014;26(7):1340–1361. doi: 10.1162/NECO_a_00605.

- 84. Chen X., Pérez-Jiménez M. J., Valencia-Cabrera L., Wang B., Zeng X. Computing with viruses. Theoretical Computer Science. 2016;623:146–159. doi: 10.1016/j.tcs.2015.12.006.

- 85. Zeng X., Pan L., Pérez-Jiménez M. J. Small universal simple spiking neural P systems with weights. Science China Information Sciences. 2014;57(9):1–11. doi: 10.1007/s11432-013-4848-z.

- 86. Zhang X., Pan L., Păun A. On the universality of axon P systems. IEEE Transactions on Neural Networks and Learning Systems. 2015;26(11):2816–2829. doi: 10.1109/tnnls.2015.2396940.

- 87. Zhang X., Liu Y., Luo B., Pan L. Computational power of tissue P systems for generating control languages. Information Sciences. 2014;278:285–297. doi: 10.1016/j.ins.2014.03.053.

- 88. Zeng X., Liao Y., Liu Y., Zou Q. Prediction and validation of disease genes using HeteSim Scores. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2016. doi: 10.1109/tcbb.2016.2520947.

- 89. Zou Q., Li J., Hong Q., et al. Prediction of microRNA-disease associations based on social network analysis methods. BioMed Research International. 2015;2015, article 810514, 9 pages. doi: 10.1155/2015/810514.

- 90. Zeng X., Zhang X., Zou Q. Integrative approaches for predicting microRNA function and prioritizing disease-related microRNA using biological interaction networks. Briefings in Bioinformatics. 2016;17(2):193–203. doi: 10.1093/bib/bbv033.

- 91. Zou Q., Li J., Song L., Zeng X., Wang G. Similarity computation strategies in the microRNA-disease network: a survey. Briefings in Functional Genomics. 2016;15(1):55–64. doi: 10.1093/bfgp/elv024.

- 92. Chen H.-Z., Ouseph M. M., Li J., et al. Canonical and atypical E2Fs regulate the mammalian endocycle. Nature Cell Biology. 2012;14(11):1192–1202. doi: 10.1038/ncb2595.

- 93. Wang X., Miao Y., Cheng M. Finding motifs in DNA sequences using low-dispersion sequences. Journal of Computational Biology. 2014;21(4):320–329. doi: 10.1089/cmb.2013.0054.

Supplementary Materials

The amino acid physicochemical indices and corresponding values for hydrophobicity, hydrophilicity, and mass.