Summary

Respondent-driven sampling (RDS) is a widely used method for sampling from hard-to-reach human populations, especially populations at higher risk for HIV. Data are collected through peer referral over social networks. RDS has proven practical for data collection in many difficult settings. However, inference from RDS data requires many strong assumptions because the sampling design is partially beyond the control of the researcher and partially unobserved. We introduce diagnostic tools for most of these assumptions and apply them in 12 high-risk populations. These diagnostics empower researchers to better understand their data and encourage future statistical research on RDS.

Keywords: diagnostics, exploratory data analysis, hard-to-reach populations, HIV/AIDS, link-tracing sampling, non-ignorable design, respondent-driven sampling, social networks, survey sampling

1. Introduction

Many problems in social science, public health, and public policy require detailed information about “hidden” or “hard-to-reach” populations. For example, efforts to understand and control the HIV/AIDS epidemic require information about disease prevalence and risk behaviors in populations at higher risk of HIV exposure: female sex workers (FSW), illicit drug users (DU), and men who have sex with men (MSM) (Magnani et al., 2005). Respondent-driven sampling (RDS) is a recently introduced link-tracing network sampling technique for collecting such information (Heckathorn, 1997). Because of the pressing need for information about populations at higher risk and the weaknesses of alternative approaches, RDS has already been used in hundreds of HIV-related studies in dozens of countries (Malekinejad et al., 2008; Montealegre et al., 2013) and has been adopted by leading public health organizations, such as the US Centers for Disease Control and Prevention (CDC) (Barbosa Júnior et al., 2011; Lansky et al., 2007; Wejnert et al., 2012) and the World Health Organization (Johnston et al., 2013).

Collectively, these previous studies demonstrate that RDS is able to generate large samples in a wide variety of hard-to-reach populations. However, the quality of estimates derived from these data has been challenged in a number of recent papers (Bengtsson and Thorson, 2010; Burt and Thiede, 2012; Gile and Handcock, 2010; Goel and Salganik, 2010; Heimer, 2005; McCreesh et al., 2012; Mills et al., 2012; Mouw and Verdery, 2012; Nesterko and Blitzstein; Poon et al., 2009; Rudolph et al., 2013; Salganik, 2012; Scott, 2008; White et al., 2012; Yamanis et al., 2013). A major source of concern is that inference from RDS data requires many strong assumptions, which are widely believed to be untrue and yet are rarely examined in practice. The widespread use of RDS for important public health problems, combined with its reliance on untested assumptions, creates a pressing need for exploratory and diagnostic techniques for RDS data.

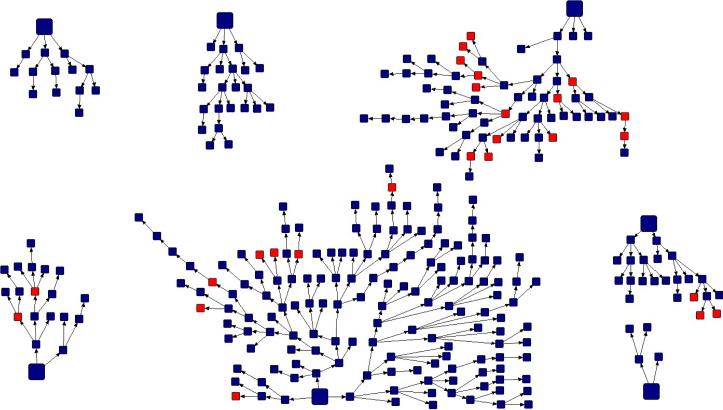

RDS data collection begins when researchers select, in an ad-hoc manner, typically 5 to 10 members of the target population to serve as “seeds.” Each seed is interviewed and provided a fixed number of coupons (usually three) that they use to recruit other members of the target population. These recruits are in turn provided with coupons that they use to recruit others. In this way, the sample can grow through many waves, resulting in recruitment trees like those shown in Fig. 1 (created using NetDraw, Borgatti (2002)). Respondents are encouraged to participate and recruit through the use of financial and other incentives (Heckathorn, 1997). The fact that the majority of participants are recruited by other respondents and not by researchers makes RDS a successful method of data collection. However, the same feature also inherently complicates inference because it requires researchers to make assumptions about the recruitment process and the structure of the social network connecting the target population.

Fig. 1.

Recruitment trees from the sample of men who have sex with men in Higuey. Shading indicates respondents who self-identify as “heterosexual.”

There are three interrelated approaches to addressing the assumptions underlying inference from RDS data. First, researchers can identify assumptions whose violations significantly impact estimates, either analytically or through computer simulation. Second, researchers can develop new estimators that are less sensitive to these assumptions. Third, researchers can develop methods to detect the violation of assumptions in practice. This third approach is the primary focus of this paper, but we hope that our results will help motivate and inform research of the first two types.

This paper makes two main contributions. First, we review and develop diagnostics for most assumptions underlying statistical inference from RDS data. One reason for the relative dearth of RDS diagnostics is that the same conditions that complicate inference from RDS data also complicate formal diagnostic tests. In particular, the unknown dependence between recruiters and recruits renders most standard tests invalid. Therefore, when possible, we develop diagnostic approaches that are intuitive, graphical, and not reliant on statistical testing. Further, when possible, we emphasize approaches that can be used while data collection is occurring so that some problems can be investigated and potentially resolved while researchers are still in the field. In order to provide these features, our diagnostics frequently take advantage of three specific features of RDS studies that are not typically utilized: information about the time sequences of responses, contact with respondents who visit the study site twice, and the multiple seeds used to begin the sampling process. The second main contribution of our paper is to deploy these diagnostics in 12 RDS studies conducted in accordance with the national strategic HIV surveillance plan of the Dominican Republic. We believe that these case studies—which include samples of female sex workers (FSW), drug users (DU), and men who have sex with men (MSM) in four cities—are reasonably reflective of the way that RDS is used in many countries. Therefore, we believe that our empirical results have broad applicability for RDS practitioners and researchers who wish to develop improved methods of RDS data collection and inference.

The remainder of the paper is organized as follows: in Section 2 we briefly review the assumptions underlying RDS estimation and in Section 3 we describe the data from 12 studies in the Dominican Republic that will be used throughout the paper. Sections 4 through 8 present diagnostics, including extensions of previous approaches as well as wholly new approaches. In Section 9 we discuss the results and conclude with suggestions for future research. We also include online Supporting Information with additional results and approaches.

2. Assumptions of RDS

Estimation from RDS data requires many assumptions about the sampling process, the underlying population, and respondent behavior. These assumptions are outlined in Table 1 and described fully in Gile and Handcock (2010). In particular, these assumptions are required by the estimator proposed by Volz and Heckathorn (2008). Other available estimators require similar assumptions, especially pertaining to respondent behavior.

Table 1.

Assumptions of the Volz-Heckathorn Estimator. Assumptions in bold-italics are considered in this paper, with section numbers given. A version of this table appeared in Gile and Handcock (2010).

| | Network Structure Assumptions | Sampling Assumptions |
|---|---|---|
| Random Walk Model | Network size large (N >> n) | ***With-replacement sampling (4)***; Single non-branching chain |
| Remove Seed Dependence | ***Homophily weak enough (5)***; ***Bottlenecks limited (5)***; Connected graph | ***Enough sample waves (5)*** |
| Respondent Behavior | ***All ties reciprocated (6)*** | ***Degree accurately measured (7)***; ***Random referral (8)*** |

Each row of this table includes assumptions according to their roles in allowing for estimation. The first row (“Random Walk Model”) corresponds to assumptions required to allow the sampling process to be approximated by a random walk on the nodes. Critically, the random walk model requires with-replacement sampling, while the true sampling process is known to be without replacement. We, therefore, first consider diagnostics designed to detect impacts of the without-replacement nature of the sampling (Sec. 4).

The second row (“Remove Seed Dependence”) contains assumptions required to reduce the influence of the initial sample—the seeds—on the final estimates. Because the initial sample is usually a convenience sample, RDS is intended to be carried out for many sampling waves through a well-connected population in order to minimize the impact of the seed selection process. Therefore, we consider diagnostics designed to detect seed bias that may remain due to an insufficient number of sample waves (Sec. 5).

The final row of the table (“Respondent Behavior”) contains assumptions related to respondent behavior. In RDS, unlike in traditional survey sampling, respondents’ decision-making plays a significant role in the sampling process, and, therefore, assumptions about these decisions are needed for estimating inclusion probabilities. In particular, we consider the assumptions that all network ties are reciprocated, that degree (also referred to as number of contacts or personal network size) is accurately reported, and that recruitment is random among a respondent’s contacts in the target population (Secs. 6, 7, and 8).

3. Case study: 12 sites in the Dominican Republic

We employ these diagnostics in a case study of 12 parallel RDS studies conducted in the spring of 2008 using standard RDS methods (Johnston, 2008, 2013; Johnston et al., 2013). As part of the national strategic HIV surveillance plan of the Dominican Republic, data were collected from female sex workers (FSW), drug users (DU), and men who are gay, transsexual, or have sex with men (MSM) in four cities: Santo Domingo (SD), Santiago (SA), Barahona (BA), and Higuey (HI). These studies are typical of the way RDS is used in national HIV surveillance around the world. Eligible persons were 15 years or older and lived in the province under study. Eligible FSW were females who exchanged sex for money in the previous six months, DU were females or males who used illicit drugs in the previous three months, and MSM were males who had anal or oral sexual relations with another man in the previous six months. Seeds were purposively selected through local non-governmental organizations or through the use of peer outreach workers. Each city had a fixed interview site where respondents enrolled in the survey.

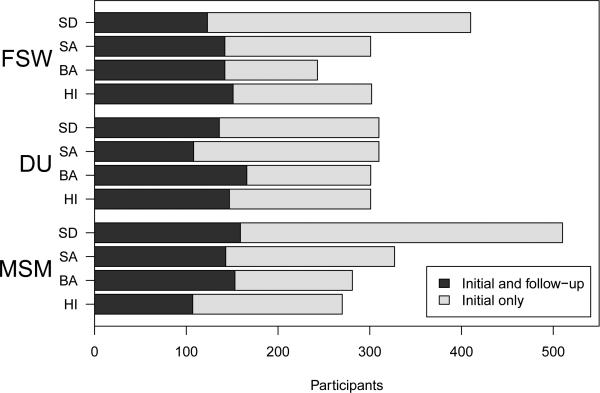

During the initial visit, consenting respondents were screened for eligibility, completed a face-to-face interview, received HIV pre-test counseling, and provided blood samples that were tested for HIV, hepatitis B and C, and syphilis. Before leaving the study site, respondents were encouraged to set an appointment to return two weeks later for a follow-up visit, during which they would receive HIV post-test counseling, collect infection test results and, if necessary, be referred to a nearby health facility for care and treatment. During the follow-up visit respondents also completed a follow-up questionnaire and received secondary incentives for any peers they recruited; respondents were compensated the equivalent of $9.00 USD for completing the initial survey and $3.00 USD for each successful recruitment (up to a maximum of three). To ensure confidentiality, respondents’ coupons, questionnaires, and biological tests were identified using a unique study identification number; no personal identifying information was collected. The studies ranged in sample size from 243 to 510 with a total sample size of 3,866 people, of whom 1,677 (43%) completed a follow-up survey (see Fig. 2). In the online Supporting Information we compare the characteristics of respondents who did and did not complete the follow-up survey. We found that follow-up respondents tended to recruit more often and participate earlier in the study than follow-up non-respondents. For more information, see Sec. S6. Where relevant to our conclusions, the impacts of these results are noted.

Fig. 2.

Sample sizes from the 12 studies. In total, 3,866 people participated, of which 1,677 (43%) completed a follow-up survey.

We analyze data from the 12 studies using the estimator introduced in Volz and Heckathorn (2008) because it has been used in most of the recent evaluations of RDS methodology (Gile and Handcock, 2010; Goel and Salganik, 2010; Lu et al., 2012; McCreesh et al., 2012; Nesterko and Blitzstein; Tomas and Gile, 2011; Wejnert, 2009; Yamanis et al., 2013). The estimator of the proportion of the population with a specific trait (e.g., HIV infection) is:

$$\hat{p}_{VH} = \frac{\sum_{j \in I} d_j^{-1}}{\sum_{j \in S} d_j^{-1}} \qquad (1)$$

where $S$ is the full sample, $I$ is the set of infected sample members, and $d_j$ is the self-reported “degree,” or number of contacts, of respondent $j$. Equation (1), sometimes called the RDS II estimator or the Volz-Heckathorn (VH) estimator, is a generalized ratio estimator of a population mean with inverse-probability weighting: each respondent’s sampling probability is assumed to be proportional to degree, so each respondent’s weight is proportional to $1/d_j$.
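As a minimal sketch of Equation (1), the Python function below computes the VH estimate from a list of self-reported degrees and a parallel list of trait indicators. The function name `vh_estimate` and its inputs are hypothetical illustrations, not the interface of any RDS software package, and the sketch assumes every reported degree is positive.

```python
from typing import Sequence


def vh_estimate(degrees: Sequence[float], has_trait: Sequence[bool]) -> float:
    """Volz-Heckathorn (RDS II) estimate of a trait proportion, Equation (1).

    Each respondent j receives weight 1/d_j, because inclusion probability is
    assumed proportional to the self-reported degree d_j.
    """
    if len(degrees) != len(has_trait):
        raise ValueError("degrees and has_trait must have the same length")
    if len(degrees) == 0:
        raise ValueError("the sample must be non-empty")
    if any(d <= 0 for d in degrees):
        raise ValueError("all reported degrees must be positive")
    # Denominator: sum of inverse degrees over the full sample S.
    total_weight = sum(1.0 / d for d in degrees)
    # Numerator: sum of inverse degrees over sample members with the trait (I).
    trait_weight = sum(1.0 / d for d, t in zip(degrees, has_trait) if t)
    return trait_weight / total_weight


# One low-degree respondent with the trait, two high-degree respondents without it.
print(vh_estimate([2, 4, 4], [True, False, False]))  # 0.5
```

The unweighted sample proportion in this toy example is 1/3; down-weighting the two high-degree respondents raises the estimate to 0.5.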

4. With-replacement Sampling

Many estimators for RDS data are based on the assumption that the sample can be treated as a with-replacement random walk on the social network of the target population. In particular, respondents are assumed to choose freely which of their contacts to recruit into the study. In practice, sampling is without replacement; respondents are not allowed to recruit people who have already participated. This restriction may lead to inaccurate estimates of sampling probabilities and biased estimates, as described in Gile (2011).

In a very large, highly connected population, respondents may be able to pass coupons much as they would in a with-replacement sample, and in such cases the with-replacement approximation is probably adequate. In contrast, indications that earlier respondents influenced subsequent sampling decisions would suggest a potentially problematic violation of the with-replacement sampling assumption. Previous samples may affect sampling in two ways: locally, when members of a small, well-connected subgroup are sampled at a high rate, influencing the future referral choices of other subgroup members; and globally, when the target population as a whole is sampled at a high enough rate that later samples are influenced by earlier samples. In this section, we examine the with-replacement sampling assumption in several ways. First, we use three types of evidence to detect local and global effects of previous samples. Next, we assess the impact of global without-replacement sampling on estimates. Finally, we compare the methods and conclude with recommendations.

4.1. Failure to Attain Sample Size

One apparently straightforward indication of global finite population effects on sampling is a failure to attain the study's target sample size because participants are unable to recruit additional members of the target population. Three of our studies (FSW-BA, MSM-BA, and MSM-HI) failed to reach their target sample sizes, suggesting that they may have exhausted the available portions of their respective populations. As a diagnostic, however, this indicator has three primary limitations. First, it cannot be assessed until the study is complete. Second, while failure to attain sample size is an indication that the available population has been exhausted, attaining the target sample size does not show that such effects are absent. Finally, the available population could be dramatically smaller than the target population due to insufficient respondent incentives, inadequate network connections in the population, or negative perceptions of the study in the target community. Despite these limitations, failure to attain the target sample size could be an indication that the target population is nearly fully sampled, which would suggest that estimates treating the sample as a small fraction of the population are not appropriate.

4.2. Failed Recruitment Attempts

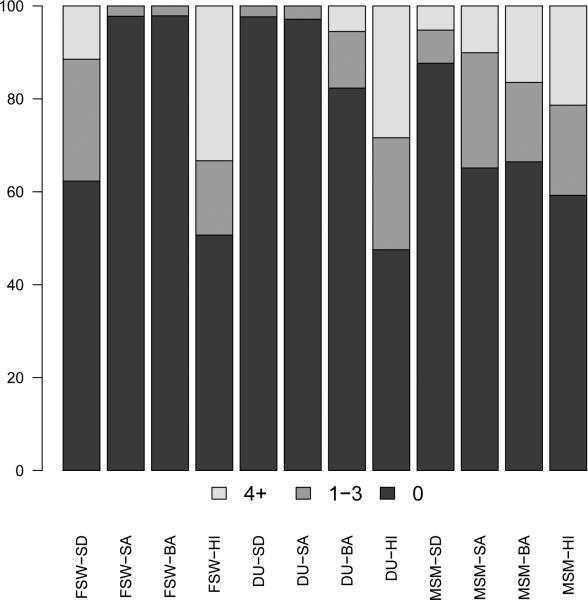

If the sampling process were not influenced by the previous sample, each respondent could distribute coupons without considering whether contacts had already participated in the study. Therefore, respondents who returned for a follow-up survey were asked

- (A) How many people did you try to give a coupon but they had already participated in the study?

Responses to this question are summarized in Fig. 3. Rates of failed coupon distribution varied widely by site. Failures were most common among drug users in Higuey, where over half of follow-up respondents reported a failed attempt to distribute a coupon, and least common among DU in Santo Domingo and Santiago and among FSW in Santiago and Barahona, where 3% or fewer respondents reported failed coupon distributions. In six of the 12 sites, at least 25% of respondents participating in follow-up interviews indicated that they had attempted to give coupons to at least one person who had already participated in the study. Note that these rates of failed recruitment attempts may be underestimates, as follow-up respondents had significantly more successful recruitments than those who did not respond to follow-up (see Section S6). Where present, these reported failures provide direct evidence that respondents’ recruiting decisions were affected by earlier parts of the sample. Where absent, they can indicate either a lack of such influence or accurate knowledge of which alters have already participated in the study.
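The tabulation behind Fig. 3 and the 25% flag used later in Table 2 can be sketched as follows. This is an illustrative sketch, not the authors' code: the function names are hypothetical, and it assumes that answers to Question (A) are non-negative integers from follow-up respondents only, with missing answers removed beforehand.

```python
from typing import Dict, Sequence


def failed_attempt_summary(failed: Sequence[int]) -> Dict[str, float]:
    """Percent of follow-up respondents reporting 0, 1-3, or 4+ failed attempts."""
    n = len(failed)
    if n == 0:
        raise ValueError("no follow-up responses")
    return {
        "0": 100.0 * sum(1 for f in failed if f == 0) / n,
        "1-3": 100.0 * sum(1 for f in failed if 1 <= f <= 3) / n,
        "4+": 100.0 * sum(1 for f in failed if f >= 4) / n,
    }


def flag_failed_attempts(failed: Sequence[int], threshold: float = 25.0) -> bool:
    """Flag a site if at least `threshold` percent report one or more failures."""
    if len(failed) == 0:
        raise ValueError("no follow-up responses")
    share = 100.0 * sum(1 for f in failed if f > 0) / len(failed)
    return share >= threshold


# Hypothetical answers to Question (A) from eight follow-up respondents.
answers = [0, 0, 0, 0, 0, 0, 2, 5]
print(failed_attempt_summary(answers))  # {'0': 75.0, '1-3': 12.5, '4+': 12.5}
print(flag_failed_attempts(answers))    # True
```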

Fig. 3.

Percent of respondents reporting 0, 1-3, or 4+ failed recruitment attempts. In 6 sites, at least 25% of respondents reported at least one failed recruitment attempt.

4.3. Contacts Participated

Respondents’ coupon-passing choices could also be influenced by the contacts they know who have already participated in the study. To assess this possibility, respondents were asked the following question (see also McCreesh et al. (2012)):

- (B) How many other MSM/DU/FSW do you know that have already participated in this study, without counting the person who gave a coupon to you?

Across all 12 datasets, only 30% of respondents answered “0,” and the mean proportion of alters reported to have already participated was 36%. This result suggests that previously sampled population members may indeed constrain the alters available to receive coupons. Note that about 9% of respondents (347 out of 3,866) reported that more of their contacts had already participated than the total number of contacts they reported knowing (their degree, collected in Question F; see Sec. 7). Throughout this section, we truncate responses at one less than the reported number of people known.

If the rate of known participants is uniform across the sampling process, it may be partially explained by measurement error or low-level local clustering with minimal connection to global finite population effects. An increase in this rate over the course of the sample, however, suggests that the population is becoming increasingly depleted, so that previously sampled alters constrain the choices of later respondents more than those of earlier respondents. To look for a time trend, we fit a simple linear model relating sample order to the proportion of alters who had already participated; results using survey time or more complex models were similar. Because formal testing is likely invalid in this setting, we use a conservative flagging criterion and flag any case with a positive trend over time. The proportion of alters already sampled increases with sample order in eight of the 12 populations (DU-SD, DU-SA, FSW-SA, FSW-BA, FSW-HI, MSM-SA, MSM-BA, MSM-HI), suggesting potential finite population effects. In the Supporting Information, we consider two approaches to visualizing these effects.
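A minimal sketch of this flagging rule is shown below. It truncates each answer to Question (B) at one less than the reported degree, fits an ordinary least-squares slope of the resulting proportion on sample order, and flags any positive slope. The function names are hypothetical, and dividing the truncated count by the full reported degree is an assumption made for illustration; the text does not specify the denominator.

```python
from typing import List, Sequence


def proportion_known_participated(known: Sequence[int], degree: Sequence[int]) -> List[float]:
    """Proportion of each respondent's alters reported as prior participants.

    Answers are truncated at one less than the reported degree. Using the full
    degree as the denominator is an illustrative assumption.
    """
    props = []
    for k, d in zip(known, degree):
        if d < 1:
            raise ValueError("reported degree must be at least 1")
        props.append(min(k, d - 1) / d)
    return props


def ols_slope(x: Sequence[float], y: Sequence[float]) -> float:
    """Least-squares slope b of the simple linear model y = a + b * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must take at least two distinct values")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return sxy / sxx


def flag_increasing_known(order: Sequence[int], known: Sequence[int], degree: Sequence[int]) -> bool:
    """Conservative flag: any positive trend in the proportion over sample order."""
    return ols_slope(order, proportion_known_participated(known, degree)) > 0


# Hypothetical respondents in sample order; the last answer exceeds degree - 1.
order = [1, 2, 3, 4, 5]
known = [0, 1, 1, 3, 12]
degree = [10, 10, 8, 10, 10]
print(flag_increasing_known(order, known, degree))  # True
```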

4.4. Assessing Effects on Estimates

The results in Sections 4.1 to 4.3 focused on detecting the impact of previous samples on the sampling process. Next, we turn to detecting global effects on estimates using an approach that requires knowing or estimating the size of the target population. If the target population is very large compared to the sample size, then global exhaustion is unlikely to be of concern. If the target population is small, however, then a bias may be induced, but the magnitude of estimator bias will depend on the relative degree distributions of the groups of interest (such as infected and uninfected people): the greater the systematic difference in degrees, the greater the potential bias in estimates (Gile, 2011; Gile and Handcock, 2010). Such biases can be mitigated by using estimators designed to account for finite population effects, such as the estimator based on successive sampling (SS) introduced in Gile (2011) and implemented in the R (R Core Team, 2012) packages RDS (Handcock et al., 2009) and RDS Analyst (Handcock et al., 2013). Note that the SS estimator differs from the VH estimator only in that the former uses finite-population adjusted sampling weights. Therefore, although both may be affected by other sampling anomalies, the effects of other factors will be nearly identical. Thus, a comparison between the results of the SS estimator and the VH estimator can serve as a sensitivity analysis to global population exhaustion. If the two estimators are nearly identical for reasonable estimates of the population size, then global exhaustion is likely not inducing bias into estimates.

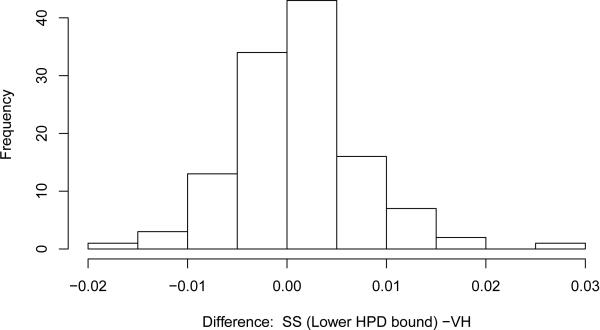

To undertake this sensitivity analysis, we estimated the size of our target populations using two different approaches, described in greater detail in the Supporting Information: 1) drawing on a meta-analysis of related studies and 2) the approach introduced in Handcock et al. (2012) and implemented in the package size (Handcock, 2011), which uses information in the degree sequence of the RDS sample. Using these estimated population sizes, we then compared the SS and VH estimators in all 12 target populations for all characteristics described in Section S2 (120 trait-site combinations). In most cases, the two estimates were within 0.01 of each other (see Fig. 4, as well as Fig. S4); Table S1 lists all traits with differences larger than 0.01. Overall, therefore, this analysis suggests that there were no large finite population effects on the VH estimator in these studies. In the Supporting Information we also illustrate Population Size Sensitivity Plots that can be made for individual study sites.

Fig. 4.

Histogram of difference between Successive Sampling and Volz-Heckathorn estimators, over many traits. Successive Sampling estimates based on a “worst case” small approximated population size, based on the lower bound of the Highest Posterior Density interval generated by the population size estimation method in Handcock et al. (2012).

4.5. Comparison of Approaches and Current Recommendations

Table 2 summarizes all of the sampling process indicators across study sites. Failure to attain the desired sample size (FSW-BA, MSM-BA, MSM-HI) is an indication that the earlier samples impacted the later sampling decisions. Consistent with this result, the MSM sites in Barahona and Higuey showed evidence of without-replacement sampling effects on all three of these proposed indicators. Nearly all sites, however, had evidence of finite population effects on at least one indicator. Together, these indicators show that without-replacement effects on sampling were frequent and that reasonable diagnostic approaches for detecting them can produce different results. These differences between indicators can be the result either of random variation or of different indicators reflecting different features of the underlying recruitment process. Note that a fourth sampling process indicator, a decreasing degree sequence in the sample, is described in the Supporting Information. Contrary to our expectations based on Gile (2011), direct evaluation of the trend in degree over time provided little evidence that population exhaustion affected sampling.

Table 2.

Summary of indicators of violations of the with-replacement sampling assumption. First row indicates sites which were not able to attain the intended sample sizes (Sec. 4.1). The second indicates at least 25% of follow-up respondents reporting they attempted to give coupons to at least one person who had already participated in the study (Sec. 4.2). The third indicates a positive coefficient of sample order in the linear regression model for probability an alter is in the study (Sec. 4.3).

| FSW |

DU |

MSM |

||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SD | SA | BA | HI | SD | SA | BA | HI | SD | SA | BA | HI | |

| Failed to Attain Sample Size | X | X | X | |||||||||

| Failed Attempts > 25% | X | X | X | X | X | X | ||||||

| Increasing Participants Known | X | X | X | X | X | X | X | X | X | |||

The most effective diagnostic of global effects on estimates is the comparison of the VH and SS estimators. Unlike the other indicators, this indicator measures the direct effect on the estimate. It is possible that global population exhaustion influences sampling (as indicated by one of the earlier indicators) but does not induce bias in the estimator because of other features of the network, such as similar degree distributions between the two subpopulations of interest. This is the case, for example, among FSW in Barahona and MSM in Higuey, which do not exhibit worrisome effects on estimates despite failing to reach their intended sample sizes. Among MSM in Barahona, however, the large sample fraction may well be influencing estimates. One challenge in implementing this diagnostic is that the SS estimator requires an estimate of the size of the target population, and these size estimates can be difficult to construct (Bernard et al., 2010; Handcock et al., 2012; Johnston et al., 2013; Salganik et al., 2011; UNAIDS, 2010).

In future studies, questions about failed recruitments and numbers of known participants (Questions A and B) can be helpful in diagnosing local effects and should be collected. Further, when diagnostics suggest large global impacts of previous samples, researchers should use estimators that do not depend on the with-replacement sampling assumption (e.g., Gile (2011); Gile and Handcock (2011)), or at a minimum use these estimators for sensitivity analysis as in Section 4.4. Methods for inference in the presence of local impacts of previous samples are not yet available.

5. Assessing seed dependence

In RDS studies the seeds are not selected from a sampling frame; instead, they are an ad hoc convenience sample. In general, the seed selection mechanism has not concerned RDS researchers because of asymptotic results suggesting that the choice of seeds does not affect the final estimate (Heckathorn, 1997, 2002; Salganik and Heckathorn, 2004). However, these asymptotic results hold only as the sample size approaches infinity, and in practice samples may not be large enough to justify this approximation. Therefore, a natural question is whether a given sample is large enough to overcome the potential biases introduced during seed selection.

There are some apparent similarities between this problem and monitoring the convergence of Markov chain Monte Carlo (MCMC) simulations. Unfortunately, standard MCMC methods cannot be directly applied here. First, single-chain methods, such as Raftery and Lewis (1992), are not applicable because the multiple seeds create multiple trees. Second, multiple-chain methods, such as Gelman and Rubin (1992), are not directly applicable because RDS trees are of different lengths. Finally, these standard approaches typically rely on chains far longer than those available in RDS data; the longest tree in these studies had 16 waves.

The diagnostic currently used to assess whether an RDS sample is no longer affected by the seeds compares the length of the longest tree to the estimated number of waves required for the sampling process to approximate its stationary distribution under a first-order Markov chain model on group membership (Heckathorn et al., 2002). This approach is now standard in the field (Johnston et al., 2008; Malekinejad et al., 2008; Montealegre et al., 2013); however, it is not ideal for four reasons: 1) it is typically interpreted as allowing sampling to stop once the supposed stationary distribution is attained, rather than requiring that most of the sample be collected from the stationary distribution, 2) it is based on a different model for the sampling process than is assumed in most RDS estimators, 3) it is focused on the sample composition and not the estimates, and 4) it has generated a great deal of confusion (see, for example, Heimer (2005); Ramirez-Valles et al. (2005a,b); Wejnert and Heckathorn (2008)). Here we propose a series of graphical approaches that help to assess whether there are lingering effects of the choice of seeds on the estimates.
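To make the standard diagnostic concrete, the sketch below projects the group composition of successive waves under a first-order Markov chain on group membership and reports the first wave whose composition lies within a tolerance of the stationary distribution. The transition matrix, the seed composition, and the 0.02 tolerance are hypothetical illustration values, and this is not necessarily the exact computation performed by any particular RDS software. Note that, as criticized above, the result concerns sample composition rather than any estimate.

```python
import numpy as np


def stationary_distribution(P: np.ndarray) -> np.ndarray:
    """Solve pi P = pi with sum(pi) = 1 for a row-stochastic matrix P."""
    k = P.shape[0]
    # Stack the balance equations (P^T - I) pi = 0 with a normalization row.
    A = np.vstack([P.T - np.eye(k), np.ones((1, k))])
    b = np.concatenate([np.zeros(k), [1.0]])
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pi


def waves_to_equilibrium(P, start, tol=0.02, max_waves=200):
    """First wave whose composition is within `tol` of equilibrium, else None."""
    P = np.asarray(P, dtype=float)
    dist = np.asarray(start, dtype=float)
    pi = stationary_distribution(P)
    for wave in range(max_waves + 1):
        if np.max(np.abs(dist - pi)) < tol:
            return wave
        dist = dist @ P  # expected group composition of the next wave
    return None


# Hypothetical two-group chain with strong homophily; all seeds in group 0.
P = [[0.9, 0.1], [0.1, 0.9]]
print(waves_to_equilibrium(P, [1.0, 0.0]))  # 15
```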

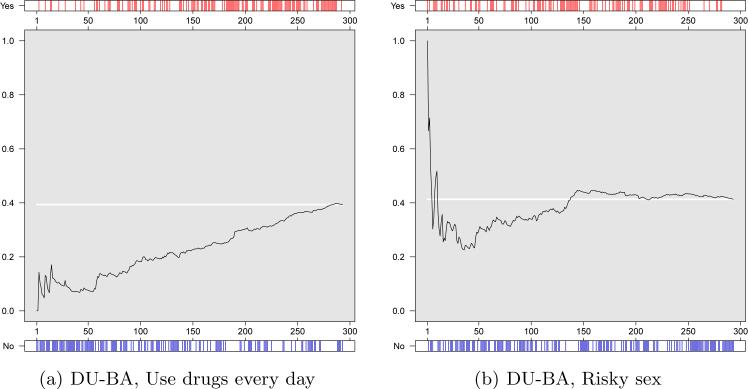

First, we suggest that researchers examine the dynamics of the RDS estimate. Roughly, the more the estimate changes as we collect more data, the more concern we should have that the choice of seeds is still influencing the estimate (see also Bengtsson et al. (2012) for a similar approach). More concretely, let $\hat{p}_t$ be the estimated trait prevalence using the first $t$ observations (excluding all seeds). To assess the possible lingering impact of seed selection, we plot $\hat{p}_1, \hat{p}_2, \ldots, \hat{p}_n$ and check whether the estimates stabilize. Fig. 5(a) shows a Convergence Plot for the proportion of DU in Barahona who report using drugs every day. The estimate increases over time, suggesting that the seeds and early respondents were atypical in their drug use frequency. Because the estimate is cumulative, this steady and sharp increase actually under-represents the differences between the early and late parts of the sample. For example, based on the first 50 respondents we would estimate that 8% of the population use drugs every day, but based on the final 50 respondents we would estimate that 67% do. Compare these dynamics with Fig. 5(b), which plots the estimated proportion of DU in Barahona who reported engaging in unprotected sex in the last 30 days. This estimate appears to be stable for the second half of the sample. Both of these estimates arise from the same sample and, therefore, highlight the fact that convergence is a property of an estimate, not of a sample.
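The sequence plotted in a Convergence Plot can be computed with running sums of inverse-degree weights, as in this minimal sketch. It assumes the VH estimator of Equation (1) and that the inputs are non-seed respondents listed in sample order; the function name is hypothetical.

```python
from typing import List, Sequence


def convergence_sequence(degrees: Sequence[float], has_trait: Sequence[bool]) -> List[float]:
    """Running VH estimates p_hat_1, ..., p_hat_n in sample order (seeds excluded).

    p_hat_t uses only the first t respondents, so the last value equals the
    full-sample estimate p_hat_n.
    """
    estimates = []
    trait_weight = 0.0
    total_weight = 0.0
    for d, t in zip(degrees, has_trait):
        w = 1.0 / d  # inverse-degree weight, as in Equation (1)
        total_weight += w
        if t:
            trait_weight += w
        estimates.append(trait_weight / total_weight)
    return estimates


print(convergence_sequence([1, 1, 2, 2], [False, True, True, False]))
# [0.0, 0.5, 0.6, 0.5]
```

The returned list can be drawn against $t = 1, \ldots, n$ with any plotting library, for example `matplotlib.pyplot.plot`. Applying the same weighting to a slice of respondents (such as the first or final 50) gives window estimates like those quoted above.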

Fig. 5. Convergence Plots showing $\hat{p}_1, \hat{p}_2, \ldots, \hat{p}_n$.

The headers and footers plot the sample observations with and without the trait. The white line shows the estimate based on the complete sample ($\hat{p}_n$).

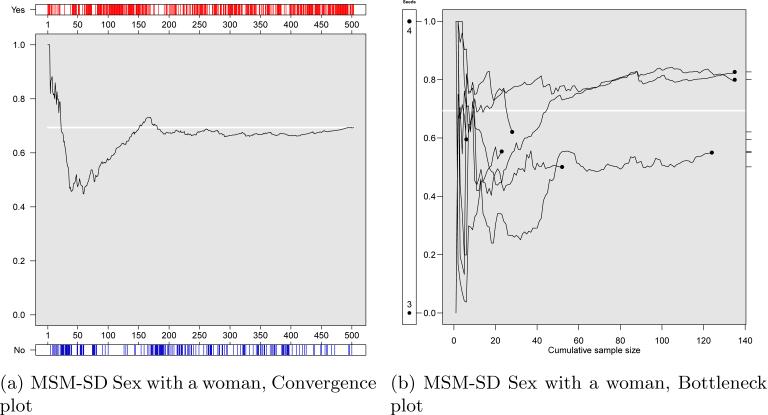

Convergence Plots, however, can mask important differences between seeds. Therefore, we also recommend creating Bottleneck Plots that show the dynamics of the estimates from each seed individually. For example, the Convergence Plot for the estimated proportion of MSM in Santo Domingo who have had sex with a woman in the last 6 months appears to be stable (Fig. 6(a)). Despite this aggregate stability, however, there are large differences between the data from the different seeds (Fig. 6(b)). More generally, large differences in estimates between seeds suggest bottlenecks in the underlying social network, which can substantially increase the effect of the seeds on the estimates (Goel and Salganik, 2009).
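A Bottleneck Plot applies the same running estimate separately within each seed's recruitment tree. The sketch below assumes each respondent is tagged with the seed at the root of their tree and that respondents are listed in overall sample order. The function names are hypothetical, and `final_spread`, the range of the final per-seed estimates, is offered only as an illustrative summary, not a criterion from the text.

```python
from typing import Dict, Hashable, List, Sequence


def bottleneck_sequences(
    seed_ids: Sequence[Hashable],
    degrees: Sequence[float],
    has_trait: Sequence[bool],
) -> Dict[Hashable, List[float]]:
    """Running VH estimate within each seed's recruitment tree."""
    trait_weight: Dict[Hashable, float] = {}
    total_weight: Dict[Hashable, float] = {}
    sequences: Dict[Hashable, List[float]] = {}
    for seed, d, t in zip(seed_ids, degrees, has_trait):
        w = 1.0 / d  # inverse-degree weight
        total_weight[seed] = total_weight.get(seed, 0.0) + w
        trait_weight[seed] = trait_weight.get(seed, 0.0) + (w if t else 0.0)
        sequences.setdefault(seed, []).append(trait_weight[seed] / total_weight[seed])
    return sequences


def final_spread(sequences: Dict[Hashable, List[float]]) -> float:
    """Range of the final per-seed estimates; large values hint at bottlenecks."""
    finals = [seq[-1] for seq in sequences.values()]
    return max(finals) - min(finals)


seqs = bottleneck_sequences(["a", "b", "a", "b"], [2, 2, 4, 4], [True, False, True, False])
print(seqs)                # {'a': [1.0, 1.0], 'b': [0.0, 0.0]}
print(final_spread(seqs))  # 1.0
```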

Fig. 6.

Convergence Plot and Bottleneck Plot for the proportion of MSM in Santo Domingo who have had sex with a woman in the last six months. The Convergence Plot masks important differences between the seeds that are revealed by the Bottleneck Plot. In both plots, the white line shows the estimate based on the complete sample ($\hat{p}_n$).

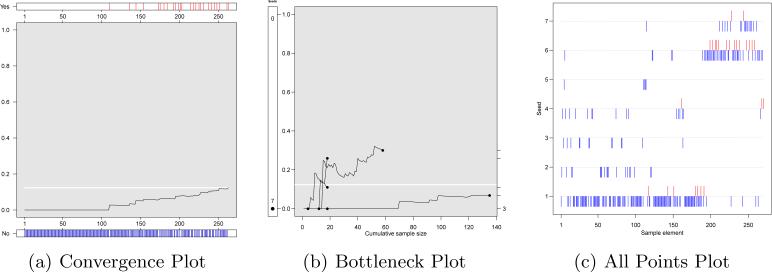
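A Bottleneck Plot needs the same running estimate, computed separately within each seed's recruitment tree. The minimal sketch below assumes that each respondent record carries a recruiter identifier (hypothetical field names), that records are in interview order so every recruiter precedes his or her recruits, and that the estimator is the inverse-degree-weighted Volz-Heckathorn form.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Respondent:
    rid: str                  # hypothetical respondent identifier
    recruiter: Optional[str]  # rid of the recruiter; None marks a seed
    has_trait: bool
    degree: float             # self-reported degree, assumed positive


def bottleneck_series(sample: List[Respondent]) -> Dict[str, List[float]]:
    """Running Volz-Heckathorn estimate within each seed's tree (seeds excluded).

    Assumes `sample` is in interview order, so every recruiter appears before
    his or her recruits.
    """
    seed_of: Dict[str, str] = {}
    totals: Dict[str, List[float]] = {}  # seed -> [trait weight, total weight]
    series: Dict[str, List[float]] = {}
    for r in sample:
        if r.recruiter is None:
            seed_of[r.rid] = r.rid
            totals[r.rid] = [0.0, 0.0]
            series[r.rid] = []
            continue
        seed = seed_of[r.recruiter]  # inherit the recruiter's seed
        seed_of[r.rid] = seed
        weight = 1.0 / r.degree
        totals[seed][1] += weight
        if r.has_trait:
            totals[seed][0] += weight
        series[seed].append(totals[seed][0] / totals[seed][1])
    return series
```

Drawing one line per key of the returned dictionary gives the Bottleneck Plot; trees whose lines settle at very different levels are the warning sign described above.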

Bottleneck Plots, while showing differences between seeds, obscure the fact that the trees can start at different times and grow at different speeds. Therefore, we suggest an additional plot, called the All Points Plot, which plots the unweighted characteristics of respondents by seed and sample order (Fig. 7(c)). To demonstrate how these three plots can work together, Fig. 7 plots the estimated proportion of MSM in Higuey who self-identify as heterosexual, a key “bridge group” because they can spread infection between the high-risk MSM group and the larger heterosexual population. The Convergence Plot (Fig. 7(a)) shows that there were no self-identified heterosexuals in the first 100 observations (header), but over time the sample started to reach people who identified as heterosexual. Since the estimate has not clearly stabilized, we should be concerned that the final estimate of $\hat{p} = 0.12$ might be unduly influenced by the choice of seeds. Further, the Bottleneck Plot shows that the self-identified heterosexuals were reached only within certain trees, suggesting a possible problem with bottlenecks (Fig. 7(b)). Finally, the All Points Plot (Fig. 7(c)) reveals that self-identified heterosexuals were unusual in that they both appeared in the sample late and appeared in only a small number of trees, a fact that is difficult to infer from the previous two plots.

Fig. 7.

Three diagnostic plots for estimates of MSM in Higuey who self-identify as heterosexual. The Convergence Plot (a) shows that data collected late in the sample differ from data collected early in the sample. The Bottleneck Plot (b) shows that the chains explored different subgroups, suggesting a problem with bottlenecks. The All Points Plot (c) shows that the self-identified heterosexuals (represented by up-ticks in the plot) were unusual in that they both arrived in the sample late and arrived from a small number of chains, a fact that is difficult to infer from the previous two plots.
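The All Points Plot needs no estimator at all: each non-seed respondent is placed by sample position and by seed, with the raw (unweighted) trait value. A minimal sketch, assuming records of the hypothetical form `(respondent id, recruiter id or None for a seed, has_trait)` in interview order:

```python
from typing import Dict, List, Optional, Tuple

# Each record: (respondent id, recruiter id or None for a seed, has_trait).
Record = Tuple[str, Optional[str], bool]


def all_points(sample: List[Record]) -> List[Tuple[int, str, bool]]:
    """Return (position, seed, has_trait) for each non-seed respondent.

    Positions count non-seed respondents in interview order; traits are left
    unweighted, matching the All Points Plot description.
    """
    seed_of: Dict[str, str] = {}
    points = []
    for rid, recruiter, has_trait in sample:
        if recruiter is None:
            seed_of[rid] = rid
            continue
        seed_of[rid] = seed_of[recruiter]  # recruiters precede their recruits
        points.append((len(points) + 1, seed_of[rid], has_trait))
    return points
```

Plotting position on the horizontal axis with one row per seed, and drawing an up-tick whenever `has_trait` is true, reproduces the layout of panel (c).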

5.1. Current Recommendations

We recommend creating Convergence Plots, Bottleneck Plots, and All Points Plots for all traits of interest during data collection. Evidence of unstable estimates from Convergence Plots (e.g., Fig. 5(a)) should be taken as an indication that results may be suspect and that more data should be collected. If additional data collection is not possible, researchers may need to use more advanced estimators that are designed to correct for features such as seed bias (e.g., Gile and Handcock (2011)). Evidence of bottlenecks (e.g., Fig. 6(b)) should be taken as an indication that estimates may be unstable and that more data should be collected. If additional data collection is not possible, researchers should consider presenting estimates for each tree individually rather than trying to combine them into an overall estimate, and researchers should be aware that standard RDS confidence intervals will be too small (Goel and Salganik, 2010). If it is not possible to create these plots during data collection, we suggest that they still be made, used to consider alternative estimators, and possibly presented with published results. Further, if it is not possible to closely monitor all of these plots during data collection (as might occur in a large multisite study with many traits of interest), we suggest using flagging criteria such as those developed and applied in the Supporting Information.

We wish to emphasize that there are cases where the Convergence Plots and Bottleneck Plots could fail to detect real problems. For example, in some situations the estimate might appear stable (Fig. 5(b)), but then the sample could move to a previously unexplored part of the target population yielding very different estimates. Further, the Bottleneck Plot can fail in the presence of extremely strong bottlenecks and very unbalanced seed selection. For example, if there is a strong bottleneck between brothel-based and street-based sex workers and all the seeds are brothel-based, the sample may never include street-based sex workers and the Bottleneck Plots would not be able to alert researchers to this problem.

6. Reciprocation

Most current RDS estimators use self-reported degree to estimate inclusion probabilities based on the assumption that all ties are reciprocated. Current best practice monitors this feature by asking respondents during their initial visit about their relationship with the person who recruited them, typically choosing from a set of categories (e.g., acquaintance, friend, sex partner, spouse, other relative, stranger, or other) (Heckathorn, 2002; Lansky et al., 2012). Here, we present responses to a slightly different question, and in the Supporting Information (Section S3), we present further discussion of additional approaches aimed at studying the reciprocation patterns in the broader social network.

On the follow-up questionnaire, for each coupon given out, respondents were asked:

- (C) Do you think that the person to whom you gave a coupon would have given you a coupon if you had not participated in the study first?

Table 3 shows the results of this question, separated by population and site. Overall, about 88% of responses indicated reciprocation, but there are notable differences across populations and sites. Reciprocation rates in Santiago were considerably higher than in the other cities, and reciprocation rates among DU were lower than in the other populations. Among DU, reciprocation rates were especially low in Higuey, and also in Barahona, where participants may have been selling coupons (for more on coupon-selling, see Scott (2008); see also Broadhead (2008); Ouellet (2008)).

Table 3.

Percent of affirmative responses to the question (C), “Do you think that the person to whom you gave a coupon would have given you a coupon if you had not participated in the study first?” Sites: SD = Santo Domingo, SA = Santiago, BA = Barahona, HI = Higuey.

| | FSW | | | | DU | | | | MSM | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Site | SD | SA | BA | HI | SD | SA | BA | HI | SD | SA | BA | HI |
| Percent reciprocated | 87 | 98 | 87 | 89 | 86 | 96 | 74 | 79 | 87 | 98 | 91 | 91 |

6.1. Current Recommendations

The reciprocity assumption requires that the recruiter and recruit are known to each other and that both people would be willing to recruit each other. Therefore, we recommend that on the initial survey researchers collect information about the relationship between the recruiter and recruit (see e.g., Heckathorn (2002)) and information directly assessing the possibility of recruitment (similar to question C; see also Rudolph et al. (2013)). Researchers should calculate reciprocity rates as defined by both questions during data collection. Low rates of reciprocation by either measure could be used to improve field procedures (e.g., training respondents about how to recruit others) and alert researchers to potential problems (e.g., coupon-selling). Further, high rates of non-reciprocation may require alternative RDS estimators; see Lu et al. (2013) for one such approach.

7. Measurement of Degree

The Volz-Heckathorn estimator weights respondents based on their self-reported degree (see Equation 1), and the fact that the estimates can depend critically on self-reported degree has troubled some RDS researchers (Bengtsson and Thorson, 2010; Frost et al., 2006; Goel and Salganik, 2009; Iguchi et al., 2009; Rudolph et al., 2013; Wejnert, 2009) because of the well-documented problems with self-reported social network data in general (Bernard et al., 1984; Brewer, 2000; Marsden, 1990). However, despite the widespread concern about degree measurement, the issue is rarely explored empirically in RDS studies (for important exceptions, see McCreesh et al. (2012); Wejnert (2009); Wejnert and Heckathorn (2008)). Here we present several methods of assessing the measurement of degree and the resulting effects on estimates.

In this study, respondents were asked a series of four questions to measure degree (Johnston et al., 2008) (DU versions, others analogous):

- (D) How many people do you know who have used illegal drugs in the past three months?
- (E) How many of them live or work in this province?
- (F) How many of them [repeat response from E] are 15 years old or older?
- (G) How many of them [repeat response from F] have you seen in the past week?

The response to the fourth question (G) was the degree used for estimation. Respondents were also asked:

- (H) If we were to give you as many coupons as you wanted, how many of these drug users (repeat the number in F) do you think you could give a coupon to by this time tomorrow?
- (I) If we were to give you as many coupons as you wanted, how many of these drug users (repeat the number in F) do you think you could give a coupon to by this time next week?

During the follow-up visit, the series of four main degree questions (D, E, F, and G) was repeated, and respondents were also asked how quickly they distributed each of their coupons. We use these responses, along with data on the number of days between recruiter and recruit interviews, to evaluate three features of the degree question: the validity of the one-week time frame used in question (G), the test-retest reliability of responses, and the possible effect of inconsistent reporting on estimates.

7.1. Validity of Time Window

A time frame of one week was used in the key degree question (G) because previous qualitative experience with RDS suggested that most coupons were distributed within that time. In the Supporting Information, we provide a detailed examination of recruitment time dynamics and find that respondents reported that a high proportion (92%) of their alters could be reached within one week (Fig. S9), that respondents reported distributing most (95%) of their coupons within one week (Fig. S10), and that the number of days between the interviews of the recruiter and recruit was usually less than one week (79% of the time, Fig. S11). We conclude, therefore, that for these studies the one-week time window was reasonable.

7.2. Test-retest reliability

For participants who completed a follow-up survey (about half the participants, see Fig. 2), we have a measure of the consistency, but not the accuracy, of their degree responses. The median difference between degree at the initial and follow-up visits was 0 (Fig. S12), suggesting that there was nothing systematic about the two visits that led to different answers on the questionnaire (e.g., different location, different length of interview). However, the responses of many individuals differed, in some cases substantially. Because the association between the two measurements is strongly affected by a small number of outliers, we use the more robust Spearman's rank correlation to measure the association between the visits. The rank correlations range from 0.17 to 0.47, with a median correlation of 0.33 for FSW and of 0.41 for DU and MSM (Fig. S13(a)). While these low measures of test-retest reliability match our expectations, they differ substantially from a recent study of FSW in Shanghai, China, which found a test-retest reliability of network size of 0.98 (Yamanis et al., 2013). Further research will be needed to understand the source of this difference.
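Spearman's rank correlation is the Pearson correlation of the ranks, so a few extreme degree reports cannot dominate it. Self-reported degree has many ties (e.g., round numbers), so the minimal sketch below gives tied values the average of the ranks they occupy; on the same data it should agree with library routines such as `scipy.stats.spearmanr`. The function names are our own, and the inputs would be hypothetical lists of initial and follow-up degree for the same respondents.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda idx: values[idx])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; assumes neither input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

For example, with initial degrees `[1, 2, 3, 4]` and follow-up degrees `[10, 20, 30, 1000]`, the Pearson correlation is pulled to about 0.79 by the single outlier, while `spearman` returns 1.0 because the ordering is perfectly preserved.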

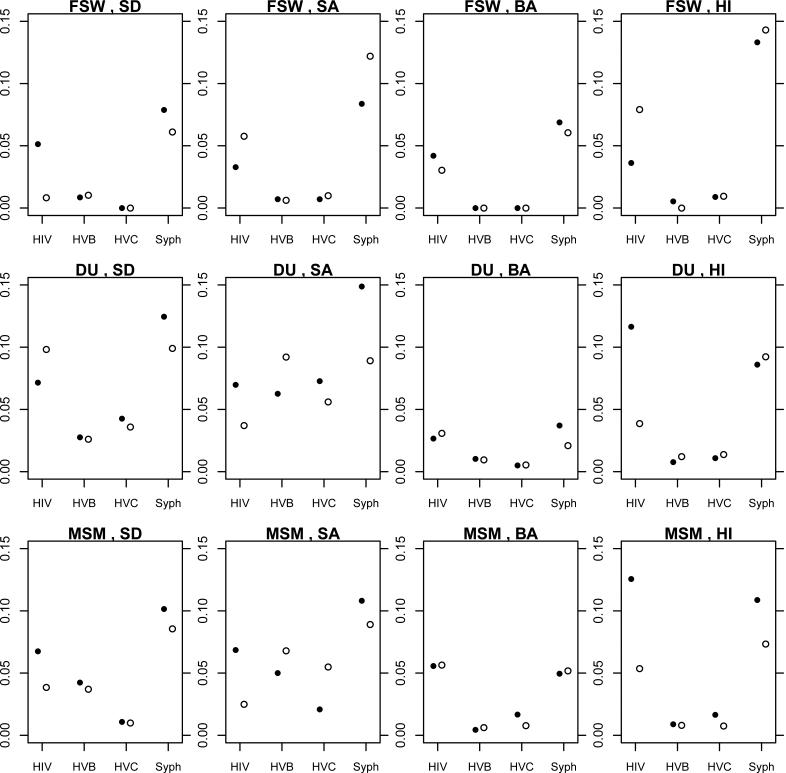

7.3. Effect on estimates

Finally, we studied the robustness of our estimates by calculating disease prevalence estimates using degree as measured in the initial and follow-up interviews, for those responding to both surveys (Fig. 8). The differences in disease prevalence estimates are generally small in an absolute sense, ranging from 0 to 0.08 (8 percentage points), with a median difference of 0.01. When broken down by disease, HIV had the largest median absolute difference, 0.031, followed by syphilis, 0.017. Hepatitis B and C had median absolute differences in prevalence of essentially 0, possibly because these diseases are very rare in these populations. Note that these differences probably slightly overestimate the sensitivity of RDS estimates, because they are restricted to respondents who completed both surveys and are therefore based on smaller samples.

Fig. 8.

Disease prevalence estimates from 12 studies for 4 diseases using Question G at enrollment (solid circle) and follow-up (hollow circle). The plot includes only people who participated in both the initial and follow-up survey (see Fig. 2 for sample sizes).

In addition to comparing these differences in absolute units, we also consider the differences in relative units, $|\hat{p} - \hat{p}'|/\hat{p}$, where $\hat{p}$ and $\hat{p}'$ are the estimates based on initial and follow-up degree, respectively. The difference between the two estimates is more than 50% of the original estimate in about a quarter of the cases. In public health disease surveillance, an estimated increase in disease prevalence of 50% is likely cause for concern, even if the estimated prevalences themselves are quite low. These data show that measurement error in degree could introduce a change this large when prevalence is low.
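The comparison above holds the trait values fixed and swaps only the degree measure. A minimal sketch, again assuming the inverse-degree-weighted Volz-Heckathorn form and hypothetical toy inputs, returns both the absolute and the relative difference; the relative difference is left undefined (`None`) when the initial estimate is 0, which is exactly the low-prevalence regime where small absolute changes become large relative ones.

```python
from typing import Optional, Sequence, Tuple


def vh_estimate(has_trait: Sequence[bool], degrees: Sequence[float]) -> float:
    """Inverse-degree-weighted share of respondents with the trait."""
    weights = [1.0 / d for d in degrees]
    return sum(w for w, t in zip(weights, has_trait) if t) / sum(weights)


def degree_sensitivity(
    has_trait: Sequence[bool],
    degree_initial: Sequence[float],
    degree_followup: Sequence[float],
) -> Tuple[float, float, float, Optional[float]]:
    """Return (p_initial, p_followup, |difference|, |difference| / p_initial)."""
    p = vh_estimate(has_trait, degree_initial)
    p_prime = vh_estimate(has_trait, degree_followup)
    absolute = abs(p - p_prime)
    # The relative difference |p - p'| / p is undefined when p = 0.
    relative = absolute / p if p > 0 else None
    return p, p_prime, absolute, relative


# Hypothetical respondents observed at both visits.
result = degree_sensitivity([True, False, False, True], [2, 4, 4, 8], [4, 4, 2, 8])
```

In this toy case the estimate moves from 5/9 to 1/3, an absolute change of about 0.22 and a relative change of 40%.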

7.4. Current Recommendations

When collecting data, the time period used to elicit self-reported degree should be reflective of the time in which coupons are likely to be distributed. These results suggest that the one-week period used in these studies was reasonable, but this should be checked in future studies with different populations. We also recommend that researchers collect degree at both the initial and follow-up visits to assess test-retest reliability. Finally, in future studies, when considering which measure of degree to use, it is important to recall these measures are being used to approximate the relative probability of inclusion of respondents. To the extent that there are other things about a respondent, such as social class or geographic location, that make him or her more or less likely to participate, the probability of inclusion is no longer proportional to degree. Any other features thought to be related to the probability of inclusion should also be collected.

8. Participation Bias

RDS estimation relies on the assumption that recruits represent a simple random sample from the contacts of each recruiter. Limited ethnographic evidence, however, suggests that recruitment decisions can be substantially more complex than is assumed in standard RDS statistical models (Bengtsson and Thorson, 2010; Broadhead, 2008; Kerr et al., 2011; McCreesh et al., 2012, 2013; Ouellet, 2008; Scott, 2008). For example, a study of MSM in Brazil found that some people tended to recruit their riskiest friends because they were thought to need safe sex counseling (Mello et al., 2008). Further, the same study found that some MSM refused to participate when recruited because they were worried about revealing their sexual orientation. Such selective recruitment and participation could lead to non-response bias.

We find it helpful to consider the process of a new person entering the sample as the product of three decisions:

- (a) Decision by recruiter to pass coupons (how many and to whom)
- (b) Decision by recruit to accept coupon
- (c) Decision by recruit to participate in study given that they have accepted a coupon

Biases at any of these steps could result in systematic over- or under-representation of certain subgroups in the sample, resulting in biased estimates. We assess these possible biases in four ways. The first two, recruitment effectiveness and recruitment bias, address the cumulative effects of all three decisions on the quantity and characteristics of recruits; the third addresses two forms of non-response corresponding to steps (b) and (c); and the final analysis examines a respondent's motivation for participation.

8.1. Recruitment Effectiveness

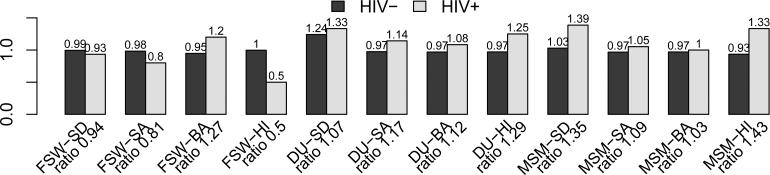

Systematic differences in recruitment effectiveness can lead to biased estimates under some conditions (Tomas and Gile, 2011). For example, if respondents with HIV have systematically more recruits and are also more likely to have contact with others with HIV, then people with HIV will be over-represented in the sample. In Fig. 9, we present the mean numbers of recruits by HIV status for each site. We call this plot a Recruitment Effectiveness Plot. Within a single study, paired bars like these could be drawn for many different traits. In these 12 studies, the most dramatic difference is among FSW in Higuey, where respondents with HIV recruit at only half the rate of those without HIV.

Fig. 9. Recruitment Effectiveness Plot.

Average recruits for HIV+ and HIV− respondents by site. The ratio is provided under the bars. Differential recruitment effectiveness can lead to bias in the RDS estimates under some conditions (Tomas and Gile, 2011).
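The quantities behind each pair of bars are simply group means of the number of recruits. The minimal sketch below assumes hypothetical `(hiv_positive, n_recruits)` pairs for one site and reports the ratio as HIV+ over HIV−; the direction of the ratio printed under the bars in Fig. 9 is not stated, so this orientation is our assumption. Under it, a ratio of 0.5 corresponds to the Higuey FSW pattern described above.

```python
from statistics import mean
from typing import Iterable, Tuple


def recruitment_effectiveness(records: Iterable[Tuple[bool, int]]) -> Tuple[float, float, float]:
    """Return (mean recruits if HIV+, mean recruits if HIV-, ratio HIV+/HIV-).

    `records` holds (hiv_positive, number_of_recruits) for each respondent at
    one site; both groups must be non-empty or `statistics.mean` raises.
    """
    records = list(records)
    positive = [n for hiv, n in records if hiv]
    negative = [n for hiv, n in records if not hiv]
    mean_pos, mean_neg = mean(positive), mean(negative)
    return mean_pos, mean_neg, mean_pos / mean_neg
```

The same function can be reused for any binary trait by substituting that trait for HIV status.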

8.2. Recruitment Bias

Recruitment bias—when a respondent's contacts have unequal probabilities of selection—can result in a pool of recruits that is systematically different from the pool of respondents’ contacts. Because existing inferential methods assume recruits are a simple random sample from among contacts, these systematic differences may bias resulting estimates (Gile and Handcock, 2010; Tomas and Gile, 2011). To examine the effects of such biases on the sample composition of a specific trait, employment status, we introduced the following questions in the DU questionnaires (for a related approach, see Yamanis et al. (2013)):

- (J) How many of them (repeat number of contacts in Question F) are currently working?
- (K) (follow-up questionnaire): Do the persons to whom you gave the coupons have work? (asked separately for each of 1 to 3 persons).
- (L) Are you currently working? (we consider responses given by recruits of each respondent.)

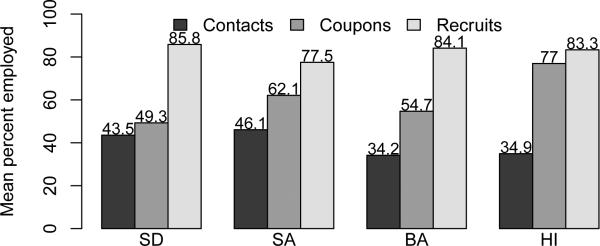

Overall, then, these questions, in order, should measure the employment characteristics of the pool of potential recruits, the employment characteristics of those who were chosen for referral by the respondents and accepted coupons, and the employment characteristics of those who then chose to return the coupons and enroll in the study. The difference between the characteristics reported in the first (J) and second (K) questions reflects the joint effects of the decisions to pass and accept coupons, while the difference in characteristics between the second (K) and third (L) questions reflects the effect of the decision to participate in the interview.

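Fig. 10, which we call a Recruitment Bias Plot, provides a summary of the responses to these questions. This plot compares the composition of comparable sets of respondents’ social contacts, coupon recipients, and recruits. To do this, we restrict analysis to the set $S$ of recruiters with data available on all three levels, and then calculate the average percent of contacts, coupon recipients, and recruits who are employed as follows:

$$\bar{P}_{\text{contacts}} = \frac{100}{|S|}\sum_{i \in S}\frac{J_i}{F_i}, \qquad \bar{P}_{\text{recipients}} = \frac{100}{|S|}\sum_{i \in S}\frac{1}{M_i}\sum_{j=1}^{M_i} K_{ij}, \qquad \bar{P}_{\text{recruits}} = \frac{100}{|S|}\sum_{i \in S}\frac{1}{|\mathrm{Recruits}(i)|}\sum_{j \in \mathrm{Recruits}(i)} L_j,$$

where $F_i$, $J_i$, and $L_i$ refer to respondent $i$'s responses to questions F, J, and L (with $L_j = 1$ if recruit $j$ reports working and 0 otherwise), $K_{ij}$ is a binary indicator of $i$'s report of the employment status of the person receiving his or her $j$th coupon, $M_i$ is the number of coupons $i$ reported distributing, and $\mathrm{Recruits}(i)$ is the set of $i$'s recruits.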

Fig. 10. Recruitment Bias Plot.

Percent of drug users employed, by location and question.

In every site, there is a marked increase in the reported rate of employment for each stage in the referral process (Fig. 10). These data suggest that respondents distributing coupons are more likely to give them to those among their contacts who are employed, and that among those receiving coupons, those who are employed are more likely to return them.
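The three bar heights in a Recruitment Bias Plot can be computed from per-recruiter reports. The minimal sketch below reads “average percent” as the mean, over recruiters with complete data, of each recruiter's own percentage at each level; that reading, the dataclass, and its field names are our assumptions rather than the study's code.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Tuple


@dataclass
class RecruiterReport:
    contacts_f: int                 # Question F: number of eligible contacts
    working_contacts_j: int         # Question J: how many of them are working
    recipients_working_k: List[bool]  # Question K: one entry per coupon recipient
    recruits_working_l: List[bool]    # Question L: each recruit's own answer


def recruitment_bias_levels(reports: List[RecruiterReport]) -> Tuple[float, float, float]:
    """Average percent employed among contacts, coupon recipients, and recruits."""
    # Keep only recruiters with usable data on all three levels.
    usable = [
        r for r in reports
        if r.contacts_f > 0 and r.recipients_working_k and r.recruits_working_l
    ]
    contacts = mean(r.working_contacts_j / r.contacts_f for r in usable)
    recipients = mean(
        sum(r.recipients_working_k) / len(r.recipients_working_k) for r in usable
    )
    recruits = mean(
        sum(r.recruits_working_l) / len(r.recruits_working_l) for r in usable
    )
    return 100 * contacts, 100 * recipients, 100 * recruits
```

A steady increase across the three returned values, as in every site in Fig. 10, is the pattern discussed above; the sketch cannot distinguish selective recruitment from the social-desirability explanation.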

These results are a provocative suggestion of aberrant respondent behavior, and could indicate a dramatic over-sampling of employed DU. These particular results, however, should be seen in light of other possible explanations, in particular the possibility of survey response bias. The succession of questions, which reflect increasing proportions of reported employment, also corresponds to increasing social closeness to the respondent. Because “having work” may be a socially desirable status, a response bias based on social desirability would also explain the results in this section.

Other researchers (e.g., Heckathorn et al. (2002), Wang et al. (2005), Wejnert and Heckathorn (2008), Iguchi et al. (2009), Rudolph et al. (2011), Liu et al. (2012), and Yamanis et al. (2013)) have introduced and used statistical tests assessing the assumption of random recruitment. We address these, and introduce a new non-parametric test, in the Supporting Information.

8.3. Non-Response

Non-response, where intended respondents do not participate, is a problem in most surveys. If non-responders differ systematically from responders, estimates will suffer from non-response bias. Non-response and non-response bias are particularly challenging to measure in RDS studies because non-responders are contacted by other participants rather than by researchers and because non-response can arise in two ways—by refusing a coupon or by failing to return the coupon to participate in the study.

In order to better understand non-response, respondents were asked during the follow-up interview:

- (M) How many coupons did you distribute?
- (N) How many people did not accept a coupon you offered to them?

We estimate the Coupon-Refusal Rate ($R_C$) by comparing responses to question (M) and question (N), and we estimate the Non-Return Rate ($R_N$) by comparing responses to question (M) with the number of survey participants presenting coupons from each respondent. Finally, by comparing the number of recruits with the number of attempted eligible coupon distributions (refused and distributed), we estimate the Total Non-Response Rate ($R_T$). Specifically, these rates are respectively computed as follows:

$$R_C = \frac{\sum_{i \in S} N_i}{\sum_{i \in S} \left(M_i + N_i\right)} \tag{2}$$

$$R_N = \frac{\sum_{i \in S} \left(M_i - |\mathrm{Recruits}(i)|\right)}{\sum_{i \in S} M_i} \tag{3}$$

$$R_T = \frac{\sum_{i \in S} \left(M_i + N_i - |\mathrm{Recruits}(i)|\right)}{\sum_{i \in S} \left(M_i + N_i\right)} \tag{4}$$

where $S$ is again restricted to those with data on all relevant questions, $M_i$ is the number of coupons distributed by $i$ (question M), $N_i$ is the number of refused coupons reported by $i$ (question N), and $|\mathrm{Recruits}(i)|$ represents the number of successful recruits of $i$. In general, the problems of refusal, non-return, and total non-response were slightly more serious among FSW than among DU or MSM (Table 4). Note that all of these estimates may underestimate non-response, as respondents with few or no successful recruitments were less likely to complete the follow-up survey (see Section S6).

Table 4. RDS Non-Response Rates.

Coupon refusal rate is the total number of reported coupon refusals to eligible alters divided by that number plus the number of reported coupons distributed. Coupon Non-Return is the percent of coupons that were not returned (among accepted coupons). Total Non-Response rate is the percent of attempted recruitments of eligible alters not resulting in survey participation. All rates based only on recruits of people who completed the follow-up interview.

| | FSW | | | | DU | | | | MSM | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Rate | SD | SA | BA | HI | SD | SA | BA | HI | SD | SA | BA | HI |
| Coupon Refusal (RC) | 56.5 | 45.3 | 7.5 | 28.0 | 0.4 | 15.9 | 11.3 | 41.3 | 7.7 | 16.5 | 25.4 | 29.2 |
| Non-Return (RN) | 13.4 | 43.9 | 43.0 | 41.4 | 26.1 | 35.3 | 44.6 | 33.9 | 29.4 | 23.6 | 39.7 | 31.9 |
| Total Non-Response (RT) | 62.3 | 69.3 | 47.2 | 57.8 | 26.3 | 45.6 | 50.9 | 61.2 | 34.8 | 36.3 | 55.0 | 51.8 |
| Number of Recruiters | 123 | 136 | 141 | 151 | 126 | 105 | 164 | 141 | 153 | 128 | 152 | 102 |
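Following the definitions in the Table 4 caption, the three rates pool counts across recruiters before dividing. Under that pooled form the rates satisfy $1 - R_T = (1 - R_C)(1 - R_N)$, which the table bears out (for FSW in Santo Domingo, $(1 - 0.565)(1 - 0.134) \approx 0.377$, i.e. $R_T \approx 62.3\%$). A minimal sketch, assuming hypothetical per-recruiter tuples of (coupons distributed, coupons refused, successful recruits):

```python
from typing import Iterable, Tuple


def non_response_rates(
    recruiters: Iterable[Tuple[int, int, int]],
) -> Tuple[float, float, float]:
    """Pooled coupon-refusal, non-return, and total non-response rates.

    Each tuple is (coupons distributed, coupons refused, successful recruits)
    for one recruiter with complete follow-up data.
    """
    distributed = refused = recruited = 0
    for m_i, n_i, r_i in recruiters:
        distributed += m_i
        refused += n_i
        recruited += r_i
    attempted = distributed + refused                 # refused plus distributed
    r_c = refused / attempted                         # coupon refusal
    r_n = (distributed - recruited) / distributed     # accepted but never returned
    r_t = (attempted - recruited) / attempted         # any attempt without participation
    return r_c, r_n, r_t
```

Because the rates are ratios of sums, recruiters who distributed many coupons carry proportionally more weight than in a mean of per-recruiter rates.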

To know whether this non-response could induce non-response bias, we would need to know whether the people who refused were different from those who participated. We could not collect information about non-responders directly, so we asked recruiters why their non-respondents had refused coupons, as has been done in previous studies (Iguchi et al., 2009; Johnston et al., 2008; Stormer et al., 2006). For each of up to 5 refusals, the return survey asked:

- (O) What is the principal reason why these persons did not accept a coupon?

Responses to (O) are summarized in the Coupon-Refusal Analysis in Table 5. The most common reason given for refusal was aversion to being identified as a member of the target population (26.6%). Many refusers also reported fear of test results (especially HIV test results: 16.3%). Some were “uninterested” (22.0%). Interestingly for study organizers, among the reasons for “other,” 5.2% of MSM refusers reportedly did not trust the study or did not believe the incentive was true. For an alternative approach to collecting information about refusals, see Yamanis et al. (2013).

Table 5. Coupon-Refusal Analysis.

Responses to the question (O), “What is the principal reason why these persons did not accept a coupon?”

| | FSW | | | | DU | | | | MSM | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Response | SD | SA | BA | HI | SD | SA | BA | HI | SD | SA | BA | HI |
| Too Busy | 7.3 | 80.0 | 10.0 | 0.8 | 0.0 | 10.3 | 0.0 | 3.0 | 0.0 | 30.8 | 4.5 | 12.1 |
| Fear being identified | 31.4 | 0.0 | 63.3 | 21.3 | 100.0 | 17.9 | 22.4 | 20.5 | 4.2 | 30.8 | 30.3 | 31.3 |
| Incentive low/location far | 3.6 | 0.0 | 0.0 | 2.5 | 0.0 | 2.6 | 0.0 | 1.8 | 0.0 | 0.0 | 0.8 | 1.0 |
| Not interested | 26.3 | 0.0 | 10.0 | 38.5 | 0.0 | 2.6 | 10.2 | 15.1 | 0.0 | 30.8 | 30.3 | 19.2 |
| Fear HIV/other results | 15.3 | 0.0 | 6.7 | 28.7 | 0.0 | 15.4 | 20.4 | 10.2 | 75.0 | 7.7 | 16.7 | 4.0 |
| Fear giving blood | 0.7 | 0.0 | 0.0 | 0.8 | 0.0 | 33.3 | 0.0 | 22.9 | 0.0 | 0.0 | 0.0 | 7.1 |
| Fail Eligibility | 0.0 | 0.0 | 10.0 | 0.8 | 0.0 | 0.0 | 4.1 | 6.0 | 0.0 | 0.0 | 3.8 | 2.0 |
| Already got coupon | 1.5 | 20.0 | 0.0 | 0.0 | 0.0 | 0.0 | 2.0 | 0.6 | 0.0 | 0.0 | 10.6 | 0.0 |
| Other | 13.9 | 0.0 | 0.0 | 6.6 | 0.0 | 17.9 | 40.8 | 19.9 | 20.8 | 0.0 | 3.0 | 23.2 |
| Total Reasons Reported | 137 | 5 | 30 | 122 | 1 | 39 | 49 | 166 | 24 | 13 | 132 | 99 |

8.4. Decisions to Accept Coupon and Participate in Study

In addition to exploring reasons for not participating in the study, we also asked about each respondent's reason for participating, as in Johnston et al. (2008):

- (P) What is the principal reason why you decided to accept a coupon and participate in this study?

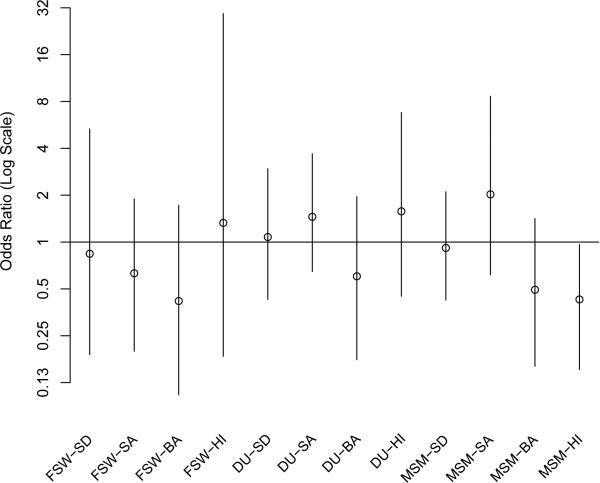

Responses are reported in Table 6. In every site, a majority (from 51% to 90%) reported participating in order to receive HIV test results. Further, we go beyond previous researchers and assess whether the motivation for participation is associated with important study outcomes. For example, we found that the odds of having HIV among those who expressed motivation based on the HIV test ranged from 0.43 (MSM-HI) to 2.03 (MSM-SA) times the odds for those who did not. Similar relationships hold when analyses are restricted to those who have not had an HIV test in the last 3 months or last 6 months. We summarize these results in the Motivation-Outcome Plot in Fig. 11. The unknown dependence structure does not allow for formal statistical testing. Note, however, that to the extent that the probability of participation is associated with participant motivation, and participant motivation is associated with an outcome of interest, bias will be introduced into the estimates even if these associations are not statistically significant.
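Each point in a Motivation-Outcome Plot is an odds ratio from a 2×2 table of motivation by HIV status. The minimal sketch below computes the plain sample (cross-product) odds ratio from hypothetical `(motivated_by_hiv_test, hiv_positive)` pairs; it does not reproduce the nominal intervals in Fig. 11, which come from inverting Fisher's exact test, and like those intervals it ignores the dependence induced by RDS.

```python
from typing import Iterable, Tuple


def odds_ratio(respondents: Iterable[Tuple[bool, bool]]) -> float:
    """Odds of HIV among those motivated by the HIV test, divided by the odds
    among those who were not. Each pair is (motivated_by_hiv_test, hiv_positive).
    """
    a = b = c = d = 0
    for motivated, hiv in respondents:
        if motivated and hiv:
            a += 1          # motivated, HIV+
        elif motivated:
            b += 1          # motivated, HIV-
        elif hiv:
            c += 1          # not motivated, HIV+
        else:
            d += 1          # not motivated, HIV-
    if b * c == 0:
        raise ValueError("odds ratio undefined: an off-diagonal cell is empty")
    return (a * d) / (b * c)
```

A value above 1 means that those participating for the HIV test were more likely to be HIV positive, matching the reading of Fig. 11.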

Table 6.

Responses to the question (P), “What is the principal reason why you decided to accept a coupon and participate in this study?” The “Other” category includes: “I have free time”, “To stop using” (DU only), and “Other.”

| | FSW | | | | DU | | | | MSM | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Response | SD | SA | BA | HI | SD | SA | BA | HI | SD | SA | BA | HI |
| Incentive | 2.9 | 5.0 | 3.3 | 1.3 | 11.6 | 16.5 | 6.0 | 5.0 | 5.7 | 5.8 | 1.8 | 5.9 |
| For HIV test | 88.5 | 77.7 | 90.1 | 86.4 | 51.3 | 63.2 | 65.1 | 70.1 | 71.5 | 71.9 | 81.5 | 83.3 |
| Other/all test | 1.0 | 2.3 | 0.4 | 2.6 | 18.4 | 0.6 | 4.7 | 3.0 | 1.4 | 1.2 | 5.0 | 1.9 |
| Recruiter | 1.7 | 5.0 | 1.6 | 2.0 | 3.9 | 10.3 | 6.3 | 17.6 | 7.7 | 5.8 | 4.6 | 4.8 |
| Study interest | 4.6 | 10.0 | 4.1 | 7.3 | 10.0 | 8.7 | 17.9 | 4.3 | 11.3 | 14.7 | 5.0 | 3.7 |
| Other | 1.2 | 0.0 | 0.4 | 0.3 | 4.8 | 0.6 | 0.0 | 0.0 | 2.4 | 0.6 | 2.1 | 0.4 |
| Total | 410 | 301 | 243 | 302 | 310 | 310 | 301 | 301 | 505 | 327 | 281 | 269 |

Fig. 11. Motivation-Outcome Plot.

Odds ratios of having HIV given HIV test as motivation for study participation. Ratios greater than 1 indicate that those participating for HIV test results were more likely to have HIV. For reference, nominal 95% intervals are based on the inversion of Fisher's exact test (these would be confidence intervals if the data were independent and identically distributed).

8.5. Current Recommendations

The approaches in this section do not directly indicate the extent to which estimates may be impacted by the various forms of participation bias. Our approaches for measuring and monitoring potential sources of participation bias, instead, were developed in the interest of (1) adjusting the sampling process, (2) informing the choice of an estimator, or (3) informing the development of new approaches to inference. Ideally, the quantitative survey-based approaches presented here should be paired with qualitative evaluation of decision-making associated with recruitment and participation (Broadhead, 2008; Kerr et al., 2011; McCreesh et al., 2012, 2013; Mello et al., 2008; Ouellet, 2008; Scott, 2008).

Fortunately, differential recruitment effectiveness is possible to evaluate using data readily available in all RDS studies, and is directly actionable in terms of estimators. Recruitment Effectiveness Plots should be made to study the relationship between recruitment and key study variables, both during and after data collection. Where differences are found, qualitative study or discussion with survey staff may reveal areas for improvement in the sampling process. Further, these findings may influence the choice of estimators. Tomas and Gile (2011) show that the estimator in Salganik and Heckathorn (2004) is more robust to differential recruitment effectiveness than other estimators. The newer estimator of Gile and Handcock (2011) allows researchers to adjust for differential recruitment effectiveness by outcome and wave of the sample.

Recruitment Biases are more difficult to evaluate, in part because they require more specialized data-collection. The particular characteristics of interest, such as employment status, will be study-specific and require researchers to be very familiar with the population and sampling process. Any characteristic that may be associated with increased participation should be measured for respondents, potential respondents (i.e., contacts of respondents), and coupon-recipients so that researchers can create Recruitment Bias Plots (Fig. 10). The collection of such data may also inspire further development of statistical inference for RDS data. The relationship between drug user employment and participation is a good example. If employed alters are indeed more likely to be sampled, and if this tendency can be measured (as in these data), methods may be developed to adjust inference for this tendency. The estimators in Gile and Handcock (2011) and Lu (2013) are particularly conducive to this kind of adjustment.

A thorough evaluation of non-response bias requires a follow-up study of non-responders. Despite the obvious logistical challenges (see Kerr et al. (2011); McCreesh et al. (2012); Mello et al. (2008)), we recommend such a study whenever researchers have special concerns about non-response. Absent such a study, computing RDS Non-Response Rates could alert researchers to possible problems with non-response. A Coupon-Refusal Analysis could then help researchers adjust their studies to remove barriers to participation. Further, the results of a Coupon-Refusal Analysis could suggest individual characteristics that might be related to non-response and which, therefore, should be measured. For example, if distance to the study site seems burdensome, researchers could introduce an additional study site, or, minimally, collect data on a measure of distance-burden to either adjust estimators or to monitor recruitment bias.

Participant motivations should also be measured in all studies, as an indication of potential differential valuation of incentives by different sub-populations. Motivation-Outcome relationships can be studied between any combination of expressed motivations and relevant respondent characteristics. Mechanisms to adjust inference for biases introduced by measurable differential incentives to participate, such as those due to interest in HIV test results, have not yet been developed. Precisely quantifying these effects and their impact on inference is an important area for future research.
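A basic Motivation-Outcome comparison is a cross-tabulation. It compares the prevalence of an outcome among respondents who cited a given motivation with the prevalence among those who did not. The sketch below assumes one boolean field per motivation and per outcome. Keys such as `"came_for_hiv_test"` are hypothetical placeholders, and so is the function name.

```python
def motivation_outcome(rows, motivation, outcome):
    """Outcome prevalence among respondents who did vs. did not cite a motivation.

    rows       - list of dicts, one per respondent
    motivation - hypothetical boolean key, e.g. "came_for_hiv_test"
    outcome    - hypothetical boolean key, e.g. "hiv_positive"
    Returns {True: prevalence among those citing it, False: among the rest};
    a group with no respondents gets NaN.
    """
    counts = {True: [0, 0], False: [0, 0]}  # cited -> [with outcome, total]
    for r in rows:
        group = counts[bool(r[motivation])]
        group[0] += int(bool(r[outcome]))
        group[1] += 1
    return {
        cited: (k / n if n else float("nan"))
        for cited, (k, n) in counts.items()
    }
```

A higher prevalence among those motivated by HIV testing would suggest that the incentive is valued differently across sub-populations. That is the kind of bias for which, as noted above, no adjustment yet exists.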

9. Discussion

RDS is designed to enact a near statistical miracle: beginning with a convenience sample, selecting subsequent samples dependent on previous samples, then treating the final sample as a probability sample with known (or estimable) inclusion probabilities. This is in contrast to traditional survey samples, where sampling is intended to be conducted from a well-defined sampling frame according to sampling procedures fully controlled by the researcher.
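To make the last step concrete, consider one widely used estimator, the RDS-II (Volz-Heckathorn) estimator. It is shown here as an illustration, not as the only way the step is carried out. It assumes that each respondent's inclusion probability is proportional to their reported degree $d_i$, the number of contacts they have in the target population. Under that assumption, a population proportion is estimated with inverse-degree weights:

$$
\hat{\mu}_{\text{RDS-II}} = \frac{\sum_{i=1}^{n} y_i / d_i}{\sum_{i=1}^{n} 1 / d_i},
$$

Here $n$ is the sample size, and $y_i = 1$ if respondent $i$ has the trait of interest and $0$ otherwise. The assumptions needed to justify inclusion probabilities proportional to $d_i$ are among those the diagnostics in this paper are meant to probe. Examples are accurate degree reports and random recruitment among contacts.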

Miracles do not come for free, and where alternative workable strategies are available, RDS is often not advisable. Unfortunately, alternative approaches are unavailable for many populations of interest. Therefore, researchers need to be aware of two main costs of RDS: large variance of estimates (see, for example, Goel and Salganik (2010); Johnston et al. (2013); Mouw and Verdery (2012); Szwarcwald et al. (2011); Wejnert et al. (2012)) and many assumptions, including those considered in this paper. These assumptions are difficult to assess with certainty in real hard-to-reach populations, where sampling is largely conducted by respondents out of the view of researchers. Rather than attempting to provide definitive critical values for statistical tests, which would themselves rest on numerous untested assumptions, we have provided a broad set of intuitive tools to allow RDS researchers to better understand the processes that generated their data.

For researchers planning future data collection, we briefly summarize our current recommendations in Table 7, and we note that some of these methods are now available in the R (R Core Team, 2012) packages RDS (Handcock et al., 2009) and RDS Analyst (Handcock et al., 2013). We emphasize that these diagnostics should continue to be refined and improved as more is learned about RDS sampling and as new estimators are developed. In fact, we hope that this paper will stimulate just such research.

Table 7. Summary of recommendations.

| Stage | Recommendation |
|---|---|
| Before data collection | • Formative research |
| | • Add the following questions to the initial survey: |
| | – Questions to assess finite population effects on sampling (e.g., B) |
| | – Questions to assess the validity of the time window in the degree question (e.g., H, I) |
| | – Questions to assess reciprocity (e.g., C and those in Heckathorn (2002)) |
| | – Questions to assess recruitment bias (e.g., J, L) |
| | – Questions for the Motivation-Outcome Plot (e.g., P) |
| | • Add the following questions to the follow-up survey: |
| | – Questions to assess finite population effects on sampling (e.g., A) |
| | – Questions to assess recruitment bias (e.g., K) |
| | – Questions to assess non-response (e.g., M, N) |
| | – Questions for the Coupon-Refusal Analysis (e.g., O) |
| During data collection | • For all traits of interest, create Convergence Plots, Bottleneck Plots, and All Points Plots (Sec. 5) |
| | • Create Recruitment Effectiveness Plots (Fig. 9) |
| | • Create Recruitment Bias Plots (Fig. 10) |
| | • Calculate the reciprocation rate (Table 3) |
| | • Check the validity of the time frame used in the degree question (Sec. 7.1) |
| | • Calculate the non-response rates (Table 4) |
| | • Conduct a Coupon-Refusal Analysis (Table 5) |
| | • Conduct a Motivation-Outcome analysis (Fig. 11) |
| After data collection | • Assess finite population effects in data collection and estimates (Sec. 4) |
| | • Calculate test-retest reliability of the degree question (Sec. 7.2) |
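Convergence Plots track how an estimate changes as the sample accumulates. The sketch below shows the underlying computation as a running weighted proportion taken in order of sample entry. It assumes that the estimate being tracked is the RDS-II (Volz-Heckathorn) estimator with inverse-degree weights. That choice is an assumption of the sketch, and another estimator could be substituted. The function name is hypothetical.

```python
from collections.abc import Sequence


def cumulative_rds2(traits: Sequence[int], degrees: Sequence[float]) -> list[float]:
    """Running RDS-II estimate of a proportion, in order of sample entry.

    traits[i]  - 1 if the i-th respondent (by entry order) has the trait, else 0
    degrees[i] - that respondent's reported degree (must be positive)
    Plotting the returned values against i gives a Convergence Plot-style curve.
    """
    if len(traits) != len(degrees):
        raise ValueError("traits and degrees must have the same length")
    num = den = 0.0
    out = []
    for y, d in zip(traits, degrees):
        if d <= 0:
            raise ValueError("degrees must be positive")
        # Inverse-degree weight: high-degree respondents are assumed more likely sampled.
        num += y / d
        den += 1.0 / d
        out.append(num / den)
    return out
```

A curve that is still drifting when recruitment ends suggests that the estimate has not stabilized. Running the same computation separately for each seed's recruitment tree is one way to look for disagreement between chains.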


Acknowledgments

We are grateful to Tessie Caballero Vaillant, El Consejo Presidencial del SIDA, Dominican Republic, for allowing us to use these data. We would also like to thank Maritza Molina Achécar, Juan Jose Polanco and Sonia Baez of El Centro de Estudios Sociales y Demograficos, Dominican Republic, for overseeing data collection, and Chang Chung, Sharad Goel, Mark Handcock, Doug Heckathorn, Martin Klein, Dhwani Shah, and Cyprian Wejnert for helpful discussions. Finally, we would like to thank all those who participated in these studies. Research reported in this publication was supported by grants from the NIH/NICHD (R01-HD062366, R24-HD047879, and R21-AG042737) and the NSF (CNS-0905086 and SES-1230081), including support from the National Agricultural Statistics Service. The content is solely the responsibility of the authors.

Contributor Information

Krista J. Gile, University of Massachusetts, Amherst, MA, USA

Lisa G. Johnston, Tulane University, New Orleans, LA, USA and University of California, San Francisco, San Francisco, CA, USA

Matthew J. Salganik, Microsoft Research, New York, NY, USA and Princeton University, Princeton, NJ, USA

References

- Barbosa Júnior A, Pati Pascom AR, Szwarcwald CL, Kendall C, McFarland W. Transfer of sampling methods for studies on most-at-risk populations (MARPs) in Brazil. Cadernos de Saúde Pública. 2011;27(S1):S36–S44. doi: 10.1590/s0102-311x2011001300005.

- Bengtsson L, Lu X, Nguyen QC, Camitz M, Hoang NL, Nguyen TA, Liljeros F, Thorson A. Implementation of web-based respondent-driven sampling among men who have sex with men in Vietnam. PLoS ONE. 2012 Nov;7(11):e49417. doi: 10.1371/journal.pone.0049417.

- Bengtsson L, Thorson A. Global HIV surveillance among MSM: Is risk behavior seriously underestimated? AIDS. 2010;24(15):2301–2303. doi: 10.1097/QAD.0b013e32833d207d.

- Bernard HR, Hallett T, Iovita A, Johnsen EC, Lyerla R, McCarty C, Mahy M, Salganik MJ, Saliuk T, Scutelniciuc O, Shelley GA, Sirinirund P, Weir S, Stroup DF. Counting hard-to-count populations: The network scale-up method for public health. Sexually Transmitted Infections. 2010;86(Suppl 2):ii11–ii15. doi: 10.1136/sti.2010.044446.

- Bernard HR, Killworth P, Kronenfeld D, Sailer L. The problem of informant accuracy: The validity of retrospective data. Annual Review of Anthropology. 1984;13:495–517.

- Borgatti SP. NetDraw software for network visualization. Technical report. Lexington, KY: Analytic Technologies; 2002.

- Brewer DD. Forgetting in the recall-based elicitation of personal and social networks. Social Networks. 2000;22(1):29–43.

- Broadhead RS. Notes on a cautionary (tall) tale about respondent-driven sampling: A critique of Scott's ethnography. International Journal of Drug Policy. 2008;19(3):235–237. doi: 10.1016/j.drugpo.2008.02.014.

- Burt RD, Thiede H. Evaluating consistency in repeat surveys of injection drug users recruited by respondent-driven sampling in the Seattle area: Results from the NHBS-IDU1 and NHBS-IDU2 surveys. Annals of Epidemiology. 2012;22(5):354–363. doi: 10.1016/j.annepidem.2012.02.012.

- Frost SD, Brouwer KC, Firestone Cruz MA, Ramos R, Ramos ME, Lozada RM, Magis-Rodriguez C, Strathdee SA. Respondent-driven sampling of injection drug users in two US–Mexico border cities: Recruitment dynamics and impact on estimates of HIV and Syphilis prevalence. Journal of Urban Health. 2006;83:83–97. doi: 10.1007/s11524-006-9104-z.

- Gelman A, Rubin DB. Inference from iterative simulation using multiple sequences. Statistical Science. 1992;7(4):457–472.

- Gile KJ. Improved inference for respondent-driven sampling data with application to HIV prevalence estimation. Journal of the American Statistical Association. 2011;106(493):135–146.

- Gile KJ, Handcock MS. Respondent-driven sampling: An assessment of current methodology. Sociological Methodology. 2010;40:285–327. doi: 10.1111/j.1467-9531.2010.01223.x.

- Gile KJ, Handcock MS. Network model-assisted inference from respondent-driven sampling data. arXiv. 2011:1108.0298. doi: 10.1111/rssa.12091.

- Goel S, Salganik MJ. Respondent-driven sampling as Markov chain Monte Carlo. Statistics in Medicine. 2009;28(17):2202–2229. doi: 10.1002/sim.3613.

- Goel S, Salganik MJ. Assessing respondent-driven sampling. Proceedings of the National Academy of Sciences, USA. 2010;107(15):6743–6747. doi: 10.1073/pnas.1000261107.

- Handcock MS. size: Estimating hidden population size using respondent driven sampling data. R package version 0.20. 2011. doi: 10.1214/14-EJS923.

- Handcock MS, Fellows IE, Gile KJ. RDS Analyst: Analysis of Respondent-Driven Sampling Data. Version 1.0. Los Angeles, CA; 2013.

- Handcock MS, Gile KJ, Mar CM. Estimating hidden population size using respondent-driven sampling data. Working paper. 2012. doi: 10.1214/14-EJS923.

- Handcock MS, Gile KJ, Neely WW. RDS: R functions for respondent-driven sampling. R package version 0.10. 2009.

- Heckathorn D, Semaan S, Broadhead R, Hughes J. Extensions of respondent-driven sampling: A new approach to the study of injection drug users aged 18–25. AIDS and Behavior. 2002;6(1):55–67.

- Heckathorn DD. Respondent-driven sampling: A new approach to the study of hidden populations. Social Problems. 1997;44(2):174–199.

- Heckathorn DD. Respondent-driven sampling II: Deriving valid population estimates from chain-referral samples of hidden populations. Social Problems. 2002;49(1):11–34.

- Heimer R. Critical issues and further questions about respondent-driven sampling: Comment on Ramirez-Valles, et al. (2005). AIDS and Behavior. 2005;9(4):403–408. doi: 10.1007/s10461-005-9030-1.

- Iguchi MY, Ober AJ, Berry SH, Fain T, Heckathorn DD, Gorbach PM, Heimer R, Kozlov A, Ouellet LJ, Shoptaw S, Zule WA. Simultaneous recruitment of drug users and men who have sex with men in the United States and Russia using respondent-driven sampling: Sampling methods and implications. Journal of Urban Health. 2009;86(S1):5–31. doi: 10.1007/s11524-009-9365-4.

- Johnston LG. Introduction to respondent-driven sampling. Technical report. 2008. http://bit.ly/OHgGX5.

- Johnston LG. Introduction to respondent-driven sampling. Technical report. Geneva, Switzerland: World Health Organization; 2013.

- Johnston LG, Caballero T, Dolores Y, Vales HM. HIV, Hepatitis B/C and Syphilis prevalence and risk behaviors among gay/trans/men who have sex with men, Dominican Republic. International Journal of STD and AIDS. 2013;24(2):313–321. doi: 10.1177/0956462412472460.

- Johnston LG, Chen Y-H, Silva-Santisteban A, Raymond HF. An empirical examination of respondent driven sampling design effects among HIV risk groups from studies conducted around the world. AIDS and Behavior. 2013;17(6):2202–2210. doi: 10.1007/s10461-012-0394-8.

- Johnston LG, Khanam R, Reza M, Khan SI, Banu S, Alam MS, Rahman M, Azim T. The effectiveness of respondent driven sampling for recruiting males who have sex with males in Dhaka, Bangladesh. AIDS and Behavior. 2008;12(2):294–304. doi: 10.1007/s10461-007-9300-1.

- Johnston LG, Malekinejad M, Kendall C, Iuppa IM, Rutherford GW. Implementation challenges to using respondent-driven sampling methodology for HIV biological and behavioral surveillance: Field experiences in international settings. AIDS and Behavior. 2008;12(S1):131–141. doi: 10.1007/s10461-008-9413-1.

- Johnston LG, Prybylski D, Raymond HF, Mirzazadeh A, Manopaiboon C, McFarland W. Incorporating the service multiplier method in respondent-driven sampling surveys to estimate the size of hidden and hard-to-reach populations. Sexually Transmitted Diseases. 2013;40(4):304–310. doi: 10.1097/OLQ.0b013e31827fd650.

- Kerr LRFS, Kendall C, Pontes MK, Werneck GL, McFarland W, Mello MB, Martins TA, Macena RHM. Selective participation in a RDS survey among MSM in Ceará, Brazil: A qualitative and quantitative assessment. DST - Jornal Brasileiro de Doenças Sexualmente Transmissíveis. 2011;23(3):126–133.

- Lansky A, Abdul-Quader AS, Cribbin M, Hall T, Finlayson TJ, Garfein RS, Lin LS, Sullivan PS. Developing an HIV behavioral surveillance system for injecting drug users: The National HIV Behavioral Surveillance System. Public Health Reports. 2007;122(Suppl 1):48–55. doi: 10.1177/00333549071220S108.

- Lansky A, Drake A, Wejnert C, Pham H, Cribbin M, Heckathorn DD. Assessing the assumptions of respondent-driven sampling in the National HIV Behavioral Surveillance System among injecting drug users. The Open AIDS Journal. 2012;6:77–82. doi: 10.2174/1874613601206010077.

- Liu H, Li J, Ha T, Li J. Assessment of random recruitment assumption in respondent-driven sampling in egocentric network data. Social Networking. 2012;1(2):13–21. doi: 10.4236/sn.2012.12002.

- Lu X. Linked ego networks: Improving estimate reliability and validity with respondent-driven sampling. Social Networks. 2013 Oct;35(4):669–685.