Abstract

Purpose

In clinical trials, traditional monitoring methods, paper documentation, and outdated collection systems lead to inaccuracies in study information and inefficiencies in the process. Integrated electronic systems offer an opportunity to collect data in real time.

Patients and Methods

We created a computer software system to collect 13 patient-reported symptomatic adverse events and patient-reported Karnofsky performance status, semi-automated RECIST measurements, and laboratory data, and we made this information available to investigators in real time at the point of care during a phase II lung cancer trial. We assessed data completeness within 48 hours of each visit. Clinician satisfaction was measured.

Results

Forty-four patients were enrolled, for 721 total visits. At each visit, patient-reported outcomes (PROs) reflecting toxicity and disease-related symptoms were completed using a dedicated wireless laptop. All PROs were distributed in batch throughout the system within 24 hours of the visit, and abnormal laboratory data were available for review within a median of 6 hours from the time of sample collection. Manual attribution of laboratory toxicities took a median of 1 day from the time they were accessible online. Semi-automated RECIST measurements were available to clinicians online within a median of 2 days from the time of imaging. All clinicians and 88% of data managers felt there was greater accuracy using this system.

Conclusion

Existing data management systems can be harnessed to enable real-time collection and review of clinical information during trials. This approach facilitates reporting of information closer to the time of events and improves both efficiency and the ability to make earlier clinical decisions.

INTRODUCTION

Phase II trials serve as checkpoints in anticancer drug development, because agents must demonstrate sufficient efficacy to warrant their study in phase III. Unfortunately, phase II studies in oncology are costly and time consuming,1–3 with median study duration, defined as the time between initiation and publication, being between 5 and 6 years.2,3

Although some components of phase II trials, such as end points or statistical design, are frequently scrutinized, little research has explored enhancing the operational efficiency of data collection.2–5 Patient symptoms and tumor size determinations often are recorded on paper by clinicians, manually transcribed into an on-site electronic database by data managers, transported to the trial sponsor, and manually re-entered into a second database.6 Each step takes time, expends resources, and introduces the potential for data degradation through transcription errors and omissions.

Progress in computing, imaging technology, and bioinformatics can improve the efficiency and quality of phase II trials. Each of these three components has been developed at Memorial Sloan-Kettering Cancer Center (MSKCC). Patients have used a Symptom Tracking and Reporting (STAR) System to electronically self-report toxicity- and disease-related symptoms.7,8 By using software that permits semi-automated determination of tumor size by analyzing standard computed tomography (CT) scans, lesion measurements can be collected electronically and compared with the relevant prior scan simultaneously, allowing more rapid and accurate assessment.9 Finally, we have created a clinical trial case report form (CRF) system (StudyTracker) that permits data tracking and collection via a Web browser to interface with our institution's Clinical Research Database (CRDB), which in turn interfaces with other electronic systems that supply relevant data. This secure system allows direct data entry via the Internet, avoiding the use of paper and subsequent manual re-entry. By integrating patient-reported outcomes (PROs) from STAR, semi-automated image-based response assessments, and StudyTracker in one study, we hypothesized that information would be available in real time. Here, we report on the use of this approach in the context of a phase II trial evaluating chemotherapy for patients with lung cancer. We focused on the ability to operationalize the integration of PROs, semi-automated image assessment, and Web-based data entry into a single trial.

PATIENTS AND METHODS

This single-arm, open-label, phase II study (ClinicalTrials.gov identifier: NCT00807573) was reviewed by the institutional review board. Patients were treated at one of four MSKCC sites.

Patients with lung cancer who could read and write in English provided informed consent to be treated with chemotherapy and to be evaluated and observed using PROs from STAR, semi-automated image-based response assessments, and StudyTracker. For six 28-day cycles, patients received paclitaxel 90 mg/m² over 60 minutes (days 1, 8, and 15), pemetrexed 500 mg/m² over 10 minutes (days 1 and 15), and bevacizumab 10 mg/kg over 20 minutes (days 1 and 15). Patients with response or stable disease continued pemetrexed and bevacizumab every 14 days until progression or unacceptable toxicity. Patients were evaluated on days 1, 8, and 15 of each 28-day cycle. After the first 11 patients developed elevated AST and ALT, the day 8 paclitaxel and physician visit were omitted.

PROs

A dedicated data manager trained patients to use STAR at enrollment, which, on average, took 15 minutes (range, 10 to 30 minutes). At each visit, patients self-reported 13 toxicity- and disease-related symptoms and Karnofsky performance status via a tablet computer using STAR, a validated patient version7,8,10 of the National Cancer Institute's (NCI) Common Terminology Criteria for Adverse Events (CTCAE) version 3.0. Patients evaluated the following: performance status, anorexia, fatigue, alopecia, epiphora, epistaxis, hoarseness, mucositis/stomatitis, nausea, cough, dyspnea, pain, sensory peripheral neuropathy, and myalgias. Once a patient started a questionnaire, he or she was required to finish it before logging out (Data Supplement).

Before using STAR, clinicians participated in a live training course, which took an average of 52.5 minutes (range, 30 to 60 minutes). STAR data were provided to clinicians during visits on the same tablet computer as a longitudinal report. Clinicians then had the option to either accept or modify the patient CTCAE report with their own assessment. The final CTCAE grade and attribution were assigned by the clinician. This model did not differ mechanistically from the standard approach to adverse event reporting (patient interview followed by clinician report). Once locked and submitted, these data were transmitted immediately to CRDB and then transferred in batch to StudyTracker once daily.

Semi-Automated Tumor Response Assessments

Patients underwent a CT scan of the chest and other clinically relevant sites after cycles 1 and 2 and then every 8 weeks. We used a semi-automated segmentation algorithm,9 which had been modified from one developed for the segmentation of pulmonary nodules,11 to determine unidimensional (RECIST), bidimensional (WHO), and volumetric measurements. Other segmentation algorithms were used to assess metastases at other anatomic sites, such as liver and lymph nodes.12,13 We integrated all segmentation algorithms. The computer-aided tumor measurements were uploaded into StudyTracker within 48 hours of image acquisition, and RECIST responses were calculated in batch daily.
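The daily batch RECIST calculation is not described further here. As a minimal sketch only, assuming the RECIST 1.1 target-lesion thresholds (reference 29) and a hypothetical function name, the response category could be derived from sums of longest diameters as follows. Nontarget lesions, new lesions, and the lymph node short-axis rule are deliberately ignored.

```python
def recist_target_response(baseline_sum_mm, nadir_sum_mm, current_sum_mm):
    """Classify target-lesion response from sums of longest diameters (mm).

    Hypothetical helper, not the StudyTracker implementation.
    Assumes RECIST 1.1 thresholds for target lesions only.
    """
    if baseline_sum_mm <= 0:
        raise ValueError("baseline sum must be positive")
    if min(nadir_sum_mm, current_sum_mm) < 0:
        raise ValueError("sums cannot be negative")

    # Complete response: all target lesions have disappeared.
    if current_sum_mm == 0:
        return "CR"

    # Progressive disease: at least a 20% increase over the smallest sum
    # recorded on study (nadir) and an absolute increase of at least 5 mm.
    increase = current_sum_mm - nadir_sum_mm
    if increase >= 5 and increase >= 0.20 * nadir_sum_mm:
        return "PD"

    # Partial response: at least a 30% decrease relative to baseline.
    if (baseline_sum_mm - current_sum_mm) >= 0.30 * baseline_sum_mm:
        return "PR"

    return "SD"


if __name__ == "__main__":
    # Illustrative values only, not study data.
    print(recist_target_response(100, 100, 65))
    print(recist_target_response(100, 60, 75))
```

Progression is checked before partial response so that a tumor that shrank and then regrew from its nadir is not still labeled as responding.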

Web-Based Clinical Trials CRF System (StudyTracker)

StudyTracker is an institutional review board–reviewed, Health Insurance Portability and Accountability Act–compliant, clinical trials CRF system that provides a Web interface for entering data into a relational database with real-time summaries of accrual data. In StudyTracker, CRFs are organized into the following three layers: a table of active studies to which the user has access; a study dashboard featuring critical summary data, as well as a linked list of active trial records; and fully customized tables of text and image data that may be entered, edited, or bulk up/downloaded. The StudyTracker software infrastructure consists of the following two broad components: a Java Servlet Web application through which data are entered and displayed and a MySQL database, which stores human subject data, registered user information, and transaction data.

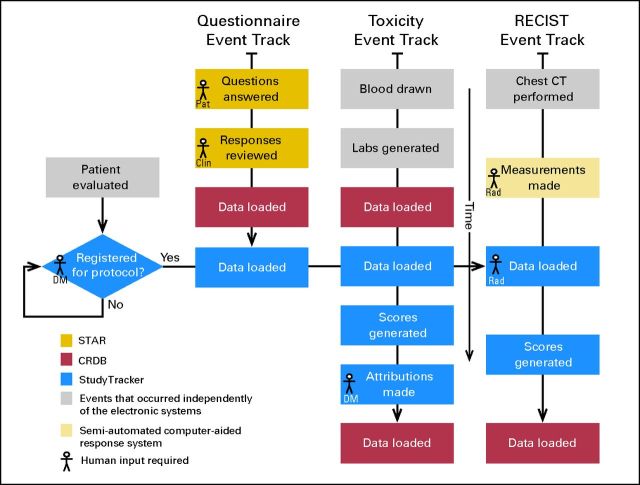

The motivations for using StudyTracker in this trial were as follows: to streamline data entry; to allow upload of STAR longitudinal reports, as well as semi-automated response assessment data, their associated CT image thumbnails, and the calculation of RECIST responses; and to facilitate overall study coordination through a dashboard summary page. Figure 1 shows a schematic of the study's data entry, processing, and transfer events. Data managers and clinicians participated in a live training course before using StudyTracker, which took an average of 41 minutes (range, 30 to 120 minutes) and 60 minutes, respectively.

Fig 1.

Study informatics system events tracking. This represents a schematic of the study's data entry, processing, and transfer events. The following three types of events occurred within our system: automated Symptom Tracking and Reporting (STAR) online questionnaire (Questionnaire Event Track), manual input of attribution for laboratory toxicities (Toxicity Event Track), and RECIST measurements (RECIST Event Track). Each rectangle corresponds to a particular step in the process and is color coded to distinguish in which system it occurred: gold, STAR; red, Clinical Research Database (CRDB); blue, StudyTracker; gray, events that occurred independently of the electronic systems; and beige, semi-automated computer-aided response assessment. Data managers (DM), clinicians (Clin), and radiologists (Rad) inputted data into StudyTracker. Final data for the clinical trial were located in StudyTracker, as well as CRDB. CT, computed tomography; Pat, patient.

Clinician Survey

At the end of the study, 15 clinicians and 17 data managers using STAR and StudyTracker were asked to anonymously complete satisfaction assessments8 evaluating the systems used, as well as the overall process.

Comparison to Data Collection in Other Phase II Studies

We collected information on three comparable phase II trials conducted without the use of these electronic systems to determine the length of time needed for laboratory and nonlaboratory toxicities to be attributed, as well as for relevant imaging to be formally reviewed. All were single-institution studies opened at MSKCC in 2008.14–16

Statistics

A sample size of 44 patients was chosen based on the primary study objective of evaluating the efficacy of the experimental regimen. In the context of this trial, the current study reports on the feasibility of obtaining real-time data using PROs, semi-automated image assessment, and Web-based data entry.

We report the frequency of self-reporting of symptoms using STAR and of CTCAE toxicity grade assessment by physicians, as well as the time it takes for these evaluations to become available in CRDB and StudyTracker. The usefulness of the system in providing tumor measurement data was quantified by the proportion of cases in which the results of the imaging test were available in StudyTracker at the time of the corresponding clinical visit. The timeliness of the system in documenting attribution of laboratory toxicities was recorded. All measures are reported using descriptive statistics (median and the fifth to 95th percentile range for time summaries), and temporal trends throughout the trial are presented, reflecting learning experience of the users.
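As an illustration of the time summaries described above, the following sketch computes a median with the fifth and 95th percentiles. The percentile interpolation method is not stated in the text, so the `inclusive` method of Python's `statistics.quantiles` is an assumption, and the function name is hypothetical.

```python
import statistics


def summarize_times(values):
    """Return (median, 5th percentile, 95th percentile) of elapsed times.

    Hypothetical helper. The "inclusive" interpolation method is an
    assumption; the source does not say how percentiles were computed.
    """
    if len(values) < 2:
        raise ValueError("need at least two observations")
    # n=20 splits the data into 20 equal-probability groups, so the first
    # cut point is the 5th percentile and the last is the 95th.
    cuts = statistics.quantiles(values, n=20, method="inclusive")
    return statistics.median(values), cuts[0], cuts[-1]


if __name__ == "__main__":
    # Illustrative elapsed times in hours, not study data.
    hours = [0.3, 0.8, 1.0, 1.5, 2.3, 2.9, 4.0, 7.5, 20.0, 48.0]
    median, p5, p95 = summarize_times(hours)
    print(f"median {median} h (5th to 95th percentile, {p5:.2f} to {p95:.2f} h)")
```

Reporting the fifth to 95th percentile range rather than the full range keeps a few extreme delays from dominating the summary.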

RESULTS

We enrolled 44 patients from January 2009 through September 2011. There were a total of 721 clinic visits (median, 10 visits; range, one to 65 visits). Patient characteristics are listed in Table 1.

Table 1.

Baseline Patient Demographics and Clinical Characteristics

| Characteristic | No. of Patients (N = 44) | % |
|---|---|---|
| Sex | | |
| Male | 22 | 50 |
| Female | 22 | 50 |
| Age, years | | |
| Median | 59 | |
| Range | 31-77 | |
| Karnofsky performance status | | |
| ≥ 90% | 19 | 43 |
| 80% | 20 | 46 |
| 70% | 5 | 11 |
| Race/ethnicity | | |
| White | 41 | 93 |
| Asian | 2 | 5 |
| Other | 1 | 2 |
| Smoking history* | | |
| Current | 7 | 16 |
| Former | 30 | 68 |
| Never | 7 | 16 |
| Stage at diagnosis | | |
| IIIB | 13 | 30 |
| IV | 31 | 70 |
| Mutation status | | |
| EGFR/KRAS wild type | 21 | 48 |
| KRAS mutation | 15 | 34 |
| Unknown | 8 | 18 |

*Of the 37 former and current smokers, the median number of pack-years was 38 (range, 10 to 120 pack-years).

PROs

At each visit, patients completed self-reports using a tablet computer 99.6% of the time; three reports were not completed because of lack of equipment (n = 1) and lack of an intranet connection (n = 2). This information was provided to clinicians (n = 718) in real time, who offered their own assessment and assigned a final CTCAE toxicity grade during the clinic visit in 709 cases (98.7%). Three of the missed clinician reports occurred during visits when patients were taken off study for progression of disease; one transpired when a patient's treatment was held; two occurred when patients were seen by a covering physician; one transpired when the clinician did not remember to complete STAR; and two reasons were unknown. For one additional visit where both the patient and physician completed STAR, an interface error resulted in the lack of transmission of these data to CRDB.

A locked and submitted function in STAR was enabled for the majority of patient-clinician interactions (n = 661), thus allowing us to measure the time required to assess and assign a final CTCAE grade to patients' symptoms, which occurred within a median of 1 hour (fifth to 95th percentile range, 0.2 to 18 hours) after patients completed PROs. All data were distributed in batch to CRDB within 24 hours of the patient visit 81% of the time (586 of 721 visits), with a median of 2.3 hours (fifth to 95th percentile range, 0.3 to 665 hours). From CRDB, these data were transferred to StudyTracker nightly.
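The proportion of visits whose data reached CRDB within 24 hours can be derived from paired timestamps. The following is a minimal sketch under stated assumptions: the record layout (visit time, time received) and the function name are hypothetical, and the timestamps shown are illustrative rather than study data.

```python
from datetime import datetime, timedelta


def share_within(records, limit=timedelta(hours=24)):
    """Fraction of (visit_time, received_time) pairs with lag <= limit.

    Hypothetical helper; the record layout is an assumption.
    """
    if not records:
        raise ValueError("no records")
    on_time = sum(1 for visit, received in records if received - visit <= limit)
    return on_time / len(records)


if __name__ == "__main__":
    visit = datetime(2009, 1, 5, 9, 0)
    # Illustrative lags of 2, 24, 30, and 72 hours.
    records = [
        (visit, visit + timedelta(hours=2)),
        (visit, visit + timedelta(hours=24)),
        (visit, visit + timedelta(hours=30)),
        (visit, visit + timedelta(days=3)),
    ]
    print(f"{share_within(records):.0%} within 24 hours")
```

A lag of exactly 24 hours is counted as on time in this sketch; whether the study treated the boundary that way is not stated.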

On the same STAR platform, the clinicians were able to modify the toxicity- and disease-related symptoms reported by patients based on their own assessments, which remains the current approach to adverse event reporting. Clinicians agreed with patient-reported grades 92% of the time, raising severity 3% of the time and lowering it 5% of the time.17 Less common adverse events were noted in StudyTracker (see Data Supplement).
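The agreement figures above amount to a three-way tally of paired grades. As a sketch only, assuming each record is a (patient grade, clinician grade) pair of integer CTCAE grades and using a hypothetical function name, the tally could be computed as follows.

```python
from collections import Counter


def compare_grades(pairs):
    """Summarize how clinician grades relate to patient-reported grades.

    pairs: iterable of (patient_grade, clinician_grade) integers.
    Hypothetical helper; returns the fraction agreed, raised, and lowered.
    """
    tally = Counter()
    for patient_grade, clinician_grade in pairs:
        if clinician_grade == patient_grade:
            tally["agreed"] += 1
        elif clinician_grade > patient_grade:
            tally["raised"] += 1  # clinician assigned a higher severity
        else:
            tally["lowered"] += 1  # clinician assigned a lower severity
    total = sum(tally.values())
    if total == 0:
        raise ValueError("no grade pairs supplied")
    return {key: tally[key] / total for key in ("agreed", "raised", "lowered")}


if __name__ == "__main__":
    # Illustrative grade pairs, not study data.
    print(compare_grades([(1, 1), (2, 2), (1, 2), (3, 2)]))
```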

Semi-Automated Tumor Response Assessments

All patients had baseline CT scans. Two patients did not undergo post-treatment imaging; one withdrew consent and another was removed from study as a result of toxicity. A total of 181 surveillance CT scans were obtained on 42 patients (median, four scans; range, one to 12 scans).

Semi-automated RECIST measurements were available online in StudyTracker within a median of 2 days (fifth to 95th percentile range, 1 to 13 days) from the time of imaging. At critical visits where physicians decided whether patients had benefited from the study drugs, these results were available online 87% (157 of 181 measurements) of the time. Only 7% (13 of 181 measurements) of the RECIST measurements were obtained 7 or more days after the diagnostic imaging was performed.

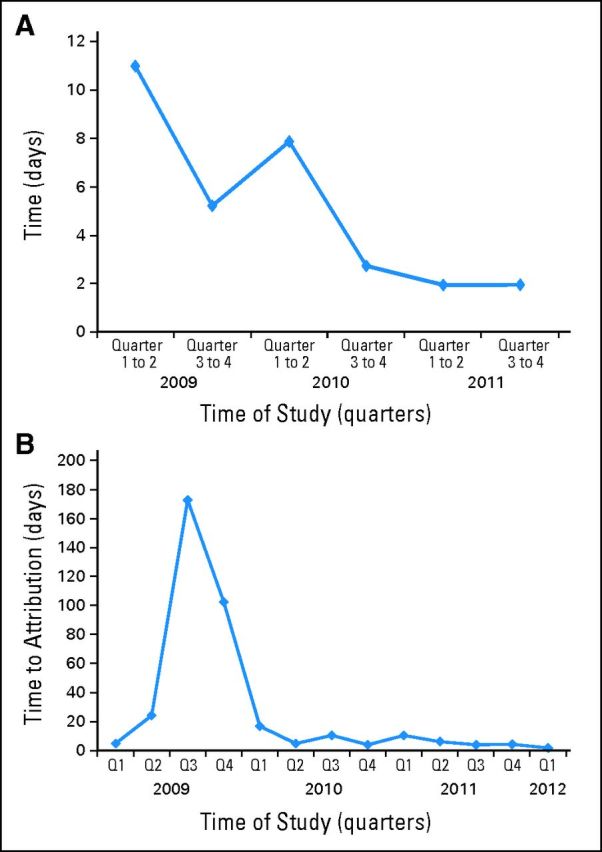

RECIST measurements were not available at decision points in 24 instances, 10 in the first 12 months. In four cases, the imaging studies were not protocol-specified scans, and as such, the usual mechanisms for analysis were not in place. Nine scans (37%) were obtained when the dedicated radiologist was unavailable. On recognizing this, we recruited additional radiologists, and this issue did not recur. Throughout the trial, data acquisition times improved (ie, there was a learning curve; Fig 2A).

Fig 2.

Data entry into StudyTracker throughout the time of the study. (A) Median times from the date of computed tomography scan to image assessment inputted into StudyTracker per quarter of the clinical trial. (B) Median times from the date of the laboratory toxicity to attribution defined in StudyTracker per quarter of the clinical trial.

Laboratory Assessments

Laboratory data were available for review within a median of 6 hours (range, 0.9 to 293 hours) from collection. We collected 2,769 abnormal laboratory values during the study. Manual attribution of laboratory toxicities took a median of 1 day (fifth to 95th percentile range, 0 to 209 days) from the time that these values were available in StudyTracker (Fig 2B). Seventy-two percent of these abnormal laboratory toxicities were attributed within 48 hours, and 80% were attributed within 7 days using the electronic interface.

Clinician Survey

Surveys that evaluated the automated reporting systems were completed by eight clinicians and 16 data managers. Ninety-two percent of the clinicians found STAR easy to use and spent an average of 3 minutes for both reviewing/conferring CTCAE grades and assigning attributions (range for both, 0.5 to 5 minutes). The majority of clinicians (63%) stated that STAR created no obstacles to care, and 90% felt that STAR should be used for additional studies. All clinicians felt that StudyTracker allowed for greater data accuracy.

Data managers spent an average of 20 minutes inputting data into StudyTracker (range, 5 to 45 minutes), based on self-report. All data managers found StudyTracker intuitive and easy to use. Eighty-eight percent of data managers felt StudyTracker allowed for greater accuracy of data reporting.

Comparison to Data Collection in Other Phase II Studies

We enrolled 108 patients on three concurrent studies.14–16 All had baseline CT scans. Three patients were not evaluable for response. A total of 219 surveillance CT scans were obtained on 105 patients (median, 1.5 scans; range, one to 12 scans). RECIST measurements determined by the reference radiologist were obtained within a median of 34 days (fifth to 95th percentile range, 1 to 180 days) from the time of imaging. A total of 3,064 toxicities were attributed by data managers within a median of 155 days (fifth to 95th percentile range, 17 to 477 days).

DISCUSSION

In the context of a phase II trial evaluating a chemotherapy combination for patients with lung cancer, we integrated a three-component data reporting system including STAR for patient and clinician CTCAE toxicity reporting, a semi-automated tumor response assessment program, and StudyTracker, a Web-based clinical trials CRF system. All components of the system were found to be feasible, with high acceptance and satisfaction rates by patients, clinicians, and data managers. We achieved our objective to operationalize all three systems in the context of a phase II trial. The attributes and value of the individual systems have been demonstrated previously.7–9,11

With a dedicated laptop, patients completed all but three of 721 self-reports, showing that individuals with advanced lung cancer can self-report their symptoms, as previously demonstrated.18,19 Electronic interfaces for patient self-reporting may enhance efficiency and precision, being less prone to data loss, misinterpretation, or transformation.6,20–26 Recently, the NCI has contracted to develop and test the PRO version of the CTCAE (PRO-CTCAE), which is an item bank of 80 symptomatic toxicities and software system for directly eliciting adverse event information from patients in clinical cancer research.27 In contrast to health-related quality-of-life instruments that do not monitor toxicity symptoms, the CTCAE remains the regulatory standard for collecting and documenting toxic effects in cancer treatment trials.

Response with radiographic imaging should be assessed early and reported quickly to minimize exposure to potentially toxic, ineffective treatments. The current standard uses CT to measure tumor size using unidimensional measurements (RECIST)28,29 with calipers on films and computer monitors. These techniques have a high intra- and interobserver variability.30 We and others have found that an electronic and automated (computerized) technique of determining tumor size via CT is more accurate and consistent between readers when compared with using calipers.30–32

Using a semi-automated technique of determining tumor size, once patients underwent a CT scan, radiologists performed the measurements and uploaded these, with corresponding images, to StudyTracker. Physicians had access to significant images and readings at 87% of point-of-care visits and thus could decide whether patients should continue study drugs. This eliminated clinician speculation concerning imaging results that occurs when the reference radiologists are not available at the time when treatment decisions are made. Using this system, we improved the reporting of radiologic data compared with traditional methods in similar trials, where a median of 34 days passed before RECIST measurements were available.

The impetus to develop StudyTracker was to provide one database with multiple functions, including data entry; a repository of STAR longitudinal reports and semi-automated response assessment data with associated CT image thumbnails; the calculation of RECIST responses; and a study dashboard featuring summary data that could be viewed by the clinician at each visit. Such a system allowed for more efficient and accurate acquisition of data. We encountered excessive delay only in the attribution of laboratory toxicities, which required manual entry. In particular, between July 2009 and March 2010, delayed and batched data entry was possible, as demonstrated in Figure 2B. This behavior was modified with ongoing surveillance. Overall, compared with other phase II clinical trials at our institution where a median of 155 days passed before attribution of toxicities, StudyTracker allowed a greater amount of information to be available in real time. In future versions, automatically monitoring data entry to ensure timely task completion will be possible.

There are notable limitations of this study. Because our goal was to evaluate the integration of PROs, semi-automated image assessment, and Web-based data entry into a lung cancer clinical trial, we did not assess the investment in time, programming, and training necessary to develop and implement these systems, which, except for StudyTracker, has been done elsewhere.7–11 Once established, minimal training is necessary for all involved. Although the software packages described are institution specific, they are not unique, and several commercial and academic systems are available for each of the platforms evaluated.22–26,33–38 Before implementing these systems in multi-institutional trials, specific privacy, regulatory, and legal issues need to be resolved, which we did not discuss. However, these issues are surmountable; the Web-based data entry program used here initially was implemented for a multi-institutional clinical trial,39 and the NCI has committed to develop and test PRO-CTCAE at four different sites (ClinicalTrials.gov identifier: NCT01031641). Importantly, specialized equipment is not necessary, because any computer with Web-browsing software can be used for each of these systems.

This was not a randomized trial comparing electronic data collection to historical methods of data acquisition. We evaluated the timeliness of the system described here, introducing a potential bias when comparing data entry in historical trials. Clinicians and staff using the system reported short time frames for data entry and noted that the systems were easy to use, which could translate into greater efficiency and lower costs.

Although others have used Internet-based tools to improve the efficiency of data flow in clinical trials,40–45 our study is unique in that we integrated them into one system, which was welcomed by the personnel who used it. Future work in a multicenter context will prospectively compare the use of this system versus standard approaches in terms of efficiency and precision, areas for which there is general agreement that our national clinical research enterprise is in need of renewal.46,47 The current study takes one step toward such improvements, leveraging available computing and technology to enhance the timeliness and efficiency of clinical trials.

Footnotes

Authors' disclosures of potential conflicts of interest and author contributions are found at the end of this article.

Clinical trial information: NCT00807573.

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

Although all authors completed the disclosure declaration, the following author(s) indicated a financial or other interest that is relevant to the subject matter under consideration in this article. Certain relationships marked with a “U” are those for which no compensation was received; those relationships marked with a “C” were compensated. For a detailed description of the disclosure categories, or for more information about ASCO's conflict of interest policy, please refer to the Author Disclosure Declaration and the Disclosures of Potential Conflicts of Interest section in Information for Contributors.

Employment or Leadership Position: None

Consultant or Advisory Role: Lawrence H. Schwartz, Cerulean (C), GlaxoSmithKline (C), Novartis (C); Mark G. Kris, Genentech/Roche (C)

Stock Ownership: None

Honoraria: None

Research Funding: None

Expert Testimony: None

Other Remuneration: None

AUTHOR CONTRIBUTIONS

Conception and design: M. Catherine Pietanza, Ethan M. Basch, Alex Lash, Lawrence H. Schwartz, Binsheng Zhao, Aaron Gabow, Mark G. Kris

Financial support: Mark G. Kris

Administrative support: M. Catherine Pietanza, Marwan Shouery, Marcia Latif, Claire P. Miller, Mark G. Kris

Provision of study materials or patients: M. Catherine Pietanza, Lawrence H. Schwartz, Leslie Tyson, Dyana K. Sumner, Alison Berkowitz-Hergianto, Mark G. Kris

Collection and assembly of data: M. Catherine Pietanza, Ethan M. Basch, Michelle S. Ginsberg, Binsheng Zhao, Marwan Shouery, Mary Shaw, Lauren J. Rogak, Manda Wilson, Marcia Latif, Kai-Hsiung Lin, Qinfei Wu, Samantha L. Kass, Claire P. Miller, Leslie Tyson, Dyana K. Sumner, Alison Berkowitz-Hergianto, Mark G. Kris

Data analysis and interpretation: M. Catherine Pietanza, Ethan M. Basch, Lawrence H. Schwartz, Manda Wilson, Aaron Gabow, Dyana K. Sumner, Camelia S. Sima, Mark G. Kris

Manuscript writing: All authors

Final approval of manuscript: All authors

REFERENCES

- 1.Kola I, Landis J. Can the pharmaceutical industry reduce attrition rates? Nat Rev Drug Discov. 2004;3:711–715. doi: 10.1038/nrd1470. [DOI] [PubMed] [Google Scholar]

- 2.Perrone F, Di Maio M, De Maio E, et al. Statistical design in phase II clinical trials and its application in breast cancer. Lancet Oncol. 2003;4:305–311. doi: 10.1016/s1470-2045(03)01078-7. [DOI] [PubMed] [Google Scholar]

- 3.Mariani L, Marubini E. Content and quality of currently published phase II cancer trials. J Clin Oncol. 2000;18:429–436. doi: 10.1200/JCO.2000.18.2.429. [DOI] [PubMed] [Google Scholar]

- 4.El-Maraghi RH, Eisenhauer EA. Review of phase II trial designs used in studies of molecular targeted agents: Outcomes and predictors of success in phase III. J Clin Oncol. 2008;26:1346–1354. doi: 10.1200/JCO.2007.13.5913. [DOI] [PubMed] [Google Scholar]

- 5.Michaelis LC, Ratain MJ. Phase II trials published in 2002: A cross-specialty comparison showing significant design differences between oncology trials and other medical specialties. Clin Cancer Res. 2007;13:2400–2405. doi: 10.1158/1078-0432.CCR-06-1488. [DOI] [PubMed] [Google Scholar]

- 6.Trotti A, Colevas AD, Setser A, et al. Patient-reported outcomes and the evolution of adverse event reporting in oncology. J Clin Oncol. 2007;25:5121–5127. doi: 10.1200/JCO.2007.12.4784. [DOI] [PubMed] [Google Scholar]

- 7.Basch E, Artz D, Iasonos A, et al. Evaluation of an online platform for cancer patient self-reporting of chemotherapy toxicities. J Am Med Inform Assoc. 2007;14:264–268. doi: 10.1197/jamia.M2177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Basch E, Artz D, Dulko D, et al. Patient online self-reporting of toxicity symptoms during chemotherapy. J Clin Oncol. 2005;23:3552–3561. doi: 10.1200/JCO.2005.04.275. [DOI] [PubMed] [Google Scholar]

- 9.Zhao B, Schwartz LH, Moskowitz CS, et al. Lung cancer: Computerized quantification of tumor response—Initial results. Radiology. 2006;241:892–898. doi: 10.1148/radiol.2413051887. [DOI] [PubMed] [Google Scholar]

- 10.Basch E, Iasonos A, Barz A, et al. Long-term toxicity monitoring via electronic patient-reported outcomes in patients receiving chemotherapy. J Clin Oncol. 2007;25:5374–5380. doi: 10.1200/JCO.2007.11.2243. [DOI] [PubMed] [Google Scholar]

- 11.Zhao B, Reeves A, Yankelevitz D, et al. Three-dimensional multi-criterion automatic segmentation of pulmonary nodules of helical CT images. Opt Eng. 1999;38:1340–1347. doi: 10.1118/1.598605. [DOI] [PubMed] [Google Scholar]

- 12.Zhao B, Schwartz LH, Jiang L, et al. Shape-constraint region growing for delineation of hepatic metastases on contrast-enhanced computed tomograph scans. Invest Radiol. 2006;41:753–762. doi: 10.1097/01.rli.0000236907.81400.18. [DOI] [PubMed] [Google Scholar]

- 13.Yan J, Zhao B, Wang L, et al. Marker-controlled watershed for lymphoma segmentation in sequential CT images. Med Phys. 2006;33:2452–2460. doi: 10.1118/1.2207133. [DOI] [PubMed] [Google Scholar]

- 14.Pietanza MC, Kadota K, Huberman K, et al. Phase II trial of temozolomide in patients with relapsed sensitive or refractory small cell lung cancer, with assessment of methylguanine-DNA methyltransferase as a potential biomarker. Clin Cancer Res. 2012;18:1138–1145. doi: 10.1158/1078-0432.CCR-11-2059. [DOI] [PubMed] [Google Scholar]

- 15.Janjigian YY, Azzoli CG, Krug LM, et al. Phase I/II trial of cetuximab and erlotinib in patients with lung adenocarcinoma and acquired resistance to erlotinib. Clin Cancer Res. 2011;17:2521–2527. doi: 10.1158/1078-0432.CCR-10-2662. [DOI] [PubMed] [Google Scholar]

- 16.Drilon AEDC, Kyuichi K, Huberman K, et al. 5-day dosing schedule of temozolomide in relapsed sensitive or refractory small cell lung cancer (SCLC) and methyl-guanine-DNA methyltransferase (MGMT) analysis in a phase II trial. J Clin Oncol. 2012;30(suppl):464s. abstr 7052. [Google Scholar]

- 17.Basch E, Bennett A, Pietanza MC. Use of patient-reported outcomes to improve the predictive accuracy of clinician-reported adverse events. J Natl Cancer Inst. 2011;103:1808–1810. doi: 10.1093/jnci/djr493. [DOI] [PubMed] [Google Scholar]

- 18.Basch E, Jia X, Heller G, et al. Adverse symptom reporting by patients versus clinicians: Relationships with clinical outcomes. J Natl Cancer Inst. 2009;101:1624–1632. doi: 10.1093/jnci/djp386. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Meacham R, McEngart D, O'Gorman H. Use and Compliance With Electronic Patient Reported Outcomes Within Clinical Drug Trials. Toronto, Ontario, Canada: International Society for Pharmacoeconomics and Outcomes Research; 2008. [Google Scholar]

- 20.Basch E. The missing voice of patients in drug-safety reporting. N Engl J Med. 2010;362:865–869. doi: 10.1056/NEJMp0911494. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Basch EM, Reeve BB, Mitchell SA, et al. Electronic toxicity monitoring and patient-reported outcomes. Cancer J. 2011;17:231–234. doi: 10.1097/PPO.0b013e31822c28b3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.McCann L, Maguire R, Miller M, et al. Patients' perceptions and experiences of using a mobile phone-based advanced symptom management system (ASyMS) to monitor and manage chemotherapy related toxicity. Eur J Cancer Care (Engl) 2009;18:156–164. doi: 10.1111/j.1365-2354.2008.00938.x. [DOI] [PubMed] [Google Scholar]

- 23. Dy SM, Roy J, Ott GE, et al. Tell Us: A Web-based tool for improving communication among patients, families, and providers in hospice and palliative care through systematic data specification, collection, and use. J Pain Symptom Manage. 2011;42:526–534. doi: 10.1016/j.jpainsymman.2010.12.006.

- 24. Snyder CF, Jensen R, Courtin SO, et al. PatientViewpoint: A website for patient-reported outcomes assessment. Qual Life Res. 2009;18:793–800. doi: 10.1007/s11136-009-9497-8.

- 25. Abernethy AP, Herndon JE, 2nd, Wheeler JL, et al. Feasibility and acceptability to patients of a longitudinal system for evaluating cancer-related symptoms and quality of life: Pilot study of an e/Tablet data-collection system in academic oncology. J Pain Symptom Manage. 2009;37:1027–1038. doi: 10.1016/j.jpainsymman.2008.07.011.

- 26. Abernethy AP, Zafar SY, Uronis H, et al. Validation of the Patient Care Monitor (Version 2.0): A review of system assessment instrument for cancer patients. J Pain Symptom Manage. 2010;40:545–558. doi: 10.1016/j.jpainsymman.2010.01.017.

- 27. National Cancer Institute. Patient-Reported Outcomes Version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE). Aug 2010. NCI Tools for Outcomes Research. http://outcomes.cancer.gov/tools/pro-ctcae.html.

- 28. Therasse P, Arbuck SG, Eisenhauer EA, et al. New guidelines to evaluate the response to treatment in solid tumors: European Organization for Research and Treatment of Cancer, National Cancer Institute of the United States, National Cancer Institute of Canada. J Natl Cancer Inst. 2000;92:205–216. doi: 10.1093/jnci/92.3.205.

- 29. Eisenhauer EA, Therasse P, Bogaerts J, et al. New response evaluation criteria in solid tumours: Revised RECIST guideline (version 1.1). Eur J Cancer. 2009;45:228–247. doi: 10.1016/j.ejca.2008.10.026.

- 30. Schwartz LH, Ginsberg MS, DeCorato D, et al. Evaluation of tumor measurements in oncology: Use of film-based and electronic techniques. J Clin Oncol. 2000;18:2179–2184. doi: 10.1200/JCO.2000.18.10.2179.

- 31. Erasmus JJ, Gladish GW, Broemeling L, et al. Interobserver and intraobserver variability in measurement of non-small-cell carcinoma lung lesions: Implications for assessment of tumor response. J Clin Oncol. 2003;21:2574–2582. doi: 10.1200/JCO.2003.01.144.

- 32. Awad J, Owrangi A, Villemaire L, et al. Three-dimensional lung tumor segmentation from x-ray computed tomography using sparse field active models. Med Phys. 2012;39:851–865. doi: 10.1118/1.3676687.

- 33. Franklin JD, Guidry A, Brinkley JF. A partnership approach for Electronic Data Capture in small-scale clinical trials. J Biomed Inform. 2011;44(suppl 1):S103–S108. doi: 10.1016/j.jbi.2011.05.008.

- 34. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap): A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

- 35. Linguraru MG, Richbourg WJ, Liu J, et al. Tumor burden analysis on computed tomography by automated liver and tumor segmentation. IEEE Trans Med Imaging. 2012;31:1965–1976. doi: 10.1109/TMI.2012.2211887.

- 36. Moltz JH, D'Anastasi M, Kiessling A, et al. Workflow-centred evaluation of an automatic lesion tracking software for chemotherapy monitoring by CT. Eur Radiol. 2012;22:2759–2767. doi: 10.1007/s00330-012-2545-8.

- 37. Nanthagopal AP, Rajamony RS. A region-based segmentation of tumour from brain CT images using nonlinear support vector machine classifier. J Med Eng Technol. 2012;36:271–277. doi: 10.3109/03091902.2012.682638.

- 38. Nelson EK, Piehler B, Eckels J, et al. LabKey Server: An open source platform for scientific data integration, analysis and collaboration. BMC Bioinformatics. 2011;12:71. doi: 10.1186/1471-2105-12-71.

- 39. Maki RG, D'Adamo DR, Keohan ML, et al. Phase II study of sorafenib in patients with metastatic or recurrent sarcomas. J Clin Oncol. 2009;27:3133–3140. doi: 10.1200/JCO.2008.20.4495.

- 40. Thwin SS, Clough-Gorr KM, McCarty MC, et al. Automated inter-rater reliability assessment and electronic data collection in a multi-center breast cancer study. BMC Med Res Methodol. 2007;7:23. doi: 10.1186/1471-2288-7-23.

- 41. Durkalski V, Wenle Z, Dillon C, et al. A web-based clinical trial management system for a sham-controlled multicenter clinical trial in depression. Clin Trials. 2010;7:174–182. doi: 10.1177/1740774509358748.

- 42. Pozamantir A, Lee H, Chapman J, et al. Web-based multi-center data management system for clinical neuroscience research. J Med Syst. 2010;34:25–33. doi: 10.1007/s10916-008-9212-2.

- 43. Lallas CD, Preminger GM, Pearle MS, et al. Internet based multi-institutional clinical research: A convenient and secure option. J Urol. 2004;171:1880–1885. doi: 10.1097/01.ju.0000120221.39184.3c.

- 44. Litchfield J, Freeman J, Schou H, et al. Is the future for clinical trials internet-based? A cluster randomized clinical trial. Clin Trials. 2005;2:72–79. doi: 10.1191/1740774505cn069oa.

- 45. Buchsbaum R, Kaufmann P, Barsdorf AI, et al. Web-based data management for a phase II clinical trial in ALS. Amyotroph Lateral Scler. 2009;10:374–377. doi: 10.3109/17482960802378998.

- 46. Weisfeld N, English RA, Claiborne AB. Envisioning a Transformed Clinical Trials Enterprise in the United States: Establishing an Agenda for 2020: Workshop Summary. Washington, DC: The National Academies Press; 2012.

- 47. Kris MG, Meropol NJ, Winer EP. Accelerating Progress Against Cancer: ASCO's Blueprint for Transforming Clinical and Translational Cancer Research. Alexandria, VA: American Society of Clinical Oncology; 2011.
